Abstract

In this study, a novel AI-based modeling approach is introduced to approximate high-fidelity heat transfer calculations and predict thermal distortion in metal additive manufacturing, specifically for the multi-laser powder bed fusion (ML-PBF) process. The effects of start position and printing orientation on deformation and stress distribution in parts produced using the ML-PBF process are investigated. A total of 512 simulations are executed, and the maximum and minimum deformation values are recorded and compared. A reduction in deformation of about 53% is observed between the worst and best printing cases. A low-fidelity modeling framework, based on a feedforward neural network, is developed for the rapid prediction of thermal displacement. On unseen test cases, the model shows a strong positive correlation (R = 0.88) between the high-fidelity outputs and the network-predicted low-fidelity outputs. The simplicity, computational efficiency, and ease of use of the developed model make it a valuable tool for preliminary evaluation and optimization in the early stages of the design process. By adjusting controlling factors and identifying trends in thermal history, the model can be scaled to a high-fidelity model for increased accuracy, significantly reducing development time and cost. The findings of this study provide insights for designers and engineers working in additive manufacturing, offering a better understanding of deformation/thermal displacement control and optimization in the ML-PBF process.


1 Introduction

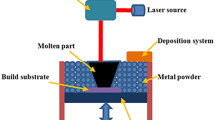

Laser powder bed fusion (L-PBF) is an advanced additive manufacturing (AM) process that uses a focused scanning laser beam to selectively melt powdered material and create a solid mass. The terminology used here follows ISO/ASTM 52900:2015, which provides a standardized set of terms and definitions for additive manufacturing concepts, processes, and materials [1]. Both single-laser powder bed fusion (SL-PBF) and multi-laser powder bed fusion (ML-PBF) can be used to fabricate metallic components. ML-PBF uses multiple lasers to melt metal powders and build parts layer-by-layer. Compared to SL-PBF, ML-PBF has several advantages such as higher build rates, improved part quality, and greater design flexibility [2, 3]. ML-PBF has several important process characteristics, including surface roughness, residual thermal stress, and deformation [4,5,6,7]. Process parameters such as the laser power, scan speed, layer thickness, hatch spacing, and powder bed temperature play a crucial role in determining the quality and properties of ML-PBF parts. Higher laser power and scan speed can lead to higher build rates but can also result in higher residual stresses and thermal gradients, leading to distortion and cracking [8, 9]. The layer thickness and hatch spacing affect the build rate and part quality. Thicker layers and wider hatch spacings can reduce build times but result in poor surface quality and mechanical properties. Due to its low productivity, which limits its business cases and market uptake, L-PBF may not be suitable for cost-sensitive industries. Herzog et al. [10] suggested combining L-PBF with hot isostatic pressing to improve part density. Experimental investigations showed that optimizing the process for speed while maintaining density above 95% resulted in a 67% increase in scan speed and a 26% saving in build time in a demonstrator build job. Slodczyk et al. [11] discussed the modulation of laser radiation to increase the processing speed in L-PBF while ensuring product quality in terms of reduced spatter generation. The investigation found that multi-laser distributions preserved the number of spatters per track at higher applied power and reduced the total number of spatters in a representative part. High-speed video recordings (with a recording rate of 4000 Hz) showed that melt pools with high volume are more stable against powder bed impurities and spatters. One way to reduce processing time is to increase scan speed and laser power while maintaining comparable energy densities [12]. This approach is constrained by two limiting factors: the maximum scan speed of galvanometric scanners and the spattering of metal powder due to high laser power [13]. To overcome these challenges, multi-laser and multi-scanner L-PBF processes were developed. These processes employ multiple heat sources in parallel to improve productivity, with each beam independently melting a separate scan field with slight overlap [14]. A complementary approach to improving part quality is to use novel scan strategies. Scan patterns play a crucial role in reducing deformation and residual stress by dispersing thermal stress. Common scan strategies include area patching and the use of different scan vectors [15,16,17].

Experimental investigation of scan strategies can be expensive and time consuming. Hence, computational modeling can be used to reduce the time and cost. In the context of additive manufacturing, a high-fidelity model refers to a computational model that accurately represents the physical behavior and characteristics of the system being modeled. A high-fidelity model is expected to accurately predict the behavior of the system over a wide range of conditions, including variations in input parameters, material properties, and part geometry. It is also expected to capture the complex interactions and dependencies that exist between different components of the system. In L-PBF additive manufacturing, a high-fidelity model would accurately predict the thermal behavior, such as temperature gradients and thermal stresses, during the printing process. It would consider various factors that affect the thermal behavior, such as the thermal properties of the materials involved, the geometry and size of the part being printed, and the printing parameters, such as the laser power and scan speed. In L-PBF, the use of a high-fidelity model is important for optimizing the printing process and improving the quality and reliability of the printed parts. By accurately predicting the behavior of the system, a high-fidelity model can identify potential challenges and suggest modifications to the printing process that can lead to improved part quality with minimal defects.

The behavior of the printed part during and after the printing process can be predicted by high-fidelity models. This includes the prediction of distortion or warpage, residual stresses that may exist after the printing process, and the mechanical behavior of the part under different loading conditions. By using simulation models, the number of experimental trials required to optimize the printing process can be reduced, saving time and cost. Several comprehensive studies on the high-fidelity thermomechanical modeling in AM have been published recently [18,19,20,21,22,23,24,25,26]. The finite element (FE) method, which is widely used to predict thermal-induced deformation in L-PBF, is one of the approaches discussed in these articles. However, high-fidelity physics-based models are computationally very expensive in terms of required time and memory space. In L-PBF, material transformations take place in the length scale of 10 to 100 μm, and occur rapidly over a time frame of 10 ms. However, the parts being produced are considerably larger and require a significantly longer time frame of several hours to days to complete. To tackle this challenge, various modeling approaches have been proposed [27,28,29,30].

In this study, a low-fidelity predictive model is developed to replace the expensive high-fidelity physics-based model. The low-fidelity data-driven model is trained on the thermal history produced by the high-fidelity ML-PBF simulations. Once developed, the model can predict thermal distortion quickly based on the thermal history of the printed part and reduce the required simulation time and cost significantly. Because the developed low-fidelity model uses only the thermal history as an input, the entire mechanical simulation of the high-fidelity model can be avoided. In addition, this study investigates the impact of start position and printing orientation on the deformation and stress distribution in parts produced using multiple lasers. A comprehensive analysis of the effects of these parameters on the maximum and minimum deformation in the printed parts is conducted, and the best and worst-performing printing cases are identified. The feedforward neural network-based low-fidelity modeling framework rapidly predicts thermal displacement, providing a computationally efficient and accessible tool for preliminary evaluation and optimization of the ML-PBF process. The rest of the manuscript is organized as follows: Section 2 presents the simulation framework, including a physics-based high-fidelity model and the governing equations. Section 3 describes the methodology for designing a data-driven low-fidelity model. Section 4 discusses the results, and finally, Section 5 offers concluding remarks.

2 Physics-based high-fidelity model

A high-fidelity model is a detailed and accurate representation of the process, aimed at precisely predicting and simulating the behavior of materials and process parameters involved. Material properties, such as particle size distribution, shape, and density, along with melting and solidification behavior, are accurately characterized. The interaction between the energy source and the powder bed is modeled in detail, considering energy absorption and reflection, as well as heat conduction and convection. Process parameters, including the laser power, scan speed, layer thickness, and hatch spacing, are thoroughly considered in high-fidelity models. Thermal history, temperature gradients, and the resulting mechanical stresses and strains induced during the L-PBF process are modeled, characterizing part distortion, residual stresses, and microstructural evolution. Detailed simulations of microstructural changes occurring during the L-PBF process, such as grain growth, phase transformations, and porosity development, are incorporated. By providing a better understanding of the underlying physics involved in the L-PBF process, high-fidelity models contribute to the optimization of process parameters, identification of potential defects or issues, improvement of overall part quality, and development of new materials and processes. In this context, Netfabb can be used to develop a high-fidelity model for the ML-PBF method. Netfabb is a software package that employs adaptive meshing-based finite element modeling (FEM) techniques to perform complex simulations. This paper uses Netfabb to evaluate the thermal distribution, stress values, and displacement magnitudes for the ML-PBF process. The nonlinear Newton-Raphson-based solver of Netfabb is used to predict the transient behavior of these quantities. The software has demonstrated high accuracy in predicting temperature, stresses, and distortion, and the model has been validated in the recent open literature [26, 31,32,33,34]. Netfabb’s modeling methodology assumes decoupled or weakly coupled behavior between thermal and mechanical factors [31]. This means that mechanical behavior is influenced by thermal history, but the reverse is not true. The Netfabb model accounts for various physical phenomena that affect the ML-PBF process, such as Marangoni convection, natural convection, radiation heat losses, and temperature-dependent thermophysical properties.

The shape and quality of the printed part can be significantly impacted by fluid flow due to the gradient in surface tension of a liquid, which is known as Marangoni convection. Similarly, natural convection, which is caused by temperature variations and density differences, can also affect the final part’s shape and quality. Radiation heat losses occur due to the transfer of heat from the heated metal to the environment through electromagnetic radiation, which can affect the overall temperature and heat transfer during the printing process. The physical properties of the printed material, such as density, viscosity, and thermal conductivity, may change with temperature due to temperature-dependent thermophysical properties, affecting the material’s flow and behavior during printing. Accurate predictions of the final part’s shape, quality, and microstructure during the metal 3D printing process can be provided through the Netfabb software by incorporating these physical phenomena into the model. The governing equations of heat transfer and solid mechanics are solved through a robust numerical approach to achieve this. Additionally, various process parameters, such as the laser power, scan speed, and layer thickness can be optimized to achieve the desired part quality with the Netfabb software. Therefore, Netfabb’s modeling methodology can be used to optimize the process parameters and ensure high-quality simulation accuracy of the printed parts.

2.1 Governing equations

The energy balance equation is a key equation in thermal investigations. To solve this equation, the Galerkin approach [35] is commonly used to convert it into a weak formulation. This method involves selecting a suitable set of basis functions that can accurately represent the solution of the partial differential equation. The coefficients of a linear combination of these basis functions are then calculated to minimize the residual, which is the difference between the actual equation and the approximation. The transient heat conduction for a component with material density \(\rho\) and specific heat \(C_p\), subject to a location- and time-dependent heat source \(Q(\mathbf{x}, t)\) and conductive heat flux \(\mathbf{q}\), can be written as

\[\rho C_p \frac{dT}{dt} = -\nabla \cdot \mathbf{q}(\mathbf{x}, t) + Q(\mathbf{x}, t)\]

The Fourier heat flux, which describes the flow of heat across a solid body with temperature-dependent thermal conductivity \(k\), can be calculated as

\[\mathbf{q} = -k \nabla T\]

The heat loss through thermal radiation is modeled using the Stefan-Boltzmann law:

\[q_{\mathrm{rad}} = \varepsilon \sigma_{SB} \left(T_s^4 - T_\infty^4\right)\]

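Here, \(\varepsilon\), \(\sigma_{SB}\), \(T_s\), and \(T_\infty\) are the surface emissivity, the Stefan-Boltzmann constant, the surface temperature of the workpiece, and the ambient temperature, respectively. The convective heat loss is defined according to Newton’s law of cooling as follows:

\[q_{\mathrm{conv}} = h_{\mathrm{eff}} \left(T_s - T_\infty\right)\]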

Here, \(h_{\mathrm{eff}}\) is the convective heat transfer coefficient. The quasi-static mechanical analysis computes the mechanical response, with the thermal analysis results imported as a thermal load at the beginning of the study. The governing mechanical stress equilibrium can be written as follows [36]:

\[\nabla \cdot \boldsymbol{\sigma} = 0\]

Here, \(\boldsymbol{\sigma}\) is the stress, and the mechanical constitutive law can be written as follows:

\[\boldsymbol{\sigma} = \mathbf{C}\, \varepsilon_e\]

Here, \(\mathbf{C}\) is the fourth-order isotropic material stiffness tensor. The total strain is obtained by summing the thermal (\(\varepsilon_T\)), second-order elastic (\(\varepsilon_e\)), and plastic (\(\varepsilon_p\)) strain tensor components:

\[\varepsilon = \varepsilon_e + \varepsilon_p + \varepsilon_T\]

To obtain the thermal strain, the following equation is used:

\[\varepsilon_T = \varepsilon_T^{\prime}\, \mathbf{j}\]

where \(\varepsilon_T^{\prime} = \alpha \left(T - T^{\mathrm{refTemp}}\right)\) and \(\mathbf{j} = [1\ 1\ 1\ 0\ 0\ 0]^{T}\). The variables \(\alpha\) and \(T^{\mathrm{refTemp}}\) denote the thermal expansion coefficient and the reference temperature, respectively. The plastic strain is calculated through the von Mises yield criterion, together with the Prandtl-Reuss flow rule, with the yield function represented by the following [37]:

The plastic strain is given by

Here,

and σm, σy, and a are the von Mises’ stress, yield stress, and flow vector, respectively. Interested readers are referred to [31] for more details on the formulation.
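As a rough illustration of how the boundary heat losses and the thermal strain above translate into numbers, the following Python sketch evaluates the Stefan-Boltzmann and Newton cooling expressions and builds the thermal strain vector. The function names, the emissivity, and the expansion coefficient are hypothetical placeholders chosen for illustration; they are not values reported in this study, and the sketch is not the Netfabb implementation.

```python
# Minimal sketch of the surface heat-loss terms and the thermal strain vector.
# All names and the sample inputs below are illustrative assumptions.

SIGMA_SB = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def radiative_flux(t_surface_c, t_ambient_c, emissivity):
    """Radiative loss eps * sigma_SB * (Ts^4 - Tinf^4) in W/m^2; inputs in deg C."""
    ts_k = t_surface_c + 273.15  # the fourth-power law needs absolute temperature
    ta_k = t_ambient_c + 273.15
    return emissivity * SIGMA_SB * (ts_k ** 4 - ta_k ** 4)


def convective_flux(t_surface_c, t_ambient_c, h_eff):
    """Convective loss h_eff * (Ts - Tinf) in W/m^2 (Newton's law of cooling)."""
    return h_eff * (t_surface_c - t_ambient_c)


def thermal_strain_vector(alpha, temperature, t_ref):
    """Thermal strain alpha * (T - T_ref) applied to the normal components only."""
    eps = alpha * (temperature - t_ref)
    j = [1, 1, 1, 0, 0, 0]  # normal components carry strain, shear components do not
    return [eps * component for component in j]


# Example: a surface at 125 deg C in 25 deg C surroundings with h_eff = 25 W/(m^2 C).
q_conv = convective_flux(125.0, 25.0, 25.0)
q_rad = radiative_flux(125.0, 25.0, emissivity=0.3)         # emissivity is assumed
strain = thermal_strain_vector(alpha=1.3e-5, temperature=125.0, t_ref=25.0)  # alpha is assumed
print(q_conv, q_rad, strain)
```

The temperature conversion to kelvin matters only for the radiative term; the convective term depends on a temperature difference and is unaffected by the choice of scale.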

2.2 Simulation domain and boundary conditions

In this study, a substrate block measuring 2 mm × 2 mm × 0.2 mm is used. On top of this substrate, a single layer of Inconel 625 is printed. The entire print area is divided into three equal parts, and specific lasers are used to print each part of the area. There are four corner positions for each of the lasers to start with: south-west, south-east, north-west, and north-east as shown in Fig. 1a. A total of (4 × 4 × 4) = 64 different combinations of corner start positions are possible for filling the entire block. The space is filled using a zigzag printing pattern, which could be either vertical or horizontal. This led to a full factorial design-of-experiments (DOE) method involving a total of (2 × 2 × 2 × 64) = 512 simulations. Figure 1b, c shows the vertical and horizontal space filling patterns for the three different lasers with different start positions, respectively.
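The size of this full factorial design can be checked by direct enumeration. The Python sketch below lists every combination of start corner and zigzag orientation for the three lasers; the labels and the enumeration order are assumptions made for illustration and do not reproduce the case IDs used in this study.

```python
from itertools import product

# Illustrative labels; the ordering does not correspond to the study's case IDs.
CORNERS = ("SW", "SE", "NW", "NE")
PATTERNS = ("vertical", "horizontal")
N_LASERS = 3


def build_doe():
    """Enumerate every (start corner, zigzag orientation) choice for each laser."""
    cases = []
    for starts in product(CORNERS, repeat=N_LASERS):          # 4^3 = 64 corner sets
        for patterns in product(PATTERNS, repeat=N_LASERS):   # 2^3 = 8 orientation sets
            cases.append(tuple(zip(starts, patterns)))
    return cases


doe = build_doe()
print(len(doe))  # 512
print(doe[0])    # (('SW', 'vertical'), ('SW', 'vertical'), ('SW', 'vertical'))
```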

Fig. 1. a The print area is divided into three equal parts: block-1, block-2, and block-3, each having four possible corner start positions for the lasers. The 2 mm × 2 mm space is filled by three lasers with b a vertical zigzag pattern with south-west starting point and c a horizontal zigzag pattern with south-east starting point. Here, green dots represent the start positions of the lasers, and red dots represent the end positions of the lasers

The laser power, scan speed, laser spot diameter, layer thickness, platform temperature, and laser start time are held constant, while the printing patterns of the three lasers are varied for each simulation. The overall distortion of the printed part is considered as the output of the model. An initial room temperature of 25 °C is assumed, and the heat sources, along with thermal convection and radiation losses, are used as boundary conditions. A uniform convective loss approximation is employed for the conduction into the loose powder [38]. An initial temperature of 80 °C is imposed at the bottom of the substrate. A cantilevered substrate is used as the mechanical boundary condition. An infill density of ~90% is used. The Netfabb software provides the temperature-dependent thermo-physical properties of IN625. The specifications for IN625 are as follows: the density is 0.00844 g/mm³, the liquidus temperature is 1475 °C, the solidus temperature is 1195 °C, and the latent heat of fusion is 287 J/g. Regarding laser parameters, the laser radius is maintained at 0.05 mm, and the absorptivity is 0.4. The effective heat transfer coefficient is set to 25 W/(m²·°C). The process parameters and their associated values used in this study are summarized in Table 1.

2.3 Grid convergence study

Autodesk Pan Solver (version 2020) is used to conduct all the thermo-mechanical simulations, which can be accessed through either Netfabb Ultimate or Netfabb Simulation products. To view the results, an open-source, multi-platform data analysis and visualization application called ParaView [39] is utilized. The adaptive meshing features in Netfabb are controlled by the number of fine layers under the heat source, the number of fine elements in the radius of each heat source, and the mesh adaptivity level, which reduce computational time and memory usage by reducing the size of matrices [40]. According to Berger and Oliger [41], adaptive sub-grid can produce more accurate results than fine static meshing with minimal computational cost. Before performing the DOE simulations, a mesh convergence study is conducted, and the results are presented in Fig. 2.

At the start of the simulation, Netfabb generates a finite number of mesh elements based on user inputs that determine the geometry and mesh density. Figure 2(b) displays the mesh used in this analysis. The maximum deformation is recorded while the mesh density is gradually increased from a small number of elements to a reasonably large number, with the aspect ratio of the mesh elements kept as low as possible. The aspect ratio of the surface mesh is the ratio of the longest edge of an element to its shortest edge, and for a high-quality mesh, its value should be less than 5 [42]. For this study, a maximum element aspect ratio of 1.818 is used, and the distortion converges monotonically with a peak difference of less than 0.5%. This choice slightly increases computation time but yields more reliable results. In the subsequent phase of the study, all simulations are carried out using the finalized mesh, and the maximum deformation is recorded for each individual case.
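The two checks described above, the element aspect ratio and the change in maximum deformation between successive mesh refinements, can be expressed compactly. The following Python sketch is a minimal illustration; the helper names and the sample deformation values are hypothetical and are not the data behind Fig. 2.

```python
def aspect_ratio(edge_lengths):
    """Ratio of the longest to the shortest edge of one element."""
    return max(edge_lengths) / min(edge_lengths)


def relative_change_percent(previous, current):
    """Percentage change of a result between two successive mesh refinements."""
    return abs(current - previous) / abs(previous) * 100.0


def is_converged(deformations, tol_percent=0.5):
    """True when the latest refinement changed the result by less than the tolerance."""
    if len(deformations) < 2:
        return False
    return relative_change_percent(deformations[-2], deformations[-1]) < tol_percent


# Hypothetical maximum deformations (mm) for three successively finer meshes.
history = [0.1000, 0.1100, 0.1103]
print(aspect_ratio([1.0, 1.818, 1.0]))  # 1.818, below the quality limit of 5
print(is_converged(history))            # True: the last change is about 0.27%
```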

3 Data-driven low-fidelity model

Although physics-based models are essential for a thorough understanding of the underlying physics involved in ML-PBF, their development is often hindered by the computational cost. To overcome this challenge, researchers have turned to data-driven methods that directly model how process parameters affect the quality of the final parts. These methods include artificial intelligence, design-of-experiments, and others, all of which use high-fidelity model data or experimental data to empirically relate process parameters and part features. Since these methods are not solely dependent on domain-specific knowledge, they can be applied to other AM processes by redefining process control parameters and quality measures.

Low-fidelity data-driven models can provide numerous advantages, such as reduced computational resources, which enable faster simulations and quicker design iterations. This facilitates rapid optimization of processes and identification of process anomalies in the development stages. The ease of implementation of low-fidelity models makes them accessible to a wider audience, including those with limited expertise in additive manufacturing or simulation. The simplicity of low-fidelity models contributes to their robustness, as they are less susceptible to errors caused by incomplete or inaccurate input data. Additionally, their scalability allows for seamless adaptation to larger or more complex systems. Overall, low-fidelity models can serve as invaluable resources for preliminary evaluations, trend analysis, and early-stage design exploration in the additive manufacturing field.

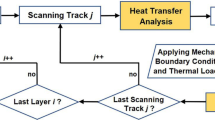

In case of ML-PBF, statistical DOE can be used to systematically plan future simulations based on previous high-fidelity simulation data, while also allowing for empirical learning of the simplified relations between part features and process control parameters. Overall, data-driven methods offer a promising approach for improving the quality of the ML-PBF parts and reducing the computational cost of simulations. In this study, the thermal history is monitored at the point of maximum deformation. The maximum thermal gradient, G (rate of change of temperature), between any time t and t + 1 is obtained by the following rule [43]:

Here,

Figure 3a shows the steps by which G is extracted from the high-fidelity simulations. The maximum negative gradient of the cooling rate is considered, as it has a direct correlation with the maximum deformation [44,45,46]. The temperature and time stamp at the point of the maximum negative gradient, as well as the previous temperature and time stamp, are supplied to the low-fidelity model. Minimizing the deformation at this specific point is essential, as it represents the maximum deformation and reducing it would also lead to a reduction in deformation at other parts of the given geometry.
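A minimal Python sketch of this feature extraction is given below. It assumes that G is evaluated as a finite difference between consecutive samples of the temperature history, which is one plausible reading of the rule; the function name and the sample history are hypothetical.

```python
def max_negative_gradient(times, temps):
    """Find the steepest cooling step in a sampled temperature history.

    Returns a dict with the gradient G and the two (time, temperature) samples
    that bound it, or None when the history contains no cooling step.
    """
    best = None
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        if dt <= 0:
            continue  # skip repeated or out-of-order time stamps
        g = (temps[i] - temps[i - 1]) / dt
        if g < 0 and (best is None or g < best["G"]):
            best = {
                "G": g,
                "t_prev": times[i - 1],
                "T_prev": temps[i - 1],
                "t": times[i],
                "T": temps[i],
            }
    return best


# Hypothetical history: heating, then a fast and a slow cooling step.
features = max_negative_gradient([0.0, 1.0, 2.0, 3.0], [25.0, 1000.0, 400.0, 300.0])
print(features)
# {'G': -600.0, 't_prev': 1.0, 'T_prev': 1000.0, 't': 2.0, 'T': 400.0}
```

The four values other than G correspond to the temperature and time stamp at the maximum negative gradient and at the preceding sample, which are the quantities passed to the low-fidelity model.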

A feedforward neural network is a type of artificial neural network that is widely used for supervised learning in machine learning and data science applications. It is called “feedforward” because information flows through the network in a single direction, from the input layer to the output layer, without any loops or feedback connections. It consists of three or more layers of neurons: an input layer, one or more hidden layers, and an output layer. The input layer receives the input data, which is then passed through the hidden layers to produce the output. Each neuron in a layer is connected to every neuron in the previous layer, and each connection is assigned a weight that determines the strength of the signal between the neurons. During training, the weights of the connections are adjusted using an optimization algorithm such as backpropagation, which minimizes a loss function that measures the difference between the predicted and the true outputs. The goal of training is to find the set of weights that minimizes the loss function so that the network can accurately predict the output values for new input data sets.
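The forward flow of information described above can be sketched in a few lines of Python. The layer widths, the tanh hidden activation, the linear output, and the random initial weights are all assumptions made for illustration; training is omitted, and the snippet is not the network used in this study.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Create one fully connected layer: a weight row per neuron plus a bias."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(x, layers):
    """Propagate an input vector through the layers, input to output, no loops back."""
    activation = list(x)
    for index, (weights, biases) in enumerate(layers):
        # Weighted sum of the previous layer's outputs for every neuron.
        z = [sum(w * a for w, a in zip(row, activation)) + b
             for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        # Assumed choice: tanh in hidden layers, identity (linear) at the output.
        activation = z if is_output else [math.tanh(v) for v in z]
    return activation


rng = random.Random(0)
sizes = [4, 9, 5, 1]  # illustrative widths: 4 inputs, two hidden layers, 1 output
layers = [init_layer(sizes[i], sizes[i + 1], rng) for i in range(len(sizes) - 1)]
prediction = forward([0.1, 0.2, 0.3, 0.4], layers)
print(len(prediction))  # 1
```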

One of the advantages of feedforward neural networks is that they can approximate any continuous function to an arbitrary accuracy, given enough hidden neurons. However, this also means that they are susceptible to overfitting, where the network memorizes the training data rather than learning the underlying patterns. To address this issue, regularization techniques such as dropout, early stopping, and weight decay can be used. Another advantage of feedforward neural networks is that they can handle high-dimensional and nonlinear data, making them well-suited for tasks such as image recognition, speech recognition, natural language processing, and financial forecasting [47,48,49,50,51,52,53].

In general, a common approach is to split the available data into three sets: a training set, a validation set, and a testing set. The training set is used to train the network by adjusting the weights of the connections between neurons, while the validation set is used to monitor the network’s performance during training and prevent overfitting. Finally, the testing set is used to evaluate the performance of the trained network on new data. In this study, 70% of the data are used for training, and the remaining 30% are divided equally between validation and testing. Of the 512 data points, 358 are used to train the model, and 77 each are used for validation and testing. The network has three hidden layers, consisting of 9, 5, and 1 neurons, respectively. The Levenberg-Marquardt (LM) algorithm is used as the training method for the selected network. LM is a popular optimization algorithm for training artificial neural networks, particularly in regression. The LM algorithm is designed to find the set of weights that minimizes the sum of squared errors between the network’s predicted output and the actual target output. Unlike the standard gradient descent algorithm, the LM algorithm uses information about the curvature of the error surface to adjust the step size of the weight update. This means that the algorithm can take larger steps in the directions where the error is decreasing rapidly and smaller steps in the directions where the error is changing slowly. In the LM algorithm, the weight updates are determined by a combination of the gradient descent method and the Gauss-Newton method. The Gauss-Newton method attempts to minimize the sum of the squared errors between the network outputs and the target outputs. However, this can sometimes lead to instability or divergence during training. To address this issue, the LM algorithm introduces a damping parameter, also known as the regularization parameter and referred to here as the momentum parameter, to adjust the step size of the weight updates. An initial value of 0.001 is used for this parameter in this study. When the value of this parameter is large, the algorithm behaves more like the gradient descent method, and when it is small, it behaves more like the Gauss-Newton method. In this study, it therefore controls the size and direction of each weight update. Training stops when either the maximum number of validation failures or the maximum number of epochs is reached. A small value of the parameter can make the algorithm converge quickly and accurately, but it may also result in overfitting. In contrast, a large value can help prevent overfitting, but it may result in slower convergence. Figure 3b shows the schematic of the data-driven model.
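The interplay between the Gauss-Newton step and the damping parameter can be illustrated on a toy problem. The Python sketch below fits a straight line with a damped update of the form \(\Delta \mathbf{w} = -(\mathbf{J}^{T}\mathbf{J} + \mu \mathbf{I})^{-1}\mathbf{J}^{T}\mathbf{e}\). The two-parameter model, the factor of 10 used to raise or lower \(\mu\), and the function name are assumptions made for illustration; the sketch is not the training code used in this study.

```python
def lm_fit_line(xs, ys, mu=1e-3, n_iter=50, factor=10.0):
    """Fit y = a*x + b by Levenberg-Marquardt on a toy two-parameter problem."""
    a, b = 0.0, 0.0

    def sse(a_val, b_val):
        return sum((a_val * x + b_val - y) ** 2 for x, y in zip(xs, ys))

    err = sse(a, b)
    for _ in range(n_iter):
        # Residuals e and the products J^T J and J^T e for Jacobian rows [x, 1].
        e = [a * x + b - y for x, y in zip(xs, ys)]
        h11 = sum(x * x for x in xs)
        h12 = sum(xs)
        h22 = float(len(xs))
        g1 = sum(x * ei for x, ei in zip(xs, e))
        g2 = sum(e)
        # Solve (J^T J + mu*I) * delta = -J^T e with an explicit 2x2 inverse.
        m11, m22 = h11 + mu, h22 + mu
        det = m11 * m22 - h12 * h12
        da = (-g1 * m22 + g2 * h12) / det
        db = (-g2 * m11 + g1 * h12) / det
        new_err = sse(a + da, b + db)
        if new_err < err:
            # Step accepted: keep it and move toward Gauss-Newton behavior.
            a, b, err = a + da, b + db, new_err
            mu /= factor
        else:
            # Step rejected: increase damping, moving toward gradient descent.
            mu *= factor
    return a, b, mu


a_fit, b_fit, mu_final = lm_fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])
print(round(a_fit, 6), round(b_fit, 6))  # 2.0 1.0
```

With a small \(\mu\), the update is close to a pure Gauss-Newton step, which solves this linear toy problem almost immediately; a large \(\mu\) shrinks the step toward a short move along the negative gradient.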

4 Results and discussion

Out of 512 simulations, the maximum deformation recorded is 0.18414 mm, and the minimum deformation is 0.086201 mm, i.e., this study observes about 53% variation in the deformation due to the start position (one of four possible starting corner positions) and printing orientation (vertical or horizontal zigzag pattern) of the lasers. Figure 4 shows the deformation and stress distribution of four representative cases, including the two extreme cases.
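The quoted variation follows from the two recorded extremes when the difference is expressed relative to the worst case. A short Python check, with a hypothetical helper name, is given below.

```python
def percent_reduction(worst, best):
    """Reduction of the best case relative to the worst case, in percent."""
    return (worst - best) / worst * 100.0


# Maximum and minimum deformation (mm) recorded over the 512 simulations.
reduction = percent_reduction(0.18414, 0.086201)
print(f"{reduction:.1f}%")  # 53.2%
```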

Fig. 4. Four different scanning patterns (first column), corresponding Cauchy stress (second column), and displacement distribution (third column) at the end of the simulation for a–c case ID 100, d–f case ID 300, g–i worst case ID 464, and j–l best case ID 18. The green dots represent the start positions of the lasers, and the red dots indicate the stop positions of the lasers in the respective three blocks. The dotted green triangle is the Euclidean distance of three lasers at the start of the printing process, and the dotted red triangle gives the Euclidean distance of three lasers at the end of the printing process for the best case

Figure 4 represents four different scan patterns, their corresponding Cauchy stress, and displacement at the end of the simulation. Figure 4a–f depicts two random cases (case ID 100 and case ID 300, respectively). Figure 4g depicts the worst printing path, where the printing process generates the maximum displacement value (as given in Fig. 4i). The start positions of the lasers are represented by the green dots, while the stop positions are indicated by the red dots. The movement of the lasers is demonstrated by the arrow direction. It can be observed from block-1 and block-2 in Fig. 4g that both lasers start from the furthest distances at the bottom (block-1 from the southwest corner and block-2 from the southeast corner) and eventually move toward each other. As a result, the material experiences excessive heating when the two lasers are in close proximity and cools down when they are far apart, leading to a non-uniform temperature distribution at different times throughout the printing process, resulting in a non-uniform stress distribution (as can be seen in Fig. 4h) and a high magnitude of displacement (as can be seen in Fig. 4i). Conversely, the best performance, in terms of the minimum displacement value, is exhibited by Fig. 4j–l. Approximately, an improvement of 53% is achieved compared to its worst-case counterpart. It is noteworthy that the start positions of the lasers exhibit uniformity in terms of their Euclidean distances, forming a triangular shape (green dotted line) with the corners of the respective blocks. A similar pattern can be observed for the end positions (red dotted line) as well, with all lasers moving vertically. The green and red dotted lines together form a “W” shape, as shown in Fig. 4j. Since all the lasers employ the same velocity and power, this specific pattern results in a uniform distribution of the thermal gradient, yielding a uniform stress distribution and minimal displacement at the end of the printing process.

The distribution of negative thermal gradient characterizes the cooling cycle of the printing process. In Fig. 5, the temperature history at the point of maximum deformation is displayed, along with the distribution of negative temperature gradients for four distinct scenarios: the best and worst cases, as well as two intermediate cases. Both the magnitude of the negative thermal gradient and its distribution play an important role in the overall displacement of the printed part. It is clearly indicated by the two extreme cases in Fig. 5e–f and Fig. 5g–h. The worst case yields higher deformation/displacement due to very high negative thermal gradient. On the other hand, the best case results in a lower displacement value due to the lower magnitude of the negative thermal gradient. When the distribution of the negative temperature gradients is assessed, an empirical snapshot of the probability density function, provided by the histogram, is given in Fig. 5b, d, f, h. This function indicates the likelihood of various gradient ranges observed in the dataset. Additionally, a theoretical probability density function of a normal distribution is provided by the overlaid line plot (black). This normal distribution is based on the calculated mean and standard deviation of the gradients. Through these two plots, a visual comparison of the observed probability density against a theoretical normal distribution is enabled. If there is a close alignment between the histogram's probability density and the line plot, it is implied that the negative temperature gradients have a probability density that is approximately normally distributed.

Fig. 5 Temperature (red line plot) vs. displacement (blue line plot) at the point of maximum distortion during the entire printing process and distribution of temperature gradients for a, b: case ID 100, c, d: case ID 300, e, f: worst case ID 464, and g, h: best case ID 18. The probability density distribution of the observed negative temperature gradients, represented by the blue histogram, is compared against the theoretical normal distribution, represented by the overlaid black line plot

As illustrated in Fig. 5g, h, the best case shows a more favorable distribution of the thermal gradient, with lower peaks than the other cases. For case ID 18, the temperature drops from 746.31 °C to 367.55 °C within 5.854 × 10⁻³ s. Conversely, for case ID 464, a decrease from 1684.1 °C to 1590.1 °C is recorded in just 3.10 × 10⁻⁵ s. Hence, case ID 464 exhibits a significantly steeper negative temperature gradient over time. All other printing patterns fall between these two extreme cases. Note that the positive side of the x-axis is excluded in all cases: the distortion during the cooling cycle is linked to the negative thermal gradient, so all bars lie on the negative side of the x-axis.
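To make the comparison concrete, the two temperature drops quoted above can be converted into average cooling rates. The sketch below uses only the four temperatures and two durations given in the text; the function name `mean_cooling_rate` is hypothetical. The rates are averages over the quoted intervals, which differ in length by about two orders of magnitude, so they indicate scale rather than a strictly like-for-like comparison.

```python
def mean_cooling_rate(t_start_c, t_end_c, duration_s):
    """Average cooling rate over an interval, in °C/s (positive means cooling)."""
    return (t_start_c - t_end_c) / duration_s

# Values quoted in the text for the best (ID 18) and worst (ID 464) cases.
best = mean_cooling_rate(746.31, 367.55, 5.854e-03)
worst = mean_cooling_rate(1684.1, 1590.1, 3.10e-05)

print(f"case ID 18:  {best:.3e} °C/s")
print(f"case ID 464: {worst:.3e} °C/s")
print(f"ratio worst/best: {worst / best:.1f}")
```

The result is roughly 6.5 × 10⁴ °C/s for case ID 18 against roughly 3.0 × 10⁶ °C/s for case ID 464, a factor of about 47.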

Figure 6a plots the maximum negative thermal gradient against the maximum displacement for all the simulations conducted in this investigation. No significant relationship is evident between the magnitude of the negative thermal gradient and the displacement magnitude, which might be due to the complex underlying physics linking the factors considered. A simple linear model may therefore not accurately capture the intrinsic relationship between the negative thermal gradient and displacement in this study. Hence, to understand the underlying physics of the system and develop a data-driven low-fidelity model, it is crucial to consider not only the magnitude but also the distribution of the negative thermal gradient. The distribution is assessed with the Kolmogorov-Smirnov test [54,55,56,57], in which the null hypothesis assumes that the data follow a specified distribution, here a normal distribution of the negative thermal gradient during the entire printing process. The p-value represents the probability of observing a test statistic as extreme as or more extreme than the one computed from the sample data, assuming the null hypothesis is true. The significance level is α = 0.05 (95% confidence level). A p-value greater than α suggests insufficient evidence to reject the null hypothesis, whereas a p-value less than or equal to α indicates statistically significant evidence to reject it, suggesting that the dataset is not normally distributed. Figure 6b displays the p-value for all simulations, where a higher p-value means that the negative thermal gradient in that simulation is more consistent with a normal distribution. Considered together with the magnitude of the negative thermal gradient, a higher p-value is associated with better printing quality in terms of minimum thermal distortion. Notably, in some cases, such as case ID 464, the p-value is very low, indicating a non-normal distribution and higher thermal distortion. Conversely, a higher p-value alone does not indicate lower thermal distortion, as the magnitude of the negative thermal gradient may still be high. Therefore, to accurately predict thermal distortion and capture this complex relationship, AI models are required.
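The normality check can be illustrated with a small, self-contained sketch. It is not the authors' implementation: the samples are synthetic, the function names are invented for this example, and the p-value uses the asymptotic Kolmogorov series with Stephens' finite-sample correction. Because the mean and standard deviation are estimated from the same sample, this p-value is conservative; the Lilliefors variant [54] addresses that case. In practice a library routine such as `scipy.stats.kstest` would normally be used instead.

```python
import math
import random
from statistics import NormalDist, mean, stdev

def ks_statistic(sample, dist):
    """Largest gap between the empirical CDF and the CDF of `dist`."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs):
        cdf = dist.cdf(x)
        d = max(d, (i + 1) / n - cdf, cdf - i / n)
    return d

def ks_pvalue(d, n):
    """Asymptotic Kolmogorov p-value with Stephens' finite-sample correction."""
    lam = (math.sqrt(n) + 0.12 + 0.11 / math.sqrt(n)) * d
    if lam < 0.2:
        return 1.0  # the series converges slowly here; p is effectively 1
    total = sum((-1) ** (k - 1) * math.exp(-2.0 * (k * lam) ** 2)
                for k in range(1, 101))
    return min(1.0, max(0.0, 2.0 * total))

def normality_pvalue(sample):
    """KS test of `sample` against a normal with the sample mean and std."""
    fitted = NormalDist(mean(sample), stdev(sample))
    return ks_pvalue(ks_statistic(sample, fitted), len(sample))

# Synthetic negative gradients (°C/s); not data from the study.
random.seed(0)
bell_shaped = [random.gauss(-2.0e5, 4.0e4) for _ in range(500)]
skewed = [-1.0e5 * random.expovariate(1.0) for _ in range(500)]

alpha = 0.05
for name, data in (("bell-shaped", bell_shaped), ("skewed", skewed)):
    p = normality_pvalue(data)
    verdict = "cannot reject normality" if p > alpha else "reject normality"
    print(f"{name}: p = {p:.3g} -> {verdict}")
```

The skewed sample mimics a cooling history dominated by a few very steep gradients, which is the situation the text associates with a low p-value.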

In the second phase of this study, the research objective is to develop a low-fidelity modeling framework using a feedforward neural network. This approach is adopted due to the potential benefits of low-fidelity models, including simplicity, computational efficiency, and ease of use. Although high-fidelity models offer more precise results, they typically necessitate significantly greater computational resources, leading to extended simulation times and higher costs. Hence, the current study aims to develop a low-fidelity model that can quickly and accurately predict thermal displacement based on thermal history, with comparable accuracy to that of a high-fidelity model.

Figure 7 depicts the regression plots for the different datasets. It shows the relationship between the target high-fidelity thermal displacement outputs and the low-fidelity network outputs for the training, validation, and test sets. The correlation coefficient, R, is a statistical measure of the strength and direction of the linear relationship between the target output and the network-predicted output. Its value varies between +1 (a perfect positive correlation) and −1 (a perfect negative correlation), while 0 indicates no correlation. In this study, the value of R is 0.88, which indicates a clear positive correlation between the high-fidelity output and the network-predicted low-fidelity output in terms of thermal displacement when the thermal history is given. Most of the data points lie near the diagonal line, indicating a strong linear relationship between the target and network-predicted displacement magnitudes. Figure 8a compares the low-fidelity model-predicted displacement with the high-fidelity target displacement for all 77 test cases, which are kept out of the training set. In most cases, the model-predicted displacement magnitudes are very close to the high-fidelity target values based on the input thermal history at the point of maximum displacement. However, the predicted outcomes are far from the actual target displacement in certain cases. Figure 8b shows the distribution of errors as an error histogram for the training, validation, and test sets, which indicates how well the model performs across different ranges of error. The errors are calculated as the difference between the high-fidelity target displacement values and the low-fidelity model-predicted output values. Plotting the frequency of these errors in different bins yields an approximately normal (Gaussian) distribution, as the bell-shaped curve is almost symmetrical around the zero mean. Most of the errors therefore fall near zero, with fewer errors as the distance from zero increases. This can be a sign that the low-fidelity model accurately captures the underlying patterns in the data and is not overfitting or underfitting. Additionally, this normal distribution makes it easier to interpret the performance of the model. Figure 8c shows that the prediction error of the low-fidelity model is less than 10% for 70.1% of the test cases, between 10% and 20% for about 24.7% of the test cases, and more than 20% for only 5.2% of the test cases, which indicates that the low-fidelity model performs well. A case-wise breakdown of the percentage errors can be seen in Fig. 8d. Only four out of 77 test cases show more than 20% error when using the newly developed low-fidelity model.
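The two summary measures used above, the correlation coefficient R and the share of cases per error band, can be sketched as follows. This is an illustration under stated assumptions: the displacement values are hypothetical, the percentage error is assumed to be the absolute error relative to the high-fidelity target (the text does not state the exact definition), and the function names are invented for this example.

```python
import math

def pearson_r(targets, predictions):
    """Pearson correlation coefficient between targets and predictions."""
    n = len(targets)
    mt = sum(targets) / n
    mp = sum(predictions) / n
    cov = sum((t - mt) * (p - mp) for t, p in zip(targets, predictions))
    var_t = sum((t - mt) ** 2 for t in targets)
    var_p = sum((p - mp) ** 2 for p in predictions)
    return cov / math.sqrt(var_t * var_p)

def percent_errors(targets, predictions):
    """Absolute error relative to the target, in percent (assumed definition)."""
    return [abs(t - p) / abs(t) * 100.0 for t, p in zip(targets, predictions)]

def error_bands(errors):
    """Counts of cases with <10%, 10-20%, and >20% error."""
    low = sum(1 for e in errors if e < 10.0)
    high = sum(1 for e in errors if e > 20.0)
    return low, len(errors) - low - high, high

# Hypothetical displacement values; not data from the study.
targets = [0.10, 0.12, 0.15, 0.20, 0.25]
predictions = [0.105, 0.11, 0.17, 0.19, 0.31]

r = pearson_r(targets, predictions)
bands = error_bands(percent_errors(targets, predictions))
print(f"R = {r:.3f}, cases per band (<10%, 10-20%, >20%) = {bands}")

# Consistency check on the reported split of the 77 test cases.
# Only the count of 4 is stated in the text; 54 and 19 are inferred
# from the reported percentages.
for count in (54, 19, 4):
    print(f"{count}/77 = {100 * count / 77:.1f}%")
```

The last loop shows that counts of 54, 19, and 4 cases reproduce the reported 70.1%, 24.7%, and 5.2% and add up to the 77 test cases.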

Fig. 8 Comparison between high-fidelity and low-fidelity model in terms of a deviation between the actual (high-fidelity model) and predicted (low-fidelity model) displacement, b error histogram for the entire dataset, c overview of the quality of the model in terms of prediction error, and d details of prediction error for the test dataset

The thermal history, specifically during the cooling cycle in ML-PBF AM, plays a crucial role in determining the displacement magnitude that occurs within the printed part. During the ML-PBF process, the heat source selectively melts a thin layer of powder material according to the given path specifications. As the heat source moves, the molten material solidifies, and a new layer of powder is deposited. This process continues in layers until the entire part is built. The thermal history records the temperature changes as the heat sources move on the metal powder layer, and it depends on the source’s power and scan speed as well as layer thickness and material properties. The deformation or the thermal displacement in the printed part is mainly caused by the non-uniform temperature distribution and the resulting thermal stress that develops during the cooling cycle. As the part cools, the material contracts and stresses are generated due to the constrained shrinkage, resulting in part deformation, further leading to deviations from the intended geometry. In this context, using multiple lasers allows for more even heat distribution, reducing the risk of overheating and therefore mitigating the formation of thermal stresses. Multiple heat sources enable more precise control over the temperature distribution within the built area. The influencing factors of the heat sources (such as power and scan speed) can be tailored to achieve minimum displacement magnitude from the low-fidelity model. After adjusting the controlling factors and identifying the trend for thermal displacement from the low-fidelity ML-PBF model, a similar pattern can be scaled to the high-fidelity model for higher accuracy. In this fashion, the approach can significantly reduce the development time and cost by replacing the high-fidelity model with an inexpensive low-fidelity model. 
Hence, the developed model provides sufficient insights into the preliminary evaluation of thermal displacement in the ML-PBF process. The simplicity of the model makes it a valuable tool in the early stages of the design and optimization process, which can be easily scaled to accommodate larger systems, incorporating other mechanical and thermal constraints.

5 Conclusion

In conclusion, this study investigates the effects of start position and printing orientation on the deformation of parts produced using multiple lasers in the powder bed fusion additive manufacturing process. A significant reduction of 53% in deformation is demonstrated when comparing the best and worst printing cases. Furthermore, a low-fidelity modeling framework based on a feedforward neural network is developed, which is capable of rapidly predicting thermal displacement with a correlation coefficient (R) of 0.88, indicating a strong positive correlation between the high-fidelity and network-predicted low-fidelity outputs. The low-fidelity model exhibits accurate predictions, with prediction errors of less than 10% for 70.1% of test cases and between 10% and 20% for 24.7% of test cases. Only 5.2% of the test cases show errors greater than 20%. The simplicity, computational efficiency, and ease of use of this model make it an accessible and valuable tool for preliminary evaluation and optimization in the early stages of the ML-PBF process. By identifying trends and adjusting controlling factors, the model can be scaled to a high-fidelity model for increased accuracy, significantly reducing development time and cost. The study emphasizes the importance of controlling the thermal history during the cooling cycle in the ML-PBF process to minimize part deformation. By utilizing multiple lasers and adjusting factors such as power and scan speed, a more even heat distribution and lower thermal stresses can be achieved. The developed low-fidelity model is a useful tool for understanding and predicting thermal displacement in the ML-PBF process, offering valuable insights to designers and engineers working in the field of additive manufacturing.

Data availability

The datasets generated in this paper are available from the corresponding author on reasonable request.

References

Astm I (2015) ASTM52900-15 standard terminology for additive manufacturing—general principles—terminology. ASTM International, West Conshohocken, PA, p 5

Wong H, Dawson K, Ravi GA et al (2019) Multi-laser powder bed fusion benchmarking—initial trials with Inconel 625. Int J Adv Manuf Technol 105:2891–2906. https://doi.org/10.1007/s00170-019-04417-3

Chen C, Xiao Z, Zhu H, Zeng X (2020) Distribution and evolution of thermal stress during multi-laser powder bed fusion of Ti-6Al-4V alloy. J Mater Process Technol 284:116726. https://doi.org/10.1016/j.jmatprotec.2020.116726

Zhang W, Tong M, Harrison NM (2020) Scanning strategies effect on temperature, residual stress and deformation by multi-laser beam powder bed fusion manufacturing. Addit Manuf 36:101507. https://doi.org/10.1016/j.addma.2020.101507

Wei K, Li F, Huang G et al (2021) Multi-laser powder bed fusion of Ti–6Al–4V alloy: defect, microstructure, and mechanical property of overlap region. Mater Sci Eng A 802:140644. https://doi.org/10.1016/j.msea.2020.140644

Yin J, Wang D, Wei H et al (2021) Dual-beam laser-matter interaction at overlap region during multi-laser powder bed fusion manufacturing. Addit Manuf 46:102178. https://doi.org/10.1016/j.addma.2021.102178

Tsai C-Y, Cheng C-W, Lee A-C, Tsai M-C (2019) Synchronized multi-spot scanning strategies for the laser powder bed fusion process. Addit Manuf 27:1–7. https://doi.org/10.1016/j.addma.2019.02.009

Mower TM, Long MJ (2016) Mechanical behavior of additive manufactured, powder-bed laser-fused materials. Mater Sci Eng A 651:198–213. https://doi.org/10.1016/j.msea.2015.10.068

Khairallah SA, Anderson AT, Rubenchik A, King WE (2016) Laser powder-bed fusion additive manufacturing: physics of complex melt flow and formation mechanisms of pores, spatter, and denudation zones. Acta Mater 108:36–45. https://doi.org/10.1016/j.actamat.2016.02.014

Herzog D, Bartsch K, Bossen B (2020) Productivity optimization of laser powder bed fusion by hot isostatic pressing. Addit Manuf 36:101494. https://doi.org/10.1016/j.addma.2020.101494

Slodczyk M, Ilin A, Kiedrowski T et al (2021) Spatter reduction by multi-beam illumination in laser powder-bed fusion. Mater Des 212:110206. https://doi.org/10.1016/j.matdes.2021.110206

Buchbinder D, Schleifenbaum H, Heidrich S et al (2011) High Power selective laser melting (HP SLM) of aluminum parts. Phys Procedia 12:271–278. https://doi.org/10.1016/j.phpro.2011.03.035

Schleifenbaum H, Meiners W, Wissenbach K, Hinke C (2010) Individualized production by means of high power selective laser melting. CIRP J Manuf Sci Technol 2:161–169. https://doi.org/10.1016/j.cirpj.2010.03.005

Wiesner A, Schwarze D (2014) Multi-laser selective laser melting. In: 8th international conference on photonic technologies LANE, pp 1–3

Cheng B, Shrestha S, Chou K (2016) Stress and deformation evaluations of scanning strategy effect in selective laser melting. Addit Manuf 12:240–251. https://doi.org/10.1016/j.addma.2016.05.007

Kruth J-P, Deckers J, Yasa E, Wauthlé R (2012) Assessing and comparing influencing factors of residual stresses in selective laser melting using a novel analysis method. Proc Inst Mech Eng Part B J Eng Manuf 226:980–991. https://doi.org/10.1177/0954405412437085

Kruth JP, Froyen L, Van Vaerenbergh J et al (2004) Selective laser melting of iron-based powder. J Mater Process Technol 149:616–622. https://doi.org/10.1016/j.jmatprotec.2003.11.051

Gouge M, Michaleris P (2018) An introduction to additive manufacturing processes and their modeling challenges. In: Thermo-mechanical modeling of additive manufacturing. Elsevier, pp 3–18. https://doi.org/10.1016/B978-0-12-811820-7.00002-1

Gouge M, Michaleris P (2018) Thermo-mechanical modeling of additive manufacturing. Elsevier, Cambridge

Luo Z, Zhao Y (2018) A survey of finite element analysis of temperature and thermal stress fields in powder bed fusion additive manufacturing. Addit Manuf 21:318–332. https://doi.org/10.1016/j.addma.2018.03.022

DebRoy T, Wei HL, Zuback JS et al (2018) Additive manufacturing of metallic components – process, structure and properties. Prog Mater Sci 92:112–224. https://doi.org/10.1016/j.pmatsci.2017.10.001

Schoinochoritis B, Chantzis D, Salonitis K (2017) Simulation of metallic powder bed additive manufacturing processes with the finite element method: a critical review. Proc Inst Mech Eng Part B J Eng Manuf 231:96–117. https://doi.org/10.1177/0954405414567522

Bandyopadhyay A, Traxel KD (2018) Invited review article: metal-additive manufacturing—modeling strategies for application-optimized designs. Addit Manuf 22:758–774. https://doi.org/10.1016/j.addma.2018.06.024

Wei HL, Mukherjee T, Zhang W et al (2021) Mechanistic models for additive manufacturing of metallic components. Prog Mater Sci 116:100703. https://doi.org/10.1016/j.pmatsci.2020.100703

Zagade P, Gautham BP, De A, DebRoy T (2021) Analytical estimation of fusion zone dimensions and cooling rates in part scale laser powder bed fusion. Addit Manuf 46:102222. https://doi.org/10.1016/j.addma.2021.102222

Gouge M, Denlinger E, Irwin J et al (2019) Experimental validation of thermo-mechanical part-scale modeling for laser powder bed fusion processes. Addit Manuf 29:100771. https://doi.org/10.1016/j.addma.2019.06.022

Francois MM, Sun A, King WE et al (2017) Modeling of additive manufacturing processes for metals: challenges and opportunities. Curr Opin Solid State Mater Sci 21:198–206. https://doi.org/10.1016/j.cossms.2016.12.001

Zhang Y, Jarosinski W, Jung Y-G, Zhang J (2018) Additive manufacturing processes and equipment. In: Additive manufacturing. Elsevier, pp 39–51. https://doi.org/10.1016/B978-0-12-812155-9.00002-5

Lindgren L-E, Lundbäck A, Fisk M et al (2016) Simulation of additive manufacturing using coupled constitutive and microstructure models. Addit Manuf 12:144–158. https://doi.org/10.1016/j.addma.2016.05.005

Zhang Z, Tan ZJ, Yao XX et al (2019) Numerical methods for microstructural evolutions in laser additive manufacturing. Comput Math Appl 78:2296–2307. https://doi.org/10.1016/j.camwa.2018.07.011

Gouge M, Michaleris P, Denlinger E, Irwin J (2018) The finite element method for the thermo-mechanical modeling of additive manufacturing processes. In: Thermo-mechanical modeling of additive manufacturing, 1st edn. Elsevier, pp 19–38. https://doi.org/10.1016/B978-0-12-811820-7.00003-3

Irwin J, Gouge M (2018) Validation of the American makes builds. In: Thermo-mechanical modeling of additive manufacturing. Elsevier, pp 251–263. https://doi.org/10.1016/B978-0-12-811820-7.00018-5

Peter N, Pitts Z, Thompson S, Saharan A (2020) Benchmarking build simulation software for laser powder bed fusion of metals. Addit Manuf 36:101531. https://doi.org/10.1016/j.addma.2020.101531

Li C, Gouge MF, Denlinger ER et al (2019) Estimation of part-to-powder heat losses as surface convection in laser powder bed fusion. Addit Manuf 26:258–269. https://doi.org/10.1016/j.addma.2019.02.006

Thomée V (2007) Galerkin finite element methods for parabolic problems, vol 25. Springer Science & Business Media

Bathe K-J (2006) Finite element procedures. Pearson Education, Inc, Prentice Hall

Zienkiewicz OC, Taylor RL, Zhu JZ (2005) The finite element method: its basis and fundamentals. Elsevier

Dunbar AJ, Denlinger ER, Heigel J et al (2016) Development of experimental method for in situ distortion and temperature measurements during the laser powder bed fusion additive manufacturing process. Addit Manuf 12:25–30. https://doi.org/10.1016/j.addma.2016.04.007

https://www.paraview.org/. Accessed 4 Jan 2023

Jasak H, Gosman AD (2000) Automatic resolution control for the finite-volume method, part 1: a-posteriori error estimates. Numer Heat Transf Part B Fundam 38:237–256. https://doi.org/10.1080/10407790050192753

Berger MJ, Oliger J (1984) Adaptive mesh refinement for hyperbolic partial differential equations. J Comput Phys 53:484–512. https://doi.org/10.1016/0021-9991(84)90073-1

https://www.solidworks.com/. Accessed 4 Jan 2023

Raghavan N, Dehoff R, Pannala S et al (2016) Numerical modeling of heat-transfer and the influence of process parameters on tailoring the grain morphology of IN718 in electron beam additive manufacturing. Acta Mater 112:303–314. https://doi.org/10.1016/j.actamat.2016.03.063

Hooper PA (2018) Melt pool temperature and cooling rates in laser powder bed fusion. Addit Manuf 22:548–559. https://doi.org/10.1016/j.addma.2018.05.032

Shahabad SI, Zhang Z, Keshavarzkermani A et al (2020) Heat source model calibration for thermal analysis of laser powder-bed fusion. Int J Adv Manuf Technol 106:3367–3379. https://doi.org/10.1007/s00170-019-04908-3

Carraturo M, Viguerie A, Reali A, Auricchio F (2022) Two-level method part-scale thermal analysis of laser powder bed fusion additive manufacturing. Eng Comput 38:4815–4828. https://doi.org/10.1007/s00366-022-01669-8

Huang S-J, Lee T-H (2003) Application of neural networks in injection moulding process control. Int J Adv Manuf Technol 21:956–964. https://doi.org/10.1007/s00170-002-1417-9

Svozil D, Kvasnicka V, Pospichal J (1997) Introduction to multi-layer feed-forward neural networks. Chemom Intel Lab Syst 39:43–62. https://doi.org/10.1016/S0169-7439(97)00061-0

McInerney M, Dhawan AP (1993) Use of genetic algorithms with backpropagation in training of feedforward neural networks. In: IEEE international conference on neural networks. IEEE, pp 203–208. https://doi.org/10.1109/ICNN.1993.298557

Wang X, Cao W (2018) Non-iterative approaches in training feed-forward neural networks and their applications. Soft Comput 22:3473–3476. https://doi.org/10.1007/s00500-018-3203-0

Sharkawy A-N (2020) Principle of neural network and its main types: review. J Adv Appl Comput Math 7:8–19. https://doi.org/10.15377/2409-5761.2020.07.2

Karkoub MA, Elkholy AH, Al-hawaj OM (2002) Modelling deformation of hydroformed circular plates using neural networks. Int J Adv Manuf Technol 20:871–882. https://doi.org/10.1007/s001700200211

Egmont-Petersen M, de Ridder D, Handels H (2002) Image processing with neural networks—a review. Pattern Recognit 35:2279–2301. https://doi.org/10.1016/S0031-3203(01)00178-9

Lilliefors HW (1967) On the Kolmogorov-Smirnov test for normality with mean and variance unknown. J Am Stat Assoc 62:399–402. https://doi.org/10.1080/01621459.1967.10482916

Drezner Z, Turel O, Zerom D (2010) A modified Kolmogorov–Smirnov test for normality. Commun Stat Simul Comput 39:693–704. https://doi.org/10.1080/03610911003615816

Fazlollahtabar H, Olya MH (2013) A cross-entropy heuristic statistical modeling for determining total stochastic material handling time. Int J Adv Manuf Technol 67:1631–1641. https://doi.org/10.1007/s00170-012-4596-z

Vanli OA, Chen L, Tsai C et al (2014) An uncertainty quantification method for nanomaterial prediction models. Int J Adv Manuf Technol 70:33–44. https://doi.org/10.1007/s00170-013-5250-0

Acknowledgements

The authors would like to thank Dr. Michael Gouge, Research Engineer, Autodesk, for his help with Netfabb and Chris Hirsh, IT Consultant, Department of Mechanical Engineering, Penn State, for his help with Netfabb installation and remote operation.

Funding

The work reported in this paper is funded by the Defense Advanced Research Projects Agency through Grant Number D22AP00147-00.

Author information

Contributions

Conceptualization, A.B and A.K.B.; methodology, A.K.B.; software, A.K.B.; validation, A.K.B.; formal analysis, A.K.B.; investigation, A.K.B.; resources, A.B.; data curation, A.K.B.; writing—original draft preparation, A.K.B.; writing—review and editing, A.B.; visualization, A.K.B.; supervision, A.B.; project administration, A.B.; funding acquisition, A.B. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Disclaimer

Any opinions, findings, and conclusions in this paper are those of the authors and do not necessarily reflect the views of the supporting institution.

About this article

Cite this article

Ball, A.K., Basak, A. AI modeling for high-fidelity heat transfer and thermal distortion forecast in metal additive manufacturing. Int J Adv Manuf Technol 128, 2995–3010 (2023). https://doi.org/10.1007/s00170-023-11974-1

DOI: https://doi.org/10.1007/s00170-023-11974-1