Abstract

Accurate measurement of the spatial positions of weld grooves is a prerequisite for the automated robotic welding of large workpieces. However, in measurements based on image recognition, laser stripes are easily affected by light sources and environmental noise. This work designed an automatic measuring system for V-shaped weld seams of large workpieces. First, a mathematical model for calculating the spatial positions of welds was established. A line laser was then projected onto the workpiece groove to form multiple line segments, and image analysis was used to locate the intersections of these segments. The intersections, taken as the feature points of the image, were substituted into the mathematical model to calculate the spatial position corresponding to each feature point. Finally, the spatial positions of discrete points along the weld seam of the large workpiece were obtained by scanning multiple laser lines, which completed the measurement of the entire seam. The work proposed a new approach to extracting feature points from weld images that combines Radon transformation, Harris corner detection, and K-means clustering to improve the stability and automation of weld location extraction. Experiments with several parameter settings were carried out on two workpieces with different bevel angles, measuring 180 × 60 × 30 mm and 180 × 36.64 × 30 mm (length × width × height), respectively. The maximum measurement error was 0.653 mm, the minimum was 0.001 mm, the average was 0.147 mm, and the average measurement accuracy was above 99%. The results showed that the method is robust and noise resistant and can rapidly realize the automatic and accurate measurement of weld seams.

1 Introduction

Welding is a basic industrial production process, and achieving high-quality, high-efficiency welding is a constant goal. Traditional manufacturing relies mainly on manual welding, which places high demands on welders and has low efficiency, so it can hardly meet the production needs of large-scale structural components. Welding robots work continuously with high stability and efficiency and ensure welding quality in harsh environments [1]. However, the teach-in programming used by ordinary robots is difficult to adapt to the varied welding tasks of large and complex workpieces. With the development of computer vision, visual technology is increasingly applied to extract weld seams and calculate their spatial positions, which improves the automation and intelligence of welding robots.

Studies on the visual inspection of weld seams have recently been carried out. Tian et al. built an automatic identification system for multi-type weld seams based on two laser sources and different welding environments and profiles [2]. After that, the convolutional neural networks are used for classifying weld-seam images. Zou et al. designed a line laser vision sensor and measuring system to analyze the feature-point tracking method and adaptive fuzzy control algorithm in the welding process [3]. The morphological method is used to obtain the initial coordinate value of the feature point before welding. Real-time feature points of weld seams are extracted by the target tracking method based on the Gaussian kernel after welding, with a tracking error of less than 0.32 mm.

Li et al. built a multi-line structured light 3D measuring system and derived its mathematical model [4]. The distance between the camera and laser is adjusted to change the projection direction of the light stripes, and measuring precision is improved by increasing the number of light stripes. A line laser vision sensor extracts image information in weld inspection without contacting the workpiece and with high measuring accuracy [5]. Therefore, capturing weld-seam images with a line laser vision sensor is the preferred approach to weld inspection problems.

Regarding weld-seam image processing, Lee et al. applied the Otsu method for binarization after filtering noise with median filters [6]. An improved Hough transform method was then proposed to extract weld seam features, which obtains accurate information with less calculation time and memory cost. Tan et al. used the strength image of the weld to obtain the depth value of the weld area, and calculated the 3D point cloud of the weld and the weld area to extract centerlines [7]. Extracting features directly from 3D scene information gives high accuracy; however, point cloud data are more complex than images, which increases the computational cost. Among deep learning–based methods, Xiao et al. applied the classic Faster R-CNN object detection network to identify and track seams [8]. According to the identification result, the feature point extraction method is adaptively selected to identify discontinuous weld seams. Du et al. accurately identified weld features with a fast image segmentation feature search algorithm and a convolutional neural network (CNN), which can process the strong-noise images of gas metal arc welding (GMAW) [9]. Zou et al. proposed a method combining a convolution filter with deep reinforcement learning (CF-DRL) to locate weld feature points. The convolution filter first locates the feature points of the weld roughly, and the trained neural network then refines the locations, which gives stable and accurate results [10]. However, deep learning methods still suffer from insufficient training samples and poor real-time performance.

Banafian et al. proposed an improved edge detection algorithm by processing noise images and laser data [11]. The weld seam area is enclosed to determine the welding position. The algorithm is better than other methods in detection speed and accuracy. Ding et al. used multi-angle Radon transformation to obtain a transform domain image after obtaining the image of structured light stripes, and singular points irrelevant to targeted stripes are eliminated; the processed transform-domain image is restored to eliminate noises [12].

In summary, the spatial position of V-shaped weld grooves must be measured before welding robots can weld large workpieces automatically. However, laser stripes are easily affected by light sources and environmental noise during measurement. Most existing studies focus on the accuracy of weld extraction. Although some literature applies the Hough transformation to robotic welding, no research has been found that combines Radon transformation with Harris corner detection and cluster analysis. Techniques for improving the automation of weld-seam extraction therefore remain to be developed.

In this work, a weld-seam measurement platform was built, the mathematical model of the spatial position of the weld seam was derived, and laser stripe images were collected in real time. Radon transformation was performed on the images, and the singular points in the Radon space were extracted by combining the Harris and clustering algorithms. The singular points were then back-projected to the image-acquisition space, and the intersection coordinates were substituted into the mathematical model to obtain the spatial positions of V-shaped weld grooves accurately. The corner extraction algorithm locates the singular point regions corresponding to the laser stripe lines in the Radon transformation domain, and the K-means clustering algorithm locates the singular points within them, so that the extraction of laser stripe lines and the recognition of weld image feature points can be fully automated. The extraction is more stable and highly automated, which provides technical guidance for intelligent robotic welding.

2 Experimental methods

2.1 Construction of the weld-seam measuring system

The construction of the measuring system is a crucial step in obtaining feature information of weld seams. Figure 1a shows the model of the weld-seam measuring system, where the laser sensor consists of a line-structured light emitter, CCD industrial camera, and housing. The vision measurement is based on the principles of pinhole imaging and triangulation. The line-structured light emitter projects a laser on the surface of the pipeline workpiece to form laser stripes with a specific shape. The CCD camera collects the weld seam image with laser stripes and transmits it to the host computer through USB. The host computer performs image processing to obtain the coordinates of weld feature points in the image coordinate system. The coordinates of weld points in space are calculated by a mathematical model to guide the automatic welding of robots.

The mathematical model of weld-seam features is obtained based on the structured-light measuring principle (see Fig. 1b). The optical axis of the camera is perpendicular to the horizontal plane. The optical center coordinate is taken as the origin to establish the \({O}_{c}-{X}_{c}{Y}_{c}{Z}_{c}\) spatial coordinate system, where L is the horizontal distance from the optical center to the line laser plane; θ is the horizontal angle between the centerline of the line laser sensor and the optical center of the camera; P is the point on laser stripes, with a coordinate of (X, Y, Z); \({P}^{^{\prime}}\) is the imaging point of P on the image plane, with a coordinate of \(({x}^{\mathrm{^{\prime}}},{y}^{\mathrm{^{\prime}}},f)\). According to the image-forming principle, we obtain

where \(\overrightarrow{n}=(\mathrm{sin}\theta ,0,-\mathrm{cos}\theta )\) is the normal vector of the laser projection plane. The vector on the laser projection plane is perpendicular to the normal vector. Therefore, the following equation is derived.

Simultaneous equations are solved to derive the coordinate of P.
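Under the definitions above, the three relations can be written out as follows. This is a sketch that assumes, in addition to what the text states, that the laser plane passes through the point (L, 0, 0) on the \({X}_{c}\) axis; the signs depend on which side of the camera the laser sits, so the calibrated model may differ in convention.

```latex
% Pinhole imaging (image-forming principle)
\[ \frac{X}{x'} = \frac{Y}{y'} = \frac{Z}{f} \]

% A vector lying in the laser plane is perpendicular to the normal n
\[ \overrightarrow{n}\cdot (X-L,\,Y,\,Z) = (X-L)\sin\theta - Z\cos\theta = 0 \]

% Solving the two relations simultaneously for P
\[ X = \frac{x' L \sin\theta}{x'\sin\theta - f\cos\theta},\qquad
   Y = \frac{y' L \sin\theta}{x'\sin\theta - f\cos\theta},\qquad
   Z = \frac{f L \sin\theta}{x'\sin\theta - f\cos\theta} \]
```

Substituting the third line back into the second gives \((X-L)\mathrm{sin}\theta =Z\mathrm{cos}\theta\) on both sides, which confirms that the solution lies on the laser plane.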

Equation 3 is the mathematical model for calculating feature points of weld seams. L and θ are system parameters to be determined by calibration, and the pixel coordinate (u, v) of \({P}^{^{\prime}}\) is obtained by image processing. The actual location of P in space is determined after calculating the coordinate of \({P}^{^{\prime}}\) relative to the camera coordinate system.
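The step from a pixel coordinate (u, v) to a camera-frame point can be sketched in Python as below. The intrinsic parameters `fx`, `fy`, `cx`, `cy`, the absence of lens distortion, and a laser plane through (L, 0, 0) with normal (sin θ, 0, −cos θ) are assumptions made for illustration; the function name is hypothetical and the sign convention may differ from the calibrated system.

```python
import math


def pixel_to_camera_point(u, v, fx, fy, cx, cy, L, theta):
    """Triangulate a laser-stripe pixel (u, v) into camera coordinates (X, Y, Z).

    Assumptions (not from the source): pinhole intrinsics fx, fy, cx, cy in
    pixels, no lens distortion, and a laser plane through (L, 0, 0) with
    normal (sin(theta), 0, -cos(theta)); theta is given in radians.
    """
    # Normalised image coordinates, i.e. x'/f and y'/f.
    xn = (u - cx) / fx
    yn = (v - cy) / fy
    # Plane constraint (X - L) sin(theta) - Z cos(theta) = 0 with X = xn * Z.
    denom = xn * math.sin(theta) - math.cos(theta)
    if abs(denom) < 1e-12:
        raise ValueError("viewing ray is parallel to the laser plane")
    Z = L * math.sin(theta) / denom
    return xn * Z, yn * Z, Z
```

Working in normalised coordinates keeps the focal length out of the plane equation, so the same routine applies to any calibrated camera matrix.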

The measuring system is calibrated to obtain its parameters. The camera parameters are calibrated by Zhang’s calibration method [13]: a checkerboard with 10-mm squares is used as the target, and 13 checkerboard images at different positions are used. The reprojection error after calibration is 0.11 pixels. The light plane calibration method [14] is used to determine baseline L and included angle \(\theta\): the robot raises the entire sensor vertically along the Z-axis, and L and \(\theta\) are calculated from the rising distance of the sensor and the corresponding translation of the laser stripes in the image. Table 1 shows the parameters of the measuring system after calibration.

2.2 Weld-seam image acquisition and preprocessing

The image information of weld seams is obtained with the weld-seam measuring system and the mathematical model. The V-shaped weld seam is the most widely used in industry [15] and has more feature points than other weld types. This work collects images of two V-shaped weld grooves with different angles (see Fig. 2). Feature points A and C are the edge points used to calculate the width of the weld seam among the cross-sectional dimensions of the groove; feature point B marks the bottom of the weld seam and is used as the base point for multi-layer multi-pass welding.

Figure 3a–b show the V-shaped weld-seam images acquired by the weld-seam measuring system. Besides the useful laser-stripe information, the images contain interference (e.g., metal reflections and image transmission noise), which reduces the accuracy of feature extraction. Therefore, the images require preprocessing.

In practical processing, threshold segmentation is applied to the weld-seam image to distinguish the target from the background. Among the available threshold segmentation methods [16, 17], the Otsu method is used in this work to determine the threshold of each weld-seam image automatically. It is derived from the least-squares principle, requires little computation, and gives excellent segmentation quality without parameter tuning. \({C}_{0}\in [0,t-1]\) and \({C}_{1}\in [t,L-1]\) are the two classes of pixels divided by threshold t; L is the number of gray levels of the image; \({P}_{0}\) and \({P}_{1}\) are the ratios of pixels in \({C}_{0}\) and \({C}_{1}\) to the entire image; \({\mu }_{0}\) and \({\mu }_{1}\) are the average gray values of pixels in \({C}_{0}\) and \({C}_{1}\), respectively; μ is the average gray level of the entire image; \({\sigma }_{B}^{2}\) is the interclass variance of the image:

\[{\sigma }_{B}^{2}={P}_{0}{({\mu }_{0}-\mu )}^{2}+{P}_{1}{({\mu }_{1}-\mu )}^{2}\]

Optimal segmentation threshold \({t}_{0}\) is calculated by the Otsu method:

\[{t}_{0}=\underset{0\le t\le L-1}{\mathrm{arg\,max}}\;{\sigma }_{B}^{2}(t)\]

As \(t\) is swept from 0 to L − 1, the optimal segmentation threshold \({t}_{0}\) is the value that maximizes the interclass variance \({\sigma }_{B}^{2}\). Figure 4a–b show the threshold segmentation results. The segmented laser stripes are bands of finite width, from which it is difficult to obtain the feature information of weld seams accurately. Therefore, the Canny operator is used to extract the edge contours of the laser stripes [18], and the centerline of each stripe is then fitted from its edge lines. The stripe edges of the 60° weld contain little noise, whereas the 90° weld shows more high-contrast environmental noise because of the different positions of external light sources (see Fig. 4c–d). The edges extracted for both welds are not continuous. This is because the Canny operator computes the gradient magnitude and direction of each pixel and applies non-maximum suppression along the gradient direction; a threshold is then set to obtain better edge detection, so weak edge segments may be discarded.
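The threshold search described above can be sketched with NumPy as follows. It evaluates the interclass variance \({P}_{0}{({\mu }_{0}-\mu )}^{2}+{P}_{1}{({\mu }_{1}-\mu )}^{2}\) for every candidate t and returns the maximizer; the function name and the assumption of an 8-bit integer image are illustrative choices, not details from this work.

```python
import numpy as np


def otsu_threshold(gray, levels=256):
    """Return t0 that maximises the interclass variance of an integer image.

    Class C0 holds grey values in [0, t-1] and class C1 holds [t, levels-1].
    """
    hist = np.bincount(gray.ravel(), minlength=levels).astype(float)
    prob = hist / hist.sum()
    grey = np.arange(levels)

    cum_p = np.cumsum(prob)           # cum_p[i]  = sum of prob[0..i]
    cum_m = np.cumsum(prob * grey)    # cum_m[i]  = sum of i * prob[0..i]
    mu = cum_m[-1]                    # mean grey level of the whole image

    # Index i of the arrays below corresponds to threshold t = i + 1.
    P0 = cum_p[:-1]
    P1 = 1.0 - P0
    valid = (P0 > 1e-12) & (P1 > 1e-12)   # both classes must be non-empty

    mu0 = np.zeros_like(P0)
    mu1 = np.zeros_like(P0)
    mu0[valid] = cum_m[:-1][valid] / P0[valid]
    mu1[valid] = (mu - cum_m[:-1][valid]) / P1[valid]

    sigma_b = np.where(valid, P0 * (mu0 - mu) ** 2 + P1 * (mu1 - mu) ** 2, 0.0)
    return int(np.argmax(sigma_b)) + 1
```

Pixels with `gray >= t0` are then assigned to the stripe class. Masking out empty classes avoids division by zero at the ends of the histogram.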

2.3 Radon transformation of weld-seam images

Irrelevant factors such as the background are removed to obtain the contour of the laser stripe after the threshold segmentation and edge processing of weld-seam images. However, environmental noises and metal reflection pollutions are not conducive to the direct extraction of weld seams and groove feature points (see Fig. 5).

Original images are transformed into Radon parameter space for extracting linear features, which eliminates noise interferences in preprocessed weld-seam images. Radon is an integral transformation method that can significantly suppress noise interferences and extract stripe features of weld seams [19]. The basic principle of Radon transformation is to integrate any function \(f(x,y)\in {R}^{2}\) originally defined on a 2D plane over straight line L in different directions.

Radon transformation is expressed as:

\[R(d,\theta )={\iint }_{{R}^{2}}f(x,y)\,\delta (x\mathrm{cos}\theta +y\mathrm{sin}\theta -d)\,\mathrm{d}x\,\mathrm{d}y\]

where δ is the Dirac delta function; θ is the angle between projected horizontal axis S and the positive direction of the x-axis; d is the distance between point p(x, y) on S and the origin; line L can be expressed as \(L(x,y)=x\mathrm{cos}\theta +y\mathrm{sin}\theta -d=0\) (see Fig. 6). Each pair \((d,\theta )\) therefore yields one integral value along line L. If the original image contains a line at angle θ, that line corresponds to a maximum of function \(R(d,\theta )\) in the transform-domain image. In this way, Radon transformation converts a straight line in the 2D image into a singular point in the parameter space.

The image is divided into left and right parts, and multi-angle Radon transformation (\(\theta \in [0^\circ ,180^\circ ]\)) is applied to each part separately to distinguish the two groups of stripe edges in the horizontal direction. Figures 7 and 8 show the Radon transformation of the 60° and 90° weld-seam images, respectively. Each of the left and right partial views of the preprocessed weld-seam images contains four stripe edge lines. Therefore, each Radon parameter space has four singular points \([{P}_{i}\in R(d,\theta ),i=1,2,3,4]\), which represent the four edge lines of the laser stripes. After Radon transformation, the noise in the stripe image is spread over the Radon parameter space instead of accumulating into peaks, and is thereby suppressed.
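The mapping from an edge line to a peak in \(R(d,\theta )\) can be illustrated with a discrete, NumPy-only accumulation in which every edge pixel contributes to the bin \(d=x\mathrm{cos}\theta +y\mathrm{sin}\theta\). This is a simplified stand-in for a full Radon implementation; the function name, the image-centre origin, and the 1° angular step are assumptions for illustration.

```python
import numpy as np


def radon_accumulate(edge_img, thetas_deg):
    """Discrete Radon-style accumulation R(d, theta) of an edge image.

    Each nonzero pixel (x, y), measured from the image centre, adds its value
    to the bin d = round(x cos(theta) + y sin(theta)) for every angle theta.
    Returns the accumulator and d_max, so that row index i maps to d = i - d_max.
    """
    h, w = edge_img.shape
    ys, xs = np.nonzero(edge_img)
    weights = edge_img[ys, xs].astype(float)
    x = xs - (w - 1) / 2.0
    y = ys - (h - 1) / 2.0

    d_max = int(np.ceil(np.hypot(h, w) / 2.0))
    R = np.zeros((2 * d_max + 1, len(thetas_deg)))
    for j, t in enumerate(np.deg2rad(thetas_deg)):
        d = np.rint(x * np.cos(t) + y * np.sin(t)).astype(int) + d_max
        R[:, j] = np.bincount(d, weights=weights, minlength=2 * d_max + 1)
    return R, d_max
```

For a single straight line, all of its pixels fall into one bin at the line's own angle and spread over several bins at every other angle, which is why isolated noise pixels do not form comparable peaks.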

2.4 Laser-line feature point extraction of welds

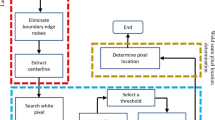

Radon transformation of the preprocessed laser-stripe image yields a Radon parameter space with multiple singular points. Such points are conventionally extracted manually [20] or by projection methods [21]. Manual extraction cannot realize automatic feature extraction, and the projection method has a narrow scope of application because it is not suited to extracting multiple peak points distributed at different positions. Therefore, a module is designed to automatically extract the peak points that represent the image features of weld seams.

Figure 9 shows the Radon transformation result of the left half of the preprocessed 60° weld-seam image and the 3D views of two local regions where peak points are located. Singular points are located in the region where θ ranges from 40 to 90°, and every two singular points approach each other (see Fig. 9). Two laser stripes appear in the original image, where the upper and lower edges of each stripe are roughly parallel and at a small distance. The feature extraction method for weld seams consists of Harris feature point extraction, K-means clustering, and singular point acquisition according to the characteristics of the Radon parameter space.

Harris corner detection is an algorithm for processing dynamic local extrema of images [22]. The principle of the Harris algorithm is applied to detect the region with a significant gradient change in the Radon parameter space, namely, the region where singular points are located. A neighboring window of a pixel in Radon parameter space is taken to observe the average pixel gray value \(E(u,v)\) change in the window when it moves in all directions:

where (x,y) is the pixel coordinate of the Radon parameter space image; (u,v) is the offset of the window in the horizontal and vertical directions; \({I}_{x}\) and \({I}_{y}\) are the gradients of the image in the horizontal and vertical directions; \(w(x,y)\) is the Gaussian weighting function. Structure tensor matrix M is defined as follows:

where \({\lambda }_{1}\) and \({\lambda }_{2}\) are two eigenvalues of matrix M; \({R}_{H}\) is the feature point response function relevant to eigenvalues of M. The threshold of \({R}_{H}\) is set to determine whether the point in the Radon parameter space image is the Harris point to be extracted. The Harris method is used to extract n Harris points \({P}_{n}^{*}\in R\left(d,\theta \right)\) from the Radon parameter space image. They are distributed in the region where singular points are located (see Fig. 10).
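In the standard Harris formulation, the quantities named above are \(E(u,v)=\sum_{x,y}w(x,y){[I(x+u,y+v)-I(x,y)]}^{2}\approx (u,v)\,M\,{(u,v)}^{T}\), where M is the Gaussian-weighted sum of the matrix with rows \(({I}_{x}^{2},{I}_{x}{I}_{y})\) and \(({I}_{x}{I}_{y},{I}_{y}^{2})\), and \({R}_{H}=\mathrm{det}M-k{(\mathrm{trace}M)}^{2}={\lambda }_{1}{\lambda }_{2}-k{({\lambda }_{1}+{\lambda }_{2})}^{2}\). The constant k (commonly 0.04 to 0.06) and the Gaussian width used below are assumptions, not values taken from this work. A minimal NumPy sketch of the response map:

```python
import numpy as np


def gaussian_kernel1d(sigma):
    """Normalised 1D Gaussian kernel truncated at about 3 sigma."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def smooth(img, sigma):
    """Separable Gaussian weighting w(x, y): filter rows, then columns."""
    k = gaussian_kernel1d(sigma)
    tmp = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, tmp)


def harris_response(img, sigma=1.5, k=0.04):
    """Harris response R_H = det(M) - k * trace(M)^2 at every pixel."""
    Iy, Ix = np.gradient(img.astype(float))   # gradients along rows, columns
    Sxx = smooth(Ix * Ix, sigma)
    Syy = smooth(Iy * Iy, sigma)
    Sxy = smooth(Ix * Iy, sigma)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2
```

Thresholding the returned map and keeping the pixels above the threshold gives the candidate Harris points; the response is positive where both eigenvalues are large, negative along straight edges, and near zero in flat regions.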

The n Harris corner points \({P}_{n}^{*}\in R(d,\theta )\) extracted above are distributed over two singular point areas. They are processed by the K-means clustering algorithm to obtain the centers of the singular point areas [23]. The K-means clustering algorithm takes Euclidean distance as the similarity index and iteratively finds the centers of K classes in a given dataset. The clustering aims at minimizing the error sum of squares over all classes:

\[SSE=\sum_{k=1}^{K}\sum_{{x}_{i}\in {C}_{k}}{\Vert {x}_{i}-{u}_{k}\Vert }^{2}\]

where \({C}_{k}\) is the k-th class and \({u}_{k}\) is its center.

A given dataset containing n data points is divided into K classes by the algorithm as follows:

(1) K objects in data points are selected as the initial center, and each object represents a cluster center \({u}_{K}\).

(2) Other data points \({x}_{i}\) in the sample are assigned to the class with the closest Euclidean distance to the cluster center.

(3) The cluster center is updated by calculating the average data points in class.

(4) The iteration repeats until the class converges or reaches the maximum number of iterations.
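The four steps above can be sketched with NumPy as follows. The random choice of initial centers, the fixed seed, and the handling of an empty class are illustrative assumptions; the source does not specify them.

```python
import numpy as np


def kmeans(points, k, max_iter=100, seed=0):
    """Plain K-means on an (n, dim) array; returns (centers, labels)."""
    rng = np.random.default_rng(seed)
    pts = np.asarray(points, dtype=float)
    # Step 1: pick K data points as the initial cluster centers.
    centers = pts[rng.choice(len(pts), size=k, replace=False)]
    for _ in range(max_iter):
        # Step 2: assign every point to the nearest center (Euclidean distance).
        dist = np.linalg.norm(pts[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Step 3: move each center to the mean of its class
        # (an empty class keeps its previous center).
        new_centers = np.array([
            pts[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # Step 4: stop when the centers no longer move.
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return centers, labels
```

Applied to the Harris points of one Radon image with `k=2`, the two returned centers would mark the two singular point areas.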

Two cluster centers are used to construct singular point areas C1 and C2 in each Radon space image after clustering (see Fig. 11a). Figure 11b shows the contour map of C1 where two peak points are singular points in the Radon parameter space, which represents linear features of the original weld-seam image.

2.5 Characterizations of laser line images of welds

Singular points of the weld laser line are inversely Radon transformed to the original image to obtain the expression of the contour line of the laser stripe and extract the weld laser line. Then, the center line equation is obtained according to the red edge straight line of each segment of the weld laser line to characterize the laser incident line (see Fig. 12a–b).

2.6 Determination of the 3D coordinates

A, B, and C in Fig. 12 are the image feature points of the V-shaped weld, which are the intersections of the center lines of each segment. Based on the pixel coordinates of A, B, and C, the 3D coordinates corresponding to the three points A, B, and C can be obtained through the mathematical model of the weld measurement system (see Table 2 for results).
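Once each edge line is known by its Radon parameters \((d,\theta )\), a center line and the intersections A, B, and C follow from elementary line geometry. The sketch below assumes that all lines are expressed in one common image frame as \(x\mathrm{cos}\theta +y\mathrm{sin}\theta =d\), and it approximates the center line of two nearly parallel edges by averaging their parameters; both the helper names and this averaging rule are illustrative assumptions rather than the procedure used in this work.

```python
import numpy as np


def centerline(edge_a, edge_b):
    """Approximate the centre line of two near-parallel edges (d, theta)."""
    (d1, t1), (d2, t2) = edge_a, edge_b
    return (d1 + d2) / 2.0, (t1 + t2) / 2.0


def intersect(line_a, line_b):
    """Intersection (x, y) of two lines x cos(theta) + y sin(theta) = d."""
    (d1, t1), (d2, t2) = line_a, line_b
    A = np.array([[np.cos(t1), np.sin(t1)],
                  [np.cos(t2), np.sin(t2)]])
    if abs(np.linalg.det(A)) < 1e-9:
        raise ValueError("lines are parallel")
    return np.linalg.solve(A, np.array([d1, d2]))
```

For example, `intersect(centerline(e1, e2), centerline(e3, e4))` would give one feature point from four edge lines, where `e1` to `e4` are hypothetical `(d, theta)` pairs with θ in radians.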

3 Experiment and analysis

A weld-seam recognition system based on laser vision was built to verify the accuracy of the proposed algorithm. The platform consisted of a FANUC industrial robot M-10iA with its control cabinet, an industrial camera AVT-Guppy-Pro F201B with a frame rate of 60 frames per second, a lens KOWA-LM12HC, and a red line laser with a wavelength of 650 nm (see Fig. 13). Weld seam measurement experiments were carried out on 90° welds measuring 180 × 60 × 30 mm and 60° welds measuring 180 × 36.64 × 30 mm (length × width × height).

The experimental process was as follows. The calibrated laser vision sensor was installed on the robot with a working distance of about 150 mm. The robot moved longitudinally along the weld seam while the industrial camera acquired images continuously, and one image was taken for processing at fixed distance intervals. When the first group of images was collected, the optical center of the camera was taken as the origin of the world coordinate system, and weld-seam images with laser stripes at different positions were then obtained. After acquisition, the proposed algorithm extracted the feature points of each weld image and calculated their coordinates. The 3D coordinates from all weld images were combined to measure the entire weld.

3.1 Robustness test

The obtained weld-seam image is processed by adding Gaussian white noises with a variance of 0.01 to test the robustness of the algorithm. Figure 14a–b indicates the feature extraction results. The extracted centerlines and feature points are consistent with Fig. 12a–b. Results show that the proposed algorithm with high stability can accurately extract laser stripes and feature information under strong noise interferences.
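The noise injection used in this test can be reproduced along the following lines. The sketch assumes that the variance of 0.01 refers to intensities normalised to [0, 1] and that the noise has zero mean; both points, and the function name, are assumptions rather than details given above.

```python
import numpy as np


def add_gaussian_noise(gray_u8, variance=0.01, seed=None):
    """Add zero-mean Gaussian white noise to an 8-bit greyscale image.

    The variance is interpreted on intensities scaled to [0, 1].
    """
    rng = np.random.default_rng(seed)
    img = gray_u8.astype(float) / 255.0
    noisy = img + rng.normal(0.0, np.sqrt(variance), size=img.shape)
    # Clip back to the valid range before converting to 8 bits.
    return (np.clip(noisy, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

A variance of 0.01 corresponds to a standard deviation of 0.1, or roughly 25 grey levels on an 8-bit image, which is strong enough to break up thin stripe edges.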

Feature extraction is performed on the weld image with strong metal reflection and the influence of welding arcs to test the ability to resist strong noise interferences in the actual welding environment (see Fig. 15). Figure 15a presents a weld with strong metal reflection caused by an external light source as well as strong interferences caused by welding arcs. Figure 15b–c are the feature extraction results of strong interference images.

The straight line recognition of the laser-stripe edge is accurate. The method in the work can directly extract the edge line of the laser fringe from the strong interference image and does not need to select the laser line area separately. Meanwhile, the extraction of the weld centerline will not be disturbed by noises, which can obtain the stable feature-point recognition of the weld image. Besides, the feature extraction process is automated due to the addition of the corner extraction and clustering algorithm of Radon parameter space. There is no need to set complicated threshold parameters to meet the automation needs of welding robots.

3.2 Accuracy test

Thirty images of the centerline of the laser stripes were collected at 5-mm interval for the two types of welds to test the extraction accuracy of the system (see Fig. 16). The red centerline represents the laser stripe centerline position of the first collected weld-seam image, namely, the position where the robot starts welding. The red arrow is the traveling direction of the welding torch, which is corrected by the robot according to the position of feature points from subsequent scanning.

3D coordinates of weld-seam image feature points A, B, and C in each weld-seam image were calculated to obtain the width and depth of the weld seam, which were compared with actual dimensions. Measuring error \(d=\left|{D}_{m}-{D}_{t}\right|\), and average measurement accuracy ε are expressed as:

where \({D}_{m}\) is the value measured by the proposed algorithm and \({D}_{t}\) is the actual dimension of the weld seam.
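One definition of ε that is consistent with the figures reported below is \(\varepsilon =(1-\overline{d}/{D}_{t})\times 100\%\), where \(\overline{d}\) is the mean of the errors d over all images. For instance, a mean width error of 0.105 mm on a 36.64 mm groove gives 99.7%. This form is an assumption; the sketch below uses hypothetical sample values.

```python
def measurement_stats(measured, actual):
    """Return (max error, min error, mean error, accuracy in percent).

    Accuracy is taken as (1 - mean_error / actual) * 100, an assumed form.
    """
    errors = [abs(m - actual) for m in measured]
    mean_err = sum(errors) / len(errors)
    accuracy = (1.0 - mean_err / actual) * 100.0
    return max(errors), min(errors), mean_err, accuracy


# Hypothetical width measurements (mm) of a groove that is 36.64 mm wide.
widths = [36.745, 36.535]
max_e, min_e, mean_e, acc = measurement_stats(widths, 36.64)
```

Because the error is normalised by the full dimension, a sub-millimetre error on a groove tens of millimetres wide always yields an accuracy above 99%, so the absolute errors are the more informative figures.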

Figure 17 shows the dimensional measurement errors of two weld seams obtained by the proposed algorithm. In 60° V-shaped weld seams, the maximum error is 0.211 mm for the measured width; the minimum error is 0.008 mm; the average measurement error is 0.105 mm; average measurement accuracy ε is 99.7%. The maximum error is 0.302 mm for the measured depth; the minimum error is 0.014 mm; the average measurement error is 0.087 mm; average measurement accuracy ε is 99.7%.

In 90° V-shaped weld seams, the maximum error is 0.653 mm for the measured width; the minimum error is 0.086 mm; the average measurement error is 0.391 mm; average measurement accuracy ε is 99.4%. The maximum error is 0.242 mm for the measured depth; the minimum error is 0.002 mm; the average measurement error is 0.158 mm; average measurement accuracy ε is 99.5%.

3.3 Stability test

The acquisition interval of adjacent weld images is set to 1.5, 2.0, and 2.5 mm, respectively, to verify the stability of the algorithm. One hundred weld images were collected to test the experimental effect at different intervals. Figure 18 presents the analysis of the width and depth errors of the two grooves at different intervals.

As listed in Table 3, the maximum and average width measurement errors are 0.573 and 0.163 mm, respectively. As listed in Table 4, the maximum and average depth measurement errors are 0.583 and 0.131 mm, respectively. In summary, the average measurement accuracy of the three groups of experiments is 99.6%, which meets the requirements of automatic welding inspection.

4 Conclusions

An automatic extraction algorithm of weld-seam features was proposed based on Radon transformation and Harris clustering. Then, a weld-seam measuring system was built for experimental verification. The proposed method performed multi-angle Radon transformation on laser fringe images of welds to obtain parameter space images. Meanwhile, the corner extraction and cluster analysis algorithms were combined to automate weld feature extraction, and the effect of feature recognition was more stable and robust. Welding feature points were automatically extracted from the V-shaped weld seams at different angles by the proposed method, which achieved high accuracy in the case of considerable image noises and interferences. The main conclusions were described as follows.

(1) The linear features were accurately extracted from the image after Radon transformation. There was no need for complex image preprocessing, such as image enhancement and filtering.

(2) Based on Harris corner detection and the K-means clustering algorithm, the weld-seam feature extraction module adaptively extracted singular points from the transform domain, which achieved high extraction accuracy.

(3) Weld-seam images with strong interference noises were subjected to feature extraction experiments. Results showed that the proposed algorithm had high robustness and anti-interference ability.

(4) A welding seam measurement system was established. The calculated average width measurement error was 0.158 mm, and the average depth measurement error was 0.132 mm on a 60° V-shaped groove. The calculated average width measurement error was 0.209 mm, and the average depth measurement error was 0.125 mm on the 90° V-groove. The maximum error of weld size obtained under multiple sets of experiments was 0.653 mm, the average error was 0.156 mm, and the average measurement accuracy was over 99%, which met the requirements of automatic welding inspection.

(5) When there is a long linear interference in the image, the feature extraction algorithm may identify the interference as a laser stripe, resulting in wrong extraction. Moreover, the system lacks defect detection for welded seams, which will be addressed in follow-up research.

Data availability

The data supporting the conclusions are included in the article.

Code availability

Not applicable.

References

[1] Rout A, Deepak BBVL, Biswal BB (2019) Advances in weld seam tracking techniques for robotic welding: a review. Robot Comput Integr Manuf 56:12–37. https://doi.org/10.1016/j.rcim.2018.08.003

[2] Tian Y, Liu H, Li L, Yuan G, Feng J, Chen Y, Wang W (2021) Automatic identification of multi-type weld seam based on vision sensor with silhouette-mapping. IEEE Sens J 21(4):5402–5412. https://doi.org/10.1109/JSEN.2020.3034382

[3] Zou Y, Wang Y, Zhou W, Chen X (2018) Real-time seam tracking control system based on line laser visions. Opt Laser Technol 103:182–192. https://doi.org/10.1016/j.optlastec.2018.01.010

[4] Li W, Li H, Zhang H (2020) Light plane calibration and accuracy analysis for multi-line structured light vision measurement system. Optik 207:163882–163893. https://doi.org/10.1016/j.ijleo.2019.163882

[5] Cibicik A, Njaastad EB, Tingelstad L, Egeland O (2022) Robotic weld groove scanning for large tubular T-joints using a line laser sensor. Int J Adv Manuf Technol 120(7):4525–4538. https://doi.org/10.1007/s00170-022-08941-7

[6] Lee JP, Wu QQ, Park MH, Park CK, Kim IS (2015) A study on modified Hough algorithm for image processing in weld seam tracking system. Adv Mater Res 1088:824–828. https://doi.org/10.4028/www.scientific.net/AMR.1088.824

[7] Tan Z, Zhao B, Ji Y, Xu X, Kong Z, Liu T, Luo M (2022) A welding seam positioning method based on polarization 3D reconstruction and linear structured light imaging. Opt Laser Technol 151:108046–108056. https://doi.org/10.1016/j.optlastec.2022.108046

[8] Xiao R, Xu Y, Hou Z, Chen C, Chen S (2019) An adaptive feature extraction algorithm for multiple typical seam tracking based on vision sensor in robotic arc welding. Sens Actuators A 297:111533. https://doi.org/10.1016/j.sna.2019.111533

[9] Du R, Xu Y, Hou Z, Shu J, Chen S (2019) Strong noise image processing for vision-based seam tracking in robotic gas metal arc welding. Int J Adv Manuf Technol 101:2135–2149. https://doi.org/10.1007/s00170-018-3115-2

[10] Zou Y, Chen T, Chen X, Li J (2022) Robotic seam tracking system combining convolution filter and deep reinforcement learning. Mech Syst Signal Process 165:108372–108085. https://doi.org/10.1016/j.ymssp.2021.108372

[11] Banafian N, Fesharakifard R, Menhaj MB (2021) Precise seam tracking in robotic welding by an improved image processing approach. Int J Adv Manuf Technol 114:251–270. https://doi.org/10.1007/s00170-021-06782-4

[12] Ding C, Tang L, Cao L, Shao X, Wang W, Deng S (2021) Preprocessing of multi-line structured light image based on Radon transformation and gray-scale transformation. Multimed Tools Appl 80(5):7529–7546. https://doi.org/10.1007/s11042-019-08031-z

[13] Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22(11):1330–1334. https://doi.org/10.1109/34.888718

[14] Zhu Z, Wang X, Zhou F, Chen Y (2020) Calibration method for a line-structured light vision sensor based on a single cylindrical target. Appl Opt 59(5):1376–1382. https://doi.org/10.1364/AO.378638

[15] Zeng J, Cao G, Peng Y, Huang S (2020) A weld joint type identification method for visual sensor based on image features and SVM. Sensors 20(2):471–471. https://doi.org/10.3390/s20020471

[16] Mohana Sundari L, Sivakumar P (2021) Detection and segmentation of cracks in weld images using ANFIS classification method. Russ J Nondestruct Test 57(1):72–82. https://doi.org/10.1134/S1061830921300033

[17] Barros W, Dias L, Fernandes M (2021) Fully parallel implementation of otsu automatic image thresholding algorithm on FPGA. Sensors 21(12):4151–4168. https://doi.org/10.3390/s21124151

[18] Kim YW, Ir J, Krishna AVN (2020) A study on the effect of canny edge detection on downscaled images. Pattern Recognit Image Anal 30(3):372–381. https://doi.org/10.1134/S1054661820030116

[19] Hasegawa M, Tabbone S (2016) Histogram of Radon transform with angle correlation matrix for distortion invariant shape descriptor. Neurocomputing 173(1):24–35. https://doi.org/10.1016/j.neucom.2015.04.100

[20] Silva RRD, Escarpinati MC, Backes AR (2021) Sugarcane crop line detection from UAV images using genetic algorithm and Radon transform. SIViP 15(8):1723–1730. https://doi.org/10.1007/s11760-021-01908-3

[21] Chen J, Li J, He C, Li W, Li Q (2021) Automated pleural line detection based on radon transform using ultrasound. Ultrason Imaging 43(1):19–28. https://doi.org/10.1177/0161734620976408

[22] Mikolajczyk K, Schmid C (2002) An affine invariant interest point detector. ECCV. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-47969-4_9

[23] Chen L, Shan W, Liu P (2021) Identification of concrete aggregates using K-means clustering and level set method. Structures 34:2069–2076. https://doi.org/10.1016/j.istruc.2021.08.048

Funding

This study was supported by Science and Technology Major Project of Fujian Province (Grant No. 2020HZ03018).

Author information

Contributions

Fang Guo: methodology, formal analysis, writing — original draft. Weibin Zheng: methodology, investigation, formal analysis, writing — original draft. Guofu Lian: formal analysis, writing — review and editing, supervision. Mingpu Yao: formal analysis, writing — review and editing, supervision.

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

All authors agree to give their consent to publish.

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Guo, F., Zheng, W., Lian, G. et al. A V-shaped weld seam measuring system for large workpieces based on image recognition. Int J Adv Manuf Technol 124, 229–243 (2023). https://doi.org/10.1007/s00170-022-10507-6

DOI: https://doi.org/10.1007/s00170-022-10507-6