Abstract

In this work, a machine learning approach based on polynomial regression was explored to analyze the optimal processing parameters and predict the target particle sizes for ball milling of alumina ceramics. Data points were collected experimentally by measuring particle sizes. Prediction interval (PI)-based optimization methods using polynomial regression analysis are proposed. As a first step, functional relations between processing parameters (inputs) and quality responses (outputs) are derived by regression analysis. Next, based on these relations, objective functions to be maximized are defined by the desirability approach. Finally, the proposed PI-based methods optimize both the parameter points and the parameter intervals of the target mill to achieve user-specified target responses. The optimization results show that the PI-based point optimization method can select and recommend statistically reliable optimized parameter points even though the objective functions have no unique solution. Confirmation experiments show that the optimized parameter points produce final powders whose quality responses are close to the target responses.

1 Introduction

Machine learning (ML) methods have accelerated scientific advancement in recent years because they can analyze large amounts of data (big data) and can therefore select optimal processing parameters more precisely. Because of their long cycles and low efficiency, traditional methods such as empirical trial and error are unable to keep pace with the development of material analysis and design. Accordingly, ML methods, which combine low computational cost and short development cycles with powerful data processing and high prediction performance, are widely used. ML methods have been successfully applied in various fields including glasses [1,2,3,4,5], ceramics [6, 7], biomaterials [8], and materials science [1, 4, 9, 10] for the prediction of mechanical properties, kinetic properties, thermal resistance properties, and many more. These methods have demonstrated their efficacy in processing and quantitatively analyzing input data. ML methods have also been applied to the discovery of high-entropy ceramics [11] and alloys [12], and to the prediction of the permittivity of microwave dielectric ceramics [13], the melting temperature of ultra-high temperature ceramics [14], and the bending strength of silicon nitride ceramics [15].

Reliable, robust, and accurate experimental input data are essential for ML, since many methods are based on supervised learning, in which the models are trained on the input data. These methods can predict target values with high accuracy, so selecting an appropriate method is very important. Regression models predict continuous outputs and are generally trained by minimizing a squared-error loss function over a training dataset. Regression-based ML methods have been applied effectively to different materials for the optimization and prediction of various properties [2, 3, 6,7,8]. For instance, Yang et al. [2] predicted the Young’s modulus of silicate glasses by combining ML with high-throughput molecular dynamics. Liu et al. [3] predicted the dissolution kinetics of silicate glasses by topology-informed ML. Yang et al. [6] predicted the interfacial thermal resistance between graphene and hexagonal boron nitride using artificial neural network (ANN) models. However, ML methods have very rarely been applied to ceramics ball milling.

The ball mill is one of the most popular comminution machines used to reduce starting powders to desired particle sizes and particle size distributions (PSDs) [16,17,18]. It has been widely applied for fine grinding of materials in various fields including ceramics, mineral processing, and electronics. Ball milling in general, and wet ball milling in particular, is a complex process governed by processing parameters such as slurry amount [19], powder and ball loading [20], milling speed and time [21, 22], and container and ball size [23]; the quality of the produced powders also depends on the types and properties of the starting powders. Optimizing these processing parameters is therefore essential in ceramics processing to achieve user-specified target values.

Many present-day industrial applications demand comprehensive theoretical simulations before experimental design. Therefore, for the optimization of engineering manufacturing processes, statistical experiment design techniques (e.g., the Taguchi method and the response surface method (RSM)) and computer modeling techniques such as ANN and genetic algorithms (GA) have been used. Numerous studies have optimized the input parameters of ball milling processes for different materials including TiO2 [24], WC–Co [25], WC–MgO [26], zeolite [27], calcite [28], refractory ores [29, 30], and Al 2024 alloy powders [31]. Hou et al. [24] integrated the parameter design of the Taguchi method, RSM, and GA and applied them to optimize the milling process parameters for titania nanoparticles. Patil and Anandhan [32] employed the Taguchi method to analyse the effect of planetary milling on fly ash, and analysis of variance (ANOVA) was used to assess the significance of the input parameters. Central composite design (CCD), a standard RSM experimental design, was implemented by Erdemir [33] to determine the effects of high-energy milling parameters for micro and nano boron carbide powders. The adequacy of the mathematical models and the significance of the regression coefficients were analyzed using ANOVA. Petrovic et al. [34] optimized the ball milling parameters for TiO2–CeO2 nano powders through RSM using CCD. Regression analysis showed good agreement of the experimental data with a second-order polynomial model. The Taguchi methodology was used by Hajji et al. [35] for the mechanosynthesis of hydroxyfluorapatite using planetary ball milling. Recently, Santosh et al. [36] optimized mill parameters by carrying out systematically designed experiments on a selected low-grade chromite ore. For this purpose, stirrer speed, grinding time, feed size, and solids concentration were varied according to a CCD.
However, these studies have several limitations. First, most studies focused on optimizing milling parameters using only one quality response (mostly the median particle size, d50). If, in addition to d50, the shape of the PSD is also considered as a quality response, multiple response optimization (MRO) problems must be dealt with. Methods based on main effect plots cannot handle MRO problems, in which the trade-off between multiple responses must be treated. Second, most studies paid little attention to ball milling optimization problems in which desired target values are set for the quality responses. Main effect plots can maximize or minimize quality responses but cannot minimize the differences between the response values and their target values. Third, very few studies have attempted to optimize both milling parameter points and their intervals. Optimized parameter intervals help to operate the target mill more flexibly by accounting for process uncertainties caused by measurement errors in the quality responses, setting errors of the processing parameters, and errors caused by omitting uncontrollable factors (e.g., temperature and humidity).

This paper proposes prediction interval (PI)-based optimization methods for optimizing a wet ball mill using polynomial regression analysis. The aim of the proposed methods is to optimize both the parameter points and the parameter intervals of the target mill by solving MRO problems in which user-specified target values are set for some quality responses. After the regression functions are derived from the collected dataset and the objective functions are defined, the proposed PI-based optimization methods are applied to optimize both the parameter points and the intervals of the target mill. To verify the effectiveness of the optimized parameter points, confirmation experiments were performed several times. Herein, we report on the applicability of a polynomial regression-based ML approach to ball milling of alumina ceramics. Experimental data points were collected systematically via particle size analysis. Using this model, optimal processing parameters can be selected and response values can be predicted from the values of the processing parameters. In particular, a quantitative analysis of the factors affecting the particle size was conducted. Although this study focuses on the ball milling of alumina ceramics, the proposed approach could be applied to other ceramic materials as well.

2 Experimental details

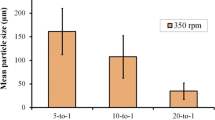

The five processing parameters (inputs) and the three quality responses (outputs) of the target mill are summarized in Table 1. Al2O3 powders (AES-11, purity of 99.9%, d50 = 0.7 μm, d90 = 1.96 μm, Sumitomo Chemical, Japan) were used as starting powders. The value of d50 (y1) and the values of width and skewness (y2 and y3), which reflect the shape of the PSD, are employed as quality responses to assess the milled alumina powders. The volume percent of slurry (x1), solid content (x2), milling speed (x3), milling time (x4), and ball size (x5) are considered the key processing parameters affecting these responses. In this paper, the proposed PI-based optimization methods are applied to the same experimental dataset used in our recent publication [37]. For the parameters other than the milling time (x4) and the ball size (x5), i.e., x1, x2, and x3, ranges and levels were set and fifteen milling experiments were designed by CCD. This set of fifteen experiments was repeated with three different ball sizes (3, 5, and 10 mm), so the total number of experiments conducted was 45 (= 15 × 3). In each experiment, milling was carried out for 24 h, and the values of the quality responses were measured and calculated 4, 8, 12, and 24 h after the start of milling. A total of 180 (= 45 × 4) data pairs were prepared, each consisting of the setting values of the five milling parameters (inputs) and the measured values of the three responses (outputs) (see supplementary information). More experimental details about the ball milling and processing parameters can be found elsewhere [37].

Figure 1 summarizes the procedure of the proposed PI-based optimization methods, which is roughly divided into a preliminary phase and an optimization phase. In the preliminary phase, first, functional relations \(\hat{y}_{l} ({\mathbf{x}})\), l = 1,…, L, between p inputs (i.e., processing parameters) and L outputs (i.e., quality responses) are derived by applying regression analysis to an experimental dataset \(\{ ({\mathbf{x}}_{i} \in \Re^{p} ;{\mathbf{y}}_{i} \in \Re^{L} )\}_{i = 1}^{n}\). Second, based on the \(\hat{y}_{l} ({\mathbf{x}})\), the importance values of the p inputs for each output are estimated by a Monte Carlo (MC)-based method; the importance matrix \({\mathbf{I}}_{imp.} \in \Re^{L \times p}\) that consists of the estimated importance values can be employed to prioritize processing parameters and to optimize parameter intervals. Third, the objective function D(x) to be maximized for solving MRO problems is defined by the desirability approach. In the optimization phase, first, the proposed point optimization method is applied to D(x) to obtain K' optimized parameter points \(\{ {\mathbf{x}}_{(1)}^{*} ,...,{\mathbf{x}}_{{(K^{\prime})}}^{*} \}\) to be recommended to the users (e.g., process operators and engineers). After that, the proposed interval optimization method is used to find lower and upper bound vectors \({\mathbf{x}}_{LB}^{*} = [x_{LB,1}^{*} ,...,x_{LB,p}^{*} ]^{T}\) and \({\mathbf{x}}_{UB}^{*} = [x_{UB,1}^{*} ,...,x_{UB,p}^{*} ]^{T}\) that define parameter intervals \([x_{LB,j}^{*} ,x_{UB,j}^{*} ]\), j = 1,…, p, enclosing an optimized point x*\(\in \{ {\mathbf{x}}_{(1)}^{*} ,...,{\mathbf{x}}_{{(K^{\prime})}}^{*} \}\).

3 Polynomial regression analysis

Second-order polynomial regression analysis [29, 38,39,40] has been widely used to optimize processing parameters in various industrial fields, including powder processing by ball milling, since it yields highly interpretable regression models that capture the nonlinearities contained in such experimental datasets with relatively low model complexity. For example, RSM was employed by Costa and Garcia [41] to optimize the efficiency of a refrigeration cycle demonstration using an MRO approach. Mostafanezhad et al. [42] analysed the formability of aluminium in a two-point incremental forming process by employing RSM. Parida et al. [43] employed RSM with a full factorial design to model and analyse the response parameters for emission reduction in a variable compression ratio engine. Yaliwal et al. [44] established RSM-based quadratic models between the parameters and the characteristics of interest for a compression ignition engine operated on biodiesel and producer gas.

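Functional relations between the p inputs x1,…, xp and the lth output yl (l = 1,…, L) are formulated by the regression analysis as follows [37]:

\[ y_{l} = \beta_{0}^{l} + \sum\limits_{j = 1}^{p} {\beta_{j}^{l} x_{j} } + \sum\limits_{j = 1}^{p - 1} {\sum\limits_{k = j + 1}^{p} {\beta_{jk}^{l} x_{j} x_{k} } } + \sum\limits_{j = 1}^{p} {\beta_{jj}^{l} x_{j}^{2} } + \varepsilon_{l} \qquad (1) \]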

where εl is an error term,\(\beta_{0}^{l}\) is an intercept, and \(\beta_{j}^{l}\),\(\beta_{jk}^{l}\), and \(\beta_{jj}^{l}\) are regression coefficients associated with linear, interaction, and quadratic terms, respectively. In Eq. (1), the total number of coefficients to be estimated is p' = 1 + 2p + p(p − 1)/2. Let yl = [y1l,…, ynl]T \(\in \Re^{n}\) and Z = [z1,…, zn]T \(\in \Re^{{n \times p^{\prime}}}\) be the output vector that consists of n observations for lth output and the design matrix, respectively; the ith row of Z is \({\mathbf{z}}_{i}^{T} = {\mathbf{z}}({\mathbf{x}}_{i} )^{T} =\)[1, xi1,…, xip, xi1xi2,…, xi(p−1)xip,…, \(x_{i1}^{2}\),…,\(x_{ip}^{2}\)], where \({\mathbf{z}}( \cdot ):\Re^{p} \to \Re^{{p^{\prime}}}\). Without loss of generality, it can be assumed that the values of all inputs x1,…, xp have been standardized to be in the range [− 1, 1]. The coefficient vector \({{\varvec{\upbeta}}}^{l} \in \Re^{{p^{\prime}}}\) composed of the p' coefficients can be estimated by the method of least squares as follows:\({\hat{\mathbf{\beta }}}^{l} = ({\mathbf{Z}}^{T} {\mathbf{Z}})^{ - 1} {\mathbf{Z}}^{T} {\mathbf{y}}_{l}\). An unbiased estimator for the standard deviation \(\sigma_{{\varepsilon_{l} }}\) of the εl is sl = \(\sqrt {\tfrac{1}{{n - p^{\prime}}}\sum\nolimits_{i = 1}^{n} {(y_{il} - \hat{y}_{il} )^{2} } }\), where \(\hat{y}_{il} = {\mathbf{z}}_{i}^{T} {\hat{\mathbf{\beta }}}^{l}\), i = 1,…, n. The standard error (SE) of the j'th component \(\hat{\beta }_{{j^{\prime}}}^{l}\) in \({\hat{\mathbf{\beta }}}^{l}\) is \(SE(\hat{\beta }_{{j^{\prime}}}^{l} ) = s_{l} \sqrt {({\mathbf{Z}}^{T} {\mathbf{Z}})_{{j^{\prime}j^{\prime}}}^{ - 1} }\), j' = 1,…, p', where \(({\mathbf{Z}}^{T} {\mathbf{Z}})_{{j^{\prime}j^{\prime}}}^{ - 1}\) is the j'th diagonal element of the matrix (ZTZ)−1. 
The t-statistic used to test the statistical significance of the j'th term in the vector \({\mathbf{z}} \in \Re^{{p^{\prime}}}\) is calculated as \(t_{{j^{\prime}}}^{l} = \hat{\beta }_{{j^{\prime}}}^{l} / SE(\hat{\beta }_{{j^{\prime}}}^{l} )\); under the null hypothesis that the corresponding coefficient is zero, it follows the t-distribution t(n − p') with n − p' degrees of freedom. The p value of \(t_{{j^{\prime}}}^{l}\) is defined as \(\Pr (\left| T \right| > \left| {t_{{j^{\prime}}}^{l} } \right|)\), where T ~ t(n − p'); the lower the p value, the higher the statistical significance of the j'th term in z. In this paper, terms with p values smaller than 0.1 are regarded as statistically significant.
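The ordering of the terms in z(x) and the coefficient count p' can be checked with a few lines of Python. This is only a minimal sketch: the function names `quadratic_row` and `n_coefficients` and the coded settings in `x_coded` are illustrative assumptions, not part of the original study.

```python
from itertools import combinations


def quadratic_row(x):
    """Return z(x): intercept, linear terms, pairwise interactions, then squares."""
    linear = list(x)
    # combinations() yields (x1, x2), (x1, x3), ..., (x_{p-1}, x_p) in that order.
    interactions = [a * b for a, b in combinations(x, 2)]
    squares = [a * a for a in x]
    return [1.0] + linear + interactions + squares


def n_coefficients(p):
    """p' = 1 + 2p + p(p - 1)/2 for a full second-order model in p inputs."""
    return 1 + 2 * p + p * (p - 1) // 2


# Hypothetical coded settings in [-1, 1]; these are not measured data.
x_coded = [0.5, -1.0, 0.0, 1.0, -0.5]
z = quadratic_row(x_coded)
print(len(z), n_coefficients(5))
```

Stacking one such row per experiment gives the design matrix Z to which the least-squares formula above is applied.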

The output of the lth regression function in Eq. (1) for a new input vector xnew = [xnew,1,…, xnew,p]T is calculated as \(\hat{y}_{l} ({\mathbf{x}}_{{{\text{new}}}} ) = {\mathbf{z}}({\mathbf{x}}_{{{\text{new}}}} )^{T} {\hat{\mathbf{\beta }}}^{l} = {\mathbf{z}}_{{{\text{new}}}}^{T} {\hat{\mathbf{\beta }}}^{l}\), and its 100(1 − α)% PI, \([PI_{LB}^{l} ({\mathbf{x}}_{{{\text{new}}}} ),\) \(PI_{UB}^{l} ({\mathbf{x}}_{{{\text{new}}}} )]\), is calculated as

\[ \hat{y}_{l} ({\mathbf{x}}_{{{\text{new}}}} ) \pm t_{1 - \alpha /2} (n - p^{\prime})\, s_{l} \sqrt {1 + {\mathbf{z}}_{{{\text{new}}}}^{T} ({\mathbf{Z}}^{T} {\mathbf{Z}})^{ - 1} {\mathbf{z}}_{{{\text{new}}}} } \qquad (2) \]

where α is the significance level for the PI, and t1−α/2(n − p') is the 100(1 − α/2)th percentile of the t distribution t(n − p').
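As a rough illustration of how such an interval is evaluated, the sketch below computes the standard prediction interval of a least-squares model, ŷ ± t·s·sqrt(1 + zᵀ(ZᵀZ)⁻¹z). Everything in it is an assumption made for illustration: the helper names, the tiny one-input design matrix, and the values of `y_hat` and `s`. The critical value 3.182 is the 97.5th percentile of the t distribution with 3 degrees of freedom and is passed in by hand because the standard library has no t quantile function.

```python
import math


def solve(a, b):
    """Solve a @ u = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * w for v, w in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def leverage(Z, z_new):
    """z_new^T (Z^T Z)^{-1} z_new, computed without forming the inverse."""
    k = len(z_new)
    ztz = [[sum(row[i] * row[j] for row in Z) for j in range(k)] for i in range(k)]
    u = solve(ztz, z_new)
    return sum(a * b for a, b in zip(z_new, u))


def prediction_interval(y_hat, s, h, t_crit):
    """Return (lower, upper) = y_hat -/+ t_crit * s * sqrt(1 + h)."""
    half = t_crit * s * math.sqrt(1.0 + h)
    return y_hat - half, y_hat + half


# Hypothetical design: intercept plus one coded input at five levels.
Z = [[1.0, -1.0], [1.0, -0.5], [1.0, 0.0], [1.0, 0.5], [1.0, 1.0]]
z_new = [1.0, 0.0]
h = leverage(Z, z_new)
y_hat, s, t_crit = 0.58, 0.02, 3.182  # illustrative values; 5 - 2 = 3 d.o.f.
lo, hi = prediction_interval(y_hat, s, h, t_crit)
print(h, lo, hi)
```

The interval widens as the new point moves away from the centre of the design, because the leverage term grows there.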

3.1 MC-based method for estimating importance values of inputs

In this work, the following Monte Carlo (MC)-based method is used to estimate the importance values of the p inputs xj (j = 1,…, p) for the lth output yl (l = 1,…, L); this is a modified version of the method presented in [45, 46]. First, N uniform random vectors x(1),…, x(N) with p dimensions are generated and substituted into the lth regression function \(\hat{y}_{l} ({\mathbf{x}}) = f_{l} ({\mathbf{x}}|{\hat{\mathbf{\beta }}}^{l} )\) to obtain N output values \(\hat{y}_{l}^{(1)} ,...,\hat{y}_{l}^{(N)}\), and their median yl,med is calculated. The random vectors are then partitioned into two sets as follows: \(X_{{{\text{larger}}}}^{l} =\){x(i)|\(\hat{y}_{l}^{(i)}\) ≥ yl,med, i = 1,…, N} and \(X_{{{\text{smaller}}}}^{l} = \{ {\mathbf{x}}^{(1)} ,...,{\mathbf{x}}^{(N)} \} \setminus X_{{{\text{larger}}}}^{l}\). Next, two empirical cumulative distribution functions (ECDFs), \(F_{{{\text{larger}}}}^{l} (x_{j} )\) and \(F_{{{\text{smaller}}}}^{l} (x_{j} )\), are estimated for each input xj from the jth elements of the vectors in \(X_{{{\text{larger}}}}^{l}\) and \(X_{{{\text{smaller}}}}^{l}\), respectively. In [45, 46], the Kolmogorov–Smirnov distance between \(F_{{{\text{larger}}}}^{l} (x_{j} )\) and \(F_{{{\text{smaller}}}}^{l} (x_{j} )\) was regarded as the importance value of the jth input xj for the lth response yl. In this paper, to estimate the importance value more precisely, the total area of the region(s) enclosed by the two ECDFs is calculated by numerical integration, and this total area is regarded as the importance value of xj for yl. After this procedure is carried out for all l and j, the importance matrix \({\mathbf{I}}_{{{\text{imp}}{.}}} \in \Re^{L \times p}\) composed of the estimated importance values is returned.
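The procedure above can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than the implementation used in the study: the function names, the grid-based trapezoidal integration, the sample size, and the toy response `toy_response` (which depends strongly on its first input and weakly on its second) are all hypothetical.

```python
import random
import statistics
from bisect import bisect_right


def ecdf(sorted_values, x):
    """Empirical CDF of a sorted sample evaluated at x."""
    return bisect_right(sorted_values, x) / len(sorted_values)


def mc_importance(f, p, n_samples=4000, n_grid=201, seed=0):
    """Area between the ECDFs of each input in the 'larger' and 'smaller' sets."""
    rng = random.Random(seed)
    xs = [[rng.uniform(-1.0, 1.0) for _ in range(p)] for _ in range(n_samples)]
    ys = [f(x) for x in xs]
    med = statistics.median(ys)
    larger = [x for x, y in zip(xs, ys) if y >= med]
    smaller = [x for x, y in zip(xs, ys) if y < med]
    grid = [-1.0 + 2.0 * i / (n_grid - 1) for i in range(n_grid)]
    step = grid[1] - grid[0]
    importance = []
    for j in range(p):
        big = sorted(x[j] for x in larger)
        small = sorted(x[j] for x in smaller)
        gap = [abs(ecdf(big, g) - ecdf(small, g)) for g in grid]
        # Trapezoidal rule for the area enclosed between the two ECDFs.
        importance.append(step * (sum(gap) - 0.5 * (gap[0] + gap[-1])))
    return importance


def toy_response(x):
    """Hypothetical response: dominated by x[0], barely affected by x[1]."""
    return 3.0 * x[0] + 0.1 * x[1]


imp = mc_importance(toy_response, p=2)
print(imp)
```

For an input that has no influence, the two ECDFs nearly coincide and the area is close to zero; for a dominant input on [− 1, 1] the area approaches 1.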

4 Optimization of parameter points and intervals

4.1 Desirability approach

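The desirability approach [47,48,49] has been commonly employed to define objective functions for MRO problems in which multiple quality responses with different ranges are handled. Depending on whether a response yl should be minimized, maximized, or brought as close as possible to a target value yl,target, different desirability functions are used, and these functions transform the output value \(\hat{y}_{l} ({\mathbf{x}})\) to the range of 0 to 1. If there is a user-specified target value for yl, the following is used as its desirability function:

\[ d_{l} (\hat{y}_{l} ({\mathbf{x}})) = \begin{cases} \left( \dfrac{\hat{y}_{l} ({\mathbf{x}}) - y_{l,\min } }{y_{l,{\text{target}}} - y_{l,\min } } \right)^{s} , & y_{l,\min } \le \hat{y}_{l} ({\mathbf{x}}) \le y_{l,{\text{target}}} \\ \left( \dfrac{y_{l,\max } - \hat{y}_{l} ({\mathbf{x}})}{y_{l,\max } - y_{l,{\text{target}}} } \right)^{t} , & y_{l,{\text{target}}} < \hat{y}_{l} ({\mathbf{x}}) \le y_{l,\max } \\ 0, & {\text{otherwise}} \end{cases} \qquad (3) \]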

where yl,min and yl,max are the lower and upper limits of yl, respectively, both of which can be obtained from the observations y1l,…, ynl; yl,target \(\in\)[yl,min, yl,max] is a target value for yl, and s and t are design values that determine the shape of Eq. (3). If the lth response should be minimized, the following desirability function is used:

The desirability function used when the response should be maximized is similar to Eq. (4), and thus omitted due to space constraints. In this paper, both design values of s and t in Eqs. (3) and (4) are set to 1.

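The following overall desirability function D(x), a weighted geometric mean of dl(∙), l = 1,…, L, is used as the objective function for MRO problems:

\[ D({\mathbf{x}}) = \left( \prod\limits_{l = 1}^{L} {d_{l} (\hat{y}_{l} ({\mathbf{x}}))^{w_{l} } } \right)^{1/\sum\nolimits_{l = 1}^{L} {w_{l} } } \qquad (5) \]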

where wl is the weight assigned to the lth response; in this paper, all weights are set to 1. The weights can be set differently depending on the priority of the quality responses. For example, if bringing the value of d50 (y1) closer to its target value is more important than minimizing the value of width (y2), w1 should be set larger than w2 (e.g., w1 = 5 and w2 = 1).
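The desirability functions described in this subsection can be sketched in Python using the standard Derringer-Suich forms. Several points are assumptions: the function names, the use of the exponent `t` in the smaller-is-better case, the requirement y_min < y_target < y_max, and the numeric limits (0.40 and 0.70 μm for d50, 0.5 and 1.5 for width), which are hypothetical. Only the target of 0.58 μm comes from the text.

```python
def d_target(y, y_min, y_target, y_max, s=1.0, t=1.0):
    """Target-is-best desirability: 1 at the target, 0 at or beyond either limit.

    Assumes y_min < y_target < y_max.
    """
    if y_min <= y <= y_target:
        return ((y - y_min) / (y_target - y_min)) ** s
    if y_target < y <= y_max:
        return ((y_max - y) / (y_max - y_target)) ** t
    return 0.0


def d_minimize(y, y_min, y_max, t=1.0):
    """Smaller-is-better desirability: 1 at or below y_min, 0 at or above y_max."""
    if y <= y_min:
        return 1.0
    if y >= y_max:
        return 0.0
    return ((y_max - y) / (y_max - y_min)) ** t


def overall_desirability(ds, ws):
    """Weighted geometric mean of the individual desirabilities."""
    if any(d == 0.0 for d in ds):
        return 0.0
    total = 1.0
    for d, w in zip(ds, ws):
        total *= d ** w
    return total ** (1.0 / sum(ws))


# Hypothetical limits for d50 (0.40 to 0.70 um) and width (0.5 to 1.5).
d1 = d_target(0.58, 0.40, 0.58, 0.70)
d2 = d_minimize(1.0, 0.5, 1.5)
print(d1, d2, overall_desirability([d1, d2], [1.0, 1.0]))
```

Because D(x) is a geometric mean, a single response with zero desirability drives the overall value to zero, which is why the function returns early in that case.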

4.2 PI-based method for optimizing parameter points

It should be noted that MRO problems in which some quality responses are required to equal user-specified target values do not have unique solutions. That is, each time an optimization algorithm (e.g., quadratic programming or particle swarm optimization (PSO)) is executed to maximize D(x), a different solution may be found. It is therefore essential to decide which of these solutions should be selected and recommended to users. Among them, the proposed PI-based point optimization method (Fig. 2) selects statistically reliable solutions (i.e., optimized parameter points) based on the lengths of their PIs. Here, it is assumed that the shorter the PIs of a solution, the smaller its uncertainty from a statistical viewpoint. The proposed method extends the method in [50] so that it is also applicable to MRO problems, and it quantifies the quality of the different solutions in terms of PIs instead of confidence intervals. The PIs of the optimized parameter points must be calculated beforehand in order to optimize their parameter intervals; as will be described in Section 5, the PIs are also helpful for interpreting the predicted quality responses and the results of the confirmation experiments.

In the proposed method in Fig. 2, first, K different solutions \({\mathbf{x}}_{k}^{*}\), k = 1,…, K, are obtained by applying an optimization algorithm to maximize D(x) K times. Any optimization algorithm (derivative-based or derivative-free) can be employed for this purpose. In this work, PSO [51,52,53], a well-known global optimization algorithm, was applied to find these solutions; the ‘particleswarm’ MATLAB function in the Global Optimization Toolbox, which is a minimizer, can be used to maximize D(x) by minimizing −D(x). A detailed explanation of the PSO algorithm is omitted due to space constraints; for more details, readers are referred to Refs. [51,52,53]. After the K solutions are found, the lengths of the L PIs, \(PI_{k}^{l} =\)\(PI_{UB}^{l} ({\mathbf{x}}_{k}^{*} ) -\)\(PI_{LB}^{l} ({\mathbf{x}}_{k}^{*} )\), l = 1,…, L, are calculated for all k using Eq. (2); the upper and lower limits yl,max and yl,min of yl are used to standardize the values of \(PI_{k}^{l}\). Finally, the values PIk, k = 1,…, K, obtained by summing the standardized values of \(PI_{k}^{l}\) over l, are sorted in ascending order (i.e., PI(1) < PI(2) < \(\cdots\)), and the K' solutions \({\mathbf{x}}_{(1)}^{*} ,...,{\mathbf{x}}_{{(K^{\prime})}}^{*}\) corresponding to the K' smallest values are returned.
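The selection step at the end of this procedure amounts to a scoring and sorting pass, which might look like the sketch below. The function name `rank_by_pi_length`, the data layout, and all numbers are illustrative assumptions. Dividing each PI length by (y_max − y_min) is also one assumed way of standardizing, since the text does not spell out the formula.

```python
def rank_by_pi_length(solutions, pi_bounds, y_limits, k_keep):
    """Return the k_keep solutions with the smallest summed standardized PI length.

    solutions : list of K candidate parameter points
    pi_bounds : pi_bounds[k][l] = (PI_LB, PI_UB) of response l at solution k
    y_limits  : y_limits[l] = (y_min, y_max) used to standardize response l
    """
    scores = []
    for k, bounds in enumerate(pi_bounds):
        total = 0.0
        for (lo, hi), (y_min, y_max) in zip(bounds, y_limits):
            total += (hi - lo) / (y_max - y_min)
        scores.append((total, k))
    scores.sort()  # ascending: shortest PIs first
    return [solutions[k] for _, k in scores[:k_keep]]


# Three hypothetical solutions (coded points) with PIs for two responses.
solutions = [[0.1, -0.3], [0.4, 0.2], [-0.6, 0.9]]
pi_bounds = [
    [(0.50, 0.66), (0.9, 1.3)],
    [(0.52, 0.64), (1.0, 1.3)],
    [(0.48, 0.68), (0.8, 1.4)],
]
y_limits = [(0.40, 0.70), (0.5, 1.5)]
ranked = rank_by_pi_length(solutions, pi_bounds, y_limits, k_keep=2)
print(ranked)
```

In this toy input the second solution has the shortest standardized PIs, so it is returned first.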

4.3 PI-based method for optimizing parameter intervals

To deal with the process uncertainties, in addition to an optimized parameter point x* = [\(x_{1}^{*}\),…,\(x_{p}^{*}\)]T, it is also necessary to obtain statistically meaningful optimized parameter intervals \([x_{LB,j}^{*} ,x_{UB,j}^{*} ]\), j = 1,…, p, enclosing the point x*, i.e., \(x_{j}^{*} \in\)\([x_{LB,j}^{*} ,x_{UB,j}^{*} ],\forall j\). It is desirable that parameter points belonging to the input domain bounded by these p intervals achieve quality responses similar to those of x*.

In the p-dimensional input space, a hyper-rectangle (i.e., orthotope) that is centered at x* and has \(2^{p}\) vertices vm \(\in \Re^{p}\), m = 1,…, \(2^{p}\), can be imagined. Figure 3 shows an example of a hyper-rectangle with 8 (= \(2^{3}\)) vertices defined in a three-dimensional input space. For each l, while the hyper-rectangle is enlarged by gradually increasing the length of its edges, one can find the last hyper-rectangle defined before any of the output values \(\hat{y}_{l} ({\mathbf{v}}_{m} )\) at its \(2^{p}\) vertices departs from the PI of x*, \([PI_{LB}^{l} ({\mathbf{x}}^{*} ),\)\(PI_{UB}^{l} ({\mathbf{x}}^{*} )]\). The parameter intervals are then obtained from the L hyper-rectangles found in this way. Weight values \(\overline{W}_{lj}\) (j = 1,…, p, l = 1,…, L) calculated from the importance matrix Iimp. can be used to increase the edge lengths differentially according to the importance values; the larger the importance value of xj, the shorter the edges parallel to the jth input direction.

In the proposed PI-based interval optimization method, for each l, a hyper-rectangle whose edges parallel to the jth input direction have length \(2 \times \delta_{l} \overline{W}_{lj}\) is first defined, where δl > 0 is a small positive increment; this can be represented as a Cartesian product of p intervals as follows [54]: \(\times_{j = 1}^{p} [x_{j}^{*} - \delta_{l} \overline{W}_{lj} ,x_{j}^{*} + \delta_{l} \overline{W}_{lj} ]\), where × is the Cartesian product operator. Second, the \(2^{p}\) vertices are organized into the vertex set V = {vm \(\in \Re^{p}\)|m = 1,…, \(2^{p}\)}. While the length of the edges is increased, the elements of vm that become larger than + 1 (or smaller than − 1) are replaced by + 1 (or − 1), because each element of the input vector x in the regression functions \(\hat{y}_{l} ({\mathbf{x}})\) must lie in the range [− 1, 1]. Third, the maximum value of δl for which the outputs \(\hat{y}_{l} ({\mathbf{v}}_{m} )\) of all vm are included in the lth PI \([PI_{LB}^{l} ({\mathbf{x}}^{*} ),\) \(PI_{UB}^{l} ({\mathbf{x}}^{*} )]\) is found by increasing the value of δl in small steps. Fourth, after the maximum value of δl, \(\delta_{l}^{\max }\), is found for all l, the following upper and lower bound vectors \({\mathbf{x}}_{UB}^{l}\) and \({\mathbf{x}}_{LB}^{l}\) are defined component-wise:

\[ x_{UB,j}^{l} = x_{j}^{*} + \delta_{l}^{\max } \overline{W}_{lj} ,\quad x_{LB,j}^{l} = x_{j}^{*} - \delta_{l}^{\max } \overline{W}_{lj} ,\quad j = 1, \ldots ,p \qquad (6) \]

In Eq. (6), all the components of \({\mathbf{x}}_{UB}^{l}\) and \({\mathbf{x}}_{LB}^{l}\) larger than + 1 (or smaller than − 1) must be replaced by + 1 (or − 1). Based on \({\mathbf{x}}_{UB}^{l}\) and \({\mathbf{x}}_{LB}^{l}\), l = 1,…, L, the L different hyper-rectangles can be defined as \(\times_{j = 1}^{p} [x_{LB,j}^{l} ,x_{UB,j}^{l} ]\), l = 1,…, L. It is important to note that the L regions occupied by these L hyper-rectangles can differ from each other. Finally, the upper and lower bound vectors that define the overlap between all L hyper-rectangles are obtained as follows:

\[ x_{UB,j}^{*} = \mathop {\min }\limits_{l = 1, \ldots ,L} x_{UB,j}^{l} ,\quad x_{LB,j}^{*} = \mathop {\max }\limits_{l = 1, \ldots ,L} x_{LB,j}^{l} ,\quad j = 1, \ldots ,p \qquad (7) \]

The vectors \({\mathbf{x}}_{UB}^{*}\) and \({\mathbf{x}}_{LB}^{*}\) consist of the upper and lower bounds, respectively, of the optimized parameter intervals \([x_{LB,j}^{*} ,\)\(x_{UB,j}^{*} ]\), j = 1,…, p, for x*. These statistically meaningful optimized parameter intervals \([x_{LB,j}^{*} ,x_{UB,j}^{*} ]\) define a region of the input space with the following property: the output values \(\hat{y}_{l} ({\mathbf{x}})\) of the points in the region are included in the PI \([PI_{LB}^{l} ({\mathbf{x}}^{*} ),PI_{UB}^{l} ({\mathbf{x}}^{*} )]\) of the optimized parameter point x*. In other words, points within the region defined by the optimized intervals yield quality responses statistically similar to those of the point x*. Owing to this property, fine-tuning the point x* within the region is permissible. The proposed PI-based interval optimization method explained so far is summarized in Fig. 4.
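The four steps and the final intersection can be sketched in Python as follows. This is a simplified illustration, not the procedure of Fig. 4 itself: the function names, the fixed step size, the two toy linear models, their PIs, and the unit weights are all assumptions, and how the weights are derived from the importance matrix is not reproduced here.

```python
from itertools import product


def clip(v):
    """Keep a coded value inside [-1, 1]."""
    return max(-1.0, min(1.0, v))


def box_bounds(x_star, weights, delta):
    """Lower and upper corners of the clipped hyper-rectangle around x_star."""
    lower = [clip(x - delta * w) for x, w in zip(x_star, weights)]
    upper = [clip(x + delta * w) for x, w in zip(x_star, weights)]
    return lower, upper


def max_delta(f, x_star, weights, pi_lo, pi_hi, step=0.01, max_steps=400):
    """Largest multiple of step for which every vertex output stays in the PI."""
    best = 0.0
    for n in range(1, max_steps + 1):
        delta = n * step
        lower, upper = box_bounds(x_star, weights, delta)
        vertices = product(*zip(lower, upper))  # all 2^p corner points
        if all(pi_lo <= f(v) <= pi_hi for v in vertices):
            best = delta
        else:
            break
    return best


def optimized_intervals(models, x_star, weights, pis, step=0.01):
    """Intersect the per-response boxes: max of lower bounds, min of upper bounds."""
    boxes = []
    for f, w, (pi_lo, pi_hi) in zip(models, weights, pis):
        delta = max_delta(f, x_star, w, pi_lo, pi_hi, step)
        boxes.append(box_bounds(x_star, w, delta))
    p = len(x_star)
    lower = [max(b[0][j] for b in boxes) for j in range(p)]
    upper = [min(b[1][j] for b in boxes) for j in range(p)]
    return lower, upper


def model_1(x):
    return x[0] + 0.5 * x[1]  # hypothetical fitted response 1


def model_2(x):
    return x[1]  # hypothetical fitted response 2


x_star = [0.0, 0.0]
weights = [[1.0, 1.0], [1.0, 1.0]]  # assumed equal edge weights
pis = [(-0.3, 0.3), (-0.1, 0.1)]    # assumed PIs of the two responses at x_star
lower, upper = optimized_intervals([model_1, model_2], x_star, weights, pis)
print(lower, upper)
```

Here the second response has the narrower PI, so its box determines the final intervals. Note that the search inspects only the vertices, as in the method described above; for a strongly curved model the outputs in the interior of the box are not checked by this sketch.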

5 Results and discussion

5.1 Results of regression analysis

To derive regression functions between p = 5 inputs and L = 3 outputs, three full models in Eq. (1) with p' = 21 regression coefficients are fitted to the 180 data pairs by the method of least squares; all input values are standardized to the range [− 1, 1] in advance. Since the number of data samples is limited, the entire dataset is used to build the regression models instead of being split into training and validation sets. To assess their generalization abilities, the performance indices in Table 2 are closely examined. In the full models, the order of the polynomial (i.e., the hyperparameter) is set to 2; as described in Section 3, the dataset collected by CCD can be adequately explained by such models. Although not presented in this paper due to space constraints, the results of exploratory data analysis via scatter plots and main effect plots also indicated that an order of 2 is appropriate. To train the models, the “fitlm” MATLAB function in the Statistics and Machine Learning Toolbox can be used.
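The standardization to [− 1, 1] mentioned above is a linear rescaling, sketched below. The function names are hypothetical. Taking the coded range of the milling time to span 4 to 24 h and that of the ball size to span 3 to 10 mm is an assumption based on the levels mentioned in Section 2, since Table 1 is not reproduced here.

```python
def to_coded(value, low, high):
    """Map a raw setting in [low, high] linearly onto the coded range [-1, 1]."""
    return 2.0 * (value - low) / (high - low) - 1.0


def to_raw(coded, low, high):
    """Inverse mapping from the coded range [-1, 1] back to raw units."""
    return low + (coded + 1.0) * (high - low) / 2.0


# Assumed ranges: milling time 4 to 24 h, ball size 3 to 10 mm.
time_coded = [to_coded(t, 4.0, 24.0) for t in (4.0, 8.0, 12.0, 24.0)]
ball_coded = [to_coded(b, 3.0, 10.0) for b in (3.0, 5.0, 10.0)]
print(time_coded)
print(ball_coded)
print(to_raw(time_coded[2], 4.0, 24.0))
```

The inverse mapping is what allows plot axes and optimized points to be reported in original units after the models have been fitted in coded units.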

After training the three full models, statistically significant terms are identified by testing whether their p values are smaller than 0.1. The reduced models are obtained by removing all other terms from the full models. The following regression functions are derived by refitting the reduced models to the 180 data pairs:

where the SEs of the estimated coefficients are given in parentheses below them. The regression models in Eqs. (8)–(10) are exploited in three ways. First, for any setting of the processing parameters, the models can calculate the predicted values of the quality responses and their prediction intervals. Second, based on the regression models, response surface and contour plots can be drawn to visualize how the processing parameters affect the quality responses. Third, the importance values of the inputs for each output can be quantified by applying the MC-based method to the regression functions.

Table 2 lists performance indices such as the root mean squared error (RMSE), R2, adjusted R2, and F-statistic of the full and reduced models. Because reducing the complexity of the models decreases the number of coefficients to be estimated, the degrees of freedom of the error term εl (l = 1,…, 3) increase, and thus the RMSE values can decrease. In the reduced models, the values of R2 are smaller than those in the full models, but the values of adjusted R2 are larger than or equal to those in the full models. This indicates that, for the given dataset, the generalization capabilities of the reduced models are better than those of the full models. The values of the F-statistic also become larger after the number of coefficients is reduced, so the reduced models can be said to be more statistically significant.
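The relationship between R2 and adjusted R2 described above can be illustrated with the standard formula, adjusted R2 = 1 − (1 − R2)(n − 1)/(n − p'), where p' counts all coefficients including the intercept. The R2 values and the reduced coefficient count below are hypothetical and are not taken from Table 2; only n = 180 and p' = 21 come from the text.

```python
def adjusted_r2(r2, n, n_coef):
    """Adjusted R^2 for a model with n_coef coefficients (intercept included)."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_coef)


n = 180
full = adjusted_r2(0.900, n, 21)     # hypothetical R^2 of a full model
reduced = adjusted_r2(0.899, n, 10)  # hypothetical R^2 after dropping 11 terms
print(full, reduced)
```

With these illustrative numbers the reduced model has a slightly lower R2 but a higher adjusted R2, because the penalty for the number of coefficients shrinks faster than the fit deteriorates.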

Figure 5 shows response surfaces and contour plots visualizing the input–output relationships of the reduced model \(\hat{y}_{1} ({\mathbf{x}})\) in Eq. (8); as space is limited, those of \(\hat{y}_{2} ({\mathbf{x}})\) and \(\hat{y}_{3} ({\mathbf{x}})\) are not presented here. In Fig. 5, the two variables on the X and Y axes of each plot correspond to the interaction terms in Eq. (8). By examining these figures carefully, it is possible to understand qualitatively how the two variables interact with each other in determining the response. In Fig. 5e–h, the narrower the spacing between contour lines, the larger the rate of change of the quality response in the direction orthogonal to the contour lines. As shown in Fig. 5a and e, and Fig. 5c and g, in the early stages of milling (i.e., when x4 ≤ 8 h), changes in x1 and x2 have little effect on y1; when x4 is equal to 24 h, the value of y1 tends to increase with the values of x1 and x2. It is also observed that the reduction rate of y1 deteriorates as the values of x1 and x2 increase. In Fig. 5b and f, it can be seen that as the value of x5 becomes larger, a change in x1 has more effect on the value of y1. Figures 5d and h show that the longer the milling time, the larger the difference in d50 between the 3 and 10 mm balls.

Fig. 5 Response surface plots (a–d) and contour plots (e–h) for the reduced model in Eq. (8). For convenience of interpretation, the ranges of the X and Y axes have been recovered from the range [− 1, 1] to their original ranges

To estimate the importance values of the five inputs, N = 50,000 uniform random vectors \({\mathbf{x}}^{(i)} \sim U[ - 1,1]^{5}\) are generated and substituted into Eqs. (8)–(10). Figure 6a–e show the histograms of x1,…, x5 associated with the two sets \(X_{{{\text{larger}}}}^{1}\) and \(X_{{{\text{smaller}}}}^{1}\), and Fig. 6f–j show the estimated ECDFs \(F_{{{\text{larger}}}}^{1} (x_{j} )\) and \(F_{{{\text{smaller}}}}^{1} (x_{j} )\), j = 1,…, 5; the histograms and the estimated ECDFs for l = 2 and 3 are omitted due to space constraints. Figure 7 depicts bar graphs of the importance values of x1,…, x5 for y1,…, y3, estimated from the ECDFs in Fig. 6f–j. As can be seen in Fig. 7a, x4 is the most important parameter for changing the value of y1, followed in order by x5, x2, x3, and x1. Figure 7b and c indicate that x4, x5, and x1 make the first, second, and third largest contributions in determining the values of y2 and y3; the importance values of x2 and x3 for y2 are similar to each other, but x2 is a more important parameter than x3 for y3.
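
The Monte Carlo procedure described above can be sketched as follows. Several details are assumptions rather than the method of this paper: the samples are split into the "larger" and "smaller" sets at the median of the model output, the importance of each input is taken as the maximum vertical gap between its two ECDFs (normalized to sum to one), and `toy_model` is a hypothetical stand-in for Eq. (8).

```python
import numpy as np

def toy_model(x):
    # Hypothetical polynomial standing in for Eq. (8); inputs are coded in [-1, 1].
    return (0.10 * x[:, 0] + 0.20 * x[:, 1] + 0.15 * x[:, 2]
            + 1.00 * x[:, 3] + 0.50 * x[:, 4] + 0.30 * x[:, 3] * x[:, 4])

def ecdf_gap_importance(model, n_samples=50_000, n_inputs=5, seed=0):
    """Estimate input importance from the gap between two ECDFs per input."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(-1.0, 1.0, size=(n_samples, n_inputs))
    y = model(x)
    larger = y > np.median(y)            # assumed split rule for the two sets
    grid = np.linspace(-1.0, 1.0, 201)   # points at which the ECDFs are compared
    gaps = np.empty(n_inputs)
    for j in range(n_inputs):
        x_l = np.sort(x[larger, j])
        x_s = np.sort(x[~larger, j])
        # ECDF value at g = fraction of samples less than or equal to g
        f_l = np.searchsorted(x_l, grid, side="right") / x_l.size
        f_s = np.searchsorted(x_s, grid, side="right") / x_s.size
        gaps[j] = np.max(np.abs(f_l - f_s))
    return gaps / gaps.sum()             # normalized importance values
```

An input that barely influences the output has nearly identical ECDFs in both sets and therefore a small gap, whereas a dominant input produces two clearly separated ECDFs.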

Fig. 6 Histograms of x1,…, x5 associated with \(X_{{{\text{larger}}}}^{1}\) and \(X_{{{\text{smaller}}}}^{1}\) (a–e) and estimated ECDFs \(F_{{{\text{larger}}}}^{1} (x_{j} )\) and \(F_{{{\text{smaller}}}}^{1} (x_{j} )\), j = 1,…, 5 (f–j). In a–e, for ease of interpretation, the ranges of the X axes have been rescaled from [−1, 1] to their original ranges

5.2 Results of optimization

After assigning an appropriate desirability function dl(∙), l = 1,…, 3, to each of the functions in Eqs. (8)–(10), an overall desirability function D(x) used as the objective function can be defined by Eq. (5). Since a desired target value is set for the d50 (y1), Eq. (3) is employed for d1(∙); since width (y2) and skewness (y3) generally need to be minimized, Eq. (4) is used for d2(∙) and d3(∙).
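
The desirability approach can be sketched in a few lines. The forms below are the standard Derringer–Suich target-is-best and smaller-is-better functions combined by a geometric mean; they are assumed here for illustration and may differ in detail from Eqs. (3)–(5). The function names, the shape exponents, and the limits used in the example are hypothetical.

```python
import numpy as np

def d_target(y, y_min, y_target, y_max, s=1.0, t=1.0):
    """Target-is-best desirability: 1 at y_target, 0 at or beyond the limits."""
    yc = np.clip(np.asarray(y, dtype=float), y_min, y_max)
    left = ((yc - y_min) / (y_target - y_min)) ** s
    right = ((y_max - yc) / (y_max - y_target)) ** t
    return np.where(yc <= y_target, left, right)

def d_smaller(y, y_min, y_max, r=1.0):
    """Smaller-is-better desirability: 1 at y_min, 0 at or beyond y_max."""
    yc = np.clip(np.asarray(y, dtype=float), y_min, y_max)
    return ((y_max - yc) / (y_max - y_min)) ** r

def overall_desirability(d_values):
    """Geometric mean of the individual desirabilities."""
    d = np.asarray(d_values, dtype=float)
    return float(np.prod(d) ** (1.0 / d.size))
```

For example, `overall_desirability([d_target(y1, 0.40, 0.58, 0.80), d_smaller(y2, 1.0, 2.0), d_smaller(y3, 0.0, 1.0)])` combines the three responses, with all limits chosen only for illustration. Because of the geometric mean, the overall value is zero as soon as any single response is completely undesirable.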

Table 3 lists the optimization results of applying the method in Fig. 2 to maximize D(x) when the following target values were set for the three responses and the value of x5 was constrained to 3 mm: y1,target = 0.58 μm, y2,target = “min,” and y3,target = “min.” Here, the goal is to find the parameter points that produce a milled powder whose PSD is centered at the desired value of d50 and, at the same time, is as narrow and symmetrical as possible. The ball size (x5) was restricted to 3 mm because it is a discrete input parameter, so values optimized without this constraint could not be used in practice. In Fig. 2, the values of α, K, and K' are set to 0.01, 100, and 10, respectively. In Table 3, the optimized parameter points and their model outputs are summarized in the second to sixth columns and the seventh to ninth columns, respectively; the 99% PIs enclosing the outputs are also presented below the model outputs. As listed in the table, the outputs of \({\mathbf{x}}_{(1)}^{*} ,...,{\mathbf{x}}_{(10)}^{*}\) are the same or very similar to each other, but the optimized parameter values of \(x_{2}^{*}\) and \(x_{3}^{*}\) in \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(2)}^{*}\) are quite different from those of the others; this is because there are infinitely many input points that can achieve the target value y1,target = 0.58 μm (see Fig. 5e), so optimization algorithms cannot find a unique solution. The parameter intervals optimized by the method in Fig. 4 with α = 0.01 are presented below the parameter values of \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(3)}^{*}\), marked in boldface; the upper and lower bound vectors \({\mathbf{x}}_{UB}^{*}\) and \({\mathbf{x}}_{LB}^{*}\) for \({\mathbf{x}}_{(1)}^{*}\) are [23.55, 70.00, 70.00, 24.00, 4.70]T and [20.00, 55.09, 61.77, 19.12, 3.00]T, respectively.
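
When many candidate points reach practically the same desirability, ranking them by the lengths of their prediction intervals is one way to pick out a few. The sketch below assumes that the PI lengths of the K candidates have already been computed, and that "standardized" means a per-response z-score; the exact standardization and the helper name `rank_by_pi_length` are assumptions, not details taken from Fig. 2.

```python
import numpy as np

def rank_by_pi_length(pi_lengths, n_keep):
    """Select the n_keep candidates with the shortest total standardized PI length.

    pi_lengths: array of shape (K, L); entry [k, l] is the PI length of
    response l evaluated at candidate solution k.
    """
    pi = np.asarray(pi_lengths, dtype=float)
    spread = pi.std(axis=0)
    spread[spread == 0.0] = 1.0              # a constant column carries no ranking information
    z = (pi - pi.mean(axis=0)) / spread      # assumed z-score standardization per response
    total = z.sum(axis=1)                    # total standardized PI length per candidate
    order = np.argsort(total, kind="stable") # shortest total length first
    return order[:n_keep], total
```

With K = 100 candidates and `n_keep=10`, the returned indices would correspond to the K' = 10 points reported in a table such as Table 3. A shorter PI means less uncertainty in the predicted response at that point.
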

Figure 8 shows the contour plots of the regression function \(\hat{y}_{1} ({\mathbf{x}})\), on which the first solution \({\mathbf{x}}_{(1)}^{*}\) and the input region defined by its optimized parameter intervals are indicated by red asterisks and dashed black lines, respectively. In these plots, it should be noted that the model outputs of the input vectors inside the input region are very close to y1,target = 0.58 μm. This suggests that the optimized intervals indicate the tolerable setting error of each parameter and that, depending on field conditions, the parameter values can be adjusted flexibly within the region. Table 4 summarizes the results of applying the method in Fig. 2 to the optimization problem with the target values y1,target = 0.60 μm, y2,target = “min,” and y3,target = “min,” and with the equality constraint x5 = 3 mm. As presented in the table, although the values of \(x_{2}^{*}\) and \(x_{4}^{*}\) in \({\mathbf{x}}_{(9)}^{*}\) are markedly different from those in the others, all the outputs are the same or quite similar. The parameter intervals optimized by the method in Fig. 4 are indicated below the parameter values of \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(9)}^{*}\).
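
The input region spanned by the optimized intervals is a five-dimensional hyper-rectangle, and its corner points can be enumerated directly. The following sketch uses the bound vectors reported above for \({\mathbf{x}}_{(1)}^{*}\) in Table 3; the helper names and the idea of checking a model only at the corners are illustrative assumptions and do not reproduce the procedure in Fig. 4.

```python
import itertools
import numpy as np

# Bound vectors reported in the text for x*_(1) in Table 3 (original units).
x_lb = np.array([20.00, 55.09, 61.77, 19.12, 3.00])
x_ub = np.array([23.55, 70.00, 70.00, 24.00, 4.70])

def hyper_rectangle_vertices(lower, upper):
    """Return all 2**p corner points of the box [lower, upper] as a (2**p, p) array."""
    return np.array(list(itertools.product(*zip(lower, upper))))

def max_deviation(model, vertices, target):
    """Largest absolute gap between the model output at the vertices and the target."""
    return float(np.max(np.abs(model(vertices) - target)))
```

Here `model` is any callable that maps an array of input points to predicted responses. For a second-order polynomial the extreme outputs can also occur inside the box, so a corner check of this kind is only a quick screen and not a guarantee.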

Fig. 8 Contour plots of \(\hat{y}_{1} ({\mathbf{x}})\) with \({\mathbf{x}}_{(1)}^{*}\) in Table 3 and the input region defined by its optimized parameter intervals. For ease of interpretation, the ranges of the X and Y axes have been rescaled from [−1, 1] to their original ranges

The productivity of the target mill is directly related to input parameters such as solid content (x2), milling speed (x3), and milling time (x4) [55]. The solid content determines the amount of final powder produced, and the milling speed and time determine the electrical energy consumption (i.e., production costs). Among the K' = 10 solutions listed in Tables 3 and 4, process operators and engineers can select appropriate solutions in consideration of productivity. In Table 3, to satisfy the target values and, at the same time, produce a larger amount of milled powder, \({\mathbf{x}}_{(1)}^{*}\) or \({\mathbf{x}}_{(2)}^{*}\) with a higher value of x2 can be used. The values of x2 in \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(2)}^{*}\) are about 10 wt% higher than those of the others, while all the values of x1 and x4 in Table 3 are extremely similar to each other. However, if \({\mathbf{x}}_{(1)}^{*}\) or \({\mathbf{x}}_{(2)}^{*}\) is used, the container must be rotated at a higher milling speed (i.e., \(x_{3}^{*}\) = 70%), and thus the energy consumption increases. In Table 4, to attain the target values and reduce the production time simultaneously, \({\mathbf{x}}_{(9)}^{*}\) can be used; it shortens the milling time by 3.47 h compared to \({\mathbf{x}}_{(1)}^{*}\). However, the value of x2 in \({\mathbf{x}}_{(9)}^{*}\) is 9.33 wt% lower than that in \({\mathbf{x}}_{(1)}^{*}\), and thus the amount of powder produced by \({\mathbf{x}}_{(9)}^{*}\) will be smaller than that produced by \({\mathbf{x}}_{(1)}^{*}\).

5.3 Results of confirmation experiments

To verify the effectiveness of the optimized parameter points, confirmation experiments were performed based on \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(3)}^{*}\) in Table 3, and \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(9)}^{*}\) in Table 4. In these experiments, the milling parameters were set up in accordance with the optimized points, the final powders were produced, and then their three quality responses were measured. Considering the process uncertainties, the same experiments were repeated four times for each of the above four optimized parameter points.

Tables 5 and 6 list the quality responses measured from the four milled powders produced by the optimized points \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(3)}^{*}\) in Table 3, respectively; the averaged values of the measured responses are also summarized in the last row of each table. In these tables, the indices 1, 2, 3, and 4 correspond to the four repeated experiments. Figure 9a and b show the PSDs of the four milled powders in Tables 5 and 6, respectively. In Table 5, the measured values of d50 from the 1st and 2nd powders are 0.603 μm and 0.604 μm, approximately 0.02 μm higher than the target value (i.e., 0.58 μm); their measured values of y2 and y3 are smaller than the model outputs \(\hat{y}_{2} ({\mathbf{x}}_{(1)}^{*} )\) = 1.102 and \(\hat{y}_{3} ({\mathbf{x}}_{(1)}^{*} )\) = 0.239, respectively. The measured values of d50 from the 3rd and 4th powders are similar to the target value, and their values of y2 and y3 are also smaller than the model outputs. In Table 6, the differences between all the measured values of y1 and its target value are less than or equal to 0.013 μm, and the values of y2 and y3 are smaller than the outputs \(\hat{y}_{2} ({\mathbf{x}}_{(3)}^{*} )\) = 1.106 and \(\hat{y}_{3} ({\mathbf{x}}_{(3)}^{*} )\) = 0.245. Since all the measured responses in Tables 5 and 6 fall within the PIs in Table 3, the procedure in Fig. 1 can be considered to achieve statistically reliable optimization results. The average values of the responses in Tables 5 and 6 differ in the d50, width, and skewness by 0.01 μm, 0.035, and 0.009, respectively; since \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(3)}^{*}\) yield similar measured responses, it seems reasonable to suppose that either one can be used to produce a final powder that satisfies the target values.

Fig. 9 PSDs of the four milled powders in Tables 5 (a) and 6 (b) (#1, #2, #3, and #4 indicate the four repeated experiments)

Tables 7 and 8 summarize the measured responses of the four powders obtained by \({\mathbf{x}}_{(1)}^{*}\) and \({\mathbf{x}}_{(9)}^{*}\) in Table 4, respectively. It can be confirmed that all the responses fall within the PIs in Table 4. The differences in the averaged values of d50, width, and skewness between Tables 7 and 8 are 0.008 μm, 0.007, and 0.003, respectively; these are negligible when the process uncertainties are taken into account. Figure 10a and b present the PSDs of the four powders in Tables 7 and 8, respectively. As can be seen from these figures, the four PSDs measured in the four repeated experiments are extremely similar in shape; the shapes of the PSDs in Fig. 10a and b are also quite similar to each other.

6 Conclusions

In this study, an ML approach based on polynomial regression models was introduced to quantitatively analyze the optimal processing parameters and predict the target particle sizes for ball milling of alumina ceramics. The median particle size, width, and skewness of the PSDs of milled powders were regarded as quality responses. The volume percent of slurry, solid content, milling speed, milling time, and ball size were considered as the key input parameters to be optimized. PI-based point and interval optimization methods using polynomial regression analysis were proposed. After deriving the functional relations between the inputs and the responses, and defining objective functions for solving MRO problems by the desirability approach, the proposed methods were used to optimize both parameter points and intervals for accomplishing user-specified target responses.

The main advantages of the proposed PI-based parameter point and interval optimization methods in Figs. 2 and 4 are as follows. First, most previous studies on solving MRO problems did not provide a way to address the situation in which desired target values are set for some quality responses, so that there is no unique solution. This paper proposes the PI-based point optimization method in Fig. 2, which quantifies the quality of different candidate solutions for MRO problems according to the lengths of their PIs and then recommends statistically reliable solutions. Second, previous studies focused mainly on optimizing parameter points and paid little attention to interval optimization. This paper proposes the PI-based interval optimization method in Fig. 4, which optimizes parameter intervals enclosing the optimized parameter points in the light of process uncertainties. The optimized intervals can help to assess the tolerable setting errors of processing parameters and to fine-tune the optimized points within the input domain restricted by the intervals.

The optimization results showed that the proposed point optimization method can select and recommend statistically reliable optimized parameter points even though unique solutions for the objective functions do not exist. These results were confirmed by additional confirmation experiments. Compared with the PSDs of the starting powders, whose tails were skewed to the right, those of the final powders showed more symmetrical shapes; in addition, the final powders had d50 values similar to the target values. Finally, although this study focused on the ball milling of alumina ceramics, the approach reported herein could be applicable to other ceramic materials.

Data availability

All data generated or mentioned in this study are included in this published article.

Code availability

No code was provided in this manuscript.

Abbreviations

- L: Number of quality responses (outputs)
- p: Number of processing parameters (inputs)
- yl, xj: lth output and jth input
- \(\hat{y}_{l} ({\mathbf{x}})\): Regression function for lth output yl
- \(\hat{y}_{l} ({\mathbf{x}}_{{{\text{new}}}} )\): Output of lth regression function for new input vector xnew
- \([PI_{LB}^{l} ({\mathbf{x}}_{{{\text{new}}}} ),PI_{UB}^{l} ({\mathbf{x}}_{{{\text{new}}}} )]\): Prediction interval for \(\hat{y}_{l} ({\mathbf{x}}_{{{\text{new}}}} )\)
- \(PI_{k}^{l}\): Length of prediction interval \([PI_{LB}^{l} ({\mathbf{x}}_{k}^{*} ),PI_{UB}^{l} ({\mathbf{x}}_{k}^{*} )]\)
- PIk: Total length of L prediction intervals obtained by summing all the standardized values of \(PI_{k}^{1} ,...,PI_{k}^{L}\)
- \({\mathbf{I}}_{{{\text{imp}}{.}}} \in \Re^{L \times p}\): Importance matrix that consists of importance values of p inputs for L outputs
- dl(∙): Desirability function for lth output yl
- yl,target: Target value for lth output yl
- yl,min, yl,max: Lower and upper limits of lth output yl
- D(x): Overall desirability function (objective function for multiple response optimization problem)
- \({\mathbf{x}}^{*} = [x_{1}^{*} ,...,x_{p}^{*} ]^{T}\): Optimized parameter point
- \({\mathbf{x}}_{LB}^{*} ,{\mathbf{x}}_{UB}^{*}\): Lower and upper bounds for defining optimized parameter intervals
- \([x_{LB,j}^{*} ,x_{UB,j}^{*} ]\): Optimized parameter interval for jth input
- JL×p: L by p matrix of ones
- δl: Small positive increment
- Wlj, \(\overline{W}_{lj}\): (l, j)th element of W = JL×p − Iimp., and standardized value of Wlj
- \(\times_{j = 1}^{p} [x_{j}^{*} - \delta_{l} \overline{W}_{lj} ,x_{j}^{*} + \delta_{l} \overline{W}_{lj} ]\): p-dimensional hyper-rectangle centered at x*
- vm, V: Vertices of hyper-rectangle, and vertex set composed of all vm
- ML: Machine learning
- PI: Prediction interval
- ANN: Artificial neural networks
- PSD: Particle size distribution
- RSM: Response surface method
- GA: Genetic algorithm
- ANOVA: Analysis of variance
- CCD: Central composite design
- MRO: Multiple response optimization
- MC: Monte Carlo
- PSO: Particle swarm optimization
- RMSE: Root mean squared error
- ECDF: Empirical cumulative distribution function

References

Schmidt J, Marques MRG, Botti S, Marques MAL (2019) Recent advances and applications of machine learning in solid-state materials science. NPJ Comput Mater 5:83

Yang K, Xu X, Yang B, Cook B, Ramos H, Krishnan NMA, Smedskjaer MM, Hoover C, Bauchy M (2019) Predicting the Young’s modulus of silicate glasses using high-throughput molecular dynamics simulations and machine learning. Sci Rep 9:8739

Liu H, Zhang T, Krishnan NMA, Smedskjaer MM, Ryan JV, Gin S, Bauchy M (2019) Predicting the dissolution kinetics of silicate glasses by topology-informed machine learning. NPJ Mater Degrad 3:32

Wei J, Chu X, Sun X-Y, Xu K, Deng H-X, Chen J, Wei Z, Lei M (2019) Machine learning in materials science. Infomat 1:338–358

Liu H, Fu Z, Yang K, Xu X, Bauchy M (2021) Machine learning for glass science and engineering: a review. J Non-Cryst Solids 557:119419

Yang H, Zhang Z, Zhang J, Zeng XC (2018) Machine learning and artificial neural network prediction of interfacial thermal resistance between graphene and hexagonal boron nitride. Nanoscale 10:19092

He J, Li J, Liu C, Wang C, Zhang Y, Wen C, Xue D, Cao J, Su Y, Qiao L, Bai Y (2021) Machine learning identified materials descriptors for ferroelectricity. Acta Mater 209:116815

Kerner J, Dogan A, Recum HV (2021) Machine learning and big data provide crucial insight for future biomaterials discovery and research. Acta Biomater 130:54–65

Rodrigues JF Jr, Florea L, de Oliveira MCF, Diamond D, Oliveira ON Jr (2021) Big data and machine learning for materials science. Discov Mater 1:12

Liu Y, Niu C, Wang Z, Gan Y, Zhu Y, Sun S, Shen T (2020) Machine learning in materials genome initiative: a review. J Mater Sci Technol 57:113–122

Kaufmann K, Maryanovsky D, Mellor WM, Zhu C, Rosengarten AS, Harrington TJ, Oses C, Toher C, Curtarolo S, Vecchio KS (2020) Discovery of high-entropy ceramics via machine learning. NPJ Comput Mater 6:42

Kaufmann K, Vecchio KS (2020) Searching for high entropy alloys: a machine learning approach. Acta Mater 198:178–222

Qin J, Liu Z, Ma M, Li Y (2021) Machine learning approaches for permittivity prediction and rational design of microwave dielectric ceramics. J Materiomics 7:1284–1293

Qu N, Liu Y, Liao M, Lai Z, Zhou F, Cui P, Han T, Yang D, Zhu J (2019) Ultra-high temperature ceramics melting temperature prediction via machine learning. Ceram Int 45:18551–18555

Yang P, Wu S, Wu H, Lu D, Zou W, Chu L, Shao Y, Wu S (2021) Prediction of bending strength of Si3N4 using machine learning. Ceram Int 47:23919–23926

Reed JS (1995) Principles of ceramics processing, 2nd edn. Wiley, New York

Suryanarayana C (2001) Mechanical alloying and milling. Prog Mater Sci 46:1–184

Janot R, Guérard D (2005) Ball-milling in liquid media: applications to the preparation of anodic materials for lithium-ion batteries. Prog Mater Sci 50:1–92

Frances C, Laguerie C (1998) Fine wet grinding of an alumina hydrate in a ball mill. Powder Technol 99:147–153

Shin H, Lee S, Jung HS, Kim JB (2013) Effect of ball size and powder loading on the milling efficiency of a laboratory-scale wet ball mill. Ceram Int 39:8963–8968

Mulenga FK, Moys MH (2014) Effects of slurry pool volume on milling efficiency. Powder Technol 256:428–435

Wagih A, Fathy A, Kabeel AM (2018) Optimum milling parameters for production of highly uniform metal-matrix nanocomposites with improved mechanical properties. Adv Powder Technol 29:2527–2537

Oh HM, Park YJ, Kim HN, Ko JW, Lee HK (2021) Effect of milling ball size on the densification and optical properties of transparent Y2O3 ceramics. Ceram Int 47:4681–4687

Hou TH, Su CH, Liu WL (2007) Parameters optimization of a nano-particle wet milling process using the Taguchi method, response surface method and genetic algorithm. Powder Technol 173:153–162

Zhang FL, Zhu M, Wang CY (2008) Parameters optimization in the planetary ball milling of nanostructured tungsten carbide/cobalt powder. Int J Refract Met Hard Mat 26:329–333

Ma J, Zhu SG, Wu CX, Zhang ML (2009) Application of back-propagation neural network technique to high-energy planetary ball milling process for synthesizing nanocomposite WC-MgO powders. Mater Des 30:2867–2874

Charkhi A, Kazemian H, Kazemeini M (2010) Optimized experimental design for natural clinoptilolite zeolite ball milling to produce nano powders. Powder Technol 203:389–396

Toraman OY, Katırcıoglu D (2011) A study on the effect of process parameters in stirred ball mill. Adv Powder Technol 22:26–30

Celep O, Aslan N, Alp İ, Taşdemir G (2011) Optimization of some parameters of stirred mill for ultra-fine grinding of refractory Au/Ag ores. Powder Technol 208:121–127

Ebadnejad A, Karimi GR, Dehghani H (2013) Application of response surface methodology for modeling of ball mills in copper sulphide ore grinding. Powder Technol 245:292–296

Canakci A, Erdemir F, Varol T, Patir A (2013) Determining the effect of process parameters on particle size in mechanical milling using the Taguchi method: measurement and analysis. Measurement 46:3532–3540

Patil AG, Anandhan S (2015) Influence of planetary ball milling parameters on the mechano-chemical activation of fly ash. Powder Technol 281:151–158

Erdemir F (2017) Study on particle size and X-ray peak area ratios in high energy ball milling and optimization of the milling parameters using response surface method. Measurement 112:53–60

Petrović S, Rožić L, Jović V, Stojadinović S, Grbić B, Radić N, Lamovec J, Vasilić R (2018) Optimization of a nanoparticle ball milling process parameters using the response surface method. Adv Powder Technol 29:2129–2139

Hajji H, Nasr S, Millot N, Salem EB (2019) Study of the effect of milling parameters on mechanosynthesis of hydroxyfluorapatite using the Taguchi method. Powder Technol 356:566–580

Santosh T, Rahul KS, Eswaraiah C, Rao DS, Venugopal R (2020) Optimization of stirred mill parameters for fine grinding of PGE bearing chromite ore. Particul Sci Technol 9:1–13

Wu J, Jin S-H, Raju K, Lee Y, Lee H-K (2021) Analysis of individual and interaction effects of processing parameters on wet grinding performance in ball milling of alumina ceramics using statistical methods. Ceram Int 47:31202–31213

Draper NR, Smith H (1998) Applied regression analysis, 3rd edn. John Wiley & Sons Inc, New York

Montgomery DC, Peck EA, Vining GG (2012) Introduction to linear regression analysis, 5th edn. Wiley, New Jersey

Aggarwal A, Singh H, Kumar P, Singh M (2008) Optimizing power consumption for CNC turned parts using response surface methodology and Taguchi’s technique-a comparative analysis. J Mater Process Technol 200:373–384

Costa N, Garcia J (2016) Using a multiple response optimization approach to optimize the coefficient of performance. Appl Therm Eng 96:137–143

Mostafanezhad H, Menghari HG, Esmaeili S, Shirkharkolaee EM (2018) Optimization of two-point incremental forming process of AA1050 through response surface methodology. Measurement 127:21–28

Parida MK, Joardar H, Rout AK, Routaray I, Mishra BP (2019) Multiple response optimizations to improve performance and reduce emissions of Argemone Mexicana biodiesel-diesel blends in a VCR engine. Appl Therm Eng 148:1454–1466

Yaliwal VS, Banapurmath NR, Gaitonde VN, Malipatil MD (2019) Simultaneous optimization of multiple operating engine parameters of a biodiesel-producer gas operated compression ignition (CI) engine coupled with hydrogen using response surface methodology. Renew Energy 139:944–959

Deng B, Shi Y, Yu T, Kang C, Zhao P (2018) Multi-response parameter interval sensitivity and optimization for the composite tape winding process. Materials 11:220

Chen Z, Shi Y, Lin X, Yu T, Zhao P, Kang C, He X, Li H (2019) Analysis and optimization of process parameter intervals for surface quality in polishing Ti-6Al-4V blisk blade. Results Phys 12:870–877

Castillo ED (2007) Process optimization: a statistical approach. Springer Science & Business Media, LLC, New York

Candioti LV, De Zan MM, Cámara MS, Goicoechea HC (2014) Experimental design and multiple response optimization using the desirability function in analytical methods development. Talanta 124:123–138

Mohanty S, Mishra A, Nanda BK, Routara BC (2018) Multi-objective parametric optimization of nano powder mixed electrical discharge machining of AlSiCp using response surface methodology and particle swarm optimization. Alex Eng J 57:609–619

Yu J, Yang S, Kim J, Lee Y, Lim KT, Kim S, Ryu S-S, Jeong H (2020) A confidence interval-based process optimization method using second-order polynomial regression analysis. Processes 8:1206

Kennedy J, Eberhart R (1995) Particle swarm optimization. Proceedings of ICNN'95 - International Conference on Neural Networks 4:1942–1948

Engelbrecht AP (2007) Computational intelligence: an introduction, 2nd edn. John Wiley & Sons Ltd, England

Del Valle Y, Venayagamoorthy GK, Mohagheghi S, Hernandez JC, Harley RG (2008) Particle swarm optimization: basic concepts, variants and applications in power systems. IEEE Trans Evol Comput 12:171–195

Dayar T, Orhan MC (2016) Cartesian product partitioning of multi-dimensional reachable state spaces. Probab Eng Inform Sci 30:413–430

Razavi-Tousi SS, Szpunar JA (2015) Effect of ball size on steady state of aluminum powder and efficiency of impacts during milling. Powder Technol 284:149–158

Funding

This work was supported by the Ministry of Trade, Industry and Energy (MOTIE) and the Korea Evaluation Institute of Industrial Technology (KEIT) research funding (Grant No. 20003891), and in part by Electronics and Telecommunications Research Institute (ETRI) grant funded by the Korean government [22ZD1120, Regional Industry ICT Convergence Technology Advancement and Support Project in Daegu-Gyeongbuk].

Ethics declarations

Ethics approval

This study does not involve ethical issues.

Consent to participate

All authors agreed to participate in this research.

Consent for publication

All authors have reviewed the final version of manuscript and approve it for publication.

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Yu, J., Raju, K., Jin, SH. et al. A machine learning approach for ball milling of alumina ceramics. Int J Adv Manuf Technol 123, 4293–4308 (2022). https://doi.org/10.1007/s00170-022-10430-w

DOI: https://doi.org/10.1007/s00170-022-10430-w