Abstract

In the manufacture of metal parts, measuring tool wear accurately and quickly is crucial for improving machining automation, avoiding tool change errors, and performing tool wear compensation. In this study, an image-based, on-machine, direct measurement model is proposed for the circumferential cutting edge wear of a milling cutter. First, a charge-coupled device (CCD) sensor is used to collect one-dimensional (1D) projected images of a cross section of the circumferential cutting edge. The multiple 1D frames collected at one rotation angle of the cutter are stitched into a rectangular image, and the rectangular images collected over one rotation of the cutter are then stitched into a two-dimensional (2D) image. Next, the image edges are located with image edge detection algorithms. Finally, the tool diameter is measured from the outer edges of the rectangular images of the same cutting edge, and the flank wear is obtained from the relationship between the radial wear and the flank wear of a circumferential cutting edge. For image edge detection, an improved watershed algorithm based on a marked grayscale gradient image, pixel-level positioning based on the Sobel operator, and sub-pixel positioning based on grayscale moments are adopted. Experiments validate that the proposed image-based method is an effective way to measure the wear of the circumferential cutting edge of a milling cutter.

1 Introduction

During cutting, the workpiece undergoes relatively large springback, which aggravates tool wear. Excessive tool wear increases the cutting force and cutting temperature and can even cause vibration, so frequent tool changes are usually required to ensure workpiece quality. Tool condition monitoring (TCM) of tool wear or damage is therefore crucial for reducing unnecessary tool changes and machine downtime and for improving machining efficiency and workpiece quality.

Several methods have been proposed for this purpose, and they fall mainly into two categories: direct measurement and indirect measurement. In indirect measurement, signals from the cutting process such as cutting force [1], vibration [2, 3], sound [4], spindle power [5], and cutting temperature [6] are used to extract feature parameters for evaluating the tool wear condition. These methods are effective for monitoring tool wear during cutting, especially sudden damage. However, indirect measurement generally has the following limitations: (1) the detected signal often contains interference that may distort the evaluation of the wear condition; (2) although the feature parameters reflect the wear condition, it is usually difficult to obtain a quantitative wear value. Laser microscopes [7] and scanning microscopes [8] can measure tool wear directly, but such direct measurement methods have the following shortcomings: (1) the tool must be removed from the machine tool, which not only reduces machining efficiency but also introduces installation errors; (2) they rely on an operator to read the measured value, so the measurement accuracy depends on operator skill.

With the application of image sensors and advances in image processing techniques, image-based tool detection has been studied [9,10,11,12,13]. Image-based TCM methods can be divided mainly into deep learning methods and machine vision methods. Image-based deep learning methods can classify the tool wear type without complicated data pre-processing. For example, Zhi et al. [14] proposed an edge-labeling graph neural network (EGNN) that takes features extracted from tool wear images as input to monitor the tool wear condition. Wu et al. [15] acquired images of adhesive wear, tool breakage, rake face wear, and flank wear on the inserts of a face milling cutter; the image dataset was augmented through rotation, scaling, and translation and then fed to the Caffe deep learning framework to classify the wear condition. Bergs et al. [16] detected worn areas in microscopic tool images using a fully convolutional network (FCN). However, deep learning networks require a large number of data samples and can only determine the type or area of tool wear, not an accurate wear value.

As for image-based machine vision methods, the Gaussian curve fitting method [17], spatial moment method [18], interpolation method [19], and Zernike moment method [20] have been applied to obtain the sub-pixel-level edge. Based on the flank images of a turning tool, Bagga et al. [21] extracted the wear boundaries through threshold segmentation, edge detection, and morphological operators. Zhang et al. [13] used a charge-coupled device (CCD) to capture the flank wear image of the insert on a milling cutter. Also, they extracted the edge of the worn area through column scanning and further extracted the sub-pixel edge using Gaussian fitting. Based on the end blade wear image of a micro-milling tool, Zhu et al. [22] detected the defects and extracted the wear region using the region growing algorithm. This method could reduce the effects of image background and noise on TCM.

Existing image-based tool wear measurements mostly rely on specific captured 2D images. These methods suit cutting edges whose features can be characterized directly by 2D images. For example, for a turning tool or an insert on a milling cutter [13, 21], the wear is measured from flank images as the difference between the cutting edge contours before and after wear; for the end cutting edge of an end mill [22], the wear is measured from end face images as the area between the cutting edge contours before and after wear. However, the circumferential cutting edge of an end mill is helical and has multi-dimensional features, so measuring its wear from a 2D image raises the following problems: (1) if the camera does not face the location of maximum flank wear exactly, the maximum wear value cannot be obtained; (2) the shooting angle must be changed to suit tools with different helix angles; (3) images must be taken of every cutting edge of a tool, and even for a single cutting edge, multiple images along the full edge are needed to locate the wear.

To this end, this paper proposes a novel model for measuring the wear of the helical cutting edge of an end mill. Instead of a 2D image of the cutting edge, the method uses 1D projected images of the tool cross section in the horizontal direction. The multiple 1D images collected during one rotation are stitched into one 2D image, from which the cutting edge with the maximum wear and its diameter are detected. The wear is then obtained from the relationship between the measured diameter and the tool wear.

In addition, an improved watershed algorithm based on internal and external markers and a sub-pixel edge detection algorithm are adopted to improve the accuracy of image edge positioning. As a result, the method can measure the wear of the circumferential cutting edge of a milling cutter on the machine, directly, accurately, and rapidly.

2 Model for wear measurement

2.1 Relationship between \(\text{VB}\) and \(\text{NB}\)

The ISO standard recommends flank wear \(VB\) as the measure of tool wear, but the flank wear of an end mill is hard to measure directly. Assume that edge wear is evaluated on a cross section at a distance \(l\) from the tool end, where \(l\) must lie within the height of the cutting edge. Figure 1 shows the cross section A-A at a distance \(l\) from the tool center point along the axis of the end mill. Let the diameter of this cross section be \(D_{0}\) before wear; because of circumferential cutting edge wear, it becomes \(D_{\text{m}}\) after a period of cutting, as shown in Fig. 2. The relationship between flank wear \(VB\) and radial wear \(NB\) is

\[ VB = \frac{NB}{\tan \alpha_{p}} \qquad (1) \]

where \(\alpha_{p}\) is the clearance angle of the cutting edge in the cutting plane. Eq. (1) shows that \(NB\) can replace \(VB\) as the measure of tool wear. Because \(NB\) is a radial quantity, it equals half the difference between the diameters measured before and after wear, \(NB = (D_{0} - D_{\text{m}})/2\).
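As a quick numerical illustration, the sketch below evaluates \(NB\) and \(VB\) for hypothetical diameters. It assumes that Eq. (1) takes the simple form \(VB = NB/\tan \alpha_{p}\) (some formulations also include the rake angle) and that \(NB\) is half the drop in the measured cross-section diameter, since a diameter spans twice the radius. The input values are illustrative only and are not measurements from this study.

```python
import math


def radial_wear(d_before_mm: float, d_after_mm: float) -> float:
    """Radial wear NB, assumed to be half the drop in cross-section diameter."""
    return (d_before_mm - d_after_mm) / 2.0


def flank_wear(nb_mm: float, clearance_deg: float) -> float:
    """Flank wear VB from radial wear NB, assuming VB = NB / tan(alpha_p)."""
    return nb_mm / math.tan(math.radians(clearance_deg))


# Hypothetical diameters for illustration only (not data from this study).
nb = radial_wear(16.000, 15.950)  # about 0.025 mm
vb = flank_wear(nb, 12.0)         # about 0.118 mm for a 12 degree clearance angle
print(f"NB = {nb * 1000:.1f} um, VB = {vb * 1000:.1f} um")
```

Because \(\tan 12^\circ \approx 0.21\), a small radial loss corresponds to a flank wear land roughly five times wider, which is why a micrometre-level diameter measurement is needed.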

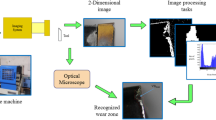

2.2 Image acquisition device

As shown in Fig. 3, the image acquisition device consists of a parallel light source (JZ60DL), a lens, a linear-array CCD (TCD1501) with an STM32 microcontroller, and a base plate. The image data are transmitted through the USB peripheral of the STM32 to acquisition software written in C. The CCD has 5000 effective pixels, and the size of a single pixel, i.e., the CCD resolution, is 7 μm. The parallel light beam emitted by the light source is projected evenly onto the part of the tool to be measured, so that this part of the tool is projected and imaged on the photosensitive array of the CCD.

2.3 Model of tool diameter measurement

Based on the alternating structure of cutting edges and flutes in a milling cutter, an image-based model is proposed to measure the tool diameter.

Taking a three-flute milling cutter as an example, Fig. 4a illustrates a cross section of the circumferential cutting edge at a rotation angle of \(\theta^\circ\). In this figure, \(O\) is the center of the cross section and \(L\) is the size of the cross-section projection. Viewed at the macro scale, the projection on the CCD, shown in Fig. 4c, is a 1D array of pixel grayscale values; at the micro scale, it is an image with a height of 7 μm. It is therefore called a 1D image in this paper. A 1D image contains one cross-section projection (the target zone) and two zones outside the projection (the background zones). \(L\) equals the number of pixels in the cross-section projection multiplied by the size of a single pixel.
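A minimal sketch of the projection-size computation for one 1D frame follows. It assumes a backlit setup in which the tool shadow (target zone) is darker than the background, and it uses a fixed, hypothetical grayscale threshold; the 7 μm default is the nominal pixel size stated above.

```python
import numpy as np


def projection_size_um(frame, threshold, pixel_um=7.0):
    """Projection size L of one 1D frame, in micrometres.

    Pixels darker than `threshold` are treated as the tool shadow (target zone);
    L is the span from the first to the last such pixel times the pixel size.
    """
    dark = np.flatnonzero(np.asarray(frame, dtype=float) < threshold)
    if dark.size == 0:
        return 0.0  # no target zone in this frame
    return float(dark[-1] - dark[0] + 1) * pixel_um


# Synthetic frame: 10 background pixels, 100 shadow pixels, 10 background pixels.
frame = [200] * 10 + [20] * 100 + [200] * 10
print(projection_size_um(frame, threshold=100))  # 700.0
```

A single threshold only gives a pixel-level span; the edge detection steps described later refine where each boundary lies.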

During measurement, the tool is stopped at every rotation-angle interval \(\Delta \theta\) to collect the projected 1D images, so there are \(k = 360^\circ/\Delta \theta\) collections in one rotation of the cutter. Figure 4b presents the variation of the cross-section projection size over one rotation of the cutter; stitched together, the 1D images form a 2D image of this variation. The measured diameter \(D_{\text{m}}\) equals the distance between the projections of the outer edges of the two 1D images of the same cutting edge, and these two 1D images are \(180^\circ/\Delta \theta\) 1D images apart in the 2D image.
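The pairing of 1D images taken 180° apart can be sketched as follows. The function name and array layout are hypothetical: `left_px[i]` and `right_px[i]` are assumed to hold the outer-edge coordinates (in pixels) of the collection at angle \(i\,\Delta\theta\), and \(k\) is assumed even so that \(180^\circ/\Delta\theta\) is an integer.

```python
import numpy as np


def paired_spans_mm(left_px, right_px, pixel_um):
    """Span between images taken 180 degrees apart, for every collection index.

    left_px[i], right_px[i]: outer-edge coordinates (pixels) of the collection at
    angle i * delta_theta. An edge seen on the left at angle theta is assumed to be
    seen on the right at theta + 180 deg, i.e. k/2 collections later.
    """
    left = np.asarray(left_px, dtype=float)
    right = np.asarray(right_px, dtype=float)
    k = left.size
    if right.size != k or k % 2:
        raise ValueError("need an even number k of collections per rotation")
    half = k // 2  # 180 deg / delta_theta
    opposite_right = np.roll(right, -half)  # opposite_right[i] == right[(i + half) % k]
    return (opposite_right - left) * pixel_um / 1000.0


# Hypothetical coordinates for k = 4 (delta_theta = 90 deg), pixel size 7.04 um.
spans = paired_spans_mm([100, 110, 105, 120], [2300, 2290, 2310, 2280], 7.04)
print(spans)
```

Which pair belongs to which cutting edge still has to be decided from the 2D image; for a given edge, the largest span among its pairs is the candidate diameter.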

The image-based milling cutter diameter measurement process, designed according to Fig. 4, is shown in Fig. 5. First, to reduce the measurement error and to make the 1D images viewable, the 1D image projected along a cross section of the circumferential cutting edge at a given rotation angle is sampled for \(b\) consecutive frames; that is, each collection contains \(b\) samples. These \(b\) frames are then stitched in sampling order to form a rectangular image. Subsequently, the \(k\) rectangular images from one rotation are stitched in order of rotation angle to form a 2D image of the cutting edge cross section. A 2D image thus contains \((b \times k)\) frames of 1D images in total.
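The stitching step maps naturally onto array reshaping. The sketch below assumes the \(b \times k\) frames arrive in acquisition order as rows of one NumPy array; the function name is hypothetical, and the per-angle mean profile is an optional extra that is not part of the described method.

```python
import numpy as np


def stitch(frames, b, k):
    """Group b*k 1D frames (acquisition order) into k rectangular images and one 2D image.

    Rows j*b .. j*b + b - 1 of `frames` are assumed to be the b consecutive frames
    sampled at rotation angle j * delta_theta.
    """
    frames = np.asarray(frames)
    if frames.ndim != 2 or frames.shape[0] != b * k:
        raise ValueError("expected an array of shape (b * k, n_pixels)")
    n_pixels = frames.shape[1]
    rectangles = frames.reshape(k, b, n_pixels)     # rectangles[j]: b x n_pixels image at angle j
    image_2d = rectangles.reshape(k * b, n_pixels)  # rectangles stacked in rotation-angle order
    mean_profiles = rectangles.mean(axis=1)         # optional extra: one averaged profile per angle
    return rectangles, image_2d, mean_profiles


# b = 60 frames per angle, k = 12 angles, 5000-pixel CCD lines (zeros as placeholders).
rects, img, means = stitch(np.zeros((60 * 12, 5000), dtype=np.uint16), b=60, k=12)
print(rects.shape, img.shape, means.shape)  # (12, 60, 5000) (720, 5000) (12, 5000)
```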

Finally, the edges of the 2D image are detected through image segmentation, coarse edge positioning, and fine edge positioning. The diameter is then obtained by multiplying the number of pixels between the corresponding edges of the two rectangular images of the same cutting edge by the size of a pixel.

3 Image edge detection

3.1 Edge detection of the 1D image

The gradient of \(f(x)\), which represents a 1D image, can be expressed as:

where \(g(x)\) is the gradient and \(\Delta x\) is the point interval. According to the imaging principle, the pixel coordinates at which \(g(x)\) reaches its maximum constitute the edge of \(f(x)\). During imaging, the tool surface texture and ambient lighting can introduce impulse noise, producing local maxima of the gradient outside the edge zone. To preserve the complete edge, the filter window is adjusted adaptively according to the noise level. Figure 6 shows the edge features extracted from one frame of the 1D image of the tool cross section. In this figure, A represents the background zone, C the target zone, and B the edge zone, where the gradient reaches its maximum relative to A; B also serves as the edge calculation window.
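A minimal 1D edge detector in this spirit is sketched below. It replaces the adaptive filter with a fixed-window median filter and uses a central-difference gradient; both are assumptions, since the exact gradient equation and the adaptation rule are not reproduced here. The threshold locates the dark target zone, and each edge is taken at the extreme gradient inside a small window (window B) around the corresponding threshold crossing.

```python
import numpy as np


def median_filter_1d(x, window):
    """Median filter with edge padding; `window` must be a positive odd integer."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    padded = np.pad(np.asarray(x, dtype=float), half, mode="edge")
    return np.median(np.lib.stride_tricks.sliding_window_view(padded, window), axis=1)


def edges_1d(frame, threshold, window=5):
    """Pixel-level (left, right) edges of a backlit 1D frame with a dark target zone."""
    f = median_filter_1d(frame, window)   # suppress impulse noise
    g = np.gradient(f)                    # central-difference gradient, unit spacing
    dark = np.flatnonzero(f < threshold)  # target zone (tool shadow)
    if dark.size == 0:
        raise ValueError("no target zone below the threshold")
    n = f.size
    # Edge windows (B) around the first and last dark pixels.
    lw = slice(int(max(dark[0] - window, 0)), int(min(dark[0] + window + 1, n)))
    rw = slice(int(max(dark[-1] - window, 0)), int(min(dark[-1] + window + 1, n)))
    left = lw.start + int(np.argmax(-g[lw]))  # bright-to-dark: most negative gradient
    right = rw.start + int(np.argmax(g[rw]))  # dark-to-bright: most positive gradient
    return left, right


frame = [200] * 20 + [20] * 50 + [200] * 20
frame[5] = 0  # an impulse-noise pixel in the background, removed by the median filter
print(edges_1d(frame, threshold=100))
```

Without the median filter, the noise pixel at index 5 would fall below the threshold and be mistaken for the start of the target zone.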

Edge detection takes about 3 s for one 1D image of a milling cutter. Detecting the edge of the 2D image of a cutting edge cross section frame by frame over all \((b \times k)\) 1D images would therefore take \(3(b \times k)\) s, whereas detecting the edge directly on the 2D image is much faster. In addition, to improve accuracy, the edge detected in the 2D image at a given rotation angle can be compared with, and adjusted according to, the edge detected in the 1D image at the same angle.

3.2 Image segmentation and edge coarse positioning

Before edge positioning of the 2D image, the image segmentation algorithm is adopted to roughly segment the target zone from the background zone and obtain the edge detection zone. Considering that the grayscale gradient at the image edge is higher than that in other zones, the watershed ridges along the image edge can be obtained to roughly distinguish the target zone from the background zone.

However, because the grayscale gradient image also contains small grayscale variations, the plain watershed transform tends to over-segment the image: most watershed ridges do not correspond to actual image edges, as the irregular red network in Fig. 7a shows. To solve this problem, an improved watershed (IW) algorithm based on markers is proposed. The IW algorithm, shown in Fig. 8, proceeds as follows:

(1) Convert the grayscale image to a binary image through the extended-maxima transform to obtain the external markers, which mark the background zone.

(2) To keep the marker of the target zone (the internal marker) away from the edge zone, first convert the grayscale image into a binary image by threshold transformation, then compute the distance matrix \(D\) by the Euclidean distance transform of the binary image, and finally apply the watershed transform to \(D\); the resulting watershed ridge lines serve as the internal markers.

(3) Superimpose the internal and external markers on the gradient image and set both as local minimum zones to obtain a marker-based gradient image; this step is called the minimum coverage process.

(4) Apply the watershed transform to the marker-based gradient image to obtain a segmentation that separates the target zone from the background zone, as shown in Fig. 7b.
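The last two steps of the IW flow (imposing the markers and flooding) can be illustrated with a small priority-flood, Meyer-style marker-controlled watershed. Flooding only from marker pixels has the same effect as imposing the markers as the sole regional minima. This is a simplified, hypothetical sketch: marker generation (extended maxima, distance transform) is omitted, 4-connectivity is assumed, and no ridge pixels are kept. In practice, a library routine such as `skimage.segmentation.watershed(image, markers)` would normally be used.

```python
import heapq

import numpy as np

NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity (assumed)


def marker_watershed(gradient, markers):
    """Flood `gradient` from labelled markers; pixels connected to a marker get its label.

    markers: int array, 0 = unlabelled, >0 = marker label
    (for example 1 = external/background marker, 2 = internal/target marker).
    """
    grad = np.asarray(gradient, dtype=float)
    labels = np.array(markers, dtype=int)  # copy, so the input is not modified
    rows, cols = labels.shape
    heap, counter = [], 0  # counter breaks ties so equal priorities pop in push order

    def push_neighbours(r, c, lab):
        nonlocal counter
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr, nc] == 0:
                heapq.heappush(heap, (grad[nr, nc], counter, nr, nc, lab))
                counter += 1

    for r, c in zip(*np.nonzero(labels)):  # seed the queue from every marker pixel
        push_neighbours(r, c, labels[r, c])
    while heap:
        _, _, r, c, lab = heapq.heappop(heap)  # lowest-gradient pixel on the flood front
        if labels[r, c]:
            continue  # already reached by another front
        labels[r, c] = lab
        push_neighbours(r, c, lab)
    return labels


grad = np.zeros((5, 10))
grad[:, 5] = 10.0        # a ridge of high gradient in column 5
markers = np.zeros((5, 10), dtype=int)
markers[:, 0] = 1        # external marker (background)
markers[:, 9] = 2        # internal marker (target)
print(marker_watershed(grad, markers))
```

Because low-gradient pixels are flooded first, the two fronts meet on the high-gradient ridge, which is where the image edge lies.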

After segmentation, the grayscale changes stepwise between the target zone and the background zone. The edges are then coarsely positioned with the Sobel operator [23]. The gradient of the 2D image \(f(x,y)\) at the pixel point \((x,y)\) is:

where \(G_{x}\) and \(G_{y}\) are the horizontal and vertical gradients, computed by convolving the neighborhood of \((x,y)\) with the horizontal and vertical Sobel templates, respectively. Adaptive threshold filtering combined with the Sobel operator in both directions reduces edge blur and positions the edge coarsely to the pixel level.
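The Sobel step can be sketched in NumPy as follows. The templates are the standard 3×3 Sobel kernels, applied in correlation form with replicated borders; combining \(G_x\) and \(G_y\) as \(\sqrt{G_x^2 + G_y^2}\) is a common choice assumed here (\(|G_x| + |G_y|\) is another). The adaptive threshold filtering mentioned above is not reproduced.

```python
import numpy as np

KX = np.array([[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]])  # horizontal template
KY = KX.T                                                              # vertical template


def sobel(image):
    """Return Gx, Gy and the magnitude sqrt(Gx**2 + Gy**2).

    Templates are applied in correlation form over each 3x3 neighbourhood, with
    replicated borders, so Gx > 0 for a dark-to-bright step along +x.
    """
    f = np.pad(np.asarray(image, dtype=float), 1, mode="edge")
    h, w = f.shape[0] - 2, f.shape[1] - 2
    gx = np.zeros((h, w))
    gy = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            patch = f[i:i + h, j:j + w]  # neighbour at offset (i - 1, j - 1)
            gx += KX[i, j] * patch
            gy += KY[i, j] * patch
    return gx, gy, np.hypot(gx, gy)


img = np.zeros((5, 6))
img[:, 3:] = 100.0               # dark-to-bright vertical step between columns 2 and 3
gx, gy, mag = sobel(img)
coarse = np.argmax(mag, axis=1)  # pixel-level edge column in each row
print(coarse)
```

The magnitude peaks on both pixels adjacent to the step, which is why coarse positioning alone is limited to pixel accuracy.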

3.3 Edge fine positioning

Because a single CCD pixel is 7 μm wide, the image edge generally does not coincide with a pixel boundary but lies somewhere within a pixel, as shown in Fig. 9. Sub-pixel algorithms are therefore adopted to refine the edge position from pixel level to sub-pixel level. Fine positioning makes full use of the grayscale information near the edge to deduce the edge position more accurately. Since image moments contain edge features, the grayscale moments of the image are used to detect the sub-pixel edge.

Figure 10 shows the step model of an edge, which consists of pixel points with grayscales \(h_{1}\) and \(h_{2}\). The normalized step model is:

where the edge parameters \(\rho\) and \(\theta\) represent the position and direction of the step edge, respectively. The grayscale moments of the first four orders \((j = 1,2,3,4)\) are expressed as

where \(D = \left\{ {(x,y)|x^{2} + y^{2} \le 1} \right\}\) is the area within the unit circle; \(f(x,y)\) is the grayscale of the pixel point and \(f^{j} (x,y)\) its \(j\)-th power; \(p_{1}\) and \(p_{2}\) represent the proportions of pixel points with grayscales \(h_{1}\) and \(h_{2}\) in \(D\), respectively, and \(p_{1} + p_{2} = 1\). The grayscale moment can also be obtained by convolving the 2D image with a grayscale moment template, i.e.:

where \(n\) is the number of elements in an \(N \times N\) grayscale moment template; \(z_{i}\) is the grayscale of the \(i\)-th pixel point in the unit circle; and \(w_{i}\) is the weight of the \(i\)-th pixel point in the grayscale moment template. Combining Eqs. (4)–(6), the parameters \(p_{1}\), \(p_{2}\), \(h_{1}\), and \(h_{2}\) can be solved.
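To show the moment-preserving idea in its simplest form, the sketch below applies it to a 1D grayscale profile rather than to a circular \(N \times N\) template. It matches the first three sample moments to an ideal two-level step (the classic moment-preserving formulation of Tabatabai and Mitchell), solves for \(p_1\), \(h_1\), and \(h_2\) in closed form, and places the edge \(p_1 n\) pixels from the dark end. This 1D reduction is an illustrative assumption, not the 2D procedure of Eqs. (7)–(10).

```python
import math

import numpy as np


def moment_subpixel_edge_1d(profile):
    """Sub-pixel step position in a 1D grey-level profile by moment preservation.

    Fits an ideal two-level step (levels h1 < h2, fractions p1 + p2 = 1) whose
    first three moments equal those of the samples. Returns (edge, h1, h2), with
    the edge measured in pixels from the outer boundary of the first sample.
    """
    z = np.asarray(profile, dtype=float)
    n = z.size
    m1, m2, m3 = (float(np.mean(z ** j)) for j in (1, 2, 3))
    var = m2 - m1 ** 2
    if var <= 0:
        raise ValueError("flat profile: no edge to locate")
    sigma = math.sqrt(var)
    skew = (m3 - 3 * m1 * m2 + 2 * m1 ** 3) / sigma ** 3
    p1 = 0.5 * (1 + skew / math.sqrt(4 + skew ** 2))  # fraction of samples at the lower level h1
    p2 = 1 - p1
    h1 = m1 - sigma * math.sqrt(p2 / p1)
    h2 = m1 + sigma * math.sqrt(p1 / p2)
    # The dark (h1) part occupies p1 * n pixels, starting from the darker end.
    edge = p1 * n if z[0] <= z[-1] else p2 * n
    return edge, h1, h2


print(moment_subpixel_edge_1d([0, 0, 0, 100, 100, 100, 100, 100]))   # ideal step
print(moment_subpixel_edge_1d([10, 10, 10, 10, 40, 100, 100, 100]))  # partly covered pixel
```

For an ideal step the result is exact; for a pixel only partly covered by the shadow, the estimate falls inside that pixel rather than on a pixel boundary, which is the gain over coarse positioning.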

The sub-pixel edge \((x_{s} ,y_{s} )\) for \(f(x,y)\) can be expressed as:

where \(x_{0}\) and \(y_{0}\) are the grayscale center of the pixels in the unit circle, \(x_{0} = \sum\limits_{i = 1}^{n} {x_{i} z_{i} } /\sum\limits_{i = 1}^{n} {z_{i} }\), and \(y_{0} = \sum\limits_{i = 1}^{n} {y_{i} z_{i} } /\sum\limits_{i = 1}^{n} {z_{i} }\); \(\theta\) can be obtained by:

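\(\rho\) can be obtained by:

\[ \rho = \cos \beta \qquad (9) \]

where \(\beta\) is the half-angle subtended by the edge at the center of the unit circle. The shaded area in Fig. 10b is:

\[ S = \beta - \sin \beta \cos \beta \qquad (10) \]

Assuming \(p = \min (p_{1} ,p_{2} )\), \(\beta\) can be obtained through \(S = \pi p\) and Eq. (10).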
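Under the standard geometry of the moment-based step model (an assumption here: unit-circle template, \(S\) the area of the smaller circular segment, \(S(\beta) = \beta - \sin\beta\cos\beta\), and \(\rho = \cos\beta\)), \(\beta\) has no closed form but is easy to find numerically, because \(S\) increases monotonically on \([0, \pi/2]\). A bisection sketch with hypothetical function names:

```python
import math


def segment_area(beta):
    """Area of the segment cut from the unit circle by a chord of half-angle beta."""
    return beta - math.sin(beta) * math.cos(beta)


def edge_distance_from_fraction(p, iters=100):
    """Solve segment_area(beta) = pi * p for beta in [0, pi/2]; return (beta, rho = cos beta).

    p is the smaller area fraction min(p1, p2), so 0 <= p <= 0.5.
    """
    if not 0.0 <= p <= 0.5:
        raise ValueError("p must lie in [0, 0.5]")
    target = math.pi * p
    lo, hi = 0.0, math.pi / 2
    for _ in range(iters):  # segment_area is monotonic on [0, pi/2], so bisection converges
        mid = 0.5 * (lo + hi)
        if segment_area(mid) < target:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    return beta, math.cos(beta)


beta, rho = edge_distance_from_fraction(0.2)
print(round(beta, 4), round(rho, 4))
```

As checks, \(p = 0.5\) gives \(\rho = 0\) (the edge passes through the template center) and \(p = 0\) gives \(\rho = 1\) (the edge touches the template boundary).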

4 Experiment and analysis

4.1 Calibration of pixel size

The nominal single-pixel size of the CCD is 7 μm/pixel. To improve measurement accuracy, the pixel size needs to be calibrated. Since the CCD uses backlit projection imaging without an imaging objective lens, lens distortion error can be ignored, and the following linear calibration can be used:

\[ c = \frac{D_{\text{e}}}{\Delta p} \qquad (11) \]

where \(D_{\text{e}}\) is the tool shank diameter measured by a microscope; \(c\) is the calibration coefficient; and \(\Delta p\) is the pixel difference between the left and right edges positioned by the image-based method, i.e., the number of pixels in the target zone. Tools with nominal shank diameters of 10 mm, 12 mm, and 16 mm were measured with both the microscope and the image-based method to obtain \(D_{\text{e}}\) and \(\Delta p\). The resulting calibration coefficients are shown in Fig. 11. Their average is taken as the calibrated pixel size, \(\overline{c}\) = 7.04 μm/pixel.
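The calibration arithmetic is simple; the sketch below assumes the linear form \(c = D_{\text{e}}/\Delta p\) with no offset term and uses hypothetical function names. Inputs would be the microscope-measured shank diameters (in μm) and the corresponding pixel spans; no measured values from Fig. 11 are reproduced here.

```python
import statistics


def calibration_coefficient(shank_diameter_um, pixel_span):
    """Pixel size c = D_e / delta_p (linear calibration through the origin, assumed)."""
    return shank_diameter_um / pixel_span


def calibrated_pixel_size(measurements):
    """Mean of the per-tool coefficients: the calibrated pixel size c_bar (um/pixel)."""
    return statistics.fmean(calibration_coefficient(d, p) for d, p in measurements)


def diameter_mm(pixel_span, c_bar_um):
    """Diameter from a measured pixel span and the calibrated pixel size."""
    return pixel_span * c_bar_um / 1000.0
```

With the calibrated value \(\overline{c}\) = 7.04 μm/pixel, a hypothetical span of 2272 pixels would correspond to `diameter_mm(2272.0, 7.04)`, about 15.99 mm.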

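Thus, in the image-based method, the tool diameter is measured by:

\[ D_{\text{m}} = \overline{c}\,\Delta p \qquad (12) \]

where \(\Delta p\) is the number of pixels between the corresponding left and right edges.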

4.2 Measured diameter of the tool

4.2.1 Setup

A three-flute cemented carbide end mill (type UA100-S3), shown in Fig. 13a, was used for down milling in the experiment. Its nominal diameter was φ16 mm and its relief angle was 12°. The workpiece material was aluminum alloy 7075. The milling width was \(a_{e} = 2\) mm, the milling depth \(a_{p} = 16\) mm, the milling speed \(v_{c} = 350\) m/min, and the feed per tooth \(f_{z} = 0.14\) mm/z. After 30 min of milling, the tool was moved to the measurement device. The tool rotation angle was set with the spindle positioning command of the SINUMERIK 840D control system of the machine tool.

The 1D images projected along the cross section 5 mm from the tool end were collected. The number of samples at each rotation angle was set to \(b\) = 60. The number of collections \(k\) determines the angular resolution of the measurement: the larger \(k\), the higher the measurement accuracy.

For a three-flute end mill with a diameter of 16 mm, the relationship between the measurement error of the proposed method and the number of collections \(k\) is shown in Table 1. The curve fitted to the data in Table 1 is shown in Fig. 12. It shows that \(k\) must be at least 12 to keep the measurement error below one pixel size.

Following the diameter measurement principle shown in Fig. 4, the procedure for this case is as follows:

1) Collection of the 1D images

At the beginning of the measurement, the end mill is rotated so that the flank face of one cutting edge is roughly aligned with the CCD projection edge; this rotation angle is defined as \(0^\circ\).

With \(b\) = 60 and \(\Delta \theta = 30^\circ\) (\(k\) = 12), 60 frames of 1D images were collected every 30°. The collected image data were deposited in a repository that we created [24]. In total, 720 frames of 1D images were collected in one rotation, which took 20 s.

2) Stitching of the 2D image

The 60 frames of each collection form a rectangular image, and the 12 rectangular images are then stitched together to form a 2D image, shown in Fig. 13b.

3) Detection of the 2D image edges

The three protrusions on the left of Fig. 13b correspond to the three cutting edges; the farther right the outer edge of a protrusion lies, the more worn the cutting edge. In this case, cutting edge E3 is the most worn. The same cutting edge appears on the left and on the right of the image 180° apart; since \(\Delta \theta = 30^\circ\), the rectangular images showing the same cutting edge on the left and right are six rectangular images apart.

Because the maximum wear occurs at E3, \(D_{\text{m}3}\) is measured from the left and right edges of E3.

4) Wear calculation

According to Eq. (1), the tool wear can be calculated.

4.2.2 Pixel edge of a 1D image

Taking one frame of the 1D image collected at \(\theta\) = 60° as an example, the edge calculation window B was determined from the grayscale threshold, and the pixel-level edge was then located at the maximum gradient in B, as shown in Fig. 14.

The left and right edge zones and the corresponding edge coordinates are listed in Table 2.

4.2.3 Segmentation of a rectangular image

The accuracy of image edge positioning depends on the quality of image segmentation. To investigate the effect of the segmentation algorithm on edge positioning, three algorithms, namely the improved watershed algorithm, the maximum information entropy threshold algorithm [25], and the global iterative threshold algorithm [26], were applied to a rectangular image stitched from 60 frames of 1D images collected at \(\theta\) = 60°. For each of the three segmented images, the left pixel edge obtained with the Sobel operator is marked in the rectangular image, as shown in Fig. 15. The red, blue, and green lines correspond to the pixel edges extracted from the images segmented by the improved watershed algorithm, the maximum information entropy threshold algorithm, and the global iterative threshold algorithm, respectively.

The coordinates of these pixel edges are listed in Table 3. Comparing Tables 2 and 3 shows that the red line differs from the edge of the 1D image by only one pixel, whereas the blue and green lines differ considerably. This is likely because the improved watershed algorithm takes the spatial distribution of the image grayscale into account, which improves edge positioning accuracy.

4.2.4 Coarse positioning of edges in a 2D image

In this subsection, the pixel-level edge of the 2D image stitched from the rectangular images of one rotation is obtained using IW followed by Sobel-based coarse edge positioning. The results are illustrated in Fig. 16, an enlarged view of the circled region in Fig. 13b.

4.2.5 Fine positioning of edges in a 2D image

The edge of a 2D image can be further refined from pixel level to sub-pixel level. In this subsection, the positioning accuracies of three sub-pixel edge positioning methods, namely the grayscale moment (GM), Gaussian fitting (GF), and Lagrange interpolation (LI) methods, are compared. The results are shown in Fig. 17. The wear obtained from the finely positioned edge agreed better with the microscope-measured wear than that obtained from the coarsely positioned edge. Among the three methods, GM achieved the highest edge positioning accuracy with the least computation time.
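For comparison with GM, one common variant of Gaussian fitting is sketched below: it fits a Gaussian through the peak gradient sample and its two neighbours (equivalently, a parabola through their logarithms) and returns the peak position with a sub-pixel offset. Whether this matches the exact GF implementation used in the comparison is an assumption, and the function name is hypothetical.

```python
import math

import numpy as np


def gaussian_peak_subpixel(gradient):
    """Sub-pixel location of the largest |gradient| sample via a 3-point Gaussian fit."""
    g = np.abs(np.asarray(gradient, dtype=float))
    i = int(np.argmax(g))
    if i == 0 or i == g.size - 1:
        return float(i)  # no neighbour on one side: keep the pixel-level position
    lm, l0, lp = (math.log(max(v, 1e-12)) for v in g[i - 1:i + 2])
    denom = lm - 2.0 * l0 + lp
    if denom >= 0:
        return float(i)  # not a proper peak in log space: keep the pixel-level position
    return i + 0.5 * (lm - lp) / denom  # vertex of the parabola through the log values


x = np.arange(20)
g = np.exp(-(x - 7.3) ** 2 / (2 * 1.5 ** 2))  # synthetic gradient peak centred at 7.3
print(gaussian_peak_subpixel(g))
```

The fit is exact only when the gradient profile really is Gaussian; blur, noise, or asymmetric edges bias it, which is one reason a moment-based estimator can be more robust.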

Using the sub-pixel edge coordinates calculated with Eq. (7), the sub-pixel edge was obtained, as shown in Fig. 18. The maximum difference between the sub-pixel coordinates of the left and right edges in the 2D image gives the number of pixels in the target zone. Multiplying this difference by the calibrated pixel size gives the diameter of the circumferential cutting edge of the end mill at \(l\) = 5 mm: 15.93588 mm.

4.3 Cutting edge wear

End mills with nominal diameters of φ10 mm, φ12 mm, and φ16 mm were used for milling, and the sub-pixel diameters of their circumferential cutting edges were measured. Since IW gave the highest edge positioning accuracy among the segmentation methods compared, only IW was used before fine positioning. The results are listed in Table 4, where Nos. 1–6 denote the end mills.

For comparison, the cutting edge wear was also measured with a microscope; the results are shown in Fig. 19. One microscope measurement by a skilled operator involves removing the tool holder from the machine tool (30 s), removing the tool from the tool holder (1 min), placing the tool on the microscope and adjusting the measurement parameters (1 min), and taking images and readings (30 s), about 3 min in total.

The diameter measured by the image-based method was substituted into Eq. (1) to estimate the tool wear. One measurement with this method requires sampling (20 s) and image processing (10.6 s), about 30.6 s in total.

The cutting edge wear measured by the microscope and by the image-based method is also listed in Table 4. Comparing the wear values obtained by five methods, namely the microscope, IW alone, and IW combined with each of three fine positioning methods (Gaussian fitting (GF), Lagrange interpolation (LI), and grayscale moment (GM)), shows that IW coarse positioning combined with GM fine positioning gives the highest measurement accuracy.

Table 4 shows that the maximum difference between the wear measured by the image-based method and by the microscope was 8.08 μm, and the maximum relative deviation was 11.3%. These results show that the proposed image measurement method and the edge-positioning-based wear measurement model are effective for measuring the circumferential cutting edge wear of helical end mills.

5 Conclusions

The main contribution of this paper is a novel wear measurement method for cutting edges, such as helical cutting edges, that have multi-dimensional features and are therefore not suited to measurement directly from flank images. With this method, the maximum wear can be found quickly and no adjustment of the shooting angle is required. To verify the method, experiments measuring the circumferential cutting edge wear of end mills were conducted. The results indicate the following:

Because the improved watershed algorithm, based on the marker-modified grayscale gradient, fully considers the spatial distribution of the image grayscale, it effectively distinguishes the target zone from the background and avoids over-segmentation.

Based on the coarse positioning edge, the grayscale moment is used for fine positioning of the edge. The wear measured by this positioning method is more consistent with the real wear than that calculated by the coarse positioning or other fine positioning methods.

The maximum difference between the cutting edge wear measured by the proposed method and by the microscope is 8.08 μm, and the maximum relative deviation is 11.3%. Measurement with a microscope requires the tool to be removed from the machine tool and mounted on the microscope; installation and adjustment take about 3 min. In contrast, the proposed method avoids the errors introduced by removing and reinstalling the tool, and the whole measurement takes about 30.6 s, greatly improving measurement efficiency.

Data availability

All data included in this study are available in the article.

Code availability

Custom code.

References

Jamshidi M, Rimpault X, Balazinski M, Chatelain J (2020) Fractal analysis implementation for tool wear monitoring based on cutting force signals during CFRP/titanium stack machining. Int J Adv Manuf Technol 106:3859–3868. https://doi.org/10.1007/s00170-019-04880-y

Liu X, Wang W, Jiang R, Xiong Y, Lin K (2020) Tool wear mechanisms in axial ultrasonic vibration assisted milling in-situ TiB2/7050Al metal matrix composites. Advances in Manufacturing 8:252–264. https://doi.org/10.1007/s40436-020-00294-2

Čuš F, Župerl U (2011) Real-time cutting tool condition monitoring in milling. Strojniski Vestnik/Journal of Mechanical Engineering 57:142–150. https://doi.org/10.5545/sv-jme.2010.079

Krishnakumar P, Rameshkumar K, Ramachandran KI (2018) Machine learning based tool condition classification using acoustic emission and vibration data in high speed milling process using wavelet features. Intelligent Decision Technologies 12:265–282. https://doi.org/10.3233/IDT-180332

Da Silva RHL, Da Silva MB, Hassui A (2016) A probabilistic neural network applied in monitoring tool wear in the end milling operation via acoustic emission and cutting power signals. Mach Sci Technol 20:386–405. https://doi.org/10.1080/10910344.2016.1191026

Wu G, Li G, Pan W, Wang X, Ding S (2020) A prediction model for the milling of thin-wall parts considering thermal-mechanical coupling and tool wear. Int J Adv Manuf Technol 107:4645–4659. https://doi.org/10.1007/s00170-020-05346-2

Pattnaik SK, Behera M, Padhi S, Dash P, Sarangi SK (2020) Study of cutting force and tool wear during turning of aluminium with WC, PCD and HFCVD coated MCD tools. Manuf Rev. https://doi.org/10.1051/mfreview/2020026

Du D, Sun J, Yang S, Chen W (2018) An investigation on measurement and evaluation of tool wear based on 3D topography. Int J Manuf Res 13:168–182. https://doi.org/10.1504/IJMR.2018.093263

Kim J, Moon D, Lee D, Kim J, Kang M, Kim KH (2002) Tool wear measuring technique on the machine using CCD and exclusive jig. J Mater Process Technol 130–131:668–674. https://doi.org/10.1016/S0924-0136(02)00733-1

Jurkovic J, Korosec M, Kopac J (2005) New approach in tool wear measuring technique using CCD vision system. Int J Mach Tools Manuf 45:1023–1030. https://doi.org/10.1016/j.ijmachtools.2004.11.030

Wang WH, Hong GS, Wong YS (2006) Flank wear measurement by a threshold independent method with sub-pixel accuracy. Int J Mach Tools Manuf 46:199–207. https://doi.org/10.1016/j.ijmachtools.2005.04.006

Castejon M, Alegre E, Barreiro J, Hernandez LK (2007) On-line tool wear monitoring using geometric descriptors from digital images. Int J Mach Tools Manuf 47:1847–1853. https://doi.org/10.1016/j.ijmachtools.2007.04.001

Zhang J, Zhang C, Guo S, Zhou L (2012) Research on tool wear detection based on machine vision in end milling process. Prod Eng Res Devel 6:431–437. https://doi.org/10.1007/s11740-012-0395-5

Zhi G, He D, Sun W, Zhou Y, Pan X, Gao C (2021) An edge-labeling graph neural network method for tool wear condition monitoring using wear image with small samples. Meas Sci Technol. https://doi.org/10.1088/1361-6501/abe0d9

Wu X, Liu Y, Zhou X, Mou A (2019) Automatic identification of tool wear based on convolutional neural network in face milling process. Sensors (Switzerland). https://doi.org/10.3390/s19183817

Bergs T, Holst C, Gupta P, Augspurger T (2020) Digital image processing with deep learning for automated cutting tool wear detection. Procedia Manufacturing 48:947–958. https://doi.org/10.1016/j.promfg.2020.05.134

Sun X, Xu Q, Zhu L (2019) An effective Gaussian fitting approach for image contrast enhancement. IEEE Access 7:31946–31958. https://doi.org/10.1109/ACCESS.2019.2900717

Gester D, Simon S (2018) A spatial moments sub-pixel edge detector with edge blur compensation for imaging metrology. Institute of Electrical and Electronics Engineers Inc., Houston, TX, United States

Fan Q, Zhang Y, Bao F, Yao X, Zhang C (2016) Rational function interpolation algorithm based on parameter optimization. Jisuanji Fuzhu Sheji Yu Tuxingxue Xuebao/Journal of Computer-Aided Design and Computer Graphics 28:2034–2042. https://doi.org/10.11834/jig.170369

Peng S, Su W, Hu X, Liu C, Wu Y, Nam H (2018) Subpixel edge detection based on edge gradient directional interpolation and Zernike moment. 2018 Int Conf Comp Sci Software Eng (CSSE 2018):106–116. https://doi.org/10.12783/dtcse/csse2018/24488

Bagga PJ, Makhesana MA, Patel KM (2021) A novel approach of combined edge detection and segmentation for tool wear measurement in machining. Prod Eng Res Devel 15:519–533. https://doi.org/10.1007/s11740-021-01035-5

Zhu K, Yu X (2017) The monitoring of micro milling tool wear conditions by wear area estimation. Mech Syst Signal Process 93:80–91. https://doi.org/10.1016/j.ymssp.2017.02.004

Nausheen N, Seal A, Khanna P, Halder S (2018) A FPGA based implementation of Sobel edge detection. Microprocess Microsyst 56:84–91. https://doi.org/10.1016/j.micpro.2017.10.011

Wang B, Chen LL, Cheng J (2018) New result on maximum entropy threshold image segmentation based on P system. Optik 163:81–85. https://doi.org/10.1016/j.ijleo.2018.02.062

Chen H, Shen X, Long J (2016) Threshold optimization framework of global thresholding algorithms using gaussian fitting. Jisuanji Yanjiu yu Fazhan/Computer Research and Development 53:892–903. https://doi.org/10.7544/issn1000-1239.2016.20140508

Funding

This study is supported by the Jiangsu Provincial Key Research and Development Program (Grant No. BE2020779) and National Key Laboratory of Science and Technology on Helicopter Transmission (Grant No. HTL-O-21G10).

Author information

Contributions

Ruijun Liang designed the project and wrote the manuscript. Yang Li and Lei He performed the experiments. All authors participated in the interpretation of the results.

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

All participants are listed in the article or acknowledged in the paper.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Liang, R., Li, Y., He, L. et al. A novel image-based method for wear measurement of circumferential cutting edges of end mills. Int J Adv Manuf Technol 120, 7595–7608 (2022). https://doi.org/10.1007/s00170-022-09215-y

DOI: https://doi.org/10.1007/s00170-022-09215-y