Abstract

The response surface methodology (RSM), which uses a quadratic empirical function as an approximation to the original function and allows the identification of relationships between independent variables xi and dependent variables ys associated with multiple responses, stands out. The main contribution of the present study is to propose an innovative procedure for the optimization of experimental problems with multiple responses, which considers the insertion of uncertainties in the coefficients of the obtained empirical functions in order to adequately represent real situations. This new procedure, which combines RSM with the finite element (FE) method and the Monte Carlo simulation optimization (OvMCS), was applied to a real stamping process of a Brazilian multinational automotive company. For RSM with multiple responses, were compared the results obtained using the agglutination methods: compromise programming, desirability function (DF), and the modified desirability function (MDF). The functions were optimized by applying the generalized reduced gradient (GRG) algorithm, which is a classic procedure widely adopted in this type of experimental problem, without the uncertainty in the coefficients of independent factors. The advantages offered by this innovative procedure are presented and discussed, as well as the statistical validation of its results. It can be highlighted, for example, that the proposed procedure reduces, and sometimes eliminates, the need for additional confirmation experiments, as well as a better adjustment of factor values and response variable values when comparing to the results of RSM with classic multiple responses. The new proposed procedure added relevant and useful information to the managers responsible for the studied stamping process. Moreover, the proposed procedure facilitates the improvement of the process, with lower associated costs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Research background

In today’s intricate industrial environment, it is essential that organisations continuously improve their business processes, to sustain their competitive advantage [1].The field of industrial statistics and optimization has introduced a range of statistical and optimization methods for managing and improving processes in diverse industries [2,3,4].

In response surface methodology—RSM, an important DOE technique, linear or quadratic polynomial functions are employed to describe the problem studied, and to explore experimental conditions to obtain its optimization [5, 6].

In the field of operations research, Optimization via Monte Carlo Simulation (OvSMC) is suitable for problem solving with many local optimal solutions, including objective functions, complex restrictions, and continuous or discrete variables, as pointed out by Conway and [7] .

Monte Carlo simulation (MCS) provides decision-makers with a scenario analysis, with a range of possible outcomes and related probability distributions, besides also offering graphical results and sensitivity analysis [8]. MCS is based on the use of random numbers (sampling) and probability statistics to investigate problems in many areas, such as material science, economics, chemical and biophysics, statistical physics, nuclear physics and traffic flow [9]. Furthermore, MCS can be used to solve complex engineering problems because it can deal with a large number of random variables, various distribution types, and highly nonlinear engineering models [10].

The development of this study was primarily motivated by demands presented by companies in Brazil, in different sectors, which use DOE, mainly RSM, to improve their manufacturing processes and services. The problem observed by managers, in practice, was that, although the adopted RSM models could be statistically significant with respect to the modeled real situation, the adjustment of the optimized factors (or decision variables, xi), as recommended by RSM, sometimes had generated very different response variables values (ys) if compared to those observed in the real process. These situations have ended up creating difficulties to the managers regarding the adjustments that needed to be made in the company’s processes to improve performance, because they lost the confidence that the optimized values of the factors suggested by the RSM were, in fact, appropriate for implementation, since the investments in these changes can be high, as commented by [2].

In this context, a particular and important problem was presented by managers of a Brazilian multinational automotive industry and it is related to how to reduce the weight of stamping parts that, in recent years, has consumed great efforts of the engineers to get an adequate solution [11, 12]. It should be noted that it is not a simple problem, involving many relevant aspects for vehicle manufacturing, because that most of the energy absorbing members in vehicle body are fabricated by stamping process which can result in non-uniform thickness, substantial residual strains/stresses especially for high strength steel or advanced high strength steels, etc. [13, 14].

Besides these previous concerns, maybe it is not a good option to chose independent factors values, related to stamping process, by using a deterministic optimization procedure, because this could result in unreliable or unstable designs with respect to crashworthiness [15]. In fact, the occurrence of uncertainties about material properties, stamping process and geometry usually propagate from manufacturing phase to operational phase, and these can generate problems to the vehicle, for example, associated to crashworthiness [13].

There are two types of sheet metal forming methods: one is the incremental method based on flow theory, and the other is the total deformation theory of plasticity [16]. The former provides high precision, but it is time-consuming, and the latter is somewhat less accurate, but the simulation is rapid; i.e. it is suitable for the simulation of sheet metalstamping in the early design stages [16].

In order to mitigate the effects of uncertainty in stamping processes, since the early 1990s, there has been a significant increase in the use of simulations by finite element (FE) methods to sheet conformation in industry [17,18,19]. For example, FE simulations are useful for reducing lead time in the design and tool development stage, as pointed out by [20].

Pimental et al. [17] stated that problems involving new materials and new technologies constantly require the need to develop more robust, efficient, reliable and accurate FE analysis and simulation tools. These authors analyzed three FE software, AUTOFORMTM, PAM-STAMP TM and DD3IMPTM, and found that their accuracy was roughly the same. Thus, beyond the fundamentals differences in their FE formulation, AutoformTM and Pam-StampTM showed very similar numerical results, which was in good adherence with the real results. Similarly, [21] used simulations by finite element (FE) methods associated with RSM and ANOVA [4] to perform the optimization of multi-point forming process parameters.

In this study, an innovative procedure that include the application of optimization via Monte Carlo simulation—OvMCS [2] combined with response surface methodology—RSM [22] and finite element methods—FE [22] is proposed to analyze the impact of uncertainty occurrences in two objective functions (compression and traction) associated with stamping process parameters.

The main difference of this research when compared with the study developed by [2] is that, besides being a multi-objective problem involving multiple responses, the compression and traction functions are optimized through three agglutination methods: compromise programming—CP [23], desirability function [24] and modified desirability function [25]. Thus, the best option for a stamping process of a Brazilian multinational automotive company is proposed.

1.2 Contributions of this study

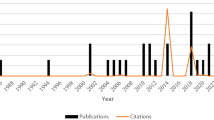

To verify the importance and to identify research opportunities in the area of experiment planning, a literature search was conducted, and the results are presented in Fig. 1. By using combinations of the keywords “Response Surface Methodology”, “Uncertainty” and “Monte Carlo Simulation” in the Scopus database, filtering results from 1989 to 2019, a total of 47 documents were identified, being 38 documents published between 2010 and 2021, which evidences the contemporaneity of the field.

Silva et al. [2] chronologically discussed the scope of these articles. Thus, it is possible to identify the innovative character of the new procedure described here, which propose an experimental design method incorporating uncertainty influences to the parameters.

Herein, the results of the literature survey are further explored by combining the following keywords: “Response Surface Methodology”, “Uncertainty” and “Monte Carlo Simulation”. Figure 2 shows a cluster map constructed using VOSViewer [26], in which the keywords that occurred with a frequency greater than or equal to five in these articles are highlighted.

An analysis of Fig. 2 reveals the formation of several clusters associated with representative keywords, and it can be inferred that there are researchers who combined RSM, FE, uncertainty and MCS, or RSM, as well as uncertainty and optimization. These results show the scientific and practical interest in combining these techniques. However, revealing the originality of our approach, none of the 51 articles published related to the cited keywords has adopted the same procedure proposed herein, i.e. the combination of the RMS, FE, MCS, and optimization for a multiple response problem related to a stamping process, considering uncertainty occurrence on the factors.

Finally, Table 1 presents the results of a search carried out in the Scopus database up to 2019, with the keywords “Design of Experiments”, “Response Surface Methodology”, “Polynomial Function”, “Uncertainty”, “Optimization”, “Monte Carlo Simulation” and “Optimization via Monte Carlo Simulation”. The filters used in this search were “Articles” and “Proceedings Papers”, in which keywords were cited in titles or abstracts.

It should be observed that only 74 publications included the keywords “DOE”, “Uncertainty” and “RSM” together, which indicates that there is still a good opportunity for research involving these subjects. Besides that, it should be pointed out that, when it was included the keyword “Optimization via Monte Carlo Simulation”, along with the three previous keywords, with the filters used, no papers were found in the consulted database.

Therefore, it should be highlighted that the present study provides an interesting academic and practical contribution to the field, since there is a need for new knowledge about experimental problems considering uncertainty. In this context, a proposal of an innovative approach for DOE, involving RSM, OvMCS and uncertainty is included, which was statistically validated by a real stamping process application.

1.3 Research questions and objectives

After the presented contextualization, this paper looked for answer the following research questions:

-

1.

What is a suitable procedure to incorporate uncertainties in the parameters of RSM problems with multiple responses?

-

2.

For a stamping process, for each agglutination method, how to choose weights values (ws) in the optimizations of the response variables?

-

3.

For a stamping process, what is the best agglutination method, with respect to traction and compression, that would provide a feasible solution to be implemented?

To answer these questions, the general objective of this study was to develop a new procedure, considering the uncertainty occurrences in the coefficients of the objective functions of experimental multi responses problems modeled by RSM. As previously mentioned, the method selected to insert uncertainty in the coefficients of the objective functions was Optimization via Monte Carlo Simulation (OvMCS). The specific objectives included applying the proposed procedure to a stamping process in a Brazilian multinational automotive company, aiming:

-

To identify the advantages of the proposed procedure compared to the traditional multiple response RSM, which adopts deterministic optimization.

-

To statistically validate the proposed procedure.

This paper is organized as follows. Section 2 presents definitions and concepts related to RMS and OvMCS. Section 3 describes agglutination methods for processes with multiple responses. Section 4 describes the method and materials and outlines the steps for the application of the proposed procedure. The results of the proposed procedure when applied to a real stamping process, and a comparison among different agglutination methods are in Section 5. Finally, Section 6 presents the conclusions and suggestions for further research.

2 Background of response surface methodology and optimization via Monte Carlo simulation

In this section, it is shown the features of two techniques that were used in the proposed procedure to deal with problems with multiple responses by the response surface methodology (RMS), considering the occurrence of uncertainties in the factors of the studied process. As highlighted by [27]), in process optimization problems, it is of fundamental importance to develop adequate models that mathematically describe the relationship between independent variables xi and response variables ys. The models can be classified in two broad classes [28]: phenomenological models and empirical models. Phenomenological models are based on a prior knowledge of the physical and chemical processes that are in some way associated to the studied systems [27]. On the other hand, empirical models are built from a statistical analysis of experimental observations, using regression [29] and design of experiments (DOE) techniques.

DOE is adopted to identify the important variables (or factors) in a process, and what their values (or conditions) should be to optimize the performance of the studied process. For each factor, based on the results of the experiments, limit values are selected, and, in general, two levels are tested for each factor. Therefore, the total number of experiments for a complete factorial design is given by 2n, with n being the number of studied factors. In this complete factorial experimental scheme, when the number of factors increases, the number of experiments increases exponentially, and it can become infeasible to use it when there are many factors to be considered [4].

An important and very useful DOE technique is RSM, which substitutes a complex optimization problem with a sequence of simpler problems, with objective functions approximated by response surfaces (usually a second degree polynomial), and enabling a faster resolution of large real problems [2, 5, 30]. As explained by [2], there are two traditional ways to model problems in RSM: central composite designs—CCD [4] and Box-Behnken designs—BBD [31]. In general, a response variable of interest y is related to the factors (variables) xi of a process and can be expressed by Eq. (1):

where 𝜖 is a random error, which includes the variations of the response variable y that are not explained by the factors x1,x2,…,xn.

Since, in the majority of practical problems, addressed by RSM, the function (1) is not known, it is adopted an approximation of the real function in the form of a second-order polynomial function [4] and [32], as given by Eq. (2):

where y is the (dependent) response variable, xi is the i th independent variable, xj is the j th independent variable, β0 is the offset term, βi is the linear effect and βii is the squared effect, βij is the interaction effect, and 𝜖 is a random error.

Due to the use of experimental data to estimate the parameters of the empirical function (2), it is necessary to construct Confidence Intervals (CI) for them using (3) [33]:

where \(\hat {\beta }\) is an estimated value for parameter β, SD is the associated standard deviation, and \( z_{1-\frac {\alpha }{2}}\) is obtained on a normal distribution table associated with the value of a significance level α.

An operations research (OR) technique utilized in the procedure proposed herein is Monte Carlo simulation—MCS, which is the common name for a wide variety of probability techniques, and it is a powerful statistical analysis tool. It is based on the use of random numbers (sampling) and probability statistics to investigate problems in fields as diverse as material science, economics, chemistry and biophysics, statistical physics, nuclear physics, flow of traffic flow and many others [9].

MCS is commonly used for solving complex engineering problems, since it can deal with a large number of random variables, various distribution types, and highly nonlinear engineering models [10, 34, 35]. Besides that, MCS is useful to help the manager in industrial-related problems, because of the need to optimize systems or processes that are frequently influenced by uncertainties, for example, in the coefficients of the objective function and in the coefficients in the restrictions [36]. As pointed out by [37], the MCS is an adequate way to evaluate the possible consequences of uncertainties in the optimization problems [38].

Sahinidis [39] pointed out that stochastic optimization is applied in several areas of knowledge, such as in production planning, natural resource management, and finance. One of its advantages is the possibility of extracting a set of relevant information related to the problem in question, and thus, enabling the analysis of different scenarios. In this sense, Optimization via Monte Carlo Simulation (OvMCS), which aims to find the best values for simulation model input parameters in the search of one or more desired outputs, has been shown to be relevant to deal with stochastic optimization problems [40].

According to [41], an OvMCS problem can be formulated as being:

where G: \(\mathbb {R}^{n} \times {\Omega } \to \mathbb {R}\) and the expectation is taken with respect to probability P, defined in a sample space (Ω,F) and X⊂ \(\mathbb {R}^{n}\).

It should be noted that, in practical problems, the search of an adequate solution does not necessarily imply the determination of optimal operating conditions, since it is practically impossible to establish the optimum point, due to the unlimited number of variables that impact an industrial process [27]. In this way, local optimum solution within a feasible search subspace [42] and using Heuristics or Metaheuristics Methods [43] can be performed for process improvement.

For [44], simultaneous optimization of multiple responses has been a priority in many industries, and much of the effort has been directed to researching alternative methods for the efficient determination of process adjustments that achieve predetermined goals. Moreover, multiple-response optimization problems often involve conflicting objectives [45], such as in manufacturing processes related to the minimization of production time versus equipment cost.

According to [46], there is still a gap between theory and practice in the optimization using multiple responses, and it is common to use agglutinated functions of multiple responses to obtain a single objective function to be optimized. Since there are a wide variety of agglutination methods, a comparative study of these methods is necessary to determine their individual efficiency [27]. The most used process optimization method in scientific studies is the joint use of the desirability function with the generalized reduced gradient (GRG) method [25]. In the next section, three agglutination methods are presented, which were tested in the procedure proposed in the present study for optimization with RSM, in the occurrence of uncertainties.

3 Agglutination methods for processes with multiple response

3.1 Compromise programming

Compromise programming (CP) was firstly presented by [47]. This method is characterized by the attempt to identify solutions that might be closer to an “ideal” solution, considering the distance between a given solution and the ideal solution [23]. This ideal (or target) solution is only a point of reference for the decision maker (DM). Thus, CP assumes that any DM seeks a solution as close as possible to an ideal point, and to achieve this closeness, a distance function is introduced into the analysis. It should be noted that the concept of distance is not used in its geometric sense, but as a proxy measure for human preferences [48].

When seeking the minimization of a given function fs(x), CP can be obtained from Eq. (5) and (6):

where, for fs(x), Ts is the ideal value and ws is its weight, usually it is adopted t = 2.

Since the units used to measure various objectives are different, they must be normalized in order to avoid a meaningless summation [49].

3.2 Desirability function

One of the most commonly used techniques to simultaneously optimize multiple responses is to transform the equations that model each of these (answers) responses into individual utility functions [27]. The next step is optimize a global utility function known as total desirability function (DF), given in terms of the individual utility functions [4]. [24] present individual utility functions (d) for nominal-the-best (NTB), larger-the-better s and maller-the-better (STB) answers, as described below:

- When the target value (T) of an answer (\(\hat {y})\) is between a maximum value (U) and a minimum value (L), the response is said to be of the NTB type. The utility function (d) is defined in Eq. (7):

where R and S are weighting factors, which may assume values greater than 1 when it is desired to prioritize the maximization, or the minimization, of the response [27, 50].

- When the target value (T) of an answer (\(\hat {y})\) must reach the minimum value of the function, the response is said of STB type. The utility function (d) is defined in Eq. (8):

- When the target value (T) of an answer (\(\hat {y})\) must reach the maximum value of the function, the response is said to be of LTB type. The utility function (d) is defined in Eq. (9):

According to [4], the agglutination of the multiple responses involved in a problem can be performed by maximizing a global utility function given by Eq. (10):

where s is the number of responses to be optimized.

Moreover, it is possible to use Eq. (11) instead of Eq. (10) to determine the value of the global utility function, which allows assigning weights (ws) to each utility function (ds), as presented by [27]:

3.3 The modified desirability function

Chng et al. [25] propose that the global utility function calculated by Eq. (11) could be modified as an arithmetic mean to avoid obtaining false optimal values. The proposed modifications can be seen in Fig. 3, where \(\hat {y_{s}}\) is the studied response s, \(d_{s}(\hat {y_{s}})\) is the utility function value for the response s, U is an upper limit for response \(\hat {y_{s}}\), L is a lower limit for response \(\hat {y_{s}}\).

Modified desirability function. Source: [25]

As presented and discussed by [25], the utility function ds can be calculated by Eq. (12), and there are three cases to be considered for the (ideal) target value (Ts) and the associated utility function ds value, expressed by Eq. (13), (14) and (15):

Therefore, according to [25], a new global utility function, named by modified desirability function—MDF, can be calculated by Eq. (16):

where \(d_{s}(\hat {y_{s}})\) and ds(Ts) are, respectively, function and target values associated to the estimated response \(\hat {y_{s}}, s\) is the total number of responses that were agglutinated by the function D and ws is the weight assigned to \(d_{s}(\hat {y_{s}})\), with \(\sum \limits _{s=1}^{p} w_{s}=1\).

The following section presents the classification of this study and materials and methods. Moreover, it is described the proposed procedure for optimizing experimental problems under uncertainty, using RSM and OvMCS..

4 Materials and methods

The research described in this study can be classified as being applied in its nature, presenting normative empirical objectives based on a quantitative approach. The technical procedures included the realization of experiments and the use of modeling and simulation [29]. Figure 4 presents a flowchart with the steps for applying the new proposal to optimize an experiment with multiple responses, with uncertainty occurrences, including the combination of RSM, finite element method and OvMCS.

Proposed procedure flowchart. Source: Adapted from [2]

The techniques used to apply of the proposed procedure (see Fig. 4) were:

-

–

Step 2 - Generation of the empirical functions representing the objective functions of RSM was performed with the ordinary least squares (OLS) algorithm [4].

-

–

Step 3 - The traditional (deterministic) optimization was performed with the generalized reduced gradient—GRG algorithm, available in Solver from Ms-ExcelTM.

-

–

Step 5 - OptQuest optimizer from the Crystal-BallTM Trial Version software [51] towas used to complete the optimizations in three stages (Fig. 5). OptQuest incorporates metaheuristics to guide its search algorithm towards better solutions [52].

OptQuest Flow. Source: [52]

The eight steps of the general procedure proposed, as shown in Fig. 5, are described as follows:

Step 1 - To identify the experimental problem to be addressed by RSM, finite element method and OvMCS. This involves understanding the context in which the manufacturing process or services will be studied and the identification of the response variables of interest and the independent variables that influence this process. Data are collected to obtain the experimental matrix with the values of the response variables and the associated independent variables considered in the process. The experimental matrix was coded according to the RSM technique by adopting: central composite designs (considering two levels for each factor) or Box-Behnken designs (considering more than two levels for each factor).

Step 2 –To generate empirical functions associated with the objective functions of RSM, which represent the response variables (ys) as a consequence of the independent variables considered in the process to be studied.

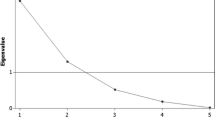

The encoded experimental matrix associated with the process studied, obtained in step 1, it is used to generate the empirical functions and the confidence intervals (CI), of 95% and 99%, for all the coefficients of the independent variables. Based on the results from the F and t-Student statistical tests [4], in Eq. (2), the terms that are not significant with α = 5% are disregarded. Observe that, in Eq. (2), if the terms of the interaction effects, β11x1x2,…,βnxn− 1xn are significant, it is recommended to keep the terms from the linear effects, β1x1,β2x2,…,βnxn, even if they are not significant.

The justification for the choice of these confidence values for the CI that 95% is the standard used in experimental problems addressed by RSM and 99% represents the greatest range of variation of the coefficients of the empirical function in relation to the value estimated by the regression analysis.

Step 3 -To optimize the empirical functions ys, obtained in step 2, by agglutination methods, without considering the occurrence of uncertainties. The GRG algorithm can be used to solve the two optimization problems, it is available in the Solver of MS-ExcelTM [53].

Step 4 - To enter the occurrence of uncertainties in the coefficients (β) of the empirical function, obtained in step 2. For this purpose, based on [4], continuous uniform distribution in the [a, b] interval was chosen, which represents the situation in which any value, among the limits considered, has the same probability of occurring. The values of the lower (a) and upper (b) limits should be tested, associated with the CI of 95% and 99%, for each coefficient of the empirical function, considerando a probability density function (PDF) as shown in Fig. 6:

Continuous uniform distribution. Source: [4]

In this context, [54] pointed out that based on the quantitative approach, the evaluation measures are calculated as the probabilities of occurrence of the main events and the reliability or insecurity of the main events.

Step 5 - To optimize the empirical functions with the occurrence of uncertainties, obtained in step 4, where the decision variables are the factors xi and the response variables are ys. OvMCS is applied with support from the Crystal Ball TM software and the OptQuestTM optimizer.

Step 6 - To analyze the results from the traditional approach (step 3 - deterministic optimization) with the results of the optimization under uncertainty (step 5 - with OvMCS - CI - 95% and OvMCS - CI - 99% This will be done by comparing the optimized values of the factors (xi) and the final value of the optimized objectives functions (ys), observing similarities and differences.

Step 7 - To analyze the behavior of the response variable from the experimental problem optimized by OvMCS and statistical validation. The statistical validation will be done through a confirmation experiment, relating it to the behavior of the objective functions and to the factors optimized by OvMCS.

Step 8- Recommendations.

After statistical validation, it will be possible to use different configurations in the experiment and to accurately predict the confidence interval of the investigated response variables, carrying out more realistic experiments for different experimental configurations, without the need to carry out the confirmation experiment, which is often time-consuming and costly.

For the purposes of illustration and validation of the proposed general procedure, including the occurrence of uncertainties in the coefficients of the objective functions of experimental problems modeled by RSM, a real case involving a stamping process in a Brazilian multinational automotive company was used. The studied instance has five factors, and the response variables were y1 (traction) and y2 (compression), RSM with Box-Behnken design was used to illustrate how to statistically validate the proposed procedure.

To solve the example, we used an Intel Core i7-9750H coffee lake refresh, 12MB cache, GHZ processor 2.6 to 4.5 turbo Boost, with 32 GB RAM, and Windows operational system, with Video car NVIDIATM GeForceTM RTX 2070 GPU (8GB GDDR6) MAX-Q design. The computational time for performing optimization by GRG algorithm was about two seconds, and the simulation stop rule was used to perform OvMCS by optimizer Optquest of the Crystal BallTM software. There were a total of 1000 simulations runs with 1000 replications each, and the computational time was about twenty minutes. In the next Section, the proposed procedure application for this studied real problem is presented in details.

5 A stamping problem solved by the proposed procedure

In the sequence, considering a real stamping problem, the results obtained in each step (see Fig. 8) of the proposed procedure are presented.

Step 1 - To identify the experimental problem

The object of study was a Brazilian multinational automotive company, with 31 units in 14 countries and about 15 thousand employees. It is a manufacturer of steel wheels and stamped automotive components, including chassis of small and heavy vehicles. The studied product was a transmission cross member, with thickness of 6.8 mm, using LNE 380 steel. The products of stamping supports are manufactured using raw material supplied by steel mills in coils or flat sheets. The products obtained with this manufacturing process are made of structural steels with mechanical strengths from 260 to 600 (MPa), thicknesses ranging from 2 to 10 (mm) and lengths from 100 to 2200 (mm), with weights between 0.1 and 20 (kg). The stamping tool is the device by which the most varied stamping components are obtained. The upper part of the device is fixed in the movable part of the press, which is called “hammer”, and in the lower part is called “lower table”, as shown in Fig. 7.

The actuation of the press causes the displacement of the hammer and the upper part of the tool, which provides the mechanical action of the upper part of the tool against the lower part that is fixed in the lower base of the press. With this action, the tool geometry is transferred to the plate, obtaining the product in the desired shape by plastic deformation of the material. The product obtained in the stamping operation might or might not undergo other press operations, such as drilling, cutting, and calibration, among others. Then, it is ready for the final painting operation. Thickness reduction in the critical region of the embossed crosspiece, subjected to tensile stresses during the embossing process, and increased compression thickness in the critical region of the embossed crosspiece might cause cracks to occur during the stamping operation, increasing the risk of product breakage when running into field in the same stretching and compression region. Figure 8 shows the bottom of the tool, currently used by the company, with no compensation in the lower stamp.

The major problem identified by stamping professionals is the lack of parameters to define the compensations in the tool design phase, which undermines the decisions made by the company’s engineers and specialists regarding the geometry to be adopted for the tools.

At this stage, it is common to use specific finite element method simulation software (such as AutoformTM Software). Nevertheless, even with all the experience that engineers have acquired over time, this is a slow process and should be repeated several times. Inadequate decisions are often made, leading to additional costs as well as additional stamping operations.

As an example of this procedure, para o produto estudado, Fig. 9 was constructed with the AutoformTM Software, which plots the stress-strain curve, which is an important tool to evaluate the fracture strength of a material. In fact, in the stamping industry, finite element simulation is a critical step to optimize of the sheet metal forming processes [55], and an indispensable input to the finite element model is the flow stress curve of the sheet material, also known as the true stress-strain curve [55].

Therefore, the studied company has to solve a complex problem associated with the occurrence of cracks in stamped parts, as highlighted in red in Fig. 9, which shows the reduction of the thickness of the crossbeam, from 6.8 mm to 4.857 mm, obtained with the current configuration of the mold during the stamping process. In summary, the problems detected by the engineers were:

-

–

Reduction of thickness in the critical region (traction) of the stamped components during the forming process.

-

–

Increase of thickness in the critical (compression) region of the stamped components during the forming process.

-

–

Incidence of cracks during the forming operation and possibility of cracks during use of the product.

-

–

Lack of parameters to define compensations, in the tool project planning phase.

The following text describes the application of the proposed procedure when dealing with this practical situation.

Step 1 - Identification of the experimental problem to be solved by RMS and FE and OvMCS

The practical situation involves choosing the number of inserts and their position in the mold in the stamping process to mitigate the effects of Traction (response variable y1) and Compression (response variable y2) on the studied product. As presented in [2], the company’s engineers suggested to placing inserts of different heights along the mold to decrease the appearance of cracks. As an experiment in planning strategy, before using the Box-Behnken design, a Plackett-Burman design [4] was used, since initially there were many (in a total of 23) xi factors (positioning the inserts in the mold) to be investigated (see Fig. 10).

Geometry of the mold of the stamped product without and with the inserts. Source: [2]

Therefore, in a similar way like it was made and described in details in [2] for one response variable, from the practical experience of the company’s engineers, and based on the Pareto chart built by the MinitabTM software [4], it was possible to reduce the number of xi factors from 23 to 15, and a Plackett-Burman design N16 experiment was performed for an one-response variable, now considering two response variables y1 and y2.

After using the Plackett-Burman design N16, the number of factors was reduced to five, which was the number used in the application of the RSM with Box-Behnken design, as shown in Table 2.

Figure 11 shows the adjustments of the inserts made in the mold for the execution of the RSM with Box - Behnken design experiment. Appendix A presents all the 243 = 35 possible combinations used in this experiment. As an illustration, a small part of it is reproduced in Table 3.

Positioning the inserts for the RSM and Box-Behnken design. Source: Adapted from [2]

Step 2 - Generate empirical functions for the two responses variables by RSM

By applying the OLS algorithm, with the data from Table 3, the empirical quadratic function (18)–(19) and the constraints (20)–(22), for y1 (traction) were obtained:

subject to:

By applying the OLS algorithm, with the data from Table 3, the empirical quadratic function (23)–(24) and the constraints (25)–(27), for y2 (compression) were obtained:

subject to:

Step 3 - Optimizing the empirical functions without uncertainty

In this step, without considering the occurrence of uncertainty in the coefficients of the empirical functions, three agglutination methods were tested for both response variables: compromise programming (CP), desirability function (DF) and modified desirability function (MDF). The generalized reduced gradient (GRG) method was applied to optimize (19)–(22) and (24)–(27).

Tables 4, 5 and 6 present the results obtained by the GRG algorithm for each agglutination method, highlighting the best-fit values. Appendix B presents the FE simulations for these best fits.

The weight values for each optimization scenario were calculated using a mixture design [4]. Additionally, for the application of the Desirability function, with the support of the company engineers, the values of the parameters U and L, were established, respectively, for \(\hat {y_{1}}\) and \(\hat {y_{2}}\): U= 6.8 and L= 4.857, L= 6.8 and U= 9.193. Finally, to apply the modified desirability function, also with the support of the company’s engineers, the values of the parameters U and L, were established, respectively, for \(\hat {y_{1}}\) and \(\hat {y_{2}}\): U= 6.8 and L= 4.857, L= 6.8 and U= 9.193. In addition, as a suggestion of the consulted engineers, it was adopted T = (L + U)/2 and by using Eq. (14) resulted in ds(Ts) = 1.

Step 4 - Insert the occurrence of uncertainty in the coefficients of empirical functions

Equation (28) presents the new coefficients of the empirical function \(\hat {y_{1}}\) (associated to response variable Traction), with the insertion of uncertainty, as is in Table 7, for CI - 95% and CI - 99%:

Table 7 shows for \(\hat {y_{1}} \) the CI - 95% and the CI - 99%, as is in [2].

Equation (29) presents the new coefficients of the empirical function \(\hat {y_{2}}\) (associated to response variable Compression), with the insertion of uncertainty, as is in Table 8, for CI - 95% and CI - 99%:

Step 5 - Optimize empirical functions with uncertainty

For both studied response variables, Tables 9 and 10, show, respectively, the results of OvMCS - CI - 95% and OvMCS - CI - 99%, using Crystal BallTM software with its OptQuestTM optimizer, with the compromise programming—CP function.

For both studied response variables, Tables 11 and 12 show, respectively, the results of OvMCS - CI - 95% and OvMCS - CI - 99%, using Crystal BallTM software with its OptQuestTM optimizer, with the desirability function—DF function.

For both studied response variables, Tables 13 and 14 show, respectively, the results of OvMCS - CI - 95% and OvMCS - CI -99%, using Crystal BallTM software with its OptQuestTM optimizer using the modified desirability function—MDF.

Step 6 - Analyzing the results of traditional multiple-response optimization with RSM (obtained in step 3), and with RSM and FE and OvMCS (obtained in step 5)

The engineers of the studied company considered that a 20% thickness increase or decrease is acceptable for the studied stamping process, and the best fit should meet the following criteria

-

-

\(\hat {y_{1}}_{\min \limits }\) = 5.440 (mm).

-

-

\(\hat {y_{2}}_{\max \limits }\) = 8,160 (mm).

-

-

keeping the lowest value for the ratio: \(\frac {\hat {y_{2}}}{\hat {y_{1}}}\).

From the 45 scenarios generated by optimization (See Tables 4, 5, 6, 9, 10, 11, 12, 13 and 14), only 5 scenarios met the established criteria:

-

–

Solution by OvMCS - CI - 95%, with weights w1= 0.75 and w2 = 0.25, using CP - Found at the last line of Table 9 .

-

–

Solution by OvMCS - CI - 95%, with weights w1= 0.75 and w2 = 0.25, using DF - Found at the last line of Table 11.

-

–

Solution by OvMCS - CI - 99%, with weights w1= 0.75 and w2 = 0.25, using DF - Found at the last line of Table 12 .

-

–

Solution by GRG algorithm, with weights w1= 0.75 and w2 = 0.25, using CP - Found at the last line of Table 4.

-

–

Solution by GRG algorithm, with weights w1= 0.75 and w2 = 0.25, using DF - Found at the last line of Table 5.

Using the information in Appendix B for illustration, Figs. 12 and 13, show the EF simulation of the best optimization settings for the CP function without the uncertainties (Table 4 in step 3) with the uncertainties (Table 9 in step 5), respectively.

With these results, the engineers of the company under study were asked which solution (without uncertainty - step 3 or with uncertainty - step 5) would provide the best practical effect on the studied stamping process. The answer was that the best performance setting would be that obtained for CP, with weights w1 = 0.75 and w2 = 0.25, using OvMCS - CI - 95% (Fig. 13). This adjustment better met all three criteria already discussed, and thus would provide the best practical tool configuration, resulting in increased quality and reliability of the stamping process.

Step 7 - Analyze the behavior of the response variables from RSM and FE and OvMCS. Validate the results

Model validation of uncertain structures is a challenging research focus because of uncertainties involved in modeling, manufacturing processes, and measurement systems [56].

In order to validate the proposed procedure, graphical analysis was used, as shown in Figs. 14 and 15, respectively, to evaluate the behaviors of both response variables \(\hat {y_{1}}\) and \(\hat {y_{2}}\), associated with the adjustment chosen as being the most appropriate in step 6, which was the solution by OvMCS - CI - 95%, with weights w1 = 0.75 and w2 = 0.25, using CP.

Considering this solution, Figs. 14 and 15 were obtained by performing 106 simulation runs in Crystal-BallTM Trial Version software, using the OptQuest optimizer, according to the flowchart in Fig. 5, for the response variables y1 and y2, respectively. In this simulation, random values were generated for the coefficients of the empirical functions (19) and (24), according to continuous uniform distributions for the factor coefficients, which are shown in the second column of Table 7, associated with the CI - 95% interval.

Figure 16, also considering the CP agglutination method, with w1 = 0.75 and w2 = 0.25 shows the behavior of the solutions obtained by OvMCS - CI - 95% for each of the 106 simulation runs for the CP function. All the solutions were viable, with the Best Solutions arranged parallel to the abscissa axis (number of simulations), drawn at the bottom of Fig. 16. There was only one last best solution, which is highlighted with a green rhombus symbol.

As verified by the simulation with the finite element method, the obtained solution presented good quality in the understanding of the company engineers. Thus, the proposed procedure can be considered validated. Finally, it should be noted that the procedure was very promising, presenting advantages when compared with the traditional methods of optimization of experimental multi-response problems, commonly adopted in companies.

Step 8 - Recommendations

Therefore, at the end of the application of the proposed procedure, it was recommended to the engineers of the company the use the solution obtained for CP, with weights w1 = 0.75 and w2 = 0.25, using OvMCS - CI - 95% (Fig. 13) for the studied stamping process.

6 Conclusions and suggestions for future research

Therefore, with the conduction of the present study, all research questions were answered and the established objectives were achieved. Based on the tests performed for a stamping problem at a Brazilian multinational automotive company, an innovative procedure that combines RSM and FE with OvMCS was described. This procedure proved to be adequate to introduce the occurrence of uncertainties in the objective function coefficients of experimental multi-response problems, adopting the continuous uniform distribution. Furthermore, it was statistically validated by the studied stamping problem.

For the studied real problem, regarding the tested multiple response methods, it was possible to identify the best choices for the weight values for the optimizations, calculated by a mixture design methodology, which would be w1 = 0.75 and w2 = 0.25, met the criteria set by the company’s engineers. Finally, it was evidenced that the best option would be to use OvMCS - CI - 95% with the compromise programming function and the weight values mentioned above.

RSM and FE combined with OvMCS surpasses the use of the procedures that are usually adopted in companies, which is the (deterministic) optimization for experimental problems with multiple responses, using the GRG algorithm and the desirability function. The use of different agglutination methods with different weights was interesting because it allowed the generation of 15 scenarios without considering uncertainties (see Tables 4, 5 and 6) and 30 scenarios for optimization considering uncertainties (see Tables 9, 10, 11, 12, 13 and 14). Thus, it allowed the development of a sensitivity analysis that generated more knowledge about the studied stamping process.

This system provided engineers with useful information that will facilitate their work when seeking improvements to the process. In fact, the application of OvMCS - CI - 95%, with the continuous uniform distribution used to insert the occurrence of uncertainties in the objective function coefficients presented interesting options to the managers. Moreover, it also proposed a higher quality solution than the one generated by the GRG algorithm and the Desirability function.

For future study, it is intended to test the new procedure proposed here in other types of application, such as service companies, inserting the occurrence of uncertainties, which is an important research gap for both the academic as well as the practical point of view.

References

Prashar A (2016) A conceptual hybrid framework for industrial process improvement: integrating taguchi methods, shainin system and six sigma. Production Planning & Control 27:1389–1404

Silva AF, Marins FAS, Dias EX, Oliveira JBS (2019) Modeling the uncertainty in response surface methodology through optimization and monte carlo simulation: an application in stamping process. Materials & Design 173:107776

Prashar A (2016) Using shainin doe for six sigma: an Indian case study. Production Planning & Control 27:83–101

Montgomery DC (2009) Design and analysis of experiments. New York: Wiley Inc.

Babaki M, Yousefi M, Habibi Z, Mohammadi M (2017) Process optimization for biodiesel production from waste cooking oil using multi-enzyme systems through response surface methodology. Renew Energy 105:465–472

Yanga X, GSHZZM (2018) Application of design for six sigma tools in telecom service improvement. Production Planning & Control 29:959–971

Conway RT, Sangaline EW (2017) A monte carlo simulation approach for quantitatively evaluating keyboard layouts for gesture input. International Journal of Human-Computer Studies 99:37–47

Ye W, You F (2016) A computationally efficient simulation-based optimization method with region-wise surrogate modeling for stochastic inventory management of supply chains with general network structures. Comput Chem Eng 87:164–179

Marzouk M, Azab M, Metawie M (2018) Bim-based approach for optimizing life cycle costs of sustainable buildings. J Clean Prod 188:217–226

Gass S, Assad A (2005) Model world: tales from the time linedthe definition of or and the origins of monte carlo simulation. Interfaces 35:429–435

Xiao W, Wang B, Zhou J, Ma W, Yang L (2016) Optimization of aluminium sheet hot stamping process using a multi-objective stochastic approach. Eng Optim 48:2173–2189

Yoon T-J, Oh M-H, Shin H-J, Kang C-Y (2017) Comparison of microstructure and phase transformation of laser-weldedjoints in al-10wtstamping. Mater Charact 128:195–2022

Sun G, Zhang H, Wang R, Lv X, Li Q (2017) Multiobjective reliability-based optimization for crashworthy structures coupled with metal forming process. Struct Multidiscip Optim 56:1571–1587

Lampón JF, R-LE (2021) Modular product architecture implementation and decisions on production network structure and strategic plant roles. Production Planning & Control 0:1–16

Lampón JF, C-P G-BJ (2017) The impact of modular platforms on automobile manufacturing networks. Production Planning & Control 28:335–348

Zhang X, Zhu X, Wang C, Liu H, Zhou Y, Gai Y, Zhao C, Zheng G, Hang Z, Hu P, Ma Z-D (2018) Initial solution estimation for one-step inverse isogeometric analysisin sheet metal stamping. Computer methods in applied mechanics and engineering 330:629–645

Pimental AMF, Alves JLCM, Merendeiro NMS, Vieira DMF (2018) Comprehensive benchmark study of commercial sheet metal forming simulation softwares used in the automotive industry. Int J Mater Form 11:879–899

Bargmann S, Klusemann B, Markmann J, Schnabel JE, Schneider K, Soyarslan C, Wilmers J (2018) Generation of 3d representative volume elements forheterogeneous materials: a review. Prog Mater Sci 96:322–384

González C, Vilatela JJ, Molina-Aldareguía, Lopes CS, LLorca J (2017) Structural composites for multifunctional applications: Currentchallenges and future trends. Prog Mater Sci 89:194–251

Jadhav S, Martin S, Bruno B (2018) Applications of finite element simulation in the development of advanced sheet metal forming processe. erg- und hüttenmännische Monatshefte 163:109–118

Abosaf M, Essa K, Alghawail A, Tolipov A, Sul S, Pham D (2017) Optimisation of multi-point forming process parameters. International Journal Advanced manufucaturing Technology 92:1849–1859

Mori K, Matsubara H, Noguchi N (2004) Micro–macro simulation of sintering process bycoupling monte carlo and finite element methods. Int J Mech Sci 46:841–854

Ringuest JL (1992) Multiobjective optimization:behavioral and computational considerations. Boston College Chestnut Hill, MA 02167-3808 USA

Derringer S, Suich R (1980) Simultaneous optimization of several response variables. J Qual Technol 12:214–219

Ch’ng CK, Quah SH, Low HC (2005) A new approach for multiple-response optimization. Qual Eng 17:621–626

Tomaszewski R (2018) A comparative study of citations to chemical encyclopedias in scholarly articles: Kirk-othmer encyclopedia of chemical technology and ullmanns encyclopedia of industrial chemistry. Scientometrics 117:175–189

Gomes FM, Pereira FM, Silva AF, Silva MB (2019) Multiple response optimization: analysis of genetic programming for symbolic regression and assessment of desirability functions. Knowl-Based Syst 179:21–33

Chen X, Mei C, Xu B, Yu K, Huang X (2018) Quadratic interpolation based teaching-learning-based optimization for chemical dynamic system optimization. Knowl-Based Syst 145:250–263

Bertrand JWM, Fransoo JC (2002) Operations management research methodologies using quantitative modeling. International Journal of Operations and Production Management 22:241–264

Nayak MG, Vyas AP (2018) Optimization of microwave-assisted biodiesel production from papayaoil using response surface methodology. Renewble Energy 138:18–28

Goupy J, Creighton L (2007) Introduction to design of experiments with jpm examples, Third. SAS Institute Inc., Cary, NC, USA

Bobadilla MC, Lorza RL, García RE, Gómez FS, González EPV (2017) An improvement in biodiesel production from waste cooking oil by applying thought multi-response surface methodology using desirability functions. Energies 10:1– 20

Lawson JS (2010) Design and analysis of experiments with sds. Chapman & Hall

Papadrakakis M, Papadopoulos V (1996) Robust and efficient methods for stochastic finite element analysis using monte carlo simulation. Computer methods in applied mechanics and engineering 134:325–340

Hohe J, Paul H, Bechmann C (2018) A probabilistic elasticity model for long fiber reinforced thermoplastics with uncertain microstructure. Mech Mater 122:118–132

Durugbo C, Tiwari A, Alcock J (2013) Modelling information flow for organisations: a review of approaches and future challenges. Int J Inf Manag 33:597–610

Kroese DP, Taimre T, Botev ZI (2011) Handbook of monte carlo methods. Wiley, New York

Gentle JE (2003) Random number generation and monte carlo methods. Springer, New York

Sahinidis NV (2004) Optimization under uncertainty: state-of-the-art and opportunities. Computers & Chemical Engineering 28:971–983

Miranda RC, Montevechi JAB, Silva AF, Marins FAS (2014) A new approach to reducing search space and increasing efficiency in simulation optimization problems via the fuzzy-dea-bcc. Math Probl Eng 1:1–15

Shapiro A (2001) Monte carlo simulation approach to stochastic programming

Dehuri S, Cho S-B (2009) Multi-criterion pareto based particle swarm optimized polynomial neural network for classification: a review and state-of-the-art. Computer Science Review 3:19–40

Dey S, Mukhopadhyay T, Adhikari S (2017) Metamodel based high-fidelity stochastic analysis of composite laminates: a concise review with critical comparative assessment. Compos Struct 171:227–250

Boylan GL, Cho BR (2013) Comparative studies on the high-variability embedded robust parameter design from the perspective of estimators. Computers & Industrial Engineering 64:442–452

Kuriger GW, Grant FH (2011) A lexicographic nelder–mead simulation optimization method to solve multi-criteria problems. Computers & Industrial Engineering 60:555–565

Ehrgott M, Ide J, Schöbel (2014) Minmax robustness for multi-objective optimization problems. Eur J Oper Res 239:17–31

Zeleny A (1974) A concept of compromise solutions and the method of the displaced ideal. Comput Oper Res 1:479– 496

Kanellopoulos A, Gerdessen JC, Claassen GDH (2015) Compromise programming: non-interactive calibration of utility-based metrics. Eur J Oper Res 244:519–524

Jadidi O, Zolfaghari S, Cavalieri S (2015) A new normalized goal programming model for multi-objective problems: a case of supplier selection and order allocation. Int J Prod Econ 148:158–165

Saxena V, Kumar N, Saxena VK (2019) Multi-objective optimization of modified nanofluid fuel blends at different tio2 nanoparticle concentration in diesel engine: Experimental assessment and modeling. Appl Energy 248:330–353

ORACLE (2018) Optquest. www.oracle.com/technetwork/middleware/crystalball/overview/optquest-128316.pdf

Oracle (2018) How optquest works. www.docs.oracle.com/cd/E57185_01/CBOUG/ch02s03.html

Köksoy O, Doganaksoy N (2003) Joint optimization of mean and standard deviation using response surface methods. J Qual Technol 35:239–252

Patel SC, Graham JH, Ralston PAS (2008) Quantitatively assessing the vulnerability of critical information systems: a new method for evaluating security enhancements. Int J Inf Manag 28:483–491

Zhao K, Wang L, Chang Y, Yan J (2016) Identification of post-necking stressstrain curve for sheet metals by inverse method. Mech Mater 92:107–118

Bi S, Deng Z, Chen Z (2013) Stochastic validation of structural fe-models based on hierarchical clusteranalysis and advanced monte carlo simulation. Finite Elem Anal Des 67:22–333

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethics approval

This paper does not use data, which requires ethical approval, since all studies are done in a computational way. However, the main contribution of this article is to propose a new procedure that considers the insertion of uncertainties in the coefficients of the empirical functions in RSM with multiple responses, in practical experimental problems. It was possible to determine that RSM combined finite elements with Optimization via Monte Carlo Simulation (OvMCS) outperforms the use of (deterministic) optimization, using the generalized reduced gradient (GRG) algorithm, which is traditionally employed in RSM applications. Therefore, this paper does not directly involve people or biological information.

Additional information

Author contribution

The author Aneirson Silva was responsible for writing the article as well as programming in VBA-excel for the optimization multiobjectives problems and, also, for the statistical modeling of the data.

The author José Benedito was responsible for the writing of the article as well as the modeling and simulation by finite elements.

The author Fernando Marins was responsible for writing the article as well as for the final correction of the paper.

The author Erica Ximenes was responsible for writing the article and statistical and mechanical analysis.

Consent to participate and consent to publish

All authors are aware of their participation and also regarding the publication of this paper.

Prof. Aneirson Francisco da Silva

Prof. Fernando Augusto Silva Marins

Phd. Erica Ximenes Dias

Msc. José Benedito da Silva Oliveira

Competitive interests

I am submitting the article Multiple Response Optimization and Finite Element Method combined with Optimization via Monte Carlo Simulation in a stamping process under uncertainty. The main contribution of this article is to propose a new procedure that considers the insertion of uncertainties in the coefficients of the empirical functions in RSM with multiple answers, in practical experimental problems. This innovative procedure provides managers with useful information that will facilitate their work in seeking improvements in the analyzed printing process.

The main competitive interests are linked to companies that produce automotive components, since this system proposes an innovative way to analyze and improve stamping process problems.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

da Silva, A.F., Marins, F.A.S., da Silva Oliveira, J.B. et al. Multi-objective optimization and finite element method combined with optimization via Monte Carlo simulation in a stamping process under uncertainty. Int J Adv Manuf Technol 117, 305–327 (2021). https://doi.org/10.1007/s00170-021-07644-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-021-07644-9