Abstract

Milling is among the most commonly used, flexible, and complex machining methods, and the adequate selection of process parameters, modeling, and optimization of milling remain challenging. Owing to the complex morphology of the cutting process, edge and surface defects as well as elevated cutting forces and temperatures are the main observations when inadequate cutting parameters are selected. Therefore, modeling surface roughness and cutting forces in the milling process is of prime importance. Neuro-fuzzy networks can model such processes while accounting for uncertainties, and meta-heuristic approaches can set the coefficients of these networks precisely. In the present study, coupled models of the adaptive neuro-fuzzy inference system (ANFIS-type fuzzy neural networks) and interval type-2 fuzzy neural networks (IT2FNN) with evolutionary learning algorithms, namely particle swarm optimization (PSO) and the genetic algorithm (GA), were therefore used to predict the mean values of directional cutting forces as well as the average surface roughness (Ra) in milling aluminum alloys (AA6061, AA2024, AA7075) under various cutting conditions and insert coatings. The main innovation of the present study is the implementation of the IT2FNN-PSO method in machining operations; no similar research in this regard was found in the literature. According to the results, the proposed methods led to precise modeling results with high correlation with the experimental outputs. The IT2FNN-PSO model performed better than the two ANFIS-based models.


1 Introduction

Milling is one of the most commonly used machining operations in numerous manufacturing sectors and involves complex modes of chip formation and interactions between the cutting tool and the work part. Therefore, the cutting parameters must be selected adequately; otherwise, several machining difficulties, including chatter vibrations, fluctuating cutting forces, and elevated cutting temperatures, are expected. These tend to deteriorate the surface quality, prolong the machining time, and increase the machining cost.

Surface quality and cutting forces are among the most important milling attributes. The average surface roughness (Ra) and the mean values of directional cutting forces are the major indicators of surface integrity and cutting forces in milling operations. A good understanding of the factors governing these attributes is therefore needed. Benardos and Vosniakos [1] divided the modeling techniques applied to predict the surface roughness into three major categories: experimental, analytical/numerical, and artificial intelligence (AI) models. Experimental and analytical models are based on experimental information and conventional approaches such as the statistical regression known as RSM [1,2,3,4]. Artificial intelligence models use various approaches such as artificial neural networks (ANNs) [5,6,7,8], genetic algorithms (GA) [5, 9], fuzzy logic [10], and hybrid systems [11,12,13].

Analytical/numerical approaches mainly depend on machining kinematics, cutting tool properties, and interaction effects between cutting parameters. Because many cutting and non-cutting factors are involved in the machining process, these approaches, in spite of their strong theoretical background, do not yield accurate models. In addition, several phenomena such as wear, cutting tool deflection, and thermal effects are not yet well modeled. Experimental approaches generate experimentally based data, which are, however, not reliable when different cutting parameters are used. In statistical regression analyses, the researchers' insight plays a major role and no systematic formulation is available. In general, the results cover a limited practical range, and because some factors are ignored, unexpected results are also possible. Furthermore, an experimental study over a broad range of cutting parameters is time-consuming and costly [1, 14]. Since adequate mathematical equations and comprehensive knowledge about the direct and indirect effects of cutting parameters on machining responses are not available, the use of AI-based methods to develop near-reality models has been of interest to the industrial and academic sectors. AI-based approaches are highly capable of modeling sophisticated phenomena and uncertainties. Artificial neural networks (ANN), fuzzy logic systems, and network-based fuzzy inference systems are among the most popular AI methods and have been widely used for modeling and optimization of machining processes [14,15,16,17,18,19,20,21,22,23,24,25].

In the field of modeling and predicting Ra, Karayel [15] introduced and trained a multi-layer feedforward ANN model [16] to predict and control Ra in turning, which led to an average error of 0.023. Gupta et al. [17] introduced an ANN-based GA model as a hybrid system. Many studies of AI-based models, including radial basis function networks (RBFN), fuzzy hybrid networks, and GA, have reported acceptable accuracy and reliability [18, 19]. Similarly, modeling and analysis of shear forces in milling operations have been an interesting topic in recent decades. In fact, machining force measurement and analysis are important steps in process monitoring, as they support sound decisions and proper reactions aimed at improving and optimizing the quantity and quality of production. In addition, an appropriate force estimate helps to predict related phenomena in machining operations, such as tool wear, satisfactorily. Kumar et al. [20] and Noordin et al. [21] have shown that RSM is an acceptable approach for predicting cutting forces from experimental data. The GA is also among the approved methods for modeling and predicting cutting forces [22, 23]. Various studies have also addressed neural and neuro-fuzzy networks with a Takagi-Sugeno rule base, as well as ANFIS networks with various learning algorithms such as BP [24,25,26].

Nowadays, the applications of aluminum alloys, which exhibit strong features including a high strength-to-weight ratio, high thermal and electrical conductivity, as well as acceptable machinability, are growing within numerous manufacturing sectors. It is therefore recommended to extend the use of the proposed approach to widely used aluminum alloys.

Barua et al. [27] used an RSM-based approach to find the influence of cutting speed, depth of cut, and feed rate on Ra in machining Al 6061-T4. Under the specific cutting conditions used, a reliability of 90% was found. Daniel et al. [28] developed a multi-objective prediction model using ANN for milling an aluminum hybrid metal matrix, which led to better performance than regression models. Karkalos et al. [29] stated that the performance of the ANN is more acceptable than that of RSM regression when used in milling Ti-6Al-4V. Sharkawy et al. [13] used ANFIS and genetically evolved fuzzy inference system (G-FIS) methods to predict Ra in the milling of Al 6061. It was shown that, despite the advantages of these methods over experimental methods and their appropriate performance, soft computing methods still need to be improved. The presence of uncertainties, noise, and complex factors affecting the milling of aluminum alloys necessitates the use of more complex and reliable approaches.

To address the aforementioned gap in the open literature, the coupled model of ANFIS networks with PSO and GA meta-heuristic learning algorithms, and the IT2FNN with a PSO learning algorithm, were used in this work to predict the mean cutting forces as well as the average surface roughness (Ra) recorded in slot milling of AA 6061-T6, AA 7075-T6, and AA 2024-T351 at various levels of cutting parameters. Thanks to their meta-heuristic learning methods, these networks are better able to adjust the coefficients of fuzzy neural networks. Owing to its type-2 membership functions, IT2FNN is the most capable of accounting for uncertainties. To that end, networks with the experimental inputs and the machining attributes as outputs were formed with the ANFIS-GA, ANFIS-PSO, and IT2FNN-PSO methods. The main innovation of the present study is the implementation of hybrid type-2 neuro-fuzzy networks with the PSO evolutionary learning method. No similar study in this regard was found in the literature.

2 Modeling approach

Three types of fuzzy neural networks with meta-heuristic learning algorithms, namely ANFIS-GA, ANFIS-PSO, and the interval type-2 fuzzy neural network with PSO (IT2FNN-PSO), were applied to a specific portion of the experimental data used as training data. The rest of the input and output data were used as testing data. Afterward, the performance of the proposed networks as predictors or estimators was evaluated by comparing the network outputs with the experimental data.

In the current study, the AI methods were developed on the basis of a multi-input network. The relationship between inputs and outputs is not necessarily linear, and these networks were chosen for their ability to estimate different nonlinear machining factors. The overview of the modeling scheme is presented in Fig. 1. The main proposed equation is as follows:

\( {Y}_k={f}_k\left({x}_1,{x}_2,\dots, {x}_N\right) \)

where x represents the inputs, Yk represents the k-th output, and fk represents the estimated function. N represents the number of inputs (decision variables). In the current study, the inputs x1 to x4 are the depth of cut (ap), feed per tooth (Fz), cutting speed (Vc), and coating material, respectively. Moreover, Y1 represents Ra, while Y2 to Y4 are the mean cutting forces in three directions.

2.1 A. Adaptive neuro-fuzzy inference system (ANFIS)

An ANFIS combines a fuzzy system with a neural system. It takes advantage of human knowledge and experience through fuzzy systems and rules of the form if…then…, together with the ability of neural systems to create and train networks. The design steps of the ANN model and the neuro-fuzzy networks are shown in Figs. 2 and 3. More information in this regard can be found in [30].

To simplify the proposed ANFIS structure, it is assumed that the presented system has the following two rules:

pi, qi, zi, hi, and ri are the linear parameters of the modeling (consequent) part, which are updated during network training.

As shown in Fig. 4, an ANFIS structure consists of five layers:

Layer 1: This layer is known as the fuzzifier. Membership functions are assigned to each input in this layer. In other words, the output of each square node is:

where \( {\mu}_{A_{\mathrm{ki}}} \) represents the MFs of each input, and Aki represents the linguistic variables associated with each of these nodes. In most ANFIS structures, the following Gaussian MFs are used as \( {\mu}_{A_{1\mathrm{i}}} \) and \( {\mu}_{A_{2\mathrm{i}}} \):

x is the input and [ai, bi, ci] are the parameters of each proposed MF.

Layer 2: This is the rule layer. The output of each node represents the firing strength of the corresponding rule for the given inputs. The operator of this layer can be the minimum or the product; in most cases, the product operator is used. For instance, the outputs of the second-layer nodes are as follows:

Layer 3: This is the normalization layer. Every node in this layer is a fixed node labeled N. The output of each node is the ratio of the firing strength of its rule to the total firing strength of all rules, and is expressed as:

Layer 4: This layer is also known as the defuzzification layer. Every node in this layer is adaptive. The output value of each rule is determined from the previous layers and the inputs as follows:

where \( {\overline{w}}_i \) is the normalized firing strength from the third layer and pi, qi, zi, hi, and ri are the parameters of the consequent part (modeling results).

Layer 5: This is the total output layer. It sums all output values of the previous layer, as follows:

In this article, ANFIS networks have been used to construct two of the proposed networks. Subtractive clustering has been used to create the initial structure of these networks before any network training. In this method, every data point is considered as a potential cluster center with a given influence radius. The computational programs and codes developed with the Matlab toolbox then determine which points are most appropriate as cluster centers.
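The five layers described above can be illustrated with a minimal sketch of one forward pass. This is not the authors' Matlab implementation: the function name `anfis_forward`, the two-parameter Gaussian MF (center and width), and the layout of the parameter lists are assumptions made for illustration, with one MF per input and per rule.

```python
import math


def anfis_forward(x, centers, sigmas, consequents):
    """Output of a first-order Sugeno ANFIS for one input vector x.

    centers[i][j], sigmas[i][j]: assumed Gaussian MF of rule i on input j.
    consequents[i]: linear coefficients of rule i followed by its constant
    term, e.g. [p, q, z, h, r] for four inputs.
    """
    # Layer 1: membership grade of every input in every rule's fuzzy set
    mu = [[math.exp(-0.5 * ((xj - c) / s) ** 2)
           for xj, c, s in zip(x, c_row, s_row)]
          for c_row, s_row in zip(centers, sigmas)]
    # Layer 2: firing strength of each rule (product operator)
    w = [math.prod(row) for row in mu]
    # Layer 3: normalized firing strengths
    total = sum(w)
    w_bar = [wi / total for wi in w]
    # Layer 4: linear rule outputs weighted by the normalized firing strengths
    f = [sum(a * xj for a, xj in zip(coef[:-1], x)) + coef[-1]
         for coef in consequents]
    weighted = [wb * fi for wb, fi in zip(w_bar, f)]
    # Layer 5: overall output as the sum of all weighted rule outputs
    return sum(weighted)
```

With inputs normalized to [0, 1], a call such as `anfis_forward([0.5, 0.2, 0.8, 0.0], centers, sigmas, consequents)` returns one crisp prediction; a separate parameter set would be needed for each of the four outputs.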

2.2 B. Interval type-2 fuzzy and fuzzy neural network

Zadeh [31] introduced the concept of type-2 fuzzy sets as an extension of type-1 fuzzy systems. In this type of system, the membership grade is itself fuzzy, which leads to two MFs for type-2 fuzzy sets, called the primary and secondary memberships, both of which are subsets of the interval [0, 1]. After the introduction of type-2 fuzzy systems, the next steps toward building such systems and handling the uncertainty of these sets were taken by Karnik and Mendel [32], who introduced the KM reduction algorithms [32, 33]. Nowadays, owing to the precision of their prediction results, KM reduction algorithms are widely applied in various domains and processes [16, 34].

Interval type-2 fuzzy logic systems

All neuro-fuzzy systems have a fuzzifier, a rule base, a fuzzy inference engine, and a defuzzifier. The only difference between type-2 and type-1 fuzzy systems is that a type-2 system has a type reducer before the defuzzifier, which converts the type-2 fuzzy variables into type-1. Considering a Takagi-Sugeno-Kang (TSK) fuzzy logic system with four inputs, as in the present research (ap, Fz, Vc, and C), leads to the following formulations:

In addition, the four outputs, consisting of Ra and the mean cutting forces in three directions, are as follows:

where X1, …X4 and Y1, …, Y4 represent the sets of MFs for each input or output.

An IT2-TSK FLS, like a T1FLS, uses if-then fuzzy rules to express the relationships between inputs and outputs, following the procedure presented in Fig. 5. Because of the type-2 fuzzy structure, after fuzzification each input has an interval of the form [a, b] on its MFs, so the firing strength of each rule also has a lower and an upper limit. Therefore, an interval must be obtained for each rule when the rule firings are calculated. The type reduction step is then applied using established type reduction algorithms such as the KM algorithm [32, 33].

The block diagram of type-2 fuzzy logic [35]

Interval Type-2 Fuzzy Neural Network

The IT2FNN introduced in this article is an adaptive neuro-fuzzy network with six layers (Fig. 6). It is noteworthy that, owing to the complexity of the network with four inputs, the network is illustrated with two inputs. The first and sixth layers represent the inputs and outputs of the system, respectively (the inputs are ap, Fz, Vc, and C, and the outputs are Ra and the cutting forces). The second layer consists of adaptive nodes that hold the upper and lower membership values of each input in the related MF. The third layer represents the specified rules, and its outputs determine the lower and upper firing degrees of each rule. The fourth layer represents the consequent of each rule and is formed by applying the firing outputs of the previous step to the adaptive outputs. The fifth layer performs type reduction of the previous layer's outputs using the Karnik-Mendel algorithm [32, 33]. The sixth and last layer produces the final crisp outputs.

The proposed system is assumed to have four inputs and four outputs (multi-input multi-output). The general definition of the proposed rules in the IT2FNN system for the aluminum milling process is as follows:

where k = 1,…,4, Yk represents the k-th output, and i represents the index of the i-th rule. \( {\overset{\sim }{A}}_{j,k}^i \) (j = 1,…,4) are the interval type-2 antecedent sets related to each input. \( \tilde{y}_{i}^k \) is the k-th output of the i-th rule in the form of a type-1 fuzzy set. \( {\overset{\sim }{C}}_{j,k}^i \) are the consequent interval type-1 fuzzy sets (explained further below).

The procedure and functions of the network layers are described as follows:

Layer 1: inputs

Layer 2: the layer that applies the MFs to the inputs

A Gaussian MF with constant variance and an uncertain mean in [\( \underset{\_}{m} \),\( \overline{m} \)] is considered for the inputs (Fig. 7). The output of each MF contains a lower and an upper value, denoted by \( \underset{\_}{\mu_{k,i}}\left({x}_i\right)\ \mathrm{and}\kern0.5em \overline{\mu_{k,i}}\left({x}_i\right) \), respectively. The following lines present the concepts of the MF:

k represents k-th proposed rule, and i represents the i-th input. The Gaussian function is defined as follows:

where:

Thus, in this layer, the intervals associated with different MFs are determined in each output.

Layer 3: the rule firing layer. The product is used as the operator in this layer.

Layer 4: the adaptive inputs and firing layer of the consequences. The equations of this layer are as follows:

In principle, Eq. 21 is formulated to form interval consequences and output in this layer is determined as follows:

where

In Eq. 23, \( {c}_{k,i} \) represents the center of \( {C}_{k,i} \) and \( {s}_{k,i} \) represents the deviation of \( {C}_{k,i} \). This layer is based on ADALINE, as used in NNs [36].

Layer 5: or type reduction layer

The values of L and R can be obtained from the Karnik-Mendel algorithm.

Layer 6: Defuzzification layer

Using the formulations presented in Eqs. (15–26), the developed neuro-fuzzy network can be completed [37]. In the next step, the training of the mentioned networks with the meta-heuristic algorithms will be addressed. The ANFIS network is trained with GA and PSO learning algorithms and the IT2FNN is trained with the PSO algorithm.
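The membership, type-reduction, and defuzzification steps of Layers 2, 5, and 6 can be sketched as follows. The equations of these layers are not reproduced here, so the block uses the textbook forms: a Gaussian primary MF with uncertain mean in [m1, m2], the iterative Karnik-Mendel procedure for the two end points, and the average of the end points as the crisp output. The function names and argument layout are hypothetical, and the firing strengths are assumed to be strictly positive.

```python
import math


def it2_gaussian(x, m1, m2, sigma):
    """Lower and upper membership of x for a Gaussian MF whose mean is
    uncertain in [m1, m2] and whose width sigma is fixed."""
    def gauss(m):
        return math.exp(-0.5 * ((x - m) / sigma) ** 2)

    if x < m1:
        upper = gauss(m1)
    elif x > m2:
        upper = gauss(m2)
    else:
        upper = 1.0
    lower = gauss(m2) if x <= (m1 + m2) / 2.0 else gauss(m1)
    return lower, upper


def km_endpoint(y, f_lower, f_upper, side, max_iter=100):
    """Karnik-Mendel iteration for the left ('L') or right ('R') end point
    of the type-reduced interval. y holds one consequent value per rule."""
    # Start from the midpoint of each firing interval
    f = [(lo + up) / 2.0 for lo, up in zip(f_lower, f_upper)]
    y_prev = sum(fi * yi for fi, yi in zip(f, y)) / sum(f)
    y_new = y_prev
    for _ in range(max_iter):
        if side == "L":
            # Left end point: upper firing for small y, lower firing for large y
            f = [up if yi <= y_prev else lo
                 for yi, lo, up in zip(y, f_lower, f_upper)]
        else:
            # Right end point: lower firing for small y, upper firing for large y
            f = [lo if yi <= y_prev else up
                 for yi, lo, up in zip(y, f_lower, f_upper)]
        y_new = sum(fi * yi for fi, yi in zip(f, y)) / sum(f)
        if abs(y_new - y_prev) < 1e-12:
            break
        y_prev = y_new
    return y_new


def defuzzify(y_left, y_right, f_lower, f_upper):
    """Crisp output taken as the midpoint of the type-reduced interval."""
    y_l = km_endpoint(y_left, f_lower, f_upper, "L")
    y_r = km_endpoint(y_right, f_lower, f_upper, "R")
    return (y_l + y_r) / 2.0
```

In a full network, `f_lower` and `f_upper` would be the products of the lower and upper memberships of each rule (Layer 3), and `y_left` and `y_right` the end points of the interval consequents (Layer 4).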

2.3 C. Genetic algorithm (GA)

The GA uses mechanisms inspired by biological evolution, such as mutation, inheritance, crossover, and selection, to solve an optimization problem. A candidate solution, which contains the values of all variables, is encoded in a set called a chromosome, so each chromosome represents a possible answer to the problem. The process starts with an initial population, i.e., a number of random solutions, created by initializing every gene of each chromosome. In this work, the problem is normalized so that each gene is assigned a number in the interval [0, 1]. The cost function is the difference between the experimental outputs and the values predicted by the introduced networks for each pair of input-output data. The algorithm then reduces the cost function in an iterative loop, for which the population size and the number of iterations must be specified.
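The loop described above can be sketched as a small real-coded GA with genes in [0, 1]. The operators chosen here (tournament selection, blend crossover, Gaussian mutation, elitist replacement) and the function name `genetic_minimize` are assumptions for illustration; the text does not state which operators were used.

```python
import random


def genetic_minimize(cost, n_genes, pop_size=40, iterations=100,
                     crossover_frac=0.7, mutation_rate=0.1, seed=0):
    """Minimize cost(chromosome) over chromosomes with genes in [0, 1]."""
    rng = random.Random(seed)
    # Initial population of random chromosomes
    pop = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    pop.sort(key=cost)
    history = [cost(pop[0])]
    n_children = int(crossover_frac * pop_size)
    for _ in range(iterations):
        children = []
        while len(children) < n_children:
            # Tournament selection of two parents
            p1 = min(rng.sample(pop, 3), key=cost)
            p2 = min(rng.sample(pop, 3), key=cost)
            # Blend crossover between the two parents
            a = rng.random()
            child = [a * g1 + (1.0 - a) * g2 for g1, g2 in zip(p1, p2)]
            # Gaussian mutation applied gene by gene
            child = [g + rng.gauss(0.0, 0.1) if rng.random() < mutation_rate
                     else g for g in child]
            # Keep every gene inside the normalized interval [0, 1]
            child = [min(1.0, max(0.0, g)) for g in child]
            children.append(child)
        # Elitist replacement: best pop_size of parents plus children survive
        pop = sorted(pop + children, key=cost)[:pop_size]
        history.append(cost(pop[0]))
    return pop[0], history
```

For network training, `cost` would decode the chromosome into the network parameters and return the prediction error on the training data; because of the elitist replacement, the best cost never increases from one iteration to the next.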

2.4 D. Particle swarm optimization algorithm (PSO)

Similar to GA, the PSO algorithm is an evolutionary algorithm. It consists of several particles that form a swarm and explore the search space of the problem for an optimal solution. The motion memory of each particle, as well as that of the other particles, determines the direction and speed of that particle in the search space. The position of each particle is updated on the basis of its current position, its velocity, the best position found so far by that particle (pbest), and the distance between the particle position and the best global position (gbest). The cost function in this algorithm is an error function. The problem is solved by repeating the search loop over the search space with a proper number of particles and suitable values of the parameters that affect the velocity.
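The update rule described above can be sketched with the common inertia-weight form of PSO. The values of the inertia weight `w` and the acceleration coefficients `c1` and `c2` are assumptions, since the text does not report them, and `pso_minimize` is a hypothetical name.

```python
import random


def pso_minimize(cost, n_dims, n_particles=40, iterations=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost(position) with a basic inertia-weight particle swarm."""
    rng = random.Random(seed)
    pos = [[rng.random() for _ in range(n_dims)] for _ in range(n_particles)]
    vel = [[0.0] * n_dims for _ in range(n_particles)]
    # Personal bests start at the initial positions
    pbest = [p[:] for p in pos]
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]
    history = [gbest_cost]
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(n_dims):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull toward pbest + pull toward gbest
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
        history.append(gbest_cost)
    return gbest, gbest_cost, history
```

As with the GA, `cost` would wrap a network evaluation on the training data; the global best cost is only replaced by a strictly better value, so the recorded history is non-increasing.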

2.5 E. Training ANFIS and IT2FNN using optimization algorithms

The prediction accuracy of the networks introduced in the previous sections is an important factor and is pursued by means of optimization algorithms. The PSO and GA learning algorithms are applied to ANFIS, and the PSO algorithm is used for IT2FNN. For the ANFIS network, as described in Eq. (5), there are three parameters [ai, bi, ci] for each MF in the antecedent part, so the number of such parameter sets equals the total number of input MFs. In the consequent part, pi, qi, zi, hi, and ri, defined above as the linear consequent parameters, are the variables of each rule, so there is one such set per rule. All these variables are collected in a vector called a chromosome and given to the GA and PSO algorithms.

The parameters of the antecedent and consequent parts are also trained in the IT2FNN. In the antecedent part, the variables \( {m}_{k,i}^1 \), \( {m}_{k,i}^2 \), and σk, i, which are the means and the variance, respectively, are given to the algorithm as learning variables. In the consequent part, ck, i, sk, i, ck, 0, and sk, 0 are the learning variables. After these values are arranged as a swarm, they are passed to the PSO algorithm, and the optimization is run with an appropriate number of iterations and velocity parameters. The process of these learning algorithms is shown in Fig. 8.

2.6 F. Performance criteria

Several evaluation criteria have been used to assess the prediction accuracy of the developed models. The MSE is a popular criterion for quantifying the difference between targets and outputs; lower values indicate better prediction performance. This criterion is expressed as follows:

\( \mathrm{MSE}=\frac{1}{N}\sum_{i=1}^N{\left({x}_i-{y}_i\right)}^2 \)

Another criterion, which shows the standard deviation of the prediction errors from the target values, is the RMSE:

\( \mathrm{RMSE}=\sqrt{\frac{1}{N}\sum_{i=1}^N{\left({x}_i-{y}_i\right)}^2} \)

The relationship between outputs and targets is shown by R, which is defined by the following equation:

\( R=\frac{\sum_{i=1}^N\left({x}_i-\overline{x}\right)\left({y}_i-\overline{y}\right)}{\sqrt{\sum_{i=1}^N{\left({x}_i-\overline{x}\right)}^2\sum_{i=1}^N{\left({y}_i-\overline{y}\right)}^2}} \)

where xi, yi, \( \overline{x} \), \( \overline{y} \), and N represent the observed values, the predicted values, the average of the observed data, the average of the predicted data, and the number of data, respectively.
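The three criteria can be computed with a few lines of code. The sketch below assumes the usual definitions (mean of squared errors, its square root, and the Pearson correlation coefficient) and uses hypothetical function names.

```python
import math


def mse(observed, predicted):
    """Mean square error between observed and predicted values."""
    n = len(observed)
    return sum((x - y) ** 2 for x, y in zip(observed, predicted)) / n


def rmse(observed, predicted):
    """Root mean square error."""
    return math.sqrt(mse(observed, predicted))


def correlation(observed, predicted):
    """Pearson correlation coefficient R between observed and predicted."""
    n = len(observed)
    x_bar = sum(observed) / n
    y_bar = sum(predicted) / n
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(observed, predicted))
    var_x = sum((x - x_bar) ** 2 for x in observed)
    var_y = sum((y - y_bar) ** 2 for y in predicted)
    return cov / math.sqrt(var_x * var_y)
```

Note that a constant offset between predictions and targets leaves R equal to 1 while MSE and RMSE are non-zero, which is why the criteria are reported together.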

2.7 G. Mackey-Glass time series

The Mackey-Glass time series is used to validate the created networks and compare their performances (Fig. 9). The delays used in this research for predicting the Mackey-Glass time series are 6, 12, 18, and 24.

2.8 H. Prediction modeling procedure

In order to validate the proposed networks, several experiments have been performed on aluminum alloys including AA 6061, AA 2024, and AA 7075.

3 Results and discussion

3.1 Experimental plan

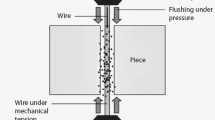

The dry slot milling operations were performed on a 3-axis CNC machine tool (power 50 kW, speed 28000 rpm, torque 50 Nm) using a coated end milling tool (E90-A-D.75-W.75-M) with three teeth and a tool diameter D of 19.05 mm. Rectangular blocks of aluminum alloys with a size of 3.5 × 3.5 × 1.2 cm were used in the milling tests. According to the experimental parameters listed in Table 1, 162 tests were conducted in total: 18 milling tests were conducted for each coated insert on each material, giving 54 tests per material. Experimental works were repeated once, and the average values of the responses were considered for the analysis of results.

The following experimental parameters were used:

Feed per tooth (mm × z−1) [0.01, 0.055, 0.1]

Cutting speed (m × min−1) [300, 750, 1200]

Depth of cut (mm) 1

Insert coating = [TiCN, TiAlN, TiCN + Al2O3 + TiN] 4

Tested materials: [AA 2024-T351, AA6061-T6, AA 7075-T6]
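The test counts quoted above can be cross-checked by enumerating the parameter levels. The sketch below assumes a full-factorial plan in which every condition is run twice (the original run plus one repetition), which is an inference from the stated totals rather than something the text spells out.

```python
from itertools import product

feeds = [0.01, 0.055, 0.1]       # feed per tooth
speeds = [300, 750, 1200]        # cutting speed, m/min
depths = [1]                     # depth of cut, mm
coatings = ["TiCN", "TiAlN", "TiCN+Al2O3+TiN"]
materials = ["AA 2024-T351", "AA 6061-T6", "AA 7075-T6"]
replicates = 2                   # assumption: each condition is run twice

# One dictionary per milling test in the assumed full-factorial plan
runs = [
    {"material": m, "coating": c, "depth": a, "feed": f, "speed": v, "run": r}
    for m, c, a, f, v, r in product(
        materials, coatings, depths, feeds, speeds, range(1, replicates + 1)
    )
]

total_tests = len(runs)
tests_per_material = sum(1 for t in runs if t["material"] == "AA 6061-T6")
tests_per_coating = sum(
    1 for t in runs
    if t["material"] == "AA 6061-T6" and t["coating"] == "TiCN"
)
```

Under these assumptions the enumeration gives 18 tests per coating on each material, 54 tests per material, and 162 tests in total, which matches the numbers reported for the experimental plan.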

The following considerations and assumptions were made in the course of experimental works.

A 3-axis dynamometer (Kistler-9255B) was used to record the signals. The sampling frequencies 24 kHz and 48 kHz were used.

As noted earlier, average surface roughness (Ra) was recorded as the surface quality index.

3.2 A. The Mackey-Glass time series prediction

The Mackey-Glass time series was used to validate the proper response of the assumed networks. The differential equation of the time series is as follows:

It is assumed that x(0) = 1.2 and τ = 17. This non-periodic and non-convergent time series is very sensitive to initial conditions. For the times less than zero, it is assumed that x(t) = 0. Developed models report high performance for predicting output data according to MSE, RMSE, and R criteria (Table 2).
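A short sketch of how such a benchmark series and its lagged samples might be generated is given below. The differential equation is not reproduced in the text, so the commonly used form dx/dt = 0.2 x(t − τ) / (1 + x(t − τ)^10) − 0.1 x(t) is assumed; the Euler step size, the function names, and the reading of the delays 6, 12, 18, and 24 as input lags are also assumptions.

```python
def mackey_glass(n_points, tau=17.0, x0=1.2, dt=0.1,
                 beta=0.2, gamma=0.1, power=10):
    """Euler integration of the (assumed) Mackey-Glass equation.

    Returns the samples at integer times t = 0, 1, ..., n_points - 1.
    """
    steps_per_unit = round(1.0 / dt)
    delay = round(tau / dt)
    x = [x0]
    for k in range((n_points - 1) * steps_per_unit):
        # History condition: x(t) = 0 for t < 0
        x_tau = x[k - delay] if k >= delay else 0.0
        dx = beta * x_tau / (1.0 + x_tau ** power) - gamma * x[k]
        x.append(x[k] + dt * dx)
    return x[::steps_per_unit]


def lagged_samples(series, lags=(24, 18, 12, 6)):
    """Pairs ([x(t-24), x(t-18), x(t-12), x(t-6)], x(t)) for network training."""
    start = max(lags)
    return [([series[t - lag] for lag in lags], series[t])
            for t in range(start, len(series))]
```

The resulting input-target pairs can be split into training and testing portions and fed to any of the three networks to compare their MSE, RMSE, and R values.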

The conditions and assumptions used in the models are as follows:

ANFIS-GA: number of clusters = 20; max iteration = 1000; number of population = 40; crossover percentage = 0.7; mutation percentage = 0.8; mutation rate = 0.1

ANFIS-PSO: number of clusters = 20; max iteration = 1000; number of population = 40

IT2FNN-PSO: number of clusters = 20; max iteration = 1000; number of population = 40; membership function = Gaussian membership function with uncertain mean

It is noteworthy that all network and learning-algorithm parameters have a considerable effect on the modeling and prediction procedure. Determining how many parameters are used and adjusting their values are among the most important steps of the modeling process. The initial coefficients converge to their desired values while the learning algorithms run. The conditions and assumptions introduced above were obtained by repeating the proposed algorithms under different conditions and selecting the structure that best predicted the training data (Fig. 10).

3.3 B. Performance of proposed neuro-fuzzy models

3.3.1 Training performance of forecasting milling process

The proposed networks have four outputs that represent the predicted values of Ra and the mean values of the directional cutting forces. The values calculated by the proposed algorithms for the training data are shown in Figs. 11, 12, and 13. The simple linear regression models for the predicted values of Ra and the mean values of the directional cutting forces are shown in Figs. 14, 15, and 16. It should be noted that, based on Table 1, 18 experiments have been carried out for each aluminum alloy and each coating. Therefore, 54 experiments have been carried out on each type of aluminum, which led to 162 tests in total. For clarity, all tests related to each Al alloy are shown in separate figures. Among the experimental data, 45 tests were used for training purposes. The horizontal axis of Figs. 11, 12, 13, 14, 15, 16, 17, and 18 represents the number of experimental tests used for training.

Figures 11, 12, and 13 show the comparison between the experimental and predicted values for each response studied. Similarly, in Figs. 14, 15, and 16, as a sample of the performance of the proposed algorithms, the correlation coefficients of the three algorithms ANFIS-GA, ANFIS-PSO, and IT2FNN-PSO are provided for Ra. The vertical axis presents the relationship between the targets and the outputs of the given model, and the fitting line shows the desired relationship between both variables. The third algorithm, i.e., IT2FNN-PSO, gives the best performance. Figures 11, 12, 13, 14, 15, and 16 were prepared on the basis of the training data. This means that the inputs and the experimental data (as targets) are provided to the algorithms, and only the coefficients of the algorithms need to be adjusted efficiently based on the input-output pairs. Therefore, the correlation coefficient is the best criterion to assess their relationship. The next step is to evaluate the testing inputs, predict Ra and the cutting forces, and compare them with the experimental results.

3.3.2 Testing performance of forecasting milling process

In order to evaluate the efficiency of the proposed neuro-fuzzy systems presented in Figs. 14, 15, and 16, 20% of the experimental results were used to test the efficiency and accuracy of the developed networks. The performance of these systems in the testing process was evaluated with the MSE, RMSE, and R criteria. Figures 17 and 18 present the error and the linear regression models of the cutting force in the z-direction for AA7075. A high correlation can be seen between the modeling and experimental values. The capability of the developed models to predict the milling outputs is shown in Table 3. Fig. 17 presents the mean error, i.e., the mean difference between the experimental values and the outputs of the proposed algorithms. Figure 18 shows the correlation between the experimental data and the tested targets. In the testing part, the experimental data of Ra and the mean forces are not used to predict the outputs; the outputs of the proposed algorithms are only compared with their experimental values afterward. All proposed models are capable of predicting the milling outputs. In general, using the GA and PSO optimization algorithms improved the modeling results owing to their capability of efficient optimization and adjustment of the coefficients. In this case, the effect of using PSO is more tangible.

Type-2 neuro-fuzzy systems handle uncertainties better through their type-2 MFs, which, instead of assigning a single fuzzy number to the output of each membership function, use an interval fuzzy set that can hold more uncertainty than a type-1 fuzzy set. This improves their prediction ability, so more accurate responses and lower values of the error criteria are expected. The comparison between the training and test data of the milling outputs for all three alloys is presented in Table 3. As mentioned in the introduction, owing to the complexity of the many factors involved in the milling process, an approach that considers uncertainties and unknown phenomena can improve modeling performance, and IT2FNN, being capable of handling these factors, provides better performance.

4 Conclusion

Milling operations play an important role in high precision machining and rapid production of numerous manufacturing processes, products, and materials, including aluminum alloys which exhibit high thermal and electrical conductivity and low ductility. The surface quality of the machined parts as well as mean values of directional cutting forces are among the main machinability attributes which need to be considered and factors governing their variation must be clearly defined.

In the course of this study, the main machinability attributes, including surface roughness Ra and the mean values of directional cutting forces, were studied for modeling and, consequently, optimization purposes. An effective prediction of these machinability attributes using the proposed strategies may reduce the need for experimental measurement, support adequate process parameter selection, and lower machining costs.

The main conclusions of this paper are the following:

1. An ANFIS-GA network was established and used to predict Ra and the mean values of the directional cutting forces, with GA as the learning algorithm. The best performance was achieved with 200 neurons in the hidden layer, 20 clusters, 1000 iterations, and a population size of 40. The experimental values of Ra could be modeled adequately: for the three aluminum alloys tested, correlation coefficients of 0.97, 0.802, and 0.812 were found between the modeled and experimental values of Ra.

2. ANFIS-PSO was the second model used in this paper for the same purposes, with the same input parameters as the ANFIS-GA model. The performance of this model is notably good: the correlation coefficients of the predicted models for the three aluminum alloys were 0.98, 0.90, and 0.89. These results indicate that ANFIS-PSO outperforms ANFIS-GA.

3. The last prediction model used in this work was IT2FNN with the PSO learning algorithm. The modeling results showed that this model performs best, with correlation coefficients between predicted and experimental values of Ra of 0.980, 0.998, and 0.994 for AA6061, AA2024, and AA7075, respectively. Better results were observed as compared to the two ANFIS-based models. The MFs of this model provide a more convenient structure, and better handling of uncertainties was observed.
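The correlation coefficients quoted above compare predicted and experimental outputs. As a minimal sketch, and assuming that R denotes the Pearson correlation coefficient (the text only calls it the "correlation coefficient"), R and the RMSE can be computed as follows. The Ra values below are placeholders chosen for illustration, not measurements from this study.

```python
import math


def pearson_r(measured, predicted):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(measured)
    if n != len(predicted) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_m = sum(measured) / n
    mean_p = sum(predicted) / n
    cov = sum((m - mean_m) * (p - mean_p) for m, p in zip(measured, predicted))
    var_m = sum((m - mean_m) ** 2 for m in measured)
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return cov / math.sqrt(var_m * var_p)


def rmse(measured, predicted):
    """Root mean square error between measured and predicted values."""
    if len(measured) != len(predicted) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(m - p) ** 2 for m, p in zip(measured, predicted)]
    return math.sqrt(sum(squared) / len(squared))


# Placeholder Ra values in micrometres (NOT data from the study).
ra_measured = [0.20, 0.35, 0.50, 0.65, 0.80]
ra_predicted = [0.22, 0.33, 0.52, 0.63, 0.81]

r = pearson_r(ra_measured, ra_predicted)
err = rmse(ra_measured, ra_predicted)
print(f"R = {r:.3f}, RMSE = {err:.4f}")
```

Reporting R together with an error measure such as RMSE is useful because a high correlation alone does not rule out a systematic offset between predicted and measured values.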

4.1 Outlook

This work can be integrated into practical processes for online precision monitoring of the machining process, with the aim of reducing cost and improving the accuracy and quality of the work parts and machine tools. The presented approach may reduce the number of experimental tests required while maintaining precision machining performance, because fewer off-line measurements of the Ra values of the machined parts are needed.

Abbreviations

- Ra: Surface roughness
- ANFIS: Adaptive neuro-fuzzy inference system
- RMSE: Root mean square error
- MSE: Mean square error
- RSM: Response surface modeling
- BP: Backpropagation
- IT2FNN: Interval type-2 fuzzy neural network
- GA: Genetic algorithm
- PSO: Particle swarm optimization
- MF: Membership function
- ANN: Artificial neural network
- ADALINE (ADALIN): Adaptive linear neurons
- R: Correlation coefficient
- Vc: Cutting speed
- fz: Feed per tooth
- ap: Depth of cut

Cite this article

Asadi, R., Yeganefar, A. & Niknam, S.A. Optimization and prediction of surface quality and cutting forces in the milling of aluminum alloys using ANFIS and interval type 2 neuro fuzzy network coupled with population-based meta-heuristic learning methods. Int J Adv Manuf Technol 105, 2271–2287 (2019). https://doi.org/10.1007/s00170-019-04309-6
