Abstract

The systems engineering of some systems often involves challenging modelling activity (MA). MA presents challenges, which include understanding the context in which it takes place, understanding and managing its impacts on the life cycles of the models it produces. In this paper, we propose a methodology and its underpinning framework for addressing these challenges and for coping with the operation of MA. The first step in our methodology is to characterize MA as a federation of systems. It then consists in iteratively building a system architecture by modelling the models produced by MA and their expected life cycles, modelling the various tasks that constitute MA, and modelling the effects of MA on these life cycles. It then makes it possible to specify expectations over these life cycles and to analyse models of MA in relation to expectations, to check how far expectations are achievable and to synthesize the acceptable behaviours of the system. Finally, a use of the results of this analysis may provide insightful data on how the system is end-to-end operated and how it might behave. On the basis of this information, informed decisions may be made to act on the logistics of MA. The hypotheses, theoretical foundations, the models, the algorithms and perspectives relating to the proposed methodology and its underpinning framework are all presented and discussed.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The engineering of systems will often involve some modelling activity MA. Models generally provide a partial, sometimes incomplete view of the actual modelled thing, and this kind of simplified view is essential when it comes to understand tricky systems. Models also give us a way of preserving and reusing knowledge about the things that they relate to. However, because of the sheer diversity of engineered or studied systems, models are often specific to a particular domain (mechanical, electrical, chemical, hardware, software and systems engineering, purchasing, etc.), and different types of models provide different perspectives on the modelled systems. Combining different models in pursuit of a single objective (such as verification) is not a new challenge, but it remains highly topical.

The dynamic of MA influences and determines the evolution of models. Models arising from modelling activity in industry are often spread over different locations. At the same time, (large engineering) companies will have a number of separate projects and programs running concurrently with different instances of modelling activity that sometimes interact. Models can have useful lifespans ranging from a few days to several months. Thus, the modelling activity itself can be seen as a challenging entity to operate.

We are, therefore, dealing with two distinct levels of complexity, one relating to the engineered systems and the other to the entities and practices that contribute to the modelling of those systems.

As a result, understanding, mastering and engineering systems can be made difficult by the environment of their life-cycle or their tricky nature.

On one hand, the people and other components within these environments are heterogeneous and mature, offering potential opportunities and benefits. On the other hand, these very characteristics can have adverse side effects.

In this context, Systems Engineering aims to create harmony and added value between well-established domains/components (for the resolution of a sub-goal) while targeting the overall system goal.

There is no obvious single approach that can be applied to all types of systems.

If an overview and trend of the dynamics of the MA become difficult to grasp, the following questions may arise.

-

(1)

Existing models: what models are present in a particular location and what do they represent?

-

(2)

Direction of travel: what is the current state of models and how and where are they likely to end up?

-

(3)

Moving towards desired direction and states: what is required for models to reach desired states?

2 Motivation, hypotheses, problem formulation and contributions

Motivation: In seeking to address the three questions above, our immediate concern was the study of MA development and operation. Therefore, the system under study in this paper is MA. By mentioning the system, we refer, unless otherwise specified, to the studied system. MA involves human operators who are called upon to perform tasks that are evolutive and even creative. MA cannot be specified once and for all, but rather, expected behaviour and results need to be continuously reworked and re-specified. This raises the question of whether it is possible to master, in a disciplined way, the dynamics of MA, to contribute to their logistics in an informed manner.

In Kamdem Simo et al. (2015), we reported an attempt to organise some types of modelling activity for system architecture. Modelling activitiy was tailored using a modelling management plan, fed by a Modelling Planning Process (MPP) (Ernadote 2013), itself automated to ease modelling operations. The MPP aligns the MA to the requirements of the projects, ensuring that modelling objectives are defined and prioritized, that they correspond to the various modelling artefacts (project concepts, standards and deliverables), and that the progress of modelling activity can be assessed. The authors concluded that this modelling activity needed to be federated, since the approach proposed did not take account of the autonomous and co-evolving nature of MA. Models have targets and can play a role in different modelling projects in engineering environments.

Consequently, in a subsequent work (Kamdem Simo et al. 2016), we argued that MA can be considered locally as a system and globally as a federation of systems that need to be engineered. Following on from that, in the present paper, we introduce a methodology and its underpinning framework for coping with the operation of MA in systems engineering.

Hypotheses and modelling choices: We consider the environment of the system to be (Ackoff 1971):

“A set of elements and their relevant properties, which elements are not part of the system but a change in any of which can produce a change in the state of the system. Thus, a system’s environment consists of all variables which can affect its state. External elements which affect irrelevant properties of a system are not part of its environment.”

It is also argued in Ackoff (1971) that a system and its environment are relative to an observer, consequently they can be conceptualized in different ways.

We are, therefore, concerned with the system proper and with its environment. The system proper and its environment form the closed system. In this paper, we often use the term system to refer to either the system proper or the closed system, what is meant will be clear from the context. We assume that the environment is autonomous and to all intents and purposes not controllable, but that it may always be represented by a model. This assumption reflects the fact that while models can be expected to reach certain predetermined states, those states may be reached via different modelling tasks and different sequences of modelling tasks.

The system proper is structurally represented by structural models (AM). AM are especially useful in abstracting away the main content of models (M) produced by MA. State models (SM) are used to model the life cycles of M and the expected transitions between the states over these life cycles. Process models (PM) are used to model the behaviour of the environment and sometimes the behaviour of components of the system. In particular, PM capture the modelling tasks which cause changes in the state of M. We model the effects of PM on the system proper by the mapping (MG) of events (from the exploration of PM) onto transitions of SM associated with AM. PM might be also subject to some constraints (C) (e.g., time, cost, etc.) related to modelling tasks. The Expectations (R) that specify preferences on the expected states of the system might be defined something like this: given a set of pairs (component of AM, state in SM)—called context—some pairs complementing this context will be more preferable than others. This paper will formalize these different models (AM, PM, SM, MG and R) and discuss their suitability in abstracting, representing, and understanding MA.

General problem to solve: Given the MA, understood and modelled with the data corresponding to AM, SM, PM, MG and R, what are the possible future points (foreseeable evolutions) starting from an initial point (InitialPoint) and evolving until a stop criterion (StopCriterion) becomes true? Which points are with respect to R and C, more acceptable / less acceptable? Let us call these questions Q1.

A point is a possible configuration of the state of the closed system. InitialPoint is a given point from which the closed system might need to be initialized. StopCriterion is a means for selecting acceptable points among the reachable points. Therefore, the general problem is given by Pb (AM, SM, PM, MG, R, C, InitialPoint, StopCriterion).

We propose addressing the questions Q1 via a six-step methodology that we call MODEF. MODEF is intended to (1) provide an understanding of the current global state of MA, (2) check whether MA is moving in a satisfactory direction (state path), and (3) help stakeholders build processes to ensure that the models (M) that it produces continue to evolve in appropriate ways.

Here, we are not explicitly dealing with the internal practices of MA. The design techniques, methods and tools used in the modelling activity are not explicit, i.e. they are not modelled. The internal practices are black boxes for the proposed methodology. These internal practices of MA are of course relevant and essential for producing M, but we shall argue in this paper why we consider the black-box perspective.

Contributions and organisation:

-

From a general systems engineering perspective, the main contribution of this paper is the introduction of MODEF–the procedural structure of which is depicted on Fig. 1, summarized by Act/Identify-Model-Specify-Verify-Inform-Identify—and with its supporting framework with principles, theoretical and practical arguments for understanding, modelling and analysing MA to inform MA’s logistics.

On Fig 1, a box depicts a step; the starting and entry step is Step 1 (Identify a System X); an arrow from a step (s) to the next one (t) denotes: the outcome (data) of s is necessary for carrying out t; finally, at a given step, it is possible to go back—for instance when new data is available or when a problem is found at the current step—to any previous step, whence the counter clockwise arrows.

The novelties introduced in this work in relation to other work are presented in Sect. 3.

From a narrower perspective, focusing on the different steps of MODEF, the main contributions are the following:

-

To our knowledge, it is the first time a modelling of the architecture of MA and expectations relating to MA is based on the models that we have detailed above, that is to say AM, SM, PM, and MG. MA is considered in terms of a System of Modelling (SoM) and a System of Systems of Modelling (SoSoM) (Kamdem Simo et al. 2016). We explain the meaning of these terms in Sect. 4 below.

-

We introduce an Assume (A)/Preference (P) formalism to support the specification of expectations (or, more generally, expected behaviour) of MA. The stimulus here came from Assume/Guarantee (A/G) contracts (Benveniste et al. 2012). An expectation consists in expressing preferences with respect to the life cycle of the studied elements, given some context or assumption. In particular, these preferences are defined with respect to the states of the studied elements given an assumption (A). In the style of A/G contracts, the G of a contract is replaced by a preference (P) in an expectation. P has a pre-order (a binary relation that is reflexive and transitive) structure. A pre-order structure is generally sufficient to describe preferences among the elements of a given set. Another reason for why we chose to adapt the A/G paradigm is that the verification of consistency and compatibility may be formally defined (like with A/G contracts).

-

We introduce a modular analysis procedure that makes it possible to compute the answer to questions Q1. This procedure applies the uniform-cost search (UCS) algorithm (Russell and Norvig 1995) on the state space described by the co-exploration of SM and PM, both being constrained by MG. The novelty of this procedure is that it makes use of R (and potentially C) in UCS to guide the co-exploration throughout the discovered state space and in some cases to prune regions within this space.

-

Finally, to make the results of analysis understandable by a human, we provide some operating algorithms for building synthetic data. We want, for example, to be able to determine (1) whether, starting from a given point, no further improvements will be possible (in terms of expectations and process constraints) given the input models; (2) the sequence of points or configurations necessary to reach a target point; (3) critical paths, etc. This kind of synthetic data may help stakeholders to operate the system, to prevent issues and to take corrective actions.

The principles, models and algorithms underlying the six steps of MODEF are illustrated on a running example—introduced in Sect. 4.2.1—used as a synthetic case study. Where necessary, we shall give additional examples.

The remainder of this paper is organised as follows. In Sect. 3, we discuss the relevant related work and position MODEF in relation to it. In Sect. 4, we briefly recall what we mean by SoM and SoSoM then present some application examples that illustrate them. In Sect. 5, we present the principles and semantics of three kinds of models (AM, SM and PM) and their mappings (MG). We also present the formalism of Expectations that underpins the specification of R. In Sect. 6, we present the analysis procedure for exploring and analysing models against the expectations (and potentially constraints of modelling tasks). Then we present an application of the analysis procedure. In Sect. 7, we give a brief answer, with related assumptions, to the three questions put forward in the introduction, then we present some limitations and perspectives of this work.

3 Related work

Here, we discuss various approaches that have sought to manage modelling activity or technical-and-programmatic activity and we try to position MODEF in relation to them.

3.1 Systems engineering and modelling activity

Systems Engineering (SE) has been considered for nearly eighty years (see e.g., (INCOSE 2015; AAAS 2016) for the evolution and definitions of SE) to manage the complexity of the engineered systems and the processes to which they give rise. SE has continued to grow out of two significant disciplines (Leonard 1999), namely “the technical knowledge domain in which the systems engineer operates, and systems engineering management.” The former is generally related to technical activity (requirement engineering, design, etc.), whereas the latter is concerned with the planning and management of the former. It should be noted that SE management is different yet complementary to general project management (and to a large extent business processes), since it focuses on technical and engineering aspects (SEBoK 2016; Sage and Rouse 2009; Blanchard 2004).

It can also be said that SE is concerned with two distinct systems: on one hand there is the system that is being engineered, developed, or studied, and on the other hand there is the system that enables the engineering of the studied system. These two systems are also called the system to be made (also referred to as the System-Of-Interest (SOI)) and the system for making respectively (Fiorèse and Meinadier 2012). Consequently, SE comprises activity of a technical nature and activity of a programmatic nature. Technical activity (TA) seeks to answer the question, “Are we engineering the right SOI and in the right way?” Programmatic activity (PA) seeks to answer the question, “Are we operating the right system for making, and are we doing so in the right way?” It is this programmatic activity that corresponds to SE management. Finally, it should be remarked that PA and TA influence each other mutually.

Depending on the difficulties originating from the SOI itself and the engineering environment, different efforts are made on the two kinds of activity. However, within a context characterized by the two levels of complexity described in Sect. 1, both domains (technical and programmatic) are inexorable and critical: obstacles brought about by the engineering environment that then contributes to additional complexity that must be managed (Bar-Yam 2003) in conjunction with the basic complexity of the SOI.

One approach for dealing efficiently with SE has been the use of (formal) models and modelling as the main support of SE. Such an approach refers to Model-Based SE (MBSE) (INCOSE 2015) and to a large extent model-driven engineeering (MDE) (Whittle et al. 2014; Kent 2002). MBSE is not a new discipline different from SE, since (mathematical) models were used many years ago within various fields, including SE. Today’s MBSE seems to focus on diagrams with the risk of multiplying less formal and less explanatory models. The efficiency with MBSE comes from the ability of models and modelling to improve communications among the stakeholders; to give rise to better representations and a better understanding of systems, and to facilitate the preservation and reusing of knowledge relating to SE processes. Models and modelling are central in an MBSE approach.

SE is well documented in terms of standards and handbooks (INCOSE 2015; Sage and Rouse 2009; Fiorèse and Meinadier 2012). Some standards that provide an overarching view on SE processes are succinctly described in the following.

ISO/IEC/IEEE 15288 provides a description of processes involved in a SE process. The SE Management Plan encapsulates the artefacts (SE Master Schedule, Work Breakdown Structure) necessary for planning and controlling these processes. Other standards (ANSI/EIA-632, ISO/IEC 15504:2004, IEEE Std 1220-2005, etc.) provide guidance for describing, improving, and assessing these processes. Systems Engineering actors can (and in fact do) therefore leverage those materials, mainly built from past experiences, for new SE developments. It follows from the foregoing that the SE body of knowledge does contribute to the management of SE processes regardless of how they are actually implemented.

Although the materials and standards mentioned above are clearly useful, they often remain document-based, even if some cover formal content. They are generally descriptive in addressing the question What To Make but they fail to be prescriptive and to deal with the question How to. As a consequence, they are unsatisfactory from the perspective of a model-based management of MA. Indeed, the following problems may arise: reuse, analysis, consistency, traceability. Moreover, the diversity and autonomy of different stakeholders of the MA might prevent an effective use of such documents. Besides, in this latter situation, even with formal artefacts (models or documents), one may encounter the same problems as those encountered with informal artefacts. Nonetheless, formal artefacts offer several advantages (reuse, share, analysis etc.) provided that they are adequately operated. Thereby, if we consider PA interrelated to TA, as a system, SE (or generally design principles) is (are) applicable for its understanding. From this perspective, and provided that research project as understood in Reich (2017), is replaced by (or compared with) that system, the expected benefits as the corollary of the Principle of Reflexive Practice (Reich 2017) and challenges would apply.

SE has been successfully applied many times over the years, particularly in defence and aerospace, and yet today it is still based on heuristics and informal practices as argued in INCOSE (2014). On the other side, many large engineering projects have failed. For a list of past projects see for example (Bar-Yam 2003), whose conclusion is that the various failures may be attributed to the complexity of the projects themselves; see also the story in Friend (2017) of some recent failed projects. Meanwhile, it is expected that SE will continue to be applied more widely across other industries (INCOSE 2014). For SE to spread successfully, formal and unifying models to support SE processes will need to be created using SE’s current body of knowledge.

3.2 Product-and-project approaches in systems engineering

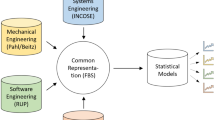

In this section, we start by presenting some relevant model-based product-and-project oriented approaches in systems engineering, and then we attempt to situate MODEF in relation to these various approaches.

The OPM approach In Sharon et al. (2011), the focus is first placed the tandem (project-product dimension) comprising the project (the programmatic aspect) and the product (the technical aspect). The authors compare the methods of project management that are common in SE management together with the Object-Process methodology OPM (Dori 2002) used for project planning and product modelling and design. Among others, these methods include: Earned Value Method (EVM) for project control, Critical Path Method (CPM), Program Evaluation and Reviewing Technique (PERT) and Gantt Chart for project planning and scheduling, System Dynamics (SD) for project planning and dynamic modelling, and Design Structure Matrix (DSM) for project planning and product design.

Using a simplified unmanned aerial vehicle (UAV) as a system use case, their empirical comparison shows that some methods are relevant with respect to particular product and project factors. Factors include Budget/Schedule measurement/tracking, Stakeholder/Agent tracking, Performance quality, and Product measurement/tracking. Only SD, DSM and OPM methods were found to be able to handle the project-product dimension. SD is a way of correlating factors (schedule, budget) relating to project planning in way that may be plotted. DSM represents the interactions among elements (components, tasks, teams) of both the project and product. The authors finally conclude that OPM is the only suitable for the function-structure-behaviour modelling (i.e. Project-Product Model-Based (Sharon et al. 2008)) of both the project and the product inside an integrated conceptual model.

Apart from OPM, all the methods compared are best derived from models that represent the product and project, since they address a particular, specific concern. The OPM method is required for both TA and PA. The authors claim that OPM is especially well adapted to combining PA and TA within a single model. It follows that the structure, function and behaviour OPM models, respectively, describe the structure the function and behaviour of both the product and the project. The structure models together with their states are the possible inputs and outputs of the processes in the project. Another approach that builds on OPM models to support planning and communicating of process and product development is the PROVE-Dev framework (Shaked and Reich 2018).

Coupling of TA and PA In Vareilles et al. (2015), a model and rules are discussed for managing the multi-level interaction between system design processes (typically TA) and project planning processes (typically PA). The rules were integrated into the ATLAS IT platform. In the light of the failures of the A380 Program and Olkiluoto Nuclear Power Plant projects, which were executed within a concurrent engineering environment and based on empirical surveys, the authors argue that there is a vital need to formalize the interactions between the design of a system and its design project. They also highlight the absence of any work formally addressing this need from the perspective of planning and controlling design activity. These interactions have been made explicit in only very few works.

Then, they establish a bijective link at a structural level between a System S and a project P, system requirements SR and the requirement task definition PR, and system alternative SA and alternative development task PA. At the behavioural level, the two processes (TA and PA) are interrelated via their so-called feasibility and verification attributes of elements at the structural level. A meta-model supports the realization of links.

The feasibility attributes have 3 states, namely undetermined (UD), feasible (OK), and unfeasible (KO). The state of an attribute is computed by a design manager and a planning manager based on requirements, constraints, risks and schedule, and resources. Based on those states, precedence rules are established between structural elements and their states. As an example: it is not possible to start working on a solution SA if SR are KO.

The verification attributes likewise have 3 states: undetermined (UD), verified (OK), unverified (KO). Like for feasibility, precedence rules are established. An example: it is not possible to verify PA before PR.

Based on the two kinds of attributes and their states, nine synchronisation rules (for S and P) are finally defined to guarantee the consistent evolution of system design and project design. This yields a 27-state state diagram that supports the synchronisation of S and P. In this diagram, a state corresponds to a seven-tuple (SR.Fa, SA.Fa, SA.Ve, PR.Fa, PR.Ve, PA.Fa, PA.Ve) where Ve and Fa are related to verification and feasibility attributes, respectively. The initial state is given by (UD, UD, UD, UD, UD, UD, UD). The transitions between states are logically determined from rules.

Finally, as a use case, a landing gear system comprising a wheel and brake subsystem and associated projects is considered. This system has 1 SR related to the weight of the system and 1 PR related to the duration of the project. Other works in the same spirit are (Abeille 2011; Coudert 2014).

Other works in product (and process) design development (see e.g., Braha and Maimon 2013; Cho and Eppinger 2001; Melo 2002; Browning and Eppinger 2002; O’Donovan 2004; Wynn 2007; Bonjour 2008; Abeille 2011; Karniel and Reich 2011; Gausemeier et al. 2013) have addressed the association, integration or coupling (explicitly or not) of the two systems, i.e. the product and the project (or the development system) using a variety of means and with a variety of aims. Regarding software development projects, see also (Steward and Tate 2000). Here, the functional requirements and design parameters of the product are mapped onto tasks in a Gantt chart project plan following an Axiomatic Design paradigm (Steward and Tate 2000). The authors claim that such an association between tasks and design enables the rapid delivery of product.

A survey on process models and modelling approaches for design and development process (DDP) appears in Wynn and Clarkson (2017). The authors first focus on three features (novelty, complexity and iteration) of DDPs. DDPs are different from business (typically production and manufacturing) processes insofar as they call for creativity; they are iterative by nature. They occur in large-scale concurrent evolving engineering environments. These features also apply to MA. The authors then discuss the different approaches (see (Wynn and Clarkson 2017, Fig. 1)), from model scope (micro, meso and macro levels) and type (procedural, analytic, abstract and management science/operations research models) axes (again see Wynn and Clarkson 2017 for more details). Their conclusion is that different models provide different insights depending on how they are used, and models should be used according to specific objectives (i.e. requirements and constraints). Orthogonally to these concerns, tooling issues such as modelling notations and tools should be addressed (Abeille 2011) (Eckert et al. 2017).

A summary of a handful of approaches that associate design and planning appears in Abeille (2011) and the author concludes that these approaches are little used in the industrial world, because their tooling is quite limited. Furthermore, they do not take into account the dynamic of design process. Some approaches to the integration of product and process models in engineering design with respect to their purposes (Visualisation, Planning, Execution, Synthesis, Analysis, etc.), their modelling formalisms (Design Structure Matrices, IDEF (Integrated Definition), etc.) and their level of integration (isolated, coupled, integrated) appear in Eckert et al. (2017). The authors remark that few works address the integration of product and process domains. They also remark that approaches appearing theoretically closer to the industrial context would require certain challenges regarding the scope, focus, development and visualisation of models to be overcome.

3.3 Position of MODEF

In this section we position MODEF in relation to the relate work presented above.

3.3.1 Abstracting modelling activity

Not all of the above approaches explicitly deal with the concurrent nature of the engineering environments (comprising autonomous and even independent stakeholders). Although (Vareilles et al. 2015) clearly recognizes the influence of this concurrent nature, the multi-level approach that is presented does not explicitly consider it. Note also that integrated approaches may not be effective in a concurrent context with regard to the capability and autonomy of different stakeholders. This is even more true with engineering activities of Systems of Systems–SoS (Jamshidi 2008). Generally speaking, any traditional approach for SE and management should be reviewed with regard to its ability to meet the requirements of SoS engineering. (Sage and Cuppan 2001) discusses evolutionary principles and implications of the federalism concept for SE and management of SoS. This federalism is important, because engineering development alliances have clearly been taking the form of virtual organizations (Sage and Cuppan 2001; Handy 1995). This encourages autonomy and loosely coupled systems but requires well-defined interfaces between autonomous systems. The authors argue that the components of those system may be: “locally managed and optimized independently.” They also argue this kinds of federations of engineering development projects should be considered as Complex Adaptive Systems, and they discuss the need for federated organisations to be thoroughly understood. They point out the importance of modelling, simulations and analyses.

Unlike the approaches developed in Vareilles et al. (2015) and Sharon et al. (2011), we do not focus on a particular methodology within the TA. We make no stipulations regarding the content (paradigms, methods, languages, tools, etc.) necessary for TA to function, because we consider that such stipulations do not take into account the diversity , heterogeneity of domain-specific approaches in MBSE. For instance, if we were to stipulate that the System-of-Interest should be also modelled with a function structure behaviour approach (Sharon et al. 2008, 2011), this might be restrictive, since TA is generally characterized by several model-based domain-specific practices. Instead, we abstract away details and concentrate on the relevant content of models (M) produced by MA.

The approach in Vareilles et al. (2015) (and even (Sharon et al. 2008, 2011)) imposes a structure for TA and PA. In doing so, it also creates what would appear to be an interesting dynamic for TA and PA. However, this diminishes the flexibility of the approach. We believe a bijective link between the system S and the project P is too strong an assumption and might not always be relevant given the structure of S and its granularity. On the PA side, we only consider the sequencing of MA and its impacts on the state of M. The link between PA and TA is therefore modelled via the mappings.

On the other hand, the verification and validation attributes (Vareilles et al. 2015) are interesting, since they emanate from quantitative data that provide insights into the states of the elements that they relate to. In our proposed methodology, we believe such insights could be considered either as constraints for processes or as a useful addition for corroborating the states of M in practice. In the approach (Vareilles et al. 2015), these attributes are used for both TA and PA.

3.3.2 Modelling modelling activity

One similarity that our methodology has with (Sharon et al. 2011) is that we use structure and behaviour (process) models. But in our methodology, as argued before, the structure models are not used for the same purpose as OPM structure models are used. Likewise, in the OPM-based approach, the structure models and their states are the possible mandatory inputs and outputs of process models, while in our proposed methodology, the processes bring about changes of states of M.

The methodology developed in this paper is rather general in the sense that it is not tailored for particular techniques (Critical Path Method—CPM, Program Evaluation and Reviewing Technique—PERT, etc.) and factors (Budget measurement, Schedule tracking etc.) that are studied and compared in Sharon et al. (2011). Those techniques and factors should either be derived from the models and their analysis or considered as constraints for models. On the other hand, specific approaches focusing on quantitative insights could help in determining the constraints of MA, insofar as particular insights need to be considered in an analysis procedure. Indicators are generally quantitative insights. We believe they should be used in conjunction with the models that describe the processes. Processes give procedural insights that may be useful in anticipating bad states or the deviation of thresholds. More interestingly, it may be possible to specify the required actions for applying remedies and adjustments afterwards.

3.3.3 Analysis with models

Looking at the above approaches, with two exceptions, to a lesser extent, we were unable to find any explicit formal analysis of the models concerned with respect to certain formalized expected properties. The first exception is (Vareilles et al. 2015), where the analysis derives from the synchronisation rules, and the second exception is in the OPM approach (Sharon et al. 2008) (Sharon et al. 2011), where the simulations of OPM models are intended to detect various problems (product and project parameters feasibility, deviations and impacts) and take appropriate actions.

The UCS algorithm that we use (see Sect. 6) to guide the exploration of models could possibly be replaced by another OR (Operations Research) or AI (Artificial Intelligence) algorithm, and the exploration algorithm (see Sect. 6) could even be customized.

3.3.4 Implementing methods

The methodology and its underpinning framework presented in this paper are not intended for use with a specific modelling tool or modelling language. Instead, we detail concepts and algorithms needed to facilitate implementation. The modelling tool provides a way of building models that illustrate the methodology and that may be of great practical use.

Referring to the classification of process models proposed by Wynn and Clarkson (2017) in the wider context of design and development, some models involved in MODEF belong to the following model scope dimension and model type dimension respectively: in between the meso and macro levels and a mix of abstract, procedural, analytic, (and possibly MS/OR models), respectively. Thus, after the analysis carried out within MODEF, it might feed approaches with other model scope dimension and model type dimension. These latter approaches might in turn influence the specification of input models of MODEF. For instance, the detailed information relative to MA may be useful to understand how it quantitatively affects the state—in SM—of models (M). The following pattern summarizes the position of MODEF in that classification: Macro-level model-based approaches \(\leftrightarrow\) MODEF \(\leftrightarrow\) Micro-level model-based approaches”, where \(a \rightarrow b\) or \(b \leftarrow a\) reads: the output of a might contribute to the input of b.

4 Abstractions of modelling activity

4.1 System of modelling (SoM) and system and systems of modelling (SoSoM)

The SoM whose some components and their relations are depicted on Fig. 2, is to a large extent a set of stakeholders and their practices. Broadly speaking it is a system of people, methods, tools, processes, standards and models (M) in interaction, and it may be seen as a system that reflects the engineering/modelling of a System-Of-Interest (SOI). On Fig. 2, we have three main blocks, a blue arrow and two orange arrows.

-

At the top of Fig. 2, we have people and a sequencing of tasks (Step A, then Step B, etc.) they carry out. This green block (B1) represents the sequencing of MA.

-

The purple block B2 contains three boxes corresponding to conceptual models that represent the conceptual contents of M.

-

The blue block B3 contains the actual models (M) that represent the SOI that is being modelled.

-

The left-side and right-side orange arrows indicate that the tasks in B1 generate models in B3 and act on conceptual models with different tools respectively.

-

The blue arrow labelled “are linked to” on Fig. 2 indicates the connection between M and the conceptual models. Such a connection, its definition and implementation, applications are partly reported in Ernadote (2013, 2015, 2016); Kamdem Simo et al. (2015). Those conceptual models are useful as a means of involving different stakeholders in the modelling process (Ernadote 2015). For instance, stakeholders who are unfamiliar with specific metamodel concepts are involved via domain-specific concepts related to their viewpoints. The two kinds of concepts are therefore related via the combination of metamodels and ontologies.

In investigating the SoM, we focus on the sequencing of modelling tasks, the conceptual models that encapsulates the main content of M. To understand the impacts of modelling tasks on M, we link the conceptual models to state models that characterise the expected (or lifecyle) states of M, and we map events relating to the execution of tasks onto transitions of state models, so as to indicate the effects that these tasks have on states. We therefore examine the SoM from three different architectural perspectives.

Actually, several modelling projects are run in parallel. Indeed, many engineering programs involve several organisations located on different sites. Regardless of the geographical distribution, the engineering projects often address concerns that overlap. At the same time, the SoMs are generally autonomous.The question is how best to understand these SoMs and how they change over time.

The answer to this question is to be found in the SoSoM, which is constituted by different SoMs that encompass different projects and programs. The SoSoM is a way of understanding the evolving relationships between SoMs. It addresses the interactions or commonalities between SoMs. The added-value of the SoSoM emerges from these interactions. With the autonomy and the proper operational capabilities of individual SoMs, it is difficult to impose an integrated approach. Instead, as we argue in Kamdem Simo et al. (2016), following (Sage and Cuppan 2001; Handy 1995; Charles 1992), the SoSoM may be characterized as a federation. We recall that a Federation has in the past been considered as a type of System of Systems (Krygiel 1999; Sage and Cuppan 2001).

The views that we look at when analyzing the SoSoM are the same as when analyzing the SoM: that is to say the structure, state and MA processes linking the different SoMs that are its components. The actual content of these views will depend on the problem at hand at the SoSoM level. But the relations between the different models remain the same: the states of some elements of the structure view change under the effects of processes.

This means that the SoM and SoSoM are abstracted from the same perspectives but have different concerns.

4.2 Examples of application

We start by presenting elements that may in practice constitute a SoM, and we then discuss the problems that may need to be addressed at the level of the SoSoM comprising more than one SoM.

4.2.1 Running example: an SoM—modelling the functional coverage of a SOI

Different aspects of the SOI are generally modelled by different stakeholders. And it might be required that at some stage in the development process models satisfy some criteria for purposes such as verification, testing, or integration. Suppose we are only interested in the functional architecture designed to ensure that the modelled SOI covers the functional needs. What characteristics does a modelling project need to possess for it to be defined as a SoM, say SoM0?

The following elements need to be identified:

-

the sequencing of modelling tasks required to achieve some objectives

-

the conceptual models that abstract away the main contents of models (M)

-

the expected states of M and transitions between them corresponding to elements of the conceptual models

-

the effects (mappings) of modelling tasks on these states.

Fig. 3 is an illustration of these elements. This figure contains 5 boxes (PM, AM, SM, MG and R), with dashed borders, interpreted as follows. Note here that the formal specifications of the data related to these boxes are given in the following sections.

The box (PM)—corresponding to B1 according to Fig. 2—represents a high level view of the tasks carried out in SoM0. This process model contains six tasks beginning with the the task named “Model high level functions” and ending with the task named “Validate RefinedFunctions”.

The box (AM)—corresponding to B2 according to Fig. 2—represents the components or entities (named “System Component” and “System Function”) and their relation taken into account in SoM0.

The box (SM)—not depicted in Fig. 2—that has 3 states. The initial state is named “\(\text{Maturity}<30\)”. Typically, a state here describes the maturity level of something that should be known after a mapping.

The box (MG) is a mapping. In Fig 3, there is actually 5 mappings. A mapping is an element of a function TRIGGER defined in Sect. 5.2. A mapping is a triplet \((a,l,e) \in\) TRIGGER. a is an element of AM, l is a transition in SM and e is a set of sequences flow. For convenience, in Fig 3, the symbols \(t_i\), \(i=1..5\) are used to carry the 5 mappings and replace l and e when associated in a mapping. a is “System Function” for all the five mappings. Therefore, the box MG roughly means: the sequence flow labelled “t3” in PM, triggers (dashed green arrow) the transition labelled “t3” in SM, which is associated (dashed blue line) to the component or entity “System Function” in AM. In practice, that implies the state of a model’s component abstracted away by the entity “System Function” remains “\(30<\text{Maturity}<60\)” if it was already in that state and if the sequence flow labelled “t3” in PM is taken in the process. As a result, the task “Model detailed functions” should be carried out again. Additionally, the mapping “t3” does not allow to evolve from the state “\(30<\text{Maturity}<60\)” to the state “\(60<\text{Maturity}<100\)”, while the mapping carried by “t4” does.

The box (R) corresponding to an A/P expectation will be discussed later (Sect. 5.3). We may say here that a preference (Satisfactory, Operational and Critical) is defined on the possible states associated with the entity “System Function”.

Other SoMs, which could for example be a modelling project regarding a different viewpoint of the SOI, might be defined in the same way.

4.2.2 Examples of problems addressed at a SoSoM level

A SoSoM exists to encapsulate the relationships between at least two SoMs. The local view of the SoSoM is characterized by the different SoMs, whereas the global view will depend on the objectives at the SoSoM level. Suppose that these objectives are as follows:

Objective 1: We want to harmonize the models (M) produced by several SoMs. By harmonization we mean to correlate and explicitly identify the relationships among different entities (of the conceptual models) within several SoMs.

Objective 2: Suppose now, we are interested in the co-evolution of the modelling within the SoMs in order to achieve some higher goal. For this reason, although the SoMs are autonomous, there might be a need for agreements between them at some points in their respective life cycles. These agreements may ensure that different SoMs are together able to reach some expected states at some desired time in the future.

For each of these two objectives, we now present a SoSoM and the three main kinds of models that represent it.

Modelling harmonization—SoSoM1 Process models (PM) will abstract the tasks carried out at the SoSoM level. Conceptual models (AM) will correspond to the conceptual models that are relevant at the SoSoM level. State models (SM) will abstract the possible states at the SoSoM level (e.g., Unknown, Ok, Ko) of M within SoM.

Suppose now that the SoSoM1 comprises \(n>1\) SoM working on different modelling projects. The fact that conceptual models are explicitly given by the various SoMs represents a first step in reducing the number of redundant models and enabling model reuse where possible. Using processes (PM), and the expected states (SM) at the SoSoM level, it is possible to compute the attainable points (see modelling choices in Sect. 2) at the SoSoM level. This may have benefits including managing model replication and reconciliation procedures.

It is worth emphasising here that the process model at the SoSoM level (and even at the SoM level) does not indicate by what technical means tasks are executed, but it is instead concerned with how tasks are sequenced and, using the mappings, the effects that different tasks have on the states of elements.

SoSoM1 is not concerned with the state and process models of its constituent SoMs, but only with their conceptual models. However, depending on the objectives of the SoSoM, it might be interesting to consider the state and process models. The following SoSoM SoSoM2 provides an illustration of this.

Modelling evolution—SoSoM2 In this case, where Objective 2 is relevant to the SoSoM, the structure, state and process models of the SoSoM will combine the relevant subsets of the SoM models.

So far in this section, we have characterized the SoM and the SoSoM and we have given some application examples. We now need to discuss how we can make use of the models in these systems in practice.

5 Modelling modelling activity and expectations

This section elaborates the second step (Represent structure, state and process models) (Sect. 5.1), third step (Specify structure-state-process mappings) (Sect. 5.2) and the fourth step (Specify expectations) of MODEF (see Fig. 1) (Sect. 5.3). We start by introducing the principles and semantics underlying these kinds of models, and then we present the expectation-specification formalism.

5.1 Structure, process and state models

When seeking to automate the analysis of models using MODEF it is especially important to define the principles underlying the models. This enables us to decouple the implementation of step 5 (Analysis of models) of MODEF from the logic of a particular modelling tool used to design models. These models are mainly descriptive models.

Let the structure (abstract syntax) of a model of the system correspond to a non-empty finite set of disjoint components. A component is either basic (i.e. without constituent components) or composite (i.e. with a non-empty finite set of constituent components). Since all models are composite structures, we may suppose for the purposes of this section that a component c is given by

where \({V}_{}:=\{c_1, \dots c_n\}\) is the set of internal components of c, and \({E}_{}:=\{l_1, \dots l_m\}\) is the set of directed links/connections between elements of \({V}_{}\) and c itself (when connection ports are considered). n and m are positive integers.

Given the structure of a model, our aim is to obtain an interpretation of the structure in a domain of interest. The interpretation is derived from the structure of a component. When modelling the physical aspect of the system, a component (an element of V) might be interpreted as a physical element and the corresponding E a set of physical or logical connections. However, when modelling the states (and the transitions between them) of the system, a component might be interpreted as a state and the corresponding E the set of transitions between its states.

Note that although the concrete syntax (graphical symbols used to render the models) is important for the end user, it has no importance in MODEF, because our aim is to decouple MODEF from the logic of a particular tool. It is useful only for the user designing and/or visualizing the models.

We now discuss the formal definitions of the semantics of the three kinds of models considered.

5.1.1 Structure model

The semantics of structure models will depend on the system at hand. For example, for the SoM, the structure models are interpreted as conceptual models, while for a physical system, they might be interpreted as computing, physical or human components. Generally speaking, the structure of structure models will be sufficient for the purposes of MODEF.

Running example See the box AM in Fig. 3.

5.1.2 Process model

The semantics of process models may be seen as generators in the sense given to the term by Ramadge and Wonham (1987). Roughly speaking, the exploration or execution of a process model should generate a language where the alphabet (\(\varSigma\)) is the finite set of events and the words are the event-traces or the sequences of events. This yields a non-deterministic automaton where accepting states correspond to possible terminations when the process is run. The set of words that bring the automaton from its initial state to an accepting state is referred to as the language recognized (or “marked”) by the automaton.

The reasoning behind this is that modelling tasks may be considered as discrete-event processes. Also, the class (principal features: discrete, asynchronous and possibly non deterministic) of processes considered in Ramadge and Wonham (1987) is particularly well-suited to our needs, because our main focus is the sequencing of tasks rather than data processing.

We are interested in words of finite length, corresponding to executions of processes that terminate.

Adapting (Ramadge and Wonham 1987), we formally define our automaton as follows. A generator is a 5-tuple

where Q is the set of states q, \(\varSigma\) is the alphabet or finite set of output symbols \(\sigma\), \(\delta : \varSigma \times Q \rightarrow Q\) the transition function; a partial function, \(q_0 \in Q\) the initial state and \(Q_m\) a subset of Q called marker states or final states. \({\mathcal {G}}\) is equivalent to a directed graph with node set Q and an edge \(q\rightarrow q'\) labelled \(\sigma\) for each triple \((\sigma , q,q')\) such that \(q'= \delta (\sigma ,q)\). This edge or state transition is called an event. Events are considered to occur spontaneously, asynchronously and instantaneously. Furthermore, an event may be recognized by an outside observer via its label \(\sigma\) by an outside observer. Distinct events at a given node always have distinct labels.

Running example In the box PM in Fig. 3, the sequence flows (the arrows labelled \(t_i\) in that process) and tasks (the green boxes) will typically correspond to events (i.e. \(\varSigma\)) and states (i.e. Q) respectively. See also Sect. 6.

If \(\varSigma ^*\) denote the set of all finite strings s of elements of \(\varSigma\) including the empty or identity string 1. The extended transition function is given by \(\delta : \varSigma ^* \times Q \rightarrow Q\), \(\delta (1,q)=q, q\in Q\) and \(\delta (s\sigma ,q)=\delta (\sigma ,\delta (s,q))\) whenever \(q'=\delta (s,q)\) and \(\delta (\sigma ,q')\) are both defined.

The language generated by \({\mathcal {G}}\) is

It is also the set of all possible finite sequences of events that may occur.

The language marked or recognized by \({\mathcal {G}}\) is

5.1.3 State model

The semantics of state models is characterized as an Hierarchical Finite State Model (HFSM). By building on (1), the definition of an HFSM is as follows.

If a component c is such that V and E are both empty sets, c is called a basic state, otherwise, c is a composite state. If c is composite, every element of V is a constituent component of c and interpreted either as a basic state or a composite state. An HFSM is structurally (syntactically) a composite component. At the semantics level, this composite component is equipped with an initial state and a state-transition-relation defined in the following:

Current state–The current state of an HFSM \(c^0\), is given by the stack \([c^0,c^1, \dots c^k]\), where \(c^{i}\) is a constituent component of \(c^{i-1}\), \(i=1...k\), \(c^k\) is a basic state.

Base state-transition relation–Let \(\delta _p : E \times V \times V\), the base state-transition relation associated to every composite component \(c^p\). \((l_1,c_1,c_2) \in \delta _p\) means that there is a link \(l_1\) from \(c_1\) to \(c_2\). The actual label or event that will fire the link or semantically the transition, is obtained via a binary relation \(EVENT \subseteq \varSigma \times E\) that associates to a link the trigger event.

Base initial state–Let \(c_0\), \(c_0 \in V\) the base initial state, a particular state which indicates from which constituent component of c, c is locally initialised. “locally” here means that the notion of hierarchy is not considered.

HFSM–Let \(c^0\) an HFSM and \(s([c^0,c^1, \dots c^k])\) a given state of \(c^0\)

-

s is the initial state of \(c^0\) if and only if \(c^{i+1}\) is the base initial state of \(c^{i}\) for all \(i=0...k-1\).

-

the state-transition relation of \(c^0\) consists, given a current state \(s([c^0,c^1, \dots c^k])\), in determining a next state \(s'\).

\(s'\) is given by

-

\(s'([c^0,c^{y_1} \dots , c^{y_j}, c^{m_1} \dots ,c^{m_x}])\) if \(c^{y_j}\) is an HFSM and \(s''([c^{y_j}, c^{m_1} \dots ,c^{m_x}])\) is the initial state of \(c^{y_j}\), \(j>0\);

-

\(s'([c^0,c^{y_1} \dots , c^{y_j}])\) if \(c^{y_j}\) is a basic state;

where \(c^{y_0}:=c^0\), \(c^{y_1}:=c^1\), ..., \(c^{y_{j-1}}:=c^{{j-1}}\) such that whenever \((l_{jy_j},c^j,c^{y_j}) \in \delta _{j-1}\), \(j\in 1..k\), we do not have \((l_{iy_i},c^i,c^{y_i}) \in \delta _{i-1}\), \(i\ne 0\) and \(i \in 1..j-1\).

This means that given a current state \(s([c^0,c^1, \dots c^k])\), the firing of a transition corresponds to the application of one and only one base state-transition relation of a component \(c^i\), \(i \in 0..k-1\) provided that the base state-transition relation of \(c^j\), for all \(j=0..i-1\), \(i \ne 0\) are not defined. In other words, upper components in the constituent hierarchy have priority when a transition needs to be triggered.

-

An HFSM is deterministic if and only if given any base state-transition relation \(\delta _p\) it exists \(\delta '_p : \varSigma \times V \rightarrow E \times V\), a partial function such that \((e, s_1, l, s_2) \in \delta '_p\) if and only if \((l, s_1, s_2) \in \delta _p\) and \((e,l) \in EVENT\).

Example Consider the HFSM \(c^0\) whose structure is depicted on Fig. 4. \(c^0\) is a composite component with constituents: \(c^1\), \(c^2\) and \(c^3\). \(c^1\) is a composite state whose structure is depicted on Fig. 4 by the rectangle with dotted border. \(c^1\) is equally an HFSM. \(c^2\) and \(c^3\) are basic states. For convenience, the HFSM \(c^0\) will be deterministic. Let EVENT, given by couples \((e_i,t_i)\), \(i=1..5\), \(e_i \in \varSigma\). \(\varSigma\) is the set of events that may cause transitions in \(c^0\). The base initial states of \(c^0\) and \(c^1\) are \(c^2\) and \(c^5\), respectively. The initial states of \(c^0\) and \(c^1\) are \([c^0,c^2]\) and \([c^1,c^5]\) respectively. The (base) state-transition relation of \(c^1\) is given by \((s,t,s')\) \(\in\) {\(([c^1,c^5],t_6,[c^1,c^6]),([c^1,c^6],t_7,[c^1,c^5])\)}.

The state-transition relation of \(c^0\) is given by \((s,t,s')\) \(\in\) \(\{([c^0,c^2],t_2,[c^0,c^3]), ([c^0,c^3],t_3,[c^0,c^2]),\) \(([c^0,c^3],t_4,[c^0,c^1,c^5]),\) \(([c^0,c^1,c^5],t_5,[c^0,c^1,c^5]),\) \(([c^0,c^1,c^5],t_6,[c^0,c^1,c^6]), ([c^0,c^1,c^5],t_1,[c^0,c^2]),\) \(([c^0,c^1,c^6],t_7,[c^0,c^1,c^5]), ([c^0,c^1,c^6],t_5,[c^0,c^1,c^5]),\) \(([c^0,c^1,c^6],t_1,[c^0,c^2]) \}.\)

The values of elements of E are fully determined after the events from processes have been mapped or linked to transitions of the state models via EVENT (see the following Sect. 5.2). In virtue of this, linking an HFSM may be considered as a named/labelled transition system (Keller 1976). That is, the transitions of an HFSM are labelled or named with actions or events belonging to the set of the events within processes.

HFSM have similarities with (hierarchical) finite-state machines such as Harel statecharts (Harel 1987). In Harel (1987) we read the following:

“statecharts=state-diagrams+depth+orthogonality+broadcast-communication.”

Just like with statecharts, we are dealing with the depth of states via the composite structure of states. However, in an HFSM, unlike in statecharts, orthogonality and broadcast-communication are not considered. In an HFSM, the transitions are allowed only inside a composite component c and between its constituent components (deeper constituent components i.e. constituent components of constituent components etc. are not considered as constituent components of c). Orthogonality may be managed with the parallel composition of HFSM as described in Sect. 6. In contrast to statecharts, which are a visual modelling techniques for which several semantics exist (Eshuis 2009), there is only one semantics for an HFSM, where history is not considered by the state-transition relation. Therefore, an HFSM must be specified accordingly. An HFSM only defines an initial state and a state-transition relation.

Running example Consider the HFSM \(c^\alpha\) whose structure (the box SM) is depicted on Fig. 3. \(c^\alpha\) is a composite component with constituents: \(c^1\), \(c^3\) and \(c^2\) labelled “\(\text{Maturity}<30\)”, “\(60<\text{Maturity}<100\)” and “\(30<\text{Maturity}<60\)”, respectively.

Let EVENT, given by couples \((e_i,t_i)\), \(i=1..5\), \(e_i \in \varSigma\). \(\varSigma\) is the set of events that may cause transitions in \(c^\alpha\). The base initial states of \(c^\alpha\) is \(c^1\). The initial state of \(c^\alpha\) is \([c^\alpha ,c^1]\). The state-transition relation of \(c^\alpha\) is given by \((s,t,s')\) \(\in\) \(\{([c^\alpha ,c^1],t_1,[c^\alpha ,c^1]),([c^\alpha ,c^1],t_2,[c^\alpha ,c^2]),\) \(([c^\alpha ,c^2],t_3,[c^\alpha ,c^2]),([c^\alpha ,c^2],t_4,[c^\alpha ,c^3]),\) \(([c^\alpha ,c^3],t_5,[c^\alpha ,c^3])\}\)

5.2 Relations between process and state models

Having described the three kinds of models and their exploration semantics, our aim is now to map the events from processes P onto the transitions of state models of components of structure models. In other words, these mappings (or relations) between models encapsulate the expected effects of process models on state models.

We assume that the events generated by P—or, more precisely, their labels or values (\(\varSigma _P\))—are available to an outside observer (see Sect. 5.1.2). Let \({G}_{a}=\left\langle {V}_{a},{E}_{a}\right\rangle\), \({G}_{p}=\left\langle {V}_{p},{E}_{p}\right\rangle\) and \({G}_{s}=\left\langle {V}_{s},{E}_{s}\right\rangle\) be structures (defined by (1)) corresponding to the structure, process and state models, respectively.

Let PHY, TRANS and EVT be the union of all structure components, the union of all sets of \({E}_{s}\) and the union of all sets of \({E}_{p}\), respectively.

TRIGGER is the set of associations of events on the transitions of state models of structure components. The elements of this set are introduced in the running example (Sect. 4.2.1). Indeed, the information of the box MG in Fig. 3, corresponds to an element of the set. TRIGGER is formally defined by

where \((a,t,e) \in\) TRIGGER is to be interpreted as follows: the transition t of (the state model associated to) the structure component a is possibly triggered by consuming the set e of the events, and conversely, since e is explicitly involved in TRIGGER, it has to be consumed. This latter requirement is a semantics one and directly influences the exploration semantics of models.

Running example See the box MG in Fig. 3 and the explanation in Sect. 4.2.1.

The mappings mean that the autonomy and specificity of the different kinds of model are preserved. Autonomy is preserved because each kind of model may be developed separately, while specificity emanates from the fact that the meaning of each kind model is not altered once the model or a part of the model is involved in an interconnection specified via (5). The interconnection has strictly no influence on the development of these models, but rather, a change in the models will result in the necessary changes in TRIGGER and the re-execution of the other following steps of MODEF.

Every element of the triplet (e, a, t) must actually be defined as a stack (see the definition of a state of an HFSM in Sect. 5.1.3) in order that TRIGGER is well-defined as a function. Composite structures also avoid the need for all the models to be systematically flattened before (and while) an exploration is run.

The problem addressed in this paper is not the same as the one addressed in Ramadge and Wonham (1987). Ramadge and Wonham (1987) is concerned with the control of the generator (object to be controlled) by a supervisor (the controller) via a control pattern (the set of all binary assignments to the elements of a subset of \(\varSigma\) (these elements are referred to as controlled events or specifications). Ramadge and Wonham were addressing a problem that the literature sometimes refers to as supervisory control, in other words, the synthesis of a model of a supervisor from the model of the object to be controlled or the plant and the requirements. See for example (Baeten et al. 2016) on the integration of supervisory control in MBSE. However, even though the approach we present in this paper is different from supervisory control, there are nevertheless similarities.

In our approach, the process and state models are seen as models of the master (linked to a generator), rather than a controller and an object to be managed or mastered separately.

The master is a reactive autonomous process for which a complete specification is required. A partial (i.e., without all the labels of transitions) specification of the object to be managed is also required. To obtain a full specification of the object to be managed, TRIGGER must be defined. TRIGGER specifies the expected effects of the master on the object to be managed (which in turn will place constraints on how the master may evolve).

5.3 Expectation-specification

The expectation-specification is the formal modelling of the expected behaviours of the system. It encapsulates expectations over the MA life cycle.

5.3.1 A/P expectations derived from A/G contracts with a pre-order structure on G

There are two good reasons for looking at the problem through the lens of contracts. First, contracts provide a suitable basis for modelling expectations within a system. Second, contracts are easy to describe as rules, yet they are supported by formal conceptual frameworks such as (Benveniste et al. 2012). We are seeking a means for easily involving stakeholders while at the same time allowing expectations to be formally dealt with in the analysis.

Expectations are grounded on Assume/Guarantee (A/G) contracts. We are following in the footsteps of Benveniste et al. (2012) in regard to a meta-theory of contracts.

A contract con for a system or a model is defined by con(A, G) where

-

A corresponds to the assumptions or the “valid environments” for the system or a part of the system.

-

G corresponds to the guarantees or “the commitments of the component (the system or part of it) itself, when put in interaction with a valid environment.”.

-

A and G are built on the set of behaviours related to the system over a domain D not explicit in con.

Example: A system that realises a real division of x by y and assigns the result to z might conform to a contract \(con\_div\)(\((x,y \in {\mathbb {R}}\) and \(y \ne 0)\),\((z:=x/y)\)). Meaning: if the system receives as inputs two real numbers x and y such that \(y \ne 0\), it guarantees that z will be equal to x/y.

According to the meta-theory of contracts (Benveniste et al. 2012), con is consistent, if there exists a model that effectively implements con (satisfies G) for the assumptions A of con. con is compatible if there exists a non empty environment for con. See (Benveniste et al. 2012, Section VII) for more details on A/G contracts definitions and operations.

Continuing with the example of the real division, \(con\_div\) is consistent because there exists a system that effectively takes 2 real inputs x and y, \(y \ne 0\) and computes \(z:=x/y\). \(con\_div\) is compatible because there exists 2 real numbers x and y, and \(y \ne 0\).

Note that a contract con(A, G) for a system (respectively, system models here) does not provide any information on how the system is implemented (respectively, is modelled). Rather, it provides information on how the system is expected to behave.

An expectation consists in expressing preferences on the states of the system given an assumption (A). Preferences are modelled by equipping a guarantee (G) with a pre-order (i.e. a binary relation that is reflexive and transitive) structure. Since A/G contracts (Benveniste et al. 2012) are not defined with a particular structure for G, we are no longer formally dealing with an A/G contract. For this reason, the term preferences (P) is more suitable here.

Formally, an A/P expectation consists of a couple

where both A and P are defined from a domain D. A is theoretically a formula of propositional logic (or zeroth-order logic) where atomic propositions are elements of D. Whenever A is not given for an expectation, we suppose that it is a tautology or that it corresponds to all possible assumptions with regard to the behaviour of the system. P is a pre-order. An element of D is a couple: (object, state) meaning the component object is in state state. Given \(m=(o_1,s_1)\), we call \(s_1\) the underlying state of m.

Running example The box R in Fig 3 is actually an expectation. It specifies the following.

There is no explicit assumption i.e., A is not given. The preferences are as follows. P:= (“System Function”, “\(\text{Maturity}<30\)”) \(\preccurlyeq\) (“System Function”, “\(30<\text{Maturity}<60\)”) \(\preccurlyeq\) (“System Function”, “\(30<\text{Maturity}<60\)”) Such a pre-order (\(\preccurlyeq\)) is derived from the column title MBSELab State Evaluation in Fig 3 where “Critical” is less preferred that “Unsatisfactory” itself less preferred than “Operational”.

Another Example Suppose \(m_1\), \(m_2\), \(m_3\), \(m_4\), \(m_5\), \(m_6\) and \(m_7\) are elements of D. The table below gives two expectations.

\(\text{Expectations}\) | ||

|---|---|---|

\(\text{Id}\) | \({A }\) | \({P }\) |

1 | \(m_1\) | \(m_2 \preccurlyeq m_3, m_2 \preccurlyeq m_4\) |

2 | \(m_5 \wedge m_6\) | \(m_7\) |

\(\dots\) | \(\dots\) | \(\dots\) |

In practice, the expectations with Ids 1 and 2 stand for the following. When \(m_1\) occurs or is true, occurrences of the situations (or the propositions) \(m_4\) and \(m_3\), are more preferred than \(m_2\). And when \(m_5 \wedge m_6\) is true, occurrences of \(m_7\) are preferred (\(m_7\) should be true). It is clear that, the truth value of elements of D is obtained from the behaviour of the system they relate to. For the sake of simplicity, we will assume below that A only consists of atomic propositions.

A basic question that arises is whether operations on contracts also apply to expectations. The answer is certainly no, given the structure of P. Indeed, the interpretation of the operations on a set (G) are not directly translatable on a relation (P). We rather consider properties (compatibility and consistency from contracts) which, defined for expectations, shall determine whether an expectation is well defined and feasible.

Compatibility A basic way of checking the compatibility of an expectation, just like a contract, is to verify that its assumption is valid according to the definitions of the state models that it is related to. Statically, A needs to be well-defined as a proposition. However, checking the consistency of some expectations would mean exploring the state space (of the behaviour of the system) to check whether certain states may all be reached together. If two situations (or propositions) are not defined from the same state model, the validity of an assumption that involves these situations might yield a reachability problem. For example, for the expectation with Id=2, \(A=m_5 \wedge m_6\), compatibility means the possibility of the system being simultaneously in the state(s) underlined by \(m_5\) and \(m_6\).

As a result, an expectation exp(A,P) is compatible if there exists a valid environment (i.e. an environment that makes A true) for exp.

Consistency Thinking in terms of contracts, we might wonder what is meant by “a model that effectively implements exp(A,P) (satisfies P)” mean, that is to say what satisfying the preferences might entail. Before addressing this question it is important to check that P is well defined.

Statically, we need to ensure that P is a pre-order and that P is not contradictory. P is contradictory if, given \(m_2, m_4 \in D\) and \(m_2 \ne m_4\), whenever \(m_2 \preccurlyeq m_4\) is defined for an expectation \(exp_1\) we also have \(m_4 \preccurlyeq m_2\) defined for \(exp_1\) (or another expectation \(exp_2\) such that the assumptions related to both \(exp_1\) and \(exp_2\) might be simultaneously true for the system they relate to).

Using the underlined directed graph of P, it will suffice to check that it is an acyclic graph. Formally, P “is not contradictory” syntactically means that the pre-order P is antisymmetric i.e a partial order.

However, even if P is syntactically well defined, it might be the case that it is not possible, according to the behaviour of the system, to move from situation \(m_4\) to \(m_2\). This is rather a problem of feasibility of the expectations.

If P is well defined, checking that it is satisfied is clearly not a Boolean question, unless there were a requirement that all preferences are satisfied, which would be an excessively heavy requirement. Regarding a utility function for quantitatively evaluating the preferences, it would be possible to compute how far preferences are satisfied. This concern is addressed in Sect. 6.

Consequently, an expectation exp(A,P) is consistent if P is not contradictory and there exists a model for the assumption A, that effectively implements or achieves P at a given level of satisfaction with respect to a quantitative understanding of P.

The fact that a contract (respectively an expectation) does not provide any information on how the system is implemented, but rather on how it is expected to behave, has significant consequences.

As a first consequence, feasibility (that is to say, whether system models exist that satisfy expectations) is not easy to determine. Where multiple models are mapped, given the autonomy of different models and the fact that some models may be subject to constraints, realizability (whether there are models and mappings that satisfy expectations) might also be an issue. This in turn raises the question of the completeness of expectations.

It turns out that feasibility is a trade-off problem which could imply either modifying the expectations or changing the system models and their mappings. Furthermore, on the assumption that we have autonomous processes, trade-offs are not always possible. In this situation, feasibility would be mainly defined in relation to process models and their effects on state models. Since satisfiability is sufficient but not necessary to deduce feasibility, all (less or more) acceptable behaviours might help in dealing with such trade-offs once they are allowed.

5.4 Conclusion and discussion

This section looked at the principles and exploration semantics of models, described how models are related via a mapping, and finally we presented a formalism for specifying the expectations on models. We now need to analyse these models against the expectations. More importantly, the results of the analysis have to be utilized in providing stakeholders with relevant data: we address this concern in the next section.

Contracts theories have been used for component-based design, layered design and platform design (Benveniste et al. 2012). In particular, they have been identified as suitable for open systems for which the context of operation is not fully known in advance. In this paper, we replace guarantees by preferences and we define and consider the notion of Expectation instead of Contract. Additionally, this pre-order structure of preferences is utilized by the analysis procedure, by introducing a utility function that makes the qualitative preferences quantitative (see Section 6.1).

The way that expectations are used in MODEF is different from the traditional use of contracts in system design. In system design, contracts are generally used to specify the interactions between (heterogeneous) components or different viewpoints (Benveniste et al. 2007; Nuzzo et al. 2014; Damm et al. 2011), mainly in software and cyber-physical systems. In MODEF expectations are used to specify the expected behaviour of the system in relation to the system models. This use of contracts is close to how they were first used in programming, where preconditions (in our case assumptions) and postconditions (in our case preferences) are defined for programs (in our case system models)—see e.g., (Hoare 1969; Meyer 1992).

In the area of model checking (Clarke et al. 2000; Baier et al. 2008), property specifications are generally expressed as temporal properties. One advantage of temporal properties is that they are both formally and informally understandable in the sense that natural language (informal properties) may be translated into temporal logic (Tripakis 2016). A/G contracts (and consequently expectations) are also formally and intuitively understandable. Their consistency and compatibility may be verified.

6 What is achievable and what may happen with the modelled system?

This section elaborates the fifth (Analysis of models) and sixth (Providing useful feedbacks to the stakeholders) steps of MODEF (see Fig. 1). We present the analysis algorithms (Sect. 6.1) for analyzing system models in regard to expectations. We then discuss possible uses of outputs from the analysis (Sect. 6.2) and present an application on our running example (Sect. 6.3).

6.1 Analysis of system models against expectations

The fifth step of the MODEF is intended to provide the means to effectively improve the way the system is operated. Given the models of the system and expectations and any changes that occur therein, the stakeholders of the system should be able to permanently identify better ways of operating the system. What do we mean by better ways of operating the system? To answer this question, we will first formulate the problem clearly, and we will then look at the procedure for solving it and the expected benefits.

6.1.1 General problem

A system (S) and its environment (E) are modelled by structure models (AM) and deterministic state models (SM) for S, and process models (PM) for the behaviours of S and E. S is subject to some expectations (R). A mapping (MG) encapsulates the actions (or the effects) of PM on SM of AM. The different types of models AM, SM, PM, R and MG are characterised and defined in Sects. 5.1; 5.3 and 5.2 respectively. Additionally PM might be subject to some constraints (C) for example the cost of the tasks within processes.

The global state of the closed system or its state space is basically given by pt(cst, cev). cst is an array of states of concurrent structure components of S. cev is an array of events of concurrent process components of E (and possibly S). Each event in cev is additionally annotated with a chronological ordering and a status.

We recall from Sect. 2 that the general problem is given by Pb(AM, SM, PM, MG, R, C, InitialPoint, StopCriterion) relating to our question Q1: How to generate the possible future points starting at InitialPoint up to StopCriterion? Which points are, with respect to R and C, more acceptable and less acceptable ones?

6.1.2 General principles for a solution

The answer to the question Q1 basically requires synthesising the possible behaviours (starting from InitialPoint) of the closed system. Furthermore, by speaking of the acceptability of the behaviours, we basically need to compute the “distance” between behaviours in the state space of the system. This raises a second question Q2: How may this distance be defined and computed?

We wish to synthesize the future possible points from InitialPoint up to StopCriterion. The general synthesis procedure for solving question Q1 is summarized in Fig. 5. Before we present this procedure, let us discuss question Q2, since the answer to Q2 is necessary for addressing Q1.