Abstract

The identification of model material parameters is often required when assessing existing structures, in damage analysis and structural health monitoring. A typical procedure considers a set of experimental data for a given problem and the use of a numerical or analytical model for the problem description, with the aim of finding the material characteristics which give a model response as close as possible to the experimental outcomes. Since experimental results are usually affected by errors and limited in number, it is important to specify sensor position(s) to obtain the most informative data. This work proposes a novel method for optimal sensor placement based on the definition of the representativeness of the data with respect to the global displacement field. The method employs an optimisation procedure based on Genetic Algorithms and allows for the assessment of any sensor layout independently from the actual inverse problem solution. Two numerical applications are presented, which show that the representativeness of the data is connected to the error in the inverse analysis solution. These also confirm that the proposed approach, where different practical constraints can be added to the optimisation procedure, can be effective in decreasing the instability of the parameter identification process.


1 Introduction

In structural engineering, when analysing real systems, an accurate response prediction is required to investigate the structural capacity to withstand specific loading conditions. This is usually performed by adopting a numerical or analytical model of the physical problem characterised by a set of material properties. Their definition is not trivial especially for existing structures, thus the inverse problem of “material parameter identification” represents one of the most critical tasks in the analysis process.

Inverse problems appear in several fields, including medical imaging, image processing, mathematical finance, astronomy, geophysics and sub-surface prospecting (Goenezen et al. 2011; Barbone and Gokhale 2004; Balk 2013; Leone et al. 2003). In structural engineering, they are often related to non-destructive testing (Garbowski et al. 2012; Bedon and Morassi 2014), damage identification (Friswell 2007; Gentile and Saisi 2007) and structural health monitoring (Farrar and Worden 2007) and are generally based on estimating model parameters by the knowledge of some experimental data. Inverse problems are very often ill-posed, where according to Hadamard’s definition (Kabanikhin 2008) a problem is well-posed when i) the solution exists, ii) it is unique, and iii) it is stable, i.e. if a small noise is applied to the known terms, the solution of the “perturbed” problem remains in the neighbourhood of the “exact” solution. Since a perfect match between experimental and computed data is not achievable in practice and thus the solution in this sense does not exist, the existence condition is usually relaxed by searching for the minimum-discrepancy solution. In this way, the existence of the inverse problem solution always holds. The uniqueness and stability are mainly related to the type of experimental setup and the number and type of experimental data. In particular, the experimental test must be representative of the unknown variables. If the test setup is properly chosen, i.e. the global response is sufficiently sensitive to the sought parameters, the inverse problem is globally well-posed and the model material parameters can be identified by using the measured full-field response.

In some inverse problems, e.g. imaging inverse problems (Barbone and Bamber 2002; Ferreira et al. 2012), it is assumed that a full strain or displacement field is known. When this is not the case, it may be possible that a well-posed problem becomes unstable because of the limited experimental measurements. This case can be referred to as a data-induced ill-posed problem where, as shown by Chisari et al. (2015) and Fontan et al. (2014), different sensor layouts applied to the same test setup lead to different errors. The design of the optimal sensor layout is thus paramount for parameter identification. A comprehensive review in the field of dynamic testing can be found in Mallardo and Aliabadi (2013), while some strategies for the optimal sensor placement are proposed by Beal et al. (2008) for structural health monitoring and Bruggi and Mariani (2013) to detect damage in plates.

Independently from the inverse problem to be solved, the general approach is to locate the sensors such that the sensitivity of the recorded response to the sought parameters is as large as possible (Fadale et al. 1995). If the measuring errors of all data are uncorrelated and have the same variance \( \sigma^2 \), the variances (and covariances) of the identified parameters are given by \( {\left({\boldsymbol{J}}^T\boldsymbol{J}\right)}^{-1}{\sigma}^2 \) according to ordinary least-squares estimation (Cividini et al. 1983). Here \( \boldsymbol{J} \) is the system sensitivity matrix and \( {\boldsymbol{J}}^T\boldsymbol{J} \) is the Fisher information matrix (FIM). The minimisation of the variance of the parameters can be performed considering different criteria (D-, L-, E-, A-, C-optimality) according to which specific scalar measure of the FIM is used, e.g. condition number (Artyukhin 1985), determinant (Mitchell 2000), norm (Kammer and Tinker 2004), trace (Udwadia 1994). The FIM is also utilised in the criterion proposed by Xiang et al. (2003), where a method quantifying the well-posedness of the inverse problem forms the basis for the sensor design. Once the criterion for defining the “fitness” of the sensor layout is chosen, the design turns into a combinatorial optimisation problem. In this respect, Yao et al. (1993) adopted Genetic Algorithms (GAs, Goldberg 1989) to find the optimum solution. The major drawback of these methods is that the sensitivity matrix (and thus the FIM) is a local property of the parameters, implying that the best sensor layout depends upon the solution, which clearly is not known in advance. To overcome this shortcoming, an integrated procedure was proposed by Li et al. (2008), in which the parameter identification and sensor placement design are carried out alternately. However, in practical applications the sensor layout is often defined before the test and should be optimal or near-optimal for any admissible parameter set.
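As an illustration of the FIM-based criteria mentioned above, the following minimal sketch evaluates a few common scalar measures for two small sensitivity matrices. The matrices `J_good` and `J_poor`, the function name `fim_criteria` and the dictionary keys are hypothetical and chosen only for this example; the mapping of D-, A- and E-optimality to determinant, trace of the inverse and smallest eigenvalue follows the usual textbook convention rather than any of the cited works.

```python
import numpy as np

def fim_criteria(J, sigma=1.0):
    """Scalar measures of the Fisher information matrix J^T J."""
    fim = J.T @ J
    cov = sigma**2 * np.linalg.inv(fim)      # parameter covariance under OLS
    eig = np.linalg.eigvalsh(fim)            # eigenvalues, ascending order
    return {
        "variances": np.diag(cov),           # variance of each parameter
        "D": np.prod(eig),                   # det(FIM), to be maximised
        "A": np.sum(1.0 / eig),              # trace of inverse FIM, to be minimised
        "E": eig[0],                         # smallest eigenvalue, to be maximised
        "cond": eig[-1] / eig[0],            # condition number, to be minimised
    }

# Hypothetical sensitivities of 3 measurements to 2 parameters.
J_good = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
J_poor = np.array([[1.0, 0.9], [1.0, 1.0], [1.0, 1.1]])  # nearly collinear columns

good, poor = fim_criteria(J_good), fim_criteria(J_poor)
print("condition numbers:", good["cond"], poor["cond"])
```

In the second layout the two columns of the sensitivity matrix are almost proportional, so every criterion degrades together. Since the sensitivity matrix is evaluated at a given parameter set, the ranking of the two layouts could change for a different set, which is the drawback discussed above.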

In this work, a novel method for sensor placement is proposed. Instead of considering the sensitivity of the measured data to the parameters in the choice of the optimal sensor layout, which as stated above depends on the parameters themselves, the proposed criterion considers the representativeness of the data with respect to the global displacement field. The representativeness is defined as the ability of inferring the global field from the actual data, and it is based upon a previous Finite Element (FE) discretisation followed by response reduction by means of Proper Orthogonal Decomposition (POD, Liang et al. 2002). A similar approach making use of POD to determine the optimal sensor placement was proposed by Herzog and Riedel (2015) for thermoelastic applications. The underlying reason for the superiority of this approach is that it allows distinguishing the ill-posedness due to the test (global ill-posedness) from the data-induced ill-posedness. The proposed method is aimed at solving this latter problem by defining a set of measurements representative of the global response, and thus minimising the error in the estimation due to the limited number of response outputs recorded. The practicality of the approach is demonstrated in this paper through numerical applications. For simplicity, the discussion is limited to elasto-static problems and to displacements as measured data. Extensions to other cases will be proposed in future work.

2 The inverse structural problem in elasto-statics

Let us consider a mechanical system of volume \( \mathfrak{B} \) and boundary \( \partial \mathfrak{B} \) defined by the position x in the reference configuration. It is known that the equations governing the static behaviour of the system are of three different types: (i) equilibrium, (ii) compatibility and (iii) constitutive relationships.

In direct (forward) problems, the aim is to obtain the vector u representing the displacement field and, consequently, the stress tensor field σ, by solving the system of Partial Differential Equations (PDEs) given by (i), (ii) and (iii), subject to specific boundary conditions. The solution of such a PDE system is known in closed form only in a few simple cases; in realistic structural problems it is therefore often calculated using numerical techniques such as the Finite Element (FE) method.

In identification problems, together with the previously mentioned unknowns, the constitutive material and/or boundary condition parameters p are to be sought. Clearly the problem becomes underdetermined, so some new conditions have to be added. These new conditions may be obtained from experimental measurements taken during the tests. In the following, we suppose that only displacement measurements are available.

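Let us consider a mathematical model F(p, x) which, once the geometry and the known material properties and boundary conditions are fixed, gives the displacements as a function of the unknown parameters p:

\[ \boldsymbol{u}\left(\boldsymbol{x}\right)=\boldsymbol{F}\left(\boldsymbol{p},\boldsymbol{x}\right) \tag{1} \]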

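In the hypothetical case in which the full displacement field ũ(x) is known, a necessary condition for the solution of the inverse problem is the equality between the computed and the reference fields:

\[ \boldsymbol{F}\left(\boldsymbol{p},\boldsymbol{x}\right)=\tilde{\boldsymbol{u}}\left(\boldsymbol{x}\right)\quad \forall \boldsymbol{x}\in \mathfrak{B} \tag{2} \]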

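In globally well-posed inverse problems, condition (2) is also sufficient and can be incorporated in a nonlinear system to be solved employing an optimisation approach:

\[ {\boldsymbol{p}}_{\boldsymbol{G}}=\arg \underset{\boldsymbol{p}}{\min }{\left\Vert \boldsymbol{F}\left(\boldsymbol{p},\boldsymbol{x}\right)-\tilde{\boldsymbol{u}}\left(\boldsymbol{x}\right)\right\Vert}_q \tag{3} \]

where \( {\left\Vert \cdot \right\Vert}_q \), with \( 1\le q\le \infty \), is the weighted \( L_q \)-norm measuring the discrepancy between the computed and the reference displacement.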

As (2) represents an overdetermined system, the solution is exact only in the absence of noise in ũ(x); otherwise it is a solution in an approximate sense. In the case of q = 2 (Euclidean norm), the solution is in a least-square sense. This is the most common formulation for the inverse problem, which can be derived directly from the assumption that all variables follow a Gaussian probability distribution (Tarantola 2005). Other interesting instances, only mentioned here, occur when different probability distributions are assumed for the observed data values. If a Laplace distribution is considered (presence of outliers), the solution of the inverse problem can be derived from (3), imposing q = 1, i.e. the Least-Absolute-Value criterion. Conversely, when boxcar probability densities are used to model the input uncertainties, the problem is solved using q = ∞. This corresponds to the minimax criterion, in which the maximum residual is minimised.
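The three criteria can be compared on a small residual vector. The sketch below is only illustrative: the function name `discrepancy`, the sample values and the way the weights enter the norm are assumptions made for this example.

```python
import numpy as np

def discrepancy(u_model, u_ref, q=2.0, weights=None):
    """Weighted L_q discrepancy between computed and reference displacements."""
    r = np.asarray(u_model, dtype=float) - np.asarray(u_ref, dtype=float)
    w = np.ones_like(r) if weights is None else np.asarray(weights, dtype=float)
    if np.isinf(q):
        return np.max(w * np.abs(r))             # minimax criterion
    return np.sum(w * np.abs(r) ** q) ** (1.0 / q)

# Hypothetical data: one measurement is an outlier.
u_model = [1.0, 2.0, 3.0, 4.0]
u_ref = [0.0, 2.0, 3.0, 7.0]

l1 = discrepancy(u_model, u_ref, q=1)            # least absolute value
l2 = discrepancy(u_model, u_ref, q=2)            # least squares
linf = discrepancy(u_model, u_ref, q=np.inf)     # maximum residual
print(l1, l2, linf)
```

With q = 1 the outlier contributes linearly to the objective, whereas with q = ∞ it alone determines it, which is why the choice of q is tied to the assumed error distribution.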

The hypothesis of a whole displacement field being known is usually only satisfied for small specimens, specific loading conditions and particular measurement equipment, e.g. Digital Image Correlation (Hild and Roux 2006). In practice, the most common case is the availability of a discrete number of displacement measurements, usually obtained by extensometers or transducers, hereinafter referred to as sensors.

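When the full displacement field ũ(x) is not known, and only a limited set of L data \( {\tilde{\boldsymbol{u}}}_i \) is available, it is common practice to replace problem (3) with the following (assuming from now on that the \( L_2 \)-norm is used):

\[ {\boldsymbol{p}}_{\boldsymbol{L}}=\arg \underset{\boldsymbol{p}}{\min}\sum_{i=1}^L{\left\Vert \boldsymbol{F}\left(\boldsymbol{p},{\boldsymbol{x}}_i\right)-{\tilde{\boldsymbol{u}}}_i\right\Vert}_2^2 \tag{4} \]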

or sometimes with other formulations having more complicated forms involving weight matrices and/or regularisation terms (as in the Bayesian framework). In (4), x i is the position of the i-th sensor.

While the solution of (3) is the set \( {\boldsymbol{p}}_{\boldsymbol{G}} \) which best fits the global experimental response, nothing is known about its relationship with the solution \( {\boldsymbol{p}}_{\boldsymbol{L}} \) of (4), which only best fits the data provided. It is intuitive that \( { \lim}_{L\to +\infty }{\boldsymbol{p}}_{\boldsymbol{L}}={\boldsymbol{p}}_{\boldsymbol{G}} \), but, for finite values of L, the difference in the solution \( \Delta \boldsymbol{p}={\boldsymbol{p}}_{\boldsymbol{L}}-{\boldsymbol{p}}_{\boldsymbol{G}} \) is a function not only of L but also of the positions \( {\boldsymbol{x}}_i \), and there is no guarantee that increasing the amount of data improves the accuracy of the estimation, as shown by Balk (2013) with reference to an inverse problem of gravity.

3 Model reduction and sensor design

3.1 Reducing problem size

Using the Finite Element Method, the domain can be discretised into finite elements and the dependency of u on the position x in the global reference system can be made explicit using the relationship:

\[ \boldsymbol{u}\left(\boldsymbol{x}\right)={\boldsymbol{N}}_u\left({\boldsymbol{x}}_e\right){\boldsymbol{T}}_e\boldsymbol{U} \tag{5} \]

where the subscript e indicates the element to which the point P, of global coordinates \( \boldsymbol{x} \) and local coordinates \( {\boldsymbol{x}}_e \), belongs. The matrix \( {\boldsymbol{N}}_u\left({\boldsymbol{x}}_e\right) \) collects the so-called shape functions, which depend on the type of finite element considered. The connectivity matrix \( {\boldsymbol{T}}_e \) transforms the global nodal displacement vector \( \boldsymbol{U} \) into the local reference system. Since both the shape functions and the connectivity are known a priori, the dependence of the full displacement field on the unknown parameters is completely characterised by the knowledge of the relationship U = U(p). Thus, from a theoretical point of view, imposing the equality between the displacement fields, i.e. the functional equality (2), is equivalent (neglecting a weight term given by the shape function integration) to imposing the vectorial equality:

\[ \boldsymbol{U}\left(\boldsymbol{p}\right)=\tilde{\boldsymbol{U}} \tag{6} \]

where Ũ is the N-sized vector collecting the displacements of the nodes by which the structure is discretised. If we neglect the possible error given by the shape functions used, the inverse problem is solved once a limited set of displacements, i.e. the nodal displacements, is known, and the infinite-sized system (2) is replaced by the N-sized system (6).

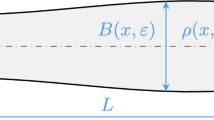

In most cases, the choice of the nodal discretisation in the domain is clearly distinct from the choice of the L nodes, the displacements of which are recorded during the test; furthermore, N ≫ L. What we want to show, however, is that, once L displacements ũ i are available, it can be possible to express the vector Ũ as a linear combination of them.

3.2 Inferring the global field from limited data

Let us suppose that it is possible to exploit the dependence of U on p by simply choosing a convenient basis. In this work, the new basis has been selected by means of Proper Orthogonal Decomposition (POD), analysing the behaviour of the field when p is randomly varied. The details are provided in the Appendix.

The displacement field expressed in the new basis reads:

\[ \boldsymbol{U}\left(\boldsymbol{p}\right)=\boldsymbol{\Phi} \boldsymbol{a}\left(\boldsymbol{p}\right) \tag{7} \]

where Φ is the N × K matrix representing the new basis, and a(p) is a vector collecting K amplitudes. In this way, the dependence on p is restricted to the amplitudes, while the basis is fixed once and for all. If K = N, U is simply expressed in a different equivalent basis; however, if the variation of the parameters p acts on U simply modifying the relative importance of a limited number K ≪ N of “shapes” φ j , the advantages in expressing U as in (7) become apparent.
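A minimal sketch of how such a basis might be extracted is given below. It assumes that the POD basis is taken from the thin singular value decomposition of a snapshot matrix, which is one common way of computing it and not necessarily the exact procedure of the Appendix. The snapshot data are synthetic (two fixed shapes with randomly varying amplitudes), and all names (`snapshots`, `truncate`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for FE snapshots: N dofs, S parameter samples.
N, S = 200, 50
x = np.linspace(0.0, 1.0, N)
shapes = np.column_stack([3 * x**2 - x**3, x])   # two underlying "shapes"
amps = rng.uniform(0.5, 2.0, size=(2, S))        # amplitudes vary with p
snapshots = shapes @ amps                        # N x S snapshot matrix

# Candidate basis vectors from the thin SVD of the snapshot matrix.
Phi_full, svals, _ = np.linalg.svd(snapshots, full_matrices=False)

def truncate(K):
    """Return the K-mode basis, the amplitudes and the relative error."""
    Phi = Phi_full[:, :K]                        # N x K reduced basis
    a = Phi.T @ snapshots                        # K x S amplitudes (Phi orthonormal)
    err = np.linalg.norm(snapshots - Phi @ a) / np.linalg.norm(snapshots)
    return Phi, a, err

for K in (1, 2, 3):
    print(K, truncate(K)[2])
```

Because the synthetic field is built from two shapes only, the relative error drops to round-off level at K = 2; this is the situation K ≪ N in which representation (7) pays off.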

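In fact, let us consider a nodal displacement \( {\boldsymbol{u}}_i \). From (7), it can be written as:

\[ {\boldsymbol{u}}_i={\boldsymbol{\Phi}}_i\boldsymbol{a}\left(\boldsymbol{p}\right) \tag{8} \]

where \( {\boldsymbol{\Phi}}_i \) is the 3 × K matrix obtained by choosing the rows of \( \boldsymbol{\Phi} \) corresponding to the displacement \( {\boldsymbol{u}}_i \). Consequently, if \( \boldsymbol{u} \) is a vector collecting L displacements \( {\boldsymbol{u}}_i \), we can write:

\[ \boldsymbol{u}={\boldsymbol{\Phi}}_r\boldsymbol{a}\left(\boldsymbol{p}\right) \tag{9} \]

with:

\[ {\boldsymbol{\Phi}}_r=\begin{bmatrix}{\boldsymbol{\Phi}}_1\\ {\boldsymbol{\Phi}}_2\\ \vdots \\ {\boldsymbol{\Phi}}_L\end{bmatrix} \tag{10} \]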

On the other hand, a relative displacement Δu k between two points (placed at x k,1 and x k,2) along the direction of the line connecting them (as for transducers) can be expressed as:

where c k is the vector of the director cosines of the direction considered. The matrix Φ r now becomes:

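It is herein underlined that the basis matrix \( \boldsymbol{\Phi} \) is evaluated by considering the whole nodal displacement field \( \boldsymbol{U} \), and thus the representation (7) should approximate the global structural response. As an example, in the Appendix it is shown that POD minimises the average error of a set of models (snapshots). The sensor displacements correspond to a subset of \( \boldsymbol{U} \) (10), possibly linearly combined (12), and thus \( {\boldsymbol{\Phi}}_r \) is evaluated by extracting and combining rows of matrix \( \boldsymbol{\Phi} \). No further analyses on the snapshot set are thus required to evaluate \( {\boldsymbol{\Phi}}_r \).

If \( \mathrm{rank}\left({\boldsymbol{\Phi}}_r\right)=K \), it is possible to invert (9) in a least-squares sense:

\[ \boldsymbol{a}={\boldsymbol{\Phi}}_r^{\dagger}\boldsymbol{u} \tag{13} \]

where \( {\boldsymbol{\Phi}}_r^{\dagger } \) is the pseudo-inverse matrix of \( {\boldsymbol{\Phi}}_r \) (\( {\boldsymbol{\Phi}}_r^{\dagger }={\boldsymbol{\Phi}}_r^{-1} \) if \( {\boldsymbol{\Phi}}_r \) is square and full rank). From (7) and (13):

\[ \boldsymbol{U}=\boldsymbol{\Phi} {\boldsymbol{\Phi}}_r^{\dagger}\boldsymbol{u}=\boldsymbol{P}\boldsymbol{u} \tag{14} \]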

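A simple example may help clarify the concept. Let us consider a cantilever Timoshenko beam of length l for which we wish to identify the elastic properties E and G, loaded by a force F (assumed as known without uncertainty) orthogonal to its axis and applied at the free end. From the analysis of the response at varying E and G, we infer that the displacement field can be expressed as the sum of two contributions, a cubic shape \( {\varphi}_1(x) \) and a linear shape \( {\varphi}_2(x) \):

\[ u(x)={a}_1\left(E,G\right){\varphi}_1(x)+{a}_2\left(E,G\right){\varphi}_2(x) \tag{15} \]

From Timoshenko theory we know that \( {a}_1\left(E,G\right)=\frac{F}{6EI} \) and \( {a}_2\left(E,G\right)=\frac{F\chi }{GA} \), with A, I and χ being the area, second moment of area and shear factor of the beam cross section, but it is herein assumed that this information is not explicitly known (as for a generic structure). Let us now assume that we experimentally recorded the displacements \( u_m \) and \( u_e \) at the middle and free end of the beam as effects of the force F. From (15), they can be expressed as:

\[ \begin{aligned}{u}_m&={a}_1{\varphi}_1\left(l/2\right)+{a}_2{\varphi}_2\left(l/2\right)\\ {u}_e&={a}_1{\varphi}_1(l)+{a}_2{\varphi}_2(l)\end{aligned} \tag{16} \]

and thus:

\[ \begin{bmatrix}{a}_1\\ {a}_2\end{bmatrix}={\begin{bmatrix}{\varphi}_1\left(l/2\right)&{\varphi}_2\left(l/2\right)\\ {\varphi}_1(l)&{\varphi}_2(l)\end{bmatrix}}^{-1}\begin{bmatrix}{u}_m\\ {u}_e\end{bmatrix} \tag{17} \]

From (15) and (17), the global field can be written as a function of the known displacements:

\[ u(x)=\begin{bmatrix}{\varphi}_1(x)&{\varphi}_2(x)\end{bmatrix}{\begin{bmatrix}{\varphi}_1\left(l/2\right)&{\varphi}_2\left(l/2\right)\\ {\varphi}_1(l)&{\varphi}_2(l)\end{bmatrix}}^{-1}\begin{bmatrix}{u}_m\\ {u}_e\end{bmatrix} \tag{18} \]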

This shows that the global displacement field can be expressed as a function of the recorded displacements without knowing the explicit relationship between these values and the sought parameters (E and G in the example). The decomposition of the global field can be performed by means of techniques such as POD, described in the Appendix.
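The beam example can be reproduced numerically with a few lines. In the sketch below the shapes are assumed to be φ1(x) = 3lx² − x³ and φ2(x) = x, which is consistent with the amplitude a1 = F/(6EI) of a tip-loaded cantilever but is an assumption of this illustration; the beam length and the "hidden" amplitudes are arbitrary values used only to synthesise the two sensor readings.

```python
import numpy as np

l = 2.0                                    # beam length (hypothetical value)

def phi1(x):
    return 3 * l * x**2 - x**3             # cubic (bending) shape, assumed form

def phi2(x):
    return x                               # linear (shear) shape

# "Hidden" amplitudes, used only to synthesise the two sensor readings.
a_true = np.array([0.004, 0.001])
x_s = np.array([l / 2, l])                 # mid-span and free-end sensors
Phi_r = np.column_stack([phi1(x_s), phi2(x_s)])
u_meas = Phi_r @ a_true                    # recorded u_m and u_e

# Invert the 2 x 2 system for the amplitudes, then rebuild the whole field.
a_rec = np.linalg.solve(Phi_r, u_meas)
x = np.linspace(0.0, l, 11)
u_rec = np.column_stack([phi1(x), phi2(x)]) @ a_rec
u_exact = a_true[0] * phi1(x) + a_true[1] * phi2(x)
print(np.max(np.abs(u_rec - u_exact)))
```

The reconstruction never uses E, G, I, A or χ: only the two shapes and the two readings enter the calculation, as stated above.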

3.3 The optimal sensor layout

Expression (14) is a linear relationship between the nodal displacement vector and a limited set of data (absolute displacements (10), or relative displacements (12)). Thus, it is natural to investigate how an error in \( \boldsymbol{u} \) propagates into the global response. When the noise in \( \boldsymbol{u} \) can be assumed to be a Gaussian random variable with zero mean and variance \( \sigma^2 \), the mean square error (MSE) of the least-squares solution (13) is:

\[ \mathrm{MSE}\left(\hat{\boldsymbol{a}}\right)=E\left[{\left\Vert \hat{\boldsymbol{a}}-\boldsymbol{a}\right\Vert}^2\right]={\sigma}^2\sum_{i=1}^K\frac{1}{\lambda_i} \tag{19} \]

where \( \hat{\boldsymbol{a}} \) is the perturbed solution and \( \lambda_i \) is the i-th eigenvalue of the matrix \( {\boldsymbol{T}}_r={\boldsymbol{\Phi}}_r^T{\boldsymbol{\Phi}}_r \). An interesting approach for the optimal sensor placement in linear inverse problems, based on minimising (19), is proposed by Ranieri et al. (2014). Since the MSE presents many local minima, it is not actually used; instead, the research effort is focused on finding tight approximations that can be efficiently optimised.

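Here, we disregard any assumptions about the noise distribution and the approximation of MSE. Applying a perturbation to \( \boldsymbol{u} \) in (14) and subtracting the unperturbed expression, we obtain:

\[ \delta \boldsymbol{U}=\boldsymbol{\Phi} {\boldsymbol{\Phi}}_r^{\dagger}\delta \boldsymbol{u}=\boldsymbol{P}\delta \boldsymbol{u} \tag{20} \]

Considering one of the basic inequalities for the norm of a matrix:

\[ \left\Vert \boldsymbol{P}\delta \boldsymbol{u}\right\Vert \le \left\Vert \boldsymbol{P}\right\Vert \left\Vert \delta \boldsymbol{u}\right\Vert \tag{21} \]

it is clear that, given an error in the measured data \( \boldsymbol{u} \) (usually not controllable), the upper bound for the error in the vector \( \boldsymbol{U} \) (and, consequently, in the global field) is controlled by the norm of the matrix \( \boldsymbol{P} \). Hence, the most informative (or representative) set of experimental data is that providing a reconstructed field \( \boldsymbol{U} \) characterised by minimal error. Since \( \boldsymbol{P} \) changes with changing sensor locations \( {\boldsymbol{X}}_s \) (through the term \( {\boldsymbol{\Phi}}_r^{\dagger } \)), a rational approach in the choice of the measurement data may be the minimisation of the corresponding norm \( \left\Vert \boldsymbol{P}\right\Vert \):

\[ \underset{{\boldsymbol{X}}_s}{\min}\left\Vert \boldsymbol{P}\left({\boldsymbol{X}}_s\right)\right\Vert \quad \mathrm{subject}\ \mathrm{to}\quad {\boldsymbol{x}}_{si}\in {\tilde{\mathfrak{B}}}_i\subseteq \mathfrak{B} \tag{22} \]

where \( {\boldsymbol{x}}_{si} \) indicates the position of the i-th sensor (or the position of the couple of points identifying the i-th transducer) belonging to a subset \( {\tilde{\mathfrak{B}}}_i \) of its domain \( \mathfrak{B} \). The objective function is represented by the norm of the matrix \( \boldsymbol{P}=\boldsymbol{\Phi} {\boldsymbol{\Phi}}_r^{\dagger}\left({\boldsymbol{X}}_s\right) \), which may be evaluated for a trial sensor setup \( {\boldsymbol{X}}_s \) by applying the relevant formulation (10) or (12). Although difficult to express in an analytical form, the optimisation problem (22) can be easily treated by using meta-heuristics, such as Genetic Algorithms.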
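To make the structure of problem (22) concrete, the sketch below runs a deliberately simplified evolutionary search over sensor subsets. It is not the Genetic Algorithm used in this work: selection, crossover and mutation operators, population sizes and the random orthonormal matrix standing in for the POD basis are all assumptions made for illustration, and each sensor is taken to measure a single degree of freedom.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical orthonormal basis (N dofs, K modes) standing in for the POD basis.
N, K, L = 60, 3, 5
Phi, _ = np.linalg.qr(rng.standard_normal((N, K)))

def fitness(idx):
    """Frobenius norm of P = Phi @ pinv(Phi_r) for sensors at dofs idx."""
    Phi_r = Phi[idx, :]
    if np.linalg.matrix_rank(Phi_r) < K:
        return np.inf                      # layout cannot identify K amplitudes
    return np.linalg.norm(Phi @ np.linalg.pinv(Phi_r), "fro")

def mutate(idx):
    """Move one sensor to a currently unused dof."""
    child = idx.copy()
    free = np.setdiff1d(np.arange(N), child)
    child[rng.integers(L)] = rng.choice(free)
    return child

def crossover(a, b):
    """Child draws L distinct dofs from the union of both parents."""
    return rng.choice(np.union1d(a, b), size=L, replace=False)

pop = [rng.choice(N, size=L, replace=False) for _ in range(30)]
history = []
for gen in range(40):
    pop.sort(key=fitness)
    history.append(fitness(pop[0]))
    parents = pop[:10]                     # truncation selection
    children = [pop[0]]                    # elitism: keep the best layout
    while len(children) < len(pop):
        i, j = rng.choice(len(parents), size=2, replace=False)
        children.append(mutate(crossover(parents[i], parents[j])))
    pop = children

best = min(pop, key=fitness)
print(sorted(best.tolist()), fitness(best))
```

The objective only needs the basis and the trial layout, so each fitness evaluation costs one small pseudo-inverse and no additional FE analysis. Practical constraints, such as inaccessible regions, could be introduced by restricting the set of dofs from which `mutate` and the initial population draw.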

Incidentally, as a consequence of what has been said, it is possible to determine some necessary conditions for the solution of the inverse problem, in terms of inequalities between vector sizes:

\[ L\ge K\ge Q \tag{23} \]

where L is the number of experimental data, K is the number of significant modes of the reduced basis and Q is the number of sought material parameters.

3.4 Considerations

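If the Frobenius norm is considered in (21), it is possible to give a physical meaning to \( \left\Vert \boldsymbol{P}\right\Vert \). In fact, for the linear relationship (14), considering \( \boldsymbol{u} \) as a random vector with variance-covariance matrix \( \mathrm{Var}\left(\boldsymbol{u}\right) \), \( \boldsymbol{U} \) is also a random vector with variance-covariance matrix:

\[ \mathrm{Var}\left(\boldsymbol{U}\right)=\boldsymbol{P}\,\mathrm{Var}\left(\boldsymbol{u}\right){\boldsymbol{P}}^T \tag{24} \]

If we consider all errors in the experimental data as uncorrelated and with variance \( {\sigma}_i^2={\sigma}^2 \) equal for all components, (24) reduces to:

\[ \mathrm{Var}\left(\boldsymbol{U}\right)={\sigma}^2\boldsymbol{P}{\boldsymbol{P}}^T \tag{25} \]

Taking the trace of both sides gives:

\[ \mathrm{tr}\left[\mathrm{Var}\left(\boldsymbol{U}\right)\right]={\sigma}^2\mathrm{tr}\left(\boldsymbol{P}{\boldsymbol{P}}^T\right)={\sigma}^2{\left\Vert \boldsymbol{P}\right\Vert}_F^2 \tag{26} \]

where \( {\left\Vert \boldsymbol{P}\right\Vert}_F \) is the Frobenius norm of \( \boldsymbol{P} \). Under the hypothesis of Gaussian uncertainty, \( \mathrm{MSE}\left(\boldsymbol{x}\right)=\mathrm{tr}\left[\mathrm{Var}\left(\boldsymbol{x}\right)\right] \), so \( {\left\Vert \boldsymbol{P}\right\Vert}_F \) represents the ratio between the root-mean-square error (RMSE) of the reconstructed field \( \boldsymbol{U} \) and the standard deviation of the measurements σ. This implies that if one compares two setups 1 and 2, characterised by matrices \( {\boldsymbol{P}}_1 \) and \( {\boldsymbol{P}}_2 \) and measurement standard deviations \( \sigma_1 \) and \( \sigma_2 \) respectively, the following equality holds:

\[ \frac{\mathrm{RMSE}\left({\boldsymbol{U}}_1\right)}{\mathrm{RMSE}\left({\boldsymbol{U}}_2\right)}=\frac{{\left\Vert {\boldsymbol{P}}_1\right\Vert}_F\,{\sigma}_1}{{\left\Vert {\boldsymbol{P}}_2\right\Vert}_F\,{\sigma}_2} \tag{27} \]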

where U 1 and U 2 are the reconstructed displacement field for setup 1 and 2 respectively. Equation (27) means that, assuming σ 1 = σ 2, the relative quality of the reconstruction (representativeness of the data) between two setups may be compared by examining the ratio of the two norms, even though the two setups consist of different number of measurements. It is explicitly conjectured here that ‖P‖ F can also be used as an indicator of the accuracy of the inverse problem solution when the inverse problem is globally well-posed. If it is not the case, regularisation techniques are to be added to the formulation, independently of the quality of the sensor setup. Even though no formal proof is given here for this assumption, in the numerical application described in the following section it seems to be empirically confirmed. More detailed analyses on the relationship between error in the reconstruction and error in the inverse problem solution are planned in further research.

4 Numerical applications

The numerical applications described in the following regard a mesoscale description for brick-masonry (Macorini and Izzuddin 2011). According to this modelling strategy bricks are modelled by 20-noded elastic solid elements, while mortar and brick-mortar interfaces are lumped into a 16-noded co-rotational interface element, in which the two faces, initially coincident in the undeformed configuration, may translate and rotate with respect to each other. Additional interface elements are inserted in the middle vertical plane of each brick to model possible crack inside the brick in the nonlinear stage. All material nonlinearities of the model are thus accounted for in such interface elements. Here, we are concerned about the elastic behaviour only and so the reader is referred to Macorini and Izzuddin (2011) for further discussion about the post-elastic behaviour. In the elastic branch, the relationship between displacements and stresses at the nodes is expressed through the definition of uncoupled axial k N and shear k V stiffnesses. This material model has been implemented in the general FE code ADAPTIC (Izzuddin 1991).

4.1 Shear test on a masonry panel

The structure examined here is a 770 × 770 mm², 120 mm-thick masonry panel, made of 250 × 120 × 55 mm³ bricks and 10 mm-thick mortar layers. A stiff element is set on top of the panel allowing for a uniform load application. More specifically, a vertical pressure equal to 1 MPa followed by a horizontal monotonic load quasi-statically increasing from 0 to 92.4 kN is applied to the stiff top beam. Additional kinematic constraints are applied to the stiff element forcing it to remain horizontal during the application of the horizontal load. The vertical load is modelled as a volume force in the stiff element. This specific loading arrangement simulates a common shear test for masonry panels (Fig. 1).

Here, the aim of the test is to estimate the elastic properties of the constituents, brick and mortar, modelled as described above. The elastic properties considered as variable are listed in Table 1, together with the variation range. In the table, r represents the ratio between head joint and bed joint elastic properties. Hence, it is assumed that \( \frac{k_{N,hj}}{k_{N,bj}}=\frac{k_{V,hj}}{k_{V,bj}}=r \).

In order to construct the POD basis and verify its accuracy, 150 samples (snapshot set) with different material properties have been generated by using Sobol pseudo-random sequence (Antonov and Saleev 1979). Subsequently, 100 additional samples (validation set) have been generated randomly to verify the fitness of the basis and the approximation.

The analysis of the 150 samples by means of the procedure described in the Appendix allows for the definition of the POD basis. This is evaluated by applying (A.3) after choosing the number of modes K. Clearly, the higher K the smaller errors are given in the POD representation. Such errors are evaluated by sequentially applying (A.5) and (A.1) to the FE displacement field U FE . The errors s, associated with the POD approximation and evaluated as in (A.7) and (A.8) for the snapshot set and the validation set respectively, are shown in Fig. 2 as a function of the number of modes K. They represent a cumulative measure of the error due to the compact representation given by POD.

The figure clearly confirms that a few modes are sufficient to retrieve a very accurate response. It is also remarkable that (A.7), valid only for the snapshots, gives an accurate estimate of the error even for random samples. Thus, it can be used as an error indicator for the choice of the number K of modes to be used. According to Fig. 2, the compact POD representation with K = 5 modes (shown in Fig. 3) gives errors of less than 0.5 %, which is a very accurate approximation.

The full displacement field is characterised by 5040 degrees of freedom (three components for 1680 nodal displacements). In contrast, the reduced response consists of only 5 degrees of freedom, namely the amplitudes a 1 , a 2 , …, a 5 , that, multiplied by the mode shapes shown in Fig. 3, provide the response in terms of displacements.

Once the minimum number of modes needed for a certain level of accuracy, defined by (A.7), is selected, different sensor layouts can be compared based on their ability in estimating the global field (14), namely their “representativeness” of the global field. Five different setups will be compared: they consist of vertical (z) and in-plane horizontal (x) displacements measured for the different node sets displayed in Fig. 4. Setups (a)-(c) consider 30 nodes, while setup (d) and (e) 20 and 10 nodes respectively. In this example, the setups are compared independently from the possibility to be the optimal placements.

As shown in Section 3.4, the parameter \( {\left\Vert \boldsymbol{P}\right\Vert}_F \) is an indicator of the error expected in the reconstruction when Gaussian noise is applied to the known data. The value of \( {\left\Vert \boldsymbol{P}\right\Vert}_F \) for the five setups considered is reported in Table 2. As expected, setups with more data (a–c) generally lead to smaller \( {\left\Vert \boldsymbol{P}\right\Vert}_F \) (greater representativeness). However, increasing the number of data does not always give a substantial benefit: setups (a) and (d) have comparable \( {\left\Vert \boldsymbol{P}\right\Vert}_F \), even though the latter has 33 % fewer data.

The accuracy of (26) is now investigated numerically. The data for the five setups, which were extracted for each of the models used as validation set, were perturbed with a Gaussian random noise of zero mean and different values of standard deviation: σ = 0.001 mm, σ = 0.005 mm, σ = 0.01 mm and σ = 0.05 mm. For each error range and for each model, 10 samples were generated, each giving a perturbed data set \( {\boldsymbol{u}}_j^{pert} \). This response was inserted in (14) to obtain a reconstructed displacement field \( {\boldsymbol{U}}_j^{rec} \). The root-mean-square error of the reconstructed responses is evaluated as:

\[ \mathrm{RMSE}=\sqrt{\frac{1}{P}\sum_{j=1}^P{\left\Vert {\boldsymbol{U}}_j^{rec}-{\boldsymbol{U}}_j^{FE}\right\Vert}^2} \tag{28} \]

where P is the number of samples for each error range (10 samples times 100 models) and U j FE is the error-free nodal displacement vector. The results are shown in Fig. 5.
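The same kind of check can be sketched on synthetic data. In the example below a random orthonormal matrix plays the role of the POD basis, the "exact" field lies entirely in that basis (so no truncation error is present), and the sensor positions, noise level and sample count are arbitrary; all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)

N, K, L = 80, 4, 12
Phi, _ = np.linalg.qr(rng.standard_normal((N, K)))   # hypothetical basis
sensors = rng.choice(N, size=L, replace=False)
P = Phi @ np.linalg.pinv(Phi[sensors, :])            # N x L reconstruction matrix

U_exact = Phi @ rng.standard_normal(K)               # field lying in the basis
u_exact = U_exact[sensors]                           # error-free sensor data

sigma, n_samples = 0.01, 20000
noise = sigma * rng.standard_normal((n_samples, L))
U_rec = (u_exact + noise) @ P.T                      # one reconstruction per row

# Empirical RMSE over all perturbed samples versus the prediction sigma * ||P||_F.
rmse_mc = np.sqrt(np.mean(np.sum((U_rec - U_exact) ** 2, axis=1)))
rmse_pred = sigma * np.linalg.norm(P, "fro")
print(rmse_mc, rmse_pred)
```

In this idealised setting the two values agree up to sampling scatter for any noise level. With real FE data the truncation of the basis adds an error that does not scale with σ, so some deviation from the prediction is to be expected when σ is very small.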

Figure 5 confirms that the error estimation for the reconstructed field calculated using (26) is in excellent agreement with the numerical results for substantial data errors (σ = 0.005–0.05 mm). For low levels of error in the data (σ = 0.001 mm), (26) underestimates the total error. In that case, it is likely that the error due to the truncation of the POD basis is of the same order of magnitude as the error due to the data, and so it cannot be estimated by the procedure described above.

An example of reconstruction error for setups (c) and (e) when the error in the input data has standard deviation equal to 0.05 mm is displayed in Fig. 6. The superiority of setup (c) is evident, as the maximum error in the reconstruction is less than 0.05 mm, while using setup (e) it is amplified (maximum error in the reconstruction equal to 0.15 mm).

The final step of this example concerns the estimation of the material parameters. We want to investigate the accuracy of the material parameter estimation when the five setups displayed in Fig. 4 provide the input data for the inverse problem. This will practically confirm that if a setup is representative of the displacement field and the problem is globally well-posed, then the setup will also provide an accurate solution to the inverse problem. To this aim, the model with properties \( E_b=10000 \) MPa, \( \nu_b=0.15 \), \( k_{N,bj}=100 \) N/mm³, \( k_{V,bj}=40 \) N/mm³, \( r=0.2 \) will be considered as the “true” model, i.e. the model whose parameters we aim to estimate. For each of the five sensor layouts, the data will be evaluated by extracting the response from the “true” model and adding a Gaussian perturbation of zero mean and standard deviation σ = 0.01 mm to each measurement. 20 perturbed instances for each sensor layout will be considered; each corresponds to a series of “experimental” data that will be used as input for the inverse analysis. The unknown parameters will be estimated by solving the optimisation problem (4) by means of a Genetic Algorithm implemented in the software TOSCA (Chisari 2015), already used for the solution of inverse problems (Chisari et al. 2015). The GA parameters for the algorithm are:

- Initial population size: 40 individuals;
- Subsequent population size: 30 individuals;
- Number of generations: 20;
- Crossover type: Blend-α;
- Crossover probability: 1.0;
- Mutation probability: 0.01;
- Scaling type: Linear ranking;
- Scaling pressure for linear ranking: 1.7;
- Elitist individuals: 1.

The reader is referred to the works by Chisari et al. (2015) and Chisari (2015) for further explanation on the parameter meaning and on the overall solution scheme.

Firstly, it has been noticed that the problem of estimating the five parameters displayed in Table 1 is globally ill-posed for the proposed setup. It means that even using the error-free global displacement field as reference in (4), the algorithm is likely to find a local optimum. In order to consider a well-posed problem, the number of variables has been decreased to two: k N and k V . The problem is well-posed, as can be seen in Fig. 7, where the solutions of the 20 perturbed problems in the k N - k V plane are displayed, for the case in which the full displacement field is used as input data. It is possible to notice that the solution of the inverse problem is not too sensitive to the data perturbation.

The maximum variance \( {\sigma}_{p,\max}^2 \) in the parameter space (and its square root \( {\sigma}_{p,\max } \)) can be used as a measure of the expected error in the estimation (Chisari et al. 2015). The maximum variance has been chosen instead of the variance of each parameter, since it is evident from Fig. 7 that uncorrelated errors in the data do not lead to uncorrelated errors in the solution. A comparison between the five setups is shown in Fig. 8a, while in Fig. 8b the data reported in Table 2 are shown as column bars.
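One possible way of computing such a measure is sketched below, under the assumption that the maximum variance is the largest eigenvalue of the sample covariance matrix of the identified parameter sets, i.e. the variance along the principal direction of the scatter. This interpretation, the function name `max_std` and the synthetic scatter of solutions are assumptions of this example and are not taken from the cited works.

```python
import numpy as np

def max_std(solutions):
    """Square root of the largest eigenvalue of the sample covariance matrix."""
    cov = np.cov(np.asarray(solutions, dtype=float), rowvar=False)
    return np.sqrt(np.linalg.eigvalsh(np.atleast_2d(cov))[-1])

rng = np.random.default_rng(3)

# Hypothetical scatter of 20 identified (k_N, k_V) pairs with correlated errors.
t = rng.standard_normal(20)
sols = np.column_stack([100 + 5 * t, 40 + 2 * t + 0.1 * rng.standard_normal(20)])

sigma_p_max = max_std(sols)
per_param = sols.std(axis=0, ddof=1)       # standard deviation of each parameter
print(sigma_p_max, per_param)
```

When the errors in the identified parameters are correlated, the principal-direction value is never smaller than the largest per-parameter standard deviation, which is why a single scalar of this kind can summarise the scatter.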

In the two figures, it can be observed that the qualitative trend is similar for σp,max and ‖P‖ F : setups (b) and (c) are the best of those considered in this example, while, as expected considering the value ‖P‖ F , setup (e) leads to the largest errors in the inverse procedure. Results obtained by using setups (a) and (d) are similar. These results confirm that the sensor placement influences the solution of an inverse problem, and that increasing the number of data does not guarantee a successful parameter estimation. In fact, layouts (d) and (e), though utilising fewer sensors than (a), give similar uncertainty in the results.

It is clear that the representativeness of the data (defined by ‖P‖ F ) as proposed in this work should only be regarded as a qualitative indicator for the expected error of the inverse problem σp,max, since the relationship between them is not linear and not explicitly known. As seen in Fig. 8, the proportions between the error in the reconstructed field and the error in σp,max are not always respected.

4.2 Diagonal compression test

This example shows that an effective sensor placement can be determined by assuming the parameter ‖P‖ F as indicator of the representativeness of the data with respect to the global field. Using the material model described before, a diagonal compression test on a masonry panel has been simulated. This test is widely used in practice (Corradi et al. 2003; Milosevic et al. 2013) for the assessment of masonry shear strength; herein, it will be considered for the estimation of the elastic parameters of the mesoscale model.

The panel has dimensions 650 × 650 × 90 mm³ and is made of 250 × 55 × 90 mm³ solid clay bricks, with 10 mm thick horizontal mortar joints and 15 mm thick vertical mortar joints. Each brick has been discretised by eight 20-node solid elements connected by stiff elastic 8-node interfaces (Fig. 9). Mortar joints were modelled by elastic 8-node interface elements, with vertical and horizontal interfaces having different properties (Fig. 10a). The two stiff angles of the loading apparatus at the top and bottom of the panel were modelled using solid elements, and the external nodes of the bottom angle not in contact with the masonry specimen were fully restrained. Four vertical forces F00, F01, F10, F11, spaced 184 mm and 90 mm apart in the X and Y directions respectively, were applied on the top angle. Accidental eccentricities of the applied loading in the X and Y directions were represented by changing the relative magnitude of the four point loads. Elastic interfaces with low tangential and high normal stiffness were applied between the angles and the panel to simulate a layer of plaster. The full numerical model is displayed in Fig. 10b. In Table 3, the parameters assumed as fully known are reported.

The parameters to be identified are the same as those described in Table 1, plus the parameters e x0 and e y0 representing the load eccentricity in the x- and y-directions, respectively. These two parameters define the load application point, as the four forces shown in Fig. 10b are related to the total force F by the expressions:

It is easy to verify that the sum of the forces is equal to F. When e x0 = e y0 = 0.5 the load is perfectly centred; the considered variation range is [0.0; 1.0].
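One weighting consistent with the two properties stated above (the forces sum to F, and e x0 = e y0 = 0.5 gives a centred load) is a bilinear split of the total force. The sketch below assumes this form and an arbitrary assignment of the indices 00, 01, 10, 11 to the load points; it is an illustration only and should not be read as the expressions adopted by the authors. The function name `corner_forces` is hypothetical.

```python
def corner_forces(F, ex0, ey0):
    """Split a total force F among four load points with bilinear weights.

    ex0, ey0 in [0, 1] are the eccentricity parameters; 0.5 means centred.
    The index-to-position mapping is an assumption made for illustration.
    """
    if not (0.0 <= ex0 <= 1.0 and 0.0 <= ey0 <= 1.0):
        raise ValueError("eccentricity parameters must lie in [0, 1]")
    return {
        "F00": F * (1.0 - ex0) * (1.0 - ey0),
        "F10": F * ex0 * (1.0 - ey0),
        "F01": F * (1.0 - ex0) * ey0,
        "F11": F * ex0 * ey0,
    }

centred = corner_forces(100.0, 0.5, 0.5)    # four equal forces of 25.0
eccentric = corner_forces(100.0, 0.3, 0.8)  # unequal forces, same resultant
```

With this choice the four weights always add up to one, so the resultant is F for any admissible pair of eccentricity parameters.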

The exploration of the parameter space for building the POD basis has been performed by generating 200 samples with the Sobol sequence. The analysis results show that the error in the representation, given by (A.7), is about 1 % when using 7 modes. This means that, based on inequalities (23), at least 7 experimental measurements are needed to solve the inverse problem of estimating the unknown material elastic properties and the two eccentricities.
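The choice of the number of modes for a target representation error can be sketched as below. The sketch assumes that the relative root-mean-square error of the snapshots equals the square root of the ratio between the sum of the discarded eigenvalues of the correlation matrix and the sum of all eigenvalues, which is the standard POD result; the function name `modes_needed` and the eigenvalues in the example are hypothetical and are not those of the masonry model.

```python
import numpy as np

def modes_needed(eigvals, tol=0.01):
    """Smallest number of POD modes K whose relative RMS snapshot error <= tol."""
    lam = np.clip(np.sort(np.asarray(eigvals, dtype=float))[::-1], 0.0, None)
    retained = np.cumsum(lam) / lam.sum()          # energy kept by first K modes
    err = np.sqrt(np.clip(1.0 - retained, 0.0, None))
    err[-1] = 0.0  # with all modes the snapshots are reproduced exactly
    return int(np.argmax(err <= tol)) + 1          # first K meeting the tolerance

# Hypothetical eigenvalue spectrum, for illustration only
example_eigvals = [90.0, 9.0, 0.9, 0.1]
K = modes_needed(example_eigvals, tol=0.05)
```

For the spectrum above the errors with one, two and three modes are roughly 0.32, 0.10 and 0.03, so three modes are required for a 5 % tolerance.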

In the following, we will focus on the problem of choosing the best layout for 14 transducers, each measuring relative displacements between two points. It is equivalent to solving the optimisation problem (22) where x s i is the vector collecting the coordinates of the end points of the i-th transducer. In this application, x s i may vary in the space of the N nodes of the model, with the following limitations:

1) x s1–7 must lie on one side of the specimen, while x s8–14 are constrained to be symmetrical on the other side;
2) the nodes of the angles are excluded from the selection.

These limitations may be added as constraints to the optimisation problem. Other constraints may be of practical interest. Thus, according to the type of constraint, different analyses were carried out and will be described in the following. To solve the optimisation problem, the same GA software TOSCA described for the solution of the inverse problem in Subsection 4.1 was utilised. The GA parameters are described in the following list:

- Initial population size: 280 individuals;
- Following populations size: 280 individuals;
- Number of generations: 300;
- Crossover type: Blend-α;
- Crossover probability: 0.85;
- Mutation probability: 0.01;
- Scaling type: Linear ranking;
- Scaling pressure for linear ranking: 1.7;
- Elitist individuals: 14.
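To give an idea of two of the operators listed above, a minimal sketch of a Blend-α crossover and of linear-ranking selection probabilities is reported below, in their common textbook forms. It is not the TOSCA implementation; the function names are hypothetical and the value α = 0.5 is an assumption, since α is not specified in the list. The scaling pressure 1.7 is taken from the list.

```python
import numpy as np

def blend_crossover(parent_a, parent_b, alpha=0.5, rng=None):
    """Blend-alpha crossover: each gene of the child is drawn uniformly from
    the parents' interval widened by alpha times its length on both sides."""
    rng = np.random.default_rng() if rng is None else rng
    a = np.asarray(parent_a, dtype=float)
    b = np.asarray(parent_b, dtype=float)
    lo, hi = np.minimum(a, b), np.maximum(a, b)
    span = hi - lo
    return rng.uniform(lo - alpha * span, hi + alpha * span)

def linear_ranking_probabilities(objective_values, pressure=1.7):
    """Selection probabilities for a minimisation problem (needs >= 2 individuals).

    The worst individual gets (2 - pressure)/n, the best gets pressure/n,
    with a linear variation in between according to the rank.
    """
    f = np.asarray(objective_values, dtype=float)
    n = f.size
    order = np.argsort(-f)            # indices from worst (largest) to best
    ranks = np.empty(n, dtype=int)
    ranks[order] = np.arange(n)       # rank 0 = worst, rank n-1 = best
    fitness = 2.0 - pressure + 2.0 * (pressure - 1.0) * ranks / (n - 1)
    return fitness / n

probs = linear_ranking_probabilities([340.0, 1400.0, 844.0, 404.0])
child = blend_crossover([0.0, 0.0], [1.0, 2.0], rng=np.random.default_rng(1))
```

With linear ranking the selection probabilities depend only on the ordering of the objective values, not on their magnitude, which limits the dominance of a few very good individuals in the early generations.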

- Option 1: In Fig. 11 some statistics of the optimisation analysis are displayed along with the optimal layout found by the algorithm. The minimum solution is attained at around generation 200 and is characterised by ‖P‖ F = 340, remarkably lower than the mean value in the first randomly-generated population ‖P‖ F = 1400, which can be regarded as the expected value of a random choice for the layout. Of course, the sensor placement is usually determined by the analyst’s intuition and is thus never completely random, but these results show that an optimisation analysis can be beneficial.

- Option 2: Looking at the solution displayed in Fig. 11b, it is clear that some constraints should be added in order to obtain a more practical solution, since many end nodes of the transducers are placed on the interface between mortar and brick, where it is not possible to glue the instrument bases. To this aim, the space of the points that can be chosen by the algorithm was decreased as in Fig. 12a, by removing the points belonging to the brick edges. The new optimal layout shown in Fig. 12b is characterised by the objective value ‖P‖ F = 404. As intuitively expected, in both analyses the algorithm sets at least one transducer (actually two in both cases) along the principal diagonal, meaning that this is recognised as the most important measurement to be processed.

- Option 3: Another practical constraint that must be allowed for concerns the loading plates. Boundary conditions are often difficult to model properly; in the case of the diagonal compression test, it is very difficult to define the material properties of the plaster spreading the load transferred by the steel plates. In the example, this thin layer was modelled as an elastic interface and its material properties were set as constant (Table 3), but the actual behaviour and the effect on the model response are in fact unknown. In order to decrease the effect of incorrect boundary condition modelling in the inverse estimation, one could avoid considering the nodes close to the steel angles. For this reason, in this analysis the nodes considered are those displayed in Fig. 13a. The solution is characterised by the transducer layout shown in Fig. 13b. Its objective function value is ‖P‖ F = 844, more than twice the minima obtained in Options 1 and 2.

The procedure developed in this work allows for the comparison of setups of different nature. As an example, it is interesting to compare the use of 14 experimental data as the outcome of Option 3 with a full-field displacement monitoring obtained by using Digital Image Correlation (DIC) for the region identified in Fig. 13a as Zone Of Interest (ZOI). By using all nodes on both sides (two cameras), we obtain ‖P‖ F = 41, about 20 times smaller than the solution of Option 3. This clearly shows the advantages of using full-field acquisition systems with respect to more common discrete instruments.
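The comparison between a discrete and a full-field setup can be illustrated with a toy computation. The sketch below assumes that the linking matrix takes the form P = Φ(LΦ)†, where Φ is an orthonormal POD basis, L is a matrix extracting the measured quantities from the global displacement vector and † denotes the pseudo-inverse; this form is an assumption of the example, and the definition given in the main body of the paper should be taken as the reference. The basis, the sizes and the sensor positions are random or arbitrary placeholders.

```python
import numpy as np

def linking_matrix(Phi, L):
    """P such that U is approximated by P @ u_exp, assuming P = Phi (L Phi)^+."""
    return Phi @ np.linalg.pinv(L @ Phi)

def representativeness(Phi, L):
    """Frobenius norm of the linking matrix (lower means more representative)."""
    return float(np.linalg.norm(linking_matrix(Phi, L), ord="fro"))

rng = np.random.default_rng(0)
N, K = 12, 3                                         # toy sizes: DOFs and modes
Phi, _ = np.linalg.qr(rng.standard_normal((N, K)))   # orthonormal columns, N x K

L_sparse = np.eye(N)[[0, 3, 7, 10]]  # four point sensors on arbitrary DOFs
L_full = np.eye(N)                   # every DOF measured (full-field)

norm_sparse = representativeness(Phi, L_sparse)
norm_full = representativeness(Phi, L_full)          # equals sqrt(K) here
```

A transducer measuring the relative displacement between two points would correspond to a row of L with entries +1 and −1 (projected on the instrument axis) instead of a single unit entry. Under the stated assumption, adding measurements can only decrease or leave unchanged the norm, and the full-field value is the lower bound for a given basis.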

As a last remark, it is worth pointing out that the solutions displayed in Figs. 11, 12, and 13 may depend on the GA run, because of the stochastic nature of the optimisation algorithm. Different runs could provide different solutions, and those displayed are not claimed to be the global optimum of the optimisation problem. If one wishes to verify this, it is highly recommended to perform more than one analysis before choosing the final setup, as usually suggested when applying Genetic Algorithms. However, in the authors’ experience, any solution of the sensor optimisation always has to be “adjusted” in some way before being realistically applied, because of unavoidable practical issues (superposition of the instruments, difficulty in the application, etc.). Because of this, a near-optimal solution such as that given by a single GA run can effectively represent a starting point, and the associated ‖P‖ F is the reference for comparison between the adjusted solution and the original computed setup.

5 Conclusions

In this work a novel procedure is proposed for specifying the optimal sensor layout for structural identification problems. Unlike previous works in which the effectiveness of a sensor layout was estimated based on the sensitivity of the response to the unknown parameters, herein this is defined by the representativeness of the experimental data with respect to the global field. By means of FE discretisation and POD model reduction, it is possible to express the global field as a linear combination of the experimental data. It is shown that the Frobenius norm of the linking matrix P is a measure of the error expected in the reconstruction, and it is conjectured that it can also be a qualitative measure of the expected error in the inverse analysis when the problem is globally well-posed.

Two examples relating to a meso-scale model for masonry structures are described. The first example, representing a shear test on a small single-leaf panel, considers the estimation of the stiffness of the interfaces modelling the mortar joints. Different setups are compared based on the parameter ‖P‖ F and the error in the inverse procedure solution σp,max. The analysis confirms the above assumption, and the trend of ‖P‖ F with the setup is similar to that of σp,max. It means that ‖P‖ F can be effectively used as a parameter to estimate the effectiveness of a setup. This is the basis for the optimisation analysis utilising Genetic Algorithms described in the second example which considers a diagonal compression test. It is shown that different constraints can be embedded into the procedure; furthermore, the comparison between the optimal sensor layout and the full-field displacement acquisition by means of DIC confirms the superiority of the latter system, which is thus highly recommended for inverse problems.

The advantages of the procedure arise from the possibility of defining the effectiveness of a sensor layout independently from the inverse problem solution. Furthermore, different possibilities can be readily assessed by comparing the associated ‖P‖ F value, and the optimisation procedure may be enriched with several kinds of constraints in order to make it useful for practical applications. If the representativeness of the data is not adequate, the proposed method can suggest the need for changing the setup, or adding regularisation in the inverse problem formulation. It is here underlined that the methodology deals with the choice of the optimal sensor placement given a test, but does not address the problem of assessing whether the test is sufficient to estimate the parameters. In this respect, it helps to discriminate between the case where the inverse problem is ill-posed due to the intrinsic nature of the test and the case where it is ill-posed due to a poor choice of measurements.

Although the exposition and the examples described in this work relate to elasto-static problems and sensors measuring displacements, the procedure can be easily extended to nonlinear static and dynamic problems and other quantities of interest. Further research aimed at elaborating the relationship between the quality of reconstruction and the error in the inverse estimation is currently ongoing.

Notes

The Moore-Penrose inverse (or pseudo-inverse) of a rectangular matrix \( A\in {\mathbb{R}}^{m\times n} \) is the unique matrix \( {A}^{\dagger}\in {\mathbb{R}}^{n\times m} \) satisfying the following four matrix equations (Stewart and Sun 1990):

$$ A{A}^{\dagger }A=A,\kern1em {A}^{\dagger }A{A}^{\dagger }={A}^{\dagger },\kern1em {\left(A{A}^{\dagger}\right)}^T=A{A}^{\dagger },\kern1em {\left({A}^{\dagger }A\right)}^T={A}^{\dagger }A $$
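The four conditions can be checked numerically, for instance with the pseudo-inverse provided by NumPy (`numpy.linalg.pinv`). The matrix below is a random placeholder used only for the check.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 3))   # rectangular matrix, m = 5, n = 3
A_pinv = np.linalg.pinv(A)        # Moore-Penrose inverse, shape (3, 5)

penrose_conditions = {
    "A A+ A = A": np.allclose(A @ A_pinv @ A, A),
    "A+ A A+ = A+": np.allclose(A_pinv @ A @ A_pinv, A_pinv),
    "(A A+)^T = A A+": np.allclose((A @ A_pinv).T, A @ A_pinv),
    "(A+ A)^T = A+ A": np.allclose((A_pinv @ A).T, A_pinv @ A),
}
```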

References

Antonov IA, Saleev VM (1979) An economic method of computing LP tau-sequence. USSR Comput Math Math Phys 19(1):252–256

Artyukhin EA (1985) Experimental design of measurement of the solution of coefficient-type inverse heat conduction problem. J Eng Phys 48(3):372–376

Balk P (2013) A priori information and admissible complexity of the model of anomalous objects in the solution of inverse problems of gravity. Izv Phys Solid Earth 49(2):165–176

Barbone P, Bamber J (2002) Quantitative elasticity imaging: what can and cannot be inferred from strain images. Phys Med Biol 47(12):2147–2164

Barbone P, Gokhale N (2004) Elastic modulus imaging: on the uniqueness and nonuniqueness of the elastography inverse problem in two dimensions. Inverse Prob 20(1):283–296

Beal JM, Shukla A, Brezhneva OA, Abramson MA (2008) Optimal sensor placement for enhancing sensitivity to change in stiffness for structural health monitoring. Optim Eng 9(2):119–142

Bedon C, Morassi A (2014) Dynamic testing and parameter identification of a base-isolated bridge. Eng Struct 60:85–99

Bruggi M, Mariani S (2013) Optimization of sensor placement to detect damage in flexible plates. Eng Optim 45(6):659–676

Buljak V (2011) Inverse analyses with model reduction. Springer, Berlin

Chisari C (2015) Inverse techniques for model identification of masonry structures. University of Trieste: PhD Thesis

Chisari C, Macorini L, Amadio C, Izzuddin BA (2015) An inverse analysis procedure for material parameter identification of mortar joints in unreinforced masonry. Comput Struct 155:97–105

Cividini A, Maier G, Nappi A (1983) Parameter estimation of a static geotechnical model using a Bayes’ approach. Int J Rock Mech Min Sci Geomech Abstr 20(5):215–226

Corradi M, Borri A, Vignoli A (2003) Experimental study on the determination of strength of masonry walls. Constr Build Mater 17:325–337

Fadale T, Nenarokomov A, Emery A (1995) Two approaches to optimal sensor locations. J Heat Transf 117(2):373–379

Farrar C, Worden K (2007) An introduction to structural health monitoring. Philos Trans R Soc A Math Phys Eng Sci 365(1851):303–315

Ferreira E, Oberai A, Barbone P (2012) Uniqueness of the elastography inverse problem for incompressible nonlinear planar hyperelasticity. Inverse Prob 28(6):1–25

Fontan M, Breysse D, Bos F (2014) A hierarchy of sources of errors influencing the quality of identification of unknown parameters using a meta-heuristic algorithm. Comput Struct 139:1–17

Friswell M (2007) Damage identification using inverse methods. Philos Trans R Soc A Math Phys Eng Sci 365(1851):393–410

Garbowski T, Maier G, Novati G (2012) On calibration of orthotropic elastic–plastic constitutive models for paper foils by biaxial tests and inverse analyses. Struct Multidiscip Optim 46(1):111–128

Gentile C, Saisi A (2007) Ambient vibration testing of historic masonry towers for structural identification and damage assessment. Constr Build Mater 21(6):1311–1321

Goenezen S, Barbone P, Oberai AA (2011) Solution of the nonlinear elasticity imaging inverse problem: the incompressible case. Comput Methods Appl Mech Eng 200:1406–1420

Goldberg DE (1989) Genetic Algorithms in search, optimization and machine learning. s.l.:Addison-Wesley

Herzog R, Riedel I (2015) Sequentially optimal sensor placement in thermoelastic models for real time applications. Optim Eng 16(4):737–766

Hild F, Roux S (2006) Digital image correlation: from displacement measurement to identification of elastic properties—a review. Strain 42(2):69–80

Izzuddin BA (1991) Nonlinear dynamic analysis of framed structures. Imperial College London: PhD Thesis

Kabanikhin S (2008) Definitions and examples of inverse and ill-posed problems. J Inverse Ill-Posed Prob 16(4):317–357

Kammer D, Tinker ML (2004) Optimal placement of triaxial accelerometers for modal vibration tests. Mech Syst Signal Process 18:29–41

Leone G, Persico R, Solimene R (2003) A quadratic model for electromagnetic subsurface prospecting. AEU Int J Electron Commun 57(1):33–46

Li Y, Xiang Z, Zhou M, Cen Z (2008) An integrated parameter identification method combined with sensor placement design. Commun Numer Methods Eng 24:1571–1585

Liang YC et al (2002) Proper orthogonal decomposition and its applications—part I: theory. J Sound Vib 252(3):527–544

Macorini L, Izzuddin B (2011) A non-linear interface element for 3D mesoscale analysis of brick-masonry structures. Int J Numer Methods Eng 85:1584–1608

Mallardo V, Aliabadi M (2013) Optimal sensor placement for structural, damage and impact identification: a review. Struct Durab Health Monit 9(4):287–323

Milosevic J, Gago A, Lopes M, Bento R (2013) Experimental assessment of shear strength parameters on rubble stone masonry specimens. Constr Build Mater 47:1372–1380

Mitchell T (2000) An algorithm for the construction of “D-optimal” experimental designs. Technometrics 42(1):48–54

Ranieri J, Chebira A, Vetterli M (2014) Near-optimal sensor placement for linear inverse problems. IEEE Trans Signal Process 62(5):1135–1146

Stewart G, Sun J-G (1990) Matrix perturbation theory. Academic, New York

Tarantola A (2005) Inverse problem theory and methods for model parameter estimation. s.l.:SIAM

Udwadia FE (1994) Methodology for optimum sensor locations for parameter identification in dynamic systems. J Eng Mech 120:368–390

Xiang Z, Swoboda G, Cen Z (2003) Optimal layout of displacement measurements for parameter identification process in geomechanics. Int J Geomech 3(2):205–216

Yao L, Sethares WA, Kammer DC (1993) Sensor placement for on-orbit modal identification via a genetic algorithm. AIAA J 31:1922–1928

Acknowledgments

The first author is grateful to Ms Deborah Agbedjro from King’s College London for the fruitful discussions about the relationship between the variances of the displacement field and experimental data.

Appendix. Proper Orthogonal Decomposition

Changing the basis for U means that it will be expressed as

$$ U(p)\approx \sum_{i=1}^{K}{a}_i(p)\,{\varphi}_i=\Phi\, a(p) \tag{A.1} $$

with K ≪ N. In (A.1), it is underlined that only the principal component a i is dependent on the material parameter p, while the basis, expressed by the matrix Φ, whose columns are the modal shapes φ i , is fixed and evaluated once and for all. Defining the modal shapes φ i and choosing the number of significant modes K is the core of the procedure.

Let U j denote the j-th (with j = 1,…, M) observation (called snapshot in the POD jargon), i.e. the value assumed by the vector U for a given choice of the material parameter p j. The modal shapes φ i are obtained as the basis minimising the approximation error for the M snapshots. In other words, given a number K of modal shapes, these are obtained minimising the average error:

$$ E=\sum_{j=1}^{M}{\left\Vert {U}_j-\sum_{i=1}^{K}{a}_i\left({p}_j\right){\varphi}_i\right\Vert}^2 \tag{A.2} $$

where ‖ ⋅ ‖ is the Euclidean norm of a vector. Calling “snapshot matrix” Ū the N × M matrix collecting the M snapshots as columns, and defining the M × M modified correlation matrix D = Ū T Ū, it is possible to prove (Buljak 2011) that the modal shape matrix Φ is:

$$ \Phi =\bar{U}\,V\,Z \tag{A.3} $$

where:

- V is the M × K matrix whose columns are the first K eigenvectors of matrix D;
- Z is the diagonal K × K square matrix whose elements z ii are defined as:

$$ {z}_{ii}=\frac{1}{\sqrt{\lambda_i}} \tag{A.4} $$

with λ i being the i-th eigenvalue of matrix D. One of the main features of the POD basis is its orthonormality, which means that Φ T Φ = I. Given a computed displacement field U FE , thanks to the orthonormality of the basis, the reduced representation in terms of amplitudes can be evaluated inverting (A.1) by the expression:

$$ a={\Phi}^T{U}_{FE} \tag{A.5} $$

As regards the choice of the number K of significant modes, it is also possible to prove that the overall error E may be expressed by the relation:

$$ E=\sum_{j=1}^{M}{\left\Vert {U}_j\right\Vert}^2-\sum_{i=1}^{K}{\lambda}_i \tag{A.6} $$

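and so, dividing by \( \sum_{j=1}^{M}{\left\Vert {U}_j\right\Vert}^2 \) and taking the square root, we obtain the root-mean-square error of the snapshots \( {s}_{snapshots} \) in a non-dimensional form:

$$ {s}_{snapshots}=\sqrt{\frac{E}{\sum_{j=1}^{M}{\left\Vert {U}_j\right\Vert}^2}}=\sqrt{1-\frac{\sum_{i=1}^{K}{\lambda}_i}{\sum_{i=1}^{M}{\lambda}_i}} \tag{A.7} $$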

where the equality \( \sum_{j=1}^{M}{\left\Vert {U}_j\right\Vert}^2=\sum_{i=1}^{M}{\lambda}_i \) comes from (A.6) considering that, for the M samples considered, taking all modes means expressing the M displacement fields in just another basis, leading to E = 0.

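For a different set of P samples, the expression for s (A.7) is not applicable, and so the error must be evaluated directly from the residuals of the projection onto the basis:

$$ s=\sqrt{\frac{\sum_{j=1}^{P}{\left\Vert {U}_j-\Phi {\Phi}^T{U}_j\right\Vert}^2}{\sum_{j=1}^{P}{\left\Vert {U}_j\right\Vert}^2}} \tag{A.8} $$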
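The construction of the POD basis by the snapshot method outlined in this Appendix can be sketched as follows. The sketch assumes that the diagonal entries of Z are the reciprocals of the square roots of the eigenvalues of D, which is the choice that makes Φ orthonormal; the function names, the sizes and the random snapshots are hypothetical placeholders and do not reproduce the masonry model.

```python
import numpy as np

def pod_basis(snapshots, K):
    """Return the N x K POD basis Phi and all eigenvalues of D (descending).

    snapshots: N x M matrix whose columns are the M displacement fields.
    K must not exceed the rank of the snapshot matrix.
    """
    U_bar = np.asarray(snapshots, dtype=float)
    D = U_bar.T @ U_bar                       # M x M modified correlation matrix
    lam, vecs = np.linalg.eigh(D)             # eigh returns ascending eigenvalues
    order = np.argsort(lam)[::-1]             # reorder to descending
    lam, vecs = lam[order], vecs[:, order]
    V = vecs[:, :K]                           # first K eigenvectors
    Z = np.diag(1.0 / np.sqrt(lam[:K]))       # assumed form of the scaling matrix
    return U_bar @ V @ Z, lam

def relative_rms_error(Phi, fields):
    """Relative RMS error of projecting the columns of `fields` onto Phi."""
    fields = np.asarray(fields, dtype=float)
    residual = fields - Phi @ (Phi.T @ fields)   # amplitudes are Phi^T U
    return float(np.sqrt((residual ** 2).sum() / (fields ** 2).sum()))

# Synthetic snapshots: three dominant patterns plus a small disturbance
rng = np.random.default_rng(0)
N, M, K = 30, 8, 3
patterns = rng.standard_normal((N, K))
snapshots = patterns @ rng.standard_normal((K, M)) \
    + 1e-3 * rng.standard_normal((N, M))

Phi, lam = pod_basis(snapshots, K)
s_direct = relative_rms_error(Phi, snapshots)     # from the residuals
s_eig = float(np.sqrt(lam[K:].sum() / lam.sum())) # from the discarded eigenvalues
```

For the snapshots used to build the basis the two error estimates coincide up to round-off, while for a different set of samples only the residual-based evaluation in `relative_rms_error` applies.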

Cite this article

Chisari, C., Macorini, L., Amadio, C. et al. Optimal sensor placement for structural parameter identification. Struct Multidisc Optim 55, 647–662 (2017). https://doi.org/10.1007/s00158-016-1531-1

DOI: https://doi.org/10.1007/s00158-016-1531-1