Abstract

We present a locally cubically convergent algorithm for topology optimization of Stokes flows based on a Chebyshev’s iteration globalized with Armijo linesearch. The characteristic features of the method include the low computational complexity of the search direction calculation, evaluation of the objective function and constraints needed in the linesearch procedure as well as their high order derivatives utilized for obtaining higher order rate of convergence. Both finite element and finite volumes discretizations of the algorithm are tested on the standard two-dimensional benchmark problems, in the case of finite elements both on structured and quasi-uniform unstructured meshes of quadrilaterals. The algorithm outperforms Newton’s method in nearly all test cases. Finally, the finite element discretization of the algorithm is tested within a continuation/adaptive mesh refinement framework.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Given a bounded Lipschitz domain \({\Omega } \subset R^{d},d \in \{2,3\}, \mathbf {f} \in [L^{6/5}({\Omega })]^{d}\), u 0∈[H 1/2(∂Ω)]d satisfying the compatibility condition \({\int }_{\partial {\Omega }} \mathbf {u}_{0}\cdot \mathbf {n} = 0\), and a number γ∈(0,1) we consider the minimization problem introduced by Borrvall and Petersson (2003):

where

\(T: [H^{1}({\Omega })]^{d} \rightarrow [H^{1/2}(\partial {\Omega })]^{d}\) is the trace operator, and |⋅| is used to denote the Euclidian norm on \(\mathbb {R}^{d}\), Frobenius norm on \(\mathbb {R}^{d\times d}\), and Lebesgue measure on measurable subsets of \(\mathbb {R}^{d}\). A non-negative coefficient \(\alpha : [0,1] \rightarrow \mathbb {R}_{+}\) featuring in (2) has a physical meaning of inverse permeability of porous material and is typically taken to be \(\alpha (\rho ) = \bar {\alpha } q [(q+1)/(\rho +q) - 1]\), for some large positive parameters \(\bar {\alpha }\) (inverse permeability of the ersatz “solid” material) and q (design parametrization parameter related to the sharpness of the solid/flow interface); see (Borrvall and Petersson 2003).

Due to its simple structure and practical importance the problem has attracted significant attention in the community, see for example (Aage et al. 2008; Othmer 2008; Challis and Guest 2009) for various computational approaches to this and a related and even more practically important problem for Navier–Stokes flows.

A typical computation then consists of solving a sequence of problems (1) for increasing values of q, until a solution (u ∗,ρ ∗) is obtained with ρ ∗ being an approximation to a characteristic function χ ω∗ of some subset \(\omega ^{*} \subset {\Omega }\). In this case, ω ∗ can be viewed as an optimal domain for a Stokes flow; see (Borrvall and Petersson 2003) for more details. The whole procedure relies upon enforcing the boundary conditions through penalties, the idea which may be traced at least to Babuška (1973). As a result, despite the common practice of keeping the penalty parameter \(\bar {\alpha }\) fixed (though some authors also recommend relating it to the inverse of the Reynolds number in the case of Navier–Stokes problems (Kondoh et al. 2012)), this parameter should be increased as one refines the discretization of (1) in order to improve the accuracy of the computed solutions. We refer to Evgrafov (2005) for the discussion of the validity of the limiting process \(\bar {\alpha } \rightarrow +\infty \) in the context of topology optimization.

Recently Carlsson et al. (2009) and Evgrafov (2014) proposed applying Newton’s iteration embedded into a general globalized optimization framework, such as SQP, to a smoothed problem obtained by eliminating the design variables from (1). If such an elimination can be carried out computationally inexpensively and without ruining the sparsity of the problem, which is true for problems without global design regularization, the resulting practical algorithm attains fast local convergence due to the immediate availability of the second order information and its sparsity. Unlike the “1-step” or simultaneous analysis and design based approaches, the method operates in a smaller space, see Evgrafov (2014) for a discussion of advantages and disadvantages of the method. In fact, the complexity of the Newton’s direction finding subproblem in such a method is virtually the same as the cost of computing a steepest descent direction in the traditional nested framework (Borrvall and Petersson 2003) when direct linear solvers are utilized; for iterative solvers drawing the comparison is a slightly more delicate issue owing to the differences in spectral properties of the matrices involved. The method, which is briefly reviewed in Section 2, demonstrates fast convergence, which is almost independent of the utilized PDE discretization or the mesh refinement level.

The main purpose of this note is to further improve the rate of convergence of the method proposed in Evgrafov (2014). Namely, the availability of the higher order derivatives of the objective function/constraints for very little computational effort allows us to apply Chebyshev’s instead of Newton’s algorithm, see Nechepurenko (1954), to the system of the first order necessary optimality conditions for a slight modification of (1) formulated in the state space. Thereby we obtain a method demonstrating third order local convergence, as may be expected (Nechepurenko 2004). One iteration of Chebyshev’s algorithm is computationally relatively inexpensive since it requires solving two linear algebraic systems with the same matrix. Thus if the direct solvers are used, the additional computational effort when compared to the Newton’s algorithm is negligible. Even in the case of Krylov subspace solvers one may reuse the computed preconditioner and utilize deflation techniques to speed up the solution of the second system.

We end this brief introduction by mentioning that there are locally cubically convergent methods, which only require derivatives of the objective function/constraints up to the second order, see for example (Kleinmichel 1968; Bartish 1969). There is also some recent interest and preliminary, but encouraging results on the use of the inexact versions of the methods in Halley class (which includes the Chebyshev’s method), see for example (Steihaug and Suleiman 2013; Gundersen and Steihaug 2012; Deng and Zhang 2004) and references therein.

2 State space Newton’s method for dissipated power minimization of Stokes flows

Instead of (1), we consider a barrier-type problem

for a small barrier parameter μ>0. The first order necessary optimality conditions for (3) is a system of non-linear equations in \((\mathbf {u},p,\rho ,\lambda ) \in \mathcal {U}_{\text {ad}}\times {L^{2}_{0}}({\Omega }) \times \mathcal {D}_{\text {ad}} \times \mathbb {R}\):

Note that at an arbitrary point \((\mathbf {u}, \rho ) \in \mathcal {U}_{\text {ad}} \times \mathcal {D}_{\text {ad}}\) satisfying the inequality \(J_{\mu }(\mathbf {u},\rho ) < \infty \) the expression \(J^{\prime }_{\rho }\), the ρ-derivative of J μ , does not define a bounded linear functional on \(L^{\infty }({\Omega })\). However, when ρ solves (6) for a given (u,λ), which we will denote by writing ρ u,λ , we have the inclusion

owing to the Sobolev embedding \(H^{1}({\Omega }) \subset L^{6}({\Omega })\), which is valid in dimensions 2 and 3. Since both quantities in the left hand side cannot be large simultaneously, we deduce that both μ/(1−ρ) and μ/ρ are in L 3(Ω), and therefore the barrier part of J μ is three times differentiable with respect to ρ.Footnote 1

The Newton’s system for (4)–(7) at a point (u,p,ρ u,λ ,λ) may be formally stated as follows: find \((\mathbf {d}_{\mathbf {u}}, d_{p}, d_{\rho }, d_{\lambda }) \in [{H^{1}_{0}}({\Omega })]^{d} \times {L^{2}_{0}}({\Omega }) \times L^{\infty }({\Omega })\times \mathbb {R}\) such that

where

Unfortunately, the linear operator in the left hand side of (9)–(12) is not a locally Lipschitz function of the parameter ρ, when ρ is considered as an independent variable. Since this may tamper with the local quadratic convergence of the Newton’s algorithm, we eliminate d ρ from this system by taking a Schur complement with respect to \(J^{\prime \prime }_{\rho ,\rho } \geq 8\mu \) and obtain a Newton’s system in a smaller state space \([{H^{1}_{0}}({\Omega })]^{d} \times {L^{2}_{0}}({\Omega }) \times \mathbb {R}\):

where

It is easy to verify that in this space the non-constant elements of the operator in the left hand side of (14)–(16), namely A, \(\rho ^{\prime }_{\mathbf {u}}\), \(\rho ^{\prime }_{\lambda }\) are locally Lipschitz functions of (u,p,λ), see (Evgrafov 2014) for details. Therefore one can expect local quadratic convergence of the Newton’s algorithm with unit steps towards points of local minimum, which satisfy the second order sufficient conditions.

To globalize the convergence of this method we utilize two strategies. Firstly, we perform backtracking linesearch with respect to the augmented Lagrangian function

where ν λ >0, ν p >0 are penalty parameters. Note that the search goes along a curve in the full space, owing to the non-linear dependence of ρ u,λ on its arguments through the equation (6). Secondly, when the Newton’s system is unsolvable (we have not experienced this in our numerical computations) or when the Newton’s direction is not a direction of descent for ψ, we compute a modified Newton’s direction by replacing A in (14) with

If the resulting direction still fails to be a descent direction for ψ, we also increase some or all of the penalty parameters ν λ , ν p .

3 Chebyshev’s method for (3)

Chebyshev’s method for solving a system of equations F(x)=0 is based on the iteration \(x^{k+1} = x^{k} -\{\mathbb {I}+0.5[{\kern 1pt}F^{\prime }(x^{k}){\kern 1pt}]^{-1}F^{\prime \prime }({\kern 1pt}x^{k}{\kern 1pt})[{\kern 1pt}F^{\prime }(x^{k}){\kern 1pt}]^{-1}F(x^{k})\}[F^{\prime }(x^{k})]^{-1}F(x^{k})\). Nechepurenko (2004) has shown that under certain assumptions, including that of local Lipschitz continuity of \(F^{\prime \prime }\), the method attains local third order convergence, which is much faster than the second order local convergence rate of Newton’s iteration. Unlike other methods based on the the second order derivatives of F, such as Halley’s method (method of tangent hyperbolas), see for example (Mertvecova 1953), Chebyshev’s iteration requires solving two systems of equations with the same operator \(F^{\prime }(x)\) in the left hand side.

In the present context we calculate the Chebyshev’s correction \((\mathbf {d}_{\mathbf {u}}^{C}, {d_{p}^{C}}, d_{\lambda }^{C}) \in [{H^{1}_{0}}({\Omega })]^{d} \times {L^{2}_{0}}({\Omega }) \times \mathbb {R}\) to the Newton’s direction (d u ,d p ,d λ ) given by (14)–(16) by solving the following boundary value problem:

where

and

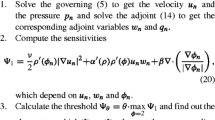

The resulting algorithm is formally summarized as Algorithm 1.

4 Solving the linear systems

Utilizing the exact Chebyshev’s direction (or the exact Newton’s direction) is primarily feasible if a factorization of the left hand side of the system (14)–(15) is available as a result of an application of a direct solver. To keep the number of non-zero elements in the discretized operators to a minimum, instead of enforcing the zero mean pressure constraint \(p, d_{p}, {d_{p}^{C}} \in {L^{2}_{0}}({\Omega })\) we simply prescribe the pressure to be zero at some point in the computational domain. The solution to (14)–(16) is obtained by computing a Schur complement with respect to the first two equations as follows. We first solve the systems

and

which requires only one matrix factorization. Using this information we obtain the Newton’s direction

Furthermore, the Chebyshev’s direction is found by solving the system

after which we similarly to (28) compute

Thus, in the considered case no new matrix factorizations is required and the additional computational work compared to finding the Newton’s direction is truly negligible.

5 Benchmarking Chebyshev’s iteration

In this section we compare the performance of the Chebyshev’s and Newton’s algorithms on a set of two-dimensional benchmarks described in detail in Borrvall and Petersson (2003).

We discretize Algorithm 1 using the approach proposed for the Newton’s method in Evgrafov (2014). Namely, we select a stable discretizationFootnote 2 of the Stokes equations and use it for representing the discrete versions of (u,p) and the search directions (d u ,d p ), \((\mathbf {d}_{\mathbf {u}}^{C}, {d_{p}^{C}})\). The quantity ρ u,λ is not stored, but rather recomputed on the fly by solving the scalar (6) at points determined by the selected discretization of the Newton’s direction finding subproblem (14), (15).

Finite element discretization

In this note we use two popular stable mixed finite element families on quadrilaterals, namely [Q2]2/Q1 (Hood and Taylor 1973) and [Q2-iso-Q1]2/Q1 (Bercovier and Pironneau 1979).Footnote 3 We do not store discretized ρ u,λ but instead recompute it by solving (11) at Gaussian quadrature points whenever needed for evaluating integrals.

Within the Newton’s method framework we typically need to integrate non-polynomial functions (terms involving ρ u,λ ) times polynomials (terms involving u or d u ). For the pure Newton’s direction, the polynomials are at most piecewise bi-quadratic for [Q2-iso-Q1]2/Q1 elements or piecewise bi-quartic for [Q2]2/Q1 elements, which require tensor product Gaussian quadratures of at least degree 2 or 3 over every u-element (thus a reiterated Gaussian quadrature of degree 2 over the macro-element) to compute the integral over the polynomial part exactly. In Evgrafov (2014) we used a rule of thumb of adding another quadrature point along each coordinate axis for evaluating all the non-polynomial integrals, thus leading to tensor product Gaussian quadratures of order 4 for Taylor–Hood elements.

In the case of Chebyshev discretization, integrating the expression (23), which features in the right hand side of (20), times a test function involves some non-polynomial terms including ρ u,λ times piecewise tensor-products of polynomials of the order up to 12 in the case of Taylor–Hood elements or of order 6 in the case of Bercovier–Pironneau elements. To address this we raise the quadrature power to 8 and 5, respectively; however, we report the performance of the Taylor–Hood discretization with the quadrature of order 4 as well. A general comment based on our computational experience is that for coarse discretizations the accuracy of the quadrature plays a much more important role for reducing the KKT residuals to near machine accuracy than for the finer discretizations, which is not surprizing as the volumetric Lebesgue measure of the elements where ρ u,λ deviates significantly from a constant becomes smaller and smaller near optimal solutions for fine discretizations.

We have implemented the FEM-discretized Newton’s and Chebyshev’s algorithms in C++ using deal.II libraries (Bangerth et al. 2007). Umfpack (Davis 2004) is utilized as a linear solver.

Finite volume discretization

We utilize the standard staggered grid approach, see for example Griebel (1997). Since the divergence is exactly zero at the discrete level, we remove the terms involving divergence from the augmented Lagrangian function or the right hand side of the Newton’s system. Additionally, we integrate |curlu|2 instead of |∇u|2 when evaluating J or its derivatives in our implementation. All the terms involving ρ u,λ are evaluated only at the vorticity nodes, see Fig. 1, and then interpolated back to u 1 and u 2 nodes, where momentum conservation is formulated. The algorithm is implemented in Matlab (we used an excellent educational Navier–Stokes code (Seibold 2008) as a basis for the Stokes solver) and utilizes the built-in LDL factorization based on MA27 (Duff 2002) for solving all the linear systems. The full code including setups for the benchmarks is available as Online Resource 1.

For both discretizations

We normally initialize (u,p) to the solution of the pure Stokes problem (ρ=1) in the domain, and put λ 0=100.0. In the case of the “rugby ball” problem, such a starting point satisfies the first order optimality conditions. To escape this critical point we initialize (u,p) to be the solution of Brinkmann’s equations corresponding to ρ=1.0−0.25χ B(0.1) instead, where χ B(0.1) is the characteristic function for the ball of radius 0.1 around the center of Ω.

We begin the iterations by using the modified Newton’s search direction, see (19), and we do this until the linesearch accepts unit steplength and ∥d u ∥[H1(Ω)]d /∥u∥[H1(Ω)]d <10−1. This normally happens within the first few iterations, after which we proceed as outlined in Algorithm 1. We terminate the algorithm when either ∥d u ∥[H1(Ω)]d /∥u∥[H1(Ω)]d <10−10 (success) or the limit of iterations has been exceeded (failure). For the benchmark problems presented in this study we have empirically found out that typically 50 iterations are sufficient for algorithm’s convergence, and therefore we use 50 as the iteration limit throughout our computations. Occasionally the algorithm stagnates–in the sense that a discrete Newton’s directions becomes a direction of ascent for the discretized augmented Lagrangian function with a very small positive directional derivative on the order of the discretization error. This is particularly true on coarse meshes and can often be rectified by increasing the number of quadrature points. The algorithm still makes progress utilizing the modified Newton’s directions, but the fast local rate of convergence is of course lost in this case. Fortunately, this happens at near-optimal points. To address this issue at least partly, we modify the algorithm as follows. In the linesearch routine, if \(|\psi ^{\prime }(0)|<10^{-8}\) and the search direction is based on non-modified Hessian, we accept the unit step. On the other hand, if a modified Newton’s step is taken but the relative improvement in the merit function after linesearch is less than 10−8 we stop the algorithm and declare that it has stagnated. Strictly speaking these criteria should be adjusted with the discretization choice and mesh refinement level. However, in our numerical simulations we observe that stagnation occurs only occasionally, provided that the mesh is “fine enough.”

These stopping conditions are unnecessarily stringent as the final error will be dominated by the errors in the discretization (small parameter h), approximation of the Dirichlet boundary conditions (small parameter \(\bar {\alpha }^{-1}\)), relaxation of the “0–1” affine penalty to α(ρ) (small parameter q −1), or the barrier function (small parameter μ). Nevertheless, owing to the fast local convergence of the algorithm the strictness of the stopping criteria has very little effect on the number of algorithmic iterations.

For other algorithmic parameters we set \(\bar {\alpha } = 2.5\cdot 10^{4}\), q=0.1, μ=10−3. We use a non-monotone heuristic strategy for updating the penalty parameters ν

λ

, ν

p

of the augmented Lagrangian penalty function ψ. At the beginning of every iteration we put \((\nu _{\lambda }, \nu _{p}) =(|\lambda |+\delta , \|p\|_{{L^{2}_{0}}({\Omega })} + \delta )\), where δ=10.0 in our computations. In backtracking/Armijo’s linesearch, we use c

1=10−4 in the sufficient decrease condition, and we successively halve the steplength until this condition is satisfied. The results of the numerical benchmarks are summarized in Table 1 and the first two block rows of Table 2; the snapshots of some of the optimal designs are shown in the top row in Fig. 2. Whether the algorithm terminates successfully, , or

, or  , it converges towards the optimal solutions with the same topology as the ones reported in (Borrvall and Petersson 2003; Challis and Guest 2009). It is undoubtedly possible to fine-tune the algorithmic parameters to further reduce the number of iterations in either Newton’s or Chebyshev’s cases. The purpose of this work is rather to illustrate the effect of the “freely” available high-order search direction on the algorithmic performance. Before proceeding further, we would like to make a few comments about Tables 1 and 2:

, it converges towards the optimal solutions with the same topology as the ones reported in (Borrvall and Petersson 2003; Challis and Guest 2009). It is undoubtedly possible to fine-tune the algorithmic parameters to further reduce the number of iterations in either Newton’s or Chebyshev’s cases. The purpose of this work is rather to illustrate the effect of the “freely” available high-order search direction on the algorithmic performance. Before proceeding further, we would like to make a few comments about Tables 1 and 2:

Snapshots of the final designs computed using Chebyshev’s iteration for some of the benchmark problems. Note: the field has been projected onto the space of piecewise linear polynomials for visualization purposes. In the top two rows, from left to right: “diffuser”, “pipe bend”, “rugby (γ=0.8)”, “dbl. pipe (short)”, and “dbl. pipe (long)”. Top row: designs computed on a quasi-uniform unstructured mesh #3; middle row: designs computed as a result of the adaptive mesh refinement/continuation strategy #2; bottom row: zoom into the leading edge of the “rugby (γ=0.8)” design including the mesh lines for the quasi-uniform and adaptive meshes

Mesh size:

-

For the test case “double pipe (long)” the number of elements along the x axis should be multiplied by a factor 1.5;

-

Quasi-uniform unstructured meshes of quadrilaterals have been generated using Gmsh (Geuzaine and Remacle 2009). Mesh #1, #2, and #3 have approximately 10K, 40K, and 160K quadrilateral elements (×1.5 in the case of “double pipe (long)” problem), which corresponds to the number of elements used in the structured grids;

-

The mesh resolutions taken from Borrvall and Petersson (2003) are measured in u-elements, and therefore should be divided by 2 to get to the resolution in terms of [Q2-iso-Q1]2/Q1 macro-elements, see footnote 3;

-

The mesh resolutions taken from Challis and Guest (2009) are rounded to the nearest hundred, see the cited paper for details.

Timings:

-

In order to get “architecture-independent” results we report the total running time of the algorithm relative to one solution of the Stokes problem, even excluding the finite element assembly time for the latter in the case of FEM implementation. Note that this metric is especially unfair to the FVM discretization as the number of non-zeros in the discretized Jacobian of the Newton’s system is much larger than that for the pure Stokes or Brinkmann system due to the presence of the terms in the momentum equations, which explicitly couple u 1 and u 2. Also note that we were not able to obtain very reliable timings of our algorithm on the multi-user server, on which we performed our computations, and some outliers could be easily spotted in Table 1;

-

For the results taken from Borrvall and Petersson (2003), we simply “guess” the relative timing to be the number of algorithmic iterations. This is a very optimistic estimate as it is based on the assumption that all calculations other than solving the Stokes/Brinkmann system take zero time;

-

For the results taken from (Challis and Guest 2009), we estimate the time relative to the solution of the Stokes problem on the full computational domain based on the data reported in the cited paper. This is done because the level set algorithm utilized in the cited paper needs to solve the flow equations only on a smaller flow domain, and explains the fact that the estimated timing is much smaller than the number of algorithmic iterations. Note that the timing is still proportional to the number of algorithmic iterations.

Objective function values:

-

It is extremely important to keep in mind that the calculations are based on a numerical discretization of Algorithm 1 and not on applying a mathematical programming algorithm to a discretized version of (1). That is, we utilize “optimize, then discretize” rather than “discretize, then optimize” approach. Therefore, the fact that the algorithm terminates successfully with ∥d u ∥[H1(Ω)]d <δ does not imply that the resulting point (u,ρ u,λ ) is an O(δ)-optimal for the discretized problem (1). The computed solutions are only as accurate, as the utilized discretization scheme!

-

One can directly compare the objective function values reported in Borrvall and Petersson (2003) and the ones found by the present algorithm, even though we report the power dissipation J augmented with the positive barrier function;

-

One cannot directly compare the objective function values reported in Challis and Guest (2009) and the ones found by the present algorithm, as we underimpose the no-slip Dirichlet boundary conditions in the present case. This is not unique to our algorithm for solving the topology optimization problem but rather a major weakness of the homogenization based topology optimization approach to fluid mechanics inherent in formulation (1).

In most of the cases Chebyshev’s iteration outperforms the Newton’s iteration with respect to both the number of required iterations and the total running time. The effect seems to be more prominent for the examples where the optimal configurations contain long straight channels, such as the “pipe bend” and “two pipes” test cases, see Fig. 3, bottom. In these cases the linesearch accepts unit Newton’s steps long before the fast decrease of the residuals occurs. On the other hand, for the “diffuser” and “rugby” test cases the Newton’s algorithm behaves extremely well with quadratic convergence occurring almost immediately after the algorithm switches from the initial modified Newton’s to pure Newton’s directions, see Fig. 3, top. This makes it very difficult to outperform such a process.

Convergence of the residuals for the state space Newton/Chebyshev methods. Top: diffuser problem; bottom: pipe bend problem. In both cases, FEM discretization with 8-point quadratures on an unstructured Mesh #3 with approximately 160 K quadrilaterals is used. In the second case, faster-than-Newton local convergence of Chebyshev’s iteration is particularly prominent

Another general statement is that the discretization errors stemming from the interpolation of certain non-linear quantities between the staggered grids in the FVM discretization negatively affects the rate of convergence of Newton’s and to an even larger degree the Chebyshev’s iteration. Nevertheless, benchmarks in Table 1 demonstrate that utilizing Chebyshev’s correction is beneficial within this framework as well.

Note that the level set method utilized in Challis and Guest (2009) does not require continuation with respect to the parameters \((\bar {\alpha }, q, \mu )\), which to a degree compensates for the large number of algorithmic iterations that it requires, which also seems to grow with mesh refinement.

6 Continuation and adaptive mesh refinement

We now apply the fast locally convergent algorithm developed in the previous sections in the framework of adaptive mesh refinement and parameter continuation. For simplicity, we use a residual-based error estimator for (4), (5) (note that (6) is preserved at the discrete level), see for example (Verfürth 1989). Thus for every element T in the mesh we compute the a posteriori error indicator

where [∇u⋅n] e is the jump of the normal derivative of u across the edge e in the mesh. A conceptual continuation/adaptive mesh refinement algorithm is thus stated as Algorithm 2. Note that in step 5 of the algorithm we do not need to project the design field ρ, as it is recomputed on the new mesh using (6). Additionally, the interpolation does not preserve the conservation of mass (in the weak sense) constraint divu=0, which is another reason for adding it to the right hand side of Newton’s system and the merit function.

We test Algorithm 2 in the following fashion. In all cases, we start by computing the optimal solution on unstructured grid #1 using Algorithm 1. We then make four adaptive refinement steps (four iterations of the loop in Algorithm 2) by refining 20 % of cells with the largest indicator, and coarsening 3 % of cells with the smallest indicator. Deal II also needs to refine some adjacent cells (we refer to the library documentation for details), and this strategy leads to roughly speaking doubling of the number of cells at every iteration. For parameters \((\bar {\alpha }, q, \mu ^{-1})\) we test the following strategies: #1: \((\bar {\alpha }, q, \mu ^{-1})\) are constant on all meshes; #2: (q,μ −1) are doubled before each refinement, but \(\bar {\alpha }\) is constant; #3: all parameters are doubled before each refinement. We note that in the topology optimization literature typically a strategy analogous to our strategy #2 is utilized, however without adaptive mesh refinement or parameter μ, which is not found in the original problem (1), see for example (Borrvall and Petersson 2003; Aage et al. 2008).

The results of these benchmarks are summarized in Table 2 and some of the optimal designs obtained are shown in Fig. 2, middle and bottom rows. One can see that both Newton’s and Chebyshev’s algorithms perform incredibly well in the strategy #1, needing in most cases only two iterations for reducing the residuals in Algorithm 1 to the level described in the previous section.

For strategy #2, both algorithms also perform reasonably well, although both Newton’s and Chebyshev’s algorithm stagnated on most instances of “pipe bend” test case. Interestingly enough, Chebyshev’s method in some benchmarks required one or two more iterations when compared to Newton’s algorithm. Even ignoring the advantages of adaptive mesh refinement where most of the iterations are performed on coarse meshes and therefore are very computationally inexpensive, 12+5+5+7+4=33 iterations needed by Chebyshev’s algorithm to solve “rugby ball” problem to a very high precision can be compared with 384 iterations reported in (Aage et al. 2008) for this benchmark problem and a similar continuation procedure based on a first order algorithm and much less strict stopping criteria, resulting in a more than 10-fold speedup.

Strategy #3 is the most challenging one, with both methods struggling to reduce the residual to the requested tolerance on most problem instances. Additionally, increasing \(\bar {\alpha }\) results in larger residuals in the “solid” regions of the domain, which are then refined in accordance with our refinement strategy. This results in meshes, which are much more quasi-uniform when compared with strategies #1 and #2.

When one takes into account the fact that most of the computations are done on low resolution meshes, the savings are quite significant: for example on a multi-user Linux machine with 4×6 core Intel Xeon 2.67 GHz CPUs and 256Gb of memory, one “pipe bend” problem run using Newton’s (Chebyshev’s) method on the unstructured meshes #1, #2, and #3 take respectively 566 (453), 3 330 (2 860), and 29 100 (25 200) seconds.Footnote 4 On the same hardware, adaptive strategies #1, #2, and #3 utilizing Newon’s (Chebyshev’s) iteration take respectively 1 920 (1 740), 3 520 (3 360), and 11 500 (11 500) seconds.

We end this brief discussion by stating that in our opinion a much more thorough analytical/numerical study, providing recommendations for updating many parameters involved in topology optimization problems such as (1) within the adaptive mesh refinement context, would be beneficial for further advancing the application of topology optimization to real-life problems.

7 Conclusions

We have presented, to the best of our knowledge, the first locally cubically convergent method for topology optimization. On quasi-uniform meshes the method demonstrated savings of 8 % to 17 % of iterations when compared with already fast Newton’s algorithm, or 60 % to 90 % when compared with approaches based on the first order algorithms, even disregarding differences in stopping criteria.

The local cubic convergence is attained at the cost of solving one additional linear system only differing in its right hand side per algorithmic iteration when compared with the Newton’s iteration. When direct solvers are used, the increase in the computational cost is negligible. If iterative solvers are utilized, one should use inexact versions of Newton’s or higher order methods, but at least in the case of Chebyshev’s iteration one can reuse the constructed preconditioners and utilize deflation techniques to speed up the solution of the second system.

Both Newton’s and Chebyshev’s iterations have been tested within the framework of parameter continuation and adaptive mesh refinement, where neither of the methods has decisively outperformed the other. Nevertheless we hope that the ideas utilized in deriving a higher order method for this simple benchmark problem are useful in other contexts pertinent to structural and multidisciplinary optimization problems. Additionally, Tables 1 and 2 could be used for benchmarking other optimization algorithms applied to (1).

Notes

In dimension 2, the inclusion in (8) can be further strengthened and thus higher order derivatives may be obtained without assuming any further regularity of u. Alternatively, since the zeroth order term α(ρ)u in (4) is a priori in [L 2(Ω)]d one may appeal to the standard regularity results for the Stokes problem, see for example (Kellogg and Osborn 1976; Amrouche and Girault 1991), to assert that u∈[H s(Ω)]d, 1<s≤2, under the appropriate additional assumptions on f, u 0, and Ω, and in this way further strengthen the inclusion (8) using the Sobolev inequality.

In Evgrafov (2014) we have verified that the state space Newton’s algorithm performs virtually independently of the selected discretization/mesh refinement.

This discretization has been used in the original paper of Borrvall and Petersson (2003). Note that we count the number of elements differently: in (Borrvall and Petersson 2003) the number of u-elements is reported, whereas we list the number of macroelements. As a result the number of degrees of freedom in [Q2]2/Q1 and [Q2-iso-Q1]2/Q1 elements is the same on the same discretization level. In other words our 100×100 discretization [Q2-iso-Q1]2/Q1 corresponds to 200×200 u-elements.

Note the lack of numerical scalability owing to the utilization of the direct linear solver.

References

Aage N, Poulsen TH, Gersborg-Hansen A, Sigmund O (2008) Topology optimization of large scale Stokes flow problems. Struct Multidiscip Optim 35(2):175–180

Amrouche C, Girault V (1991) On the existence and regularity of the solution of Stokes problem in arbitrary dimension. Proc Jpn Acad, Series A, Math Sci 67(5):171–175

Babuška I (1973) The finite element method with penalty. Math Comput 27(122):221–228

Bangerth W, Hartmann R, Kanschat G (2007) deal.II - a general purpose object oriented finite element library. ACM Trans Math Softw 33(4):24/1–24/27

Bartish MJ (1969) On some iterative methods of solving functional equations. Sibirskij matematiceskij zurnal 10(3):488–493

Bercovier M, Pironneau O (1979) Error estimates for finite element method solution of the Stokes problem in the primitive variables. Numer Math 33(2):211–224

Borrvall T, Petersson J (2003) Topology optimization of fluids in Stokes flow. Internat J Numer Methods Fluids 41(1):77– 107

Carlsson J, Sandberg M, Szepessy A (2009) Symplectic Pontryagin approximations for optimal design. ESAIM: Math Model and Numer Anal 43(1):3–32

Challis VJ, Guest JK (2009) Level-set topology optimization of fluids in Stokes flow. Int J Numer Methods Eng 79:1284–1308

Davis TA (2004) Algorithm 832: UMFPACK, an unsymmetric-pattern multifrontal method. ACM Trans Math Softw 30(2):196–199

Deng N, Zhang H (2004) Theoretical efficiency of a new inexact method of tangent hyperbolas. Optim Meth and Softw 19(3–4):247–265

Duff IS (2002) MA57 a new code for the solution of sparse symmetric definite and indefinite systems. Technical Report RAL-TR-2002-024. Rutherford Appleton Laboratory

Evgrafov A (2005) The limits of porous materials in the topology optimization of Stokes flows. Appl Math Optim 52(3):263–277

Evgrafov A (2014) State space Newtons method for topology optimization. Comput Methods Appl Mech Eng 278:272–290

Geuzaine C, Remacle J-F (2009) Gmsh A 3-d finite element mesh generator with built-in pre-and post-processing facilities. Int J Numer Methods Eng 79(11):1309–1331

Griebel M, Dornseifer T, Neunhoeffer T (1997) Numerical simulation in fluid dynamics: a practical introduction. SIAM 3

Gundersen G, Steihaug T (2012) On diagonally structured problems in unconstrained optimization using an inexact super Halley method. J Comput Appl Math 236(15):3685–3695

Hood P, Taylor C (1973) Numerical solution of the Navier–Stokes equations using the finite element technique. Comput Fluids 1:1–28

Kellogg RB, Osborn JE (1976) A regularity result for the Stokes problem in a convex polygon. J Funct Anal 21(4):397–431

Kleinmichel H (1968) Stetige Analoga und Iterationsverfahren für nichtlineare Gleichungen in BANACHräumen. Math Nachr 37(5-6):313–343

Kondoh T, Matsumori T, Kawamoto A (2012) Drag minimization and lift maximization in laminar flows via topology optimization employing simple objective function expressions based on body force integration. Struct Multidiscip Optim 45(5):693–701

Mertvecova MA (1953) Analogue of the process of tangent hyperbolas for general functional equations. Dokl Akad Nauk SSSR 88:611–614

Nechepurenko MI (1954) On Chebyshev’s method for functional equations. Uspekhi Mat Nauk 9(2):163–170

Nechepurenko MI (2004) Refinement of convergence conditions for the Chebyshev method. Sib Zh Vychisl Mat 7(3):249–260

Othmer C (2008) A continuous adjoint formulation for the computation of topological and surface sensitivities of ducted flows. Internat J Numer Methods Fluids 58(8)

Seiboldm B (2008) A compact and fast Matlab code solving the incompressible Navier–Stokes equations on rectangular domains. http://math.mit.edu/cse/codes/mit18086_navierstokes.pdf

Steihaug T, Suleiman S (2013) Rate of convergence of higher order methods. Appl Numer Math 67:230–242

Verfürth R (1989) A posteriori error estimators for the Stokes equations. Numer Math 55(3):309–325

Acknowledgments

The author is grateful to Martin Berggren for pointing out the reference (Carlsson et al. 2009) to us.

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Evgrafov, A. On Chebyshev’s method for topology optimization of Stokes flows. Struct Multidisc Optim 51, 801–811 (2015). https://doi.org/10.1007/s00158-014-1176-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-014-1176-x