Abstract

Assistive Device Art (ADA) derives from the integration of Assistive Technology and Art, involving the mediation of sensorimotor functions and perception from both psychophysical methods and conceptual mechanics of sensory embodiment. This paper describes the concept of ADA and its origins by observing the phenomena that surround the aesthetics of prosthesis-related art. It also analyzes one case study, the Echolocation Headphones, relating its provenience and performance to this new conceptual and psychophysical approach of tool design. This ADA tool is designed to aid human echolocation. It facilitates the experience of sonic vision, as a way of reflecting and learning about the construct of our spatial perception. The Echolocation Headphones are a pair of opaque goggles which disable the participant’s vision. This device emits a focused sound beam which activates the space with directional acoustic reflection, giving the user the ability to navigate and perceive space through audition. The directional properties of parametric sound provide the participant a focal echo, similar to the focal point of vision. This study analyzes the effectiveness of this wearable sensory extension for aiding auditory spatial location in three experiments: optimal sound type and distance for object location, perceptual resolution by just noticeable difference, and goal-directed spatial navigation for open pathway detection, all conducted at the Virtual Reality Lab of the University of Tsukuba, Japan. The Echolocation Headphones have been designed for a diverse participant base. They have the potential both to aid auditory spatial perception for the visually impaired and to train sighted individuals in gaining human echolocation abilities. Furthermore, this Assistive Device artwork prompts participants to contemplate the plasticity of their sensorimotor architecture.

1 Assistive Device Art

This paper brings forward the concept of Assistive Device Art (ADA) as coined by the author. It is a definition for the emerging cultural phenomenon that integrates Assistive Technology and Art. These works of art involve the mediation of sensorimotor functions and perception, as they relate to psychophysical methods and conceptual mechanics of sensory embodiment. Psychophysics is the methodological quantification of sensory awareness in the sciences, while the theoretical aspects of embodiment have a more exploratory nature; they seek to investigate perception in an open-ended way. The philosophical view of embodied cognition depends on having sensorimotor capacities embedded in a biological, psychological, and cultural context (Rosch et al. 1991). The importance of this concept is that it defines all experience as not merely an informational exchange between environment and the mind, but rather an interactive transformation. This interaction between the sensorimotor functions of an organism and its environment is what mutually creates an experience (Thompson 2010).

1.1 Perception spectacles

Assistive Device Art sits between this transformational crossroads of sensory reactions in the form of tools for rediscovering the environment and mediating the plasticity of human experience. This art form interfaces electromechanically and biologically with a participant. It is functional and assistive, and its purpose is, furthermore, a means for engaging the plasticity of their perceptual constructs. Assistive Technology (AT) is an umbrella term that encompasses rehabilitation and prosthetic devices, such as systems which replace or support missing or impaired body parts. This terminology implies the demand for a shared experience, as the scope of restoration drives it to normalcy, rather than the pure pursuit of sensorimotor expansion. Regardless of its medium, technology is inherently assistive. However, AT refers explicitly to systems designed for aiding disabilities. Organisms and their biological mechanisms are a product of the world in which they live. All bodies have evolved as extensions of the sensory interactions with our environment. In our quest as human organisms, the need to reach, manipulate, feel, and hold manifested as a hand. Hutto (2013) defines the hand as “an organ of cognition” and not as subordinate to the central nervous system. It acts as the brain’s counterpart in a “bi-directional interplay between manual and brain activity”.

The prosthesis is an answer to conserve the universal standard of the human body, as well as the impulse to extend beyond our sensorimotor capacities. The device as separate or as a part of us is mechanized as an extension of our procedural tool which is the agency that activates the boundaries of our experience. This intentionality transposed through a machine or device belongs to the body with a semi-transparent relation. In this context, transparency is the ease of bodily incorporation, the adoption of a tool that through interaction becomes a seamless part of the user. Ihde (1979) conceptualizes the meaning of transparency as it relates to the quality of fusion between a human and machine. Interface transparency is the level of integration between the agent’s sensorimotor cortex and its tool.

ADA tools serve the participant as introspective portals to reflect on this process of incorporation. These cognitive prosthetic devices become ever more incorporated with practice, more transparent, enhancing our ability to re-wire our perception. The transformation of our awareness does not only happen by an additive and reductive process of incorporation, but also by the accumulative experience gained through the shift these devices/filters pose on the participant’s reality. Assistive Device artworks are performances that reveal the plasticity of the mind, while it is actively incorporating, replacing, and enhancing sensorimotor functions. These performances are interactive and experiential, aided by an interface worn between the sensory datum and the architecture of our physiology. Art is about communication and the perception of ideas. These performances transpose specific sensations to convey designed experiential models. As the participant performs for themselves by engaging these tools, they explore a new construct of their mediated environment and the blurring of incorporation between body agency and instrument. ADA centralizes and empowers the urge to transcend bodily limitations—both from necessity as Assistive Technology and as a means to playfully engage perceptual expansion. The desire to escape the body is what activates our state as evolving beings impelled by the intentionality of our experience.

1.2 Origins of ADA

The term “Device Art” is a concept within Media Art which encapsulates art, science, and technology with interaction as a medium for playful exploration. Originating in Japan in 2004 with the purpose of founding a new movement for expressive science and technology, it created a framework for producing, exhibiting, distributing, and theorizing this new type of media art (Iwata 2004). This model takes into consideration the digital age reproduction methods which make possible the commercial attainability of artworks to a broader audience. As a result, this movement expanded the art experience from galleries and museums and returned to the traditional Japanese view of art as being part of everyday life (Kusahara 2010). This consumable art framework is common in Japanese art, as it frequently exists between the lines of function, entertainment, and product.

This art movement is part of the encompassing phenomenon of Interactive Art, which is concerned with many of the same guidelines as Device Art, but envelops a greater scope of work. Interaction in art involves participants rather than passive spectators, where their participation completes the work itself. The concept of activation of artworks by an audience has been part of the discussion of art movements since the Futurists’ Participatory Theater and further explored by others, such as Dada and the Fluxus Happenings. These artists were concerned with bringing the artworks closer to “life” (Dinkla 1996). Interactive Art usually takes the form of an immersive environment, mainly installations, where the audience activates the work by becoming part of it. The ubiquitous nature of computing media boosted this movement, making Interactive Art almost synonymous with its contemporary technology.

Device Art, albeit interactive, technologically driven, and aimed at bringing art back to daily life, has some crucial differences from Interactive Art. Device Art challenges the value model that surrounds the art market by embracing a commercial mass production approach to the value of art. Artworks of this form embody utility through their encapsulation as a device, which is more closely related to a product. The device is mobile or detachable from a specific space or participant; it only requires interaction. Device Art frees itself from the need for an exhibition space; it is available to the public with the intent of providing playful entertainment (Kusahara 2010).

1.3 Cultural aesthetics: products of desire

Assistive Device Art stems from the merging of AT and Device Art, and it is a form of understanding and expanding human abilities. This concept is closely related to the concurrent movements of Cyborg Art and Body Hacking, which include medical and ancient traditions of body modification for aesthetic, interactive, and assistive purposes. They explore the incorporation of contemporary technology such as the implantation of antennas and sensor–transducer devices. For instance, in 2013, Anthony Antonellis performed the artwork Net Art Implant, where he implanted an RFID chip in his left hand. This chip loads re-writable Web media content once scanned by a cell phone, typically a 10-frame-favicon animation (Antonellis 2013). Cybernetics pioneer Dr. Kevin Warwick (2002) conducted neuro-surgical experiments where he implanted the UtahArray/BrainGate into the median nerve of his arm, linking his nervous system directly to a computer with the purpose of advancing Assistive Technology. Works within the Cyborg and Body Hacking movements often include, if not almost require, the perforation or modification of the body to incorporate technology within it.

Assistive Device Art is non-invasive or semi-invasive. It becomes incorporated into our physiology while remaining a separate, yet adopted interactive device. The appeal behind these wearable prosthetic artworks is that users are not compromising or permanently modifying their body; instead, they are temporarily augmenting their experience. Cyborgs, people who use ADA, and those who practice meditation are all engaging neuroplasticity. These engagements fall under various levels of bodily embeddedness, but they all show a willingness to interface with their environments in a new type of transactional event.

The prosthesis is becoming an object of desire, propelled by the appeal of human enhancement. While not only providing a degree of “normalcy” to a disabled participant, it also enables a kind of superhuman ability. Pullin (2009) describes eyeglasses as a type of AT that has become a fashion accessory in his book, “Design Meets Disability”. Glasses are an example of AT that have pierced through the veil of disability by becoming a decorative statement and an object prone to irrationally positive attribution. Somehow, what seems to be a sign of mild visual impairment is a symbol of sophistication. ADA strives to empower people by popularizing concepts of AT and making them desirable. Art is a powerful tool for molding attitudes and proliferating channels of visibility by communicating aesthetic style and behavior. Through the means of playful interaction, phenomenological psychophysics, biomedical robotics, and sensory substitution, ADA is made to appeal aesthetically, become desirable, and useful inclusively to everyone.

In the world of consumer electronics, there are well-defined aesthetics that drive product appeal. ADA often uses the aesthetics of product design, resonating with consumer culture in a way similar to Speculative Design. Dunne and Raby (2014) brought forward this concept of Speculative Design as a means of exploring and questioning ideas, speculating rather than solving problems through the design of everyday things, a way to imagine possible futures. The critical aspect of this concept lies in the imagination and possibility that a model represents, rather than its actual technical implementation. Houde and Hill (1997) defined a method for prototyping where each prototype would be designed to fulfill three categories for full integration: “look-and-feel,” “role,” and “implementation”. In their design process, each prototype would lie somewhere between these three categories and slowly become integrated as each new iteration borrowed from the previous one. The “look-and-feel” prototype actualizes an idea by only concerning the sensory experience of using an artifact (Houde and Hill 1997). This type of prototype has no technical implementation; it just looks and feels like a real product. It is closely related to “vaporware,” which is the term used in the product design industry for a product that is merely representational. Vaporware is announced to the public as a product, but is not available for purchase. Similarly, in vaporware, the aesthetic design and role are fully implemented, but technical functionality is absent. Finished products of Speculative Design, vaporware, and “look-and-feel” prototypes rely on aesthetics to express an idea. This approach to design resonates with the traditional view of the object in art, where it is devoid of practical function. Its utility has been stripped, and its form reincorporated as part of a concept in the context of art.
ADA takes into account the same aesthetic design principles that surround these products of design. However, depending on their purpose, most of these artworks are often entirely integrated prototypes, including their wholly functional technical implementation. Works in this realm are concerned with the experience of incorporation, rather than merely hinting at the idea.

Prototype, artwork, vaporware, venture capital product, and off-the-shelf are ambiguous terms in the cross-fire of defining an idea when it becomes public through the media. Consumers and journalists identify and publicize these artworks as real products on their own, driven by their desire for new technological advancements. Often, however, Assistive Device artworks are intentionally designed to become viral internet products with the purpose of provoking public reactions that measure the consumer desire for body extensions and technological upgrades to our bodies. This “viralization” validates what these artworks represent: the human transcendental desire to augment and enhance their embodiment.

1.4 Assistive Device artworks: the prosthetic phenomena

Auger and Loizeau (2002) designed an Audio Tooth Implant artwork which consists of a prototype and a conceptual functional model. Their prototype is a transparent resin tooth with a microchip cast within it, floating in the middle. Conceptually, this device has a Bluetooth connection that syncs with personal phones and other peripheral devices. It uses a vibrating motor to transduce audio signals directly to the jawbone, providing bone conduction hearing. This implant was created with the premise that it would be used voluntarily to augment natural human faculties. The intended purpose of this artwork was to engage the public in a dialog about in-body technology and its impact on society (Schwartzman 2011). This implant device does not function, but it is skillfully executed as a “look and feel” prototype. Because of the artists’ careful product design consideration and their intriguing concept, which lay on the fringes of possible technology, this artwork became a viral internet product. Time Magazine selected this artwork as part of their 2002 “Best Inventions” (Schwartzman 2011). In the public eye, this artwork became regarded as a hoax, as the media framed it out of context from its conceptual provenience, and confused it with a consumer product. However, this meant that their work fulfilled its purpose as a catalyst for public dialog about in-body technology, and more importantly, it expressed its eager acceptance.

Another work that resonates with this productized aesthetic is Beta Tank’s Eye-Candy-Can (2007). Through their art, they were searching for consumer attention, a way of validating the desire for sensory-mixing curiosity. This artwork is also a “look-and-feel” prototype that blurred the lines of product, artwork, and assistive technology. They drew inspiration from Paul Bach-Y-Rita’s Brain Port, an AT device that could substitute a blind person’s sight through the tactile stimulation of the tongue. This device includes a pair of glasses equipped with a camera connected to a tongue display unit. This tongue display unit consists of a stamp-sized array of electrodes, a matrix of electric pixels, sitting on the user’s tongue. This display reproduces the camera’s image by transposing the picture’s light and dark information to high or low electric currents (Bach-Y-Rita 1998). Beta Tank used this idea and fabricated a “look-and-feel” prototype, which consisted of a lollipop fitted with what appear to be electrodes and a USB attachment as its handle. They also created a company that would supposedly bring this technology to the general consumer market as an entertainment device that delivered visuo-electric flavors (Beta Tank 2007). However, these electrode pops were once again not functional; instead, they conceptually framed the use of AT as a new form of entertainment.

Not all artworks that could be considered ADA have to be desirable products for the consumer market. Most of these artworks are capable of becoming real products, but their primary objective is to provide an experience. They serve as a platform to expand our sensorimotor capacities as a form of feeling and understanding our transcendental embodiment. One of the earliest artworks that could be an example of AT is Sterlac’s (1980) Third Arm, a robotic third limb attached to his right arm. It has actuators and sensors that incorporate the prosthesis into his bodily awareness and agency (Sterlac 1980). This prosthetic artwork does not restore human ability; it expands on the participant’s sensorimotor functions transferring superhuman skills by allowing them to gain control of a third arm. This artwork questions the boundaries of human–computer integration, appropriating Assistive Technology for art as a means of expression beyond medical or bodily restoration. This work is not concerned with the optimal and efficient function of a third hand; it physically expresses the question of this concept’s utility and fulfilling its fantasy.

Another work that expresses this transcendence of embodiment is Professor Iwata’s (2000) Floating Eye. This artwork includes a pair of wearable goggles that display the real-time, wide-angle image received from a floating blimp tethered above the participant (Iwata 2000). This device substitutes the wearer’s perception from their usual scope to a broader, outer-body visual perspective, where the participant can perceive their entire body as part of their newly acquired visual angle. This experience has no specific assistive capabilities regarding restoring human functions, but it widens the perspective of the participant beyond their body’s architecture. It becomes a perceptual prosthesis that extends human awareness.

Some psychophysical experimental devices that are not art have the potential to be considered ADA. Take, for example, the work of Lundborg and his group (1999), “Hearing as Substitution for Sensation: A New Principle for Artificial Sensibility,” where they investigate how sonic perception can inform the somatosensory cortex about a physical object based on the acoustic deflection of a surface. Their system consists of contact microphones applied to the fingers which provide audible cues translating tactile to acoustic information by sensory substitution (Lundborg et al. 1999). While the presentation of this work is not in the context of art, its idea of playing with our sensory awareness resonates with the essence of ADA’s purpose of exploratory cross-modal introspection.

2 Designing an ADA

The Sensory Pathways for the Plastic Mind is a series of ADA works that explores consumer product aesthetics while delivering new sensory experiences (Chacin 2013). These wearable extensions aim to activate alternative perceptual pathways as they mimic, borrow from, and may be useful as Assistive Technology. This series extends AT to the masses as a means to expand perceptual awareness and the malleability of our perceptual architecture. The works are created by appropriating the functionality of everyday devices and re-inventing their interface to be experienced by cross-modal methods. This series includes Echolocation Headphones as well as works such as ScentRhythm and the Play-A-Grill. The ScentRhythm is a watch that maps scents to the body’s circadian cycle. This device provides synthetic chemical signals that may induce time-related sensations, such as small doses of caffeine, paired with the scent of coffee to awaken the user. This work is a fully functional integrated prototype. Play-A-Grill is an MP3 player that plays music through the wearer’s teeth using bone conduction, similar to the way cochlear implants transduce sound bypassing the eardrum (Chacin 2013). Play-A-Grill is not just a “look and feel” prototype; its functionality has been implemented and tested, unlike Auger and Loizeau’s Audio Tooth Implant. This series exhibits functional AT that is desirable to the public. The combination of product design aesthetics and the promise of a novel cognitive experience has made some of the works in this series part of viral Internet media. In this way, they have also become validated as a product of consumer desire.

2.1 The Echolocation Headphones

The Echolocation Headphones are a pair of goggles that aid human echolocation, namely the location of objects and the navigation of space through audition. The interface is designed to substitute the participant’s vision with the reception of a focused sound beam. This directional signal serves as a scanning probe that reflects the sonic signature of a target object. Humans are not capable of emitting a parametric echo, but this quality of sound gives the participant an auditory focus, similar to the focal point of vision.

Assistive Device artworks are created with an interdisciplinary approach that considers functionality with practical applications, as well as fulfilling an aesthetic, experiential, and awareness driven purpose. While these strategies are not mutually exclusive, there is a delicate balance for achieving a satisfactory evaluation method, where one approach is not more important than the other. For instance, in the design and concept formulation of the Echolocation Headphones, the technical functionality of this device is crucial to achieving its purpose as a perceptual introspection tool. In this specific case, the Echolocation Headphones first need to aid in human echolocation to provide the experiential awareness of spatial perception. While AT is designed to specifically aid and, perhaps, restore functions, an Assistive Device artwork is concerned with the playful means by which an experience forms perceptual awareness. Assistive Technology aims to develop more efficient systems by evaluating and comparing devices to previously existing products, whereas Assistive Device artworks are less concerned with seamless utility or ability restoration. Instead, these artworks are for a diverse participant base, designed with an experiential basis by which all participants can expand their perception regardless of their need for echolocation as a means of daily navigation.

2.2 Seeing with sound

The perception of space is a visuospatial skill that includes navigation, depth, distance, mental imagery, and construction. These functions occur in the parietal cortex at the highest level of visual cognitive processes (Brain Center 2008). The parietal lobe integrates sensory information including tasks such as the manipulation of objects (Blakemore and Frith 2005). The somatosensory cortex and the visual cortex’s dorsal stream are both parts of the parietal lobe. The dorsal stream handles the “where” and “how” of visuospatial processing, such as vision for action, while the ventral stream handles the “what” of visual perception (Goodale and Milner 1992; Mishkin 1982).

Audition also informs visuospatial processes through the sound’s sensed time of arrival, which is processed by the parietal cortex. Manipulating the time at which audio reaches each ear can artificially generate realistic spatial effects. The 3D3A Lab at Princeton University (2010), led by Professor Edgar, studies the fundamental aspects of spatial hearing in humans. They have developed a technique for sound playback that results in the realistic rendering of three-dimensional output, making it possible to simulate the sound of a flying insect circling one’s head with laptop speakers and without headphones. Their research homes in on the few cues by which humans perceive the provenience of sound. One cue is the difference in arrival time that occurs when audio reaches one ear before the other. The second cue is the amplitude or volume differential of the sound arriving at the two ears (Wood 2010).
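The two cues described above can be illustrated with a minimal sketch. It assumes a simple far-field path-difference model in which the arrival-time difference equals (d / c) · sin(azimuth); the ear spacing of 0.18 m, the speed of sound of 343 m/s, and the function names are illustrative assumptions and are not taken from the 3D3A Lab's work.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: dry air at about 20 degrees Celsius
EAR_SPACING_M = 0.18         # assumed: rough adult ear-to-ear distance


def interaural_time_difference(azimuth_deg: float) -> float:
    """Arrival-time difference in seconds between the two ears.

    0 degrees is straight ahead; 90 degrees is directly to one side.
    Simple path-difference model: ITD = (d / c) * sin(azimuth).
    """
    return (EAR_SPACING_M / SPEED_OF_SOUND_M_S) * math.sin(math.radians(azimuth_deg))


def level_difference_db(amplitude_near: float, amplitude_far: float) -> float:
    """Interaural level difference in decibels from two linear amplitudes."""
    return 20.0 * math.log10(amplitude_near / amplitude_far)


if __name__ == "__main__":
    for angle in (0, 30, 90):
        itd_us = interaural_time_difference(angle) * 1e6
        print(f"azimuth {angle:>2} deg -> time difference of about {itd_us:.0f} microseconds")
```

Under these assumptions the time difference is zero for a source straight ahead and grows to roughly half a millisecond for a source directly to one side, which gives a sense of how small the intervals involved are.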

Human echolocation uses the same principles as 3D-audio recreations, accounting for time intervals and volume differentials. Echolocation is a means for navigation, object location, and even material differentiation. Mammals such as bats and dolphins have highly sophisticated hearing systems that allow them to differentiate the shape, size, and material of their targets. Their auditory systems are specialized based on their different target needs. Dolphins tend to use broadband, short-duration, narrow beams that are tolerant to the Doppler effect, while bats use more extended echolocation signals that seek the detection of the Doppler shift (Whitlow 1997). In humans, echolocation is a learned process, and while some humans depend on this skill for navigation, target location, and material differentiation, there are no organs in the human auditory system specialized for echolocation. Instead, our brain is adaptable, and sensory substitution can achieve this technique.
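As a rough illustration of the time intervals involved in echolocation, the sketch below relates the distance of a reflecting surface to the round-trip delay of its echo. The speed of sound of 343 m/s and the function names are assumptions made for illustration only; the relation itself is simply distance = speed × delay / 2.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def echo_delay_s(distance_m: float) -> float:
    """Round-trip time for a sound to reach a surface and return."""
    return 2.0 * distance_m / SPEED_OF_SOUND_M_S


def distance_from_delay_m(delay_s: float) -> float:
    """Distance to the reflecting surface implied by a round-trip delay."""
    return SPEED_OF_SOUND_M_S * delay_s / 2.0


if __name__ == "__main__":
    for distance in (0.5, 1.0, 5.0):
        delay_ms = echo_delay_s(distance) * 1000.0
        print(f"surface at {distance} m -> echo after about {delay_ms:.1f} ms")
```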

The World Access for the Blind is an organization that helps individuals echolocate; their motto is “Our Vision is Sound” (2010). Daniel Kish is a blind, self-taught echolocation expert who has been performing this skill since he was a year old. He is the president of this organization and describes himself as the “Real Batman” (Kish 2011). Kish is a master of echolocation; he interprets the return of his mouth clicks, gaining a rich understanding of his spatial surroundings. For him and some other congenitally blind individuals, the early adoption of echolocation techniques seems to develop naturally. Some studies show that blind individuals demonstrate exceptional auditory spatial processing. These results suggest that sensory substitution is taking place, and the functions of the occipital lobe are appropriated for audio processing stemming from their deprived visual inputs (Collignon et al. 2008).

Kish’s (2011) dissemination of his echolocation technique has helped hundreds of blind people to regain “freedom,” as he describes it. Those who have learned to exercise mouth-clicking echolocation can navigate space, ride bikes and skateboards, and ice skate. They may also gain the ability to locate buildings hundreds of yards away with a single loud clap. Their clicking is a language that asks the environment “Where are you?” with mouth sounds, cane tappings, and card clips on bicycle wheels (2010). These clicks return imprints of their physical encounters with their environment as if taking a sonic mold of space.

The skill of echolocation is a learned behavior that sometimes occurs naturally in congenitally blind individuals. It seems to be a survival mechanism by sensory substitution, where areas of the brain re-wire processing power from one absent signal to another. Some blind individuals, for example the pupils of Daniel Kish, have learned by instruction to process the auditory feedback as a sonic mold of their environment. Since human echolocation skills do not always develop naturally, it is possible that sighted individuals can also achieve this ability when presented with the ideal circumstances. In this case, sighted individuals may need to restrict their vision to allow the auditory feedback to be processed by the occipital lobe, similar to the perceptual conditions of a blind individual.

2.3 The Echolocation Headphones device

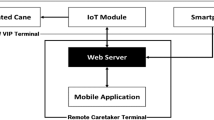

The main component of the Echolocation Headphones (Fig. 1) is a parametric speaker that emits a focused acoustic beam. An audible sound wave has a longer wavelength than an ultrasonic wave and spreads almost omnidirectionally. The parametric sound is generated from ultrasonic carrier waves, which are shorter and of higher frequency, above 20 kHz. When two intermittent ultrasonic carrier waves collide in the air, they demodulate, resulting in a focused audible beam. This signal becomes perceivable in the audible spectrum while retaining the directional properties of ultrasonic sound. For example, one ultrasonic wave is at 40 kHz, and the other is at 40 kHz plus the frequency of the added audible sound wave. As shown in Fig. 2, the red wave is inaudible to the user, and the blue wave returns from the wall as an audible acoustic signal. It is possible to calculate and affect the spatial behavior of sound by accounting for the time intervals between sonic signatures (Haberken 2012). The original version of parametric speakers is the Audio Spotlight by Holosonics, invented in the late 1990s by Joseph Pompei (1997).
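The demodulation principle can be sketched numerically. The example below is a simplified illustration, not a model of the TriState hardware: it amplitude-modulates a 40 kHz carrier with a 1 kHz tone and uses plain squaring as a crude stand-in for the nonlinear behavior of air. The sample rate, the 1 kHz tone, the modulation depth, and all function names are assumptions chosen for illustration.

```python
import math

SAMPLE_RATE_HZ = 400_000   # assumed: high enough to represent the 40 kHz carrier
CARRIER_HZ = 40_000        # ultrasonic carrier frequency mentioned in the text
AUDIO_HZ = 1_000           # assumed: example audible tone
MOD_DEPTH = 0.5            # assumed: modulation depth
NUM_SAMPLES = 4_000        # 10 ms, a whole number of cycles of every component


def am_signal() -> list[float]:
    """Ultrasonic carrier whose amplitude follows the audible tone."""
    samples = []
    for n in range(NUM_SAMPLES):
        t = n / SAMPLE_RATE_HZ
        envelope = 1.0 + MOD_DEPTH * math.cos(2 * math.pi * AUDIO_HZ * t)
        samples.append(envelope * math.cos(2 * math.pi * CARRIER_HZ * t))
    return samples


def tone_amplitude(samples: list[float], freq_hz: float) -> float:
    """Amplitude of one frequency component (single-bin discrete Fourier transform)."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * n / SAMPLE_RATE_HZ)
             for n, x in enumerate(samples))
    im = sum(x * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE_HZ)
             for n, x in enumerate(samples))
    return 2.0 * math.hypot(re, im) / len(samples)


if __name__ == "__main__":
    emitted = am_signal()
    demodulated = [x * x for x in emitted]  # crude quadratic nonlinearity (assumption)
    print("1 kHz content as emitted:    ", round(tone_amplitude(emitted, AUDIO_HZ), 6))
    print("1 kHz content after squaring:", round(tone_amplitude(demodulated, AUDIO_HZ), 6))
```

In this toy model the emitted signal contains energy only around 39, 40, and 41 kHz, so nothing is audible until the nonlinearity is applied, at which point a 1 kHz component appears with an amplitude equal to the modulation depth. Real parametric arrays involve more detailed acoustics than squaring captures.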

The speaker used in the Echolocation Headphones device is the Japanese TriState (2014a) single directional speaker. It has 50 ultrasonic oscillators with a transmission pressure of 116 dB (MIN) and a continuous allowable voltage at 40 kHz of 10 Vrms. The transducer model is AT 40-10PB 3 made by Nippon Ceramic Co, and they have a maximum rating of 20 Vrms (TriState 2014a). These transducers are mounted on a printed circuit board (PCB) and connected to the driver unit. The driver includes a PIC microcontroller and an LMC 662 operational amplifier. The operating voltage for this circuit is 12 V, with an average current of approximately 300 mA and a peak of about 600 mA. It has a mono 1/8” headphone input compatible with any sound equipment. The sound output of the TriState parametric speaker lies in the audio frequency band between 400 Hz and 5 kHz. The sonic range of the speaker is detectable from tens of meters (TriState 2014b).

The priority for this interface is to place the sense of hearing at the center of this tool design. The parametric speaker was attached to the single square lens of a pair of welding goggles. Because this device performs sensory substitution from vision to audition, appropriating the functional design of glasses is a natural choice that prevents sight and replaces it with a central audible signal. Furthermore, the signal for an echo usually originates from the frontal direction of navigation, such as from the mouth of a human echolocation expert. Welding goggles are designed to protect the wearer from harsh light, which makes them attractive for the acoustic spatial training that sighted subjects need. In informal settings, the goggles purposefully do not block sight entirely, so that sighted participants can get accustomed to spatial mapping from vision to audition. However, when used for experimental purposes, the protective glass is covered with black tape to block the wearer’s vision completely. Giving the participants the freedom to explore their surroundings is a crucial interaction for experiencing audio-based navigation and object location.

This device is battery operated with a lithium cell of 12 V and 1 A, kept in a plastic enclosure with an on/off switch attached to the back of the strap of the welding goggles. The battery enclosure and the driving circuit are connected to an MP3 player or another sound outputting device, which could be a computer or a Bluetooth sound module. The electronics of the driving circuit are exposed; however, there is another version of these headphones that includes two rounded, concave plates placed behind the ears of the wearer, to which the driving circuit is attached and behind which it is concealed (Fig. 3). This auricle extension had a sonic amplification effect and helped the collection of sound. An echolocation expert mentioned that the plates prevented him from perceiving the sound from all directions, neglecting the reflections behind him, and therefore, this feature was removed.

These headphones provide the wearer with focal audition as they scan the surrounding environment. The differences in sound reflection inform a more detailed spatial image. This scanning method is crucial for perceiving the lateral topography of space. The constantly changing direction of the sound beam gives the user information about their spatial surroundings by letting them hear the contrast between acoustic signatures. The MP3 player connected to the Echolocation Headphones is loaded with a track of continuous clicks and another of white noise. Experimentation and prior art identified white noise as the most effective sound for this echolocation purpose. When demonstrating his echolocation method to an audience, Kish (2011) created a “shh” noise to show the distance of a lunch tray from his face. White noise works well because it provides an extended range of tonality at random, which eases the detection of changes in level and speed. Beyond its applicability to navigation, this tool is also useful for differentiating material properties such as dampening.

3 Performance evaluation of echolocation headphones

The Echolocation Headphones were evaluated quantitatively through three experiments: optimal sound and distance for object location, perceptual resolution by just noticeable difference, and goal-directed spatial navigation for open pathway detection. The device was also evaluated qualitatively in art exhibitions, where participants were able to use it freely and were afterwards asked about their experience.

3.1 Experiment 1: Object location: optimal sound type and distance

This first experiment aimed to find the optimal sound type and distance for novice echolocation in detecting an object while using the Echolocation Headphones. The participants were asked whether they perceived an object placed at six distance points from 0 to 3 m in segments of 0.5 m. The three sound types compared were slow clicks, fast clicks, and white noise. Each distance point was questioned twice for each sound type, giving 12 trials per sound type and 36 trials per participant. This experiment took place at the VR Lab of Empowerment Informatics at the University of Tsukuba, Japan, on July 10, 12, and 31, 2016.

3.1.1 Participants

There were 12 participants between the ages of 22 and 31 with a median age of 23 years old, of which two were female and ten were male. All the participants reported having normal or corrected-to-normal vision, and none reported previous experience with practicing echolocation.

3.1.2 Apparatus

This experiment took place in a room, without any sound dampening, of approximately 4.3 m × 3.5 m × 3.6 m. Participants were asked to sit on a chair with a height of 50 cm in the middle of the room and wear the Echolocation Headphones device (Fig. 4), which covers their eyes, thus disabling their visual input. The object to be detected was a rolling erase board with a height of 120 cm and a width of 60 cm. The sounds were created in real time using a MacBook Air 2.2 Intel Core i7 with an output audio sample rate of 44,100 Hz and a block size of 64 bits, and the software used was Pure Data version 0.43.4-Extended. The computer was connected to the Echolocation Headphones by a 1/8-in. headphone jack.

3.1.3 Audio characteristics

The sonic signature for the noise used was a standard noise function of Pure Data (noise~), generated by producing 44,100 random values per second in the range of −1 to 1, representing membrane positions (Kreidler 2009). White noise is often used in localization tasks, including devices that guide people to fire exits in case of emergency (Picard et al. 2009).
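As a rough illustration of the noise signal described above, the following Python sketch draws 44,100 random values per second in the range −1 to 1. It is not the Pure Data patch used in the experiment; the function name `white_noise`, the `seed` parameter, and the choice of a uniform distribution are assumptions made here for illustration.

```python
import random

SAMPLE_RATE = 44100  # samples per second, as reported for the experiment


def white_noise(duration_s, sample_rate=SAMPLE_RATE, seed=None):
    """Return a list of random samples in [-1, 1] (hypothetical helper).

    A uniform distribution is assumed; each sample stands for one
    loudspeaker membrane position.
    """
    rng = random.Random(seed)
    n_samples = int(round(duration_s * sample_rate))
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


samples = white_noise(1.0, seed=0)  # one second of noise
```

One second of output therefore contains 44,100 values, each independent of its neighbours, which is what gives the signal its broad and random tonal range.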

The sonic signature of the clicks follows some essential features from the samples used by Thaler and Castillo-Serrano (2016) in a study where they compared human echolocation mouth-made clicks to loudspeaker-made clicks. They used a frequency of 4 kHz with a decaying exponential, separated by 750 ms of silence. Both the fast and slow clicks of our experiment modulate a decaying 4-kHz sound wave with a total duration of 200; 250 ms of silence separated the fast clicks and 750 ms separated the slow clicks, the latter similar to the experiment mentioned above.

Figure 5a shows the decay of the 4-kHz sound wave created by an envelope generator using an object that produces sequences of ramps (vline~). The series used to generate the envelope was as follows: 10-0-0.1, 5-0.2-0.3, 2.5-0.4-0.6, 1.25-0.8-1.2, 0-2.4-2.4, and 0-0-(250 or 750 depending on fast or slow click). The first number is the amplitude that the ramp reaches. The second is the time that it takes for that change to occur in milliseconds. The third is the time that it takes to go to the next sequence from the start of the ramp, also in milliseconds, e.g., “5-0.2-0.3” means that the sound will ramp to 5 in 0.2 ms and wait 0.3 ms from the start of the ramp (Holzer 2008). Figure 5b shows the amplitude spectrum of the click, both fast and slow, in decibels.

Fig. 5 a Amplitude modulation graph of the 4-kHz sound wave decay used for emitting both fast click and slow click sound types (taken from our Pure Data patch). b Amplitude spectrum graph in decibels (generated with the “pad.Spectogram.pd” Pure Data patch of Guillot 2016)
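The ramp series can be approximated outside Pure Data with a small piecewise-linear envelope. The sketch below is an illustration only, not the authors' patch: it assumes that each triple is read as (target amplitude, ramp time in ms, delay in ms measured from the start of the click), that the envelope starts at 0, and that the silent gap follows the burst. The names `SEGMENTS`, `envelope`, and `click` are hypothetical, and the amplitude is left unscaled.

```python
import math

SAMPLE_RATE = 44100   # Hz, as reported for the experiment
CARRIER_HZ = 4000.0   # 4-kHz carrier wave

# (target amplitude, ramp time in ms, delay in ms), taken from the series above
SEGMENTS = [
    (10.0, 0.0, 0.1),
    (5.0, 0.2, 0.3),
    (2.5, 0.4, 0.6),
    (1.25, 0.8, 1.2),
    (0.0, 2.4, 2.4),
]


def envelope(t_ms, segments=SEGMENTS):
    """Envelope value at t_ms under the assumed reading of the triples."""
    value = 0.0
    for target, ramp_ms, delay_ms in segments:
        if t_ms < delay_ms:
            break                      # this ramp has not started yet
        if ramp_ms == 0 or t_ms >= delay_ms + ramp_ms:
            value = target             # ramp already finished
        else:
            fraction = (t_ms - delay_ms) / ramp_ms
            value = value + (target - value) * fraction
            break                      # currently inside this ramp
    return value


def click(gap_ms, sample_rate=SAMPLE_RATE):
    """One enveloped 4-kHz burst followed by gap_ms of silence."""
    burst_ms = max(delay + ramp for _, ramp, delay in SEGMENTS)
    n_samples = int(round((burst_ms + gap_ms) * sample_rate / 1000.0))
    out = []
    for n in range(n_samples):
        t_ms = 1000.0 * n / sample_rate
        carrier = math.sin(2.0 * math.pi * CARRIER_HZ * t_ms / 1000.0)
        out.append(envelope(t_ms) * carrier)
    return out


fast_click = click(250.0)  # fast click: 250 ms of silence
slow_click = click(750.0)  # slow click: 750 ms of silence
```

Repeating `fast_click` or `slow_click` end to end would give a continuous click track under these assumptions.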

3.1.4 Procedure

While wearing the Echolocation Headphones, the participants were shown the control stimulus, the sound of the room with no object for each of the three sound types. Once the experiment began, they were presented with one of the sound types selected at random to begin the object location task. The participants were asked to reply “yes” if they sensed an object or “no” if they did not sense it.

The board was moved to a distance point chosen at random and each of these distance points was questioned twice. Once all distance points were completed twice, another sound type was chosen at random and the same object location task was repeated. The participants wore noise canceling headphones with music playing at a high volume, adjusted for their comfort, every time that the experimenter moved the object to another location in order to prevent other sonic cues to inform their perception about the location of the object. The total duration of the experiment was between 30 and 45 min, depending on the number of explanations which the participant required.
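The randomization described in this procedure can be sketched as a trial schedule: sound types are presented in random order, and within each sound type every distance point appears twice in random order. This is a hedged illustration rather than the software used in the study; `build_schedule` is a hypothetical helper, and the six distance values listed are an assumption, since the text gives only the range and the 0.5-m spacing.

```python
import random

SOUND_TYPES = ["slow clicks", "fast clicks", "white noise"]
DISTANCES_M = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0]  # assumed six distance points
REPETITIONS = 2                               # each point is questioned twice


def build_schedule(seed=None):
    """Return a list of (sound type, distance in m) trials for one participant."""
    rng = random.Random(seed)
    sounds = SOUND_TYPES[:]
    rng.shuffle(sounds)                       # random order of sound types
    schedule = []
    for sound in sounds:
        trials = DISTANCES_M * REPETITIONS    # 12 trials per sound type
        rng.shuffle(trials)                   # random order of distance points
        schedule.extend((sound, distance) for distance in trials)
    return schedule


schedule = build_schedule(seed=1)             # 36 trials in total
```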

3.1.5 Evaluation

The performance accuracy of participants was measured by the number of total correct answers divided by the number of trials per distance point.
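The accuracy measure above amounts to a ratio of correct answers to trials at each distance point. The following sketch shows the calculation on placeholder responses that are not experimental data; `accuracy_by_distance` is a hypothetical helper name.

```python
def accuracy_by_distance(responses):
    """Map each distance to correct answers divided by trials.

    responses: iterable of (distance in m, answer was correct) pairs.
    """
    totals = {}
    for distance, correct in responses:
        hits, trials = totals.get(distance, (0, 0))
        totals[distance] = (hits + (1 if correct else 0), trials + 1)
    return {d: hits / trials for d, (hits, trials) in totals.items()}


# Placeholder responses for illustration only
example = [
    (1.0, True), (1.0, True), (1.0, False), (1.0, True),
    (2.0, True), (2.0, False),
]
result = accuracy_by_distance(example)
print(result)  # {1.0: 0.75, 2.0: 0.5}
```

With 12 participants and two trials per distance point, each distance point holds 24 responses per sound type, so 23 correct answers would correspond to roughly 96%.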

In this experiment, the optimal echolocation distance based on the average performance of the 12 participants is between 0 and 1.5 m, with 1 m giving the best results for all sound types (Fig. 6). Although the slow click sound type also reached a perceived accuracy average of 88%, it underperformed the other sound types with an overall average of 64.14%. With the fast click sound type, participants detected the object with an accuracy average of 96% at both 0.5 and 1 m, and with an overall performance accuracy average of 80.14%. The noise sound type outranked all other sound types with an overall performance accuracy average of 85.67%; its best result was 96% at a distance of 1 m, while 0.5 m and 1.5–2 m yielded a performance accuracy average of 92%.

3.2 Experiment 2: Just noticeable difference

The goal of this experiment was to find the perceptual resolution of the Echolocation Headphones by measuring the sensed difference of distance between two planes through a just noticeable difference (JND) standard procedure.

The experiment was conducted for two reference distances, the standard stimuli, of 1 and 2 m. For each reference distance, the object on the right remained in a static position (1 or 2 m) from the participant and the second object was moved back and forth. The moving object (MO) started at either 20 cm less or 20 cm more than the distance of the static object (SO) (e.g., static object at 1 m; moving object at 1.20 m or 80 cm). The starting distance of the MO was selected at random from these starting points, and the MO was moved in increments or decrements of 2 cm. This experiment was conducted at the VR Lab of Empowerment Informatics at the University of Tsukuba, Japan, on April 11, 20, and 30, 2017.

3.2.1 Participants

There were six participants between the ages of 22 and 35 with a median age of 31 years old, of which one was female and five were male. All the participants reported having normal or corrected-to-normal vision. Only three of the participants reported previous experience with practicing echolocation, since they had participated once in a previous experiment wearing the Echolocation Headphones.

3.2.2 Apparatus

This experiment was conducted in a room without any sound dampening of approximately 4.3 m × 3.5 m × 3.6 m. Participants were asked to sit on a chair with a height of 50 cm at one of the long ends of a table measuring 1 m × 3.5 m × 0.76 m located in the middle of the room (Fig. 7).

The sound was generated from a recorded sound file of white noise, played through an MP3 player connected with a short end-to-end headphone jack. The MP3 player was a FastTech mini device with a TransFlash card (miniSD) memory, model DX 48426. The objects were two boards of medium-density fiberboard (MDF) measuring 29.7 cm × 42 cm, in portrait orientation. They included an acrylic stand on the back that allowed them to stand perfectly perpendicular to the table surface.

3.2.3 Procedure

The participants were asked to wear the Echolocation Headphones device, which completely covers their vision. Before the experiment began, the participants, while wearing the device, were shown the control stimuli. These consisted of the MO and SO at the same distance for each reference point of 1 and 2 m, followed by the MO at 20 cm further from and 20 cm closer to each reference point. The experimenter explained the task: participants were to reply “yes” if they noticed a difference between the two objects or “no” if they sensed no difference. They were also asked not to consider their previous answer, since the objects would be moved at random.

For each task, the participant was asked if they detected a difference in distance between the objects, and depending on their answer, the MO would be moved towards the SO or away from it. If the participant replied “Yes”, meaning that they detected a difference, the MO would be adjusted by 2 cm in the direction of the SO, and in the direction away from the SO if they replied “No”. The placement of the MO would change at random between the tasks, picking up from the last answer, alternating between the further starting point and the closer starting point. Once the answers from the participants reached a sequence of five affirmative and negative answers in both directions, the experiment concluded. The total duration of the experiment varied from 30 to 60 min depending on the ability of the participant to sense the difference in distance between the objects.
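The adjustment rule in this procedure can be sketched as a simple staircase on the separation between the two objects. The code below is a minimal illustration under stated assumptions: the separation is tracked as an unsigned value in centimeters for one approach direction, and the stopping criterion is left to the caller because the text does not fully specify it. `next_offset` and `run_staircase` are hypothetical names, and the answer sequence is a placeholder, not participant data.

```python
STEP_CM = 2  # adjustment per answer, as described in the procedure


def next_offset(offset_cm, noticed, step_cm=STEP_CM):
    """Return the next MO-to-SO separation after one answer.

    noticed=True  ("yes"): move the MO towards the SO.
    noticed=False ("no"):  move the MO away from the SO.
    """
    if noticed:
        return max(0, offset_cm - step_cm)
    return offset_cm + step_cm


def run_staircase(start_cm, answers):
    """Apply a sequence of yes/no answers and return every separation visited."""
    offsets = [start_cm]
    for noticed in answers:
        offsets.append(next_offset(offsets[-1], noticed))
    return offsets


track = run_staircase(20, [True, True, True, False, True])
print(track)  # [20, 18, 16, 14, 16, 14]
```

The separation at which the answers start to alternate is the quantity that the evaluation averages across trials.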

3.2.4 Evaluation

The results of the experiment were analyzed by finding the average distance at which the participants sensed the difference between the two objects (MO and SO) (Fig. 8). The final distance of the MO when it was further than the SO was averaged for all trials, and the final distance of the MO when it was closer than the SO was averaged for all trials. These two averages were then combined, returning the final average of perceptual resolution for both constant stimuli of 1 and 2 m. The top standard deviation was taken from the closer MO average, and the bottom was taken from the further MO average, for each of the constant stimuli.
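A minimal sketch of this averaging step follows. It assumes that the two averages are combined by taking their arithmetic mean, which the text does not state explicitly; `perceptual_resolution` is a hypothetical helper, and the input values are placeholders rather than measured data.

```python
from statistics import mean


def perceptual_resolution(further_cm, closer_cm):
    """Combine final separations from the further and closer approaches.

    Returns (combined average, further average, closer average) in cm.
    The combination by arithmetic mean is an assumption.
    """
    further_avg = mean(further_cm)
    closer_avg = mean(closer_cm)
    return (further_avg + closer_avg) / 2, further_avg, closer_avg


# Placeholder final separations in cm, for illustration only
combined, further_avg, closer_avg = perceptual_resolution([10, 12, 8], [2, 4, 6])
```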

In Fig. 8, the average perceived distance resolution for both constant stimuli is around 6 cm from the target distance to the constant stimuli. While there is a slightly more acute resolution for the 2-m constant stimulus, one can observe that the lower standard deviation for both constant stimuli distance points is around 2 cm, which is the shortest distance selected for the experiment as the lowest perceived resolution. As for the top standard deviation, the value for the 1-m constant stimulus is around 13 cm, while that for the 2-m constant stimulus is around 11 cm. It can be concluded that there is a slight gain in acuity when sensing the distance between objects at 2 m compared with 1 m. However, the difference is very small, almost negligible; we therefore conclude that there is a 6-cm perceptual resolution for distances between 80 and 220 cm.

3.3 Experiment 3: Task-oriented path recognition

The aim of this experiment was to measure the ability of participants to find an open path while wearing the Echolocation Headphones. The experiment was set in a hallway where participants walked while scanning their surroundings with the objective to find an open door. There were three doors used in the experiment located at various placements along the way, which could be open or closed in random combinations for each trial of one, two, and three open doors, a total of three trials per participant. This experiment was conducted at the VR Lab of Empowerment Informatics at the University of Tsukuba, Japan in May of 2017.

3.3.1 Participants

There were a total of six participants between the ages of 24 and 35, with a median age of 31 years old, of which three were female and three were male. All participants reported having normal or corrected-to-normal vision. Of the six participants, only three had previous experience with echolocation while wearing the Echolocation Headphones.

3.3.2 Apparatus

The experiment was conducted in a hallway with concrete floors, MDF board walls, and a metal flashing ceiling with exposed fiber insulation bags. The hallway was not fitted with any kind of sound dampening materials, and its measurements were around 10 m × 1.4 m × 3.6 m (Fig. 9). The sound was generated from a recorded sound file of white noise, played through the same FastTech mini MP3 player, model DX 48426, used in the previous JND experiment. The doors to be located were around 1 m in width and 2.1 m in height.

3.3.3 Procedure

The participants were asked to wear the Echolocation Headphones, which completely cover their vision. The participants, while wearing the device, were shown the control stimuli and a sample of the task. They stood in front of a door which was open and were then guided towards the wall where there was no door, to sense the audible difference between an open path and a wall obstruction. They were given the freedom to move from side to side between the open path and the obstruction.

Once they felt comfortable with their ability to determine the difference in stimuli, the experiment began and they received the instructions for the experiment. They were asked to walk through the hallway, slowly scanning their surroundings. They were given two options on how to scan the hallway. One way was to walk sideways, scanning one wall first and then the opposite wall on their way back from the end of the hallway. The second option was to scan the hallway by turning their head from left to right, back and forth, seeking the feedback of each side one step at a time. The participants were given the freedom to slow their walking speed, because walking forward too quickly caused them to miss cues. Once their walking style was determined, they were asked to find the open doors and to point in the direction of each one saying “This is an open door.” Each participant was tested through three trials for each combination of one, two, and three open doors selected at random.

3.3.4 Evaluation

In the Open Path Recognition graph in Fig. 10, the average number of perceived open doors is very close to the ideal number of perceived open doors. This means that the participants were able to assess accurately the difference between a path and an obstacle. The top margin of error is the average number of false doors that were perceived for all trials, while the bottom standard deviation was retrieved from the number of doors that participants failed to recognize for each trial.

Of all participants, only two perceived phantom doors. From observing their behavior, it can be determined that those false positives were due to their scanning of the wall at an angle: the angle produced a decrease in volume from the echoing signal, which they had already associated with an open path. This phenomenon should be addressed, as it dampens the performance of participants wearing the tool, although only a few of them were unable to detect the difference between an open door and an angular reflection of the returning signal. Beyond this anomaly, the overall average performance of the participants was almost perfectly in line with the ideal performance. It can be concluded that the Echolocation Headphones is a helpful tool for aiding navigation in circumstances where audition is required and vision is hindered.

4 Social evaluation: art exhibition interactions and feedback

The Echolocation Headphones has been shown in different kinds of settings, from museum installations to conference demonstrations. It has also been tested by a wide range of participants, who through playful interaction gain a new perspective on their spatial surroundings.

4.1 Novice echolocation behaviors

During most of the exhibition settings, attendants were invited to try the Echolocation Headphones. The device was always paired with an audio player, either a cell phone or an MP3 player. The sound was always white noise, either from an MP3 file or a noise generating application such as SGenerator by ScorpionZZZ’s Lab (2013). These exhibiting opportunities allowed the Echolocation Headphones to be experienced in a less controlled environment than the lab experiments.

Participants were given simple guidelines and a basic explanation of how the device functions. In this informal setting, the behavior of participants was similar and they were usually able to identify distance and spatial resonance within the first minute of wearing the device. They would often scan their hands, walk around, and when finding a wall, they would approach it closely, touch it, and gauge the distance. After wearing the device for a while longer, they became more aware of the sonic signatures of the space around them, navigating without stumbling into objects in their direct line of “sight.” Their navigation improved when they realized that the side-to-side and back-and-forth scanning technique was necessary to gather distance differentials. This is similar to a movement performed by the eyes, known as saccades. Without these movements, a static eye cannot observe, since the fovea is very small. This is the part of the eye that captures detail and allows high-resolution visual information to be perceived (Iwasaki and Inomara 1986). The scanning behavior was helpful for most situations, but given that the field of “sight” from the device is narrower than that of actual sight, there were some collisions. When there were objects in the way of participants that did not reach the direct line of the sound beam, such as a table, which is usually at waist height, they would almost always collide with them.

In one of the art exhibitions, the device was shown alongside three rectangular boards: acrylic with a thickness of 2.5 mm, corrugated cardboard of 3 mm, and acoustic foam with an egg crate formation of 30 mm (Fig. 11). From observation, participants were able to distinguish materials based on their reflection using sound as the only informative stimulus. They were most successful in finding the acoustic foam, naturally, since this material is very porous and specifically manufactured for sound absorption. Participants are able to detect the difference between materials based on the change in sound intensity when echolocation is performed; the intensity of the audible reflection is crucial for gathering the properties of the target in space.

4.2 Participant feedback

The participants’ comments when experiencing the Echolocation Headphones were mostly positive, e.g., “The device was efficient in helping navigate in the space around me without being able to actually see.” and “It indicates when an object is nearby that reflects the sound back to the sensor.” Another participant made an observation pertinent to the theories of sensory substitution and brain plasticity, in which these phenomena arise through learning and practice. This participant stated, “It was interesting to listen to, I think it would take time to be able to use it effectively and learn to recognize the subtle details of the changes in sound”. When participants were asked about the white noise generated by the device, one stated, “It actually becomes comforting after a while, because you know it’s your only way of really telling what’s going on around you”. Another comment was more direct, stating that the sound was “Pretty annoying”. While white noise is very effective for audio location purposes, it can be jarring because of its frequency randomness. However, one participant offered a wonderful analogy from noise to space. He said that “In blindness, one can finally see it all when it rains”. He meant that the noise of the seemingly infinite drops of rain falling to the ground creates a detailed sonic map of the environment.

5 Conclusion

The Echolocation Headphones device is a sensory substitution tool that enhances the human ability to perform echolocation, improving auditory links to visuospatial skills. It is an Assistive Device artwork, designed within the interdisciplinary lines of engineering and art. This device shines a new light on AT and the expansion of its user base. It brings interaction, playfulness, and perceptual awareness to its participants. This concept popularizes and makes desirable technology that could prove useful for the blind community; however, it is designed to be valuable and attainable to everyone.

From an engineering perspective, this device presents a novel application for parametric speakers and a useful solution for spatial navigation through the interpretation of audible information. Utilizing the parametric speaker as an echolocation transducer is a new application, because these speakers are typically used to contain and target sound towards crowds. They usually serve as audio information displays for targeted advertising. This prototype also takes into account a trend within the study of perception, which is considering a multimodal perspective in contrast to the reductionist approach of studying sensory modalities independently. According to Driver and Spence (2000), most textbooks about perception are written by separating each sensory modality and regarding them in isolation. However, the senses receive information about the same objects or events and the brain forms multimodally determined percepts. The Echolocation Headphones have been designed not to focus on the translation of visual feedback directly into sound, but rather as a tool that enhances the multimodal percept of space. This tool re-centers the user’s attention on gathering audible instead of visual information to determine their location. Thus, their spatial perception remains the same; however, it arrives through a different, sonic perspective. The Echolocation Headphones have been evaluated with psychophysical methods to investigate their performance and perceptual cohesion. According to the data gathered through the multiple experiments and exhibition demonstrations, the feasibility of sonic visuospatial location through the Echolocation Headphones as a training device for sighted individuals is positive. Utilizing sound as a means of spatial navigation is not imperative for sighted subjects, but this tool shows that the experience of sensory substitution is possible regardless of skill. This prototype exemplifies the ability and plasticity of the brain’s perceptual pathways to quickly adapt from processing spatial cues from one sense to another.

ADA is about empirical contemplation and a way of reflecting on our perception through physical and sensory extensions. It is a platform for interactive transformation that is the sensorimotor dance of body and environment. AT addresses the necessity of a shared experience, where all bodies have the capability of engaging in the same everyday tasks. In contrast, ADA takes inspiration from atypical physiologies with the aim of creating perceptual models that provide a new shared experience from a modality-mediated perspective. Transposing an experience goes beyond orchestrating sensory data; it relies on understanding the mechanisms of perception from multiple angles. It is an interdisciplinary undertaking where the theoretical models of engineering, psychophysics, design, and philosophy entangle.

Assistive Device Art investigates the limits of a mediated embodiment by reframing Assistive Technology and making it desirable. It presents an avenue for exploring new sensory perspectives and contemplating the transient nature of our condition as human bodies. This art reimagines our future bodies, often camouflaging itself as a consumer product indulging the illusion of escaping our experiential reality. It measures the public’s willingness to engage with Assistive Technology and to achieve human–computer integration to enhance their experience.

References

Antonellis A (ed) (2013) Net art implant. http://www.anthonyantonellis.com/news-post/item/670-net-art-implant. Accessed 12 Sept 2017

Au Whitlow WL (1997) Echolocation in dolphins with a dolphin–bat comparison. Bioacoustics 8:137–162. doi:10.1080/09524622.1997.9753357

Auger J, Loizeau J (2002) Audio tooth implant. In: http://www.auger-loizeau.com/projects/toothimplant. Accessed 12 Aug 2017

Bach-y-Rita P, Kaczmarek KA, Tyler ME, Garcia-Lara J (1998) Form perception with a 49-point electrotactile stimulus array on the tongue. J Rehabilit Res Dev 35:427–430

Beta Tank, Eyal B, Michele G (2007) Eye candy. In: Studio Eyal Burstein. http://www.eyalburstein.com/eye-candy/1n5r92f24hltmysrkuxtiuepd6nokx. Accessed 12 Aug 2017

Blakemore S-J, Frith U (2005) The learning brain: lessons for education. Blackwell Publishing, Malden. ISBN 1-4051-2401-6

Brain Center America (2008) Brain functions: visuospatial skills. http://www.braincenteramerica.com/visuospa.php. Accessed May 2013

Chacin AC (2013) Sensory pathways for the plastic mind. http://aisencaro.com/thesis2.html. Accessed 25 May 2017

Collignon O, Voss P, Lassonde M, Lepore F (2008) Cross-modal plasticity for the spatial processing of sounds in visually deprived subjects. Exp Brain Res 192:343–358. doi:10.1007/s00221-008-1553-z

D3A Lab at Princeton University (2010) http://www.princeton.edu/3D3A. Accessed 20 May 2013

Dinkla S (1996) From interaction to participation: toward the origins of interactive art. In: Hershman-Leeson (ed) Clicking in: hot links to a digital culture. Bay, Seattle, p 279–290

Driver J, Spence C (2000) Multisensory perception: beyond modularity and convergence. Curr Biol 10:R731–R735. doi:10.1016/s0960-9822(00)00740-5

Dunne A, Raby F (2014) Speculative everything: design, fiction, and social dreaming. MIT Press, Cambridge. ISBN: 9780262019842

Goodale MA, Milner A (1992) Separate visual pathways for perception and action. Trends Neurosci 15:20–25. doi:10.1016/0166-2236(92)90344-8

Guillot P (2016) Pad Library. In: Patch storage. http://patchstorage.com/pad-library/. Accessed 10 May 2017

Haberkern R (2012) How directional speakers work. Soundlazer. http://www.soundlazer.com/. Accessed 26 May 2017

Holosonics Research Labs (1997) About the inventor: Dr Joseph Pompei. In: Audio spotlight by Holosonics. https://www.holosonics.com/about-us-1/. Accessed 26 May 2017

Holzer D (2008) The envelope generator. In: Hyde, Adam (ed 2009) Pure data. https://booki.flossmanuals.net/pure-data/audio-tutorials/envelope-generator. Accessed 26 May 2017

Houde S, Hill C (1997) What do prototypes prototype? Handb Hum Comput Interact. doi:10.1016/b978-044481862-1.50082-0

Hutto D, Myin E (2013) A helping hand. Radicalizing enactivism: minds without content. MIT Press, Cambridge. 46. ISBN 9780262018548

Ihde D (1979) Technics and praxis, vol 24. D. Reidel, Dordrecht, pp 3–15. doi:10.1007/978-94-009-9900-8_1

Iwasaki M, Inomara H (1986) Relation between superficial capillaries and foveal structures in the human retina. Investig Ophthalmol Vis Sci 27:1698–1705. http://iovs.arvojournals.org/article.aspx?articleid=2177383.

Iwata H (2000) Floating eye. http://www.iamas.ac.jp/interaction/i01/works/E/hiroo.html. Accessed 25 May 2017

Iwata H (2004) What is Device Art. http://www.deviceart.org/. Accessed 10 April 2017

Kish D (2011) Blind vision. In: PopTech: PopCast http://poptech.org/popcasts/daniel_kish_blind_vision. Accessed 20 May 2013

Kreidler J (2009) 3.3 Subtractive synthesis. In: Programming electronic music in Pd. http://www.pd-tutorial.com/english/ch03s03.html. Accessed 3 Mar 2017

Kusahara M (2010) Wearing media: technology, popular culture, and art in Japanese daily life. In: Niskanen, E (ed) Imaginary Japan: Japanese fantasy in contemporary popular culture. Turku: International Institute for Popular Culture. http://iipc.utu.fi/publications.html. Accessed 25 May 2017

Lundborg G, Rosén B, Lindberg S (1999) Hearing as substitution for sensation: a new principle for artificial sensibility. J Hand Surg 24(2):219–224

Mishkin M, Ungerleider LG (1982) Contribution of striate inputs to the visuospatial functions of parieto-preoccipital cortex in monkeys. Behav Brain Res 6:57–77. doi:10.1016/0166-4328(82)90081-x

Picard DJ, Hulse J, Krajewski J (2009) Integrated fire exit alert system. US Patent 7528700. 5 May 2009

Pullin G (2009) Design meets disability. Massachusetts Institute of Technology Press, Massachusetts

Rosch E, Thompson E, Varela FJ (1991) The embodied mind: cognitive science and human experience. MIT Press, Cambridge, MA

Schwartzman M (2011) See yourself sensing: redefining human perception. Black Dog Publishing, London, pp 98–99. ISBN: 9781907317293

ScorpionZZZ’s Lab (2013) SGenerator (Signal Generator). http://sgenerator.scorpionzzz.com/en/index.html. Accessed 11 May 2017

Stelarc (1980) Third hand. http://stelarc.org/?catID=20265. Accessed 25 May 2017

Thaler L, Castillo-Serrano J (2016) People’s ability to detect objects using click-based echolocation: a direct comparison between mouth-clicks and clicks made by a loudspeaker. PLoS ONE. doi:10.1371/journal.pone.0154868

Thompson E (2010) Chapter 1: The enactive approach. Mind in life: biology, phenomenology, and the sciences of mind. Harvard University Press, Cambridge. ISBN 978-0674057517

TriState (2014) Supplement for frequency modulation(FM) In: TriState. http://www.tristate.ne.jp/parame-2.htm. Accessed 26 May 2017

TriState (2014) World’s first parametric speaker experiment Kit. In: TriState g. http://www.tristate.ne.jp/parame.htm. Accessed 26 May 2017

WAFB (2010) Daniel Kish, Our President. In: World access for the blind. http://www.worldaccessfortheblind.org/node/105. Accessed 20 May 2013

Warwick K (2002) In: Kevin Warwick Biography. http://www.kevinwarwick.com/. Accessed 25 Sept 2017

Wood M (2010) Introduction to 3D audio with professor choueiri. Video format. Princeton University. https://www.princeton.edu/3D3A/. Accessed 10 May 2013

Acknowledgements

The research conducted for this manuscript and prototype were supported by the Empowerment Informatics department at the University of Tsukuba, University of California, Los Angeles, and the National Science Foundation. This research has been made possible by the careful consideration and advisement of Hiroo Iwata, Victoria Vesna, and Hiroaki Yano, and with the editing help of Tyson Urich and Nicholas Spencer.

Cite this article

Chacin, A.C., Iwata, H. & Vesna, V. Assistive Device Art: aiding audio spatial location through the Echolocation Headphones. AI & Soc 33, 583–597 (2018). https://doi.org/10.1007/s00146-017-0766-8

DOI: https://doi.org/10.1007/s00146-017-0766-8