Abstract

Consider L groups of point sources or spike trains, with the lth group represented by \(x_l(t)\). For a function \(g:\mathbb {R}\rightarrow \mathbb {R}\), let \(g_l(t) = g(t/\mu _l)\) denote a point spread function with scale \(\mu _l > 0\), and with \(\mu _1< \cdots < \mu _L\). With \(y(t) = \sum _{l=1}^{L} (g_l \star x_l)(t)\), our goal is to recover the source parameters given samples of y, or given the Fourier samples of y. This problem is a generalization of the usual super-resolution setup wherein \(L = 1\); we call this the multi-kernel unmixing super-resolution problem. Assuming access to Fourier samples of y, we derive an algorithm for this problem for estimating the source parameters of each group, along with precise non-asymptotic guarantees. Our approach involves estimating the group parameters sequentially in the order of increasing scale parameters, i.e., from group 1 to L. In particular, the estimation process at stage \(1 \le l \le L\) involves (i) carefully sampling the tail of the Fourier transform of y, (ii) a deflation step wherein we subtract the contribution of the groups processed thus far from the obtained Fourier samples, and (iii) applying Moitra’s modified Matrix Pencil method on a deconvolved version of the samples in (ii).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

1.1 Background on Super-Resolution

Super-resolution consists of estimating a signal x, given blurred observations obtained after convolution with a point spread function g which is assumed to represent the impulse response of the measurement system, such as for e.g., a microscope in high density single molecule imaging. Mathematically, x is typically modeled as a superposition of K Dirac’s, i.e., a sparse atomic measure of the form

while g is a low pass filter. Denoting

to be the convolution of x and g, one is usually given information about x either as samples of y, or the Fourier samples of y. This problem has a number of important applications arising for instance in geophysics [31], astronomy [49], medical imaging [26] etc. The reader is referred to [13] for a more comprehensive list of applications. Super-resolution can be seen as a highly non-trivial “off the grid” extension of the finite dimensional sparse estimation problem in compressed sensing [22, 24] and statistics [11]. In the new setting, instead of estimating a sparse vector in a finite dimensional space, the goal is to estimate a sparse measure over the real line \(\mathbb R\) endowed with its Borel \(\sigma \)-algebra.

Recently, the problem of super-resolution has received a great deal of interest in the signal processing community, triggered by the seminal work of Candès and Fernandez-Granda [12, 13]. They considered the setup where one is given the first few Fourier coefficients of x, i.e., for a cut-off frequency \(m \in \mathbb {Z}^{+}\), we observe \(f(s) \in \mathbb {C}\) where

Note that this corresponds to taking \(g(t) = 2m\ \text {sinc}(2mt)\) in (1.1), and sampling the Fourier transform of y on the regular grid \(\left\{ {-m,-m+1,\dots ,m}\right\} \).

1.1.1 Total Variation and Atomic Norm-Based Approaches

In [13], the authors consider the noiseless setting and propose solving an optimization problem over the space of measures which involves minimizing the total variation (TV) norm amongst all measures which are consistent with the observations. The resulting minimization problem is an infinite dimensional convex program over Borel measures on \(\mathbb {R}\). It was shown that the dual of this problem can be formulated as a semi-definite program (SDP) in finitely many variables, and thus can be solved in polynomial computational time. Since then, there have been numerous follow-up works such as by Schiebinger et al. [50], Duval and Peyre [18], Denoyelle et al. [17], Bendory et al. [6], Azaïs et al. [3] and many others. For instance, [50] considers the noiseless setting by taking real-valued samples of y with a more general choice of g (such as a Gaussian) and also assumes x to be non-negative. Their proposed approach again involves TV norm minimization with linear constraints. Bendory et al. [6] consider g to be Gaussian or Cauchy, do not place sign assumptions on x, and also analyze TV norm minimization with linear fidelity constraints for estimating x from noiseless samples of y. The approach adopted in [17, 18] is to solve a least-squares-type minimization procedure with a TV norm based penalty term (also referred to as the Beurling LASSO (see for e.g., [3])) for recovering x from samples of y. The approach in [19] considers a natural finite approximation on the grid to the continuous problem, and studies the limiting behaviour as the grid becomes finer; see also [20].

From a statistical view point, Candès and Fernandèz-Granda [13] showed that their approach exactly recovers x in the noiseless case provided \(m \ge 2/\triangle \), where \(\triangle \) denotes the minimum separation between the spike locations. Similar results for other choices of g were shown by Schiebinger et al. [50] (for positive measures and without any minimum separation condition), and by Bendory et al. [6] (for signed measures and with a separation condition). In the noisy setting, the state of affairs is radically different since it is known (see for e.g., [13, Sect. 3.2], [41, Corollary 1.4]) that some separation between the spike locations is indeed necessary for stable recovery. When sufficient separation is assumed and provided the noise level is small enough, then stable recovery of x is possible (see for e.g., [3, 17, 18, 23]).

Recently also, Tang et al. [57, 58] studied approaches based on atomic norm minimization, which can be formulated as a SDP. In [57, Theorem 1.1], the authors considered the signs of the amplitudes of \(u_j\) to be generated randomly, with noiseless samples. It was shown that if \(m \ge 2/\triangle \), and if the Fourier samples are obtained at \(n = \Omega (K \log K \log m)\) indices selected uniformly at random from \(\left\{ {-m,\dots ,m}\right\} \), then x is recovered exactly with high probability. In [58, Thorem 1], the authors considered Gaussian noise in the samples and showed that if \(m \ge 2/\triangle \), then the solution returned by the SDP estimates the vector of original Fourier samples (i.e., \((f(-m),\dots ,f(m))^T\)) at a mean square rate \(O(\sigma ^2 K \frac{\log m}{m})\), with \(\sigma ^2\) denoting variance of noise. Moreover, they also show [58, Theorem 2] that the spike locations and amplitude terms corresponding to the SDP solution are localized around the true values.

From a computational perspective, the aforementioned approaches all admit a finite dimensional dual problem with an infinite number of linear constraints; this is a semi infinite program (SIP) for which there is an extensive literature [27]. For the particular case of non-negative x, Boyd et al. [8] proposed an improved Frank–Wolfe algorithm in the primal. In certain instances, for e.g., with Fourier samples (such as in [12, 13]), this SIP can also be reformulated as a SDP. From a practical point of view, SDP is notoriously slow for moderately large number of variables. The algorithm of [8] is a first order scheme with potential local correction steps, and is practically more viable.

1.1.2 Prony, ESPRIT, MUSIC and Extensions

When one is given the first few Fourier samples of the spike train x (i.e., (1.2)), then there exist other approaches that can be employed. Prony’s method [16] is a classical method that involves finding the roots of a polynomial, whose coefficients form a null vector of a certain Hankel matrix. Prony’s method and its variants have also been recently studied by Potts and Tasche (for e.g., [46, 48]), Plonka and Tasche [44], and others. The matrix pencil (MP) method [29] is another classical approach that involves computing the generalized eigenvalues of a suitable matrix pencil. Both these methods recover x exactly in the absence of noise provided \(m \ge K\) (so \(2K+1\) samples), and are also computationally feasible. Recently, Moitra [41, Theorem 2.8] showed that the MP method is stable in the presence of noise provided the noise level is not too large, and \(m > \frac{1}{\triangle } + 1\). Moitra also showed [41, Corollary 1.4] that such a dependence is necessary, in the sense that if \(m < (1-\epsilon )/\triangle \), then the noise level would have to be \(O(2^{-\epsilon K})\) to be able to stably recover x. Very similar in spirit to the MP method for sum of exponential estimation are the Prony-like methods ESPRIT and MUSIC which can also be used for spike train estimation using the same Fourier domain measurement trick as in [41]. These methods can be interpreted as model reduction methods based on low-rank approximation of Hankel matrices [40]. The ESPRIT method was studied in [45] based on previous resultsFootnote 1 from [5]. Another line of research is about relationships with AAK theory of Adamjan, Arov, and Krein as developed in [44] (see also [43]). The MUSIC method was also studied in great detail in [37, Theorem 4]. The modified Matrix Pencil method, on the one hand, is often considered as less computationally expensive than MUSIC and, on the other hand, is amenable to an error analysis quite similar to the one in [45].

1.2 Super-Resolution with Multiple Kernels

In this paper, we consider a generalization of the standard super-resolution problem by assuming that the measurement process now involves a superposition of convolutions of several spike trains with different point spread functions. This problem, which we call the “multi-kernel unmixing super-resolution problem” appears naturally in many practical applications such as single molecule spectroscopy [30], spike sorting in neuron activity analysis [9, 33], DNA sequencing [34, 36], spike hunting in galaxy spectra [10, 38] etc.

A problem of particular interest at the National Physical Laboratory, the UK’s national metrology institute, is isotope identification [39, 54] which is of paramount importance in nuclear security. Hand-held radio-isotope identifiers are known to suffer from poor performance [54] and new and precise algorithms have to be devised for this problem. While it is legitimately expected for Radio Isotope Identifier Devices to be accurate across all radioactive isotopes, the US Department of Homeland Security requires all future identification systems to be able to meet a minimum identification standard set forth in ANSI N42.34. Effective identification from low resolution information is known to be reliably achievable by trained spectroscopists whereas automated identification using standard algorithms sometimes fails up to three fourth of the time [55]. For instance, the spectrum of \(^{232}Th\) is plotted in Fig. 1, which is extracted from [55, p.9]. Isotope identification involves the estimation of a set of peak locations in the gamma spectrum where the signal is blurred by convolution with kernels of different window sizes. Mixtures of different spectra can be particularly difficult to analyse using traditional methods and a need for precise unmixing algorithms in such generalised super-resolution problems may play an important role in future applications such as reliable isotope identification.

\(^{232}Th\) spectrum showing escape peaks and annihilation peaks [55, p.9]

Another application of interest is DNA sequencing in the vein of [34]. Sequencing is usually performed using some of the variants of the enzymatic method invented by Frederick Sanger [1]. Sequencing is based on enzymatic reactions, electrophoresis, and some detection technique. Electrophoresis is a technique used to separate the DNA sub-fragments produced as the output of four specific chemical reactions, as described in more detail in [34]. DNA fragments are negatively charged in solution. An efficient color-coding strategy has been developed to permit sizing of all four kinds of DNA sub-fragments by electrophoresis in a single lane of a slab gel or in a capillary. In each of the four chemical reactions, the primers are labeled by one of four different fluorescent dyes. The dyes are then excited by a laser based con-focal fluorescent detection system, in a region within the slab gel or capillary. In that process, fluorescence intensities are emitted in four wavelength bands as shown with different color codes in Fig. 2 below. However, quoting [7], “because the electrophoretic process often fails to separate peaks adequately, some form of deconvolution filter must be applied to the data to resolve overlapping events. This process is complicated by the variability of peak shapes, meaning that conventional deconvolution often fails.” The methods developed in the present paper aim at achieving accurate deconvolution with different peak shapes and might therefore be useful for practical deconvolution problems in DNA sequencing.

Capture of the result of a sequencing using SnapGene_Viewer [52]

We will now provide the mathematical formulation of the problem and describe the main idea of our approach along with our contributions, and discuss related work for this problem.

1.3 Problem Formulation

Say we have L groups of point sources where \(\left\{ {t_{l,i}}\right\} _{i=1}^{K} \subset [0,1)\) and \((u_{l,i})_{i=1}^{K}\) (with \(u_{l,i} \in \mathbb {C}\)) denote the locations and (complex-valued) amplitudes respectively of the sources in the lth group. Our signal of interest is defined as

Let \(g\in L_1(\mathbb {R})\) be a positive definite functionFootnote 2 with its Fourier transform \(\bar{g}(s) = \int _{\mathbb R} g(t) \exp \left( \iota 2\pi st\right) dt\) for \(s \in \mathbb {R}\). Consider L distinct kernels \(g_l(t) = g(t/\mu _l)\), \(l=1,\ldots ,L\) where \(0< \mu _1< \cdots < \mu _L\). Let \(y(t) = \sum _{l=1}^L (g_l \star x_l)(t) = \sum _{l=1}^L \sum _{j=1}^K u_{l,j} \ g_l(t-t_{l,j})\) where \(\star \) denotes the convolution operator. Let f denote the Fourier transform of y, i.e., \(f(s) = \int _{\mathbb R} y(t) \exp \left( \iota 2\pi st\right) dt\). Denoting the Fourier transform of \(g_l\) by \(\bar{g}_l\), we get

Assuming black box access to the complex valued function f, our aim is to recover estimates of \(\left\{ {t_{l,i}}\right\} _{i=1}^{K}\) and \((u_{l,i})_{i=1}^{K}\) for each \(l = 1,\dots ,L\) from few possibly noisy samples \({\tilde{f}}(s) = f(s) + w(s)\) of f. Here, \(w(s) \in \mathbb {C}\) denotes measurement noise at location s. We remark that the choice of having K summands for each group is only for ease of exposition, one can more generally consider \(K_l \le K\) summands for each \(l = 1,\dots ,L\).

Gaussian Kernels For ease of exposition, we will from now on consider g to be a Gaussian, i.e., \(g(t) = \exp (-t^2/2)\) so that \(g_l(t) = \exp (-t^2/(2 \mu _l^2)\), \(l=1,\ldots ,L\). It is well known that g is positive definite [60, Theorem 6.10], moreover, \(\bar{g}_l(s) = \sqrt{2\pi } \mu _l \exp (-2\pi ^2 s^2 \mu _l^2)\). We emphasize that our restriction to Gaussian kernels is only to minimize the amount of tedious computations in the proof. However, our proof technique can likely be extended to handle more general positive definite g possessing a strictly positive Fourier transform. Examples of such functions are: (a) (Laplace kernel) \(g(t) = \exp (-|{t}|)\), and (b) (Cauchy kernel) \(g(t) = \frac{1}{1+t^2}\).

1.4 Main Idea of Our Work: Fourier Tail Sampling

To explain the main idea, let us consider the noiseless setting \(w(s) = 0\). Our main algorithmic idea stems from observing (1.3), wherein we notice that for s sufficiently large, f(s) is equal to \(f_1(s)\) plus a perturbation term arising from the tails of \(f_2(s),\dots ,f_{L}(s)\). Thus, \(f(s)/\bar{g}_1(s)\) is equal to \(\sum _{j=1}^K u_{1,j} \exp \left( \iota 2\pi st_{1,j}\right) \) (which is a weighted sum of complex exponentials) plus a perturbation term. We control this perturbation by choosing s to be sufficiently large, and recover estimates \({\widehat{t}}_{1,j}, {\widehat{u}}_{1,j}\) (up to a permutation \(\phi _1\)) via the Modified Matrix Pencil (MMP) method of Moitra [41] (outlined as Algorithm 1). Given these, we form the estimate \({\widehat{f}}_1(s)\) to \(f_1(s)\) where

Provided the estimates are good enough, we will have \(f(s) - {\widehat{f}}_1(s) \approx \sum _{l=2}^{L} f_l(s)\). Therefore, by applying the above procedure to \(f(s) - {\widehat{f}}_1(s)\), we can hope to recover estimates of \(t_{2,j}\)’s and \(u_{2,j}\)’s as well. By proceeding recursively, it is clear that we can perform the above procedure to recover estimates to each \(\left\{ {t_{l,i}}\right\} _{i=1}^{K} \subset [0,1)\) and \((u_{l,i})_{i=1}^{K}\) for all \(l=1,\dots ,L\). A delicate issue that needs to be addressed for each intermediate group \(1< l < L\) is the following. While estimating the parameters for group \(1< l < L\), the samples \(\frac{f(s) - \sum _{i=1}^{l-1} {\widehat{f}}_i(s)}{\bar{g}_l(s)}\) that we obtain will have perturbation arising due to (a) the tails of \(f_{l+1}(s), \dots , f_{L}(s)\) and, (b) the estimates \({\widehat{f}}_{1}(s),\dots ,{\widehat{f}}_{l-1}(s)\) computed thus far. In particular, going “too deep” in to the tail of \(f_l(s)\) would blow up the perturbation term in (b), while not going sufficiently deep would blow up the perturbation term in (a). Therefore, in order to obtain stable estimates to the parameters \(t_{l,j}, u_{l,j}\), we will need to control these perturbation terms by carefully choosing the locations, as well as the number of sampling points in the tail.

Further Remarks Our choice of using the MMP method for estimating the spike locations and amplitudes at each stage is primarily dictated by two reasons. Firstly, it is extremely simple to implement in practice. Moreover, it comes with precise quantitative error bounds (see Theorem 3) for estimating the source parameters—especially for the setting of adversarial bounded noise—which fit seamlessly in the theoretical analysis of our method. Of course, in practice, other methods such as ESPRIT, MUSIC could also be used, and as discussed in Sect. 1.1.2, there also exist some error bounds in the literature for these methods. To our knowledge, error bounds of the form in Theorem 3 do not currently exist for these other methods. In any case, a full discussion in this regard is outside the scope of the paper, and analyzing the performance of our algorithm with methods other than the MMP method is a direction for future work.

1.5 Main Results

Our algorithm based on the aforementioned idea is outlined as Algorithm 2 which we name KrUMMP (Kernel Unmixing via Modified Matrix Pencil). At each stage \(l = 1,\dots ,L\), we choose a “sampling offset” \(s_l\) and obtain the (potentially noisy) Fourier samples at \(2 m_l\) locations \(s_l +i\) for \(i=-m_l\dots ,m_l - 1\). Our main result for the noiseless setting (\(w \equiv 0\)) is stated as Theorem 5 in its full generality. We state its following informal version assuming the spike amplitudes in each group to beFootnote 3\(\asymp 1\). Note that \(d_w:[0,1]^2 \rightarrow [0,1/2]\) is the usual wrap around distance on [0, 1] (see (2.1)).

Theorem 1

(Noiseless case) Denote \(\triangle _l := \min _{i,j} d_w(t_{l,i},t_{l,j}) > 0\) for each \(1 \le l \le L\). Let \(0 < \varepsilon _1 \le \varepsilon _2 \le \cdots \le \varepsilon _L\) satisfy \(\varepsilon _l \lesssim \triangle _l/2\) for each l. Moreover, let \(\varepsilon _{L-1} \lesssim \alpha \varepsilon _L^2\) and \(\varepsilon _l \lesssim \beta _l(\varepsilon _{l+1})^{2(1+\gamma _l)}\) hold for \(1 \le l \le L-1\) with \(\alpha ,\beta _l,\gamma _l > 0\) depending on the problem parameters (see (3.25), (3.27)). Finally, in Algorithm 2, let \(m_L \asymp 1/\triangle _L\), \(s_L = 0\), and

Then, for each \(l=1,\dots ,L\), there exists a permutation \(\phi _l: [K] \rightarrow [K]\) such that

Here, \(E_L(\varepsilon _L) \lesssim \frac{K}{\triangle _L}\), \(E_l(\varepsilon _l) \lesssim \left( \frac{K}{\triangle _l} + \frac{1}{(\mu _{l+1}^2 - \mu _l^2)^{1/2}} \log ^{1/2}\left( \frac{K^{3/2}(L-l)\mu _L}{\varepsilon _l\mu _l}\right) \right) \) for \(1 \le l \le L-1\).

The conditions on \(\varepsilon _l \in (0,1)\) imply that the estimation errors corresponding to group l should be sufficiently smaller than that of group \(l+1\). This is because the estimation errors arising in stage l percolate to stage \(l+1\), and hence need to be controlled for stable recovery of source parameters for the \((l+1)\)th group. Note that the conditions on \(s_l\) (sampling offset at stage l) involve upper and lower bounds for reasons stated in the previous section. If \(\mu _l\) is close to \(\mu _{l+1}\) then \(s_l\) will have to be suitably large in order to distinguish between \(\bar{g}_l\) and \(\bar{g}_{l+1}\), as one would expect intuitively. An interesting feature of the result is that it only depends on separation within a group (specified by \(\triangle _l\)), and so spikes belonging to different groups are allowed to overlap.

Our second result is for the noisy setting. Say at stage \(1 \le p \le L\) of Algorithm 2, we observe \(\widetilde{f}(s) = f(s) + w_p(s)\) where \(w_p(s) \in \mathbb {C}\) denotes noise at location s. Our main result for this setting is Theorem 6. Denoting \(w_p = (w_p(s_p-m_p),w_p(s_p-m_p+1),\dots ,w_p(s_p+m_p-1))^{T} \in \mathbb {C}^{2m_p}\) to be the noise vector at stage p, we state its informal version below assuming the spike amplitudes in each group to be \(\asymp 1\).

Theorem 2

(Noisy case) Say at stage \(1 \le p \le L\) of Algorithm 2, we observe \(\widetilde{f}(s) = f(s) + w_p(s)\) where \(w_p(s) \in \mathbb {C}\) denotes noise at location s. For each \(1 \le l \le L\), let \(\varepsilon _l,m_l,s_l\) be chosen as specified in Theorem 1. Say \(\Vert {w_L}\Vert _{\infty } \lesssim \mu _L e^{-\mu _L^2/\triangle _L^2} \frac{\varepsilon _L}{\sqrt{K}}\), and also

where \(C(\mu _l,\mu _{l+1},\triangle _l) > 0\) depends only on \(\mu _l,\mu _{l+1},\triangle _l\). Then, for each \(l=1,\dots ,L\), there exists a permutation \(\phi _l: [K] \rightarrow [K]\) such that

where \(E_l(\cdot )\) is as in Theorem 1.

As remarked earlier in Sect. 1.3, one can more generally consider \(K_l \le K\) summands for the lth group—our algorithm and results remain unchanged.

2 Notation and Preliminaries

Notation Vectors and matrices are denoted by lower and upper case letters respectively. For \(n \in \mathbb {N}\), we denote \([n] = \left\{ {1,\dots ,n}\right\} \). The imaginary unit is denoted by \(\iota = \sqrt{-1}\). The notation \(\log ^{1/2}(\cdot )\) is used to denote \(|{\log (|{\cdot }|)}|^{1/2}\). The \(\ell _p\) (\(1 \le p \le \infty \)) norm of a vector \(x \in \mathbb {R}^n\) is denoted by \(\Vert {x}\Vert _p\) (defined as \((\sum _i |{x_i}|^p)^{1/p}\)). In particular, \(\Vert {x}\Vert _{\infty } := \max _i |{x_i}|\). For a matrix \(A \in \mathbb {R}^{m \times n}\), we will denote its spectral norm (i.e., largest singular value) by \(\Vert {A}\Vert \) and its Frobenius norm by \(\Vert {A}\Vert _F\) (defined as \((\sum _{i,j} A_{i,j}^2)^{1/2}\)). For positive numbers a, b, we denote \(a \lesssim b\) to mean that there exists an absolute constant \(C > 0\) such that \(a \le C b\). If \(a \lesssim b\) and \(b \lesssim a\) then we denote \(a \asymp b\). The wrap around distance on [0, 1] is denoted by \(d_w: [0,1]^2 \rightarrow [0,1/2]\) where we recall that

2.1 Matrix Pencil (MP) Method

We now review the classical Matrix Pencil (MP) method for estimating positions of point sources from Fourier samples. Consider the signal \(x(t) := \sum _{j=1}^{K} u_{j} \delta (t - t_{j})\) where \(u_{j} \in \mathbb {C}\), \(t_j \in [0,1)\) are unknown. Let \(f: \mathbb {R}\rightarrow \mathbb {C}\) be the Fourier transform of x so that \(f(s) = \sum _{j=1}^K u_j \exp \left( \iota 2\pi s t_j \right) \). For any given offset \(s_0 \in \mathbb {Z}^{+}\), let \(s = s_0 + i\) where \(i \in \mathbb {Z}\); clearly

where \(u'_j = u_j \exp \left( \iota 2\pi s_0 t_j \right) , \quad j=1,\ldots ,K\). Choose \(i \in \left\{ {-m,-m+1,\ldots ,m-1}\right\} \) to form the \(m \times m\) matrices

and

Denoting \(\alpha _j = \exp \left( -\iota 2\pi t_j \right) \) for \(j= 1, \ldots , K\), and the Vandermonde matrix

clearly \(H_0=V D_{u'} V^H\) and \(H_1=VD_{u'} D_\alpha V^H\). Here, \(D_{u'} = \text {diag}(u'_1,\dots ,u'_K)\) and \(D_\alpha = \text {diag}(\alpha _1,\dots ,\alpha _K)\) are diagonal matrices. One can readily verifyFootnote 4 that the K non zero generalized eigenvalues of (\(H_1,H_0\)) are equal to the \(\alpha _j\)’s. Hence by forming the matrices \(H_0, H_1\), we can recover the unknown \(t_j\)’s exactly from 2K samples of f. Once the \(t_j\)’s are recovered, we can recover the \(u'_j\)’s exactly, as the solution of the linear system

Thereafter, the \(u_j\)’s are found as \(u_j = u'_j/\exp (\iota 2\pi s_0 t_j)\).

In [29], the authors elaborate further on this approach and in the noiseless case, propose an equivalent formulation of the Matrix Pencil approach as a standard eigenvalue problem. Using continuity of the eigenvalues with respect to perturbation, they also suggest that this eigenvalue problem provides a good estimator in the noisy case. In the next section, we discuss an alternative approach proposed by Moitra, which involves solving a generalised eigenproblem, and for which Moitra provided a precise quantitative perturbation result.

2.2 The Modified Matrix Pencil (MMP) Method

We now consider the noisy version of the setup defined in the previous section. For a collection of K point sources with parameters \(u_j \in \mathbb {C}\), \(t_j \in [0,1]\), we are given noisy samples

for \(s \in \mathbb {Z}\) and where \(\eta _s \in \mathbb {C}\) denotes noise.

Let us choose \(s = s_0 + i\) for a given offset \(s_0 \in \mathbb {Z}^+\), and \(i \in \left\{ {-m,-m+1,\ldots ,m-1}\right\} \) for a positive integer m. Using \((\widetilde{f}(s_0 + i))_{i=-m}^{m-1}\), let us form the matrices \({\widetilde{H}}_0, {\widetilde{H}}_1 \in \mathbb {C}^{m \times m}\) as in (2.2), (2.3). We now have \({\widetilde{H}}_0 = H_0 + E\), \({\widetilde{H}}_1 = H_1 + F\) where \(H_0, H_1\) are as defined in (2.2), (2.3), and

represent the perturbation matrices. Algorithm 1 namely the Modifed Matrix Pencil (MMP) method [41] outlines how we can recover \({\widehat{t}}_j\), \({\widehat{u}}_j\) for \(j=1,\dots ,K\).

Before proceeding we need to make some definitions. Let \(u_{\max }= \max _j |{u_j}|\) and \(u_{\min }= \min _j |{u_j}|\). We denote the largest and smallest non zero singular values of V by \(\sigma _{\text {max}},\sigma _{\text {min}}\) respectively, and the condition number of V by \(\kappa \) where \(\kappa = \sigma _{\text {max}}/\sigma _{\text {min}}\). Let \(\eta _{\mathrm {max}}:= \max _{i} |{\eta _i}|\). We will define \(\triangle \) as the minimum separation between the locations of the point sources where \(\triangle := \min _{j\ne j'} \ d_w(t_j,t_{j'})\).

The following theorem is a more precise version of [41, Theorem 2.8], with the constants computed explicitly. Moreover, the result in [41, Theorem 2.8] was specifically for the case \(s_0 = 0\), and also has inaccuracies in the proof. We outline the corrected version of Moitra’s proof in Appendix A.1 and fill in additional details (using auxiliary results from Appendix B) to arrive at the statement of Theorem 3.

Theorem 3

[41] For \(0 \le \varepsilon < \triangle /2\), say \( m > \frac{1}{\triangle - 2\varepsilon } + 1\). Moreover, for \(C = 10 + \frac{1}{2\sqrt{2}}\), say

Then, there exists a permutation \(\phi : [K] \mapsto [K]\) such that the output of the MMP method satisfies for each \(i=1,\dots ,K\)

where \({\widehat{u}}_{\phi }\) is formed by permuting the indices of \({\widehat{u}}\) w.r.t \(\phi \).

The following Corollary of Theorem 3 simplifies the expression for the bound on \(\Vert {{\widehat{u}}_{\phi } - u}\Vert _{\infty }\) in (2.7), and will be useful for our main results later on. The proof is deferred to Appendix A.2.

Corollary 1

For \(0 \le \varepsilon < c \triangle /2\) where \(c \in [0,1)\) is a constant, say \(\frac{2}{\triangle - 2\varepsilon } + 1 < m \le \frac{2}{(1-c)\triangle } + 1\). Denoting \(u_{\mathrm {rel}}= \frac{u_{\max }}{u_{\min }}\), \(C = 10 + \frac{1}{2\sqrt{2}}\), and \(B(u_{\mathrm {rel}},K) = \frac{1}{5C\sqrt{K}} (1 + 48u_{\mathrm {rel}})^{-1}\), say

Then, there exists a permutation \(\phi : [K] \mapsto [K]\) such that the output of the MMP method satisfies for each \(i=1,\dots ,K\)

where \(\tilde{C}(\triangle ,c,K,u_{\mathrm {rel}}) = 4\pi K \left( \frac{2}{\triangle (1-c)} + 1\right) + \frac{2}{C\sqrt{K}}\left( u_{\mathrm {rel}}+ 16u_{\mathrm {rel}}^2\right) ^{-1}\), and \({\widehat{u}}_{\phi }\) is formed by permuting the indices of \({\widehat{u}}\) w.r.t \(\phi \).

3 Unmixing Gaussians in Fourier Domain: Noiseless Case

We now turn our attention to the main focus of this paper, namely that of unmixing Gaussians in the Fourier domain. We will in general assume the Fourier samples to be noisy as in (2.5), with f defined in (1.3). In this section, we will focus on the noiseless setting wherein \(\widetilde{f}(s) = f(s)\) for each s. The noisy setting is analyzed in the next section.

Let us begin by noting that when \(L = 1\), i.e., in the case of a single kernel, the problem is solved easily. Indeed, we have from (1.3) that \(f(s) = \bar{g}_1(s) \sum _{j=1}^K u_{1,j} \exp \left( \iota 2\pi st_{1,j} \right) \). Clearly, one can exactly recover \((t_{1,j})_{j=1}^K \in [0,1)\) and \((u_{1,j})_{j=1}^K \in \mathbb {C}\) via the MP method by first obtaining the samples \(f(-m)\), \(f(-m+1)\), ..., \(f(m-1)\), and then working with \(f(s)/\bar{g}_1(s)\).

The situation for the case \(L \ge 2\) is however more delicate. Before proceeding, we need to make some definitions and assumptions.

We will denote \(u_{\max }= \max _{l,j} |{u_{l,j}}|\), \(u_{\min }= \min _{l,j} |{u_{l,j}}|\), and \(u_{\mathrm {rel}}= \frac{u_{\max }}{u_{\min }}\).

The sources in the lth group are assumed to have a minimum separation of

$$\begin{aligned} \triangle _l:= \min _{i \ne j} d_w(t_{l,i},t_{l,j}) > 0. \end{aligned}$$Denoting \(\alpha _{l,j}= \exp \left( -i2\pi t_{l,j} \right) \), \(V_l \in \mathbb {C}^{m_l \times K}\) will denote the Vandermonde matrix

$$\begin{aligned} \begin{bmatrix} 1 &{}\quad 1 &{}\quad \cdots &{}\quad 1 \\ \alpha _{l,1} &{}\quad \alpha _{l,2} &{}\quad \cdots &{}\quad \alpha _{l,K} \\ \vdots &{}\quad \vdots &{}\quad &{}\quad \vdots \\ \alpha _{l,1}^{m_l-1} &{}\quad \alpha _{l,2}^{m_l-1} &{}\quad \cdots &{}\quad \alpha _{l,K}^{m_l-1} \end{bmatrix} \end{aligned}$$for each \(l=1,\dots , L\) analogous to (2.4). \(\sigma _{\max ,l}, \sigma _{\min ,l}\) will denote its largest and smallest non-zero singular values, and \(\kappa _l = \sigma _{\max ,l}/\sigma _{\min ,l}\) its condition number. Recall from Theorem 8 that if \(m_l > \frac{1}{\triangle _l} + 1\), then \(\sigma _{\max ,l}^2 \le m_l+\frac{1}{\triangle _l}-1\) and \(\sigma _{\min ,l}^2 \ge m_l - \frac{1}{\triangle _l}-1\) and thus \(\kappa _l^2 \le \frac{m_l+\frac{1}{\triangle _l}-1}{m_l-\frac{1}{\triangle _l}-1}\).

3.1 The Case of Two Kernels

We first consider the case of two Gaussian kernels as the analysis here is relatively easier to digest compared to the general case. Note that f is now of the form

where we recall \(\bar{g}_l(s) = \sqrt{2\pi } \ \mu _l \exp \left( -2\pi ^2 s^2 \mu _l^2\right) \); \(l=1,2\). The following theorem provides sufficient conditions on the choice of the sampling parameters for approximate recovery of \(t_{1,j}, t_{2,j}, u_{1,j}, u_{2,j}\) for each \(j=1,\dots ,K\).

Theorem 4

Let \({0< \varepsilon _2 < c\triangle _2/2}\) for a constant \(c \in [0,1)\), \(s_2 = 0\), and \(m_2 \in \mathbb {Z}^{+}\) satisfy \(\frac{2}{\triangle _2 - 2\varepsilon _2} + 1 \le m_2 < \frac{2}{\triangle _2 (1-c)} + 1 \ (\ = M_{{2},\mathrm {up}})\). For \(B(u_{\mathrm {rel}},K)\) as in Corollary 1, let \({0< \varepsilon _1 < c\triangle _1/2}\) also satisfy

where \(\bar{C}_1, \bar{C}_2, \bar{C}_3 > 0\) are constants depending (see (3.11), (3.12)) on \(c,u_{\mathrm {rel}},\mu _1,\mu _2,\triangle _1,K\) and a constant \(\widetilde{c} > 1\). Say \(m_1,s_1 \in \mathbb {Z}^{+}\) are chosen to satisfy

where \(S_1 = m_1 + \frac{1}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}} \log ^{1/2}\left( \frac{Ku_{\mathrm {rel}}\mu _2}{\mu _1 B(u_{\mathrm {rel}},K) \varepsilon _1} \right) \). Then, there exist permutations \(\phi _1,\phi _2: [K] \rightarrow [K]\) such that for \(j=1,\dots ,K\),

where

and \(\widetilde{C}(\cdot ), C\) are as defined in Corollary 1.

Interpreting Theorem 4 Before proceeding to the proof, we make some useful observations.

- (a)

We first choose the sampling parameters \(\varepsilon \) (accuracy), m (number of samples), s (offset) for the inner kernel \(\bar{g}_2\) and then the outer kernel, i.e., \(\bar{g}_1\). For group i (\(= 1,2\)), we first choose \(\varepsilon _i\), then \(m_i\) (depending on \(\varepsilon _i\)), and finally the offset \(s_i\) (depending on \(m_i,\varepsilon _i\)).

- (b)

The choice of \(\varepsilon _2\) is free, but the choice of \(\varepsilon _1\) is constrained by \(\varepsilon _2\) as seen from (3.1). In particular, \(\varepsilon _1\) needs to be sufficiently small with respect to \(\varepsilon _2\) so that the perturbation arising due to the estimation errors for group 1 are controlled when we estimate the parameters for group 2.

- (c)

The lower bound on \(s_1\) ensures that we are sufficiently deep in the tail of \(\bar{g}_2\), so that its effect is negligible. The upper bound on \(s_1\) is to control the estimation errors of the source amplitudes for group 1 (see (2.9)). Observe that \(s_2 = 0\) since \(\bar{g}_2\) is the innermost kernel, and so there is no perturbation arising due to the tail of any other inner kernel.

- (d)

In theory, \(\varepsilon _1\) can be chosen to be arbitrarily close to zero; however, this would result in the offset \(s_1\) becoming large. Consequently, this will lead to numerical errors when we divide by \(\bar{g}_1(s_1+i)\); \(i=-m_1,\dots ,m_1-1\) while estimating the source parameters for group 1.

Order Wise Dependencies The Theorem is heavy in notation, so it would help to understand the order wise dependencies of the terms involved. Assume \(u_{\max },u_{\min }\asymp 1\). We have \(\widetilde{C}_1 \asymp K/\triangle _1\) and \(\widetilde{C}_2 \asymp K/\triangle _2\) which leads to

- (a)

For group 2, we have \(\varepsilon _2 \lesssim \triangle _2\), \(m_2 \asymp 1/\triangle _2\), \(s_2 = 0\), and

$$\begin{aligned} d_w({\widehat{t}}_{2,\phi _2(j)}, t_{2,j}) \le \varepsilon _2, \quad |{{\widehat{u}}_{2,\phi _2(j)} - u_{2,j}}| \lesssim \frac{K}{\triangle _2} \varepsilon _2. \end{aligned}$$ - (b)

For group 1, \(\varepsilon _1 \lesssim \triangle _1\) and (3.1) translates to

$$\begin{aligned}&\left( \frac{1}{\triangle _2} + \frac{K}{\triangle _1} + \frac{1}{(\mu _2^2 - \mu _1^2)^{1/2}} \log ^{1/2}\left( \frac{K^{3/2}\mu _2}{\mu _1\varepsilon _1} \right) \right) \varepsilon _1 \nonumber \\&\quad \lesssim \varepsilon _2\left( \frac{\mu _2}{\mu _1 K^{3/2}}\right) \exp \left( -\frac{2\pi ^2(\mu _2^2 - \mu _1^2)}{\triangle _2^2}\right) . \end{aligned}$$(3.3)Moreover, \(m_1 \asymp 1/\triangle _1\) and \(s_1 \asymp \frac{1}{\triangle _1} + (\mu _2^2 - \mu _1^2)^{-1/2}\log ^{1/2}(\frac{K^{3/2} \mu _2}{\mu _1 \varepsilon _1})\). Finally,

$$\begin{aligned} d_w({\widehat{t}}_{1,\phi _1(j)}, t_{1,j})&\le \varepsilon _1, \quad |{{\widehat{u}}_{1,\phi _1(j)} - u_{1,j}}|\nonumber \\&\lesssim \left( \frac{K}{\triangle _1} + \frac{1}{(\mu _2^2 - \mu _1^2)^{1/2}} \log ^{1/2}\left( \frac{K^{3/2} \mu _2}{\mu _1\varepsilon _1}\right) \right) \varepsilon _1. \end{aligned}$$(3.4)

Condition on \(\varepsilon _1,\varepsilon _2\) It is not difficult to verify that a sufficient condition for (3.3) to hold is that for any given \(\theta \in (0,1/2)\), it holds that

where \(C(\mu _1,\mu _2,\triangle _1,\triangle _2,K,\theta ) > 0\) depends only on the indicated parameters. This is outlined in Appendix C for Theorem 5 for the case of L kernels. In other words, \(\varepsilon _1\) would have to be sufficiently small with respect to \(\varepsilon _2\), so that the estimation errors carrying forward from the first group to the estimation of the parameters for the second group, are controlled.

Effect of Separation Between\(\mu _1,\mu _2\). Note that as \(\mu _1 \rightarrow \mu _2\), then (3.3) becomes more and more difficult to satisfy; in particular, \(C(\mu _1,\mu _2,\triangle _1,\triangle _2,K,\theta ) \rightarrow 0\) in (3.5). Hence, we would have to sample sufficiently deep in the tail of \(\bar{g}_1\) in order to distinguish \(\bar{g}_1,\bar{g}_2\) as one would intuitively expect. Next, for fixed \(\mu _2\) as \(\mu _1 \rightarrow 0\), we see that (3.3) becomes easier to satisfy. This is because \(\bar{g}_1(s)\) is now small for all s, and hence the perturbation error arising from stage 1 reduces accordingly. However, notice that \(s_1\) now has to increase correspondingly in order to distinguish between \(\bar{g}_1,\bar{g}_2\) (since \(\bar{g}_1(s) \approx 0\) for all s). Therefore, in order to control the estimation error of the amplitudes (see (3.4)), \(\varepsilon _1\) now has to reduce accordingly. For instance, \(\varepsilon _1 = o(\mu _1^{1/3})\) suffices. On the other hand, for fixed \(\mu _1\), as \(\mu _2 \rightarrow \infty \), satisfying (3.3) becomes more and more difficult. This is because the tail of \(\bar{g}_2\) becomes thinner, and so, the deconvolution step at stage 2 blows up the error arising from stage 1.

Proof of Theorem 4

The proof is divided into two steps below.

Recovering Source Parameters for First Group For offset parameter \(s_1\in \mathbb Z^{+}\) (the choice of which will be made clear later), we obtain the samples \((f(s_1+i))_{i=-m_1}^{m_1-1}\). Now, for any \(i=-m_1,\ldots ,m_1-1\), we have that

$$\begin{aligned} \frac{f(s_1+i)}{\bar{g}_1(s_1+i)}&= \sum _{j=1}^K u_{1,j}\ \exp \left( \iota 2\pi (s_1+i)t_{1,j}\right) \nonumber \\&\quad + \frac{\bar{g}_2(s_1+i)}{\bar{g}_1(s_1+i)} \sum _{j=1}^K \ u_{2,j} \exp \left( \iota 2\pi (s_1+i)t_{2,j}\right) \nonumber \\&= \sum _{j=1}^K \underbrace{u_{1,j} \exp \left( \iota 2\pi s_1 t_{1,j}\right) }_{u'_{1,j}} \exp \left( \iota 2\pi i t_{1,j}\right) \nonumber \\&\quad + \underbrace{\frac{\mu _2}{\mu _1}\exp \left( -2\pi ^2(s_1+i)^2(\mu _2^2-\mu _1^2) \right) \sum _{j=1}^K u_{2,j} \exp (\iota 2\pi (s_1+i)t_{2,j})}_{\eta '_{1,i}} \nonumber \\&= \sum _{j=1}^K u'_{1,j} \exp \left( \iota 2\pi i t_{1,j}\right) +\eta '_{1,i}. \end{aligned}$$(3.6)Here, \(\eta '_{1,i} \in \mathbb {C}\) corresponds to “perturbation” arising from the tail of \(f_2(s)\). Since the stated choice of \(s_1\) implies \(s_1 > m_1\), this means \(\min _{i} (s_1 + i)^2 = (s_1-m_1)^2\), and hence clearly

$$\begin{aligned} |{\eta '_{1,i}}| \le \frac{\mu _2}{\mu _1}\exp \left( -2\pi ^2(s_1-m_1)^2(\mu _2^2-\mu _1^2)\right) Ku_{\max }, \quad i=-m_1,\ldots ,m_1-1. \end{aligned}$$From (3.6), we can see that \(\widetilde{H}_0^{(1)} = V_1 D_{u'_1} V_1^H + E^{(1)}\) and \(\widetilde{H}_1^{(1)} = V_1 D_{u'_1} D_{\alpha _1} V_1^H+ F^{(1)}\). Here, \(D_{u'_1} = \text {diag}(u'_{1,1},\dots ,u'_{1,K})\) and \(D_{\alpha _1} = \text {diag}(\alpha _{1,1},\dots ,\alpha _{1,K})\), while \(E^{(1)}, F^{(1)}\) denote the perturbation matrices consisting of \((\eta '_{1,i})_{i=-m_1}^{m_1-1}\) terms, as in (2.2), (2.3).

We obtain estimates \({\widehat{t}}_{1,j}\), \(\widehat{u}_{1,j}\), \(j=1,\ldots ,K\) via the MMP method. Invoking Corollary 1, we have for \(\varepsilon _1 < c \triangle _1/2\), and \(\frac{2}{\triangle _1 - 2\varepsilon _1} + 1 \le m_1 < \frac{2}{\triangle _1(1-c)} + 1 (= M_{{1},\mathrm {up}})\) that if \(s_1\) satisfies

$$\begin{aligned} \frac{\mu _2}{\mu _1}\exp \left( -2\pi ^2(s_1-m_1)^2(\mu _2^2-\mu _1^2)\right) Ku_{\max }\le {\varepsilon _1 u_{\min }B(u_{\mathrm {rel}},K),} \end{aligned}$$(3.7)then there exists a permutation \(\phi _1: [K] \rightarrow [K]\) such that for each \(j=1,\dots ,K\),

$$\begin{aligned} d_w({\widehat{t}}_{1,\phi _1(j)}, t_{1,j}) \le \varepsilon _1, \quad |{{\widehat{u}}_{1,\phi _1(j)} - u_{1,j}}| < {\left( \widetilde{C}_1 + 2\pi s_1\right) u_{\max }\varepsilon _1}, \end{aligned}$$(3.8)where \(\widetilde{C}_1 = \widetilde{C}(\triangle _1,c,K,u_{\mathrm {rel}}) = 4\pi K M_{{1},\mathrm {up}} + \frac{2}{C\sqrt{K}}\left( u_{\mathrm {rel}}+ 16u_{\mathrm {rel}}^2\right) ^{-1}\). Clearly, the condition

$$\begin{aligned} s_1 \ge m_1 + \frac{1}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}} {\log ^{1/2}\left( \frac{Ku_{\mathrm {rel}}\mu _2}{\mu _1 \varepsilon _1 B(u_{\mathrm {rel}},K)} \right) } \end{aligned}$$implies (3.7). Moreover, since \(s_1 \le \widetilde{c} S_1\) and \(m_1 < M_{{1},\mathrm {up}}\), we obtain

$$\begin{aligned} s_1 < \widetilde{c} \left( M_{{1},\mathrm {up}} + \frac{1}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}} {\log ^{1/2}\left( \frac{K u_{\mathrm {rel}}\mu _2}{\mu _1 \varepsilon _1 B(u_{\mathrm {rel}},K)} \right) }\right) . \end{aligned}$$(3.9)Plugging (3.9) into (3.8) leads to the bound

$$\begin{aligned} |{{\widehat{u}}_{1,\phi _1(j)} - u_{1,j}}| < \left( \bar{C}_1 + \bar{C}_2 \log ^{1/2}\left( \frac{\bar{C}_3}{\varepsilon _1}\right) \right) {u_{\max }} \varepsilon _1; \quad j=1,\dots ,K, \end{aligned}$$(3.10)where \(\bar{C}_1, \bar{C}_2, \bar{C}_3 > 0\) are constants defined as follows.

$$\begin{aligned} \bar{C}_1&= {\widetilde{C}_1 + 2\pi \widetilde{c}M_{{1},\mathrm {up}}}, \end{aligned}$$(3.11)$$\begin{aligned} \bar{C}_2&= {\frac{2\pi \widetilde{c}}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}}}, \quad \bar{C}_3 = {\frac{K u_{\mathrm {rel}}\mu _2}{\mu _1 B(u_{\mathrm {rel}},K)}}. \end{aligned}$$(3.12)Recovering Source Parameters for Second Group Let \(\widehat{f}_1\) denote the estimate of \(f_1\) obtained using the estimates \((\widehat{u}_{1,j})_{j=1}^K, (\widehat{t}_{1,j})_{j=1}^K\), defined as

$$\begin{aligned} \widehat{f}_1(s) = \bar{g}_1(s) \sum _{j=1}^K \widehat{u}_{1,j} \exp \left( \iota 2\pi \widehat{t}_{1,j} s\right) . \end{aligned}$$For suitable \(s_2, m_2 \in \mathbb Z_+\) (choice to be made clear later), we now obtain samples

$$\begin{aligned} \frac{f(s_2+i) - \widehat{f}_1(s_2+i)}{\bar{g}_2(s_2+i)}; \quad i=-m_2,\ldots ,m_2-1. \end{aligned}$$Let us note that

$$\begin{aligned}&\frac{f(s_2+i)-\widehat{f}_1(s_2+i)}{\bar{g}_2(s_2+i)} = \frac{\bar{g}_1(s_2+i)}{\bar{g}_2(s_2+i)} \sum _{j=1}^K\left( u_{1,j} \exp \left( \iota 2\pi (s_2+i) t_{1,j} \right) \right. \nonumber \\&\qquad \left. - {\widehat{u}}_{1,\phi _1(j)} \exp \left( \iota 2\pi (s_2+i){\widehat{t}}_{1,\phi _1(j)}\right) \right) \nonumber \\&\qquad +\sum _{j=1}^K u_{2,j} \exp \left( \iota 2\pi (s_2 + i) t_{2,j} \right) \nonumber \\&\quad = \underbrace{\frac{\mu _1}{\mu _2} \exp (2\pi ^2 (s_2+i)^2 (\mu _2^2 - \mu _1^2)) \sum _{j=1}^K\left( u_{1,j} \exp (\iota 2\pi (s_2+i)t_{1,j}) - {\widehat{u}}_{1,\phi _1(j)} \exp \left( \iota 2\pi (s_2+i){\widehat{t}}_{1,\phi _1(j)}\right) \right) }_{\eta '_{2,i}} \nonumber \\&\qquad + \sum _{j=1}^K \underbrace{u_{2,j} \exp (\iota 2\pi s_2 t_{2,j})}_{u'_{2,j}} \exp (\iota 2\pi i t_{2,j}) \nonumber \\&\quad = \sum _{j=1}^K u'_{2,j} \exp (\iota 2\pi i t_{2,j}) + \eta '_{2,i}. \end{aligned}$$(3.13)Here, \(\eta '_{2,i} \in \mathbb {C}\) corresponds to noise arising from the estimation errors for the parameters in the first group of sources. As a direct consequence of Proposition 3, we have for each \(j=1,\dots ,K\) that

$$\begin{aligned}&|{u_{1,j} \exp (\iota 2\pi (s_2+i)t_{1,j}) - \widehat{u}_{1,\phi _1(j)} \exp (\iota 2\pi (s_2+i){\widehat{t}}_{1,\phi _1(j))}}| \nonumber \\&\quad \le 2\pi u_{\max }|{s_2 + i}| d_w(t_{1,j}, \widehat{t}_{1,\phi _1(j)}) + |{u_{1,j} - \widehat{u}_{1,\phi _1(j)}}| \nonumber \\&\quad < 2\pi u_{\max }|{s_2+i}| \varepsilon _1 + \left( \bar{C}_1 + \bar{C}_2 \log ^{1/2}\left( \frac{\bar{C}_3}{\varepsilon _1}\right) \right) {u_{\max }}\varepsilon _1, \end{aligned}$$(3.14)where the last inequality above follows from the bounds on \(|{u_{1,j} - \widehat{u}_{1,\phi _1(j)}}|\), \(d_w(t_{1,j}, t_{1,\phi _1(j)})\), derived earlier. Now for \(s_2 = 0\), and using the fact \(|{i}| \le m_2 < \frac{2}{\triangle _2(1-c)} + 1 \ (\ = M_{{2},\mathrm {up}})\), we obtain from (3.14) the following uniform bound on \(|{\eta '_{2,i}}|\).

$$\begin{aligned} |{\eta '_{2,i}}|&< \frac{\mu _1}{\mu _2} K e^{(2\pi ^2 m_2^2 (\mu _2^2 - \mu _1^2))} \left( {2\pi m_2} + \bar{C}_1 + \bar{C}_2 \log ^{1/2}\left( \frac{\bar{C}_3}{\varepsilon _1}\right) \right) {u_{\max }} \varepsilon _1 \nonumber \\&< \frac{\mu _1}{\mu _2} K e^{(2\pi ^2 M_{{2},\mathrm {up}}^2 (\mu _2^2 - \mu _1^2))} \left( {2\pi M_{{2},\mathrm {up}}} + \bar{C}_1 + \bar{C}_2 \log ^{1/2}\left( \frac{\bar{C}_3}{\varepsilon _1}\right) \right) {u_{\max }} \varepsilon _1. \end{aligned}$$(3.15)From (3.13), we see that \(\widetilde{H}_0^{(2)} = V_2 D_{u'_2} V_2^H + E^{(2)}\), \(\widetilde{H}_1^{(2)} = V_2 D_{u'_2} D_{\alpha _2}V_2^H +F^{(2)}\). Here, \(D_{u'_2} = \text {diag}(u'_{2,1},\dots ,u'_{2,K})\) and \(D_{\alpha _2} = \text {diag}(\alpha _{2,1},\dots ,\alpha _{2,K})\), while \(E^{(2)}, F^{(2)}\) denote the perturbation matrices consisting of \((\eta '_{2,i})_{i=-m_2}^{m_2-1}\) terms, as in (2.2), (2.3).

We obtain the estimates \(({\widehat{t}}_{2,j})_{j=1}^K\) and \((\widehat{u}_{2,j})_{j=1}^K\) using the MMP method. Invoking Corollary 1 and assuming \(\varepsilon _2 < c \triangle _2/2\), \(\frac{2}{\triangle _2 - 2\varepsilon _2} + 1 \le m_2 < M_{{2},\mathrm {up}}\) hold, it is sufficient that \(\varepsilon _1\) satisfies the condition

$$\begin{aligned}&\frac{\mu _1}{\mu _2} K e^{(2\pi ^2 M_{{2},\mathrm {up}}^2 (\mu _2^2 - \mu _1^2))} \left( {2\pi M_{{2},\mathrm {up}}} + \bar{C}_1 + \bar{C}_2 \log ^{1/2}\left( \frac{\bar{C}_3}{\varepsilon _1}\right) \right) {u_{\max }} \varepsilon _1 \\&\quad \le \varepsilon _2 {u_{\min }B(u_{\mathrm {rel}},K)}. \end{aligned}$$Indeed, there then exists a permutation \(\phi _2: [K] \rightarrow [K]\) such that for each \(j=1,\dots ,K\),

$$\begin{aligned} d_w({\widehat{t}}_{2,\phi _2(j)}, t_{2,j}) \le \varepsilon _2, \quad |{{\widehat{u}}_{2,\phi _2(j)} - u_{2,j}}| < \widetilde{C}_2 \varepsilon _2, \end{aligned}$$where \({\widetilde{C}_2 = \widetilde{C}(\triangle _2,c,K,u_{\mathrm {rel}}) = 4\pi K M_{{2},\mathrm {up}} + \frac{2}{C\sqrt{K}}\left( u_{\mathrm {rel}}+ 16u_{\mathrm {rel}}^2 \right) ^{-1}}\). This completes the proof.

\(\square \)

3.2 The General Case

We now move to the general case where \(L \ge 1\). The function f is now of the form

where we recall that \(\bar{g}_l(s) = \sqrt{2\pi } \ \mu _l \exp (-2\pi ^2 s^2 \mu _l^2)\). Before stating our result, it will be helpful to define certain terms for ease of notation, later on.

- (1)

For \(l=1,\dots ,L\),

$$\begin{aligned} M_{{l},\mathrm {up}} := \frac{2}{\triangle _l(1-c)} + 1, \quad {\widetilde{C}_l := \left[ 4\pi K M_{{l},\mathrm {up}} + \frac{2}{C\sqrt{K}}\left( u_{\mathrm {rel}}+ 16u_{\mathrm {rel}}^2 \right) ^{-1} \right] }. \end{aligned}$$(3.16)with constants \(c \in (0,1)\) and \(C = 10 + \frac{1}{2\sqrt{2}}\) (from Corollary 1).

- (2)

For \(l=1,\dots ,L-1\), and a constant \(\widetilde{c} > 1\),

$$\begin{aligned} \bar{C}_{l,1}&:= \widetilde{C}_l + {2\pi \widetilde{c}M_{{l},\mathrm {up}}}, \ \bar{C}_{l,2} := \frac{{2\pi \widetilde{c}}}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}}, \end{aligned}$$(3.17)$$\begin{aligned} D_l&:= {\frac{K u_{\mathrm {rel}}(L-l)\mu _L}{\mu _l B(u_{\mathrm {rel}},K)}}, \quad \bar{C}_{l,3} := \left\{ \begin{array}{rl} D_l \quad ; &{} l = 1 \\ 2 D_l \quad ; &{} l > 1. \end{array} \right. \end{aligned}$$(3.18)where \(B(u_{\mathrm {rel}},K)\) is as defined in Corollary 1.

- (3)

For \(l = 1,\dots ,L\), define

$$\begin{aligned} E_l(\varepsilon ) := \left\{ \begin{array}{rl} {\left( \bar{C}_{l,1} + \bar{C}_{l,2} \log ^{1/2}\left( \frac{\bar{C}_{l,3}}{\varepsilon }\right) \right) } \quad ; &{} l < L \\ {\widetilde{C}_L} \quad ; &{} l = L. \end{array} \right. \end{aligned}$$(3.19)where \(\varepsilon \in (0,1)\).

- (4)

For \(l = 2,\dots ,L-1\), define

$$\begin{aligned} F_l(\varepsilon )&:= C_{l,1}' + C_{l,2}' \log ^{1/2}\left( \frac{2 D_l}{\varepsilon }\right) ; \end{aligned}$$(3.20)$$\begin{aligned} \text {where} \quad C_{l,1}'&:= {2\pi }(\widetilde{c} + 1)M_{{l},\mathrm {up}}, \ C_{l,2}' = {\bar{C}_{l,2}}. \end{aligned}$$(3.21)Here, \(\widetilde{c} > 1\) is the same constant as in (2).

We are now ready to state our main theorem for approximate recovery of the source parameters for each group.

Theorem 5

For a constant \(c \in (0,1)\), let \(0< \varepsilon _L < c\triangle _L/2\), \(\frac{2}{\triangle _L - 2\varepsilon _L} \le m_L < M_{{L},\mathrm {up}}\) and \(s_L = 0\). Moreover, for \(l = L-1, \dots , 1\), say we choose \(\varepsilon _l,m_l,s_l\) as follows.

- 1.

\({0< \varepsilon _l < c\triangle _l/2}\) additionally satisfies the following conditions.

- (a)

\((2\pi M_{{L},\mathrm {up}} + E_{L-1}(\varepsilon _{L-1})) \varepsilon _{L-1} u_{\mathrm {rel}}\le \varepsilon _{L} e^{-2\pi ^2(\mu _{L}^2-\mu _1^2)M_{{L},\mathrm {up}}^2} \frac{\mu _{L} B(u_{\mathrm {rel}},K) }{K (L-1) \mu _{L-1}}\).

- (b)

If \(l < L-1\), then \(\varepsilon _{l} \le \varepsilon _{l+1}\), \(E_l(\varepsilon _{l}) {\varepsilon _l} \le E_{l+1}(\varepsilon _{l+1}) {\varepsilon _{l+1}}\) and

$$\begin{aligned} {(F_{l+1}(\varepsilon _{l}) + E_{l}(\varepsilon _{l})) \varepsilon _l u_{\mathrm {rel}}\le \varepsilon _{l+1} e^{-(\mu _{l+1}^2-\mu _1^2)\frac{F_{l+1}^2(\varepsilon _{l+1})}{2}} \frac{\mu _{l+1} B(u_{\mathrm {rel}},K)}{2K l \mu _{l}}.}\nonumber \\ \end{aligned}$$(3.22)

- (a)

- 2.

\(\frac{2}{\triangle _l - 2\varepsilon _l} \le m_l < M_{{l},\mathrm {up}}\), and \(S_l \le s_l \le \widetilde{c} S_l\) (for constant \(\widetilde{c} > 1\)) where

$$\begin{aligned} S_l = m_l + \frac{1}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}} \log ^{1/2}\left( {\frac{b_l K u_{\mathrm {rel}}(L-l) \mu _L}{\varepsilon _l B(u_{\mathrm {rel}},K) \mu _l}} \right) , \end{aligned}$$with \(b_l = {1}\) if \(l = 1\), and \(b_l = {2}\) otherwise.

Then, for each \(l=1,\dots ,L\), there exists a permutation \(\phi _l: [K] \rightarrow [K]\) such that

Interpreting Theorem 5 Before proceeding to the proof, we make some useful observations.

- (a)

We first choose the sampling parameters (\(\varepsilon ,m,s\)) for the outermost kernel \(\bar{g}_L\), then for \(\bar{g}_{L-1}\), and so on. For the lth group (\(1 \le l \le L\)), we first choose \(\varepsilon _L\) (accuracy), then \(m_l\) (number of samples), and finally \(s_l\) (sampling offset).

- (b)

The choice of \(\varepsilon _L \in (0,c\triangle _L/2)\), while free, dictates the choice of \(\varepsilon _1,\dots ,\varepsilon _{L-1}\). To begin with, condition 1a essentially requires \(\varepsilon _{L-1}\) to be sufficiently small with respect to \(\varepsilon _L\). Similarly, for \(l = 1,\dots ,L-2\), the conditions in 1b require \(\varepsilon _l\) to be sufficiently small with respect to \(\varepsilon _{l+1}\). It ensures that during the estimation of the parameters for the \((l+1)\)th group, the estimation errors carrying forward from the previous groups (1 to l) are sufficiently small.

- (c)

For each l (\(< L\)), the lower bound on \(s_l\) is to ensure that we are sufficiently deep in the tails of \(\bar{g}_{l+1}, \bar{g}_{l+2}, \dots , \bar{g}_L\). The upper bound on \(s_l\) is to control the estimation errors of the source amplitudes for group l (see (2.9)).

Order Wise Dependencies We now discuss the scaling of the terms involved, assuming \(u_{\max },u_{\min }\asymp 1\).

- (i)

For \(l=1,\dots ,L\), we have \(M_{{l},\mathrm {up}} \asymp \frac{1}{\triangle _l}\), \(\widetilde{C}_l \asymp \frac{K}{\triangle _l}\).

- (ii)

For \(p=1,\dots ,L-1\) we have

$$\begin{aligned} \bar{C}_{p,1} \asymp \frac{K}{\triangle _p}, \ \bar{C}_{p,2} \asymp \frac{1}{(\mu _{p+1}^2 - \mu _p^2)^{1/2}}, \ D_p \asymp \frac{K^{3/2}(L-p)\mu _L}{\mu _p}, \ \bar{C}_{p,3} \asymp D_p. \end{aligned}$$ - (iii)

For \(l = 1,\dots ,L\), we have

$$\begin{aligned} E_l(\varepsilon _l) \asymp \left\{ \begin{array}{rl} {\frac{K}{\triangle _l} + \frac{1}{(\mu _{l+1}^2 - \mu _l^2)^{1/2}} \log ^{1/2}\left( \frac{K^{3/2}(L-l)\mu _L}{\varepsilon _l\mu _l}\right) } \quad ; &{} l < L \\ \\ \frac{K}{\triangle _L} \quad ; &{} l = L. \end{array} \right. \end{aligned}$$(3.23) - (iv)

For \(q = 2,\dots ,L-1\), we have

$$\begin{aligned} F_q(\varepsilon _q) \asymp \frac{1}{\triangle _q} + \frac{1}{(\mu _{q+1}^2 - \mu _q^2)^{1/2}} \log ^{1/2}\left( \frac{K^{3/2} (L-q) \mu _L}{\varepsilon _q\mu _q}\right) . \end{aligned}$$(3.24)

Conditions on \(\varepsilon _l\) Theorem 5 has several conditions on \(\varepsilon _l\), which might be difficult to digest at first glance. On a top level, the conditions dictate that the accuracies satisfy \(\varepsilon _1 \le \varepsilon _2 \le \cdots \le \varepsilon _L\). In fact, they require a stronger condition in the sense that for each \(1 \le l \le L-1\), \(\varepsilon _l\) is required to be sufficiently smaller than \(\varepsilon _{l+1}\) (the choice of \(\varepsilon _L \lesssim \triangle _L/2\) is free). This places the strongest assumption on \(\varepsilon _1\) meaning that the source parameters corresponding to the “outermost kernel” in the Fourier domain should be estimated with the highest accuracy. Below, we state the conditions appearing on \(\varepsilon _l\) in the Theorem up to positive constants; the details are deferred to Appendix C.

- 1.

Condition 1a in Theorem 5 holds if \(\varepsilon _{L-1}, \varepsilon _L\) satisfy

$$\begin{aligned} \varepsilon _{L-1} \lesssim \alpha (\varepsilon _L)^{\frac{1}{1-\theta }} \end{aligned}$$(3.25)for any given \(\theta \in (0,1/2)\). Here, \(\alpha > 0\) depends on \(\theta ,K,L,\triangle _{L-1},\triangle _{L},\mu _{L},\mu _{L-1},\mu _{1}\).

- 2.

For \(l < L - 1\), let us look at condition 1b in Theorem 5. The requirement \(E_l(\varepsilon _l) {\varepsilon _l} \le E_{l+1}(\varepsilon _{l+1}) {\varepsilon _{l+1}}\) holds if \(\varepsilon _l,\varepsilon _{l+1}\) satisfy

$$\begin{aligned} \varepsilon _l \lesssim \lambda _l \log ^{\frac{1}{2(1-\theta )}} \left( \frac{K^{3/2}\mu _{L} {(L-l)}}{\varepsilon _{l+1}\mu _{l+1}}\right) \varepsilon _{l+1}^{\frac{1}{1-\theta }} \end{aligned}$$(3.26)for any given \(\theta \in (0,1/2)\). Here, \(\lambda _l > 0\) depends on \(\triangle _l,\triangle _{l+1},\mu _{l},\mu _{l+1},\mu _{l+2},L,K,\theta \).

Furthermore, the condition in (3.22) is satisfied if \(\varepsilon _l, \varepsilon _{l+1}\) satisfy

$$\begin{aligned} \varepsilon _l \lesssim \beta _l (\varepsilon _{l+1})^{\frac{1+\gamma _l}{1-\theta }} \end{aligned}$$(3.27)for any given \(\theta \in (0,1/2)\). Here, \(\beta _l > 0\) depends on \(L,K,\triangle _l\), \(\triangle _{l+1},\triangle _{L-1},\triangle _{L}\), \(\mu _{l},\mu _{l+1}\), \(\mu _{l+2}, \mu _{L}\), \(\mu _{L-1},\mu _{1},\theta \), while \(\gamma _l > 0\) depends on \(\mu _1,\mu _{l+1},\mu _{l+2},\triangle _{l+1}\). Note that the dependence on \(\varepsilon _{l+1}\) is stricter in (3.27) as compared to (3.26).

Effect of Separation Between\(\mu _l, \mu _{l+1}\)for\(l = 1,\dots ,L-1\) The interaction between \(\mu _{L-1},\mu _L\) occurs in the same manner as explained for the case of two kernels, the reader is invited to verify this. We analyze the interaction between \(\mu _{l},\mu _{l+1}\) below for \(l < L-1\).

- 1.

Consider the scenario where \(\mu _l \rightarrow \mu _{l+1}\) (with other terms fixed). We see that \(s_l\) has to be suitably large now in order to be able to distinguish between \(\bar{g}_l\) and \(\bar{g}_{l+1}\). Moreover, conditions (3.26), (3.27) become stricter in the sense that \(\lambda _l,\beta _l \rightarrow 0\).

- 2.

Now say \(\mu _{l+1}\) is fixed, and \(\mu _{l} \rightarrow 0\) (and hence \(\mu _1,\dots ,\mu _{l-1} \rightarrow 0\)). In this case, the conditions \(E_1(\varepsilon _1) {\varepsilon _l} \le \cdots \le E_{l+1}(\varepsilon _{l+1}) {\varepsilon _{l+1}}\) become vacuous as the estimation error arising from stages \(1,\dots ,l-1\) themselves approach 0. However, \(s_1,\dots ,s_l\) now increase accordingly in order to distinguish within \(\bar{g}_1,\dots ,\bar{g}_l\). Hence, to control the estimation error of the amplitudes, i.e, \(E_i(\varepsilon _i)\); \(1 \le i \le l\), \(\varepsilon _1,\dots ,\varepsilon _l\) have to be suitably small.

Proof of Theorem 5

The proof is divided in to three main steps.

Recovering Source Parameters for First Group For \(i=-m_1,\ldots ,m_1-1\), we have

$$\begin{aligned} \frac{f(s_1+i)}{\bar{g}_1(s_1+i)}&= \sum _{j=1}^K u_{1,j} \exp \left( \iota 2\pi (s_1+i)t_{1,j}\right) \nonumber \\&\quad + \sum _{l=2}^{L}\frac{\bar{g}_l(s_1+i)}{\bar{g}_1(s_1+i)} \sum _{j=1}^K \ u_{l,j} \exp \left( \iota 2\pi (s_1+i)t_{l,j}\right) \nonumber \\&= \sum _{j=1}^K \underbrace{u_{1,j} \exp \left( \iota 2\pi s_1 t_{1,j}\right) }_{u'_{1,j}} \exp \left( \iota 2\pi i t_{1,j}\right) \nonumber \\&\quad + \underbrace{\sum _{l=2}^{L} \frac{\mu _l}{\mu _1}\exp \left( -2\pi ^2(s_1+i)^2(\mu _l^2-\mu _1^2) \right) \sum _{j=1}^K u_{l,j} \exp (\iota 2\pi (s_1+i)t_{l,j})}_{\eta '_{1,i}} \nonumber \\&= \sum _{j=1}^K u'_{1,j} \exp \left( \iota 2\pi i t_{1,j}\right) + \eta '_{1,i}. \end{aligned}$$(3.28)Here, \(\eta '_{1,i}\) is the perturbation due to the tail of \(\bar{g}_2,\bar{g}_3,\dots ,\bar{g}_L\). Since the stated choice of \(s_1\) implies \(s_1 > m_1\), this means \(\min _{i} (s_1 + i)^2 = (s_1-m_1)^2\), and hence clearly

$$\begin{aligned} |{\eta '_{1,i}}|&\le Ku_{\max }\sum _{l=2}^{L} \frac{\mu _l}{\mu _1}\exp \left( -2\pi ^2(s_1-m_1)^2(\mu _l^2-\mu _1^2)\right) \\&\le Ku_{\max }(L-1) \frac{\mu _{L}}{\mu _1}\exp \left( -2\pi ^2(s_1-m_1)^2(\mu _2^2-\mu _1^2)\right) . \end{aligned}$$We obtain estimates \({\widehat{t}}_{1,j}\), \(\widehat{u}_{1,j}\), \(j=1,\ldots ,K\) via the MMP method. Invoking Corollary 1, we have for \(\varepsilon _1 < c \triangle _1/2\), and \(\frac{2}{\triangle _1 - 2\varepsilon _1} + 1 \le m_1 < \frac{2}{\triangle _1(1-c)} + 1 (= M_{{1},\mathrm {up}})\) that if \(s_1\) satisfies

$$\begin{aligned} Ku_{\max }(L-1) \frac{\mu _{L}}{\mu _1}\exp \left( -2\pi ^2(s_1-m_1)^2(\mu _2^2-\mu _1^2)\right) \le \varepsilon _1 {u_{\min }B(u_{\mathrm {rel}},K)} \end{aligned}$$(3.29)then there exists a permutation \(\phi _1: [K] \rightarrow [K]\) such that for each \(j=1,\dots ,K\),

$$\begin{aligned} d_w({\widehat{t}}_{1,\phi _1(j)}, t_{1,j}) \le \varepsilon _1, \quad |{{\widehat{u}}_{1,\phi _1(j)} - u_{1,j}}| < \left( \widetilde{C}_1 + 2 \pi s_1\right) {u_{\max }} \varepsilon _1. \end{aligned}$$(3.30)Clearly, the condition

$$\begin{aligned} s_1 \ge m_1 + \frac{1}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}} \log ^{1/2}\left( {\frac{K u_{\mathrm {rel}}(L-1) \mu _L}{\mu _1 \varepsilon _1 B(u_{\mathrm {rel}},K)} } \right) \end{aligned}$$(3.31)implies (3.29). Moreover, since \(s_1 \le \widetilde{c} S_1\) and \(m_1 < M_{{1},\mathrm {up}}\), we obtain

$$\begin{aligned} s_1&< \widetilde{c} \left( M_{{1},\mathrm {up}} + \frac{1}{(2\pi ^2(\mu _2^2 - \mu _1^2))^{1/2}} \log ^{1/2}\left( {\frac{K (L-1) u_{\mathrm {rel}}\mu _L}{\mu _1 \varepsilon _1 B(u_{\mathrm {rel}},K)}} \right) \right) . \end{aligned}$$(3.32)Plugging (3.32) in (3.30), we obtain

$$\begin{aligned} |{{\widehat{u}}_{1,\phi _1(j)} - u_{1,j}}|&< \left( \bar{C}_{1,1} + \bar{C}_{1,2} \log ^{1/2}\left( \frac{\bar{C}_{1,3}}{\varepsilon _1}\right) \right) \varepsilon _1 {u_{\max }} \nonumber \\&= E_1(\varepsilon _1) {\varepsilon _1 u_{\max }}; \quad j=1,\dots ,K, \end{aligned}$$(3.33)where \(\bar{C}_{1,1}, \bar{C}_{1,2}, \bar{C}_{1,3} > 0\) are constants defined in (3.17), (3.18), and \(E_p(\cdot )\) is defined in (3.19).

Recovering Source Parameters for\(l{\text {th}} (1< l < L)\)Group Say we are at the lth iteration for \(1< l < L\), having estimated the source parameters up to the \((l-1)\)th group. Say that for each \(p = 1,\dots ,l-1\) and \(j=1,\dots ,K\) the following holds.

$$\begin{aligned} d_w(\widehat{t}_{p,\phi _p(j)}, t_{p,j}) \le \varepsilon _p, \quad |{\widehat{u}_{p,\phi _p(j)} - u_{p,j}}| < E_p(\varepsilon _p) {u_{\max }\varepsilon _p}, \end{aligned}$$(3.34)for some permutations \(\phi _p: [K] \rightarrow [K]\), with

- 1.

\(\varepsilon _1 \le \cdots \le \varepsilon _{l-1}; \quad \quad E_1(\varepsilon _1) {\varepsilon _1} \le \cdots \le E_{l-1}(\varepsilon _{l-1}) {\varepsilon _{l-1}}\);

- 2.

\(\varepsilon _{p} < c \triangle _p/2\) ;

- 3.

\({(F_{q+1}(\varepsilon _{q}) + E_{q}(\varepsilon _{q})) u_{\mathrm {rel}}\varepsilon _q \le \varepsilon _{q+1} e^{-(\mu _{q+1}^2-\mu _1^2)\frac{F_{q+1}^2(\varepsilon _{q+1})}{2}} \frac{\mu _{q+1} B(u_{\mathrm {rel}},K)}{2K q \mu _{q}}}\), \(1 \le q \le l-2\).

For \(i=-m_l,\ldots ,m_l-1\), we have

$$\begin{aligned}&\frac{f(s_l + i) - \sum _{p=1}^{l-1} \widehat{f}_p(s_l + i)}{\bar{g}_l(s_l+i)} \\&\quad = \underbrace{\sum _{p=1}^{l-1}\frac{\bar{g}_p(s_l+i)}{\bar{g}_l(s_l + i)} \sum _{j=1}^K[u_{p,j}\exp (\iota 2\pi (s_l+i)t_{p,j}) - \widehat{u}_{p,\phi _p(j)} \exp (\iota 2\pi (s_l+i)\widehat{t}_{p,\phi _p(j)})]}_{\eta '_{l,i,past}} \\&\qquad + \underbrace{\sum _{q=l+1}^{L} \frac{\bar{g}_q(s_l+i)}{\bar{g}_l(s_l + i)} \sum _{j=1}^{K}u_{q,j}\exp (\iota 2\pi (s_l+i)t_{q,j})}_{\eta '_{l,i,fut}} \\&\qquad + \sum _{j=1}^K \underbrace{u_{l,j} \exp (\iota 2\pi s_l t_{l,j})}_{u'_{l,j}} \exp (\iota 2\pi i t_{l,j}) \\&\quad = \sum _{j=1}^K u'_{l,j} \exp (\iota 2\pi i t_{l,j}) + \eta '_{l,i,past} + \eta '_{l,i,fut}. \end{aligned}$$Here, \(\eta '_{l,i,past}\) denotes perturbation due to the estimation errors of the source parameters in the past. Moreover, \(\eta '_{l,i,fut}\) denotes perturbation due to the tails of the kernels that are yet to be processed.

- (i)

\(\underline{\textit{Bounding }\eta '_{l,i,past}}\) To begin with, note

$$\begin{aligned} \eta '_{l,i,past}&= \sum _{p=1}^{l-1}\frac{\mu _p}{\mu _l} \exp (2\pi ^2(\mu _l^2 - \mu _p^2) (s_l + i)^2)\\&\qquad \sum _{j=1}^K[u_{p,j}\exp (\iota 2\pi (s_l+i)t_{p,j}) \\&\quad - \widehat{u}_{p,\phi _p(j)} \exp (\iota 2\pi (s_l+i)\widehat{t}_{p,\phi _p(j)}]. \end{aligned}$$Using Proposition 3, we have for each \(p=1,\dots ,l-1\) and \(j=1,\dots ,K\) that

$$\begin{aligned}&|{u_{p,j} \exp (\iota 2\pi (s_l+i)t_{p,j}) - \widehat{u}_{p,\phi _p(j)} \exp (\iota 2\pi (s_l+i){\widehat{t}}_{p,\phi _p(j)})}| \nonumber \\&\quad \le 2\pi u_{\max }|{s_l + i}| d_w(t_{p,j}, \widehat{t}_{p,\phi _p(j)}) + |{u_{p,j} - \widehat{u}_{p,\phi _p(j)}}| \nonumber \\&\quad < 2\pi u_{\max }|{s_l+i}| \varepsilon _p + E_p(\varepsilon _p) {\varepsilon _p u_{\max }}, \end{aligned}$$(3.35)where the last inequality is due to (3.34). Since \(s_l > m_l\), hence \((s_l + i)^2 < (s_l + m_l)^2\) for all \(i=-m_l,\dots ,m_l-1\). With the help of (3.35), we then readily obtain

$$\begin{aligned} |{\eta '_{l,i,past}}|&< \sum _{p=1}^{l-1} \left( \left( \frac{\mu _p}{\mu _l} \exp (2\pi ^2(\mu _l^2 - \mu _p^2) (s_l + m_l)^2)\right) \nonumber \right. \nonumber \\&\quad \left. (2\pi u_{\max }(s_l+m_l) \varepsilon _p + E_p(\varepsilon _p) {\varepsilon _p u_{\max }}) K\right) \nonumber \\&\le \left( K \frac{\mu _{l-1}}{\mu _l} e^{2\pi ^2(\mu _l^2 - \mu _1^2)(s_l + m_l)^2} \right) \nonumber \\&\quad \left( \sum _{p=1}^{l-1} 2\pi u_{\max }(s_l+m_l)\varepsilon _p + E_p(\varepsilon _p) {\varepsilon _p u_{\max }} \right) \nonumber \\&\le \left( K(l-1) \frac{\mu _{l-1}}{\mu _l} e^{2\pi ^2(\mu _l^2 - \mu _1^2)(s_l + m_l)^2} \right) \nonumber \\&\quad \left( 2\pi u_{\max }(s_l+m_l)\varepsilon _{l-1} + E_{l-1}(\varepsilon _{l-1}) {\varepsilon _{l-1} u_{\max }} \right) , \end{aligned}$$(3.36)where in the last inequality, we used 1.

- (ii)

\(\underline{\textit{Bounding }\eta '_{l,i,fut}}\) We have

$$\begin{aligned} \eta '_{l,i,fut}&= \sum _{q=l+1}^{L} \frac{\mu _q}{\mu _l} \exp (-2\pi ^2(s_l+i)^2(\mu _q^2 - \mu _l^2)) \nonumber \\&\quad \left( \sum _{j=1}^K u_{q,j} \exp (\iota 2\pi (s_l+i)t_{q,j})\right) . \end{aligned}$$Since \(s_l > m_l\), we have \((s_l+i)^2 \ge (s_l-m_l)^2\) for all \(i=-m_l,\dots ,m_l-1\). This, along with the fact \(\frac{\mu _q}{\mu _l} \le \frac{\mu _L}{\mu _l}\) gives us

$$\begin{aligned} |{\eta '_{l,i,fut}}| \le \frac{\mu _L}{\mu _l} Ku_{\max }(L-l) \exp (-2\pi ^2(s_l-m_l)^2(\mu _{l+1}^2 - \mu _l^2)). \end{aligned}$$It follows that if \(s_l \ge S_l\) where

$$\begin{aligned} S_l = m_l + \frac{1}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}} \log ^{1/2}\left( {\frac{2 K u_{\mathrm {rel}}(L-l) \mu _L}{\varepsilon _l \mu _l B(u_{\mathrm {rel}},K)}} \right) \end{aligned}$$then for \(i=-m_l,\dots ,m_l-1\), we have

$$\begin{aligned} |{\eta '_{l,i,fut}}| < \varepsilon _l {\frac{u_{\min }B(u_{\mathrm {rel}},K)}{2}}. \end{aligned}$$(3.37) - (iii)

\(\underline{\textit{Back to }\eta '_{l,i,past}}\) We will now find conditions which ensure that the same bound as (3.37) holds on \(|{\eta '_{l,i,past}}|\), uniformly for all i. To this end, since \(s_l \le \widetilde{c} S_l\) and \(m_l < M_{{l},\mathrm {up}}\), we obtain

$$\begin{aligned} s_l < \widetilde{c} \left( M_{{l},\mathrm {up}} + \frac{1}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}} \log ^{1/2}\left( {\frac{2 K u_{\mathrm {rel}}(L-l) \mu _L}{\varepsilon _l \mu _l B(u_{\mathrm {rel}},K)}}\right) \right) . \end{aligned}$$(3.38)This then gives us the bound

$$\begin{aligned} {2\pi }(s_l + m_l)&< {2\pi } (\widetilde{c} + 1) M_{{l},\mathrm {up}} \\&\quad + \frac{{2\pi } \widetilde{c}}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}} \log ^{1/2}\left( {\frac{2 K u_{\mathrm {rel}}(L-l) \mu _L}{\varepsilon _l \mu _l B(u_{\mathrm {rel}},K)}}\right) \\&= C'_{l,1} + C'_{l,2} \log ^{1/2}\left( \frac{2D_{l}}{\varepsilon _l}\right) = F_{l}(\varepsilon _l), \end{aligned}$$where we recall the definition of \(F_l\), and constants \(C_{l,1}', C_{l,2}', D_l > 0\) from (3.20), (3.21). Since \(\varepsilon _l< 1 < D_l\) and \(\varepsilon _l \ge \varepsilon _{l-1}\), hence \({2\pi } (s_l + m_l) < F_{l}(\varepsilon _l) \le F_{l}(\varepsilon _{l-1})\). Using this in (3.36), we obtain

$$\begin{aligned}&|{\eta '_{l,i,past}}| < \left( K(l-1) \frac{\mu _{l-1}}{\mu _l} {e^{(\mu _l^2 - \mu _1^2) \frac{F^2_l(\varepsilon _l)}{2}}} \right) \nonumber \\&\quad {\left( F_l(\varepsilon _{l-1}) + E_{l-1}(\varepsilon _{l-1}) \right) {u_{\max }\varepsilon _{l-1}}} \end{aligned}$$(3.39)Therefore if \(\varepsilon _{l-1}\) satisfies the condition

$$\begin{aligned} {(F_l(\varepsilon _{l-1}) + E_{l-1}(\varepsilon _{l-1}) ) u_{\max }\varepsilon _{l-1} \le \varepsilon _l e^{-(\mu _l^2-\mu _1^2) \frac{F^2_l(\varepsilon _l)}{2}} \frac{u_{\min }\mu _l B(u_{\mathrm {rel}},K)}{2 K (l-1) \mu _{l-1}}} \end{aligned}$$then it implies \(|{\eta '_{l,i,past}}| < \varepsilon _l {\frac{u_{\min }B(u_{\mathrm {rel}},K)}{2}}\). Together with (3.37), this gives

$$\begin{aligned} |{\eta '_{l,i}}| \le |{\eta '_{l,i,fut}}| + |{\eta '_{l,i,past}}| < \varepsilon _l {u_{\min }B(u_{\mathrm {rel}},K)}. \end{aligned}$$We obtain estimates \({\widehat{t}}_{l,j}\), \(\widehat{u}_{l,j}\), \(j=1,\ldots ,K\) via the MMP method. Invoking Corollary 1, if \(\varepsilon _l < c \triangle _l/2\), then for the stated choice of \(m_l\), there exists a permutation \(\phi _l: [K] \rightarrow [K]\) such that for each \(j=1,\dots ,K\),

$$\begin{aligned} d_w({\widehat{t}}_{l,\phi _l(j)}, t_{l,j})&\le \varepsilon _l; \quad |{{\widehat{u}}_{l,\phi _l(j)} - u_{l,j}}| \nonumber \\&< \left( \widetilde{C}_l + 2\pi s_l \right) \varepsilon _l {u_{\max }} < E_l(\varepsilon _l) {\varepsilon _{l} u_{\max }}. \end{aligned}$$(3.40)The last inequality follows readily using (3.38).

- 1.

Recovering Source Parameters for Last Group Say that for each \(p = 1,\dots ,L-1\) and \(j=1,\dots ,K\) the following holds.

$$\begin{aligned} d_w(\widehat{t}_{p,\phi _p(j)}, t_{p,j}) \le \varepsilon _p, \quad |{{\widehat{u}}_{p,\phi _p(j)} - u_{p,j}}| < E_p(\varepsilon _p) {\varepsilon _p u_{\max }}, \end{aligned}$$(3.41)for some permutations \(\phi _p: [K] \rightarrow [K]\), with

- 1.

\(\varepsilon _1 \le \cdots \le \varepsilon _{L-1}\); \(E_1(\varepsilon _1) {\varepsilon _1} \le \cdots \le E_{L-1}(\varepsilon _{L-1}) {\varepsilon _{L-1}}\);

- 2.

\(\varepsilon _{p} < c \triangle _p/2\);

- 3.

\((F_{q+1}(\varepsilon _{q}) + E_{q}(\varepsilon _{q})) \varepsilon _{q} u_{\mathrm {rel}}\le \varepsilon _{q+1} e^{-(\mu _{q+1}^2-\mu _1^2)\frac{F_{q+1}^2(\varepsilon _{q+1})}{2}} \frac{\mu _{q+1} B(u_{\mathrm {rel}},K)}{2 K q \mu _{q}}\), \(1 \le q \le L-2\).

We proceed by noting that for each \(i = -m_L,\dots ,m_L-1\)

$$\begin{aligned}&\frac{f(s_L + i) - \sum _{p=1}^{L-1} \widehat{f}_p(s_L + i)}{\bar{g}_L(s_L + i)} \\&\quad = \underbrace{\sum _{p=1}^{L-1}\frac{\bar{g}_p(s_L+i)}{\bar{g}_L(s_L + i)} \sum _{j=1}^K[u_{p,j}\exp (\iota 2\pi (s_L+i)t_{p,j}) - \widehat{u}_{p,\phi _p(j)} \exp (\iota 2\pi (s_L+i)\widehat{t}_{p,\phi _p(j)}]}_{\eta '_{L,i}} \\&\qquad + \sum _{j=1}^K \underbrace{u_{L,j} \exp (\iota 2\pi s_L t_{L,j})}_{u'_{L,j}} \exp (\iota 2\pi i t_{L,j}) = \sum _{j=1}^K u'_{L,j} \exp (\iota 2\pi i t_{L,j}) + \eta '_{L,i}. \end{aligned}$$Using Proposition 3, we have for each \(p=1,\dots ,L-1\) and \(j=1,\dots ,K\) that

$$\begin{aligned}&|{u_{p,j} \exp (\iota 2\pi (s_L+i)t_{p,j}) - \widehat{u}_{p,\phi _p(j)} \exp (\iota 2\pi (s_L+i){\widehat{t}}_{p,\phi _p(j)}}| \nonumber \\&\quad \le 2\pi u_{\max }|{s_L + i}| d_w(t_{p,j}, \widehat{t}_{p,\phi _p(j)}) + |{u_{p,j} - \widehat{u}_{p,\phi _p(j)}}| \nonumber \\&\quad < 2\pi u_{\max }|{s_L+i}| \varepsilon _p + E_p(\varepsilon _p) {\varepsilon _p u_{\max }}, \end{aligned}$$(3.42)where the last inequality follows from (3.41). Since \(s_L = 0\), hence \((s_L + i)^2< m_L^2 < M_{{L},\mathrm {up}}^2\) for all \(i=-m_L,\dots ,m_L-1\). Using (3.42), we then readily obtain

$$\begin{aligned} |{\eta '_{L,i}}|&< \sum _{p=1}^{L-1} \left( \left( \frac{\mu _p}{\mu _L} e^{2\pi ^2(\mu _L^2 - \mu _p^2) M_{{L},\mathrm {up}}^2}\right) (2\pi u_{\max }M_{{L},\mathrm {up}}\varepsilon _p + E_p(\varepsilon _p) {\varepsilon _p u_{\max }})K\right) \nonumber \\&\le \left( K \frac{\mu _{L-1}}{\mu _L} e^{2\pi ^2(\mu _L^2 - \mu _1^2)M_{{L},\mathrm {up}}^2} \right) {\sum _{p=1}^{L-1}\left( 2\pi u_{\max }M_{{L},\mathrm {up}}\varepsilon _p + E_p(\varepsilon _p) \varepsilon _p u_{\max }\right) } \nonumber \\&\le \left( K(L-1) \frac{\mu _{L-1}}{\mu _L} e^{2\pi ^2(\mu _L^2 - \mu _1^2)M_{{L},\mathrm {up}}^2} \right) \left( 2\pi u_{\max }M_{{L},\mathrm {up}}\varepsilon _{L-1} \right. \nonumber \\&\quad \left. + E_{L-1}(\varepsilon _{L-1}) {\varepsilon _{L-1} u_{\max }} \right) \end{aligned}$$(3.43)where in the last inequality, we used (1).

We obtain estimates \({\widehat{t}}_{L,j}\), \(\widehat{u}_{L,j}\), \(j=1,\ldots ,K\) via the MMP method. Invoking Corollary 1 and assuming \(\varepsilon _L < c \triangle _L/2\), it follows for the stated conditions on \(m_L\), that it suffices if \(\varepsilon _{L-1}\) satisfies

$$\begin{aligned} {(2\pi M_{{L},\mathrm {up}} + E_{L-1}(\varepsilon _{L-1})) \varepsilon _{L-1} u_{\max }\le \varepsilon _L e^{-2\pi ^2(\mu _L^2 - \mu _1^2)M_{{L},\mathrm {up}}^2} \frac{u_{\min }\mu _L B(u_{\mathrm {rel}},K)}{K (L-1) \mu _{L-1}}.} \end{aligned}$$Indeed, there then exists a permutation \(\phi _L: [K] \rightarrow [K]\) such that for each \(j=1,\dots ,K\),

$$\begin{aligned} d_w({\widehat{t}}_{L,\phi _L(j)}, t_{L,j})&\le \varepsilon _L; \quad |{{\widehat{u}}_{L,\phi _L(j)} - u_{L,j}}| < \widetilde{C}_L \varepsilon _L {u_{\max }} = E_L(\varepsilon _L) {\varepsilon _{L} u_{\max }}. \end{aligned}$$(3.44)This completes the proof.

- 1.

\(\square \)

4 Unmixing Gaussians in Fourier Domain: Noisy Case

We now analyze the noisy setting where we acquire noisy values of the of the Fourier transform of f at the sampling location (frequency) s. In particular, at stage p (\(1 \le p \le L\)) in Algorithm 2, and frequency s, let \(w_p(s)\) denote the additive observation noise on the clean Fourier sample f(s). Denoting the noisy measurement by \(\widetilde{f}(s)\), this means that at stage p,

In addition to the terms defined at the beginning of Sect. 3.2, we will need an additional term (defined below) which will be used in the statement of our theorem.

where \(C_{l,1}'\), \(C_{l,2}'\) are as defined in (3.21).

Theorem 6

For a constant \(c \in (0,1)\), let \(0< \varepsilon _L < c\triangle _L/2\), \(\frac{2}{\triangle _L - 2\varepsilon _L} \le m_L < M_{{L},\mathrm {up}}\) and \(s_L = 0\). Moreover, for \(l = L-1, \dots , 1\), say we choose \(\varepsilon _l,m_l,s_l\) as follows.

- 1.

\(0< \varepsilon _l < c\triangle _l/2\) additionally satisfies the following conditions.

- (a)

\((2\pi M_{{L},\mathrm {up}} + E_{L-1}(\varepsilon _{L-1})) \varepsilon _{L-1} u_{\mathrm {rel}}\le \varepsilon _{L} e^{-2\pi ^2(\mu _{L}^2-\mu _1^2)M_{{L},\mathrm {up}}^2} \frac{\mu _{L} B(u_{\mathrm {rel}},K)}{2 K (L-1) \mu _{L-1}}\).

- (b)

If \(l < L-1\), then \(\varepsilon _{l} \le \varepsilon _{l+1}\), \(E_l(\varepsilon _{l}) \varepsilon _{l} \le E_{l+1}(\varepsilon _{l+1}) \varepsilon _{l+1}\) and

$$\begin{aligned} (F'_{l+1}(\varepsilon _{l}) + E_{l}(\varepsilon _{l})) \varepsilon _{l} u_{\mathrm {rel}}\le \varepsilon _{l+1} e^{-(\mu _{l+1}^2-\mu _1^2)\frac{F_{l+1}^{'^2}(\varepsilon _{l+1})}{2}} \frac{\mu _{l+1} B(u_{\mathrm {rel}},K)}{3 K l \mu _{l}}. \end{aligned}$$

- (a)

- 2.

\(\frac{2}{\triangle _l - 2\varepsilon _l} \le m_l < M_{{l},\mathrm {up}}\), and \(S_l \le s_l \le \widetilde{c} S_l\) (for constant \(\widetilde{c} > 1\)) where

$$\begin{aligned} S_l = m_l + \frac{1}{(2\pi ^2(\mu _{l+1}^2 - \mu _l^2))^{1/2}} \log ^{1/2}\left( {\frac{b_l K (L-l) \mu _L u_{\mathrm {rel}}}{\varepsilon _l \mu _l B(u_{\mathrm {rel}},K)}} \right) , \end{aligned}$$with \(b_l = 2\) if \(l = 1\), and \(b_l = 3\) otherwise.

Assume that the noise satisfies the conditions

Then, for each \(l=1,\dots ,L\), there exists a permutation \(\phi _l: [K] \rightarrow [K]\) such that

Since the organisation and the arguments of the proof of Theorem 6 are almost identical to that of Theorem 5, we defer this proof to Appendix D where we pinpoint the main differences between the noiseless and the noisy settings.

Interpreting Theorem 6 Theorem 6 is almost the same as Theorem 5 barring the conditions on the magnitude of external noise (and minor differences in some constants). Specifically, conditions (4.2)–(4.4) state that at stage l, the magnitude of the noise should be small relative to the desired accuracy parameter \(\varepsilon _l\). This is examined in more detail below. For convenience, we will now assume \(u_{\min },u_{\max }\asymp 1\).

- 1.

(Condition on \(\Vert {w_l(\cdot )}\Vert _{\infty }\) for \(1 \le l \le L-1\)) Let us start with the case \(2 \le l \le L-1\). Condition (4.3) states that

$$\begin{aligned} \left| \frac{w_l(i)}{\bar{g}_l(s_l+i)} \right| \lesssim \frac{\varepsilon _l}{\sqrt{K}}; \quad i=-m_l,\dots ,m_l-1. \end{aligned}$$(4.5)Now, \(|{\frac{w_l(i)}{\bar{g}_l(s_l+i)}}| = \frac{|{w_l(i)}|}{\sqrt{2\pi }\mu _l} e^{2\pi ^2(s_l + i)^2 \mu _l^2}\). Since \(s_l > m_l\), therefore \(s_l + i > 0\) for the given range of i. Hence, \((s_l+i)^2< (s_l+m_l)^2 < F_l^{'^2}(\varepsilon _l)\) for each i. Since \(F_l^{'}(\varepsilon ) \asymp F_l(\varepsilon )\), therefore using the order wise dependency from (3.24), we obtain for each i that

$$\begin{aligned} \frac{|{w_l(i)}|}{\sqrt{2\pi }\mu _l} e^{2\pi ^2(s_l + i)^2 \mu _l^2} \lesssim \frac{|{w_l(i)}|}{\mu _l}\left( \frac{K^{3/2}L \mu _L}{\varepsilon _l\mu _l}\right) ^{C(\mu _l,\mu _{l+1},\triangle _l)}, \end{aligned}$$(4.6)where \(C(\mu _l,\mu _{l+1},\triangle _l) > 0\) depends only on \(\mu _l,\mu _{l+1},\triangle _l\). Hence from (4.5), (4.6), we see that (4.3) is satisfied if

$$\begin{aligned} \Vert {w_l}\Vert _{\infty } \lesssim \frac{(\varepsilon _l\mu _l)^{1+C(\mu _l,\mu _{l+1},\triangle _l)}}{\sqrt{K} (K^{3/2} L \mu _L)^{C(\mu _l,\mu _{l+1},\triangle _l)}}; \quad 2 \le l \le L-1. \end{aligned}$$(4.7)In a similar manner, one can easily show that (4.2) is satisfied if

$$\begin{aligned} \Vert {w_1}\Vert _{\infty } \lesssim \frac{(\varepsilon _1\mu _1)^{1+C(\mu _1,\mu _{2},\triangle _1)}}{\sqrt{K} (K^{3/2} L \mu _L)^{C(\mu _1,\mu _{2},\triangle _1)}}. \end{aligned}$$(4.8) - 2.

(Condition on\(\Vert {w_L}\Vert _{\infty }\)) In this case, \(s_L = 0\) and so \((s_L + i)^2 \le m_L^2\). Therefore for each \(i=-m_L,\dots ,m_L-1\), we obtain

$$\begin{aligned} \left| \frac{w_L(i)}{\bar{g}_L(s_L+i)} \right| = \frac{|{w_L(i)}|}{\sqrt{2\pi }\mu _L} e^{2\pi ^2(i)^2 \mu _L^2} \lesssim \frac{|{w_L(i)}|}{\mu _L} e^{2\pi ^2 \mu _L^2/\triangle _L^2}. \end{aligned}$$(4.9)Hence from (4.9), we see that (4.4) is satisfied if

$$\begin{aligned} \Vert {w_L}\Vert _{\infty } \lesssim \mu _L e^{-\mu _L^2/\triangle _L^2} \frac{\varepsilon _L}{\sqrt{K}}. \end{aligned}$$(4.10)

(4.8), (4.7), (4.10) show the conditions that the noise level is required to satisfy at the different levels. From the discussion following Theorem 5, we know that the \(\varepsilon _i\)’s gradually become smaller and smaller as we move from \(i=L\) to \(i=1\) (with \(\varepsilon _1\) being the smallest). Therefore the condition on \(\Vert {w_1}\Vert _{\infty }\) is the strictest, while the condition on \(\Vert {w_L}\Vert _{\infty }\) is the mildest.

Corollary for the Case \(L = 1\). As noted earlier, the case \(L = 1\) is not interesting in the absence of external noise as we can exactly recover the source parameters. The situation is more interesting in the presence of noise as shown in the following Corollary of Theorem 6 for the case \(L=1\).

Corollary 2

For a constant \(c \in (0,1)\), let \(0< \varepsilon _1 < c\triangle _1/2\), \(\frac{2}{\triangle _1 - 2\varepsilon _1} \le m_1 < \frac{2}{\triangle _1(1-c)} + 1\) and \(s_1 = 0\). Moreover, denoting \(C = 10 + \frac{1}{\sqrt{2}}\), assume that the noise satisfies

Then, there exists a permutation \(\phi _1: [K] \rightarrow [K]\) such that for \(j=1,\dots ,K\), we have

Assuming \(u_{\min },u_{\max }\asymp 1\), we see from (4.10) that (4.11) is satisfied if \(\Vert {w_1}\Vert _{\infty } \lesssim \mu _1 e^{-\mu _1^2/\triangle _1^2} \frac{\varepsilon _1}{\sqrt{K}}\).

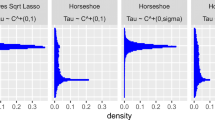

Scatter plots for maximum wrap around error (\(d_{w,l,\max }\)) v/s minimum separation (\(\triangle _l\)) for 400 Monte Carlo trials, with no external noise. This is shown for \(K \in \left\{ {2,3,4,5}\right\} \) with \(L = 4\) and \(C = 0.6\). For each sub-plot, we mention the percentage of trials with \(d_{w,l,\max } \le 0.05\) in parenthesis