Abstract

The guaranteed cost finite-time stability of positive switched fractional-order systems (PSFS) with D-disturbance and impulses is studied based on the \(\Phi \)-dependent average dwell time (\(\Phi \)DADT) strategy. First, the finite-time stability of the system is proved by constructing a linear co-positive Lyapunov function. Second, a guaranteed cost analysis of the system is given, together with an estimated upper bound of the cost. In addition, finite-time certain and robust controllers are designed to stabilize the system. Finally, a numerical example is given to demonstrate the validity of the conclusions.

1 Introduction

Fractional-order systems have found applications in various fields [1, 3,4,5, 8, 11]. In particular, as a special class of fractional-order systems, PSFS have attracted increasing attention, and their stability has been studied extensively. Many papers address the asymptotic stability of PSFS [2, 25], that is, stability over a sufficiently long or infinite time interval. In many cases, however, the system state only needs to remain within a certain range over a finite time interval. This motivated the concept of finite-time stability (FTS), which was first proposed in [21]. The works [13, 24] applied the concept of FTS to PSFS, and [14] later studied the guaranteed cost FTS of PSFS. Guaranteed cost FTS adds a cost requirement to FTS, namely, an upper bound on the cost is determined.

Many factors affect the stability of PSFS, for example, switching signals, external factors, impulsive behavior and unstable subsystems. Reference [24] studied the FTS of PSFS under average dwell time (ADT) switching, and references [15, 18] studied the FTS of PSFS under mode-dependent ADT switching. However, no results are available on the stability of PSFS under the \(\Phi \)DADT strategy [22], which includes both the ADT and mode-dependent ADT schemes as special cases and is more effective than either of them. External factors, such as external disturbances, tool wear and modeling errors, may also affect the stability of PSFS; such factors are often described as D-disturbances [17]. Reference [18] gave results on the guaranteed cost FTS of PSFS with D-disturbances. Impulsive behavior, that is, an instantaneous change of the system state, may also affect the stability of PSFS [9, 12]. Reference [15] studied the FTS of PSFS with impulses occurring only at switching instants, and reference [16] studied the guaranteed cost FTS of PSFS with impulses occurring at arbitrary instants. In addition, it is known that a switched system with unstable subsystems may be stabilized by designing a set of suitable controllers and switching signals. During controller design, small uncertainties can degrade the performance of the designed controller; that is, the fragility of the controller may also affect the stability of the system. References [19, 20] dealt with this uncertainty by designing non-fragile controllers that keep the system performance within a desired bound. The above works consider only one or two of these factors when studying the stability and control of PSFS. To the best of our knowledge, no results are available for the case in which the four factors are considered simultaneously, and this case merits further investigation.

Inspired by the above works, this article studies the guaranteed cost FTS of PSFS with D-disturbance and impulses under the \(\Phi \)DADT strategy. The main contributions are as follows: (1) Four kinds of destabilizing factors, namely switching signals, external factors, impulsive behavior and unstable subsystems, are considered simultaneously for PSFS for the first time. (2) The \(\Phi \)DADT strategy is applied to PSFS for the first time, and better stability results are obtained. (3) The obtained conclusions not only cover most of the existing related results but also address some new situations that have not been considered previously.

The rest of this paper is organized as follows. Section 2 gives some preliminaries. In Sect. 3, guaranteed cost FTS conditions for PSFS with D-disturbance and impulses are given under the \(\Phi \)DADT strategy, and finite-time certain and robust controllers are designed to ensure the stability of the closed-loop PSFS. In Sect. 4, a numerical example is given to demonstrate the validity of the results. Section 5 concludes the paper.

2 Problem Formulation and Preliminaries

In this paper, \(\mathbb {R}\) (\(\mathbb {R}_{+}\)) and \(\mathbb {N}_{+}\) denote the sets of real (positive) numbers and positive integers, respectively. \(\mathbb {R}^{n}\) (\(\mathbb {R}^{n}_{+}\)) and \(\mathbb {R}^{n\times n}\) denote the sets of n-dimensional real (positive) vectors and \(n\times n\) real matrices, respectively. The symbols \(\forall \), \(\in \) and \(\triangleq \) mean “for all”, “belongs to” and “is defined as”, respectively. The notation \(\Longleftrightarrow \) means “if and only if”, while \(\Longrightarrow \) and \(\Longleftarrow \) indicate sufficient and necessary conditions, respectively. The relations \(\succ \), \(\succeq \), \(\prec \) and \(\preceq \) between vectors (or matrices) are understood componentwise; for example, \(x\succ 0\) means that every component of x is positive. A square matrix is called a Metzler matrix if all of its off-diagonal entries are nonnegative. The superscript T denotes the transpose of a vector or matrix, and, unless otherwise stated, matrix dimensions are assumed to be compatible with the algebraic operations. \(M([t_{0},t_{1}])\) and \(C([t_{0},t_{1}])\) denote the spaces of integrable and continuous functions on the time interval \([t_{0},t_{1}]\), respectively, and \(\Gamma (\alpha )\) denotes the Gamma function evaluated at \(\alpha \).

We first recall the fractional integral and the Caputo fractional derivative, as well as some inequalities used in this paper.

Definition 1

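For \(g(t)\in M([t_{0},t])\) and \(\alpha \in (0,1)\), the \(\alpha \)-order (Riemann–Liouville) fractional integral of g(t) is defined as
\[ {}_{t_{0}}I^{\alpha }_{t}g(t)=\frac{1}{\Gamma (\alpha )}\int _{t_{0}}^{t}(t-s)^{\alpha -1}g(s)\,\text {d}s. \]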
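As a numerical illustration of Definition 1, the sketch below approximates the fractional integral with a product-rectangle rule: g is frozen at the left end of each subinterval, while the weakly singular kernel \((t-s)^{\alpha -1}\) is integrated exactly. The function name `rl_integral` and the default step count are our own choices, not part of the paper. The rule is first-order accurate and exact for constant g, for which the \(\alpha \)-order integral of 1 equals \((t-t_{0})^{\alpha }/\Gamma (\alpha +1)\).

```python
import math


def rl_integral(g, t0, t, alpha, n=1000):
    """Approximate the alpha-order fractional integral of g over [t0, t].

    Product-rectangle rule: g is frozen at the left end point of each of the
    n subintervals, and the kernel (t - s)**(alpha - 1) is integrated exactly.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    h = (t - t0) / n
    total = 0.0
    for j in range(n):
        s_left = t0 + j * h
        s_right = t0 + (j + 1) * h
        # alpha times the exact integral of (t - s)**(alpha - 1) over [s_left, s_right];
        # max(..., 0.0) guards against a tiny negative base caused by rounding at s = t
        weight = max(t - s_left, 0.0) ** alpha - max(t - s_right, 0.0) ** alpha
        total += g(s_left) * weight
    # dividing by alpha * Gamma(alpha) is the same as dividing by Gamma(alpha + 1)
    return total / math.gamma(alpha + 1.0)


if __name__ == "__main__":
    # constant input: compare with the exact value (t - t0)**alpha / Gamma(alpha + 1)
    print(rl_integral(lambda s: 1.0, 0.0, 2.0, 0.9))
    print(2.0 ** 0.9 / math.gamma(1.9))
```

For smooth non-constant g the error decreases linearly with the step size, which is adequate for plotting but not for high-accuracy work.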

Definition 2

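For \(g(t)\in M([t_{0},t])\) and \(\alpha \in (0,1)\), the \(\alpha \)-order Caputo fractional derivative of g(t) is defined as
\[ {}^{C}_{t_{0}}D^{\alpha }_{t}g(t)=\frac{1}{\Gamma (1-\alpha )}\int _{t_{0}}^{t}\frac{g'(s)}{(t-s)^{\alpha }}\,\text {d}s. \]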
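Definition 2 can likewise be evaluated numerically. The following sketch uses the standard L1 scheme, which replaces g by its piecewise-linear interpolant on a uniform grid and differentiates that interpolant exactly; `caputo_l1` is a hypothetical helper name. For \(g(t)=t\) and \(t_{0}=0\) the scheme reproduces the exact value \(t^{1-\alpha }/\Gamma (2-\alpha )\) up to rounding, which makes it easy to test.

```python
import math


def caputo_l1(g, t0, t, alpha, n=1000):
    """L1-scheme approximation of the alpha-order Caputo derivative of g at t.

    g is replaced by its piecewise-linear interpolant on a uniform grid with n
    steps over [t0, t]; the Caputo derivative of that interpolant is exact.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    h = (t - t0) / n
    acc = 0.0
    for j in range(n):
        # weight of the increment that lies j steps back from t
        b_j = (j + 1) ** (1.0 - alpha) - j ** (1.0 - alpha)
        increment = g(t0 + (n - j) * h) - g(t0 + (n - j - 1) * h)
        acc += b_j * increment
    return acc / (math.gamma(2.0 - alpha) * h ** alpha)


if __name__ == "__main__":
    # g(t) = t: compare with the exact value t**(1 - alpha) / Gamma(2 - alpha)
    print(caputo_l1(lambda s: s, 0.0, 2.0, 0.9))
    print(2.0 ** 0.1 / math.gamma(1.1))
```

Every history increment contributes to the sum, which reflects the memory property of the Caputo derivative.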

Lemma 1

(Gronwall–Bellman inequality) Assume \(M\ge 0\) and that the nonnegative functions \(u(t),v(t)\in C([t_{0},t_{1}])\) satisfy \(u(t)\le M+\int _{t_{0}}^{t}u(s)v(s)\text {d}s\), \(t\in [t_{0},t_{1}]\). Then
\[ u(t)\le M\exp \Big (\int _{t_{0}}^{t}v(s)\text {d}s\Big ),\quad t\in [t_{0},t_{1}]. \]

Lemma 2

(\(C_{p}\) inequality) For \(\alpha \in (0,1)\) and any \(z_{i}\in \mathbb {R}_{+},i=1,2,\cdots ,k\), then

Lemma 3

(Young’s inequality) For \(\forall \) \(g,h\in \mathbb {R_{+}}\) and \(x,z\in \mathbb {R}\), then

Consider the following PSFS

where \(z(t)\in \mathbb {R}^{n}\) and \(u(t)\in \mathbb {R}^{m}\) denote the system state and control input, respectively, and \(t_{0}=0\) is the initial time. \(\delta (t):[t_{0},+\infty )\to S=\{1,2,\cdots ,s\}\) is the switching signal, and the impulsive signal \(\varrho (t)\) takes values in \(H=\{1,2,\cdots ,h\}\), where \(s,h\in \mathbb {N}_{+}\) are the numbers of subsystems and impulse modes, respectively. The perturbations satisfy \(D_{i}\in [\underline{D}_{i},{\overline{D}}_{i}]\) \((i=1,2,3)\), where \({\overline{D}}_{i}\succeq {\underline{D}}_{i}\succeq 0\) and the matrices \({\overline{D}}_{i},\underline{D}_{i}\) are diagonal. \(A_{p},B_{p}\) (\(\forall p\in S\)) and \(C_{r}\) (\(\forall r\in H\)) are constant matrices. The sequence \(t_{0},t_{1},\cdots ,t_{k},t_{k+1},\cdots \) denotes the switching instants on \([t_{0},\infty )\). On each interval \([t_{k},t_{k+1})\), \(t_{k,i}\) \((i=0,1,2,\cdots ,g_{k})\) denotes the ith impulsive instant, with \(t_{k}=t_{k,0}\le t_{k,1}<t_{k,2}<\cdots < t_{k,g_{k}}<t_{k+1}\).
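The indexing convention for the impulsive instants \(t_{k,i}\) can be made concrete with a small helper. In this sketch, `impulse_schedule` is a hypothetical name, the instants are assumed to be sorted, and an impulse that coincides with a switching instant \(t_{k}\) is recorded as \(t_{k,1}=t_{k}\), which the relation \(t_{k,0}\le t_{k,1}\) allows.

```python
from bisect import bisect_right


def impulse_schedule(switch_times, impulse_times):
    """Group impulsive instants by the switching interval [t_k, t_{k+1}) containing them.

    switch_times: strictly increasing switching instants t_0 < t_1 < ... (t_0 is
    the initial time); the last interval is taken to be unbounded.
    impulse_times: increasing impulsive instants.
    Returns a list whose k-th entry is [t_{k,0}, t_{k,1}, ..., t_{k,g_k}] with
    t_{k,0} = t_k, so g_k = len(entry) - 1 is the number of impulses in the interval.
    """
    schedule = [[t_k] for t_k in switch_times]
    for tau in impulse_times:
        k = bisect_right(switch_times, tau) - 1  # largest k with t_k <= tau
        if k < 0:
            raise ValueError("impulse occurs before the initial time t_0")
        schedule[k].append(tau)
    return schedule


if __name__ == "__main__":
    # hypothetical data: switchings at 0, 2, 5 and impulses at 1, 2, 2.5, 6
    print(impulse_schedule([0.0, 2.0, 5.0], [1.0, 2.0, 2.5, 6.0]))
```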

Remark 1

In system (1), \(\delta (t)\) and \(\varrho (t)\) are the switching signal and the impulsive signal, respectively. If they are the same (i.e., impulses only occur at switching time), then system (1) only considers the impact of impulse caused by subsystem switching. In this paper, they are different, that is, impulses can occur at any time. Obviously, we consider a more general form of impulsive signal that includes the former.

Lemma 4

[15] The system (1) is positive \(\Longleftrightarrow \) \(\forall m\in S, r\in H,\) \(D_{1}A_{m}\) are Metzler matrices, \(D_{2}B_{m}\succeq 0\) and \(D_{3}C_{r}\succeq 0\).

Let \(\widetilde{A}_{m}=\overline{D}_{1}A_{m},\widetilde{B}_{m}= \overline{D}_{2}B_{m},\widetilde{C}_{r}=\overline{D}_{3}C_{r}\). Then consider the following system:

Remark 2

According to Lemma 4, system (2) is positive \(\Longleftrightarrow \) for all \(m\in S\) and \(r\in H\), \({\widetilde{A}}_{m}\) are Metzler matrices, \({\widetilde{B}}_{m}\succeq 0\) and \(\widetilde{C}_{r}\succeq 0\). Hence, the stability of system (1) can be analyzed by studying system (2).
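The positivity conditions of Remark 2 are easy to check mechanically. The sketch below forms \(\overline{D}_{1}A_{m}\), \(\overline{D}_{2}B_{m}\) and \(\overline{D}_{3}C_{r}\) from the diagonals of the upper bounds and tests their sign patterns. Matrices are plain nested lists, the helper names are hypothetical, and the example data are made up rather than taken from Sect. 4.

```python
def is_metzler(a):
    """True if every off-diagonal entry of the square matrix a is nonnegative."""
    n = len(a)
    return all(a[i][j] >= 0 for i in range(n) for j in range(n) if i != j)


def is_nonnegative(m):
    """True if every entry of m is nonnegative (m need not be square)."""
    return all(x >= 0 for row in m for x in row)


def diag_times(d, m):
    """Left-multiply m by diag(d), i.e. scale row i of m by d[i]."""
    return [[d[i] * x for x in row] for i, row in enumerate(m)]


def system2_is_positive(d1_bar, d2_bar, d3_bar, a_list, b_list, c_list):
    """Positivity test of Remark 2 for system (2).

    d1_bar, d2_bar, d3_bar: diagonals of the upper bounds of D_1, D_2, D_3;
    a_list, b_list: subsystem matrices A_m, B_m (m in S);
    c_list: impulse matrices C_r (r in H).
    """
    a_ok = all(is_metzler(diag_times(d1_bar, a)) for a in a_list)
    b_ok = all(is_nonnegative(diag_times(d2_bar, b)) for b in b_list)
    c_ok = all(is_nonnegative(diag_times(d3_bar, c)) for c in c_list)
    return a_ok and b_ok and c_ok


if __name__ == "__main__":
    a1 = [[-2.0, 1.0], [0.5, -3.0]]   # hypothetical A_1
    b1 = [[1.0], [0.2]]               # hypothetical B_1
    c1 = [[0.8, 0.0], [0.1, 0.9]]     # hypothetical C_1
    print(system2_is_positive([1.2, 0.9], [1.0, 1.1], [1.0, 1.0], [a1], [b1], [c1]))
```

Because the bounds are positive diagonal matrices, scaling rows does not change sign patterns; the products are formed anyway so the code mirrors the definitions of \({\widetilde{A}}_{m}\), \({\widetilde{B}}_{m}\) and \(\widetilde{C}_{r}\).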

Now, we present the \(\Phi \)DADT switching strategy required for this article. Let \(\mathcal {O}=\{1,2,\cdots ,u\}\), where \(u\in \mathbb {N}_{+}\) and \(u\le s\). Define the mapping \(\Phi :S\mapsto \mathcal {O}\) to be surjective and \(\Phi _{i}=\{p\in S|\Phi (p)=i\}\).
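A grouping map \(\Phi \) can be stored as a dictionary from subsystem indices to group labels. The following sketch (the function name is hypothetical) builds the groups \(\Phi _{i}\) and rejects maps that are not defined on all of S or are not surjective onto \(\mathcal {O}\). The two extreme groupings are a constant map (a single group, \(\mathcal {O}=\{1\}\)) and a one-to-one map (\(\mathcal {O}=S\), each subsystem forming its own group).

```python
def phi_groups(phi, num_subsystems, num_groups):
    """Return {i: Phi_i} for a grouping map Phi: S -> O given as a dict.

    S = {1, ..., s} and O = {1, ..., u}; a ValueError is raised if phi is not
    defined on all of S, takes values outside O, or is not surjective.
    """
    groups = {i: set() for i in range(1, num_groups + 1)}
    for p in range(1, num_subsystems + 1):
        if p not in phi or phi[p] not in groups:
            raise ValueError(f"Phi is not a map from S into O at p={p}")
        groups[phi[p]].add(p)
    if any(not members for members in groups.values()):
        raise ValueError("Phi is not surjective onto O")
    return groups


if __name__ == "__main__":
    # hypothetical grouping of three subsystems into two groups
    print(phi_groups({1: 1, 2: 1, 3: 2}, 3, 2))
```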

Definition 3

[22] For a given switching signal \(\delta (t)\) and any \(t_{2}\ge t_{1}\ge 0\), let \(N_{\delta \Phi _{i}}(t_{1},t_{2})\) denote the total number of switchings that activate subsystems in group \(\Phi _{i}\) over \([t_{1},t_{2})\), and let \(T_{\Phi _{i}}(t_{1},t_{2})\) denote the total running time of the subsystems in group \(\Phi _{i}\) over \([t_{1},t_{2})\). If there exist positive constants \(N_{0\Phi _{i}}\) and \(\tau _{l\Phi _{i}}\) such that
\[ N_{\delta \Phi _{i}}(t_{1},t_{2})\le N_{0\Phi _{i}}+\frac{T_{\Phi _{i}}(t_{1},t_{2})}{\tau _{l\Phi _{i}}},\quad \forall i\in \mathcal {O}, \]

then we say \(\delta (t)\) has a \(\Phi \)DADT \(\tau _{l\Phi _{i}}\).
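To see how Definition 3 is evaluated, the sketch below computes the per-group switching numbers \(N_{\delta \Phi _{i}}(t_{1},t_{2})\) and running times \(T_{\Phi _{i}}(t_{1},t_{2})\) for a given switching sequence and tests \(N_{\delta \Phi _{i}}\le N_{0\Phi _{i}}+T_{\Phi _{i}}/\tau _{l\Phi _{i}}\). Assumptions: the condition has this usual ADT-type form; the initial activation at \(t_{0}\) is not counted as a switching; and only one window \([t_{1},t_{2})\) is checked, whereas the definition requires the bound for every window. All names and data are hypothetical.

```python
def group_statistics(switching, phi, t1, t2):
    """Per-group switching counts N_i and running times T_i over [t1, t2).

    switching: list of (t_k, p_k) with t_0 < t_1 < ...; subsystem p_k is active
    on [t_k, t_{k+1}) and the last one stays active forever. Switchings are the
    instants t_k with k >= 1 (the initial activation at t_0 is not counted).
    phi: dict mapping each subsystem to its group label.
    """
    counts, times = {}, {}
    for k, (t_k, p_k) in enumerate(switching):
        i = phi[p_k]
        counts.setdefault(i, 0)
        times.setdefault(i, 0.0)
        if k >= 1 and t1 <= t_k < t2:
            counts[i] += 1
        t_next = switching[k + 1][0] if k + 1 < len(switching) else float("inf")
        overlap = min(t_next, t2) - max(t_k, t1)
        if overlap > 0:
            times[i] += overlap
    return counts, times


def satisfies_phi_dadt(switching, phi, tau, t1, t2, n0=None):
    """Check N_i <= N_0i + T_i / tau_i on [t1, t2) for every group i that occurs.

    n0 defaults to N_0i = 0 for all groups, as in Remark 3.
    """
    counts, times = group_statistics(switching, phi, t1, t2)
    n0 = n0 or {}
    return all(counts[i] <= n0.get(i, 0.0) + times[i] / tau[i] for i in counts)


if __name__ == "__main__":
    sw = [(0.0, 1), (2.0, 3), (3.0, 2), (6.0, 1)]   # hypothetical switching sequence
    phi = {1: 1, 2: 1, 3: 2}                         # hypothetical grouping
    print(group_statistics(sw, phi, 0.0, 8.0))
    print(satisfies_phi_dadt(sw, phi, {1: 3.0, 2: 1.0}, 0.0, 8.0))
```

A switching between two subsystems of the same group still counts as a switching of that group, which is why grouping can relax the condition compared with a per-subsystem count.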

Definition 4

[16] For an impulsive signal \(\varrho (t)\) and any \(r_{2}\ge r_{1}\ge 0\), let \(M_{\varrho }(r_{1},r_{2})\) denote the number of impulses over the interval \([r_{1},r_{2})\), and let \(T(r_{1},r_{2})\) denote the running time over \([r_{1},r_{2})\). If there exist positive constants \(M_{0}\) and \(T_{l}\) such that

then \(\varrho (t)\) is said to have an average impulsive interval \(T_{l}\).
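Definition 4 can be checked in the same way. This sketch assumes the one-sided form \(M_{\varrho }(r_{1},r_{2})\le M_{0}+T(r_{1},r_{2})/T_{l}\), which is the direction that enters the factor \(\exp \{T_{i}\ln \omega /T_{l}\}\) in the cost estimate of Theorem 1. The helper names are hypothetical, and only a single window is tested.

```python
def impulse_count(impulse_times, r1, r2):
    """M_rho(r1, r2): number of impulsive instants in [r1, r2)."""
    return sum(1 for t in impulse_times if r1 <= t < r2)


def within_average_impulsive_interval(impulse_times, t_l, r1, r2, m0=0.0):
    """Assumed one-sided bound M_rho(r1, r2) <= M_0 + (r2 - r1) / T_l."""
    return impulse_count(impulse_times, r1, r2) <= m0 + (r2 - r1) / t_l


if __name__ == "__main__":
    impulses = [1.0, 3.0, 5.0, 7.0]   # hypothetical impulsive instants
    print(within_average_impulsive_interval(impulses, 2.0, 0.0, 8.0))
```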

Remark 3

Since the values of \(N_{0\Phi _{i}}\) and \(M_{0}\) generally do not affect the conclusions, we set \(N_{0\Phi _{i}}=0\) and \(M_{0}=0\) for computational convenience.

Definition 5

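[15] For a given time constant \(T_{f}\) and two vectors \(\sigma \succ \varepsilon \succ 0\), system (2) is said to be finite-time stable (FTS) with respect to \((\sigma ,\varepsilon ,T_{f},\delta (t))\) if
\[ z^{\textrm{T}}(t_{0})\sigma \le 1\Longrightarrow z^{\textrm{T}}(t)\varepsilon <1,\quad \forall t\in [t_{0},T_{f}]. \]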
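A sampled trajectory can be tested against Definition 5. The sketch assumes the standard form of the definition, \(z^{\textrm{T}}(t_{0})\sigma \le 1\Longrightarrow z^{\textrm{T}}(t)\varepsilon <1\) for all \(t\in [t_{0},T_{f}]\), which matches the premise \(z^{\textrm{T}}(t_{0})\sigma \le 1\) used in the proof of Theorem 1. Checking finitely many samples can refute FTS but cannot prove it; the function names and data are hypothetical.

```python
def dot(x, y):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(x, y))


def fts_on_samples(z_samples, sigma, eps):
    """Test the (assumed) FTS implication of Definition 5 on sampled states.

    z_samples[0] is z(t_0); the remaining entries are samples of z(t) on [t_0, T_f].
    Returns None if the premise z(t_0)^T sigma <= 1 fails (nothing to check),
    True if z(t)^T eps < 1 at every sample, and False otherwise.
    """
    if not all(s > e > 0 for s, e in zip(sigma, eps)):
        raise ValueError("Definition 5 requires sigma > eps > 0 componentwise")
    if dot(z_samples[0], sigma) > 1.0:
        return None
    return all(dot(z, eps) < 1.0 for z in z_samples)


if __name__ == "__main__":
    sigma, eps = [2.0, 2.0], [0.5, 0.5]                  # hypothetical vectors
    samples = [[0.2, 0.2], [0.5, 0.4], [0.9, 0.9]]       # hypothetical trajectory
    print(fts_on_samples(samples, sigma, eps))
```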

Finally, the cost function considered in this paper is defined as follows:

3 Main Results

3.1 Guaranteed Cost Finite-Time Stability Analysis

This subsection gives a guaranteed cost FTS result for PSFS (2) with \(u(t)=0\) under \(\Phi \)DADT switching.

Theorem 1

Consider system (2) with average impulsive interval \(T_{l}\). If there exist positive constants \(\zeta _{1}>\zeta _{2}\), \(T_{i}\), \(\lambda _{i}>1\) and \(\mu _{i}\ge 1\) \((i\in \mathcal {O})\), a constant \(\omega \ge 1\), positive vectors \(v_{p}\) \((p\in S)\), and vectors \(\sigma \succ \varepsilon \succ 0\) and \(R_{1}\succ 0\) such that, for all \(p\in S\) and \(r\in H\) with \(\Phi (p)=i\),

then system (2) with \(u(t)=0\) is FTS with respect to \((\sigma ,\varepsilon ,T_{f},\delta (t))\) under any switching signal with \(\Phi \)DADT satisfying

and the system cost satisfies

Proof

First, we prove that PSFS (2) is FTS.

Construct the candidate switching Lyapunov function

For \(t\in [t_{k,g_{k}},t_{k+1})\), it follows from (2) that

Combining this with (3), one gets

At \(t=t_{k,g_{k}}\), it follows from (2) and (5) that

At \(t=t_{k}\), it follows from (6) that

For \(t\in [t_{k,g_{k}},t_{k+1})\), taking the \(\alpha \)-order fractional integral of both sides of (13) over \([t_{k,g_{k}},t]\) gives

Together with (7) and (11), (16) can be rewritten as

Applying the Gronwall–Bellman inequality (Lemma 1), we obtain

Then, for \(t\in [t_{0},T_{f}]\), it follows from (14) and (18) that

Using (15) again, we obtain

where \(M_{i}\triangleq M_{\varrho \Phi _{i}}(t_{0},T_{f})\) and \(N_{i}\triangleq N_{\delta \Phi _{i}}(t_{0},T_{f})\), so that \(\sum _{i\in \mathcal {O}}M_{i}=M_{\varrho }\) and \(\sum _{i\in \mathcal {O}}N_{i}=N_{\delta }\).

From Lemmas 2 and 3, (20) can be rewritten as

where \(T_{i}\triangleq T_{\Phi _{i}}(t_{0},T_{f})\), so that \(\sum _{i\in \mathcal {O}}T_{i}=T_{f}\). Then, from Definitions 3 and 4, we have

From (4) and (11), it further follows that

and

Substituting (9) into (25), we obtain, whenever \(z^{T}(t_{0})\sigma \le 1\),

Therefore, by Definition 5, system (2) is FTS with respect to \((\sigma ,\varepsilon ,T_{f},\delta (t))\).

Next, we prove the guaranteed cost property of PSFS (2).

According to (14) and (16), we can derive

Iterating this procedure, we have

Therefore, we further obtain

From (24), letting \(P=\zeta _{2}\,z^{\textrm{T}}(t_{0})\sigma \,\exp \Big \{\sum _{i\in \mathcal {O}}\Big [\frac{\ln \omega }{T_{l}}T_{i}+\frac{\ln {\mu _{i}}}{\tau _{l\Phi _{i}}}T_{i}+\frac{\lambda _{i}-1}{\Gamma (\alpha +1)}\Big ((1-\alpha )\Big (\frac{T_{i}}{T_{l}}+\frac{T_{i}}{\tau _{l\Phi _{i}}}+1\Big )+\alpha T_{i}\Big )\Big ]\Big \}\), we know that \(V_{\delta (t)}(t,z(t))\le P\). Therefore, (29) can be rewritten as

Since \(V_{\delta (t)}>0\), it follows that

Letting \(\lambda =\max _{i\in \mathcal {O}}\{\lambda _{i}\}\), \(\mu =\max _{i\in \mathcal {O}}\{\mu _{i}\}\) and \(\tau _{l}=\min _{i\in \mathcal {O}}\{\tau _{l\Phi _{i}}\}\), we obtain

where \(W_{i}\triangleq (1-\alpha )\left( \frac{T_{i}}{T_{l}}+\frac{T_{i}}{\tau _{l}}+1\right) +\alpha T_{i}\) and \(W_{f}\triangleq (1-\alpha )\left( \frac{T_{f}}{T_{l}} +\frac{T_{f}}{\tau _{l}}+1\right) +\alpha T_{f}.\) \(\square \)
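The bracketed terms \((1-\alpha )(\cdot )+\alpha T_{i}\) in P and \(W_{i}\) can be traced to two elementary facts. The derivation below sketches one route to them; it is not a transcription of the omitted steps. It assumes that on each flow piece of length \(\Delta \) between consecutive switching or impulsive instants the Lyapunov function grows at most by the factor \(\exp \{(\lambda _{i}-1)\Delta ^{\alpha }/\Gamma (\alpha +1)\}\), and that the impulse count of each group obeys \(M_{i}\le T_{i}/T_{l}\).

```latex
% (a) alpha-order fractional integral of a constant c over [a, t], with \Delta = t - a:
\frac{1}{\Gamma(\alpha)}\int_{a}^{t}(t-s)^{\alpha-1}c\,\mathrm{d}s=\frac{c\,\Delta^{\alpha}}{\Gamma(\alpha+1)}
% (b) weighted AM-GM (Young) inequality with the second argument equal to 1:
\Delta^{\alpha}=\Delta^{\alpha}\cdot 1^{1-\alpha}\le \alpha\Delta+(1-\alpha),\qquad \Delta\ge 0,\ \alpha\in(0,1)
% (c) split the running time T_i of group \Phi_i into n_i flow pieces
%     \Delta_{i,1},\dots,\Delta_{i,n_i} and sum (b) over the pieces (N_{0\Phi_i}=M_0=0):
\sum_{j=1}^{n_i}\Delta_{i,j}^{\alpha}\le(1-\alpha)\,n_i+\alpha T_i,
\qquad n_i\le N_i+M_i+1\le\frac{T_i}{\tau_{l\Phi_i}}+\frac{T_i}{T_l}+1
% (d) hence the flow part of the exponent contributed by group \Phi_i is at most
\frac{\lambda_i-1}{\Gamma(\alpha+1)}\sum_{j=1}^{n_i}\Delta_{i,j}^{\alpha}
\le\frac{\lambda_i-1}{\Gamma(\alpha+1)}\Big[(1-\alpha)\Big(\frac{T_i}{T_l}+\frac{T_i}{\tau_{l\Phi_i}}+1\Big)+\alpha T_i\Big]
```

Step (b) is where the fractional order enters linearly: the concave map \(\Delta \mapsto \Delta ^{\alpha }\) is bounded by its tangent line at \(\Delta =1\), so each flow piece costs at most a constant \(1-\alpha \) plus a term proportional to its length.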

Remark 4

Taking \(\mathcal {O}=\{1\}\) (all subsystems in a single group) and \(\mathcal {O}=S\) (each subsystem forming its own group) in Theorem 1, one obtains the guaranteed cost FTS conditions of PSFS (2) under the ADT strategy and the mode-dependent ADT strategy, respectively.
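Remark 4 can be illustrated by evaluating the bound P from the proof of Theorem 1 for different groupings. The sketch reads the sum in P as running over the groups \(i\in \mathcal {O}\) with all \(T_{i}\)-dependent terms inside it, and sets \(N_{0\Phi _{i}}=M_{0}=0\) as in Remark 3. The function name and the values of \(\lambda _{i}\), \(\mu _{i}\), \(\tau _{l\Phi _{i}}\) and \(T_{i}\) in the usage lines are hypothetical; \(\alpha \), \(\omega \), \(T_{l}\) and \(\zeta _{2}\) are those of Sect. 4.

```python
import math


def cost_bound_p(z0_sigma, zeta2, alpha, omega, t_l, groups):
    """Evaluate the bound P on V(t, z(t)) from the proof of Theorem 1.

    Assumptions: N_0 = M_0 = 0 (Remark 3) and the sum in P runs over the groups
    i in O with every T_i-dependent term inside it.
    groups maps i to a dict with keys
      "T":   running time T_i of group Phi_i on [t_0, T_f],
      "lam": lambda_i > 1,  "mu": mu_i >= 1,  "tau": tau_{l Phi_i} > 0.
    """
    exponent = 0.0
    for g in groups.values():
        t_i, lam, mu, tau = g["T"], g["lam"], g["mu"], g["tau"]
        exponent += math.log(omega) / t_l * t_i          # term with the impulse gain omega
        exponent += math.log(mu) / tau * t_i             # term with the switching gain mu_i
        bracket = (1 - alpha) * (t_i / t_l + t_i / tau + 1) + alpha * t_i
        exponent += (lam - 1) / math.gamma(alpha + 1) * bracket  # term with lambda_i
    return zeta2 * z0_sigma * math.exp(exponent)


if __name__ == "__main__":
    common = dict(alpha=0.9, omega=1.6, t_l=2.0, z0_sigma=1.0, zeta2=1.0)
    # O = {1}: one group running for the whole horizon T_f = 8 (ADT case)
    adt = {1: {"T": 8.0, "lam": 1.05, "mu": 1.2, "tau": 4.0}}
    # O = S: three singleton groups sharing the horizon (mode-dependent ADT case)
    mdadt = {p: {"T": 8.0 / 3, "lam": 1.05, "mu": 1.2, "tau": 4.0} for p in (1, 2, 3)}
    print("P (ADT):  ", cost_bound_p(groups=adt, **common))
    print("P (MDADT):", cost_bound_p(groups=mdadt, **common))
```

Intermediate groupings such as \(\mathcal {O}=\{1,2\}\) are evaluated the same way, by passing one dictionary entry per group.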

3.2 Design of Finite-Time (Non-fragile) Controller

We first design finite-time controllers for system (2).

Under the state-feedback controller \(u(t)=K_{\delta (t)}z(t)\), the closed-loop system is given by

Theorem 2

Consider system (34) with average impulsive interval \(T_{l}\). Suppose that, for \(p\in S\), \(r\in H\) and \(\Phi (p)=i\in \mathcal {O}\), there exist positive constants \(\zeta _{1}>\zeta _{2}\), \(T_{i}\), \(\lambda _{i}>1\), \(\mu _{i}\ge 1\) and \(\omega \ge 1\), positive vectors \(v_{p}\), and vectors \(\sigma \succ \varepsilon \succ 0\), \(R_{1}\succ 0\) and \(R_{2}\succ 0\) such that (4)-(6), (8) and the following inequalities hold:

where \(g_{p}=K^{\textrm{T}}_{p}{\widetilde{B}}^{\textrm{T}}_{p}v_{p}\). Then system (34) is FTS with respect to \((\sigma ,\varepsilon ,T_{f},\delta (t))\) under any switching signal with \(\Phi \)DADT satisfying (9), and the cost satisfies

Proof

The positivity of system (34) follows from Lemma 4 and (35). Replacing \({\widetilde{A}}_{p}\) in (3) with \(\widetilde{A}_{p}+{\widetilde{B}}_{p}K_{p}\) and applying Theorem 1, we conclude that system (34) is FTS with the cost (38). \(\square \)

Next, consider the non-fragile controller \(u(t)=(K_{\delta (t)}+\Delta K_{\delta (t)})z(t)\); the resulting closed-loop system is

where \(\Delta K_{\delta (t)}\) are uncertain matrices satisfying \(\Delta K_{\delta (t)}\in [\overline{K}_{1},\overline{K}_{2}]\), with known matrices \(\overline{K}_{1}\) and \(\overline{K}_{2}\).
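Positivity of the closed-loop system (34) hinges on \(\widetilde{A}_{p}+{\widetilde{B}}_{p}K_{p}\) being a Metzler matrix. The following sketch forms this matrix for a candidate gain and checks its off-diagonal signs. The helper names and data are hypothetical, and the remaining conditions of Theorem 2 are not checked here.

```python
def mat_add(a, b):
    """Entrywise sum of two equally sized matrices (nested lists)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mat_mul(a, b):
    """Matrix product a @ b for nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def is_metzler(m):
    """True if every off-diagonal entry of the square matrix m is nonnegative."""
    n = len(m)
    return all(m[i][j] >= 0 for i in range(n) for j in range(n) if i != j)


def closed_loop(a_tilde, b_tilde, k):
    """Closed-loop matrix A~_p + B~_p K_p of system (34) under u(t) = K_p z(t)."""
    return mat_add(a_tilde, mat_mul(b_tilde, k))


if __name__ == "__main__":
    a_tilde = [[-1.0, 0.2], [0.3, -2.0]]   # hypothetical A~_p
    b_tilde = [[1.0], [0.0]]               # hypothetical B~_p (nonnegative)
    k = [[-0.5, 0.1]]                      # hypothetical gain K_p
    m = closed_loop(a_tilde, b_tilde, k)
    print(m, is_metzler(m))
```

A negative gain entry can make an off-diagonal element negative, so the Metzler requirement constrains the admissible gains even when \({\widetilde{A}}_{p}\) itself is Metzler.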

Theorem 3

Consider system (39) with average impulsive interval \(T_{l}\). Suppose that there exist positive constants \(\zeta _{1}>\zeta _{2}\), positive vectors \(v_{p}\) \((p\in S)\), positive constants \(T_{i}\), \(\lambda _{i}>1\) and \(\mu _{i}\ge 1\) \((i\in \mathcal {O})\), a constant \(\omega \ge 1\), and vectors \(\sigma \succ \varepsilon \succ 0\), \(R_{1}\succ 0\) and \(R_{2}\succ 0\) such that, for all \(p\in S\) and \(r\in H\) with \(\Phi (p)=i\), (4)-(6), (8) and the following inequalities hold:

where \(g_{p}=K^{\textrm{T}}_{p}{\widetilde{B}}^{\textrm{T}}_{p}v_{p}\). Then system (39) is FTS with respect to \((\sigma ,\varepsilon ,T_{f},\delta (t))\) under any switching signal with \(\Phi \)DADT satisfying (9), and the system cost satisfies (38).

Proof

Since \(\Delta K_{\delta (t)}\in [\overline{K}_{1},\overline{K}_{2}]\) and \({\widetilde{B}}_{p}\succeq 0\), we have, entrywise, \({\widetilde{A}}_{p}+{\widetilde{B}}_{p}(K_{p}+\overline{K}_{1})\le {\widetilde{A}}_{p}+{\widetilde{B}}_{p}(K_{p}+\Delta K_{p})\le \widetilde{A}_{p}+{\widetilde{B}}_{p}(K_{p}+\overline{K}_{2})\). From (40) and (41), the matrices \({\widetilde{A}}_{p}+{\widetilde{B}}_{p}(K_{p}+\Delta K_{p})\) are also Metzler, so system (39) is positive. Since \(\widetilde{A}_{p}^{\textrm{T}}v_{p}+g_{p}+\Delta K^{\textrm{T}}_{p}\widetilde{B}^{\textrm{T}}_{p} v_{p}+R_{1}+K^{\textrm{T}}_{p}R_{2}+\Delta K^{\textrm{T}}_{p}R_{2} \preceq \widetilde{A}_{p}^{\textrm{T}}v_{p}+g_{p}+\overline{K}^{\textrm{T}}_{2} \widetilde{B}^{\textrm{T}}_{p}v_{p}+R_{1} +K^{\textrm{T}}_{p}R_{2}+\overline{K}^{\textrm{T}}_{2}R_{2}\), the conditions of the theorem give \({\widetilde{A}}_{p}^{\textrm{T}}v_{p}+g_{p}+\Delta K^{\textrm{T}}_{p}\widetilde{B}^{\textrm{T}}_{p} v_{p}+R_{1}+K^{\textrm{T}}_{p}R_{2}+\Delta K^{\textrm{T}}_{p}R_{2}\preceq \lambda _{i}v_{p}\). Similarly, since \(R_{1}+K^{\textrm{T}}_{p}R_{2}+\overline{K}^{\textrm{T}}_{1}R_{2}\preceq R_{1}+K^{\textrm{T}}_{p}R_{2}+\Delta K^{\textrm{T}}_{p}R_{2}\), we obtain \(v_{p}\prec R_{1}+K^{\textrm{T}}_{p}R_{2}+\Delta K^{\textrm{T}}_{p}R_{2}\). Replacing \(K_{p}\) in (36) and (37) with \(K_{p}+\Delta K_{p}\) and applying Theorem 2, we conclude that system (39) is FTS with the cost (38). \(\square \)
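The first step of the proof of Theorem 3 relies on monotonicity: because \({\widetilde{B}}_{p}\succeq 0\), every admissible \(\Delta K_{p}\in [\overline{K}_{1},\overline{K}_{2}]\) gives a closed-loop matrix lying entrywise between the two vertex matrices, so the Metzler property of the lower vertex carries over to the whole interval. The sketch below checks the lower vertex and confirms the entrywise sandwich by random sampling; the function names, data and sample count are hypothetical.

```python
import random


def mat_add(a, b):
    """Entrywise sum of two equally sized matrices (nested lists)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def mat_mul(a, b):
    """Matrix product a @ b for nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def is_metzler(m):
    """True if every off-diagonal entry of the square matrix m is nonnegative."""
    n = len(m)
    return all(m[i][j] >= 0 for i in range(n) for j in range(n) if i != j)


def entrywise_leq(a, b, tol=1e-12):
    """True if a <= b entrywise (up to a small rounding tolerance)."""
    return all(x <= y + tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def robustly_metzler(a_t, b_t, k, k_lo):
    """With B~_p >= 0, the lower vertex A~_p + B~_p (K_p + K1) bounds every
    admissible closed-loop matrix from below, so its Metzler property suffices."""
    return is_metzler(mat_add(a_t, mat_mul(b_t, mat_add(k, k_lo))))


def sampled_bounds_hold(a_t, b_t, k, k_lo, k_hi, trials=200, seed=0):
    """Sample Delta K_p uniformly in [K1, K2] and check the entrywise sandwich."""
    rng = random.Random(seed)
    lower = mat_add(a_t, mat_mul(b_t, mat_add(k, k_lo)))
    upper = mat_add(a_t, mat_mul(b_t, mat_add(k, k_hi)))
    for _ in range(trials):
        dk = [[rng.uniform(lo, hi) for lo, hi in zip(rl, rh)]
              for rl, rh in zip(k_lo, k_hi)]
        m = mat_add(a_t, mat_mul(b_t, mat_add(k, dk)))
        if not (entrywise_leq(lower, m) and entrywise_leq(m, upper)):
            return False
    return True


if __name__ == "__main__":
    a_t = [[-2.0, 0.1], [0.2, -3.0]]   # hypothetical A~_p
    b_t = [[1.0], [0.5]]               # hypothetical B~_p >= 0
    k = [[-0.3, 0.05]]                 # hypothetical K_p
    k1, k2 = [[-0.05, -0.02]], [[0.05, 0.02]]
    print(robustly_metzler(a_t, b_t, k, k1), sampled_bounds_hold(a_t, b_t, k, k1, k2))
```

Sampling only illustrates the sandwich; the argument itself needs no sampling, since it follows from \({\widetilde{B}}_{p}\succeq 0\) alone.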

Remark 5

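The conditions in Theorems 1-3 contain products of unknown variables, for example, \(\lambda _{i}v_{p}\) and \(g_{p}=K^{\textrm{T}}_{p}{\widetilde{B}}^{\textrm{T}}_{p}v_{p}\), so they are nonlinear in the unknowns \(\lambda _{i}, v_{p}, K_{p}\) and \(g_{p}\). They can be solved by the following steps.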

Step 1: Based on the cost function under consideration, one can determine positive constant \(T_{i}\) and vectors \(\sigma \), \(\varepsilon \), \(R_1\), \(R_2\).

Step 2: Based on the system’s information, one can directly calculate the matrices \({\widetilde{A}}_p\), \({\widetilde{B}}_p\), \({\widetilde{C}}_r\), \(\overline{K}_{1}\), \(\overline{K}_{2}\).

Step 3: Select appropriate values of the parameters \(\zeta _{1}, \zeta _{2}, T_{l}\), \(\alpha \), \(\lambda _i\), \(\mu _i\) and \(\omega \).

Step 4: By adjusting the parameters \(\lambda _i\), \(\mu _i\) and \(\omega \) and solving the inequalities in Theorem 1 via linear programming, obtain the solutions \(v_p\) and \(\tau ^{*}_{l\Phi _{i}}\).

Step 5: By solving the inequalities in Theorem 2 or 3, obtain \(g_p\) and \(K_{p}\).
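Step 4 can be sketched for one family of conditions. Setting \(K_{p}=\Delta K_{p}=0\) in the inequality used in the proof of Theorem 3 gives \(\widetilde{A}_{p}^{\textrm{T}}v_{p}+R_{1}\preceq \lambda _{i}v_{p}\); we assume this is the form of the corresponding condition in Theorem 1. For a fixed \(\lambda _{i}\), solving \((\lambda _{i}I-\widetilde{A}_{p}^{\textrm{T}})v_{p}=R_{1}\) yields a candidate that satisfies it with equality, and the candidate is strictly positive whenever \(\lambda _{i}I-\widetilde{A}_{p}^{\textrm{T}}\) is a nonsingular M-matrix, because the inverse of such a matrix is nonnegative with a positive diagonal. This replaces the linear program only for this single family; the remaining conditions, which couple the subsystems, would still need an LP solver. The helper names are hypothetical.

```python
def solve(m, rhs):
    """Gaussian elimination with partial pivoting for a small dense system m x = rhs."""
    n = len(m)
    aug = [[float(x) for x in row] + [float(b)] for row, b in zip(m, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def candidate_v(a_tilde, lam, r1):
    """Candidate v_p solving (lam*I - A~_p^T) v = R_1, i.e. A~_p^T v + R_1 = lam * v.

    Returns v if it is strictly positive (the inequality then holds with
    equality), otherwise None.
    """
    n = len(a_tilde)
    # entry (i, j) of lam*I - A~^T is lam*delta_ij - A~[j][i]
    m = [[(lam if i == j else 0.0) - a_tilde[j][i] for j in range(n)] for i in range(n)]
    v = solve(m, r1)
    return v if all(x > 0 for x in v) else None


if __name__ == "__main__":
    a_tilde = [[-1.0, 0.5], [0.2, -2.0]]   # hypothetical Metzler A~_p
    print(candidate_v(a_tilde, 1.2, [0.1, 0.1]))
```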

Remark 6

For a non-switched system, the Caputo fractional derivative depends on the entire state history. For switched fractional-order systems, there are two types of structures: the fractional derivative depends either on all previous states, or only on the states after the most recent switching. There are many results for the first type [6, 7, 10]. However, some practical systems, such as manual vehicle driving systems, vertical takeoff and landing helicopters, and birth-and-death processes, can be modeled as the second type. Moreover, compared with the first type, which is complex and cumbersome, the second type avoids much redundant computation and reduces practical cost. Thus, the following example adopts the second type of structure.
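The difference between the two structures can be seen numerically. The sketch below evaluates the L1 approximation of the Caputo derivative of \(g(t)=t\) at \(t=2\), once with lower terminal \(t_{0}=0\) (first type) and once with lower terminal at a hypothetical switching instant \(t_{k}=1.5\) (second type). With a fixed step, the second type sums only over the history since \(t_{k}\), which is where the computational saving comes from. The helper name, step size and instants are our own choices.

```python
import math


def caputo_l1_fixed_step(g, lower, t, alpha, h=1e-3):
    """L1 approximation of the Caputo derivative of g at t with lower terminal `lower`.

    Uses a uniform grid whose step is close to h; returns the value together
    with the number of history increments that enter the sum.
    """
    n = max(1, round((t - lower) / h))
    h = (t - lower) / n  # adjust the step so that the grid ends exactly at t
    acc = 0.0
    for j in range(n):
        b_j = (j + 1) ** (1.0 - alpha) - j ** (1.0 - alpha)
        acc += b_j * (g(lower + (n - j) * h) - g(lower + (n - j - 1) * h))
    return acc / (math.gamma(2.0 - alpha) * h ** alpha), n


def g(s):
    """Hypothetical state component g(t) = t."""
    return s


if __name__ == "__main__":
    alpha, t0, t_k, t = 0.9, 0.0, 1.5, 2.0
    full, n_full = caputo_l1_fixed_step(g, t0, t, alpha)     # memory back to t_0
    reset, n_reset = caputo_l1_fixed_step(g, t_k, t, alpha)  # memory restarts at t_k
    print(full, n_full)
    print(reset, n_reset)
```

The two values differ because the derivative of the second type forgets everything before \(t_{k}\); the model, not only the numerics, changes.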

4 A Numerical Example

Consider the PSFS (39) with the following parameters:

where \((A_{i},B_{i})\) (\(i=1,2,3\)) represents the ith subsystem and \(C_{i}\) (\(i=1,2,3\)) represents the ith impulse mode. Clearly, the matrices \(A_{i}\) are unstable.

By Theorem 3, we design the following non-fragile controllers

then

Therefore, the closed-loop subsystem matrices are as follows:

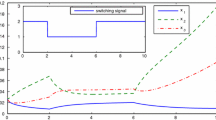

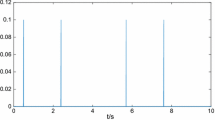

Let \(\alpha =0.9,T_{f}=8,T_{l}=2,\omega =1.6,\zeta _{1}=31,\zeta _{2}=1\). By choosing appropriate parameters, we then present several switching signal designs based on different groupings \((\Phi ,\mathcal {O})\).
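With these parameters, the impulsive part of the exponent in the bound P of Theorem 1 can be evaluated directly: since \(\sum _{i}T_{i}=T_{f}\), the terms \(\frac{\ln \omega }{T_{l}}T_{i}\) add up to \(\frac{T_{f}}{T_{l}}\ln \omega \), so the impulses contribute the factor \(\omega ^{T_{f}/T_{l}}=1.6^{4}=6.5536\). The sketch below computes this factor, the impulse budget \(T_{f}/T_{l}\) under the one-sided average-impulsive-interval bound with \(M_{0}=0\) (an assumption), and the coefficient \(1/\Gamma (\alpha +1)\). The switching-dependent terms require the values in Table 1 and are not evaluated; the helper names are hypothetical.

```python
import math

# Parameters from Sect. 4
alpha, t_f, t_l, omega = 0.9, 8.0, 2.0, 1.6


def impulsive_factor(omega, t_f, t_l):
    """exp(sum_i ln(omega) * T_i / T_l) with sum_i T_i = T_f, i.e. omega ** (T_f / T_l)."""
    return math.exp(t_f / t_l * math.log(omega))


def max_impulses(t_f, t_l, m0=0.0):
    """Largest integer M with M <= M_0 + T_f / T_l (assumed one-sided bound)."""
    return math.floor(m0 + t_f / t_l)


if __name__ == "__main__":
    print("impulsive factor:", impulsive_factor(omega, t_f, t_l))
    print("impulses allowed on [0, T_f):", max_impulses(t_f, t_l))
    print("1 / Gamma(alpha + 1):", 1.0 / math.gamma(alpha + 1.0))
```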

The results are given in Table 1, from which the following observations can be made.

(1). Taking \(\mathcal {O}=\{1,2,3\}\) and \(\mathcal {O}=\{1\}\) yields the mode-dependent ADT and ADT strategies, respectively, and the relationship between the two strategies can be clearly seen.

(2). Different grouping functions \(\Phi \) give the switching signals in the different columns; that is, each column corresponds to different stability conditions, each with its own merits. For example, \(\mathcal {O}=\{1\}\) only focuses on the compensation among subsystems and \(\mathcal {O}=\{1,2,3\}\) only accounts for the differences among subsystems, while \(\mathcal {O}=\{1,2\}\) takes both into account.

5 Conclusion

In this paper, the guaranteed cost FTS of PSFS with D-disturbance and impulses is studied under the \(\Phi \)DADT strategy. Impulses may occur at any time, that is, at both switching and non-switching instants, and the impulsive instants are characterized by an average impulsive interval. In addition, both certain and robust controllers are designed to ensure the finite-time stability of the closed-loop system with guaranteed cost. Finally, a numerical example is given to demonstrate the validity of the conclusions. Extending the results of this article to the guaranteed cost FTS of non-positive fractional-order switched systems is one of our subsequent works. Moreover, a more general strategy covering the \(\Phi \)DADT, named “binary F-dependent ADT”, has recently been proposed [23]; developing corresponding results based on the binary F-dependent ADT will be one of the directions of our future research.

Data Availability

The manuscript contains no additional data beyond those already included. Readers who wish to obtain additional files to verify the results may request them from the corresponding author.

References

M. Aghababa, M. Borjkhani, Chaotic fractional-order model for muscular blood vessel and its control via fractional control scheme. Complexity 20(2), 37–46 (2015)

A. Babiarz, A. Legowski, M. Niezabitowski, Controllability of positive discrete-time switched fractional order systems for fixed switching sequence, in International Conference on Computational Collective Intelligence (Springer, Cham, 2016), pp. 303–312

D. Baleanu, Z. Guvenc, J. Machado, New Trends in Nanotechnology and Fractional Calculus Applications (Springer, Netherlands, 2010)

R. Elkhazali, Fractional-order PI\(^{\lambda }\)D\(^{\mu }\) controller design. Appl. Math. Comput. 66(5), 639–646 (2013)

K. Erenturk, Fractional-order PI\(^{\lambda }\)D\(^{\mu }\) and active disturbance rejection control of nonlinear two-mass drive system. IEEE Trans. Industr. Electron. 60(9), 3806–3813 (2013)

M. Fečkan, Y. Zhou, J. Wang, On the concept and existence of solution for impulsive fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 17, 3050–3060 (2012)

T. Feng, L. Guo, B. Wu et al., Stability analysis of switched fractional-order continuous-time systems. Nonlinear Dyn. 102(4), 2467–2478 (2020)

T. Hartley, C. Lorenzo, H. Qammer, Chaos in a fractional-order Chua's system. IEEE Trans. Circuits Syst. I 42, 485–490 (1995)

S. Hong, Y. Zhang, Stability of switched positive linear delay systems with mixed impulses. Int. J. Syst. Sci. 50(16), 1–16 (2019)

J. Hu, Comments on “Lyapunov and external stability of Caputo fractional order switching systems”. Nonlinear Anal. Hybrid Syst. 40, 101016 (2021)

H. Jia, Z. Chen, W. Xue, Analysis and circuit implementation for the fractional-order Lorenz system. Physics 62(14), 31–37 (2013)

H. Li, X. Xu, X. Ding, Finite-time stability analysis of stochastic switched Boolean networks with impulsive effect. Appl. Math. Comput. 347, 557–565 (2019)

J. Liang, B. Wu, Y. Wang, Input-output finite-time stability of fractional-order positive switched systems. Circuits Syst. Signal Process. 38(4), 1619–1638 (2019)

L. Liu, X. Cao, Z. Fu et al., Guaranteed cost finite-time control of fractional-order positive switched systems. Adv. Math. Phys. 3, 1–11 (2017)

L. Liu, X. Cao, Z. Fu, Finite-time control of uncertain fractional-order positive impulsive switched systems with mode-dependent average dwell time. Circuits Syst. Signal Process. 37(9), 3739–3755 (2018)

L. Liu, Y. Di, Y. Shang, Z. Fu, B. Fan, Guaranteed cost and finite-time non-fragile control of fractional-order positive switched systems with asynchronous switching and impulsive moments. Circuits Syst. Signal Process. 40, 3143–3160 (2021)

J. Liu, J. Lian, Y. Zhuang, Robust stability for switched positive systems with D-perturbation and time-varying delay. Inf. Sci. 369, 522–531 (2016)

L. Liu, X. Cao, Z. Fu, Guaranteed cost finite-time control of fractional-order nonlinear positive switched systems with D-perturbations via mode-dependent ADT. J. Syst. Sci. Complexity 32(3), 857–874 (2019)

L. Liu, Z. Fu, X. Cai, Non-fragile sliding mode control of discrete singular systems. Commun. Nonlinear Sci. Numer. Simul. 18(3), 735–743 (2013)

J. Luo, W. Tian, S. Zhong, Non-fragile asynchronous event-triggered control for uncertain delayed switched neural networks. Nonlinear Anal. Hybrid Syst. 29, 54–73 (2018)

P. Dorato, Short-time stability in linear time-varying systems. Polytechnic Institute of Brooklyn, pp. 83–87 (1961)

Q. Yu, G. Zhai, Stability analysis of switched systems under \(\Phi \)-dependent average dwell time approach. IEEE Access 8, 30655–30663 (2020)

Q. Yu, N. Wei, Stability criteria of switched systems with a binary F-dependent average dwell time approach. J. Control Decision (2023)

J. Zhang, X. Zhao, Y. Chen, Finite-time stability and stabilization of fractional order positive switched systems. Circuits Syst. Signal Process. 35(7), 2450–2470 (2016)

X. Zhao, Y. Yin, X. Zheng, State-dependent switching control of switched positive fractional order systems. ISA Trans. 62, 103–108 (2016)

Ethics declarations

Conflict of interest

The authors declare that there is no relevant conflict of interest to report.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yu, Q., Wei, N. Finite-time Guaranteed Cost Control of Positive Switched Fractional-Order Systems Based on \(\Phi \)-Dependent ADT Switching. Circuits Syst Signal Process 43, 1452–1472 (2024). https://doi.org/10.1007/s00034-023-02556-3
