Abstract

Multimedia data have increased dramatically today, making the distinction between desirable information and other types of information extremely important. Speech/music discrimination is a field of audio analytics that aims to detect and classify speech and music segments in an audio file. This paper proposes a novel feature extraction method called Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR). The proposed feature computes the average frequency-domain mean-crossing rate along the frequency axis for each of the perceptual Mel-scaled frequency bands of the signal power spectrum. In this paper, the class-separation capability of this feature is first measured by well-known divergence criteria such as Maximum Fisher Discriminant Ratio (MFDR), Bhattacharyya divergence, and Jeffreys/Symmetric Kullback–Leibler (SKL) divergence. The proposed feature is then applied to the speech/music discrimination (SMD) process on two well-known speech-music datasets—GTZAN and S &S (Scheirer and Slaney). The results obtained on the two datasets using conventional classifiers, including k-NN, GMM, and SVM, as well as deep learning-based classification methods, including CNN, LSTM, and BiLSTM, show that the proposed feature outperforms other features in speech/music discrimination.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Every day, massive amounts of useful data are broadcast and propagated on TV, radio channels, and the Internet, a large percentage of which consists of audio signals. These signals might have been combined with other signals that are not useful or may not be desirable for our goals, like noise, all kinds of music, or anything other than speech. Accordingly, a mechanism is required to distinguish the undesirable and the valueless audio signals from the useful and the valuable audio signals.

A system is required to discriminate valueless data from intended data to reduce the storage volume of audio files. In mobile networks, mobile operators may need to eliminate or differentiate silence from speech to minimize the amount of data transferred. Discriminating systems can also be employed to switch radio channels during commercial breaks that typically involve music. Additionally, SMD (speech/music discrimination) systems can be utilized to detect and categorize music into different classes by distinguishing it from speech in audio signals, which differs from the aforementioned applications. SMD systems can also apply to speech enhancement, noise reduction, speaker identification, speech command recognition, emotion detection, and speech-to-text conversion.

In this paper, speech discrimination was used to detect and classify speech segments of a signal comprising different types of audio. Here, speech/music discrimination does not refer to separating the voice of the singer from a melody in a music track. Additionally, a feature extraction method called Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR) was proposed to discriminate speech from music in audio signals. The characteristics of the audio signal in the frequency domain were used to calculate the FDMCR. The proposed method is a long-term feature that is robust against sudden changes in audio signals. Below are the highlights of this paper:

-

Introducing a new concept of mean-crossing rate in the frequency domain

-

Proposing a novel feature extraction method based on the average frequency-domain mean-crossing rate along the frequency axis for each of the Mel-scaled perceptual frequency bands of a signal spectrum

-

Demonstrating the class-separation ability of the proposed feature extraction method in discriminating between speech and music using well-known divergence criteria, such as MFDR, Bhattacharyya, and SKL

-

Demonstrating higher accuracy of the proposed feature extraction method compared to other features in the speech/music discriminating process using conventional classifiers (SVM, GMM, and k-NN) and deep learning methods (CNN, LSTM, and Bi-LSTM) on two popular speech-music datasets GTZAN and S &S

The rest of the article is organized as follows. In Sect. 2, some of the most recent studies and notable works related to speech/music discrimination are briefly mentioned. The proposed method is described in Sect. 3, along with relevant mathematical equations. Section 4 deals with the comprehensive performance evaluation of the proposed method. Finally, the conclusions are presented in Sect. 5.

2 Literature Review

Discriminating speech from music has various applications. One of the earliest applications of SMD systems involved real-time discrimination of speech from other contents being transmitted on FM radio channels [37]. As advertisements begin on a radio channel, the system changes the channel automatically. It was mentioned in [37] that the performance of this system is high, and the ZCR feature is used for this purpose. In [20], the SMD system was employed to analyse radio channels. Authors in reference [44] used other features (i.e., spectral, rhythmic, and harmonic features) of audio signals, especially music signals, for better classification. In [38], the low-energy measure was introduced for the SMD system. Also, using peak energy in speech signals was discussed in [34]. In [10, 30], MFCC was employed to improve discrimination.

Authors in [14, 31] used the LPC feature for speech/music discrimination. As mentioned, the application of SMD systems is not limited to a few cases. In another case, the SMD system was used for Enhanced Voice Services (EVS) [29]. Authors in [25] used a series of features obtained from empirical mode decomposition, such as mean, absolute mean, variance, skewness, and kurtosis. The proposed method in [3] is based on the Single Frequency Filtering (SFF) approach so that in audio signals, the parts associated with speech have a higher signal-to-noise ratio (SNR) in all frequencies compared to other parts which include noise.

In [12], a set of filter-based features was combined to separate speech from background sounds (noises). In that study, the discrimination accuracy increased by about 24% in all environmental conditions. In [24], features specific to human speech, such as features that represent excitation source, vocal tract, and speech syllable rate, were used for speech/music classification. In [39], features obtained from chroma vectors and combinations with other features were used to discriminate speech from music accurately. The proposed features in that study are effective and efficient.

In [40], the proposed SMD method is founded on the distinction that music carries a melody, while speech does not. The author employs deviation distribution linked with fundamental frequencies of various audio signal components to suggest a technique that can differentiate between speech and music. Additionally, [11] utilizes the Stereo-Input Mix-to-Peripheral Level (SIMPL) feature for discrimination, which is commonly utilized to approximate the speech-to-music ratio. In [41], several methods have been proposed for automatically classifying a large number of audio signals. It has been mentioned that SMD accuracy using the proposed method would be more than 94%.

Certain studies have emphasized the classification stage of the discrimination process. These studies have introduced methods that enhance the discrimination process by suggesting a new machine-learning algorithm, rather than proposing a novel feature extraction method. Among these studies, [18, 26, 32] can be mentioned in which convolutional neural networks (CNN) were applied to music detection from broadcast contents and speech/music discrimination, respectively. Also, in [17], the method proposed for the classification stage is based on the recurrent neural network (RNN) and has higher efficiency and lower error. In [5, 8, 9, 15, 19, 22, 43, 45], other methods were proposed for speech detection and SMD. Moreover, in surveys regarding speech discrimination, especially in [2, 4, 13, 33], more information can be obtained to discriminate speech from the audio signal, including history, previous studies, employed datasets, and methods associated with the discrimination process.

3 Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR)

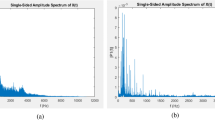

In our extensive research, the behaviour of the frequency signal of speech and music was compared based on previous research data. The main hypothesis here is that the different behaviour of speech and music signals in the time domain (like ZCR) could have different manifestations in the frequency spectrum. Therefore, here, the frequency spectrum of speech and music signals was investigated extensively. As shown in Figs. 1 and 2, each same band of speech and music frequency spectra have different crossing rates of the mean frequency. The crossing rate of the mean frequency in the frequency spectrum of speech signals is lower than that of music. The following lines discuss the implementation steps of the proposed method.

As mentioned, the proposed method uses the frequency characteristics of audio signals. In summary, the FDMCRFootnote 1 is defined as the crossing rate of mean frequency for each pre-specified band on the frequency spectrum of an audio signal.

Here, the FDMCR was calculated by framing the primary signal to frames with 25 ms length and 50% overlap. Then as shown in Fig. 3, a short-time Fourier transform was applied to each frame (Eq. (1)). In the next step, the power spectrum for each frame was calculated (Eq. (2)). Then, it was smoothed in a 25-frame window (Eq. (3)).

The smoothed power spectrum was subsequently partitioned into specific intervals (bands) along the frequency axis. Note that the width of these bands on the frequency axis is not uniform, and the bandwidth increases incrementally. The bands are narrower in lower frequencies and wider in higher frequencies. However, the lengths of these bands are uniform on the perceptual frequency axis and are established using Bilinear Frequency-Warping functions, as shown in Eqs. (4) or (5).

In this step, each band’s mean value for the specific frame was calculated using Eq. (6). The mean-crossing rate for each band was calculated based on Eq. (7). In the final step, according to Eqs. (8, 9), mean-crossing rates obtained in a specific interval were averaged (an interval with 25 frames (312.5 ms)—12 frames before the current frame and 12 frames after it. The obtained result was the FDMCR. All steps of extracting the proposed feature from an audio signal are formulated as follows:

where X is the short-time Fourier transform (STFT) and \(N_w\) is the number of samples in the nth frame or window equal to the number of samples in a frame with a length of 25 ms. Moreover, \(N_{{\text {sh}}}\) is the number of signal samples that is equivalent to a frameshift half of \(N_w\); this means that the overlap of the frames is fifty per cent here. w(.) shows the window function, and k indicates the frequency-bin index for the desired frame.

where P is the power spectrum for the \(n_{th}\) frame.

where \(\overline{P_x}\) is the smoothed power spectrum in an interval with \(M+1\) frames, and M is an even and positive integer equal to 24. Frequency warping can be performed according to Eqs. (4) or (5), which are different in shape but equal to each other. The bilinear frequency warping is used as follows [28]:

in which \(\tilde{\omega }\) is the warped frequency (estimate of human’s perceptual frequency), which is divided into bands with equal width proportional to the number of bands: if the number of bands is eight and the sampling frequency is 8 kHz, there will be eight bands with equal width in the interval [0, Mel (4000)] on the warped frequency axis. It should be noted that bandwidths are not the same after mapping to unwarped frequency (Hz scale, calculated by the inverse of Eqs. (4) or (5)). And \(\omega \) is the normal frequency in the interval [0, \(\pi \)]. \(\alpha \) is the warping factor between [− 1, 1] and determines the degree of nonlinearity in frequency warping, which is equal to 0.3 here(approximately equals Mel scale).

where M(i, n) is the mean smoothed power spectrum of the nth frame and ith band, and \(F_i\) is the set of frequency bins in the ith band and \(|F_i|\) is the number of frequency bins in the ith band (cardinality).

where MCR(i,n) is the mean-crossing rate for the nth frame and ith band. \(P_x(f,n)\) is the nth frame and fth frequency bin’s power spectrum.

where \((\overline{{\text {MCR}}})(i,n)\) is the smoothed mean-crossing rate in an interval with \(N+1\) frames. N is an even and positive integer equal to 24, and n and i are indices for frames and bands, respectively. B is the number of bands, which is equal to 16. The value of all parameters is quantified experimentally to achieve the proposed feature’s best performance. The optimization algorithms like [23] can also be used to initialize parameters to obtain an optimized form.

Finally, the B-dimensional \({\text {FDMCR}}(n)\) feature vector is obtained for each frame. This feature vector, corresponding to the nth frame, is given to a classifier for decision-making, and the classifier labels the frame with speech or music.

4 Experimental Protocols and Results

To measure the proposed method’s efficiency in discriminating speech from music, the FDMCR is evaluated and compared with features mentioned in Sect. 2 and many other well-known features in the speech processing context. First, the separability criteria and then machine learning accuracy metrics were used to that end. Generally, several conventional types of classical and deep techniques are used for machine learning. It should be noted that the proposed method was compared with other methods under the same conditions described below. All results were achieved by running methods in MATLAB (R2020b). In addition, all features were used in the long-term form to further the authenticity of the comparison under identical conditions, meaning that if a feature is not inherently long-term, after computing the feature for each frame, the final value of the feature for the frame would be obtained by averaging among a certain number of frames. This is while in this paper, twelve frames before and after along with the current frame were used. Accordingly, the long-term features were calculated within a 25-frame time window.

For the evaluation, two well-known datasets were used for comparison: GTZANFootnote 2 [44] with 128 30-second audio files, 64 of which are related to speech class, and the rest are related to music and S &SFootnote 3 (LabROSA) [6, 38, 46] with contains 244 15-second audio files, 140 of which are related to speech, and the rest are related to music and noise.

4.1 Comparison Based on Separability

In the first experiment, the class-separability of the proposed feature was compared with other features. Among well-known criteria for measuring the discriminability or distance between two probability distributions used here are DMFDR (Maximum Fisher Discriminant Ratio) [27], \(\hbox {D}^{\textrm{Bhat}}\) (Bhattacharyya Distance), and \(\hbox {D}^{\textrm{SKL}}\) (Symmetric Kullback–Leibler Distance) [1]. The mathematical formulae of the mentioned measures are as follows:

where I is the identity matrix. \(\mu _1\) and \(\mu _2\) are the samples mean of all feature vectors, which belong to speech and music classes, respectively, \(\Sigma _1\) and \(\Sigma _2\) are the sample covariance matrices. If covariance matrices of speech and music classes are assumed to be the same, all the above measures would be the same, and they would only differ in scale. However, this assumption is ignored in our computations.

The separability metrics presented in Eqs. (10–16) were derived through statistical calculations. In simpler terms, this comparison method primarily relies on the difference in the mean value of a feature between speech and music classes. A larger difference value for a feature implies that it is more effective in distinguishing speech from music.

Table 1 shows the features’ class separability for the two speech and music classes on the GTZAN and S &S datasets. The results indicate that the proposed method exhibits greater discriminability across all between-class distance criteria, suggesting its superior ability to accurately classify speeches and music pieces in audio signals.

4.2 The Evaluation Based on Machine Learning Accuracy

In this step, the Equal-Error-Rate (EER) metric is used for comparing methods. This error metric equals the arithmetic mean of the False Positive Rate (FPR) and False Negative Rate (FNR). Also, we use the accuracy measure for collation methods. This metric is equal to the precision criterion. Furthermore, the F-measure or F-score is used for comparing the correctness of methods. This metric is equal to the harmonic mean of precision and recall criterion. Moreover, for a more accurate comparison of methods, another metric called Area-Under-Curve (AUC) is used, which is equal to the AUC of the Receiver Operating Characteristics (ROC) curve. It should be noted that the rates of all comparison metrics for each method are computed in average mode and using the 10-fold way here.

4.2.1 The Comparison Based on Classical Machine Learning Methods

In this part of the evaluation, the k-NN, GMM, and SVM classifiers were used for machine learning. Generally, in cases with the k-NN classifier, 128-NN (k = 128) is selected to ensure better accuracy, lower error, and the practical efficiency of the k-NN with k = 128 compared to other types of k-NN. One feature vector was produced every 12.5-ms (frameshift size). Therefore, the number of generated feature vectors and training samples was remarkably high. Evidently, more training samples improve the efficiency of the k-NN classifier. Also, the Mahalanobis distance is used in the k-NN classifier here. In this study, GMM models with eight Gaussian components for each music and speech class were used. Moreover, the RBF kernel was selected when SVM was used.

Tables 2, 3 and Figs. 4 and 5 compare the various methods’ errors and accuracy using different classifiers according to EER, F-score, and AUC criteria. Here, the AUC metric, which represents the area under the ROC curve, was calculated for each method on average.

First, the features of the GTZAN corpus were compared. As shown in Table 2 and Fig. 4, proposed method produced the best result in this section and among all evaluations using different classifiers on the GTZAN dataset. It was found that the FDMCR with the k-NN classifier has the best performance among the FDMCR results (in terms of EER, F-score, and accuracy measures), followed by the evaluations of FDMCR with SVM and GMM, respectively.

The proposed method ranked first on the GTZAN dataset according to the AUC criterion The AUC shows the average performance for all possible values of decision thresholds. Some of these decision threshold values lead to high levels of False Positive Rates (FPR) or low levels of True Positive Rates (TPR) in the ROC curve, which are not reliable working points for real-world applications. Hence, EER, F-score, or accuracy could be more realistic criteria for ranking methods.

Now we compare features on the S &S dataset. As shown in Fig. 5 and Table 3, the FDMCR produced the best results when using k-NN (in terms of EER, F-Score, accuracy, and AUC). Furthermore, the FDMCR demonstrated a better performance in both SVM and GMM results in this section of the experiments. Overall, the proposed method outperforms other methods on the S &S dataset, yielding the best results.

4.2.2 The Comparison Based on Deep Learning

As mentioned in [7, 32], deep learning methods effectively identify and separate speech from music. Therefore, we intend to use these methods to compare and evaluate various methods’ performance, including our proposed method.

This section compares the three features that had the best results in previous comparisons using deep learning methods. As mentioned in [7, 32], using deep learning methods with image-based features to discriminate speech from music has shown promising results. However, we compared our proposed feature with two other features using deep learning methods while all features are audio-based (not image-based) using deep learning methods, including CNNs and recurrent neural networks (RNNs).

Table 4 specifies the characteristics of the deep learning methods used in this study. The network architecture of deep learning methods has a significant impact on the performance and results of learning methods. Here, the input of learning networks was in the form of a window of the desired feature for a 10-frame neighbourhood (Five frames before and after the desired frame).

As in the previous section, we examined the desired methods on the GTZAN and S &S datasets. As shown in Table 5 and Fig. 6, the proposed method performed best when the GTZAN dataset is used. Also, the FDMCR produced the best results compared to other features when the S &S dataset is used for comparison, as shown in Table 6 and Fig. 7.

Compared to the results of other classical methods, like the results of comparisons in [7, 32], our results indicated that deep methods often outperform classical methods. Nonetheless, it was found that the results are not desirable in some cases because deep network architectures are very influential in this type of learning performance. In addition, deep learning methods are more compatible with image-based methods structurally and functionally. Therefore, these learning methods should not be expected to perform much better when audio-based (non-image-based) features are used.

5 Conclusion and Future Suggestions

This paper proposed a new feature extraction method called Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR) based on the new concept of mean-crossing rate in the frequency domain.

To prove the efficiency of the proposed method, first, the capability of the proposed feature for class discrimination was measured using famous divergence criteria such as Maximum Fisher Discriminant Ratio (MFDR), Bhattacharyya divergence, and Jeffreys/Symmetric Kullback–Leibler (SKL) divergence. This feature was then applied to the speech/music discrimination problem using conventional and deep learning-based classifiers on two popular speech-music datasets, GTZAN and S &S.

It was shown that the proposed feature in this paper leads to more separability between speech and music classes and performs better in the evaluations than other features. The proposed system’s high computational complexity and memory consumption in the deep learning stage pose a limitation, given that a speech/music discrimination system should typically be fast and have low computational overhead. To address this issue, deep neural networks with fixed-point weights or approximate/stochastic computations can be utilized. Additionally, training deep learning systems on large speech-music datasets presents another challenge. One approach to tackle this problem is to divide the dataset into smaller parts and train a deep classifier on each part. The output of these classifiers can then be optimally combined using various ensemble learning methods.

To enhance the system’s efficiency, one potential future approach is to combine the proposed feature with feature vectors from other algorithms. In addition, different algorithms for dimensionality reduction or feature selection must be used to reduce redundancy in the combined vector after combining vectors.

Notes

The source code of the FDMCR has been registered as "Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR) feature" on IEEE DataPort [21] with this DOI: "10.21227/H2NW6G".

Available: “http://opihi.cs.uvic.ca/sound/music_speech.tar.gz". Accessed: 3/13/2021.

Accessible from this address: “http://www.ee.columbia.edu/\(\sim \)dpwe /sounds/musp/".

References

K.T. Abou-Moustafa, F.P. Ferrie, A note on metric properties for some divergence measures: The gaussian case. in Asian Conference on Machine Learning, pp. 1–15 (2012)

F. Alías, J. Socoró, X. Sevillano, A review of physical and perceptual feature extraction techniques for speech, music and environmental sounds. Appl. Sci. 6(5), 143 (2016)

G. Aneeja, B. Yegnanarayana, Single frequency filtering approach for discriminating speech and nonspeech. IEEE/ACM Trans. Audio Speech Lang. Process. 23(4), 705–717 (2015)

M. Anusuya, S. Katti, Front end analysis of speech recognition: a review. Int. J. Speech Technol. 14(2), 99–145 (2011)

R.G. Balamurali, C. Rajagopal, Speech/music discrimination (2017). US Patent 9,613,640

A.L. Berenzweig, D.P. Ellis, Locating singing voice segments within music signals. in Proceedings of the 2001 IEEE Workshop on the Applications of Signal Processing to Audio and Acoustics (Cat. No. 01TH8575), pp. 119–122 (2001)

M. Bhattacharjee, S.M. Prasanna, P. Guha, Speech/music classification using features from spectral peaks. IEEE/ACM Trans. Audio Speech Lang. Process. 28, 1549–1559 (2020)

G.K. Birajdar, M.D. Patil, Speech and music classification using spectrogram based statistical descriptors and extreme learning machine. Multimed. Tools Appl. 78(11), 15141–15168 (2019)

G.K. Birajdar, M.D. Patil, Speech/music classification using visual and spectral chromagram features. J. Ambient Intell. Hum. Comput. 11, 1–19 (2019)

M.J. Carey, E.S. Parris, H. Lloyd-Thomas, A comparison of features for speech, music discrimination. in 1999 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings. ICASSP99 (Cat. No. 99CH36258), vol. 1, pp. 149–152 (1999)

A. Chen, M.A. Hasegawa-Johnson, Mixed stereo audio classification using a stereo-input mixed-to-panned level feature. IEEE/ACM Trans. Audio Speech Lang. Process. 22(12), 2025–2033 (2014)

T. Drugman, Y. Stylianou, Y. Kida, M. Akamine, Voice activity detection: merging source and filter-based information. IEEE Signal Process. Lett. 23(2), 252–256 (2015)

S. Duan, J. Zhang, P. Roe, M. Towsey, A survey of tagging techniques for music, speech and environmental sound. Artif. Intell. Rev. 42(4), 637–661 (2014)

K. El-Maleh, M. Klein, G. Petrucci, P. Kabal, Speech/music discrimination for multimedia applications. In: 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing. Proceedings (Cat. No. 00CH37100), vol. 4, pp. 2445–2448 (2000)

G. Fuchs, A robust speech/music discriminator for switched audio coding. in 2015 23rd European Signal Processing Conference (EUSIPCO), pp. 569–573 (2015)

P.K. Ghosh, A. Tsiartas, S. Narayanan, Robust voice activity detection using long-term signal variability. IEEE Trans. Audio Speech Lang. Process. 19(3), 600–613 (2010)

P. Gimeno, I. Viñals, A. Ortega, A. Miguel, E. Lleida, Multiclass audio segmentation based on recurrent neural networks for broadcast domain data. EURASIP J. Audio Speech Music Process. 2020(1), 1–19 (2020)

B.Y. Jang, W.H. Heo, J.H. Kim, O.W. Kwon, Music detection from broadcast contents using convolutional neural networks with a mel-scale kernel. EURASIP J. Audio Speech Music Process. 2019(1), 11 (2019)

M. Joshi, S. Nadgir, Extraction of feature vectors for analysis of musical instruments. in 2014 International Conference on Advances in Electronics Computers and Communications, pp. 1–6 (2014)

S. Kacprzak, B. Chwiećko, B. Ziółko, Speech/music discrimination for analysis of radio stations. in 2017 International Conference on Systems, Signals and Image Processing (IWSSIP), pp. 1–4 (2017)

M.R. Kahrizi, Long-term multi-band frequency-domain mean-crossing rate (fdmcr) feature. https://doi.org/10.21227/H2NW6G

M.R. Kahrizi, S.J. Kabudian, Long-term spectral pseudo-entropy (ltspe): a new robust feature for speech activity detection. J. Inf. Syst. Telecommun. (JIST) 6(4), 204–208 (2018). https://doi.org/10.7508/jist.2018.04.003

M.R. Kahrizi, S.J. Kabudian, Projectiles optimization: A novel metaheuristic algorithm for global optimiaztion. Int. J. Eng. (IJE) IJE Trans. A Basics 33(10), 1924–1938 (2020). https://doi.org/10.5829/ije.2020.33.10a.11

B.K. Khonglah, S.M. Prasanna, Speech/music classification using speech-specific features. Digit. Signal Process. 48, 71–83 (2016)

B.K. Khonglah, R. Sharma, S.M. Prasanna, Speech vs music discrimination using empirical mode decomposition. in 2015 Twenty First National Conference on Communications (NCC), pp. 1–6 (2015)

A.A. Khudavand, S. Chikkamath, S. Nirmala, N. Iyer, Music/non-music discrimination using convolutional neural networks, in Soft Computing and Signal Processing. ed. by V.S. Reddy, V.K. Prasad, J. Wang, K.T.V. Reddy (Springer Singapore, Singapore, 2021), pp.17–28

S.J. Kim, A. Magnani, S. Boyd, Robust fisher discriminant analysis. in: Advances in neural information processing systems, pp. 659–666 (2006)

A. Makur, S.K. Mitra, Warped discrete-fourier transform: Theory and applications. IEEE Trans. Circ .Syst. I Fundam. Theory Appl. 48(9), 1086–1093 (2001)

V. Malenovsky, T. Vaillancourt, W. Zhe, K. Choo, V. Atti, Two-stage speech/music classifier with decision smoothing and sharpening in the evs codec. in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 5718–5722 (2015)

O.M. Mubarak, E. Ambikairajah, J. Epps, Novel features for effective speech and music discrimination. in 2006 IEEE International Conference on Engineering of Intelligent Systems, pp. 1–5 (2006)

J.E. Muñoz-Exposito, S. Garcia-Galan, N. Ruiz-Reyes, P. Vera-Candeas, F. Rivas-Peña, Speech music discrimination using a single warped lpc-based feature. in Proc. ISMIR, vol. 5, pp. 16–25 (2005)

M. Papakostas, T. Giannakopoulos, Speech-music discrimination using deep visual feature extractors. Expert Syst. Appl. 114, 334–344 (2018)

G. Peeters, A large set of audio features for sound description (similarity and classification) in the cuidado project (2004)

J. Pinquier, J.L. Rouas, R. André-Obrecht, A fusion study in speech/music classification. in 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2003. Proceedings.(ICASSP’03)., vol. 2, pp. II–17 (2003)

J. Ramırez, J.C. Segura, C. Benıtez, A. De La Torre, A. Rubio, Efficient voice activity detection algorithms using long-term speech information. Speech Commun. 42(3–4), 271–287 (2004)

S.O. Sadjadi, J.H. Hansen, Unsupervised speech activity detection using voicing measures and perceptual spectral flux. IEEE Signal Process. Lett. 20(3), 197–200 (2013)

J. Saunders, Real-time discrimination of broadcast speech/music. in 1996 IEEE International Conference on Acoustics, Speech, and Signal Processing Conference Proceedings, vol. 2, pp. 993–996 (1996)

E. Scheirer, M. Slaney, Construction and evaluation of a robust multifeature speech/music discriminator. in 1997 IEEE international conference on acoustics, speech, and signal processing, vol. 2, pp. 1331–1334 (1997)

G. Sell, P. Clark, Music tonality features for speech/music discrimination. in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2489–2493 (2014)

B. Thompson, Discrimination between singing and speech in real-world audio. in 2014 IEEE Spoken Language Technology Workshop (SLT), pp. 407–412 (2014)

W.H. Tsai, C.H. Ma, Automatic speech and singing discrimination for audio data indexing. in Big Data Applications and Use Cases, pp. 33–47. (Springer, 2016)

A. Tsiartas, T. Chaspari, N. Katsamanis, P.K. Ghosh, M. Li, M. Van Segbroeck, A. Potamianos, S. Narayanan, Multi-band long-term signal variability features for robust voice activity detection. in Interspeech, pp. 718–722 (2013)

N. Tsipas, L. Vrysis, C. Dimoulas, G. Papanikolaou, Efficient audio-driven multimedia indexing through similarity-based speech/music discrimination. Multimed. Tools Appl. 76(24), 25603–25621 (2017)

G. Tzanetakis, P. Cook, Musical genre classification of audio signals. IEEE Trans. Speech Audio Process. 10(5), 293–302 (2002)

E. Wieser, M. Husinsky, M. Seidl, Speech/music discrimination in a large database of radio broadcasts from the wild. in 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 2134–2138 (2014)

G. Williams, D.P. Ellis, Speech/music discrimination based on posterior probability features. in Sixth European Conference on Speech Communication and Technology (1999)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kahrizi, M.R., Kabudian, S.J. Long-Term Multi-band Frequency-Domain Mean-Crossing Rate (FDMCR): A Novel Feature Extraction Algorithm for Speech/Music Discrimination. Circuits Syst Signal Process 42, 6929–6950 (2023). https://doi.org/10.1007/s00034-023-02440-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-023-02440-0