Abstract

In this work, a novel weighted kernel least mean square (WKLMS) algorithm is proposed by introducing a weighted Gaussian kernel. The learning behavior of the WKLMS algorithm is studied. Mean square error (MSE) analysis shows that the WKLMS algorithm outperforms both the least mean square (LMS) and KLMS algorithms in terms of transient state as well as steady-state responses. We study the effect of the weighted Gaussian kernel on the associated kernel matrix, its eigenvalue spread and distribution, and show how these parameters affect the convergence behavior of the algorithm. Both of the transient and steady-state mean-square-error (MSE) behaviors of the WKLMS algorithm are studied, and a stability bound is derived. For a non-stationary environment, tracking analysis for a correlated random walk channel is presented. We also prove that the steady-state excess MSE (EMSE) of the WKLMS is Schur convex function of the weight elements in its kernel weight matrix and hence it follows the majorization of the kernel weight elements. This helps to decide which kernel weight matrix can provide better MSE performance. Simulations results are provided to contrast the performance of the proposed WKLMS with those of its counterparts KLMS and LMS algorithms. The derived analytical results of the proposed WKLMS algorithm are also validated via simulations for various step-size values.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Kernel-based learning algorithms have gained much attention recently because of their flexibility to deal with nonlinear classification, estimation and regression problems. In all such algorithms, the input data are mapped to a high dimensional inner product space, known as, reproducing kernel Hilbert space (RKHS) or feature space [3, 22, 28, 32, 33]. Mapping is done in such a way that the learning task, or the learning hypothesis becomes linear which allows to use linear adaptive filtering techniques to process the mapped data [22, 32].

Various works are presented for kernel-based online learning algorithms such as online learning using kernels [22, 32], kernel PCA [21], kernel-based Adaline [11], and kernel recursive least squares (KRLS) [9]. The rationale behind kernel filtering is that the linear structures can be used for nonlinear estimation by employing reproducing kernel Hilbert spaces (RKHS) in nonlinear systems [24, 29].

The least mean square (LMS) algorithm [36] is a well-known stochastic gradient-based algorithm which provides approximate solution to the Wiener’s optimum solution [15]. Its kernel-based version, known as the kernel least mean square (KLMS) [24] has recently gained huge popularity in the field of kernel adaptive filtering (KAF) [29]. Like any kernel-based learning algorithm, selecting the best kernel function is an open problem. The most widely accepted kernel is the Gaussian kernel which can be defined for any two vectors \(\textbf{u}_i\) and \(\textbf{u}_j\) as [24]

where h represents the width or size of the kernel. The Gaussian kernel has universal approximation capability as it induces an infinite dimensional feature space [32]. However, a major challenge in using Gaussian kernel in KAF is the selection of the optimum kernel width. There are various existing methods to deal with this issue which can be broadly categorized in two types: (1) fixed kernel size selection techniques such as plug-in methods [14, 16], Silverman method [8], and cross-validation method [2, 5, 30], fast kernel technique [35], least absolute difference-based kernel method [12], kernel learning-based Kalman Filtering [23], Cauchy kernel-based Kalman filter [7], Maximum Versoria criterion-based kernel filtering [18], and (2) online kernel size selection techniques such as adaptive kernel size methods [4, 6, 17, 20, 34, 37] and multi-kernel techniques [13, 19, 26].

However, these techniques are generally computationally expensive. Another major issue with these methods is that most of these are using batch mode calculation which is not suitable for online learning. Moreover, many of these methods are based on density estimation which cannot be used in nonlinear adaptive filtering.

Motivated by the above-mentioned challenges, we propose a weighted Gaussian kernel for the KLMS algorithm. This weight provides a vector width to the Gaussian kernel such that every element in the difference norm has a unique concept never explored before. The main contributions of the paper are: (1) develop a novel weighted KLMS algorithm by introducing a weight matrix in the conventional Gaussian kernel which is named as WKLMS algorithm, (2) perform transient and steady-state mean-square analyses of the WKLMS algorithm and derive expressions for the EMSE, (3) provide the proof for the Schur convexity of the steady-state EMSE of the WKLMS with respect to weight matrix which in turn helps us decide which weighting matrix will give a low EMSE, and (4) finally, design a time-varying mechanism to recursively update the weighted matrix by minimizing the MSE of the estimation process.

The rest of the paper is organized as follows: in Sect. 2, an overview of the KLMS and its adaptive kernel size variants is presented. Section 3 formulates the proposed WKLMS algorithm. In Sect. 4, the convergence analysis of the WKLMS algorithm is detailed. How does the weight matrix influence the performance of the WKLMS algorithm is devised in Sect. 5. Section 6 reports the simulation results. Finally, Sect. 7 concludes the work.

2 Overview of KLMS and its Adaptive Kernel Size Variants

In this section, we present a general overview of the standard KLMS algorithm [24] and two of its most popular adaptive kernel size methods [6, 10]. These methods will be used later to contrast their performance with that of the proposed method.

2.1 The KLMS Algorithm [24]

For continuous input–output mapping \(f:\textbf{u}\rightarrow y\), using a training sequence of input (\(\textbf{u}_{i}\)) and output \(y_{i}\), a function f can be realized by solving the regularized least squares regression in the RKHS (\(\mathscr {H}_{\kappa }\)) accompanying with a continuous, symmetric, and positive-definite function named as Mercer kernel \(\kappa (\textbf{u},\mathbf{{u}^{'}}\)) [3]:

where \(\gamma \) is the regularization factor that controls the smoothness of the solution and \(||\cdot ||^2\) is the Euclidean norm. Using the reproducing propertyFootnote 1, Eq. (2) can be modified as follows:

The solution of (3) can be written as a linear combination of kernel by using the representer theorem [29]

where \(\mathbf{\alpha }=(\textbf{K}+\gamma \textbf{I})^{-1}{} \textbf{y}\) with \(\textbf{y}=[y(1),\ldots ,y(N)]^{T}\) and \(\textbf{K}\in \mathbb {R}^{N\times N}\) is the Gram matrix with elements \(\textbf{K}_{ij}=\kappa (\textbf{u}_{i}{} \textbf{u}_{j})\). One can note that (4) requires considerable large memory and computational complexity due to the involvement of the Gram matrix. In contrast, the KAF builds the solution incrementally and hence provides an efficient alternative. The KLMS algorithm can be expressed as [29]:

where \(\eta \) reads the step-size, \(f_{i-1}\) is the previous system model, \(e_{i}\) is the error and at the ith iteration is defined as

Hence, the mapped output for KLMS at the Nth iteration will be

Note that the solution in (7) utilizes incrementally one step at a time, with a growing radial basis function (RBF) network.

2.2 Chen et al.’s KLMS with Adaptive Kernel Size [6]

An online technique for optimizing the kernel size of the KLMS algorithm is proposed in [6] which employs a stochastic gradient approach to adapt the filter’s weights and kernel’s size. At each iteration, the kernel size \(h_{i}\) in the standard KLMS is optimized by minimizing the instantaneous square error, that is, [6]:

where \(\rho \) is the step-size for the adaptation of the kernel width. After evaluating the derivative term in (8) and using (4), this leads to the KLMS with adaptive kernel size [6] which can be summarized as

2.3 Fan et al.’s KLMS with Adaptive Kernel Width [10]

A kernel online learning (KOL) algorithm with adaptive kernels is proposed and discussed in [10]. Consider the KOL task with m being the size of dictionary using kernel-based representation, then, the predicted output can be expressed as

where \(\varvec{\alpha }_{t}=[\alpha _1(t), \alpha _2(t),\ldots ,\alpha _m(t)]^T\) is the weight vector, while \(\varvec{\kappa }_{t}\) is the kernel vector for size m dictionary.

According to [10], it is proposed to update both the weight vector \(\varvec{\alpha }_{t}\) and the kernel width h(t) by minimizing the MSE which results in the following update recursions:

where \(\textbf{D}^{\alpha }_{t}\) and \(D^h_{t}\) are, respectively, the derivative terms defined as

In (11), the term \(\eta _{t}\) represents a time-varying step-size which is adapted according to the following mechanism:

where \(\bar{\epsilon }_{t}\) is a combination of higher-order approximation error terms and the system noise term. The term \(\rho _t\) appearing in (11) is also a time-varying parameter adapted according to the following rule:

The terms \(M_t\) and \(\lambda _{t}\) are defined, respectively, as follows:

2.4 Limitations of Existing Adaptive Kernel Size Methods

Although there are many existing works that aim to adapt the kernel size or width by employing certain techniques, however, these methods have several limitations. For example, few of these are highly computationally complex as they require many tuning parameters such as [10, 37], few are specific to sparse domain such as [37], and few are specific to Quaternion kernel such as [17]. Moreover, all these methods employ a single width for one kernel which is governed by a scalar parameter \(h_t\) [4, 6, 17, 20, 34, 37]. Thus, there is no technique in the existing literature that provides a vector width for one kernel. Also, there is no prior work discussing majorization properties in KAF-based algorithms.

3 Proposed WKLMS Algorithm

Motivated by the limitations in the existing work highlighted in the previous section, here we aim to propose a novel method with adaptive kernel widths by employing a diagonal weighting matrix in contrast to the scalar width and we will call it the weighted KLMS (WKLMS) algorithm. The weighting matrix can be either fixed or time-varying, depending on the environment, which are discussed in the ensuing sections.

3.1 Weighted KLMS with fixed Weighting Matrix

Here, in this section we aim to introduce the following weighted Gaussian kernelFootnote 2

where \(\varvec{\Sigma }\) is an \(m \times m\) diagonal weighting matrix. It can be noticed that the scalar width \(h^2\) is now replaced by a \(\varvec{\Sigma }\) which provides different scaling factor to every element in the norm \(||\textbf{u}_i - \textbf{u}_j||^2\). The rationale behind this weighted kernel is the concept of multi-dimensional Gaussian distribution. Consider an M dimensional real Gaussian random vector \(\textbf{x}\) with mean \(\bar{\textbf{x}}\) and correlation matrix \(\textbf{R}_x\), its probability density function (pdf) will be

The above pdf has a weighting matrix \(\textbf{R}^{-1}\) which gives an appropriate corresponding weight to each element in the distance norm \(||\textbf{x}-\bar{\textbf{x}}||^2\). Motivated by this, here we propose the weighted Gaussian kernel in (16) with the aim to provide proportionate width to every component of the norm. The impact of this weighting kernel is analytically investigated in Sect. 5 to show how it can be useful to minimize the steady-state MSE.

Thus, the mapped output of the proposed WKLMS for input \(\textbf{u}_n\) at iteration N can be expressed as

where \(\eta \) is the step size and \(e_i\) is the estimation error at the ith iteration and it can be computed as follows:

Equations (18) and (19) together define the proposed WKLMS algorithm. To obtain a recursive formulation of the algorithm, consider a finite size dictionary similar to the work in [27]. Thus, for a dictionary of size M, we can define a vector \(\varvec{\kappa }_{\varvec{\Sigma }}(n)\) that consists of kernels for all the inputs at time n, that is

Now, by representing the weight vector as \(\textbf{w}_n\), its update recursion is given by

where \(e_n\) is the estimation error of the adaptive filter which can be formulated as

3.2 Weighted KLMS with adaptive Weighting Matrix

In this section, we develop a WKLMS with an adaptive weighting \(\varvec{\Sigma }_n\). To do so, we minimize the MSE, \(E[e_n^2]\), using a stochastic gradient optimization approach, that is

Next, using the expression for estimation error in (19) with the aid of chain rule of derivative, we can show that

As a result, the proposed WKLMS with adaptive weighting matrix will take the following form:

Remarks

By observing Eqs. (16), (21), and (22), it can be concluded that the proposed WKLMS algorithm with a fixed weighting matrix is simply giving unequal weighting to the kernel error norm which can provide freedom to enhance the algorithm’s performance. In case of the WKLMS algorithm with an adaptive weighting matrix given in Eqs. (16) and (25), it can be observed that the kernel error norm weighting is intelligently designed such that the MSE of the proposed algorithm is minimized.

3.3 Computational Complexity of the WKLMS Algorithm

By observing the Gaussian kernels for the standard KLMS and the proposed WKLMS algorithm given in Eqs. (1) and (16), respectively, it can be observed that the proposed WKLMS algorithm requires additional m multiplications in contrast to the standard KLMS algorithm. This is due to the fact that the kernel of the WKLMS involves diagonal weighting by matrix \(\varvec{\Sigma }\) of size \(m \times m\). This complexity will be increased further to \(3m+3\) multiplications and \(2m+1\) additions in the case of adaptive WKLMS given in (25). This increase in computations is insignificant to the amount of improvement in the algorithm’s performance.

4 Convergence Analysis of the WKLMS Algorithm

In this section, we provide the convergence analysis of the proposed WKLMS algorithm both in the mean and mean-square sense. For this, we derive expressions to analyze both the transient and the steady-state behavior of the proposed algorithm. Consequently, the stability bounds are thus obtained to guarantee the convergence of the WKLMS algorithm.

4.1 System Model

Consider a system identification scenario in which the desired response \(d_n\) is the output of a channel with impulse response \(\textbf{w}_o\), that is,

where \(v_n\) is a zero mean i.i.d. noise sequence with variance \(\sigma _v^2\). Let \({\tilde{\textbf{w}}}_n=\textbf{w}_o-\textbf{w}_n\) denote the weight error vector. Thus, the estimation error defined in (22) can be expressed as

By subtracting \(\textbf{w}_o\) from both sides of (21) and utilizing the above formulation of \(e_n\), we can show that

In the analysis, we assume that the sequences \(\{v_i\}\) and \(\{\textbf{u}_i\}\) are mutually independent which is well justified in real practice. Consequently, the sequence of kernel vectors \(\{\varvec{\kappa }_{\varvec{\Sigma }}(n)\}\) will also be independent of the noise sequence \(\{v_i\}\). Moreover, we also assume that the input vectors are Gaussian distributed, i.e., \(\textbf{u}_i \sim {\mathcal {N}}~(\textbf{0},\textbf{R}_u)\). We also assume that the input vectors are independent but their entries are correlated.

4.2 Mean Behavior

Subtracting \(\textbf{w}_o\) from both sides of (21) and taking expectation of both sides to obtain

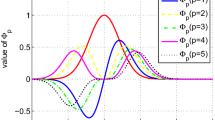

where \(\varvec{R}_{\kappa \kappa }=E[\varvec{\kappa }_{\varvec{\Sigma }}(n)\varvec{\kappa }_{\varvec{\Sigma }}^T(n)]\) is the correlation matrix of the dictionary kernel vector \(\varvec{\kappa }_{\varvec{\Sigma }}(n)\). Taking the eigenvalue decomposition, \(\varvec{R}_{\kappa \kappa }=\varvec{U}{\varvec{\Lambda }}_{\kappa \kappa } \varvec{U}^T\), the above recursion is transformed to

where \(\varvec{\bar{w}}_n=\varvec{U}^T\varvec{\tilde{w}}_n\) is the transformed weight error vector. In order to analyze the above, we need to evaluate the entries of the correlation matrix \(\varvec{R}_{\kappa \kappa }\) which are defined as:

To do so, we introduce the following notations:

where

and

\(\varvec{0}\) here is the null matrix. Finally, by using the result of [27], the entries of \(\varvec{R}_{\kappa \kappa }\) are given by

where \(\varvec{I}_{2\,m}\) and \(\varvec{I}_{3\,m}\) are the identity matrix of the size of \(2\,m \times 2\,m\) and \(3\,m \times 3\,m\), respectively. Since we have assumed that the input vectors \(\{\textbf{u}_n\}\) are independent, we can show that the correlation matrices \(\varvec{R}_{\varvec{x}}\) and \(\varvec{R}_{\varvec{z}}\) can be calculated, respectively, as follows:

Finally, studying recursion (30) with the aid of the eigenvalue decomposition of \(\varvec{R}_{\kappa \kappa }\), the mean behavior of the WKLMS can be evaluated. Ultimately, it can be shown that the WKLMS will converge in the mean sense if the following bound is satisfied:

where \(\lambda _{\text {max}}\) is the maximum eigenvalue of \({\varvec{\Lambda }}_{\kappa \kappa }\).

4.3 Mean-Square Behavior

The main aim of this section is to study the transient and steady-state behaviors of the excess MSE (EMSE) for the WKLMS algorithm. The EMSE (denoted by \(\zeta \)) is defined as

If we define the a priori estimation error as

then, the EMSE of the WKLMS can be expressed as

Next, resorting to the eigenvalue decomposition, \(\varvec{R}_{\kappa \kappa }=\varvec{U}{\varvec{\Lambda }}_{\kappa \kappa } \varvec{U}^T\), the EMSE can be reformulated as

where \({\varvec{\lambda }}\) is the vector containing the diagonal elements of \({\varvec{\Lambda }}_{\kappa \kappa }\). In order to investigate the mean square behavior of the proposed WKLMS algorithm, we employ the approach of energy conservation [31]. As a result, the transient EMSE learning curve is found to be:

where

To obtain the steady-state performance, Equation (43) recursion can be analyzed at \(n\rightarrow \infty \) which results in

The mean square stability can be obtained if the magnitude of the eigenvalues of matrix \(\varvec{F}\) are bounded by unity, i.e., \(\Vert \lambda _{\varvec{F}}\Vert <1\), which results in

where \(\lambda _k\) is the kth eigenvalue of \(\varvec{R}_{\kappa \kappa }\). Similar to the EMSE, transient and steady-state MSD for the WKLMS can also be obtained. Finally, a complete summary of the performance of the WKLMS is provided in Table 1.

5 How weight matrix can influence the performance of the WKLMS?

In this section, we provide an analysis that can help us identify which weights of the WKLMS algorithm can provide us with lower steady-state EMSE. Before proceeding to the analysis, we start the discussion with the question: Given two diagonal weight matrices \(\textbf{W}_1\) and \(\textbf{W}_2\), which of the weights will result in a lower steady-state EMSE for the WKLMS algorithm? To answer this question, the concept of Majorization and Schur convexity as introduced in [1] is used. These concepts are briefly outlined next.

-

Majorization Property Majorization property deals with the spread of elements in vectors [25]. Formally, the concept of majorization can be defined as follows [25]: For any two vectors \(\textbf{x}\in \mathbb {R}^m\) and \(\textbf{y}\in \mathbb {R}^m\) with descending order components \(x_1\ge x_2\ge \ldots \ge x_m\ge 0\) and \(y_1\ge y_2\ge \ldots \ge y_m\ge 0\), the vector \(\textbf{x}\) majorizes the vector \(\textbf{y}\) (written as \(\textbf{x}\succ \textbf{y}\)) if

$$\begin{aligned} \sum _{i=1}^{k}x_i\ge \sum _{i=1}^{k}y_i,~~k=1,2,\ldots ,m-1,~\text {and}~\sum _{i=1}^{m}x_i=\sum _{i=1}^{m}y_i . \end{aligned}$$(47) -

Schur-convex Functions Any function that preserves the ordering of majorization is called Schur-convex of function [25]. More specifically, we can define Schur-convex functions as follows [25]: A Schur-convex function, is a function \(f:\mathbb {R}^M\rightarrow \mathbb {R}\), for which \({\varvec{x}}\succ {\varvec{y}}\) implies \(f({\varvec{x}})\ge f({\varvec{y}})\). Thus, we want to utilize the concept of majorization to quantify the impact spread of the diagonal matrices \(\varvec{\Sigma }_1\) and \(\varvec{\Sigma }_2\) on the steady-state EMSE. By defining the vectors \(\varvec{\sigma }_i \mathop {=}^{\triangle } \text{ diag }(\varvec{\Sigma }_i)\), we can say that \(\varvec{\sigma }_1\) majorizes \(\varvec{\sigma }_2\) as follows [25]:

$$\begin{aligned}{} & {} \sum _{i=1}^{j}\varvec{\sigma }_1(i)\ge \sum _{i=1}^{j}\varvec{\sigma }_2(i),~~~j=1,2,\ldots ,m-1 \end{aligned}$$(48)$$\begin{aligned}{} & {} \sum _{i=1}^{m}\varvec{\sigma }_1(i)= \sum _{i=1}^{m}\varvec{\sigma }_2(i), \end{aligned}$$(49)

where \(\varvec{\sigma }_1(i)\) and \(\varvec{\sigma }_2(i)\) represent the ith elements in the vectors \(\varvec{\sigma }_1\) and \(\varvec{\sigma }_2\), respectively. Our aim is to study the impact of spread of the diagonal matrix on the EMSE of the WKLMS algorithm. It should be noted that the EMSE is the scalar valued function of vectors \(\varvec{\sigma }_i\). Thus, our study is related to the Schur-convexity of the EMSE also which will help us to conclude which weight matrix will result in a lower EMSE of the WKLMS algorithm. The proof for the Schur-convexity of the EMSE is provided next.

5.1 Proof for "The EMSE of the WKLMS Algorithm is Schur Convex w.r.t. weight vector \({\varvec{\sigma }}\)"

In this section, we provide the proof for the Schur-convexity of the EMSE for the WKLMS algorithm. For that, we utilize the approach given in [1] which states that if any scalar function of vector \(\varvec{\sigma }\) (i.e., \(f(\varvec{\sigma })\)) is symmetric in its argument, that is, permutation of the elements of \(\varvec{\sigma }\) does not change the value of \(f(\varvec{\sigma })\), then the Schur-convexity can be proved if the following condition is satisfied:

It can be easily seen from the expression for the steady-state EMSE that is symmetric w.r.t. eigenvalues \(\{\lambda _i\}\). Moreover, since the weight matrices used in WKLMS are diagonal matrices, it can be easily conclude that the EMSE of the WKLMS is symmetric w.r.t. elements in \(\varvec{\sigma }\). Thus, in order to prove that the EMSE of the WKLMS (denoted by \(\zeta _{\infty }\)) is Schur-convex, we need to simply show that

In order to test the above condition, we utilize the expression for the steady-state EMSE of the WKLMS given in (45). We start by defining the following variable

Thus, \(\frac{\partial \zeta _{\infty }}{\partial \varvec{\sigma }(1)}\) can be obtained as

To simplify the analysis, we consider white input (i.e., \(\textbf{R}_u=\textbf{I}_m\)) which results in \(\textbf{R}_{\varvec{x}}=\textbf{I}_{2\,m}\) and \(\textbf{R}_z=\textbf{I}_{3\,m}\). Moreover, we consider the case of dictionary size \(M=2\) in order to make the analysis tractable.Footnote 3 As a result, the correlation matrix \(\varvec{R}_{\kappa \kappa }\) will become

where

Thus, the eigenvalues of the correlation matrix \(\varvec{R}_{\kappa \kappa }\) will be

Thus, for \(M=2\) with \(\lambda _1\) and \(\lambda _2\) given in (56), the derivative \(\frac{\partial S}{\partial \varvec{\sigma }(1)}\) can be obtained as follows:

Consequently, \(\frac{\partial \zeta _{\infty }}{\partial \varvec{\sigma }(1)}\) is found to be

Similarly, we can show that

Next, subtracting (59) from (58) one obtains

To proceed further, the evaluation of the derivative terms \(\frac{\partial r(0)}{\partial \varvec{\sigma }(1)}\) and \(\frac{\partial r(0)}{\partial \varvec{\sigma }(2)}\) is left to Appendix which results in

which is always positive for \(\varvec{\sigma }(1)>\varvec{\sigma }(2)\). Hence, we conclude that the first term inside the bracket in (60) is always positive for \(\varvec{\sigma }(1)>\varvec{\sigma }(2)\).

Using similar approach, we can show that \(\left( \frac{\partial r(1)}{\partial \varvec{\sigma }(1)}-\frac{\partial r(1)}{\partial \varvec{\sigma }(2)}\right) \ge 0\) for \(\varvec{\sigma }(1)>\varvec{\sigma }(2)\). Moreover, we can easily see that \(\left( \dfrac{1}{(2-\eta \lambda _1)^2}-\dfrac{1}{(2-\eta \lambda _2)^2}\right) \ge 0\) as \(\lambda _1 > \lambda _2\). Thus, we finally conclude that

which completes the proof that the steady-state EMSE of the WKLMS is Schur-convex function of \(\varvec{\sigma }\). Thus, \(\varvec{\sigma }_1\succ \varvec{\sigma }_2\) will result in \(\zeta _{\infty }(\varvec{\sigma }_1)\ge \zeta _{\infty }(\varvec{\sigma }_2)\).

Remarks

At this stage, it is important to highlight the major challenges of our work. A major challenge was the design of adaptive weighting matrix for the proposed WKLMS algorithm which was achieved by employing the stochastic gradient optimization strategy given in (23) and (24). Another challenge was in the evaluation of MSE performance measures of the WKLMS algorithm which was overcome by proper evaluation of the required moments and correlation matrices in Sect. 4. Finally, it was very challenging to prove that the EMSE of the WKLMS algorithm is the Schur-convex which was tactfully handled in Sect. 5 using the principles of partial derivatives and linear algebra.

6 Simulation Results

In this section, the performance analysis of the WKLMS algorithm is investigated and compared with those of the linear LMS and the standard KLMS algorithm.

In the simulation tasks, we investigate two different problems: nonlinear channel equalization and nonlinear channel estimation. For these tasks, we consider a nonlinear channel whose output \(y_i\) is obtained using \(y_i=x_i-0.9x_i^2+n_i\) where \(n_i\) is a zero mean additive white Gaussian noise with variance \(\sigma _v^2=0.01\) and \(x_i\) is the linear output via \(x_i=s_i-0.5s_{i-1}\) where \(s_i\) is the input to the communication channel.

6.1 Task 1: Nonlinear Channel Equalization

In this task, we consider a nonlinear channel equalization scenario where the MSE performance of the proposed WKLMS with adaptive width is compared with those of the standard linear LMS, KLMS, Chen-KLMS [6], and Fan-KLMS [10] as shown in Fig. 1. The equalizer length is set to 5, the step-sizes for the weight updates of LMS, KLMS, Chen-KLMS, Fan-KLMS, and proposed WKLMS are 0.01, 0.15, 0.3, 0.1, and 0.2, respectively. For the proposed WKLMS, the weighting matrix \(\varvec{\Sigma }\) is adapted using the approach outlined in Sect. 3.2, while the step-size for the width adaptation is kept 0.01. As depicted in Fig. 1, the performance of the proposed WKLMS is better in comparison with its counterparts in terms of both convergence speed and steady-state MSE.

6.2 Task 2: Nonlinear Channel Estimation

In this task of nonlinear channel estimation, we use the same parameters as in the first task. The nonlinear channel output \(y_i\) is obtained using \(y_i=x_i-0.9x_i^2+n_i\) where \(n_i\) is a zero mean additive white Gaussian noise with variance \(\sigma _v^2=0.01\) and \(x_i\) is the linear output via \(x_i=s_i-0.5s_{i-1}\) where \(s_i\) is binary phase shift keying modulated input to the communication channel. The testing MSE of the aforementioned algorithms is depicted in Fig. 2 which shows that the MSE performance of the compared algorithms for the nonlinear channel estimation task. It can be observed from Fig. 2 that the proposed WKLMS has attained better MSE performance in comparison with its counterparts.

6.3 Task 3: Impact of Weighting Matrix (Majorization Study)

In this task, we investigate the impact of the weighting matrix on the MSE performance of the proposed WKLMS algorithm. More specifically, we want to validate the majorization and Schur-convexity properties of the WKLMS which we have proved analytically in Section V.

For this, we consider the problem of channel equalization with parameters \(\eta \) and \(\sigma _v^2\) set to 0.2 and 0.01, respectively. To contrast the performance, we consider two weighting matrices \((\mathbf{\Sigma })\): one with ascending diagonal elements, i.e., \(\mathbf{\sigma }_1=[0.1,0.1,1,2,2]\) (say WKLMS-1) and second with descending diagonal elements, i.e., \(\mathbf{\sigma }_2=[2,2,1,0.1,0.1]\) (say WKLMS-2). Hence, we have \(\mathbf{\sigma }_2 \succ \mathbf{\sigma }_1\) in this case. The MSE results are reported in Fig. 3. It can be easily seen that the MSE of the WKLMS-2 is greater than that of the WKLMS-1, that is, \(\zeta _{\infty }(\mathbf{\sigma }_2)>\zeta _{\infty }(\mathbf{\sigma }_1)\) which validates our theoretical proof for majorization and Schur-convexity provided in Sect. 5.

In order to further validate our majorization study related to the MSE of the WKLMS algorithm, we compare the steady-state MSE of three weighting vectors \(\varvec{\sigma }_1\), \(\varvec{\sigma }_2\), and \(\varvec{\sigma }_3\) which are reported in Table 2. It can be observed that these vectors satisfy the majorization order as \(\varvec{\sigma }_1 \succ \varvec{\sigma }_2 \succ \varvec{\sigma }_3\). We use a dictionary of size \(M=2\) and the step-size of the algorithm is set to 0.005 (i.e., \(\eta =0.005\)). For each of the three vectors, we evaluate the eigenvalues of the correlation matrix \(\varvec{R}_{\kappa \kappa }\) using the expressions in (36) which are then utilized to evaluate the steady-state EMSE given by (45). These results are reported in Table 2 which shows that \(\zeta _{\infty }(\varvec{\sigma }_3)>\zeta _{\infty }(\varvec{\sigma }_2)>\zeta _{\infty }(\varvec{\sigma }_1)\) which is in-line with the findings of Section V.

6.4 Task 4: Validation of Analytical Results

Next, we validate the theoretical analysis of the WKLMS algorithm. More specifically, we compare the MSE learning curves of the WKLMS algorithm obtained via simulations with the theoretical one. For this purpose, we use white input vectors of length 5. The dictionary size is also set to 5. The weighting matrix has a diagonal weight vector \(\mathbf{\sigma }=[0.1,0.1,1,2,2]\). The MSE learning curves are compared for two different values of step-size, that is, 0.1 and 0.05 which are presented in Fig. 4. The simulated curves are obtained by averaging over 400 independent experiments, whereas the theoretical curves are obtained by plotting the MSE recursion derived in Eq. (25 ). A close match can be observed between the simulation and theory which validates our analysis.

6.5 Task 5: Experiment for Massive MIMO Channel Estimation on DSP Board

Finally, we implement the proposed algorithm for the channel estimation of downlink Massive MIMO system on a DSP board TMS320C6713 which is connected to a computer screen for displaying the results. The experimental set up is shown in Fig. 5. Since the adaptive filter considered has a Finite Impulse Response (FIR) structure, it requires the present and past samples of the data to be stored at every iteration. Thus, we implement it on the DSP using the first-in-first-out (FIFO) buffer. The Massive MIMO downlink channel of length \(M=64\) is randomly generated using Complex Gaussian vector. The input considered is BPSK modulated data. The MSE performance of the proposed algorithm is compared with that of the standard KLMS algorithm. The step-size of both algorithms is set to 0.5. Screen shot of the results display is provided in Fig. 6 which shows the MSE learning curves for the KLMS (lower window) and the proposed WKLMS algorithm (upper window). The x-axis is displaying time in seconds. It can be easily seen from the results that both algorithms attained approximately -53 dB floor at steady state. However, the proposed algorithm converges much faster compared to the KLMS algorithm.

7 Conclusion

In this work, we presented a novel weighted Gaussian kernel-based LMS algorithm which is termed as WKLMS algorithm. We developed two variants of the WKLMS: first with a fixed weighting matrix and the second with an adaptive weighting matrix. The proposed WKLMS algorithm was analyzed using energy conservation approach and consequently transient, and steady-state results are obtained in a closed form. These findings are validated by the simulation results. In addition, we also performed an experiment on the DSP board TMS320C6713 for the channel estimation of downlink Massive MIMO system which further validate the supremacy of the proposed algorithm over the standard KLMS algorithm. Another major contribution of this work is the proof that the steady-state EMSE of the WKLMS is Schur convex function w.r.t. the kernel weighting vector. Thus, the EMSE of the WKLMS follows the majorization of the kernel weight vector. Hence, it was concluded that the majorizing kernel weight vector will result in higher EMSE and vice versa. This fact is validated via simulations also. Finally, the simulation results corroborate very well the theoretical findings. For future work, it will be interesting to explore new variants of the WKLMS using some non-Gaussian kernels as the Gaussian kernels are not optimal for non-Gaussian environments. Moreover, the WKLMS can be explored in distributed estimation tasks of wireless sensor networks.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

Notes

\(\langle f \Vert \kappa (\textbf{u}) \rangle _{\mathscr {H}_{\kappa }}=f(\textbf{u})\)

For any vector \(\varvec{x}\) and matrix \(\varvec{A}\), the notation \(||\varvec{x}||_{\varvec{A}}^2\) represents the weighted norm, i.e., \(||\varvec{x}||_{\varvec{A}}^2=\varvec{x}^T\varvec{A}\varvec{x}\)

However, the analysis can be applied for any dictionary size.

References

K.A. Al-Hujaili, T.Y. Al-Naffouri, M. Moinuddin, The steady-state of the (normalized) lms is schur convex. In: 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4900–4904 (2016). doi: https://doi.org/10.1109/ICASSP.2016.7472609

S. An, W. Liu, S. Venkatesh, Fast cross-validation algorithms for least squares support vector machine and kernel ridge regression. Pattern Recognit. 40(8), 2154–2162 (2007). https://doi.org/10.1016/j.patcog.2006.12.015

N. Aronszajn, Theory of reproducing kernels. Trans. Am. Math. Soc. 68(3), 337–404 (1950)

C. Brunsdon, Estimating probability surfaces for geographical point data: an adaptive kernel algorithm. Comput. Geosci. 21(7), 877–894 (1995). https://doi.org/10.1016/0098-3004(95)00020-9

G.C. Cawley, N.L. Talbot, Efficient leave-one-out cross-validation of kernel fisher discriminant classifiers. Pattern Recognit. 36(11), 2585–2592 (2003). https://doi.org/10.1016/S0031-3203(03)00136-5

B. Chen, J. Liang, N. Zheng, J.C. Príncipe, Kernel least mean square with adaptive kernel size. Neurocomputing 191, 95–106 (2016). https://doi.org/10.1016/j.neucom.2016.01.004

A. Dak, R. R, Non-iterative cauchy kernel-based maximum correntropy cubature kalman filter for non-gaussian systems. Control Theory Technol. 20, 465–474 (2022)

K. Dehnad, Density estimation for statistics and data analysis (1987)

Y. Engel, S. Mannor, R. Meir, The kernel recursive least-squares algorithm. IEEE Trans. Signal Process. 52(8), 2275–2285 (2004). https://doi.org/10.1109/TSP.2004.830985

H. Fan, Q. Song, S.B. Shrestha, Kernel online learning with adaptive kernel width. Neurocomputing 175, 233–242 (2016)

T.T. Frieß, R.F. Harrison, A kernel-based adaline for function approximation. Intell. Data Anal. 3(4), 307–313 (1999)

D.G.W.S.W. Fu, Q. Zhang, Kernel least logarithmic absolute difference algorithm. Math. Probl. Eng. (2022). https://doi.org/10.1155/2022/9092663

M. Gönen, E. Alpaydın, Multiple kernel learning algorithms. J. Mach. Learn. Res. 12, 2211–2268 (2011)

W. Härdle, Applied nonparametric regression. 19. Cambridge university press (1990)

S. Haykin, Adaptive filter theory. Prentice-Hall information and system sciences series. Prentice Hall (2002). https://books.google.cz/books?id=eMcZAQAAIAAJ

E. Herrmann, Local bandwidth choice in kernel regression estimation. J. Comput. Gr. Stat. 6(1), 35–54 (1997)

W. Huang, C. Chen, A novel quaternion kernel lms algorithm with variable kernel width. IEEE Trans. Circuits Syst. II: Express Br. 68(7), 2715–2719 (2021). https://doi.org/10.1109/TCSII.2021.3056452

S. Jain, S. Majhi, Zero-attracting kernel maximum versoria criterion algorithm for nonlinear sparse system identification. IEEE Signal Process. Lett. 29, 1546–1550 (2022)

R. Jin, S.C. Hoi, T. Yang, Online multiple kernel learning: Algorithms and mistake bounds. In: International Conference on Algorithmic Learning Theory, pp. 390–404. Springer (2010)

V. Katkovnik, I. Shmulevich, Kernel density estimation with adaptive varying window size. Pattern Recognit. Lett. 23(14), 1641–1648 (2002)

K.I. Kim, M. Franz, B. Scholkopf, Iterative kernel principal component analysis for image modeling. IEEE Trans. Pattern Anal. Mach. Intell. 27(9), 1351–1366 (2005). https://doi.org/10.1109/TPAMI.2005.181

J. Kivinen, A. Smola, R.C. Williamson, Online learning with kernels. IEEE Trans. Signal Process. 52(8), 2165–2176 (2004)

Y. Li, J. Lou, X. Tan, Y. Xu, J. Zhang, Z. Jing, Adaptive kernel learning kalman filtering with application to model-free maneuvering target tracking. IEEE Acccess 10, 78088–78101 (2022)

W. Liu, P.P. Pokharel, J.C. Principe, The kernel least-mean-square algorithm. IEEE Trans. Signal Process. 56(2), 543–554 (2008). https://doi.org/10.1109/TSP.2007.907881

A.W. Marshall, I. Olkin, B.C. Arnold, Inequalities: theory of majorization and its applications, vol. 143 (Springer, 1979)

F. Orabona, L. Jie, B. Caputo, Multi kernel learning with online-batch optimization. J. Mach. Learn. Res. 13(2), 227–253 (2012)

W.D. Parreira, J.C.M. Bermudez, C. Richard, J.Y. Tourneret, Stochastic behavior analysis of the gaussian kernel least-mean-square algorithm. IEEE Trans. Signal Process. 60(5), 2208–2222 (2012)

P. Pokharel, W. Liu, J. Principe, Kernel lms. In Proceedings of International Conference Acoustics, Speech, Signal Processing (ICASSP)03(3), III–1421 (2007)

J.C. Príncipe, W. Liu, S. Haykin, Kernel adaptive filtering: a comprehensive introduction. John Wiley & Sons (2011)

J. Racine, An efficient cross-validation algorithm for window width selection for nonparametric kernel regression. Commun. Stat. - Simul. Comput. 22(4), 1107–1114 (1993). https://doi.org/10.1080/03610919308813144

A.H. Sayed, Adaptive filters. Wiley-IEEE Press (2008)

B. Scholkopf, A.J. Smola, Learning with kernels, support vector (MIT Press, Cambridge, MA, USA, 2001)

J. Shawe-Taylor, N. Cristianini et al., Kernel methods for pattern analysis (Cambridge University Press, 2004)

L. Shi, H. Zhao, Y. Zakharov, An improved variable kernel width for maximum correntropy criterion algorithm. IEEE Trans. Circuits Syst. II: Express Br. 67(7), 1339–1343 (2018)

Y. Tang, Y.H.T.C.J.Y.R.: A fast kernel least mean square algorithm. In: 2022 IET International Conference on Engineering Technologies and Applications (IET-ICETA) pp. 1–12 (2022)

B. Widrow, E. Walach, Adaptive signal processing for adaptive control. IFAC Proc. Vol. 16(9), 7–12 (1983)

J. Zhao, H. Zhang, J.A. Zhang, Gaussian kernel adaptive filters with adaptive kernel bandwidth. Signal Process. 166, 107270 (2020)

Acknowledgements

The authors would like to acknowledge the support provided by the Deanship of Research Oversight and Coordination (DROC) at King Fahd University of Petroleum & Minerals (KFUPM) for funding this work under the Interdisciplinary Research Center for Communication Systems and Sensing through project No. INCS2101.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Moinuddin, M., Zerguine, A. & Arif, M. A Weighted Gaussian Kernel Least Mean Square Algorithm. Circuits Syst Signal Process 42, 5267–5288 (2023). https://doi.org/10.1007/s00034-023-02337-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-023-02337-y