Abstract

Signal modeling is an important technique in many engineering applications. This paper is concerned with the signal modeling problem for multi-frequency sine signals and periodic signals. A separable modeling scheme for estimating the signal parameters is presented, based on the different ways in which the signal output depends on the signal parameters. In order to capture real-time information about the signals to be modeled, a sliding measurement window is designed so that the observations are used dynamically and accurate parameter estimates are obtained. Because the signal output is linear in the amplitude parameters and nonlinear in the angular frequency parameters, the signal parameters are separated into a linear parameter set and a nonlinear parameter set. Based on these separated parameter sets, a nonlinear optimization problem is converted into a combination of a quadratic optimization problem and a nonlinear optimization problem. Then, a separable multi-innovation Newton iterative signal modeling method is derived and implemented to estimate multi-frequency sine signals and periodic signals. The simulation results show that the method is effective for modeling dynamic signals. Because the proposed method is based on a dynamic sliding measurement window, it can be used in online estimation applications.


1 Introduction

Estimating the parameters of multi-frequency signal models and dynamical systems has many engineering applications, such as channel sounding, power quality analysis and wireless communication systems [16,17,18,19,20,21,22]. Moreover, according to Fourier analysis, a periodic signal can be expressed as a sum of sine and cosine components. In addition, parameter estimation of sinusoidal signals plays a vital role in the quality monitoring and reliability assessment of power systems [55, 58, 99]. Sine waves are easily generated over a wide frequency range and can be analyzed simply in both the time domain and the frequency domain, so they are widely used as excitation signals in devices, circuits and control systems [62, 68]. Many engineering applications require accurate estimates of sine-wave parameters, and many parameter estimation algorithms for sine waves have been proposed in the time domain and the frequency domain, such as the three-parameter and four-parameter sine-fitting least squares methods [1, 5, 34]. These methods assume that the frequencies of the noisy sine waves are known, or at least approximately known, in advance. However, many practical applications need accurate real-time parameter estimates of signals when no prior knowledge of the frequencies is available [4]. In practice, the unknown parameters of signals describing circuits or devices can be estimated from discrete measurements by minimizing the squared residual error between the modeled signal and the available output measurements [35]. In most cases, all of the characteristic parameters of a sine-wave signal, namely the amplitude, frequency and phase of the fundamental wave and of each harmonic component, are unknown. Therefore, it is of practical value to estimate all of the signal parameters when the frequency, phase and amplitude parameters are unknown in advance.

Various parameter estimation algorithms for multi-sine signals have been developed and widely used in engineering. Zhao et al. [96] proposed a least squares multi-frequency identification approach to estimate the amplitudes and phases of signals with multiple frequencies, but this method requires the main frequency values to be known. Chaudhary et al. [9] proposed a fractional-order LMS algorithm to estimate the parameters of a power signal in the form of a multi-frequency sine signal; however, not all of the characteristic parameters can be estimated simultaneously. Several estimation methods based on the discrete-time Fourier transform (DTFT) have also been presented for identifying the parameters of sine waves or multi-sine signals. Belega et al. [2, 6] developed a two-point interpolated DTFT method to estimate the amplitude and phase of noisy sine waves, and they also studied the performance of interpolated DFT algorithms when only a few signal cycles are observed [3]. Wang et al. [75] proposed a four-point interpolated DTFT estimation method that simultaneously takes into account the fundamental component, the frequency components and the direct-current component. Because these DTFT-based methods require a signal transformation, which can introduce additional errors, the estimation procedure becomes complicated and the parameter estimates can be inaccurate. In this study, we aim to develop a parameter estimation method that obtains all of the characteristic parameter estimates simultaneously and directly.

Separable techniques are widely used to solve nonlinear problems with a special structure, in which the parameters of the objective function to be minimized can be split into two sets with different characteristics: one parameter set enters the objective function linearly and the other enters it nonlinearly [10,11,12, 26,27,28,29,30]. Separation reduces the dimension of the parameter space of the nonlinear optimization problem and can improve its conditioning. In system identification, separable techniques, which are also called hierarchical identification methods, are adopted to reduce the complexity of identification algorithms [79]. The basic principle of separable techniques is parameter decomposition or model decomposition: after decomposition, the identification model is generally split into several identification sub-models, so that many complicated nonlinear optimization problems can be handled with the help of linear optimization methods [85,86,87,88,89,90,91]. For example, Ngia proposed a separable nonlinear least-squares algorithm for offline and online identification with Kautz and Laguerre filters, in which the nonlinear least squares minimization problem becomes separable with respect to the linear coefficients and is better conditioned than the original unseparated problem [56]. Mahata et al. investigated a separable nonlinear least squares estimation algorithm for complex-valued data and established the convergence properties of the proposed algorithm [54]. In this study, the separable technique is extended to the field of signal modeling to develop more effective parameter estimation methods.

It is well known that observations carry information about the systems or signals that generate them. If the observed data are collected in real time and used in identification methods, the resulting parameter estimates can be more accurate than those obtained from offline measurements [33, 42, 67]. In general, the dynamic measurements can be a single datum or a batch of data containing the dynamic information of the systems or signals to be identified, and using more measurements in identification algorithms generally yields more accurate estimates [15, 69, 70]. Moreover, sliding windows are widely employed in communication, control engineering and data processing to capture dynamic information [8, 66, 76]. In this study, we use dynamic sliding-window data to estimate the signal parameters with an iterative strategy, which we call the multi-innovation iterative algorithm. In this method, the contents of the sliding window change as time advances; each time new observations enter the window, iterations are performed until satisfactory estimates are obtained. Multi-innovation theory has been widely used for the identification of linear and nonlinear systems [78]. Multi-innovation identification algorithms are based on dynamic batch data and recursive estimation and can therefore be used for online identification; they improve identification accuracy by expanding a scalar innovation into an innovation vector. Moreover, external disturbances, modeling errors and various uncertainties in real systems affect model accuracy, and filtering techniques are effective in coping with these problems [13, 23, 64, 65, 101]. In this paper, we combine multi-innovation theory with iterative estimation to develop high-performance parameter estimation algorithms for multi-frequency signals.

The main contributions of this paper are summarized as follows:

-

According to the different roles that the parameters of the multi-frequency signal play in the signal model, the parameters are separated into a linear parameter set and a nonlinear parameter set to reduce the parameter dimensions. Based on the separated parameter sets, two objective functions are constructed: one is quadratic in the linear parameters and the other is nonlinear in the frequency parameters.

-

Based on these two objective functions, two dynamic iterative sub-algorithms are derived by Newton optimization. Because the signal parameters are separated into a linear set and a nonlinear set, the Newton sub-algorithm for the linear set reduces to the linear least squares method.

-

In order to capture the dynamic information of the signals to be modeled and obtain higher estimation accuracy, a sliding window and an iterative scheme are designed to incorporate the observations into the estimation. In the proposed signal modeling method, the iterative process and the real-time data acquisition alternate, which captures more dynamic information and improves estimation accuracy.

The remainder of this paper is outlined as follows. In Sect. 2, the parameter estimation problems are described and the separable principle for the multi-frequency sine signal is introduced. In Sect. 3, we derive a multi-innovation Newton iterative parameter estimation sub-algorithm for the linear amplitude parameters. In Sect. 4, we present a multi-innovation Newton iterative estimation algorithm for the nonlinear angular frequency parameters. In Sect. 5, a separable multi-innovation Newton iterative parameter estimation algorithm is developed by combining two sub-algorithms. In Sect. 6, some numerical examples are provided to test the performance of the proposed method. Finally, we summarize the conclusions of this paper in Sect. 7.

2 Problem Description

Consider the following identification problem of the multi-frequency sine signal:

\[y(t)=\sum _{i=1}^{n}a_i\sin (\omega _i t)+v(t), \tag{1}\]

where \(a_i\) is the ith amplitude parameter, \(\omega _i\) is the ith angular frequency parameter, \(i=1,2,\ldots ,n\), y(t) is the output of the signal model and v(t) is a zero-mean noise.
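To make model (1) concrete, the following sketch generates noisy samples \(y(t_k)\). It assumes uniform sampling and Gaussian noise \(v(t_k)\sim N(0,\sigma^2)\); the function name `simulate_multisine` and all numerical values are illustrative only and are not taken from the paper's examples.

```python
import numpy as np


def simulate_multisine(a, omega, t, sigma=0.0, seed=None):
    """Noisy samples y(t_k) = sum_i a_i sin(omega_i t_k) + v(t_k) of model (1).

    a, omega : length-n sequences of amplitudes and angular frequencies (rad/s)
    t        : sampling instants t_k
    sigma    : standard deviation of the zero-mean Gaussian noise v(t_k)
    """
    a = np.asarray(a, dtype=float)
    omega = np.asarray(omega, dtype=float)
    t = np.asarray(t, dtype=float)
    # np.outer(t, omega) has entry [k, i] = omega_i * t_k; "@ a" sums a_i sin(.) over i
    clean = np.sin(np.outer(t, omega)) @ a
    rng = np.random.default_rng(seed)
    return clean + sigma * rng.standard_normal(t.shape)


# Hypothetical example: n = 3 components sampled with period h = 0.1 s
t = 0.1 * np.arange(200)
y = simulate_multisine([1.0, 0.5, 0.2], [1.0, 2.5, 4.0], t, sigma=0.1, seed=0)
```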

As measurement instruments, oscilloscopes are convenient for signal testing and are widely used in many applications. However, an oscilloscope can only determine the frequency of a periodic signal with a single frequency or of a sine signal with a few frequencies. In statistical identification, system models are obtained from the measurement data of identification experiments. Following statistical identification theory, we use the discrete measurement data \(y(t_k)\), \(k=1,2,\ldots \) to fit the multi-frequency sine signal and thereby obtain the fitted signal shown in Fig. 1. The procedure for obtaining the parameter estimates of the signal model is called signal modeling.

From (1), we can see that y(t) is linear in the amplitude parameters \(a_i\), \(i=1,2,\ldots ,n\), but nonlinear in the angular frequencies \(\omega _i\). This motivates us to decompose the characteristic parameters of the multi-frequency sine signal into separate parameter sets with different characteristics. We therefore separate the total parameter vector into two parameter sets, i.e., the amplitude parameter vector \(\varvec{a}\) and the angular frequency parameter vector \(\varvec{\omega }\):

\[\varvec{a}:=[a_1,a_2,\ldots ,a_n]^{\tiny \text{ T }}\in {\mathbb R}^n,\qquad \varvec{\omega }:=[\omega _1,\omega _2,\ldots ,\omega _n]^{\tiny \text{ T }}\in {\mathbb R}^n.\]

The current sampling moment is denoted by \(t=t_k\), \(k=0,1,2,\ldots \). Define the error between the observed output \(y(t_k)\) and the model output \(\sum \limits _{i=1}^na_i\sin (\omega _it_k)\) as

Using the latest window of p data up to the current time \(t=t_k\), the objective function of the sliding-window data is defined as
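The explicit criterion is not reproduced in this version of the text. A plausible form, assuming a sum of squared errors over the p most recent samples scaled by 1/2 (the scale factor changes neither the minimizer nor the Newton step), with \(e(t_{k-j})\) as our own symbol for the error defined above evaluated at \(t_{k-j}\), is

```latex
\begin{aligned}
e(t_{k-j}) &= y(t_{k-j}) - \sum_{i=1}^{n} a_i \sin(\omega_i t_{k-j}), \qquad j = 0,1,\ldots,p-1,\\
J_1(\varvec{a},\varvec{\omega}) &:= \frac{1}{2}\sum_{j=0}^{p-1} e^2(t_{k-j})
  = \frac{1}{2}\sum_{j=0}^{p-1}\Big[y(t_{k-j}) - \sum_{i=1}^{n} a_i \sin(\omega_i t_{k-j})\Big]^2 .
\end{aligned}
```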

Based on the separated parameter sets, the above objective function can be split into two objective functions: one with respect to the linear parameter set \(\varvec{a}\) and the other with respect to the nonlinear parameter set \(\varvec{\omega }\). The parameter decomposition therefore leads to two identification sub-models and two identification sub-algorithms. In the following sections, we develop the separable signal modeling method.

3 Multi-innovation Newton Iterative Parameter Estimation Sub-algorithm for Amplitude Parameters

In this section, the angular frequency parameters are assumed to be known. In this case, the criterion function \(J_1(\varvec{a},\varvec{\omega })\) is a function of the amplitude parameter vector \(\varvec{a}\) only, and it can be rewritten as

Define the information vector \(\varvec{\varphi }_a(\varvec{\omega },t_k)\) and the stacked information matrix as

Define the stacked observation output vector as

Then, the criterion function \(J_2(\varvec{a})\) can be further expressed as
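With \(\varvec{\omega }\) fixed, the stacked quantities can be sketched as follows. This assumes the window runs from \(t_{k-p+1}\) to \(t_k\), the 1/2-scaled criterion, and an \(n\times p\) arrangement of the stacked information matrix; the orientation is our choice, not necessarily the paper's.

```latex
\begin{aligned}
\varvec{\varphi}_a(\varvec{\omega},t_k) &:= [\sin(\omega_1 t_k), \sin(\omega_2 t_k), \ldots, \sin(\omega_n t_k)]^{\tiny \text{ T }} \in \mathbb{R}^n,\\
\varvec{\Phi}_a(p,t_k) &:= [\varvec{\varphi}_a(\varvec{\omega},t_k), \varvec{\varphi}_a(\varvec{\omega},t_{k-1}), \ldots, \varvec{\varphi}_a(\varvec{\omega},t_{k-p+1})] \in \mathbb{R}^{n\times p},\\
\varvec{Y}(p,t_k) &:= [y(t_k), y(t_{k-1}), \ldots, y(t_{k-p+1})]^{\tiny \text{ T }} \in \mathbb{R}^p,\\
J_2(\varvec{a}) &= \frac{1}{2}\big\|\varvec{Y}(p,t_k) - \varvec{\Phi}_a^{\tiny \text{ T }}(p,t_k)\,\varvec{a}\big\|^2 .
\end{aligned}
```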

The gradient vector of the criterion function \(J_2(\varvec{a})\) with respect to the parameter vector \(\varvec{a}\) is obtained by taking the first-order derivative:

Taking the second-order derivative of the criterion function \(J_2(\varvec{a})\) with respect to the amplitude parameter vector \(\varvec{a}\) gives the Hessian matrix:
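Assuming \(J_2(\varvec{a})=\frac12\|\varvec{Y}(p,t_k)-\varvec{\Phi}_a^{\tiny \text{ T }}(p,t_k)\varvec{a}\|^2\), where \(\varvec{\Phi}_a(p,t_k)\in\mathbb{R}^{n\times p}\) has the columns \(\varvec{\varphi}_a(\varvec{\omega},t_{k-j})=[\sin(\omega_1t_{k-j}),\ldots,\sin(\omega_nt_{k-j})]^{\tiny \text{ T }}\), the gradient and Hessian take the form below (we write \(\varvec{H}_a\) for the Hessian; the symbol is ours). Note that the Hessian does not depend on \(\varvec{a}\).

```latex
\begin{aligned}
\mathrm{grad}[J_2(\varvec{a})] &= -\varvec{\Phi}_a(p,t_k)\big[\varvec{Y}(p,t_k) - \varvec{\Phi}_a^{\tiny \text{ T }}(p,t_k)\,\varvec{a}\big],\\
\varvec{H}_a(p,t_k) &= \varvec{\Phi}_a(p,t_k)\,\varvec{\Phi}_a^{\tiny \text{ T }}(p,t_k).
\end{aligned}
```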

Let l be an iteration index and let \(\hat{\varvec{a}}_l(t_k):=[\hat{a}_{1,l}(t_k),\hat{a}_{2,l}(t_k),\cdots ,\hat{a}_{n,l}(t_k)]^{\tiny \text{ T }}\in {\mathbb R}^n\) denote the lth iterative estimate of the amplitude parameter vector \(\varvec{a}\) at time \(t=t_k\). Minimizing the criterion function \(J_2(\varvec{a})\) by the Newton iterative principle yields the multi-innovation Newton iterative (MINI) algorithm for estimating the amplitude parameter vector \(\varvec{a}\):
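The following NumPy sketch implements this Newton iteration for \(\varvec{a}\) with \(\varvec{\omega}\) known. It assumes the gradient \(-\varvec{\Phi}_a(\varvec{Y}-\varvec{\Phi}_a^{\tiny \text{ T }}\varvec{a})\) and Hessian \(\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\) of the 1/2-scaled window criterion, with \(\varvec{\Phi}_a\) arranged as an \(n\times p\) matrix. The function names `info_matrix_a` and `mini_amplitude` are hypothetical, and the linear system is solved with `numpy.linalg.solve` rather than forming the inverse Hessian.

```python
import numpy as np


def info_matrix_a(omega, t_win):
    """Stacked information matrix Phi_a (n x p): column j is
    [sin(omega_1 t), ..., sin(omega_n t)]^T at t = t_win[j]."""
    return np.sin(np.outer(np.asarray(omega, dtype=float), np.asarray(t_win, dtype=float)))


def mini_amplitude(y_win, t_win, omega, a0, l_max=5):
    """Newton iterations on J_2(a) = 0.5*||Y - Phi_a^T a||^2 with omega known."""
    Phi = info_matrix_a(omega, t_win)          # n x p
    Y = np.asarray(y_win, dtype=float)         # stacked window outputs, length p
    H = Phi @ Phi.T                            # Hessian; constant because J_2 is quadratic
    a = np.asarray(a0, dtype=float)
    for _ in range(l_max):
        grad = -Phi @ (Y - Phi.T @ a)          # gradient of J_2 at the current iterate
        a = a - np.linalg.solve(H, grad)       # Newton step without forming inv(H)
    return a


# Hypothetical noise-free window of p = 30 samples with known frequencies
t_win = 0.1 * np.arange(30)
omega = [1.0, 2.5]
y_win = np.sin(np.outer(t_win, omega)) @ np.array([1.5, -0.7])
a_hat = mini_amplitude(y_win, t_win, omega, a0=[0.0, 0.0], l_max=1)
```

Because the criterion is quadratic, `l_max=1` already returns the window least-squares solution from any starting point; further iterations leave it unchanged up to rounding.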

Remark 1

Because the criterion function \(J_2(\varvec{a})\) is a quadratic function of the parameter vector \(\varvec{a}\), its Hessian matrix does not depend on \(\varvec{a}\), and a single Newton step in (2) reaches the minimizer. Hence, the multi-innovation Newton iterative algorithm in (2)–(6) reduces to a sliding-window least squares algorithm.
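The reduction can be checked by substituting the gradient \(-\varvec{\Phi}_a(\varvec{Y}-\varvec{\Phi}_a^{\tiny \text{ T }}\varvec{a})\) and the Hessian \(\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\) of the 1/2-scaled window criterion into a generic Newton step, with the arguments \((p,t_k)\) dropped for brevity. This sketch assumes \(\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\) is invertible, which requires at least \(p\ge n\) and distinct, nonzero frequencies:

```latex
\begin{aligned}
\hat{\varvec{a}}_l(t_k) &= \hat{\varvec{a}}_{l-1}(t_k) - \big[\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\big]^{-1}\mathrm{grad}\big[J_2(\hat{\varvec{a}}_{l-1}(t_k))\big]\\
&= \hat{\varvec{a}}_{l-1}(t_k) + \big[\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\big]^{-1}\varvec{\Phi}_a\big[\varvec{Y} - \varvec{\Phi}_a^{\tiny \text{ T }}\hat{\varvec{a}}_{l-1}(t_k)\big]\\
&= \big[\varvec{\Phi}_a\varvec{\Phi}_a^{\tiny \text{ T }}\big]^{-1}\varvec{\Phi}_a\varvec{Y}.
\end{aligned}
```

The previous iterate cancels, so every iteration returns the sliding-window least squares estimate.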

Remark 2

The proposed MINI sub-algorithm in (2)–(6) is derived on the premise that the angular frequency parameter vector \(\varvec{\omega }\) is known. Because the information vector \(\varvec{\varphi }_a(\varvec{\omega },t_k)\) depends on \(\varvec{\omega }\), the algorithm cannot compute the estimate of the amplitude parameter vector \(\varvec{a}\) if \(\varvec{\omega }\) is unknown.

4 Multi-innovation Newton Iterative Estimation Sub-algorithm for Angular Frequency Parameters

When the amplitude parameters \(a_i\) \((i=1,2,\ldots ,n)\) are known, the parameter vector \(\varvec{a}\) does not need to be identified. Under this condition, the criterion function \(J_1(\varvec{a},\varvec{\omega })\) is a function of the angular frequency parameter vector \(\varvec{\omega }\) only, which can be denoted by

Taking the first-order derivative of the criterion function \(J_3(\varvec{\omega })\) with respect to the parameter vector \(\varvec{\omega }\) gives the gradient vector of the criterion function as follows:

Define the information vector:

Define the stacked information matrix:

Define the error vector:

Then, the gradient vector \(\mathrm{grad}[J_3(\varvec{\omega })]\) can be rewritten as
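A sketch of these definitions, assuming the 1/2-scaled window criterion \(J_3(\varvec{\omega})=\frac12\sum_{j=0}^{p-1}e^2(t_{k-j})\) with \(e(t)=y(t)-\sum_{i=1}^n a_i\sin(\omega_i t)\); the symbols \(e\) and \(\varvec{E}(p,t_k)\) are our own notation. Since \(\partial e(t)/\partial\omega_i=-a_i t\cos(\omega_i t)\),

```latex
\begin{aligned}
\varvec{\varphi}_{\omega}(t_k) &:= [a_1 t_k\cos(\omega_1 t_k), a_2 t_k\cos(\omega_2 t_k), \ldots, a_n t_k\cos(\omega_n t_k)]^{\tiny \text{ T }} \in \mathbb{R}^n,\\
\varvec{\Phi}_{\omega}(p,t_k) &:= [\varvec{\varphi}_{\omega}(t_k), \varvec{\varphi}_{\omega}(t_{k-1}), \ldots, \varvec{\varphi}_{\omega}(t_{k-p+1})] \in \mathbb{R}^{n\times p},\\
\varvec{E}(p,t_k) &:= [e(t_k), e(t_{k-1}), \ldots, e(t_{k-p+1})]^{\tiny \text{ T }} \in \mathbb{R}^p,\\
\mathrm{grad}[J_3(\varvec{\omega})] &= -\sum_{j=0}^{p-1} e(t_{k-j})\,\varvec{\varphi}_{\omega}(t_{k-j}) = -\varvec{\Phi}_{\omega}(p,t_k)\,\varvec{E}(p,t_k).
\end{aligned}
```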

Taking the second-order derivative of the criterion function \(J_3(\varvec{\omega })\) with respect to the parameter vector \(\varvec{\omega }\) gives the Hessian matrix as follows:
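Differentiating the 1/2-scaled criterion once more, using \(\partial^2 e(t)/\partial\omega_i^2=a_i t^2\sin(\omega_i t)\) and the fact that the mixed second derivatives of \(e(t)=y(t)-\sum_{i}a_i\sin(\omega_i t)\) vanish, gives the elements below. They are indexed here by \(i,r\) to avoid a clash with the window index \(j\), and \(\varvec{\Phi}_{\omega}(p,t_k)\) denotes the \(n\times p\) matrix whose columns are \([a_1t\cos(\omega_1t),\ldots,a_nt\cos(\omega_nt)]^{\tiny \text{ T }}\) at \(t=t_{k-j}\). This is a derivation sketch, not a transcription of the paper's equations:

```latex
\begin{aligned}
h_{ir} &= \sum_{j=0}^{p-1} a_i a_r\, t_{k-j}^2 \cos(\omega_i t_{k-j})\cos(\omega_r t_{k-j}), \qquad i\neq r,\\
h_{ii} &= \sum_{j=0}^{p-1} \Big[a_i^2\, t_{k-j}^2\cos^2(\omega_i t_{k-j}) + e(t_{k-j})\, a_i\, t_{k-j}^2 \sin(\omega_i t_{k-j})\Big],\\
\varvec{H}_{\omega}(p,t_k) &= \varvec{\Phi}_{\omega}(p,t_k)\varvec{\Phi}_{\omega}^{\tiny \text{ T }}(p,t_k)
 + \mathrm{diag}\Big(\sum_{j=0}^{p-1} e(t_{k-j})\, a_i\, t_{k-j}^2\sin(\omega_i t_{k-j})\Big)_{i=1}^{n}.
\end{aligned}
```

Unlike the amplitude case, this Hessian depends on the current estimate and need not be positive definite far from the solution.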

Let \(\hat{\varvec{\omega }}_l(t_k):=[\hat{\omega }_{1,l}(t_k), \hat{\omega }_{2,l}(t_k),\ldots ,\hat{\omega }_{n,l}(t_k)]^{\tiny \text{ T }}\) denote the lth iterative estimate of the angular frequency parameter vector \(\varvec{\omega }\) at time \(t=t_k\). Minimizing the criterion function \(J_3(\varvec{\omega })\) by the Newton search gives the multi-innovation Newton iterative algorithm for estimating the angular frequency parameter vector \(\varvec{\omega }\):
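A NumPy sketch of this Newton search for \(\varvec{\omega}\) with \(\varvec{a}\) known follows. It is built from the gradient \(-\varvec{\Phi}_{\omega}\varvec{E}\) and the Hessian \(\varvec{\Phi}_{\omega}\varvec{\Phi}_{\omega}^{\tiny \text{ T }}\) plus its diagonal second-derivative term, as obtained for the 1/2-scaled window criterion. The function name `mini_frequency` is hypothetical, and no step-size control is included, so the sketch assumes an initial guess close to the true frequencies.

```python
import numpy as np


def mini_frequency(y_win, t_win, a, omega0, l_max=20):
    """Newton iterations on J_3(omega) = 0.5*sum_j e(t_j)^2 with amplitudes a known."""
    Y = np.asarray(y_win, dtype=float)
    t = np.asarray(t_win, dtype=float)
    a = np.asarray(a, dtype=float)
    omega = np.asarray(omega0, dtype=float)
    for _ in range(l_max):
        arg = np.outer(omega, t)                    # n x p, entries omega_i * t_j
        err = Y - a @ np.sin(arg)                   # error vector over the window
        Phi_w = a[:, None] * t * np.cos(arg)        # column j: [a_i t_j cos(omega_i t_j)]_i
        grad = -Phi_w @ err
        # Gauss-Newton part plus the diagonal term sum_j e_j * a_i t_j^2 sin(omega_i t_j)
        H = Phi_w @ Phi_w.T + np.diag((a[:, None] * t**2 * np.sin(arg)) @ err)
        omega = omega - np.linalg.solve(H, grad)    # Newton update
    return omega


# Hypothetical noise-free check: start close to the true frequencies [1.0, 2.3]
t_win = 0.1 * np.arange(40)
a_known = np.array([1.0, 0.6])
y_win = a_known @ np.sin(np.outer([1.0, 2.3], t_win))
omega_hat = mini_frequency(y_win, t_win, a_known, omega0=[1.05, 2.25])
```

Pure Newton steps on \(J_3\) can diverge from a poor start because the Hessian may be indefinite; a damped step or a Levenberg–Marquardt term is a common safeguard, but that would be an addition to the described method.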

Remark 3

The multi-innovation Newton iterative sub-algorithm in (7)–(15) for estimating the angular frequency parameter vector \(\varvec{\omega }\) is derived under the hypothesis that the amplitude vector \(\varvec{a}\) is known. Because the gradient vector and the Hessian matrix of \(J_3(\varvec{\omega })\) depend on \(\varvec{a}\), the algorithm in (7)–(15) cannot estimate \(\varvec{\omega }\) if \(\varvec{a}\) is unknown.

5 Separable Multi-innovation Newton Iterative Parameter Estimation Algorithm

In practice, all of the signal parameters are unknown when modeling a multi-frequency sine signal. In order to estimate all of the signal parameters, the two sub-algorithms for estimating the parameter vector \(\varvec{a}\) in (2)–(6) and \(\varvec{\omega }\) in (7)–(15) are combined into an interactive estimation algorithm. However, the two sub-algorithms are coupled: the sub-algorithm in (2)–(6) requires the unknown vector \(\varvec{\omega }\), and the sub-algorithm in (7)–(15) requires the unknown vector \(\varvec{a}\). We therefore present a separable algorithm that removes this coupling through interactive estimation. Combining the MINI sub-algorithm in (2)–(6) for estimating \(\varvec{a}\) with the MINI sub-algorithm in (7)–(15) for estimating \(\varvec{\omega }\), and replacing the unknown parameter vectors \(\varvec{\omega }\) and \(\varvec{a}\) with their previous iterative estimates \(\hat{\varvec{\omega }}_{l-1}(t_k)\) and \(\hat{\varvec{a}}_{l-1}(t_k)\), yields the separable multi-innovation Newton iterative (SMINI) parameter estimation algorithm for estimating all parameters of the multi-frequency sine signal:

The computing procedure of the proposed SMINI algorithm in (16)–(29) is shown in Fig. 2. The steps for computing the parameter estimation vectors \(\hat{\varvec{a}}_l(t_k)\) and \(\hat{\varvec{\omega }}_l(t_k)\) are as follows:

-

(1)

Initialize: Set the innovation length \(p\gg n\) and let \(k=p\). Give the maximal iterative length \(l_{\max }\) and the parameter estimation accuracy \(\varepsilon \). Set the initial values \(\hat{\varvec{a}}_0(t_k)=[\hat{a}_{1,0}(t_k)\), \(\hat{a}_{2,0}(t_k)\), \(\cdots \), \(\hat{a}_{n,0}(t_k)]^{\tiny \text{ T }}\) as a random real vector, \(\hat{\varvec{\omega }}_0(t_k)=[\hat{\omega }_{1,0}(t_k)\), \(\hat{\omega }_{2,0}(t_k)\), \(\cdots \), \(\hat{\omega }_{n,0}(t_k)]^{\tiny \text{ T }}\) as a random real vector. Collect the observations \(y(t_m)\), \(m=0,1,\ldots , p-1\).

-

(2)

Let \(l=1\). Collect the observed data \(y(t_k)\), construct the output vector \(\varvec{Y}(p,t_k)\) in accordance with (17).

-

(3)

Compute the information vector \(\hat{\varvec{\varphi }}_{a,l}(t_{k-j})\) by (19), compute the information vector \(\hat{\varvec{\varphi }}_{\omega ,l}(t_{k-j})\) by (22), and compute the residual \(\hat{v}_l(t_{k-j})\), \(j=0,1,\ldots ,p-1\) by (24).

-

(4)

Construct the stacked information matrix \(\hat{\varvec{\Phi }}_{a,l}(p,t_k)\) by (18), construct the stacked information matrix \(\hat{\varvec{\Phi }}_{\omega ,l}(p,t_k)\) by (21), and construct the residual vector \(\hat{\varvec{V}}_l(p,t_k)\) by (23).

-

(5)

Compute each element \(\hat{h}_{jr,l}(t_k)\) and \(\hat{h}_{jj,l}(t_k)\), \(j,r=1,2,\ldots ,n\) of the Hessian matrix in accordance with (26)–(27) and construct the Hessian matrix \(\hat{\varvec{H}}_{\omega ,l}(p,t_k)\) by (25).

-

(6)

Update the amplitude parameter estimation vector \(\hat{\varvec{a}}_l(t_k)\) by (16); update the angular frequency parameter estimation vector \(\hat{\varvec{\omega }}_l(t_k)\) by (20). Read the amplitude parameter estimate \(\hat{a}_{i,l}(t_k)\) from (28) and read the angular frequency parameter estimate \(\hat{\omega }_{i,l}(t_k)\) from (29), \(i=1,2,\ldots ,n\).

-

(7)

If \(l<l_{\max }\), then set \(l:=l+1\) and go to Step (3); otherwise, go to the next step.

-

(8)

If \(\Vert \hat{\varvec{a}}_l(t_k)-\hat{\varvec{a}}_{l-1}(t_k)\Vert +\Vert \hat{\varvec{\omega }}_l (t_k)-\hat{\varvec{\omega }}_{l-1}(t_k)\Vert >\varepsilon \), then set \(\hat{\varvec{a}}_0(t_{k+1})=\hat{\varvec{a}}_l(t_k)\) and \(\hat{\varvec{\omega }}_0(t_{k+1})=\hat{\varvec{\omega }}_l(t_k)\), increase k by 1 and go to Step (2); otherwise, output the parameter estimates \(\hat{\varvec{a}}_l(t_k)\) and \(\hat{\varvec{\omega }}_l(t_k)\) and terminate the iterative procedure.
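Steps (1)–(8) can be sketched in NumPy as follows. Assumptions not stated in the text: the 1/2-scaled window criterion; the amplitude sub-step written directly in its least squares form (Remark 1); both sub-steps using the previous iterates \(\hat{\varvec{a}}_{l-1}(t_k)\) and \(\hat{\varvec{\omega}}_{l-1}(t_k)\); the step (8) test applied to the last iteration at each window; and no safeguards against an ill-conditioned or indefinite Hessian. The function `smini` is hypothetical and is not the authors' code.

```python
import numpy as np


def smini(y, t, a0, omega0, p=30, l_max=20, eps=1e-8):
    """Sliding-window SMINI sketch; returns (a_hat, omega_hat, last window index k).

    Requires l_max >= 1. The window is stored in ascending time order, which does
    not change any of the sums over the window.
    """
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    a = np.asarray(a0, dtype=float).copy()
    w = np.asarray(omega0, dtype=float).copy()
    for k in range(p - 1, len(y)):                       # slide the window one sample at a time
        tw, Y = t[k - p + 1:k + 1], y[k - p + 1:k + 1]   # latest p samples (step 2)
        for _ in range(l_max):                           # iterations at this window (steps 3-7)
            a_prev, w_prev = a, w
            arg = np.outer(w_prev, tw)                   # n x p, omega_i * t_j
            S = np.sin(arg)                              # amplitude information matrix
            err = Y - a_prev @ S                         # residuals with the previous estimates
            # Amplitude sub-step: the Newton step on a quadratic criterion is least squares
            a = np.linalg.solve(S @ S.T, S @ Y)
            # Frequency sub-step: one Newton step built with a_prev
            Phi_w = a_prev[:, None] * tw * np.cos(arg)
            H = Phi_w @ Phi_w.T + np.diag((a_prev[:, None] * tw**2 * S) @ err)
            w = w_prev + np.linalg.solve(H, Phi_w @ err)  # equals w_prev - H^{-1} grad
        change = np.linalg.norm(a - a_prev) + np.linalg.norm(w - w_prev)
        if change <= eps:                                # step 8: stop once the estimates settle
            return a, w, k
    return a, w, len(y) - 1


# Hypothetical noise-free example with initial values near the truth
t = 0.1 * np.arange(200)
a_true, w_true = np.array([1.0, 0.6]), np.array([1.0, 2.3])
y = a_true @ np.sin(np.outer(w_true, t))
a_hat, w_hat, k_end = smini(y, t, a0=[0.95, 0.55], omega0=[1.02, 2.28], p=30, l_max=20)
```

The frequency update is only locally convergent, so the random initialization in step (1) may in practice need to be replaced by a rough initial frequency estimate (for example, from a spectral peak); this is a suggestion, not part of the described method.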

Remark 4

The proposed SMINI algorithm in (16)–(29) is composed of two MINI sub-algorithms. For the linear parameter set \(\varvec{a}\), the MINI sub-algorithm reduces to the least squares algorithm. Moreover, because the SMINI algorithm uses sliding-window data, the iterative procedure alternates with real-time data collection. However, the sub-algorithm for estimating the nonlinear parameters cannot be realized by linear least squares optimization; i.e., no linear least squares solution exists for the whole parameter vector of the multi-frequency sine signal.

Remark 5

The goal of the proposed separable multi-innovation Newton iterative parameter estimation method is to reduce the computational burden compared with the inseparable multi-innovation Newton iterative parameter estimation method. Here, the parameter vector \(\varvec{\theta }:=[a_1,\ldots ,a_n,\omega _1,\ldots ,\omega _n]^{\tiny \text{ T }}\) is separated into the two parameter vectors \(\varvec{a}\) and \(\varvec{\omega }\). Because the Newton search needs to compute the Hessian matrix and its inverse, the parameter separation noticeably reduces the computational burden: the Hessian matrix with respect to \(\varvec{\theta }\) has dimension \(2n\times 2n\), whereas each Hessian matrix with respect to \(\varvec{a}\) or \(\varvec{\omega }\) has dimension \(n\times n\). Compared with gradient-based methods, however, the SMINI algorithm has a heavier computational burden because of the Hessian matrix and its inverse. Therefore, the better estimation accuracy comes at the cost of more computation in some cases. The methods proposed in this paper can be combined with other estimation approaches [14, 50, 57, 80,81,82,83] to study the parameter estimation problems of linear systems [52, 53, 59,60,61], bilinear systems [43,44,45,46,47,48] and nonlinear systems [25, 36, 37, 39, 49], and can be applied to some engineering application systems.
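A rough operation count illustrates the saving. It assumes that the dominant cost of each Newton step is an LU factorization of the Hessian, at roughly \(\tfrac{2}{3}m^3\) flops for an \(m\times m\) matrix, and it ignores the cost of forming the Hessians:

```latex
\begin{aligned}
\text{joint Newton step } (m=2n):&\quad \tfrac{2}{3}(2n)^3 = \tfrac{16}{3}n^3,\\
\text{separated steps } (\text{two with } m=n):&\quad 2\cdot\tfrac{2}{3}n^3 = \tfrac{4}{3}n^3,\\
\text{ratio}:&\quad \frac{16n^3/3}{4n^3/3} = 4 .
\end{aligned}
```

For \(n=3\), this is about 144 versus 36 flops per factorization.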

6 Numerical Scenarios

This section presents two parameter estimation examples of a power signal and a periodic signal to illustrate the performance of the proposed method. In the simulation, a comparison is provided under different noise levels. The parameter estimation error is defined as
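The explicit definition is missing from this version of the text. A definition commonly used in the iterative identification literature, given here only as an assumption, is the relative error of the stacked estimate \(\hat{\varvec{\theta}}_l(t_k):=[\hat{\varvec{a}}_l^{\tiny \text{ T }}(t_k),\hat{\varvec{\omega}}_l^{\tiny \text{ T }}(t_k)]^{\tiny \text{ T }}\):

```latex
\delta := \frac{\|\hat{\varvec{\theta}}_l(t_k) - \varvec{\theta}\|}{\|\varvec{\theta}\|}\times 100\%,
\qquad \varvec{\theta} = [\varvec{a}^{\tiny \text{ T }}, \varvec{\omega}^{\tiny \text{ T }}]^{\tiny \text{ T }}.
```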

Example 1

The parameter vector of the power signal to be estimated involves the amplitudes and angular frequencies

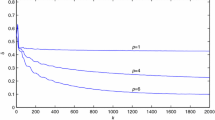

In the simulations, the noise v(t) is a normally distributed noise signal with zero mean and constant variance, i.e., \(N(0,\sigma ^2)\), with \(\sigma ^2=0.50^2\). The data length is 200, the window lengths are \(p=10\) and \(p=30\), and the sampling period is 2 s. The maximum number of iterations for each data window is \(l_{max}=20\). The collected noisy observations are shown in Fig. 3. The parameter estimates and their estimation errors obtained by the proposed SMINI method are listed in Table 1, and the estimation errors versus l are shown in Fig. 4.

In accordance with the estimated parameters when \(p=30\) and \(k=3000\), we obtain the following estimated multi-frequency sine signal:

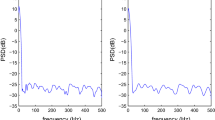

In order to test the accuracy of the estimated signal, the power spectral densities of the true signal and the estimated signal are shown in Fig. 5, where the blue line is the estimated signal and the black line is the true signal.

Example 2

In order to evaluate the performance of the proposed SMINI algorithm on other periodic signals, the triangular wave shown in Fig. 6 is used to test the proposed method.

The triangular wave is described mathematically as follows:

It is well known that any periodic function f(t) satisfying the Dirichlet conditions can be expanded into a combination of sine and cosine terms according to the Fourier series, i.e.,

\[f(t)=\frac{a_0}{2}+\sum _{n=1}^{\infty }\left[ a_n\cos (n\omega t)+b_n\sin (n\omega t)\right] ,\]

where \(a_0/2\) is the direct-current component, \(\omega \) is the angular frequency of the fundamental wave, and \(a_n\) and \(b_n\) are the coefficients of the fundamental and harmonic waves.

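Because f(t) is an odd function, the direct-current component and all cosine coefficients of the Fourier series are zero, i.e., \(\frac{a_0}{2}=0, \quad a_n=0, \quad n=1,2,\ldots \). The coefficients \(b_n\) are determined by

\[b_n=\frac{2}{T}\int _{0}^{T}f(t)\sin (n\omega t)\,\mathrm{d}t,\]

where T is the period of f(t).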

As a result, the triangular wave in (30) can be described by the following Fourier series:

The first three terms in (31) can be used to represent the original triangular wave in (30) because the higher harmonics attenuate quickly (the coefficient of the nth harmonic decreases as \(1/n^2\)), i.e.,

Define \(a_1=\frac{8A}{\pi ^2}\), \(a_2=-\frac{8A}{9\pi ^2}\), \(a_3=\frac{8A}{25\pi ^2}\), \(\omega _1=\omega \), \(\omega _2=3\omega \) and \(\omega _3=5\omega \). Then, f(t) in (32) becomes

Therefore, the proposed signal modeling method can be used to estimate the parameters of the triangular wave in (30). In the simulation, the parameters of the triangular wave are \(A=6\) and \(T=0.4\omega \). The simulation conditions are set as follows: (1) the white-noise variances are \(\sigma ^2=0.05^2\), \(\sigma ^2=0.30^2\), \(\sigma ^2=0.60^2\) and \(\sigma ^2=1.00^2\); (2) the data length is \(L=150\); (3) the frequency sampling period is \(h_\omega =0.15\); (4) the innovation length is \(p=10\); (5) the maximum number of iterations at each data window is \(l_{max}=5\). The discrete measurements of the triangular wave corrupted by white noise of different variances are shown in Fig. 7.
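The three-term truncation can be checked numerically. The sketch below assumes that the triangular wave in (30) is the standard odd wave of amplitude A with its peak at \(t=T/4\), written as \(f(t)=\frac{2A}{\pi}\arcsin(\sin\omega t)\); this closed form is an assumption, chosen because its Fourier series has exactly the coefficients \(a_1=\frac{8A}{\pi^2}\), \(a_2=-\frac{8A}{9\pi^2}\), \(a_3=\frac{8A}{25\pi^2}\) defined above. The function names are hypothetical, and \(\omega=1\) is an arbitrary choice.

```python
import numpy as np


def triangle_wave(t, A, omega):
    """Odd triangular wave with amplitude A and peak at omega*t = pi/2 (assumed form of (30))."""
    return (2 * A / np.pi) * np.arcsin(np.sin(omega * np.asarray(t, dtype=float)))


def three_term_series(t, A, omega):
    """a1 sin(w t) + a2 sin(3 w t) + a3 sin(5 w t) with a1, a2, a3 as defined in the text."""
    coeffs = 8 * A / np.pi**2 * np.array([1.0, -1.0 / 9, 1.0 / 25])   # a1, a2, a3
    freqs = omega * np.array([1.0, 3.0, 5.0])                          # omega1, omega2, omega3
    return np.sin(np.outer(np.atleast_1d(t), freqs)) @ coeffs


A, omega = 6.0, 1.0
t = np.linspace(0.0, 2 * np.pi / omega, 1001)        # one period; the grid includes t = T/4
max_err = np.max(np.abs(triangle_wave(t, A, omega) - three_term_series(t, A, omega)))
print(f"max truncation error: {max_err:.3f} ({100 * max_err / A:.1f}% of A)")
```

The largest deviation occurs at the peaks \(t=T/4\) and \(t=3T/4\), where all omitted harmonics add up; it equals \(A\big[1-\frac{8}{\pi^2}\big(1+\frac19+\frac1{25}\big)\big]\approx 0.067A\).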

Fig. 7 Measurements of the triangular wave of Example 2

The parameter estimates and estimation errors obtained by the proposed SMINI algorithm for the triangular wave under different noise variances are listed in Table 2, and the estimation errors versus iteration l are shown in Fig. 8.

Fig. 8 Parameter estimation errors versus iteration l of Example 2

Based on the estimated parameters in Table 2, the four estimated signals obtained under the different noise variances are as follows:

The three-term Fourier series of the original triangular wave is

In order to test the accuracy of the estimated signals, we compare the waveforms and power spectral densities of the estimated signals and the original signals. The waveforms are shown in Fig. 9, where the blue dotted lines denote the estimated signals and the black dashed lines denote the original signals. The power spectral density curves are shown in Fig. 10, where the blue solid lines denote the original signals and the green dotted lines denote the estimated signals.


From the simulation results of Examples 1 and 2, we draw the following conclusions.

-

Example 1 considers a multi-sine signal with three different angular frequencies, whose parameters are estimated by the proposed SMINI method. The parameter estimates obtained with the innovation length \(p=30\) are more accurate than those obtained with \(p=10\). Because the multi-innovation method dynamically uses sliding-window measurements, a larger innovation length p means that more dynamic data are absorbed into the iterative estimation. Therefore, the SMINI method with a larger innovation length can make fuller use of the dynamic information of the signal to be modeled and obtain higher estimation accuracy.

-

Example 2 uses a triangular wave to test the performance of the proposed SMINI method for modeling periodic signals. Figure 8 shows the parameter estimation errors versus iteration l under different noise variances. The parameter estimation errors are clearly larger when the noise variance is larger. Moreover, as the iteration l increases, the parameter estimation errors generally become smaller, which confirms the effectiveness of the SMINI algorithm. When the observations contain large disturbances, the parameter estimation errors fluctuate greatly.

-

Figures 9 and 10 show the waveforms and power spectral density curves of the estimated signals and the original signals. The waveforms and power spectral density curves of the estimated signals are close to those of the original signals, which means that the estimated signals capture the characteristics of the original signals. In other words, the proposed SMINI method is effective for signal modeling.

7 Conclusions

This paper studies the modeling problem for multi-frequency sine signals and periodic signals. A parameter decomposition method is presented based on the different relationships between the signal output and the signal parameters. Based on the separated linear parameter set and nonlinear parameter set, a full nonlinear optimization problem is converted into a combination of linear and nonlinear optimization problems. Then, a separable multi-innovation Newton iterative signal modeling method is derived and implemented to estimate multi-frequency sine signals and periodic signals, in which the measurements are sampled and used dynamically. Simulation experiments on a multi-sine signal and a periodic signal show that the proposed SMINI algorithm is effective for modeling dynamic signals. Because the proposed method is based on a dynamic sliding measurement window, it can be used in online estimation applications. The proposed separable multi-innovation Newton iterative modeling algorithm for multi-frequency signals based on the sliding measurement window can be combined with other techniques and strategies [24, 31, 32, 51, 63, 84, 93, 94, 98] to explore new identification methods for some linear and nonlinear systems [7, 73, 74] and can be applied to other fields [38, 40, 41, 71, 72, 77, 92, 95, 97, 100], such as information processing and communication.

References

D. Belega, D. Petri, Accuracy analysis of the sine-wave parameters by means of the windowed three-parameter sine-fit algorithm. Digit. Signal Proc. 50, 12–23 (2016)

D. Belega, D. Petri, Sine-wave parameter estimation by interpolated DFT method based on new cosine windows with high interference rejection capability. Digit. Signal Proc. 33, 60–70 (2014)

D. Belega, D. Petri, Effect of noise and harmonics on sine-wave frequency estimation by interpolated DFT algorithms based on few observed cycles. Sig. Process. 140, 207–218 (2017)

D. Belega, D. Petri, D. Dallet, Amplitude and phase estimation of real-valued sine wave via frequency-domain linear least-squares algorithms. IEEE Trans. Instrum. Meas. 67(5), 1065–1077 (2018)

D. Belega, D. Petri, D. Dallet, Noise power estimation by the three-parameter and four-parameter sine-fit algorithms. IEEE Trans. Instrum. Meas. 61(12), 3234–3240 (2012)

D. Belega, D. Petri, D. Dallet, Accurate amplitude and phase estimation of noisy sine-waves via two-point interpolated DTFT algorithms. Measurement 127, 89–97 (2018)

N. Bu, J.X. Pang, M. Deng, Robust fault tolerant tracking control for the multi-joint manipulator based on operator theory. J. Franklin Inst. 357(5), 2696–2714 (2020)

C. Chang, Y. Wang, S. Chen, Anomaly detection using causal sliding windows. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 8(7), 3260–3270 (2015)

N.I. Chaudhary, R. Latif, M.A.Z. Raja, J.A.T. Machado, An innovative fractional order LMS algorithm for power signal parameter estimation. Appl. Math. Model. 83, 703–718 (2020)

G.Y. Chen, M. Gan et al., Modified Gram-Schmidt method-based variable projection algorithm for separable nonlinear models. IEEE Trans. Neural Netw. Learn. Syst. 30(8), 2410–2418 (2019)

G.Y. Chen, M. Gan, C.L.P. Chen et al., A regularized variable projection algorithm for separable nonlinear least-squares problems. IEEE Trans. Autom. Control 64(2), 526–537 (2019)

G.Y. Chen, M. Gan, C.L.P. Chen, H.X. Li, Basis function matrix-based flexible coefficient autoregressive models: A framework for time series and nonlinear system modeling. IEEE Trans. Cybern. 51(2), 614–623 (2021)

Z. Chen, B. Zhang, V. Stojanovic, Y. Zhang, Z. Zhang, Event-based fuzzy control for T-S fuzzy networked systems with various data missing. Neurocomputing 417, 322–332 (2020)

T. Cui et al., Joint multi-innovation recursive extended least squares parameter and state estimation for a class of state-space systems. Int. J. Control Autom. Syst. 18(6), 1412–1424 (2020)

J.L. Ding, Recursive and iterative least squares parameter estimation algorithms for multiple-input-output-error systems with autoregressive noise. Circuits Syst. Signal Process. 37(5), 1884–1906 (2018)

F. Ding, L. Lv, J. Pan et al., Two-stage gradient-based iterative estimation methods for controlled autoregressive systems using the measurement data. Int. J. Control Autom. Syst. 18(4), 886–896 (2020)

F. Ding, F.F. Wang, T. Hayat et al., Parameter estimation for pseudo-linear systems using the auxiliary model and the decomposition technique. IET Control Theory Appl. 11(3), 390–400 (2017)

F. Ding, X.H. Wang, L. Mao et al., Joint state and multi-innovation parameter estimation for time-delay linear systems and its convergence based on the Kalman filtering. Digit. Signal Proc. 62, 211–223 (2017)

F. Ding et al., Iterative parameter identification for pseudo-linear systems with ARMA noise using the filtering technique. IET Control Theory Appl. 12(7), 892–899 (2018)

F. Ding et al., Gradient estimation algorithms for the parameter identification of bilinear systems using the auxiliary model. J. Comput. Appl. Math. 369, 112575 (2020)

F. Ding et al., Performance analysis of the generalised projection identification for time-varying systems. IET Control Theory Appl. 10(18), 2506–2514 (2016)

F. Ding et al., The innovation algorithms for multivariable state-space models. Int. J. Adapt. Control Signal Process. 33(11), 1601–1608 (2019)

X.F. Dong, S. He, V. Stojanovic, Robust fault detection filter design for a class of discrete-time conic-type nonlinear Markov jump systems with jump fault signals. IET Control Theory Appl. 14(14), 1912–1919 (2020)

H. Dong, C.C. Yin, H.S. Dai, Spectrally negative Levy risk model under Erlangized barrier strategy. J. Comput. Appl. Math. 351, 101–116 (2019)

Y.M. Fan, X.M. Liu, Two-stage auxiliary model gradient-based iterative algorithm for the input nonlinear controlled autoregressive system with variable-gain nonlinearity. Int. J. Robust Nonlinear Control 30(14), 5492–5509 (2020)

M. Gan, G.Y. Chen, L. Chen, C.L.P. Chen, Term selection for a class of separable nonlinear models. IEEE Trans. Neural Netw. Learn. Syst. 31(2), 445–451 (2020)

M. Gan, X.X. Chen et al., Adaptive RBF-AR models based on multi-innovation least squares method. IEEE Signal Process. Lett. 26(8), 1182–1186 (2019)

M. Gan, C.L.P. Chen, G.Y. Chen, L. Chen, On some separated algorithms for separable nonlinear squares problems. IEEE Trans. Cybern. 48(10), 2866–2874 (2018)

M. Gan, Y. Guan, G.Y. Chen, C.L.P. Chen, Recursive variable projection algorithm for a class of separable nonlinear models. IEEE Trans. Neural Netw. Learn. Syst. (2021). https://doi.org/10.1109/TNNLS.2020.3026482

M. Gan, H.X. Li, H. Peng, A variable projection approach for efficient estimation of RBF-ARX model. IEEE Trans. Cybern. 45(3), 476–485 (2015)

F.Z. Geng, Piecewise reproducing kernel-based symmetric collocation approach for linear stationary singularly perturbed problems. AIMS Math. 5(6), 6020–6029 (2020)

F.Z. Geng, X.Y. Wu, Reproducing kernel function-based Filon and Levin methods for solving highly oscillatory integral. Appl. Math. Comput. 397, 125980 (2021)

O. Gomez, Y. Orlov, I.V. Kolmanovsky, On-line identification of SISO linear time-invariant delay systems from output measurements. Automatica 43(12), 2060–2069 (2007)

P. Händel, Amplitude estimation using IEEE-STD-1057 three-parameter sine wave fit: Statistical distribution, bias and variance. Measurement 43(6), 766–770 (2010)

P. Händel, Parameter estimation employing a dual-channel sine-wave model under a Gaussian assumption. IEEE Trans. Instrum. Meas. 57(8), 1661–1669 (2008)

Y. Ji, X.K. Jiang, L.J. Wan, Hierarchical least squares parameter estimation algorithm for two-input Hammerstein finite impulse response systems. J. Franklin Inst. 357(8), 5019–5032 (2020)

Y. Ji, Z. Kang, Three-stage forgetting factor stochastic gradient parameter estimation methods for a class of nonlinear systems. Int. J. Robust Nonlinear Control 31(3), 871–987 (2021)

F. Ji, L. Liao, T.Z. Wu, C. Chang, M.N. Wang, Self-reconfiguration batteries with stable voltage during the full cycle without the DC-DC converter. J. Energy Storage 28, 101213 (2020)

Y. Ji, C. Zhang, Z. Kang, T. Yu, Parameter estimation for block-oriented nonlinear systems using the key term separation. Int. J. Robust Nonlinear Control 30(9), 3727–3752 (2020)

X.B. Jin, R.J. RobertJeremiah, T.L. Su, et al., State estimation: from model-driven to hybrid-driven methods. Sensors 21(6), 2085 (2021)

X.B. Jin, X.H. Yu, T.L. Su, et al., Distributed deep fusion predictor for a multi-sensor system based on causality. Entropy 23(2), 219 (2021)

J. Li, X. Li, Online sparse identification for regression models. Syst. Control Lett. 141, 104710 (2020)

M.H. Li, X.M. Liu, Filtering-based maximum likelihood gradient iterative estimation algorithm for bilinear systems with autoregressive moving average noise. Circuits Syst. Signal Process. 37(11), 5023–5048 (2018)

M.H. Li, X.M. Liu, The least squares based iterative algorithms for parameter estimation of a bilinear system with autoregressive noise using the data filtering technique. Sig. Process. 147, 23–34 (2018)

M.H. Li, X.M. Liu et al., The filtering-based maximum likelihood iterative estimation algorithms for a special class of nonlinear systems with autoregressive moving average noise using the hierarchical identification principle. Int. J. Adapt. Control Signal Process. 33(7), 1189–1211 (2019)

M.H. Li, X.M. Liu, Maximum likelihood least squares based iterative estimation for a class of bilinear systems using the data filtering technique. Int. J. Control Autom. Syst. 18(6), 1581–1592 (2020)

M.H. Li, X.M. Liu, Maximum likelihood hierarchical least squares-based iterative identification for dual-rate stochastic systems. Int. J. Adapt. Control Signal Process. 35(2), 240–261 (2021)

M.H. Li, X.M. Liu, Iterative parameter estimation methods for dual-rate sampled-data bilinear systems by means of the data filtering technique. IET Control Theory Appl. 15(9), 1230–1245 (2021)

X.M. Liu, Y.M. Fan, Maximum likelihood extended gradient-based estimation algorithms for the input nonlinear controlled autoregressive moving average system with variable-gain nonlinearity. Int. J. Robust Nonlinear Control 31(9), 4017–4036 (2021)

S.Y. Liu, Y.L. Zhang et al., Extended gradient-based iterative algorithm for bilinear state-space systems with moving average noises by using the filtering technique. Int. J. Control Autom. Syst. 19(4), 1597–1606 (2021)

L.L. Lv, J.B. Chen, Z. Zhang et al., A numerical solution of a class of periodic coupled matrix equations. J. Franklin Inst. 358(3), 2039–2059 (2021)

H. Ma, J. Pan et al., Partially-coupled least squares based iterative parameter estimation for multi-variable output-error-like autoregressive moving average systems. IET Control Theory Appl. 13(18), 3040–3051 (2019)

H. Ma, X. Zhang, Q.Y. Liu et al., Partially-coupled gradient-based iterative algorithms for multivariable output-error-like systems with autoregressive moving average noises. IET Control Theory Appl. 14(17), 2613–2627 (2020)

K. Mahata, T. Soderstrom, Large sample properties of separable nonlinear least squares estimators. IEEE Trans. Signal Process. 52(6), 1650–1658 (2004)

I.C. Mituletu, G. Gillich, N.M.M. Maia, A method for an accurate estimation of natural frequencies using swept-sine acoustic excitation. Mech. Syst. Signal Process. 116, 693–709 (2019)

L.S.H. Ngia, Separable nonlinear least-squares methods for efficient off-line and on-line modeling of systems using Kautz and Laguerre filters. IEEE Trans. Circuits Syst. II: Analog Digital Signal Process. 48(6), 562–579 (2001)

J.Y. Ni, Y.L. Zhang, Parameter estimation algorithms of linear systems with time-delays based on the frequency responses and harmonic balances under the multi-frequency sinusoidal signal excitation. Signal Process. 181, 107904 (2021)

V. Pálfi, An improved sine wave histogram test method for ADC characterization. IEEE Trans. Instrum. Meas. 68(10), 3446–3455 (2019)

J. Pan, X. Jiang, X.K. Wan, W. Ding, A filtering based multi-innovation extended stochastic gradient algorithm for multivariable control systems. Int. J. Control Autom. Syst. 15(3), 1189–1197 (2017)

J. Pan, W. Li, H.P. Zhang, Control algorithms of magnetic suspension systems based on the improved double exponential reaching law of sliding mode control. Int. J. Control Autom. Syst. 16(6), 2878–2887 (2018)

J. Pan, H. Ma, X. Zhang et al., Recursive coupled projection algorithms for multivariable output-error-like systems with coloured noises. IET Signal Proc. 14(7), 455–466 (2020)

S.R.P. Reddy, U. Loganathan, Offline recursive identification of electrical parameters of VSI-Fed induction motor drives. IEEE Trans. Power Electron. 35(10), 10711–10719 (2020)

X.Y. Sha, Z.S. Xu, C.C. Yin, Elliptical distribution-based weight-determining method for ordered weighted averaging operators. Int. J. Intell. Syst. 34(5), 858–877 (2019)

V. Stojanovic, S. He, B. Zhang, State and parameter joint estimation of linear stochastic systems in presence of faults and non-Gaussian noises. Int. J. Robust Nonlinear Control 30(16), 6683–6700 (2020)

V. Stojanovic, D. Prsic, Robust identification for fault detection in the presence of non-Gaussian noises: application to hydraulic servo drives. Nonlinear Dyn. 100(5), 2299–2313 (2020)

K. Tshiloz, S. Djurović, Scalar controlled induction motor drive speed estimation by adaptive sliding window search of the power signal. Int. J. Electr. Power Energy Syst. 91, 80–91 (2017)

C.M. Verrelli, A. Savoia, M. Mengoni, R. Marino, P. Tomei, L. Zarri, On-line identification of winding resistances and load torque in induction machines. IEEE Trans. Control Syst. Technol. 22(4), 1629–1637 (2014)

N.M. Vučijak, L.V. Saranovac, A simple algorithm for the estimation of phase difference between two sinusoidal voltages. IEEE Trans. Instrum. Meas. 59(12), 3152–3158 (2010)

L.J. Wan et al., Decomposition- and gradient-based iterative identification algorithms for multivariable systems using the multi-innovation theory. Circuits Syst. Signal Process. 38(7), 2971–2991 (2019)

L.J. Wan et al., A new iterative least squares parameter estimation approach for equation-error autoregressive systems. Int. J. Control Autom. Syst. 18(3), 780–790 (2020)

X.K. Wan, Z.Y. Jin, H.B. Wu, et al., Heartbeat classification algorithm based on one-dimensional convolution neural network. J. Mech. Med. Biol. 20(7), 2050046 (2020)

L.J. Wang, J. Guo, C. Xu, T.Z. Wu, H.P. Lin, Hybrid model predictive control strategy of supercapacitor energy storage system based on double active bridge. Energies 12(11), 2134 (2019)

L.J. Wang, Y. Ji, H.L. Yang et al., Decomposition-based multiinnovation gradient identification algorithms for a special bilinear system based on its input-output representation. Int. J. Robust Nonlinear Control 30(9), 3607–3623 (2020)

L.J. Wang, Y. Ji, L.J. Wan, N. Bu, Hierarchical recursive generalized extended least squares estimation algorithms for a class of nonlinear stochastic systems with colored noise. J. Franklin Inst. 356(16), 10102–10122 (2019)

Y. Wang, W. Wei, J. Xiang, Multipoint interpolated DFT for sine waves in short records with DC components. Sig. Process. 131, 161–170 (2017)

K. Wang, L. Zhang, H. Wen, et al., A sliding-window DFT based algorithm for parameter estimation of multi-frequency signal. Digit. Signal Proc. 97, 102617 (2020)

M.H. Wu, H.H. Yue, J. Wang et al., Object detection based on RGC mask R-CNN. IET Image Proc. 14(8), 1502–1508 (2020)

H.F. Xia, Y. Ji, Y.J. Liu et al., Maximum likelihood-based multi-innovation stochastic gradient method for multivariable systems. Int. J. Control Autom. Syst. 17(3), 565–574 (2019)

L. Xu et al., Hierarchical Newton and least squares iterative estimation algorithm for dynamic systems by transfer functions based on the impulse responses. Int. J. Syst. Sci. 50(1), 141–151 (2019)

L. Xu et al., Separable multi-innovation stochastic gradient estimation algorithm for the nonlinear dynamic responses of systems. Int. J. Adapt. Control Signal Process. 34(7), 937–954 (2020)

L. Xu et al., Separable recursive gradient algorithm for dynamical systems based on the impulse response signals. Int. J. Control Autom. Syst. 18(12), 3167–3177 (2020)

L. Xu et al., Auxiliary model multiinnovation stochastic gradient parameter estimation methods for nonlinear sandwich systems. Int. J. Robust Nonlinear Control 31(1), 148–165 (2020)

L. Xu, G.L. Song, A recursive parameter estimation algorithm for modeling signals with multi-frequencies. Circuits Syst. Signal Process. 39(8), 4198–4224 (2020)

C.C. Yin, Y.Z. Wen, An extension of Paulsen-Gjessing’s risk model with stochastic return on investments. Insurance Math. Econom. 52(3), 469–476 (2013)

X. Zhang et al., Adaptive parameter estimation for a general dynamical system with unknown states. Int. J. Robust Nonlinear Control 30(4), 1351–1372 (2020)

X. Zhang et al., Recursive parameter estimation and its convergence for bilinear systems. IET Control Theory Appl. 14(5), 677–688 (2020)

X. Zhang et al., Hierarchical parameter and state estimation for bilinear systems. Int. J. Syst. Sci. 51(2), 275–290 (2020)

X. Zhang et al., Recursive parameter identification of the dynamical models for bilinear state space systems. Nonlinear Dyn. 89(4), 2415–2429 (2017)

X. Zhang et al., Highly computationally efficient state filter based on the delta operator. Int. J. Adapt. Control Signal Process. 33(6), 875–889 (2019)

X. Zhang et al., State estimation for bilinear systems through minimizing the covariance matrix of the state estimation errors. Int. J. Adapt. Control Signal Process. 33(7), 1157–1173 (2019)

X. Zhang et al., Recursive identification of bilinear time-delay systems through the redundant rule. J. Franklin Inst. 357(1), 726–747 (2020)

Y. Zhang, Z. Yan, C.C. Zhou, T.Z. Wu, Y.Y. Wang, Capacity allocation of HESS in micro-grid based on ABC algorithm. Int. J. Low-Carbon Technol. 15(4), 496–505 (2020)

Y.X. Zhao, P. Chen, H.L. Yang, Optimal periodic dividend and capital injection problem for spectrally positive Levy processes. Insurance Math. Econom. 74, 135–146 (2017)

X.H. Zhao, H. Dong, H.S. Dai, On spectrally positive Levy risk processes with Parisian implementation delays in dividend payments. Stat. Probability Lett. 140, 176–184 (2018)

N. Zhao, Y. Liang, D. Niyato et al., Deep reinforcement learning for user association and resource allocation in heterogeneous cellular networks. IEEE Trans. Wireless Commun. 18(11), 5141–5152 (2019)

S. Zhao, F. Wang, H. Xu, J. Zhu, Multi-frequency identification method in signal processing. Digit. Signal Proc. 19(4), 555–566 (2009)

Z.Y. Zhao, X.Y. Wang, P. Yao, Y.T. Bai, A health performance evaluation method of multirotors under wind turbulence. Nonlinear Dyn. 102(3), 1701–1715 (2020)

Y.X. Zhao, C.C. Yin, The expected discounted penalty function under a renewal risk model with stochastic income. Appl. Math. Comput. 218(10), 6144–6154 (2012)

R. Zheng, H. Chen, D. Vandepitte, S. Gallas, B. Zhang, Generation of sine on random vibrations for multi-axial fatigue tests. Mech. Syst. Signal Process. 126, 649–661 (2019)

Y.M. Zhou, S.J. Mei, J.J. Feng et al., Effects of PEDOT:PSS:GO composite hole transport layer on the luminescence of perovskite light-emitting diodes. RSC Adv. 10(44), 26381–26387 (2020)

L. Zhou, H. Tao, W. Paszke, V. Stojanovic, H. Yang, PD-type iterative learning control for uncertain spatially interconnected systems. Mathematics 8(9), 1528 (2020)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61873111), the Qing Lan Project of Jiangsu Province, the “333” Project of Jiangsu Province (No. BRA2018328) and the Jiangsu Overseas Visiting Scholar Program for University Prominent Young & Middle-Aged Teachers and Presidents and the High Training Project for Teachers’ Professional Leaders in Higher Vocational Colleges of Jiangsu Province (No. 2021GRGDYX073).


Ethics declarations

Data Availability Statement

Data sharing is not applicable to this article as no new data were created or analyzed in this study.


Cite this article

Xu, L. Separable Multi-innovation Newton Iterative Modeling Algorithm for Multi-frequency Signals Based on the Sliding Measurement Window. Circuits Syst Signal Process 41, 805–830 (2022). https://doi.org/10.1007/s00034-021-01801-x


DOI: https://doi.org/10.1007/s00034-021-01801-x