Abstract

This paper addresses a modified Newton (MNT) algorithm for the source localization problem utilizing time difference of arrival. As the improvement of the Newton (NT) method, the proposed algorithm can guarantee convergence stability in the case of bad initial values by using the Tikhonov (TI) regularization theory. Moreover, a two-stage MNT algorithm is proposed for the source localization with sensor position errors. Theoretical analysis is provided to illustrate that the two-stage MNT algorithm has less computational load and faster convergence speed compared with the MNT algorithm. Simulation results show the superior location accuracy and better convergence performance of the proposed MNT and two-stage MNT algorithms in comparison with relative methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Time difference of arrival (TDOA)-based source localization is a key technology in many fields such as navigation, surveillance, wireless sensor networks and wireless communications [8, 15, 19]. The source localization is not a simple problem for the high nonlinearity of TDOA equations related to the unknown emitting source. Researchers have developed the closed-form algorithms to linearize the nonlinear localization problem, which can be categorized into the linear least squares (LS) [7], two-step weighted least squares (WLS) [3], constrained total least squares (CTLS) [22], constrained weighted least squares (CWLS) [5], and multidimensional scaling (MDS) analysis [18]. The closed-form algorithms can only attain the CRLB in a mild noise environment, and the bias of the location result becomes significant in large noise levels. Due to the drawback, researchers have improved the closed form to reduce the bias in [1, 11]. Another method to handle the nonlinearity is to use iteration through linearization. Foy [6] and Chernoguz [4] provide such a solution using Gauss–Newton implementation of the maximum likelihood estimator (MLE) under Gaussian noise. Such a technique requires carefully chosen initial guesses that are near the actual solution. Convergence is not guaranteed, and one could end up with a local minimum solution. Moreover, some researchers combine the closed-form algorithm and the iterative method. Weng et al. [20] and Yu et al. [23] use the closed-form solution as the initial value and then improve the location accuracy by the iterative methods.

Beside the problem mentioned above, most of the localization algorithms assume the sensor positions are precisely known. However, the location of the receiver is not entirely known in practical application, the existence of the sensor position error will have a greater adverse effect on the TDOA location accuracy, and even the CRLB will rise with the sensor error. Although some closed-form algorithms take the sensor error into account to reduce the estimation error, such as the work [12, 13, 16], these algorithms still have the threshold effect and can only be used in slight noise environment. What’s more, semidefinite programming (SDP) algorithm [17, 21] is proposed to relax the non-convex localization problem. This algorithm is heavily dependent on the tightness of relaxation that transforms the non-convex optimization problem into a convex one. Moreover, the iterative method is also employed to refine the SDP solutions in order to obtain better estimation accuracy approaching the CRLB.

This paper mainly studies the solution to the convergence problem in iterative algorithms. First, the objective function is determined based on the maximum likelihood estimator and the Newton method is used to solve the TDOA equations. It is known that the Newton method is based on the quadratic approximation of the Taylor series, which ignores the terms higher than second order of Taylor-series expansion. However, due to the high nonlinearity of the TDOA localization, a bad initial value which may cause the contribution of the higher terms significant to the solution can still lead the iteration to diverge. Then, a modified Newton algorithm (MNT) is proposed for the stable convergence problem. In the MNT algorithm, we present the Tikhonov (TI) method in regularization theory to modify the ill-posed Hessian matrix caused by the bad initial value into a well-conditioned one. In addition, an L-curve method is developed to determine the significant loading parameter, which makes the proposed algorithm converge quickly on the desired solution. The theory of the MNT algorithm can also be used to propose the modified Taylor-series (MTS) algorithm instead of the TS method. The proposed MNT and MTS algorithms exhibit robustness to the convergent problem and better capability to distinguish and remove the local minimums from the global solutions compared with the TS and NT methods. Last, a two-stage Newton algorithm is proposed for the source localization in the presence of sensor errors, which reduces the large calculations of the high-dimensional Hessian matrix inversion caused by the sensor position errors and improves the convergence speed. Simulation results demonstrate that the proposed MNT and two-stage MNT algorithms have better convergence performance compared with the NT method and more precise location accuracy compared with the closed-form algorithms at moderate and large noises.

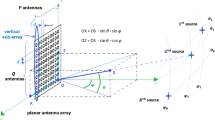

2 Problem Formulation

Consider a three-dimensional (3-D) scenario where \( M \) passive sensors collaborate to determine an unknown emitting source. The source location is \( {\mathbf{x}} = [x,y,z]^{T} \), and the positions of the sensor are located at \( {\mathbf{s}}_{i} = [x_{i} ,y_{i} ,z_{i} ]^{T} \), \( i = 1,2, \ldots ,M \). The distance between the source and the ith sensor is

where symbol \( \left\| {{\kern 1pt} \cdot {\kern 1pt} } \right\|_{2} \) denotes the 2-norm. Without loss of generality, the first sensor is chosen as the reference and the TDOA measurement can be expressed as

where c is the known signal propagation speed, \( n_{i1} /c \) is the TDOA measurement noise, and \( d_{i1} \) is often referred to as range difference of arrival (RDOA). The RDOA measurements can be formed as

where

where \( {\mathbf{n}}_{t} \) denotes the RDOA measurement noise vector that is satisfied to be zero mean Gaussian with covariance matrix \( E({\mathbf{n}}_{t} {\mathbf{n}}_{t}^{T} ) = {\mathbf{Q}}_{t} \). Based on the ML estimator, the objective is to find \( {\mathbf{x}} \) to minimize the following cost function:

3 Proposed MNT and MTS Methods

The Newton method is a classical iterative algorithm which improves the source location at each iteration using the quadratic approximation of the Taylor series. Denoting \( k = 1,2, \ldots ,K \) as the iterative index, the next point \( {\mathbf{x}}_{k + 1} \), we have

where \( \nabla f({\mathbf{x}}) \) and \( \nabla^{2} f({\mathbf{x}}) \) denote the gradient and Hessian, respectively. From (7), they can be formed as

where

Notice that the Hessian matrix \( \nabla^{2} f({\mathbf{x}}) \) from (10) is well conditioned when the initial value is close to the source, and then the iteration can converge on the desired solution. Due to the high nonlinearity of the TDOA localization, the Newton’s method has a critical problem that it cannot guarantee the convergence stability. When the initial value usually provided by the closed-form solution deteriorates at large noise levels, the contribution of the higher terms in the Taylor-series expansion becomes significant to the solution, which may cause the Hessian matrix ill-posed and lead the Newton’s method to diverge. The reason for the ill-posed Hessian matrix problem follows two criteria[2, 9]: (i) The eigenvalues of the Hessian decay gradually to zero and (ii) the condition number (the ratio of the largest to the smallest nonzero eigenvalues) is large.

In order to improve the convergence stability of Newton’s method, we first analyze the influence of the ill-posed Hessian matrix on convergence. Setting \( {\mathbf{b}} = - \nabla f({\mathbf{x}}) \) and \( {\mathbf{A}} = \nabla^{2} f({\mathbf{x}}) \) from (9) and (10), the SVD of \( {\mathbf{\rm A}} \) is decomposition of the form

where \( {\mathbf{U}} = ({\mathbf{u}}_{1} , \ldots ,{\mathbf{u}}_{n} ) \) and \( {\mathbf{V}} = ({\mathbf{v}}_{1} , \ldots ,{\mathbf{v}}_{n} ) \) are matrices with orthonormal columns \( {\mathbf{U}}^{T} {\mathbf{U}} = {\mathbf{V}}^{T} {\mathbf{V}}{\mathbf{ = }}{\mathbf{I}}_{n} \) and \( {\varvec{\Sigma}} = {\text{diag}}(\sigma_{1} , \ldots ,\sigma_{n} ) \). The \( \sigma_{i} \), \( {\mathbf{u}}_{i} \), and \( {\mathbf{v}}_{i} \) are the singular value and their corresponding left and right singular vectors of \( {\mathbf{A}} \), respectively. From (8), we can obtain

Since the Fourier coefficients \( \left| {{\mathbf{u}}_{i}^{T} {\mathbf{b}}} \right| \) corresponding to the smaller eigenvalues \( \sigma_{i} \) do not decay as fast as the eigenvalues, the solution \( \Delta {\mathbf{x}} \) is dominated by the terms in the sum corresponding to the smallest \( \sigma_{i} \). As a consequence, the solution \( \Delta {\mathbf{x}} \) has sign changes frequently and thus appears completely random.

For the Newton’s method in the source localization, it is necessary to avoid the appearance of ill-posed matrices, which needs to dampen or filter out the contributions to the solution corresponding to the small singular values. Here, a TI technique is introduced to modify the Hessian matrix which transforms (17) into solving the form of

Then, the regularized solution \( \Delta {\mathbf{x}}_{\text{reg}} \) can be given as

where \( f_{i} = \frac{{\sigma_{i}^{2} }}{{\sigma_{i}^{2} + \lambda^{2} }} \) means the filter factor.

Remark 1

For the source localization, it is known that the first term in (10) has a greater contribution than the second term, which easily satisfies the two ill-posed criteria with a bad initial value. By using the TI technique, the smallest singular value becomes \( \sigma_{ \hbox{min} } + \lambda^{2} /\sigma_{ \hbox{min} } \) which drives farther away from zero than the original value \( \sigma_{ \hbox{min} } \), and the condition number changes into \( \text{(}\sigma_{ \hbox{max} } + \lambda^{2} /\sigma_{ \hbox{max} } \text{)}/\text{(}\sigma_{ \hbox{min} } + \lambda^{2} /\sigma_{ \hbox{min} } \text{)} \) which becomes smaller and more reasonable than the original value \( \sigma_{ \hbox{max} } /\sigma_{\hbox{min} } \). Hence, compared with the NT algorithm, the proposed algorithm reduces the condition number to ensure stable convergence in the ill-posed condition.

Here the parameter \( \lambda \) denotes the regularization parameter, and it is an important quantity which controls the properties of the regularized solution. By introducing the parameter \( \lambda \), the condition number of the Hessian matrix modified by the TI technique decreases and the regularized solution \( \Delta {\mathbf{x}}_{\text{reg}} \) becomes more proper. This paper exploits an L-curve method to determine the loading parameters, which is based on a log–log plot of corresponding residual norm \( \left\| {{\mathbf{A}}\Delta {\mathbf{x}} - {\mathbf{b}}} \right\|_{2} \) as abscissa and regularized norm \( \left\| {\Delta {\mathbf{x}}} \right\|_{2} \) as ordinate, where the expressions of the norm are formulated as \( \left\| {{\mathbf{A}}\Delta {\mathbf{x}} - {\mathbf{b}}} \right\|_{2}^{2} = \sum\limits_{i = 1}^{n} {((1 - f_{i} {\mathbf{)u}}_{i}^{T} {\mathbf{b)}}^{2} } \) and \( \left\| {\Delta {\mathbf{x}}} \right\|_{2}^{2} = \sum\limits_{i = 1}^{n} {(f_{i} \frac{{{\mathbf{u}}_{i}^{T} {\mathbf{b}}}}{{\sigma_{i} }})^{2} } \). Then, it is found that this curve has a particular “L” shape and that the optimal loading parameter corresponds to a point on the curve near the “corner” of the L-shaped region [10, 14]. For the localization problem, the L-curve can be defined by a smooth, computable formula. Thus, the loading parameter is found by locating the inflexion with the highest curvature \( \kappa = \frac{{\rho^{\prime}\theta^{\prime\prime} - \rho^{\prime\prime}\theta^{\prime}}}{{[(\rho^{\prime})^{2} + (\theta^{\prime})^{2} ]^{{\frac{3}{2}}} }} \), where \( \rho = \left\| {{\mathbf{A}}\Delta {\mathbf{x}} - {\mathbf{b}}} \right\|_{2}^{{}} \) and \( \theta = \left\| {\Delta {\mathbf{x}}} \right\|_{2}^{{}} \), and the \( ( \cdot )^{\prime} \) denotes differentiation with respect to the loading parameter \( \lambda \).

Remark 2

To analyze the behavior of the L-curve, we denote \( \Delta {\bar{\mathbf{x}}} \) as the exact solution corresponding to the exact \( {\bar{\mathbf{b}}} \) in (17), and then the error is defined as \( \Delta {\mathbf{x}}_{\text{reg}} - \Delta {\bar{\mathbf{x}}}\varvec{ = }\sum\limits_{i = 1}^{n} {f_{i} \frac{{{\mathbf{u}}_{i}^{T} {\mathbf{(b}} - {\bar{\mathbf{b}}})}}{{\sigma_{i} }}} {\mathbf{v}}_{i} {\kern 1pt} + \sum\limits_{i = 1}^{n} {(f_{i} - 1)\frac{{{\mathbf{u}}_{i}^{T} {\bar{\mathbf{b}}}}}{{\sigma_{i} }}} {\mathbf{v}}_{i} {\kern 1pt} \). Here, the first term is the perturbation error due to the perturbation \( {\mathbf{e}} = {\mathbf{b}} - {\bar{\mathbf{b}}} \), and the second term is the regularization error caused by regularization of the unperturbed component \( {\mathbf{b}} \). Furthermore, the horizontal part of the L-curve corresponding to solutions dominated by the regularization error is very sensitive to changes in \( \lambda \), while the \( \left\| {\Delta {\bar{\mathbf{x}}}} \right\|_{2} \) changes a little with \( \lambda \). In contrast, the vertical part of the L-curve corresponding to solutions dominated by the perturbation error varies dramatically with \( \lambda \), while simultaneously, the residual norm does not change much. In this way, the L-curve clearly displays the trade-off between minimizing the residual norm and the side constraint.

One should note that ignoring the second term of (10), the NT method falls into the TS method, so the regularization theory can also be used to modify the TS method. Without the second term of (10), using (8), (9), (10), and (19), the MTS method can be proposed to improve the convergent capability compared with the TS method.

Remark 3

Compared with the TS method, the NT method gives superior performance of location accuracy since the second term of (10) provides a more precise Hessian matrix. However, the Hessian matrix may be negative definite with a bad initial value in large noises, which causes the iteration to diverge. Ignoring the second term of (10), the TS method has better convergent capability since the first term of (10) is positive definite, but the solution of the TS method may be easily trapped in the local minimum. The proposed methods inherit the character of the TS and NT methods. Compared with the MTS method, the MNT method has better location accuracy but slower convergence speed.

Remark 4

We judge whether the iteration is stable and convergent by observing the objective function value. The iteration is considered to be divergent when the objective function value \( f({\mathbf{x}}_{k + 1} ) > f({\mathbf{x}}_{k} ) \). Due to the nonlinear source location problem, sometimes although the iteration is convergent, the solution may be trapped into the local minimum, which causes the accuracy of location estimate to become worse. Notice that the iterative methods just take a few times to converge on the global solution, but the local minimum solution requires more times to converge. Thus, the threshold of iterative time should be set to remove the local minimums. If the threshold we set is high, there will still be some local minimum values in the solutions. On the other hand, several global solutions will be missed with the low threshold. With the regularization theory, the MTS and MNT methods actually slow the downtrend of the objective function. When the solution is local minimum, the proposed methods need more times to converge than the TS and NT methods, which can easily reach the threshold. And the proposed methods can still converge on the global minimum in a few times. Therefore, the proposed methods have better capability to recognize the local minimum compared with the TS and NT methods.

4 Two-Stage Modified Newton Method in the Presence of Sensor Position Errors

4.1 The Two-Stage MNT Algorithm in the Sensor Position Errors

In practice, it is difficult to obtain the exact estimates of sensor positions. With inaccurate sensor positions, the objective function parameterized on the unknown vector \( \varvec{\theta}= [{\mathbf{x}}^{T} ,{\tilde{\mathbf{s}}}^{T} ]^{T} \) changes into

The gradient \( \nabla f(\varvec{\theta}_{k} ) \) and the Hessian \( \nabla^{2} f(\varvec{\theta}_{k} ) \) can be formed as

where

Then, the next point \( \varvec{\theta}_{k + 1} \) is

Notice that this approach requires not only the estimate of the source location, but also the estimate of the sensor positions. Therefore, the \( \Delta\varvec{\theta} \) contains both the change in the positions of source and that in the sensors, and the first three elements of \( \Delta\varvec{\theta} \) imply the source location change that is of interest.

Here, we present the two-stage MNT algorithm. First, the solution \( {\mathbf{x}} \) is solved using (8) by the regularization method with (19), and this approach ensures the solution converges on a point closed to the source quickly. Second, the sensor position errors are considered to pursue more precise accuracy using (26). Before introducing the advantages of the algorithm, we first prove two theorems.

Theorem 1

The existence of\( \nabla^{2} f(\varvec{\theta})^{ - 1} \)depends on whether\( {\mathbf{X}} \)is invertible. Since the sensor position errors are only considered in the second stage, and the initial value of the source is improved in the first stage, the terms\( \frac{\partial }{{\partial {\mathbf{x}}^{T} }}({\mathbf{G}}\frac{{\partial {\mathbf{r}}}}{{\partial {\mathbf{x}}}})^{T} {\mathbf{Q}}_{t}^{ - 1} ({\mathbf{Gr}} - {\mathbf{d}}) \)in (10), \( \frac{\partial }{{\partial {\tilde{\mathbf{s}}}^{T} }}({\mathbf{G}}\frac{{\partial {\mathbf{r}}}}{{\partial {\mathbf{x}}}})^{T} {\mathbf{Q}}_{t}^{ - 1} ({\mathbf{Gr}} - {\mathbf{d}}) \)in (23), and\( \frac{\partial }{{\partial {\tilde{\mathbf{s}}}^{T} }}({\mathbf{G}}\frac{{\partial {\mathbf{r}}}}{{\partial {\tilde{\mathbf{s}}}}})^{T} {\mathbf{Q}}_{t}^{ - 1} ({\mathbf{Gr}} - {\mathbf{d}}) \)in (25) can be ignored in the proposed algorithm; then, the Hessian\( \nabla^{2} f(\varvec{\theta}) \)in (22) can be written as

Assuming the Hessian\( \nabla^{2} f(\varvec{\theta}) \)is invertible, the\( \nabla^{2} f(\varvec{\theta})^{ - 1} \)becomes

From (31), we see that the existence of\( \nabla^{2} f(\varvec{\theta})^{ - 1} \)needs the matrix\( {\mathbf{X}} \)and\( {\mathbf{Z}} - {\mathbf{Y}}^{T} {\mathbf{X}}^{ - 1} {\mathbf{Y}} \)to be invertible. It is known that\( {\mathbf{Z}} - {\mathbf{Y}}^{T} {\mathbf{X}}^{ - 1} {\mathbf{Y}} \)is positive definite in [12]. Therefore, the existence of\( \nabla^{2} f(\varvec{\theta})^{ - 1} \)only depends on whether\( {\mathbf{X}} \)is invertible. It is noteworthy that\( {\mathbf{X}} \)denotes the main contribution of Hessian matrix in the case of no sensor position errors, and thus the MNT algorithm is addressed to ensure\( {\mathbf{X}} \)to be well conditioned.

Theorem 2

Sensor position errors slow the downtrend of the objective function, which takes lots of iterative times to find correct solution from the initial value. The change\( \Delta\varvec{\theta} \)in (26) is

The change\( \Delta {\mathbf{x}} \)denotes the first three elements of\( \Delta\varvec{\theta} \), using the estimation sensor positions as initial guess of the true sensor positions, and we have

Let\( \varvec{\phi}= ({\mathbf{Z}} - {\mathbf{Y}}^{T} {\mathbf{X}}^{ - 1} {\mathbf{Y}})^{ - 1} \), then (33) is rewritten as

Notice that the first term in\( [ \cdot ] \)of (34) is the\( \Delta {\mathbf{x}} \)in the case of no sensor position errors and the other term in\( [ \cdot ] \)of (34) implies\( \Delta {\mathbf{x}} \)that is affected by the sensor position errors. Utilizing some conclusions in [12], we have\( {\mathbf{Q}}_{t}^{ - 1} = {\mathbf{LL}}^{T} \)by Cholesky decomposition. Let\( \varvec{\alpha}= {\mathbf{L}}^{T} ({\mathbf{G}}\frac{{\partial {\mathbf{r}}}}{{\partial {\tilde{\mathbf{s}}}^{T} }}) \), \( \varvec{\beta}= {\mathbf{L}}^{T} ({\mathbf{G}}\frac{{\partial {\mathbf{r}}}}{{\partial {\mathbf{x}}^{T} }}) \), the formula in the\( [ \cdot ] \)of (34) can be expressed as

rewrite (35) as

It is worth noticing that\( \varvec{\alpha}\cdot\varvec{\phi}\cdot\varvec{\alpha}^{T} \text{(}{\mathbf{I}} -\varvec{\beta}\text{(}\varvec{\beta}^{T}\varvec{\beta}\text{)}^{ - 1}\varvec{\beta}^{T} ) \)is positive definite since\( {\mathbf{I}} -\varvec{\beta}\text{(}\varvec{\beta}^{T}\varvec{\beta}\text{)}^{ - 1}\varvec{\beta}^{T} \)is a projection matrix, which predicates that the affection of\( \Delta {\mathbf{x}} \)that is brought by the sensor errors decreases the downtrend of the objective function in the iterations.

Since pursuing the inverse of the Hessian \( \nabla^{2} f(\varvec{\theta}) \) is computationally intensive especially when the number of sensors M is large, the little change \( \Delta {\mathbf{x}} \) leads to slow convergence rate and huge computation, but these sensor position errors have to be considered for a more accurate solution of the source. Therefore, the first stage is to increase the change of \( \Delta {\mathbf{x}} \) in each iteration, and then the location will be solved by the second stage in considering the sensor position errors. The proposed two-stage MNT algorithm can reduce the degree of Hessian matrix and improve the convergence speed, which makes the algorithm feasible and efficient.

4.2 Supplement and Analysis

In summary, the procedure and analysis of the two-stage MNT algorithm with the sensor position errors are given here.

4.2.1 First-Stage Processing

-

Input the initial \( {\mathbf{x}}_{0} \) and the tolerance \( \varepsilon \). Set k = 0.

-

Compute the gradient \( \nabla \varvec{f}({\mathbf{x}}_{k} ) \) and the Hessian \( \nabla^{2} \varvec{f}({\mathbf{x}}_{k} ) \) using (9) and (10).

-

Compute \( \Delta {\mathbf{x}}_{k} \) by the modified Newton method using (19), and set \( {\mathbf{x}}_{k + 1} = {\mathbf{x}}_{k} + \Delta {\mathbf{x}}_{k} \).

-

If \( \left\| {\Delta {\mathbf{x}}_{k} } \right\| < \varepsilon \), then do output \( {\mathbf{x}}_{1} = {\mathbf{x}}_{k + 1} \), and go to the second stage;

Otherwise, set k = k+1 and repeat.

4.2.2 Second-Stage Processing

-

Input the initial \( {\mathbf{x}}_{1} \). Set l = 0.

-

Compute the gradient \( \nabla \varvec{f}(\varvec{\theta}_{l} ) \) and the Hessian \( \nabla^{2} \varvec{f}(\varvec{\theta}_{l} ) \) using (21) and (22).

-

Compute \( \Delta\varvec{\theta}_{l} \) and set \( {\mathbf{x}}_{l + 1} = {\mathbf{x}}_{l} + \Delta {\mathbf{x}}_{l} \) using (26), where \( \Delta {\mathbf{x}}_{l} \) is the first three elements of \( \Delta\varvec{\theta}_{l} \).

-

If \( \left\| {\Delta {\mathbf{x}}_{l} } \right\| < \varepsilon \), then do output \( {\mathbf{x}} = {\mathbf{x}}_{l + 1} \), and stop;

Otherwise, set l = l+1 and repeat.

The covariance matrix of the estimated vector \( \varvec{\theta} \) can be obtained by using the perturbation approach. In the presence of sensor noises, we have

The \( \nabla \varvec{f}(\varvec{\theta}) \) and \( \nabla^{2} \varvec{f}(\varvec{\theta}) \) can be rewritten as

Since \( {E\{ }{\mathbf{w}}{\} } \approx {\mathbf{0}}_{M - 1 + 3M} \) and \( {E\{ }{\mathbf{ww}}^{T} {\} = }{\mathbf{Q}} \), the covariance matrix is given by

The MNT method needs to calculate the inverse of the Hessian matrix in each iteration, which is computationally intensive when the Hessian is extended with respect to inaccurate sensor positions. Utilizing the MNT algorithm of (26) directly, the complexity of the MNT method is approximately \( {\mathcal{O}}(N(3M + 3)^{3} ) \) (M is the number of sensors, N is the iteration times), where N may be quite large for the reason of the slow downtrend of objective function. However, the complexity of the two-stage MNT algorithm is approximately \( {\mathcal{O}}(L_{1} (3M + 3)^{3} + L_{2} \cdot 3^{3} ) \), where \( L_{1} \) and \( L_{2} \) denote the iterative times with and without considering the sensor position errors, respectively. The total \( L(L = L_{1} + L_{2} ) \) is much less than N. This approach makes the algorithm feasible. It is acknowledged that the MNT algorithm is computationally intensive compared with the closed-form algorithm since it is an iterative method. However, sometimes we have to pursue more precise accuracy of the source location, especially in the case of moderate and large noises, and the MNT algorithm is fit to be applied to this condition.

5 Simulations

5.1 Experiment 1: MNT and MTS Algorithms for Source Localization

Five sensors are used in the simulation to find the source location, with the positions as (300, 100, 150), (400, 150, 100), (300, 500, 200), (350, 200, 100), (− 100, − 100, − 100) m. The near-field source or the far-field source is located at (280, 325, 275) or (2800, 3250, 2750) m. The estimation accuracy in terms of root-mean-square error (RMSE) is defined as \( \text{RMSE} = \sqrt {E\{ \left\| {{\mathbf{x}} - {\hat{\mathbf{x}}}} \right\|_{2}^{2} \} } \). The RDOA measurements are obtained by adding the real values of zero mean Gaussian noise and covariance matrix \( {\mathbf{Q}}_{t} = \sigma_{t}^{2} \varTheta \), where \( \sigma_{t}^{2} \) is the RDOA noise power and \( \varTheta \) is equal to 1 in the diagonal elements and 0.5 otherwise. In practice, the initial value can be chosen from the solution of the closed-form algorithms. In this simulation, the famous two-step WLS algorithm is used to pursue the solution as the initial value. The threshold of iterative time is set to be 10. The statistical results come from 5000 independent simulations.

Figures 1 and 2 depict the convergent probability of the TS, NT, MTS and MNT in the near-field and far-field source. From Fig. 1, we can see the TS and NT methods may easily diverge in the iterations as \( \sigma_{t}^{2} \) increases. The proposed methods MTS and MNT have better capability to make the iteration convergence. The improvement in the probability can attain about 10 percent at large noise. In Fig. 2, all the TS, MTS, and MNT methods are robust to make the iteration convergence except the NT method. Although the TS method has high convergent probability, the solutions contain several local minimums in the simulations.

Figures 3 and 4 show the accuracy of location estimate of the proposed methods in terms of RMSE, compared with the two-step WLS algorithm, the MDS algorithm, the TS, NT methods, and the CRLB in the near-field and far-field source. In Fig. 3, all the algorithms can attain the CRLB in small noises. When \( \sigma_{t}^{2} \) increases, the threshold effect resulting from the nonlinear nature of the two-step WLS and MDS algorithms occurs at some large noise level. The RMSE of the TS, NT methods also deviates further from the CRLB caused by the local minimums. The proposed method MNT performs better compared with the other methods in large noises. Notice that the MTS method has worse precise accuracy than the MDS algorithm; the reason is that a few local minimums still exist in the MTS solutions since the threshold of iterative time set in the simulations is not too strict. In Fig. 4, we can see that the proposed MTS and MNT methods have the same results. The reason is that the second term of (10) in the iterations is quite small in far-field scenario when the solution is the global minimum. They are lower than the other algorithms and even the CRLB at large noises. This is because they have become biased estimators in sufficient noise conditions.

Figures 5 and 6 depict the impact of the initial value comparing the NT method and the MNT method in the near-field and far-field source. Good initial values are provided in Figs. 5a and 6a while poor initial values are given in Figs. 5b and 6b. As clearly seen, the NT method and the MNT method both can find the precise source location with good initial values. For the worse initial values, the NT method diverges in the iterations while the MNT method is still robust to make the iteration convergence and pursue the true location. It is acknowledged that with better initial value, the MNT algorithm may have a little worse performance than the NT method in the convergence speed. The reason is that the modified Hessian matrix slows the downtrend of the function. Based on the simulation result, we can give the conclusion that the proposed MNT algorithm exhibits much better iterative stability with some acceptable loss of the convergence speed, which fits to low SNR environment.

5.2 Experiment 2: Two-Stage MNT Algorithm for Source Localization with Sensor Position Error

In the presence of sensor position errors, the true positions of the sensor are also generated by adding zero mean Gaussian noise with covariance matrix \( {\mathbf{Q}}_{s} = \sigma_{s}^{2} \text{diag[5,5,5,2,2,2,2,2,2,1,1,1,3,3,3]} \), where \( \sigma_{s}^{2} \) denotes the sensor position error power. It is worth noting that the TDOA noises and the sensor position noises are uncorrelated.

The RMSEs of the proposed two-stage MNT, two-step WLS methods, and CRLB are shown in Figs. 7 and 8 for the near-field and far-field source in the presence of sensor position errors. In this experiment, we fix \( \sigma_{t}^{2} = 1 \) with the near-field source or \( \sigma_{t}^{2} = 0.1 \) with the far-field source and vary \( \sigma_{s}^{2} \). It is seen that the two-stage MNT method gives better performance compared with the two-step WLS method. As \( \sigma_{s}^{2} \) increases, the estimation accuracy of the two-stage MNT method has a smaller deviation from the CRLB than the two-step WLS method.

From Fig. 9, we can observe the convergence speed of the MNT method and the two-stage MNT method with the sensor position errors. In this simulation, set \( \sigma_{t}^{2} = 1 \) and \( \sigma_{s}^{2} = 10 \). Figure 9a, b shows the change of the RMSE and the objective function f in an iterative way, and some head values of the function f are cut for better vision in Fig. 9b. Under the same tolerance, it is obvious that the downtrend of RMSE and objective function is very slow when the MNT method is directly used for the sensor position uncertainty condition. Using the two-stage MNT algorithm, the solution has fast convergence speed with the same initial value, which reduces huge computation.

6 Conclusion

In this paper, we present the MNT method for the TDOA source localization and the two-stage MNT method for source localization with sensor position errors. The regularization theory is introduced in the proposed methods to solve the instability of the convergence problem of the iterative methods. Compared with the NT method, the proposed methods have superior capability of making the iteration stably converge with bad initial values. Compared with the closed-form algorithms, the proposed methods give much better location accuracy in large noises.

References

R.J. Barton, D. Rao, Performance capabilities of long-range UWB-IR TDOA localization systems. EURASIP J. Adv. Signal Process. 2008(1), 236791 (2008)

D. Calvetti, S. Morigi, L. Reichel et al., Tikhonov regularization and the L-curve for large discrete ill-posed problems. J. Comput. Appl. Math. 123(1), 423–446 (2000)

Y.T. Chan, K.C. Ho, A simple and efficient estimator for hyperbolic location. IEEE Trans. Signal Process. 42(8), 1905–1915 (2002)

N.G. Chernoguz, A smoothed Newton–Gauss method with application to bearing-only position location. IEEE Trans. Signal Process. 43(8), 2011–2013 (1995)

K.W. Cheung, H.C. So, W.K. Ma et al., A constrained least squares approach to mobile positioning: algorithms and optimality. EURASIP J. Adv. Signal Process. 2006(1), 020858 (2006)

W.H. Foy, Position-location solutions by Taylor-series estimation. IEEE Trans. Aerosp. Electron. Syst. 12(2), 187–194 (2007)

B. Friedlander, A passive localization algorithm and its accuracy analysis. IEEE J. Ocean. Eng. 12(1), 234–245 (1987)

T.T. Ha, R.C. Robertson, Geostationary satellite navigation systems. IEEE Trans. Aerosp. Electron. Syst. AES- 23(2), 247–254 (1987)

P.C. Hansen, Regularization tools: a Matlab package for analysis and solution of discrete ill-posed problems. Numer. Algorithms 6(1), 1–35 (1994)

P.C. Hansen, T.K. Jensen, G. Rodriguez, An adaptive pruning algorithm for the discrete L-curve criterion. J. Comput. Appl. Math. 198(2), 483–492 (2015)

K.C. Ho, Bias reduction for an explicit solution of source localization using TDOA. IEEE Trans. Signal Process. 60(5), 2101–2114 (2012)

K.C. Ho, L. Yang, On the use of a calibration emitter for source localization in the presence of sensor position uncertainty. IEEE Trans. Signal Process. 56(12), 5758–5772 (2008)

K.C. Ho, X. Lu, L. Kovavisaruch, Source localization using TDOA and FDOA measurements in the presence of receiver location errors: analysis and solution. IEEE Trans. Signal Process. 55(2), 684–696 (2007)

D.P. O’Leary, P.C. Hansen, The use of the L-curve in the regularization of discrete Ill-posed problems. SIAM J. Sci. Comput. 14(6), 1487–1490 (1993)

A.H. Sayed, Network-based wireless location. IEEE Signal Process. Mag. 22, 24–40 (1996)

M. Sun, L. Yang, K.C. Ho, Accurate sequential self-localization of sensor nodes in closed-form. Signal Process. 92(12), 2940–2951 (2012)

G. Wang, Y. Li, N. Ansari, A semidefinite relaxation method for source localization using TDOA and FDOA measurements. IEEE Trans. Veh. Technol. 62(2), 853–862 (2013)

H.W. Wei, R. Peng, Q. Wan et al., Multidimensional scaling analysis for passive moving target localization with TDOA and FDOA measurements. IEEE Trans. Signal Process. 58(3), 1677–1688 (2010)

E. Weinstein, Optimal source localization and tracking from passive array measurements. IEEE Trans. Acoust. Speech Signal Process. 30(1), 69–76 (2003)

Y. Weng, W. Xiao, L. Xie, Total least squares method for robust source localization in sensor networks using TDOA measurements. Int J Distrib. Sensor Netw. 7, 1063–1067 (2011)

K. Yang, G. Wang, Z. Luo, Efficient convex relaxation methods for robust target localization by a sensor network using time differences of arrivals. IEEE Trans. Signal Process. 57(7), 2775–2784 (2009)

K. Yang, J. An, X. Bu et al., Constrained total least-squares location algorithm using time-difference-of-arrival measurements. IEEE Trans. Veh. Technol. 59(3), 1558–1562 (2010)

H. Yu, G. Huang, J. Gao et al., An efficient constrained weighted least squares algorithm for moving source location using TDOA and FDOA measurements. IEEE Trans. Wirel. Commun. 11(1), 44–47 (2012)

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grants 61801363.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Fang, J., He, Z. Robust Modified Newton Algorithms Using Tikhonov Regularization for TDOA Source Localization. Circuits Syst Signal Process 38, 5342–5359 (2019). https://doi.org/10.1007/s00034-019-01098-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-019-01098-x