Abstract

In this paper we prove some geometric inequalities for closed surfaces in Euclidean three-space. Motivated by Gage’s inequality for convex curves, we first verify that for convex surfaces the Willmore energy is bounded below by some scale-invariant quantities. In particular, we obtain an optimal scaling law between the Willmore energy and the isoperimetric ratio under convexity. In addition, we address Topping’s conjecture relating diameter and mean curvature for connected closed surfaces. We prove this conjecture in the class of simply-connected axisymmetric surfaces, and moreover obtain a sharp remainder term which provides the first evidence that optimal shapes are necessarily straight even without convexity.


1 Introduction

Throughout this paper, unless otherwise specified, we simply call a closed surface \(\Sigma \) smoothly immersed into \({\mathbb {R}}^3\) a surface. The purpose of this paper is to obtain some geometric inequalities involving mean curvature in some classes of surfaces. The contents are mainly two-fold: the first part aims at extending Gage’s classical isoperimetric inequality for convex curves to surfaces; the second one is devoted to Topping’s conjecture relating mean curvature and diameter.

1.1 Gage-type inequalities for convex surfaces

A classical isoperimetric inequality by Gage [9] asserts that

\[\int _\gamma \kappa ^2\,ds \ge \frac{\pi L}{A} \tag{1.1}\]

holds for every convex Jordan curve \(\gamma \) in \({\mathbb {R}}^2\), where \(\kappa \), L, A denote the curvature, the length, and the enclosed area, respectively. The equality is attained only by a round circle. Inequality (1.1) multiplied by L relates the normalized bending energy and the isoperimetric ratio, which are different order measurements of roundness, as both are scale invariant and minimized by round circles. As a corollary we deduce that every curve shortening flow of convex curves decreases the isoperimetric ratio. This is a direct consequence of (1.1) and the derivative formula along a curve shortening flow \(\{\gamma _t\}_{t\in [0,T)}\):

\[\frac{d}{dt}\frac{L^2}{A} = -\frac{2L}{A}\left( \int _{\gamma _t} \kappa ^2\,ds - \frac{\pi L}{A}\right) .\]

The convexity assumption in (1.1) is necessary, as shown by a dumbbell-like curve with a long thin neck, for which the length alone can be made arbitrarily large. See also recent progress [4, 8] in which a weaker inequality is established even for nonconvex curves.

In this paper we first aim at extending Gage’s inequality to convex surfaces. To this end we first look at the behavior of the isoperimetric ratio under mean curvature flow, namely a one-parameter family of smooth closed surfaces \(\{\Sigma _t\}_{t\in [0,T)}\) whose normal velocity coincides with the mean curvature. Standard first variation formulae imply that the isoperimetric ratio \(I=A^{\frac{3}{2}}/V\) satisfies

where A denotes the surface area, V the enclosed volume, and H the inward mean curvature scalar, defined by the average of principal curvatures so that \(H\equiv 1\) for the unit sphere. Note that for round spheres the right-hand side of (1.2) is always zero, and this is compatible with the fact that round spheres are self-shrinkers.

Our main result ensures that for convex surfaces the so-called Willmore energy \(\int H^2\) can be related with the remaining term \(\frac{A}{V}\int H\) in a similar way to (1.1).

Theorem 1.1

There exists a universal constant \(C\ge 4\) such that

\[\frac{A}{V}\int _\Sigma H \le C\int _\Sigma H^2 \tag{1.3}\]

holds for every convex surface \(\Sigma \subset {\mathbb {R}}^3\). In addition, for every \(C<4\) there exists a convex surface for which (1.3) does not hold.

We thus obtain a higher dimensional version of Gage’s inequality up to a universal constant (for example, we can take \(C=108\pi \)). On the other hand, we also discover the necessary lower bound \(C\ge 4\) due to a cigar-like surface \(\Sigma _\varepsilon \), namely a cylinder of radius \(\varepsilon \ll 1\) and height 1 capped by hemispheres, which satisfies

\[\lim _{\varepsilon \rightarrow 0}\left( \frac{A(\Sigma _\varepsilon )}{V(\Sigma _\varepsilon )}\int _{\Sigma _\varepsilon } H\right) \left( \int _{\Sigma _\varepsilon } H^2\right) ^{-1} = 4.\]

In particular, \(C=3\) is not allowable; this fact with (1.2) highlights the significant difference from curve shortening flow that there exists a convex mean curvature flow that increases the isoperimetric ratio in a short time interval. This should also be compared with classical results by Huisken [11], which give ample evidence that “convex mean curvature flows become spherical”; namely, every convex initial surface retains convexity before shrinking to a point in finite time, and a normalized flow converges to a round sphere. In addition, we should also recall that under mean curvature flow the isoperimetric difference \(A^\frac{3}{2}-6\sqrt{\pi }V\) always monotonically decreases, see e.g. [21, 25].

Since it turned out that an “optimal shape” for (1.3) is not a round sphere, we are now led to seek another form that is potentially optimized by a sphere. From this point of view it is worth mentioning that, combining (1.3) with Minkowski’s inequality (see e.g. (24) in [5, §20], [20, Notes for Section 6.2], or recent [1]):

\[\int _\Sigma H \ge \sqrt{4\pi A}, \tag{1.4}\]

we can directly relate the Willmore energy and the isoperimetric ratio \(I=A^\frac{3}{2}/V\).

Corollary 1.2

There exists a universal constant \(C' \ge 3/(2\sqrt{\pi })\) such that

\[\frac{A^{\frac{3}{2}}}{V} \le C'\int _\Sigma H^2 \tag{1.5}\]

holds for every convex surface \(\Sigma \subset {\mathbb {R}}^3\). For \(C'<3/(2\sqrt{\pi })\) a round sphere does not satisfy (1.5).

The lower bound of \(C'\) is due to a round sphere, in contrast to C. In fact, we expect that the nature of (1.5) is quite different from that of (1.3) in view of the optimal constants \(C=\sup _\Sigma E\) and \(C'=\sup _\Sigma E'\) for convex \(\Sigma \), where \(E:=(\frac{A}{V}\int H)(\int H^2)^{-1}\) and \(E':=I(\int H^2)^{-1}\). One reason is that for a cigar-like surface \(\Sigma _\varepsilon \) with \(\varepsilon \ll 1\), we already know \(E(\Sigma _\varepsilon )\approx 4>3=E({\mathbb {S}}^2)\), while \(E'(\Sigma _\varepsilon )=O(\varepsilon ^{1/2})\rightarrow 0\). Finding the optimal values of C and \(C'\) seems out of scope and is left open. At this time, only \(E'\) has potential to be optimized by a round sphere.
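The values quoted above can be checked by hand, since every quantity is explicit for a capsule. The following is a minimal sketch, assuming the cigar-like surface is exactly a cylinder of radius \(\varepsilon \) and height 1 capped by two hemispheres (so \(H=1/(2\varepsilon )\) on the cylinder and \(H=1/\varepsilon \) on the caps); the function names are illustrative and not part of the paper.

```python
import math

def capsule(eps, height=1.0):
    """Return (A, V, int H, int H^2) for a cylinder of radius eps and the
    given height, capped by two hemispheres of radius eps."""
    area = 2 * math.pi * eps * height + 4 * math.pi * eps**2
    volume = math.pi * eps**2 * height + (4.0 / 3.0) * math.pi * eps**3
    # H = 1/(2*eps) on the cylinder and H = 1/eps on the spherical caps.
    total_h = math.pi * height + 4 * math.pi * eps
    willmore = math.pi * height / (2 * eps) + 4 * math.pi
    return area, volume, total_h, willmore

def unit_sphere():
    """Return (A, V, int H, int H^2) for the unit sphere, where H = 1."""
    return 4 * math.pi, 4 * math.pi / 3, 4 * math.pi, 4 * math.pi

def ratio_E(area, volume, total_h, willmore):
    """E = (A/V) * int H / int H^2."""
    return (area / volume) * total_h / willmore

def ratio_E_prime(area, volume, total_h, willmore):
    """E' = I / int H^2 with I = A^(3/2) / V."""
    return area**1.5 / volume / willmore

for eps in (1e-1, 1e-2, 1e-3, 1e-4):
    q = capsule(eps)
    print(eps, ratio_E(*q), ratio_E_prime(*q))
print("sphere", ratio_E(*unit_sphere()), ratio_E_prime(*unit_sphere()))
```

As \(\varepsilon \) decreases, the first ratio approaches 4 from below while the second decays like \(\varepsilon ^{1/2}\), in line with the discussion above; the sphere gives 3 and \(3/(2\sqrt{\pi })\).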

Both estimates (1.3) and (1.5) are optimal in view of the scaling laws. Indeed, for a pancake-like surface \(\Sigma _\varepsilon \) with \(\varepsilon \ll 1\), namely the surface surrounding the \(\varepsilon \)-neighborhood of a flat disk, both sides in (1.5) (and hence (1.3)) diverge as \(O(\varepsilon ^{-1})\).

As in Gage’s result, the convexity assumption in (1.3) and (1.5) cannot be removed. Indeed, for a well-known example of a nearly double-sphere connected by a catenoid, the left-hand sides in (1.3) and (1.5) diverge, while the Willmore energy remains less than \(8\pi \), cf. [13, 22].

Estimate (1.5) is meaningful only in the large-deviation regime (\(I\gg 1\)), although Gage’s inequality is optimal even for nearly round curves. A kind of small-deviation counterpart of (1.5) is already obtained by Röger–Schätzle [19]. They show that every surface \(\Sigma \) with \(I(\Sigma )-I({\mathbb {S}}^2)\le \sigma \) (not necessarily convex) satisfies

The presence of \(\sigma >0\) is in general necessary due to nearly double-spheres, but Corollary 1.2 now implies that if we assume that \(\Sigma \) is convex, then (1.6) holds for some universal constant \({\bar{C}}\) (not depending on \(\sigma \)). The proof of (1.6) is based on de Lellis–Müller’s rigidity estimate for nearly umbilical spheres [7]. We remark that the optimal constant in (a version of) de Lellis–Müller’s estimate is known if \(\Sigma \) is convex [18] or outward-minimizing [1], but in order to know the optimal \({\bar{C}}\) in (1.6) substantial progress seems necessary even if we assume convexity.

The main issue in proving Theorem 1.1 is how to relate the Willmore energy and other quantities. Röger–Schätzle’s idea is applicable to nearly umbilical surfaces but not to our large-deviation regime. Recently, a potential theoretic approach has been developed for obtaining several old and new geometric inequalities [1,2,3], but it does not seem directly applicable to our problems. In addition, although many inequalities involving total mean curvature are known for convex surfaces, e.g. by using the mean-width representation (cf. [5]), much less is known about the Willmore energy. In particular, we cannot obtain our estimates via the Cauchy–Schwarz inequality \(\int H^2 \ge A^{-1}(\int H)^2\) since this is not sharp for pancake-like surfaces.

Our idea is to establish and employ the following estimate for convex surfaces:

\[\int _\Sigma H^p \ge c_p\,{\mathcal {D}}^{p-1}\,{{\,\mathrm{diam}\,}}(\Sigma )^{2-p}, \tag{1.7}\]

where \({{\,\mathrm{diam}\,}}(\Sigma )\) denotes the extrinsic diameter, and \({\mathcal {D}}\) the “degeneracy” (defined in Sect. 2), which is comparable with the minimal width under \({{\,\mathrm{diam}\,}}(\Sigma )=1\). Theorem 1.1 then follows by (1.7) with \(p=2\) and by the additional estimate that \({\mathcal {D}}\gtrsim \frac{A}{V}\int H\). The proof of (1.7) is based on a slicing argument with the help of geometric restriction due to convexity. As a key ingredient we also use the general scaling law \(\int |\kappa |^p \gtrsim r^{1-p}\) for each cross section plane curve, where r is the minimal width of the curve. We finally indicate that our explicit choice of \(c_p\) in (1.7) is not optimal in general but sharp as \(p\rightarrow 1\), and in particular \(c_1=\pi \) agrees with the optimal constant in Topping’s conjecture, the details of which are given below.

1.2 Topping’s conjecture for axisymmetric surfaces

In his 1998 paper [25] Topping poses the following

Conjecture

Let \(\Sigma \subset {\mathbb {R}}^3\) be an immersed connected closed surface. Then

\[\int _\Sigma |H| \ge \pi \,{{\,\mathrm{diam}\,}}(\Sigma ). \tag{1.8}\]

The constant \(\pi \) cannot be improved due to cigar-like surfaces. In [26] Topping himself already proves a modified version of (1.8), which weakens \(\pi \) to \(\frac{\pi }{32}\) but strengthens \({{\,\mathrm{diam}\,}}(\Sigma )\) to the intrinsic diameter (and also deals with higher dimensions). The exact form of (1.8) is classically known for convex surfaces, where a degenerate segment is optimal (see Sect. 3.3). To the author’s knowledge, the only other known case is that of constant mean curvature (CMC) surfaces [24]. For CMC surfaces, even \(\int _\Sigma |H| \ge 2\pi {{\,\mathrm{diam}\,}}(\Sigma )\) holds true, with equality only for a round sphere, and hence this class does not contain optimal shapes. Therefore, the unique nature of Topping’s conjecture seems not well understood for nonconvex surfaces.

In this paper we gain more insight into Topping’s conjecture by focusing on axisymmetric surfaces. This class is flexible enough to include both nearly optimal and highly nonconvex surfaces. The assumption of axisymmetry fairly reduces the freedom of surfaces, but certainly keeps substantial difficulties; for example, even for the simplest dumbbell-like surface, the diameter may not be attained in the axial direction; even if attained, the co-area formula in that direction may involve a part where the mean curvature vanishes, so that we cannot directly extract the diameter and do need further quantitative controls.

Our main result, however, gives an affirmative answer to Topping’s conjecture for every simply-connected axisymmetric surface (including dumbbells). In fact, we obtain a stronger assertion by discovering a sharp remainder term. For a simply-connected axisymmetric surface \(\Sigma \), we define a scale-invariant quantity U by

where \(\omega _\mathrm {axis}\) is a unit vector parallel to an axis of symmetry \(L_\mathrm {axis}\), and T denotes the unit tangent of a constant-speed minimal geodesic \(\gamma _\Sigma :[0,1]\rightarrow \Sigma \subset {\mathbb {R}}^3\) in \(\Sigma \) connecting a (unique) pair of points in \(\Sigma \cap L_\mathrm {axis}\) such that \(\gamma _\Sigma (1)-\gamma _\Sigma (0)=\lambda \omega _\mathrm {axis}\) holds for some \(\lambda \ge 0\). Note that \(\Sigma \) is generated by revolving \(\gamma _\Sigma \) around \(L_\mathrm {axis}\); we call \(\gamma _\Sigma \) a generating curve of \(\Sigma \) as usual. We also remark that unless \(\Sigma \) is a round sphere, \(L_\mathrm {axis}\) is unique so that \(\omega _\mathrm {axis}\) is unique up to the sign, and \(\gamma _\Sigma \) is unique up to the revolution and the choice of parameter-orientation. In particular, \(U(\Sigma )\) is well defined and strictly positive for any given \(\Sigma \). The quantity \(U(\Sigma )\) measures a certain deviation from a “unidirectional” shape since \(U(\Sigma )\ll 1\) corresponds to \(|T-\omega _\mathrm {axis}|\ll 1\) in a certain sense.

Here is our main result concerning Topping’s conjecture.

Theorem 1.3

There exists a universal constant \(\sigma >0\) such that

holds for every simply-connected axisymmetric surface \(\Sigma \subset {\mathbb {R}}^3\).

Theorem 1.3 is sharp in the sense that there is a sequence such that the ratio \(\frac{1}{U(\Sigma _\varepsilon )}(\frac{1}{{{\,\mathrm{diam}\,}}(\Sigma _\varepsilon )}\int _{\Sigma _\varepsilon }|H|-\pi )\) converges as \(\varepsilon \rightarrow 0\). However, for a cigar-like surface \(\Sigma _\varepsilon \) this ratio diverges and behaves like \(\varepsilon ^{-1}\). One example for which the ratio converges is a “double-cone” surface, which is made by connecting the circular bases of two thin cones (see Remark 3.6). This reveals that conical ends are more favorable than round caps at a higher order level.

Estimate (1.9) not only directly verifies Topping’s conjecture for a new class of surfaces, but also implies that a minimizing sequence in that class needs to degenerate into a segment, thus giving the first evidence for nonconvex surfaces that optimal shapes are necessarily almost straight.

Corollary 1.4

Topping’s conjecture (1.8) holds true for every simply-connected axisymmetric surface. Moreover, for a sequence \(\{\Sigma _n\}_n\) of such surfaces, if

then up to similarity a sequence \(\{\gamma _{\Sigma _n}\}_n\) of generating curves of \(\Sigma _n\) converges to a unit-speed segment \({\bar{\gamma }}:[0,1]\rightarrow {\mathbb {R}}^3\) in the sense of \(W^{1,p}\) for every \(1\le p <\infty \).

The convergence in \(W^{1,p}\) is optimal in the sense that \(\gamma _n\) does not converge to \({\bar{\gamma }}\) in \(W^{1,\infty }\) since at the endpoints \(\gamma _n\) is perpendicular to an axis of symmetry and hence to \({\bar{\gamma }}\); even in the interior, \(\gamma _n\) may have small loops that vanish as \(n\rightarrow \infty \).

In the proof of Theorem 1.3, given an axisymmetric \(\Sigma \), we construct a comparison convex surface \(\Sigma '\) such that \(\int _\Sigma |H| \ge \int _{\Sigma '}|H|\) and \({{\,\mathrm{diam}\,}}(\Sigma ')\ge {{\,\mathrm{diam}\,}}(\Sigma )\) by using a rearrangement argument introduced in [6], in which Minkowski’s inequality (1.4) is extended to certain axisymmetric surfaces. Since the mean curvature is already well studied in [6], our main contribution in this argument is concerning the diameter, which is less tractable due to its nonlocal nature. In addition, in order to extract the remainder U, we need essentially new quantitative controls in the rearrangement procedures.

This paper is organized as follows. In Sect. 2 we first prove estimate (1.7) and then prove Theorem 1.1. In Sect. 3 we first recall the rearrangement arguments and establish general diameter estimates, and then prove Theorem 1.3.

1.3 Notation

The notation \(f\lesssim g\) means that there is a universal \(C>0\) such that \(f\le C g\) holds. We also define \(f\gtrsim g\) similarly, and use \(f\sim g\) in the sense that both \(f\lesssim g\) and \(f\gtrsim g\) hold. In addition, the notation \(f\ll g\) in an assumption means that there exists \(\varepsilon >0\) such that if \(f\le \varepsilon g\), then the assertion holds.

2 Gage-type inequalities for convex surfaces

We first define the degeneracy of a convex surface \(\Sigma \). For a unit vector \(\omega \in {\mathbb {S}}^2\subset {\mathbb {R}}^3\) the width (breadth) of \(\Sigma \) in the direction \(\omega \) is defined by

where \(\cdot \) denotes the inner product in \({\mathbb {R}}^3\). The extrinsic diameter of \(\Sigma \) is given by

Then we define the degeneracy \({\mathcal {D}}\) of \(\Sigma \) by

Note that the degeneracy \({\mathcal {D}}\) is comparable with the ratio (diameter)/(minimal width) up to universal constants, but slightly different as \(\omega \) is taken from the orthogonal complement of a diameter-direction \(\omega _0\). Here we adopt this \({\mathcal {D}}\) for computational simplicity.

Now we are in a position to state (1.7) rigorously.

Theorem 2.1

Every convex surface \(\Sigma \subset {\mathbb {R}}^3\) satisfies

where \(c_p\) is a positive constant depending only on p. In particular, we can take

Remark 2.2

The above choice yields the optimal constant \(c_1=\pi \) only for \(p=1\). In the case of \(p=2\), we have \(c_2= 2 (\frac{1}{2}\mathrm {B}(\tfrac{1}{2},\tfrac{3}{4}))^2 (\sqrt{5}-2) = 0.6777700... \ge \frac{2}{3}\), where \(\mathrm {B}\) denotes the beta function.
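The numerical value in Remark 2.2 is easy to reproduce. The snippet below is a small sanity check, not part of the proof; it evaluates the stated closed form using the identity \(\mathrm {B}(a,b)=\Gamma (a)\Gamma (b)/\Gamma (a+b)\), and the helper name `beta` is ours.

```python
import math

def beta(a, b):
    """Euler beta function via the gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)

# c_2 = 2 * ((1/2) * B(1/2, 3/4))^2 * (sqrt(5) - 2), as stated in Remark 2.2.
c2 = 2 * (0.5 * beta(0.5, 0.75)) ** 2 * (math.sqrt(5) - 2)
print(c2)
```

The printed value agrees with \(0.67777\ldots \) and in particular exceeds \(\frac{2}{3}\).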

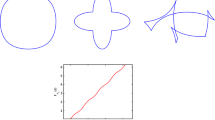

Our proof of Theorem 2.1 is based on a slicing argument, and hence it is important to gain scale-analytic insight into the curvature energy for plane curves; such a point of view played important roles in previous variational studies of elastic curves, see e.g. [15,16,17]. In this paper we use the fact that the curvature energy of each cross section curve of a convex surface admits a lower bound (also valid for nonconvex curves).

Lemma 2.3

Let \(p\ge 1\). For an immersed closed plane curve \(\gamma \) bounded by two parallel lines of distance \(r>0\), we have

where \(\kappa \) denotes the curvature and s the arclength parameter of \(\gamma \), and

Proof

Up to rescaling we may assume that \(r=1\).

In addition, up to a rigid motion, we may assume that \(\gamma \) lies in the strip region \([0,1]\times {\mathbb {R}}\). Then the curve \(\gamma \) contains at least two disjoint graph curves represented by functions \(u_i:(a_i,b_i)\rightarrow {\mathbb {R}}\) (\(i=1,2\)) with \(0\le a_i\le b_i\le 1\) such that \(u_i'(c_i)=0\) at some \(c_i\in (a_i,b_i)\), and \(|u_i'(x)|\rightarrow \infty \) both as \(x\downarrow a_i\) and as \(x\uparrow b_i\); indeed, such graphs are found near the maximum and minimum of \(\gamma \) in the vertical direction. Dropping the index i, we now prove for the graph curve \(G_u:=\{(x,u(x)) \in {\mathbb {R}}^2 \mid x\in [a,b]\}\) that

which implies (2.3) after addition with respect to the two graphs.

We begin with the direct computation that

where \(f(t):=\int _0^{t}(1+\tau ^2)^{\frac{1-3p}{2p}}d\tau \). Applying the Hölder inequality to the right-hand side, and recalling that \(b-a\le 1\), we have

Now for (2.4) it suffices to prove

This follows by decomposing the left-hand side’s integration interval at \(c\in (a,b)\) (where \(u'(c)=0\)) and by using, for each of the two integrals, the triangle inequality \(\int |(f(u'))'|\ge |\int (f(u'))'|\), the boundary conditions \(|u'(a+0)|=|u'(b-0)|=\infty \) and \(u'(c)=0\), and also the oddness of f. \(\square \)
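To make the size of the constant concrete, one can compare it with the energy of a circle. The sketch below rests on an assumption: reading the proof above, each of the two graphs contributes \((2\lim _{t\rightarrow \infty }f(t))^p\) when \(r=1\), so we take \({\tilde{c}}_p=2\,(2\lim _{t\rightarrow \infty }f(t))^p\) as a reconstructed value of the constant. The limit of f is evaluated through the standard identity \(\int _0^\infty (1+\tau ^2)^{-a}d\tau =\frac{1}{2}\mathrm {B}(\tfrac{1}{2},a-\tfrac{1}{2})\). All function names are illustrative.

```python
import math

def f_infinity(p):
    """lim_{t->inf} f(t) = int_0^inf (1 + tau^2)^((1-3p)/(2p)) dtau,
    evaluated as (1/2) * B(1/2, 1 - 1/(2p))."""
    a, b = 0.5, 1.0 - 1.0 / (2.0 * p)
    return 0.5 * math.gamma(a) * math.gamma(b) / math.gamma(a + b)

def c_tilde(p):
    """Reconstructed constant (an assumption, see the lead-in):
    two graphs, each contributing (2 * f(inf))^p when r = 1."""
    return 2.0 * (2.0 * f_infinity(p)) ** p

def circle_energy(p, r=1.0):
    """int |kappa|^p ds for a circle of diameter r, which fits exactly
    between two parallel lines at distance r."""
    radius = r / 2.0
    return (1.0 / radius) ** p * 2.0 * math.pi * radius

for p in (1.0, 1.5, 2.0, 3.0, 5.0):
    print(p, c_tilde(p), circle_energy(p))
```

Under this reading the circle attains equality for \(p=1\), where both sides equal \(2\pi \), and lies strictly above the bound for \(p>1\). For \(p=2\) the value \(2^{-2}{\tilde{c}}_2(\sqrt{5}-2)\) reproduces the constant \(c_2\) of Remark 2.2.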

In the case of \(p=2\), the same kind of lemma is obtained in [16, Lemma 4.3]. In addition, Henrot-Mounjid [10] study a closely related problem, which minimizes the same curvature energy with \(p=2\) among convex curves of prescribed inradius \(r_\mathrm {in}\); the inradius is always bounded by the half-width r/2. The constants in both [10, 16] are represented by using \(\cos \theta \), but they are in fact the same as our constant \({\tilde{c}}_p\) with \(p=2\) after a change of variables. In view of this, we can also represent \({\tilde{c}}_p\) as

Remark 2.4

(Optimality of \({\tilde{c}}_p\)) Compared to \(c_p\) in Theorem 2.1, the value of \({\tilde{c}}_p\) is more important because of its optimality. Below we briefly argue the optimality, assuming \(p>1\); the case of \(p=1\) is trivial. Let f be as in the proof of Lemma 2.3. Let \(u:[-1,1]\rightarrow {\mathbb {R}}\) be the primitive function of the increasing function \(f^{-1}({A}x)\), where \({A}:=\lim _{t\rightarrow \infty }f(t)\in (0,\infty )\), such that \(u(0)=0\). Then u is a symmetric convex function such that \(\lim _{x\rightarrow \pm 1}|u'(x)|=\infty \), and also \(\lim _{x\rightarrow \pm 1}|u(x)|\) is defined as a finite value because for \(x\in (0,1)\) we have

and \(zf'(z)\sim z^{\frac{1-2p}{p}}\) as \(z\rightarrow \infty \), where the exponent \(\frac{1-2p}{p}\) is strictly less than \(-1\). In addition, we have the identity \((f(u'))'\equiv {A}\) so that in view of the Hölder inequality in the proof of Lemma 2.3, it is straightforward to check that a closed convex curve made by connecting the graph curve of u and its vertical reflection attains the equality in (2.3) for \(r=2\). Notice that the resulting closed curve is of class \(C^2\) (but not \(C^3\)); the only nontrivial point is whether the curvature is well defined where the two graph curves are connected, but in fact the curvature vanishes there since \((f(u'))'=|\kappa |^p\sqrt{1+|u'|^2}\) is constant while \(|u'(x)|\rightarrow \infty \) as \(|x|\rightarrow 1\). We finally remark that when \(p=2\), the graph curve of u corresponds to the so-called rectangular elastica, and our closed curve coincides with the one constructed by Henrot-Mounjid.

Now we turn to the proof of Theorem 2.1.

Proof of Theorem 2.1

Up to rescaling we may assume that \({{\,\mathrm{diam}\,}}(\Sigma )=1\) and only need to prove that

Step 1. Choose one direction \(\omega \in {\mathbb {S}}^2\) such that \(b_\Sigma (\omega )=1\) (\(={{\,\mathrm{diam}\,}}(\Sigma )\)). Up to a rigid motion, we may assume that the height function \(h(q) := q\cdot \omega \) maps \(\Sigma \) to [0, 1]. Let \(\Sigma _t\) denote the cross section \(\{q\in \Sigma \mid h(q)=t\}\) for \(t\in (0,1)\). In addition, let \(\theta _\omega \in [0,\pi ]\) denote the angle between \(\omega \) and the outer unit normal \(\nu \) of \(\Sigma \), so that \(\cos \theta _\omega =\nu \cdot \omega \). Note that \(\sin \theta _\omega >0\) for \(t\in (0,1)\). Then the co-area formula yields

where \({\mathcal {H}}^d\) denotes the d-dimensional Hausdorff measure. Moreover, at any point \(q\in \Sigma \) such that \(t=h(q)\in (0,1)\), let \(k_{\Sigma _t}\) be the inward curvature of the cross section curve \(\Sigma _t\), and let \(\kappa _\omega \) be the inward curvature of a (unique) curve contained in \(\Sigma \) and the plane \(P:=q+\mathrm {span}\{\nu (q),\omega \}\). Then from a simple geometric calculation we deduce

Therefore, inserting (2.7) into (2.6), and using the fact that \((X+Y)^p \ge X^p\) for \(X,Y\ge 0\) with equality only for \(Y=0\), we obtain

The strict positivity follows since otherwise \(\kappa _\omega \equiv 0\) but this contradicts the fact that \(\Sigma \) is closed e.g. in view of the Gauss–Bonnet theorem.

Step 2. We then prove that for every \(t\in (0,1)\) and \(q\in \Sigma \) with \(t=h(q)\),

By symmetry we only need to argue for \(t\le \frac{1}{2}\) and prove that

Fix \(q\in \Sigma \) (and hence also \(t=h(q)\le \frac{1}{2}\)). Up to a rigid motion, we may assume that the maximum (resp. minimum) of the height function h is attained by (1, 0, 0) (resp. (0, 0, 0)), so that \(\omega =(1,0,0)\) in particular, and also that there is some function

such that \(q \in G_f \subset \Sigma \), where \(G_f:=\{(x,0,f(x))\in {\mathbb {R}}^3 \mid x\in [0,1]\}\). Note that the upper bound \(f\le 1\) follows since \({{\,\mathrm{diam}\,}}(\Sigma )=1\). Then elementary geometry implies that \(\sin \theta _\omega \ge \sin \theta _\omega '\) for the angle \(\theta _\omega '\) between \(\omega \) and the normal \((-f'(t),0,1)\) of the graph of f, that is,

In addition, by the geometric restriction (2.11) of f, we have for \(t\le \frac{1}{2}\),

Indeed, \(f'(t)\le 1/t\) holds since otherwise \(f'(x)>1/t\) for \(x\in (0,t)\) by concavity, which contradicts \(f\le 1\) and \(f(0)=0\); by symmetry, using \(f(1)=0\), we also obtain \(f'(t)\ge -1/(1-t)\); since \(t\le \frac{1}{2}\), these two estimates imply (2.13). From (2.12) and (2.13) we deduce the desired (2.10).

Step 3. We finally complete the proof. Inserting (2.9) into (2.8), we now obtain

By definition of \({\mathcal {D}}\) (and \({{\,\mathrm{diam}\,}}(\Sigma )=1\)), we can apply Lemma 2.3 with \(r=1/{\mathcal {D}}\) to \(\Sigma _t\) to the effect that

where the right-hand side does not depend on t. Therefore, inserting (2.15) into (2.14), we obtain the desired (2.5) for \(c_p:=2^{-p}{\tilde{c}}_p\big (\int _0^1g(t)^{p-1}dt\big )\); this constant agrees with the one in the statement of Theorem 2.1 after simple calculations. \(\square \)

We now estimate the degeneracy \({\mathcal {D}}\) to prove Theorem 1.1. A key fact we use is the following scaling law (whose prefactor is not optimal).

Lemma 2.5

For a convex surface \(\Sigma \), we have

Proof

Up to rescaling we may assume that \({{\,\mathrm{diam}\,}}(\Sigma )=1\). Let \(r:=1/{\mathcal {D}}\) for notational simplicity. Fixing a diameter direction \(\omega _0\in {\mathbb {S}}^2\) such that \(b_\Sigma (\omega _0)=1\), we let \(\rho _1\in [r,1]\) denote the maximal width among all directions orthogonal to \(\omega _0\), that is, \(\rho _1:=\max \{b_\Sigma (\omega )\mid \omega \cdot \omega _0=0\}\), and \(\omega _1\in {\mathbb {S}}^2\) be a maximizer so that \(b_\Sigma (\omega _1)=\rho _1\) and \(\omega _1\cdot \omega _0=0\). We now separately prove

which immediately imply \(A/V \le 36/r = 36 {\mathcal {D}}\).

To this end we use the fact that there exists a rectangular box (convex body) \(Q\subset {\mathbb {R}}^3\) containing \(\Sigma \) such that each side is perpendicular to one of \(\omega _0,\omega _1,\omega _2\), where \(\omega _2\in {\mathbb {S}}^2\) is chosen to be orthogonal to both \(\omega _0\) and \(\omega _1\), and such that the side-lengths of Q are \(1,\rho _1,\rho _2\), where \(\rho _2:=b_\Sigma (\omega _2)\in [r,\rho _1]\). Then the first estimate in (2.17) follows by the area-monotonicity of convex surfaces that \(\Sigma \subset Q \Rightarrow A(\Sigma )\le A(\partial Q)\), which combined with \(\rho _2\le \rho _1\le 1\) implies that \(A(\Sigma )\le A(\partial Q)=2(\rho _2+\rho _1+\rho _1\rho _2)\le 6\rho _1\). For the second estimate in (2.17) we further use the fact that \(\Sigma \) touches all sides of Q. More precisely, assuming without loss of generality that \(\omega _0,\omega _1,\omega _2\) form the standard basis of \({\mathbb {R}}^3\) and that \(Q=[0,1]\times [0,\rho _1]\times [0,\rho _2]\), we can find points in \(\Sigma \cap \partial Q\) of the form

In addition, since \(\omega _0\) and \(\omega _1\) are defined via maximization, we have

Then the polyhedron P defined by the convex hull of the points in (2.18) is enclosed by \(\Sigma \), and in addition under the constraint (2.19) we deduce from a direct computation that the enclosed volume of P is \(\rho _2\rho _1/6\), so that \(V(\Sigma )\ge \rho _2\rho _1/6 \ge r\rho _1/6\). The proof is complete. \(\square \)

We are now in a position to complete the proof of Theorem 1.1.

Proof of Theorem 1.1

By Theorem 2.1 with \(p=2\) and Lemma 2.5, we obtain

In addition, since the total mean curvature of a convex surface can be represented by the mean width, namely \(\int _\Sigma H = 2\pi B\), where \(B = \frac{1}{|{\mathbb {S}}^2|}\int _{{\mathbb {S}}^2}b_\Sigma (\nu )dS(\nu )\) (cf. (19) & (22) in [5, Chapter 4]), and since \(b_\Sigma (\nu )\le {{\,\mathrm{diam}\,}}(\Sigma )\) in every direction \(\nu \) by definition of diameter, we have

completing the proof with \(C=72\pi /c_2\). (As \(c_2\ge 2/3\), we can take \(C=108\pi \).) \(\square \)
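For orientation, the constant \(C=72\pi /c_2\) can be traced through the three ingredients of the proof. The display below is a sketch reconstructed from the surrounding text rather than a quotation. It writes \(d={{\,\mathrm{diam}\,}}(\Sigma )\) and assumes the scale-restored forms \(A/V\le 36{\mathcal {D}}/d\) (the scaling law for \(A/V\) proved above), \(\int _\Sigma H=2\pi B\le 2\pi d\) (mean width), and \(\int _\Sigma H^2\ge c_2{\mathcal {D}}\) (Theorem 2.1 with \(p=2\)):

\[\frac{A}{V}\int _\Sigma H \le \frac{36{\mathcal {D}}}{d}\cdot 2\pi d = 72\pi {\mathcal {D}} \le \frac{72\pi }{c_2}\int _\Sigma H^2 .\]

Numerically, \(72\pi /c_2\approx 333.7\) for \(c_2\approx 0.67777\), which is slightly below the rounded value \(108\pi \approx 339.3\) obtained from \(c_2\ge \frac{2}{3}\).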

In the rest of this section we briefly observe that \({\mathcal {D}}\) can also be related with other scale invariant quantities with optimal exponents; the reader may skip this part as these estimates are not used in any other part of this paper. For notational simplicity, we let \(d:={{\,\mathrm{diam}\,}}(\Sigma )\) and \(M:=\int _\Sigma H\).

We begin by indicating that in fact the converse of Lemma 2.5 also holds, i.e.,

Indeed, again letting \(d=1\), \(r=1/{\mathcal {D}}\), and \(\omega _0\) be such that \(b_\Sigma (\omega _0)=1\), if we choose \(\omega _2\) to attain the minimal width so that \(b_{\Sigma }(\omega _2)=r=:\rho _2\), and \(\omega _1\) to be orthogonal to both \(\omega _0\) and \(\omega _2\), and write \(\rho _1=b_{\Sigma }(\omega _1)\), and in addition if we take a rectangular box Q and a polyhedron P as in the proof of Lemma 2.5, then we have \(A(\Sigma )\ge A(\partial P) \gtrsim \rho _1\) and \(V(\Sigma )\le V(\partial Q) \lesssim \rho _1\rho _2=\rho _1r\) so that \(A/V \gtrsim 1/r\). Therefore, after retrieving d and combining with Lemma 2.5, we obtain the first relation in (2.21). The second one follows by the fact that \(d\sim M\) holds under convexity; in fact, we have \(d \lesssim M\) (even without convexity by Topping’s inequality [26]), while (2.20) implies that \(M \lesssim d\) under convexity.

Next we focus on the isoperimetric ratio \(I=A^\frac{3}{2}/V\), for which we have

The first one is already observed, cf. (2.21) and (1.4), while the second one follows since \(3VM\le A^2\) (cf. [5, p.145]). Note that both sides in (2.22) are optimal because for a pancake-like (resp. cigar-like) surface \(\Sigma _\varepsilon \), we have \(I=O(\varepsilon ^{-1})\sim {\mathcal {D}}\) (resp. \(I^2=O(\varepsilon ^{-1})\sim {\mathcal {D}}\)).

We finally indicate that the ratio \(R:=r_\mathrm {out}/r_\mathrm {in}\), where \(r_\mathrm {out}\) and \(r_\mathrm {in}\) are the circumradius and the inradius, respectively, is completely comparable with \({\mathcal {D}}\):

Indeed, assuming that \(d=1\) up to rescaling, we obviously have \(r_\mathrm {out}\sim 1\) and \(r_\mathrm {in}\le 1/{\mathcal {D}}\), and hence \({\mathcal {D}} \lesssim R\); in addition, since the polyhedron P in the proof of Lemma 2.5 encloses a ball of radius \(\sim 1/{\mathcal {D}}\), we also have \({\mathcal {D}} \gtrsim 1/r_\mathrm {in}\sim R\).

3 Topping’s conjecture for axisymmetric surfaces

In this section we prove Theorem 1.3, namely Topping’s conjecture with a rigidity estimate. Throughout this section, for notational simplicity, we let

Since we focus on axisymmetric surfaces, we may hereafter assume the following

Hypothesis 3.1

(Simply-connected axisymmetric surface) A surface \(\Sigma \) is represented by using an immersed \(C^{1,1}\) plane curve \(\gamma =(x,z):[0,L]\rightarrow {\mathbb {R}}^2\) as

where \(\gamma \) is parametrized by the arclength \(s\in [0,L]\), and satisfies \(x(0)=z(0)=x(L)=0\), \(z(L)\ge 0\), \(\gamma _s(0)=(1,0)\), \(\gamma _s(L)=(-1,0)\), and also \(x(s)>0\) for any \(s\in (0,L)\). (The notation \(\gamma _s\) means the derivative with respect to s.) For such a surface, throughout this section, we let \(\theta :[0,L]\rightarrow {\mathbb {R}}\) denote a unique Lipschitz function such that for \(s\in [0,L]\),

and such that \(\theta (0)=0\). Note that \(\theta (L)\in \pi +2\pi {\mathbb {Z}}\).

Our strategy is to reduce the problem to the convex one by constructing, for a given \(\Sigma \) satisfying Hypothesis 3.1, a comparison axisymmetric convex surface \(\Sigma '\) such that \(M(\Sigma )\ge M(\Sigma ')\) and \({{\,\mathrm{diam}\,}}(\Sigma )\le {{\,\mathrm{diam}\,}}(\Sigma ')\). To this end, following the strategy in [6], we perform two kinds of rearrangement. The first one is to make the curve never go down vertically, while keeping the horizontal behavior. The second one is to make the curve convex by rearranging the tangential angle. The reason why we weaken the regularity of \(\Sigma \) to \(C^{1,1}\) in Hypothesis 3.1 is that the best regularity retained in these rearrangements is the Lipschitz continuity of \(\theta \), that is, the \(C^{1,1}\)-regularity of \(\Sigma \). (Notice that the \(C^{1,1}\)-regularity is enough for defining M since \(C^{1,1}=W^{2,\infty }\).)

3.1 First rearrangement

Given \(\theta \) as in Hypothesis 3.1, we define \(\theta ^\sharp \) by

so that \(\theta ^\sharp ([0,L])=[0,\pi ]\). Note that \(\theta ^\sharp \) is Lipschitz, \(\theta ^\sharp (0)=0\) and \(\theta ^\sharp (L)=\pi \). For the corresponding curve \(\gamma _\sharp =(x_\sharp ,z_\sharp )\) starting from the origin, we have \(x_\sharp (L)=0\) as \(\cos \theta ^\sharp =\cos \theta \), and \(z_\sharp (L)>0\) as \(\sin \theta ^\sharp \ge 0\) and \(\sin \theta ^\sharp \not \equiv 0\). Hence the corresponding surface \(\Sigma _\sharp \) still satisfies Hypothesis 3.1.

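For the first rearrangement we have

\[M(\Sigma _\sharp )=M(\Sigma ),\qquad {{\,\mathrm{diam}\,}}(\Sigma _\sharp )\ge {{\,\mathrm{diam}\,}}(\Sigma ). \tag{3.2}\]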

Since the equality for M is already known (cf. [6] or Appendix A), we only need to check the diameter control, which is not difficult.

Lemma 3.2

(Diameter control: first rearrangement) Let \(\Sigma \) satisfy Hypothesis 3.1, and let \(\Sigma _\sharp \) be obtained by the first rearrangement of \(\Sigma \). Then \({{\,\mathrm{diam}\,}}(\Sigma )\le {{\,\mathrm{diam}\,}}(\Sigma _\sharp )\).

Proof

Notice the general fact for an axisymmetric surface that, using the reflection operator \(R:(x,z)\mapsto (-x,z)\), we can represent \({{\,\mathrm{diam}\,}}(\Sigma )\) by the maximum of \(\mathrm {dist}(\gamma (s_1),R\gamma (s_2))\) over \(s_1,s_2\in [0,L]\). Notice that \(x\equiv x_\sharp \) holds since \(\cos \theta \equiv \cos \theta ^\sharp \) follows by definition of the first rearrangement. Therefore, it now suffices to show that the vertical distance of any two points does not contract, i.e.,
\[|z_\sharp (s_1)-z_\sharp (s_2)|\ge |z(s_1)-z(s_2)|\quad \text{for all } s_1,s_2\in [0,L].\]

This follows by the fact that \(\sin \theta ^\sharp =|\sin \theta |\) so that
\[|z(s_2)-z(s_1)|=\left| \int _{s_1}^{s_2}\sin \theta \right| \le \int _{s_1}^{s_2}|\sin \theta |=\int _{s_1}^{s_2}\sin \theta ^\sharp =|z_\sharp (s_2)-z_\sharp (s_1)|\quad (s_1\le s_2).\]

The proof is complete. \(\square \)
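Lemma 3.2 can be illustrated numerically. The sketch below is only a sanity check under stated assumptions: the profile \(\theta (s)=s-\sin 2s\) on \([0,\pi ]\) is an illustrative choice, the curve is integrated by the trapezoidal rule, and the diameter is approximated by a maximum over sample pairs using the reflection formula from the proof. The helper names `generating_curve` and `diameter` are hypothetical.

```python
import math

def generating_curve(thetas, ds):
    """Trapezoidal integration of (cos theta, sin theta) from the origin."""
    xs, zs = [0.0], [0.0]
    for t0, t1 in zip(thetas, thetas[1:]):
        xs.append(xs[-1] + 0.5 * ds * (math.cos(t0) + math.cos(t1)))
        zs.append(zs[-1] + 0.5 * ds * (math.sin(t0) + math.sin(t1)))
    return xs, zs

def diameter(xs, zs):
    """Max of dist(gamma(s1), R gamma(s2)) over sample pairs, R(x, z) = (-x, z)."""
    return max(math.hypot(x1 + x2, z1 - z2)
               for x1, z1 in zip(xs, zs) for x2, z2 in zip(xs, zs))

N = 300
ds = math.pi / N
# Illustrative profile (assumption): theta(s) = s - sin(2s), not from the paper.
theta = [i * ds - math.sin(2.0 * i * ds) for i in range(N + 1)]
# First rearrangement: theta# = dist(theta, 2*pi*Z).
theta_sharp = [abs(math.remainder(t, 2.0 * math.pi)) for t in theta]

x, z = generating_curve(theta, ds)
x_sharp, z_sharp = generating_curve(theta_sharp, ds)
diam = diameter(x, z)
diam_sharp = diameter(x_sharp, z_sharp)
print(diam, diam_sharp)  # the second value should not be smaller than the first
```

The horizontal coordinates of the two discrete curves agree up to rounding, so any gain in diameter comes from the vertical direction alone, which mirrors the structure of the proof.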

3.2 Second rearrangement

Given \(\theta \) as in Hypothesis 3.1 such that \(\theta ([0,L])=[0,\pi ]\), we define \(\theta ^*:[0,L]\rightarrow [0,\pi ]\) by the standard nondecreasing rearrangement of \(\theta \):

where \(m(\{\theta \ge c\})\) means the Lebesgue measure of \(\{s\in [0,L] \mid \theta (s)\ge c\}\) (see e.g. [12] for details of the rearrangement argument). Then the resulting surface \(\Sigma _*\) is clearly convex as \(\theta ^*\) is monotone. In addition, \(\Sigma _*\) still satisfies Hypothesis 3.1; indeed, thanks to well-known properties of the rearrangement, the function \(\theta ^*\) inherits the Lipschitz continuity of \(\theta \) [12, Lemma 2.3], and the corresponding curve \(\gamma _*=(x_*,z_*)\) starting from the origin retains all the boundary conditions, i.e.,

where in particular the last condition follows by the integration-preserving property: \(\int _0^Lf(\theta (s))ds=\int _0^Lf(\theta ^*(s))ds\) for any continuous function f [12, p.22, (C)].
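On a uniform grid, the nondecreasing rearrangement has a simple discrete analogue: sorting equally weighted samples. The sketch below uses this analogue (an assumption made for illustration, not the continuous definition) with the sample function \(\theta (s)=s+\sin 2s\) on \([0,\pi ]\), and checks the integration-preserving property and the inheritance of the Lipschitz bound at the level of samples. All names are hypothetical.

```python
import math

def nondecreasing_rearrangement(samples):
    """Discrete analogue of theta -> theta^*: sort equally weighted samples."""
    return sorted(samples)

def riemann_sum(f, samples, ds):
    """Riemann sum of f(theta(s)) ds over equally weighted samples."""
    return ds * math.fsum(f(t) for t in samples)

N = 2000
ds = math.pi / N
mid = [(i + 0.5) * ds for i in range(N)]
# Illustrative non-monotone angle function with values in [0, pi] (assumption).
theta = [s + math.sin(2.0 * s) for s in mid]
theta_star = nondecreasing_rearrangement(theta)

# Integration-preserving property with f = cos and f = sin:
x_end, x_end_star = riemann_sum(math.cos, theta, ds), riemann_sum(math.cos, theta_star, ds)
z_end, z_end_star = riemann_sum(math.sin, theta, ds), riemann_sum(math.sin, theta_star, ds)

# Discrete Lipschitz bounds: largest jump between neighbouring samples.
jump = max(abs(b - a) for a, b in zip(theta, theta[1:]))
jump_star = max(b - a for a, b in zip(theta_star, theta_star[1:]))
print(x_end, x_end_star, z_end, z_end_star, jump, jump_star)
```

Since sorting only permutes the samples, the sums of \(f(\theta )\) agree for every f, which is the discrete counterpart of the property used to retain the boundary conditions \(x_*(L)=0\) and \(z_*(L)=z(L)\).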

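For the second rearrangement we have

\[M(\Sigma _*)\le M(\Sigma ),\qquad {{\,\mathrm{diam}\,}}(\Sigma _*)\ge {{\,\mathrm{diam}\,}}(\Sigma ). \tag{3.5}\]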

Here the remaining task is again only to establish the diameter control (cf. [6] or Appendix A). This second diameter control needs a more delicate argument, but it turns out that the following fine property holds.

Lemma 3.3

(Diameter control: second rearrangement) Let \(\Sigma \) satisfy Hypothesis 3.1. Suppose that \(\theta ([0,L])=[0,\pi ]\). Let \(\Sigma _*\) be the convex surface obtained by the second rearrangement of \(\Sigma \). Then \(\Sigma _*\) encloses \(\Sigma \). In particular, \({{\,\mathrm{diam}\,}}(\Sigma _*)\ge {{\,\mathrm{diam}\,}}(\Sigma )\).

Proof

Step 1. We first reduce the problem by using symmetry. Fix the unique point \({\bar{s}}\in [0,L]\) such that \(\theta ^*({\bar{s}})=\pi /2\) and such that \(\theta ^*(s)<\pi /2\) for every \(s<{\bar{s}}\); in other words, \({\bar{s}}\) is the first point where \(x_*\) attains its maximum. Then we can represent the convex curve \(\gamma _*\) (corresponding to \(\Sigma _*\)) on the restricted interval \([0,{\bar{s}}]\) by a graph curve, namely,

Notice that by symmetry it is sufficient for Lemma 3.3 to prove that the image of \(\gamma \) is included in the epigraph of \(U_*\):
\[\gamma ([0,L])\subset G_*^+. \tag{3.7}\]

Indeed, if we establish (3.7), then since the procedures of rearrangement and vertical reflection commute, applying (3.7) also to the reflected curve \({\tilde{\gamma }}(s)=(x(L-s),z(L)-z(L-s))\), we find that the same kind of inclusion as (3.7) also holds for the subgraph of the upper part of \(\gamma _*\), so that the desired assertion holds.

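For later use we record an elementary geometric property of \(G_*^+\), cf. (3.6):

\[(x,z)\in G_*^+,\quad 0\le x'\le x,\quad z'\ge z\quad \Longrightarrow \quad (x',z')\in G_*^+. \tag{3.8}\]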

Step 2. Now we prove (3.7); more precisely, we fix an arbitrary \(s_\dagger \in [0,L]\) and prove that \((x(s_\dagger ),z(s_\dagger ))\in G_*^+\). Let \(\theta ^\dagger :=(\theta |_{[0,s_\dagger ]})^*\), i.e., the nondecreasing rearrangement of the restriction \(\theta |_{[0,s_\dagger ]}\). Let \(\gamma _\dagger =(x_\dagger ,z_\dagger )\) be the corresponding convex curve defined on \([0,s_\dagger ]\), which in particular satisfies
\[x_\dagger (s_\dagger )=x(s_\dagger ),\qquad z_\dagger (s_\dagger )=z(s_\dagger ), \tag{3.9}\]

by the integration-preserving property of rearrangement. Thus we only need to prove that \(\gamma _\dagger (s_\dagger )\in G_*^+\). In what follows we prove the stronger assertion that

\[\gamma _\dagger ([0,s_\dagger ])\subset G_*^+. \tag{3.10}\]

We first note the following general property of rearrangement:

\[\theta ^\dagger (s)\ge \theta ^*(s)\quad \text{for all } s\in [0,s_\dagger ]. \tag{3.11}\]

Indeed, letting \(\varphi :=\theta \chi _{[0,s_\dagger ]}+\pi \chi _{(s_\dagger ,L]}\), where \(\chi \) denotes the characteristic function, we have \(\varphi \ge \theta \) on [0, L] and hence \(\varphi ^*\ge \theta ^*\) by the order-preserving property [12, p.21, (M1)], and also \(\theta ^\dagger (s)=\varphi ^*(s)\) for \(s\in [0,s_\dagger ]\).
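The order comparison between \(\theta ^\dagger \) and \(\theta ^*\) has an elementary discrete counterpart: the j-th smallest element of a prefix is never smaller than the j-th smallest element of the whole list. The sketch below checks this, together with the padding argument via \(\varphi \), on sorted samples. It is only an illustration under the assumption that sorting samples stands in for the rearrangement; the sample function and helper names are hypothetical.

```python
import math

N = 200
ds = math.pi / N
# Illustrative non-monotone samples with values in [0, pi] (assumption).
theta = [(i + 0.5) * ds + math.sin(2.0 * (i + 0.5) * ds) for i in range(N)]
theta_star = sorted(theta)  # discrete analogue of theta^*

def restricted_rearrangement(samples, k):
    """Discrete analogue of theta^dagger: rearrange only the first k samples."""
    return sorted(samples[:k])

def dominates_on_prefix(k):
    """Check theta^dagger >= theta^* on the first k positions."""
    dagger = restricted_rearrangement(theta, k)
    return all(d >= s for d, s in zip(dagger, theta_star))

def matches_padded(k):
    """Check theta^dagger = phi^* on the first k positions, where phi pads with pi."""
    phi = theta[:k] + [math.pi] * (N - k)
    return sorted(phi)[:k] == restricted_rearrangement(theta, k)

all_ok = all(dominates_on_prefix(k) and matches_padded(k) for k in range(1, N + 1))
print(all_ok)
```

Here `k` plays the role of \(s_\dagger \), and padding with \(\pi \) mirrors the auxiliary function \(\varphi \) in the argument above.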

Using (3.11), we prove that

\[0\le x_\dagger (s)\le x_*(s)\quad \text{for all } s\in [0,s_\dagger ]. \tag{3.12}\]

Indeed, since \(\cos \theta \) is decreasing on \([0,\pi ]\), and since \(\theta ^\dagger \) is nondecreasing on \([0,s_\dagger ]\) and valued into \([0,\pi ]\), the function \(x_\dagger (s)=\int _0^s\cos \theta ^\dagger \) is concave on \([0,s_\dagger ]\) and hence \(x_\dagger (s)\ge \min \{x_\dagger (0),x_\dagger (s_\dagger )\}\ge 0\), cf. (3.9). In addition, thanks to (3.11), we have \(x_\dagger (s)=\int _0^s\cos \theta ^\dagger \le \int _0^s\cos \theta ^*=x_*(s)\), completing the proof of (3.12).

We now prove (3.10) by considering the behavior of \(z_*\) and \(z_\dagger \). Below we separately consider the two intervals \([0,\sigma _\dagger ]\) and \([\sigma _\dagger ,s_\dagger ]\), where \(\sigma _\dagger \in [0,s_\dagger ]\) denotes the maximal \(s\in [0,s_\dagger ]\) such that \(\theta ^\dagger (s)\le \pi /2\). (Note that \(\sigma _\dagger \) may coincide with \(s_\dagger \).) Concerning the first interval \([0,\sigma _\dagger ]\), we have

\[z_\dagger (s)\ge z_*(s)\quad \text{for all } s\in [0,\sigma _\dagger ]. \tag{3.13}\]

Indeed, if \(s\le \sigma _\dagger \), then \(\theta ^*([0,s])\subset \theta ^\dagger ([0,s])\subset [0,\pi /2]\) by (3.11) and by definition of \(\sigma _\dagger \); hence, by (3.11) and by the fact that \(\sin \theta \) is increasing on \([0,\pi /2]\), we obtain \(z_\dagger (s)=\int _0^s\sin \theta ^\dagger \ge \int _0^s\sin \theta ^*=z_*(s)\), completing the proof of (3.13). Therefore, by using (3.12), (3.13), and the obvious inclusion \(\gamma _*([0,\sigma _\dagger ]) \subset G_*^+\), we deduce from the geometric property (3.8) that
\[\gamma _\dagger ([0,\sigma _\dagger ])\subset G_*^+. \tag{3.14}\]

Concerning the remaining part \([\sigma _\dagger ,s_\dagger ]\), since \(\theta ^\dagger ([\sigma _\dagger ,s_\dagger ])\subset [\pi /2,\pi ]\) by definition of \(\sigma _\dagger \), we have \((x_\dagger )_s=\cos \theta ^\dagger \le 0\) and \((z_\dagger )_s=\sin \theta ^\dagger \ge 0\) on \([\sigma _\dagger ,s_\dagger ]\), and hence

Using this property with the facts that \(x_\dagger \ge 0\), cf. (3.12), and that \((x_\dagger (\sigma _\dagger ),z_\dagger (\sigma _\dagger ))\in G_*^+\), cf. (3.14), we deduce from the geometric property (3.8) that \(\gamma _\dagger ([\sigma _\dagger ,s_\dagger ])\subset G_*^+.\) This combined with (3.14) implies (3.10), thus completing the proof. \(\square \)

3.3 Rigidity estimates

Topping’s conjecture itself is now already proved for simply-connected axisymmetric surfaces by the above two subsections. In this final subsection we establish Theorem 1.3 by giving a more quantitative analysis of the rearrangements. More precisely, we prove that the deficit \(\frac{M(\Sigma )}{{{\,\mathrm{diam}\,}}(\Sigma )}-\pi \) is bounded below by the sum of quantities measuring “axial expansion in the first rearrangement” and “coaxial deviation after the second rearrangement”.

We first prepare Lemma 3.4 below about “coaxial deviation” in the framework of convex geometry. To this end we briefly recall some classical facts in convex geometry. Since M has the mean-width representation, M is naturally defined even for singular (Lipschitz) convex surfaces, and moreover M has strict monotonicity with respect to the inclusion property for enclosed convex sets. In addition, we can also define M even for a degenerate convex body K (of dimension \(\le 2\)) by \(M(K):=\lim _{\varepsilon \rightarrow 0}M(\partial K_\varepsilon )\), where \(K_\varepsilon :=\{x\in {\mathbb {R}}^3 \mid \mathrm {dist}(x,K)\le \varepsilon \}\) denotes the \(\varepsilon \)-neighborhood of K. For example, for a segment S of length \(\ell \), we have \(M(S)=\pi \ell \). Notice that the above monotonicity is valid even for degenerate objects. These facts in particular imply (1.8) for any convex surface \(\Sigma \); indeed, if we take a segment S attaining the diameter of \(\Sigma \), then from the monotonicity of M and the fact that \({{\,\mathrm{diam}\,}}(\Sigma )={{\,\mathrm{diam}\,}}(S)\) we deduce that

Therefore, in order to obtain a lower bound for the deficit \(\frac{M(\Sigma )}{{{\,\mathrm{diam}\,}}(\Sigma )}-\pi \), it is natural to look at the quantity \(M(\Sigma )-M(S)\).

We are now ready to rigorously state a lemma concerning coaxial deviation.

Lemma 3.4

(Coaxial deviation) Let \(\Sigma \) be an axisymmetric convex surface. Then

where b denotes the maximal distance from an axis of symmetry \(L_\mathrm {axis}\), i.e., \(b:=\max _{q\in \Sigma }\mathrm {dist}(q,L_\mathrm {axis})\), and S denotes a segment attaining \({{\,\mathrm{diam}\,}}(\Sigma )\).

Before entering the proof, we compute the energy M for a useful example of a (singular) convex surface, which plays an important role in the proof of Lemma 3.4 as a comparison surface.

Remark 3.5

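(A useful example \(\Gamma ^h_{a,A}\)) Given \(h>0\) and \(0< a\le A\), we let \(\Gamma ^h_{a,A}\) denote the surface defined by the boundary of the convex hull of the two circles \(C_\pm :=\{x^2+y^2=a^2,\ z=\pm h/2\}\) and the additional intermediate circle \(C_0:=\{x^2+y^2=A^2,\ z=0\}\). We compute

\[M(\Gamma ^h_{a,A})=\pi \bigl (h+(\pi -2\theta )a+2\theta A\bigr ),\quad \text{where } \theta \in [0,\pi /2] \text{ is defined by } \tan \theta =\frac{2(A-a)}{h}. \tag{3.16}\]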

Indeed, an explicit calculation shows that the smooth (conical) parts \(\Gamma ^h_{a,A}\cap \{0<|z|<h/2\}\) have total energy \(\pi h\), independent of a and A. The energy on the singular parts \(C_\pm \) and \(C_0\) depends only on the angles and the lengths of the edges, in view of approximation by the \(\varepsilon \)-neighborhood. Since the above \(\theta \) denotes the angle between the z-axis and a generating line of the conical part, the deviation angle on \(C_\pm \) is \(\pi /2-\theta \), and that on \(C_0\) is \(2\theta \). Hence the energy on \(C_+\) (or \(C_-\)) is \(\frac{1}{2}\cdot (\pi /2-\theta )\cdot 2\pi a\), and that on \(C_0\) is \(\frac{1}{2}\cdot 2\theta \cdot 2\pi A\). Summing these contributions yields (3.16). Note that the above computation is valid not only for a cylinder (\(0<a=A\)) but also for degenerate cases: e.g. double-cone (\(0=a<A\)), segment (\(a=A=0\)), and disk (\(h=0\), and hence \(\theta =\pi /2\)).
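The three contributions described in this remark can be assembled in a few lines. The sketch below is a hedged illustration: the function name `total_mean_curvature` is hypothetical, and the angle is computed with `atan2` so that the degenerate disk case \(h=0\) is covered. It reproduces the values quoted in the text for the cylinder, the segment, and the disk.

```python
import math

def total_mean_curvature(h: float, a: float, A: float) -> float:
    """M(Gamma^h_{a,A}) assembled from the three contributions in Remark 3.5.

    Assumes h >= 0 and 0 <= a <= A, with h and A not both zero.
    """
    # Angle between the z-axis and a generating line: tan(theta) = 2(A - a)/h.
    theta = math.atan2(2.0 * (A - a), h)
    conical = math.pi * h                                               # smooth conical parts
    outer = 2.0 * (0.5 * (math.pi / 2.0 - theta) * 2.0 * math.pi * a)   # edges C_+ and C_-
    middle = 0.5 * (2.0 * theta) * 2.0 * math.pi * A                    # edge C_0
    return conical + outer + middle

cylinder = total_mean_curvature(2.0, 0.5, 0.5)     # expected pi*(h + pi*r)
segment = total_mean_curvature(3.0, 0.0, 0.0)      # expected pi*h
disk = total_mean_curvature(0.0, 0.0, 1.0)         # expected pi**2 * A
double_cone = total_mean_curvature(1.0, 0.0, 0.1)  # expected pi*(1 + 2*theta*A)
print(cylinder, segment, disk, double_cone)
```

The cylinder value agrees with \(M(\Gamma ^h_{r,r})=\pi (h+\pi r)\) used in the proof of Lemma 3.4, and the segment value with \(M(S)=\pi \ell \).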

Now we turn to the proof of Lemma 3.4.

Proof of Lemma 3.4

Let r be the maximal distance from \(L_{\mathrm {axis}}\) concerning S, i.e., \(r:=\max _{p\in S}\mathrm {dist}(p,L_\mathrm {axis})\). Since S is of length \(d:={{\,\mathrm{diam}\,}}(\Sigma )\), up to a rigid motion, the surface \(\Sigma \) encloses the cylinder \(\Gamma ^h_{r,r}\) of radius r and height \(h:=\sqrt{d^2-4r^2}\). Recall that \(M(\Gamma ^h_{r,r}) = \pi (h+\pi r)\), cf. Remark 3.5. Using the monotonicity \(M(\Sigma )\ge M(\Gamma ^h_{r,r})\ge M(S)=\pi d\), and noting that \(h-(d-2r)\ge 0\), we have

In the case of \(r\not \ll b\), say \(r\ge b/4\), this implies (3.15) with the help of the obvious estimate \(b\le d\).

We now consider the case of \(r\le b/4\). By convexity of \(\Sigma \) and definition of b, the surface \(\Sigma \) also encloses \(\Gamma ^h_{r,b/2}\). Then from monotonicity, Remark 3.5, and the assumption \(r\le b/4\), we deduce that

where \(\theta \in [0,\pi /2]\) satisfies that \(\tan \theta =(b-2r)/h\gtrsim b/h\). If \(\theta \ge \pi /4\), then (3.17) and \(b\le d\) again directly imply (3.15). If \(\theta \le \pi /4\), then we can use the estimate \(\theta \gtrsim \tan \theta \) for (3.17) to the effect that

Then the obvious estimate \(h\le d\) implies (3.15), completing the proof. \(\square \)

With Lemma 3.4 at hand, we are now in a position to prove Theorem 1.3.

Proof of Theorem 1.3

We may assume Hypothesis 3.1 on \(\Sigma \). Throughout the proof, we let \(\Sigma _\sharp \) denote the surface given by the first rearrangement of \(\Sigma \), and \(\Sigma _*\) by the second rearrangement of \(\Sigma _\sharp \). For notational simplicity we let d (resp. \(d_\sharp \), \(d_*\)) denote \({{\,\mathrm{diam}\,}}(\Sigma )\) (resp. \({{\,\mathrm{diam}\,}}(\Sigma _\sharp )\), \({{\,\mathrm{diam}\,}}(\Sigma _*)\)). Notice that \(d\le d_\sharp \le d_*\le L\), cf. Lemmata 3.2 and 3.3, where L denotes the (same) length of generating curves of those three surfaces. We divide the proof into three steps.

Step 1. We first prove that

Using (3.2) and \(d\le L\), we obtain

Similarly, using (3.5) and \(d_\sharp \le L\), we get

Then, using Lemma 3.4 for a segment \(S_*\) attaining the diameter \(d_*\) of \(\Sigma _*\), and also using \(d_*\le L\), we deduce that there is a universal constant \(\sigma >0\) such that

Estimates (3.19), (3.20), and (3.21) imply (3.18).

Step 2. Now we verify the main geometric estimate

where \(a:=z(L)=\int _0^L\sin \theta (s)ds\) and \(a_*:=z_*(L)=z_\sharp (L)=\int _0^L|\sin \theta (s)|ds\). (Below we essentially use the hypothesis \(a\ge 0\).) Throughout this step we may assume that \(L=1\) up to rescaling. In addition, we may assume that both \(b_*\ll 1\) and \(d_*-d\ll 1\) hold since otherwise (3.22) is trivial in view of \(0\le a_*-a \le a_*\le L=1\). For later use we introduce

which is nothing but the width in the axial direction \(b_\Sigma (\omega _\mathrm {axis})\) of the original \(\Sigma \).

We first check the (optimal) estimate

by showing the equivalent one \(2{\bar{a}}\le a_*+a\). Notice the representation

where here and in the sequel we let \(f_\pm \ge 0\) denote the sign-decomposition \(f=f_+-f_-\).

Choose \(s_1<s_2\) attaining the maximum in the definition of \({\bar{a}}\), and let \(J:=[s_1,s_2]\).

Then we see that if \(\alpha :=\int _J\sin \theta (s)ds\ge 0\), then \({\bar{a}}=\int _J\sin \theta (s)ds\) and hence

while if \(\alpha \le 0\), then \({\bar{a}}=-\int _J \sin \theta (s)ds\) and hence, noting that \(a=\int _0^1\sin \theta (s)ds\ge 0\), we also have

completing the proof of (3.23).
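The equivalent form \(2{\bar{a}}\le a_*+a\) can be sanity-checked on a sample profile. In the sketch below, \({\bar{a}}\) is taken to be \(\max _{s_1,s_2}|z(s_1)-z(s_2)|\), which is how the surrounding text describes the axial width; this reading, the profile \(\theta (s)=s-\sin 2s\) on \([0,\pi ]\), and all variable names are assumptions made for illustration. The inequality is scale invariant, so no normalization \(L=1\) is needed.

```python
import math

N = 2000
ds = math.pi / N
# Illustrative profile (assumption): sin(theta) changes sign, and z(L) >= 0.
theta = [i * ds - math.sin(2.0 * i * ds) for i in range(N + 1)]

# Trapezoidal increments of z(s) = int sin(theta) and of int |sin(theta)|.
dz = [0.5 * ds * (math.sin(t0) + math.sin(t1)) for t0, t1 in zip(theta, theta[1:])]
dz_abs = [0.5 * ds * (abs(math.sin(t0)) + abs(math.sin(t1)))
          for t0, t1 in zip(theta, theta[1:])]

z = [0.0]
for inc in dz:
    z.append(z[-1] + inc)

a = z[-1]                   # a = z(L)
a_star = math.fsum(dz_abs)  # a_* = int |sin(theta)|
a_bar = max(z) - min(z)     # assumed definition: max of |z(s1) - z(s2)|
print(a, a_bar, a_star, 2.0 * a_bar <= a_star + a)
```

In this example \(a<{\bar{a}}<a_*\), so the original surface is strictly wider in the axial direction than the distance between its two poles, yet the bound by the average of a and \(a_*\) still holds.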

We now prove that if \(b_*\ll 1\) and \(d_*-d\ll 1\), then

Since \(\Sigma \) is contained in a cylinder of radius \(b_*\) and height \({\bar{a}}\), we have

We now observe that the smallness assumptions imply

Since \(\gamma _*\) (generating \(\Sigma _*\)) is convex and of length 1, we have \(d_*\gtrsim 1\). This and the assumption \(d_*-d\ll 1\) imply that \(d\gtrsim 1\). Using this and the assumption \(b_*\ll 1\) for (3.25), we obtain \({\bar{a}}^2 \ge d^2-4b_*^2 \gtrsim 1\) and hence (3.26) as desired. Inserting (3.26) into (3.25), we get

Combining this with the obvious estimate \(a_*\le d_*\), we obtain (3.24). Estimate (3.22) now follows from (3.23) and (3.24).

Step 3. We finally complete the proof. Estimates (3.18) and (3.22) imply that

Using the representations \(2b_* = \int _0^L |\cos \theta (s)| ds\) and \(a_*-a = 2\int _0^L (\sin \theta (s))_- ds\), and the change of variables \(t=s/L\), we find that

Simply letting \(X^2+Y\) denote the right-hand side of (3.27), we have \(X^2+Y\gtrsim (X+Y)^2\) since \(X,Y\in [0,1]\). In addition, the integrand of \(X+Y\) has the lower bound of the form \(|\cos \theta |+(\sin \theta )_-\gtrsim \sqrt{1-\sin \theta }\), where both sides linearly vanish if and only if \(\theta \in \pi /2+2\pi {\mathbb {Z}}\). Therefore,

The relation \(\sin \theta (Lt)=T(t)\cdot \omega _\mathrm {axis}\) for \(\omega _\mathrm {axis}=(0,0,1)\) completes the proof. \(\square \)
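The two elementary inequalities used in Step 3 can be checked on a grid. The sketch below tests \(X^2+Y\ge \frac{1}{2}(X+Y)^2\) on \([0,1]^2\) and the pointwise bound \(|\cos \theta |+(\sin \theta )_-\ge C\sqrt{1-\sin \theta }\) with the explicit constant \(C=1/\sqrt{2}\). The constants \(\frac{1}{2}\) and \(1/\sqrt{2}\) are choices made for this check; the text only asserts the bounds up to universal constants.

```python
import math

def integrand(theta: float) -> float:
    """|cos(theta)| + (sin(theta))_-, the integrand of X + Y."""
    return abs(math.cos(theta)) + max(-math.sin(theta), 0.0)

def target(theta: float) -> float:
    """sqrt(1 - sin(theta)), the integrand of the simplified remainder."""
    return math.sqrt(max(1.0 - math.sin(theta), 0.0))

C = 1.0 / math.sqrt(2.0)  # explicit constant chosen for this check (assumption)

grid = [-math.pi + 2.0 * math.pi * i / 20000 for i in range(20001)]
pointwise_ok = all(integrand(t) >= C * target(t) - 1e-12 for t in grid)

unit = [i / 200 for i in range(201)]
quadratic_ok = all(X * X + Y >= 0.5 * (X + Y) ** 2 - 1e-12 for X in unit for Y in unit)
print(pointwise_ok, quadratic_ok)
```

The quadratic bound follows from \(Y\ge Y^2\) on \([0,1]\), and the pointwise bound is tight at \(\theta =-\pi /2\), where the left-hand side equals 1 and \(\sqrt{1-\sin \theta }=\sqrt{2}\).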

Here we discuss the optimality of Theorem 1.3. As mentioned in the introduction, the optimality can be observed by using the double-cone, which is nothing but the degenerate case \(\Gamma ^1_{0,\varepsilon }\) in Remark 3.5.

Remark 3.6

(Optimality of Theorem 1.3 via the double-cone \(\Gamma ^1_{0,\varepsilon }\)) Let \(\Sigma _\varepsilon \) be the thin double-cone \(\Gamma ^1_{0,\varepsilon }\) with \(\varepsilon \ll 1\). Obviously, \({{\,\mathrm{diam}\,}}(\Sigma _\varepsilon )=1\). In addition, using the computation of M in Remark 3.5, and in particular noting that the corresponding angle \(\theta _\varepsilon \) in (3.16) is given by \(\tan \theta _\varepsilon =2\varepsilon \) so that \(\theta _\varepsilon /\varepsilon \rightarrow 2\) as \(\varepsilon \rightarrow 0\), we have

On the other hand, a simple computation yields

and hence the ratio \(\frac{1}{U(\Sigma _\varepsilon )}(\frac{M(\Sigma _\varepsilon )}{{{\,\mathrm{diam}\,}}(\Sigma _\varepsilon )}-\pi )\) converges as desired. Note that by a suitable approximation of higher order than \(\varepsilon \), we can even construct a sequence of smooth surfaces (nearly double-cone) for which the ratio also converges.
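The size of the deficit for the thin double-cone can be tabulated directly from the computation in Remark 3.5. The sketch below assumes the double-cone value \(M(\Gamma ^1_{0,\varepsilon })=\pi (1+2\theta _\varepsilon \varepsilon )\) with \(\tan \theta _\varepsilon =2\varepsilon \), obtained by summing the conical part and the edge \(C_0\); the function name is hypothetical. It only illustrates that the deficit is of order \(\varepsilon ^2\) and does not evaluate \(U(\Sigma _\varepsilon )\).

```python
import math

def double_cone_deficit(eps: float) -> float:
    """M/diam - pi for Gamma^1_{0,eps}, assuming 0 < eps <= 1/2 so that diam = 1."""
    theta = math.atan(2.0 * eps)               # tan(theta_eps) = 2*eps
    M = math.pi * (1.0 + 2.0 * theta * eps)    # conical part pi*h plus the edge C_0
    diam = 1.0
    return M / diam - math.pi

# Since theta_eps / eps -> 2, the deficit divided by eps^2 approaches 4*pi.
ratios = [double_cone_deficit(eps) / eps ** 2 for eps in (1e-1, 1e-2, 1e-3)]
print(ratios, 4.0 * math.pi)
```

The normalized values increase towards \(4\pi \) as \(\varepsilon \) decreases, consistent with \(\theta _\varepsilon /\varepsilon \rightarrow 2\).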

We additionally remark a difference between the axial and coaxial directions.

Remark 3.7

(Optimal remainder in the axial direction) In the third step of the proof of Theorem 1.3, the remainder is eventually simplified into U, but this is just for notational convenience to state Theorem 1.3. The simplified remainder U is still sharp with respect to coaxial deviation as in Remark 3.6, but in fact not sharp axially. To see this fact, we let \(\Sigma _{\varepsilon }\) be the surface generated by revolving the broken line connecting \((x,z)=(0,0),(\varepsilon ^2,-\varepsilon ),(\varepsilon ^2,1+\varepsilon ),(0,1)\) around the z-axis. Then we have

so that the ratio \(\frac{1}{U}(\frac{M}{{{\,\mathrm{diam}\,}}}-\pi )\) diverges. However, if we replace U by a sharper remainder V such as the right-hand side in (3.27), i.e.,

where N(t) denotes the unit normal of \(\Sigma \) at \(\gamma _\Sigma (t)\), then the ratio \(\frac{1}{V}(\frac{M}{{{\,\mathrm{diam}\,}}}-\pi )\) converges even for the above \(\Sigma _\varepsilon \).

We now complete the proof of Corollary 1.4.

Proof of Corollary 1.4

We only need to argue for a minimizing sequence \(\{\Sigma _n\}\). We may assume up to rescaling that \({{\,\mathrm{diam}\,}}(\Sigma _n)=1\), and up to a rigid motion that \(\Sigma _n\) satisfies Hypothesis 3.1. Let \(\theta _n:[0,1]\rightarrow {\mathbb {R}}\) denote the angle function in Hypothesis 3.1 corresponding to \(\Sigma _n\) after the change of variables \(t=s/L_n\), where \(L_n\) is the length of a generating curve of \(\Sigma _n\). Since the distance between the two points on the z-axis (i.e., the endpoints of a generating curve) is bounded above by the diameter, we have

\[L_n\int _0^1\sin \theta _n(t)\,dt\le 1. \tag{3.28}\]

The assumption of convergence \(M(\Sigma _n)/{{\,\mathrm{diam}\,}}(\Sigma _n)\rightarrow \pi \) and Theorem 1.3 imply that \(\int _0^1\sqrt{1-\sin \theta _n}\rightarrow 0\); hence, up to a subsequence, \(\sin \theta _n(t)\rightarrow 1\) and also \(\cos \theta _n(t)\rightarrow 0\) for a.e. \(t\in [0,1]\). Using the bounded convergence theorem, we deduce that \(\sin \theta _n\rightarrow 1\) and \(\cos \theta _n\rightarrow 0\) in \(L^p((0,1))\) for any \(1\le p < \infty \). In addition, also noting that \(L_n\ge {{\,\mathrm{diam}\,}}(\Sigma _n)=1\), we deduce from (3.28) that \(L_n\rightarrow 1\). Hence, for the derivative \({\dot{\gamma }}_{\Sigma _n}=L_n(\cos \theta _n,0,\sin \theta _n)\) of the generating curve \(\gamma _{\Sigma _n}\) chosen to lie in the xz-plane, we see that \({\dot{\gamma }}_{\Sigma _n}\) converges in \(L^p((0,1);{\mathbb {R}}^3)\) to the derivative \(\dot{{\bar{\gamma }}}=(0,0,1)\) of the segment \({\bar{\gamma }}(t)=(0,0,t)\). The lower order convergence of \(\gamma _{\Sigma _n}\) to \({\bar{\gamma }}\) easily follows from this first order one. \(\square \)

We finally recall that Topping’s conjecture is also related to finding the optimal constant in Simon’s inequality of the form

The constant \(\pi /2\) is explicitly obtained by Topping [25] following Simon’s original strategy [23]. It is also conjectured that \(\pi /2\) can be replaced with \(\pi \) for the same reason as in Topping’s conjecture. Since Simon’s inequality is implied by Topping’s inequality via the Cauchy–Schwarz inequality, our result also gives the optimal constant for Simon’s inequality under the same assumption as in Theorem 1.3.

References

Agostiniani, V., Fogagnolo, M., Mazzieri, L.: Minkowski inequalities via nonlinear potential theory. arXiv:1906.00322

Agostiniani, V., Fogagnolo, M., Mazzieri, L.: Sharp geometric inequalities for closed hypersurfaces in manifolds with nonnegative Ricci curvature. Inventiones Mathematicae 222(3), 1033–1101 (2020)

Agostiniani, V., Mazzieri, L.: Monotonicity formulas in potential theory. Calc. Var. Partial Differ. Equ. 59(1), 32 (2020)

Bucur, D., Henrot, A.: A new isoperimetric inequality for elasticae. J. Eur. Math. Soc. (JEMS) 19(11), 3355–3376 (2017)

Burago, Y.D., Zalgaller, V.A.: Geometric inequalities, Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 285, Springer-Verlag, Berlin, 1988, Translated from the Russian by A. B. Sosinskiĭ, Springer Series in Soviet Mathematics

Dalphin, J., Henrot, A., Masnou, S., Takahashi, T.: On the minimization of total mean curvature. J. Geom. Anal. 26(4), 2729–2750 (2016)

De Lellis, C., Müller, S.: Optimal rigidity estimates for nearly umbilical surfaces. J. Differ. Geom. 69(1), 75–110 (2005)

Ferone, V., Kawohl, B., Nitsch, C.: The elastica problem under area constraint. Math. Ann. 365(3–4), 987–1015 (2016)

Gage, M.E.: An isoperimetric inequality with applications to curve shortening. Duke Math. J. 50(4), 1225–1229 (1983)

Henrot, A., Mounjid, O.: Elasticae and inradius. Arch. Math. (Basel) 108(2), 181–196 (2017)

Huisken, G.: Flow by mean curvature of convex surfaces into spheres. J. Differ. Geom. 20(1), 237–266 (1984)

Kawohl, B.: Rearrangements and Convexity of Level Sets in PDE. Lecture Notes in Mathematics, vol. 1150. Springer, Berlin (1985)

Kuwert, E., Li, Y.: Asymptotics of Willmore minimizers with prescribed small isoperimetric ratio. SIAM J. Math. Anal. 50(4), 4407–4425 (2018)

Leoni, G.: A first course in Sobolev spaces, 2nd edn. Graduate Studies in Mathematics, vol. 181. American Mathematical Society, Providence, RI (2017)

Miura, T.: Singular perturbation by bending for an adhesive obstacle problem. Calc. Var. Partial Differ. Equ. 55(1), 24 (2016)

Miura, T.: Overhanging of membranes and filaments adhering to periodic graph substrates. Phys. D 355, 34–44 (2017)

Miura, T.: Elastic curves and phase transitions. Math. Ann. 376(3–4), 1629–1674 (2020)

Perez, D.R.: On nearly umbilical hypersurfaces. Ph.D. thesis, Universität Zürich (2011)

Röger, M., Schätzle, R.: Control of the isoperimetric deficit by the Willmore deficit. Analysis 32(1), 1–7 (2012)

Schneider, R.: Convex Bodies: The Brunn–Minkowski Theory, Encyclopedia of Mathematics and Its Applications, vol. 44. Cambridge University Press, Cambridge (1993)

Schulze, F.: Nonlinear evolution by mean curvature and isoperimetric inequalities. J. Differ. Geom. 79(2), 197–241 (2008)

Schygulla, J.: Willmore minimizers with prescribed isoperimetric ratio. Arch. Rat. Mech. Anal. 203(3), 901–941 (2012)

Simon, L.: Existence of surfaces minimizing the Willmore functional. Commun. Anal. Geom. 1(2), 281–326 (1993)

Topping, P.: The optimal constant in Wente’s \(L^\infty \) estimate. Comment. Math. Helv. 72(2), 316–328 (1997)

Topping, P.: Mean curvature flow and geometric inequalities. J. Reine Angew. Math. 503, 47–61 (1998)

Topping, P.: Relating diameter and mean curvature for submanifolds of Euclidean space. Comment. Math. Helv. 83(3), 539–546 (2008)

Acknowledgements

The author would like to thank Felix Schulze and Peter Topping for reading an earlier version of this manuscript. This work is in part supported by JSPS KAKENHI Grant Nos. 18H03670, 20K14341, 21H00990, and by Grant for Basic Science Research Projects from The Sumitomo Foundation.

Appendix A: Mean curvature estimates in rearrangements

We briefly recall the arguments in [6] about how the rearrangements defined in Sect. 3 control mean curvature.

We first address the first rearrangement, \(\Sigma \rightarrow \Sigma _\sharp \), and prove that \(\int _{\Sigma _\sharp }|H|=\int _\Sigma |H|\). A direct computation yields the representation

\[\int _\Sigma |H|=\pi \int _0^L\bigl |x(s)\theta _s(s)+\sin \theta (s)\bigr |\,ds. \tag{A.1}\]

As is already observed in the above proof, we have

and in addition that \(|\sin \theta |=\sin \theta ^\sharp \); more precisely,

Furthermore, since \(\theta ^\sharp =f\circ \theta \), where both \(f:=\mathrm {dist}(\cdot ,2\pi {\mathbb {Z}})\) and \(\theta \) are Lipschitz, we have the chain rule \(\theta ^\sharp _s=(f'\circ \theta )\theta _s\) with the understanding that \([(f'\circ \theta )\theta _s](s)=0\) whenever \(\theta _s(s)=0\) (cf. [14, Corollary 3.66]). Since \(f'\equiv \pm 1\) on \(S_\pm \), we get

Inserting (A.2), (A.3), and (A.4) into (A.1), we deduce that \(\int _{\Sigma _\sharp }|H|=\int _{\Sigma }|H|\).

We now turn to the second rearrangement, \(\Sigma \rightarrow \Sigma _*\), and prove that \(\int _{\Sigma _*}|H|\le \int _\Sigma |H|\). Since \(\int _{\Sigma _*}|H|=\int _{\Sigma _*}H\) by convexity of \(\Sigma _*\), in view of the triangle inequality it suffices to show that \(\int _{\Sigma _*}H=\int _\Sigma H\). By a direct computation and an integration by parts, using that \(x(0)=x(L)=0\), we have the representation

\[\int _\Sigma H=\pi \int _0^Lg(\theta (s))\,ds,\]

where \(g(\theta ):= \sin \theta -\theta \cos \theta \). The same representation \(\int _{\Sigma _*}H=\pi \int _0^Lg(\theta ^*(s))ds\) also holds for the rearranged surface \(\Sigma _*\) since \(x_*(0)=x_*(L)=0\). We then deduce the desired identity \(\int _{\Sigma _*}H=\int _\Sigma H\) from these representations and the integration-preserving property of rearrangement.
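The representation \(\int _\Sigma H=\pi \int _0^Lg(\theta (s))ds\) is easy to test numerically. The sketch below evaluates it by a midpoint rule for the unit sphere (\(\theta (s)=s\) on \([0,\pi ]\), where the value should be \(4\pi \) with H normalized as the average of the principal curvatures, consistent with \(M(S)=\pi \ell \) for a segment), and then checks that sorting the samples of a non-monotone angle function leaves the sum unchanged. The sample function and the helper names are illustrative assumptions.

```python
import math

def g(theta: float) -> float:
    """g(theta) = sin(theta) - theta*cos(theta), as in the representation above."""
    return math.sin(theta) - theta * math.cos(theta)

def total_mean_curvature(theta_samples, ds: float) -> float:
    """pi * int g(theta(s)) ds, approximated with equally weighted samples."""
    return math.pi * ds * math.fsum(g(t) for t in theta_samples)

N = 2000
ds = math.pi / N
mid = [(i + 0.5) * ds for i in range(N)]

# Unit sphere: theta(s) = s on [0, pi]; the exact value of the integral is 4*pi.
sphere = total_mean_curvature(mid, ds)

# Illustrative non-monotone angle function with values in [0, pi] (assumption).
theta = [s + math.sin(2.0 * s) for s in mid]
before = total_mean_curvature(theta, ds)
after = total_mean_curvature(sorted(theta), ds)  # discrete second rearrangement
print(sphere, 4.0 * math.pi, before, after)
```

Because the sum depends only on the multiset of sampled angles, `before` and `after` coincide, which is the discrete form of the integration-preserving property invoked in the last step.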

Cite this article

Miura, T. Geometric inequalities involving mean curvature for closed surfaces. Sel. Math. New Ser. 27, 80 (2021). https://doi.org/10.1007/s00029-021-00696-5

DOI: https://doi.org/10.1007/s00029-021-00696-5