Abstract

We provide a new proof of a result of Baxter and Zeilberger showing that \({{\,\mathrm{inv}\,}}\) and \({{\,\mathrm{maj}\,}}\) on permutations are jointly independently asymptotically normally distributed. The main feature of our argument is that it uses a generating function due to Roselle, answering a question raised by Romik and Zeilberger.


1 Introduction

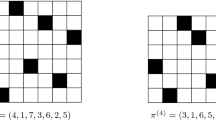

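For a permutation \(w\) in the symmetric group \(S_n\) written in one-line notation \(w = w_1 \dots w_n\), the inversion and major index statistics are given by

\[ {{\,\mathrm{inv}\,}}(w) := \#\{(i, j) : 1 \le i < j \le n,\ w_i > w_j\}, \qquad {{\,\mathrm{maj}\,}}(w) := \sum _{i \,:\, w_i > w_{i+1}} i. \]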

It is well known that \({{\,\mathrm{inv}\,}}\) and \({{\,\mathrm{maj}\,}}\) are equidistributed on \(S_n\) [Mac, §1] with common mean and standard deviation

\[ \mu _n = \frac{n(n-1)}{4}, \qquad \sigma _n = \sqrt{\frac{n(n-1)(2n+5)}{72}}. \]
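As a sanity check on the equidistribution claim, the following brute-force sketch enumerates \(S_n\) for small n and compares the two distributions. It also tests the classical closed forms \(n(n-1)/4\) for the mean and \(n(n-1)(2n+5)/72\) for the variance. The function names are illustrative and are not taken from any of the cited works.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations


def inv(w):
    """Number of pairs i < j with w[i] > w[j]."""
    return sum(1 for i in range(len(w)) for j in range(i + 1, len(w)) if w[i] > w[j])


def maj(w):
    """Sum of the 1-based descent positions i with w_i > w_{i+1}."""
    return sum(i + 1 for i in range(len(w) - 1) if w[i] > w[i + 1])


def distribution(stat, n):
    """Counter mapping each value of stat to the number of w in S_n attaining it."""
    return Counter(stat(w) for w in permutations(range(1, n + 1)))


def mean_and_variance(dist):
    """Exact mean and variance of a finite distribution given as value -> count."""
    total = sum(dist.values())
    mean = Fraction(sum(v * c for v, c in dist.items()), total)
    second = Fraction(sum(v * v * c for v, c in dist.items()), total)
    return mean, second - mean ** 2


for n in range(2, 7):
    d_inv, d_maj = distribution(inv, n), distribution(maj, n)
    print(n, d_inv == d_maj, mean_and_variance(d_inv))
```

Exact rational arithmetic is used so that the comparison with the closed forms is not affected by rounding.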

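These results also follow easily from our arguments; see Remark 2.7. In [BZ10], Baxter and Zeilberger proved that \({{\,\mathrm{inv}\,}}\) and \({{\,\mathrm{maj}\,}}\) are jointly independently asymptotically normally distributed as \(n \rightarrow \infty \). More precisely, define normalized random variables on \(S_n\) by

\[ X_n := \frac{{{\,\mathrm{inv}\,}} - \mu _n}{\sigma _n}, \qquad Y_n := \frac{{{\,\mathrm{maj}\,}} - \mu _n}{\sigma _n}, \tag{1} \]

where \(\mu _n\) and \(\sigma _n\) denote the common mean and standard deviation of \({{\,\mathrm{inv}\,}}\) and \({{\,\mathrm{maj}\,}}\) on \(S_n\).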

Theorem 1.1

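(Baxter–Zeilberger [BZ10]). For each \(u, v \in \mathbb {R}\), we have

\[ \lim _{n \rightarrow \infty } \mathbb {P}[X_n \le u, Y_n \le v] = \frac{1}{2\pi } \int _{-\infty }^u \int _{-\infty }^v e^{-x^2/2} e^{-y^2/2} \, dy \, dx. \]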

See [BZ10] for further historical background. Baxter and Zeilberger’s argument involves mixed moments and recurrences based on combinatorial manipulations with permutations. Romik suggested a generating function due to Roselle, quoted as Theorem 2.2 below, should provide another approach. Zeilberger subsequently offered a $300 reward for such an argument, which has happily now been collected. Our overarching motivation, and Romik’s original impetus for suggesting Roselle’s formula, is to give a local limit theorem, i.e. a formula for the counts \(\#\{w \in S_n : {{\,\mathrm{inv}\,}}(w) = u, {{\,\mathrm{maj}\,}}(w) = v\}\) with an explicit error term, which will be the subject of a future article. For further context, see [Zei] and [Thi16].

2 Consequences of Roselle’s Formula

Here we recall Roselle’s formula, originally stated in different but equivalent terms, and derive a generating function expression which quickly motivates Theorem 1.1.

Definition 2.1

Let \(H_n\) be the bivariate \({{\,\mathrm{inv}\,}}, {{\,\mathrm{maj}\,}}\) generating function on \(S_n\), i.e.

\[ H_n(p, q) := \sum _{w \in S_n} p^{{{\,\mathrm{inv}\,}}(w)} q^{{{\,\mathrm{maj}\,}}(w)}. \]

Theorem 2.2

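(Roselle [Ros74]). We have

\[ \sum _{n \ge 0} \frac{H_n(p, q) z^n}{(p)_n (q)_n} = \prod _{a, b \ge 0} \frac{1}{1 - p^a q^b z}, \tag{2} \]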

where \((p)_n := (1-p)(1-p^2) \cdots (1-p^n)\).
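Roselle's identity can be checked exactly for small n. The sketch below assumes the identity in the form \(\sum _n H_n(p,q) z^n / ((p)_n (q)_n) = \prod _{a,b \ge 0} (1 - p^a q^b z)^{-1}\), with p marking \({{\,\mathrm{inv}\,}}\) and q marking \({{\,\mathrm{maj}\,}}\). It extracts the \(z^m\) coefficient \(h_m\) of the product through the Newton-type recurrence \(m h_m = \sum _{k=1}^m P_k h_{m-k}\), where \(P_k = 1/((1-p^k)(1-q^k))\) is the k-th power sum of the weights \(p^a q^b\). The rational evaluation point and the helper names are illustrative choices.

```python
from fractions import Fraction
from itertools import permutations


def inv(w):
    return sum(1 for i in range(len(w)) for j in range(i + 1, len(w)) if w[i] > w[j])


def maj(w):
    return sum(i + 1 for i in range(len(w) - 1) if w[i] > w[i + 1])


def H_bruteforce(n, p, q):
    """H_n(p, q) computed directly from the definition."""
    return sum(p ** inv(w) * q ** maj(w) for w in permutations(range(1, n + 1)))


def pochhammer(x, n):
    """(x)_n = (1 - x)(1 - x^2) ... (1 - x^n)."""
    out = Fraction(1)
    for k in range(1, n + 1):
        out *= 1 - x ** k
    return out


def H_roselle(n, p, q):
    """H_n(p, q) recovered from the z^n coefficient of the infinite product."""
    h = [Fraction(1)]  # h[m] is the z^m coefficient of the product
    for m in range(1, n + 1):
        power_sum_terms = (h[m - k] / ((1 - p ** k) * (1 - q ** k)) for k in range(1, m + 1))
        h.append(sum(power_sum_terms) / m)
    return pochhammer(p, n) * pochhammer(q, n) * h[n]


p, q = Fraction(1, 2), Fraction(1, 3)  # any rationals with |p|, |q| < 1 work
for n in range(1, 6):
    print(n, H_roselle(n, p, q) == H_bruteforce(n, p, q))
```

Evaluating at rational p and q with \(|p|, |q| < 1\) keeps the power sums convergent and the comparison exact.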

The following is the main result of this section. An integer partition \(\mu \vdash n\) is a weakly decreasing list of positive integers \(\mu _1 \ge \mu _2 \ge \cdots \ge \mu _k > 0\) summing to n. The length of \(\mu \) is \(\ell (\mu ) = k\).

Theorem 2.3

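There are constants \(c_\mu \in \mathbb {Z}\) indexed by integer partitions \(\mu \vdash n\) such that

\[ \frac{H_n(p, q)}{n!} = \frac{[n]_p! \, [n]_q!}{n!^2} \, F_n(p, q), \tag{3} \]

where

\[ F_n(p, q) = \sum _{d=0}^{n} [(1-p)(1-q)]^d \sum _{\substack{\mu \vdash n \\ \ell (\mu ) = n-d}} \frac{c_\mu }{\prod _i [\mu _i]_p [\mu _i]_q}, \tag{4} \]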

and \([n]_p! := [n]_p [n-1]_p \cdots [1]_p\), \([c]_p := 1 + p + \cdots + p^{c-1} = (1-p^c)/(1-p)\).

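An explicit expression for \(c_\mu \) is given below in (12). The rest of this section is devoted to proving Theorem 2.3. Straightforward manipulations with (2) immediately yield (3), where

\[ F_n(p, q) := n! \, (1-p)^n (1-q)^n \, \{z^n\} \prod _{a, b \ge 0} \frac{1}{1 - p^a q^b z}, \tag{5} \]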

and \(\{z^n\}\) here refers to extracting the coefficient of \(z^n\). Thus it suffices to show (5) implies (4). By standard arguments, the \(z^n\) coefficient of the product over a, b in (5) is the bivariate generating function of size-n multisets of pairs \((a, b) \in \mathbb {Z}_{\ge 0}^2\), where the weight of such a multiset is \(p^{\sum _i a_i} q^{\sum _i b_i}\). We will use this same componentwise-sum weight throughout.
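The "standard arguments" amount to expanding each factor \(1/(1 - p^a q^b z)\) as a geometric series, so that choosing a power of \(p^a q^b z\) from each factor selects a multiset of pairs. The following sketch checks this exactly on a finite truncation, restricting to pairs with \(0 \le a, b < N\). The truncation parameter N and the function names are illustrative assumptions and play no role in the argument.

```python
from fractions import Fraction
from itertools import combinations_with_replacement, product


def multiset_gf(n, N, p, q):
    """Sum of p^(sum a_i) q^(sum b_i) over size-n multisets of pairs in {0..N-1}^2."""
    pairs = list(product(range(N), repeat=2))
    total = Fraction(0)
    for multiset in combinations_with_replacement(pairs, n):
        a_sum = sum(a for a, _ in multiset)
        b_sum = sum(b for _, b in multiset)
        total += p ** a_sum * q ** b_sum
    return total


def product_coefficient(n, N, p, q):
    """Coefficient of z^n in prod_{0 <= a, b < N} 1 / (1 - p^a q^b z)."""
    coeffs = [Fraction(1)] + [Fraction(0)] * n
    for a, b in product(range(N), repeat=2):
        x = p ** a * q ** b
        # Multiply the truncated series by 1 / (1 - x z) in place:
        # new[m] = old[m] + x * new[m - 1].
        for m in range(1, n + 1):
            coeffs[m] += x * coeffs[m - 1]
    return coeffs[n]


p, q = Fraction(1, 2), Fraction(1, 3)
print(multiset_gf(3, 3, p, q) == product_coefficient(3, 3, p, q))
```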

Definition 2.4

For \(\lambda \vdash n\), let \(M_\lambda \) be the bivariate generating function for multisets of pairs \((a, b) \in \mathbb {Z}_{\ge 0}^2\) of type \(\lambda \), meaning some element has multiplicity \(\lambda _1\), another element has multiplicity \(\lambda _2\), etc.

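We clearly have

\[ \{z^n\} \prod _{a, b \ge 0} \frac{1}{1 - p^a q^b z} = \sum _{\lambda \vdash n} M_\lambda , \tag{6} \]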

though the \(M_\lambda \) are inconvenient to work with, so we perform a change of basis.

Definition 2.5

Let P[n] denote the lattice of set partitions of \([n] := \{1, 2, \ldots , n\}\) with minimum \(\widehat{0} = \{\{1\}, \{2\}, \ldots , \{n\}\}\) and maximum \(\widehat{1} = \{\{1, 2, \ldots , n\}\}\). Here \(\Lambda \le \Pi \) means that \(\Pi \) can be obtained from \(\Lambda \) by merging blocks of \(\Lambda \). The type of a set partition \(\Lambda \) is the integer partition obtained by rearranging the list of the block sizes of \(\Lambda \) in weakly decreasing order. For \(\lambda \vdash n\), set

\[ \Pi (\lambda ) := \{\{1, 2, \ldots , \lambda _1\}, \{\lambda _1 + 1, \lambda _1 + 2, \ldots , \lambda _1 + \lambda _2\}, \ldots \}, \]

which has type \(\lambda \).

Definition 2.6

For \(\Pi \in P[n]\), let \(R_\Pi \) denote the bivariate generating function for lists \(L \in (\mathbb {Z}_{\ge 0}^2)^n\) where for each block of \(\Pi \) the entries in L from that block are all equal. Similarly, let \(S_\Pi \) denote the bivariate generating function of lists L where in addition to entries from the same block being equal, entries from two different blocks are not equal.

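We easily see that

\[ R_\Pi = \prod _{A \in \Pi } \frac{1}{(1 - p^{\#A})(1 - q^{\#A})} \tag{7} \]

and that

\[ R_\Pi = \sum _{\Lambda : \Pi \le \Lambda } S_\Lambda , \tag{8} \]

so that, by Möbius inversion on P[n],

\[ S_\Pi = \sum _{\Lambda : \Pi \le \Lambda } \mu (\Pi , \Lambda ) R_\Lambda , \tag{9} \]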

where \(\mu (\Pi , \Lambda )\) is the Möbius function of the lattice of set partitions. Under the “forgetful” map from lists to multisets, a multiset of type \(\lambda \vdash n\) has fiber of size \(\left( {\begin{array}{c}n\\ \lambda \end{array}}\right) \). It follows that

where \(\lambda ! := \lambda _1! \lambda _2! \cdots \). Combining in order (5), (6), (10), (9), and (7) gives

Now (4) follows from (11), where

This completes the proof of Theorem 2.3.

Remark 2.7

From (12), \(c_{(1^n)} = 1\) since the sum only involves \(\Lambda = \widehat{0}\), where \((1^n)\) refers to the partition with n parts of size 1. Letting \(p \rightarrow 1\) (or, symmetrically, \(q \rightarrow 1\)) in (4), the only surviving term is \(d=0\) and \(\mu = (1^n)\). Consequently, \(H_n(1, q) = [n]_q!\), recovering a classic result of MacMahon [Mac, §1]. Explicitly,

\[ \sum _{w \in S_n} p^{{{\,\mathrm{inv}\,}}(w)} = [n]_p!, \qquad \sum _{w \in S_n} q^{{{\,\mathrm{maj}\,}}(w)} = [n]_q!. \]

The mean and standard deviation may be extracted by recognizing \([n]_q!/n!\) as the probability generating function of the sum of independent discrete random variables.
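Concretely, \([n]_q!/n! = \prod _{j=1}^n [j]_q/j\), and \([j]_q/j\) is the probability generating function of a uniform random variable on \(\{0, 1, \ldots , j-1\}\), which has mean \((j-1)/2\) and variance \((j^2-1)/12\). Summing over j gives the mean \(n(n-1)/4\) and variance \(n(n-1)(2n+5)/72\). A short sketch verifying this, with hypothetical helper names:

```python
from fractions import Fraction


def q_factorial_coeffs(n):
    """Coefficient list of [n]_q! = prod_{j=1}^n (1 + q + ... + q^(j-1))."""
    coeffs = [1]
    for j in range(1, n + 1):
        new = [0] * (len(coeffs) + j - 1)
        for i, c in enumerate(coeffs):
            for e in range(j):
                new[i + e] += c
        coeffs = new
    return coeffs


def mean_variance_from_uniforms(n):
    """Mean and variance of a sum of independent uniforms on {0..j-1}, j = 1..n."""
    mean = sum(Fraction(j - 1, 2) for j in range(1, n + 1))
    var = sum(Fraction(j * j - 1, 12) for j in range(1, n + 1))
    return mean, var


def mean_variance_from_coeffs(coeffs):
    """Mean and variance of the distribution with weights coeffs[k] at value k."""
    total = sum(coeffs)
    mean = Fraction(sum(k * c for k, c in enumerate(coeffs)), total)
    second = Fraction(sum(k * k * c for k, c in enumerate(coeffs)), total)
    return mean, second - mean ** 2


for n in range(1, 9):
    print(n, mean_variance_from_uniforms(n) == mean_variance_from_coeffs(q_factorial_coeffs(n)))
```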

Remark 2.8

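Using (3), we see that the probability generating function (discussed below in Example 4.3) \(H_n(p, q)/n!\) differs from \([n]_p! [n]_q!/n!^2\) by precisely the correction factor \(F_n(p, q)\). Using (5), this factor has the following combinatorial interpretation:

\[ F_n(p, q) = \frac{n! \cdot \text{generating function of size-}n\text{ multisets from } \mathbb {Z}_{\ge 0}^2}{\text{generating function of size-}n\text{ lists from } \mathbb {Z}_{\ge 0}^2}. \]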

Intuitively, the numerator and denominator should be the same “up to first order.” Theorem 3.1 will give one precise sense in which they are asymptotically equal.

3 Estimating the Correction Factor

This section is devoted to showing that the correction factor \(F_n(p, q)\) from Theorem 2.3 is negligible in an appropriate sense, made precise in Theorem 3.1. Recall that \(\sigma _n\) denotes the standard deviation of \({{\,\mathrm{inv}\,}}\) or \({{\,\mathrm{maj}\,}}\) on \(S_n\).

Theorem 3.1

Uniformly on compact subsets of \(\mathbb {R}^2\), we have

\[ \lim _{n \rightarrow \infty } F_n(e^{is/\sigma _n}, e^{it/\sigma _n}) = 1. \]

We begin with some simple estimates starting from (11) which motivate the rest of the inequalities in this section. We may assume \(|s|, |t| \le M\) for some fixed M. Setting \(p=e^{is/\sigma _n}, q=e^{it/\sigma _n}\), we have \(|1-p| = |1-\exp (is/\sigma _n)| \le |s|/\sigma _n\). For n sufficiently large compared to M and \(1 \le c \le n\), we claim that \(|[c]_p| \ge 1\). Assuming this for the moment, for n sufficiently large, (11) gives

As for the claim, one finds \(|[c]_p| = |\sin (cs/2\sigma _n)/\sin (s/2\sigma _n)|\), since \(|1 - e^{i\theta }| = 2|\sin (\theta /2)|\). For sufficiently large n, \(|cs/2\sigma _n| \ll 1\) for all \(1 \le c \le n\), and \(\sin (z)\) is increasing near 0, which gives the claim. We now simplify the inner sum on the right-hand side of (13).

Lemma 3.2

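Suppose \(\lambda \vdash n\) with \(\ell (\lambda ) = n-k\), and fix d. Then

\[ \sum _{\substack{\Lambda : \Pi (\lambda ) \le \Lambda \\ \#\Lambda = n-d}} \mu (\Pi (\lambda ), \Lambda ) = \sum _{\substack{\Lambda \in P[n-k] \\ \#\Lambda = n-d}} \mu (\widehat{0}, \Lambda ), \]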

and the terms on the left all have the same sign \((-1)^{d-k}\). The sums are empty unless \(n \ge d \ge k \ge 0\).

Proof

The upper order ideal \(\{\Lambda \in P[n] : \Pi (\lambda ) \le \Lambda \}\) is isomorphic to \(P[n-k]\) by collapsing the \(n-k\) blocks of \(\Pi (\lambda )\) to singletons. This isomorphism preserves the number of blocks. Furthermore, recall that in P[n] the Möbius function satisfies

\[ \mu (\widehat{0}, \widehat{1}) = (-1)^{n-1} (n-1)!, \]

from which it follows easily that

\[ \mu (\widehat{0}, \Lambda ) = \prod _{A \in \Lambda } (-1)^{\#A - 1} (\#A - 1)!. \]

The result follows immediately upon combining these observations. \(\square \)

Lemma 3.3

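Let \(\lambda \vdash n\) with \(\ell (\lambda ) = n-k\) and \(n \ge d \ge k \ge 0\). Then

\[ \Bigg | \sum _{\substack{\Lambda : \Pi (\lambda ) \le \Lambda \\ \#\Lambda = n-d}} \mu (\Pi (\lambda ), \Lambda ) \Bigg | \le (n-k)^{2(d-k)}. \]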

Proof

Using (14), we can interpret the sum as the number of permutations of \([n-k]\) with \(n-d\) cycles, which is a Stirling number of the first kind. There are well-known asymptotics for these numbers, though the stated elementary bound suffices for our purposes. We induct on d. At \(d=k\), the result is trivial. Given a permutation of \([n-k]\) with \(n-d\) cycles, choose \(i, j \in [n-k]\) from different cycles. Suppose the cycles are of the form \((i'\ \cdots \ i)\) and \((j\ \cdots \ j')\). Splice the two cycles together to obtain

This procedure constructs every permutation of \([n-k]\) with \(n-(d+1)\) cycles and requires no more than \((n-k)^2\) choices. The result follows. \(\square \)
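The interpretation used in this proof can be tested by direct enumeration for small cases. The sketch below writes \(m = n-k\) and \(j = n-d\), uses the standard product formula \(\mu (\widehat{0}, \Lambda ) = \prod _{A \in \Lambda } (-1)^{\#A-1} (\#A-1)!\), and compares the sum over set partitions with j blocks against the unsigned Stirling numbers of the first kind. It also checks the bound \(m^{2(m-j)}\), which is what the induction yields when each of the \(d-k\) splicing steps costs at most \((n-k)^2\) choices. All function names are ours.

```python
from math import factorial


def set_partitions(elements):
    """Yield every set partition of the list `elements` as a list of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # Either `first` forms its own block, or it joins an existing block.
        yield [[first]] + partition
        for i, block in enumerate(partition):
            yield partition[:i] + [[first] + block] + partition[i + 1:]


def mobius_from_bottom(partition):
    """mu(0-hat, Lambda) as a product over the blocks of Lambda."""
    value = 1
    for block in partition:
        value *= (-1) ** (len(block) - 1) * factorial(len(block) - 1)
    return value


def mobius_sum(m, blocks):
    """Sum of mu(0-hat, Lambda) over Lambda in P[m] with the given number of blocks."""
    ground_set = list(range(1, m + 1))
    return sum(mobius_from_bottom(P) for P in set_partitions(ground_set) if len(P) == blocks)


def stirling_first_unsigned(m, j):
    """Number of permutations of [m] with exactly j cycles."""
    table = [[0] * (j + 1) for _ in range(m + 1)]
    table[0][0] = 1
    for a in range(1, m + 1):
        for b in range(1, min(a, j) + 1):
            table[a][b] = table[a - 1][b - 1] + (a - 1) * table[a - 1][b]
    return table[m][j]


for m in range(1, 7):
    for j in range(1, m + 1):
        c = stirling_first_unsigned(m, j)
        assert mobius_sum(m, j) == (-1) ** (m - j) * c
        assert c <= m ** (2 * (m - j))
```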

Lemma 3.4

For \(n \ge d \ge k \ge 0\), we have

Proof

For \(\lambda \vdash n\) with \(\ell (\lambda ) = n-k\), \(\lambda !\) can be thought of as the product of terms obtained from filling the ith row of the Young diagram of \(\lambda \) with \(1, 2, \ldots , \lambda _i\). Alternatively, we may fill the cells of \(\lambda \) as follows: put \(n-k\) ones in the first column, and fill the remaining cells with the numbers \(2, 3, \ldots , k+1\) starting at the largest row and proceeding left to right. It is easy to see that the labels of the first filling are bounded above by the labels of the second filling, so that \(\lambda ! \le (k+1)!\). Furthermore, each \(\lambda \vdash n\) with \(\ell (\lambda ) = n-k\) can be constructed by first placing \(n-k\) cells in the first column and then deciding on which of the \(n-k\) rows to place each of the remaining k cells, so there are no more than \((n-k)^k\) such \(\lambda \). The result follows from combining these bounds with (16). \(\square \)
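Both counting bounds in this proof, namely \(\lambda ! \le (k+1)!\) and the bound \((n-k)^k\) on the number of \(\lambda \vdash n\) with \(\ell (\lambda ) = n-k\), are easy to confirm by enumeration for small n. The following sketch does so; the partition generator and the function `check_bounds` are illustrative helpers.

```python
from math import factorial, prod


def partitions(n, max_part=None):
    """Yield the integer partitions of n as weakly decreasing tuples."""
    if max_part is None:
        max_part = n
    if n == 0:
        yield ()
        return
    for first in range(min(n, max_part), 0, -1):
        for rest in partitions(n - first, first):
            yield (first,) + rest


def check_bounds(n):
    """Return True if both bounds hold for every partition of n."""
    by_k = {}
    for lam in partitions(n):
        by_k.setdefault(n - len(lam), []).append(lam)  # k = n - length
    for k, lams in by_k.items():
        if len(lams) > (n - k) ** k:
            return False
        if any(prod(factorial(part) for part in lam) > factorial(k + 1) for lam in lams):
            return False
    return True


print(all(check_bounds(n) for n in range(1, 16)))
```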

Lemma 3.5

For n sufficiently large, for all \(0 \le d \le n\) we have

Proof

For n large enough, we have \((k+1)! < n^{k-1}\) for all \(2 \le k \le n\). Using (17) gives

\(\square \)
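The elementary inequality \((k+1)! < n^{k-1}\) for all \(2 \le k \le n\) used in this proof can be probed numerically to see how large n must be. The sketch below searches only the range \(n < 100\), so the threshold it reports is empirical within that range rather than a proof; the function name is hypothetical.

```python
from math import factorial


def inequality_holds(n):
    """True if (k+1)! < n^(k-1) for every k with 2 <= k <= n."""
    return all(factorial(k + 1) < n ** (k - 1) for k in range(2, n + 1))


# Smallest n such that the inequality holds for every m with n <= m < 100.
threshold = next(n for n in range(2, 100) if all(inequality_holds(m) for m in range(n, 100)))
print(threshold)
```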

We may now complete the proof of Theorem 3.1. Combining Lemma 3.5 and (13) gives

Since \(\sigma _n^2 \sim n^3/36\) and M is constant, \((Mn)^{2d}/\sigma _n^{2d} \sim (36M^2/n)^d\). Using a geometric series, it follows that

completing the proof of Theorem 3.1.
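Theorem 3.1 can also be observed numerically. The following sketch assumes that \(F_n\) is normalized so that \(H_n(p,q)/n! = ([n]_p! [n]_q!/n!^2) F_n(p,q)\) and that Roselle's product is \(\prod _{a,b \ge 0} (1 - p^a q^b z)^{-1}\). Under these assumptions \(\sum _m F_m z^m/m! = \exp (\sum _k r_k z^k/k)\) with \(r_k = [(1-p)(1-q)]^{k-1}/([k]_p [k]_q)\), and differentiating in z gives the recurrence \(F_m = \sum _{k=1}^m r_k \frac{(m-1)!}{(m-k)!} F_{m-k}\). This recurrence and the function names are ours and are not part of the argument above.

```python
import cmath
import math


def sigma(n):
    """Standard deviation of inv (or maj) on S_n."""
    return math.sqrt(n * (n - 1) * (2 * n + 5) / 72)


def correction_factor(n, s, t):
    """F_n(e^{is/sigma_n}, e^{it/sigma_n}) for nonzero real s and t (n >= 2)."""
    sd = sigma(n)
    p = cmath.exp(1j * s / sd)
    q = cmath.exp(1j * t / sd)
    x = (1 - p) * (1 - q)
    F = [1 + 0j]  # F_0 = 1
    for m in range(1, n + 1):
        total = 0j
        weight = 1 + 0j  # equals x^(k-1) * (m-1)! / (m-k)! at step k
        for k in range(1, m + 1):
            k_p = (1 - p ** k) / (1 - p)  # [k]_p
            k_q = (1 - q ** k) / (1 - q)  # [k]_q
            total += weight * F[m - k] / (k_p * k_q)
            weight *= x * (m - k)
        F.append(total)
    return F[n]


for n in (25, 50, 100, 200):
    gap = abs(correction_factor(n, 1.0, 1.0) - 1)
    print(n, gap, n * gap)
```

The scaled recurrence avoids forming \(n!\) or \((1-p)^n\) separately, both of which would overflow or underflow in floating point for moderate n. In this experiment the printed products \(n \cdot |F_n - 1|\) should stay bounded, in line with an \(O(1/n)\) rate.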

Remark 3.6

Indeed, the argument shows that \(|F_n(e^{is/\sigma _n}, e^{it/\sigma _n}) - 1| = O(1/n)\). The above estimates are particularly far from sharp for large d, though for small d they are quite accurate. Working directly with (11), one finds the \(d=1\) contribution to be

Letting \(p = e^{is/\sigma _n}, q = e^{it/\sigma _n}\), straightforward estimates show that this is \(\Omega (1/n)\). Consequently, the preceding arguments are strong enough to identify the leading term, and in particular

4 Deducing Baxter and Zeilberger’s Result

We next summarize enough of the standard theory of characteristic functions to prove Theorem 1.1 using (3) and Theorem 3.1.

Definition 4.1

The characteristic function of an \(\mathbb {R}^k\)-valued random variable \(X = (X_1, \ldots , X_k)\) is the function \(\phi _X :\mathbb {R}^k \rightarrow \mathbb {C}\) given by

\[ \phi _X(s_1, \ldots , s_k) := \mathbb {E}[\exp (i(s_1 X_1 + \cdots + s_k X_k))]. \]

Example 4.2

It is well known that the characteristic function of the standard normal random variable with density \(\frac{1}{\sqrt{2\pi }} e^{-x^2/2}\) is \(e^{-s^2/2}\). Similarly, the characteristic function of a bivariate jointly independent standard normal random variable with density \(\frac{1}{2\pi } e^{-x^2/2 - y^2/2}\) is \(e^{-s^2/2 - t^2/2}\).

Example 4.3

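If W is a finite set and \({{\,\mathrm{stat}\,}}= ({{\,\mathrm{stat}\,}}_1, \ldots , {{\,\mathrm{stat}\,}}_k) :W \rightarrow \mathbb {Z}_{\ge 0}^k\) is some statistic, the probability generating function of \({{\,\mathrm{stat}\,}}\) on W is

\[ P(x_1, \ldots , x_k) := \frac{1}{\#W} \sum _{w \in W} x_1^{{{\,\mathrm{stat}\,}}_1(w)} \cdots x_k^{{{\,\mathrm{stat}\,}}_k(w)}. \]

The characteristic function of the corresponding random variable X where the w are chosen uniformly from W is

\[ \phi _X(s_1, \ldots , s_k) = P(e^{is_1}, \ldots , e^{is_k}). \]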

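From Example 4.3, Remark 2.7, and an easy calculation, it follows that the characteristic functions of the random variables \(X_n\) and \(Y_n\) from (1) are

\[ \phi _{X_n}(s) = e^{-i\mu _n s/\sigma _n} \frac{[n]_p!}{n!}, \qquad \phi _{Y_n}(t) = e^{-i\mu _n t/\sigma _n} \frac{[n]_q!}{n!}, \qquad \text{where } p = e^{is/\sigma _n},\ q = e^{it/\sigma _n}. \tag{18} \]

An analogous calculation for the random variable \((X_n, Y_n)\) together with (18) and (3) gives

\[ \phi _{(X_n, Y_n)}(s, t) = \phi _{X_n}(s) \, \phi _{Y_n}(t) \, F_n(e^{is/\sigma _n}, e^{it/\sigma _n}). \tag{19} \]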

Theorem 4.4

(Multivariate Lévy Continuity [Bil95, p. 383]). Suppose that \(X^{(1)}\), \(X^{(2)}\), \(\ldots \) is a sequence of \(\mathbb {R}^k\)-valued random variables and X is an \(\mathbb {R}^k\)-valued random variable. Then \(X^{(1)}, X^{(2)}, \ldots \) converges in distribution to X if and only if \(\phi _{X^{(n)}}\) converges pointwise to \(\phi _X\).

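If the distribution function of X is continuous everywhere, convergence in distribution means that for all \(u_1, \ldots , u_k\) we have

\[ \lim _{n \rightarrow \infty } \mathbb {P}[X^{(n)}_1 \le u_1, \ldots , X^{(n)}_k \le u_k] = \mathbb {P}[X_1 \le u_1, \ldots , X_k \le u_k]. \]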

Many techniques are available for proving that \({{\,\mathrm{inv}\,}}\) and \({{\,\mathrm{maj}\,}}\) on \(S_n\) are asymptotically normal. The result is typically attributed to Feller.

Theorem 4.5

([Fel68, p. 257]). The sequences of random variables \(X_n\) and \(Y_n\) from (1) each converge in distribution to the standard normal random variable.
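The convergence in Theorem 4.5 can be illustrated through characteristic functions. The sketch below uses MacMahon's product \(\sum _{w \in S_n} q^{{{\,\mathrm{inv}\,}}(w)} = [n]_q!\) to evaluate the characteristic function of the standardized inversion count at \(q = e^{is/\sigma _n}\) and compares it with \(e^{-s^2/2}\). The function name and the sample values of n and s are illustrative.

```python
import cmath
import math


def char_function_inv(n, s):
    """Characteristic function at s of (inv - mu_n) / sigma_n on S_n, for n >= 2."""
    mu = n * (n - 1) / 4
    sd = math.sqrt(n * (n - 1) * (2 * n + 5) / 72)
    theta = s / sd
    if theta == 0:
        return 1 + 0j
    q = cmath.exp(1j * theta)
    value = cmath.exp(-1j * mu * theta)  # centering factor
    for j in range(1, n + 1):
        value *= (1 - q ** j) / ((1 - q) * j)  # [j]_q / j
    return value


for n in (5, 20, 100):
    print(n, char_function_inv(n, 1.0), math.exp(-0.5))
```

For \(n = 2\) the standardized statistic takes the values \(\pm 1\) with equal probability, so the function should return \(\cos (s)\), which gives a quick consistency check.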

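We may now complete the proof of Theorem 1.1. From Theorem 4.5 and Example 4.2, we have for all \(s, t \in \mathbb {R}\)

\[ \lim _{n \rightarrow \infty } \phi _{X_n}(s) = e^{-s^2/2}, \qquad \lim _{n \rightarrow \infty } \phi _{Y_n}(t) = e^{-t^2/2}. \tag{20} \]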

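Combining in order (20), (19), and Theorem 3.1 gives

\[ \lim _{n \rightarrow \infty } \phi _{(X_n, Y_n)}(s, t) = e^{-s^2/2 - t^2/2}. \]

By Example 4.2 and Theorem 4.4, this proves Theorem 1.1.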

References

[Bil95] Patrick Billingsley. Probability and measure. Wiley Series in Probability and Mathematical Statistics. John Wiley & Sons, Inc., New York, third edition, 1995. A Wiley-Interscience Publication.

[BZ10] Andrew Baxter and Doron Zeilberger. The Number of Inversions and the Major Index of Permutations are Asymptotically Joint-Independently Normal (Second Edition!), 2010. arXiv:1004.1160.

[Fel68] William Feller. An introduction to probability theory and its applications. Vol. I. Third edition. John Wiley & Sons, Inc., New York-London-Sydney, 1968.

[Mac] P. A. MacMahon. Two Applications of General Theorems in Combinatory Analysis: (1) To the Theory of Inversions of Permutations; (2) To the Ascertainment of the Numbers of Terms in the Development of a Determinant which has Amongst its Elements an Arbitrary Number of Zeros. Proc. London Math. Soc., S2-15(1):314.

[Ros74] D. P. Roselle. Coefficients associated with the expansion of certain products. Proc. Amer. Math. Soc., 45:144–150, 1974.

[Thi16] Marko Thiel. The inversion number and the major index are asymptotically jointly normally distributed on words. Combin. Probab. Comput., 25(3):470–483, 2016.

[Zei] Doron Zeilberger. The number of inversions and the major index of permutations are asymptotically joint-independently normal. Personal Web Page. http://sites.math.rutgers.edu/~zeilberg/mamarim/mamarimhtml/invmaj.html (version: 2018-01-25).

Acknowledgements

The author would like to thank Dan Romik and Doron Zeilberger for providing the impetus for the present work and feedback on the manuscript. He would also like to thank Sara Billey and Matjaž Konvalinka for valuable discussion on related work, and he gratefully acknowledges Sara Billey for her very careful reading of the manuscript and many helpful suggestions. Finally, thanks also go to the anonymous referees for their careful reading of the manuscript and useful suggestions.

Ethics declarations

Conflict of interest

The author states that there is no conflict of interest.

Additional information

Communicated by Eric Fusy.


Cite this article

Swanson, J.P. On a Theorem of Baxter and Zeilberger via a Result of Roselle. Ann. Comb. 26, 87–95 (2022). https://doi.org/10.1007/s00026-022-00570-x
