Abstract

The concept of penumbra of a convex set with respect to another convex set was introduced by Rockafellar (1970). We study various geometric and topological properties of penumbras, their role in proper and strong separation of convex sets, and their relation to polyhedra and M-decomposable sets.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Separation of convex sets by hyperplanes is among the core topics of convexity. Initiated by Minkowski [7] on the turn of 20th century, it became a useful tool in many mathematical disciplines, especially in convex analysis and optimization, convex geometry, and linear analysis.

In what follows, we consider separation of convex sets in the Euclidean space \(\mathrm {R}^n\). Existing results in this domain either deal with sufficient conditions for the existence of hyperplanes separating a given pair of convex sets, say \(K_1\) and \(K_2\) (see, for instance, [9, 10]), or with analytical descriptions of all hyperplanes separating \(K_1\) and \(K_2\) (see, e.g., [1, 3, 4, 8] for the case of polytopes or polyhedra, and [13] for the case of arbitrary convex sets).

In this paper, we describe in geometric terms the locus of all hyperplanes separating (properly, or strongly) a given pair of nonempty convex sets in \(\mathrm {R}^n\). This description generalizes the existing results, obtained for the case of polytopes and polyhedral sets, and gives a new geometric insight into the separation properties of convex sets.

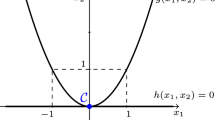

Our approach is based on the properties of penumbras introduced by Rockafellar [9, p. 22] for the case of disjoint sets. We recall that the penumbra of a convex set \(K_1\) with respect to another convex set \(K_2\), denoted below \(P(K_1, K_2)\), is defined by

Geometrically, \(P(K_1, K_2)\) is the union of all closed halflines initiated at the points of \(K_1\) in the directions of vectors from \(K_1 - K_2\) (see Fig. 2).

We study various geometric and topological properties of penumbras (Sect. 2), their role in proper and strong separation of convex sets (Sects. 3–5), and their relation to polyhedra and M-decomposable sets (Sect. 6).

We conclude this section with necessary definitions, notation, and auxiliary results (see, e. g., [9, 10] for details). The elements of \(\mathrm {R}^n\) are called vectors (or points). In what follows, o stands for the zero vector of \(\mathrm {R}^n\). We denote by [u, v] and (u, v) the closed and open line segments with endpoints \(u, v \in \mathrm {R}^n\). Also, \(u \! \cdot \!v\) will mean the dot product of u and v.

By an r-dimensional plane L in \(\mathrm {R}^n\), where \(0 \le r \le n\), we mean a translate of a suitable r-dimensional subspace S of \(\mathrm {R}^n\): \(L = c + S\), where \(c \in \mathrm {R}^n\). It is known that a nonempty set \(M \subset \mathrm {R}^n\) is a plane if and only if \((1 - \lambda ) x + \lambda y \in M\) whenever \(x, y \in M\) and \(\lambda \in \mathrm {R}\).

The open \(\rho \)-neighborhood of a nonempty \(X \subset \mathrm {R}^n\), denoted \(U_\rho (X)\), is the union of all open balls \(U_\rho (x)\) of radius \(\rho > 0\) centered at \(x \in X\). Nonempty sets \(X_1\) and \(X_2\) in \(\mathrm {R}^n\) are called strongly disjoint provided \(U_\rho (X_1) \cap U_\rho (X_2) = \emptyset \) for a suitable \(\rho > 0\); the latter occurs if and only if the inf-distance \(\delta (X_1, X_2)\), defined by

is positive. For a nonempty set \(X \subset \mathrm {R}^n\), the notations \(\mathrm {cl\,}X\) and \(\mathrm {int\,}X\) stand, respectively, for the closure and interior of X. In a standard way, \(\mathrm {conv\,}X\) and \(\mathrm {span\,}X\) mean, respectively, the convex hull and the span of X, and the affine span of X, denoted \(\mathrm {aff\,}X\), is defined as the intersection of all planes containing X. Clearly, \(\mathrm {aff\,}X = \mathrm {span\,}X\) if \(o \in X\). The dimension of a nonempty set \(X \subset \mathrm {R}^n\), denoted \(\mathrm {dim\,}X\), is defined by the equality \(\mathrm {dim\,}X = \mathrm {dim\,}(\mathrm {aff\,}X)\).

A hyperplane in \(\mathrm {R}^n\) is a plane which can be described as

Every hyperplane of the form (1) determines the opposite closed halfspaces

and the opposite open halfspaces

We recall that a nonempty set C in \(\mathrm {R}^n\) is a cone with apex \(z \in \mathrm {R}^n\) if \(z + \lambda (x - z) \in C\) whenever \(\lambda \ge 0\) and \(x \in C\). (Obviously, this definition implies that \(z \in C\), although a stronger condition \(\lambda > 0\) can be beneficial; see, e. g., [6].) The cone C is called convex if it is a convex set. For a convex set \(K \subset \mathrm {R}^n\) and a point \(z \in \mathrm {R}^n\), the generated cone \(C_z (K)\) with apex z is defined by

It is known that both sets \(C_z(K)\) and \(\mathrm {cl\,}C_z(K)\) are convex cones with apex z.

2 Properties of Penumbras

Theorem 1

For convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), the assertions below hold.

-

1)

The set \(P(K_1, K_2)\) is convex and \(K_1 \subset P(K_1, K_2)\).

-

2)

\(\mathrm {aff\,}P(K_1, K_2) = \mathrm {aff\,}(K_1 \cup K_2)\).

-

3)

\(P(K_1, K_2) \cap P(K_2, K_1) = \emptyset \) if and only if \(K_1 \cap K_2 = \emptyset \).

-

4)

\(\delta (P(K_1, K_2), P(K_2, K_1)) = \delta (K_1, K_2)\).

Proof

1) For the convexity of \(P(K_1, K_2)\), choose any points \(u, v \in P(K_1, K_2)\) and a scalar \(\lambda \in [0, 1]\). Then

for suitable scalars \(\eta , \theta \ge 1\) and points \(x_i, y_i \in K_i\), \(i = 1, 2\). One has

Put \(\gamma = (1 - \lambda ) \eta + \lambda \theta \). Clearly, \(\gamma \ge 1\). Since \(\alpha K + \beta K = (\alpha + \beta )K\) whenever K is convex and \(\alpha \beta \ge 0\) (see, e. g., [9, Theorem 3.2]), we have

Therefore,

which proves the convexity of \(P(K_1, K_2)\).

Expressing any point \(x_1 \in K_1\) as \(x_1 = 1 x_1 + (1 - 1) x_2\), where \(x_2 \in K_2\), we obtain the inclusion \(x_1 \in P(K_1, K_2)\). Hence \(K_1 \subset P(K_1, K_2)\).

2) The inclusion \(P(K_1, K_2) \subset \mathrm {aff\,}(K_1 \cup K_2)\) follows from the fact that any point \(x \in P(K_1, K_2)\) is an affine combination of points from \(K_1 \cup K_2\). Consequently,

For the opposite inclusion, choose points \(x_1 \in K_1\) and \(x_2 \in K_2\). Put \(z = 2 x_1 - x_2\). Since \(z \in P(K_1, K_2)\) and \(K_1 \subset P(K_1, K_2)\), one has

So, \(K_2 \subset \mathrm {aff\,}P(K_1, K_2)\). Thus \(K_1 \cup K_2 \subset \mathrm {aff\,}P(K_1, K_2)\), which gives the desired inclusion

3) If \(P(K_1, K_2) \cap P(K_2, K_1) = \emptyset \), then \(K_1 \cap K_2 = \emptyset \) due to part 1). Conversely, let \(K_1 \cap K_2 = \emptyset \). Assume for a moment the existence of a point

Then

for suitable scalars \(\gamma , \mu \ge 1\) and points \(x_1, y_1 \in K_1\) and \(x_2, y_2 \in K_2\). Clearly,

Now, put

Obviously, \(\xi , \eta \in [0, 1]\). Based on (4) and the convexity of \(K_1\), one has

Similarly,

Thus \(a \in K_1 \cap K_2\), in contradiction with the hypothesis \(K_1 \cap K_2 = \emptyset \).

4) The inequality

is trivial. For the opposite one, choose points \(u \in P(K_1, K_2)\) and \(v \in P(K_2, K_1)\). Then

for suitable scalars \(\gamma , \mu \ge 1\) and points \(x_1, y_1 \in K_1\) and \(x_2, y_2 \in K_2\). Now, put

With \(\xi \) and \(\eta \) defined by (5), we obtain, based on (4), that

Hence

which implies the desired inequality

\(\square \)

Definition 1

Given convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), let

We will need the following auxiliary lemma.

Lemma 1

([9], §6) For a convex set \(K \subset \mathrm {R}^n\), the assertions below hold.

-

1)

A point \(x \in K\) belongs to \(\mathrm {rint\,}K\) if and only if for any point \(y \in K\) there is a scalar \(\eta > 1\) such that \(\eta x + (1 - \eta ) y \in K\).

-

2)

If \(x \in \mathrm {rint\,}K\) and \(y \in \mathrm {cl\,}K\), then \([x, y) \subset \mathrm {rint\,}K\). Consequently, \(\mathrm {cl\,}K = \mathrm {cl\,}(\mathrm {rint\,}K)\).

\(\square \)

Topological properties of penumbra are described in the following theorem.

Theorem 2

For convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), the assertions below hold.

-

1)

\(\mathrm {rint\,}P(K_1, K_2) = P_0 (\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\).

-

2)

\(P(\mathrm {cl\,}K_1, \mathrm {cl\,}K_2) \subset \mathrm {cl\,}P(K_1, K_2) = \mathrm {cl\,}P_0 (\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\).

-

3)

If \(K_2\) is bounded and \(\mathrm {cl\,}K_1 \cap \mathrm {cl\,}K_2 = \emptyset \), then \(P(\mathrm {cl\,}K_1, \mathrm {cl\,}K_2) = \mathrm {cl\,}P(K_1, K_2)\).

Proof

1) First, we will prove the inclusion

For this, choose any point \(x \in P_0(\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\). Then \(x = \mu x_1 + (1 - \mu ) x_2\) for a suitable scalar \(\mu > 1\) and points \(x_1 \in \mathrm {rint\,}K_1\) and \(x_2 \in \mathrm {rint\,}K_2\). Let y be any point in \(P(K_1, K_2)\). Then \(y = \gamma y_1 + (1 - \gamma ) y_2\), where \(\gamma \ge 1\), \(y_1 \in K_1\), and \(y_2 \in K_2\). The obvious inequality \(\gamma - 1 > \gamma - \mu \) implies the existence of a scalar \(\varphi _0 > 1\) such that

Consequently, the scalar \(\eta = \varphi \mu + (1 - \varphi ) \gamma \) satisfies the inequality \(\eta > 1\) for all \(1< \varphi < \varphi _0\). We are going to show the existence of a scalar \(\varphi ^* \in (1, \varphi _0)\) such that

Indeed, for any \(1< \varphi < \varphi _0\), let

It is easy to see that \(\lambda _1(\varphi ) > 1\) and \(\lambda _2(\varphi ) \ge 1\) for all \(1< \varphi < \varphi _0\), and that \(\lim _{\varphi \rightarrow 1} \lambda _i(\varphi ) = 1\), \(i = 1, 2\). We observe that the inequality \(\lambda _2(\varphi ) \ge 1\) is equivalent to \((1 - \gamma ) (1 - \varphi ) \ge 0\); whence \(\lambda _2(\varphi ) = 1\) if and only if \(\gamma = 1\).

Lemma 1 shows the existence of a sufficiently small \(\varphi ^* \in (1, \varphi _0)\) such that the scalars \(\lambda _i^* = \lambda _i(\varphi ^*)\) satisfy the conditions: \(\lambda _1^* > 1\), \(\lambda _2^* \ge 1\), and

Let

Because \(\eta ^* > 1\), we obtain \(z^* \in P(K_1, K_2)\). Furthermore,

Since the point y was arbitrarily chosen in \(P(K_1, K_2)\), Lemma 1 implies the inclusion \(x \in \mathrm {rint\,}P(K_1, K_2)\).

For the opposite inclusion,

choose any point \(x \in \mathrm {rint\,}P(K_1, K_2)\). Let y be a point in \(P_0(\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\). Then \(y = \gamma y_1 + (1 - \gamma ) y_2\), where \(\gamma > 1\), \(y_1 \in \mathrm {rint\,}K_1\) and \(y_2 \in \mathrm {rint\,}K_2\). By the above argument, \(y \in \mathrm {rint\,}P(K_1, K_2)\).

Since the case \(x = y\) is trivial, we may assume that \(x \ne y\). By Lemma 1, there is a scalar \(\nu > 1\) such that the point \(z = \nu x + (1 - \nu ) y\) belongs to \(P(K_1, K_2)\). With \(\alpha = \nu ^{-1}\), we can write \(x = (1 - \alpha ) y + \alpha z\), where \(0< \alpha < 1\). Because \(z \in P(K_1, K_2)\), one has \(z = \beta z_1 + (1 - \beta ) z_2\), where \(\beta \ge 1\), \(z_1 \in K_1\), and \(z_2 \in K_2\). Now, let \(\mu = \alpha \beta + (1 - \alpha ) \gamma \). Clearly, \(\mu > 1\). Next, put

It is easy to see that \(\alpha _1, \alpha _2 \in [0, 1)\). Therefore, Lemma 1 implies the inclusions

Furthermore, the equalities

give the desired inclusion \(x \in P_0(\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\).

2) Let u be any point in \(P(\mathrm {cl\,}K_1, \mathrm {cl\,}K_2)\). Then \(u = \mu x_1 + (1 - \mu ) x_2\) for a suitable scalar \(\mu \ge 1\) and points \(x_1 \in \mathrm {cl\,}K_1\) and \(x_2 \in \mathrm {cl\,}K_2\). Choose any scalar \(\varepsilon > 0\) and let \(\rho = \varepsilon /(2 \mu - 1)\). There are points \(x_1' \in K_1\) and \(x_2' \in K_2\) such that \(\Vert x_1 - x_1' \Vert < \rho \) and \(\Vert x_2 - x_2' \Vert < \rho \). Put \(u' = \mu x_1' + (1 - \mu ) x_2'\). Then \(u' \in P(K_1, K_2)\) and

So, \(u \in \mathrm {cl\,}P(K_1, K_2)\), as desired.

3) Suppose that \(K_2\) is bounded and \(\mathrm {cl\,}K_1 \cap \mathrm {cl\,}K_2 = \emptyset \). By the above argument, it suffices to prove the inclusion

For this, choose any point \(u \in \mathrm {cl\,}P(K_1, K_2)\) and a sequence of points \(u_1, u_2, \dots \) in \(P(K_1, K_2)\) converging to u. We can write

Therefore,

By a compactness argument, there is an increasing sequence of integers \(i_1, i_2, \dots \) such that \(\mu _{i_r}^{-1} \rightarrow \alpha \in [0, 1]\) and \(y_{i_r} \rightarrow y \in \mathrm {cl\,}K_2\) as \(r \rightarrow \infty \). Consequently, there exists the limit

Clearly, \(x \in \mathrm {cl\,}K_1\) due to the inclusions \(x_{i_r} \in K_1\), \(r \ge 1\).

We observe that \(\alpha \ne 0\). Indeed, if \(\alpha = 0\), then (7) would give \(x = y\), contrary to the assumption \(\mathrm {cl\,}K_1 \cap \mathrm {cl\,}K_2 = \emptyset \). So, \(\alpha \ne 0\). Then \(\alpha ^{-1} > 1\), and (7) gives

as desired. Finally, by Lemma 1,

\(\square \)

Remark 1

Generally, both inclusions

may be proper. Indeed, in the plane \(\mathrm {R}^2\), consider the closed convex sets

With \(M = \{ (x, y) : y > 1 \}\), one has \(P(K_1, K_2) = K_1 \cup M\) and

3 Penumbras and Separation

We recall that convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\) are separated by a hyperplane \(H \subset \mathrm {R}^n\) provided \(K_1\) and \(K_2\) lie in the opposite closed halfspaces determined by H. Furthermore, \(K_1\) and \(K_2\) are properly separated if \(K_1 \cup K_2 \not \subset H\), and they are nontrivially separated if \(K_1 \not \subset H\) and \(K_2 \not \subset H\). Also, \(K_1\) and \(K_2\) are strictly separated by H if \(K_1 \cap H = K_2 \cap H = \emptyset \). Finally, \(K_1\) and \(K_2\) are strongly separated by H if suitable open \(\rho \)-neighborhoods \(U_\rho (K_1)\) and \(U_\rho (K_2)\) of these sets are separated by H (see [9, p. 95]). The following lemma provides well-known criteria for proper and strong separation.

Lemma 2

([9], §11) If \(K_1\) and \(K_2\) are convex sets in \(\mathrm {R}^n\), then

-

1)

\(K_1\) and \(K_2\) are properly separated if and only if \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \),

-

2)

\(K_1\) and \(K_2\) are strongly separated if and only if \(\delta (K_1, K_2) > 0\).

\(\square \)

Theorem 3

Let \(K_1\) and \(K_2\) be convex sets in \(\mathrm {R}^n\), and \(H \subset \mathrm {R}^n\) be a hyperplane. The assertions below hold.

-

1)

H separates \(K_1\) and \(K_2\) if and only if H separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\).

-

2)

H properly separates \(K_1\) and \(K_2\) if and only if H nontrivially separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\).

-

3)

H strictly separates \(K_1\) and \(K_2\) if and only if H strictly separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\).

-

4)

H strongly separates \(K_1\) and \(K_2\) if and only if H strongly separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\).

Proof

1) Let H separate \(K_1\) and \(K_2\). Denote by \(V_1\) and \(V_2\) the closed halfspaces determined by H and containing \(K_1\) and \(K_2\), respectively. Without loss of generality, we may suppose that \(V_1\) and \(V_2\) are given by (2).

We assert that \(P(K_1, K_2) \subset V_1\). Indeed, choose any point \(u \in P(K_1, K_2)\). Then \(u = \mu x_1 + (1 - \mu ) x_2\) for a suitable scalar \(\mu \ge 1\) and points \(x_1 \in K_1\) and \(x_2 \in K_2\). Since \(x_1 \in V_1\) and \(x_2 \in V_2\), one has \(x_1 \! \cdot \!e \le \gamma \) and \(x_2 \! \cdot \!e \ge \gamma \). Therefore,

Hence \(u \in V_1\), and thus \(P(K_1, K_2) \subset V_1\). Similarly, \(P(K_2, K_1) \subset V_2\), which implies that H separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\).

The proof of the “if” part immediately follows from the inclusions \(K_1 \subset P(K_1, K_2)\) and \(K_2 \subset P(K_2, K_1)\).

2) Assume that H properly separates \(K_1\) and \(K_2\) such that \(K_1 \subset V_1\) and \(K_2 \subset V_2\). Let, for instance, \(K_1 \not \subset H\). Then \(P(K_1, K_2) \not \subset H\) due to the inclusion \(K_1 \subset P(K_1, K_2)\). Now, choose points \(x_1 \in K_1 \setminus H\) and \(x_2 \in K_2\). Then \(x_1 \! \cdot \!e < \gamma \), and, with \(\mu > 1\), the point \(v = \mu x_2 + (1 - \mu ) x_1 \in P(K_2, K_1)\) satisfies the inequality

Hence \(P(K_2, K_1) \not \subset H\), which shows that H nontrivially separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\). The proof of the “if” part is similar.

The proofs of assertions 3)–4) are similar to that of 1) and use the following refinements of (8):

-

a)

if \(x_1 \! \cdot \!e < \gamma \) and \(x_2 \! \cdot \!e > \gamma \), then \(u \! \cdot \!e < \gamma \),

-

b)

if \(x_1 \! \cdot \!e \le \gamma - \varepsilon \) and \(x_2 \! \cdot \!e \ge \gamma + \varepsilon \), where \(\varepsilon > 0\), then \(u \! \cdot \!e \le \gamma - \varepsilon \).

\(\square \)

Remark 2

Part 2) of Theorem 3 implies that \(P(K_1, K_2)\) and \(P(K_2, K_1)\) are properly separated by a hyperplane H if and only if they are nontrivially separated by H.

We recall (see [9, p. 100]) that a hyperplane \(H \subset \mathrm {R}^n\) nontrivially supports a convex set \(K \subset \mathrm {R}^n\) if H supports K such that \(K \not \subset H\).

Theorem 4

Let \(K_1\) and \(K_2\) be convex sets in \(\mathrm {R}^n\). If a hyperplane \(H \subset \mathrm {R}^n\) supports (nontrivially supports) \(\mathrm {cl\,}P(K_1,K_2)\), then H separates (properly separates) \(K_1\) and \(K_2\). If, additionally, \(K_1\) is bounded, then H supports \(\mathrm {cl\,}K_1\).

Proof

Let H support \(\mathrm {cl\,}P(K_1,K_2)\). Expressing H in the form (1), we may suppose that the opposite closed halfspaces \(V_1\) and \(V_2\) determined by H are given by (2). Assume that \(\mathrm {cl\,}P(K_1, K_2) \subset V_1\). By Theorem 1, \(K_1 \subset P(K_1, K_2) \subset V_1\). Consider the hyperplane \(H' = \{ x \in \mathrm {R}^n : x \! \cdot \!e = \gamma ' \}\), where

Clearly, \(K_1\) is contained in the closed halfspace \(V_1' = \{ x \in \mathrm {R}^n : x \! \cdot \!e \le \gamma ' \}\). We assert that \(K_2\) is contained in the opposite closed halfspace \(V_2' = \{ x \in \mathrm {R}^n : x \! \cdot \!e \ge \gamma ' \}\). Indeed, assume for a moment the existence of a point \(x_2 \in K_2 \setminus V_2'\). Then \(x_2 \! \cdot \!e < \gamma '\). Choose a point \(x_1 \in K_1\) so close to \(H'\) that \(x_2 \! \cdot \!e < x_1 \! \cdot \!e \le \gamma '\). For a scalar \(\mu \ge 1\), let \(z_\mu = \mu x_1 + (1 - \mu ) x_2\). Then \(z_\mu \in P(K_1, K_2) \subset V_1\). On the other hand,

for a sufficiently large \(\mu \). Consequently, \(z_\mu \notin V_1\), in contradiction with the choice of \(V_1\). Summing up, \(K_2 \subset V_2'\).

So, \(H'\) separates \(K_1\) and \(K_2\). Theorem 3 implies that \(P(K_1,K_2) \subset V_1' \subset V_1\). Since H supports \(\mathrm {cl\,}P(K_1, K_2)\), it follows that \(V_1 = V_1'\) and \(H = H'\), as desired.

Assume now that H nontrivially supports \(\mathrm {cl\,}P(K_1,K_2)\). If \(K_1 \cup K_2\) contained in H, then, by Theorem 1, we would have \(P(K_1, K_2) = \mathrm {aff\,}(K_1, K_2) \subset H\), contrary to the assumption. Hence H properly separates \(K_1\) and \(K_2\).

The second assertion of the theorem follows from the choice of \(H'\) and compactness of \(\mathrm {cl\,}K_1\). \(\square \)

Remark 3

The hyperplane H in Theorem 4 may not support \(\mathrm {cl\,}K_1\) if \(K_1\) is unbounded. For instance, let the closed convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^2\) be given by

Then \(\mathrm {cl\,}P(K_1, K_2) = \{ (x, y) : y \ge 1 \}\) and \(y = 1\) is the only line that supports \(\mathrm {cl\,}P(K_1, K_2)\). On the other hand, this line is asymptotic to \(K_1\) (that is, \(H \cap K_1 = \emptyset \) and \(\delta (H, K_1) = 0\)).

Theorem 5

If convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\) satisfy the condition \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \), then \(\mathrm {cl\,}P(K_1, K_2)\) is the intersection of all closed halfspaces containing \(P(K_1, K_2)\) such that their boundary hyperplanes properly separate \(K_1\) and \(K_2\). If, additionally, \(K_1\) is bounded, then the above halfspaces can be chosen such that their boundary hyperplanes support \(\mathrm {cl\,}K_1\).

Proof

First, we assert that \(\mathrm {cl\,}P(K_1, K_2)\) is not a plane. For this, let \(L = \mathrm {aff\,}(K_1 \cup K_2)\) and \(r = \mathrm {dim\,}L\). Lemma 2 shows the existence of a hyperplane \(H \subset \mathrm {R}^n\) properly separating \(K_1\) and \(K_2\). Denote by \(V_1\) and \(V_2\) the closed halfspaces determined by H and containing \(K_1\) and \(K_2\), respectively. Since \(K_1 \cup K_2 \not \subset H\), the affine span \(\mathrm {aff\,}(K_1 \cup K_20\) is not included in H. Then the plane \(L = H \cap \mathrm {aff\,}(K_1 \cup K_2)\) has dimension \(r - 1\) and determines two closed halfplanes \(M_1 = L \cap V_1\) and \(M_2 = L \cap V_2\) (see [10], Lemma 2.72 and Theorem 2.73). By Theorem 3, \(P(K_1, K_2) \subset M_1\), while \(\mathrm {aff\,}P(K_1, K_2) = L\) (see Theorem 1). Hence \(P(K_1, K_2) \ne \mathrm {aff\,}P(K_1, K_2)\), which implies that \(P(K_1, K_2)\) is not a plane.

Since \(P(K_1, K_2)\) is not a plane, \(\mathrm {cl\,}P(K_1, K_2)\) is the intersection of all closed halfspaces nontrivially supporting \(\mathrm {cl\,}P(K_1, K_2)\) (see [10], Theorem 9.39). By Theorem 4, the boundary hyperplanes of these halfspaces properly separate \(K_1\) and \(K_2\), as desired. If, additionally, \(K_1\) is bounded, then the boundary hyperplanes of these halfspaces support \(\mathrm {cl\,}K_1\). \(\square \)

Remark 4

As it shown in the proof of Theorem 5, the penumbra \(P(K_1, K_2)\) is not a plane if \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \). In this regard, it would be interesting to know whether \(P(K_1, K_2) = \mathrm {aff\,}(K_1 \cup K_2)\) provided \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 \ne \emptyset \).

4 Properly Separating Hyperplanes

Definition 2

Given convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), denote by \({{\mathcal {H}}}_1 (K_1, K_2)\) (respectively, by \({{\mathcal {H}}}_2 (K_1, K_2)\) and \({{\mathcal {H}}}_3 (K_1, K_2)\)) the family of all hyperplanes properly (respectively, strictly and strongly) separating \(K_1\) and \(K_2\). Also, let

A combination of Theorem 5.3 from [13] and the above Theorem 2 implies the following assertion.

Theorem 6

([13]) If convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\) satisfy the condition \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \), then

Theorem 7

Let \(K_1\) and \(K_2\) be convex sets in \(\mathrm {R}^n\) satisfying the condition \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \). A hyperplane \(H \subset \mathrm {R}^n\) properly separates \(K_1\) and \(K_2\) if and only if \(H \subset E_1 (K_1, K_2)\) and \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \).

Proof

Let a hyperplane H properly separate \(K_1\) and \(K_2\). Then the inclusion \(H \subset E_1 (K_1, K_2)\) immediately follows from Definition 2. Denote by \(V_1\) and \(V_2\) the closed halfspaces determined by H and containing \(K_1\) and \(K_2\), respectively. Choose distinct points \(x_1 \in K_1\) and \(x_2 \in K_2\) (this is possible due to the assumption \(\mathrm {rint\,}K_1 \cap \mathrm {rint\,}K_2 = \emptyset \)). Then the line through \(x_1\) and \(x_2\) lies in \(\mathrm {aff\,}(K_1 \cup K_2)\) and meets H (see [13, Theorem 2.35]). Hence \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \).

Conversely, let a hyperplane H satisfy the conditions \(H \subset E_1 (K_1, K_2)\) and \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \). Theorem 6 shows that H is disjoint from the set

Hence \(Q \subset \mathrm {R}^n \setminus H\). We assert that \(P_0(\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\) and \(P_0(\mathrm {rint\,}K_2, \mathrm {rint\,}K_1)\) are contained, respectively, in the opposite open halfspaces determined by H. Indeed, assume for a moment that both sets

are contained in the same open halfspace W determined by H. By Theorem 1, both sets \(\mathrm {rint\,}K_1\) and \(\mathrm {rint\,}K_2\) are contained in W. Expressing H in the form (1), we may suppose that W is given by

Choose any points \(x_1 \in \mathrm {rint\,}K_1\) and \(x_2 \in \mathrm {rint\,}K_2\). For any scalar \(\mu > 1\), one has

Therefore,

These inequalities hold for all \(\mu > 1\) only if \(x_1 \! \cdot \!e \le x_2 \! \cdot \!e\). In a similar way, considering the points

we obtain that \(x_2 \! \cdot \!e \le x_1 \! \cdot \!e\). Hence \(x_1 \! \cdot \!e = x_2 \! \cdot \!e\) for all \(x_1 \in \mathrm {rint\,}K_1\) and \(x_2 \in \mathrm {rint\,}K_2\). This argument shows that the set \(\mathrm {rint\,}K_1 \cup \mathrm {rint\,}K_2\) lies in a hyperplane \(H' \subset W\) of the form \(H' = \{ x \in \mathrm {R}^n : x \! \cdot \!e = \gamma ' \}\), where \(\gamma ' < \gamma \). Consequently, by Lemma 1,

which gives the inclusion \(\mathrm {aff\,}(K_1 \cup K_2) \subset H'\). Because \(H \cap H' = \emptyset \), the latter inclusion contradicts the assumption \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \).

Summing up, \(P_0(\mathrm {rint\,}K_1, \mathrm {rint\,}K_2)\) and \(P_0(\mathrm {rint\,}K_2, \mathrm {rint\,}K_1)\) are contained in the opposite open halfspaces determined by H. By Theorem 2, \(P(K_1, K_2)\) and \(P(K_2, K_1)\) are contained in the opposite closed halfspaces determined by H such that neither of these set lies in H. Theorem 3 shows that H properly separates \(K_1\) and \(K_2\). \(\square \)

The following example illustrates Theorem 7.

Example 1

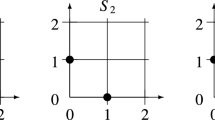

In the plane \(\mathrm {R}^2\), consider the closed convex sets

Then \(P(K_1, K_2) = K_1\) and \(P(K_2, K_1) = K_2\). Any horizontal line \(y = b\), where \(b \ne 0\), lies in \(E_1 (K_1, K_2) = \mathrm {R}^2 \setminus (K_1 \cup K_2)\), while only slant lines which meet the x-axis at the segment \(\{ (x, 0) : 0 \le x \le 1 \}\) properly separate \(K_1\) and \(K_2\).

Corollary 1

Let \(K_1\) and \(K_2\) be convex sets in \(\mathrm {R}^n\) satisfying the condition \(\mathrm {cl\,}K_1 \cap \mathrm {cl\,}K_2 = \emptyset \). A hyperplane \(H \subset \mathrm {R}^n\) strictly separates \(\mathrm {cl\,}K_1\) and \(\mathrm {cl\,}K_2\) if and only if H is contained in the set

and \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \).

Proof

Let a hyperplane H strictly separate \(\mathrm {cl\,}K_1\) and \(\mathrm {cl\,}K_2\). By Theorem 3, H strictly separates \(P(\mathrm {cl\,}K_1, \mathrm {cl\,}K_2)\) and \(P(\mathrm {cl\,}K_2, \mathrm {cl\,}K_1)\). Hence \(H \subset F_2 (K_1, K_2)\). Since H properly separates \(K_1\) and \(K_2\), Theorem 7 shows that \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \).

Conversely, suppose that \(H \subset F_2 (K_1, K_2)\) and \(H \cap \mathrm {aff\,}(K_1 \cup K_2) \ne \emptyset \). Then H is disjoint from both \(P(\mathrm {cl\,}K_1, \mathrm {cl\,}K_2)\) and \(P(\mathrm {cl\,}K_2, \mathrm {cl\,}K_1)\), and Theorem 1 shows that H is disjoint from both \(\mathrm {cl\,}K_1\) and \(\mathrm {cl\,}K_2\). By Theorem 7, H properly separates \(K_1\) and \(K_2\). Hence H strictly separates \(\mathrm {cl\,}K_1\) and \(\mathrm {cl\,}K_2\). \(\square \)

Remark 5

Corollary 1 shows that \(E_2 (K_1, K_2)\) is contained in the set \(F_2 (K_1, K_2)\). This inclusion may be proper, as illustrated by the convex sets \(K_1\) and \(K_2\) from Remark 1. Indeed, for these sets, \(E_2 (K_1, K_2) = \{ (x, y) : 0< y < 1 \}\), while

5 Strongly Separating Hyperplanes

Theorem 8

If \(K_1\) and \(K_2\) are strongly disjoint convex sets in \(\mathrm {R}^n\), then

Proof

For any scalar \(\rho > 0\), both sets \(U_\rho (K_i)\), \(i = 1, 2\), are open. So, part 1) of Theorem 2 gives

Hence the set \(P_0(U_\rho (K_1), U_\rho (K_2))\) also is open. Therefore, the obvious inclusion

and the same part 1) give

Thus

To prove the opposite inclusion, let

Clearly, Q is a convex set as the intersection of convex sets \(P_0(U_\rho (K_1), U_\rho (K_2))\), \(\rho > 0\). Assume for a moment the existence of a point \(u \in Q \setminus \mathrm {cl\,}P(K_1, K_2)\) and denote by z the metric projection of u on \(\mathrm {cl\,}P(K_1, K_2)\). It is known that z is uniquely determined by u due to the convexity of \(\mathrm {cl\,}P(K_1, K_2)\).

Since \(K_1\) and \(K_2\) are strongly disjoint, Lemma 2 implies the existence of a hyperplane \(H \subset \mathrm {R}^n\) strongly separating \(K_1\) and \(K_2\). Denote by \(W_1\) and \(W_2\) the opposite open halfspaces determined by H containing \(K_1\) and \(K_2\), respectively. Choose a pair of hyperplanes \(H_1 \subset W_1\) and \(H_2 \subset W_2\) which are parallel to H and each of them strongly separates \(K_1\) and \(K_2\). Denote by \(W_i'\) the open halfspace determined by \(H_i\) and contained in \(W_i\), \(i = 1, 2\). Choose a scalar \(\rho > 0\) such that \(U_\rho (K_i)\) is contained in \(W_i'\) and is strongly disjoint from \(H_i\), \(i = 1, 2\).

Expressing H in the form (1), we may suppose that

with \(\gamma _1< \gamma < \gamma _2\) and

By the above argument, each of the hyperplanes \(H_1\) and \(H_2\) strongly separates \(U_\rho (K_1)\) and \(U_\rho (K_2)\). Consequently, Theorem 3 implies that each of \(H_1\) and \(H_2\) strongly separates \(P(U_\rho (K_1), U_\rho (K_2))\) and \(P(U_\rho (K_2), U_\rho (K_1))\). Hence

Since the open segment (u, z) lies in Q and is disjoint from \(\mathrm {cl\,}P(K_1, K_2)\), we can choose a point \(v \in (u, z) \subset Q\) so close to z that \(v \in W_1'\). Denote by \(v_1\) and \(v_2\) the orthogonal projections of v on \(H_1\) and \(H_2\), respectively.

For any integer \(r \ge 1\), we can write

Choose an integer \(r_0\) such that \(1/r_0 < \rho \). Then \(x_r \in W_1'\) and \(y_r \in W_2'\) for all \(r \ge r_0\). In particular, \(x_r \ne y_r\) and the line segment \([x_r, y_r]\) meets \(H_1\) and \(H_2\) at distinct points \(x_r'\) and \(y_r'\), respectively, \(r \ge r_0\). Since the hyperplanes \(H_1\) and \(H_2\) are parallel, one has

The equalities

give

Let G be the hyperplane through z orthogonal to the segment [v, z]. It is well known that G supports \(\mathrm {cl\,}P(K_1, K_2)\) at z and separates v from \(\mathrm {cl\,}P(K_1, K_2)\). By Theorem 4, G separates \(K_1\) and \(K_2\). Denote by \(T_1\) and \(T_2\) the opposite closed halfspaces determined by G and containing \(K_1\) and \(K_2\), respectively. Expressing G as

we may suppose that \(T_1\) and \(T_2\) are given by

Then \(v \! \cdot \!c > \eta \) due to the inclusion \(v \in \mathrm {int\,}T_2\). Furthermore, dividing both c and \(\eta \) by \(\Vert c \Vert \), we may assume that c is a unit vector.

Since \(x_r \in U_{1/r} (K_1)\), there is a point \(b_r \in K_1\) such that \(\Vert x_r - b_r \Vert < 1/r\). Consequently,

Because \(b_r \! \cdot \!c \le \eta \) due to the inclusion \(b_r \in K_1 \subset T_1\), we obtain that

Similarly, \(y_r \! \cdot \!c > \eta - 1/r\). Hence

Since \(v \! \cdot \!c > \eta \), one has

Because (10) and (11) contradict each other, our assumption on the existence of a point \(u \in Q \setminus \mathrm {cl\,}P(K_1, K_2)\) is false, as desired. \(\square \)

The following lemma will be used in the proof of Theorem 9.

Lemma 3

([13]) Let \(M_1\) and \(M_2\) be disjoint convex sets in \(\mathrm {R}^n\). If a point z belongs to the set \(\mathrm {R}^n \setminus (P_0(M_1, M_2) \cup P_0(M_2, M_1))\), then the generated cones \(C_z (M_1)\) and \(C_z (M_2)\) satisfy the conditions

Theorem 9

If convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\) are strongly disjoint, then

Proof

First, we assert that

Indeed, H be a hyperplane strongly separating \(K_1\) and \(K_2\). By Theorem 3, H strongly separates \(P(K_1, K_2)\) and \(P(K_2, K_1)\). So, there is a scalar \(\rho > 0\) such that H separates \(U_\rho (P(K_1, K_2))\) and \(U_\rho (P(K_2, K_1))\). Since the set \(U_\rho (P(K_1, K_2))\) is open, one has \(H \cap U_\rho (P(K_1, K_2)) = \emptyset \). Finally, the inclusion \(\mathrm {cl\,}P(K_1, K_2) \subset U_\rho (P(K_1, K_2))\) shows that \(H \cap \, \mathrm {cl\,}P(K_1, K_2) = \emptyset \). Consequently, (12) holds.

In a similar way, \(E_3 (K_1, K_2) \cap \mathrm {cl\,}P(K_2, K_1) = \emptyset \). Therefore,

For the opposite inclusion, choose any point

Since \(K_1\) and \(K_2\) are strongly disjoint, there is a scalar \(\rho > 0\) such that the sets \(U_\rho (K_1)\) and \(U_\rho (K_2)\) are strongly disjoint. By Theorem 8, the scalar \(\rho \) can be chosen so small that

Lemma 3 implies that

Hence Lemma 2 shows the existence of a hyperplane \(H \subset \mathrm {R}^n\) that properly separates the cones \(C_z (U_\rho (K_1))\) and \(C_z(U_\rho (K_2))\). Consequently, H separates the open sets \(U_\rho (K_1)\) and \(U_\rho (K_2)\). In other words, H strongly separates \(K_1\) and \(K_2\). So, \(H \in {{\mathcal {H}}}_3 (K_1, K_2)\) and \(z \in H \subset E_3 (K_1, K_2)\), as desired. \(\square \)

Remark 6

An assertion similar to Theorem 7 and Corollary 1 does not hold for the case of strong separation. For instance, let the closed convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^2\) be given by

Then \(P(K_1, K_2) = K_1\) and \(P(K_2, K_1) = K_2\). Furthermore, the coordinate axes of \(\mathrm {R}^2\) are subsets of \(E_3 (K_1, K_2)\) and meet \(\mathrm {aff\,}(K_1 \cup K_2) = \mathrm {R}^2\), while each of these axes separates \(K_1\) and \(K_2\) strictly, but not strongly.

6 Further Properties of Penumbra

Theorem 10

If \(K_1\) and \(K_2\) are convex sets in \(\mathrm {R}^n\), then

Furthermore, \(\mathrm {dim\,}C_o (K_1 - K_2) = \mathrm {dim\,}(\mathrm {aff\,}(K_1 \cup K_2))\).

Proof

By the definition of \(P(K_1, K_2)\) and \(C_o (K_1 - K_2)\),

For the opposite inclusion, choose any point \(u \in K_1 + C_o (K_1 - K_2)\). Then \(u = x_1 + \lambda (y_1 - y_2)\) for suitable points \(x_1, y_1 \in K_1\), \(y_2 \in K_2\), and a scalar \(\lambda \ge 0\). Put

Clearly, \(x_1' \in [x_1, y_1] \subset K_1\) due to the convexity of \(K_1\). Furthermore,

For the second assertion, we observe first that

which implies the equality \(\mathrm {span\,}C_o (K_1 - K_2) = \mathrm {span\,}(K_1 - K_2)\). Consequently,

where the second equality is proved in [13]. \(\square \)

We recall that a convex set \(K \subset \mathrm {R}^n\) is called M-predecomposable if it can be expressed as a sum \(K = B + C\), where B is a compact convex set B and C is a convex cone. If, additionally, the cone C is closed, then K is called M-decomposable (see [2, 5, 11, 12] for priority publications and further results).

Theorem 11

For convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), the following assertions hold.

-

1)

If \(K_1\) is compact, then \(P(K_1, K_2)\) is an M-predecomposable set.

-

2)

If both \(K_1\) and \(K_2\) are compact and \(K_1 \cap K_2 = \emptyset \), then \(P(K_1, K_2)\) is an M-decomposable set.

Proof

1) If \(K_1\) is compact, then, according to Theorem 10, the set \(P(K_1, K_2)\) is M-decomposable as the sum of \(K_1\) and the convex cone \(C_o(K_1 - K_2)\).

2) If both \(K_1\) and \(K_2\) are compact and \(K_1 \cap K_2 = \emptyset \), then \(o \notin K_1 - K_2\) and the convex set \(K_1 - K_2\) is compact. Consequently, the generated cone \(C_o(K_1 - K_2)\) is closed (see [10], Theorem 5.45). By Theorem 10, the set \(P(K_1, K_2)\) is M-decomposable. \(\square \)

Problem 1

Characterize those M-decomposable (M-predecomposable) sets in \(\mathrm {R}^n\) which are penumbras of suitable convex sets. In particular, which M-decomposable sets \(K_1\) and \(K_2\) satisfy the conditions \(K_1 = P(K_1, K_2)\) and \(K_2 = P(K_2, K_1)\)?

The concept of penumbra is routinely extendable to the case of arbitrary nonempty sets. In this regard, the following assertion holds.

Theorem 12

If X and Y are nonempty sets in \(\mathrm {R}^n\), then

Proof

The obvious inclusion \(P(X, Y) \subset P(\mathrm {conv\,}X, \mathrm {conv\,}Y)\) and the convexity of \(P(\mathrm {conv\,}X, \mathrm {conv\,}Y)\) (see Theorem 1) give

For the opposite inclusion, choose any point \(u \in P(\mathrm {conv\,}X, \mathrm {conv\,}Y)\). Then \(u = \mu x + (1 - \mu ) y\), where \(\mu \ge 1\), \(x \in \mathrm {conv\,}X\), and \(y \in \mathrm {conv\,}Y\). We can express x and y as convex combinations of suitable points from X and Y, respectively:

Since

the equalities

show that u is a convex combination of points from \(\mu X + (1 - \mu ) Y\). By the definition of P(X, Y), the set \(\mu X + (1 - \mu ) Y\) is contained in P(X, Y) for any choice of \(\mu \ge 1\). Hence

\(\square \)

We recall that a polyhedron is the intersection of finitely many closed halfspaces, and a polytope is a bounded polyhedron.

Theorem 13

For convex sets \(K_1\) and \(K_2\) in \(\mathrm {R}^n\), the following assertions hold.

-

1)

If both \(K_1\) and \(K_2\) in \(\mathrm {R}^n\) are polyhedra, then \(\mathrm {cl\,}P(K_1, K_2)\) is a polyhedron.

-

2)

If both \(K_1\) and \(K_2\) are polytopes, then \(P(K_1, K_2)\) is a polyhedron.

Proof

1) If both \(K_1\) and \(K_2\) are polyhedra, then (see, e. g., [10, Theorem 13.16]) they can be expressed as \(K_i = \mathrm {conv\,}(X_i \cup Y_i)\), where \(X_i = \{ x_{i1}, \dots , x_{ip_i} \}\) is a finite set of points and \(Y_i = \{ h_{i1}, \dots , h_{iq_i} \}\) is a finite (possibly, empty) family of closed halflines, \(i = 1, 2\). By Theorem 12,

Obviously, \(P((X_1 \cup Y_1), (X_2 \cup Y_2))\) is the union of finitely many sets of the form

where \(x_{1r} \in X_1\), \(x_{2s} \in X_2\), \(h_{1r} \in Y_1\), and \(h_{2s} \in Y_2\).

Elementary geometric arguments show that the sets from (15) can be described as follows (see illustration of cases c), d), and e) in Example 2 below).

-

a)

\(P(x_{1r}, x_{2s})\) is either a singleton or a closed halfline.

-

b)

\(P(x_{1r}, h_{2s})\) is either a closed halfline, a line, or the convex hull of the union of two closed halflines with common endpoint.

-

c)

\(P(h_{1r}, x_{2s})\) is either a closed halfline, a line, or the (nonclosed) convex hull of the union of two closed halflines with distinct endpoints.

-

d)

If the halflines \(h_{1r}\) and \(h_{2s}\) are contained in a 2-dimensional plane, say L, then \(P(h_{1r}, h_{2s})\) is either a closed halfline, a line, the convex hull of the union of two closed halflines, or the whole plane L.

-

e)

If the halflines \(h_{1r}\) and \(h_{2s}\) are not contained in a 2-dimensional plane, then \(P(h_{1r}, h_{2s})\) is a 3-dimensional convex set, which is the convex hull of the union of three closed halflines.

It it easy to show (see, e. g., [10, Theorem 4.2]) that, for any family \(\{ Z_\alpha \}\) of sets in \(\mathrm {R}^n\), one has

This property of convex hulls and the above descriptions a)–e) of particular penumbras imply that \(\mathrm {conv\,}P((X_1 \cup Y_1), (X_2 \cup Y_2))\) is the convex hull of the union of finitely many points and closed halflines. Consequently (see, e. g., [10, Theorem 13.18]), the closure of this set is a polyhedron. Equivalently, due to (14), \(\mathrm {cl\,}P(K_1, K_2)\), is a polyhedron.

2) Suppose that both \(K_1\) and \(K_2\) are polytopes. Then the set \(K_1 - K_2\) is a polytope, and \(C_o(K_1 - K_2)\) is a polyhedral cone with apex o (see, e. g., [10, Theorem 5.46]). Theorem 10 shows that \(P(K_1, K_2)\) is a polyhedron as the sum of the polytope \(K_1\) and the polyhedral cone \(C_o(K_1 - K_2)\) (see, e. g., [10, Theorem13.18]). \(\square \)

The following example illustrates items c)–e) in the proof of Theorem 13.

Example 2

c) In \(\mathrm {R}^2\), if \(h_{1r} = \{ (x, 0) : x \ge 0 \}\) and \(x_{2s} = (0, 1)\), then the nonclosed set

is the convex hull of the union of closed halflines

d) In \(\mathrm {R}^2\), if \(h_{1r} = \{ (x, 0) : x \ge 0 \}\) and \(h_{2s} = \{ (x, 1) : x \le 1 \}\), then the set \(P(h_{1r}, h_{2s})\) is \(\mathrm {R}^2\).

e) In \(\mathrm {R}^3\), if \(h_{1r} = \{ (x, 0,0) : x \ge 0 \}\) and \(h_{2s} = \{ (0, y, 1) : y \ge 0 \}\), then the nonclosed set

is the convex hull of the union of closed halflines

Problem 2

Characterize those polyhedra in \(\mathrm {R}^n\) which are penumbras of suitable polyhedra. In particular, which polyhedra \(K_1\) and \(K_2\) satisfy the conditions \(K_1 = P(K_1, K_2)\) and \(K_2 = P(K_2, K_1)\)?

References

Gabidullina, Z.R.: A theorem on strict separability of convex polyhedra and its applications in optimization. J. Optim. Theory Appl. 148, 550–570 (2011)

Goberna, M.A., González, E., Martínez-Legaz, J.E., Todorov, M.I.: Motzkin decomposition of closed convex sets. J. Math. Anal. Appl. 364, 209–221 (2010)

Golikov, A.I., Evtushenko, YuG, Ketabchi, S.: On families of hyperplanes that separate polyhedra. Comput. Math. Math. Phys. 45, 227–242 (2005)

Grygarová, L.: On a calculation of an arbitrary separating hyperplane of convex polyhedral sets. Optimization 43, 93–112 (1998)

Iusem, A.N., Martínez-Legaz, J.E., Todorov, M.I.: Motzkin predecomposable sets. J. Global Optim. 60, 635–647 (2014)

Lawrence, J., Soltan, V.: On unions and intersections of nested families of cones. Beitr. Algebra Geom. 57, 655–665 (2016)

Minkowski, H.: Gesammelte Abhandlungen. Bd 2. Teubner, Leipzig (1911)

Nievergelt, Y.: Parameter spaces of separating hyperplanes. Optimization 64, 1409–1438 (2015)

Rockafellar, R.T.: Convex Analysis. Princeton Universty Press, Princeton, NJ (1970)

Soltan, V.: Lectures on Convex Sets, 2nd edn. World Scientific, Hackensack, NJ (2020)

Soltan, V.: On M-decomposable sets. J. Math. Anal. Appl. 485, 123816 (2020)

Soltan, V.: On M-predecomposable sets. Beiträge Algebra Geom. (2020). https://doi.org/10.1007/s13366-020-00515-6

Soltan, V.: Separating hyperplanes of convex sets. J. Convex Anal. 28(4), 18 (2021)

Acknowledgements

The author is thankful to the referee for the careful reading and considered suggestions leading to a better presented paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Soltan, V. Penumbras and Separation of Convex Sets. Results Math 76, 25 (2021). https://doi.org/10.1007/s00025-020-01329-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00025-020-01329-7