Abstract

Anthropogenic seismicity (AS) is the undesired dynamic rockmass response to technological processes. AS environments are shallow; hence, their heterogeneities have an important impact on AS. Moreover, AS is controlled by complex and changeable technological factors. This complicated origin of AS explains why models used for tectonic seismicity may not be suitable for AS. Here we study four cases of AS, testing statistically whether their magnitudes follow the Gutenberg–Richter relation. The cases comprise data from the Mponeng gold mine in South Africa, data recorded during the stimulation of the Basel 1 geothermal well in Switzerland, data from the Açu water reservoir region in Brazil, and data from the Song Tranh 2 hydropower plant region in Vietnam. The cases differ in inducing technology, in the length of the recording period, and in magnitude range. In all four cases, the observed frequency–magnitude distributions differ statistically significantly from the Gutenberg–Richter relation. Although the Gutenberg–Richter b value changed with time in all cases, this factor turns out not to be responsible for the deviations found from the exponential distribution model derived from the Gutenberg–Richter relation. Even though the deviations from the Gutenberg–Richter law are not large, they substantially reduce the accuracy of seismic hazard parameter estimates. It is demonstrated that non-parametric kernel estimators of the magnitude distribution functions significantly improve the accuracy of hazard estimates; these estimators are therefore recommended for probabilistic analyses of seismic hazard caused by AS.



1 Introduction

The frequency–magnitude distribution (FMD) describes the proportions of events in specific magnitude intervals within a population of earthquakes. The model adopted for this distribution fundamentally affects the estimates of probabilistic characteristics of the seismic process. The most popular models of the FMD are derived from the classic Gutenberg–Richter (G–R) relation (Gutenberg and Richter 1944), which can be written in the following exponential form:

\[ \log_{10} N = a - bM, \quad \text{i.e.} \quad N = 10^{a - bM}, \qquad (1) \]

where N is the number of events of magnitude ≥ M, and a and b are constants whose values are specific to a seismogenic process. The a parameter characterizes the overall level of seismicity in the region under consideration and equals the common logarithm of the number of events with M ≥ 0, \(a = \log_{10} N(M \ge 0)\). The b parameter characterizes the ratio of the number of stronger earthquakes to the number of weaker ones. Its values usually range from 0.5 to 1.5, depending on the stress distribution and regional tectonics (e.g. Mogi 1967; Tsapanos 1990), although some papers argue for a universal value of 1.0 (e.g. Frohlich and Davis 1993; Kagan 1997, 1999; Wesnousky 1999; Godano and Pingue 2000; Bird and Kagan 2004; Wech et al. 2010). The lower the b value, the higher the probability of occurrence of stronger events.
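To make the roles of a and b concrete, here is a minimal Python sketch of the G–R relation \(\log_{10} N = a - bM\). The values a = 4 and b = 1 are arbitrary illustrations, not estimates from any catalogue in this study, and the function names are hypothetical.

```python
def gr_count(a: float, b: float, m: float) -> float:
    """Expected number of events with magnitude >= m from log10 N = a - b*m."""
    return 10.0 ** (a - b * m)


def share_above(b: float, m_low: float, m_high: float) -> float:
    """Fraction of events >= m_low that are also >= m_high; independent of a."""
    return 10.0 ** (-b * (m_high - m_low))


# Hypothetical parameters, for illustration only.
a, b = 4.0, 1.0
counts = {m: gr_count(a, b, m) for m in (0.0, 1.0, 2.0, 3.0)}
print(counts)

# Each magnitude unit reduces the count by a factor of 10**b.
ratio = gr_count(a, b, 3.0) / gr_count(a, b, 2.0)
print(f"N(>=3)/N(>=2) = {ratio:.3f}")

# A lower b leaves a larger share of strong events (here M >= 3 among M >= 0).
print(share_above(0.75, 0.0, 3.0), share_above(1.0, 0.0, 3.0))
```

Because the ratio of counts over a fixed magnitude step is \(10^{-b}\), a population with b = 0.75 keeps roughly 5.6 times as large a share of M ≥ 3 events (relative to M ≥ 0) as one with b = 1.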

The linear relation between the logarithm of the number of events and magnitude holds only over a certain range of magnitudes. The deficit of observed earthquakes below a certain magnitude, relative to the number expected from the G–R relation, is explained by incomplete recording, since no such deficit has been observed down to picoseismicity (Kwiatek et al. 2010, 2011). It is assumed that the G–R relation is valid above the magnitude threshold of catalogue completeness, and the magnitude probability distribution is modelled as a left-truncated exponential distribution, or as a doubly truncated exponential distribution when an upper physical limit of the magnitude range is assumed (e.g. Cosentino et al. 1977). Models of the FMD derived from the G–R relation are widely used in probabilistic seismic hazard analysis.

However, some observations indicate deviations from the linear G–R relation for magnitudes within the complete part of a catalogue (e.g. Wesnousky et al. 1983; Schwartz and Coppersmith 1984; Leonard et al. 2001). Using a version of the smoothed bootstrap test for multimodality (Silverman 1986) to study the magnitude distributions of earthquakes from seismogenic regions of Greece, Lasocki and Papadimitriou (2006) found, with high confidence, complex multimodal structures in these distributions. On the other hand, some papers maintain that no such deviations exist (e.g. Frohlich and Davis 1993; Kagan 1999; Schorlemmer et al. 2004, 2005; Page et al. 2011). The shape of the magnitude distribution of seismic sources is thus still under investigation, and no definite conclusion has been reached so far.

Seismic activity induced by human technological activity is the undesired dynamic rockmass response to technological processes. Anthropogenic seismicity (AS) results from a combination of human influence on rocks and the rocks' potential to respond. Variations of the inducing technological factors make the earthquake generation process time dependent, with virtually unknown dynamics. The variety and diversity of technological factors inducing or triggering anthropogenic earthquakes may result in significant deviations of the observed FMD from the models derived from the G–R relation. Such deviations have long been noticed in mining-induced seismicity (e.g. Kijko et al. 1987; Lasocki 2001; Maghsoudi et al. 2013) and explained by a bi-component seismicity generating process in the mining environment (e.g. Johnston and Einstein 1990; Gibowicz 1990; McGarr 2000; Maghsoudi et al. 2014). Recently, a breakdown of the G–R relation has also been shown for AS associated with hydraulic fracturing (Eaton et al. 2014). It is probable that the complicated origin of AS, controlled by complex and changeable technological factors, makes inconsistency between the observed FMD and the G–R relation a dominant feature of AS, regardless of the technology inducing the earthquakes. The present work investigates the shape of the FMD and its conformity to the G–R relation in four AS cases associated with different technological activities: underground mining, reservoir impoundment and well stimulation for geothermal energy production. The cases differ in the durations of their recording periods, from 10 years to less than 2 days, and in their magnitude ranges, from [−4.3; −1.4] to [1.0; 4.7].

Since the G–R relation is equivalent to an exponential distribution of earthquake magnitudes (Utsu 1999), two goodness-of-fit tests are applied to check the conformity of the observed magnitude distributions with the exponential distribution: the Kolmogorov–Smirnov one-sample test and Pearson's chi-square test.

2 Method

The empirical G–R relation corresponds to the left-truncated exponential distribution with the probability density function (PDF)

\[ f(M) = \beta \exp\left[-\beta\left(M - M_c\right)\right] \qquad (2) \]

and the cumulative distribution function (CDF)

\[ F(M) = 1 - \exp\left[-\beta\left(M - M_c\right)\right] \qquad (3) \]

for \(M \ge M_c\), and 0 otherwise, where \(\beta = b \ln 10\), b is the parameter of the G–R relation, and \(M_c\) is the magnitude threshold of observation completeness, i.e. the magnitude above which the FMD follows the exponential decay of the G–R relation. In the present study, we test the null hypothesis \(H_0\) that the magnitude distribution is exponential.
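The sketch below writes the PDF (2), the CDF (3) and the inverse CDF in Python, with \(\beta = b \ln 10\). The function names are ours, and the inverse is included only because it is convenient for generating synthetic magnitudes; this is an illustration, not the authors' code.

```python
import math


def beta_from_b(b: float) -> float:
    """beta = b * ln(10), linking the G-R b value to the exponential rate."""
    return b * math.log(10.0)


def gr_pdf(m: float, b: float, mc: float) -> float:
    """PDF (2): left-truncated exponential, zero below the completeness threshold mc."""
    if m < mc:
        return 0.0
    beta = beta_from_b(b)
    return beta * math.exp(-beta * (m - mc))


def gr_cdf(m: float, b: float, mc: float) -> float:
    """CDF (3): 1 - exp(-beta (m - mc)) for m >= mc, 0 otherwise."""
    if m < mc:
        return 0.0
    return 1.0 - math.exp(-beta_from_b(b) * (m - mc))


def gr_cdf_inv(p: float, b: float, mc: float) -> float:
    """Inverse of the CDF for 0 <= p < 1."""
    return mc - math.log1p(-p) / beta_from_b(b)


# With b = 1, one magnitude unit above mc leaves 10% of events: 1 - F(mc + 1) = 10**(-b).
print(1.0 - gr_cdf(2.2, b=1.0, mc=1.2))
```

The last line links (3) back to the G–R relation: the survival function \(1 - F(M)\) equals \(10^{-b(M - M_c)}\).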

The two most widely used statistical goodness-of-fit tests are applied here to test the null hypothesis: the Kolmogorov–Smirnov (K–S) one-sample test and Pearson's chi-square (\(\chi^2\)) test. Both belong to a group of non-parametric tests for verifying the hypothesis that a sample agrees with a specified distribution. To verify \(H_0\), the theoretical distribution function of a random variable is compared with the empirical distribution of this variable determined from a random sample. In both tests we estimate p values and compare them with the adopted significance level α = 0.05. A small p value suggests that the null hypothesis may be false: when p < α, the null hypothesis is rejected, with probability α of rejecting it wrongly (type I error). When p ≥ α, there are no grounds for rejecting the null hypothesis at the significance level α. Goodness-of-fit tests do not estimate the probability of a type II error, i.e. the probability of failing to reject a false null hypothesis. Therefore, the lack of grounds for rejecting the null hypothesis does not imply that the hypothesis is true, although goodness-of-fit test results are often incorrectly interpreted in that way.

The K–S one-sample test is based on the maximum difference between the empirical CDF, \(F_E(M)\), and the hypothetical CDF, \(F_H(M)\). When \(M_i\), i = 1, …, N, is the sample sorted in ascending order (with \(F_E(M_0) = 0\)), the K–S test statistic is \(D_m = \max_{1 \le i \le N} \left[ F_H(M_i) - F_E(M_{i-1}),\; F_E(M_i) - F_H(M_i) \right]\). The distribution \(F_H(M)\) must be fully specified; if its parameters are estimated from the data, the standard critical values of the \(D_m\) statistic are no longer valid. Since we estimate the shape parameter β of (2) and (3) from the sample, we use a modified test statistic, presented together with tables of critical values in Pearson and Hartley (1972). For the exponential distribution, the modified test statistic is \(T(D_m) = \frac{1}{\sqrt{N}}\left(D_m - \frac{0.2}{N}\right)\left(N + 0.26\sqrt{N} + 0.5\right)\).
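A minimal sketch of the K–S statistic \(D_m\) and its modified form \(T(D_m)\) for the exponential case follows. Assumptions: the shape parameter is estimated by maximum likelihood as \(\hat\beta = 1/(\bar M - M_c)\) for continuous magnitudes with known \(M_c\); the sample values are made up; and no critical value is hard-coded, since it has to be taken from the tables cited above (Pearson and Hartley 1972).

```python
import math
from typing import Callable, Sequence


def ks_dm(sample: Sequence[float], cdf: Callable[[float], float]) -> float:
    """One-sample K-S statistic D_m against a hypothesized CDF F_H.

    After sorting, F_E(M_i) = i/N and F_E(M_{i-1}) = (i-1)/N, with F_E(M_0) = 0.
    """
    x = sorted(sample)
    n = len(x)
    d = 0.0
    for i, xi in enumerate(x, start=1):
        fh = cdf(xi)
        d = max(d, fh - (i - 1) / n, i / n - fh)
    return d


def ks_modified(dm: float, n: int) -> float:
    """Modified statistic T(D_m) for the exponential case with estimated shape parameter."""
    return (dm - 0.2 / n) * (n + 0.26 * math.sqrt(n) + 0.5) / math.sqrt(n)


# Made-up continuous magnitudes above a known Mc.
mc = 1.0
sample = [1.05, 1.12, 1.2, 1.31, 1.47, 1.6, 1.9, 2.4]
beta_hat = 1.0 / (sum(sample) / len(sample) - mc)   # ML estimate of beta

dm = ks_dm(sample, lambda m: 1.0 - math.exp(-beta_hat * (m - mc)))
t = ks_modified(dm, len(sample))
print(round(dm, 4), round(t, 4))
# H0 would be rejected at level alpha if t exceeds the tabulated critical value.
```

Note that \(\frac{1}{\sqrt{N}}(N + 0.26\sqrt{N} + 0.5) = \sqrt{N} + 0.26 + 0.5/\sqrt{N}\), which is how the same modification is often written.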

The K–S test is applicable only to continuous random variables, for which no value repeats in the sample. Although magnitude is a continuous variable, it is reported with a finite number of digits, most often one digit after the decimal point. As a result, magnitude datasets contain many repetitions of the same value. Hence, to apply the K–S test, we first randomize each magnitude within its round-off interval. Randomization is carried out by transforming the magnitude according to the formula (Lasocki and Papadimitriou 2006):

\[ \hat{M} = F^{-1}\left\{ F\left(M - \frac{\delta M}{2}\right) + u\left[ F\left(M + \frac{\delta M}{2}\right) - F\left(M - \frac{\delta M}{2}\right) \right] \right\} \qquad (4) \]

where M is the magnitude value taken from the catalogue, δM is the length of the magnitude round-off interval, u is a random value drawn from the uniform distribution on [0, 1], F(·) is the CDF (3), \(F^{-1}(\cdot)\) is its inverse, and \(\hat{M}\) is the randomized magnitude. Because the estimated p value depends somewhat on the randomization, the randomization is performed \(10^5\) times; the average of all p values, \(\hat{p}\), is accepted as the final p value, and the standard deviation of the p value estimate, σ, is also determined.
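The randomization step, \(\hat M = F^{-1}\{F(M - \delta M/2) + u[F(M + \delta M/2) - F(M - \delta M/2)]\}\), can be sketched as follows for the exponential CDF (3). Two assumptions are ours: β is treated as known, and the lower limit of the CDF is passed in explicitly (set here to \(M_c - \delta M/2\), so that magnitudes rounded to \(M_c\) spread over their whole round-off interval). The catalogue is made up and `randomize_magnitudes` is a hypothetical name.

```python
import math
import random
from typing import List, Sequence


def exp_cdf(m: float, beta: float, m_min: float) -> float:
    """Exponential CDF with lower limit m_min (zero at and below it)."""
    return 1.0 - math.exp(-beta * (m - m_min)) if m > m_min else 0.0


def exp_cdf_inv(p: float, beta: float, m_min: float) -> float:
    """Inverse of exp_cdf for 0 <= p < 1."""
    return m_min - math.log1p(-p) / beta


def randomize_magnitudes(mags: Sequence[float], dm: float, beta: float,
                         m_min: float, rng: random.Random) -> List[float]:
    """Spread each rounded magnitude over [M - dm/2, M + dm/2] according to the CDF."""
    out = []
    for m in mags:
        lo = exp_cdf(m - dm / 2.0, beta, m_min)
        hi = exp_cdf(m + dm / 2.0, beta, m_min)
        u = rng.random()   # uniform on [0, 1)
        out.append(exp_cdf_inv(lo + u * (hi - lo), beta, m_min))
    return out


# Made-up catalogue rounded to 0.1, with Mc = 1.2 and b = 1.
rng = random.Random(2024)
mags = [1.2, 1.2, 1.3, 1.5, 1.2, 1.8, 1.4, 2.1]
beta, dm, mc = math.log(10.0), 0.1, 1.2
randomized = randomize_magnitudes(mags, dm, beta, mc - dm / 2.0, rng)
print([round(v, 3) for v in randomized])
```

In the procedure described above, a p value would be computed for each randomized copy of the catalogue, and the \(10^5\) p values averaged to give \(\hat p\) and its standard deviation σ.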

The \(\chi^2\) test compares the observed frequencies, \(N_i\), with the frequencies expected from the hypothesized distribution, \(N_H(i)\), in k consecutive, non-overlapping intervals that cover the whole range of sample values. The test statistic, \(\chi^2 = \sum_{i=1}^{k} \frac{\left[N_i - N_H(i)\right]^2}{N_H(i)}\), approximately follows the chi-square distribution with k − c − 1 degrees of freedom, where c is the number of parameters estimated from the data. This test can be applied to both continuous and discrete random variables, whose values may repeat; hence, magnitude randomization is not carried out for it.
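A sketch of the \(\chi^2\) computation for the exponential model: expected frequencies from the CDF (3), the Pearson statistic, and k − c − 1 degrees of freedom. We assume c = 1 (only β is estimated, \(M_c\) being fixed), an open-ended last interval so that the intervals cover the whole range, and made-up counts.

```python
import math
from typing import List, Sequence


def expected_counts(lows: Sequence[float], n: int, beta: float, mc: float) -> List[float]:
    """Expected frequencies N_H(i) under the exponential CDF (3).

    Interval i is [lows[i], lows[i+1]); the last interval is open-ended, so the
    intervals cover the whole range when lows[0] == mc.
    """
    def cdf(m: float) -> float:
        return 1.0 - math.exp(-beta * (m - mc)) if m >= mc else 0.0

    probs = [cdf(lows[i + 1]) - cdf(lows[i]) for i in range(len(lows) - 1)]
    probs.append(1.0 - cdf(lows[-1]))
    return [n * p for p in probs]


def chi_square(observed: Sequence[int], expected: Sequence[float]) -> float:
    """Pearson statistic: sum of (N_i - N_H(i))**2 / N_H(i) over the k intervals."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def degrees_of_freedom(k: int, c: int) -> int:
    """k - c - 1, with c parameters estimated from the data."""
    return k - c - 1


# Made-up example: N = 100 events, Mc = 1.0, b = 1 (beta = ln 10), 3 intervals.
exp_n = expected_counts([1.0, 1.5, 2.0], 100, math.log(10.0), 1.0)
stat = chi_square([65, 28, 7], exp_n)
dof = degrees_of_freedom(k=3, c=1)   # assumed c = 1: only beta is estimated
print([round(e, 2) for e in exp_n], round(stat, 3), dof)
```

If SciPy is available, the corresponding p value could be obtained as `scipy.stats.chi2.sf(stat, dof)`.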

2.1 Data

Four catalogues of earthquakes related to various anthropogenic seismic processes are used. They represent anthropogenic seismicity accompanying:

- underground exploitation of solid minerals (the Mponeng deep gold mine),
- exploitation of geothermal energy (the Basel 1 well),
- reservoir impoundment (the Song Tranh 2 reservoir),
- changes in the level of filling of a surface water reservoir (the Açu reservoir).

The catalogues differ in the number of earthquakes, from N = 644 (Açu) to N = 4304 (Mponeng); in the recording period, from 1.6 days (Basel 1) to more than 10 years (Açu); in the magnitude range, from [−4.3; −1.4] (Mponeng) to [1.0; 4.7] (Song Tranh 2); and in the Gutenberg–Richter b value, from 0.75 (Song Tranh 2) to 1.90 (Açu).

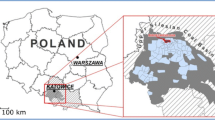

The Mponeng deep gold mine is located in South Africa, with mining depths exceeding 3.5 km. The JAGUARS (JApanese–German Underground Acoustic Emission Research in South Africa) seismic network was installed about 90 m below the exploitation level. The high sensitivity and small sensor spacing of the network allowed events with source sizes down to a few centimetres to be recorded. Following the \(M_w\) 2.2 earthquake of 27 December 2007, more than 25,000 aftershocks were recorded, with magnitudes from less than −5 up to −0.8 (Kwiatek et al. 2011; Kozłowska et al. 2015). The aftershocks were divided into three spatial zones (Kwiatek et al. 2010). Here we analyse the events from the most populated zone, F, which comprises events that occurred along the fault plane. The catalogue is complete from magnitude −4.3. The FMD plot is shown in Fig. 1a.

The 5-km-deep Basel 1 geothermal well in Basel, Switzerland, was a facility of an enhanced geothermal system (EGS) for geothermal energy production (Haering et al. 2008). EGS technology allows heat to be extracted from dry, impermeable rocks at great depths. It requires the injection of large amounts of pressurized water, which create cracks in the rocks. Water injection began on 2 December 2006 and was planned to last 21 days. Several days after the beginning of the stimulation, on 8 December, an \(M_L\) 2.7 event occurred. Its size exceeded the accepted safety level, which led to the closing of the well. The strongest event, of magnitude \(M_L\) = 3.4, occurred about 5 h after the well had been closed. Over the next 2 months, three more events with \(M_L\) > 3 were recorded (Häge et al. 2012). The dataset studied here comprises 1111 events that occurred during the water injection between 6 and 8 December 2006. The events were recorded by two mini-arrays at the surface, at distances of about 2.1 and 4.8 km from the Basel 1 well. The magnitude of completeness of the dataset is \(M_w\) = 1.1. The FMD plot for these data is presented in Fig. 1b.

The Song Tranh 2 dam is located on the Song Tranh River in Quang Nam province, central Vietnam. The natural seismicity of the area is very low: only 13 events were noted in the wider region of central Vietnam between 1775 and 1992, of which only one occurred close to the present dam site. The dam was built as part of a hydropower plant. At the expected head of 175 m, the reservoir's capacity exceeds \(740 \times 10^6\) m³. Filling of the reservoir started in November 2010. From then on, two remote seismic stations reported several weak earthquakes in Quang Nam province. By the beginning of 2011, the seismic activity in this area had increased significantly, and from March 2011 stronger events also began to occur. The maximum water level of 175 m was reached in October 2011 and was maintained until February 2012, when, for technical reasons, it was rapidly lowered to about 140 m. The two strongest earthquakes, of magnitudes 4.6 and 4.7, occurred on 22 October and 15 November 2012, respectively, and caused minor damage to housing in the area. Since 2012, the area has been monitored by a local network of short-period seismometers (Wiszniowski et al. 2015). The completeness threshold is 1.0. The complete part of the Song Tranh 2 catalogue contains 822 events, and its b value is very low, equal to 0.75. The FMD plot for these data is shown in Fig. 1c.

The Açu reservoir, with a capacity of \(2.4 \times 10^9\) m³, is located in the state of Rio Grande do Norte in north-eastern Brazil. It is a shallow reservoir with a head of 34 m. Seismic monitoring of the reservoir started in 1987, 4 years after the construction of the dam and 2 years after the final filling of the reservoir (do Nascimento et al. 2004). The monitoring setup changed over time. Until 1989, events were recorded by a single station. In 1989, three additional stations were installed, followed by four more in 1990–1991 (Ferreira et al. 1995). Between 1994 and 1997, the reservoir was monitored by at least 8 stations in 5 different configurations (do Nascimento et al. 2004; El Hariri et al. 2010). Of the datasets analysed in the present work, the Açu data cover the longest observation period (10 years). During this period, 644 events were recorded above the completeness threshold of 1.2. Event magnitudes were determined from signal durations. The FMD plot for these data is shown in Fig. 1d.

The basic parameters of the analysed datasets are summarized in Table 1. The Gutenberg–Richter b values, both here and in subsequent analyses, were determined by the maximum likelihood method (Aki 1965; Utsu 1965; Bender 1983):

\[ b = \frac{\log_{10} e}{\bar{M} - \left(M_c - \frac{\delta M}{2}\right)} \qquad (5) \]

where \(\bar{M}\) is the mean of the magnitudes greater than or equal to \(M_c\), \(M_c\) is the catalogue completeness level, and δM is the length of the magnitude round-off interval.
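The estimator \(b = \log_{10} e / [\bar M - (M_c - \delta M/2)]\) can be sketched as below, with a check on synthetic, unrounded (δM = 0) magnitudes drawn from an exponential distribution with b = 1. The synthetic data and the small tolerance used when selecting magnitudes ≥ \(M_c\) are our assumptions.

```python
import math
import random
from typing import Sequence


def b_value_mle(mags: Sequence[float], mc: float, dm: float) -> float:
    """Aki-Utsu maximum likelihood b value with the round-off correction dm/2.

    Only magnitudes >= mc are used; the tiny tolerance guards against
    floating-point noise in values stored as, e.g., 1.2.
    """
    sel = [m for m in mags if m >= mc - 1e-9]
    mean = sum(sel) / len(sel)
    return math.log10(math.e) / (mean - (mc - dm / 2.0))


# Check on synthetic continuous magnitudes (dm = 0) drawn with b = 1 above mc = 1.0.
rng = random.Random(7)
beta = 1.0 * math.log(10.0)
synthetic = [1.0 + rng.expovariate(beta) for _ in range(20000)]
print(round(b_value_mle(synthetic, mc=1.0, dm=0.0), 3))
```

For magnitudes rounded to 0.1, dm = 0.1 would be passed instead, which shifts the reference level from \(M_c\) to \(M_c - 0.05\).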

3 Results and Discussion

The Basel 1, Song Tranh 2 and Açu catalogues contain numerous repeated magnitude values; hence, these catalogues are randomized. The Mponeng magnitudes are given with six digits after the decimal point, so randomization is unnecessary.

For the \(\chi^2\) test, the magnitude range of each catalogue is divided into non-overlapping intervals as follows:

- the Mponeng data range is divided into 19 intervals, with frequencies from 896 to 7;
- the Basel 1 data range is divided into 7 intervals, with frequencies from 409 to 12;
- the Song Tranh 2 data range is divided into 19 intervals, with frequencies from 122 to 6;
- the Açu data range is divided into 10 intervals, with frequencies from 201 to 8.

The results of the K–S and \(\chi^2\) tests for all four catalogues are shown in Table 2. In all four cases, the modified K–S test statistic exceeds the critical value for α = 0.05. Likewise, all p values of the \(\chi^2\) test, ranging from \(5.56 \times 10^{-14}\) to 0.03, are less than α. The null hypothesis is therefore rejected at the significance level α = 0.05: the size distribution of seismic sources in these earthquake populations does not appear to be exponential.

Figure 2 compares graphically the empirical CDF, \(F_E(M)\), with the exponential CDF fitted to the observed magnitudes, \(F_H(M)\). For the randomized catalogues, the values of the distribution functions are averages over all individual randomizations.

For all four catalogues, the largest difference between the theoretical and observed FMDs, D(M), is typically found near the lower limit of the magnitude range: for the Mponeng data near M = −4.2, for the Basel 1 and Song Tranh 2 data near M = 1.3, and for the Açu data near M = 1.27. This is as expected, since empirical CDFs show the largest random fluctuations at the beginning of the range of observed values. More interestingly, further considerable differences occur at about the middle of the magnitude ranges: for the Mponeng data at approximately −3.5, for Basel 1 at about 1.5 and 1.9, for Song Tranh 2 at about 2.4, and for Açu at approximately 1.55.

The test results indicate that in all considered cases the actual magnitude distributions of the earthquake populations do not follow the G–R relation. One possible reason is time changes of the b value: a whole catalogue that is a mixture of subsamples drawn from exponential distributions with different shape parameters, each relevant to a different part of the observation period, would not fit a single exponential distribution.

Time variability of the Gutenberg–Richter b value in anthropogenic seismic processes has long been known (e.g. Gibowicz 1979; Volant et al. 1992; Holub 1995; Feustel 1997; Grob and van der Baan 2011a, b; Mallika et al. 2012; Davies et al. 2013). This property has been used in algorithms for evaluating time-dependent seismic hazard (e.g. Lasocki 1989, 1993a, b; Kijko et al. 1993; Stewart and Spottiswoode 1993; Trifu et al. 1997; Convertito et al. 2012).

To check whether this can explain the violations of the G–R relation found above, each of the four datasets is divided into a number of non-overlapping, consecutive subsets jointly covering the whole observation period, and the null hypothesis is tested in each subset. The subsets of a given division contain roughly the same number of earthquakes. Figure 3 presents the estimated p values for each combination of case, subset and test. In addition, the outcome of the K–S test modified for an unspecified shape parameter is shown by the colour of the points, from dark maroon when the statistic \(T(D_m)\) allows \(H_0\) to be rejected at the significance level 0.01 to white when \(H_0\) cannot be rejected even at the level 0.15. As the number of subdivisions increases, yielding more but smaller subsets, the p values of both tests generally increase.
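The text states only that the subsets contain roughly the same number of events. One simple way to achieve this, assumed here, is to split the time-ordered catalogue into k consecutive parts whose sizes differ by at most one; `split_consecutive` is a hypothetical helper. With this rule, the subset sizes quoted in this section (1076 for four Mponeng subsets; 358 or 359, 101, and 102 or 103 for the 12, 11 and 8 windows of Mponeng, Basel 1 and Song Tranh 2) are reproduced.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def split_consecutive(seq: Sequence[T], k: int) -> List[Sequence[T]]:
    """Split a time-ordered sequence into k consecutive, non-overlapping parts.

    Part sizes differ by at most one, and the parts jointly cover the sequence.
    """
    q, r = divmod(len(seq), k)
    parts, start = [], 0
    for i in range(k):
        size = q + (1 if i < r else 0)   # the first r parts get one extra element
        parts.append(seq[start:start + size])
        start += size
    return parts


for n, k in [(4304, 4), (4304, 12), (1111, 11), (822, 8)]:
    sizes = sorted({len(p) for p in split_consecutive(range(n), k)})
    print(n, k, sizes)
```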

The 4304 seismic events from the Mponeng gold mine are divided into up to 37 subsets. In the \(\chi^2\) test, p values that do not support the rejection of \(H_0\) at α = 0.05 begin to appear when the dataset is divided into 4 subsets; for one of these subsets p > 0.30 is obtained. In the K–S test, the first larger p value (> 0.15) appears when the catalogue is divided into 6 subsets. For divisions into 30 or more subsets, p values reach up to 1.0.

The 1111 seismic events from Basel 1 are divided into up to 11 subsets. In the \(\chi^2\) test, two p values greater than 0.10 are obtained already for the division into 3 subsets. In the K–S test, one p value greater than 0.50 appears when the dataset is divided into 6 or more subsets.

The 822 seismic events from Song Tranh 2 are divided into up to 8 subsets. The first larger p value appears already for the division into 2 subsets in both tests: about 0.15 in the K–S test and about 0.3 in the \(\chi^2\) test.

The 644 seismic events of the Açu dataset are divided into up to 6 subsets. Larger p values are obtained in both tests already for the division into 3 subsets, with p > 0.60 for one of these subsets.

The fact that the null hypothesis is not rejected for some of the subsets, although it is rejected for the complete datasets, may have two explanations. First, as mentioned above, the deviations of the observed FMD from the G–R relation in the complete datasets may result from time changes of the b value within the observation periods. The null hypothesis would then be rejected for those shorter time intervals in which b varied strongly, but not for those in which b varied only mildly.

Indeed, the b value varied with time in all four AS cases analysed in this work. Figure 4a presents the time variation of b for the Mponeng data. The catalogue of 4304 events has been split into 12 consecutive, non-overlapping data windows, each containing approximately the same (± 1) number of events, N = 358 or 359. The b value for the whole catalogue is \(b_c\) = 1.27, with standard deviation \(\sigma_b\) = 0.02. Here, as in the other three cases below, \(\sigma_b\) is the asymptotic standard deviation of the maximum likelihood estimator of b, \(\sigma_b = b/\sqrt{N}\). Initially, b quickly increases above \(b_c\); subsequently, it decreases and stabilizes close to \(b_c\).
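As a quick arithmetic check of \(\sigma_b = b/\sqrt N\), the sketch below recomputes \(\sigma_b\) from the whole-catalogue values \(b_c\) and N reported in this section for all four cases (Mponeng above; Basel 1, Song Tranh 2 and Açu in the following paragraphs). Rounded to two decimals, it reproduces the quoted 0.02, 0.05, 0.03 and 0.07.

```python
import math

# (b_c, N) for the whole catalogues, as reported in the text.
catalogues = {
    "Mponeng": (1.27, 4304),
    "Basel 1": (1.66, 1111),
    "Song Tranh 2": (0.75, 822),
    "Açu": (1.90, 644),
}

# Asymptotic standard deviation of the ML estimator: sigma_b = b / sqrt(N).
sigma_b = {name: b / math.sqrt(n) for name, (b, n) in catalogues.items()}
for name, s in sigma_b.items():
    print(f"{name:13s} sigma_b = {s:.4f} ~ {s:.2f}")
```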

Figure 4b presents the changes of b with time for the Basel 1 data. The catalogue of 1111 earthquakes has been divided into 11 consecutive, non-overlapping data windows, each containing N = 101 events. The b value changes from approximately 1.8 to 1.3. The b value for the whole catalogue is \(b_c\) = 1.66, with standard deviation \(\sigma_b\) = 0.05. In the initial period, b is greater than \(b_c\); by the end of the observation, b is significantly smaller than \(b_c\).

The time variations of b for the Song Tranh 2 data are detailed in Fig. 4c. The catalogue of 822 earthquakes has been divided into 8 consecutive, non-overlapping data windows, each containing approximately the same (± 1) number of events, N = 102 or 103. The b value for the whole catalogue is \(b_c\) = 0.75, with standard deviation \(\sigma_b\) = 0.03. From September 2012 to June 2013, b was significantly smaller than \(b_c\); later, it increased and stabilized above \(b_c\).

The time changes of b for the Açu data are presented in Fig. 4d. The b values have been calculated in a moving data window of 110 events, advanced by 10 events at a time. The b value for the whole catalogue is \(b_c\) = 1.90, with standard deviation \(\sigma_b\) = 0.07. In general, b oscillates about \(b_c\), ranging from approximately 1.5 to about 2.5. Three periods of b growth and decline can be identified. In the first, between 1988 and 1990, a slight increase is followed by a significant decrease of b. The second period extends over 1990–1992. In the third period, 1992–1996, the most significant increase of b is observed.
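The moving-window estimate used for Açu can be sketched as follows. We assume that the first window starts at the first event, that an incomplete final window is dropped, and that the magnitudes are already restricted to values ≥ \(M_c\); the synthetic catalogue (b = 1.9, \(M_c\) = 1.2, δM = 0) and the function names are illustrative only. With 644 events, windows of 110 events advanced by 10 give 54 b values.

```python
import math
import random
from typing import List, Sequence


def b_value_mle(mags: Sequence[float], mc: float, dm: float) -> float:
    """Aki-Utsu ML b value; mags are assumed to be >= mc already."""
    mean = sum(mags) / len(mags)
    return math.log10(math.e) / (mean - (mc - dm / 2.0))


def moving_window_b(mags: Sequence[float], mc: float, dm: float,
                    width: int = 110, step: int = 10) -> List[float]:
    """b values in windows of `width` consecutive events, advanced by `step` events."""
    return [b_value_mle(mags[s:s + width], mc, dm)
            for s in range(0, len(mags) - width + 1, step)]


# Made-up, time-ordered catalogue: 644 unrounded magnitudes with b = 1.9 above Mc = 1.2.
rng = random.Random(5)
mags = [1.2 + rng.expovariate(1.9 * math.log(10.0)) for _ in range(644)]
bs = moving_window_b(mags, mc=1.2, dm=0.0)
print(len(bs), round(min(bs), 2), round(max(bs), 2))
```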

If time changes of the b value are responsible for the uneven increase of the tests' p values as the time intervals from which the samples are taken become shorter, then the p values for the subsets should correlate negatively with the dispersion of b within these subsets. This correlation is studied for the Mponeng and Basel 1 datasets, which are large enough for this purpose.

We form 25 groups of subsets from the Mponeng dataset. The groups consist, respectively, of 6, 7, …, 30 consecutive, non-overlapping subsets of the complete dataset, jointly covering the whole observation period. The subsets of a given group contain roughly the same number of events, ranging from about 717 (6 subsets) to about 143 (30 subsets). For each subset in a group, the null hypothesis is tested with the K–S and \(\chi^2\) tests, yielding p values. Next, every subset is divided into smaller consecutive parts of about 15 events each, and the Gutenberg–Richter b value is estimated for each part. The b values of the parts are used to evaluate the mean b value, ⟨b⟩, for the subset and the standard deviation of b, \(s_b = \sqrt{\frac{\sum_{i=1}^{m} \left(b_i - \langle b \rangle\right)^2}{m - 1}}\), where \(b_i\) are the b values of the m parts of the subset. Estimates of b from 15-element samples are highly uncertain; however, we believe that the degree of variation of such estimates within a subset, expressed by \(s_b\), reflects the dispersion of the actual b over the subset. If b fluctuated significantly during the period covered by a given subset, \(s_b\) is large.

For example, when the Mponeng data are divided into 6 subsets of about 717 events each, each subset is further divided into 48 parts of 14–15 events, so \(s_b\) is calculated from 48 b values. When the catalogue is divided into 30 subsets of about 143 events each, the further subdivision into 14–15-element parts yields 10 parts per subset, so \(s_b\) is calculated from 10 b values.
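The computation of \(s_b\) for one subset can be sketched as follows. Assumptions: the number of parts is the subset size divided by 15, rounded to the nearest integer, with consecutive parts whose sizes differ by at most one (this reproduces the 48 parts of 14–15 events for about 717 events and the 10 parts for about 143); b is estimated by the maximum likelihood formula with the round-off correction; and the synthetic subset and helper names are made up.

```python
import math
import random
import statistics
from typing import List, Sequence, Tuple


def split_consecutive(seq: Sequence[float], k: int) -> List[Sequence[float]]:
    """k consecutive parts whose sizes differ by at most one (hypothetical helper)."""
    q, r = divmod(len(seq), k)
    parts, start = [], 0
    for i in range(k):
        size = q + (1 if i < r else 0)
        parts.append(seq[start:start + size])
        start += size
    return parts


def b_value_mle(mags: Sequence[float], mc: float, dm: float) -> float:
    """Aki-Utsu ML b value; mags are assumed to be >= mc already."""
    mean = sum(mags) / len(mags)
    return math.log10(math.e) / (mean - (mc - dm / 2.0))


def b_dispersion(subset: Sequence[float], mc: float, dm: float,
                 part_size: int = 15) -> Tuple[float, float]:
    """Return (<b>, s_b) from consecutive parts of about part_size events."""
    m = max(2, round(len(subset) / part_size))   # at least 2 parts so s_b is defined
    bs = [b_value_mle(p, mc, dm) for p in split_consecutive(subset, m)]
    return statistics.mean(bs), statistics.stdev(bs)   # stdev divides by m - 1


# Part counts quoted in the text: about 717 events -> 48 parts, about 143 -> 10 parts.
for n in (717, 143):
    m = round(n / 15)
    print(n, m, sorted({len(p) for p in split_consecutive(list(range(n)), m)}))

# Made-up subset: 717 continuous magnitudes with b = 1 above Mc = 1.0.
rng = random.Random(11)
subset = [1.0 + rng.expovariate(math.log(10.0)) for _ in range(717)]
mean_b, s_b = b_dispersion(subset, mc=1.0, dm=0.0)
print(round(mean_b, 2), round(s_b, 2))
```

Even for a subset with constant b, \(s_b\) is far from zero because of the large sampling scatter of 15-event estimates, which is why the text uses it only as a relative measure of dispersion.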

Figure 5a presents the relation between \(s_b\) and p for the division of the Mponeng catalogue into 30 non-overlapping subsets. No correlation between these two parameters is apparent. Spearman's rank correlation coefficient for \(s_b\) and the p value of the K–S test is \(R_S\) = 0.15, and the p value of the test of the null hypothesis that \(s_b\) and p are uncorrelated is \(p_S\) = 0.42. For \(s_b\) and the p value of the \(\chi^2\) test, \(R_S\) = 0.02 and \(p_S\) = 0.93. These values of \(R_S\) show no trace of correlation between \(s_b\) and p.
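Spearman's \(R_S\) is the Pearson correlation coefficient of the ranks. A dependency-free sketch with average ranks for ties follows; the \(s_b\) and p values in the usage lines are invented for illustration. It computes only \(R_S\). For the accompanying \(p_S\), SciPy's `scipy.stats.spearmanr` returns both the coefficient and a p value for the no-correlation hypothesis, although we do not know which variant of that test was used here.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Ranks 1..n, with tied values receiving the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0   # average of the 1-based ranks i+1 .. j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def spearman_rs(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's R_S as the Pearson correlation of average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Invented (s_b, p) pairs for five subsets.
s_b_values = [0.21, 0.35, 0.18, 0.40, 0.27]
p_values = [0.40, 0.05, 0.62, 0.11, 0.30]
rs = spearman_rs(s_b_values, p_values)
print(round(rs, 3))
```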

Fig. 5 Correlation between the p values of the goodness-of-fit tests of the conformity of the observed FMD to the exponential distribution and the standard deviation of the time-varying Gutenberg–Richter b value, Mponeng data: a p against \(s_b\) for the division of the data into 30 subsets; b Spearman's rank correlation coefficient of the (p, \(s_b\)) pair, \(R_S\), and the p value of the "no correlation" test, \(p_S\), against the number of catalogue subdivisions. See text for further explanations.

Similar results, and the same conclusions, are obtained for the other divisions of the Mponeng catalogue. The correlation coefficient \(R_S\) versus the number of catalogue subdivisions is presented in Fig. 5b. \(R_S\) varies from about −0.3 to 0.6, approaching zero as the number of subdivisions grows. The \(p_S\) value of the test of no correlation between \(s_b\) and p varies, but never falls below 0.1.

Five groups of subsets of the Basel 1 catalogue result from dividing it into 5, 6, …, 9 consecutive, non-overlapping subsets. The further steps of the analysis are the same as described above for the Mponeng data. In every case, the Basel 1 data are randomized \(10^5\) times for the K–S test. Figure 6a presents the relation between \(s_b\) and p for the division of the Basel 1 catalogue into 9 subsets. No correlation between these two parameters can be seen: \(R_S\) = 0.18 and \(p_S\) = 0.64 for the K–S test, and \(R_S\) = 0.32 and \(p_S\) = 0.41 for the \(\chi^2\) test. The correlation coefficient against the number of catalogue subdivisions is presented in Fig. 6b. \(R_S\) varies between −0.6 and 0.4, and \(p_S\) varies but never falls below 0.2.

Fig. 6 Correlation between the p values of the goodness-of-fit tests of the conformity of the observed FMD to the exponential distribution and the standard deviation of the time-varying Gutenberg–Richter b value, Basel 1 data: a p against \(s_b\) for the division of the data into 9 subsets; b Spearman's rank correlation coefficient of the (p, \(s_b\)) pair, \(R_S\), and the p value of the "no correlation" test, \(p_S\), against the number of catalogue subdivisions. See text for further explanations.

The correlation study performed on the Mponeng and Basel 1 data does not support the hypothesis that the degree of agreement between the observed and the exponential magnitude distributions is related to the rate of time variability of the Gutenberg–Richter b value. The differences between the observed magnitude distributions and the Gutenberg–Richter-based models are not due to variations of the b value within the observation periods.

The Açu and Song Tranh 2 catalogues are too small for a similar correlation analysis.

The other reason why larger p values of the goodness-of-fit tests are obtained for some, generally smaller, subsets may be the loss of representativeness of such samples. In that case, the larger p values would reflect the failure of the test to detect the deviations rather than the correctness of the null hypothesis. Note, however, that the null hypothesis has not been rejected for a fairly large sample resulting from the division of the Mponeng catalogue into four subsets, i.e. a sample of 1076 magnitude values.

We check this explanation using the Mponeng dataset. First, the dataset is shuffled to remove any time-dependent properties. The shuffled dataset is divided into non-overlapping subsets that jointly cover the whole dataset, and the null hypothesis of concordance between the observed FMD and the exponential distribution is tested in each subset. The numbers of subsets into which the shuffled dataset is divided are the same as those used when testing the null hypothesis in the time-sequential subsets of the original Mponeng dataset. The shuffling and the subsequent steps of the analysis are repeated 1000 times.
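The shuffling experiment can be outlined as below. `p_value_fn` is a hypothetical hook for whichever goodness-of-fit test is studied (for instance a K–S or \(\chi^2\) test of the exponential hypothesis); the placeholder in the usage lines is not a real test and only exercises the bookkeeping. The random seed and the averaging over subsets and repetitions are our choices.

```python
import random
from statistics import mean
from typing import Callable, Dict, Iterable, List, Sequence


def split_consecutive(seq: Sequence[float], k: int) -> List[Sequence[float]]:
    """k consecutive parts whose sizes differ by at most one."""
    q, r = divmod(len(seq), k)
    parts, start = [], 0
    for i in range(k):
        size = q + (1 if i < r else 0)
        parts.append(seq[start:start + size])
        start += size
    return parts


def shuffled_mean_p(mags: Sequence[float], n_subsets: Iterable[int],
                    p_value_fn: Callable[[Sequence[float]], float],
                    n_rep: int = 1000, seed: int = 0) -> Dict[int, float]:
    """For each number of subsets k, the mean p value over the subsets of
    n_rep independently shuffled copies of the catalogue."""
    rng = random.Random(seed)
    result = {}
    for k in n_subsets:
        per_rep = []
        for _ in range(n_rep):
            shuffled = list(mags)
            rng.shuffle(shuffled)   # removes any time ordering
            per_rep.append(mean(p_value_fn(part) for part in split_consecutive(shuffled, k)))
        result[k] = mean(per_rep)
    return result


def placeholder_p(part: Sequence[float]) -> float:
    """Not a real test: a p value that simply grows as the sample shrinks."""
    return min(1.0, 50.0 / len(part))


demo = shuffled_mean_p([float(i) for i in range(400)], [2, 4, 8], placeholder_p, n_rep=5)
print(demo)
```

Because shuffling keeps the magnitude population but destroys its time order, any growth of the mean p value with k in such an experiment can be attributed to sample size alone.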

The results obtained for an individual shuffling case are similar to those presented in Fig. 3 for the Mponeng data. The average result of all 1000 experiments is shown in Fig. 7. The mean p value of the test of the null hypothesis grows as the sample size decreases. When the sample contains 600 events or fewer, the average p value of the K–S test exceeds 0.05. For the χ² test, the average p value exceeds 0.05 when the sample size is less than 800. Smaller samples are thus not representative enough of the magnitude population for the goodness-of-fit tests to detect the violations of the G–R relation that are ascertained in larger samples. It is interesting that, on average, this loss of representativeness already occurs for samples as large as 600–800 events. Such a situation may arise when the actual distribution of the population is not far from the model.

Deviations from the Gutenberg–Richter law have been ascertained in all four considered cases of seismicity induced by different technological activities. Although this suggests that such deviations may be a general characteristic of induced seismicity, regardless of the inducing factor, the specific cause is likely different in each case. A full physical interpretation of the observed effects requires far more detailed studies of each case and is beyond the scope of this paper. However, some initial remarks can be formulated.

Eaton et al. (2014) and Eaton and Maghsoudi (2015) suggest that the breakdown of the Gutenberg–Richter relation that they observed for seismicity induced by hydraulic fracturing may result from different scaling of brittle deformation during fracking and of tectonic earthquakes. This may explain the Mponeng case, whose extremely small magnitudes link these events to brittle cracking rather than to fault slip.

The above-cited paper (Eaton and Maghsoudi 2015) also shows that combining two (or more) earthquake populations, each controlled by the Gutenberg–Richter law but with different b values, can produce only positive (upward) deviations from the straight line in the larger magnitude range. Deviations of this kind are seen for the Song Tranh 2 case (Fig. 1c). The epicentres of the Song Tranh 2 events, whose distribution is shown in Fig. 8, form two distinct clusters. The first is partly located in the north-western part of the reservoir and extends north of the reservoir up to about 10 km away. The second begins at the south bank of the reservoir and continues roughly in the NW–SE direction up to about 10 km from the reservoir's south bank. The clusters are well separated in space. This picture implies that the earthquakes were generated in two different tectonic systems, which is supported by the seismotectonic interpretation of Song Tranh 2 seismicity provided by Wiszniowski et al. (2015). In addition, earthquake productivity within the second cluster became more significant in the later part of the observation period. Accordingly, the productivity of the first cluster could be an immediate response, and that of the second cluster a delayed response, of the rockmass to the stimulation by reservoir impoundment, following the reservoir-induced seismicity classification and interpretation of Simpson et al. (1988). These different types of reservoir seismicity involve the activity of different faults. Thus, the Song Tranh 2 sample may be a mixture of two samples with different b values.
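A minimal numerical illustration of the mixture argument, assuming two unbounded Gutenberg–Richter populations above a common \(M_{\text{c}}\). The weights and b values are hypothetical, not fitted to the Song Tranh 2 data. A single exponential model is fitted to the mixture by maximum likelihood in the large-sample limit, i.e. \(\beta_{\text{fit}} = 1/\mathrm{E}[M - M_{\text{c}}]\), and the deviation of \(\log_{10} R\) from the fitted straight line is evaluated.

```python
import numpy as np

LN10 = np.log(10.0)


def mixture_log10_epf(dm, w1, b1, b2):
    """log10 R(Mc + dm) for a w1 : (1 - w1) mixture of two G-R populations."""
    r = w1 * np.exp(-b1 * LN10 * dm) + (1.0 - w1) * np.exp(-b2 * LN10 * dm)
    return np.log10(r)


def ml_fitted_b(w1, b1, b2):
    """b of the single exponential model fitted by ML to the mixture.

    The ML estimate is beta = 1 / E[M - Mc]; for the mixture
    E[M - Mc] = w1 / beta1 + (1 - w1) / beta2, with beta = b ln 10.
    """
    mean_excess = w1 / (b1 * LN10) + (1.0 - w1) / (b2 * LN10)
    return 1.0 / (mean_excess * LN10)


dm = np.linspace(0.0, 3.0, 31)      # M - Mc
w1, b1, b2 = 0.7, 1.0, 0.6          # hypothetical populations
deviation = mixture_log10_epf(dm, w1, b1, b2) - (-ml_fitted_b(w1, b1, b2) * dm)
```

Because \(\log_{10} R\) of a mixture is a log-sum of exponentials, it is convex in M; with these values the deviation is slightly negative just above \(M_{\text{c}}\) and positive in the larger-magnitude range, where the population with the smaller b value dominates the tail.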

The Basel burst of earthquakes occurred within less than 2 days before the first major event. This sample comes from the period preceding, and likely covering, fault activation. Some of the events may have been purely brittle deformations linked to the injections, while others may have been signals of the nucleation process. This would produce a multicomponent sample and hence the breakdown of the Gutenberg–Richter relation. The presence in this sample of events related to fault activation would explain the relatively low b value for the sample, as fault activation can be connected with a drop of b to about 1.0 (Eaton and Maghsoudi 2015, after Maxwell et al. 2009).

No reasonable speculation about the result for the Açu seismicity is possible, because even the event locations are unknown.

4 Non-Parametric Estimation of Magnitude Distribution for Anthropogenic Seismicity

For all four studied datasets of induced earthquakes, the goodness-of-fit tests indicate that the respective FMDs do not follow the Gutenberg–Richter relation. The considered seismicity episodes are linked to quite different technologies, and we have analysed all the cases available at the moment. Hence, the ascertained disagreement between the observed magnitudes and the Gutenberg–Richter-based model can be expected in many other instances of anthropogenic seismic processes.

Facing the same problem of the exponential magnitude distribution model losing its applicability in cases of mining-induced seismicity, Lasocki and his colleagues proposed adopting non-parametric kernel estimators (Silverman 1986) to represent magnitude distributions (e.g. Lasocki et al. 2000; Kijko et al. 2001; Orlecka-Sikora and Lasocki 2005; Lasocki and Orlecka-Sikora 2008). The version of this estimator most often used at present utilizes Gaussian adaptive kernels. Its details are provided in Appendix 1.

The deviation of the observed magnitudes from the Gutenberg–Richter relation-based distribution model ascertained here is statistically significant but tiny. This can be inferred from the fact that it becomes "invisible" even for samples as large as 800 elements. We now consider two questions. First, does a non-parametric kernel estimator perform better than the "close-to-observation" exponential model when the deviations are this small? Second, how does the use of the disqualified exponential model for magnitude affect the accuracy of source-effect modelling in probabilistic seismic hazard analysis?

The true magnitude distributions for our AS cases are not known. Therefore, we can answer the second question only by comparing the results obtained with the exponential model to those obtained with a better estimate of the distribution. If the answer to the first question is positive, i.e. the non-parametric estimator performs better than the exponential model, the non-parametric estimator will serve as the reference for the comparisons related to the second question.

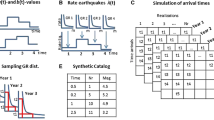

We investigate the posed questions on the Song Tranh 2 case. When the sample data are compared with the kernel estimate of the magnitude distribution, the p value is 0.96 for the K–S test and 0.91 for the χ² test. The respective values for the exponential model are 0.041 and 0.026 (see Table 2). From the point of view of both the K–S and χ² goodness-of-fit tests, the kernel estimate of the magnitude distribution fits the data much better than the exponential model. This is not surprising, because the kernel estimator is data driven, though the scale of the improvement is impressive. Does the kernel estimate, however, fit the underlying magnitude distribution better than the exponential distribution does? To answer this question, we investigate synthetic data drawn from a distribution that deviates from the exponential distribution about as slightly as the distribution underlying the actual Song Tranh 2 data does. As the synthetic distribution, we use a bi-linear magnitude distribution model with a hard end point, defined by the equation:

The CDF for this model reads:

where \(\beta_i = b_i \ln 10\), i = 1, 2. We set \(M_{\text{c}} = 1.0\), \(M_{\text{T}} = 1.8\), \(M_{\max} = 4.9\), \(b_1 = 0.8\), \(b_2 = 1.4\). This magnitude range corresponds to the range of the Song Tranh 2 data. The bi-linear model has occasionally been proposed to represent magnitude populations for which the classic Gutenberg–Richter law might be inappropriate (e.g. Utsu 1999; Qin 2005; Tsukakoshi 2010). We draw from distribution (7) a sample of the same size as the Song Tranh 2 dataset, i.e. 822 elements. Next, we fit an exponential distribution model to the generated sample. The best-fit b value is b = 0.85. The K–S test rejects the exponential model at the 0.05 significance level, with p = 0.036 and \(T(D_m) = 1.42\) (the critical value of the modified test statistic, \(T(D_m)\), at the 0.05 significance level is 1.094; see Table 2). \(M_{\text{c}}\), \(M_{\max}\) and the estimated b value indicate that the generated sample is to some extent similar to the Song Tranh 2 dataset, while the p value suggests that the distributions underlying the Song Tranh 2 dataset and the generated sample deviate from the exponential distribution to a similar degree.
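The synthetic experiment can be sketched as follows. Because the defining equations of the bi-linear model are not reproduced in this text, the sketch assumes one common form: a log-linear exceedance function with slope \(\beta_1\) below \(M_{\text{T}}\) and \(\beta_2\) above, continuous at \(M_{\text{T}}\) and truncated at \(M_{\max}\). It also fits an unbounded exponential model with the Aki–Utsu estimator and uses SciPy's standard K–S test instead of the modified statistic \(T(D_m)\), so its numbers are not expected to reproduce those quoted above. Function names are hypothetical.

```python
import numpy as np
from scipy import stats

LN10 = np.log(10.0)


def _survival(m, mc, mt, b1, b2):
    """Un-normalised exceedance function: slope b1 below M_T, b2 above (assumed form)."""
    m = np.asarray(m, dtype=float)
    return np.where(m < mt,
                    np.exp(-b1 * LN10 * (m - mc)),
                    np.exp(-b1 * LN10 * (mt - mc) - b2 * LN10 * (m - mt)))


def bilinear_cdf(m, mc, mt, mmax, b1, b2):
    """CDF of the assumed bi-linear model with hard end point mmax."""
    s = _survival(np.clip(m, mc, mmax), mc, mt, b1, b2)
    s_max = _survival(mmax, mc, mt, b1, b2)
    return (1.0 - s) / (1.0 - s_max)


def sample_bilinear(n, mc, mt, mmax, b1, b2, rng):
    """Inverse-CDF sampling from bilinear_cdf."""
    s_max = float(_survival(mmax, mc, mt, b1, b2))
    s = 1.0 - rng.random(n) * (1.0 - s_max)      # target exceedance values in (s_max, 1]
    s_t = np.exp(-b1 * LN10 * (mt - mc))          # exceedance value at the kink M_T
    return np.where(s >= s_t,
                    mc - np.log(s) / (b1 * LN10),
                    mt - (np.log(s) + b1 * LN10 * (mt - mc)) / (b2 * LN10))


def fit_and_test(mags, mc):
    """Aki-Utsu b of an unbounded exponential model and the K-S p value."""
    excess = np.asarray(mags, dtype=float) - mc
    b_hat = np.log10(np.e) / excess.mean()
    p = stats.kstest(excess, "expon", args=(0.0, excess.mean())).pvalue
    return b_hat, p


rng = np.random.default_rng(2016)
synthetic = sample_bilinear(822, mc=1.0, mt=1.8, mmax=4.9, b1=0.8, b2=1.4, rng=rng)
b_hat, p_value = fit_and_test(synthetic, mc=1.0)
```

Since the generating CDF is known in closed form, `bilinear_cdf` can serve directly as the "actual CDF" against which parametric and kernel estimates are compared.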

The distribution underlying the generated sample is known; hence, the distribution functions can be compared directly. The non-parametric (kernel) and parametric (exponential model) estimates of the CDF are compared with the actual CDF in Fig. 9.

The importance of proper modelling of the magnitude CDF for probabilistic seismic hazard analysis lies in the fact that the source size effect is represented there by the exceedance probability function (EPF) of magnitude, R(M) = Prob{magnitude ≥ M} = 1 − F(M). Furthermore, R(M) enters the exponent of the equations for hazard parameters, such as the exceedance probability conditional upon event occurrence, the mean return period, the maximum credible magnitude, etc. For this reason, the discrepancies between the actual and modelled R at larger M are the most important: first, because larger magnitude events are the primary targets of hazard analysis and, second, because R(M) tends to zero as M grows, so the estimation error can become comparable to the estimate itself.

Figure 10 presents the relative errors of the estimates of the EPF of magnitude. The relative error of an estimate, \(\hat{R}(M)\), is \(\frac{R(M) - \hat{R}(M)}{R(M)} \times 100\,\%\). Although, as seen in Fig. 9a, the discrepancies between the parametric and actual CDF do not look large, the relative error of the parametric EPF estimate reaches nearly 300 %. The non-parametric estimator performs definitely better: its maximum relative error of the EPF estimate is 50 %.
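The relative-error measure can be computed as below. As a hypothetical illustration (not the paper's synthetic or Song Tranh 2 results), the "true" EPF is taken to be an upper-truncated exponential with \(M_{\max} = 4.9\), and the estimate is an unbounded exponential with the same b. The error becomes increasingly negative as R(M) approaches zero near \(M_{\max}\), which is the effect described above.

```python
import numpy as np

LN10 = np.log(10.0)


def relative_error_percent(r_true, r_est):
    """Relative error (R - R_hat) / R * 100 % of an EPF estimate."""
    r_true = np.asarray(r_true, dtype=float)
    return (r_true - np.asarray(r_est, dtype=float)) / r_true * 100.0


def truncated_exponential_epf(m, mc, mmax, b):
    """R(M) = 1 - F(M) for the upper-truncated exponential (G-R) model."""
    beta = b * LN10
    tail = np.exp(-beta * (mmax - mc))
    return (np.exp(-beta * (np.asarray(m, dtype=float) - mc)) - tail) / (1.0 - tail)


m = np.array([1.5, 2.5, 3.5, 4.5])               # all below Mmax = 4.9
r_true = truncated_exponential_epf(m, mc=1.0, mmax=4.9, b=1.0)
r_unbounded = np.exp(-LN10 * (m - 1.0))          # same b, no end point
err = relative_error_percent(r_true, r_unbounded)
```

A negative error of −300 % means the estimate is four times the true exceedance probability, which shows how a CDF discrepancy that looks small translates into a large error in the upper tail.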

Having established on the synthetic dataset that kernel estimation outperforms parametric estimation, we return to the Song Tranh 2 dataset. We compare the more accurate kernel estimate of the magnitude distribution with the estimate resulting from the exponential magnitude distribution model, which is inappropriate for the Song Tranh 2 data. Figure 11 compares the parametric estimate of the CDF with its reference, the non-parametric estimate. The greatest deviations occur at M = 1.25 and M = 2.35.

One of the most important functions used in probabilistic seismic hazard analysis is the above-mentioned exceedance probability conditional upon event occurrence, i.e. the probability that an earthquake of magnitude equal to or greater than M will occur in a time period of length T. When the earthquake process is Poissonian, this exceedance probability takes the form:

\[ R(M, T) = 1 - \exp\left[ -\lambda\, T\, R(M) \right] \]

where λ is the mean event rate and R(M) is the exceedance probability function of magnitude. We check how much the deviations of the parametric CDF estimate from the reference estimate affect the exceedance probability. To illustrate the effect, we take magnitude 2.5, which is close to the point of greatest deviation, and magnitude 4.5, which, being close to the upper limit of the magnitude range, could be of utmost importance in hazard analyses. The exceedance probability estimates are presented in Fig. 12. For M = 2.5, the use of the exponential distribution model leads to overestimation of the exceedance probability. The greatest difference between the parametric estimate and the reference occurs at about T = 0.5 month and amounts to about 10 %. By contrast, for M = 4.5 the exponential model leads to underestimation of the exceedance probability, with the greatest difference, of more than 6 %, at T = 26.5 months. According to the parametric approach, reaching a 95 % probability of an earthquake of magnitude 4.5 or greater takes more than 7 years, whereas the reference indicates only 6 years.
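For a Poissonian process the exceedance probability over a period T is \(1 - \exp[-\lambda T R(M)]\), so the exposure time needed to reach a given probability follows by inverting it. The sketch below does this; the event rate and the two R(4.5) values are hypothetical numbers chosen for illustration, not the Song Tranh 2 estimates.

```python
import numpy as np


def exceedance_probability(rate, t, r_m):
    """Poisson exceedance probability 1 - exp(-lambda * T * R(M))."""
    return 1.0 - np.exp(-rate * np.asarray(t, dtype=float) * r_m)


def time_to_probability(prob, rate, r_m):
    """Exposure time T at which the exceedance probability reaches prob."""
    return -np.log1p(-prob) / (rate * r_m)


rate = 20.0                          # hypothetical events per month above Mc
r_ref, r_param = 2.0e-3, 1.6e-3      # hypothetical R(4.5) estimates
t_ref = time_to_probability(0.95, rate, r_ref)
t_param = time_to_probability(0.95, rate, r_param)
```

Because the time to reach a fixed probability scales as 1/R(M), the ratio `t_param / t_ref` equals `r_ref / r_param`: an underestimate of the tail EPF translates one-to-one into an overestimate of the time needed to reach a given hazard level.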

5 Summary and Conclusions

For all four datasets of earthquakes induced by human technological activity (underground mining, geothermal energy exploration, reservoir impoundment and the filling of a surface water reservoir), the statistical tests have indicated at a high significance level that the FMD of these earthquakes does not follow the Gutenberg–Richter relation. The examined samples are large, ranging from about 4300 events from the mine to about 650 earthquakes from the water reservoir area. The data under consideration come from seismic processes induced by quite different technologies. Therefore, the incoherence of the FMD with the Gutenberg–Richter relation may be a general feature of anthropogenic seismicity, regardless of the technology inducing the earthquakes.

Although the Gutenberg–Richter b value changes significantly in time in each of the considered datasets, the disagreements between the observed FMDs and the theoretical exponential model derived from the Gutenberg–Richter relation cannot be attributed to these changes.

The disagreement between the exponential distribution model and the actual magnitude distributions is not large and can be detected only when testing large samples, of more than 600 to 800 elements.

The deviations from the Gutenberg–Richter law are not large, but they are statistically significant and substantially diminish the accuracy of the assessment of seismic hazard parameters, which may be unacceptable in practical applications.

As a remedy, non-parametric kernel estimators of the magnitude distribution functions are recommended for probabilistic analyses of seismic hazard caused by anthropogenic seismicity. Even for discrepancies between the observed distribution of earthquake magnitudes and the exponential distribution model as small as those ascertained in this study, the non-parametric estimates of the distribution functions are significantly more accurate than those derived from the exponential model.

References

Aki, K. (1965), Maximum Likelihood Estimate of b in the Formula log N = a - b M and its Confidence Limits, Bull. Earthq. Res. Inst. Tokyo Univ. 43, 237-239.

Bender, B. (1983), Maximum Likelihood Estimation of b Values for Magnitude Grouped Data, Bull. Seismol. Soc. Am. 73, 831-851.

Bird, P., and Kagan, Y. (2004), Plate-tectonic Analysis of Shallow Seismicity: Apparent Boundary Width, Beta-value, Corner Magnitude, Coupled Lithosphere Thickness, and Coupling in 7 Tectonic Settings, Bull. Seismol. Soc. Am. 94, 2380–2399, doi:10.1785/0120030107.

Bowman, A.W., Hall, P., and Titterington, D.M. (1984), Cross-validation in Non-parametric Estimation of Probabilities and Probability Densities, Biometrika 71, 341–351.

Convertito, V., Maercklin, N., Sharma, N., and Zollo, A. (2012), From Induced Seismicity to Direct Time-dependent Seismic Hazard, Bull. Seismol. Soc. Am. 102, 2563–2573, doi:10.1785/0120120036.

Cooke, P. (1979), Statistical Inference for Bounds of Random Variables, Biometrika 66, 367–374.

Cosentino, P., Ficarra, V., and Luzio, D. (1977), Truncated Exponential Frequency-magnitude Relationship in Earthquake Statistics, Bull. Seismol. Soc. Am. 67, 1615-1623.

Davies, R., Foulger, G., Bindley, A., and Styles, P. (2013), Induced Seismicity and Hydraulic Fracturing for the Recovery of Hydrocarbons, Marine and Petroleum Geology 45, 171–185, doi:10.1016/j.marpetgeo.2013.03.016.

do Nascimento, A.F., Cowie, P.A., Lunn, R.J., and Pearce, R.G. (2004), Spatio-temporal Evolution of Induced Seismicity at Açu Reservoir, NE Brazil, Geophys. J. Int. 158, 1041–1052, doi:10.1111/j.1365-246X.2004.02351.x.

Eaton, D.W., and Maghsoudi, S. (2015), 2b… or not 2b? Interpreting Magnitude Distributions From Microseismic Catalogs, First Break 33.

Eaton, D.W., Davidsen, J., Pedersen, P.K., and Boroumand, N. (2014), Breakdown of the Gutenberg-Richter Relation for Microearthquakes Induced by Hydraulic Fracturing: Influence of Stratabound Fractures, Geophysical Prospecting 62, 806-818, doi:10.1111/1365-2478.12128.

El Hariri, M., Abercrombie, R.E., Rowe, C.A., and do Nascimento, A.F. (2010), The Role of Fluids in Triggering Earthquakes: Observations From Reservoir Induced Seismicity in Brazil, Geophys. J. Int. 181, 1566–1574, doi:10.1111/j.1365-246X.2010.04554.x.

Ferreira, J.M., Oliveira, R.T., Assumpção, M., Moreira, J. A.M., Pearce, R.G., and Takeya, M.K. (1995), Correlation of Seismicity and Water Level—An Example From Northeastern Brazil, Bull. Seismol. Soc. Am. 85, 1483–1489.

Feustel, A.J. (1997), Temporal-spatial b-values and observed relationships to structurally controlled ground falls in an open-stope mine, In Rockbursts and Seismicity in Mines (eds. Gibowicz, S.J. and Lasocki, S.) (Balkema, Rotterdam), pp. 45-47.

Frohlich, C., and Davis, S. (1993), Teleseismic b-Values: Or, Much Ado About 1.0, J. Geophys. Res. 98, 631 – 644, doi:10.1029/92JB01891.

Gibowicz, S.J. (1979), Space and Time Variations of the Frequency-Magnitude Relation for Mining Tremors in the Szombierki Coal Mine in Upper Silesia, Poland, Acta Geophys. Pol. 27, 39-50.

Gibowicz, S.J. (1990), The mechanism of seismic events induced by mining. In Rockbursts and Seismicity in Mines (ed. Fairhurst, C.) (Balkema, Rotterdam 1990), pp. 3-27.

Godano, C., and Pingue, F. (2000), Is the Seismic Moment-Frequency Relation Universal?, Geophys. J. Int. 142, 193–198, doi:10.1046/j.1365-246x.2000.00149.x.

Grob, M., and van der Baan, M. (2011a), Statistical Analysis of Microseismic Event Locations and Magnitudes, and Their Geomechanical Implications, SEG Expanded Abstracts 30: 1570–1575.

Grob, M., and van der Baan, M. (2011b), Inferring In-Situ Stress Changes by Statistical Analysis of Microseismic Event Characteristics, The Leading Edge 30, 1296–1301. doi:10.1029/2010GL043066.

Gutenberg, B., and Richter, C.F. (1944), Frequency of earthquakes in California, Bull. Seismol. Soc. Am. 34, 185-188.

Haering, M.O., Schanz, U., Ladner, F., and Dyer, B.C. (2008), Characterization of the Basel 1 Enhanced Geothermal System. Geothermics, 37, 469–495, doi:10.1016/j.geothermics.2008.06.002.

Häge, M., Blascheck, P., and Joswig, M. (2012), EGS Hydraulic Stimulation Monitoring by Surface Arrays—Location Accuracy and Completeness Magnitude: The Basel Deep Heat Mining Project Case Study, J. Seismol. 17, 51–61, doi:10.1007/s10950-012-9312-9.

Holub, K. (1995), Analysis of b-Value in the Frequency-energy Distributions. Publs. Inst. Geophys. Polish Acad. Sc. M-19(281), 153-161.

Johnston, J.C., and Einstein, H.H. (1990), A survey of mining associated rockbursts, In Rockbursts and Seismicity in Mines (ed. Fairhurst, C.) (Balkema, Rotterdam), pp. 121-128.

Kagan, Y.Y. (1997), Seismic Moment-frequency Relation for Shallow Earthquakes: Regional Comparison, J. Geophys. Res. 102, 2835–2852, doi:10.1029/96JB03386.

Kagan, Y. (1999), Universality of the Seismic Moment-frequency Relation, Pure Appl. Geophys. 155, 537–573, doi:10.1007/s000240050277.

Kijko, A., and Graham, G. (1998), Parametric-Historic Procedure for Probabilistic Seismic Hazard Analysis. Part I: Estimation of Maximum Regional Magnitude Mmax. Pure Appl. Geophys. 152, 413–442.

Kijko, A., Drzęźla, B., and Stankiewicz, T. (1987), Bimodal Character of the Distribution of Extreme Seismic Events in Polish Mines, Acta Geophys. Pol. 35, 157-166.

Kijko, A., Funk, C.W., and Brink, A.v.Z. (1993), Identification of anomalous patterns in time-dependent mine seismicity, In Rockbursts and Seismicity in Mines (ed. Young, R.P.) (Balkema, Rotterdam), pp. 205-210.

Kijko, A., Lasocki, S., and Graham, G. (2001), Nonparametric Seismic Hazard Analysis in Mines. Pure Appl. Geophys. 158, 1655-1676.

Kozłowska, M., Orlecka-Sikora, B., Kwiatek, G., Boettcher, M.S., and Dresen, G. (2015), Nanoseismicity and Picoseismicity Rate Changes From Static Stress Triggering Caused by a Mw 2.2 Earthquake in Mponeng Gold Mine, South Africa, J. Geophys. Res. 120, doi:10.1002/2014JB011410.

Kwiatek, G., Plenkers, K., Nakatani, M., Yabe, Y., Dresen, G., and JAGUARS-Group (2010), Frequency-Magnitude Characteristics Down to Magnitude -4.4 for Induced Seismicity Recorded at Mponeng Gold Mine, South Africa, Bull. Seismol. Soc. Am. 100, 1165–1173, doi: 10.1785/0120090277.

Kwiatek, G., Plenkers, K., Dresen, G., and JAGUARS Research Group (2011), Source Parameters of Picoseismicity Recorded at Mponeng Deep Gold Mine, South Africa: Implications for Scaling Relations, Bull. Seismol. Soc. Am. 101, 2592–2608, doi: 10.1785/0120110094.

Lasocki, S. (1989), Some estimates of rockburst danger in underground coal mines from energy distribution of M.A. events, In Proc. 4th Conf. Acoustic Emission/Microseismic Activity in Geologic Structures and Materials (ed. Hardy, H.R. Jr.) (Trans Tech Publications, Clausthal), pp. 617-633.

Lasocki, S. (1993a), Statistical Prediction of Strong Mining Tremors, Acta Geophys. Pol., 41, 197-234.

Lasocki, S. (1993b), Statistical Short-term Prediction in Mining Induced Seismicity, In Rockbursts and Seismicity in Mines (ed. Young, R.P.) (Balkema, Rotterdam), pp. 211-216.

Lasocki, S. (2001), Quantitative evidences of complexity of magnitude distribution in mining-induced seismicity: Implications for hazard evaluation, In Rockbursts and Seismicity in Mines: Dynamic rock mass response to mining (eds. van Aswegen, G., Durrheim, R.J., and Ortlepp, W.D.) (SAIMM S27, Johannesburg), pp. 543-550.

Lasocki, S., and Orlecka-Sikora, B. (2008), Seismic Hazard Assessment Under Complex Source Size Distribution of Mining-Induced Seismicity, Tectonophysics 456, 28-37, doi:10.1016/j.tecto.2006.08.013.

Lasocki, S., and Papadimitriou, E.E. (2006), Magnitude Distribution Complexity Revealed in Seismicity From Greece, J. Geophys. Res. 111, B11309, doi:10.1029/2005JB003794.

Lasocki, S., and Urban, P. (2011), Bias, Variance and Computational Properties of Kijko’s Estimators of the Upper Limit of Magnitude Distribution, Mmax. Acta Geophys 59, 659-673, doi:10.2478/s11600-010-0049-y.

Lasocki, S., Kijko, A., and Graham, G. (2000), Model-free seismic hazard estimation, In Proc. Int. Conf. Earthquake Hazard and Risk in the Mediterranean Region, EHRMR'99 (ed. Gokcekus, H.) (Educational Foundation of Near East University, Lefkosa, T. R. N. Cyprus), pp. 503-508.

Leonard, T., Papasouliotis, O., and Main, I.G. (2001), A Poisson Model for Identifying Characteristic Size Effects in Frequency Data: Application to Frequency-Size Distributions for Global Earthquakes, “Starquakes,” and Fault Lengths, J. Geophys. Res. 106, 13473–13484, doi:10.1029/2000JB900429.

Maghsoudi, S., Cesca, S., Hainzl, S., Kaiser, D., Becker, D., and Dahm, T. (2013), Improving the Estimation of Detection Probability and Magnitude of Completeness in Strongly Heterogeneous Media, an Application to Acoustic Emission (AE), Geophys. J. Int. 193, 1556–1569, doi:10.1093/gji/ggt049.

Maghsoudi, S., Hainzl, S., Cesca, S., Dahm, T., and Kaiser, D. (2014), Identification and Characterization of Growing Large-Scale En-Echelon Fractures in a Salt Mine, Geophys. J. Int. 196, 1092-1105, doi:10.1093/gji/ggt443.

Mallika, K., Gupta, H., Shashidar, D., Purnachandra Rao, N., Yadav, A., Rohilla, S., Satyanarayana, H.V.S., and Srinagesh, D. (2012), Temporal Variation of b Value Associated With the M4 Earthquakes in the Reservoir-Triggered Seismic Environment of the Koyna–Warna Region, Western India, J. Seismol. 17, 189-195, doi:10.1007/s10950-012-9318-3.

Maxwell, S.C., Jones, M., Parker, R., Miong, S., Leaney, S., Dorval, D., D'Amico, D., Logel, J., Anderson, E., and Hammermaster, K. (2009), Fault activation during hydraulic fracturing, In SEG Houston International Exposition and Annual Meeting, pp. 1552–1556.

McGarr, A. (2000), Energy budgets of mining-induced earthquakes and their interactions with nearby stopes, Int J Rock Mech Min Sci 37, 437-443, doi:10.1016/S1365-1609(99)00118-5.

Mogi, K. (1967), Regional Variation in Magnitude-Frequency Relation of Earthquake, Bull. Earthq. Res. Inst. 45, 313-325.

Orlecka-Sikora, B., and Lasocki, S. (2005), Nonparametric characterization of mining induced seismic sources, In Rockbursts and Seismicity in Mines: Controlling Seismic Risk (eds. Potvin, Y., and Hudyma, M.) (Australian Centre for Geomechanics, Nedlands), pp. 555-560.

Page, M. T., Alderson, D., and Doyle, J. (2011), The Magnitude Distribution of Earthquakes Near Southern California Faults, J. Geophys. Res. 116, B12309, doi:10.1029/2010JB007933.

Pearson, E.S., and Hartley, H.O. (eds.) (1972), Biometrika Tables for Statisticians, Vol. 2 (Cambridge University Press, Cambridge).

Qin, C. (2005), Nonlinear Analysis of the Frequency-Magnitude Relationship in the Western Circum-Pacific Region, Pure Appl. Geophys. 162, 91–107, doi:10.1007/s00024-004-2581-7.

Schorlemmer, D., Wiemer, S., and Wyss, M. (2004), Earthquake Statistics at Parkfield: 1. Stationarity of b Values, J. Geophys. Res. 109, B12307, doi:10.1029/2004JB003234.

Schorlemmer, D., Wiemer, S., and Wyss, M. (2005), Variations in Earthquake-Size Distribution Across Different Stress Regimes, Nature 437, 539–542, doi:10.1038/nature04094.

Schwartz, D. P., and Coppersmith, K. J. (1984), Fault Behavior and Characteristic Earthquakes: Examples From the Wasatch and San Andreas Fault Zones, J. Geophys. Res. 89, 5681–5698, doi:10.1029/JB089iB07p05681.

Silverman, B.W. (1986), Density Estimation for Statistics and Data Analysis (Chapman and Hall, London).

Simpson, D.W., Leith, W.S., and Scholz, C.H. (1988), Two Types of Reservoir-induced Seismicity, Bull. Seismol. Soc. Am. 78, 2025-2040.

Stewart, R.D., and Spottiswoode, S.M. (1993), A technique for determining the seismic risk in deep-level mining, In Rockbursts and Seismicity in Mines (ed. Young, R.P.) (Balkema, Rotterdam), pp. 123-128.

Stock, C., and Smith, E. (2002a), Adaptive Kernel Estimation and Continuous Probability Representation of Historical Earthquake Catalogs, Bull. Seismol. Soc. Am. 92, 901-912.

Stock, C., and Smith, E. (2002b), Comparison between Seismicity Models Generated by Different Kernel Estimations, Bull. Seismol. Soc. Am. 92, 913-922.

Trifu, C.-I., Shumila, V., and Urbancic, T.I. (1997), Space-time analysis of microseismicity and its potential for estimating seismic hazard in mines, In Rockbursts and Seismicity in Mines (eds. Gibowicz, S.J. and Lasocki, S.) (Balkema, Rotterdam), pp. 295-298.

Tsapanos, T. (1990), b-value of Two Tectonic Parts in the Circum-Pacific Belt, Pure Appl. Geophys. 143, 229-242, doi:10.1007/BF00876999.

Tsukakoshi Y. (2010), Mapping the η-Value and the Test Results on the Hyper-Gutenberg–Richter Relation for Microseismicity Around the Japanese Islands, Bull. Seismol. Soc. Am. 100, 876–881, doi:10.1785/0120080307.

Utsu, T. (1965), A Method for Determination of the Value of b in Formula log N = a - b M Showing the Magnitude-frequency Relation for Earthquakes, Geophys. Bull. Hokkaido Univ. 13, 99-103.

Utsu, T. (1999), Representation and Analysis of the Earthquake Size Distribution: A Historical Review and Some New Approaches, Pure Appl. Geophys. 155, 509–535, doi:10.1007/s00024005027.

Volant, P., Grasso, J.R., Chatelain, J.L., and Frogneux, M. (1992), b-Value, Aseismic Deformation and Brittle Failure Within an Isolated Geological Object: Evidences From a Dome Structure Loaded by Fluid Extraction, Geophys. Res. Lett. 19, 1149–1152.

Wech, A.G., Creager, K.C., Houston, H., and Vidale, J.E. (2010), An Earthquake-Like Magnitude–Frequency Distribution of Slow Slip in Northern Cascadia, Geophys. Res. Lett. 37, L22310, doi:10.1029/2010GL044881.

Wesnousky, S.G. (1999), Crustal Deformation Processes and the Stability of the Gutenberg–Richter Relationship, Bull. Seismol. Soc. Am. 89, 1131-1137.

Wesnousky, S. G., Scholz, C. H., Shimazaki, K., and Matsuda, T. (1983), Earthquake Frequency Distribution and the Mechanics of Faulting, J. Geophys. Res. 88, 9331–9340, doi:10.1029/JB088iB11p09331.

Wiszniowski, J., Nguyen Van Giang, Plesiewicz, B., Lizurek, G., Lasocki, S., Dinh Quoc Van, Le Quang Khoi (2015), Preliminary Results of Anthropogenic Seismicity Monitoring in the Region of Song Tranh 2 Reservoir, Central Vietnam, Acta Geophysica 63, 843–862, doi:10.1515/acgeo-2015-0021.

Acknowledgments

This work was done in the framework of the project IS-EPOS: Digital Research Space of Induced Seismicity for EPOS Purposes (POIG.02.03.00-14-090/13-00), funded by the National Centre for Research and Development in the Operational Program Innovative Economy in the years 2013–2015. The work was also partially supported within statutory activities No 3841/E-41/S/2015 of the Ministry of Science and Higher Education of Poland. JAGUARS seismic data used in this study were provided by GFZ German Research Centre for Geosciences, Department 3: Geodynamics and Geomaterials, Section 3.2: Geomechanics and Rheology, Telegrafenberg, D14473 Potsdam, Germany, and are available on request. The authors thank M. Nakatani, J. Philipp, Y. Yabe, H. Ogasawara, and co-workers from the JAGUARS group for their work in installing and maintaining the JAGUARS network. AFdN thanks CNPq (National Counsel of Technological and Scientific Development, Brazil) for his fellowship.

Appendix 1

1.1 The Non-Parametric Kernel Estimator of Magnitude Distribution

Below, we present the non-parametric kernel estimator of the magnitude distribution in the version that we use in probabilistic seismic hazard analyses and related problems. A general description of kernel estimation of distribution functions can be found, e.g., in Silverman (1986).

The probability density function (PDF) of magnitude takes a non-zero value at the completeness level of the catalogue, \(M_{\text{c}}\). In such cases, the kernel estimation is performed on the doubled data set \(\left\{ y_{1}, y_{2}, \ldots, y_{2N} \right\} = \left\{ 2M_{\text{c}} - M_{1}, 2M_{\text{c}} - M_{2}, \ldots, 2M_{\text{c}} - M_{N}, M_{1}, M_{2}, \ldots, M_{N} \right\}\), where \(\{M_i\}\), i = 1, …, N, is the sample data.

When a magnitude distribution is believed to have no hard end point, the non-parametric kernel magnitude PDF estimator with the Gaussian kernel reads:

for \(M \ge M_{\text{c}}\), and zero otherwise, where h is the positive smoothing factor and \(\lambda_i\), i = 1, …, 2N, are the local bandwidth factors, which widen the kernels associated with data points from ranges where the data are sparse (e.g. Stock and Smith 2002a, b; Orlecka-Sikora and Lasocki 2005).

The magnitude cumulative distribution function (CDF) estimator reads:

for \(M \ge M_{\text{c}}\), and zero otherwise, where \(\varPhi(u) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{u} e^{-\xi^{2}/2}\, d\xi\) is the CDF of the standard Gaussian distribution and the other symbols are as above.

The smoothing factor, h, is selected so as to minimize the integrated squared difference between the estimated and actual probability densities (e.g. Bowman et al. 1984). Kijko et al. (2001) showed that, for the Gaussian kernel function, h is approximately a root of the equation:

The bandwidth factors are:

where

and
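The estimator described in this appendix can be sketched in Python as follows. Because the defining equations are not reproduced in this text, the sketch assumes the standard adaptive Gaussian kernel scheme of Silverman (1986): a fixed-bandwidth pilot estimate \(\tilde f\) on the reflected sample, local factors \(\lambda_i = [\tilde f(y_i)/g]^{-1/2}\) with g the geometric mean of the pilot values, and the CDF \(\hat F(M) = \frac{1}{N}\sum_{i=1}^{2N}\left[\varPhi\left(\frac{M - y_i}{h\lambda_i}\right) - \varPhi\left(\frac{M_{\text{c}} - y_i}{h\lambda_i}\right)\right]\) for \(M \ge M_{\text{c}}\). The smoothing factor is found by numerically minimizing the least-squares cross-validation score (Bowman et al. 1984), not by solving the approximate equation of Kijko et al. (2001). Function names are hypothetical.

```python
import numpy as np
from scipy import optimize, stats


def lscv_bandwidth(x):
    """Fixed Gaussian-kernel bandwidth minimising least-squares cross-validation.

    Uses O(N^2) memory. With rounded magnitudes (many ties) the optimum may
    sit at the lower search bound.
    """
    x = np.asarray(x, dtype=float)
    n = x.size
    d2 = (x[:, None] - x[None, :]) ** 2
    off_diag = ~np.eye(n, dtype=bool)

    def score(log_h):
        h = np.exp(log_h)
        # integral of f_hat^2: a Gaussian convolved with itself has variance 2 h^2
        int_f2 = np.exp(-d2 / (4 * h * h)).sum() / (2 * np.sqrt(np.pi) * n * n * h)
        # mean leave-one-out density at the data points
        loo = np.exp(-d2[off_diag] / (2 * h * h)).sum() / (np.sqrt(2 * np.pi) * n * (n - 1) * h)
        return int_f2 - 2 * loo

    s = x.std(ddof=1)
    res = optimize.minimize_scalar(score, bounds=(np.log(s / 100), np.log(s)),
                                   method="bounded")
    return float(np.exp(res.x))


def adaptive_kernel_cdf(mags, mc):
    """Return a callable F_hat(M) for magnitudes >= mc (reflection at mc)."""
    mags = np.asarray(mags, dtype=float)
    n = mags.size
    y = np.concatenate([2 * mc - mags, mags])       # doubled data set, 2N points
    h = lscv_bandwidth(mags)
    # fixed-bandwidth pilot density at each y_i (its normalisation cancels in lambda)
    pilot = stats.norm.pdf((y[:, None] - y[None, :]) / h).sum(axis=1)
    g = np.exp(np.mean(np.log(pilot)))               # geometric mean of pilot values
    lam = np.sqrt(g / pilot)                         # lambda_i = (pilot_i / g)^(-1/2)
    width = h * lam
    base = stats.norm.cdf((mc - y) / width)

    def cdf(m):
        m = np.atleast_1d(np.asarray(m, dtype=float))
        f = (stats.norm.cdf((m[:, None] - y[None, :]) / width) - base).sum(axis=1) / n
        return np.where(m >= mc, f, 0.0)

    return cdf
```

Because each data point and its mirror image share the same \(\lambda_i\), each mirrored pair contributes exactly 1 to the sum as M grows, so dividing by N (not 2N) makes \(\hat F\) run from 0 at \(M_{\text{c}}\) to 1.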

When a hard end point of the magnitude distribution, \(M_{\max}\), is assumed, the PDF and CDF magnitude estimators take the form:

for \(M_{\text{c}} \le M \le M_{\max}\); \(\hat{f}(M) = \hat{F}(M) = 0\) for \(M < M_{\text{c}}\), and \(\hat{f}(M) = 0\), \(\hat{F}(M) = 1\) for \(M > M_{\max}\). We estimate \(M_{\max}\) using the generic formula (Cooke 1979; Kijko and Graham 1998) in the version recommended by Lasocki and Urban (2011):

where \(M_{\max}^{\text{obs}}\) is the largest value in the data sample. This \(M_{\max}\) estimator is biased; nomograms for estimating the bias can be found in Lasocki and Urban (2011).
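The generic equation \(M_{\max} = M_{\max}^{\text{obs}} + \int_{M_{\text{c}}}^{M_{\max}} [F(M)]^{N}\, dM\) (Kijko and Graham 1998) can be solved by fixed-point iteration, as sketched below. Assumptions: F is the estimated CDF truncated at the current iterate, i.e. \(\hat F(M)/\hat F(M_{\max})\); any CDF callable can be passed (for instance a kernel estimate); the function name, tolerance and iteration cap are hypothetical, and the variant recommended by Lasocki and Urban (2011) may differ in detail.

```python
import numpy as np
from scipy import integrate


def mmax_generic(cdf, mc, m_obs_max, n, tol=1e-4, max_iter=200):
    """Fixed-point solution of Mmax = Mobs + int_{Mc}^{Mmax} [F(M)/F(Mmax)]^N dM.

    `cdf` is any callable returning the (untruncated) CDF estimate at a scalar M.
    If the iteration has not converged after max_iter steps (possible when the
    data do not support a finite end point), the last iterate is returned.
    """
    def f(m):
        return float(np.asarray(cdf(m), dtype=float).reshape(-1)[0])

    mmax = float(m_obs_max)
    for _ in range(max_iter):
        f_top = f(mmax)  # normalises the CDF so it reaches 1 at the current Mmax
        integral, _ = integrate.quad(lambda m: (f(m) / f_top) ** n, mc, mmax, limit=200)
        new = m_obs_max + integral
        if abs(new - mmax) < tol:
            return new
        mmax = new
    return mmax
```

A convenient check: for a uniform CDF on [0, 1], the equation reduces to \(M_{\max} = M_{\max}^{\text{obs}} + M_{\max}/(N+1)\), whose solution is \(M_{\max}^{\text{obs}}(N+1)/N\).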

Cite this article

Urban, P., Lasocki, S., Blascheck, P. et al. Violations of Gutenberg–Richter Relation in Anthropogenic Seismicity. Pure Appl. Geophys. 173, 1517–1537 (2016). https://doi.org/10.1007/s00024-015-1188-5

DOI: https://doi.org/10.1007/s00024-015-1188-5