Abstract

We prove that for a sequence of nested sets \(\{U_n\}\) with \(\Lambda = \cap _n U_n\) a measure zero set, the localized escape rate converges to the extremal index of \(\Lambda \), provided that the dynamical system is \(\phi \)-mixing at polynomial speed. We also establish the general equivalence between the local escape rate for entry times and the local escape rate for returns. Examples include a dichotomy for periodic and non-periodic points, Cantor sets on the interval, and submanifolds of Anosov diffeomorphisms on surfaces.

1 Introduction

In recent years, there has been an increasing interest in open dynamical systems, which are dynamical systems with an invariant measure where one places a trap or hole in the phase space, and looks at the decay rate of the measure of points that are not caught by the trap up to some time (the survival set). This rate is known to be related to the rate of decay of correlations for the system (see [16]). When the correlations decay exponentially fast, the decay rate for the measure of the survival set is typically exponential and depends on the location and size of the trap. We refer the reader to the review article [5] for a general overview of this topic.

When the decay rate for the measure of the survival set is normalized by the measure of the trap, one obtains the localized escape rate as the measure of the trap goes to zero. Such problems are loosely related to the entry times and return times distributions, but this similarity does not allow one to deduce the limiting statistics of one from the other, since the limits are taken in different ways, and the rate of convergence for the entry times to its limiting distribution is usually insufficient for the study of escape rates.

In the past, local escape rates have been associated with either metric holes centered at a point whose radius decreased to zero or, in the presence of partitions, with cylinder sets which decrease to a single point. In this case, a dichotomy has been established for many systems which shows the local escape rate to be equal to one at non-periodic points and equal to the extremal index at periodic points. See the classical work [8] for conformal attractors, [2, 4] for the transfer operator approach for interval maps, and [14] for a probabilistic approach which applies to systems in higher dimension. This mirrors the behavior of the limiting return times distributions that are Poisson at non-periodic points, and Pólya–Aeppli compound Poisson at periodic points in which case the compounded geometric distribution has the weights given by the extremal index \(\theta \in (0,1)\). See [10, 12].

In this paper, we will generalize the concept of localized escape rates to the case when the limiting set of the shrinking neighborhoods is no longer a point, periodic or non-periodic, but is instead allowed to be any null set. One of the key motivations is the case when the holes are opened around some lower dimensional submanifold in the phase space. We will use the recent progress developed in [13] which shows that the limiting return times distribution at null sets is compound Poisson in a more general sense where the associated parameters form a spectrum that is determined by the limiting cluster size distribution (for this see below the coefficients \(\alpha _k\)). Unlike the singleton case, however, the general relation between local escape rate and extremal index for null sets has not been discussed before.

We would like to point out that the conventional transfer operator method studied in [2] following the general setup in [16] (see also the book [19] and the references therein) heavily relies on the conformal structure and the fact that in dimension one, the indicator functions of the geometric balls \(B_r(x)\) have bounded BV norm, uniform in r. This makes it difficult to generalize the results in [2] to the case where the limiting set \(\Lambda \) is a non-trivial null set, or to higher dimensional systems (especially those that are invertible, where the Banach spaces on which the transfer operator acts are quite complicated). Our approach in this paper uses \(\phi \)-mixing to avoid those problems. In addition, we only assume the system to be \(\phi \)-mixing at polynomial speed (surprisingly, this is enough to deduce that the escape rate is exponential!) as opposed to [2, 16] where the unperturbed transfer operator needs to have a spectral gap. This assumption may still not be optimal. However, we believe that the same results do not hold for \(\alpha \)-mixing systems in general. See the counterexamples in [2] for systems modeled by Young towers with first return map and polynomial tail, and note that such systems are \(\alpha \)-mixing at the same rate.

2 Statement of Results

Throughout this paper, we will assume that \((\mathbf{{M}}, T, {{\mathcal {B}}}, \mu )\) is a measure preserving system on some compact metric space \(\mathbf{{M}}\), with \({{\mathcal {B}}}\) the Borel \(\sigma \)-algebra. Unless otherwise specified, T is assumed to be non-invertible, although our result also holds in the invertible case, see Remark 2.5 and Theorems 4.12, 5.2 below. Let \({\mathcal {A}}\) be a measurable partition of \(\mathbf{{M}}\) (finite or countable). Denote by \({\mathcal {A}}^n=\bigvee _{j=0}^{n-1}T^{-j}{\mathcal {A}}\) its nth join (in the invertible case, see Remark 2.5). Then \({\mathcal {A}}^n\) is a partition of \(\mathbf{{M}}\) and its elements are called n-cylinders. We assume that \({\mathcal {A}}\) is generating, that is, \(\bigcap _nA_n(x)=\{x\}\) for every x, where \(A_n(x)\) denotes the n-cylinder containing x.

Definition 2.1

(i) The measure \(\mu \) is left \(\phi \)-mixing with respect to \({\mathcal {A}}\) if

$$\begin{aligned} |\mu (A \cap T^{-n-k} B) - \mu (A)\mu (B)| \le \phi _L(k)\mu (A) \end{aligned}$$

for all \(A \in \sigma ({\mathcal {A}}^n)\), \(n\in {\mathbb {N}}\) and \(B \in \sigma (\bigcup _{j\ge 1} {\mathcal {A}}^j )\), where \(\phi _L(k)\) is a decreasing function which converges to zero as \(k\rightarrow \infty \). Here \(\sigma ({\mathcal {A}}^n)\) is the \(\sigma \)-algebra generated by n-cylinders.

(ii) The measure \(\mu \) is right \(\phi \)-mixing w.r.t. \({\mathcal {A}}\) if

$$\begin{aligned} |\mu (A \cap T^{-n-k} B) - \mu (A)\mu (B)| \le \phi _R(k)\mu (B) \end{aligned}$$

for all \(A \in \sigma ({\mathcal {A}}^n)\), \(n\in {\mathbb {N}}\) and \(B \in \sigma (\bigcup _{j\ge 1} {\mathcal {A}}^j )\), where \(\phi _R(k)\searrow 0\).

(iii) The measure \(\mu \) is \(\psi \)-mixing w.r.t. \({\mathcal {A}}\) if

$$\begin{aligned} |\mu (A \cap T^{-n-k} B) - \mu (A)\mu (B)| \le \psi (k)\mu (A)\mu (B) \end{aligned}$$

for all \(A \in \sigma ({\mathcal {A}}^n)\), \(n\in {\mathbb {N}}\) and \(B \in \sigma (\bigcup _{j\ge 1} {\mathcal {A}}^j )\), where \(\psi (k)\searrow 0\). Clearly \(\psi \)-mixing implies both left and right \(\phi \)-mixing with \(\phi (k) = \psi (k)\).

Remark 2.2

If it is clear from context which type of mixing we are referring to (as is always the case in this paper), then we will suppress the subscripts R, L.
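As an illustration of Definition 2.1 that is not taken from the paper, consider a stationary, irreducible and aperiodic finite-state Markov chain with transition matrix P and stationary vector \(\pi \), and let \({\mathcal {A}}\) be the partition by the state at time 0. For an n-cylinder A ending in state i and a cylinder B starting in state j one has \(\mu (A\cap T^{-n-k}B)=\mu (A)\mu (B)P^{k+1}(i,j)/\pi _j\), so \(\psi (k)\) may be taken to be \(\max _{i,j}|P^{k+1}(i,j)/\pi _j-1|\). The sketch below computes this quantity; the function name and the example matrix are hypothetical choices made only for this illustration.

```python
import numpy as np

def psi_coefficients(P: np.ndarray, kmax: int) -> np.ndarray:
    """psi(k) = max_{i,j} |P^{k+1}(i,j)/pi_j - 1| for k = 0..kmax.

    Assumes P is an irreducible, aperiodic stochastic matrix (illustrative setup).
    """
    # Stationary vector: left eigenvector of P for the eigenvalue 1.
    eigvals, eigvecs = np.linalg.eig(P.T)
    pi = np.real(eigvecs[:, np.argmax(np.real(eigvals))])
    pi = pi / pi.sum()

    psi = []
    Pk = P.copy()  # holds P^{k+1}, starting with k = 0
    for _ in range(kmax + 1):
        psi.append(np.max(np.abs(Pk / pi[None, :] - 1.0)))
        Pk = Pk @ P
    return np.array(psi)

# Two-state example: the second eigenvalue is 0.7, so psi decays geometrically.
P = np.array([[0.9, 0.1],
              [0.2, 0.8]])
psi = psi_coefficients(P, 5)
print(psi)
```

In this example the coefficients decay geometrically at the rate of the second eigenvalue, which is much faster than the polynomial rate required in the theorems below.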

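We write, for any subset \(U\subset \mathbf{{M}}\),

$$\begin{aligned} \tau _U(x) = \min \{j\ge 1: T^j(x)\in U\} \end{aligned}$$

the first entry time to the set U. Then \(\tau _U|_U\) is the first return time for points in U. We can now define the escape rate into U by

$$\begin{aligned} \rho (U) = \lim _{t\rightarrow \infty }\frac{1}{t}\left| \log {\mathbb {P}}(\tau _U>t)\right| \end{aligned}$$

whenever the limit exists. It captures the exponential decay rate for the set of points whose orbits have not visited U before time t. Observe that if \(U\subset U'\) then \({\mathbb {P}}(\tau _{U'}>t)\le {\mathbb {P}}(\tau _{U}>t)\) and consequently \(\rho (U)\le \rho (U')\). We define the conditional escape rate as

$$\begin{aligned} \rho _U(U) = \lim _{t\rightarrow \infty }\frac{1}{t}\left| \log {\mathbb {P}}_U(\tau _U>t)\right| \end{aligned}$$

where \({\mathbb {P}}_U\) is the conditioned measure on U.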

We are particularly interested in the asymptotic behavior of \(\rho (U)\) along a sequence of positive measure sets \(\{U_n\}\) whose measure goes to zero. For this purpose, we call \(\{U_n\}\) a nested sequence of sets if \(U_{n+1}\subset U_n\), and if \(\lim _n\mu (U_n) = 0\). For the measure zero set \(\Lambda = \cap _n U_n\), we define the localized escape rate at \(\Lambda \) as:

$$\begin{aligned} \rho (\Lambda ,\{U_n\}) = \lim _{n\rightarrow \infty }\frac{\rho (U_n)}{\mu (U_n)} \end{aligned} \tag{1}$$

provided that the limit exists. We will show that under certain conditions, the localized escape rate of \(\Lambda \) exists and does not depend on the choice of \(U_n\).
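To make the normalized ratio \(\rho (U_n)/\mu (U_n)\) concrete, here is a minimal numerical sketch in a setting that is an assumption of this example rather than one treated in the text: the full shift on two symbols with the (1/2, 1/2) Bernoulli measure, with the hole given by a single cylinder. The number of symbol strings avoiding the word grows like \(\lambda ^t\), where \(\lambda \) is the leading eigenvalue of the transition matrix of the shift with the word forbidden, so the escape rate is \(\log 2-\log \lambda \). The function name is hypothetical.

```python
import itertools
import numpy as np

def escape_rate_full_shift(word: str) -> float:
    """Escape rate into the cylinder [word] for the full 2-shift with the
    (1/2, 1/2) Bernoulli measure (illustrative setting, not from the paper)."""
    n = len(word)
    # States are binary words of length n-1; a step appends one symbol.
    states = ["".join(bits) for bits in itertools.product("01", repeat=n - 1)]
    index = {s: i for i, s in enumerate(states)}
    A = np.zeros((len(states), len(states)))
    for s in states:
        for b in "01":
            if s + b != word:  # forbid completing the hole word
                A[index[s], index[(s + b)[1:]]] = 1.0
    lam = max(np.linalg.eigvals(A).real)  # Perron eigenvalue
    return float(np.log(2.0) - np.log(lam))

n = 8
mu = 2.0 ** (-n)  # Bernoulli measure of any n-cylinder
ratio_fixed_point = escape_rate_full_shift("0" * n) / mu            # hole around 000...
ratio_nonperiodic = escape_rate_full_shift("1" + "0" * (n - 1)) / mu  # hole around 1000...
print(ratio_fixed_point, ratio_nonperiodic)
```

In this toy setting the first ratio is close to 1/2 and the second is close to 1, in line with the dichotomy between periodic and non-periodic points recalled in the Introduction.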

2.1 Local Escape Rate for Unions of Cylinders

First, we consider the case where each \(U_n\) is a union of \(\kappa _n\)-cylinders, for some non-decreasing sequence of integers \(\{\kappa _n\}\).

We make some assumptions on the sizes of the nested sequence \(\{U_n\}\). For each n and \(j\ge 1\), we define \({{\mathcal {C}}}_j(U_n) =\{A\in {{\mathcal {A}}}^j,A\cap U_n\ne \emptyset \}\) the collection of all j-cylinders that have non-empty intersection with \(U_n\). Then, we write

$$\begin{aligned} U_n^j = \bigcup _{A\in {{\mathcal {C}}}_j(U_n)}A \end{aligned}$$

for the approximation of \(U_n\) by j-cylinders from outside. For each fixed j, \(\{U_n^j\}_n\) is also nested, that is, \(U_{n+1}^j\subset U_n^j\). Obviously, we have \(U_n\subset U_n^j\) for all j, and \(U_n=U_n^j\) if \(j\ge \kappa _n\).

Definition 2.3

A nested sequence \(\{U_n\in \sigma ({{\mathcal {A}}}^{\kappa _n})\}\) is called a good neighborhood system, if:

(1) \(\kappa _n\nearrow \infty \) and \(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\) for some \(\varepsilon \in (0,1)\);

(2) there exist \(C>0\) and \(p'>1\) such that \(\mu (U^j_n)\le \mu (U_n) + Cj^{-p'}\) for all \(j<\kappa _n\).

2.1.1 Local Escape Rate and the Extremal Index

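We make the following definitions, following [13].

For a positive measure set \(U\subset \mathbf{{M}}\), we define the higher-order entry times recursively:

$$\begin{aligned} \tau ^1_U = \tau _U, \qquad \tau ^j_U(x) = \tau ^{j-1}_U(x) + \tau _U\left( T^{\tau ^{j-1}_U(x)}(x)\right) \quad \text {for } j\ge 2. \end{aligned}$$

For simplicity, we write \(\tau ^0_U = 0\) on U.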

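For a nested sequence \(\{U_n\}\), define

$$\begin{aligned} {\hat{\alpha }}_\ell (K,U_n) = {\mathbb {P}}_{U_n}\left( \tau ^{\ell -1}_{U_n}\le K\right) , \end{aligned}$$

i.e., \({\hat{\alpha }}_\ell (K,U_n)\) is the conditional probability of having at least \((\ell -1)\) returns to \(U_n\) before time K. We shall assume that the limit \({\hat{\alpha }}_\ell (K)=\lim _{n\rightarrow \infty }{\hat{\alpha }}_\ell (K,U_n)\) exists for \(K\in {{\mathbb {N}}}\) large enough and every \(\ell \ge 1\). By monotonicity the limits

$$\begin{aligned} {\hat{\alpha }}_\ell = \lim _{K\rightarrow \infty }{\hat{\alpha }}_\ell (K) \end{aligned} \tag{3}$$

exist as \({\hat{\alpha }}_\ell (K)\le {\hat{\alpha }}_\ell (K')\le 1\) for all \(K\le K'\). Since we put \(\tau ^0_U = 0\), it follows that \(\hat{\alpha }_1 = 1\). We will see later that the existence of the limits defining \({\hat{\alpha }}_{\ell }\) implies the existence of the following limits:

$$\begin{aligned} \alpha _\ell = \lim _{K\rightarrow \infty }\lim _{n\rightarrow \infty }{\mathbb {P}}_{U_n}\left( \tau ^{\ell -1}_{U_n}\le K<\tau ^{\ell }_{U_n}\right) . \end{aligned} \tag{4}$$

\(\alpha _1\in [0,1]\) is generally known as the extremal index (EI). See the discussion in Freitas et al. [9].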
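The quantities \({\hat{\alpha }}_\ell (K,U_n)\) can be estimated by simulation in simple cases. The following is a minimal Monte Carlo sketch under assumptions made only for this example: the full shift on two symbols with the (1/2, 1/2) Bernoulli measure and \(U_n\) the cylinder of n zeros around the fixed point \(000\ldots \). The function name, sample size and seed are hypothetical.

```python
import numpy as np

def alpha_hat(n: int, K: int, max_ell: int, samples: int, seed: int = 0) -> np.ndarray:
    """Estimate alpha_hat_ell(K, U_n) for ell = 1..max_ell, where U_n = [0^n]
    in the full 2-shift with the (1/2, 1/2) Bernoulli measure (illustrative)."""
    rng = np.random.default_rng(seed)
    # Sample points of U_n: n leading zeros followed by K fair coin flips.
    tail = rng.integers(0, 2, size=(samples, K))
    x = np.concatenate([np.zeros((samples, n), dtype=tail.dtype), tail], axis=1)
    # hits[:, j-1] is True when T^j(x) lies in U_n, i.e. x[j:j+n] is all zeros.
    hits = np.stack([x[:, j:j + n].sum(axis=1) == 0 for j in range(1, K + 1)], axis=1)
    returns = hits.sum(axis=1)  # number of returns to U_n at times 1..K
    # tau^{ell-1} <= K exactly when there are at least ell-1 returns by time K.
    return np.array([(returns >= ell - 1).mean() for ell in range(1, max_ell + 1)])

a_hat = alpha_hat(n=12, K=20, max_ell=4, samples=20000)
alpha_1 = a_hat[0] - a_hat[1]  # alpha_1 = alpha_hat_1 - alpha_hat_2
print(a_hat, alpha_1)
```

For this fixed point the estimates are close to \(1, 1/2, 1/4, 1/8\), since each further return requires one more zero, and the resulting estimate of \(\alpha _1\) is close to 1/2.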

The next theorem shows that the escape rate is indeed given by the extremal index.

Theorem A

Assume that \(T:\mathbf{{M}}\rightarrow \mathbf{{M}}\) preserves a probability measure \(\mu \) that is right \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\) for some \(C>0\) and \(p>1\), and \(\{U_n\}\) is a good neighborhood system such that \(\{\hat{\alpha }_{\ell }\}\) defined in (3) exists, and satisfies \(\sum _{\ell }\ell {\hat{\alpha }}_{\ell }<\infty \).

Then \(\alpha _1\) defined by (4) exists, and the localized escape rate at \(\Lambda \) exists and satisfies

$$\begin{aligned} \rho (\Lambda ,\{U_n\}) = \alpha _1. \end{aligned}$$

Remark 2.4

Theorem A has a similar formulation for left \(\phi \)-mixing systems. See Remark 4.7 and Theorems 4.12, 5.2 for more detail.

For Gibbs–Markov systems (for the precise definition, see Sect. 3) the same result is true:

Theorem B

Assume that \(T:\mathbf{{M}}\rightarrow \mathbf{{M}}\) is a Gibbs–Markov system with respect to the partition \({{\mathcal {A}}}\). Let \(\{U_n\}\) be a good neighborhood system such that \(\{\hat{\alpha }_{\ell }\}\) defined in (3) exists, and satisfies \(\sum _{\ell }\ell {\hat{\alpha }}_{\ell }<\infty \).

Then \(\alpha _1\) defined by (4) exists. Furthermore, the localized escape rate at \(\Lambda \) exists and satisfies

$$\begin{aligned} \rho (\Lambda ,\{U_n\}) = \alpha _1. \end{aligned}$$

Remark 2.5

If T is invertible, then one has to define the n-join by

$$\begin{aligned} {{\mathcal {A}}}^n = \bigvee _{j=-n}^{n}T^{-j}{\mathcal {A}}. \end{aligned}$$

In this case it is useful to write, for \(m,n\in {{\mathbb {Z}}}\), \({{\mathcal {A}}}^n_m: = \bigvee _{j=m}^{n}T^{-j}{\mathcal {A}}\). In particular we have \({{\mathcal {A}}}^n = {{\mathcal {A}}}_{-n}^n\). The \(\phi \) and \(\psi \)-mixing properties are defined by the same formulas. For integers \(m,m',n,n'>0\), if \(U\in {{\mathcal {A}}}^n_{-m}, V \in {{\mathcal {A}}}^{n'}_{-m'}\) then for \(k>n+m'\), we have \(\mu (U\cap T^{-k} V) = \mu (T^{-m}U \cap T^{-k-m} V)\) where \(T^{-m}U\in {{\mathcal {A}}}_0^{m+n}\), \(T^{-k-m}V \in {{\mathcal {A}}}_{k+m-m'}^{k+m+n'}\). Note that \(k+m-m'>n+m > 0\), so the estimate can be treated in the same way as the non-invertible case with only minor adjustments. However, the approximation \(U^j_n\) needs to be handled with care. See Remark 4.8 for a potential problem and the treatment.

2.1.2 In the Absence of Short Returns

We will see below that when points in \(\{U_n\}\) do not return to \(U_n\) “too soon”, then \(\alpha _1 = 1\). To formulate this, we define the period of U as:

$$\begin{aligned} \pi (U) = \min \{k>0: T^{-k}U\cap U\ne \emptyset \}, \end{aligned}$$

and the essential period of U by:

$$\begin{aligned} \pi _{{\text {ess}}}(U) = \min \{k>0: \mu (T^{-k}U\cap U)>0\}. \end{aligned}$$

\(\pi \) and \(\pi _{{\text {ess}}}\) mark the shortest return of points in U. Note that \(\pi (U)\le \pi _{{\text {ess}}}(U)\) for all measurable \(U\subset \mathbf{{M}}\), and equality holds if T is continuous, U is open and \(\mu \) has full support.
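For a concrete feel for the period, consider again an illustrative setting that is an assumption of this example: the full shift with a Bernoulli measure of full support, and U a cylinder given by a finite word w of length n. There \(T^{-k}U\cap U\) is non-empty exactly when w overlaps its own shift by k, and every non-empty intersection of cylinders has positive measure, so \(\pi (U)=\pi _{{\text {ess}}}(U)\) is the smallest self-overlap shift of w. The helper name is hypothetical.

```python
def cylinder_period(word: str) -> int:
    """Smallest k >= 1 such that the cylinder [word] meets its k-th preimage
    in the full shift (illustrative setting, not from the paper)."""
    n = len(word)
    for k in range(1, n):
        # [word] and T^{-k}[word] are compatible iff word overlaps itself by shift k.
        if word[k:] == word[: n - k]:
            return k
    # For k >= n the two cylinders constrain disjoint coordinates.
    return n

# Cylinders around the fixed point 000... keep period 1, while cylinders
# around the non-periodic point 1000... have period equal to their length.
print([cylinder_period("0" * n) for n in range(1, 6)])
print([cylinder_period("1" + "0" * (n - 1)) for n in range(1, 6)])
```

In the second family the period tends to infinity with n, which is the kind of behavior assumed in Corollary C.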

Corollary C

Let \((\mathbf{{M}}, T, {{\mathcal {B}}}, \mu )\) be a measure preserving system. Assume that \(\{U_n\}\) is a good neighborhood system with \(\pi _{{\text {ess}}}(U_n) \rightarrow \infty \), and \((T,\mu ,{{\mathcal {A}}})\) satisfies one of the following two assumptions:

(1) either \(\mu \) is right \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\) for some \(p>1\);

(2) or T is Gibbs–Markov;

then the localized escape rate at \(\Lambda \) exists and satisfies

$$\begin{aligned} \rho (\Lambda ,\{U_n\}) = 1. \end{aligned}$$

Combining Corollary C with [21, Proposition 6.3], we have:

Corollary D

The conclusion of Corollary C holds if the assumption “\(\pi _{{\text {ess}}}(U_n)\rightarrow \infty \)” is replaced by the following assumptions:

(1) T is continuous, \(\Lambda = \cap _nU_n = \cap _n {\overline{U}}_n\);

(2) \(\Lambda \) intersects every forward orbit at most once, that is, for every \(x\in \Lambda \) we have \(\Lambda \cap \{T^k(x) : k\ge 1\}=\emptyset \).

The proof of both corollaries can be found at the end of Sect. 4.2.

2.2 From Cylinders to Open Sets: Exceedance Rate for the Extreme Value Process

Next, we deal with the case where \(\{U_n\}\) consists of open sets. For this purpose, we consider an observable

$$\begin{aligned} {\varphi }:\mathbf{{M}}\rightarrow {{\mathbb {R}}}\cup \{+\infty \} \end{aligned}$$

which is continuous except when \({\varphi }(x)=+\infty \), such that the maximal value of \({\varphi }\), which could be positive infinity, is achieved on a \(\mu \) measure zero closed set \(\Lambda \). Then we consider the process generated by the dynamics of T and the observable \(\varphi :\)

$$\begin{aligned} X_k = {\varphi }\circ T^k. \end{aligned}$$

Let \(\{u_n\}\) be a non-decreasing sequence of real numbers. We will think of \(u_n\) as a sequence of thresholds, and the event \(\{X_k>u_n\}\) marks an exceedance above the threshold \(u_n\). Also denote by \(U_n\) the open set

$$\begin{aligned} U_n = \{x\in \mathbf{{M}}: {\varphi }(x)>u_n\}. \end{aligned} \tag{5}$$

It is clear that \(\{U_n\}\) is a nested sequence of sets.

We are interested in the set of points where \(X_k(x)\) remains under the threshold \(u_n\) before time t. For this purpose, we put

and

Finally, define the exceedance rate of \({\varphi }\) along the thresholds \(\{u_n\}\) as:

We will make the following assumption on the shape of \(U_n\). For each \(r_n>0\), we approximate \(U_n\) by two open sets (‘o’ and ‘i’ stand for ‘outer’ and ‘inner’):

It is easy to see that

The following assumption requires \(U_n\) to be well approximable by \(U^{i/o}_n\).

Assumption 1

There exists a positive, decreasing sequence of real numbers \(\{r_n\}\) with \(r_n\rightarrow 0\), such that

Here o(1) means the term goes to zero under the limit \(n\rightarrow \infty \).

Theorem E

Assume that

(1) either \(\mu \) is right \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\), \(p>1\);

(2) or \((T,\mu ,{{\mathcal {A}}})\) is a Gibbs–Markov system.

Let \({\varphi }:\mathbf{{M}}\rightarrow {{\mathbb {R}}}\cup \{+\infty \}\) be a continuous function achieving its maximum on a measure zero set \(\Lambda \). Let \(\{u_n\}\) be a non-decreasing sequence of real numbers with \(u_n\nearrow \sup {\varphi }\), such that the open sets \(U_n\) defined by (5) satisfy Assumption 1 for a sequence \(r_n\) that decreases to 0. Assume \(\{\hat{\alpha }_{\ell }\}\), defined by (3), exist and satisfy \(\sum _{\ell }\ell \hat{\alpha }_{\ell }<\infty \). Let \(\kappa _n\) be the smallest positive integer for which \({\text {diam}}{{\mathcal {A}}}^{\kappa _n}\le r_n\) and assume:

(a) \(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\) for some \(\varepsilon \in (0,1)\);

(b) \(U_n\) has small boundary: there exist \(C>0\) and \(p'>1\), such that \(\mu \left( \bigcup _{A\in {{\mathcal {A}}}^j, A\cap B_{r_n}(\partial U_n) \ne \emptyset }A\right) \le C j^{-p'}\) for all n and \(j\le \kappa _n\).

Then the exceedance rate of \({\varphi }\) along \(\{u_n\}\) exists and satisfies

2.3 Conditional Escape Rate: A General Theorem

Our next theorem deals with the relation between the escape rate and the conditioned escape rate.

Theorem F

For any measure preserving ergodic system \((\mathbf{{M}}, T, {{\mathcal {B}}}, \mu )\) and any positive measure set \(U\subset \mathbf{{M}}\), we have

$$\begin{aligned} \rho (U) = \rho _U(U), \end{aligned}$$

assuming one of them exists and is positive.

Note that this theorem does not rely on the mixing assumption nor on any information on the geometry of U. In particular, if one defines the localized conditional escape rate at \(\Lambda \) as

$$\begin{aligned} \rho _{\Lambda }(\Lambda , \{U_n\}) = \lim _{n\rightarrow \infty }\frac{\rho _{U_n}(U_n)}{\mu (U_n)}, \end{aligned}$$

then we immediately have \(\rho (\Lambda , \{U_n\}) = \rho _{\Lambda }(\Lambda , \{U_n\})=\alpha _1\) under the assumptions of Theorem A or B.

2.4 Escape Rate on Young Towers With First Return Map and Exponential Tail

Young towers, also known as Gibbs–Markov–Young structures, were first introduced by Young in [22, 23]. A Young tower can be viewed as a discrete time suspension \((\Omega , T,\mu )\) over a Gibbs–Markov system \(({\tilde{\Omega } }, {\tilde{T} }, {\tilde{\mu } })\), such that the roof function R (in this case, it is usually called the return time function) is integrable with respect to the measure \({\tilde{\mu } }\). A dynamical system \((\mathbf{{M}},T)\) is modeled by a Young tower if there exists a semi-conjugacy \(\Pi :\Omega \rightarrow \mathbf{{M}}\) that is one-to-one on the base of the tower \({\tilde{\Omega } }\). In this case, we say that the tower is defined using the first return map if \(R(x) = \tau _{\Pi ({\tilde{\Omega } })}(\Pi (x))\) is indeed the first return time to \(\Pi ({\tilde{\Omega } })\).

To simplify notation, we will write \(\lesssim \) to mean that the inequality holds up to some constant \(C>0\), uniform in n.

Theorem G

Assume that T is a \(C^{2}\) map modeled by a Young tower defined using the first return map, such that the return time function R has an exponential tail: there exists \(\lambda \in (0,1)\) such that

Let \(\{U_n\subset {\tilde{\Omega } }\}\) be a nested sequence of sets for which the base system \(({\tilde{\Omega } }, {\tilde{T} }, {\tilde{\mu } })\) satisfies the assumptions of Theorem B in the cylinder case, or Theorem E in the open set case. Then the localized escape rate at \(\Lambda =\cap _n U_n\) exists and satisfies

We would like to remark that similar results for escape rate under suspension have been obtained in [2] and [19] under slightly different settings.

2.5 Organization of the Paper

This paper is organized in the following way. In Sect. 3, we state some properties that surround the parameters of very short returns whose presence is unaffected by the Kac timescale. In Sect. 4, we prove the main results for cylinder approximations of the zero measure target set \(\Lambda \). One crucial result here is Lemma 4.6 which yields the extremal index for the near zero time limiting distribution for entry times (as opposed to return times). Those results are then used in Sect. 5 to extend them to the case when the approximating sets are metric neighborhoods. In Sect. 6, we provide a general argument which shows that the local escape rate for entry times is the same as the local escape rate for returns. In Sect. 7, we show that the local escape rate persists for the induced map. Section 8 is dedicated to examples.

3 Preliminaries

3.1 Return and Entry Times Along a Nested Sequence of Sets

In this section, we recall the general results in [13] on the number of entries to an arbitrary null set \(\Lambda \) within a cluster.

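Given a sequence of nested sets \(U_n,n=1,2,\ldots \) with \(U_{n+1}\subset U_n\), \(\cap _n U_n=\Lambda \) and \(\mu (U_n)\rightarrow 0\), we will fix a large integer \(K>0\) (which will be sent to infinity later) and assume that the limit

$$\begin{aligned} {\hat{\alpha }}_\ell (K) = \lim _{n\rightarrow \infty }{\mathbb {P}}_{U_n}\left( \tau ^{\ell -1}_{U_n}\le K\right) \end{aligned} \tag{7}$$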

exists for K sufficiently large and for every \(\ell \in {{\mathbb {N}}}\). By definition \({\hat{\alpha }}_\ell (K)\ge {\hat{\alpha }}_{\ell +1}(K)\) for all \(\ell \), and \({\hat{\alpha }}_1(K)=1\) due to our choice of \(\tau ^0 = 0\) on U. Also note that \({\hat{\alpha }}_\ell (K)\) is non-decreasing in K for every \(\ell \). As a result, we have for every \(\ell \ge 1\):

Note that in the definition of \(\hat{\alpha }\), the cut-off for the short return time K does not depend on the set \(U_n\). Another way to study the short return properties for the nested sequence \(U_n\) is to look at

for some increasing sequence of integers \(\{s_n\}\), with \(s_n\mu (U_n)\rightarrow 0\) as \(n\rightarrow \infty \). This is the approach taken by Freitas et al. in [11]. It is proven that for many systems (including Gibbs–Markov systems and Young towers with polynomial tails), we have \({\hat{\beta }}_\ell =\hat{\alpha }_{\ell }\). See [21, Proposition 5.4 and 6.2].

To demonstrate the power of desynchronizing K from n, recall that for any set U, the essential period of U is given by:

$$\begin{aligned} \pi _{{\text {ess}}}(U) = \min \{k>0: \mu (T^{-k}U\cap U)>0\}. \end{aligned}$$

Then the following lemma can be easily verified using the definition of \(\hat{\alpha }\):

Lemma 3.1

Let \(\{U_n\}\) be a sequence of nested sets. Assume that \(\pi _{{\text {ess}}}(U_n)\rightarrow \infty \) as \(n\rightarrow \infty \), then \(\hat{\alpha }_\ell \) exists and equals zero for all \(\ell \ge 2\).

Proof

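For each K, one can take \(n_0\) large enough such that \(\pi _{{\text {ess}}}(U_n)> K\) for all \(n > n_0\). Then for \(\ell \ge 2\),

$$\begin{aligned} {\hat{\alpha }}_\ell (K,U_n)\le {\mathbb {P}}_{U_n}(\tau _{U_n}\le K)\le \frac{1}{\mu (U_n)}\sum _{k=1}^{K}\mu (U_n\cap T^{-k}U_n) = 0 \end{aligned}$$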

since all the intersections have zero measure. \(\square \)

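Note that \({\hat{\alpha }}_\ell (K)\) is the conditional probability to have at least \(\ell -1\) returns in a cluster with length K. If we consider the level set:

$$\begin{aligned} \alpha _\ell (K,U_n) = {\mathbb {P}}_{U_n}\left( \tau ^{\ell -1}_{U_n}\le K<\tau ^{\ell }_{U_n}\right) \end{aligned}$$

and its limit

$$\begin{aligned} \alpha _\ell = \lim _{K\rightarrow \infty }\lim _{n\rightarrow \infty }\alpha _\ell (K,U_n), \end{aligned}$$

then it is easy to see that

$$\begin{aligned} \alpha _\ell = {\hat{\alpha }}_\ell -{\hat{\alpha }}_{\ell +1}, \end{aligned}$$

which, in particular, implies the existence of \(\alpha _\ell \).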

Next, following [13] we put for every integer \(\ell >0\) and \(K>0\),

In other words, \(\lambda _\ell (K,U_n)\) is, conditioned on having an entry to the set \(U_n\), the probability to have precisely \(\ell \) entries in a cluster with length K. The next theorem provides the relation between \({\hat{\alpha }}_\ell \) and \(\lambda _\ell \):

Theorem 3.2

[13, Theorem 2] Assume that \(U_n\) is a sequence of nested sets with \(\mu (U_n)\rightarrow 0\). Assume that the limits in (7) exist for K large enough and every \(\ell \ge 1\). Also assume that \(\sum _{\ell =1}^{\infty }\ell {\hat{\alpha }}_\ell <\infty \).

Then

where \(\alpha _\ell = {\hat{\alpha }}_\ell -{\hat{\alpha }}_{\ell +1}\). In particular, the limit defining \(\lambda _\ell \) exists. Moreover, the average length of the cluster of entries satisfies

For more properties on \(\{\hat{\alpha }_\ell \}\), \(\{\alpha _{\ell }\}\) and \(\{\lambda _\ell \}\), we direct the readers to [13] and [21, Section 3].

3.2 Gibbs–Markov Systems

A map \(T:\mathbf{{M}}\rightarrow \mathbf{{M}}\) is called Markov if there is a countable measurable partition \({{\mathcal {A}}}\) on \(\mathbf{{M}}\) with \(\mu (A)>0\) for all \(A\in {{\mathcal {A}}}\), such that for all \(A\in {{\mathcal {A}}}\), the restriction \(T|_A\) is injective and T(A) can be written as a union of elements in \({{\mathcal {A}}}\). Write \({{\mathcal {A}}}^n=\bigvee _{j=0}^{n-1}T^{-j}{{\mathcal {A}}}\) as before; it is also assumed that \({{\mathcal {A}}}\) is (one-sided) generating.

Fix any \(\lambda \in (0,1)\) and define the metric \(d_\lambda \) on \(\mathbf{{M}}\) by \(d_\lambda (x,y) = \lambda ^{s(x,y)}\), where s(x, y) is the largest positive integer n such that x, y lie in the same n-cylinder. Define the Jacobian \(g=JT^{-1}=\frac{d\mu }{d\mu \circ T}\) and \(g_k = g\cdot g\circ T \cdots g\circ T^{k-1}\).

The map T is called Gibbs–Markov if it preserves the measure \(\mu \), and also satisfies the following two assumptions:

(i) The big image property: there exists \(C>0\) such that \(\mu (T(A))>C\) for all \(A\in {{\mathcal {A}}}\).

(ii) Distortion: \(\log g|_A\) is Lipschitz for all \(A\in {{\mathcal {A}}}\).

In view of (i) and (ii), there exists a constant \(D>1\) such that for all x, y in the same n-cylinder, we have the following distortion bound:

and the Gibbs property:

It is well known (see, for example, Lemma 2.4(b) in [18]) that Gibbs–Markov systems are exponentially \(\phi \)-mixing, that is, \(\phi (k)\lesssim \eta ^k\) for some \(\eta \in (0,1)\).

4 Escape Rate for Unions of Cylinders

This section contains the proofs of Theorems A and B and Corollaries C and D. We will suppress the dependence of \(\rho \) on \(\{U_n\}\) and simply write \(\rho (\Lambda )\) for the local escape rate at \(\Lambda \).

4.1 The Block Argument

In this section, we will provide a general framework on the escape rate for polynomially \(\phi \)-mixing systems. The main lemma, which is Lemma 4.3, allows us to reduce the escape rate (which is on the points that do not enter U in a large time-scale) to the probability of having short entries.

First we introduce the following standard result for systems that are either left or right \(\phi \)-mixing. The proof can be found in [1, 14].

Lemma 4.1

[14, Lemma 4] Assume that \(\mu \) is either left or right \(\phi \)-mixing for the partition \({{\mathcal {A}}}\). For \(U\in \sigma ({{\mathcal {A}}}^{\kappa _n})\), let \(s,t>0\) and \(\Delta <\frac{s}{2}\) then we have

Iterating the previous lemma, we obtain:

Lemma 4.2

Assume that \(\mu \) is either left or right \(\phi \)-mixing for the partition \({{\mathcal {A}}}\). Let \(s>0\) and \(\Delta <\frac{s}{2}\). Define \(q=\left\lfloor \, \frac{s}{\Delta } \, \right\rfloor \), \(\eta =\frac{q}{q+1}\), and \(\delta =2(\Delta \mu (U)+\phi (\Delta -\kappa _n)).\) Assume that \(\delta ^\eta < {{\mathbb {P}}}(\tau _U>s)\), then there exists \(a(q)>0\) such that for every \(k\ge 2-q^{-1}\) that is an integer multiple of \(q^{-1},\) we have

Proof

We follow the proof of Theorem 1 in [14] and use induction. We first take \(a(q)>0\) large enough such that

Also note that for \(k \le k'\) we have \( {{\mathbb {P}}}(\tau _U> k's) \le {{\mathbb {P}}}(\tau _U > ks). \) Then for \(k\in [2-q^{-1}, 3]\) that is an integer multiple of \(q^{-1}\), we have

On the other hand, we have, for \(k\le 3\),

Combining with (13), this shows that (12) holds for \(k\in [2-q^{-1}, 3]\) that is an integer multiple of \(q^{-1}\).

For \(k>3\), we use induction on \(m=k\cdot q\in {{\mathbb {N}}}\):

The second inequality follows from the induction assumption. We justify the last inequality as follows. By definition of \(\eta ,\) we have \(\delta =\delta ^\eta \delta ^{\frac{\eta }{q}}\le \delta ^\eta ({{\mathbb {P}}}(\tau _U>s) +\delta ^\eta )^{q^{-1}}.\) Consider the bracketed term in the fourth line:

By induction this completes the proof of the right-hand-side of (12). The proof of the left-hand-side is largely analogous (with \(\delta \) replaced by \(-\delta \) and the direction of the inequality reversed) and thus omitted. \(\square \)

The next lemma establishes the relation between the escape rate and the probability of short entries:

Lemma 4.3

Assume that \(\mu \) is either left or right \(\phi \)-mixing for the partition \({{\mathcal {A}}}\), with \(\phi (k)\le Ck^{-p}\) for some \(p>0\). Let \(\{U_n\in \sigma ({{\mathcal {A}}}^{\kappa _n})\}\) be a nested sequence of sets for some \(\kappa _n\nearrow \infty \). Furthermore, assume that there exists \(\varepsilon \in (0,1)\), such that \(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\).

Then we have

where \(s_n= \left\lfloor \, \mu (U_n)^{-(1-a)} \, \right\rfloor \) for any fixed \(a>0\) small enough.

Remark 4.4

At first glance, the RHS of (14) is similar to the definition of the local escape rate in (1). However, since \(s_n \ll \mu (U_n)^{-1}\) (where the latter is the average return time given by Kac’s formula), \({{\mathbb {P}}}(\tau _{U_n}\le s_n)\) concerns the probability of short entries to U. A similar observation was made in [2].

Proof

Let \(\{s_n\}, \{\Delta _n\}\) be increasing sequences of positive integers with \(\Delta _n<s_n/2\), whose choice will be specified later. Write \(q_n = \left\lfloor \, s_n/\Delta _n \, \right\rfloor \), \(\eta _n = \frac{q_n}{q_n+1}\) and \(\delta _n = 2(\Delta _n\mu (U_n) + \phi (\Delta _n-\kappa _n))\) as before. Our choice of \(s_n\) and \(\Delta _n\) below will guarantee that \(\delta _n^{\eta _n} = o(s_n\mu (U_n))\), which also implies that \(\delta _n^{\eta _n}< {{\mathbb {P}}}(\tau _{U_n} > s_n)\).

We again follow largely the Proof of Theorem 1 in [14] and get by Lemma 4.2

Taking the limit as \(k\rightarrow \infty \) (with n fixed) and noting that \({{\mathbb {P}}}(\tau _{U_n}>s_n) = 1-{{\mathbb {P}}}(\tau _{U_n}\le s_n)\), we obtain

Here we used the trivial estimate

Dividing (15) by \(\mu (U_n)\) and letting \(n\rightarrow \infty \), we obtain

It remains to show that the second term converges to zero for some proper choice of \(\{s_n\}\) and \(\Delta _n\). For this purpose, we fix some \(a\in (0,1), b\in (\varepsilon ,1)\) and choose \(s_n= \left\lfloor \, \mu (U_n)^{-(1-a)} \, \right\rfloor \), and \(\Delta _n = \left\lfloor \, \mu (U_n)^{-b} \, \right\rfloor \gg \kappa _n = o(\mu (U_n)^{-\varepsilon })\). Then we have:

In order for both terms to go to zero, we need:

(1) \(1-a>b\), which guarantees that \(s_n\gg \Delta _n\), so \(q_n\rightarrow \infty \) and consequently \(\eta _n\nearrow 1\); then the first term will go to zero;

(2) \(bp>a\), so that the second term goes to zero.

Both requirements are satisfied if we take any \(b\in (\varepsilon ,1)\), then choose \(0<a<\min \{1-b,bp\}\). Combining this with (17), we conclude that

as desired. \(\square \)
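The choice of scales in the proof can be checked numerically. The sketch below is only an illustration under stated assumptions: it takes \(\phi (k)=k^{-p}\) (that is, C = 1), treats \(\mu (U_n)\) as a free parameter mu, and evaluates the ratio \(\delta _n^{\eta _n}/(s_n\mu (U_n))\), which the proof requires to tend to zero. The function name and the sample exponents \(a=1/8\), \(b=3/4\), \(p=2\) are hypothetical choices satisfying \(0<a<\min \{1-b,bp\}\).

```python
import math

def block_scales(mu: float, p: float, a: float, b: float, kappa: int = 0):
    """Return (s, Delta, ratio) with ratio = delta**eta / (s * mu), using
    s = floor(mu^{-(1-a)}), Delta = floor(mu^{-b}) and phi(k) = k^{-p}
    (illustrative constants, not from the paper)."""
    s = math.floor(mu ** (-(1.0 - a)))
    Delta = math.floor(mu ** (-b))
    if not (Delta - kappa >= 1 and 2 * Delta < s):
        raise ValueError("need kappa < Delta < s/2")
    q = s // Delta                      # number of gaps that fit into one block
    eta = q / (q + 1)
    phi = float(Delta - kappa) ** (-p)  # mixing term phi(Delta - kappa)
    delta = 2.0 * (Delta * mu + phi)
    return s, Delta, delta ** eta / (s * mu)

for mu in (1e-4, 1e-8, 1e-12):
    s, Delta, ratio = block_scales(mu, p=2.0, a=0.125, b=0.75)
    print(f"mu={mu:.0e}  s={s}  Delta={Delta}  ratio={ratio:.4f}")
```

The printed ratio decreases as mu shrinks, although slowly, because \(\eta _n\) approaches 1 only at the rate \(q_n^{-1}\).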

In the remaining part of this section, we will prove that the RHS of (14) coincides with the extremal index defined by (4). But before we move on, let us state a direct corollary of the previous lemma, which is interesting in its own right.

Proposition 4.5

Assume that \(\mu \) is either left or right \(\phi \)-mixing for the partition \({{\mathcal {A}}}\), with \(\phi (k)\le Ck^{-p}\) for some \(p>0\). Let \(\{U_n\in \sigma ({{\mathcal {A}}}^{\kappa _n})\}\) be a nested sequence of sets for some \(\kappa _n\nearrow \infty \). Furthermore, assume that there exists \(\varepsilon \in (0,1)\), such that \(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\).

Then we have

provided that the local escape rate at \(\Lambda \) exists.

Proof

The lower bound is clear. For the upper bound, the trivial estimate (16) yields

\(\square \)

4.2 Proof of Theorem A and B

First we prove Theorem A using the following lemma, which is stated for right \(\phi \)-mixing systems. The proof can be adapted for left \(\phi \)-mixing systems as well, with certain modifications to the assumptions on \(U_n\) (in particular, on how \(U_n\) can be approximated by shorter cylinders). See Remark 4.7 below and the discussion in Sect. 4.3.

Lemma 4.6

Let \(\mu \) be right \(\phi \)-mixing for the partition \({{\mathcal {A}}}\), with \(\phi (k) \le Ck^{-p}\) for some \(p>1\). Assume that \(\{U_n\}\) is a good neighborhood system, such that \({\hat{\alpha }}_\ell (K)\) exists for K large enough, and \(\sum _{\ell }\hat{\alpha }_\ell <\infty \). Then we have

for any increasing sequence \(\{s_n\}\) for which \(s_n\mu (U_n)\rightarrow 0\) as \(n\rightarrow \infty \).

Proof

For a given integer s, write \(Z_n^{s} = \sum _{j=1}^s{\mathbb {I}}_{U_n}\circ T^j\) which counts the number of entries to \(U_n\) before time s. Let K be a large integer, then by [13, Lemma 3] for every \(\varepsilon >0\) one has \({\mathbb {P}}(\tau _{U_n}\le K)=\alpha _1K\mu (U_n)(1+{\mathcal {O}}^*(\varepsilon ))\) for all n large enough, where the notation \({\mathcal {O}}^*\) means that the implied constant is one (i.e., \(x={\mathcal {O}}^*(\varepsilon )\) if \(|x|< \varepsilon \)). For simplicity, assume \(r=s_n/K\) is an integer and put

\(q=0,1,\dots ,r-1\), and

Then

is a disjoint union. Let us now estimate

To bound \(\mathrm{II}\), note that \(\{Z_n^{s_n - (q+1)K - 2\kappa _n}\circ T^{(q+1)K+2\kappa _n}\ge 1\}\) is the event of having a hit in the time interval \([(q+1)K+2\kappa _n, s_n]\). We cut this interval into \(t_n = \left\lfloor \, \frac{s_n - (q+1)K - 2\kappa _n}{K} \, \right\rfloor \ge 0\) blocks of length K (II is void when \(t_n\) is negative). This allows us to estimate:

where we used \((t_n +1)\) many blocks instead of \(t_n\) to cover the remaining \(\le K\) many hits at the end. The third inequality follows from right \(\phi \)-mixing, and the last line is due to \(\mu (Z_n^K\ge 1) = \mu (V_q)\).

For the third term in (19), we use right \(\phi \)-mixing again to get (and recall that \(U_n^j\) is the outer approximation of \(U_n\) by j-cylinders):

where the last equality follows from

Combining the previous estimates, we get

where

and \(\phi ^1(u)=\sum _{i=u}^\infty \phi (i)\) is the tail-sum of \(\phi \) which by assumption goes to zero as u goes to infinity.

If n is large enough so that \(\max \{s_n\mu (U_n), \kappa _n\mu (U_n), \phi ^1(\kappa _n)\}<\varepsilon \), then

where we used the assumption that \(\mu (U_n^{i})\le \mu (U_n)+ Ci^{-p'}\) for some \(p'>1\). Consequently

and since \(\{Z_n^{qK}\ge 1,V_q\}=V_q\) and \(\mu (V_q)=\mu (V_0)\) we get

Since by [13] Lemma 3 \(\mu (V_0)=\alpha _1K\mu (U_n)(1+{\mathcal {O}}^*(\varepsilon ))\), we obtain

The statement of the lemma now follows if we let \(\varepsilon \rightarrow 0\) and then \(K\rightarrow \infty \).

\(\square \)

Remark 4.7

Like the previous lemmas, which hold for both left and right \(\phi \)-mixing measures, Lemma 4.6 has an analogous formulation in the left \(\phi \)-mixing case. The estimate of II in (19) is mostly the same (see the proof of Lemma 4.9 below for more detail). However, this would require us to modify the definition of the approximated sets \(U^i_n\) as

with the assumption that the measure of \(\tilde{U}^i_n\) is small (preferably summable in i, similar to (2) in Definition 2.3). This is indeed the treatment in [14, Lemma 3] when \(\Lambda = \{x\}\). However, such an assumption may not hold when \(\Lambda \) is a non-singleton null set. The right \(\phi \)-mixing property avoids this problem.

Remark 4.8

So far we have assumed that T is non-invertible. This is because in the invertible case, the approximations \(U^j_n\) and \({\tilde{U}}^j_n\) may become the entire space. As an example, take \(\mathbf{{M}}=\Omega \) to be a full, two-sided shift space and \(T=\sigma \) the left-shift. Let the sets \(U_n\) be n-approximations of an unstable leaf \(\Gamma \) through a non-periodic point \(x\in \Omega \), e.g., \(\Gamma =\{y\in \Omega : y_i=x_i\;\forall \;i\le 0\}\). Obviously \(\Gamma \) is a null set, but in this case \(\tilde{U}^i_n=\Omega \) is the entire space whenever \(i<n/2\). For a geometric example, let T be an Anosov diffeomorphism on \({{\mathbb {T}}}^n\) with minimal unstable foliation and \(\Lambda \) be the local unstable manifold at some \(x\in \mathbf{{M}}\). Then \(T^j \Lambda \) eventually becomes \(\varepsilon \)-dense in \(\mathbf{{M}}\), and the approximation \(\tilde{U}^i_n\) (with respect to a Markov partition \({{\mathcal {A}}}\)) is the entire space for i small. By symmetry and Remark 4.8, we see that if \(\Lambda \) is chosen to be a local stable manifold then \(U^j_n = \mathbf{{M}}\) for j small.

On the other hand, in the proof of Lemma 4.6, the approximation \(U^j_n\) is only used to control III of (19). Later this observation will allow us to obtain a result for invertible systems where this term does not appear. See Theorem 4.12 and 5.2 below.

Below we state an alternate version of Lemma 4.6 where the right \(\phi \)-mixing assumption is replaced by the Gibbs–Markov property. This allows us to bypass the issue stated in Remark 4.7 and keep the choice of \(U_n^i\).

Lemma 4.9

Let \((T,\mu ,{{\mathcal {A}}})\) be a Gibbs–Markov system. Assume that \(\{U_n\}\) is a good neighborhood system, such that \({\hat{\alpha }}_\ell (K)\) exists for K large enough, and \(\sum _{\ell }\hat{\alpha }_\ell <\infty \). Then we have

for any increasing sequence \(\{s_n\}\) for which \(s_n\mu (U_n)\rightarrow 0\) as \(n\rightarrow \infty \).

Proof

Recall that Gibbs–Markov systems are left \(\phi \)-mixing with exponential rate. The proof follows the lines of Lemma 4.6 up to Eq. (19), which is now estimated using the left \(\phi \)-mixing as:

Note that the proof in this case is much shorter and the bound is almost the same as before.

For III, we first split the term into the summation over the intersections of \(U_n\) with \(T^iU_n\):

Each term in the summation can be bounded by:

where the third and fourth inequalities follow from the distortion and the big image property of Gibbs–Markov systems. See [21, Theorem D].

Then

for some \(c_1\) and the rest of the proof is identical to Lemma 4.6. \(\square \)

Now Theorems A and B are immediate consequences of Lemmas 4.3, 4.6 and 4.9.

Proof of Corollary C

This corollary directly follows from Lemma 3.1. \(\square \)

Proof of Corollary D

We need the following proposition from [21]:

Proposition 4.10

[21, Proposition 6.3] Let T be a continuous map on the compact metric space \(\mathbf{{M}}\), and \(\{U_n\}\) a nested sequence of sets such that \(\cap _nU_n = \cap _n {\overline{U}}_n\). Then \(\pi (U_n) \rightarrow \infty \) if and only if \(\Lambda =\cap _nU_n \) intersects every forward orbit at most once.

Since \(\pi _{{\text {ess}}}(U)\ge \pi (U)\), we have \(\pi _{{\text {ess}}}(U_n)\rightarrow \infty \). Combined with Corollary C, we obtain Corollary D. \(\square \)

4.3 Some Remarks on the Extremal Index

In the classic literature (for example, [7, 9, 11]), the extremal index is defined as

where \(K_n\rightarrow \infty \) is some increasing sequence of integers. It is shown in [21, Proposition 5.4] that under the assumption of Theorem B we have

It is also straightforward to check that the proofs of Lemmas 4.6 and 4.9 remain valid with \(\alpha _1\) replaced by \(\theta \). We state this as the following proposition:

Proposition 4.11

Assume that one of the following assumptions holds:

-

(1)

either \(\mu \) is right \(\phi \)-mixing with \(\phi (k)\lesssim k^{-p}\), \(p>1\);

-

(2)

or \((T,\mu ,{{\mathcal {A}}})\) is a Gibbs–Markov system.

Let \(\theta \) be the extremal index defined by (20) for some sequence \(\{K_n\}\). Then for any good neighborhood system \(\{U_n\}\) and any increasing sequence \(\{s_n\}\) with \(s_n\mu (U_n)\rightarrow 0\) and \(s_n/K_n\rightarrow \infty \), we have

Furthermore, the local escape rate at \(\Lambda = \bigcap _n U_n\) exists and satisfies

Note that in the proof of Lemma 4.6, the bound on II of (19) holds for both left and right \(\phi \)-mixing systems, as already demonstrated in Lemma 4.9. On the other hand, for \(\theta \) defined by (20), III of (19) does not exist when \(K_n > \kappa _n^2\). By Remarks 4.7 and 4.8, we can drop the right \(\phi \)-mixing and the Gibbs–Markov assumptions, obtaining the following theorem for left \(\phi \)-mixing systems that are either invertible or non-invertible:

Theorem 4.12

Assume that \(T:\mathbf{{M}}\rightarrow \mathbf{{M}}\) is a dynamical system, either invertible or non-invertible, and preserves a measure \(\mu \) that is left \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\) for some \(C>0\) and \(p>1\). Let \(\{U_n\in {{\mathcal {A}}}^{\kappa _n}\}\) be a nested sequence of sets with \(\kappa _n \mu (U_n)^\varepsilon \rightarrow 0\) for some \(\varepsilon \in (0,1)\).

Assume that \(\theta \) defined by (20) exists for some sequence \(\{K_n\}\) with \(K_n > \kappa _n^2\). Then the localized escape rate at \(\Lambda \) exists and satisfies

5 Escape Rate for Open Sets: An Approximation Argument

First, observe that

As a result, we have

therefore we have

The following proposition allows us to replace \(\{U_n\}\) by its cylinder-approximation.

Proposition 5.1

Let \(\{U_n\}\), \(\{V_n\}\) and \(\{W_n\}\) be sequences of nested sets with \(V_n\subset U_n\subset W_n\) for each n, and \(\Lambda = \bigcap _n U_n = \bigcap _n V_n = \cap _n W_n\). Assume that

and \(\rho (\Lambda , \{W_n\})=\rho (\Lambda , \{V_n\}) = \alpha \).

Then \(\rho (\Lambda , \{U_n\}) = \alpha \).

Proof

\(V_n\subset U_n\subset W_n\) implies that \(\tau _{W_n}\ge \tau _{V_n} \ge \tau _{U_n}\) and therefore

On the other hand, (21) means that \(\mu (W_n)/\mu (V_n)\rightarrow 1\). We thus obtain

and the proposition follows from the squeeze theorem. \(\square \)

Proof of Theorem E

For the sequence \(\{r_n\}\) given in Assumption 1, we write \(\kappa _n\) for the smallest integer such that \({\text {diam}}{{\mathcal {A}}}^{\kappa _n} \le r_n\). Then consider

Clearly we have \(V_n\subset U_n\subset W_n\) for each n. Moreover, the choice of \(\kappa _n\) gives

Combining this with (6), we have \(\mu (W_n\setminus V_n) = o(1)\mu (V_n).\)

Let us write \({\hat{\alpha }}_\ell ^*\), \(* = U,V,W\) for \({\hat{\alpha }}_\ell \) defined using \(\{U_n\},\{V_n\},\{W_n\}\), respectively. Then it is proven in [21, Lemma 5.6] that

In particular, \(\sum _\ell \ell {\hat{\alpha }}_\ell ^U<\infty \) implies that the same holds for \({\hat{\alpha }}_\ell ^*\), \(* = V, W\), and the values of \(\alpha _1\) defined by \(\{V_n\}, \{U_n\}, \{W_n\}\) are equal.

It remains to show that \(\{V_n\}\) and \(\{W_n\}\) are good neighborhood systems. (1) of Definition 2.3 holds due to (a) in Theorem E. For (2) of Definition 2.3, observe that

thanks to (b) in Theorem E. A similar argument shows that \(\{W_n\}\) is also a good neighborhood system.

Now we can apply Theorem A or B on \(\{V_n\}\) and \(\{W_n\}\) to obtain

It then follows from Proposition 5.1 that \(\rho (\Lambda , \{U_n\}) = \alpha _1\). This concludes the Proof of Theorem E. \(\square \)

Similar to Theorem 4.12, when the extremal index \(\theta \) is defined as

for some sequence \(K_n > \kappa _n^2\), the conditions on the right \(\phi \)-mixing and \(V_n^j\) can be dropped. We thus obtain the following version of Theorem 4.12 for open sets \(\{U_n\}\):

Theorem 5.2

Assume that \(T:\mathbf{{M}}\rightarrow \mathbf{{M}}\) is a dynamical system, either invertible or non-invertible, and preserves a measure \(\mu \) that is left \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\) for some \(C>0\) and \(p>1\).

Let \({\varphi }:\mathbf{{M}}\rightarrow {{\mathbb {R}}}\cup \{+\infty \}\) be a continuous function achieving its maximum on a measure zero set \(\Lambda \). Let \(\{u_n\}\) be a non-decreasing sequence of real numbers with \(u_n\nearrow \sup {\varphi }\), such that the open sets \(U_n\) defined by (5) satisfy Assumption 1 for a sequence \(r_n\) that decreases to 0 as \(n\rightarrow \infty \). Let \(\kappa _n\) be the smallest positive integer for which \({\text {diam}}{{\mathcal {A}}}^{\kappa _n}\le r_n\) and assume that:

-

(i)

\(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\) for some \(\varepsilon \in (0,1)\);

-

(ii)

\(U_n\) has small boundary: there exist \(C>0\) and \(p'>1\), such that \(\mu \left( \bigcup _{A\in {{\mathcal {A}}}^j, A\cap B_{r_n}(\partial U_n) \ne \emptyset }A\right) \le C j^{-p'}\) for all n and \(j\le \kappa _n\);

-

(iii)

the extremal index \(\theta \) defined by (20) exists for some sequence \(K_n > \kappa _n^2\).

Then the exceedance rate of \({\varphi }\) along \(\{u_n\}\) exists and satisfies

6 The Conditional Escape Rate

In this section, we will prove Theorem F.

First we establish the following relation between the hitting times and return times.

Lemma 6.1

For any set \(U\subset M\) with \(\mu (U)>0,\) let \(A_k:=\{x\in \mathbf{{M}}|\tau _U\ge k\},\) and \(B_k:=\{x\in U|\tau _U\ge k\}=A_k\cap U.\) Then we have

Proof

By definition we have \(A_{k+1}\subset A_k.\) Thus, we compute

where the third equality follows from the invariance of \(\mu .\) \(\square \)
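The relation between hitting and return times can be checked exactly on a toy model. The sketch below is our own illustration, not part of the original argument. It assumes a hypothetical three-state Markov chain with a doubly stochastic transition matrix (so the uniform vector is stationary), takes the hole \(U\) to be a single state, uses the convention \(\tau _U(x)=\min \{j\ge 1: T^jx\in U\}\), and verifies the identity \(\mu (B_k)=\mu (A_k)-\mu (A_{k+1})\), which appears to be the form in which the lemma is used in the proof of Theorem F.

```python
from fractions import Fraction as F

# Hypothetical 3-state chain; rows and columns sum to 1, so the
# uniform distribution PI is stationary (shift-invariant Markov measure).
P = [[F(1, 2), F(1, 2), F(0)],
     [F(0), F(1, 2), F(1, 2)],
     [F(1, 2), F(0), F(1, 2)]]
PI = [F(1, 3)] * 3
U = {0}  # the hole: a single state (an assumption made for illustration)

def survival_vector(k):
    """v[i] = probability that x_1, ..., x_{k-1} avoid U given x_0 = i,
    i.e. the conditional probability of {tau_U >= k}."""
    v = [F(1)] * 3
    for _ in range(k - 1):
        v = [sum(P[i][j] * v[j] for j in range(3) if j not in U)
             for i in range(3)]
    return v

def mu_A(k):
    """mu(A_k) = mu(tau_U >= k)."""
    v = survival_vector(k)
    return sum(PI[i] * v[i] for i in range(3))

def mu_B(k):
    """mu(B_k) = mu(U intersected with {tau_U >= k})."""
    v = survival_vector(k)
    return sum(PI[i] * v[i] for i in U)

def identity_holds(max_k):
    """Check mu(B_k) == mu(A_k) - mu(A_{k+1}) for k = 1, ..., max_k."""
    return all(mu_B(k) == mu_A(k) - mu_A(k + 1) for k in range(1, max_k + 1))

print(identity_holds(10))
```

Exact rational arithmetic is used so that the equality is tested without rounding error; the invariance of the measure enters only through the stationarity of `PI`.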

Next, we need the following arithmetic lemma on the exponential decay rate for a sequence of real numbers \(\{a_n\}\) and its difference sequence \(\{b_n = a_n - a_{n+1}\}\).

Lemma 6.2

Suppose that \(\{a_n\}\) is a decreasing sequence of positive real numbers with \(a_n\searrow 0\). Let \(b_n=a_n-a_{n+1}.\) Suppose, also, that \(b_n\) is non-increasing. Then the following statements are equivalent:

-

(1)

\(\lim _{n\rightarrow \infty }-\frac{\log a_n}{n}=\vartheta \) for some \(\vartheta >0\);

-

(2)

\(\lim _{n\rightarrow \infty }-\frac{\log b_n}{n}=\vartheta \) for some \(\vartheta >0\).

Remark 6.3

Note that there are counter-examples for which the statement of the lemma fails without the monotonicity assumption on the sequence \(\{b_n\}\).

Proof

First note that since \(a_n\searrow 0\) we have \(a_n = \sum _{k\ge n} b_k\); therefore (2) \(\implies \) (1) is obvious. It thus remains to show that (1) \(\implies \) (2). Let \(\gamma >1\) be fixed. Since by assumption the limit \(\lim _{n\rightarrow \infty }\frac{1}{n}|\log a_n|=\vartheta \) exists and \(\vartheta >0\), there is an N so that

for some positive \(\varepsilon <(\gamma -1)\vartheta /4\). Hence

which implies

for all n large enough (assuming \(\varepsilon <\frac{\vartheta }{2}\)). Since

we get by monotonicity of the sequence \(b_j\)

and consequently \(a_n\le 2b_n(\gamma -1)n\). Hence

which in the limit \(n\rightarrow \infty \) yields

and

Since this applies to every \(\gamma >1\) we obtain that \(\lim _{n\rightarrow \infty }\frac{1}{n}\log b_n=-\vartheta \). \(\square \)
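Lemma 6.2 and Remark 6.3 can be illustrated numerically. The sketch below is our own and makes two assumptions that are not in the source: the sequence \(a_n=e^{-\vartheta n}/(n+1)\) with \(\vartheta =0.5\) as a model case with non-increasing increments, and an oscillating increment sequence as one possible way in which monotonicity can fail.

```python
import math

THETA = 0.5  # assumed decay rate, chosen only for illustration

def rate(value, n):
    """Empirical exponential decay rate -log(value)/n."""
    return -math.log(value) / n

# Case 1: a_n = e^{-THETA n}/(n+1) is convex and decreasing,
# so b_n = a_n - a_{n+1} is non-increasing, as the lemma requires.
def a(n):
    return math.exp(-THETA * n) / (n + 1)

def b(n):
    return a(n) - a(n + 1)

# Case 2: increments that are NOT monotone (decay like e^{-THETA n}
# at even n and like e^{-2 THETA n} at odd n).
def b_osc(n):
    return math.exp(-THETA * n) if n % 2 == 0 else math.exp(-2 * THETA * n)

def a_osc(n, tail=200):
    """Tail sum of b_osc, truncated after `tail` terms (the rest is negligible)."""
    return sum(b_osc(k) for k in range(n, n + tail))

N = 400
# Monotone case: both rates are close to THETA.
print(rate(a(N), N), rate(b(N), N))
# Oscillating case: a_osc still decays at rate about THETA, while the
# rate of b_osc jumps between THETA (even n) and 2*THETA (odd n).
print(rate(a_osc(N), N), rate(b_osc(N), N))
print(rate(a_osc(N + 1), N + 1), rate(b_osc(N + 1), N + 1))
```

In the second case the limit in statement (1) exists but the limit in statement (2) does not, which is consistent with the role of the monotonicity assumption in the proof.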

Proof of Theorem F

Let \(a_k=\mu (A_k) = {{\mathbb {P}}}(\tau _U\ge k)\) and \(b_k=\mu (B_k) = \mu (U){{\mathbb {P}}}_U(\tau _U\ge k).\) Since \(\mu \) is assumed to be ergodic one has \(a_k\searrow 0\). Also note that \(b_k\) is non-increasing. Now the theorem follows from Lemma 6.2,

\(\square \)

7 Escape Rate Under Inducing

In this section, we will state a general theorem for the local escape rate under inducing. For this purpose, we consider a measure preserving dynamical system \(({\tilde{\Omega } }, {\tilde{T} },{\tilde{\mu } })\) with \({\tilde{\mu } }\) being a probability measure. Given a measurable function \(R: {\tilde{\Omega } }\rightarrow {{\mathbb {Z}}}^+\) consider the space \(\Omega = {\tilde{\Omega } }\times {{\mathbb {Z}}}^+ /\sim \) with the equivalence relation \(\sim \) given by

Define the (discrete-time) suspension map over \({\tilde{\Omega } }\) with roof function R as the measurable map T on the space \(\Omega \) acting by

We will call \(\Omega \) a tower over \({\tilde{\Omega } }\) and refer to the set \(\Omega _k:=\{(x,k): x\in {\tilde{\Omega } }, k<R(x)\}\) as the kth floor where \({\tilde{\Omega } }\) can be naturally identified with the 0th floor called the base of the tower.

For \(0\le k < i\), set \(\Omega _{k,i} = \{(x,k): R(x) = i\}\). The map

is naturally viewed as a projection from the tower \(\Omega \) to the base \({\tilde{\Omega } }\) and for any given set \(U\subset \Omega \) we will write

The measure \({\tilde{\mu } }\) can be lifted to a measure \({\hat{\mu }}\) on \(\Omega \) by

It is easy to verify that \({\hat{\mu }}\) is T-invariant and if \({\tilde{\mu } }(R) = \int R\, d{\tilde{\mu } }<\infty \) then \({\hat{\mu }}\) is a finite measure. In this case, the measure

is a T-invariant probability measure on \(\Omega \).
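The normalization of the lifted measure can be made concrete with a small computation. The sketch below is our own illustration and assumes the standard lift, in which each piece \(\Omega _{k,i}\) receives the mass \({\tilde{\mu }}(R=i)\); the roof distribution used here is hypothetical. It checks that the total mass of \({\hat{\mu }}\) equals \({\tilde{\mu }}(R)\) and that the normalized measure gives the base the mass \(1/{\tilde{\mu }}(R)\), in agreement with Kac's formula.

```python
from fractions import Fraction as F

# Hypothetical law of the roof function on the base: mu_tilde(R = i).
ROOF_LAW = {1: F(1, 2), 2: F(1, 4), 3: F(1, 4)}

def lifted_masses(roof_law):
    """Mass of each piece Omega_{k,i} (0 <= k < i) under the assumed lift:
    mu_hat(Omega_{k,i}) = mu_tilde(R = i)."""
    return {(k, i): p for i, p in roof_law.items() for k in range(i)}

def mean_roof(roof_law):
    """Integral of R with respect to mu_tilde."""
    return sum(i * p for i, p in roof_law.items())

def normalized_masses(roof_law):
    """Masses of the pieces under the probability measure mu = mu_hat / mu_tilde(R)."""
    total = mean_roof(roof_law)
    return {key: m / total for key, m in lifted_masses(roof_law).items()}

def floor_mass(roof_law, k):
    """mu(Omega_k): mass of the k-th floor under the normalized measure."""
    return sum(m for (kk, _), m in normalized_masses(roof_law).items() if kk == k)

print(mean_roof(ROOF_LAW), floor_mass(ROOF_LAW, 0))
```

The dictionary keys `(k, i)` mirror the indexing of \(\Omega _{k,i}\) used in the text; only the distribution of \(R\) matters for this check, not the base dynamics.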

We write \({\tilde{U}} = \Pi (U)\subset {\tilde{\Omega } }\), \({\tilde{\Lambda }} = \cap _n{\tilde{U}}_n\) and define \({\tilde{\rho }}({\tilde{\Lambda }}, \{{\tilde{U}}_n\})\) to be the localized escape rate at \({\tilde{\Lambda }}\) for the system \(({\tilde{\Omega } }, {\tilde{T} },{\tilde{\mu } })\). The following theorem relates the escape rate of the base system with that of the suspension. A similar result is obtained for continuous suspensions under the assumption that R is bounded, see [6].

Theorem 7.1

Let \((\Omega , T, \mu )\) be a discrete-time suspension over an ergodic measure preserving system \(({\tilde{\Omega } }, {\tilde{T} }, {\tilde{\mu } })\) with a roof function R satisfying the following assumptions:

-

(1)

R has exponential tail: there exists \(C, c>0\) such that \({\tilde{\mu } }(R>n) \le Ce^{-cn}\);

-

(2)

exponential large deviation estimate: for every \(\varepsilon >0\) small, there exists \(C_\varepsilon , c_\varepsilon >0\) such that the set

$$\begin{aligned} B_{\varepsilon , k} = \left\{ y\in {\tilde{\Omega } }: \left| \frac{1}{n}\sum _{j=0}^{n-1}R(\tilde{T}^jy_0) - \frac{1}{\mu (\Omega _0)} \right| >\varepsilon \hbox { for some } n\ge k\right\} \end{aligned}$$

satisfies \({\tilde{\mu }}(B_{\varepsilon ,k}) \le C_\varepsilon e^{-c_\varepsilon k}\).

Then for every nested sequence \(\{U_n\}\), we have

Proof

The result of this theorem is in fact hidden in the proof of Theorem 4 of [14] and Theorem 3.2 (1) in [2]. We include the proof here for completeness.

For every \(y= (x,m)\in \Omega \), we take \(y_0 = x\in {\tilde{\Omega } }\). Then we have

where \({\tilde{\tau }}\) is the return times defined for the system \(({\tilde{\Omega } }, {\tilde{T} },{\tilde{\mu } })\). By the Birkhoff ergodic theorem on \(({\tilde{\Omega } },\tilde{T},{\tilde{\mu }})\), we see that

where we apply Kac's formula in the last equality and use the fact that \(\mu \) is the lift of \({\tilde{\mu }}\).

On the other hand, since the return time function R has exponential tail, we get, for each \(\varepsilon >0\) and t large enough,

To simplify notation, we introduce the set (n is fixed)

Combining (23) with the previous estimates on \(B_{\varepsilon ,k}\), for \(k=t(1+\varepsilon )\) we get

Note that \(A_t\) contains the set

and is contained in

Now we are left to estimate \(\mu (A_t^\pm )\). Since \(\mu \) is the lift of \({\tilde{\mu }}\), we have

where

Let \(\alpha = {\tilde{\rho }}({\tilde{\Lambda }}, \{{\tilde{U}}_n\})\). Then we have (recall that \({\tilde{\mu }}({\tilde{U}}_n) \mu (\Omega _0)=\mu (U_n)\))

By (25), we get that

For each \(\varepsilon >0\) we can take \(n_0\) large enough, such that for \(n>n_0:\)

It then follows that the right-hand-side of (24) is of order \(o(\mu (A^\pm _t)).\) We thus obtain

for every \(\varepsilon >0\). This shows that \(\rho (\Lambda , \{U_n\})=\alpha = {\tilde{\rho }}({\tilde{\Lambda }}, \{{\tilde{U}}_n\})\). \(\square \)

Proof of Theorem G

Young towers can be seen as discrete-time suspensions over Gibbs–Markov maps. Moreover, the exponential tail of \(\mu (R>n)\) implies the exponential large deviation estimate (see for example [2, Appendix B]). Therefore, Theorem G immediately follows from Theorems B, E and 7.1. \(\square \)

8 Examples

8.1 Periodic and Non-periodic Points Dichotomy

First we consider the case where \(\Lambda = \{x\}\) is a singleton, and \(U_n = B_{\delta _n}(x)\) is a sequence of balls shrinking to x. Alternatively one could take \(\varphi (y) = g(d(y,x))\) for some function \(g: {{\mathbb {R}}}\rightarrow {{\mathbb {R}}}\cup \{+\infty \}\) achieving its maximum at 0 (for example, \(g(y) = -\log y\)) and let \(u_n\nearrow \infty \) be a sequence of thresholds tending to infinity. Then \(U_n = \{y:\varphi (y) > u_n\}\) is a sequence of balls with diameter shrinking to zero.

This situation has been dealt with in [2] for certain interval maps, and in [14] for maps that are polynomially \(\phi \)-mixing. A dichotomy is obtained: when x is non-periodic the local escape rate is 1; when x is periodic then \(\rho (x) = 1-\theta \) where

where m is the period of x. When \(\mu \) is an equilibrium state for some potential function h(x) with zero pressure, one has \(\theta = e^{S_mh(x)}\) where \(S_m\) is the Birkhoff sum. See [2].
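As a sanity check of the formula \(\theta = e^{S_mh(x)}\), consider the doubling map with Lebesgue measure, for which \(h=-\log 2\) and hence \(\theta = 2^{-m}\). The sketch below is our own illustration under stated assumptions: it works with the symbolic model (full shift on two symbols with the uniform Bernoulli measure), replaces the balls by n-cylinders around the periodic point, and takes \(\theta \) to be the ratio \(\mu (U_n\cap T^{-m}U_n)/\mu (U_n)\), which is the usual form of this quantity.

```python
from fractions import Fraction as F
from itertools import product

def cylinder_ratio(period_word, n):
    """Return mu(U_n ∩ T^{-m} U_n) / mu(U_n), where U_n is the n-cylinder
    around the periodic point with repeating block `period_word`
    (m = len(period_word)), for the uniform Bernoulli measure on {0,1}^N.
    Assumes n >= m and that `period_word` is the prime period block."""
    m = len(period_word)
    target = tuple(period_word[i % m] for i in range(n))
    hits = 0
    # A point lies in U_n ∩ T^{-m} U_n iff its first n symbols and its
    # symbols m, ..., m+n-1 both agree with the target word.
    for w in product((0, 1), repeat=n + m):
        if w[:n] == target and w[m:m + n] == target:
            hits += 1
    mu_both = F(hits, 2 ** (n + m))
    mu_U = F(1, 2 ** n)
    return mu_both / mu_U

theta = cylinder_ratio((0, 1), 6)   # period-2 orbit of the doubling map
print(theta, 1 - theta)
```

For this example the ratio does not depend on n once \(n\ge m\), so no limit needs to be taken; for general measures and metric balls the ratio only converges as \(n\rightarrow \infty \).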

Note that if x is non-periodic then one naturally deduces that \(\pi (U_n)\nearrow \infty \) (see for example [14, Lemma 1]). When x is periodic, in [13, Section 8.3] it is shown that \({\hat{\alpha }}_\ell = \theta ^{l-1}\) is a geometric distribution. In particular, one has \(\sum _\ell \ell {\hat{\alpha }}_\ell <\infty \) and \(\alpha _1 = 1-\theta .\) This leads to the following theorem:

Theorem 8.1

Assume that

-

(1)

either \(\mu \) is right \(\phi \)-mixing with \(\phi (k)\le Ck^{-p}\), \(p>1\);

-

(2)

or \((T,\mu ,{{\mathcal {A}}})\) is a Gibbs–Markov system.

Assume that \(0<r_n<\delta _n\) satisfies

Write \(\kappa _n\) for the smallest positive integer with \({\text {diam}}{{\mathcal {A}}}^{\kappa _n}\le r_n\). We assume that:

-

(a)

\(\kappa _n\mu (U_n)^\varepsilon \rightarrow 0\) for some \(\varepsilon \in (0,1)\);

-

(b)

\(U_n\) has small boundary: there exists \(C>0\) and \(p'>1\), such that \(\mu \left( \bigcup _{A\in {{\mathcal {A}}}^j, A\cap B_{r_n}(\partial U_n) \ne \emptyset }A\right) \le C j^{-p'}\) for all n and \(j\le \kappa _n\).

-

(c)

when x is periodic with period m, \(\theta \) defined by (26) exists.

Then we have

This theorem improves [14, Theorem 2] by dropping the assumption \(\theta < 1/2\). Also note that such results can be generalized to interval maps which can be modeled by Young towers using Theorem G.

8.2 Cantor Sets for Interval Expanding Maps

For simplicity, below we will only consider the Cantor ternary set. However, the argument can be adapted to a large family of dynamically defined Cantor sets discussed in [11] with only minor modifications.

Consider the uniformly expanding map \(T(x) = 3x \mod 1\) defined on the unit interval [0, 1]. We take \(\Lambda \) to be the ternary Cantor set on [0, 1], and define recursively: \( U_0 = [0,1]; \) \(U_{n+1}\) is obtained by removing the middle third of each connected component of \(U_{n}\). Then we have \(\cap _n U_n = \Lambda \).

Theorem 8.2

For the uniformly expanding map \(T(x) = 3x\mod 1\) on [0, 1], the Cantor ternary set \(\Lambda \) and the nested sets \(\{U_n\}\), we have

$$\begin{aligned} \rho (\Lambda , \{U_n\}) = \frac{1}{3}. \end{aligned}$$

Proof

Let \({{\mathcal {A}}}= \{[0,1/3), [1/3, 2/3), [2/3, 1]\}\) be a Markov partition of T, with respect to which the Lebesgue measure \(\mu \) is exponentially \(\psi \)-mixing. Below we will verify the assumptions of Proposition 4.11.

It is easy to see that \(U_n \in {{\mathcal {A}}}^n\), i.e., \(\kappa _n = n\). On the other hand, \(\mu (U_n) = 2^n/3^n\) which shows that item (1) of Definition 2.3 is satisfied for any \(\varepsilon \in (0,1)\). For item (2), note that \(U_n^j = U_j\) which implies that

We conclude that \(\{U_n\}\) is a good neighborhood system.

The extremal index can be found as follows. Let us write \(U_n=\bigcup _{|\alpha |=n} J_\alpha \) where the disjoint union is over all n-words \(\alpha =\alpha _1\alpha _2\dots \alpha _n\in \{0,2\}^n\) and

where \(I=[0,1]\) is the unit interval. The length \(|J_\alpha |\) is equal to \(3^{-n}\). For \(j<n\)

(disjoint union), thus

and therefore \(U_n\cap T^{-j}U_n=U_{n+j}\). Consequently,

Since \(\mu (U_{n+j})=\!\left( \frac{2}{3}\right) ^j\mu (U_n)\) this implies that \({\hat{\alpha }}_2(K,U_n)=\frac{\mu (U_{n+1})}{\mu (U_n)}=\frac{2}{3}\) and therefore \(\alpha _1=\frac{1}{3}\).

This result was recently shown in [11, Theorem 3.3] in more generality. By Proposition 4.11, we conclude that \(\rho (\Lambda , \{U_n\}) = 1/3\). \(\square \)
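The combinatorial facts used in this proof can be verified by brute force. The following sketch is our own illustration: it identifies points of [0, 1] with their ternary digit strings (ignoring the countable set of points with two expansions, which has measure zero), so that \(U_n\) consists of the points whose first n digits avoid 1 and T acts as the shift. It then checks \(\mu (U_n)=(2/3)^n\) and \(\mu (U_n\cap T^{-j}U_n)=\mu (U_{n+j})\) for \(j<n\).

```python
from fractions import Fraction as F
from itertools import product

def avoids_one(word, start, length):
    """True if the digits word[start:start+length] all lie in {0, 2}."""
    return all(d != 1 for d in word[start:start + length])

def mu_U(n):
    """Lebesgue measure of U_n, by counting ternary n-words over {0, 2}."""
    count = sum(1 for w in product(range(3), repeat=n) if avoids_one(w, 0, n))
    return F(count, 3 ** n)

def mu_U_cap_preimage(n, j):
    """Lebesgue measure of U_n ∩ T^{-j} U_n: the first n digits and the
    digits j, ..., j+n-1 must all avoid 1."""
    length = n + j
    count = sum(1 for w in product(range(3), repeat=length)
                if avoids_one(w, 0, n) and avoids_one(w, j, n))
    return F(count, 3 ** length)

n = 4
for j in range(1, n):
    print(j, mu_U_cap_preimage(n, j) == mu_U(n + j))

# Ratio entering the extremal index computation, and the resulting alpha_1.
ratio = mu_U(n + 1) / mu_U(n)
alpha_1 = 1 - ratio
print(ratio, alpha_1)
```

The restriction \(j<n\) matters: for \(j\ge n\) the two digit windows no longer overlap and the intersection is strictly larger than \(U_{n+j}\).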

8.3 Submanifolds of Anosov Maps

In this section, we consider the case where \(\Lambda \) is a submanifold for some Anosov map T. More importantly, we will show how our results can be applied to those cases where the extremal index \(\theta \) is defined using a time cut-off \(K_n\) that depends on \(U_n\) [see (20)].

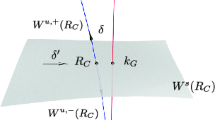

Let \(T = \begin{pmatrix} 2 &{} 1\\ 1 &{} 1 \end{pmatrix}\) be an Anosov system on \({{\mathbb {T}}}^2\) and \(\mu \) be the Lebesgue measure. It is well known that \(\mu \) is exponentially \(\psi \)-mixing with respect to its Markov partition \({{\mathcal {A}}}\). Also denote by \(\lambda >1\) the expanding eigenvalue of T. Following [3] we take \(\Lambda \) to be a line segment with finite length \(l(\Lambda )\). We will lift \(\Lambda \) to \({\hat{\Lambda }}\subset {{\mathbb {R}}}^2\) and parametrize \({\hat{\Lambda }}\) by \(p_1 + t v\) for some \(p_1\in {{\mathbb {R}}}^2\) and \(t\in [0, l(\Lambda )]\). Write \(p_2\) for the other end point of \({\hat{\Lambda }}\), that is, \(p_2 = p_1 + l(\Lambda ) v\).

Consider the function \(\varphi _\Lambda (y) = -\log d(y, \Lambda )\) which achieves its maximum (\(+\infty \)) on \(\Lambda \). Write \(v^{*}, * = s, u\) for the unit vector along the stable and unstable direction, respectively. Then we have:

Theorem 8.3

For the sequence \(\{u_n = \log n\}\),

-

(1)

if \(\Lambda \) is not aligned with the stable direction \(v^s\) or the unstable direction \(v^u\) then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1\);

-

(2)

if \(\Lambda \) is aligned with the unstable direction but \(\{p_1 + tv^u, t\in {{\mathbb {R}}}\}\) has no periodic point, then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1\);

-

(3)

if \(\Lambda \) is aligned with the stable direction but \(\{p_1 + tv^s, t\in {{\mathbb {R}}}\}\) has no periodic point, then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1\);

-

(4)

if \(\Lambda \) is aligned with \(v^{*}, *=s,u\) and \(\Lambda \) contains a periodic point with prime period q, then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1 - \lambda ^{-q}\);

-

(5)

\(\Lambda \) is aligned with the unstable direction \(v^u\), \(\Lambda \) has no periodic points but \(\{p_1+tv^u, t\in {{\mathbb {R}}}\}\) contains a periodic point of prime period q; if \(\Lambda \cap T^{-q}\Lambda = \emptyset \) then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1\); if \(\Lambda \cap T^{-q} \Lambda \ne \emptyset \) then \(\zeta (\varphi _\Lambda , \{u_n\}) = (1 - \lambda ^{-q})\frac{|p_2|}{l(\Lambda )}\);

-

(6)

\(\Lambda \) is aligned with the stable direction \(v^s\), \(\Lambda \) has no periodic points but \(\{p_1+tv^s, t\in {{\mathbb {R}}}\}\) contains a periodic point of prime period q; if \(\Lambda \cap T^{-q}\Lambda = \emptyset \) then \(\zeta (\varphi _\Lambda , \{u_n\}) = 1\); if \(\Lambda \cap T^{-q} \Lambda \ne \emptyset \) then \(\zeta (\varphi _\Lambda , \{u_n\}) = (1 - \lambda ^{-q})\frac{|p_2|}{l(\Lambda )}\).

Proof

We will only prove case (1), in which we will need the result of [3, Theorem 2.1 (1)]. The other cases use similar arguments and correspond to cases (2) to (6) of [3, Theorem 2.1].

Below we verify the assumptions of Theorem 5.2.

Put \(\delta _n = e^{-u_n}\). Then we see that \(U_n = \{y: \varphi _\Lambda (y) > u_n\} = B_{\delta _n}(\Lambda )\). Since \(\mu \) is the Lebesgue measure, it is straightforward to verify that Assumption 1 is satisfied with \(r_n = \delta _n^2 = e^{-2 u_n}\). See [3, Figure 1].

By the hyperbolicity of T, there exists \(C>0\) such that \({\text {diam}}{{\mathcal {A}}}^n < C\lambda ^{-n}\). This invites us to take

which guarantees that \({\text {diam}}{{\mathcal {A}}}^{\kappa _n} < r_n\). On the other hand, \(\mu (U_n)\lesssim e^{-u_n}l(\Lambda ) = {{\mathcal {O}}}(1/n)\), so item (i) of Theorem 5.2 is satisfied for any \(\varepsilon \in (0,1)\).

To prove (ii), we write \(\epsilon _j = {\text {diam}}{{\mathcal {A}}}^j\), and note that if \(A\in {{\mathcal {A}}}^j\) has non-empty intersection with \(B_{r_n}(\partial U_n)\), then \(A\subset B_{r_n+\epsilon _j}(\partial U_n)\). In particular,

Recalling that \(j\le \kappa _n = {\mathcal {O}}(u_n)\), we see that the right-hand side is exponentially small in j.

We are left with the extremal index \(\theta \) defined by (20). For this purpose, we choose \(K_n = (\log n)^5 \gg \kappa _n^2\). Now we estimate:

where the last inequality follows from [3, Section 3.3, page 16]. This shows that

finishing the proof of (iii) of Theorem 5.2. We conclude that

\(\square \)
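The orders of magnitude in this proof can be made tangible with a short computation. The sketch below is our own illustration and rests on assumptions that are not in the source: the constant in \({\text {diam}}{{\mathcal {A}}}^n < C\lambda ^{-n}\) is set to \(C=1\), and \(\kappa _n\) is taken to be the smallest integer with \(C\lambda ^{-\kappa _n}\le r_n = n^{-2}\). Under these assumptions it evaluates the expanding eigenvalue \(\lambda \), the quantity \(\kappa _n n^{-\varepsilon }\) appearing in item (i) (using \(\mu (U_n)={\mathcal {O}}(1/n)\)), and compares \(\kappa _n^2\) with the cut-off \(K_n=(\log n)^5\).

```python
import math

# Expanding eigenvalue of the matrix [[2, 1], [1, 1]] (trace 3, determinant 1).
LAMBDA = (3 + math.sqrt(5)) / 2

def kappa(n, C=1.0):
    """Smallest integer k with C * LAMBDA**(-k) <= n**(-2).
    The value C = 1 is an assumed constant, used only for illustration."""
    return max(1, math.ceil((2 * math.log(n) + math.log(C)) / math.log(LAMBDA)))

def K(n):
    """Time cut-off K_n = (log n)^5 chosen in the proof."""
    return math.log(n) ** 5

def item_i_quantity(n, eps=0.5):
    """kappa_n * n**(-eps), a proxy for kappa_n * mu(U_n)**eps when mu(U_n) = O(1/n)."""
    return kappa(n) * n ** (-eps)

for n in (10 ** 3, 10 ** 6, 10 ** 9):
    print(n, kappa(n), kappa(n) ** 2, K(n), item_i_quantity(n))
```

Since \(\kappa _n\) grows like \(\log n\) while \(K_n\) grows like \((\log n)^5\), the inequality \(K_n>\kappa _n^2\) only holds for n sufficiently large; with the assumed constant it fails for very small n.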

Notes

In most situations, the rate of convergence for the entry times statistics \(|{\mathbb {P}}(\tau _U>\frac{t}{\mu (U)}) -e^{-\alpha t}|\) is independent of t (see for example [1, 15]; also see [13], where the error is a linear multiple of t). The localized escape rate problem, however, requires an error that is exponentially small in t, with rate higher than \(\alpha _1\).

We note that for piecewise expanding maps on higher dimensions, one could potentially use the functional space constructed by Saussol [20] which is an analog of the BV space in one dimension.

The existence of stochastic processes that are polynomially \(\phi \)-mixing is proven in [17, Theorem 2]. However, we do not know if there are any dynamical system examples without spectral gap (over certain Banach spaces).

References

1. Abadi, M.: Hitting, returning and the short correlation function. Bull. Braz. Math. Soc. 37(4), 1–17 (2006)

2. Bruin, H., Demers, M., Todd, M.: Hitting and escaping statistics: mixing, targets and holes. Adv. Math. 328, 1263–1298 (2018)

3. Carney, M., Holland, M., Nicol, M.: Extremes and extremal indices for level set observables on hyperbolic systems. Nonlinearity 34(2), 1136–1167 (2021)

4. Demers, M., Todd, M.: Asymptotic escape rates and limiting distributions for multimodal maps. Preprint. Available at arXiv.org/abs/1905.05457

5. Demers, M., Young, L.-S.: Escape rates and conditionally invariant measures. Nonlinearity 19(2), 377–397 (2006)

6. Dreher, F., Kesseböhmer, M.: Escape rates for special flows and their higher order asymptotics. Ergod. Theory Dynam. Syst. 39(6), 1501–1530 (2019)

7. Faranda, D., Moreira Freitas, A.C., Milhazes Freitas, J., Holland, M., Kuna, T., Lucarini, V., Nicol, M., Todd, M., Vaienti, S.: Extremes and Recurrence in Dynamical Systems. Wiley, New York (2016)

8. Ferguson, A., Pollicott, M.: Escape rates for Gibbs measures. Ergod. Theory Dynam. Syst. 32(3), 961–988 (2012)

9. Freitas, A.C.M., Freitas, J.M., Todd, M.: The compound Poisson limit ruling periodic extreme behaviour of non-uniformly hyperbolic dynamics. Comm. Math. Phys. 321, 483–527 (2013)

10. Freitas, A.C.M., Freitas, J.M., Magalhães, M.: Convergence of marked point processes of excesses for dynamical systems. J. Eur. Math. Soc. 20, 2131–2179 (2018)

11. Freitas, A.C.M., Freitas, J.M., Rodrigues, F.B., Soares, J.V.: Rare events for Cantor target sets. Preprint. Available at arXiv.org/abs/1903.07200

12. Haydn, N., Vaienti, S.: The distribution of return times near periodic orbits. Probab. Theory Related Fields 144, 517–542 (2009)

13. Haydn, N., Vaienti, S.: Limiting entry times distribution for arbitrary null sets. Comm. Math. Phys. 378(1), 149–184 (2020)

14. Haydn, N., Yang, F.: Local escape rates for \(\varphi \)-mixing dynamical systems. To appear in Ergod. Theory Dynam. Syst. Preprint available at arXiv.org/abs/1806.07148

15. Hirata, M., Saussol, B., Vaienti, S.: Statistics of return times: a general framework and new applications. Comm. Math. Phys. 206(1), 33–55 (1999)

16. Keller, G., Liverani, C.: Rare events, escape rates and quasistationarity: some exact formulae. J. Stat. Phys. 135, 519–534 (2009)

17. Kesten, H., O’Brien, G.L.: Examples of mixing sequences. Duke Math. J. 43(2), 405–415 (1976)

18. Melbourne, I., Nicol, M.: Almost sure invariance principle for nonuniformly hyperbolic systems. Comm. Math. Phys. 260, 131–146 (2005)

19. Pollicott, M., Urbański, M.: Open conformal systems and perturbations of transfer operators. Lecture Notes in Mathematics, vol. 2206. Springer, Cham (2017)

20. Saussol, B.: Absolutely continuous invariant measures for multidimensional expanding maps. Israel J. Math. 116, 223–248 (2000)

21. Yang, F.: Rare event process and entry times distribution for arbitrary null sets on compact manifolds. Ann. Inst. Henri Poincaré, Probab. Stat. 57(2), 1103–1135 (2021)

22. Young, L.-S.: Statistical properties of dynamical systems with some hyperbolicity. Ann. Math. 147, 585–650 (1998)

23. Young, L.-S.: Recurrence time and rate of mixing. Israel J. Math. 110, 153–188 (1999)

Additional information

Communicated by Dmitry Dolgopyat.

Cite this article

Davis, C., Haydn, N. & Yang, F. Escape Rate and Conditional Escape Rate From a Probabilistic Point of View. Ann. Henri Poincaré 22, 2195–2225 (2021). https://doi.org/10.1007/s00023-021-01070-z

DOI: https://doi.org/10.1007/s00023-021-01070-z