Abstract

We investigate the spectral properties of discrete one-dimensional Schrödinger operators whose potentials are generated by sampling along the orbits of the Fibonacci subshift with a locally constant function. We present the fundamental trace map formalism for this model and relate it to the spectral features of the operators by extending a large body of work on the classical model, in which the sampling function depends only on a single entry of the sequence.

1 Introduction

The Fibonacci Hamiltonian is one of the most heavily studied Schrödinger operators, both in the physics literature and in the mathematics literature. It is the central model in the study of one-dimensional quasicrystals, and it exhibits interesting spectral phenomena, such as zero-measure Cantor spectrum and purely singular continuous spectral measures, in a persistent way. We refer the reader to the surveys [7,8,9] for background, known results, and discussion, as well as [16] for a recent study that essentially completes the spectral analysis of this model.

Let us briefly recall the definition of the Fibonacci Hamiltonian. In fact, there are two standard ways of generating the potential, either via a coding of a rotation or via the iteration of a primitive substitution.

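In the first setting, we consider the potential

\[ V_{\lambda ,\theta }(n) = \lambda \chi _{[1-\alpha ,1)}(n\alpha + \theta \mod 1), \quad n \in {{\mathbb {Z}}}, \]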

where \(\alpha = \frac{\sqrt{5}-1}{2}\) is the inverse of the golden ratio, \(\lambda > 0\), and \(\theta \in {{\mathbb {T}}}:= {{\mathbb {R}}}/ {{\mathbb {Z}}}\). Thus, we consider the circle, written in additive notation, the transformation given by rotation by \(\alpha \), and sample along the orbit of an initial point \(\theta \) under this transformation with a characteristic function of an interval, whose length happens to coincide with \(\alpha \). Whenever an iterate falls in this interval, we write down \(\lambda \); otherwise, we write down 0. The two-sided sequence generated in this way serves as the potential of the discrete one-dimensional Schrödinger operator

\[ [H_{\lambda ,\theta } u](n) = u(n+1) + u(n-1) + V_{\lambda ,\theta }(n) u(n) \]

in \(\ell ^2({{\mathbb {Z}}})\), which is called the Fibonacci Hamiltonian.

In the second setting, we start with the primitive substitution \(S_F : a \mapsto ab\), \(b \mapsto a\) on the alphabet \(\mathcal {A} = \{ a, b \}\). Iterating \(S_F\) on the symbol a, we obtain the words \(s_k := S_F^k(a)\), \(k \ge 0\). For example,

\[ s_0 = a, \quad s_1 = ab, \quad s_2 = aba, \quad s_3 = abaab, \quad s_4 = abaababa, \quad \ldots \tag{1.1} \]

Obviously, we have

\[ s_k = s_{k-1} s_{k-2}, \quad k \ge 2, \tag{1.2} \]

which follows quickly from the definition. Therefore, the “limit” \(u_F = S_F^\infty (a)\), called the Fibonacci sequence, makes sense as the unique one-sided infinite sequence that has each \(s_k\) as a prefix. This then gives rise to the Fibonacci subshift

\[ \Omega _F = \{ \omega \in \mathcal {A}^{{\mathbb {Z}}} : \text {every finite subword of } \omega \text { occurs in } u_F \}. \]

There is also a natural transformation on \(\Omega _F\), namely the shift \(T : \Omega _F \rightarrow \Omega _F\) given by \((T \omega )(n) := \omega (n+1)\). We again can define potentials by sampling along the orbit of an initial point \(\omega \in \Omega _F\) with a suitable sampling function, that is,

\[ V_\omega (n) = f(T^n \omega ), \quad n \in {{\mathbb {Z}}}, \]

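where

\[ f(\omega ) = \begin{cases} \lambda & \text {if } \omega _0 = a, \\ 0 & \text {if } \omega _0 = b. \end{cases} \tag{1.3} \]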

This gives rise to the discrete one-dimensional Schrödinger operator

\[ [H_{f,\omega } u](n) = u(n+1) + u(n-1) + V_\omega (n) u(n) \]

in \(\ell ^2({{\mathbb {Z}}})\).

It is by now well understood that for each fixed \(\lambda > 0\), the families \(\{ H_{\lambda ,\theta } \}_{\theta \in {{\mathbb {T}}}}\) and \(\{ H_{f,\omega } \}_{\omega \in \Omega _F}\) are essentially the same (see Footnote 1). Which of the two representations of the potentials is used often depends on the aspect that needs to be highlighted: either the (generalized) quasi-periodicity or the self-similarity.
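
The relation between the two descriptions can be checked numerically on the right half-line. The following Python sketch generates a prefix of \(u_F\) by iterating \(S_F\) and compares it with a rotation coding. The interval \([1-\alpha ,1)\) and the phase \(\theta = \alpha \) are assumptions made for this illustration (one common convention), and the function names are hypothetical.

```python
import math

ALPHA = (math.sqrt(5) - 1) / 2  # inverse of the golden ratio


def substitution_prefix(length):
    """Prefix of u_F obtained by iterating S_F: a -> ab, b -> a."""
    word = "a"
    while len(word) < length:
        word = "".join("ab" if c == "a" else "a" for c in word)
    return word[:length]


def rotation_prefix(length, theta=ALPHA):
    """Code the orbit n*ALPHA + theta (mod 1) by the interval [1 - ALPHA, 1).

    Assumption: the interval and the phase theta = ALPHA are one convention;
    other conventions differ by a shift or a reflection.
    """
    letters = []
    for n in range(length):
        point = (n * ALPHA + theta) % 1.0
        letters.append("a" if point >= 1 - ALPHA else "b")
    return "".join(letters)


prefix = substitution_prefix(200)
same = prefix == rotation_prefix(200)
print(prefix[:13], same)
```

The comparison uses floating-point arithmetic, which is adequate for short prefixes because the orbit points stay well away from the endpoints of the interval.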

Indeed, the self-similarity often turns out to be the more critical aspect, as it gives rise to the trace map, whose dynamical study underlies all the recent progress on this model; compare the papers [7,8,9, 16] mentioned above.

Given that the subshift perspective has turned out to be the critical one for the Fibonacci Hamiltonian, we can now explain our motivation for writing this paper. Once the Fibonacci subshift \(\Omega _F\), and hence the associated topological dynamical system \((\Omega _F,T)\), has been defined, the choice of sampling function given by (1.3) appears to be rather special. Note that \(f(\omega )\) only depends on one entry of \(\omega \). It is therefore a locally constant function on \(\Omega _F\) of a very special form. From the general perspective of dynamically defined Schrödinger operators (cf. [8, 12]), it would be natural to start with the general class of continuous sampling functions \(f : \Omega _F \rightarrow {{\mathbb {R}}}\) and to only impose additional conditions as they become necessary for the proofs of the desired results.

Inspecting now the known results for the Fibonacci Hamiltonian, one observes that only the statement that the spectrum is a Cantor set of zero Lebesgue measure has been established for sampling functions more general than the ones in (1.3). Indeed, Damanik and Lenz showed in [21] that this spectral property holds for all potentials \(V_\omega (n) = f(T^n \omega )\), \(\omega \in \Omega \), provided that \(\Omega \) is a subshift that satisfies the Boshernitzan condition (cf. [1, 2]) and \(f : \Omega \rightarrow {{\mathbb {R}}}\) is locally constant. In [22], the same authors provide many examples of subshifts \(\Omega \) that satisfy the Boshernitzan condition, and in particular the Fibonacci subshift \(\Omega _F\) is among them. All of the other known results for the Fibonacci Hamiltonian have been proved only for sampling functions of the form (1.3).

Having established that it is natural to investigate whether those spectral results extend to more general sampling functions (in a setting for which we fix assumptions and notation in Sect. 2), let us briefly comment on the immediate obstacle that needs to be overcome. The works [21, 22] rely only on the Boshernitzan condition and hence make no use of the self-similarity and the resulting trace map dynamical system. On the other hand, all the other results rely heavily on the trace map approach. If one considers a general locally constant \(f : \Omega _F \rightarrow {{\mathbb {R}}}\), the very first objective is to clarify whether the resulting operator family can indeed be studied via the Fibonacci trace map (see Footnote 2). This is discussed in Sect. 3.

From this point on, the analysis bifurcates. There are statements and proofs that rely merely on the trace map description of the spectrum and make use of it only in the sense of a recursion. This concerns most of the results obtained for the standard Fibonacci Hamiltonian prior to 2008. On the other hand, most of the developments around the standard Fibonacci Hamiltonian since 2008 make heavy use of properties of the Fibonacci trace map as a hyperbolic dynamical system and employ methods and tools from the theory of such maps.

Some of the extensions will be rather straightforward, while others will require some work. Let us point out here that we found the following items to be crucial and non-trivial in the course of our investigation of this model:

- the matrix recursion and the resulting trace map formalism are no longer a direct consequence of the Fibonacci substitution rule,
- the property \(x_{-1} = 1\) does not extend from the standard case to the general case,
- the proof of power-law bounds on transfer matrices for energies in the spectrum and all elements of the subshift requires considerations in both directions, and
- the curve of initial conditions is in general no longer a line and does not lie inside a single invariant surface of the trace map.

We will naturally give detailed arguments whenever the extension to the general case presents some obstacles or difficulties, but we will only sketch the proof of statements that largely follow the same arguments as the proof of the corresponding statement in the standard case.

In the discussion above, we have motivated our desire to extend the work on the Fibonacci Hamiltonian from sampling functions of the form (1.3) to general locally constant sampling functions intrinsically. Here we point out that there exists additional motivation. Namely, the paper [11] studied a certain class of quantum graphs, which may be reduced to suitable direct sums of generalized Sturm–Liouville operators. The combinatorial data of the quantum graphs studied in [11] generate the parameters of the resulting generalized Sturm–Liouville operators in a locally constant way, which, however, does not depend on a window of size one, but rather on a window of size two. This naturally prompts one to extend, in the Fibonacci case (which prominently features there, too), the known results for window size one to greater window sizes. Indeed, the extension from size one to size two already presents the same difficulties as the extension from size one to a general larger size, and hence, one should immediately work out the extension in the general case (which is exactly what we do in the present paper).

2 The Setting

In this short section, we fix the setting in which we work throughout the rest of the paper.

Given the Fibonacci subshift \(\Omega _F\) as defined above, we generate potentials by sampling \(T^n \omega \), \(\omega \in \Omega _F\), \(n \in {{\mathbb {Z}}}\), with an arbitrary non-constant locally constant sampling function \(f : \Omega _F \rightarrow {{\mathbb {R}}}\).

Remark 2.1

It is not hard to see that the resulting potentials are quasi-Sturmian, and hence, we obtain a subclass of the operators studied in [20]. However, since the underlying subshift is Fibonacci, it is important to clarify the precise nature of the resulting trace map dynamics since much more is known for the Fibonacci trace map (especially the part of the theory that employs hyperbolic dynamics!) than for the trace map recursions used in the analysis of a general quasi-Sturmian operator. Concretely, while some of our results only recover what is known in the quasi-Sturmian case (and for these results, our proofs are quite succinct and omit details), others are not known in the general quasi-Sturmian case and their proofs require the more detailed analysis we carry out based on the Fibonacci trace map with a more general curve of initial conditions.

Since a locally constant function depends only on finitely many entries, we will assume (essentially without loss of generality) that there is an \(N \in {{\mathbb {Z}}}_+\) such that \(f(\omega )\) is completely determined by the N entries \(\omega _0, \ldots , \omega _{N-1}\). It is well known that the Fibonacci sequence \(u_F\) is Sturmian, that is, for every \(n \ge 1\) it has precisely \(n+1\) distinct subwords of length n; in particular, it has precisely \(N+1\) subwords of length N. This property extends to all elements of \(\Omega _F\), and hence, our function f is determined by \(N+1\) real numbers \(\lambda _1, \ldots , \lambda _{N+1}\), corresponding to the values of \(f(\omega )\) as \(\omega _0 \ldots \omega _{N-1}\) runs through all the allowed subwords of length N. Fixing an order on this set of subwords of length N once and for all, the possible f’s in question are then parametrized by \(\lambda = (\lambda _1, \ldots , \lambda _{N+1}) \in {{\mathbb {R}}}^{N+1}\), where the non-constancy of f corresponds to the assumption that not all \(\lambda _i\) are equal; such \(\lambda \)’s will be called non-degenerate. The resulting potentials will then be denoted by \(V_{\lambda ,\omega }\).
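
The subword count can be confirmed experimentally on a long prefix of \(u_F\). The sketch below is only an illustration; the helper names and the prefix length 2000 are our choices, and the prefix is assumed to be long enough to contain every subword of the lengths tested.

```python
def fibonacci_prefix(length):
    """Prefix of u_F obtained by iterating S_F: a -> ab, b -> a."""
    word = "a"
    while len(word) < length:
        word = "".join("ab" if c == "a" else "a" for c in word)
    return word[:length]


def subwords(word, n):
    """Distinct subwords of length n, in order of first appearance."""
    seen = []
    for i in range(len(word) - n + 1):
        w = word[i:i + n]
        if w not in seen:
            seen.append(w)
    return seen


u = fibonacci_prefix(2000)
# Number of distinct subwords of each length n = 1, ..., 8.
counts = {n: len(subwords(u, n)) for n in range(1, 9)}
print(counts)
```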

Lemma 2.2

If \(\lambda \) is non-degenerate, then \(V_{\lambda ,\omega }\) is non-periodic for every \(\omega \in \Omega _F\).

Before giving the proof of the lemma, we recall a useful fact: there are elements \(\omega _F \in \Omega _F\) that coincide with the Fibonacci sequence \(u_F\) when restricted to the right half-line \(\{ 0, 1, 2, \ldots \}\). In fact, there are precisely two such elements. We fix one of them for definiteness; the subsequent considerations will be independent of this choice.

Proof of Lemma 2.2

Suppose \(\lambda \) is such that \(V_{\lambda ,\omega }\) is periodic for some \(\omega \in \Omega _F\). We have to show that \(\lambda \) is degenerate.

Note first that, by minimality, either all \(V_{\lambda ,\omega }\) are periodic or none of them are. In particular, our assumption implies that \(V_{\lambda ,\omega _F}\) is periodic.

Denote the period of \(V_{\lambda ,\omega _F}\) by \(m \in {{\mathbb {Z}}}_+\). It is well known that the sequence \(\{ F_k \mod m \}_{k \ge 0}\) is periodic; see, for example, [34]. Since \(F_0 = 1\), this implies that \(F_k \equiv 1 \mod m\) for infinitely many values of \(k \ge 0\).

Choose k large enough so that

- (i) \(F_{k} \equiv 1 \mod m\),
- (ii) \(F_{k-2} \ge m\), and
- (iii) \(F_{k-3} \ge N\).

Denote the values of \(V_{\lambda ,\omega _F}\) over one period, \((f(T^n \omega _F))_{n=0}^{m-1}\), by \((V_1, \cdots , V_m)\). By (1.2) and items (ii) and (iii) above, we have

On the other hand, since \(\{f(T^n \omega _F)\}_{n \in {\mathbb {Z}}}\) is m-periodic, item (i) implies

In conclusion, we have

and this implies \(V_i = V_j\) for all \(1 \le i,j \le m\). Thus, \(V_{\lambda ,\omega _F}\) is constant, which in turn implies that \(\lambda \) is degenerate. \(\square \)
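
The arithmetic fact used in the proof, namely that \(\{ F_k \mod m \}\) is periodic and therefore returns to the value \(F_0 = 1\) infinitely often, can be illustrated as follows. This is a minimal sketch with hypothetical function names; the modulus \(m = 7\) is an arbitrary choice.

```python
def fibonacci_mod(m, count):
    """F_0 = 1, F_1 = 2, F_{k+1} = F_k + F_{k-1}, reduced mod m."""
    values = [1 % m, 2 % m]
    while len(values) < count:
        values.append((values[-1] + values[-2]) % m)
    return values[:count]


def period_mod(m):
    """Smallest p >= 1 with (F_p, F_{p+1}) = (F_0, F_1) mod m.

    The loop terminates because (x, y) -> (y, x + y) is invertible mod m,
    so the orbit of the starting pair is purely periodic.
    """
    start = (1 % m, 2 % m)
    pair, p = start, 0
    while True:
        pair = (pair[1], (pair[0] + pair[1]) % m)
        p += 1
        if pair == start:
            return p


m = 7
p = period_mod(m)
# Indices k < 3p with F_k = 1 mod m; they include 0, p, 2p, ...
hits = [k for k, v in enumerate(fibonacci_mod(m, 3 * p)) if v == 1 % m]
print(p, hits)
```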

In the remainder of the paper, we will study the spectral properties of the operators \(H_{\lambda ,\omega }\) in \(\ell ^2({{\mathbb {Z}}})\) given by

\[ [H_{\lambda ,\omega } u](n) = u(n+1) + u(n-1) + V_{\lambda ,\omega }(n) u(n). \]

Let us discuss an example to clarify the setting and the notation.

Example 2.3

Let us consider the case \(N = 3\). That is, the sampling function f depends on the three entries \(\omega _0, \omega _1, \omega _2\) of its argument \(\omega \in \Omega _F\). The first step is to determine the subwords of length 3 that appear in the Fibonacci sequence \(u_F\) and to fix an order. It is easy to check that these words are given by the \(4 = N+1\) words

and we will order them in the way just specified.

Next, given a non-degenerate \(\lambda \in {{\mathbb {R}}}^4\) and an \(\omega \in \Omega _F\), we want to determine the values of the potential.

Suppose the \(\omega \) in question looks like

around the origin, where the vertical bar denotes the position between the entries \(\omega _{-1}\) and \(\omega _0\).

Suppose further that \(\lambda = (1,2,3,4)\). Then, for example,

Continuing in this fashion, we find that \(V_{\lambda ,\omega }\) looks like

around the origin, where the vertical bar again sits between \(V_{\lambda ,\omega }(-1)\) and \(V_{\lambda ,\omega }(0)\).
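
The mechanics of this example can be reproduced in a few lines of Python. The sketch below works on the right half-line only, with \(u_F\) in place of a two-sided \(\omega \), and it orders the subwords of length 3 by first appearance in \(u_F\); this ordering is an assumption made for the illustration and need not coincide with the order fixed above. All function names are hypothetical.

```python
def fibonacci_prefix(length):
    """Prefix of u_F obtained by iterating S_F: a -> ab, b -> a."""
    word = "a"
    while len(word) < length:
        word = "".join("ab" if c == "a" else "a" for c in word)
    return word[:length]


def subwords_in_order(word, n):
    """Distinct subwords of length n, in order of first appearance."""
    seen = []
    for i in range(len(word) - n + 1):
        if word[i:i + n] not in seen:
            seen.append(word[i:i + n])
    return seen


def sampled_potential(word, lam, order):
    """V(n) = lam[i] whenever word[n:n+N] equals order[i]."""
    window = len(order[0])
    value = dict(zip(order, lam))
    return [value[word[n:n + window]] for n in range(len(word) - window + 1)]


u = fibonacci_prefix(40)
order = subwords_in_order(u, 3)  # assumption: order of first appearance
potential = sampled_potential(u, (1, 2, 3, 4), order)
print(order)
print(potential[:8])
```

Note that the value at site n depends on the entries at sites n, n+1, n+2, which is the look-ahead that plays a role in Sect. 3.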

3 The Self-similarity Relation and the Trace Map

In this section, we recall the transfer matrix formalism that is a standard tool in the spectral analysis of one-dimensional Schrödinger operators, and we exhibit self-similarity properties of the transfer matrices in the setting we consider, which then lead to the realization that the standard Fibonacci trace map can be associated with our more general model. This will then be the starting point for the work presented in the subsequent sections, which employ the trace map, either as a recursion or as a hyperbolic dynamical system, in an essential way.

Given a potential \(V_{\lambda ,\omega }\) generated by the Fibonacci subshift and a locally constant sampling function f, where \(\lambda = (\lambda _1, \ldots , \lambda _{N+1}) \in {{\mathbb {R}}}^{N+1}\) and \(\omega \in \Omega _F\), as described at the end of the previous section, we consider the associated difference equation

\[ u(n+1) + u(n-1) + V_{\lambda ,\omega }(n) u(n) = E u(n), \tag{3.1} \]

where \(n \in {{\mathbb {Z}}}\) and \(E \in {{\mathbb {R}}}\). It is well known that the properties of the solutions u to the difference equation (3.1) are intimately connected to the spectral properties of the Schrödinger operator \(H_{\lambda ,\omega }\) in \(\ell ^2({{\mathbb {Z}}})\) with potential \(V_{\lambda ,\omega }\); compare, for example, [8, 12].

The solutions to (3.1) can be expressed via transfer matrices,

which are defined by \((T,A_{\lambda ,E})^n = (T^n,A_{\lambda ,E}^n)\), where

and

(Recall that we are implicitly using the association \(f \leftrightarrow \lambda \) explained earlier.)

It is an essential feature of the standard Fibonacci Hamiltonian that a certain sequence of transfer matrices of this type satisfies a recursion. It is our immediate goal to extend this feature to the general locally constant case we consider.

In the standard Fibonacci case, this sequence of matrices is easy to define: it is simply the sequence of (energy-dependent) matrices that map across the words \(s_k = S_F^k(a)\). In the general case at hand, we cannot define the matrices in this way because they are defined using a “look-ahead” that takes one outside the word \(s_k\).

Definition. Let \(\omega _F \in \Omega _F\) be an element that coincides with the Fibonacci sequence \(u_F\) when restricted to the right half-line \(\{ 0, 1, 2, \ldots \}\). With the Fibonacci numbers \(\{ F_k \}\) given by \(F_0 = 1\), \(F_1 = 2\), and \(F_{k+1} = F_k + F_{k-1}\), \(k \ge 1\), we set

Since the look-ahead points to the right, the definition of the \(M_k\) is independent of the choice of \(\omega _F\) (recall that there are two possible choices; see Footnote 3).

The following lemma addresses the first issue one encounters when passing from window size one to the general case.

Lemma 3.1

Let

Then, we have

for every \(\lambda \in {{\mathbb {R}}}^{N+1}\), \(E \in {{\mathbb {R}}}\), and \(k \ge k_0\).

Proof

Applying (1.2) several times, we find

Thus, since \(s_{k-2}\) is a prefix of \(s_k\), which in turn is a prefix of \(u_F\), (3.4) follows from (3.2) and (3.3) since the length \(F_{k-2}\) of \(s_{k-2}\) exceeds the look-ahead \(N-1\) that is necessary to define the matrices. \(\square \)
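
The role of the look-ahead can be tested with exact rational arithmetic. The sketch below assumes the usual one-step matrices with rows \((E - V(n), -1)\) and \((1, 0)\), takes \(M_k\) to be the product over the sites \(0, \ldots , F_k - 1\) of \(u_F\), and assumes that the recursion has the standard form \(M_{k+1} = M_{k-1} M_k\). These conventions, the window size \(N = 3\), and the sampling values are assumptions for illustration only.

```python
from fractions import Fraction


def fibonacci_prefix(length):
    """Prefix of u_F obtained by iterating S_F: a -> ab, b -> a."""
    word = "a"
    while len(word) < length:
        word = "".join("ab" if c == "a" else "a" for c in word)
    return word[:length]


def fib(k):
    """F_0 = 1, F_1 = 2, F_{k+1} = F_k + F_{k-1}."""
    a, b = 1, 2
    for _ in range(k):
        a, b = b, a + b
    return a


def matmul(p, q):
    """Product of two 2x2 matrices given as nested tuples."""
    (a, b), (c, d) = p
    (e, f), (g, h) = q
    return ((a * e + b * g, a * f + b * h), (c * e + d * g, c * f + d * h))


def transfer_matrix(k, energy, sample, window, word):
    """Product of one-step matrices over sites 0, ..., F_k - 1 of word."""
    m = ((Fraction(1), Fraction(0)), (Fraction(0), Fraction(1)))
    for n in range(fib(k)):
        v = sample[word[n:n + window]]  # look-ahead of window - 1 sites
        step = ((energy - v, Fraction(-1)), (Fraction(1), Fraction(0)))
        m = matmul(step, m)  # later sites multiply from the left
    return m


# Hypothetical sampling function with window N = 3 (arbitrary values).
sample = {"aba": 1, "baa": 2, "aab": 3, "bab": 4}
word = fibonacci_prefix(200)
energy = Fraction(1, 2)
M = {k: transfer_matrix(k, energy, sample, 3, word) for k in range(3, 10)}
recursion_holds = all(M[k + 1] == matmul(M[k - 1], M[k]) for k in range(4, 9))
print(recursion_holds)
```

Exact fractions are used so that the matrix identity can be compared with `==` rather than with a floating-point tolerance.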

Remark 3.2

If we want the recursion (3.4) to hold also for \(k < k_0\), we can force this by iteratively redefining the matrices

for \(k = k_0-2, k_0-3, \ldots \). Of course, this will come at the price of the matrices \(M_k\), \(k \le k_0 - 2\), not corresponding to actual transfer matrices. Thus, one needs to be careful in all arguments that use this interpretation of the matrices to ensure that k is large enough.

It is well known that the matrix recursion (3.4) gives rise to the recursion

\[ x_{k+1} = 2 x_k x_{k-1} - x_{k-2} \tag{3.5} \]

for the variables

\[ x_k = \tfrac{1}{2} \mathrm {Tr} \, M_k. \]

To explain the terminology, note that (3.5) can be rewritten as

\[ (x_{k+1}, x_k, x_{k-1}) = T(x_k, x_{k-1}, x_{k-2}) \]

with the so-called Fibonacci trace map

\[ T(x,y,z) = (2xy - z, x, y). \tag{3.7} \]

The specific properties of T will not play a role in the present section and Sect. 4, as all arguments in this part of the paper are based on a study of (3.5) as a recursion. However, for our work in Sect. 5 on the extension of the results for the classical Fibonacci Hamiltonian whose proofs involve (partially) hyperbolic dynamics, the specific properties of the map T, and especially the fact that, once it is restricted to one of its invariant surfaces, the non-wandering set is hyperbolic, will be absolutely crucial.

The recursion (3.5) will hold for \(k \ge k_0 + 1\) if we use the actual transfer matrices, and it will hold for all \(k \in {{\mathbb {Z}}}\) if we use the redefined matrices; compare Remark 3.2.
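
As a reminder of why the index shifts by one, here is a sketch of the standard derivation, under the assumptions that (3.4) has the usual form \(M_{k+1} = M_{k-1} M_k\), that \(\det M_k = 1\), and that \(x_k = \frac{1}{2} \mathrm {Tr} \, M_k\) (the symbol \(\mathrm {Id}\) for the identity matrix is notation introduced only here). By the Cayley–Hamilton theorem,

\[ M_{k-1} + M_{k-1}^{-1} = (\mathrm {Tr} \, M_{k-1}) \, \mathrm {Id}. \]

Multiplying from the right by \(M_k\) and using \(M_{k-1} M_k = M_{k+1}\) together with \(M_k = M_{k-2} M_{k-1}\), we obtain

\[ M_{k+1} + M_{k-1}^{-1} M_{k-2} M_{k-1} = (\mathrm {Tr} \, M_{k-1}) \, M_k. \]

Taking traces and using the invariance of the trace under conjugation gives \(\mathrm {Tr} \, M_{k+1} + \mathrm {Tr} \, M_{k-2} = \mathrm {Tr} \, M_{k-1} \, \mathrm {Tr} \, M_k\), that is, \(x_{k+1} = 2 x_k x_{k-1} - x_{k-2}\). The argument uses the matrix recursion at the two levels k and \(k-1\), which is consistent with the shift from \(k_0\) to \(k_0 + 1\).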

Remark 3.3

In the remainder of the paper, we will consider the variables \(\{ x_k \}_{k \in {{\mathbb {Z}}}}\) only in the redefined sense, that is, when we talk about \(x_k\) for some \(k < k_0 - 1\), we are referring to the value that is obtained by solving the recursion (3.5) backwards, starting from \(k_0 + 1\).

It is also well known that any solution of the recursion (3.5) must obey an invariance relation. Namely, the quantity

\[ I = x_{k+1}^2 + x_k^2 + x_{k-1}^2 - 2 x_{k+1} x_k x_{k-1} - 1 \tag{3.8} \]

is independent of \(k \in {{\mathbb {Z}}}\). With our variables, we note that I depends on both \(\lambda \) and E. We will write I(E) or \(I(E,\lambda )\) whenever we want to make this dependence explicit. Also, foreshadowing Sect. 5, let us rephrase (3.8) in terms of the map T defined in (3.7): with the so-called Fricke–Vogt invariant

\[ I(x,y,z) = x^2 + y^2 + z^2 - 2xyz - 1, \]

we have

\[ I(T(x,y,z)) = I(x,y,z). \]

Even in the standard case, it is helpful to solve the recursion (3.5) backwards and compute the values at least up to index \(k = -1\). One then realizes two things:

- (i) The variable \(x_{-1}\) takes the value 1, independently of E and \(\lambda \).
- (ii) The variables \(x_0\) and \(x_1\) take the values \(\frac{E}{2}\) and \(\frac{E - \lambda }{2}\), respectively.

Item (i) is crucial in the formulation and proof of Sütő’s central Lemma 2 in [33]. Item (ii) (combined with item (i)) means that the map \(E \mapsto (x_{1},x_0,x_{-1})\) is affine and the image is a line. This so-called line of initial conditions is a central object in the part of the analysis of the standard Fibonacci Hamiltonian that employs hyperbolic dynamics.
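
For the standard case, the invariance relation along the line of initial conditions can be verified with exact arithmetic. The sketch below assumes the standard forms \(T(x,y,z) = (2xy - z, x, y)\) of the trace map and \(x^2 + y^2 + z^2 - 2xyz - 1\) of the Fricke–Vogt invariant; the coupling \(\lambda = 3/2\), the grid of energies, and the function names are arbitrary choices for illustration.

```python
from fractions import Fraction


def trace_map(point):
    """Fibonacci trace map T(x, y, z) = (2xy - z, x, y)."""
    x, y, z = point
    return (2 * x * y - z, x, y)


def fricke_vogt(point):
    """Fricke-Vogt invariant x^2 + y^2 + z^2 - 2xyz - 1."""
    x, y, z = point
    return x * x + y * y + z * z - 2 * x * y * z - 1


def standard_initial_point(energy, coupling):
    """(x_1, x_0, x_{-1}) for the standard model, from items (i) and (ii)."""
    return ((energy - coupling) / 2, energy / 2, Fraction(1))


coupling = Fraction(3, 2)
values = set()
for numerator in range(-8, 9):
    point = standard_initial_point(Fraction(numerator, 4), coupling)
    for _ in range(6):
        values.add(fricke_vogt(point))
        point = trace_map(point)
print(values)
```

On the line of initial conditions the invariant reduces to \(((E - \lambda )/2 - E/2)^2 = \lambda ^2/4\), so a single value is collected regardless of the energy and of the number of iterations.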

The next lemma will address the absence of item (i) in the general case. We will deal with the absence of item (ii) in the general case in Sect. 5.

Lemma 3.4

Let the sequence \(\{x_k\}_{k \in {{\mathbb {Z}}}}\) be defined as above and set

\[ b := \max \{ 1, |x_{-1}| \}. \]

Then, \(\{x_k\}_{k \ge 0}\) is unbounded if and only if there exists \(K \ge 0\) such that

\[ |x_{K-1}| \le b, \quad |x_K| > b, \quad |x_{K+1}| > b. \tag{3.12} \]

If such a K exists, it is unique, and we have \(|x_{k+2}|> |x_{k+1} x_k| > b\) for every \(k \ge K\), and there exists \(d > 1\) such that

\[ |x_k| > d^{F_{k-K}} \]

for every \(k \ge K\).

On the other hand, if no such K exists, then

for every \(k \ge 0\).

Proof

Suppose that (3.12) holds for some \(K \ge 0\). Then, by (3.5), the triangle inequality, and the assumptions,

By induction we get \(|x_{k+2}| > |x_{k+1} x_k|\) for every \(k \ge K\). Since \(\log |x_{k+2}| > \log |x_{k+1}| + \log |x_k|\) shows that \(\log |x_k|\) increases faster than the Fibonacci sequence, we find \(|x_k| > d^{F_{k-K}}\) for some \(d > 1\) and all \(k \ge K\). Note that

if \(k = K\). It is obvious that the above inequalities cannot simultaneously hold for any other value of k, and hence, K is unique.

Suppose now that (3.12) fails for every \(K \ge 0\). If \(|x_k| > b\) for some \(k \ge 0\), we must have \(|x_{k-1}| \le b\) and \(|x_{k+1}| \le b\). Otherwise, (3.12) holds for some \(K \le k\) since \(|x_{-1}| \le b\).

Observe that

recall definition (3.8) of I.

By combining the inequalities above, we find

We claim that the maximum of the right-hand side of (3.14), subject to \(|x_{k-1}|, | x_{k+1}| \le b\), is attained at \(|x_{k-1}|, | x_{k+1}| = b\). This yields

as claimed in (3.13).

Let us show this claim. If \(b = 1\), the assertion holds by [33].

Consider the case \(1 < b \le \sqrt{2}\). By [33], the right-hand side of (3.14) does not attain its maximum at \(|x_{k-1} |,|x_{k+1}|< 1\). If it attains its maximum at \(1 \le |x_{k-1} |, |x_{k+1}|< b\), then we have

which is a contradiction. If it attains its maximum at \(1 \le |x_{k+1}| < b\) and \(|x_{k-1} | < 1\), then

which again is a contradiction.

Finally, consider the case \(b \ge \sqrt{2}\). If \(1 - x^2_{k-1} \ge 0\) and \(1 - x^2_{k+1} \ge 0\), then

If \(1 - x^2_{k-1}< 0\) and \(1 - x^2_{k+1}< 0\), then

If \((1 - x^2_{k-1})(1 - x^2_{k+1}) \le 0\), then

This covers all cases and hence proves the claim. \(\square \)
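
The escape mechanism behind the lemma can be observed on explicit orbits of the recursion. The sketch below assumes that the escape condition reads \(|x_{K-1}| \le b\), \(|x_K| > b\), \(|x_{K+1}| > b\) with \(b = \max \{ 1, |x_{-1}| \}\), and it uses the standard initial values \(x_{-1} = 1\), \(x_0 = E/2\), \(x_1 = (E - \lambda )/2\) with the arbitrary choice \(E = 0\), \(\lambda = 1\). The function names are hypothetical.

```python
from fractions import Fraction


def trace_orbit(x_minus1, x0, x1, kmax):
    """Return {k: x_k} for -1 <= k <= kmax via x_{k+1} = 2 x_k x_{k-1} - x_{k-2}."""
    x = {-1: x_minus1, 0: x0, 1: x1}
    for k in range(1, kmax):
        x[k + 1] = 2 * x[k] * x[k - 1] - x[k - 2]
    return x


def escape_index(x, kmax):
    """Smallest K >= 0 with |x_{K-1}| <= b < |x_K|, |x_{K+1}| (None if absent)."""
    b = max(1, abs(x[-1]))
    for K in range(0, kmax):
        if abs(x[K - 1]) <= b and abs(x[K]) > b and abs(x[K + 1]) > b:
            return K
    return None


# Standard case with E = 0 and lambda = 1 (values chosen for illustration).
kmax = 10
x = trace_orbit(Fraction(1), Fraction(0), Fraction(-1, 2), kmax)
K = escape_index(x, kmax)
# After the escape index, each term dominates the product of the previous two.
grows = K is not None and all(
    abs(x[k + 2]) > abs(x[k + 1] * x[k]) for k in range(K, kmax - 1)
)
print(K, grows)
```

A return value of `None` only means that no escape was detected up to index `kmax`; it does not by itself show that the orbit is bounded.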

4 Extension of Classical Results Not Relying on Hyperbolicity

In this section, we discuss those results for our generalized Fibonacci Hamiltonian whose proofs do not make use of the hyperbolicity of the trace map. This corresponds roughly to the results for the standard Fibonacci Hamiltonian that were obtained prior to 2008.

4.1 Uniformity of the Lyapunov Exponent and Zero-Measure Spectrum

The first result is stated merely for completeness, as it is already known. As was discussed in the Introduction, the zero-measure property of the spectrum is actually the only result that was already known for the generalized Fibonacci Hamiltonian, generated by a general locally constant sampling function over the Fibonacci subshift, and its proof can be given without trace map considerations. It proceeds by showing that the associated Schrödinger cocycles are uniform via [21, 22] and then appealing to results by Johnson [26] and/or Lenz [28] and Kotani [27].

Theorem 4.1

Fix a non-degenerate \(\lambda \in {{\mathbb {R}}}^{N+1}\). Then, for every \(E \in {{\mathbb {R}}}\), there is \(L_\lambda (E) \ge 0\), called the Lyapunov exponent, such that

\[ \lim _{n \rightarrow \infty } \frac{1}{n} \log \Vert A^n_{\lambda ,E}(\omega ) \Vert = L_\lambda (E) \quad \text {uniformly in } \omega \in \Omega _F. \tag{4.1} \]

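Moreover, there is a Cantor set \(\Sigma _\lambda \) of zero Lebesgue measure such that

\[ \sigma (H_{\lambda ,\omega }) = \Sigma _\lambda \quad \text {for every } \omega \in \Omega _F. \tag{4.2} \]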

In fact, with \(\mathcal {Z}_\lambda := \{ E \in {{\mathbb {R}}}: L_\lambda (E) = 0 \}\), we have

\[ \Sigma _\lambda = \mathcal {Z}_\lambda . \tag{4.3} \]

Proof

The \(\omega \)-independence of the spectrum (4.2) is an immediate consequence of the minimality of \((\Omega _F,T)\) and the continuity of the sampling function. The uniform convergence statement (4.1) follows from the general theory developed in [21, 22], where it is derived from three ingredients: aperiodicity, the Boshernitzan condition for the subshift, and the local constancy of the sampling function. Once the uniform convergence statement (4.1) has been established, (4.3) follows from [26, 28]. Moreover, by [27] and Lemma 2.2, the set \(\mathcal {Z}_\lambda \) has zero Lebesgue measure. Finally, \(\Sigma _\lambda \) is compact and has no isolated points by general principles. \(\square \)

It should be noted that the approach from [21, 22] does not give any additional information about the fractal properties of the Cantor set in question, while the trace map approach does. Thus, even when the results from [21, 22] cover a given model, it is still worthwhile to explore the trace map approach to the given model, as one can obtain additional information in this way.

4.2 The Description of the Spectrum in Terms of the Trace Map

Recall that the \(x_k\) are E-dependent and that \(b = b(E)\) is defined by \(b = \max \{ 1, |x_{-1}| \}.\) Let, for \(k \ge 0\),

and

Proposition 4.2

The set \(B^c_\infty \) is open, and

for every \(k_0 \ge 0\). Moreover,

for every \(k \ge 0\).

Proof

By Lemma 3.4,

is a disjoint decomposition of \(B^c_\infty .\) Obviously,

for all \(k \ge 0\), and we therefore have

This implies (4.4) and also that \(B^c_\infty \) is open, since \(\rho _k\) is open for every k. Finally, \(\rho _k \cap \rho _{k+1} \subseteq \bigcap _{k' = k}^\infty \rho _{k'}\) is a consequence of Lemma 3.4 and the other inclusion is trivial. \(\square \)

The next step is to identify the set \(\sigma _k\) as the spectrum of a suitable periodic Schrödinger operator.

Definition. Let \(k \ge k_0\) with \(k_0\) as defined in (3.3). Consider the periodic sequence

where as usual the bar is located between positions \(-1\) and 0. Define the \(F_k\)-periodic potential \(V^{(k)}\) by

and consider the associated periodic Schrödinger operator

in \(\ell ^2({{\mathbb {Z}}})\).

Lemma 4.3

For every \(k \ge k_0\), we have

Moreover, for the common spectrum \(\Sigma _\lambda \) of the operators \(\{ H_{\lambda ,\omega } \}_{\omega \in \Omega _F}\), we have

Proof

The identity \(\sigma (H^{(k)}) = \{ E \in {{\mathbb {R}}}: |x_k(E)| \le 1 \}\) in (4.7) follows from standard Floquet theory, and the inclusion \(\{ E \in {{\mathbb {R}}}: |x_k(E)| \le 1 \} \subseteq \sigma _k\) follows from the definition of \(\sigma _k\).

One inclusion underlying the identity (4.8) is essentially a consequence of strong convergence. There is a subtlety, however. It is not true that the sequence \(\{ H^{(k)} \}_{k \ge 0}\) converges strongly, because to the left of the bar the two rightmost values do not (in general) stabilize. Namely, the last two symbols of the words \(s_k\) (for \(k \ge 1\)) alternate between ab and ba; compare (1.1) (the statement follows by a quick induction from (1.2)). This can be dealt with by considering suitable translates of the operators \(H^{(k)}\), which of course have the same spectrum.

In order to choose suitable translates that ensure strong convergence of the associated sequence of operators, recall that by (1.2), we have \(s_k = s_{k-1} s_{k-2}\). Thus, if we arrange the period relative to the origin as follows,

then, as we move from level k to \(k+1\), the two sequences coincide on at least \(F_{k-1}\) positions to the left of the bar and on at least \(F_{k-2}\) positions to the right of the bar. This shows that the sequence of associated operators (\(H^{(k)}\) defined as before for k even, and shifted to the left by \(F_{k-1}\) positions for k odd) converges strongly to \(H_{\omega _F}\). Thus,

where we used (4.2) in the first line, a standard strong operator convergence result in the second line, (4.7) in the third line, Proposition 4.2 in the fourth line and the sixth line, and the fifth line is obvious.

Conversely, the inclusion \(B_\infty \subseteq \sigma (H_{\omega _F}) = \Sigma _\lambda \) follows as in the standard case using the two-block Gordon argument along the sequence of the lengths \(\{ F_k \}\). Namely, due to the presence of squares to the right of the bar and the boundedness of the associated transfer matrix trace for each \(E \in B_\infty \), the Gordon lemma shows that no solution is square-summable at infinity (this will be discussed in more detail in the next subsection), which in turn implies that the energy in question must belong to the spectrum. \(\square \)

4.3 Existence and Uniqueness of k-Partitions

In this subsection, we recall the partitions of elements of the Fibonacci subshift introduced by Damanik and Lenz in [18], which are an important tool used to establish spectral results that hold uniformly in \(\omega \in \Omega _F\), and extend them to the setting of this paper.

The starting point is given by the construction of the subshift \(\Omega _F\). Recall that each element looks locally like the Fibonacci sequence \(u_F\), which in turn is given as the limit of the words \(\{ s_k \}\), which obey the recursion (1.2). From there, it is not too difficult to show that each \(\omega \in \Omega _F\) can be partitioned, for every \(k \ge 1\), into subwords of type \(s_k\) or \(s_{k-1}\). Moreover, blocks of type \(s_{k-1}\) are isolated (i.e., surrounded by blocks of type \(s_k\)), whereas blocks of type \(s_k\) occur with multiplicity either one or two. This partition of a given \(\omega \in \Omega _F\) is unique and called the k-partition of \(\omega \).
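
For the one-sided sequence \(u_F\) itself, the block structure described above can be made concrete by using \(u_F = S_F^k(u_F)\): each letter a of \(u_F\) becomes a block \(s_k\), and each letter b becomes a block \(s_{k-1}\). The following sketch illustrates this for \(k = 4\). It treats only \(u_F\) (not a general two-sided \(\omega \)) and does not address uniqueness; the function names are hypothetical.

```python
def substitute(word):
    """One application of S_F: a -> ab, b -> a."""
    return "".join("ab" if c == "a" else "a" for c in word)


def s(k):
    """The word s_k = S_F^k(a)."""
    word = "a"
    for _ in range(k):
        word = substitute(word)
    return word


def fibonacci_prefix(length):
    """Prefix of u_F of the given length."""
    word = "a"
    while len(word) < length:
        word = substitute(word)
    return word[:length]


def k_partition_blocks(k, letters):
    """First `letters` blocks of the partition of u_F into s_k and s_{k-1}.

    Uses u_F = S_F^k(u_F): each a becomes s_k, each b becomes s_{k-1}.
    """
    image = {"a": s(k), "b": s(k - 1)}
    return [image[c] for c in fibonacci_prefix(letters)]


k = 4
blocks = k_partition_blocks(k, 30)
joined = "".join(blocks)
# "L" marks a block of type s_k, "S" marks a block of type s_{k-1}.
types = "".join("L" if block == s(k) else "S" for block in blocks)
print(types)
```

In the string `types`, the pattern of long and short blocks is again a Fibonacci word, which is why short blocks are isolated and long blocks occur at most twice in a row.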

In the classical case, this induces directly a k-partition of the associated potentials since we simply replace the symbols a, b by real numbers \(\lambda , 0\), and do so in a bijective way.

In the case at hand, a small amount of care has to be exercised. To consistently apply the standard Gordon two-block argument, we need the following:

- (i) k needs to be large enough so that the look-ahead only inspects one additional block, and
- (ii) the resulting potential value has to be the same, regardless of which type of block, \(s_k\) or \(s_{k-1}\), occurs to the right of the current one.

To ensure item (i), we simply need \(|s_{k-1}| = F_{k-1} \ge N - 1\), that is, \(k \ge k_0 - 1\); compare (3.3). Item (ii) holds for these values of k as well since \(s_{k-1}\) is a prefix of \(s_k\).

4.4 Singular Continuous Spectrum

In this very short subsection, we note that the results above determine the spectral type completely for all parameters:

Theorem 4.4

Fix a non-degenerate \(\lambda \in {{\mathbb {R}}}^{N+1}\). Then, for every \(\omega \in \Omega _F\), the operator \(H_{\lambda ,\omega }\) has purely singular continuous spectrum.

Proof

The absence of absolutely continuous spectrum follows from Theorem 4.1 since a set of zero Lebesgue measure cannot support an absolutely continuous measure.

The absence of point spectrum follows from the existence and uniqueness of the k-partitions of the potentials, as discussed in the previous subsection, along with the boundedness of the transfer matrix traces across the blocks of the partition for every energy in the spectrum (cf. (4.8)), together with the combinatorial case-by-case analysis from [18]. Indeed, these ingredients allow one to apply the Gordon two-block lemma [6, Lemma 3.1] and deduce the absence of solutions that decay near \(+ \infty \), which of course implies the absence of square-summable solutions, and hence the absence of eigenfunctions. \(\square \)

4.5 Quantitative Spectral Continuity

Once it is known that all spectral measures are purely singular continuous, it is of interest to study dimensional properties of these measures. Upper bounds can, for example, be established by proving upper bounds for the dimension of the spectrum, since the latter set supports all spectral measures. We will discuss this approach later. Lower bounds for the dimensional properties of spectral measures, on the other hand, need statements to the effect that these measures give no weight to sets that are too small in a suitable dimensional sense. The latter issue will be discussed in the present subsection from the perspective of Hausdorff dimension. Results in this direction for the standard Fibonacci Hamiltonian appeared in [5, 17, 25]. The overall structure of the proofs is largely similar in the general case, and hence, parts of the presentation will be somewhat brief. However, some parts will be quite detailed as the extension is not straightforward and requires us to delve quite deeply into the inner workings of the arguments.

To clarify the goal of this section, let us state the main theorem we wish to prove.

Theorem 4.5

Fix a non-degenerate \(\lambda \in {{\mathbb {R}}}^{N+1}\). Then, there is \(\alpha _\lambda > 0\) such that for every \(\omega \in \Omega _F\), all spectral measures of \(H_{\lambda ,\omega }\) are \(\alpha _\lambda \)-continuous, that is, they give zero weight to sets \(S \subseteq {{\mathbb {R}}}\) with \(h^{\alpha _\lambda }(S) = 0\).

Here, \(h^\alpha \) denotes the \(\alpha \)-dimensional Hausdorff measure on \({{\mathbb {R}}}\). Since \(\alpha _\lambda > 0\) and any countable set S obeys \(h^\alpha (S) = 0\) for every \(\alpha > 0\), Theorem 4.5 is a strengthening of the absence of point spectrum part of Theorem 4.4.
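The statement about countable sets can be checked directly from the definition of Hausdorff measure. The following is the standard covering argument, written under the assumption that \(h^\alpha \) is defined through coverings by intervals in the usual way; the symbols \(\delta \), \(\varepsilon \), \(s_j\), and \(I_j\) are introduced here for illustration only.

```latex
% Usual covering definition of the alpha-dimensional Hausdorff measure on R
% (assumed normalization; constants do not affect null sets).
\[
  h^\alpha(S) = \lim_{\delta \to 0} \, \inf \Big\{ \sum_{j} |I_j|^\alpha :
  S \subseteq \bigcup_{j} I_j, \ |I_j| < \delta \Big\}.
\]
% For a countable set S = {s_1, s_2, ...} and 0 < epsilon < delta^alpha,
% cover s_j by an interval I_j of length (epsilon 2^{-j})^{1/alpha} < delta.
\[
  \sum_{j \ge 1} |I_j|^\alpha = \sum_{j \ge 1} \varepsilon \, 2^{-j} = \varepsilon
  \quad \Longrightarrow \quad h^\alpha(S) = 0 .
\]
```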

Remark 4.6

It is not necessary to assume in Theorem 4.5 that \(\lambda \) is non-degenerate, and the assumption will not be used in the proof. However, the statement is obviously true when \(\lambda \) is degenerate, and hence, the assumption is made to clarify that our focus is on the non-trivial case, where a proof is necessary. The same remark applies to the other results presented in this subsection.

It was shown in [17] that \(\alpha \)-continuity of spectral measures associated with Schrödinger operators in \(\ell ^2({{\mathbb {Z}}})\) can be established by proving power-law upper and lower bounds for solutions on one of the two half lines. Given that correspondence, Theorem 4.5 follows from the following:

Theorem 4.7

Fix a non-degenerate \(\lambda \in {{\mathbb {R}}}^{N+1}\). Then, there are \(C_1, C_2, \gamma _1, \gamma _2 \in (0,\infty )\) such that for every \(E \in \Sigma _\lambda \) and every \(\omega \in \Omega _F\), every solution u of

that is normalized in the sense that \(|u(0)|^2 + |u(1)|^2 = 1\) obeys

for every \(L \ge 1\).

Remark 4.8

(a) The local \(\ell ^2\) norm \(\Vert \cdot \Vert _L\) is defined by

(b) While it is clear from the way Theorem 4.7 is formulated, we emphasize that the constants \(C_1, C_2, \gamma _1, \gamma _2\) depend on \(\lambda \) (but are uniform in E and \(\omega \)). The value of \(\alpha _\lambda \) for which the statement in Theorem 4.5 can then be derived via [17] is given by

The proof of the lower bound in (4.9) follows largely the same arguments as in the standard case, given the results already established about boundedness of traces for energies in the spectrum and the existence of unique k-partitions. Thus, this part of the proof of Theorem 4.7 will only be sketched below. On the other hand, the proof of the upper bound in (4.9) turns out to be significantly more difficult than in the standard case and we will in fact have to work out an adapted version of the results from [24]. This part of the analysis will be presented with full details.

We introduce the following notation. For \(E \in \Sigma _\lambda \), let

Note that c is E-dependent. By (3.13), with b defined in (3.11) we have \(c \le b^2 + \sqrt{(1-b^2)^2 + I}\) for every \(E \in \Sigma _\lambda \), which leads to the uniform estimate

Let us recall an observation from the proof of [24, Lemma 4] and adjust it to the present setting.

Lemma 4.9

For \(E \in \Sigma _\lambda \), let

Then, we have

Proof

By (3.4) and the Cayley-Hamilton Theorem, we have

Moreover, since \(M_{k-3} \in {\mathrm {SL}}(2,{{\mathbb {R}}})\), we have

The bound (4.13) follows by induction from (4.10) and (4.14)–(4.15). \(\square \)
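The Cayley–Hamilton identities invoked here are generic facts about matrices of determinant one, and they can be checked numerically. The sketch below does not reconstruct (4.14)–(4.15); it only verifies the underlying identities on two arbitrary matrices. The helper names (`sl2`, `inverse_sl2`, `close`) and the sample entries are assumptions made for illustration.

```python
def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def trace(m):
    return m[0][0] + m[1][1]

def sl2(a, b, c):
    """Hypothetical helper: [[a, b], [c, d]] with d chosen so that det = 1 (needs a != 0)."""
    return [[a, b], [c, (1.0 + b * c) / a]]

def inverse_sl2(m):
    """Inverse of a determinant-one 2x2 matrix (its adjugate)."""
    return [[m[1][1], -m[0][1]], [-m[1][0], m[0][0]]]

def close(a, b, tol=1e-9):
    """Entrywise comparison of two 2x2 matrices up to a small tolerance."""
    return all(abs(a[i][j] - b[i][j]) <= tol for i in range(2) for j in range(2))

# Two arbitrary sample matrices in SL(2, R).
A = sl2(1.3, -0.7, 2.1)
B = sl2(-0.4, 1.9, 0.6)
t = trace(A)

# Cayley-Hamilton for det A = 1: A^2 = tr(A) A - I.
A_squared = matmul(A, A)
cayley_hamilton_rhs = [[t * A[0][0] - 1.0, t * A[0][1]],
                       [t * A[1][0], t * A[1][1] - 1.0]]

# Equivalent form: A^{-1} = tr(A) I - A.
inverse_rhs = [[t - A[0][0], -A[0][1]], [-A[1][0], t - A[1][1]]]

# Trace identity behind trace-map recursions: tr(AB) + tr(A B^{-1}) = tr(A) tr(B).
trace_lhs = trace(matmul(A, B)) + trace(matmul(A, inverse_sl2(B)))
trace_rhs = trace(A) * trace(B)
```

The last identity follows from the second one, since \(B + B^{-1} = \mathrm{tr}(B) \cdot \mathrm{Id}\) for every \(B \in {\mathrm {SL}}(2,{{\mathbb {R}}})\).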

Let us establish a few useful recursive relations that follow from (3.4) and the Cayley–Hamilton Theorem:

Lemma 4.10

(Lemma 6 of [24]).

Lemma 4.11

We have

Proof

We note that

where we used (4.18) in the last step, and

where we again used (4.18) in the penultimate step. This establishes (4.18)–(4.20). \(\square \)

Recall that all these quantities depend on \(\lambda \) and E, and that this dependence is often left implicit. However, in the following lemmas, the statements need to be interpreted in such a way that \(\lambda \) is fixed and E is the independent variable. That is, when we state that a certain quantity is a polynomial, what we mean is that it is a \(\lambda \)-dependent polynomial in the variable E.

Lemma 4.12

For \(i=1,2,\cdots , 6\), let \(P^{(i)}\) be a polynomial of the variables \((x_{k-1}, x_k,\cdots , x_{k+m})\) for some \(m\ge 1\).

Then, we have

where \(Q^{(i)}\) is a polynomial of the variables \((x_{k-2},x_{k-1}, \cdots , x_{k+m})\).

Moreover, for every \(E \in \Sigma _\lambda \), we have

and

Proof

Using Lemmas 4.10 and 4.11, we have

Set \(Q^{(i)}\) as follows:

All properties are easy to check. \(\square \)

Lemma 4.13

Let \(k,\ell \in {\mathbb {N}}\) with \(k\ge 2\) and \(k-\ell \ge 2.\) Then, we have

where \(Q^{(i)}\) is a polynomial of variables \((x_{k-\ell -2},x_{k-\ell -1},\cdots , x_{k-3}, x_{k-2})\) and the dependence of all quantities on E and \(\lambda \) is left implicit.

Moreover, for every \(E \in \Sigma _\lambda \), we have

and

Proof

Note that we may write

Observe that, by (4.18),

In particular, we may write

where \(P_1^{(i)}\) is a polynomial of the variables \((x_{k-4},x_{k-3}, x_{k-2})\).

Thus, by applying Lemma 4.12, we have

where \(P_2^{(i)}\) is a polynomial of the variables \((x_{k-5},x_{k-4},x_{k-3}, x_{k-2})\).

Again, by applying Lemma 4.12, we have

where \(P_3^{(i)}\) is a polynomial of the variables \((x_{k-6},x_{k-5},x_{k-4},x_{k-3}, x_{k-2})\).

By applying Lemma 4.12 repeatedly, we have

where \(P_{\ell -2}^{(i)}\) is a polynomial of the variables \((x_{k-\ell -1},x_{k-\ell },\cdots , x_{k-3}, x_{k-2})\).

Let \(E \in \Sigma _\lambda \) and let \(c:= \sup _k\{x_k\}\). By observing (4.5), we have

Thus, by using (4.21), (4.22) and (4.23) recursively, we have

and

A direct calculation using Lemma 4.10 and Lemma 4.11 shows

In particular, we may write

where \(P_{\ell -1}^{(i)}\) is a polynomial of the variables \((x_{k-\ell -1},x_{k-\ell },\cdots , x_{k-3}, x_{k-2})\).

Also, it is easy to see that

and

Again, a direct calculation using Lemma 4.10 and 4.11 shows

In particular, we may write

where \(P_{\ell }^{(i)}\) is a polynomial of the variables \((x_{k-\ell -2},x_{k-\ell -1},\cdots , x_{k-3}, x_{k-2}).\)

Also, it is easy to see that

and

This completes the proof by setting \(Q^{(i)} = P_{\ell }^{(i)}.\) \(\square \)

Lemma 4.14

There exist constants \({{\bar{C}}} > 0\) and \(\tau > 0\) such that for \(E \in \Sigma _\lambda \) and \(k_0< k_1< \cdots < k_t\), we have

where

Proof

Throughout this proof we denote \(M(k):=M_k\) for the sake of better legibility since we use subindices.

Let us prove first that

with \(d = 4 c^2 + 4 c + 1\), where a is the constant from (4.12).

We begin with the case \(t= 1\). By Lemma 4.13, we have for \(E \in \Sigma _\lambda \),

Assume now that (4.30) holds for \(t\in \{1, \cdots , \ell \}\). We claim that (4.30) holds for \(t=\ell +1.\) We have three cases.

Case 1: \(k_\ell -k_{\ell -1}>2.\)

By Lemma 4.13, we have

By the inductive hypothesis and Lemma 4.13, we have

and hence, (4.30) holds for \(t=\ell + 1\) as well.

Case 2: \(k_\ell -k_{\ell -1}=2.\)

Note that in this case, we have

By Lemma 4.13 and (4.31), we have

By the inductive hypothesis and Lemma 4.13, we have

and hence, (4.30) holds for \(t=\ell + 1\) as well.

Case 3: \(k_{\ell }-k_{\ell -1}=1.\)

Note that in this case, we have

and

By Lemma 4.13, we have

By the inductive hypothesis and Lemma 4.13,

and hence, (4.30) holds for \(t=\ell + 1\) as well. This completes the proof of (4.30) for all cases.

Since \(k_{j + 1} - k_j \ge 1\) for \(j \in \{0,1,\cdots ,t - 1\}\), we have \(k_t - k_0 \ge t\). Setting \(g = d^3 a^3\), (4.30) gives

Moreover,

Therefore, for large k,

with \(\tau = (\log \sqrt{5} \log g)/\log \alpha ^{-1}\) and for some constant \({{\bar{C}}}.\) \(\square \)
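The last step of this proof converts a bound that is exponential in the index k into a power law in \(F_k\). The mechanism can be illustrated with a small numerical sketch. It assumes the normalization \(F_0 = 1\), \(F_1 = 2\), for which \(F_k \ge \alpha ^{-k}\), and it uses the simplified exponent \(s = \log g / \log \alpha ^{-1}\), which need not coincide with the constant \(\tau \) of the lemma. The value of `g` below is hypothetical.

```python
import math

# 1 / alpha for alpha = (sqrt(5) - 1) / 2, that is, the golden ratio.
INVERSE_ALPHA = (1 + math.sqrt(5)) / 2

def fibonacci(k):
    """F_k with the normalization F_0 = 1, F_1 = 2 (an assumption of this sketch)."""
    a, b = 1, 2
    for _ in range(k):
        a, b = b, a + b
    return a

def power_law_exponent(g):
    """Exponent s with g**k == (INVERSE_ALPHA**k)**s; assumes g > 1."""
    return math.log(g) / math.log(INVERSE_ALPHA)

def exponential_bound_is_power_law(g, max_k):
    """Check g**k <= F_k**s for k = 0, ..., max_k, up to rounding error."""
    s = power_law_exponent(g)
    return all(g ** k <= fibonacci(k) ** s * (1 + 1e-9) for k in range(max_k + 1))

g = 7.5  # hypothetical growth factor per index step
holds = exponential_bound_is_power_law(g, 30)
```

Since \(F_k \ge \alpha ^{-k}\) follows by induction from the Fibonacci recursion, the inequality \(g^k \le F_k^{s}\) holds for every \(k \ge 0\) whenever \(g > 1\).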

Proposition 4.15

Fix a non-degenerate \(\lambda \in {{\mathbb {R}}}^{N+1}\). Then, there exist \(C > 0\) and \(\gamma > 0\) such that for every \(E\in \Sigma _\lambda \), every \(\omega \in \Omega _F\), and every \(n \ge 1\), we have \(\Vert A_{\lambda ,E}^n(\omega ) \Vert \le C n^\gamma \).

Proof

The proof will consist of two steps. In the first step we prove the assertion for \(\omega = \omega _F\). Thus, we extend a result from [24] from the standard Fibonacci Hamiltonian to the generalized Fibonacci Hamiltonian. In the second step, we consider the case of a general \(\omega \in \Omega _F\), but use the result from the first step in the proof. This extends a result from [19] from the standard Fibonacci Hamiltonian to the generalized Fibonacci Hamiltonian.

Step 1: Given \(n \ge 1\) we may choose a unique index set of positive integers \(\{ k_j \}_{j = -S}^J\) such that

(1) \(k_{j+1} - k_j \ge 2\),

(2) \(F_{k_j} < N\) if \(-S \le j < 0\) and \(F_{k_j} \ge N\) if \(0 \le j \le J\),

(3) \(n = \sum _{j = -S}^J F_{k_j}\).
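Conditions (1) and (3) describe a Zeckendorf-type representation of n as a sum of non-consecutive Fibonacci numbers, which a greedy algorithm produces. The sketch below assumes the normalization \(F_0 = 1\), \(F_1 = 2\), so that the index 0 may occur, and it ignores the splitting of the indices at the threshold N in condition (2). The function names are hypothetical.

```python
def fibonacci_numbers(limit):
    """Return [F_0, F_1, ...] with F_0 = 1, F_1 = 2, keeping only the terms <= limit."""
    fibs = [1, 2]
    while fibs[-1] + fibs[-2] <= limit:
        fibs.append(fibs[-1] + fibs[-2])
    return [f for f in fibs if f <= limit]

def zeckendorf_indices(n):
    """Increasing indices k with n = sum of F_k and consecutive indices differing by >= 2."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    fibs = fibonacci_numbers(n)
    indices = []
    remainder = n
    # Greedy step: always subtract the largest Fibonacci number that still fits.
    for k in range(len(fibs) - 1, -1, -1):
        if fibs[k] <= remainder:
            indices.append(k)
            remainder -= fibs[k]
    return sorted(indices)

# Example: 100 = 89 + 8 + 3 = F_9 + F_4 + F_2.
example = zeckendorf_indices(100)
```

The greedy choice enforces the gap condition automatically: after subtracting the largest \(F_k \le n\), the remainder is smaller than \(F_{k-1}\), so the index \(k-1\) cannot be selected next.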

Consider the case \(J \ge 0\). Then, we may write \(A_{\lambda ,E}^n(\omega _F)\) as

By using a similar argument as in the proof of [24, Theorem 1], we have

where \(h := 4 c + 1\). By item (1), we have \(k_J - k_0 \ge 2J\), and hence, \(\Vert M_{k_0}(E) \cdots M_{k_J}(E) \Vert \le f^{n_K}\), \(f := a h^2\).

Therefore, for all large enough J,

where \(n^* := \sum _{j = 0}^{J} F_{k_j} \le n\).

Let \(A := A_{\lambda ,E}^{\sum _{j=-S}^{-1} F_{k_j}} (\omega _F) \cdots A_{\lambda ,E}^{1}\). Define

In conclusion, we have

with \(\mu = \frac{\log \sqrt{5} \log d }{\log \alpha ^{-1}}.\)

Step 2: Let now \(\omega \in \Omega _F\) be arbitrary and consider \(A_{\lambda ,E}^n(\omega )\). Viewing the underlying matrix product relative to a k-partition (for k sufficiently large) as in [19], we can partition it relative to the (at most) two consecutive blocks in which we fall. The norm of \(A_{\lambda ,E}^n(\omega )\) is then bounded from above by the product of the norms of the two pieces.

The right piece is covered by Step 1 since it is aligned at the left endpoint of a block, which is also the starting point of the matrix products associated with \(\omega _F\).

The left piece is estimated using an inversion of the order of the pieces \(M_{k_j}(E)\) we break the long product into. The difference is that now the index decreases from left to right (while it increases in Step 1). This is the reason why we had to prove Lemma 4.14. Using this lemma, we obtain a power-law estimate also for the left piece.

Putting the two estimates together, the desired result follows. \(\square \)

Proof of Theorem 4.7

The proof of the lower bound in (4.9) follows by the same arguments as in the standard case: using the k-partitions of the potentials, one can identify sufficiently many length scales so that, using the Gordon two-block argument and the trace bounds for energies on the spectrum, the mass-reproduction technique developed in [5] can inductively prove the desired lower bound for every \(\omega \in \Omega _F\); see [17] for details.

The upper bound in (4.9) follows readily from the power-law upper bound for the transfer matrices corresponding to energies in the spectrum, as established in Proposition 4.15. \(\square \)

5 Extension of Results Whose Proofs are Based on Hyperbolicity

In this section we discuss those results for our generalized Fibonacci Hamiltonian whose proofs make use of the hyperbolicity of the trace map. This corresponds roughly to the results for the standard Fibonacci Hamiltonian that have been obtained since 2008, starting with [10].

5.1 General Setup

The result on the zero measure property of \(\Sigma _\lambda \) naturally leads one to ask about the fractal dimension of this set. There are several ways to measure the fractal dimension of a nowhere dense subset of the real line, for example the Hausdorff dimension or the box counting dimension. Given \(S\subset {\mathbb {R}}\), we denote by \(\dim _{\text {H}}(S)\) and \(\dim _{\text {B}}(S)\) the Hausdorff dimension and the box counting dimension of S, respectively. The local Hausdorff dimension and box counting dimension of S at \(s \in S\) are given by

and

Recall the Fibonacci trace map

and the Fricke–Vogt invariant,

for which we have

Define

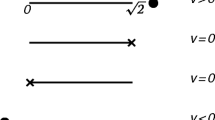

It is well known that for \(I > 0\), \(S_I\) is a smooth, connected, non-compact two-dimensional submanifold of \({\mathbb {R}}^3\), homeomorphic to the four-punctured sphere. When \(I = 0\), \(S_I\) develops four conic singularities, away from which it is smooth. When \(-1< I < 0\), \(S_I\) contains five smooth connected components: four noncompact components, each homeomorphic to the two-disc, and one compact component, homeomorphic to the two-sphere. When \(I = -1\), \(S_I\) consists of the four smooth noncompact discs and a point at the origin. When \(I < -1\), \(S_I\) consists only of the four noncompact two-discs (Fig. 1).
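The invariance of the level surfaces \(S_I\) under the trace map can be checked by direct computation. The sketch below assumes the standard conventions \(T(x,y,z) = (2xy - z, x, y)\) and \(I(x,y,z) = x^2 + y^2 + z^2 - 2xyz - 1\); the ordering of the coordinates may differ from the one used in this paper. Exact rational arithmetic is used so that the invariant is reproduced without rounding error. The starting point is arbitrary.

```python
from fractions import Fraction

def trace_map(p):
    """Fibonacci trace map in the convention T(x, y, z) = (2xy - z, x, y) (assumed)."""
    x, y, z = p
    return (2 * x * y - z, x, y)

def fricke_vogt(p):
    """Fricke-Vogt invariant I(x, y, z) = x^2 + y^2 + z^2 - 2xyz - 1."""
    x, y, z = p
    return x * x + y * y + z * z - 2 * x * y * z - 1

def invariant_along_orbit(p, steps):
    """Values of I at p, T(p), ..., T^steps(p)."""
    values = [fricke_vogt(p)]
    for _ in range(steps):
        p = trace_map(p)
        values.append(fricke_vogt(p))
    return values

# Arbitrary rational starting point; every entry of `values` should coincide.
start = (Fraction(3, 10), Fraction(-6, 5), Fraction(4, 5))
values = invariant_along_orbit(start, 8)
```

Expanding \(I(2xy - z, x, y)\) shows that the terms \(4x^2y^2\) cancel and \(-4xyz + 2xyz = -2xyz\), which is why every orbit of T stays on a single surface \(S_I\).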

To investigate the dynamics of the trace map, we consider the following initial conditions

and ask how points on it behave under iteration of the map T.

Recall that the quantity

is independent of \(k \in {{\mathbb {Z}}}\), and we note that I depends on both \(\lambda \) and E. We will write I(E) or \(I(E,\lambda )\) whenever we want to make this dependence explicit.

Given a point \(p\in S_I,\) the forward semi-orbit of p under T is given by

We say that a point p satisfies property \(\mathbf{B} \), or is of type B, if p has a bounded forward semi-orbit. Note that (4.8) shows for non-degenerate \(\lambda \in {\mathbb {R}}^{N+1}\),

and this set is a Cantor set of zero Lebesgue measure. Moreover, \(I(E)\ge 0\) for every \(E\in \Sigma _\lambda \). Indeed, if there were \(E\in \Sigma _\lambda \) with \(I(E)<0\), then by the continuity of I we could find an open neighborhood of E that belongs to \(\Sigma _\lambda \), contradicting the fact that \(\Sigma _\lambda \) is a Cantor set. Therefore, we mainly focus on the case \(I\ge 0\) in the remainder of this paper.

For \(I=0\), we define the surface

which is smooth everywhere except at the four points \(P_1=(1,1,1)\), \(P_2=(-1,-1,1)\), \(P_3=(1,-1,-1)\), \(P_4=(-1,1,-1)\). By invariance of \({\mathbb {S}}\) under T, all points of \({\mathbb {S}}\) are of type B. Besides these points, there exist type-B points in \(S_0\backslash {\mathbb {S}}\); they form a disjoint union of four smooth, injectively immersed, connected one-dimensional submanifolds \(\mathcal {W}_1,\cdots ,\mathcal {W}_4\) of \(S_0\backslash {\mathbb {S}}\); see [23, Lemma 2.2].
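The singular points and the boundedness of orbits on the central part of \(S_0\) can be illustrated numerically. The sketch below assumes the conventions \(T(x,y,z) = (2xy - z, x, y)\) and \(I(x,y,z) = x^2 + y^2 + z^2 - 2xyz - 1\), and it assumes that \({\mathbb {S}}\) is the part of \(S_0\) inside the cube \([-1,1]^3\), parametrized by \((\cos (a+b), \cos a, \cos b)\). The singular points are the four points with coordinates \(\pm 1\) and an even number of minus signs. The helper names and the sample angles are hypothetical.

```python
import math

def trace_map(p):
    """Fibonacci trace map in the convention T(x, y, z) = (2xy - z, x, y) (assumed)."""
    x, y, z = p
    return (2 * x * y - z, x, y)

def fricke_vogt(p):
    """Fricke-Vogt invariant I(x, y, z) = x^2 + y^2 + z^2 - 2xyz - 1."""
    x, y, z = p
    return x * x + y * y + z * z - 2 * x * y * z - 1

def gradient(p):
    """Gradient of I; it vanishes exactly at the conic singularities of S_0."""
    x, y, z = p
    return (2 * x - 2 * y * z, 2 * y - 2 * x * z, 2 * z - 2 * x * y)

# Coordinates +-1 with an even number of minus signs.
SINGULAR_POINTS = [(1, 1, 1), (-1, -1, 1), (1, -1, -1), (-1, 1, -1)]

def central_point(a, b):
    """Point of the central part of S_0 with angle parameters a and b (assumed parametrization)."""
    return (math.cos(a + b), math.cos(a), math.cos(b))

def max_orbit_size(p, steps):
    """Largest absolute coordinate along p, T(p), ..., T^steps(p)."""
    largest = max(abs(c) for c in p)
    for _ in range(steps):
        p = trace_map(p)
        largest = max(largest, max(abs(c) for c in p))
    return largest

sample = central_point(0.7, 1.9)
orbit_size = max_orbit_size(sample, 15)
```

In this parametrization T acts by \((a,b) \mapsto (a+b, a)\), because \(2\cos (a+b)\cos a - \cos b = \cos (2a+b)\), so the forward semi-orbit of such a point stays in the cube. A finite computation of this kind is only a consistency check and does not prove boundedness.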

For fixed \(I > 0\), the set of all bounded two-sided orbits of T in \(S_I\) coincides with the nonwandering set \(\Lambda _I\), and the set \(\Lambda _I\) is a compact locally maximal T-invariant hyperbolic subset of \(S_I\); see [3, 4, 13]. Therefore, a point p is a type-B point in \(S_I\) if and only if there exists \(q\in \Lambda _I\), such that \(p\in W^s(q)\), the stable manifold at q. We define

and define a smooth three-dimensional submanifold \(\mathcal {M}\) of \({\mathbb {R}}^3\) by

There exists a family, denoted by \(\mathcal {W}^s\), of smooth 2-dimensional connected injectively immersed submanifolds of \(\mathcal {M}\), whose members we denote by \(W^{cs}\) and call center-stable manifolds, such that for every \(p\in \Lambda \), there exists a unique \(W^{cs}\in \mathcal {W}^s\) containing p and the type-B points of \(\mathcal {M}\) are precisely \(\bigcup _{W^{cs}\in \mathcal {W}^s}W^{cs}\); see [23, Theorem 2.6].

5.2 Fractal Dimensions in the Case of General N

Denote by \(\gamma _\lambda \) the curve of the initial conditions

Define

Theorem 5.1

There exists a non-empty set \({\mathfrak {N}} \subset {\mathbb {R}}^{N+1}\) of Lebesgue measure zero, such that for non-degenerate \(\lambda \in {\mathbb {R}}^{N+1}\),

(a) if \(\gamma _\lambda \) lies entirely in some \(S_{I\circ \gamma _\lambda (E)}\), that is, \(\frac{\partial I\circ \gamma _\lambda }{\partial E}\equiv 0\), then \(I\circ \gamma _\lambda (E)\equiv c>0\) and for every \(E\in B_\infty (\gamma _\lambda )\), we have

$$\begin{aligned} 0<\dim _{\mathrm {H}}^{\mathrm {loc}}\left( B_\infty (\gamma _\lambda ),E\right) <1, \end{aligned}$$ (5.2)

and

$$\begin{aligned} \dim _{\mathrm {H}}^{\mathrm {loc}}\left( B_\infty (\gamma _\lambda ),E\right)&=\dim _{\mathrm {B}}^{\mathrm {loc}}\left( B_\infty (\gamma _\lambda ),E\right) \nonumber \\&=\dim _{\mathrm {H}} (B_\infty (\gamma _\lambda ))\nonumber \\&=\dim _{\mathrm {B}} (B_\infty (\gamma _\lambda )). \end{aligned}$$ (5.3)

(b) if \(\gamma _\lambda \) does not lie entirely in some \(S_{I\circ \gamma _\lambda (E)}\), that is, \(\frac{\partial I\circ \gamma _\lambda }{\partial E} \not \equiv 0\), we have

(b.1) \(B_\infty (\gamma _\lambda )\ni E\mapsto \dim _{\mathrm {H}}^{\mathrm {loc}}(B_\infty (\gamma _\lambda ),E)\) is continuous;

(b.2) there exists a finite set \(F\subseteq B_\infty (\gamma _\lambda )\) such that for \(E\in B_\infty (\gamma _\lambda )\backslash F\), \(\dim _{\mathrm {B}}^{\mathrm {loc}}(B_\infty (\gamma _\lambda ),E)\) exists and is equal to \(\dim _{\mathrm {H}}^{\mathrm {loc}}(B_\infty (\gamma _\lambda ),E)\).

(c)

(c.1) for all \(\lambda \notin {\mathfrak {N}}\) and for all \(E \in B_\infty (\gamma _\lambda )\), we have \(0< \dim _{\mathrm {H}}^{\mathrm {loc}}(B_\infty (\gamma _\lambda ),E) < 1\);

(c.2) for all \(\lambda \in {\mathfrak {N}}\), \(0< \dim _{\mathrm {H}}^{\mathrm {loc}}(B_\infty (\gamma _\lambda ),E) < 1\) for all \(E \in B_\infty (\gamma _\lambda )\) away from the lower and upper boundary points of the spectrum, and \(\dim _{\mathrm {H}}(B_\infty (\gamma _\lambda )) = 1\).

Proof

(a) By assumption we have \(I \circ \gamma _\lambda (E) \equiv c\). We have already argued above that c must be non-negative, as the spectrum is non-empty and for E’s in the spectrum, the invariant cannot take negative values. By a similar argument it follows that c also cannot be zero. Note that the curve of initial conditions \(\gamma _\lambda \) does not intersect \({\mathbb {S}}\) (see (5.1)), that is, \({\mathbb {S}} \cap \gamma _\lambda =\emptyset \). Indeed, by invariance of \({\mathbb {S}}\) under T it follows that all points of \({\mathbb {S}}\) are of type-B, and if there exists \(E = E(\lambda )\) such that \(\gamma _\lambda (E) \in {\mathbb {S}}\), then \(E \in B_\infty (\gamma _\lambda )\). On the other hand, by the continuity of the curve, we could find an open neighborhood of E that belongs to \(B_\infty (\gamma _\lambda )\), contradicting the fact that \(\Sigma _\lambda \) is a Cantor set. Therefore, any type-B point \(\gamma _\lambda (E)\) must lie in one of the four curves \(\mathcal {W}_1,\cdots ,\mathcal {W}_4\). But this is again at odds with the fact that for non-degenerate \(\lambda \), the spectrum is a Cantor set.

Thus, we know that \(I \circ \gamma _\lambda (E) \equiv c > 0\). The curve of the initial conditions \(\gamma _\lambda \) intersects \(W^s(\Lambda _c)\) transversally; see [16, Theorem 1.5]. As a consequence of this, the box counting dimension of the spectrum \(\Sigma _\lambda \) exists and coincides with the Hausdorff dimension and we have (5.3); see [16, Theorem 1.1].

If \(I\circ \gamma _\lambda (E)\equiv c>0\) and \(\gamma _\lambda (E)\) is a type-B point, by using the proof of Theorem 2.1–iii in [36], we have

Combining (5.4) with the fact (see [14] and [15]) that

this implies (5.2), and hence, we complete the proof of (a).

(b) Since \(I\circ \gamma _\lambda (E)\) is E dependent, we can follow the arguments Yessen used in the proof of [35, Theorem 2.3].

Assume \(c>0\), and let \(\gamma _\lambda (E)\in \gamma _\lambda \cap S_c\) be a point of transversal intersection with the center-stable manifold. Then we have

Since \(c\rightarrow \dim _{\text {H}}(\Lambda _c)\) is continuous, this proves the continuity result (b.1); see the proof of [35, Theorem 2.3–(i)] for more details.

Since the curve of initial conditions \(\gamma _\lambda \) is analytic and it is contained in no single invariant surface, it may have only isolated tangencies with invariant surfaces; hence, we have the result (b.2); see [23, Theorem 3.2].

As for the proof of (c), let \(E_0: {\mathbb {R}}^{N+1}\rightarrow {\mathbb {R}}\) be such that \(I \circ \gamma _\lambda \circ E_0(\lambda ) = 0\). Define

Then, \(\mathcal {C}\) is a smooth two-dimensional submanifold of \({\mathbb {R}}^3\) with four connected components, and there exist four smooth curves in \(\mathcal {C}\), \(\mathcal {W}_1,\cdots ,\mathcal {W}_4\) whose union we denote by \(\tau \), such that for all \(x\in \mathcal {C}\), \({\mathcal {O}}^+_{T}(x)\) is bounded if and only if \(x\in \tau \). We define the continuous map \(F:{\mathbb {R}}^{N+1}\rightarrow \mathcal {C}\) by

Let

Clearly, \({\mathfrak {N}}\) has zero Lebesgue measure. We also claim that \({\mathfrak {N}}\) is non-empty. Let \(P_1=(1,1,1)\). One of the four curves \(\mathcal {W}_1, \cdots , \mathcal {W}_4\) is a branch of the strong stable manifold at \(P_1\), which we denote by \(W^{ss}\). The tangent space \(T_{P_1}W^{ss}\) is transversal to the plane \(\{ (x,y,z) : x, y \in {\mathbb {R}}, z=1 \}\). Hence, \(W^{ss} \cap \{ z \approx 1 \} \ne \emptyset \). Let us assume that \(x_{-1} = x_{-1}(E,\lambda ) \equiv c \approx 1\). Then, for any \(p=(x_{1},x_0,x_{-1}) \in \{ z = c \}\), we can always find \(\lambda \in {\mathbb {R}}^{N+1}\) such that \(p \in \gamma _\lambda \). Thus, \({\mathfrak {N}} \ne \emptyset \); see the proof of [35, Theorem 2.3–(iib)] for more details.

If \(\lambda \notin {\mathfrak {N}}\), the intersection of the corresponding \(\gamma _\lambda \) with the center-stable manifolds is away from \(S_0\). Hence, for all \(E\in B_\infty (\gamma _\lambda )\), we have

If \(\lambda \in {\mathfrak {N}}\), pick \(\gamma _\lambda (E) \in \gamma _\lambda \cap S_0\). Then, E is one of the two extreme boundary points of the spectrum, and away from it, we have \(\dim _{\mathrm {H}}^{\mathrm {loc}} (B_\infty (\gamma _\lambda ), E) \in (0,1)\). On the other hand, we have \(\dim _{\text {H}} (B_\infty (\gamma _\lambda )) = 1\) due to \(\lim _{c\rightarrow 0^+}\dim _{\mathrm {H}}(\Lambda _c)=2\). \(\square \)

Remark 5.2

As we have seen that the value of the local fractal dimension at a point in the spectrum is determined by the value of the invariant at that point, it is worth pointing out that the former has explicitly known asymptotics in the regime of small [15] and large [10] values of the latter.

5.3 Fractal Dimension of the Spectrum for the case \(N=2\)

In this subsection, we illustrate the results from the previous subsection in the special case \(N = 2\), where explicit calculations are easy to carry out and the resulting expressions may be readily analyzed.

We notice that in this case, the locally constant function \(f(\omega )\) depends on the window (\(\ldots {\omega _0, \omega _1} \ldots \)) of size two. For \(g:\{a,b\}^{2}\rightarrow {\mathbb {R}}\), we define \(g(a,b)=\lambda _1\), \(g(b,a)=\lambda _2\) and \(g(a,a)=\lambda _3\). We define the locally constant function \(f(\omega )\) as follows:

that is,

As the subshift \(\Omega \) is minimal, we consider \(\omega = \omega _F\), where \(\omega _F \in \Omega \) is such that its restriction to the right half-line \(\{ 0, 1, 2, \ldots \}\) coincides with the Fibonacci sequence \(u_F\). That is, \(\omega \) looks like

around the origin, where the vertical bar denotes the position between the entries \(\omega _{-1}\) and \(\omega _0\).

When the size of window \(N=2\), we recall from (3.3) that

where \(\{ F_k \}_{k\ge 0}\) is the sequence of Fibonacci numbers given by \(F_0 = 1\), \(F_1 = 2\), and \(F_{k+1} = F_k + F_{k-1}\), \(k \ge 1\). Then, Lemma 3.1 implies that for any \(k \ge 2\),

The exact expression for \(M_1\) and \(M_2\) is

We define

and then define

therefore, the curve of initial conditions is

The Fricke–Vogt invariant is

which obeys

We consider the following two cases:

Case 1: \(\lambda _1=\lambda _3\) or \(\lambda _2=\lambda _3\). \(\frac{\partial I\circ \gamma _\lambda }{\partial E}\equiv 0\); hence, the Fricke–Vogt invariant is

(if \(\lambda _1=\lambda _2\), then \(\lambda _1=\lambda _2=\lambda _3\), which means that \(\lambda =(\lambda _1,\lambda _2,\lambda _3)\in {\mathbb {R}}^3\) is degenerate); thus, the curve of the initial conditions lies in a single invariant surface \(S_I\), and it intersects \(W^s(\Lambda _I)\) transversally. Hence, the Hausdorff dimension of \(\Sigma _\lambda \) is strictly between zero and one, and for every \(E\in \Sigma _\lambda \) and every \(\varepsilon >0\), we have

Case 2: \(\lambda _1\ne \lambda _3\) and \(\lambda _2\ne \lambda _3\).

Case 2.1: \(\lambda _1> \lambda _3\), \(\lambda _2>\lambda _3\). \(I\circ \gamma _\lambda (E)\) decreases monotonically on the interval \((-\infty ,\frac{\lambda _1+\lambda _2}{2})\), increases monotonically on the interval \((\frac{\lambda _1+\lambda _2}{2},+\infty )\), so \(I\circ \gamma _\lambda (E)\) takes its minimum at \(\frac{\lambda _1+\lambda _2}{2}\). In particular, we have

Case 2.1.1: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})>0\). \(\gamma _\lambda \) intersects the invariant surfaces \(\{S_I\}_{I>0}\) transversally; therefore, for all \(E\in \Sigma _\lambda \), we have \(0<\dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E)<1\).

Case 2.1.2: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})=0\). If \(E=\frac{\lambda _1+\lambda _2}{2}\) is such that

where \(\tau \) is the union of four smooth curves \(\mathcal {W}_1,\cdots ,\mathcal {W}_4\) in \(\mathcal {C}\) (see the proof of (c) in Theorem 5.1), then \(E=\frac{\lambda _1+\lambda _2}{2}\in \Sigma _\lambda \). Therefore, for this spectral point E, \(\dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E)=1\), and for other \(E \in \Sigma _\lambda \), we have \(0< \dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E) < 1\).

Case 2.1.3: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})<0\). We take \(E_1 = E_1(\lambda _1,\lambda _2,\lambda _3)\) and \(E_2 = E_2(\lambda _1,\lambda _2,\lambda _3)\) such that \(I \circ \gamma _\lambda (E_1) = I \circ \gamma _\lambda (E_2) = 0\); then the spectrum satisfies \(\Sigma _\lambda \subset (-\infty ,E_1] \cup [E_2,+\infty )\). If \(E_1\) and \(E_2\) are such that

then \(E_1 \in \Sigma _\lambda \) and \(E_2 \in \Sigma _\lambda \). Therefore, for \(E \in \{ E_1, E_2 \}\), \(\dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E) = 1\), and for \(E \in \Sigma _\lambda \backslash \{ E_1, E_2 \}\), \(0< \dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E) < 1\) (Fig. 2).

Case 2.2: \(\lambda _1> \lambda _3\), \(\lambda _2<\lambda _3\). \(I\circ \gamma _\lambda (E)\) increases monotonically on the interval \((-\infty ,\frac{\lambda _1+\lambda _2}{2})\), decreases monotonically on the interval \((\frac{\lambda _1+\lambda _2}{2},+\infty )\), so \(I\circ \gamma _\lambda (E)\) takes its maximum at \(\frac{\lambda _1+\lambda _2}{2}\).

Case 2.2.1: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})<0\). Since \(I \circ \gamma _\lambda (E) \ge 0\) for every \(E \in \Sigma _\lambda \), this case cannot happen.

Case 2.2.2: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})=0\). The spectrum \(\Sigma _\lambda \) consists of at most a single point, namely \(\frac{\lambda _1+\lambda _2}{2}\), which contradicts the fact that \(\Sigma _\lambda \) is a Cantor set. Hence, this case cannot happen.

Case 2.2.3: \(I \circ \gamma _\lambda (\frac{\lambda _1+\lambda _2}{2})>0\). We take \(E_1=E_1(\lambda _1,\lambda _2,\lambda _3)\) and \(E_2=E_2(\lambda _1,\lambda _2,\lambda _3)\) such that \(I\circ \gamma _\lambda (E_1)=I\circ \gamma _\lambda (E_2)=0\); then the spectrum satisfies \(\Sigma _\lambda \subset [E_1,E_2]\). If \(E_1\) and \(E_2\) are such that

then \(E_1\in \Sigma _\lambda \) and \(E_2\in \Sigma _\lambda \). Therefore, for \(E\in \{E_1,E_2\}\), \(\dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E)=1\), and for \(E\in \Sigma _\lambda \backslash \{E_1,E_2\}\), we have \(0<\dim _{\mathrm {H}}^{\mathrm {loc}}(\Sigma _\lambda ,E)<1\) (Fig. 3).

Case 2.3: \(\lambda _1< \lambda _3\), \(\lambda _2>\lambda _3\). \(I\circ \gamma _\lambda (E)\) increases monotonically on the interval \((-\infty ,\frac{\lambda _1+\lambda _2}{2})\), decreases monotonically on the interval \((\frac{\lambda _1+\lambda _2}{2},+\infty )\), so \(I\circ \gamma _\lambda (E)\) takes its maximum at \(\frac{\lambda _1+\lambda _2}{2}\). This is a situation similar to Case 2.2, so we omit it here.

Case 2.4: \(\lambda _1< \lambda _3\), \(\lambda _2<\lambda _3\). \(I\circ \gamma _\lambda (E)\) decreases monotonically on the interval \((-\infty ,\frac{\lambda _1+\lambda _2}{2})\), increases monotonically on the interval \((\frac{\lambda _1+\lambda _2}{2},+\infty )\), so \(I\circ \gamma _\lambda (E)\) takes its minimum at \(\frac{\lambda _1+\lambda _2}{2}\). This is a situation similar to Case 2.1, so we omit it here.

Notes

The latter family contains one additional orbit, namely the operators with potentials \(\lambda \chi _{(1 - \alpha ,1]}(n\alpha + m\alpha \!\! \mod 1)\), \(m \in {{\mathbb {Z}}}\).

This is the primary reason why we have normalized the window inspected by f in the way we did.

References

Boshernitzan, M.: A condition for minimal interval exchange maps to be uniquely ergodic. Duke Math. J. 52, 723–752 (1985)

Boshernitzan, M.: A condition for unique ergodicity of minimal symbolic flows. Ergodic Theory Dyn. Syst. 12, 425–428 (1992)

Casdagli, M.: Symbolic dynamics for the renormalization map of a quasiperiodic Schrödinger equation. Commun. Math. Phys. 107, 295–318 (1986)

Cantat, S.: Bers and Hénon, Painlevé and Schrödinger. Duke Math. J. 149, 411–460 (2009)

Damanik, D.: \(\alpha \)-continuity properties of one-dimensional quasicrystals. Commun. Math. Phys. 192, 169–182 (1998)

Damanik, D.: Gordon-type arguments in the spectral theory of one-dimensional quasicrystals. In: Baake, M., Moody, R.V. (eds.) Directions in Mathematical Quasicrystals, CRM Monograph Series, vol. 13, pp. 277–305. American Mathematical Society, Providence (2000)

Damanik, D.: Strictly ergodic subshifts and associated operators. In: Spectral Theory and Mathematical Physics: a Festschrift in Honor of Barry Simon’s 60th Birthday, Proceedings of Symposium on Pure Mathematics, vol 76, Part 2, pp. 505–538. American Mathematical Society, Providence (2007)

Damanik, D.: Schrödinger operators with dynamically defined potentials. Ergodic Theory Dyn. Syst. 37, 1681–1764 (2017)

Damanik, D., Embree, M., Gorodetski, A.: Spectral properties of Schrödinger operators arising in the study of quasicrystals. In: Mathematics of Aperiodic Order. Progress in Mathematical Physics, vol. 309, pp. 307–370. Birkhäuser/Springer, Basel (2015)

Damanik, D., Embree, M., Gorodetski, A., Tcheremchantsev, S.: The fractal dimension of the spectrum of the Fibonacci Hamiltonian. Commun. Math. Phys. 280, 499–516 (2008)

Damanik, D., Fang, L., Sukhtaiev, S.: Zero measure and singular continuous spectra for quantum graphs. Ann. Henri Poincaré 21, 2167–2191 (2020)

Damanik, D., Fillman, J.: Spectral Theory of Discrete One-Dimensional Ergodic Schrödinger Operators. monograph in preparation

Damanik, D., Gorodetski, A.: Hyperbolicity of the trace map for the weakly coupled Fibonacci Hamiltonian. Nonlinearity 20, 123–143 (2009)

Damanik, D., Gorodetski, A.: The spectrum of the weakly coupled Fibonacci Hamiltonian. Electron. Res. Announc. Math. Sci. 16, 23–29 (2009)

Damanik, D., Gorodetski, A.: Spectral and quantum dynamical properties of the weakly coupled Fibonacci Hamiltonian. Commun. Math. Phys. 305, 221–277 (2011)

Damanik, D., Gorodetski, A., Yessen, W.: The Fibonacci Hamiltonian. Invent. Math. 206, 629–692 (2016)

Damanik, D., Killip, R., Lenz, D.: Uniform spectral properties of one-dimensional quasicrystals. III. \(\alpha \)-continuity. Commun. Math. Phys. 212, 191–204 (2000)

Damanik, D., Lenz, D.: Uniform spectral properties of one-dimensional quasicrystals. I. Absence of eigenvalues. Commun. Math. Phys. 207, 687–696 (1999)

Damanik, D., Lenz, D.: Uniform spectral properties of one-dimensional quasicrystals. II. The Lyapunov exponent. Lett. Math. Phys. 50, 245–257 (1999)

Damanik, D., Lenz, D.: Uniform spectral properties of one-dimensional quasicrystals. IV. Quasi-Sturmian potentials. J. d’Analyse Math. 90, 115–139 (2003)

Damanik, D., Lenz, D.: A criterion of Boshernitzan and uniform convergence in the multiplicative ergodic theorem. Duke Math. J. 133, 95–123 (2006)

Damanik, D., Lenz, D.: Zero-measure Cantor spectrum for Schrödinger operators with low-complexity potentials. J. Math. Pures Appl. 85, 671–686 (2006)

Damanik, D., Munger, P., Yessen, W.N.: Orthogonal polynomials on the unit circle with Fibonacci Verblunsky coefficients. I. The essential support of the measure. J. Approx. Theory 173, 56–88 (2013)

Iochum, B., Testard, D.: Power law growth for the resistance in the Fibonacci model. J. Stat. Phys. 65, 715–723 (1991)

Jitomirskaya, S., Last, Y.: Power-law subordinacy and singular spectra. II. Line operators. Commun. Math. Phys. 211, 643–658 (2000)

Johnson, R.: Exponential dichotomy, rotation number, and linear differential operators with bounded coefficients. J. Differ. Equ. 61, 54–78 (1986)

Kotani, S.: Jacobi matrices with random potentials taking finitely many values. Rev. Math. Phys. 1, 129–133 (1989)

Lenz, D.: Singular continuous spectrum of Lebesgue measure zero for one-dimensional quasicrystals. Commun. Math. Phys. 227, 119–130 (2002)

Mañé, R.: The Hausdorff dimension of horseshoes of diffeomorphisms of surfaces. Bol. Soc. Brasil. Mat. (N.S.) 20, 1–24 (1990)

McCluskey, H., Manning, A.: Hausdorff dimension for horseshoes. Ergodic Theory Dyn. Syst. 3, 251–261 (1983); Erratum. Ergodic Theory Dyn. Syst. 5, 319 (1985)

Palis, J., Viana, M.: On the continuity of the Hausdorff dimension and limit capacity for horseshoes. In: Dynamical Systems, Lecture Notes in Mathematics 1331, pp. 150–160. Springer, Berlin (1988)

Palis, J., Takens, F.: Hyperbolicity and Sensitive Chaotic Dynamics at Homoclinic Bifurcations. Fractal Dimensions and Infinitely Many Attractors. Cambridge University Press, Cambridge (1993)

Sütő, A.: The spectrum of a quasi-periodic Schrödinger operator. Commun. Math. Phys. 111, 409–415 (1987)

Wall, D.: Fibonacci series modulo \(m\). Am. Math. Mon. 67, 525–532 (1960)

Yessen, W.: Spectral analysis of tridiagonal Fibonacci Hamiltonians. J. Spectr. Theory 3, 101–128 (2013)

Yessen, W.: On the energy spectrum of 1D quantum Ising quasicrystal. Ann. Henri Poincaré 15, 419–467 (2014)

Acknowledgements

Some of this work was carried out while D.D. was visiting the University of Bielefeld. He would like to thank Michael Baake and Sebastian Herr for the kind hospitality. We would also like to thank Philipp Gohlke for useful comments, and especially for pointing out an alternative approach to the questions studied in this paper by realizing the resulting potentials as quasi-Sturmian sequences.

Additional information

Communicated by Anton Bovier.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

D.D. was supported in part by NSF Grant DMS-1700131 and by an Alexander von Humboldt Foundation research award. L.F. was supported by NSFC (No. 11571327) and the Joint PhD Scholarship Program of Ocean University of China.

Appendix A. Notions and Results From Hyperbolic Dynamics

An invariant closed set \(\Lambda \) of a diffeomorphism \(f:M\rightarrow M\) is hyperbolic if there exists a splitting \(T_xM=E_x^s \oplus E_x^u\) of the tangent space at every point \(x \in \Lambda \) that is invariant under Df, and Df exponentially contracts vectors from the stable subspaces \(\{E_x^s\}\) and exponentially expands vectors from the unstable subspaces \(\{E_x^u\}\).

Let us recall that an invariant set \(\Lambda \) of a diffeomorphism \(f: M\rightarrow M\) is locally maximal if there exists a neighborhood \(U(\Lambda )\) such that

$$\begin{aligned} \Lambda = \bigcap _{n \in {\mathbb {Z}}} f^n(U(\Lambda )). \end{aligned}$$

The set \(\Lambda \) is called transitive if it contains a dense orbit. It is not hard to prove that the splitting \(E_x^s\oplus E_x^u\) depends continuously on \(x \in \Lambda \); hence, \(\dim (E_x^{s,u})\) is locally constant. If \(\Lambda \) is transitive, then \(\dim (E_x^{s,u})\) is constant on \(\Lambda \).
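The definition of a hyperbolic splitting can be checked by hand in the simplest linear setting. The following is a minimal sketch, assuming the toy map \(x \mapsto Ax\) with \(A = \begin{pmatrix} 2 &amp; 1 \\ 1 &amp; 1 \end{pmatrix}\); this matrix is an illustrative choice and is not a map studied in this paper. Since the derivative equals \(A\) at every point, the splitting is given by the two eigendirections of \(A\), and the helper names `apply`, `eigvec` and `growth` are hypothetical.

```python
import math

# Toy example (an assumption, not from the paper): the linear map x -> A x.
# Its derivative is A at every point, so E^s and E^u are the eigendirections of A.
A = ((2.0, 1.0), (1.0, 1.0))


def apply(mat, v):
    """Apply a 2x2 matrix to a vector in the plane."""
    return (mat[0][0] * v[0] + mat[0][1] * v[1],
            mat[1][0] * v[0] + mat[1][1] * v[1])


def norm(v):
    """Euclidean norm of a vector in the plane."""
    return math.hypot(v[0], v[1])


# Eigenvalues of A are the roots of t^2 - 3t + 1 = 0.
mu_u = (3 + math.sqrt(5)) / 2  # expansion rate, larger than 1
mu_s = (3 - math.sqrt(5)) / 2  # contraction rate, smaller than 1


def eigvec(mu):
    """Unit eigenvector of A for the eigenvalue mu, namely (1, mu - 2) normalized."""
    v = (1.0, mu - 2.0)
    n = norm(v)
    return (v[0] / n, v[1] / n)


e_s = eigvec(mu_s)  # spans the stable subspace E^s
e_u = eigvec(mu_u)  # spans the unstable subspace E^u


def growth(v, n):
    """Return |A^n v| / |v| for n >= 0."""
    w = v
    for _ in range(n):
        w = apply(A, w)
    return norm(w) / norm(v)


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(n, growth(e_s, n), growth(e_u, n))
```

In this sketch the ratio along `e_s` decays like `mu_s ** n` and the ratio along `e_u` grows like `mu_u ** n`, which is the exponential contraction and expansion required in the definition. For a nonlinear map the subspaces depend on the point \(x\), and no such closed-form computation is available in general.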

Consider a locally maximal invariant transitive hyperbolic set \(\Lambda \subset M\), \(\dim M=2\), of a diffeomorphism \(f \in \text {Diff}^r(M)\), \(r \ge 1\). We have \(\Lambda = \bigcap _{n \in {\mathbb {Z}}} f^n(U(\Lambda ))\) for some neighborhood \(U(\Lambda )\). Assume that \(\dim E^s = \dim E^u=1\). Then, the following properties hold.

A.1. Stability. There is a neighborhood \(\mathcal {U} \subset \text {Diff}^1(M)\) of the map f such that for every \(g \in \mathcal {U}\), the set

$$\begin{aligned} \Lambda _g = \bigcap _{n \in {\mathbb {Z}}} g^n(U(\Lambda )) \end{aligned}$$

is a locally maximal invariant hyperbolic set of g. Moreover, there is a homeomorphism \(h : \Lambda \rightarrow \Lambda _g\) that conjugates \(f|_\Lambda \) and \(g|_{\Lambda _g}\), that is, \(h \circ f|_{\Lambda } = g|_{\Lambda _g} \circ h\).

Also h can be taken arbitrarily close to the identity by taking \(\mathcal {U}\) sufficiently small.

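A.2. Invariant Manifolds. For \(x \in \Lambda \) and small \(\varepsilon > 0\), consider the local stable and unstable sets (with d denoting a distance on M)

$$\begin{aligned} W_\varepsilon ^s(x)&= \{ w \in M : d(f^n(x), f^n(w)) \le \varepsilon \text { for all } n \ge 0 \}, \\ W_\varepsilon ^u(x)&= \{ w \in M : d(f^n(x), f^n(w)) \le \varepsilon \text { for all } n \le 0 \}. \end{aligned}$$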

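If \(\varepsilon \) is small enough, these sets are embedded \(C^r\)-disks with \(T_x W_\varepsilon ^s(x) = E_x^s\) and \(T_x W_\varepsilon ^u(x) = E_x^u\). Define the global stable and unstable sets by

$$\begin{aligned} W^s(x) = \bigcup _{n \in {\mathbb {Z}}_+} f^{-n}(W_\varepsilon ^s(f^n(x))), \quad W^u(x) = \bigcup _{n \in {\mathbb {Z}}_+} f^{n}(W_\varepsilon ^u(f^{-n}(x))). \end{aligned}$$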

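Define also

$$\begin{aligned} W^s(\Lambda ) = \bigcup _{x \in \Lambda } W^s(x), \quad W^u(\Lambda ) = \bigcup _{x \in \Lambda } W^u(x). \end{aligned}$$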

A.3. Invariant Foliations. A stable foliation for \(\Lambda \) is a foliation \(\mathcal {F}^s\) of a neighborhood of \(\Lambda \) such that

(a) for each \(x \in \Lambda \), \(\mathcal {F}^s(x)\), the leaf containing x, is tangent to \(E_x^s\),

(b) for each x sufficiently close to \(\Lambda \), \(f(\mathcal {F}^s(x)) \subset \mathcal {F}^s(f(x))\).

An unstable foliation \(\mathcal {F}^u\) can be defined in a similar way.

For a locally maximal hyperbolic set \(\Lambda \subset M\) for \(f \in \text {Diff}^1(M)\), \(\dim (M) = 2\), stable and unstable \(C^0\) foliations with \(C^1\) leaves can be constructed, see [29]; in case \(f \in \text {Diff}^2(M)\), \(C^1\) invariant foliations exist, see [32].

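A.4. Local Hausdorff Dimension and Box Counting Dimension. Consider, for \(x \in \Lambda \) and small \(\varepsilon > 0\), the set \(W_\varepsilon ^s(x) \cap \Lambda \). Its Hausdorff dimension does not depend on \(x \in \Lambda \) and \(\varepsilon > 0\), and coincides with its box counting dimension:

$$\begin{aligned} \dim _{\mathrm {H}} \left( W_\varepsilon ^s(x) \cap \Lambda \right) = \dim _{\mathrm {B}} \left( W_\varepsilon ^s(x) \cap \Lambda \right) . \end{aligned}$$

In a similar way,

$$\begin{aligned} \dim _{\mathrm {H}} \left( W_\varepsilon ^u(x) \cap \Lambda \right) = \dim _{\mathrm {B}} \left( W_\varepsilon ^u(x) \cap \Lambda \right) . \end{aligned}$$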

Denote \(h^s = \dim _{\mathrm {H}} \left( W_\varepsilon ^s(x) \cap \Lambda \right) \) and \(h^u = \dim _{\mathrm {H}} \left( W_\varepsilon ^u(x) \cap \Lambda \right) \). We will say that \(h^s\) and \(h^u\) are the local stable and unstable Hausdorff dimensions of \(\Lambda \).
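To make the box counting dimension concrete, here is a small sketch for the middle-thirds Cantor set, a standard example in which the Hausdorff and box counting dimensions coincide and equal \(\log 2 / \log 3 &lt; 1\). This set is used purely as an illustration and is not one of the sets \(W_\varepsilon ^{s,u}(x) \cap \Lambda \) arising in this paper; the function names are hypothetical. Points are stored as integers in units of \(3^{-\text {depth}}\), so the counting is exact.

```python
import math
from itertools import product


def cantor_left_endpoints(depth):
    """Left endpoints of the 2**depth intervals of the level-`depth` approximation
    of the middle-thirds Cantor set, as integers in units of 3**-depth."""
    points = []
    for digits in product((0, 2), repeat=depth):
        value = 0
        for d in digits:
            value = 3 * value + d  # read the ternary expansion digit by digit
        points.append(value)
    return points


def box_count(points, depth, k):
    """Number of grid boxes of side 3**-k that contain at least one of the points."""
    scale = 3 ** (depth - k)
    return len({p // scale for p in points})


def box_dimension_estimates(depth):
    """Ratios log N(3**-k) / log(3**k) for k = 1, ..., depth."""
    points = cantor_left_endpoints(depth)
    return [
        math.log(box_count(points, depth, k)) / (k * math.log(3))
        for k in range(1, depth + 1)
    ]


if __name__ == "__main__":
    for k, estimate in enumerate(box_dimension_estimates(10), start=1):
        print(k, estimate)
```

Because of exact self-similarity, every ratio in this example already equals \(\log 2 / \log 3\); for a general set, only the limit as the box size tends to zero is meaningful, and it need not exist.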

For properly chosen small \(\varepsilon > 0\), the sets \(W_\varepsilon ^s(x) \cap \Lambda \) and \(W_\varepsilon ^u(x) \cap \Lambda \) are dynamically defined Cantor sets, and this implies that

$$\begin{aligned} h^s< 1 \quad \text {and} \quad h^u < 1. \end{aligned}$$

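A.5. Global Hausdorff Dimension. The Hausdorff dimension of \(\Lambda \) is equal to its box counting dimension, and

$$\begin{aligned} \dim _{\mathrm {H}} \Lambda = \dim _{\mathrm {B}} \Lambda = h^s + h^u; \end{aligned}$$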

see [30, 31] for more details.

A.6. Continuity of the Hausdorff Dimension. The local Hausdorff dimensions \(h^s(\Lambda )\) and \(h^u(\Lambda )\) depend continuously on \(f : M\rightarrow M\) in the \(C^1\)-topology; see [30, 31]. Therefore, \(\dim _{\mathrm {H}} \Lambda _f = \dim _{\mathrm {B}} \Lambda _f = h^s(\Lambda _f) + h^u(\Lambda _f)\) also depends continuously on f in the \(C^1\)-topology. Moreover, for a \(C^r\) diffeomorphism \(f : M \rightarrow M\), \(r \ge 2\), the Hausdorff dimension of a hyperbolic set \(\Lambda _f\) is a \(C^{r-1}\) function of f, see [29].

Cite this article

Damanik, D., Fang, L. & Jun, H. Schrödinger Operators Generated by Locally Constant Functions on the Fibonacci Subshift. Ann. Henri Poincaré 22, 1459–1498 (2021). https://doi.org/10.1007/s00023-020-01005-0
