Abstract

We prove that the Schrödinger operator with infinitely many point interactions in \(\mathbb {R}^d\) (\(d=1,2,3\)) is self-adjoint if the support \(\Gamma \) of the interactions is decomposed into infinitely many bounded subsets \(\{\Gamma _j\}_j\) such that \(\inf _{j\not =k}\mathop {\mathrm{dist}}\nolimits (\Gamma _j,\Gamma _k)>0\). Using this fact, we prove the self-adjointness of the Schrödinger operator with point interactions on a random perturbation of a lattice or on the Poisson configuration. We also determine the spectrum of the Schrödinger operators with random point interactions of Poisson–Anderson type.

1 Introduction

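Let \(\Gamma \) be a locally finite subset of \(\mathbb {R}^d\) (\(d=1,2,3\)), that is, \(\#(\Gamma \cap K)<\infty \) for any compact subset K of \(\mathbb {R}^d\), where the symbol \(\#S\) denotes the cardinality of a set S. We define the minimal operator \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\) by

$$\begin{aligned} H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }u=-\Delta u,\quad D(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits })=C_0^\infty (\mathbb {R}^d{\setminus }\Gamma ), \end{aligned}$$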

where \(\Delta =\sum _{j=1}^d \partial ^2/\partial x_j^2\) is the Laplace operator. Clearly, \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\) is a densely defined symmetric operator, and it is well known that the deficiency indices \(n_\pm (H_{\Gamma ,\mathop {\mathrm{min}}\nolimits })\) are given by

$$\begin{aligned} n_\pm (H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }):=\dim \mathcal {K}_{\Gamma ,\pm }= {\left\{ \begin{array}{ll} 2\#\Gamma &{} (d=1),\\ \#\Gamma &{} (d=2,3), \end{array}\right. } \end{aligned}$$

where \(\mathcal {K}_{\Gamma ,\pm }:=\mathop {\mathrm{Ker}}\nolimits ({H_{\Gamma , \mathop {\mathrm{min}}\nolimits }}^*\mp i)\) are the deficiency subspaces (see e.g., [2]). So, \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\) is not essentially self-adjoint unless \(\Gamma =\emptyset \). A self-adjoint extension of \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\) is called the Schrödinger operator with point interactions, since the support of the interactions is concentrated on a countable number of points in \(\mathbb {R}^d\). The Schrödinger operator with point interactions is known as a typical example of solvable models in quantum mechanics, and numerous works are devoted to the study of this model or its perturbation by a scalar potential or a magnetic vector potential. The book [2] contains most of the fundamental facts about this subject and an exhaustive list of references up to 2004. The papers [9, 20, 22] describe more recent developments in this subject.

There are mainly three popular methods of defining self-adjoint extensions H of \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\). Here, we denote the free Laplacian by \(H_0\), that is, \(H_0=-\Delta \) with \(D(H_0)=H^2(\mathbb {R}^d)\).

- (i)

Calculate the deficiency subspaces \(\mathcal {K}_{\Gamma ,\pm }\) and give the difference of the resolvent operators \((H-z)^{-1}-(H_0-z)^{-1}\) (\(\mathop {\mathrm{Im}}\nolimits z\not =0\)) for the desired self-adjoint extension H by using von Neumann’s theory and Krein’s resolvent formula.

- (ii)

Introduce a scalar potential V, choose the renormalization factor \(\lambda (\epsilon )\) appropriately and define the operator H as the norm resolvent limit

$$\begin{aligned} H= \lim _{\epsilon \rightarrow 0}H_\epsilon ,\quad H_\epsilon =-\Delta +\lambda (\epsilon ) \epsilon ^{-d}V(\cdot /\epsilon ). \end{aligned}$$(1)

- (iii)

Define the operator domain D(H) of the desired self-adjoint extension H in terms of the boundary conditions at \(\gamma \in \Gamma \).

These methods are mutually related and consequently give the same operators. Historically, the seminal works by Kronig–Penney [21] (\(d=1\)) and Thomas [28] (\(d=3\)) start from the method (ii), and conclude that the limiting operators are described by the method (iii). Bethe–Peierls [7] also obtain a similar boundary condition for \(d=3\). Berezin–Faddeev [6] start from the method (ii) for \(d=3\) by using the cutoff in the momentum space and show that the limiting operator is also defined by the method (i). After the paper [6], the method (i) became probably the most commonly used one. It is mathematically rigorous and useful in the analysis of spectral and scattering properties of the system, since various quantities (e.g., spectrum, scattering amplitude, resonance, etc.) are defined via the resolvent operator. The characteristic feature of the method (ii) is the dependence of the renormalization factor \(\lambda (\epsilon )\) on the dimension d. We can take \(\lambda (\epsilon )=1\) for \(d=1\), but \(\lambda (\epsilon )\rightarrow 0\) as \(\epsilon \rightarrow 0\) for \(d=2,3\) (see [2] or Theorem 23 in the appendix). Recently, the method (iii) has been reformulated in terms of the boundary triplet (see [9, 20, 22] and references therein). The method (iii) is useful when we cannot calculate the deficiency subspaces explicitly, e.g., for point interactions on a Riemannian manifold. In the present paper, we adopt the method (iii), as explained below.

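We define the maximal operator \(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }\) by \(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }={H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }}^*\), the adjoint operator of \(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\). The operator \(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }\) is explicitly given by

$$\begin{aligned} H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }u=-\Delta |_{\mathbb {R}^d{\setminus }\Gamma }u,\quad D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })=\{u\in L^2(\mathbb {R}^d)\,;\, \Delta |_{\mathbb {R}^d{\setminus }\Gamma }u\in L^2(\mathbb {R}^d)\}, \end{aligned}$$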

where \(\Delta |_{\mathbb {R}^d{\setminus }\Gamma } u=\sum _{j=1}^d\partial ^2u/\partial x_{j}^2\) in the sense of Schwartz distributions \({\mathcal D}'(\mathbb {R}^d{\setminus }\Gamma )\) on \(\mathbb {R}^d{\setminus }\Gamma \) (see [2] or Proposition 8 below). When \(d=1\), an element \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\) has boundary values \(\displaystyle u(\gamma \pm 0) (:=\lim \nolimits _{x\rightarrow \gamma \pm 0} u(x))\) and \(u'(\gamma \pm 0)\) for any \(\gamma \in \Gamma \). When \(d=2, 3\), it is known that any element \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\) has asymptotics

$$\begin{aligned} u(x)= {\left\{ \begin{array}{ll} u_{\gamma ,0}\log |x-\gamma |+u_{\gamma ,1}+o(1) &{} (d=2),\\ u_{\gamma ,0}|x-\gamma |^{-1}+u_{\gamma ,1}+o(1) &{} (d=3) \end{array}\right. } \quad \text {as } x\rightarrow \gamma \end{aligned}$$(2)

for every \(\gamma \in \Gamma \), where \(u_{\gamma ,0}\) and \(u_{\gamma ,1}\) are constants (see [2, 9] or Proposition 9 below).

Let \(\alpha = (\alpha _\gamma )_{\gamma \in \Gamma }\) be a sequence of real numbers. We define a closed linear operator \(H_{\Gamma ,\alpha }\) in \(L^2(\mathbb {R}^d)\) by

$$\begin{aligned} H_{\Gamma ,\alpha }u=-\Delta |_{\mathbb {R}^d{\setminus }\Gamma }u,\quad D(H_{\Gamma ,\alpha })=\{u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\,;\, u \text { satisfies } (BC)_\gamma \text { for every } \gamma \in \Gamma \}. \end{aligned}$$

The boundary condition \((BC)_\gamma \) at the point \(\gamma \in \Gamma \) is defined as follows.

$$\begin{aligned} (BC)_\gamma :\quad {\left\{ \begin{array}{ll} u(\gamma +0)=u(\gamma -0)=u(\gamma ),\quad u'(\gamma +0)-u'(\gamma -0)=\alpha _\gamma u(\gamma ) &{} (d=1),\\ 2\pi \alpha _\gamma u_{\gamma ,0}+u_{\gamma ,1}=0 &{} (d=2),\\ -4\pi \alpha _\gamma u_{\gamma ,0}+u_{\gamma ,1}=0 &{} (d=3), \end{array}\right. } \end{aligned}$$(3)

where \(u_{\gamma ,0}\) and \(u_{\gamma ,1}\) are the constants in (2). The above parametrization of the boundary conditions is consistent with the parametrization in [2], though our \(H_{\Gamma ,\alpha }\) is denoted by \(-\Delta _{\alpha ,Y}\) in [2]. When \(d=1\), ‘no interaction at \(\gamma \)’ corresponds to the value \(\alpha _\gamma =0\), and the formal expression \(H_{\Gamma ,\alpha }=-\Delta + \sum _{\gamma \in \Gamma }\alpha _\gamma \delta _\gamma \) is justified in the sense of quadratic forms, where \(\delta _\gamma \) is the Dirac delta function supported on the point \(\gamma \) (see (6)). However, when \(d=2,3\), ‘no interaction at \(\gamma \)’ corresponds to the value \(\alpha _\gamma =\infty \), and the coupling constant \(\alpha _\gamma \) is not the coefficient of the delta function, but the parameter appearing in the second term of the expansion of the renormalization factor \(\lambda (\epsilon )\) in (1) (see [2] or Theorem 23 in the appendix).

It is well known that \(H_{\Gamma ,\alpha }\) is self-adjoint when \(\#\Gamma <\infty \). When \(\#\Gamma =\infty \), the self-adjointness of \(H_{\Gamma ,\alpha }\) is proved under the uniform discreteness condition

$$\begin{aligned} d_*:=\inf _{\gamma ,\gamma '\in \Gamma ,\ \gamma \not =\gamma '}|\gamma -\gamma '|>0 \end{aligned}$$(4)

in the book [2] and many other references (e.g., [10, 15, 16, 22]). There are only a few results in the case \(d_*=0\). Minami [24] studies the self-adjointness and the spectrum of the random Schrödinger operator \(H_\omega =\displaystyle -\frac{\mathrm{d}^2}{\mathrm{d}t^2}+Q_t'(\omega )\) on \(\mathbb {R}\), where \(\{Q_t(\omega )\}_{t\in \mathbb {R}}\) is a temporally homogeneous Lévy process. If we take

$$\begin{aligned} Q_t(\omega )=\int _0^t\sum _{\gamma \in \Gamma _\omega }\alpha _{\omega ,\gamma }\delta (s-\gamma )\,\mathrm{d}s \end{aligned}$$

for the Poisson configuration (the support of the Poisson point process; see Definition 14 below) \(\Gamma _\omega \) on \(\mathbb {R}\) and i.i.d. (independently, identically distributed) random variables \(\alpha _\omega =(\alpha _{\omega ,\gamma })_{\gamma \in \Gamma _\omega }\), we conclude that \(H_{\Gamma _\omega ,\alpha _\omega }\) is self-adjoint almost surely. Kostenko–Malamud [20] give the following remarkable result.

Theorem 1

(Kostenko–Malamud [20]). Let \(d=1\). Let \(\Gamma =\{\gamma _n\}_{n\in \mathbb {Z}}\) be a sequence of strictly increasing real numbers with \(\displaystyle \lim \nolimits _{n\rightarrow \pm \infty }\gamma _n = \pm \infty \). Assume

$$\begin{aligned} \sum _{n=-\infty }^{-1}d_n^2=\sum _{n=0}^{\infty }d_n^2=\infty ,\quad d_n:=\gamma _{n+1}-\gamma _n. \end{aligned}$$(5)

Then, \(H_{\Gamma ,\alpha }\) is self-adjoint for every \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\).

Actually, Kostenko–Malamud [20] state the result in the half-line case, but the result can be easily extended to the whole-line case, as stated above. In the proof, Kostenko–Malamud construct an appropriate boundary triplet for \({H_{\Gamma , \mathop {\mathrm{min}}\nolimits }}^*\). Moreover, Christ–Stolz [10] give a counterexample \((\Gamma ,\alpha )\) for which \(d=1\), \(d_*=0\) and \(H_{\Gamma ,\alpha }\) is not self-adjoint. However, the proof of Minami [24] uses the fact that the deficiency indices are at most two for a one-dimensional symmetric differential operator, and the proof of Kostenko–Malamud [20] uses the decomposition \(L^2(\mathbb {R})=\oplus _{n=-\infty }^\infty L^2((\gamma _{n},\gamma _{n+1}))\). Both methods depend on the one-dimensionality of the space and cannot be applied directly in the two- or three-dimensional case.

In the present paper, we give a sufficient condition for the self-adjointness of \(H_{\Gamma ,\alpha }\), which is available even in the case \(d_*=0\) and \(d=2,3\). In the sequel, we denote R-neighborhood of a set S by \((S)_R\), that is,

$$\begin{aligned} (S)_R:=\{x\in \mathbb {R}^d\,;\, \mathop {\mathrm{dist}}\nolimits (\{x\},S)<R\}, \end{aligned}$$

where the distance \(\mathop {\mathrm{dist}}\nolimits (S,T)\) between two sets S and T is defined by

$$\begin{aligned} \mathop {\mathrm{dist}}\nolimits (S,T):=\inf _{x\in S,\ y\in T}|x-y|. \end{aligned}$$

Assumption 2

\(\Gamma \) is a locally finite subset of \(\mathbb {R}^d\) and there exists \(R>0\) such that every connected component of \((\Gamma )_R\) is a bounded set.

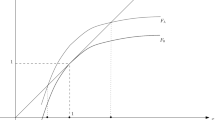

The set \((\Gamma )_R\) is the union of \(B_R(\gamma )\), an open disk of radius R centered at \(\gamma \in \Gamma \) (see Fig. 1). Assumption 2 is a generalization of the uniform discreteness condition (4). In fact, when \(\#\Gamma =\infty \), Assumption 2 is equivalent to the condition that \(\Gamma \) is decomposed into infinitely many bounded subsets \(\{\Gamma _j\}_j\) such that \(\inf _{j\not =k}\mathop {\mathrm{dist}}\nolimits (\Gamma _j,\Gamma _k)>0 \). However, in this paper, we cannot treat the case where \(\Gamma \) has accumulation points.
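The decomposition in Assumption 2 can be illustrated on a finite sample of points. The following is a minimal sketch, not part of the original argument: the function names `clusters` and `min_cluster_distance` and the sample points are hypothetical. It groups points whose open R-balls are linked by a chain of overlaps (two open balls of radius R meet exactly when their centers are closer than 2R) and then measures the separation between different groups. A finite sample cannot verify the assumption for an infinite \(\Gamma \); it only shows how the clusters \(\Gamma _j\) arise.

```python
import math

def clusters(points, R):
    """Group points so that two points share a cluster exactly when their
    open R-balls are linked by a chain of overlapping balls."""
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            # Open balls B_R(p) and B_R(q) intersect iff |p - q| < 2R.
            if math.dist(points[i], points[j]) < 2 * R:
                parent[find(i)] = find(j)

    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())

def min_cluster_distance(groups):
    """Smallest distance between points lying in different clusters."""
    best = math.inf
    for a in range(len(groups)):
        for b in range(a + 1, len(groups)):
            for p in groups[a]:
                for q in groups[b]:
                    best = min(best, math.dist(p, q))
    return best

# Two tight pairs far apart: the minimal point distance is tiny,
# yet the clusters are separated by at least 2R.
gamma = [(0.0, 0.0), (0.001, 0.0), (10.0, 0.0), (10.0, 0.002)]
groups = clusters(gamma, R=0.5)
print(len(groups), min_cluster_distance(groups))
```

By construction, points in different clusters are at distance at least 2R, which is the uniform separation \(\inf _{j\not =k}\mathop {\mathrm{dist}}\nolimits (\Gamma _j,\Gamma _k)>0\) in the equivalent formulation, while points inside one cluster may be arbitrarily close.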

Our first main result is stated as follows.

Theorem 3

Let \(d=1,2,3\). Suppose Assumption 2 holds. Then, \(H_{\Gamma ,\alpha }\) is self-adjoint for any \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\).

In the case \(d=1\), Theorem 3 is a special case of Theorem 1, since Assumption 2 implies there are infinitely many positive n and negative n such that \(d_n\ge 2R\), so the assumption (5) holds. In the case \(d=2,3\), Theorem 3 is new. Moreover, Theorem 3 also holds even if \(\alpha _\gamma =\infty \) (Dirichlet condition at \(\gamma \) for \(d=1\), no interaction at \(\gamma \) for \(d=2,3\)) for some \(\gamma \in \Gamma \), as is easily seen from the proof.

Theorem 3 is especially useful in the study of Schrödinger operators with random point interactions. There are many studies of the Schrödinger operators with random point interactions ([3, 8, 11,12,13, 17, 24]), but in most of these results \(\Gamma \) is assumed to be \(\mathbb {Z}^d\) or its random subset, except Minami’s paper [24]. Using Theorem 3, we can study more general random point interactions for which \(d_*\) can be 0. In the present paper, we prove the self-adjointness of \(H_{\Gamma ,\alpha }\) for the following two models. The first one is the random displacement model, given as follows. Notice that \(d_*\) can be 0 for this model.

Corollary 4

Let \(d=1,2,3\). Let \(\{\delta _n(\omega )\}_{n \in \mathbb {Z}^d}\) be a sequence of i.i.d. \(\mathbb {R}^d\)-valued random variables defined on some probability space \(\Omega \) such that \(|\delta _n(\omega )|< C\) for some positive constant C independent of n and \(\omega \in \Omega \). Put

$$\begin{aligned} \Gamma _\omega :=\{n+\delta _n(\omega )\}_{n\in \mathbb {Z}^d}. \end{aligned}$$

Then, \(H_{\Gamma _\omega ,\alpha }\) is self-adjoint for any \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma _\omega }\).

The proof of Corollary 4 is an application of Theorem 3 via an auxiliary result (Corollary 13). The second model is the Poisson model, given as follows.

Corollary 5

Let \(d=1,2,3\). Let \(\Gamma _\omega \) be the Poisson configuration on \(\mathbb {R}^d\) with intensity measure \(\lambda \mathrm{d}x\) for some positive constant \(\lambda \). Then, \(H_{\Gamma _\omega ,\alpha }\) is self-adjoint for any \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma _\omega }\), almost surely.

Corollary 5 is proved by combining Theorem 3 with the theory of continuum percolation (Theorem 15). These results are new when \(d=2,3\), and are not new when \(d=1\), as stated before.
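To get a feeling for the Poisson model in the simplest case \(d=1\), the following minimal sketch samples a Poisson configuration on a finite window and cuts it into clusters at scale R. Everything here is an illustrative assumption: the helper names, the window length, the intensity and the value of R are hypothetical, and a finite simulation proves nothing about the almost sure statement. It only shows that very small gaps occur (so \(d_*\) is expected to be 0 in the infinite configuration) while the clusters stay finite.

```python
import random

def poisson_points_1d(lam, length, rng):
    """Points of a rate-lam Poisson process on [0, length), built from
    independent exponential gaps."""
    points, t = [], rng.expovariate(lam)
    while t < length:
        points.append(t)
        t += rng.expovariate(lam)
    return points

def split_into_clusters(points, R):
    """Cut the sorted points wherever the gap is at least 2R, i.e. where the
    intervals (p - R, p + R) stop overlapping."""
    groups = []
    for p in points:
        if groups and p - groups[-1][-1] < 2 * R:
            groups[-1].append(p)
        else:
            groups.append([p])
    return groups

rng = random.Random(0)
pts = poisson_points_1d(lam=1.0, length=1000.0, rng=rng)
groups = split_into_clusters(pts, R=0.1)
min_gap = min(b - a for a, b in zip(pts, pts[1:]))
largest = max(len(g) for g in groups)
print(f"{len(pts)} points, smallest gap {min_gap:.2e}, largest cluster {largest}")
```

In one dimension the clusters are simply maximal runs of consecutive points with gaps below 2R. In dimensions two and three the analogous grouping needs the connected components of \((\Gamma _\omega )_R\), and the boundedness of those components is what the continuum percolation result supplies.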

The proof of Theorem 3 also enables us to determine the spectrum of \(H_{\Gamma ,\alpha }\) for the point interactions of Poisson–Anderson type, defined as follows.

Assumption 6

-

(i)

\(\Gamma _\omega \) is the Poisson configuration with intensity measure \(\lambda \mathrm{d}x\) for some \(\lambda >0\).

-

(ii)

The coupling constants \(\alpha _\omega =(\alpha _{\omega ,\gamma })_{\gamma \in \Gamma _\omega }\) are real-valued i.i.d. random variables with common distribution measure \(\nu \) on \(\mathbb {R}\). Moreover, \((\alpha _{\omega ,\gamma })_{\gamma \in \Gamma _\omega }\) are independent of \(\Gamma _\omega \).

We note that \(\alpha _\omega =(\alpha _{\omega ,\gamma })_{\gamma \in \Gamma _\omega }\) can be deterministic: the case \(\mathop {\mathrm{supp}}\nolimits \nu =\{\alpha _0\}\) for some \(\alpha _0\in \mathbb {R}\) is allowed.

Theorem 7

Let \(d=1,2,3\). Let \(\Gamma _\omega \) and \(\alpha _\omega \) satisfy Assumption 6 and put \(H_\omega =H_{\Gamma _\omega ,\alpha _\omega }\). Then, the spectrum \(\sigma (H_\omega )\) of \(H_\omega \) is given as follows.

- (i)

When \(d=1\), we have

$$\begin{aligned} \sigma (H_\omega )= {\left\{ \begin{array}{ll} {[}0,\infty ) &{} (\mathop {\mathrm{supp}}\nolimits \nu \subset [0,\infty )),\\ \mathbb {R} &{} (\mathop {\mathrm{supp}}\nolimits \nu \cap (-\infty ,0)\not =\emptyset ), \end{array}\right. } \end{aligned}$$
almost surely.

- (ii)

When \(d=2,3\), we have \( \sigma (H_\omega )=\mathbb {R}\) almost surely.

Notice that the result is independent of \(\mathop {\mathrm{supp}}\nolimits \nu \) when \(d=2,3\). If we permit \(\alpha _{\omega ,\gamma }=\infty \) for some \(\omega , \gamma \) and regard \(\nu \) as a measure on \(\mathbb {R}\cup \{\infty \}\), the statement still holds in the case \(d=1\) if we replace ‘\(\mathop {\mathrm{supp}}\nolimits \nu \subset [0,\infty )\)’ by ‘\(\mathop {\mathrm{supp}}\nolimits \nu \subset [0,\infty ]\).’ In the case \(d=2,3\), we have to assume ‘\(\mathop {\mathrm{supp}}\nolimits \nu \cap \mathbb {R}\not =\emptyset \)’ in order to obtain the same conclusion. If \(\mathop {\mathrm{supp}}\nolimits \nu =\{\infty \}\), then \(H_\omega \) becomes the free Laplacian, so \(\sigma (H_\omega )=[0,\infty )\).

Theorem 7 can be interpreted as a generalization of the corresponding result for the Schrödinger operator \(-\Delta +V_\omega \) with random scalar potential of Poisson–Anderson type

$$\begin{aligned} V_\omega (x)=\sum _{\gamma \in \Gamma _\omega }\alpha _{\omega ,\gamma }V_0(x-\gamma ), \end{aligned}$$

where \(\Gamma _\omega \) and \(\alpha _\omega \) satisfy Assumption 6, and \(V_0\) is a real-valued scalar function having some regularity and decaying property. The spectrum \(\sigma (-\Delta +V_\omega )\) is determined in [5, 18, 25], and the result says ‘the spectrum equals \([0,\infty )\) if \(V_\omega \) is nonnegative, and it equals \(\mathbb {R}\) if \(V_\omega \) has negative part.’ When \(d=1\), the point interaction at \(\gamma \) has the same sign as the coupling constant \(\alpha _\gamma \) in the sense of quadratic forms, that is,

$$\begin{aligned} (u,H_{\Gamma ,\alpha }u)=\Vert u'\Vert ^2+\sum _{\gamma \in \Gamma }\alpha _\gamma |u(\gamma )|^2 \end{aligned}$$(6)

for \(u\in D(H_{\Gamma ,\alpha })\) with bounded support. When \(d=2,3\), the sign of the point interaction at \(\gamma \) is in some sense negative for any \(\alpha _\gamma \in \mathbb {R}\). Actually, if \(H_\epsilon \) converges to some \(H_{O,\alpha }\) (\(O:=\{0\}\), \(\alpha =\{\alpha _0\}\)) for some \(\alpha _0\in \mathbb {R}\) in the approximation procedure (1), V necessarily has negative part (for details, see Theorem 23 in the appendix). There is also a qualitative difference between the proof of Theorem 7 in the case \(d=1\) and that in the case \(d=2,3\). The spectrum \((-\infty ,0)\) is created by the accumulation of many points in one place when \(d=1\), while it is created by the merging of two points when \(d=2,3\) (see Sect. 3.3). The latter fact reminds us of the Thomas collapse, which says the mass defect of the tritium \({^3}\mathrm{H}\) becomes arbitrarily large as the distances between a proton and two neutrons become small enough (see [28]).

Let us give a brief comment on the magnetic case. The Schrödinger operator with a constant magnetic field plus infinitely many point interactions was studied in [12, 14], and the self-adjointness was proved under the uniform discreteness condition (4). Theorem 3 can be generalized under the existence of a constant magnetic field, by using the magnetic translation operator. We will discuss this case elsewhere in the near future.

The present paper is organized as follows. In Sect. 2, we review some fundamental formulas about self-adjoint extensions of \(H_{\Gamma , \mathop {\mathrm{min}}\nolimits }\) and prove Theorem 3. The crucial fact is ‘bounded support functions are dense in \(D(H_{\Gamma ,\alpha })\) under Assumption 2’ (Proposition 12). In Sect. 3, we prove the self-adjointness of Schrödinger operators with various random point interactions. We also determine the spectrum of \(H_\omega =H_{\Gamma _\omega ,\alpha _\omega }\) for Poisson–Anderson-type point interactions, using the method of admissible potentials (Proposition 18; see also [5, 18, 19, 25]). In the proof, we again need Proposition 12 and also need to take care of the dependence of the operator domain \(D(H_{\Gamma ,\alpha })\) with respect to \(\Gamma \) and \(\alpha \). Once we establish the method of admissible potentials, the proof of Theorem 7 is reduced to the calculation of \(\sigma (H_{\Gamma ,\alpha })\) for admissible \((\Gamma ,\alpha )\).

Let us explain the notation in the manuscript. The notation \(A:=B\) means A is defined as B. The set \(B_r(x)\) is the open ball of radius r centered at \(x\in \mathbb {R}^d\), that is, \(B_r(x):=\{y\in \mathbb {R}^d;|y-x|<r\}\). The space D(H) is the operator domain of a linear operator H equipped with the graph norm \(\Vert u\Vert _{D(H)}^2=\Vert u\Vert ^2 + \Vert H u\Vert ^2\). For an open set U, \(C_0^\infty (U)\) is the set of compactly supported \(C^\infty \) functions on U. The space \(L^2(U)\) is the space of square integrable functions on U, and the inner product and the norm on \(L^2(U)\) are defined as

When \(U=\mathbb {R}^d\), we often abbreviate the subscript \(L^2(U)\). The space \(H^2(U)\) is the Sobolev space of order 2 on U, and the norm is defined by

$$\begin{aligned} \Vert u\Vert _{H^2(U)}^2=\sum _{|\alpha |\le 2}\Vert \partial ^\alpha u\Vert _{L^2(U)}^2, \end{aligned}$$

where \(\alpha =(\alpha _1,\ldots ,\alpha _d)\in (\mathbb {Z}_{\ge 0})^d\) is the multi-index and \(|\alpha |=\alpha _1+\cdots +\alpha _d\), and the derivatives are defined as elements of \(\mathcal {D}'(U)\), the Schwartz distributions on U. The space \(L^2_\mathrm{loc}(U)\) is the set of the functions u such that \(\chi u\in L^2(U)\) for any \(\chi \in C_0^\infty (U)\). The space \(H^2_\mathrm{loc}(U)\) is defined similarly.

2 Self-adjointness

2.1 Structure of \(D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\)

First, we review fundamental properties of the operator domain \(D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\) of the maximal operator \(H_{\Gamma , \mathop {\mathrm{max}}\nolimits }\). Most of the results are already obtained under more general assumptions (see e.g., [2, 9, 22]), but we give the proofs here since their ingredients are closely related to those of our main theorems.

Proposition 8

Let \(d=1,2,3\).

- (i)

We have

$$\begin{aligned} D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })&=\{u\in L^2(\mathbb {R}^d) \,;\, \Delta |_{\mathbb {R}^d{\setminus }\Gamma } u \in L^2(\mathbb {R}^d)\}\\&=\{u\in L^2(\mathbb {R}^d)\cap H^2_\mathrm{loc}(\mathbb {R}^d{\setminus } \Gamma ) \,;\, \Delta |_{\mathbb {R}^d{\setminus }\Gamma } u \in L^2(\mathbb {R}^d)\}, \end{aligned}$$
where \(\Delta |_{\mathbb {R}^d{\setminus }\Gamma } u=\sum _{j=1}^d\partial ^2u/\partial x_{j}^2\) in the sense of Schwartz distributions \({\mathcal D}'(\mathbb {R}^d{\setminus }\Gamma )\) on \(\mathbb {R}^d{\setminus }\Gamma \).

- (ii)

Let \(\chi \in C_0^\infty (\mathbb {R}^d)\) such that \(\Gamma \cap \mathop {\mathrm{supp}}\nolimits \nabla \chi =\emptyset \). Then, for any \(u\in D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\), we have \(\chi u\in D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\).

In the sequel, we simply write \(\Delta =\Delta |_{\mathbb {R}^d{\setminus }\Gamma }\) when there is no fear of confusion.

Proof

(i) By definition, the statement \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })=D({H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }}^*)\) is equivalent to ‘\(u\in L^2(\mathbb {R}^d)\) and there exists \(v\in L^2(\mathbb {R}^d)\) such that

$$\begin{aligned} (u,H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }\phi )=(v,\phi ) \end{aligned}$$

for any \(\phi \in C_0^\infty (\mathbb {R}^d{\setminus } \Gamma )\)’. The latter statement is equivalent to \(v=-\Delta |_{\mathbb {R}^d{\setminus }\Gamma } u\in L^2(\mathbb {R}^d)\). Moreover, by the elliptic inner regularity theorem (Corollary 25), we have \(u\in H^2_\mathrm{loc}(\mathbb {R}^d{\setminus } \Gamma )\).

(ii) Let \(\chi \) satisfy the assumption and \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\). By the chain rule, we have

$$\begin{aligned} \Delta (\chi u)=(\Delta \chi )u+2\nabla \chi \cdot \nabla u+\chi \Delta u. \end{aligned}$$(7)

Since \(u,\Delta u\in L^2(\mathbb {R}^d)\), the first term of (7) and the third belong to \(L^2(\mathbb {R}^d)\). Moreover, since \(\mathop {\mathrm{supp}}\nolimits \nabla \chi \) is a compact subset of \(\mathbb {R}^d{\setminus }\Gamma \) and \(u\in H^2_\mathrm{loc}(\mathbb {R}^d{\setminus }\Gamma )\), the second term also belongs to \(L^2(\mathbb {R}^d)\). Thus, \(\chi u, \Delta (\chi u)\in L^2(\mathbb {R}^d)\), and the statement follows from (i). \(\square \)

The assumption \(\Gamma \cap \mathop {\mathrm{supp}}\nolimits \nabla \chi =\emptyset \) above cannot be removed when \(d=2,3\). For example, consider the case \(d=2\), \(\Gamma =O:=\{0\}\). Take functions u and \(\chi \) such that

and defined appropriately for \(1\le |x| \le 2\) so that \(u\in C^\infty (\mathbb {R}^2{\setminus } O)\) and \(\chi \in C_0^\infty (\mathbb {R}^2)\). Since \(\Delta \log |x|=0\) for \(x\not =0\), we see that \(u,\Delta u\in L^2(\mathbb {R}^2)\), so \(u\in D(H_{O,\mathop {\mathrm{max}}\nolimits })\). However, the chain rule (7) implies

for \(|x|<1\), so \(\Delta (\chi u)\not \in L^2(\mathbb {R}^2)\). This fact is crucial in our proof of self-adjointness criterion (Theorem 3).

Next, we define the (generalized) boundary values at \(\gamma \in \Gamma \) of \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\). In the case \(d=2,3\), a similar argument is found in [2, 9].

Proposition 9

-

(i)

Let \(d=1\), \(u\in D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\) and \(\gamma \in \Gamma \). Then, the one-sided limits \(u(\gamma \pm 0)\) and \(u'(\gamma \pm 0)\) exist.

-

(ii)

Let \(d=2,3\), \(u\in D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\) and \(\gamma \in \Gamma \). Let \(\epsilon \) be a small positive constant so that \(\overline{B_\epsilon (\gamma )}\cap \Gamma =\{\gamma \}\). Then, there exist unique constants \(u_{\gamma ,0}\) and \(u_{\gamma ,1}\), and \(\widetilde{u}\in H^2(B_\epsilon (\gamma ))\) with \(\widetilde{u}(\gamma )=0\), such that for \(x\in B_{\epsilon }(\gamma )\)

$$\begin{aligned} \begin{array}{cc} u(x) = u_{\gamma ,0}\log |x-\gamma |+u_{\gamma ,1}+ \widetilde{u}(x) &{} (d=2),\\ u(x) = u_{\gamma ,0}|x-\gamma |^{-1}+u_{\gamma ,1}+ \widetilde{u}(x) &{} (d=3). \end{array} \end{aligned}$$(9)

Proof

(i) This is a consequence of the Sobolev embedding theorem, since the restriction of \(u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\) on I belongs to \(H^2(I)\) for any connected component I of \(\mathbb {R}{\setminus }\Gamma \).

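(ii) We consider the case \(d=2\). By a cutoff argument ((ii) of Proposition 8), we can reduce the proof to the case where \(\Gamma \) is a one-point set. Without loss of generality, we assume \(\Gamma =O\). Then, by von Neumann’s theory of self-adjoint extensions (see e.g., [26, Section X.1]), we have

$$\begin{aligned} D(H_{O,\mathop {\mathrm{max}}\nolimits })=\overline{D(H_{O,\mathop {\mathrm{min}}\nolimits })}\oplus \mathcal {K}_{O,+}\oplus \mathcal {K}_{O,-}, \end{aligned}$$(10)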

where \(\overline{D(H_{O,\mathop {\mathrm{min}}\nolimits })}\) is the closure of \(D(H_{O,\mathop {\mathrm{min}}\nolimits })\) with respect to the graph norm (or \(H^2\)-norm), and \(\mathcal {K}_{O,\pm } = \mathop {\mathrm{Ker}}\nolimits (H_{O,\mathop {\mathrm{max}}\nolimits }\mp i)\) are deficiency subspaces. It is known that \(\mathcal {K}_{O,\pm }\) are one-dimensional spaces spanned by

$$\begin{aligned} H^{(1)}_0(\sqrt{\pm i}\,r), \end{aligned}$$

where \(H^{(1)}_0\) is the 0-th order Hankel function of the first kind, \(r=|x|\), and the branches of \(\sqrt{\pm i}\) are taken as \(\mathop {\mathrm{Im}}\nolimits \sqrt{\pm i}>0\) (see [2]). Thus, we have inclusion

$$\begin{aligned} \overline{D(H_{O,\mathop {\mathrm{min}}\nolimits })}\subset \{u\in H^2(\mathbb {R}^2)\,;\, u(0)=0\}\subset H^2(\mathbb {R}^2)\subset D(H_{O,\mathop {\mathrm{max}}\nolimits }). \end{aligned}$$(11)

The first inclusion is due to the Sobolev embedding theorem. The second inclusion is clearly strict, and the third one is also strict since \(D(H_{O,\mathop {\mathrm{max}}\nolimits })\) contains elements singular at 0, by (10) (see (12) below). The decomposition (10) also tells us \(\dim \left( D(H_{O,\mathop {\mathrm{max}}\nolimits })/ \overline{D(H_{O,\mathop {\mathrm{min}}\nolimits })}\right) =2\), so the first inclusion in (11) must be equality, that is,

$$\begin{aligned} \overline{D(H_{O,\mathop {\mathrm{min}}\nolimits })}=\{u\in H^2(\mathbb {R}^2)\,;\, u(0)=0\}. \end{aligned}$$

By the series expansion of the Hankel function, we have

$$\begin{aligned} H^{(1)}_0(\sqrt{\pm i}\,r)=1+\frac{2i}{\pi }\left( \log \frac{\sqrt{\pm i}\,r}{2}+\gamma _E\right) +O(r^2|\log r|)\quad (r\rightarrow 0), \end{aligned}$$(12)

where \(\gamma _E\) is the Euler constant. It is easy to see the remainder term is in \(H^2(B_\epsilon (0))\) and vanishes at 0. Thus, by the decomposition (10), every \(u\in D(H_{O,\mathop {\mathrm{max}}\nolimits })\) can be uniquely written as (9).

In the case \(d=3\), a basis of the deficiency subspace \(\mathcal {K}_{O,\pm }\) is

$$\begin{aligned} \frac{e^{i\sqrt{\pm i}\,r}}{r} \end{aligned}$$

(see [2]). Using this expression, we can prove the statement for \(d=3\) similarly.

\(\square \)

Next, we introduce the generalized Green formula.

Proposition 10

Let \(d=1,2,3\). Let \(u,v \in D(H_{\Gamma , \mathop {\mathrm{max}}\nolimits })\), and assume \(\mathop {\mathrm{supp}}\nolimits u\) or \(\mathop {\mathrm{supp}}\nolimits v\) is bounded. Then, we have

Proof

The proof in the case \(d=1\) is easy. Consider the case \(d=2\). By a cutoff argument, we can assume both \(\mathop {\mathrm{supp}}\nolimits u\) and \(\mathop {\mathrm{supp}}\nolimits v\) are bounded. We can also assume \(\mathop {\mathrm{supp}}\nolimits u \cup \mathop {\mathrm{supp}}\nolimits v\subset B_R(0)\), and \(\Gamma \cap \partial B_R(0)=\emptyset \). Then, we can decompose u and v as

where \(\widetilde{u},\widetilde{v}\in \overline{D(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits })}\), and \(\phi _\gamma ,\psi _\gamma \in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\) are real-valued functions such that

and \(\mathop {\mathrm{supp}}\nolimits \phi _\gamma \cup \mathop {\mathrm{supp}}\nolimits \psi _\gamma \) is contained in some small neighborhood of \(\gamma \) so that \(\{\mathop {\mathrm{supp}}\nolimits \phi _\gamma \cup \mathop {\mathrm{supp}}\nolimits \psi _\gamma \}_{\gamma \in \Gamma \cap B_R(0)}\) are disjoint sets in \(B_R(0)\).

We use the notation

Clearly, \([\phi ,\psi ]=-\overline{[\psi ,\phi ]}\), so \([\phi ,\phi ]=0\) for real-valued \(\phi \in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\). Moreover, \([\phi ,\psi ]=0\) if \(\phi \in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\) and \(\psi \in \overline{D(H_{\Gamma ,\mathop {\mathrm{min}}\nolimits })}\). Thus, we have

Let us calculate \([\phi _\gamma ,\psi _\gamma ]\). By translating the coordinate, we assume \(\gamma =0\) and write \(\phi _\gamma =\phi \), \(\psi _\gamma =\psi \). Then, since \(\phi =\log r\) and \(\psi =1\) near \(x=0\),

where n is the unit inner normal vector on \(\partial B_\epsilon (0)\), ds is the line element, and \((r,\theta )\) is the polar coordinate. Thus, the assertion for \(d=2\) holds. The proof for the case \(d=3\) is similar, but we take the function \(\phi _\gamma \) as

\(\square \)

If the uniform discreteness condition (4) holds, the results in this subsection can be formulated in terms of the boundary triplet for \(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }\), as is done in [9, 22]. When \(d=1\) and \(d_*=0\), the boundary triplet for \(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits }\) is constructed in [20]. The construction in the case \(d=2,3\) and \(d_*=0\) seems to be unknown so far.

2.2 Proof of Theorem 3

Let \(\Gamma \) be a locally finite set in \(\mathbb {R}^d\), and \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\) be a sequence of real numbers. In this subsection, we write \(H=H_{\Gamma ,\alpha }\), that is,

$$\begin{aligned} Hu=-\Delta u,\quad D(H)=\{u\in D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\,;\, u \text { satisfies } (BC)_\gamma \text { for every } \gamma \in \Gamma \}, \end{aligned}$$

where \((BC)_\gamma \) is defined in (3). We introduce an auxiliary operator \(H_b\) by

$$\begin{aligned} H_bu=-\Delta u,\quad D(H_b)=\{u\in D(H)\,;\, \mathop {\mathrm{supp}}\nolimits u \text { is bounded}\}. \end{aligned}$$

By the generalized Green formula (Proposition 10), we have the following.

Proposition 11

Let \(d=1,2,3\). For any locally finite set \(\Gamma \) and sequence of real numbers \(\alpha \), the operator \(H_b\) is a densely defined symmetric operator, and \({H_b}^*=H\).

Proof

We consider the case \(d=2\), since the case \(d=1,3\) can be treated similarly. For \(u,v\in D(H_b)\), the generalized Green formula (13) and \((BC)_\gamma \) imply

Thus, \(H_b\) is a symmetric operator.

The equality (14) also holds for any \(u\in D(H_b)\) and \(v\in D(H)\), so \(D(H)\subset D({H_b}^*)\). Conversely, let \(v\in D({H_b}^*)\). By definition, \([u,v]=0\) holds for any \(u\in D(H_b)\). For \(\gamma \in \Gamma \), take \(u\in D(H_b)\) such that \(u_{\gamma ,0}=1/(2\pi )\), \(u_{\gamma ,1}=-\alpha _\gamma \), and \(u_{\gamma ',0}=u_{\gamma ',1}=0\) for \(\gamma '\not =\gamma \). Since \(D({H_b}^*)\subset D({H_{\Gamma ,\mathop {\mathrm{min}}\nolimits }}^*)=D(H_{\Gamma ,\mathop {\mathrm{max}}\nolimits })\), we have by the generalized Green formula (13)

Thus, v satisfies \((BC)_\gamma \) for every \(\gamma \in \Gamma \), and we conclude \(v\in D(H)\). This means \(H={H_b}^*\). \(\square \)

Now, Theorem 3 is a corollary of the following proposition.

Proposition 12

Suppose Assumption 2 holds. Then, \(\overline{H_b}=H\). In other words, \(D(H_b)\) is an operator core for the operator H.

Proof

Let R be the constant in Assumption 2. For a positive integer n, let \(S_n\) be the connected component of \(B_n(0)\cup (\Gamma )_R\) containing \(B_n(0)\) (see Fig. 2).

Fig. 2: The union of non-dashed disks is the set \(S_n\) for \(n=2\). Here, \((\Gamma )_R\) is the set in Fig. 1

By assumption, \(S_n\) is a bounded open set in \(\mathbb {R}^d\). Let \(\eta \in C_0^\infty (\mathbb {R}^d)\) be a rotationally symmetric function such that \(\eta \ge 0\), \(\mathop {\mathrm{supp}}\nolimits \eta \subset B_{R/3}(0)\), and \(\int _{\mathbb {R}^d}\eta \mathrm{d}x =1\). Put

$$\begin{aligned} \chi _n(x):=\int _{S_n}\eta (x-y)\,\mathrm{d}y. \end{aligned}$$

The function \(\chi _n\) has the following properties.

- (i)

\(\chi _n\in C_0^\infty (\mathbb {R}^d)\), \(0\le \chi _n(x)\le 1\), and

$$\begin{aligned} \chi _n(x)= {\left\{ \begin{array}{ll} 1 &{} (x\in S_n,\ \mathop {\mathrm{dist}}\nolimits (x,\partial S_n)>R/3), \\ 0 &{} (x\not \in S_n,\ \mathop {\mathrm{dist}}\nolimits (x,\partial S_n)>R/3). \end{array}\right. } \end{aligned}$$
In particular, \(\chi _n(x)\rightarrow 1\) as \(n\rightarrow \infty \) for every \(x\in \mathbb {R}^d\).

- (ii)

\(\mathop {\mathrm{supp}}\nolimits \nabla \chi _n\subset (\partial S_n)_{R/3}\), and \(\mathop {\mathrm{supp}}\nolimits \nabla \chi _n\cap \Gamma =\emptyset \).

- (iii)

\(\Vert \nabla \chi _n\Vert _\infty \), \(\Vert \Delta \chi _n\Vert _\infty \) are bounded uniformly with respect to n, where \(\Vert \cdot \Vert _\infty \) denotes the sup norm.

Let \(u\in D(H)\). By (i), (ii) and Proposition 8, \(\chi _n u \in D(H_b)\). By the dominated convergence theorem and (i), \(\chi _n u \rightarrow u\) in \(L^2(\mathbb {R}^d)\). Moreover,

$$\begin{aligned} \Delta (\chi _n u)-\Delta u=(\Delta \chi _n)u+2\nabla \chi _n\cdot \nabla u+(\chi _n-1)\Delta u. \end{aligned}$$(15)

Since \(u,\Delta u\in L^2\), the first term of (15) and the third tend to 0 in \(L^2(\mathbb {R}^d)\) by the dominated convergence theorem. As for the second term, we apply the elliptic inner regularity estimate (Corollary 25) for \(U=(\partial S_n)_{R/2}\) and \(V=(\partial S_n)_{R/3}\), and obtain

Here, the constant C is independent of n, since \(\mathop {\mathrm{dist}}\nolimits (V,\partial U)\ge R/6\) and the lower bound is independent of n. The last expression tends to 0 as \(n\rightarrow \infty \), so \(\Delta (\chi _n u)-\Delta u\rightarrow 0\) in \(L^2(\mathbb {R}^d)\). Thus, \(\chi _n u \in D(H_b)\) converges to u in D(H), and we conclude \(D(H_b)\) is dense in D(H). \(\square \)

Proof of Theorem 3

Proposition 11 implies \(H={H_b}^*\), and \({H_b}^*=(\overline{H_b})^*\) always holds. On the other hand, Proposition 12 says \(\overline{H_b}=H\), so
$$\begin{aligned} H^*=(\overline{H_b})^*={H_b}^*=H. \end{aligned}$$

Thus, H is self-adjoint. \(\square \)

3 Random Point Interactions

Using Theorem 3, we study Schrödinger operators with random point interactions, for which \(d_*\) can be 0.

3.1 Self-adjointness

First, we give a simple corollary of Theorem 3.

Corollary 13

Assume that there exist \(R_0>0\) and \(M>0\) such that \(\#(\Gamma \cap B_{R_0}(x))\le M\) for every \(x\in \mathbb {R}^d\). Then, \(H_{\Gamma ,\alpha }\) is self-adjoint for any \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\).

Proof

The assumption implies Assumption 2 holds with \(R=R_0/(2M+1)\), since the connected component of \((\Gamma )_R\) containing \(x\in \mathbb {R}^d\) is contained in the bounded set \(B_{R_0}(x)\). \(\square \)

The assumption of Corollary 13 is satisfied for the random displacement model (Corollary 4).

Proof of Corollary 4

Under the assumption of Corollary 4, we have

where |S| denotes the Lebesgue measure of a measurable set S. Thus, the assumption of Corollary 13 is satisfied. \(\square \)

Next, we consider the case where \(\Gamma =\Gamma _\omega \) is the Poisson configuration (Corollary 5). We review the definition of the Poisson configuration (see e.g., [5, 18, 25, 27]).

Definition 14

Let \(\mu _\omega \) be a random measure on \(\mathbb {R}^d\) (\(d\ge 1\)) dependent on \(\omega \in \Omega \) for some probability space \(\Omega \). For a positive constant \(\lambda \), we say \(\mu _\omega \) is the Poisson point process with intensity measure \(\lambda \mathrm{d}x\) if the following conditions hold.

- (i)

For every Borel measurable set \(E\subset \mathbb {R}^d\) with finite Lebesgue measure \(|E|<\infty \), \(\mu _\omega (E)\) is an integer-valued random variable on \(\Omega \) and

$$\begin{aligned} \mathbb {P}(\mu _\omega (E)=k)=\frac{(\lambda |E|)^k}{k!}\mathrm{e}^{-\lambda |E|} \end{aligned}$$for every nonnegative integer k.

- (ii)

For any disjoint Borel measurable sets \(E_1,\ldots ,E_n\) in \(\mathbb {R}^d\) with finite Lebesgue measure, the random variables \(\{\mu _\omega (E_j)\}_{j=1}^n\) are independent.

We call the support \(\Gamma _\omega \) of the Poisson point process measure \(\mu _\omega \) the Poisson configuration.
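
Definition 14 can be illustrated by sampling the Poisson configuration inside a bounded cube. The following Python sketch is only an illustration under stated assumptions: the function names (`poisson_pmf`, `sample_poisson_count`, `sample_configuration`) and the parameter values are hypothetical and not taken from the paper. It uses the standard fact that, conditioned on the number of points in a cube, the points of a homogeneous Poisson process are independent and uniformly distributed there.

```python
import math
import random


def poisson_pmf(k, lam, volume):
    """P(mu(E) = k) from condition (i), for a set E with Lebesgue measure `volume`."""
    mean = lam * volume
    return mean ** k / math.factorial(k) * math.exp(-mean)


def sample_poisson_count(mean, rng):
    """Draw a Poisson(mean) variate by counting unit-rate exponential arrivals up to time `mean`."""
    count = 0
    t = rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count


def sample_configuration(lam, side, d, rng):
    """Sample the Poisson configuration restricted to the cube [0, side]^d."""
    n = sample_poisson_count(lam * side ** d, rng)
    return [tuple(rng.uniform(0.0, side) for _ in range(d)) for _ in range(n)]


rng = random.Random(0)  # fixed seed so the illustration is reproducible
points = sample_configuration(lam=0.5, side=10.0, d=2, rng=rng)

# The probabilities in condition (i) sum to 1 (checked here up to truncation at k = 59).
total = sum(poisson_pmf(k, 0.5, 4.0) for k in range(60))
```

The sample `points` is a finite subset of the cube, which is consistent with the local finiteness of \(\Gamma _\omega \) required throughout the paper.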

We introduce a basic result in the theory of continuum percolation (see e.g., [23]).

Theorem 15

(Continuum percolation) Let \(\Gamma =\Gamma _\omega \) be the Poisson configuration on \(\mathbb {R}^d\) (\(d\ge 2\)) with intensity measure \(\lambda \mathrm{d}x\), where \(\lambda \) is a positive constant. For \(R>0\), let \(\theta _R(\lambda )\) be the probability of the event ‘the connected component of \((\Gamma )_R\) containing the origin is unbounded.’ Then, for any \(R>0\), there exists a positive constant \(\lambda _c(R)\), called the critical density, such that
$$\begin{aligned} \theta _R(\lambda )=0\quad (\lambda <\lambda _c(R)),\qquad \theta _R(\lambda )>0\quad (\lambda >\lambda _c(R)). \end{aligned}$$

Moreover, the scaling property
$$\begin{aligned} \lambda _c(R)=R^{-d}\lambda _c(1) \end{aligned}\tag{16}$$

holds for any \(R>0\).

When \(d=1\), it is easy to see \(\theta _R(\lambda )=0\) for every \(R>0\) and \(\lambda >0\), so we put \(\lambda _c(R)=\infty \).

Proof of Corollary 5

By the scaling property (16), the condition \(\lambda <\lambda _c(R)\) is satisfied if we take R sufficiently small. Then, since the Poisson point process is statistically translationally invariant and \(\mathbb {R}^d\) has a countable dense subset, we see that every connected component of \((\Gamma _\omega )_R\) is bounded, almost surely. Thus, Theorem 3 implies the conclusion. \(\square \)
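
The boundedness of the connected components of \((\Gamma )_R\) can be explored on a finite sample. The sketch below assumes that \((\Gamma )_R\) denotes the open R-neighborhood of \(\Gamma \), so that the balls around two points overlap exactly when the points are closer than 2R; under that assumption the components correspond to the connected components of a graph on \(\Gamma \). The function name `clusters` and the sample points are hypothetical.

```python
import math


def clusters(points, R):
    """Group points whose open R-balls are linked by a chain of overlapping balls."""
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            # Two open balls of radius R intersect iff the centers are closer than 2R.
            if math.dist(points[i], points[j]) < 2 * R:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(points)):
        groups.setdefault(find(i), []).append(points[i])
    return sorted(groups.values())


gamma = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (10.0, 0.0)]
large = clusters(gamma, R=0.6)  # the first three balls overlap pairwise along the chain
small = clusters(gamma, R=0.4)  # no two balls overlap
```

Shrinking R splits the clusters, which mirrors the role of the scaling property in the proof: a smaller R makes the subcritical condition easier to satisfy.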

3.2 Admissible Potentials for Poisson–Anderson-Type Point Interactions

By Corollary 5, we can define the Schrödinger operator with random point interactions of Poisson–Anderson type, that is, \((\Gamma _\omega ,\alpha _\omega )\) satisfies Assumption 6. We write \(H_\omega =H_{\Gamma _\omega ,\alpha _\omega }\) for simplicity and study the spectrum of \(H_\omega \). For this purpose, we use the method of admissible potentials, which is a useful method when we determine the spectrum of the random Schrödinger operators (see e.g., [5, 18, 19, 25]).

Definition 16

Let \(\nu \) be the single-site measure in (ii) of Assumption 6.

- (i)

We say a pair \((\Gamma ,\alpha )\) belongs to \({\mathcal A}_F\) if \(\Gamma \) is a finite set in \(\mathbb {R}^d\) and \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\) with \(\alpha _\gamma \in \mathop {\mathrm{supp}}\nolimits \nu \) for every \(\gamma \in \Gamma \).

- (ii)

We say a pair \((\Gamma ,\alpha )\) belongs to \({\mathcal A}_P\) if \(\Gamma \) is expressed as

$$\begin{aligned} \Gamma =\bigcup _{k=1}^n \left( \gamma _k+ \bigoplus _{j=1}^d \mathbb {Z}e_j \right) \end{aligned}$$for some \(n=0,1,2,\ldots \), some vectors \(\gamma _1,\ldots ,\gamma _n\in \mathbb {R}^d\) and independent vectors \(e_1,\ldots ,e_d\in \mathbb {R}^d\), and \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\) is a \(\mathop {\mathrm{supp}}\nolimits \nu \)-valued periodic sequence on \(\Gamma \), i.e., \(\alpha _\gamma \in \mathop {\mathrm{supp}}\nolimits \nu \) for every \(\gamma \in \Gamma \) and \(\alpha _{\gamma +e_j}=\alpha _\gamma \) for every \(\gamma \in \Gamma \) and \(j=1,\ldots ,d\).

Notice that \((\Gamma ,\alpha )\) belongs to both \(\mathcal {A}_F\) and \(\mathcal {A}_P\) if \(\Gamma =\emptyset \).

We need a lemma about the continuous dependence of the operator domain \(D(H_{\Gamma ,\alpha })\) with respect to \((\Gamma ,\alpha )\).

Lemma 17

Let \(\Gamma =\{\gamma _j\}_{j=1}^n\) be an n-point set and \(\alpha =(\alpha _j)_{j=1}^n\) a real-valued sequence on \(\Gamma \), where we denote \(\alpha _{\gamma _j}\) by \(\alpha _j\). Let \(\delta =\mathop {\mathrm{min}}\nolimits _{j\not =k}|\gamma _j-\gamma _k|\). Let \(\epsilon >0\), \(E\in \mathbb {R}\), and U be a bounded open set. Suppose that there exists \(u_\epsilon \in D(H_{\Gamma ,\alpha })\) such that \(\Vert u_\epsilon \Vert =1\), \(\mathop {\mathrm{supp}}\nolimits u_\epsilon \subset U\), and \(\Vert (H_{\Gamma ,\alpha }-E)u_\epsilon \Vert \le \epsilon \). Then, the following holds.

- (i)

There exists \(\epsilon '>0\) with the following property: for any \(\widetilde{\Gamma }=\{\widetilde{\gamma }_j\}_{j=1}^n\) with \(|\gamma _j-\widetilde{\gamma }_j|\le \epsilon '\), there exists \(v_\epsilon \in D(H_{\widetilde{\Gamma },\alpha })\) such that \(\Vert v_\epsilon \Vert =1\), \(\mathop {\mathrm{supp}}\nolimits v_\epsilon \subset U\), and \(\Vert (H_{\widetilde{\Gamma },\alpha }-E)v_\epsilon \Vert \le 2\epsilon \).

- (ii)

There exists \(\epsilon ''>0\) with the following property: for any \(\widetilde{\alpha }=(\widetilde{\alpha }_j)_{j=1}^n\) with \(|\alpha _j-\widetilde{\alpha }_j|\le \epsilon ''\), there exists \(v_\epsilon \in D(H_{\Gamma ,\widetilde{\alpha }})\) such that \(\Vert v_\epsilon \Vert =1\), \(\mathop {\mathrm{supp}}\nolimits v_\epsilon \subset U\), and \(\Vert (H_{\Gamma ,\widetilde{\alpha }}-E)v_\epsilon \Vert \le 2\epsilon \). Moreover, \(\epsilon ''\) can be taken uniformly with respect to \(\Gamma \) as long as \(\delta =\delta (\Gamma )\) is bounded uniformly from below.

Proof

(i) Let \(\eta \in C_0^\infty (\mathbb {R}^d)\) such that \(0\le \eta \le 1\), \(\eta (x)=1\) for \(|x|\le \delta /4\), and \(\eta (x)=0\) for \(|x|\ge \delta /3\). Let \(\widetilde{\Gamma }=\{\widetilde{\gamma }_j\}_{j=1}^n\) with \(|\gamma _j-\widetilde{\gamma }_j|\le \epsilon '\) for sufficiently small \(\epsilon '\) (specified later). Consider the map

By definition, \(\Phi \) is a \(C^\infty \) map from \(\mathbb {R}^d\) to itself, \(\Phi (\gamma _j)=\widetilde{\gamma }_j\), and

for some positive constant C. Thus, by Hadamard’s global inverse function theorem, \(\Phi \) is a diffeomorphism from \(\mathbb {R}^d\) to itself, for sufficiently small \(\epsilon '\).

Put \(w_\epsilon := u_\epsilon \circ \Phi ^{-1}\). We can easily check \(w_\epsilon \in D(H_{\widetilde{\Gamma },\alpha })\), since \(\Phi (x)=x+\widetilde{\gamma }_j-\gamma _j\) for \(x \in B_{\delta /4}(\gamma _j)\). We use the coordinate change \(x=\Phi (y)\) or \(y=\Phi ^{-1}(x)\). By (17) and the inverse function theorem, we have estimates

as \(\epsilon '\rightarrow 0\), where \(\delta _{jk}\) is Kronecker’s delta, and \({\partial x}/{\partial y}=(\partial x_j/\partial y_k)_{jk}\) is the Jacobian matrix. The remainder terms are uniform with respect to x (or y), and are equal to 0 for \(x \not \in \bigcup _j \bigl (B_{\delta /3}(\gamma _j){\setminus } B_{\delta /4}(\gamma _j)\bigr )\). Thus, we have by (18)

Next, by the chain rule

Thus, we have by (18)

By the elliptic inner regularity estimate (Corollary 25)

where C is a positive constant independent of \(u_\epsilon \). Taking \(\epsilon '\) sufficiently small and putting \(v_\epsilon = w_\epsilon /\Vert w_\epsilon \Vert \), we conclude \(v_\epsilon \) has the desired property.

(ii) We give the proof only in the case \(d=2\) (the cases \(d=1,3\) can be treated similarly). Let \(\phi _j=\phi _{\gamma _j}\) and \(\psi _j=\psi _{\gamma _j}\) be the functions introduced in the proof of Proposition 10. Then, the function \(u_\epsilon \) can be uniquely expressed as

where \(C_j\) is a constant and \(\widetilde{u}_\epsilon \in H^2(\mathbb {R}^2)\) such that \(\widetilde{u}_\epsilon (\gamma _j)=0\) for every j.

Suppose \(|\widetilde{\alpha }_j-\alpha _j|\le \epsilon ''\) for sufficiently small \(\epsilon ''\), and put

and \(v_\epsilon =w_\epsilon /\Vert w_\epsilon \Vert \). Then, we can prove that \(v_\epsilon \) has the desired property. \(\square \)

Proposition 18

Let \(d=1,2,3\), and \(\Gamma _\omega \) and \(\alpha _\omega \) satisfy Assumption 6. Then, for \(H_\omega =H_{\Gamma _\omega ,\alpha _\omega }\),
$$\begin{aligned} \sigma (H_\omega )=\overline{\bigcup _{(\Gamma ,\alpha )\in \mathcal {A}_F}\sigma (H_{\Gamma ,\alpha })} =\overline{\bigcup _{(\Gamma ,\alpha )\in \mathcal {A}_P}\sigma (H_{\Gamma ,\alpha })} \end{aligned}\tag{19}$$

holds almost surely.

Proof

First, let
$$\begin{aligned} \Sigma :=\overline{\bigcup _{(\Gamma ,\alpha )\in \mathcal {A}_F}\sigma (H_{\Gamma ,\alpha })} \end{aligned}$$

and prove \(\sigma (H_\omega )=\Sigma \) holds almost surely.

Recall that \(\Gamma _\omega \) is a locally finite subset satisfying Assumption 2 (so \(H_\omega \) is self-adjoint), almost surely. For such \(\omega \), let \(E\in \sigma (H_\omega )\). Then, by Proposition 12, for any \(\epsilon >0\), there exists \(u_\epsilon \in D(H_\omega )\) such that \(\mathop {\mathrm{supp}}\nolimits u_\epsilon \) is bounded, \(\Vert u_\epsilon \Vert =1\), and \(\Vert (H_\omega -E)u_\epsilon \Vert \le \epsilon \). Let \(\widetilde{\Gamma }=\Gamma _\omega \cap \mathop {\mathrm{supp}}\nolimits u_\epsilon \) and \(\widetilde{\alpha }=(\alpha _{\omega ,\gamma })_{\gamma \in \widetilde{\Gamma }}\). Then, \((\widetilde{\Gamma },\widetilde{\alpha })\in \mathcal {A}_F\), \(u_\epsilon \in D(H_{\widetilde{\Gamma },\widetilde{\alpha }})\) and \(\Vert (H_{\widetilde{\Gamma },\widetilde{\alpha }}-E)u_\epsilon \Vert \le \epsilon \). This implies \(\mathop {\mathrm{dist}}\nolimits (E,\Sigma )\le \epsilon \) for any \(\epsilon >0\), so \(E\in \Sigma \). Thus, we conclude \(\sigma (H_\omega )\subset \Sigma \) almost surely.

Conversely, let \(E\in \sigma (H_{\Gamma ,\alpha })\) for some \((\Gamma ,\alpha )\in \mathcal {A}_F\). Then, for any \(\epsilon >0\), there exists \(u_\epsilon \in D(H_{\Gamma ,\alpha })\) such that \(\mathop {\mathrm{supp}}\nolimits u_\epsilon \) is contained in some bounded open set U, \(\Vert u_\epsilon \Vert =1\), and \(\Vert (H_{\Gamma ,\alpha }-E)u_\epsilon \Vert \le \epsilon \). We write \(\widetilde{\Gamma }:=\Gamma \cap U=\{\gamma _j\}_{j=1}^n\) and \(\alpha _j=\alpha _{\gamma _j}\). By the ergodicity of \((\Gamma _\omega ,\alpha _\omega )\), for any \(\epsilon ', \epsilon ''>0\) we can almost surely find \(y\in \mathbb {R}^d\) such that \(\Gamma _{\epsilon '} := \Gamma _\omega \cap (y+U)=\{\gamma _j'\}_{j=1}^n\), \(\gamma _j'=\gamma _j+ y+\epsilon _j'\) with \(|\epsilon _j'|\le \epsilon '\), and \(\alpha _{\omega ,\gamma _j'}=\alpha _j+ \epsilon _j''\) with \(|\epsilon _j''|\le \epsilon ''\). Taking \(\epsilon '\) and \(\epsilon ''\) sufficiently small and applying Lemma 17, we can almost surely find \(v_\epsilon \in D(H_\omega )\) such that \(\mathop {\mathrm{supp}}\nolimits v_\epsilon \) is bounded, \(\Vert v_\epsilon \Vert =1\), and \(\Vert (H_\omega -E)v_\epsilon \Vert \le 4\epsilon \). Then, we have \(\mathop {\mathrm{dist}}\nolimits (\sigma (H_\omega ),E)\le 4\epsilon \) for any \(\epsilon >0\), so \(E\in \sigma (H_\omega )\) almost surely. Thus, \(\Sigma \subset \sigma (H_\omega )\), and the first equality in (19) holds.

The proof of the second equality in (19) is similar; we have only to replace \(\mathcal {A}_F\) by \(\mathcal {A}_P\), and \((\widetilde{\Gamma },\widetilde{\alpha })\) in the first part of the proof by its periodic extension.

\(\square \)

3.3 Calculation of the Spectrum

By Proposition 18, the proof of Theorem 7 is reduced to the calculation of the spectrum of \(H_{\Gamma ,\alpha }\) for \((\Gamma ,\alpha )\in \mathcal {A}_F\) or \(\mathcal {A}_P\).

First, we consider the case where \(d=1\) and the interactions are nonnegative.

Lemma 19

Let \(d=1\). Let \(\Gamma \) be a finite set and \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\) with \(\alpha _\gamma \ge 0\) for every \(\gamma \in \Gamma \). Then, \(\sigma (H_{\Gamma ,\alpha })= [0,\infty )\).

Proof

Under the assumption of the lemma, we have

for any \(u\in D(H_{\Gamma ,\alpha })\). Thus, \(\sigma (H_{\Gamma ,\alpha })\subset [0,\infty )\). The reverse inclusion \(\sigma (H_{\Gamma ,\alpha })\supset [0,\infty )\) follows from [2, Theorem II-2.1.3]. \(\square \)

Lemma 19 seems obvious, but the same statement does not hold when \(d=2,3\), since the point interaction is always negative in that case, as stated in the introduction.

Next, we consider the other cases. In the following lemmas, the sequence \(\alpha =(\alpha _\gamma )_{\gamma \in \Gamma }\) is assumed to be a constant sequence, that is, all the coupling constants \(\alpha _\gamma \) are the same. We denote the common coupling constant \(\alpha _\gamma \) also by \(\alpha \), by abuse of notation.

Lemma 20

Let \(d=1\), and \(x_1,\ldots ,x_N\) be N distinct points in \(\mathbb {R}\) with \(2\le N<\infty \). For \(L>0\), put \(\Gamma _{N,L}=\{L x_j\}_{j=1}^N\). Let \(\alpha \) be a constant sequence on \(\Gamma _{N,L}\) with common coupling constant \(\alpha <0\). Then, the following holds.

- (i)

Let \(N=2\) and \(|x_1-x_2|=1\). Then, \(H_{\Gamma _{2,L},\alpha }\) has only one negative eigenvalue \(E_1(L)\) for \(L \le -2/\alpha \), and two negative eigenvalues \(E_1(L)\) and \(E_2(L)\) (\(E_1(L)<E_2(L)\)) for \(L>-2/\alpha \). The function \(E_1(L)\) (resp. \(E_2(L)\)) is real analytic and monotone increasing (resp. decreasing) with respect to \(L\in (0,\infty )\) (resp. \(L\in (-2/\alpha ,\infty )\)), and

$$\begin{aligned} \lim _{L\rightarrow +0} E_1(L)=-\alpha ^2,\quad \lim _{L\rightarrow \infty } E_1(L)=-\frac{\alpha ^2}{4},\\ \lim _{L\rightarrow -2/\alpha +0} E_2(L)=0,\quad \lim _{L\rightarrow \infty } E_2(L)=-\frac{\alpha ^2}{4}. \end{aligned}$$

- (ii)

Let \(N\ge 3\). Then, the operator \(H_{\Gamma _{N,L},\alpha }\) has at least one negative eigenvalue for any \(L>0\). The smallest eigenvalue \(E_1(L)\) is simple, real analytic and monotone increasing with respect to \(L\in (0,\infty )\), and

$$\begin{aligned} \lim _{L\rightarrow +0} E_1(L)=-\frac{(N\alpha )^2}{4},\quad \lim _{L\rightarrow \infty } E_1(L)=-\frac{\alpha ^2}{4}. \end{aligned}$$

Proof

According to [2, Theorem II-2.1.3], \(H_{\Gamma _{N,L},\alpha }\) has a negative eigenvalue \(E=-s^2\) (\(s>0\)) if and only if \(\det M=0\), where \(M=(M_{jk})\) is the \(N\times N\) matrix given by
$$\begin{aligned} M_{jk}=-\frac{1}{\alpha }\delta _{jk}-\frac{1}{2s}\mathrm{e}^{-sL|x_j-x_k|}. \end{aligned}$$

Let \(\widetilde{M}=(\widetilde{M}_{jk})\) be the \(N\times N\)-matrix given by \(\widetilde{M}_{jk}=\mathrm{e}^{-sL|x_j-x_k|}\). Then, since \(M= -(2s)^{-1}(2s/\alpha \cdot I +\widetilde{M})\) (I is the identity matrix),
$$\begin{aligned} \det M=0\quad \Longleftrightarrow \quad -\frac{2s}{\alpha }\ \text {is an eigenvalue of}\ \widetilde{M}. \end{aligned}$$

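(i) Let \(N=2\) and \(|x_1-x_2|=1\). Then, the eigenvalues of \(\widetilde{M}\) are \(1\pm \mathrm{e}^{-sL}\). Thus, \(E=-s^2\) \((s>0)\) is an eigenvalue of \(H_{\Gamma _{2,L},\alpha }\) if and only if s satisfies one of the two equations

$$\begin{aligned} 1+\mathrm{e}^{-sL}+\frac{2s}{\alpha }=0,\qquad 1-\mathrm{e}^{-sL}+\frac{2s}{\alpha }=0. \end{aligned}\tag{20}$$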

The statements can be understood graphically by the graphs of both sides of (20) (see Figs. 3 and 4). Below we give a rigorous proof of the statements.

Fig. 3 Graphs of both sides of (20) for \(\alpha =-1\) and \(L=2^n\) (\(n=-4,\ldots ,1\))

Fig. 4 Graphs of both sides of (20) for \(\alpha =-1\) and \(L=2^n\) (\(n=2,\ldots ,4\))

In order to study the first equation in (20), put \(f(s,L)=1+\mathrm{e}^{-sL}+2s/\alpha \). Then,
$$\begin{aligned} f(0,L)=2>0,\quad \lim _{s\rightarrow \infty }f(s,L)=-\infty ,\quad \frac{\partial f}{\partial s}(s,L)=-L\mathrm{e}^{-sL}+\frac{2}{\alpha }<0, \end{aligned}$$

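since \(\alpha <0\). Thus, the equation \(f(s,L)=0\) has a unique positive solution \(s=s_1(L)\), which is analytic with respect to L. Moreover, by the implicit function theorem,

$$\begin{aligned} \frac{\mathrm{d}s_1}{\mathrm{d}L}=-\frac{\partial f/\partial L}{\partial f/\partial s}\bigg |_{s=s_1(L)} =\frac{s\,\mathrm{e}^{-sL}}{-L\mathrm{e}^{-sL}+2/\alpha }\bigg |_{s=s_1(L)}<0. \end{aligned}$$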

Thus, \(s_1(L)\) is monotone decreasing, and \(E_1(L)=-s_1(L)^2\) is monotone increasing. The limit of \(s_1(L)\) as \(L\rightarrow +0\) (resp. \(L\rightarrow \infty \)) can be obtained by solving the limit equation \(f(s,0)=2+2s/\alpha =0\) (resp. \(f(s,\infty )=1+2s/\alpha =0\)), that is,
$$\begin{aligned} \lim _{L\rightarrow +0}s_1(L)=-\alpha ,\quad \lim _{L\rightarrow \infty }s_1(L)=-\frac{\alpha }{2}. \end{aligned}$$

Thus, we obtain the corresponding limit values for \(E_1(L)=-s_1(L)^2\).

Next, we study the second equation in (20). Put \(g(s,L)=1-\mathrm{e}^{-sL}+2s/\alpha \). Then,
$$\begin{aligned} g(0,L)=0,\quad \lim _{s\rightarrow \infty }g(s,L)=-\infty ,\quad \frac{\partial g}{\partial s}(s,L)=L\mathrm{e}^{-sL}+\frac{2}{\alpha }. \end{aligned}$$

Thus, the number of positive solutions of \(g(s,L)=0\) depends on L and \(\alpha \). If \(L\le -2/\alpha \), then \(\partial g/\partial s<0\) for every \(s>0\), and since \(g(0,L)=0\), no positive solution exists. If \(L> -2/\alpha \), then g(s, L) is monotone increasing for \(0<s<s_m\) for some \(s_m>0\), attains a positive maximum at \(s=s_m\), and is monotone decreasing for \(s>s_m\). Thus, there exists a unique positive solution \(s=s_2(L)\), which is analytic with respect to L, and \(E_2(L)=-s_2(L)^2\) is a negative eigenvalue. Since \(f(s,L)>g(s,L)\) and \(\partial f/\partial s<0\), we have \(s_1(L)>s_2(L)\) and \(E_1(L)<E_2(L)\). Moreover, by the implicit function theorem,

$$\begin{aligned} \frac{\mathrm{d}s_2}{\mathrm{d}L}=-\frac{\partial g/\partial L}{\partial g/\partial s}\bigg |_{s=s_2(L)} =-\frac{s\,\mathrm{e}^{-sL}}{L\mathrm{e}^{-sL}+2/\alpha }\bigg |_{s=s_2(L)}>0, \end{aligned}$$

since \(\partial g/\partial s|_{s=s_2(L)}<0\). Thus, \(s_2(L)\) is monotone increasing, and \(E_2(L)\) is monotone decreasing. In order to obtain the limit of \(s_2(L)\) as \(L\rightarrow -2/\alpha +0\), consider the inequality for positive s and L

The equation \(s\left( L+{2}/{\alpha }\right) -{s^2L^2}/{2}=0\) has a unique positive solution \(s=s_3(L)=2(L+2/\alpha )/L^2\), which tends to 0 as \(L\rightarrow -2/\alpha +0\). Since \(0<s_2(L)<s_3(L)\), we have

$$\begin{aligned} \lim _{L\rightarrow -2/\alpha +0}s_2(L)=0. \end{aligned}$$

The limit of \(s_2(L)\) as \(L\rightarrow \infty \) can be obtained by solving the limit equation \(g(s,\infty )=1+2s/\alpha =0\), that is,
$$\begin{aligned} \lim _{L\rightarrow \infty }s_2(L)=-\frac{\alpha }{2}. \end{aligned}$$

Thus, we obtain the corresponding limit values for \(E_2(L)=-s_2(L)^2\).
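
The statements of part (i) can be checked numerically. The following Python sketch solves \(f(s,L)=0\) and \(g(s,L)=0\) by bisection, using only the functions \(f(s,L)=1+\mathrm{e}^{-sL}+2s/\alpha \) and \(g(s,L)=1-\mathrm{e}^{-sL}+2s/\alpha \) from the proof. The helper names `bisect_root` and `negative_eigenvalues`, the bracketing intervals, and the sample values of \(\alpha \) and L are illustrative choices, not part of the paper.

```python
import math


def bisect_root(h, lo, hi, steps=200):
    """Bisection assuming h > 0 immediately to the right of lo and h < 0 at hi."""
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if h(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def negative_eigenvalues(alpha, L):
    """Negative eigenvalues for two points at distance L with common coupling alpha < 0."""
    f = lambda s: 1 + math.exp(-s * L) + 2 * s / alpha
    g = lambda s: 1 - math.exp(-s * L) + 2 * s / alpha
    # f(0) = 2 > 0 and f(-alpha) = exp(alpha * L) - 1 < 0, so s_1 lies in (0, -alpha).
    s1 = bisect_root(f, 0.0, -alpha)
    energies = [-s1 ** 2]
    # A second root exists only for L > -2/alpha; then g > 0 on (0, s_2)
    # and g(-alpha/2) = -exp(alpha * L / 2) < 0.
    if L > -2 / alpha:
        s2 = bisect_root(g, 0.0, -alpha / 2)
        energies.append(-s2 ** 2)
    return energies


below = negative_eigenvalues(-1.0, 1.0)      # L <= -2/alpha: one eigenvalue
above = negative_eigenvalues(-1.0, 4.0)      # L >  -2/alpha: two eigenvalues
near_zero = negative_eigenvalues(-1.0, 1e-6)
far_apart = negative_eigenvalues(-1.0, 100.0)
```

For \(\alpha =-1\) the threshold is \(L=2\); the computed values approach \(-\alpha ^2\) for small L and \(-\alpha ^2/4\) for large L, in agreement with the limits stated in Lemma 20 (i).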

(ii) Let \(N\ge 3\). Let \(\mu _1(s,L)\) be the largest eigenvalue of \(\widetilde{M}\). The matrix \(\widetilde{M}\) is a symmetric matrix with positive components, depending only on the product sL. Then, by the Perron–Frobenius theorem, we conclude the eigenvalue \(\mu _1(s,L)\) is simple and positive, and there is a unique normalized eigenvector \(v(s,L)\in \mathbb {R}^N\) with only positive components. Since the components of \(\widetilde{M}\) are real analytic with respect to sL, \(\mu _1(s,L)\) and v(s, L) are also real analytic with respect to sL. Since v has only positive components, we have by the Feynman–Hellmann theorem

where \({}^t v\) is the transpose of a column vector v. Moreover, the asymptotic form of \(\widetilde{M}\) is given by

where \(\delta _{jk}\) is the Kronecker delta. Thus, we conclude

In Figs. 5 and 6, we give the graphs of \(-\alpha s /2\) and eigenvalues of \(\widetilde{M}\) for \(N=4\), \(x_j=j\) (\(j=1,\ldots ,4\)), \(\alpha =-1\) and \(L=1/16,4\).

By the above properties and \(\alpha <0\), we conclude that there exists a unique positive solution \(s=s_1(L)\) of the equation \(-2s/\alpha =\mu _1(s,L)\), and the function \(s_1(L)\) is real analytic and strictly monotone decreasing on \((0,\infty )\), similarly as in the case (i). Moreover, by solving the limit equations \(-2s/\alpha =\mu _1(s,0)=N\) and \(-2s/\alpha =\mu _1(s,\infty )=1\), we conclude
$$\begin{aligned} \lim _{L\rightarrow +0}s_1(L)=-\frac{N\alpha }{2},\quad \lim _{L\rightarrow \infty }s_1(L)=-\frac{\alpha }{2}. \end{aligned}$$

Since \(E_1(L)=-s_1(L)^2\), the statement holds. \(\square \)
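
The argument for part (ii) can also be illustrated numerically. The sketch below approximates the largest eigenvalue \(\mu _1(s,L)\) of \(\widetilde{M}\) by power iteration and then solves \(-2s/\alpha =\mu _1(s,L)\) by bisection. Power iteration is a stand-in chosen here for simplicity (it converges to the Perron eigenvalue of a positive matrix); the paper itself uses no numerical method. The function names, iteration counts, and sample points are hypothetical.

```python
import math


def largest_eigenvalue(matrix, iterations=200):
    """Approximate the Perron eigenvalue of a symmetric positive matrix by power iteration."""
    n = len(matrix)
    v = [1.0 / math.sqrt(n)] * n
    mu = 0.0
    for _ in range(iterations):
        w = [sum(matrix[j][k] * v[k] for k in range(n)) for j in range(n)]
        mu = sum(w[j] * v[j] for j in range(n))  # Rayleigh quotient, since |v| = 1
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return mu


def ground_state_energy(points, alpha, L, steps=60):
    """Smallest eigenvalue E_1(L) = -s_1(L)^2 for the points L * x_j and coupling alpha < 0."""
    n = len(points)

    def h(s):
        m = [[math.exp(-s * L * abs(xj - xk)) for xk in points] for xj in points]
        return largest_eigenvalue(m) + 2 * s / alpha

    # h(0) = N > 0 and h(-N * alpha / 2) < 0 because mu_1 < N for s > 0.
    lo, hi = 0.0, -n * alpha / 2
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if h(mid) > 0:
            lo = mid
        else:
            hi = mid
    s1 = 0.5 * (lo + hi)
    return -s1 ** 2


xs = [1.0, 2.0, 3.0, 4.0]
e_small = ground_state_energy(xs, -1.0, 1e-4)
e_mid_a = ground_state_energy(xs, -1.0, 0.5)
e_mid_b = ground_state_energy(xs, -1.0, 2.0)
e_large = ground_state_energy(xs, -1.0, 50.0)
```

With \(N=4\) and \(\alpha =-1\), the computed \(E_1(L)\) increases with L from about \(-(N\alpha )^2/4=-4\) toward \(-\alpha ^2/4=-1/4\), matching the limits in Lemma 20 (ii).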

Lemma 21

Let \(d=2\). For \(L>0\), let \(\Gamma _L=\{\gamma _1, \gamma _2\}\) with \(|\gamma _1-\gamma _2|=L\). Let \(\alpha \) be a constant sequence on \(\Gamma _L\) with common coupling constant \(\alpha \in \mathbb {R}\). Then, \(H_{\Gamma _L,\alpha }\) has only one negative eigenvalue \(E_1(L)\) for \(L\le \mathrm{e}^{2\pi \alpha }\), and two negative eigenvalues \(E_1(L)\) and \(E_2(L)\) (\(E_1(L)<E_2(L)\)) for \(L> \mathrm{e}^{2\pi \alpha }\). The function \(E_1(L)\) (resp. \(E_2(L)\)) is real analytic, monotone increasing (resp. decreasing) with respect to \(L\in (0,\infty )\) (resp. \(L\in (\mathrm{e}^{2\pi \alpha },\infty )\)), and

where \(\gamma _E\) is the Euler constant.

Proof

By [2, Theorem II-4.2], \(H_{\Gamma _L,\alpha }\) has a negative eigenvalue \(E=-s^2\)\((s>0)\) if and only if \(\det M=0\), where \(M=(M_{jk})\) is a \(2\times 2\)-matrix given by

Here, \(H_0^{(1)}\) is the 0-th order Hankel function of the first kind. By [1, 9.6.4], we have

where \(K_\nu (z)\) is the \(\nu \)-th order modified Bessel function of the second kind. Thus, \(\det M=0\) if and only if one of the following two equations holds.

Let us review formulas for the modified Bessel functions [1, 9.6.23,9.6.27,9.6.13, 9.7.2].

By (23)–(26), we see that \(K_\nu (z)>0\) for \(z>0\) and \(\nu > -1/2\), and

The graphs of \(y=f(s,L)\) are given as curves below the dashed curve in Figs. 7 and 8. Here, the dashed curve is the limit curve \(y=2\pi \alpha +\gamma _E+\log s/2\).

As in the proof of Lemma 20, by using these properties, we conclude that Eq. (21) has a unique positive solution \(s=s_1(L)\) for any \(L>0\), \(s_1(L)\) is real analytic and monotone decreasing, and

The graphs of \(y=g(s,L)\) are given as curves above the dashed curve in Figs. 7 and 8. By these properties, we conclude Eq. (22) has no positive solution for \( L\le \mathrm{e}^{2\pi \alpha }\), has a unique positive solution \(s=s_2(L)\) for \(L>\mathrm{e}^{2\pi \alpha }\), \(s_2(L)\) is real analytic and monotone increasing, and

Since \(E_1(L)=-s_1(L)^2\) and \(E_2(L)=-s_2(L)^2\), the statements hold. \(\square \)

Lemma 22

Let \(d=3\). For \(L>0\), let \(\Gamma _L=\{\gamma _1,\gamma _2\}\) with \(|\gamma _1-\gamma _2|=L\). Let \(\alpha \) be a constant sequence on \(\Gamma _L\) with common coupling constant \(\alpha \in \mathbb {R}\). Then, the following holds.

- (i)

Assume \(\alpha \ge 0\). Then, \(H_{\Gamma _L,\alpha }\) has no negative eigenvalue for \(L \ge 1/(4\pi \alpha )\) and has one negative eigenvalue \(E_1(L)\) for \(0<L < 1/(4\pi \alpha )\) (when \(\alpha =0\), we interpret \(1/(4\pi \alpha )=\infty \) and the first case does not occur). The function \(E_1(L)\) is real analytic, monotone increasing with respect to \(L\in (0,1/(4\pi \alpha ))\), and

$$\begin{aligned} \lim _{L\rightarrow +0}E_1(L)=-\infty ,\quad \lim _{L\rightarrow 1/(4\pi \alpha )-0}E_1(L)=0. \end{aligned}$$

- (ii)

Assume \(\alpha <0\). Then, \(H_{\Gamma _L,\alpha }\) has one negative eigenvalue \(E_1(L)\) for \(L\le 1/(-4\pi \alpha )\) and two negative eigenvalues \(E_1(L)\) and \(E_2(L)\) (\(E_1(L)<E_2(L)\)) for \(L> 1/(-4\pi \alpha )\). The function \(E_1(L)\) (resp. \(E_2(L)\)) is real analytic, monotone increasing (resp. decreasing) with respect to \(L\in (0,\infty )\) (resp. \(L\in (1/(-4\pi \alpha ),\infty )\)), and

$$\begin{aligned} \lim _{L\rightarrow +0}E_1(L)=-\infty ,\quad \lim _{L\rightarrow \infty }E_1(L)=-(4\pi \alpha )^2,\\ \lim _{L\rightarrow 1/(-4\pi \alpha )+0}E_2(L)=0,\quad \lim _{L\rightarrow \infty }E_2(L)=-(4\pi \alpha )^2. \end{aligned}$$

Proof

By [2, Theorem II-1.1.4], \(H_{\Gamma _L,\alpha }\) has a negative eigenvalue \(E=-s^2\)\((s>0)\) if and only if \(\det M=0\), where \(M=(M_{jk})\) is a \(2\times 2\)-matrix given by

So, \(\det M=0\) if and only if one of the following equations holds.

The graphs of both sides of (27) and (28) are given in Figs. 9 and 10.

The functions

satisfy the following properties.

By using these properties, we conclude the following, similarly as in the proof of Lemma 20.

- (i)

For \(\alpha \ge 0\), Eq. (27) has no positive solution for \(L \ge 1/(4\pi \alpha )\) and has one positive solution \(s=s_1(L)\) for \(0<L<1/(4\pi \alpha )\). Moreover, \(s_1(L)\) is real analytic and monotone decreasing, and \(\displaystyle \lim \nolimits _{L\rightarrow +0}s_1(L)=\infty \), \(\displaystyle \lim \nolimits _{L\rightarrow 1/(4\pi \alpha )-0}s_1(L)=0\). Equation (28) has no positive solution.

- (ii)

For \(\alpha <0\), Eq. (27) has one positive solution \(s=s_1(L)\) for any \(L>0\), \(s_1(L)\) is real analytic and monotone decreasing, and \(\displaystyle \lim \nolimits _{L\rightarrow +0}s_1(L)=\infty \), \(\displaystyle \lim \nolimits _{L\rightarrow \infty }s_1(L)=-4\pi \alpha \). Equation (28) has no positive solution for \(L\le 1/(-4\pi \alpha )\), has one positive solution \(s=s_2(L)\) for \(L> 1/(-4\pi \alpha )\), \(s_2(L)\) is real analytic and monotone increasing, and \(\displaystyle \lim \nolimits _{L\rightarrow 1/(-4\pi \alpha )+0}s_2(L)=0\), \(\displaystyle \lim \nolimits _{L\rightarrow \infty }s_2(L)=-4\pi \alpha \).

These facts and \(E_1(L)=-s_1(L)^2\), \(E_2(L)=-s_2(L)^2\) imply the statements.

\(\square \)
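
The case distinction in Lemma 22 can be explored numerically. The displayed forms of Eqs. (27) and (28) are not reproduced in this text, so the sketch below rests on an assumption: it takes the two conditions to be \(4\pi \alpha +s=\mathrm{e}^{-sL}/L\) and \(4\pi \alpha +s=-\mathrm{e}^{-sL}/L\), which is the usual two-center condition in three dimensions and is consistent with the thresholds and limits stated in the lemma. The function names and parameter values are hypothetical.

```python
import math


def bisect_root(h, lo, hi, steps=200):
    """Bisection assuming h > 0 at lo and h < 0 at hi, with a single sign change."""
    for _ in range(steps):
        mid = 0.5 * (lo + hi)
        if h(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def negative_eigenvalues_3d(alpha, L):
    """Negative eigenvalues for two centers at distance L (assumed form of the conditions)."""
    a = 4 * math.pi * alpha
    p = lambda s: math.exp(-s * L) / L - s - a    # assumed first condition, p(s) = 0
    q = lambda s: -math.exp(-s * L) / L - s - a   # assumed second condition, q(s) = 0
    energies = []
    if p(0.0) > 0:
        # p is decreasing and p(s) <= 1/L - s - a, so p < 0 at s = 1/L - a + 1.
        s1 = bisect_root(p, 0.0, 1.0 / L - a + 1.0)
        energies.append(-s1 ** 2)
    if q(0.0) > 0:
        # q is decreasing for s > 0 and q(-a) = -exp(a * L) / L < 0.
        s2 = bisect_root(q, 0.0, -a)
        energies.append(-s2 ** 2)
    return energies


repulsive = 1.0 / (4 * math.pi)     # alpha > 0, threshold L = 1
attractive = -1.0 / (4 * math.pi)   # alpha < 0, threshold L = 1
none_found = negative_eigenvalues_3d(repulsive, 2.0)
one_found = negative_eigenvalues_3d(repulsive, 0.5)
one_attr = negative_eigenvalues_3d(attractive, 0.5)
two_attr = negative_eigenvalues_3d(attractive, 2.0)
far_attr = negative_eigenvalues_3d(attractive, 50.0)
```

Under the stated assumption, the number of roots changes exactly at \(L=1/(4\pi |\alpha |)\), and for \(\alpha <0\) both values approach \(-(4\pi \alpha )^2\) as L grows, as in parts (i) and (ii) of the lemma.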

Proof of Theorem 7

Put
$$\begin{aligned} \Sigma :=\overline{\bigcup _{(\Gamma ,\alpha )\in \mathcal {A}_F}\sigma (H_{\Gamma ,\alpha })}. \end{aligned}$$

By Proposition 18, we have \(\sigma (H_\omega ) = \Sigma \) almost surely.

First, consider the case \(d=1\) and \(\mathop {\mathrm{supp}}\nolimits \nu \subset [0,\infty )\). Then, for any \((\Gamma ,\alpha )\in \mathcal {A}_F\), we have \(\sigma (H_{\Gamma ,\alpha })=[0,\infty )\) by Lemma 19. So \(\Sigma =[0,\infty )\).

In all other cases, we have to prove \(\Sigma =\mathbb {R}\). Since \(\sigma (H_{\Gamma ,\alpha }) = [0,\infty )\) for \(\Gamma =\emptyset \), we have only to prove \((-\infty ,0)\subset \Sigma \).

Consider the case \(d=1\) and \(\mathop {\mathrm{supp}}\nolimits \nu \cap (-\infty ,0)\not =\emptyset \). Let \(\Gamma _{N,L}\) be as given in Lemma 20, and let \(\alpha \) be a constant sequence on \(\Gamma _{N,L}\) with common coupling constant \(\alpha \in \mathop {\mathrm{supp}}\nolimits \nu \cap (-\infty ,0)\). Then, \((\Gamma _{N,L},\alpha )\in \mathcal {A}_F\) for any \(N\ge 2\) and \(L>0\), so

By Lemma 20, the right hand side contains \((-\infty ,0)\). When \(d=2,3\), the statement can be proved similarly by using Lemmas 21 and 22. \(\square \)

In the case where \(d=1\) and \(\mathop {\mathrm{supp}}\nolimits \nu \) has a negative part, there is another simple proof using the spectrum of the Kronig–Penney model (see [2, 21]).

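Another proof of Theorem 7 (i)

Put

$$\begin{aligned} \Sigma :=\overline{\bigcup _{(\Gamma ,\alpha )\in \mathcal {A}_P}\sigma (H_{\Gamma ,\alpha })}. \end{aligned}$$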

By Proposition 18, we have \(\sigma (H_\omega ) = \Sigma \) almost surely.

Assume \(d=1\) and \((-\infty ,0)\cap \mathop {\mathrm{supp}}\nolimits \nu \not =\emptyset \). It is sufficient to show \(\Sigma \supset (-\infty ,0)\). For \(L>0\), let \(\Gamma _L=L\mathbb {Z}\), and \(\alpha \) be a constant sequence on \(\Gamma _L\) with common coupling constant \(\alpha \in \mathop {\mathrm{supp}}\nolimits \nu \cap (-\infty ,0)\). Then, \((\Gamma _L,\alpha )\in \mathcal {A}_P\). By [2, Theorem III.2.3.1], the spectrum of \(H_{\Gamma _L,\alpha }\) is given by

Put \(k=is\) for \(s>0\). Then, \(E=-s^2\in \sigma (H_{\Gamma _L,\alpha })\) if and only if

Take arbitrary \(s_0>0\), and let \(s\in (0,s_0]\). Consider the Taylor expansion with respect to L

The remainder term is uniform with respect to \(s\in (0,s_0]\). Since \(\alpha <0\), (30) implies (29) holds for sufficiently small L uniformly with respect to \(s\in (0,s_0]\) (see also Fig. 11). Thus, \([-s_0^2,0)\subset \sigma (H_{\Gamma _L,\alpha })\) for sufficiently small L, so \((-\infty ,0)\subset \Sigma \).

\(\square \)
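
The smallness argument in this proof can be checked numerically. The displayed condition (29) is not reproduced in this text, so the sketch below assumes the standard Kronig–Penney band condition with \(k=is\), namely \(|\cosh (sL)+(\alpha /(2s))\sinh (sL)|\le 1\), whose expansion in L is \(1+\alpha L/2+O(L^2)\). The function name `in_negative_band` and the sample values are hypothetical.

```python
import math


def in_negative_band(energy, alpha, L):
    """Assumed band condition for E = -s^2 < 0 on the lattice L * Z with coupling alpha."""
    s = math.sqrt(-energy)
    return abs(math.cosh(s * L) + alpha / (2 * s) * math.sinh(s * L)) <= 1.0


alpha = -1.0
s0 = 3.0
grid = [s0 * k / 100 for k in range(1, 101)]  # sample points s in (0, s0]

# For a small lattice spacing every sampled energy in [-s0^2, 0) satisfies the condition.
covered_small_L = all(in_negative_band(-s * s, alpha, 0.01) for s in grid)

# For a large lattice spacing the condition fails at E = -s0^2.
covered_large_L = in_negative_band(-s0 * s0, alpha, 1.0)
```

The sample suggests how shrinking L pushes the bottom of the spectrum down, so that the union over L covers \((-\infty ,0)\).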

References

Abramowitz, M., Stegun, I.A.: Handbook of mathematical functions with formulas, graphs, and mathematical tables. National Bureau of Standards Applied Mathematics Series, vol. 55. U.S. Government Printing Office, Washington, DC (1964)

Albeverio, S., Gesztesy, F., Høegh-Krohn, R., Holden, H.: Solvable models in quantum mechanics, 2nd edn. With an appendix by Pavel Exner, AMS Chelsea Publishing, Providence, RI (2005)

Albeverio, S., Høegh-Krohn, R., Kirsch, W., Martinelli, F.: The spectrum of the three-dimensional Kronig–Penney model with random point defects. Adv. Appl. Math. 3, 435–440 (1982)

Agmon, S.: Lectures on Elliptic Boundary Value Problems. Van Nostrand Reinhold Inc., New York (1965)

Ando, K., Iwatsuka, A., Kaminaga, M., Nakano, F.: The spectrum of Schrödinger Operators with Poisson type random potential. Ann. Henri Poincaré 7, 145–160 (2006)

Berezin, F.A., Faddeev, L.D.: A remark on Schrödinger’s equation with a singular potential. Soviet Math. Dokl. 2, 372–375 (1961)

Bethe, H., Peierls, R.: Quantum theory of the diplon. Proc. R. Soc. 148A, 146–156 (1935)

Boutet de Monvel, A., Grinshpun, V.: Exponential localization for multi-dimensional Schrödinger operator with random point potential. Rev. Math. Phys. 9(4), 425–451 (1997)

Brüning, J., Geyler, V., Pankrashkin, K.: Spectra of self-adjoint extensions and applications to solvable Schrödinger operators. Rev. Math. Phys. 20(01), 1–70 (2008)

Christ, C.S., Stolz, G.: Spectral theory of one-dimensional Schrödinger operators with point interactions. J. Math. Anal. Appl. 184, 491–516 (1994)

Delyon, F., Simon, B., Souillard, B.: From power pure point to continuous spectrum in disordered systems. Ann. Inst. Henri Poincaré 42(3), 283–309 (1985)

Dorlas, T.C., Macris, N., Pulé, J.V.: Characterization of the spectrum of the Landau Hamiltonian with delta impurities. Commun. Math. Phys. 204, 367–396 (1999)

Drabkin, M., Kirsch, W., Schulz-Baldes, H.: Transport in the random Kronig–Penney model. J. Math. Phys. 53, 122109 (2012)

Geĭler, V.A.: The two-dimensional Schrödinger operator with a uniform magnetic field, and its perturbation by periodic zero-range potentials. St. Petersburg Math. J. 3(3), 489–532 (1992)

Geĭler, V.A., Margulis, V.A., Chuchaev, I.I.: Potentials of zero radius and Carleman operators. Sib. Math. J. 36(4), 714–726 (1995)

Grossmann, A., Høegh-Krohn, R., Mebkhout, M.: The one particle theory of periodic point interactions. Commun. Math. Phys. 77, 87–110 (1980)

Hislop, P.D., Kirsch, W., Krishna, M.: Spectral and dynamical properties of random models with nonlocal and singular interactions. Math. Nachrichten 278, 627–664 (2005)

Kaminaga, M., Mine, T.: Upper bound for the Bethe–Sommerfeld threshold and the spectrum of the Poisson random hamiltonian in two dimensions. Ann. Henri Poincaré 14, 37–62 (2013)

Kirsch, W., Martinelli, F.: On the spectrum of Schrödinger operators with a random potential. Commun. Math. Phys. 85, 329–350 (1982)

Kostenko, A.S., Malamud, M.M.: 1-D Schrödinger operators with local point interactions on a discrete set. J. Differ. Equ. 249(2), 253–304 (2010)

Kronig, R.de L., Penney, W.G.: Quantum mechanics of electrons in crystal lattices. Proc. R. Soc. 130A, 499–513 (1931)

Malamud, M.M., Schmüdgen, K.: Spectral theory of Schrödinger operators with infinitely many point interactions and radial positive definite functions. J. Funct. Anal. 263(10), 3144–3194 (2012)

Meester, R., Roy, R.: Continuum Percolation, Cambridge Tracts in Mathematics, vol. 119. Cambridge University Press, Cambridge (1996)

Minami, N.: Schrödinger operator with potential which is the derivative of a temporally homogeneous Lévy process. In: Watanabe, S., Prokhorov, J.V. (eds.) Probability Theory and Mathematical Statistics, Lecture Notes in Mathematics, vol. 1299. Springer, Berlin, Heidelberg (1988)

Pastur, L., Figotin, A.: Spectra of Random and Almost Periodic Operators. Springer, Berlin (1992)

Reed, M., Simon, B.: Methods of Modern Mathematical Physics. II. Fourier Analysis, Self-Adjointness. Academic Press, New York (1975)

Reiss, R.-D.: A Course on Point Processes. Springer Series in Statistics. Springer, New York (1993)

Thomas, L.H.: The interaction between a neutron and a proton and the structure of \(H^3\). Phys. Rev. 47, 903–909 (1935)

Acknowledgements

The work of T. M. is partially supported by JSPS KAKENHI Grant No. JP18K03329. The work of F. N. is partially supported by JSPS KAKENHI Grant No. JP26400145.

Communicated by Alain Joye.

Appendix

1.1 Renormalization Procedure for Point Interactions

For readers’ convenience, we quote a result about the renormalization procedure (1)

in the case \(d=3\) and \(\Gamma =O:=\{0\}\) ([2, Theorem I-1.2.5]).

Theorem 23

([2]). Let \(d=3\). Let V be a real-valued function on \(\mathbb {R}^3\) belonging to the Rollnik class (i.e., \(\iint _{\mathbb {R}^3\times \mathbb {R}^3}|V(x)||V(x')||x-x'|^{-2}\mathrm{d}x\mathrm{d}x'<\infty \)) and satisfying \((1+|\cdot |)V\in L^1(\mathbb {R}^3)\). Let \(\lambda (\epsilon )=\epsilon \mu (\epsilon )\), where \(\mu (\epsilon )\) is real analytic near \(\epsilon =0\), \(\mu (0)=1\), and \(\mu '(0)\not =0\). Let \(G_0=(-\Delta )^{-1}\), defined as the integral operator with kernel \(1/(4\pi |x-x'|)\). If two functions \(\phi \in L^2(\mathbb {R}^3)\) and \(\psi \in L^2_\mathrm{loc}(\mathbb {R}^3){\setminus } L^2(\mathbb {R}^3)\) satisfy

where \(\mathop {\mathrm{sgn}}\nolimits z= z/|z|\) (\(z\not =0\)) and \(\mathop {\mathrm{sgn}}\nolimits 0 =1\), then \(\psi \) is called a zero-energy resonance for \(-\Delta +V\).

- (i)

If a zero-energy resonance for \(-\Delta +V\) exists, then the norm resolvent limit of \(H_{\epsilon }\) in (1) coincides with \(H_{O,\alpha }\), \(\alpha =(\alpha _0)\), for some \(\alpha _0\in \mathbb {R}\).

- (ii)

If no zero-energy resonance for \(-\Delta +V\) exists, then the norm resolvent limit of \(H_{\epsilon }\) in (1) coincides with the free Laplacian \(H_0\).

It is easy to see that \(\psi \) satisfies the equation \((-\Delta +V)\psi =0\). In case (i), an explicit formula for \(\alpha _0\) is given in [2, Theorem I-1.2.5]. If \(-\Delta +V\) has a simple zero-energy resonance \(\psi \) and no zero-energy eigenfunction, it is given as

Thus, \(\alpha _0\) appears in the coefficient of \(\lambda (\epsilon )\) as \(\lambda (\epsilon )=\epsilon - C\alpha _0 \epsilon ^2 + O(\epsilon ^3)\). It is known that if V is nonnegative, then there is no zero-energy resonance for \(-\Delta +V\). So, case (i) can occur only if V has a nontrivial negative part.
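As a worked check of how \(\alpha _0\) enters the coupling, the following sketch expands \(\lambda (\epsilon )=\epsilon \mu (\epsilon )\) using only the hypotheses of Theorem 23 and compares it with the expansion quoted above. The constant C is the one appearing in that expansion; its value is not specified here, so the final identity should be read as a consistency relation rather than as a formula for \(\alpha _0\).

```latex
\begin{align*}
\lambda(\epsilon)
  &= \epsilon\,\mu(\epsilon)
   = \epsilon\bigl(\mu(0) + \mu'(0)\,\epsilon + O(\epsilon^2)\bigr)
   = \epsilon + \mu'(0)\,\epsilon^2 + O(\epsilon^3), \\
\lambda(\epsilon)
  &= \epsilon - C\alpha_0\,\epsilon^2 + O(\epsilon^3)
  \quad\Longrightarrow\quad
  \mu'(0) = -C\alpha_0 .
\end{align*}
```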

A similar result also holds in the case \(d=2\), but \(\alpha _0\) appears in the coefficient of \(\lambda (\epsilon )\) in a more complicated way (see [2, Theorem I-5.5]).

1.2 Elliptic Inner Regularity Estimate

The following is a special case of the elliptic inner regularity theorem ([4, Theorem 6.3]).

Theorem 24

Let U be an open set in \(\mathbb {R}^d\) and \(u\in L^2(U)\). Assume that there exists a positive constant M such that

holds for every \(\phi \in C_0^\infty (U)\). Then, \(u\in H^2_\mathrm{loc}(U)\). Moreover, for any open set V such that \(\overline{V}\) is a compact subset of U, there exists a positive constant C depending only on U and V such that

where M is the constant in (31).

From Theorem 24, we obtain the following corollary, which is useful for our purpose.

Corollary 25

Let U, V be open sets in \(\mathbb {R}^d\) such that \(\overline{V}\subset U\) and

for some positive constant \(\delta \). Let \(u\in L^2(U)\) be such that \(\Delta u\in L^2(U)\) in the distributional sense. Then, \(u\in H^2_\mathrm{loc}(U)\), and there exists a constant C depending only on \(\delta \) and the dimension d such that

Proof

Put \(\epsilon =\delta /(2d)\). For \(x_0\in \mathbb {R}^d\), consider open cubes \(Q=x_0 + (-\epsilon ,\epsilon )^d\) and \(Q'=x_0 + (-\epsilon /2,\epsilon /2)^d\). When \(Q\subset U\), we have

for every \(\phi \in C_0^\infty (Q)\). Then, the assumption of Theorem 24 is satisfied with \(U=Q\), \(V=Q'\), and \(M=\Vert \Delta u\Vert _{L^2(Q)}\), and we have

for some positive constant C depending only on \(\delta \) and the dimension d. We collect all the cubes \(Q'\) whose center \(x_0\) lies in \(\epsilon \mathbb {Z}^d\) and which satisfy \(Q'\cap V \not =\emptyset \). Notice that \(Q\subset U\) for such \(Q'\). Thus, we have by (33)

where we use the fact that the cubes Q overlap at most \(2^d\) times. \(\square \)
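The two geometric facts used in the proof can be checked numerically. The Python sketch below is an illustration only, and all function names in it are hypothetical. It counts how many cubes \(Q=x_0+(-\epsilon ,\epsilon )^d\) with \(x_0\in \epsilon \mathbb {Z}^d\) contain a given point, and it computes a bound on the Euclidean distance between a point of \(Q'\) and a point of \(Q\). The second check assumes that the condition on U and V in Corollary 25 keeps V at distance at least \(\delta \) from the complement of U.

```python
import math
import random


def centers_1d(t, eps):
    """Integers k with |t - k*eps| < eps, i.e. t lies in (k*eps - eps, k*eps + eps)."""
    base = math.floor(t / eps)
    return [k for k in range(base - 1, base + 3) if abs(t - k * eps) < eps]


def multiplicity(x, eps):
    """Number of cubes Q = x0 + (-eps, eps)^d with x0 in eps*Z^d that contain x."""
    count = 1
    for t in x:
        count *= len(centers_1d(t, eps))
    return count


def max_multiplicity(d, eps, samples=2000, seed=0):
    """Largest multiplicity observed over random sample points in [-5, 5]^d."""
    rng = random.Random(seed)
    return max(
        multiplicity([rng.uniform(-5.0, 5.0) for _ in range(d)], eps)
        for _ in range(samples)
    )


def max_reach(delta, d):
    """Bound on the Euclidean distance between a point of Q' and a point of Q
    sharing the center x0, with eps = delta / (2 d) as in the proof.
    Sup-norm distances to x0 are below eps/2 and eps, so the bound is
    (3 eps / 2) * sqrt(d)."""
    eps = delta / (2 * d)
    return 1.5 * eps * math.sqrt(d)


if __name__ == "__main__":
    for d in (1, 2, 3):
        print(d, max_multiplicity(d, 0.25), 2 ** d, max_reach(1.0, d))
```

In each coordinate the open interval of half-width \(\epsilon \) around a lattice point covers a given coordinate value for at most two lattice points, which gives the product bound \(2^d\). The reach bound equals \(3\delta /(4\sqrt{d})<\delta \), which is consistent with the claim that \(Q\subset U\) whenever \(Q'\cap V\not =\emptyset \), under the stated assumption on the distance condition.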

Cite this article

Kaminaga, M., Mine, T. & Nakano, F. A Self-adjointness Criterion for the Schrödinger Operator with Infinitely Many Point Interactions and Its Application to Random Operators. Ann. Henri Poincaré 21, 405–435 (2020). https://doi.org/10.1007/s00023-019-00869-1

DOI: https://doi.org/10.1007/s00023-019-00869-1