Abstract

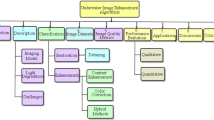

The quality and appearance of underwater images play an important role in underwater computer vision. Underwater images are useful in various applications for the detailed study of underwater life. However, images captured underwater face various challenges such as light attenuation, color absorption, and the type of water. Many algorithms have been proposed to address these issues. This paper provides a comprehensive study of the methods frequently used to enhance the visual quality of underwater images, and of the underwater image datasets used for underwater imaging tasks. The different quality assessment measures are also summarized. The presented methods and their shortcomings are studied to enable an in-depth understanding of underwater image enhancement. Finally, possible future research directions are suggested.



Keywords

- Underwater image enhancement

- Deep learning

- Underwater image dataset

- Generative adversarial network

- Convolution neural network

- Residual network

1 Introduction

Accurate, high-resolution undersea images are crucial for monitoring sea life, inspecting the underwater world, and detecting objects on the seabed. However, this objective is difficult to achieve because of light attenuation, scattering, and color distortion in underwater scenes. Water contains sea organisms, floating particles called marine snow [1], and other obstacles. The quality of underwater images degrades rapidly with water depth, water type, lighting conditions, and the wavelength of the light. Light with the longest wavelength is absorbed first, so red light disappears first in water. As the light travels deeper, orange, yellow, and green light vanish in turn. Blue light, which has a shorter wavelength, travels farthest in water. Before reaching the camera, the light beam is reflected and passes through underwater particles, so it is absorbed and loses energy. As a result, underwater images suffer from color distortion, low brightness, and haziness. Researchers have proposed various methods for enhancing underwater images, such as adaptive histogram equalization (AHE) [2], dark channel prior (DCP) [3], and deep learning algorithms [1].
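The wavelength-dependent loss described above is often approximated by an exponential (Beer-Lambert) decay with distance. The following minimal sketch illustrates the idea only; the attenuation coefficients and the function name are hypothetical values chosen so that red fades fastest and blue slowest, and they are not measurements from any of the cited works.

```python
import math

# Hypothetical attenuation coefficients in 1/m (illustrative assumptions only),
# ordered so that red is attenuated most and blue least, as stated in the text.
ATTENUATION = {"red": 0.60, "green": 0.12, "blue": 0.05}


def remaining_fraction(channel: str, distance_m: float) -> float:
    """Fraction of light left after travelling distance_m metres through water."""
    return math.exp(-ATTENUATION[channel] * distance_m)
```

With these assumed coefficients, calling `remaining_fraction` for each channel at the same distance shows the red component falling far below the green and blue components, which is consistent with the bluish-green appearance of underwater scenes.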

Capturing clear underwater images is essential for applications in ocean engineering and marine biology. Ocean engineering is one of the broad research areas for underwater imaging. The era of formal oceanographic studies began with the H.M.S. Challenger expedition (1872–1876), which gathered information on ocean temperatures, underwater life, and seafloor geology. Buoys and moorings are used to monitor sea conditions, water quality, and microorganisms in the water; sonar is used to map the ocean floor; and remotely operated vehicles (ROVs) [4] and autonomous underwater vehicles (AUVs) [4] are used to explore the ocean safely and efficiently. Researchers use these tools and instruments to study the underwater world, and image enhancement is necessary for better results. The underwater vehicles are equipped with several sensors and GPS systems to gather information on deep-sea culture, underwater metals, and coral reef ecosystems. However, these tools cannot perform effectively in different types of water, such as turbid, stormy, and red seawater, or in the presence of large suspended particles, marine organisms, and plants.

Another application of underwater imaging is marine biology, in which researchers study a wide range of marine animals and birds, including dolphins, sharks, blue whales, starfish, sea otters, earless seals, sea horses, sea urchins, eagles, and a variety of marine birds. They also study marine biodiversity, digestive physiology, and the processing of plant foods to explore marine life. Underwater sites are dynamic, naturally difficult to access, and more hazardous than dry land. Marine archeology requires excavation and the recovery of important data and details from the deep ocean. Work in this field also involves visiting remarkable locations to document structures and artifacts, precious materials submerged underwater, and submarines. These materials are then investigated to establish their historic importance.

In recent years, many deep learning algorithms have been developed for underwater image enhancement. These algorithms perform well in improving underwater image quality. However, deep learning models require a huge amount of data to train the network effectively. The main issue in using deep learning is the lack of labeled data containing both good- and poor-quality underwater images.

Because of researchers' increasing interest in exploring the underwater world, different enhancement techniques are in use, and these methods improve the quality of underwater images. This paper reviews the algorithms and techniques used to improve degraded underwater images, and also provides information about available underwater image datasets and the image quality metrics used to evaluate image quality. This survey of the literature is intended to help researchers identify opportunities in this important field.

2 Literature Survey

This section summarizes the existing algorithms used to improve underwater image quality.

2.1 Traditional Underwater Image Enhancement Methods

Qing et al. introduced an adaptive dehazing framework to enhance underwater images [2]. The framework consists of two tasks: adaptive atmospheric light estimation and adaptive histogram equalization (AHE) [2]. It improves image quality according to the attributes of the underwater environment. The adaptive light estimation step adjusts the feature map so that the captured image is closer to the true underwater scene, and AHE is employed to enhance the visibility and the global quality of underwater images. The method dehazes underwater images and improves their visual quality. However, AHE has the limitation of over-amplifying noise in the image.
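To make the histogram equalization step concrete, the sketch below implements plain global histogram equalization on a grayscale image stored as a nested list of integers. This is a simplified stand-in and not the framework of [2]: AHE applies the same kind of mapping separately within local regions of the image, which is also where its noise amplification comes from. The function name and the list-based image representation are assumptions made to keep the example dependency-free.

```python
def equalize_histogram(gray, levels=256):
    """Global histogram equalization of a 2-D list of integer intensities."""
    flat = [p for row in gray for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1

    # Cumulative distribution function of the intensity histogram.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # Constant image: there is no contrast to redistribute.
        return [row[:] for row in gray]

    scale = (levels - 1) / (total - cdf_min)
    return [[round((cdf[p] - cdf_min) * scale) for p in row] for row in gray]
```

For a low-contrast input such as `[[50, 50], [100, 200]]`, the darkest value is mapped to 0 and the brightest to 255, so the output uses the full intensity range.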

In 2016, Li et al. proposed an underwater image enhancement technique based on the dark channel prior and luminance adjustment [3]. Each color channel corresponds to a different range of light wavelengths, and the dominant color of an image depends on the wavelength. Underwater images suffer from low brightness. To obtain a haze-free underwater image, the dark channel prior is applied to estimate the depth map of the image. The authors then employ a luminance adjustment method [3] to equalize the non-uniform radiance and reduce information loss. The output image shows improved color contrast and minimal information loss.
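The dark channel on which DCP-based methods rely can be sketched as follows: for every pixel, take the minimum over the three color channels and then the minimum over a small surrounding window. The code is a minimal illustration under stated assumptions (an image given as a nested list of RGB tuples, a square window clipped at the borders, and a hypothetical function name); the depth-map estimation and luminance adjustment of [3] are not reproduced.

```python
def dark_channel(image, patch=3):
    """Per-pixel minimum over the colour channels and a patch x patch window."""
    height, width = len(image), len(image[0])
    radius = patch // 2

    # Step 1: minimum across the R, G, B values of each pixel.
    channel_min = [[min(pixel) for pixel in row] for row in image]

    # Step 2: minimum over the local window, clipped at the image borders.
    dark = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                channel_min[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(min(window))
        dark.append(row)
    return dark
```

Low dark-channel values indicate regions with little haze, whereas uniformly high values suggest that scattered light dominates the pixel neighborhood.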

In another study, the authors presented a differential evolution algorithm [5] to improve image contrast. The underwater image is first separated into its R, G, and B color channel components; in RGB space, the value of each pixel is a set of three color intensities: red, green, and blue. Contrast stretching is then applied to each component, improving the contrast by widening the effective range of intensity levels in the image. During this step, the differential evolution algorithm is used to decide the threshold values for contrast stretching. After contrast enhancement, the RGB components are recombined to obtain a color image. Finally, unsharp masking is applied to the color image to obtain a sharpened underwater image. The method produced satisfactorily enhanced underwater images.
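The split, stretch, and recombine steps can be sketched as below. The stretching limits are passed in as a hypothetical `limits` argument; in [5] the thresholds are selected by differential evolution, which is not reproduced here, and the unsharp masking step is also omitted. The image representation (nested list of RGB tuples) and function names are assumptions.

```python
def stretch_channel(channel, low, high):
    """Linearly map [low, high] to [0, 255], clipping values outside the range."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return [
        [min(255, max(0, round((p - low) * 255 / (high - low)))) for p in row]
        for row in channel
    ]


def stretch_rgb(image, limits):
    """Split an RGB image into channels, stretch each one, and recombine."""
    height, width = len(image), len(image[0])
    stretched = []
    for c in range(3):
        plane = [[pixel[c] for pixel in row] for row in image]
        low, high = limits[c]  # assumed thresholds for this channel
        stretched.append(stretch_channel(plane, low, high))
    return [
        [tuple(stretched[c][y][x] for c in range(3)) for x in range(width)]
        for y in range(height)
    ]
```

An optimizer such as differential evolution would search over the `(low, high)` pairs to maximize a chosen contrast measure; here they are simply supplied by the caller.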

Another undersea image enhancement method, proposed in 2017 [6], is based on an optimization algorithm and employs an enhanced fuzzy intensification method [6]. In this algorithm, the target object and the background are not segmented when eliminating haziness and improving the natural appearance of the image. An adaptive fuzzy membership function is computed for every color component from the minimum and maximum pixel intensities of the original degraded image [6]. The fuzzy membership function [6] consists of a set of membership values that control the boundary limits used in the fuzzy intensification operator [6], which is employed to enhance image contrast. If the pixel intensity of the red channel is the highest in the captured underwater image, fuzzy histogram equalization is applied as post-processing; otherwise, post-processing is not necessary. The method produced haze-free images with better contrast. As future work, the algorithm is to be extended to object prediction in underwater video.
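As an illustration of the membership and intensification steps, the sketch below applies the classical fuzzy intensification (INT) operator to a single color channel. It is not the enhanced operator of [6]: the membership function is assumed to be a simple min-max normalization, and the result is mapped back to the original intensity range, which is also an assumption.

```python
def fuzzy_intensify(channel):
    """Classical fuzzy INT operator applied to one colour channel (2-D list)."""
    flat = [p for row in channel for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Constant channel: membership is undefined, so return a copy.
        return [row[:] for row in channel]

    def enhance(p):
        mu = (p - lo) / (hi - lo)            # membership value in [0, 1]
        if mu <= 0.5:
            mu = 2 * mu * mu                 # push darker values further down
        else:
            mu = 1 - 2 * (1 - mu) ** 2       # push brighter values further up
        return round(lo + mu * (hi - lo))    # map back to the intensity range

    return [[enhance(p) for p in row] for row in channel]
```

Values below the midpoint of the range become darker and values above it become brighter, which increases contrast while leaving the minimum and maximum intensities unchanged.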

Mi et al. [7] introduced a multi-scale gradient-domain contrast enhancement method to improve the contrast of underwater images. White balancing is first applied to the original degraded image to remove the unwanted color cast. The multi-scale gradient enhancement method is then used to enhance the contrast. To separate features at distinct levels into corresponding layers, an edge-preserving image decomposition based on weighted least squares (WLS) [7] is applied to the lightness channel of the CIELAB color space to construct a multi-scale representation [7]. Finally, color saturation is compensated according to the estimated transmission. The method enhances underwater images with minimal information and structural loss and also removes unwanted noise.

Yu et al. developed a novel method for improving underwater images based on the DCP and a depth transmission map [8], with the aim of dehazing the image and improving its contrast. The dehazing algorithm consists of three main parts: homomorphic filtering, a double transmission map, and dual-image wavelet fusion [8]. Green and blue light have shorter wavelengths and are attenuated least, hence underwater images look bluish and greenish. First, the effect of blue and green radiance is removed by homomorphic filtering. The second part uses the seafloor map and the dark channel map [8] as feature maps to compute the variation between the bright and dark channels and outputs enhanced images. In the last part, the two enhanced images produced by the previous step are blended by wavelet image fusion [8] to obtain the enhanced underwater image. To improve color contrast, the authors use contrast-limited adaptive histogram equalization (CLAHE) [8]. The authors also present a novel block-based algorithm for estimating the global background light [8]. The original image is split into six blocks, the highest block is selected, and the difference between the standard deviation of the pixel values in every block and the midpoint of the pixel values in the corresponding block is evaluated. This removes the impact of bright objects. Overall, the algorithm enhances the contrast of the underwater image and removes haze.

2.2 Deep Learning Underwater Image Enhancement Methods

Apart from traditional image enhancement algorithms, deep learning-based undersea image enhancement techniques are also prevalent. Deep learning algorithms perform well compared with traditional image enhancement algorithms.

2.2.1 Convolution Neural Network Models

In 2016, Li et al. [9] proposed a contrast improvement algorithm based on deep neural networks. The method is mainly used to enhance highly turbid underwater images. If the captured underwater image is highly degraded by turbid water, it is difficult to compute the real depth map. To address this issue, the authors propose a guidance image filtering method [9], which makes it easy to filter the real depth map. By combining SSIM and the Euclidean distance, the authors also propose a new quality assessment metric called Qu [9], which evaluates both the structures and the colors of images. The method is used as a preprocessing step for deep learning-based classification with different machine learning algorithms. It removes unwanted color casts.

Wang et al. [10] introduced UIE-Net, which is divided into two sub-networks: a color correction network (CC-Net) and a haze removal network (HR-Net) [10], for haze removal and color correction of underwater images. The model consists of convolution layers that extract haze-relevant features and color correction features to enhance the image. Underwater images suffer from texture degradation and unwanted color casts because of suspended particles and light scattering. To extract relevant features from local patches, UIE-Net [10] uses a pixel disrupting strategy. The framework is trained on a synthetic underwater image dataset. While color correction and haze removal are performed simultaneously, the pixel disrupting strategy suppresses the interference of texture without information loss. UIE-Net improves processing time and accuracy. However, the computational cost of the reported model is still high. Using a fully connected CNN to remove noise and improve efficiency is the suggested approach.

A novel technique for underwater image enhancement based on encoding–decoding deep CNN networks has also been introduced [11]. In the proposed model, convolution layers and deconvolution layers are used for encoding and decoding, respectively. To overcome overfitting, the authors transfer the parameters of the AlexNet model to the task of enhancing undersea images. The resulting network architecture is referred to as ED-AlexNet (encoder–decoder AlexNet) [11]. The consecutive convolution layers of AlexNet fail to restore the details of the degraded image. To address this problem, deconvolution layers are used; these layers effectively filter the feature maps of the preceding convolution layers. ReLU is used as the activation function, and the original degraded image is improved by removing noise. The deconvolution layers then recover the information lost in the convolution operations. Stochastic gradient descent is applied to reduce the error function, and standard back-propagation is used to update the weights of the network [11]. The network architecture gives better results in underwater image enhancement.
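The weight update performed by stochastic gradient descent can be illustrated on a model far smaller than ED-AlexNet. The sketch below fits a single weight and bias to a squared-error loss, updating after every sample; the toy linear model, learning rate, epoch count, and function name are all assumptions chosen for illustration and are unrelated to the training setup of [11].

```python
import random


def sgd_fit(samples, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w * x + b by stochastic gradient descent on the squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)                 # visit samples in random order
        for x, y in data:
            error = (w * x + b) - y       # prediction error for one sample
            w -= lr * error * x           # gradient of 0.5 * error**2 w.r.t. w
            b -= lr * error               # gradient of 0.5 * error**2 w.r.t. b
    return w, b
```

In a deep network, back-propagation supplies the same kind of per-parameter gradient for every layer, and the update rule stays the same.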

Images captured in turbid water are highly distorted, and dust further degrades the quality of underwater images. To address this problem, Li et al. proposed a deep CNN-based dust removal method [12]. The convolutional neural network is used to remove the impact of artificial light on an image captured in turbid water. After the effect of artificial light is removed, Gaussian convolution is used to eliminate turbid sediment in the underwater image and to denoise it [12]. Haziness and blurriness are then removed simultaneously. The network employs a locally adaptive filter to dehaze the image and a patch-based de-scattering method to restore the blurred underwater image. Finally, the CNN model restores the color of the image, which is distorted by color absorption. The network removes dust from underwater images captured in highly turbid water [12] and also removes haze-like effects, noise, and blurriness.

2.2.2 Residual Network Models

In 2018, a residual convolutional neural network was developed to enhance underwater images [13]. The deep learning model is referred to as an underwater residual CNN [13]. First, the deep CNN estimates the transmission map, drawing on a combination of prior knowledge and training data. Batch normalization is used in the network architecture to speed up training and improve learning performance. The ReLU activation function is used with the convolution layers to improve the estimation of scene depth from the raw input through the hidden layers. Stochastic gradient descent is used to minimize the loss [11, 13]. To restore color, a novel scene residual component is proposed. A gray-world algorithm is then applied to the image for illumination stability, yielding a dehazed underwater image. The model reduces haziness and improves color contrast.
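The gray-world step mentioned above rests on the assumption that the average color of a scene is gray, so each channel is rescaled until its mean matches the overall mean. The following sketch shows the standard form of this correction on an image given as a nested list of RGB tuples; it is a generic illustration, and how [13] integrates it with the network output is not reproduced. The function name and clipping to 255 are assumptions.

```python
def gray_world(image):
    """Scale each channel so that its mean matches the overall mean intensity."""
    pixels = [pixel for row in image for pixel in row]
    means = [sum(p[c] for p in pixels) / len(pixels) for c in range(3)]
    gray = sum(means) / 3

    # Guard against an all-zero channel to avoid division by zero.
    gains = [gray / m if m > 0 else 1.0 for m in means]

    return [
        [tuple(min(255, round(p[c] * gains[c])) for c in range(3)) for p in row]
        for row in image
    ]
```

For a bluish input, the blue gain falls below one and the red gain rises above one, which pulls the channel means together and reduces the color cast.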

Liu et al. employed a residual learning-based network model with the goal of improving underwater image enhancement performance. First, cycle-consistent adversarial networks (CycleGAN) were used to produce synthetic underwater images, which addresses the overfitting problem caused by insufficient data. These images are used as the training set for the network. Then, the underwater ResNet (UResNet) [14], a residual network model, was developed together with the very-deep super-resolution (VDSR) model [14] to improve the quality of underwater images. The residual network generates a high-resolution underwater image as output without changing the dimensions of the original image. Edge difference loss (EDL) [14] is introduced as a penalty term to enhance edge details. An asynchronous training mode is then used to reduce amplified noise in the image; this mode is significant for deep learning models that use a multi-term loss function [14]. Batch normalization [14] is used to restore fine details lost during enhancement and to improve color contrast. The residual framework achieves better performance than previous enhancement methods.

Images captured in the underwater environment suffer from blur caused by suspended particles and turbidity, which makes depth estimation from images more difficult. To address this problem, Haofei Kuang et al. used a fully convolutional residual neural network (FCRN) for depth estimation on underwater omnidirectional images [15]. The authors used a conventional FCRN to estimate depth for underwater perspective images and a spherical FCRN for omnidirectional images. The conventional FCRN uses ResNet-50 as the feature extraction layer and up-projection as the upsampling layer [15]. In the spherical FCRN, spherical convolution and pooling layers are used to construct a SphereResNet. Stochastic gradient descent and back-propagation are used to improve the performance of the model [15]. The results show that the conventional and spherical FCRNs can estimate depth maps precisely for synthetic underwater perspective and omnidirectional images, respectively.

2.2.3 Generative Adversarial Network Models

Md Jahidul Islam et al. employed a conditional generative adversarial network framework for improving the visibility of undersea images [16]. The network model is referred to as FUnIE-GAN. The authors construct a multi-term objective function for training the network. The objective function incorporates additional terms, namely global content, image color, localized texture, and quality details, to evaluate the perceptual quality of an image [16]. Fully connected layers are not used in the network architecture. The network adopts an encoder–decoder strategy [11]. Skip connections [16] in the generator network are shown to be very effective for image-to-image translation and image quality enhancement applications. The Leaky ReLU activation function and batch normalization [11, 16] are used with the convolution layers to improve training. To train the network, the authors present an extensive dataset of paired and unpaired real undersea images, called enhancement of underwater visual perception (EUVP) [16]. The model gives better performance and also requires less processing time.
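The difference between ReLU and Leaky ReLU, both of which appear in the networks surveyed here, is small enough to show directly. In the sketch below, the negative slope of 0.2 is an assumed illustrative value and is not taken from [16].

```python
def relu(x: float) -> float:
    """Standard ReLU: negative inputs are set to zero."""
    return x if x > 0 else 0.0


def leaky_relu(x: float, negative_slope: float = 0.2) -> float:
    """Leaky ReLU: negative inputs are scaled by a small slope, not zeroed."""
    return x if x > 0 else negative_slope * x
```

Because Leaky ReLU keeps a small non-zero gradient for negative inputs, units are less likely to stop updating during training, which is one common reason for preferring it in GAN generators and discriminators.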

Motivated by GANs, a novel multi-scale dense generative adversarial network is proposed for enhancing underwater images [17]. The model includes two parts: a generator network G and a discriminator network D [17]. A residual multi-scale dense block (RMSDB) is used in the fully connected architecture of the generator network. Skip connections [14, 16] in the generator network are shown to be very effective for image-to-image translation and image quality enhancement applications. G is developed to synthesize the undersea images. D comprises five layers with spectral normalization [17] and is constructed to differentiate the images synthesized by G from the corresponding real undersea images. The Leaky ReLU activation function and batch normalization [11, 16, 17] are used after the convolution layers. The model performs well on both synthetic and real underwater images.

In 2019, Li and Li proposed a novel fusion generative adversarial network (FGAN) to improve underwater images. To improve contrast and remove haziness, the model combines multiple input images with a generative adversarial network [18]. The generator uses a fully connected network and two basic blocks. Two networks are employed: one uses a fusion method and the other uses DUIENet, and their outputs are then fused. Instead of using many blocks, the generator uses two basic blocks and obtains equivalent performance. The loss function includes the RaGAN loss [18] to maintain the fine details of the ground truth in the enhanced image. The discriminator includes convolution layers with spectral normalization to improve the visual quality of the degraded image. The authors also presented the U-45 dataset, which is divided into three subsets of green, blue, and haze-related categories. The method achieves better performance and enhances the quality of underwater images.

Underwater images suffer from haze and color distortion. To address these problems, stacked generative adversarial networks (UIE-sGAN) are proposed [19]. The network consists of two sub-networks: one for haze detection and another for color correction. Each sub-network is a conditional GAN that includes a generator and a discriminator. The input is fed into the first sub-network for haze detection. The generator outputs a haze detection mask [19], which is sent to the discriminator to judge whether the output is real. Next, the raw image and the output of the first sub-network are sent as input to the second sub-network, which generates a color-corrected image [19]. The raw image, the haze detection mask, and the generator output are then fed into the discriminator to differentiate real and fake underwater images. The model produces haze-free and color-corrected underwater images.

To enhance underwater images, Yang et al. developed a conditional generative adversarial network (cGAN) [20]. The model contains a multi-scale generator and a dual discriminator. The multi-scale generator uses convolution layers with nonlinear ReLU activation functions to speed up performance. During training, degraded images are given as input to the multi-scale generator to estimate residual maps [20], which are used to obtain the enhanced underwater image. This output image is fed into the dual discriminator [20]. The two discriminators have a different network structure and the same weight values. The dual discriminator decides from different perspectives whether the output images are genuine or fake. The model achieves satisfactory results compared with state-of-the-art underwater image enhancement methods and also avoids overfitting by applying an image augmentation strategy [20].

Table 1 presents a comparative study of the existing underwater image enhancement methods proposed by researchers. It also lists the outcomes of the methods and the shortcomings of the existing algorithms.

3 Underwater Image Datasets

This section briefly describes the available underwater image datasets used for underwater image enhancement.

- i. EUVP dataset [16]: The EUVP dataset comprises a large number of paired and unpaired undersea images of poor and good perceptual quality. Seven different cameras were used to collect the underwater scenes. The unpaired data was constructed with the help of six human participants, based on their perception of image quality. The dataset includes 12K paired and 8K unpaired underwater images.

- ii. U-45 dataset [18]: The U-45 dataset is an underwater test dataset. The authors collected 240 underwater images from existing datasets such as ImageNet and SUN [15], and from the seafloor near Zhangzi Islet in the Yellow Sea, China [18]. From these, 45 real underwater images were chosen and named U45. U45 is divided into three subsets of green, blue, and haze-related categories, which correspond to the color casts, low contrast, and haze-like effects of underwater image degradation.

- iii. Underwater Image Enhancement Benchmark Dataset (UIEBD) [21]: The authors gathered a large number of real-world underwater images covering a variety of seafloor scenes, degradation characteristics, and underwater object content. After collection, most of the images were filtered out, leaving 950 underwater images. The resolution of the images ranges from 183 × 275 to 1350 × 1800 [21]. UIEBD consists of two subsets: 890 raw undersea images with corresponding good-quality reference images, and a challenging set of 60 undersea images [21].

- iv. Jamaica Port Royal Dataset [22]: This dataset was captured in Port Royal, Jamaica, at the site of a submerged city containing both natural and artificial structures. The images were gathered using a handheld diver rig. The dataset includes 6500 underwater images from a single dive [22]. The maximum depth from the seabed is approximately 1.5 m. The dataset contains a total of 18,091 underwater images.

- v. MHL dataset [19, 22]: This dataset was constructed by the Marine Hydrodynamics Laboratory of the University of Michigan. An artificial rock platform was submerged in a pure water tank to collect the underwater images. The MHL dataset contains over 15,000 underwater images [19, 22].

- vi. Real-world Underwater Image Enhancement (RUIE) Dataset [23]: A multi-view underwater imaging system with 22 underwater video cameras was used to gather large-scale underwater images [23]. The maximal scene depth at which the cameras are placed varies from 0.5 to 8 m [23]. The dataset consists of 4000 underwater images. According to the tasks of UIE algorithms, the dataset is divided into three subsets: the underwater image quality set (UIQS), the underwater color cast set (UCCS), and the underwater higher-level task-driven set (UHTS) [23]. The UIQS, UCCS, and UHTS subsets are used, respectively, to evaluate improvement of visual appearance, to evaluate correction of color casts, and to measure the impact of UIE methods on higher-level computer vision applications [24].

Some underwater image datasets are available at https://github.com/xahidbuffon/Underwater-Datasets.

4 Underwater Image Quality Assessment

The quality of an image depends on many features, such as colorfulness, sharpness, brightness, texture, and structural details, and image quality assessment takes these features into account. To evaluate the performance of image enhancement on real-world underwater images, subjective and quantitative evaluation metrics as well as human visual-inspired quality evaluation metrics are used.

The quantitative evaluation is presented in terms of mean square error (MSE), peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM), and entropy. These metrics are referred to as full-reference image quality measures, which are used when reference images exist. The MSE [6, 21] and PSNR [6, 11, 16, 19, 20, 21] metrics compare paired images, i.e., the raw underwater image and the corresponding enhanced image, and their values reflect the noise in the image. A low MSE and a high PSNR indicate that the quality of the enhanced image is good. SSIM [6, 9, 11, 16, 19, 20, 21] is adopted to evaluate structural details in the image; a higher SSIM value means that more structural details are preserved. A higher entropy value [6, 8, 10] denotes that the resulting image has less information loss. Another full-reference metric is the patch-based contrast quality index (PCQI) [10], which is used to calculate the local color contrast of an image; a higher value indicates better contrast. The average gradient [8] is used to evaluate blurriness, sharpness, texture details, and contrast of underwater images; a higher score indicates better image quality.
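The simpler metrics above follow directly from their standard definitions. The sketch below computes MSE, PSNR, and Shannon entropy for grayscale images given as nested lists of 8-bit integers; the function names and the list-based image representation are assumptions, and SSIM and PCQI are omitted because they require windowed statistics.

```python
import math
from collections import Counter


def mse(reference, test):
    """Mean square error between two equally sized grayscale images."""
    ref = [p for row in reference for p in row]
    out = [p for row in test for p in row]
    if len(ref) != len(out):
        raise ValueError("images must have the same number of pixels")
    return sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)


def psnr(reference, test, max_value=255):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    error = mse(reference, test)
    if error == 0:
        return math.inf
    return 10 * math.log10(max_value ** 2 / error)


def entropy(gray):
    """Shannon entropy (bits per pixel) of the intensity distribution."""
    flat = [p for row in gray for p in row]
    counts = Counter(flat)
    return -sum((n / len(flat)) * math.log2(n / len(flat)) for n in counts.values())
```

A lower `mse` and a higher `psnr` indicate that the two images are closer, while a higher `entropy` indicates a richer spread of intensity values in a single image.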

5 Conclusions

This paper briefly introduced the existing methods for enhancing underwater images and summarized their limitations. The impact of the methods on hazy, bluish, greenish, coastal, scattered, and degraded sea images was compared, which provides a basis for choosing the most appropriate underwater image enhancement method for a given case. The available underwater image datasets used for image enhancement tasks were described, and details of image quality measures were provided. Nevertheless, underwater imaging still faces various problems.

Researchers have opportunities to explore sea life and scope to improve the real-time performance and efficiency of underwater imaging. To achieve better-quality underwater images, there is a need to construct real-world underwater image datasets that contain a large amount of labeled data, instead of relying on synthetic image data. Some datasets of deep-sea images have been constructed, but they contain a limited number of images. Future studies therefore need to provide real-world underwater images that help underwater image enhancement algorithms achieve better performance. Another direction is to develop evaluation metrics that consider more features of underwater images, such as different types of noise, texture, and depth estimation, because existing quality metrics have some limitations. There is also a need to build lightweight tools and instruments for capturing underwater images under challenging conditions. Much work and scope remain in underwater computer vision.

References

Yeh C-H, Huang C-H, Lin C-H (2019) Deep learning underwater image color correction and contrast enhancement based on hue preservation. In: 2019 IEEE underwater technology (UT). IEEE, Kaohsiung, Taiwan, pp 1–6

Qing C, Huang W, Zhu S, Xu X (2015) Underwater image enhancement with an adaptive dehazing framework. In: 2015 IEEE international conference on digital signal processing (DSP). IEEE, pp 338–342

Li X, Yang Z, Shang M, Hao J (2016) Underwater image enhancement via dark channel prior and luminance adjustment. In: OCEANS 2016—Shanghai. IEEE, pp 1–5

Schettini R, Corchs S (2010) Underwater image processing: state of art of restoration and image enhancement methods. EURASIP J Adv Signal Process 1–14

Guraksin GE, Kose U, Deperlioglu O (2016) Underwater image enhancement based on contrast adjustment via differential evolution algorithm. In: 2016 International symposium on innovations in intelligent systems and applications (INISTA). IEEE, pp 1–5

Akila C, Varatharajan R (2018) Color fidelity and visibility enhancement of underwater image de-hazing by enhanced fuzzy intensification operator. Multimedia Tools Appl 77(4):4309–4322

Mi Z, Liang Z, Wang Y, Fu X, Chen Z (2018) Multi-scale gradient domain underwater image enhancement. In: 2018 OCEANS-MTS/IEEE Kobe Techno-Oceans (OTO). IEEE, pp 1–5

Yu H, Li X, Lou Q, Lei C, Liu Z (2020) Underwater image enhancement based on DCP and depth transmission map. Multimedia Tools Appl 1–18

Li Y, Lu H, Li X, Li Y, Serikawa S (2016) Underwater image de-scattering and classification by deep neural network. Comput Electr Eng 54:68–77

Wang Y, Zhang J, Cao Y, Wang Z (2017) A deep CNN method for underwater image enhancement. In: 2017 IEEE international conference on image processing (ICIP). IEEE, Beijing, China, pp 1382–1386

Sun X, Liu L, Dong J (2017) Underwater image enhancement with encoding-decoding deep CNN networks. In: 2017 IEEE smart world, ubiquitous intelligence and computing, advanced and trusted computed, scalable computing and communications, cloud and big data computing, internet of people and smart city innovation. IEEE, pp 1–6

Li Y, Zhang Y, Xu X, He L, Serikawa S, Kim H (2019) Dust removal from high turbid underwater images using convolutional neural networks. Opt Laser Technol 110:2–6

Hou M, Liu R, Fan X, Luo Z (2018) Joint residual learning for underwater image enhancement. In: 25th IEEE international conference on image processing (ICIP). IEEE, pp 4043–4047

Liu P, Wang G, Qi H, Zhang C, Zheng H, Yu Z (2019) Underwater image enhancement with a deep residual framework. IEEE Access 7:94614–94629

Kuang H, Xu Q, Schwertfeger S (2019) Depth estimation on underwater omni-directional images using a deep neural network. arXiv preprint arXiv:1905.09441

Islam MJ, Xia Y, Sattar J (2020) Fast underwater image enhancement for improved visual perception. IEEE Robot Autom Lett 5(2):3227–3234

Guo Y, Li H, Zhuang P (2019) Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J Oceanic Eng 1–9

Li J, Li H (2019) A fusion adversarial network for underwater image enhancement. arXiv preprint arXiv:1906.06819

Ye X, Xu H, Ji X, Xu R (2018) Underwater image enhancement using stacked generative adversarial networks. In: Pacific rim conference on multimedia. Springer, pp 514–524

Yang M, Hu K, Du Y, Wei Z, Sheng Z, Hu J (2020) Underwater image enhancement based on conditional generative adversarial network. Signal Process: Image Commun 81:115723

Li C, Guo C, Ren W, Cong R, Hou J, Kwong S, Tao D (2019) An underwater image enhancement benchmark dataset and beyond. arXiv preprint arXiv:1901.05495

Li J, Skinner KA, Eustice RM, Johnson-Roberson M (2017) WaterGAN: unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot Autom Lett 3(1):387–394

Liu R, Fan X, Zhu M, Hou M, Luo Z (2019) Real-world underwater enhancement: challenges, benchmarks, and solutions. arXiv preprint arXiv:1901.05320

Lu H, Li Y, Uemura T, Ge Z, Xu X, He L, Serikawa S, Kim H (2018) FDCNet: filtering deep convolutional network for marine organism classification. Multimedia Tools Appl 77:21847–21860

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

Cite this paper

Dharwadkar, N.V., Yadav, A.M. (2021). Survey on Techniques in Improving Quality of Underwater Imaging. In: Smys, S., Palanisamy, R., Rocha, Á., Beligiannis, G.N. (eds) Computer Networks and Inventive Communication Technologies. Lecture Notes on Data Engineering and Communications Technologies, vol 58. Springer, Singapore. https://doi.org/10.1007/978-981-15-9647-6_19

DOI: https://doi.org/10.1007/978-981-15-9647-6_19

Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-9646-9

Online ISBN: 978-981-15-9647-6

eBook Packages: Engineering, Engineering (R0)