Abstract

Spectrum sensing methods used in cognitive radio systems perform poorly in low signal-to-noise ratio (SNR) environments, where they are strongly affected by noise. To address this, we propose a spectrum sensing algorithm based on a deep neural network. We first preprocess the received primary user (PU) signal with energy normalization and then feed the normalized signal into the proposed Noisy Activation CNN-GRU-Network (NA-CGNet), which combines a one-dimensional convolutional neural network (1D CNN) with a gated recurrent unit (GRU) network that uses a noisy activation function. NA-CGNet extracts spatially and temporally correlated features from the received time-series signal. The noisy activation function improves the robustness of the network to noise and thus enhances spectrum sensing performance at low SNR. Simulation results show that the proposed NA-CGNet model achieves a spectrum sensing accuracy of 0.7557 at SNR = −13 dB, which is 0.0567 higher than the existing DetectNet model, while achieving a lower false alarm probability of \({P}_{f}\) = 7.06%.

1 Introduction

Cognitive Radio (CR) has received much attention from academia and industry as a potential solution to the shortage of wireless spectrum resources [1]. The core concept of CR is spectrum sharing, and spectrum sensing is an important prerequisite for it: the user licensed to use a band is the Primary User (PU), and the unlicensed Secondary User (SU) is allowed to opportunistically occupy the idle licensed spectrum of the PU to improve spectrum utilization [2]. The traditional energy detection method has low complexity, requires no a priori knowledge of the PU signal, and is easy to implement, but it is strongly affected by noise in a low SNR environment, which significantly reduces the accuracy of spectrum sensing [3]. Traditional methods are model-driven, and prior knowledge about the signal or noise power is difficult to obtain, especially in non-cooperative communication [4]. Therefore, traditional methods do not always meet the requirements for fast and accurate spectrum sensing in real communication environments.

Deep learning (DL) can discover suitable functions and models in a data-driven manner, which can improve signal detection performance [5, 6]. Deep learning based spectrum sensing has attracted increasing research attention. For example, Zheng et al. [7] proposed a deep learning classification technique that uses the power spectrum of the received signal as the input to train a CNN model and normalizes the input signal to address noise uncertainty. Xie et al. [8] exploited historical knowledge of PU activity and used a CNN-based deep learning algorithm to learn PU activity patterns for spectrum sensing (APASS). However, CNN-based networks alone are not sufficient for processing PU signals, because wireless spectrum data are time-series data with inherent temporal correlation; long short-term memory (LSTM) networks were therefore introduced into DL networks to extract this temporal correlation [9]. Gao et al. [10] combined LSTM and CNN to form DetectNet, which learns both global temporal dependencies and local features from the received time-series signal and achieves better spectrum sensing than LSTM or CNN structures alone.

Deep learning based spectrum sensing methods may focus on extracting different aspects of the input. Existing CNNs are not well suited to temporal modeling and time-series analysis [11], although 1D CNNs are good at extracting local features from sequential data [15]; LSTM and GRU are capable of extracting temporal correlation. GRU [12] has a simpler network structure than LSTM and is easier to train, which considerably improves training efficiency while achieving results similar to LSTM. To the best of the authors’ knowledge, the temporal dependence of spectrum data has not been modeled in the literature using GRU networks with a noisy activation function (NAF) [13]. To address the lack of robustness of existing methods to noise in low SNR environments, we apply the NAF in a deep neural network and propose the Noisy Activation CNN-GRU-Network (NA-CGNet) for the non-cooperative spectrum sensing problem. Simulation results verify that the proposed algorithm outperforms existing spectrum sensing methods in sensing accuracy at low SNR while achieving a low false alarm probability.

2 Related Work

2.1 System Model

According to the idle or busy state of the PU, spectrum sensing can be viewed as a binary classification problem, i.e., deciding between the presence and the absence of the PU. Therefore, signal detection at the SU can be modeled as the following binary hypothesis test [14]:

\[X\left(n\right)=\begin{cases}U\left(n\right), & {H}_{0}\\ hS\left(n\right)+U\left(n\right), & {H}_{1}\end{cases}\]

where \(X\left(n\right)\) is the \(n\)-th received sample at the SU and \(U\left(n\right)\) is additive noise following the zero-mean circularly symmetric complex Gaussian (CSCG) distribution with variance \({\sigma }_{w}^{2}\). \(S\left(n\right)\) denotes the noise-free modulated signal transmitted by the PU, and \(h\) represents the channel gain between the PU and the SU; the channel is assumed to be constant, with a gain that follows a Gaussian distribution. \({H}_{0}\) is the hypothesis that the PU is absent, and \({H}_{1}\) is the hypothesis that the PU is present.
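As a point of reference for the two hypotheses, the following sketch simulates received samples under \({H}_{0}\) and \({H}_{1}\) and applies a classical energy detector. It is illustrative only: the QPSK-like PU waveform, the unit noise variance, the fixed gain `h`, the trial counts, and all function names are assumptions and are not taken from the paper.

```python
import cmath
import math
import random

def cscg_noise(n, variance, rng):
    """n samples of zero-mean circularly symmetric complex Gaussian noise."""
    s = math.sqrt(variance / 2.0)  # variance is split evenly between I and Q
    return [complex(rng.gauss(0.0, s), rng.gauss(0.0, s)) for _ in range(n)]

def received_signal(pu_present, n, h, noise_var, rng):
    """Draw X(n) under H1 (pu_present=True) or H0 (pu_present=False)."""
    u = cscg_noise(n, noise_var, rng)
    if not pu_present:
        return u  # H0: X(n) = U(n)
    # Assumed PU waveform: unit-power QPSK symbols (illustrative choice).
    s = [cmath.exp(1j * (math.pi / 4 + rng.randrange(4) * math.pi / 2))
         for _ in range(n)]
    return [h * s_k + u_k for s_k, u_k in zip(s, u)]  # H1: X(n) = h S(n) + U(n)

def energy_statistic(x):
    """Average received energy, the test statistic of an energy detector."""
    return sum(abs(v) ** 2 for v in x) / len(x)

rng = random.Random(0)
n, h, noise_var = 128, 1.0, 1.0  # |h|^2 / noise_var = 1, i.e. 0 dB SNR
trials = 1000
t_h0 = sorted(energy_statistic(received_signal(False, n, h, noise_var, rng))
              for _ in range(trials))
t_h1 = [energy_statistic(received_signal(True, n, h, noise_var, rng))
        for _ in range(trials)]

# Empirical threshold: the 900th smallest noise-only statistic, so that
# 100 of the 1000 noise-only trials exceed it.
threshold = t_h0[899]
p_f = sum(t > threshold for t in t_h0) / trials
p_d = sum(t > threshold for t in t_h1) / trials
```

At this comfortable SNR the energy detector separates the two hypotheses easily; lowering `h` or raising `noise_var` in the sketch shows how quickly the two distributions of the statistic start to overlap.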

Typically, two performance metrics are considered for spectrum sensing, namely the detection probability \({P}_{d}\) and the false alarm probability \({P}_{f}\). When a deep learning based approach is used to sense the spectrum, the output of the final activation layer is the probability of each class. For this binary classification problem, determining \(i=\mathrm{arg\,max}\left({P}_{i}\right)\) is equivalent to comparing the output probability under hypothesis \({H}_{1}\) with a threshold value \({\gamma }_{D}\). Thus, the performance metrics can be defined as

\[{P}_{d}=\mathrm{Pr}\left({z}^{1}>{\gamma }_{D}\mid {H}_{1}\right),\qquad {P}_{f}=\mathrm{Pr}\left({z}^{1}>{\gamma }_{D}\mid {H}_{0}\right),\]

where \({z}^{1}\) denotes the output probability of the neuron labeled “1”, which corresponds to the presence of the PU.
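The two metrics can be estimated empirically from the class-1 output probabilities of a trained classifier. The sketch below is a minimal illustration; the function names and the toy scores are made up for the example, and choosing \({\gamma }_{D}\) from noise-only scores is one possible way to target a given \({P}_{f}\), not necessarily the procedure used in the paper.

```python
def detection_metrics(z1, labels, gamma_d):
    """Empirical (P_d, P_f) when H1 is declared whenever z1 > gamma_d.

    z1: class-1 output probabilities; labels: 1 if the PU is present, else 0.
    """
    detected = [z > gamma_d for z in z1]
    under_h1 = [d for d, y in zip(detected, labels) if y == 1]
    under_h0 = [d for d, y in zip(detected, labels) if y == 0]
    return sum(under_h1) / len(under_h1), sum(under_h0) / len(under_h0)

def threshold_for_target_pf(z1_noise_only, target_pf):
    """Observed score exceeded by at most a target_pf share of noise-only scores."""
    ranked = sorted(z1_noise_only)
    allowed = min(int(target_pf * len(ranked)), len(ranked) - 1)
    return ranked[len(ranked) - allowed - 1]

# Toy scores, invented for illustration only.
z1 = [0.9, 0.8, 0.4, 0.7, 0.2, 0.6, 0.1, 0.3]
labels = [1, 1, 1, 1, 0, 0, 0, 0]

# gamma_d = 0.5 reproduces the arg-max decision of a two-class SoftMax output.
p_d, p_f = detection_metrics(z1, labels, gamma_d=0.5)

# Threshold calibrated on the noise-only scores for a target P_f of 25%.
noise_scores = [z for z, y in zip(z1, labels) if y == 0]
gamma_cal = threshold_for_target_pf(noise_scores, target_pf=0.25)
p_d_cal, p_f_cal = detection_metrics(z1, labels, gamma_cal)
```

Moving \({\gamma }_{D}\) trades \({P}_{d}\) against \({P}_{f}\), which is why comparisons between detectors are usually made at a matched false alarm probability.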

2.2 Noisy Activation Function

The activation function provides the nonlinear fitting and representation ability of a neural network. Commonly used nonlinear activation functions saturate, which can lead to vanishing gradients. Gülçehre et al. [13] proposed the Noisy Activation Function (NAF), which learns the scale of injected noise so that the gradients remain significant.

Gate-structured RNNs, including LSTM and GRU, were proposed to handle the long-term dependence of time series. Both use soft saturating nonlinear functions such as sigmoid and tanh to imitate the hard decisions of digital logic circuits. However, saturation causes the gradient to vanish when passing through a gate, and because these functions saturate only softly, the gates cannot be fully opened or closed, so hard decisions cannot be realized.

The hard decision problem can be solved by using a hard saturating nonlinear function. Taking the sigmoid and tanh functions as examples, their first-order Taylor expansions around 0 are

\[{u}^{s}\left(x\right)=0.25x+0.5,\qquad {u}^{t}\left(x\right)=x.\]

Clipping these linear approximations results in the hard saturating functions

\[\mathrm{hard}\_\mathrm{sigmoid}\left(x\right)=\mathrm{max}\left(\mathrm{min}\left({u}^{s}\left(x\right),1\right),0\right),\qquad \mathrm{hard}\_\mathrm{tanh}\left(x\right)=\mathrm{max}\left(\mathrm{min}\left({u}^{t}\left(x\right),1\right),-1\right).\]

The use of a hard saturating nonlinear function aggravates the vanishing gradient problem, because the gradient is exactly zero at saturation rather than merely converging to zero. However, introducing noise into the activation function, with a magnitude that varies with the degree of saturation, facilitates further stochastic exploration of the gradient. Consider the noisy activation function

\[\phi \left(x,\epsilon \right)=\alpha h\left(x\right)+\left(1-\alpha \right)u\left(x\right)+d\left(x\right)\sigma \left(x\right)\epsilon ,\]

where \(\alpha h\left(x\right)\) is the nonlinear part, \(\alpha \) is a constant hyperparameter that determines the noise and the direction of the slope, \(h\left(x\right)\) represents the initial activation function such as \(\mathrm{hard}\_\mathrm{sigmoid}\) or \(\mathrm{hard}\_\mathrm{tanh}\), \(\left(1-\alpha \right)u\left(x\right)\) is the linear part, and \(d\left(x\right)\sigma \left(x\right)\epsilon \) is the random noise part. Here \(d\left(x\right)=-\mathrm{sgn}\left(x\right)\mathrm{sgn}\left(1-\alpha \right)\) sets the direction of the noise, \(\sigma \left(x\right)=c{\left(g\left(p\Delta \right)-0.5\right)}^{2}\) sets its scale, and \(\epsilon \) is the random noise variable. Following [13], \(\Delta =h\left(x\right)-u\left(x\right)\) measures the degree of saturation, \(g\) is the sigmoid function, \(p\) is a learnable parameter, and \(c\) is a scaling hyperparameter.
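To make the roles of the individual terms concrete, the scalar sketch below evaluates a noisy activation of the form \(\alpha h\left(x\right)+\left(1-\alpha \right)u\left(x\right)+d\left(x\right)\sigma \left(x\right)\epsilon \). It rests on assumptions: the default values of `alpha`, `c`, and `p` are illustrative rather than the paper's settings, \(\epsilon \) is drawn from a standard normal distribution, and \(\Delta =h\left(x\right)-u\left(x\right)\) with \(g\) the sigmoid follows the formulation in [13].

```python
import math
import random

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def sign(x):
    return (x > 0) - (x < 0)

def u_sigmoid(x):
    """First-order Taylor expansion of sigmoid around 0."""
    return 0.25 * x + 0.5

def u_tanh(x):
    """First-order Taylor expansion of tanh around 0."""
    return x

def hard_sigmoid(x):
    return max(min(u_sigmoid(x), 1.0), 0.0)

def hard_tanh(x):
    return max(min(u_tanh(x), 1.0), -1.0)

def noisy_activation(x, h, u, alpha=1.15, c=1.0, p=1.0, rng=random):
    """alpha*h(x) + (1 - alpha)*u(x) + d(x)*sigma(x)*eps for a scalar x."""
    delta = h(x) - u(x)                          # zero while h is unsaturated
    sigma = c * (sigmoid(p * delta) - 0.5) ** 2  # noise scale grows with saturation
    d = -sign(x) * sign(1.0 - alpha)             # direction of the noise
    eps = rng.gauss(0.0, 1.0)                    # assumed: standard normal noise
    return alpha * h(x) + (1.0 - alpha) * u(x) + d * sigma * eps

rng = random.Random(0)
unsaturated = [noisy_activation(x, hard_tanh, u_tanh, rng=rng)
               for x in (-0.5, 0.0, 0.5)]
saturated = [noisy_activation(3.0, hard_tanh, u_tanh, rng=rng)
             for _ in range(10000)]
```

In the unsaturated region \(h\left(x\right)=u\left(x\right)\), so \(\sigma \left(x\right)=0\) and the function reduces to the noise-free linear response; noise appears only once the unit saturates, which is the behavior the text describes.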

3 Spectrum Sensing Based on Noisy Activation Deep Neural Network

In this paper, we present a spectrum sensing method based on a noisy activation deep neural network (NA-CGNet) for an SU in CR. The SU does not need a priori knowledge of the PU signal or the noise power; it uses only the raw received signal data to perform spectrum sensing. The flow of the proposed algorithm is shown in Fig. 1 and consists of offline training and online sensing. First, the received I/Q signals of the primary user are sampled and pre-processed with energy normalization, and the normalized dataset is fed into the proposed NA-CGNet model for training and validation to obtain a well-trained model. During online sensing, the SU's received-signal test set is fed into the trained NA-CGNet model to obtain the probabilities under \({H}_{1}\) and \({H}_{0}\), from which the spectrum sensing decision is made.

Figure 2 shows the structure of the proposed NA-CGNet. We treat the received signal as a 2 × 128 × 1 image and first process it with a CNN-based model: a 1D CNN extracts spatially relevant features from the input time-series signal to obtain sufficient local features. The 1D CNN consists of two convolutional blocks, each of which includes a Conv1D layer, a Dropout layer that regularizes the network to prevent overfitting and improve generalization, and a rectified linear unit (ReLU) activation function that adds nonlinearity between the layers. Since feature information can be lost as it passes through the network, a concatenation layer (CONCAT) combines the input of the first convolutional block with the output of the second convolutional block to compensate for this loss.

The 1D CNN performs well in local feature extraction, but it cannot capture the long-term dependencies of the input time series. A GRU is therefore introduced to extract the global temporal correlation features. Compared with LSTM, GRU has a simpler network structure and is easier to train, which considerably improves training efficiency while achieving comparable results. In the NA-GRU block, normal noise is added to the zero-gradient part of the activation function. The added noise grows with the degree of saturation, so that units in the saturated state are more likely to be randomly pushed back toward the unsaturated state, and the network can explore more during training convergence. As a result, the network is more robust to noise at low SNR and achieves good sensing performance.

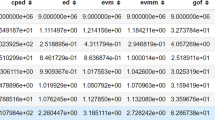

The output of the NA-GRU block is fed to the final classification network, which consists of two fully connected (FC) layers, each mapping its input features to a lower-dimensional vector. The first FC layer uses ReLU and the last FC layer uses SoftMax to obtain the classification result: the output is a two-element vector normalized by the SoftMax function, which represents the probabilities of the presence and absence of the PU. The network parameters are optimized with the Adam optimizer using categorical cross-entropy as the loss function, the initial learning rate is set to 0.0003, and the Dropout ratio is set to 0.2. The resulting network is called “NA-CGNet”, and the hyperparameters, confirmed by extensive cross-validation, are detailed in Table 1.
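The layer ordering described above can be sketched with the Keras functional API. This is a rough outline under several assumptions, not the authors' implementation: the filter count, kernel size, GRU width, and FC width are hypothetical placeholders because the Table 1 values are not reproduced here; the input is laid out as 128 time steps with 2 channels (I and Q); and a standard `GRU` layer stands in for the NA-GRU block, so the noisy activation itself is not implemented.

```python
import tensorflow as tf
from tensorflow.keras import layers

def conv_block(x, filters, kernel_size, dropout):
    """Conv1D -> Dropout -> ReLU, the order given for each convolutional block."""
    x = layers.Conv1D(filters, kernel_size, padding="same")(x)
    x = layers.Dropout(dropout)(x)
    return layers.ReLU()(x)

def build_cnn_gru(seq_len=128, filters=32, kernel_size=8,
                  gru_units=64, fc_units=64, dropout=0.2):
    """CNN-GRU classifier skeleton; all layer sizes are hypothetical."""
    inputs = layers.Input(shape=(seq_len, 2))  # assumed layout: (time, I/Q)
    block1 = conv_block(inputs, filters, kernel_size, dropout)
    block2 = conv_block(block1, filters, kernel_size, dropout)
    # CONCAT: input of the first block joined with the output of the second.
    merged = layers.Concatenate(axis=-1)([inputs, block2])
    # Standard GRU used as a stand-in for the NA-GRU block.
    features = layers.GRU(gru_units)(merged)
    hidden = layers.Dense(fc_units, activation="relu")(features)
    outputs = layers.Dense(2, activation="softmax")(hidden)
    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=3e-4),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model

model = build_cnn_gru()
```

Using `padding="same"` keeps the sequence length at 128 through both convolutional blocks, which is what allows the raw input and the block output to be concatenated along the channel axis.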

4 Experimental Analysis

4.1 Dataset Generation and Pre-processing

Based on the benchmark dataset RadioML2016.10a [16], which is widely used in modulation recognition tasks, eight digitally modulated signals are generated as positive samples. They consist of baseband I/Q signal vectors, and common practical radio channel effects are taken into account. According to the signal energy and the required SNR level, the corresponding AWGN following the CSCG distribution is generated as negative samples. The SNR varies from −20 dB to 5 dB in 1 dB increments. For each modulation type, 1000 positive (modulated signal) samples and 1000 noise samples are generated at each SNR. The whole dataset is divided into three sets with a split ratio of 3:1:1. The dataset parameters are detailed in Table 2.
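The noise-sample generation and the 3:1:1 split can be illustrated as follows. This is a minimal sketch with assumed details: the complex tone standing in for a modulated signal, the function names, and the reading of the three sets as training, validation, and test sets are illustrative and not taken from the paper.

```python
import math
import random

def signal_power(x):
    """Mean power of a complex sequence."""
    return sum(abs(v) ** 2 for v in x) / len(x)

def noise_for_snr(x, snr_db, rng):
    """CSCG noise whose power lies snr_db below the power of signal x."""
    noise_var = signal_power(x) / (10.0 ** (snr_db / 10.0))
    s = math.sqrt(noise_var / 2.0)  # variance split evenly between I and Q
    return [complex(rng.gauss(0.0, s), rng.gauss(0.0, s)) for _ in x]

def split_3_1_1(samples, rng):
    """Shuffle and split into three parts with a 3:1:1 ratio."""
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_first = len(shuffled) * 3 // 5
    n_second = len(shuffled) // 5
    return (shuffled[:n_first],
            shuffled[n_first:n_first + n_second],
            shuffled[n_first + n_second:])

rng = random.Random(0)

# Stand-in for a modulated signal: a unit-power complex tone (assumption).
tone = [complex(math.cos(0.1 * math.pi * k), math.sin(0.1 * math.pi * k))
        for k in range(4096)]
noise = noise_for_snr(tone, snr_db=-10.0, rng=rng)
measured_snr_db = 10.0 * math.log10(signal_power(tone) / signal_power(noise))

# 1000 positive and 1000 negative sample indices at one SNR point.
train, val, test = split_3_1_1(range(2000), rng)
```

Scaling the noise variance from the measured signal power, rather than fixing it, keeps the positive and negative samples at the intended SNR regardless of the amplitude of each modulation type.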

To minimize the effect of signal energy differences and to better expose the modulation structure of the signal, the received time-domain complex signal \({X}_{in}=[{X}_{real};{X}_{imag}]\) is pre-processed with energy normalization before network training. It works as follows.

where \({X}_{i}\) denotes the \(i\)-th sequence of the sampled signal and \(abs({X}_{i})\) denotes the absolute value of \({X}_{i}\).
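One common form of energy normalization scales each received sequence to unit total energy using the magnitudes \(abs({X}_{i})\). The sketch below shows that form; treating it as the normalization used here is an assumption, since the exact expression is not reproduced in this text, and the function names are illustrative.

```python
import math

def energy_normalize(x):
    """Scale a complex sequence to unit total energy (assumed normalization)."""
    energy = sum(abs(v) ** 2 for v in x)
    if energy == 0.0:
        return list(x)  # all-zero input is returned unchanged
    scale = 1.0 / math.sqrt(energy)
    return [v * scale for v in x]

def to_iq_rows(x):
    """Stack real and imaginary parts as X_in = [X_real; X_imag]."""
    return [[v.real for v in x], [v.imag for v in x]]

samples = [3 + 4j, 0j, 1j]
normalized = energy_normalize(samples)
x_in = to_iq_rows(normalized)
```

After this step the total energy of every input is the same, so a classifier cannot rely on received power alone and has to use the structure of the waveform.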

4.2 Experimental Configuration and Network Training

The experiments use TensorFlow as the framework and Python as the programming language. The operating system is Ubuntu 18.04. GNU Radio is a free and open-source software development kit that provides signal processing modules for implementing software radio and for wireless communication research.

Because a constant false alarm probability \({P}_{f}\) needs to be set, a two-stage training strategy is used. In the first stage, the model is trained with early stopping until it converges, so that both validation loss and accuracy are stable. In the second stage, a stopping interval for \({P}_{f}\) is set first, and training stops once \({P}_{f}\) falls within this interval. The two-stage strategy makes it possible to control the detection performance to some extent by adjusting the preset stopping interval.
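The control flow of such a two-stage strategy can be sketched independently of any framework. In the sketch below, `train_one_epoch` and `evaluate` are hypothetical callables supplied by the caller, and the patience value, epoch limit, and the toy loss and \({P}_{f}\) sequences are invented for illustration; the paper does not state these details.

```python
def two_stage_training(train_one_epoch, evaluate, pf_interval,
                       patience=5, max_epochs=200):
    """Stage 1: early stopping on validation loss.
    Stage 2: keep training until P_f lies inside pf_interval.

    train_one_epoch() runs one epoch; evaluate() returns (val_loss, p_f).
    Returns (epochs_run, last_p_f, stopped_inside_interval).
    """
    low, high = pf_interval
    best_loss, stale, epochs, p_f = float("inf"), 0, 0, None

    # Stage 1: stop after `patience` epochs without a better validation loss.
    while epochs < max_epochs and stale < patience:
        train_one_epoch()
        epochs += 1
        val_loss, p_f = evaluate()
        if val_loss < best_loss:
            best_loss, stale = val_loss, 0
        else:
            stale += 1

    # Stage 2: continue until the measured P_f falls into the preset interval.
    while epochs < max_epochs and not (p_f is not None and low <= p_f <= high):
        train_one_epoch()
        epochs += 1
        _, p_f = evaluate()

    inside = p_f is not None and low <= p_f <= high
    return epochs, p_f, inside

# Toy stand-ins for a real training loop (values invented for illustration).
state = {"epoch": 0}
losses = [0.8, 0.6, 0.4, 0.4, 0.4, 0.4, 0.4, 0.4]
pf_values = [0.18, 0.16, 0.14, 0.12, 0.10, 0.09, 0.07, 0.06]

def fake_train_one_epoch():
    state["epoch"] += 1

def fake_evaluate():
    i = min(state["epoch"], len(losses)) - 1
    return losses[i], pf_values[i]

result = two_stage_training(fake_train_one_epoch, fake_evaluate,
                            pf_interval=(0.05, 0.08), patience=2)
```

Narrowing or shifting `pf_interval` is the knob that corresponds to "adjusting the preset stopping interval" in the text: it fixes the operating point at which the detection probability is then reported.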

4.3 Simulation Results

In this section, extensive simulation results are provided to demonstrate the performance of the proposed model. For GFSK modulated signals with a sample length of 128, Fig. 3 compares the sensing accuracy of the CNN, LSTM, and DetectNet models with that of the proposed NA-CGNet at different SNRs. NA-CGNet provides better sensing accuracy than the other networks, and the improvement is most evident at low SNR. NA-CGNet achieves a sensing accuracy of \({P}_{d}\) = 0.5239 at SNR = −15 dB, which is 0.042 higher than DetectNet, while ensuring a lower \({P}_{f}\) = 7.06%, and it has the highest sensing accuracy \({P}_{d}\) among the compared models at SNR = −20 dB. This is because the noisy activation function added to the GRU block enables further stochastic exploration when the network is saturated, which makes the model more robust to noise in a low SNR environment and improves its sensing performance accordingly.

The generalization of the proposed NA-CGNet model is demonstrated by varying the modulation of the PU signal. Figure 4 compares the sensing performance of NA-CGNet on eight digital modulation schemes with a sample length of 128 and SNRs ranging from −20 dB to 5 dB. The difference in sensing performance between the modulated signals is negligible, which implies that the performance of NA-CGNet is insensitive to the modulation order. In addition, the difference in sensing performance among modulations of the same type, such as BPSK, QPSK, and 8PSK, is very small, which indicates the robustness of the proposed NA-CGNet model to different modulation schemes.

5 Conclusion

In this paper, a deep neural network model for spectrum sensing in low SNR environments is proposed. The 1D CNN and the GRU network with NAF capture local features and global correlations of the SU's received signal, respectively, while the concatenation layer helps compensate for feature loss. The noisy activation improves the noise robustness of the network, which enables the model to maintain good sensing performance at low SNR. Simulation results show that the proposed model performs significantly better than CNN, LSTM, and DetectNet. In addition, the proposed NA-CGNet model is applicable to various modulation schemes, such as GFSK, BPSK, QPSK, and QAM16, and is robust across them. Finally, the proposed NA-CGNet model achieves high \({P}_{d}\) and low \({P}_{f}\) simultaneously in low SNR environments.

References

1. Arjoune, Y., Kaabouch, N.: A comprehensive survey on spectrum sensing in cognitive radio networks: recent advances, new challenges, and future research directions. Sensors 19(1), 126 (2019)

2. Yucek, T., Arslan, H.: A survey of spectrum sensing algorithms for cognitive radio applications. IEEE Commun. Surv. Tutor. 11(1), 116–130 (2009)

3. Upadhye, A., Saravanan, P., Chandra, S.S., Gurugopinath, S.: A survey on machine learning algorithms for applications in cognitive radio networks. In: 2021 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), pp. 1–6 (2021)

4. Awin, F., Abdel-Raheem, E., Tepe, K.: Blind spectrum sensing approaches for interweaved cognitive radio system: a tutorial and short course. IEEE Commun. Surv. Tutor. 21(1), 238–259 (2019)

5. LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

6. Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

7. Zheng, S., Chen, S., Qi, P., Zhou, H., Yang, X.: Spectrum sensing based on deep learning classification for cognitive radios. China Commun. 17(2), 138–148 (2020)

8. Xie, J., Liu, C., Liang, Y.C., Fang, J.: Activity pattern aware spectrum sensing: a CNN-based deep learning approach. IEEE Commun. Lett. 23(6), 1025–1028 (2019)

9. Soni, B., Patel, D.K., Lopez-Benitez, M.: Long short-term memory based spectrum sensing scheme for cognitive radio using primary activity statistics. IEEE Access 8, 97437–97451 (2020)

10. Gao, J., Yi, X., Zhong, C., Chen, X., Zhang, Z.: Deep learning for spectrum sensing. IEEE Wireless Commun. Lett. 8(6), 1727–1730 (2019)

11. Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

12. Cho, K., et al.: Learning phrase representations using RNN encoder-decoder for statistical machine translation (2014). https://arxiv.org/abs/1406.1078v3. Accessed 14 May 2022

13. Gülçehre, Ç., Moczulski, M., Denil, M., Bengio, Y.: Noisy activation functions. In: ICML (2016)

14. Quan, Z., Cui, S., Sayed, A.H., Poor, H.V.: Optimal multiband joint detection for spectrum sensing in cognitive radio networks. IEEE Trans. Signal Process. 57(3), 1128–1140 (2009)

15. Kiranyaz, S., Avci, O., Abdeljaber, O., Ince, T., Gabbouj, M., Inman, D.J.: 1D convolutional neural networks and applications: a survey. Mech. Syst. Signal Process. 151, 107398 (2021)

16. O’Shea, T.J., West, N.: Radio machine learning dataset generation with GNU Radio. In: Proceedings of the GNU Radio Conference (2016). https://xueshu.baidu.com/usercenter/paper/show?paperid=1b963c825ef5ed29011305f8305cc727&site=xueshu_se&hitarticle=1. Accessed 23 Jun 2022

Acknowledgment

This work was supported by the National Natural Science Foundation of China (61761034) and the Natural Science Foundation of Inner Mongolia (2020MS06022).

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhu, X., Zhang, X., Wang, S. (2023). Research on Spectrum Sensing Algorithm Based on Deep Neural Network. In: Liang, Q., Wang, W., Liu, X., Na, Z., Zhang, B. (eds) Communications, Signal Processing, and Systems. CSPS 2022. Lecture Notes in Electrical Engineering, vol 873. Springer, Singapore. https://doi.org/10.1007/978-981-99-1260-5_31

DOI: https://doi.org/10.1007/978-981-99-1260-5_31

Publisher Name: Springer, Singapore

Print ISBN: 978-981-99-1259-9

Online ISBN: 978-981-99-1260-5

eBook Packages: Engineering, Engineering (R0)