Abstract

Moving target detection is widely used in the field of intelligent monitoring. This paper proposes an Improved Frame Difference Method Fusion Horn-Schunck Optical Flow Method (IFHS) algorithm to detect moving targets in video surveillance. First, a three-frame difference method is performed on the image to obtain a difference image. Second, the differential image is tracked according to the HS optical flow method to obtain an optical flow vector. Finally, the moving target is obtained by fusing the optical flow vector information of the two difference results. The problem of incomplete contours of moving targets detected in video surveillance by the separate optical flow method and the three-frame difference method is solved. The experimental simulation and experimental applications in different scenarios prove the robustness and accuracy of the method.

This work was supported by National Natural Science Foundation of China (Grant No. 61702247) and the Natural Science Foundation of Liaoning Province of China (Grant No. 2019-ZD-0052).

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

With the development of artificial intelligence and the emergence of intelligent robots, people will rely on machines to complete a large number of complex and tedious tasks. Computer vision is a study of related theories and technologies to try to build artificial intelligence systems that acquire “information” from images or multidimensional data. Moving object detection is a branch of image processing and computer vision. It refers to the process of reducing redundant information in time and space in video by computer vision to effectively extract objects that have changed spatial position. It has great significance in theory and practice, and has been concerned by scholars at home and abroad for a long time.

There are three main methods of traditional moving object detection: the first is the inter-frame difference method [1,2,3,4]. It subtracts the pixel values of two images in two adjacent frames or a few frames apart in the video stream, and performs thresholding on the subtracted image to extract the moving areas in the image. This method is more robust, but the detected moving target contour is incomplete, which is suitable for scenes with slow background changes and fast object movement.

The second is the background subtraction method [5, 6]. Background subtraction is a method for detecting moving objects by comparing the current frame in the image sequence with the background reference model. Its performance depends on the background modeling technology used. Its disadvantage is that it is particularly sensitive to dynamic background changes and external unrelated events.

The last method is optical flow method [7,8,9]. In the optical flow method, the optical flow of each pixel is calculated by using the optical flow. Then the threshold value segmentation is performed on the optical flow field to distinguish the foreground and background to obtain the moving target area. Optical flow not only carries the motion information of moving objects, but also carries rich information about the three-dimensional structure of the scene. It can detect moving objects without knowing any information about the scene. This method is less robust and susceptible to light, and the basic assumptions are difficult to meet.

The above methods can be applied to sports scenes in most fields. According to the movement background, the detection of moving objects can be divided into two cases: detection in static scenes and dynamic scenes. A static scene means that the camera and the motion scene remain relatively still. A dynamic scene is a sequence of video images captured by a camera mounted on a moving object. The above-mentioned methods have developed very rapidly in the past few years, and there are also some theoretical applications worth improving in various application scenarios. The main contribution of this paper is to propose an IFHS algorithm to improve the traditional algorithm and solve the following two shortcomings: The first is that the optical flow method alone is harsh and has poor robustness. The second is that the traditional frame-to-frame difference method has incomplete target contours for fast moving targets. This algorithm effectively improves the accuracy and efficiency of moving target detection in video surveillance. This paper is detecting video surveillance information and is therefore performed in a static scenario.

2 Inter-frame Difference Method

2.1 Three-Frame Difference Method

Commonly used frame difference methods are two-frame difference method [10, 11] and three-frame difference method [12, 13]. The result of the two-frame difference method may appear that the overlapping part of the target in the two-frame image is not easy to detect, and the center of the moving target is prone to voids. For slow moving targets, missed tests may occur. The three-frame difference method can overcome the double shadow problem that is easily generated by the difference between frames, and also suppresses the noise to a certain extent [14]. Therefore, this paper chooses the three-frame difference method. Figure 1 is a comparison of the matrix difference method of the first frame and the 26th frame.

The three-frame difference method is to change two adjacent frames in a video sequence to obtain characteristic information of a moving target. This method first uses three adjacent frames as a group to perform the difference between two adjacent frames.

Next, Binarize the difference results are binarized. In order to remove isolated noise points in the image and holes generated in the target, it is necessary to perform a morphological filtering process on the obtained binary image, to perform a closing operation [15]. After the image is enlarged and corroded, it is a logical AND operation. Therefore, the area of the moving target can be better obtained.

2.2 The Process of Three-Frame Difference Method

-

1)

Let the image sequence of n frames be expressed as:

\( \left\{ {f0(x,y), \ldots ,\,f\left( k \right)\left( {x,y} \right), \ldots ,\,f\left( {n - 1} \right)\left( {x,y} \right)} \right\} \)

\( f\left( k \right)\left( {x,y} \right) \) represents the k-th frame of the video sequence. Select three consecutive images in a video sequence:

$$ f\left( {k - 1} \right)\left( {x,y} \right),\;f\left( k \right)\left( {x,y} \right),\;f\left( {k + 1} \right)\left( {x,y} \right) $$

Calculate the difference between two adjacent frames of images, the binarization results are expressed by Eqs. (1) and (2)

-

2)

For the two difference images obtained, by setting an appropriate threshold the binarized images \( b_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) \) and \( b_{{\left( {k,k + 1} \right)}} \left( {x,y} \right) \) can be obtained as shown in Eqs. (3) and (4).

$$ b_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) = \left\{ {\begin{array}{*{20}c} 1 & {d_{{\left\{ {k - 1,k} \right\}}} \left( {x,y} \right) \ge T} \\ 0 & {d_{{\left\{ {k - 1,k} \right\}}} \left( {x,y} \right) < T} \\ \end{array} } \right. $$(3)$$ b_{{\left( {k,k + 1} \right)}} \left( {x,y} \right) = \left\{ {\begin{array}{*{20}c} 1 & {d_{{\left\{ {k,k + 1} \right\}}} \left( {x,y} \right) \ge T} \\ 0 & {d_{{\left\{ {k,k + 1} \right\}}} \left( {x,y} \right) < T} \\ \end{array} } \right. $$(4) -

3)

The closed operation is related to the expansion and corrosion operations. The closed operation can be used to fill small cracks and discontinuities in the foreground object. And small holes, while the overall position and shape are unchanged. Closing is the dual operation of opening and is denoted by A•B; it is generated by dilating A by B, followed by erosion by B with Eq. 5.

$$ A \bullet S = \left( {A \oplus S} \right)\Theta S $$(5) -

4)

For each pixel \( \left( {x,y} \right) \), the above two binary images \( b_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) \) with \( b_{{\left( {k,k + 1} \right)}} \left( {x,y} \right) \) perform logical and operation after closed operation to get \( B_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) \). The result of the logical AND operation is represented by Eq. 6.

$$ B_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) = b_{{\left( {k - 1,k} \right)}} \left( {x,y} \right) \otimes b_{{\left( {k,k + 1} \right)}} \left( {x,y} \right) $$(6)

3 Optical Flow Method

3.1 Optical Flow Field

When the human eye observes a moving object, the scene of the object forms a series of continuously changing images on the retina of the human eye. This series of continuously changing information continuously “Flow” through the retina (the image plane), like a light “Flow”, so called optical flow. Optical flow expresses the change of the image. Because it contains information about the movement of the target, it can be used by the observer to determine the movement of the target. In space, motion can be described by a motion field. However, on an image plane, the motion of an object is often reflected by the gray distribution of different images in the image sequence. Therefore, the motion field in space is transferred to the image and is expressed as optical flow field [16, 17].

The movement of a two-dimensional image is a projection of the three-dimensional object movement on the image plane relative to the observer. Ordered images can estimate the instantaneous image rate or discrete image transfer of two-dimensional images. The projection of 3D motion in a 2D plane is shown in Fig. 2.

3.2 Horn-Schunck Optical Flow Method

There are two types of constraints for the optical flow method:

-

1)

The brightness does not change.

-

2)

Time continuous or motion is “small motion”.

Let \( I(x,y,t) \) be the illuminance of the image point at time \( t + \delta t \). If \( u\left( {x,y} \right) \) and \( v\left( {x,y} \right) \) are the \( x \) and \( y \) components of the optical flow at this point, suppose that point moves to \( \left( {x + \delta x,y + \delta y} \right) \) and the brightness remains unchanged: \( \delta x = u\delta t,\delta y = v\delta t \).

Equation (7) is a constraint condition [18, 19]. This constraint cannot uniquely solve for \( u \) and \( v \), so other constraints are needed, such as constraints such as continuity everywhere in the sports field. If the brightness changes smoothly through \( x,y,t \) the left side of Taylor series Eq. (7) can be extended to Eq. (8).

Where \( \varepsilon \) is the second and higher order terms for \( \delta x \), \( \delta y \), \( \delta {\text{t}} \). The \( I(x,y,t) \) on both sides of the above formula cancel each other, divide both sides by \( \delta {\text{t}} \), and take the limit \( \delta {\text{t}} \to 0 \) to get the Eq. (9).

Equation (9) is actually an expansion of Eq. (10).

Assume:

the relationship between the spatial and temporal gradients and the velocity components is obtained from Eq. (9) as shown in Eq. (11).

Finally, the constraint equation of light is shown in the following Eq. (12).

Horn-Schunck optical flow [20,21,22,23] algorithm introduces global smoothing constraints to do motion estimation in images. The motion field satisfies both the optical flow constraint equation and the global smoothness. According to the optical flow constraint equation, the optical flow error is shown in the following Eq. (13).

among them \( {\text{x = }}\left( {\text{x,y}} \right)^{T} \) For smooth changing optical flow, the sum of the squares of the velocity components is shown in Eq. (14).

Combining the smoothness measure with a weighted differential constraint measurement, where the weighting parameters control the balance between the image flow constraint differential and the smoothness differential:

The parameter \( \alpha \) in the equation is a parameter that controls the smoothness, and the value of \( \alpha \) is proportional to the smoothness. Use the variation method to transform the above equation into a pair of partial differential equations:

The finite difference method is used to replace the Laplace in each equation with a weighted sum of the local neighborhood image flow vectors, and iterative methods are used to solve the two difference Eqs. (16) and (17).

The Horn-Schunck optical flow method uses the minimization function of (18) to find the differential of E with respect to u and v to obtain the optical flow vectors (21) and (22).

Find:

\( \bar{u} \) and \( \bar{v} \) are the averages in their neighborhoods, respectively. From the above two equations, u and v can be found. The optical flow is

The Horn-Schunck optical flow method is also called a differential method because it is a gradient-based optical flow method. This type of method is based on the assumption that the brightness of the image is constant. Calculate the two-dimensional velocity field. Therefore, the direction and contour of the moving object will be detected more completely.

The comparison between the detection results of the HS optical flow method and the original is shown in Fig. 3. The detected effect is affected by lighting, so moving targets are not particularly noticeable.

4 IFHS Algorithm

The IFHS (Improved Frame Difference Method Fusion HS Optical Flow Method) algorithm is an improvement that combines the improved three-frame difference method with the HS optical flow method to detect motion information in video surveillance.

4.1 Mathematical Morphological Filtering and Denoising

Digital images are subject to noise pollution during the process of acquiring and transmitting, resulting in some black and white point noise, as shown in Fig. 4. Therefore, we need to perform a neighborhood operation on the pixels of the image by filtering to achieve the effect of removing noise. In the process of mathematical morphological image denoising [24], the filtering denoising effect can be improved by appropriately selecting the new installation and dimension of the structural elements. In the cascading process of multiple structural elements, the shape and dimensions of the structural elements need to be considered.

Mathematical morphology processes images by using certain structural elements to measure and extract the corresponding shapes in the images, and uses set theory to achieve the goal of analyzing and identifying the images, while maintaining the original information in the images. Assume that the structural elements are \( A_{mn} \), \( n \) represents a shape sequence, and \( m \) represents a dimensional sequence. The process of \( A_{mn} = \{ A_{11,} A_{12} , \ldots A_{21} , \ldots ,A_{nm} \} \) is to digitally filter and denoise digital images. According to the characteristics of noise, structural elements from small to large dimensions are adopted, and more geometric features of digital images are maintained. Therefore, a cascade filter is selected. The elements of the same shape structure are used to filter the image according to the dimensional arrangement from small to large. The series filtering composed of structural elements of different shapes is then connected in parallel. Finally, calculate and plot the Peak Signal to Noise Ratio (PSNR) value curve to show the denoising effect. PSNR is the ratio of the maximum signal power to the signal noise power. The larger the PSNR value between the two images, the more similar it is. The figure below shows the original image and the noise image as shown in Fig. 4. The effect of four series noise reduction is shown in Fig. 5.

The parallel denoising effect is shown in Fig. 6. The image of the denoising effect by the PSNR curve is shown in Fig. 7.

It is proved by experiments that de-noising through series and parallel filters is better than series filtering alone. So we use series and parallel denoising.

4.2 Improved Three-Frame Difference Method

Aiming at the shortcomings of the traditional frame difference method, this paper uses series-parallel filtering and three-frame difference method to perform image processing. The specific process is shown in Fig. 8.

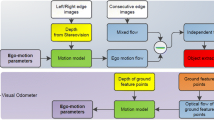

4.3 Moving Object Detection with IFHS Algorithm

Differentiate the k-frame images in the input video sequence with their k − 1 and k + 1 frames, respectively, and use the HS optical flow method to calculate the optical flow vectors of the two images; then set a threshold to remove the length less than this threshold optical flow vector; initialize k = 3; When a new frame enters, perform a difference operation on the previous frame and the next frame, use the appropriate threshold to binarize the new difference image and the previous difference image, and then Perform logical AND operation on the two binarized images, and iteratively accumulate the obtained moving targets in sequence. The specific operation is shown in Fig. 9.

In this paper, the algorithm operates on a computer with Inter i5-9300H,8g memory and 2.4 GHz. It is implemented by Matlab R2016a and its own toolbox. The data set involved in the algorithm is publicly provided on the Internet. The URL is as follows: http://cmp.felk.cvut.cz/data/motorway/. The “Toyota Motor Europe (TME) Motorway Dataset” is composed by 28 clips for a total of approximately 27 min (30000 + frames) with vehicle annotation. Annotation was semi-automatically generated using laser-scanner data. Image sequences were selected from acquisition made in North Italian motorways in December 2011. This selection includes variable traffic situations, number of lanes, road curvature, and lighting, covering most of the conditions present in the complete acquisition. The dataset comprises: Image acquisition: stereo, 20 Hz frequency, 1024 × 768 grayscale losslessly compressed images, 32° horizontal field of view, bayer coded color information (in OpenCV use CV_BayerGB2GRAY and CV_BayerGB2BGR color conversion codes; please note that left camera was rotated upside down, convert to color/grayscale BEFORE flipping the image). A checkboard calibration sequence is made available. Laser-scanner generated vehicle annotation and classification (car/truck). A software evaluation toolkit (C ++ source code). The data provided is timestamped, and includes extrinsic calibration.

The dataset has been divided in two sub-sets depending on lighting condition, named “daylight” (although with objects casting shadows on the road) and “sunset” (facing the sun or at dusk). For each clip, 5 s of preceding acquisition are provided, to allow the algorithm stabilizing before starting the actual performance measurement. The data has been acquired in cooperation with VisLab (University of Parma, Italy), using the BRAiVE test vehicle.

In this paper, in order to fuse the advantages of various algorithms together to make up for their shortcomings, we combine the improved three-frame difference method and the HS optical flow method into a new method (Improved Frame Difference Method Fusion Horn-Schunck Optical Flow) to detect vehicle motion information in the video. For the sake of comparison, we take frame 1 and frame 26 as examples. It is simulated on the running software of matlab. After the video is subjected to the three-frame difference method, the difference result is tracked and detected by the HS optical flow method. First calculate the magnitude of the optical flow vector. Because optical flow is a complex matrix, you need to multiply by conjugate. Second, you need to calculate the average value of the optical flow amplitude to represent the speed threshold. Finally, this paper use threshold segmentation to extract moving objects, next filter to remove noise. The mathematical morphological filtering denoising was mentioned above. After multiple comparisons in the experiment, as shown in the detection result of Fig. 7, the comparison of the PSNR curves of the series filtering denoising and the parallel filtering denoising. This paper uses series, parallel, and filtering to denoise, and Xia compensates for the loss of the image during the transmission process, so as to make the detection target contour clearer in the following.

Remove the road by morphological erosion, and fill the car hole by morphological closure. In this way, the vehicle information in the video can be more completely retrieved. The image results of the specific method combined detection are shown in Fig. 10.

4.4 Vehicle Flow

This paper has an image of 120 × 160 pixels. We add a white virtual bar on its 30th line to make a “ruler” to measure the content. Then we also need to add a car border to show the tracked and show the tracked car. Because the vehicle needs to have two frames to pass the frame, in order to clearly show the frame effect, we take the middle frame 6 and 22 as an example. At the same time, we called the toolbox in matlab to calculate the area and border of the car. An image with a white ruler and a border in the image is shown in Fig. 11. The border of the car passed the white bar. We judged that it was passing by the car.

Next, you need to calculate the percentage of the area of the car to the area of the drawn border. After many experimental prediction tests, we found that more than 40% of the area of the frame is a vehicle. As shown in the figure, we determine whether the vehicle is a vehicle based on whether the percentage is greater than 40%. This paper uses the white bar as a ruler to count the number of vehicles. This experiment uses highway video information, so no one will cross the road. If there is a pedestrian on the road, the new algorithm will draw a border around the detected object and the percentage of its area detected will not reach 40%. White bars are not counted. In order to make the identification clearer, the parameters should be adjusted from 40% to 45% in the experiment, so that the car can be completely distinguished. The detected image is shown in Fig. 12. Take the images of frames 22 and 43 as examples in this paper.

This paper selects a certain number of frames based on the speed of the vehicle and considers the vehicle to be in the process of moving from video surveillance to entry. Here in this video, the selected frame number is 20. This paper takes the maximum value of the vehicle count in 10 frames out of 20 frames as the number of vehicles we think is currently passing through the road section, and then add up to get the video traffic volume.

Due to the three-frame difference method, the video image has a total of 118 frames, with 20 frames as a part, and the result is rounded up. Then six partial loops are accumulated to get the number of vehicles in the video. The specific counting results are shown in Table 1.

As can be seen from Table 1, each process of vehicle technology counts a total of 6 times, and the video passes through a total of 10 vehicles.

5 Evaluation

In order to test the effectiveness of the algorithm in this paper, the F-measure method [25] is used to evaluate the effectiveness of the proposed new moving target detection algorithm. F-Measure is the \( tp \) (true positives) detected by Precision and Recall weighted harmonic average \( fp \) (false positives) \( fn \) (false negatives) \( tn \) (true negatives).

\( f1 \) combines the results of \( P \) and \( R \).When F1 is high, the comparison shows that the experimental method is ideal. The following Table 2 compares the experimental results.

From the standpoint of video alone, this article uses four videos for comparison. From the results of separate tests, it can still be proven that the accuracy of the algorithm in this paper is higher than the accuracy of the two algorithms alone.

6 Conclusion and Future Work

After testing in this paper, the algorithm has obvious advantages in detecting vehicle information in video than the two algorithms alone, which can prove the accuracy and robustness of the algorithm. The experimental results show that the IFHS method has a good guiding effect on vehicle flow detection. The test results in Tables 1 and 2 show that the algorithm can well compensate for image holes between frames. The matching optical flow information obtains the movement trajectory to achieve the positioning algorithm of the vehicle during the movement. It can reduce the number of false recognitions, speed up the detection speed, reduce the complexity of the computer, meet the needs of traffic flow statistics, and ensure the accuracy of the number of statistics. Through several comparative iterative experiments, we find that the method is both effective and stable.

In the future, this algorithm can intelligently monitor the information in video surveillance, saving human resources. In order to provide basic guarantees for intelligent transportation systems. In the future, research on monitoring and identifying vehicle information at intersections in real time and managing traffic lights at intersections may continue. If a large amount of traffic is detected, the changes in lighting will accelerate, otherwise it will slow down, thereby promoting the development and popularization of intelligent transportation systems.

References

Zhang, T., Jiang, P., Zhang, M.: Inter-frame video image generation based on spatial continuity generative adversarial networks. Signal Image Video Process. 13(8), 1487–1494 (2019). https://doi.org/10.1007/s11760-019-01499-0

Zhao, D.-N., Wang, R.-K., Lu, Z.-M.: Inter-frame passive-blind forgery detection for video shot based on similarity analysis. Multimed. Tools Appl. 77(19), 25389–25408 (2018)

Li, H., Xiong, Z., Shi, Z.: HSVCNN: CNN-based hyperspectral reconstruction from RGB videos. In: 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE (2018)

He, Z.-J., Yang, C.-L., Tang, R.-D.: Research on structural similarity based inter-frame group sparse representation for compressed video sensing. Acta Electron. Sinica 46(3), 544–553 (2018)

Mabrouk, L., Huet, S., Houzet, D.: Efficient adaptive load balancing approach for compressive background subtraction algorithm on heterogeneous CPU–GPU platforms. J. Real-Time Image Process. (1) (2019)

Smeureanu, S., Ionescu, R.T.: Real-time deep learning method for abandoned luggage detection in video. In: European Signal Processing Conference (2018)

Wu, D., Hao, H., Wang, L.: Intelligent monitoring system based on Hi3531 for recognition of human falling action. In: 2018 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS) (2018)

Shah, N., Píngale, A., Patel, V.: An adaptive background subtraction scheme for video surveillance systems. In: IEEE International Symposium on Signal Processing & Information Technology. IEEE (2018)

Chen, H., Nedzvedz, A., Nedzvedz, O.: Correction to: wound healing monitoring by video sequence using integral optical flow. J. Appl. Spectrosc. 86, 1146 (2020)

Li, Y., Jia, H., Chen, S.: Single-shot time-gated fluorescence lifetime imaging using three-frame images. Opt. Express 26(14), 17936 (2018)

Li, B., Zan, S., Jing, Q., et al.: Detection and tracking method for maneuvering targets using improved gradient-based optical flow field. In: 2019 Chinese Control And Decision Conference (CCDC) (2019)

Mitiche, A., Mansouri, A.-R.: On convergence of the Horn and Schunck optical-flow estimation method. IEEE Trans. Image Process. 13(6), 848–852 (2004)

Han, X., Gao, Y., Lu, Z., et al.: Research on moving object detection algorithm based on improved three frame difference method and optical flow (2016)

Zhang, M., Zhou, X., Wang, C.: Noise suppression threshold channel estimation method using RC and SRRC filters in OFDM systems. In: 2018 IEEE 18th International Conference on Communication Technology (ICCT). IEEE (2018)

Batabyal, T., Acton, S.T.: Elastic Path2Path: automated morphological classification of neurons by elastic path matching. In: 2018 25th IEEE International Conference on Image Processing (ICIP). IEEE (2018)

Hu, X., Li, D., Zeng, X., et al.: A visualization method of facial expression deformation based on pore-scale facial feature matching. In: 2019 Chinese Control Conference (CCC). IEEE (2019)

Petrou, Z.I., Xian, Y., Tian, Y.: Towards breaking the spatial resolution barriers: an optical flow and super-resolution approach for sea ice motion estimation. Isprs J. Photogram. Remote Sens. 138, 164–175 (2018)

Hur, J., Roth, S.: Iterative residual refinement for joint optical flow and occlusion estimation (2019)

Xiang, X., Zhai, M., Zhang, R., et al.: A CNNs-based method for optical flow estimation with prior constraints and stacked U-Nets. Neural Comput. Appl. (2018)

Wang, H., Zheng, J., Pei, B.: A robust optical flow calculation method based on wavelet. Dianzi Yu Xinxi Xuebao/J. Electron. Inf. Technol. 40(12), 2945–2953 (2018)

Leila, C., Salah, C., Karima, B.: Fast motion estimation algorithm based on geometric wavelet transform. Int. J. Wavelets Multiresolut. Inf. Process. 17(2) (2019)

Peng, Y., Chen, Z., Wu, Q.M.J., et al.: Traffic flow detection and statistics via improved optical flow and connected region analysis. Signal Image Video Process. 12(1), 99–105 (2018)

Ali, I., Alsbou, N., Jaskowiak, J., et al.: Quantitative evaluation of the performance of different deformable image registration algorithms in helical, axial, and cone-beam CT images using a mobile phantom. J. Appl. Clin. Med. Phys. 19(2), 62 (2018)

Shivarama Holla, K., Jidesh, P., Bini, A.A.: Multiple-coil magnetic resonance image denoising and deblurring with nonlocal total bounded variation. Iete Tech. Rev. 1–6 (2019)

Altschuler, M., Bloodgood, M.: Stopping active learning based on predicted change of f measure for text classification. In: 2019 IEEE 13th International Conference on Semantic Computing (ICSC). IEEE (2019)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Zhao, R., Wei, H., Yu, H. (2020). IFHS Method on Moving Object Detection in Vehicle Flow. In: Qian, J., Liu, H., Cao, J., Zhou, D. (eds) Robotics and Rehabilitation Intelligence. ICRRI 2020. Communications in Computer and Information Science, vol 1336. Springer, Singapore. https://doi.org/10.1007/978-981-33-4932-2_1

Download citation

DOI: https://doi.org/10.1007/978-981-33-4932-2_1

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-33-4931-5

Online ISBN: 978-981-33-4932-2

eBook Packages: Computer ScienceComputer Science (R0)