Abstract

This paper proposes an algorithm that restores low-light images by enhancing their inverted illumination maps using dehazing. Following the Retinex theory, the algorithm decomposes the low-light input image into reflectance and illumination components. The illumination map is inverted and enhanced using a dehazing-type algorithm, inverted again, and further enhanced by gamma correction to obtain the enhanced illumination. Finally, the enhanced illumination is combined with the original reflectance to produce the enhanced image. The enhanced images obtained using the proposed algorithm have improved contrast and visual quality. The proposed method is analyzed qualitatively on various standard images used in the literature and compared quantitatively with several benchmark techniques. The results show that the proposed algorithm outperforms various other benchmarks.




1 Introduction

Images captured in low-light environments tend to exhibit low contrast and poor visibility. The poor quality of such images adversely affects the performance of various machine vision and multimedia applications, such as object tracking [1] and detection [2]. As these algorithms are trained primarily on high-quality images, they yield poor results in low-light conditions. To improve the performance of these applications, as well as for aesthetic purposes, various low-light image enhancement methods have been proposed in recent decades.

1.1 Literature Review

One of the most intuitive approaches to low-light image enhancement and contrast restoration is contrast enhancement. Histogram equalization (HE) [3] redistributes the pixel intensities so that their histogram is approximately uniform over the full dynamic range, e.g., [0, 1] for a normalized image. However, it also amplifies any noise present in the scene and gives rise to checkerboard effects. Furthermore, it does not consider the local statistical distribution of the image and, hence, leads to visible artifacts. Adaptive histogram equalization (AHE) [4], on the other hand, is computationally expensive. Celik and Tjahjadi [5] proposed contextual and variational contrast enhancement (CVC), which uses inter-pixel relationships and their contextual information to smooth the target histogram. Lee et al. [6] used a layered difference representation (LDR) of 2D histograms for contrast enhancement, amplifying the gray-level differences between neighboring pixels.

Direct amplification of the low-light image is another conventional approach to restoring color contrast and visibility. However, it can lead to over-enhancement and saturation of brightly lit areas, and it also gives rise to halos. To overcome this, Tang et al. [7] applied a dehazing-type algorithm to the inverted low-light image to keep regions with strong light from being over-enhanced. Dong et al. [8] also performed dehazing on inverted low-light images, obtaining enhanced results by manipulating their illumination maps. Guo et al. [9] introduced low-light image enhancement via illumination map estimation (LIME), which imposes a structure prior on the illumination map. Li et al. [10] performed super-pixel segmentation and used various statistical measures to estimate the noise level of each super-pixel; they then applied dehazing adaptively to avoid over-enhancement.
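As a concrete reference point for the HE baseline [3], the following is a minimal sketch of textbook global histogram equalization, assuming an 8-bit grayscale image stored as a flat list of integers. The function name `equalize_histogram` is hypothetical, and this is not the MATLAB implementation used to produce the benchmark results.

```python
from collections import Counter

# Illustrative sketch; the function name is hypothetical.


def equalize_histogram(pixels, levels=256):
    """Global histogram equalization of a flat list of integer intensities in [0, levels)."""
    n = len(pixels)
    counts = Counter(pixels)

    # Cumulative histogram: cdf[v] = number of pixels with intensity <= v.
    cdf, running = [], 0
    for v in range(levels):
        running += counts[v]
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)  # count at the darkest occupied level
    if n == cdf_min:  # constant image: there is no range to stretch
        return list(pixels)

    # Map the darkest occupied level to 0 and the brightest to levels - 1.
    lut = [max(0, round((c - cdf_min) / (n - cdf_min) * (levels - 1))) for c in cdf]
    return [lut[p] for p in pixels]


dark = [10, 10, 20, 30]
print(equalize_histogram(dark))  # [0, 0, 128, 255]
```

Values squeezed into a narrow dark band are spread over the whole 8-bit range, which is also why HE amplifies noise in flat dark regions.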

Retinex theory [11], proposed by Land and McCann, is a benchmark model that has inspired several low-light image enhancement techniques. The theory is based on human perception and assumes that an image is composed of two factors, namely reflectance and illumination. Early attempts include single-scale [12] and multi-scale [13] Retinex-based approaches; these, however, produce unnatural and over-enhanced results. Shin et al. [14] introduced efficient naturalness restoration (ENR), which constructs a mapping curve to adjust contrast and suppress edge artifacts. Fu et al. [15] used morphological operations to separate an image into its illumination and reflectance maps, and applied a fusion strategy to combine the advantages of several techniques, such as histogram equalization [3] and the sigmoid function. Wang et al. [16] enhanced non-uniformly illuminated images by balancing naturalness and detail.

Although significant advances have been made in enhancing low-light images, some of these approaches are computationally costly and, hence, infeasible for real-time use. Furthermore, although Retinex-based methods preserve the edge information and naturalness of an image, existing algorithms still fail to restore an image completely without some loss of contrast or color information.

1.2 Contributions

While several methods have been developed to restore low-light images, they often lose color information or yield only limited enhancement. This paper, on the other hand, proposes a simple yet efficient approach to enhance and restore a given low-light image. The dehazing-type approach used in the paper increases the overall pixel intensity of the image, and the subsequent gamma correction further widens its dynamic range, thereby improving its contrast and overall visual appeal. The results demonstrate the effectiveness of the proposed method compared with several benchmark techniques in both qualitative and quantitative terms.

The remainder of the paper is organized as follows: Sect. 2 describes the proposed algorithm in detail. Section 3 presents the qualitative and quantitative results and compares them with several benchmarks. Lastly, Sect. 4 discusses the main findings and gives the concluding remarks.

2 Proposed Methodology

This section explains the proposed methodology. Figure 1 outlines the steps of the proposed algorithm, and the following subsections discuss its basic building blocks.

2.1 Retinex Theory

The basic assumption of the Retinex theory [11] is that an image can be separated into its constituent illumination (lightness) and reflectance maps. Mathematically, an input image \(I_{\text {input}}\) can be represented by its illumination \(L_{\text {illum}}\) and reflectance \(R_{\text {ref}}\) components as

\[ I_{\text{input}}^c(a,b) = R_{\text{ref}}^c(a,b)\, L_{\text{illum}}(a,b), \tag{1} \]

where (a, b) is the pixel location and c indexes the blue (B), green (G), and red (R) color channels. The reflectance differs across the color channels because it represents the color properties of the scene. The illumination map, on the other hand, is the same for all color channels, as the same amount of light reaches each of them. From (1), the reflectance is given by

\[ R_{\text{ref}}^c(a,b) = \frac{I_{\text{input}}^c(a,b)}{L_{\text{illum}}(a,b)}. \tag{2} \]
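Equations (1) and (2) can be illustrated with a minimal sketch, assuming an image stored as nested lists of (B, G, R) tuples and an illumination map given as a matching grid of scalars. The function names and the small `eps` guard against division by zero are illustrative assumptions, not part of the described method.

```python
# Illustrative sketch; function names and the eps guard are hypothetical.


def reflectance(image, illum, eps=1e-6):
    """Per-channel reflectance R^c(a, b) = I^c(a, b) / L(a, b), as in (2).

    image: rows of (B, G, R) tuples; illum: rows of scalars shared by all channels.
    eps is an assumed guard against division by zero at fully black pixels.
    """
    return [[tuple(ch / max(lum, eps) for ch in px) for px, lum in zip(row, lrow)]
            for row, lrow in zip(image, illum)]


def recompose(refl, illum):
    """Retinex model (1): I^c(a, b) = R^c(a, b) * L(a, b)."""
    return [[tuple(r * lum for r in px) for px, lum in zip(row, lrow)]
            for row, lrow in zip(refl, illum)]


image = [[(20, 40, 80)]]
illum = [[80]]
refl = reflectance(image, illum)
print(refl)                    # [[(0.25, 0.5, 1.0)]]
print(recompose(refl, illum))  # [[(20.0, 40.0, 80.0)]]
```

Because one illumination value divides all three channels, the ratios between B, G, and R are held entirely in the reflectance; multiplying that reflectance by a modified illumination map changes brightness without changing those ratios.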

2.2 Illumination Map Estimation

The illumination map can be approximated by the maximum intensity among the color channels at each pixel location. For an input image \(I_{\text {input}}\), the initial illumination map \(L_{\text {illum}}\) is estimated as

\[ L_{\text{illum}}(a,b) = \max_{c \in \{B, G, R\}} I_{\text{input}}^c(a,b). \tag{3} \]

The inverted illumination map \(L_{\text {inv}}\) can then be calculated as

\[ L_{\text{inv}}(a,b) = 255 - L_{\text{illum}}(a,b). \tag{4} \]

Herein, it has been assumed that the range of the input image is [0, 255]. Figures 2a–c show a low-light image, its illumination map using (3), and the inverted illumination map using (4), respectively. It can be observed that the high intensity of the inverted illumination map gives it a hazy appearance. Accordingly, suitable dehazing techniques can be applied to the inverted illumination map to enhance it.
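The two steps in (3) and (4) reduce to a per-pixel maximum and a subtraction. The sketch below assumes the same nested-list layout of (B, G, R) tuples with values in [0, 255]; the function names are hypothetical.

```python
# Illustrative sketch; function names are hypothetical.


def estimate_illumination(image):
    """Initial illumination (3): the maximum of B, G and R at every pixel."""
    return [[max(px) for px in row] for row in image]


def invert(illum, max_val=255):
    """Inverted illumination (4) for an 8-bit range: 255 - L_illum(a, b)."""
    return [[max_val - v for v in row] for row in illum]


image = [[(10, 30, 20), (0, 0, 5)],
         [(60, 50, 40), (200, 180, 190)]]
l_illum = estimate_illumination(image)
print(l_illum)          # [[30, 5], [60, 200]]
print(invert(l_illum))  # [[225, 250], [195, 55]]
```

Dark input pixels map to values close to 255 after inversion, which produces the hazy appearance of the inverted map.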

Fig. 2 Image enhancement using the proposed algorithm: (a) input image, (b) corresponding illumination map approximated by (3), (c) inverted illumination map calculated by (4), (d) recovered illumination map using (9), (e) illumination map further enhanced with gamma correction by (10), and (f) final enhanced output image by (11)

2.3 Dehazing of Inverted Illumination Map

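As the inverted illumination map has a hazy appearance, a dehazing-type approach can be applied to it. A pioneering line of dehazing techniques is based on the conventional atmospheric scattering model [17], given by

\[ I^c(a,b) = J^c(a,b)\, t_{\text{trans}}(a,b) + A\bigl(1 - t_{\text{trans}}(a,b)\bigr), \tag{5} \]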

where J(a, b) is the haze-free scene to be recovered, I(a, b) is the observed image, \(t_{\text {trans}}(a,b)\) is the transmission map, i.e., the portion of light that reaches the observer without being scattered, A is the global atmospheric light, (a, b) denotes the pixel coordinates, and c indexes the color channels B, G, and R. The global atmospheric light corresponds to the brightest pixels of an image. To estimate it, the brightest 0.1% of the pixels in the dark channel [18] are considered. For an inverted illumination map \(L_{\text {inv}}\), the corresponding dark channel [18] \(L_{\text{inv}}^{\text{dark}}\) is given by

\[ L_{\text{inv}}^{\text{dark}}(i,j) = \min_{(a,b) \in \Omega(i,j)} L_{\text{inv}}(a,b), \tag{6} \]

where \(\Omega (i, j)\) is a patch centered at pixel (i, j). A patch size of 3 \(\times \) 3 is used here.

The locations of the brightest 0.1% of the pixels in the dark channel are mapped to the same locations in the inverted illumination map, and the highest intensity of \(L_{\text {inv}}\) among these locations is taken as the estimated atmospheric light A.
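The dark-channel and atmospheric-light steps can be sketched as follows for a single-channel inverted map stored as a list of rows. This is a minimal sketch under stated assumptions: windows are clipped at the image border, and at least one candidate pixel is kept on very small images. Neither detail is specified in the text, and the function names are hypothetical.

```python
# Illustrative sketch; function names and border handling are hypothetical.


def dark_channel(l_inv, patch=3):
    """Dark channel (6) of a single-channel map: minimum over a patch x patch window.

    Windows are clipped at the image border (an assumption; padding choices vary).
    """
    h, w, r = len(l_inv), len(l_inv[0]), patch // 2
    return [[min(l_inv[y][x]
                 for y in range(max(0, i - r), min(h, i + r + 1))
                 for x in range(max(0, j - r), min(w, j + r + 1)))
             for j in range(w)]
            for i in range(h)]


def atmospheric_light(l_inv, dark, fraction=0.001):
    """A = highest L_inv value among the brightest `fraction` of dark-channel pixels."""
    coords = [(i, j) for i in range(len(dark)) for j in range(len(dark[0]))]
    k = max(1, int(fraction * len(coords)))  # keep at least one candidate (assumption)
    candidates = sorted(coords, key=lambda ij: dark[ij[0]][ij[1]], reverse=True)[:k]
    return max(l_inv[i][j] for i, j in candidates)


l_inv = [[200, 210, 220],
         [230, 240, 250],
         [100, 110, 120]]
dark = dark_channel(l_inv)
print(dark)                            # [[200, 200, 210], [100, 100, 110], [100, 100, 110]]
print(atmospheric_light(l_inv, dark))  # 220
```

In this toy example the largest value of the inverted map is 250, yet the estimate is 220, because candidates are selected by dark-channel brightness rather than by raw intensity.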

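The transmission map can then be approximated using the atmospheric light as

\[ t_{\text{trans}}(a,b) = 1 - \omega\, \frac{L_{\text{inv}}^{\text{dark}}(a,b)}{A}, \tag{7} \]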

where \(\omega \) is a constant parameter that retains a small amount of haze so that the result does not appear unnatural. Following [18], \(\omega \) is set to 0.95.

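Finally, the dehazed inverted illumination map \(L_{\text {inv}}^{\text {dehazed}}\) is calculated as

\[ L_{\text{inv}}^{\text{dehazed}}(a,b) = \frac{L_{\text{inv}}(a,b) - A}{\max\bigl(t_{\text{trans}}(a,b),\, t_0\bigr)} + A, \tag{8} \]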

where \(t_0\) is a lower bound on the transmission that prevents division by zero.
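Given the dark channel and A, the transmission and recovery steps are element-wise operations. Because A is a scalar here, the patch minimum of the inverted map divided by A equals the dark channel divided by A, so the sketch takes a precomputed dark channel as input. The value t0 = 0.1 (the typical value suggested with the dark channel prior) and the final clipping to [0, 255] are assumptions, since the text states neither; the function names are hypothetical.

```python
# Illustrative sketch; function names, t0 = 0.1 and the clipping are hypothetical.


def transmission(dark, a_light, omega=0.95):
    """Transmission: t = 1 - omega * L_inv^dark / A, for a scalar atmospheric light A."""
    return [[1.0 - omega * d / a_light for d in row] for row in dark]


def recover(l_inv, trans, a_light, t0=0.1, max_val=255.0):
    """Dehazed inverted map: (L_inv - A) / max(t, t0) + A.

    t0 = 0.1 and the clipping to [0, max_val] are assumptions, not values from the text.
    """
    return [[min(max_val, max(0.0, (v - a_light) / max(t, t0) + a_light))
             for v, t in zip(vrow, trow)]
            for vrow, trow in zip(l_inv, trans)]


l_inv = [[220, 110]]
dark = [[200, 100]]  # illustrative dark-channel values; in practice computed from l_inv
a_light = 220
trans = transmission(dark, a_light)
print([[round(v, 2) for v in row] for row in recover(l_inv, trans, a_light)])  # [[220.0, 26.4]]
```

A pixel exactly at the atmospheric light is left unchanged, while pixels below A are pushed further below it, by an amount that grows as the transmission falls; after re-inversion these become brighter.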

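The enhanced illumination map \(L_{\text {enh}}\) of the input low-light image is then obtained by inverting the dehazed map again:

\[ L_{\text{enh}}(a,b) = 255 - L_{\text{inv}}^{\text{dehazed}}(a,b). \tag{9} \]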

This illumination map can further be enhanced to improve the contrast by applying gamma correction as

where \(\gamma \) = 0.5 is the gamma correction parameter.

Finally, the enhanced output image \(I_{\text {enh}}\) is calculated as

where \(R_{\text {ref}}^c(a, b)\) is the reflectance of the image calculated using (2).
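The remaining steps, re-inversion (9), gamma correction (10), and recombination with the reflectance (11), can be sketched as below. The exact forms of (10) and (11) are assumptions here: gamma correction is applied to the illumination normalized to [0, 1] and rescaled to [0, 255], and the output is taken as the per-channel product of the reflectance from (2) and the corrected illumination, clipped to 8 bits. The function names are hypothetical.

```python
# Illustrative sketch; function names and the forms of (10) and (11) are assumptions.


def reinvert(dehazed, max_val=255):
    """Enhanced illumination (9): L_enh = 255 - dehazed inverted map."""
    return [[max_val - v for v in row] for row in dehazed]


def gamma_correct(illum, gamma=0.5, max_val=255):
    """Assumed form of (10): normalize to [0, 1], raise to gamma, rescale to [0, 255].

    Negative inputs are clamped to 0 first so the fractional power stays real.
    """
    return [[max_val * (max(0.0, v) / max_val) ** gamma for v in row] for row in illum]


def combine(refl, illum, max_val=255):
    """Assumed form of (11): reflectance times corrected illumination, clipped to 8 bits."""
    return [[tuple(min(max_val, r * lum) for r in px) for px, lum in zip(row, lrow)]
            for row, lrow in zip(refl, illum)]


l_enh = gamma_correct(reinvert([[191.25]]))
print(l_enh)                                 # [[127.5]]
print(combine([[(0.25, 0.5, 1.0)]], l_enh))  # [[(31.875, 63.75, 127.5)]]
```

With \(\gamma\) = 0.5, an illumination value of 63.75 (a quarter of full scale) becomes 127.5, so dark and mid-tone regions are lifted proportionally more than bright ones.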

3 Experimental Results

The proposed algorithm has been compared with several state-of-the-art methods (see Note 1), namely HE [3], AHE [4], CVC [5], LDR [6], ENR [14], and LIME [9]. The results (see Note 2) suggest that the proposed methodology outperforms the considered benchmarks both quantitatively and qualitatively.

3.1 Qualitative Analysis

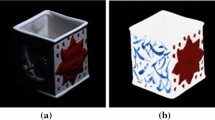

Figures 3, 4, and 5 present the outputs of the proposed methodology along with several benchmarks. It can be observed that while the results of the proposed approach have sufficient light and color information, AHE [4], CVC [5], and LDR [6] fail to preserve comparable color fidelity. Although the result of HE [3] in Fig. 3b has increased overall pixel intensity, the image appears washed out. Outputs of CVC [5] and LDR [6] remain dark and fail to enhance the contrast of the image, as can be observed in images (d) and (e) of Figs. 3, 4, and 5. The proposed algorithm performs better than these benchmarks in terms of visual appeal, while giving results comparable to those of ENR [14] and LIME [9].

Fig. 6 Standard images used for quantitative comparison. From left to right and top to bottom: Baby at Window, Baby on Grass, Christmas Rider, High Chair, Standing Boy, Waving Girl, Dog, and Santa’s Little Helper

3.2 Quantitative Analysis

The proposed method has been compared with several benchmarks on the low-light images shown in Fig. 6. The performance metric used for comparison is the lightness order error (LOE) [16], given by

\[ \text{LOE} = \frac{1}{m} \sum_{i=1}^{m} \sum_{j=1}^{m} U\bigl(M_r(i), M_r(j)\bigr) \oplus U\bigl(M_e(i), M_e(j)\bigr), \]

where m is the number of pixels, i and j index pixel locations, \(U(\alpha , \beta ) = 1\) if \(\alpha > \beta \) and 0 if \(\alpha \le \beta \), \(\oplus \) denotes the exclusive OR operation, and \(M_r(i)\) and \(M_e(i)\) are the highest pixel values among the blue, green, and red color channels of the reference and enhanced images, respectively, at pixel location i. A lower LOE value indicates better preservation of the naturalness of lightness.
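The LOE definition translates directly into a double loop over pixel pairs. The sketch below assumes both images are equally sized nested lists of (B, G, R) tuples and that, as is common, the reference is the original low-light input; the function names are hypothetical. It runs in O(m^2) time for m pixels, so computing LOE on downsampled images is a common way to keep the cost manageable.

```python
# Illustrative sketch; function names are hypothetical.


def lightness(image):
    """M(i): maximum of B, G and R at each pixel, flattened in raster order."""
    return [max(px) for row in image for px in row]


def lightness_order_error(reference, enhanced):
    """LOE: average number of pixel pairs whose relative lightness order flips.

    U(x, y) = 1 if x > y else 0; the XOR of the two U terms is computed as `!=`.
    Runs in O(m^2) for m pixels.
    """
    mr, me = lightness(reference), lightness(enhanced)
    m = len(mr)
    flips = 0
    for i in range(m):
        for j in range(m):
            flips += (mr[i] > mr[j]) != (me[i] > me[j])
    return flips / m


reference = [[(10, 20, 30), (50, 40, 0)]]
print(lightness_order_error(reference, [[(100, 0, 0), (200, 0, 0)]]))  # 0.0
print(lightness_order_error(reference, [[(200, 0, 0), (100, 0, 0)]]))  # 1.0
```

An enhancement that brightens every pixel while keeping their relative order scores 0, whereas swapping the order of the two pixels flips both ordered pairs.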

It can be inferred from Table 1 that the proposed method performs better than nearly all the considered benchmarks. Although ENR [14] outperforms the proposed algorithm on two images (Baby at Window and Santa’s Little Helper), the proposed algorithm gives superior results in most cases. For the High Chair image, the LOE value of the proposed method is less than one-tenth of that of ENR [14], and it is lower by a factor of nearly two for the Baby on Grass and Christmas Rider images. Overall, the proposed method outperforms nearly all the considered benchmarks in both qualitative and quantitative terms.

4 Conclusions

This paper proposed a restoration approach for low-light images that enhances their illumination maps. The results show the superiority of the proposed methodology over various other well-known benchmarks. It can be concluded that the proposed method gives visually pleasing results with enhanced contrast and color fidelity, and its qualitative and quantitative results are superior to those of the majority of the benchmark methods considered. Future work may include estimating the enhanced color image directly from the input low-light image.

Notes

1. The results of the state-of-the-art methods were obtained using MATLAB libraries or the source codes made publicly available by their authors.

2. The results of the proposed algorithm on the images in Fig. 6 are available at https://drive.google.com/drive/u/0/folders/1jf_uq_ua5JpPmxJtQAyQzL1WDntue7ct.

References

[1] Zhang, K., Zhang, L., Yang, M.: Real-time compressive tracking. In: Proceedings of the 12th European Conference on Computer Vision (ECCV), pp. 864–877, Florence, Italy, 7–13 Oct (2012). https://doi.org/10.1007/978-3-642-33712-3_62

[2] Oneata, D., Revaud, J., Verbeek, J., Schmid, C.: Spatio-temporal object detection proposals. In: European Conference on Computer Vision (ECCV), pp. 737–752, Zurich, Switzerland, Sep (2014). https://doi.org/10.1007/978-3-319-10578-9_48

[3] Gonzalez, R.C., Woods, R.E.: Digital Image Processing, 3rd edn. Prentice-Hall, Englewood Cliffs, NJ, USA (2007)

[4] Zuiderveld, K.: Contrast limited adaptive histogram equalization. In: Graphics Gems IV, pp. 474–485. Academic Press Professional, San Diego, Aug (1994)

[5] Celik, T., Tjahjadi, T.: Contextual and variational contrast enhancement. IEEE Trans. Image Process. 20(12), 3431–3441, Dec (2011). https://doi.org/10.1109/TIP.2011.2157513

[6] Lee, C., Lee, C., Kim, C.-S.: Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans. Image Process. 22(12), 5372–5384, Dec (2013). https://doi.org/10.1109/TIP.2013.2284059

[7] Tang, C., Wang, Y., Feng, H., Xu, Z., Li, Q., Chen, Y.: Low-light image enhancement with strong light weakening and bright halo suppressing. IET Image Process. 13(3), 537–542, 28 Feb (2019). https://doi.org/10.1049/iet-ipr.2018.5505

[8] Dong, X., Wang, G., Pang, Y., Li, W., Wen, J., Meng, W., Lu, Y.: Fast efficient algorithm for enhancement of low lighting video. In: 2011 IEEE International Conference on Multimedia and Expo (ICME), Barcelona, Spain, 11–15 July (2011). https://doi.org/10.1109/ICME.2011.6012107

[9] Guo, X., Li, Y., Ling, H.: LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 26(2), 982–993, Feb (2017). https://doi.org/10.1109/TIP.2016.2639450

[10] Li, L., Wang, R., Wang, W., Gao, W.: A low-light image enhancement method for both denoising and contrast enlarging. In: 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 Sep (2015). https://doi.org/10.1109/ICIP.2015.7351501

[11] Land, E.H., McCann, J.J.: Lightness and retinex theory. J. Opt. Soc. Am. 61(1), 1–11 (1971). https://doi.org/10.1364/JOSA.61.000001

[12] Jobson, D.J., Rahman, Z., Woodell, G.A.: Properties and performance of a center/surround retinex. IEEE Trans. Image Process. 6(3), 451–462, Mar (1997). https://doi.org/10.1109/83.557356

[13] Jobson, D.J., Rahman, Z., Woodell, G.A.: A multiscale Retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 6(7), 965–976, July (1997). https://doi.org/10.1109/83.597272

[14] Shin, Y., Jeong, S., Lee, S.: Efficient naturalness restoration for non-uniform illumination images. IET Image Process. 9(8), 662–671, 27 July (2015). https://doi.org/10.1049/iet-ipr.2014.0437

[15] Fu, X., Zeng, D., Huang, Y., Liao, Y., Ding, X., Paisley, J.: A fusion-based enhancing method for weakly illuminated images. Signal Process. 129, 82–96, Dec (2016). https://doi.org/10.1016/j.sigpro.2016.05.031

[16] Wang, S., Zheng, J., Hu, H.-M., Li, B.: Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 22(9), 3538–3548, Sep (2013). https://doi.org/10.1109/TIP.2013.2261309

[17] Narasimhan, S.G., Nayar, S.K.: Vision and the atmosphere. Int. J. Comput. Vis. 48(3), 233–254 (2002). https://doi.org/10.1023/A:1016328200723

[18] He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353, Dec (2011). https://doi.org/10.1109/TPAMI.2010.168


Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Agrawal, I., Sharma, T., Verma, N.K. (2023). Low-Light Image Restoration Using Dehazing-Based Inverted Illumination Map Enhancement. In: Castillo, O., Bera, U.K., Jana, D.K. (eds) Applied Mathematics and Computational Intelligence. ICAMCI 2020. Springer Proceedings in Mathematics & Statistics, vol 413. Springer, Singapore. https://doi.org/10.1007/978-981-19-8194-4_12


DOI: https://doi.org/10.1007/978-981-19-8194-4_12


Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-8193-7

Online ISBN: 978-981-19-8194-4

eBook Packages: Mathematics and Statistics (R0)