Abstract

As Human-Computer Interaction (HCI) moves towards deep collaboration, studying users’ trust in chatbots has become urgent. This study takes customer service chatbots as an example. First, we review the relevant research on users’ trust in chatbots and analyze the value chain model of customer service chatbots. Taking Taobaoxiaomi as the specific research object, we conducted in-depth interviews with 18 users, organized the interview data with the value-focused thinking (VFT) method, constructed a model of users’ trust in customer service chatbots, and tested it empirically with a questionnaire survey. The results show that professionalism, response speed, and predictability have positive effects on users’ trust in chatbots, while ease of use and human-likeness have no significant positive effect. In addition, brand trust has a positive effect on users’ trust in chatbots, risk perception has a negative effect, and human support has no significant negative effect. Finally, privacy concerns moderate the effects of environment-related factors (brand trust, risk, etc.). This study deepens the understanding of human-computer trust and offers the industry a reference for improving chatbots and enhancing users’ trust.

1 Introduction

Chatbots are software agents that interact with users through natural language conversation [1] and are seen as a promising customer service technology. Recent advances in artificial intelligence (AI) and machine learning, together with the widespread adoption of messaging platforms, have prompted companies to explore chatbots as a complement to customer service [2]. According to a report by Grand View Research, the global chatbot market is expected to reach $2,485.7 million by 2028 [3]. In addition, major platforms such as Amazon, eBay, Facebook, WeChat, Jingdong, and Taobao have adopted chatbots for conversational commerce [4]. AI chatbots can provide unique business benefits [5]: they automate customer service and facilitate company-initiated communication. Equipped with sophisticated speech recognition and natural language processing tools, chatbots can understand complex and subtle dialogue and meet consumers’ requirements in a sympathetic, even humorous, way [6]. Despite these potential benefits for vendors, one of the key challenges facing AI chatbot applications is customer response [7]. People may be biased against chatbots, believing that they lack human emotion and empathy and are less credible when handling payment information and product recommendations (cf. the uncanny valley theory and the algorithm aversion discussed by Dietvorst et al. [8] and Kestenbaum [9]). In addition, some enterprises collect and use customer data illegally, creating a risk of user privacy disclosure [10].

Customer service is currently only an emerging application field for chatbots, and chatbots have not yet achieved the general customer acceptance that was expected [2]. From other technology areas, we know that users’ trust is critical for the widespread adoption of new interactive solutions [11]. However, our understanding of users’ trust in chatbots and of the factors that influence this trust is very limited [2]. It is therefore of great theoretical and practical significance to study how users’ trust in customer service chatbots is constructed.

2 Literature Review

Trust has been studied widely, in psychology, sociology, and technology [11], and it therefore has many definitions. Trust refers to one’s dependence on another [12]. Relationships between people require trust for continuous interaction to succeed [13]. Trust is not only an important part of interpersonal communication but also an important part of the rapidly developing “human-machine” relationship [14]. Mayer et al. defined trust as a belief and a willingness, emphasizing the risk involved in trusting and the causes and effects of trusting behavior [15]. In their definition, trust is “the willingness of a party to be vulnerable to the actions of another party based on the expectation that the other will perform a particular action important to the trustor, irrespective of the ability to monitor or control that other party” [15]. Rousseau et al. proposed an interdisciplinary definition that captures what the disciplines have in common: trust is a psychological state comprising the intention to accept vulnerability based on positive expectations of the intentions or behavior of another party [16]. Both Mayer et al. and Rousseau et al. emphasized the willingness to accept vulnerability and the presence of something at stake [16], and neither limited the concept of trust to interaction between people. The object of trust can therefore be technology, including artificial intelligence [17].

Most existing studies of trust in non-human agents focus on automated systems, and many scholars have studied trust in robot systems [2, 18–20], but research on trust in customer service chatbots is relatively new. Although studies of trust in automated and robot systems provide a solid foundation for understanding users’ trust in customer service chatbots, customer service chatbots differ from other forms of automation, and these differences affect trust in ways that are not yet fully understood. This paper reviews the literature on users’ trust in customer service chatbots and lists the factors that influence trust, as shown in Table 1.

According to the results of the above literature review, the influencing factors on users’ trust in chatbots can be divided into three categories: factors related to chatbots, environment, and user [2, 11, 22, 23, 25]. Corritore et al. classified the above three influencing factors as external factors. Besides external factors, they also put forward perceived factors, including perceived ease of use, perceived reliability, and perceived risk [11].

Building on this research on users’ trust in chatbots, this paper proposes a value chain for chatbots and analyzes the main entities involved and their relationships, thereby identifying the object of trust. We then interviewed 18 users and analyzed the factors influencing their trust in chatbots qualitatively with the VFT method, from which we established a model of users’ trust in customer service chatbots. Finally, we tested the model quantitatively with a questionnaire survey.

3 Interview Analysis Based on Value-Focused Thinking

3.1 Value-Focused Thinking

Because customer service systems that include chatbots are complex, the factors that influence users’ trust in customer service chatbots are also complex. This paper uses the value-focused thinking (VFT) method to identify the key factors, analyzes the forms that users’ trust in online customer service chatbots takes, and establishes a model of users’ trust in online customer service chatbots.

Value-focused thinking is a creative decision analysis method proposed by Keeney (1992). It is suited to complex multi-objective problems that require highly subjective decisions based on the values of decision-makers and stakeholders [25]. The method focuses first on values rather than on alternatives: values are the primary criteria for evaluating how satisfactory any possible alternative is, and alternatives that realize those values are sought afterwards [26]. Values are the criteria for evaluating possible alternatives or outcomes and are made explicit by identifying goals, where a goal is a state that a person wants to achieve to some extent [27]. Values are the core of the VFT method and include economic, personal, social, and other values [25]. A goal has three characteristics: the decision context, the object, and the direction of preference [28]. Keeney applied this method to e-commerce, analyzing the consumer value of e-commerce by comparing the perceived value of online shopping with that of shopping through other channels [29]. Zhaohua Deng et al. used the VFT method to study consumer trust in mobile commerce [29]. The steps of the VFT analysis adopted in this paper are shown in Fig. 1 [29].

3.2 Interview Data Collection

Following the VFT method, users’ values should be elicited directly from users [29]. Taking users’ trust in online customer service chatbots as the target, we interviewed 18 users of the shopping platform, all of them undergraduate or postgraduate students with online shopping experience. Before each interview, we explained its purpose and format to the interviewee and stated that the interview would be recorded without personal information and used only for this study. Interviews were conducted only with the interviewee’s consent, mainly through online meetings or voice calls. Taobaoxiaomi was the main research object, and the interviewees answered several main questions. The raw interview data are available at https://github.com/Yangyangyounglv/chatbots-interview-record.git.

3.3 Analysis Steps

According to VFT method, the specific analysis steps are as follows.

Step 1. Make a list of all values.

To elicit users’ values, we asked the interviewees questions such as “What are the advantages of Taobaoxiaomi, Taobao’s customer service chatbot? What are its advantages over human customer service?”, “What are the shortcomings of Taobaoxiaomi? What are its disadvantages compared with human customer service?”, “What do you think Taobao can do to repair or improve your trust in it?”, and “What future suggestions or ideas do you have for Taobaoxiaomi?”. We organized the interviewees’ answers into the value list shown in Table 2.

Step 2. Translate abstract values into goals.

In the VFT method, a goal has three characteristics: the decision context, the object, and the direction of preference. For example, most interviewees believed that ensuring the professionalism of Taobaoxiaomi is the key factor influencing users’ trust in online customer service chatbots. In this goal, the decision context relates to the chatbot and the object is professionalism. The more professional the chatbot is, that is, the more considerate and intelligent it is, the more users will trust it; the direction of preference is therefore toward a more considerate and intelligent chatbot. In this way, the abstract values in Table 2 are transformed into goals of a common format, as shown in Table 3.
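The goal format described in Step 2 can be represented as a small record. The sketch below is ours, not part of the study: the `Goal` class, the `value_to_goal` helper, and the maximize/minimize encoding of the direction of preference are hypothetical illustrations; only the professionalism example comes from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Goal:
    """A VFT goal described by its three characteristics (hypothetical encoding)."""
    context: str    # decision context, e.g. "chatbot-related"
    obj: str        # object being valued, e.g. "professionalism"
    direction: str  # direction of preference: "maximize" or "minimize"

    def statement(self) -> str:
        # Render every goal in the same "Direction object (context)" format.
        return f"{self.direction.capitalize()} {self.obj} ({self.context})"


def value_to_goal(value: str, context: str, direction: str = "maximize") -> Goal:
    """Turn an abstract value from the Step 1 list into a formatted goal."""
    if direction not in ("maximize", "minimize"):
        raise ValueError("direction must be 'maximize' or 'minimize'")
    return Goal(context=context, obj=value.strip().lower(), direction=direction)


# The professionalism example from the text.
goal = value_to_goal("Professionalism", context="chatbot-related")
print(goal.statement())  # Maximize professionalism (chatbot-related)
```

Freezing the dataclass makes goals hashable, so they can later serve as keys or set members when the relationships between goals are analyzed.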

Step 3. Identify relationships between goals.

After the goals were put into a common format in Step 2, we distinguished basic (fundamental) goals from means goals. Basic goals are “goals that decision-makers attach importance to in a specific context”, while means goals are “methods to achieve goals” [30]. To separate basic goals from means goals and establish the relationships between them, we examined each goal with the “Why is this important?” test. The question produces one of two responses. The first is that the goal is important in itself because it reflects an essential reason for interest in the decision situation; such a goal is a basic goal. The second is that the goal is important because of its impact on other goals; such a goal is a means goal [31]. The results of applying this test to our goals are shown in Table 4.
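The “Why is this important?” test can be sketched as a classification over the interviewees’ answers. In this hypothetical encoding (our assumption, not the authors’ procedure), each goal maps to the goals it is important for; an empty list records the answer “it is important in itself”, which marks a basic (fundamental) goal. The goal names below are invented for illustration and do not reproduce Table 4.

```python
from __future__ import annotations


def classify_goals(why_important: dict[str, list[str]]) -> tuple[set[str], set[str]]:
    """Split goals into basic (fundamental) goals and means goals."""
    fundamental: set[str] = set()
    means: set[str] = set()
    for goal, serves in why_important.items():
        # Every goal named in an answer must itself be a listed goal.
        unknown = [g for g in serves if g not in why_important]
        if unknown:
            raise KeyError(f"{goal!r} refers to undefined goals: {unknown}")
        # An empty answer list means "important in itself" -> basic goal.
        (means if serves else fundamental).add(goal)
    return fundamental, means


def means_ends_links(why_important: dict[str, list[str]]) -> list[tuple[str, str]]:
    """List (means goal, goal it serves) pairs, i.e. the edges of the network."""
    return sorted((g, end) for g, ends in why_important.items() for end in ends)


# Hypothetical answers, for illustration only.
answers = {
    "maximize trust in the chatbot": [],
    "maximize professionalism": ["maximize trust in the chatbot"],
    "maximize response speed": ["maximize trust in the chatbot"],
    "minimize perceived risk": ["maximize trust in the chatbot"],
}
fundamental, means = classify_goals(answers)
print(sorted(fundamental))             # ['maximize trust in the chatbot']
print(len(means_ends_links(answers)))  # 3
```

Keeping the answers as a mapping means the same data yields both the classification and the means-ends links, so the two cannot drift apart.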

3.4 Construction of Users’ Trust Model for Customer Service Chatbots

According to the above analysis, three main groups of factors influence users’ trust in customer service chatbots: chatbot-related factors, environment-related factors, and user-related factors. Concretely, users’ trust in customer service chatbots is fostered by more capable chatbots, a more reliable environment, and more receptive users. Based on this analysis of the influencing factors, this paper builds a model of users’ trust in customer service chatbots, as shown in Fig. 2.

4 The Questionnaire Survey

4.1 Hypothesis

Based on the theoretical model constructed by the interview, the following hypotheses are proposed:

H1. The professionalism of chatbots has a positive impact on users’ trust in them.

H1a. Users’ privacy concerns negatively moderate the positive relationship between chatbots’ professionalism and users’ trust.

H1b. Users’ trust in technology positively moderates the positive relationship between chatbots’ professionalism and users’ trust.

H2. Chatbots’ response speed has a positive impact on users’ trust in chatbots.

H2a. Users’ privacy concerns negatively moderate the positive relationship between chatbots’ response speed and users’ trust.

H2b. Users’ trust in technology positively moderates the positive relationship between chatbots’ response speed and users’ trust.

H3. Chatbots’ predictability has a positive impact on users’ trust in chatbots.

H3a. Users’ privacy concerns negatively moderate the positive relationship between chatbots’ predictability and users’ trust.

H3b. Users’ trust in technology positively moderates the positive relationship between chatbots’ predictability and users’ trust.

H4. Chatbots’ ease of use has a positive impact on users’ trust in chatbots.

H4a. Users’ privacy concerns negatively moderate the positive relationship between chatbots’ ease of use and users’ trust.

H4b. Users’ trust in technology positively moderates the positive relationship between chatbots’ ease of use and users’ trust.

H5. Chatbots’ human-likeness has a positive impact on users’ trust in chatbots.

H5a. Users’ privacy concerns negatively moderate the positive relationship between chatbots’ human-likeness and users’ trust.

H5b. Users’ trust in technology positively moderates the positive relationship between chatbots’ human-likeness and users’ trust.

H6. Users’ brand trust in chatbots providers has a positive impact on users’ trust in chatbots.

H6a. Users’ privacy concerns negatively moderate the positive relationship between users’ trust in the chatbots provider and users’ trust.

H6b. Users’ trust in technology positively moderates the positive relationship between users’ trust in the chatbots provider and users’ trust.

H7. The risk of using a chatbot has a negative impact on users’ trust in chatbots.

H7a. Users’ privacy concerns positively moderate the negative relationship between the risk of using a chatbot and users’ trust.

H7b. Users’ trust in technology negatively moderates the negative relationship between the risk of using chatbots and users’ trust.

H8. Human support has a negative impact on users’ trust in chatbots.

H8a. Users’ privacy concerns positively moderate the negative relationship between human support and users’ trust.

H8b. Users’ trust in technology negatively moderates the negative relationship between human support and users’ trust.

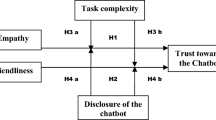

Integrating these variables and their hypothesized relationships yields the research model shown in Fig. 3.

4.2 Questionnaire Data Collection

We collected data through a two-day questionnaire survey from June 20 to June 21, 2020, distributed mainly through Credamo, an online data research platform. After collection, the data were screened: questionnaires that gave the same answer to consecutive questions or contradictory answers to positively and negatively worded questions were removed. Respondents whose questionnaires were accepted received 2 yuan; those whose questionnaires were rejected received nothing. The questionnaire was re-issued and screened repeatedly until 500 qualified samples were obtained. Samples came from Chongqing, Zhejiang, Shanghai, Yunnan, Tianjin, Shandong, Henan, Hebei, Shanxi, Sichuan, and Jiangsu provinces and municipalities. In total, 592 questionnaires were collected, of which 500 were valid, an effective rate of 84.46%.
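The two screening rules and the effective rate can be sketched as follows. The thresholds are our assumptions, not values reported by the authors: a 7-point scale, a run of 8 identical consecutive answers counted as straight-lining, and a gap of 4 or more between a positively worded item and its reverse-scored counterpart counted as a contradiction. The functions `is_straightlined`, `is_contradictory`, `screen`, and `effective_rate` are hypothetical helpers.

```python
from __future__ import annotations


def is_straightlined(answers: list[int], run_length: int = 8) -> bool:
    """True if the same option is chosen for `run_length` consecutive items."""
    run = 1
    for prev, cur in zip(answers, answers[1:]):
        run = run + 1 if cur == prev else 1
        if run >= run_length:
            return True
    return False


def is_contradictory(pos: int, neg: int, scale_max: int = 7, gap: int = 4) -> bool:
    """Compare a positively worded item with its reverse-worded twin.

    After reverse-scoring the negative item, a consistent respondent gives
    similar values; a difference of `gap` or more is treated as contradictory.
    """
    reversed_neg = scale_max + 1 - neg
    return abs(pos - reversed_neg) >= gap


def screen(responses, reverse_pairs, scale_max=7, run_length=8, gap=4):
    """Keep only responses that pass both checks (assumed thresholds)."""
    kept = []
    for r in responses:
        if is_straightlined(r, run_length):
            continue
        if any(is_contradictory(r[i], r[j], scale_max, gap) for i, j in reverse_pairs):
            continue
        kept.append(r)
    return kept


def effective_rate(valid: int, collected: int) -> float:
    """Share of collected questionnaires that were valid, in percent."""
    return round(100 * valid / collected, 2)


print(effective_rate(500, 592))  # 84.46
```

The last line reproduces the reported effective rate from the two counts given in the text.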

4.3 Data Analysis

Before testing the model, we examined the skewness and kurtosis of the data and tested for common method bias. The data passed these tests and were suitable for further analysis. The reliability and validity results, shown in Table 5, indicate that the model fits the data well.
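A minimal NumPy sketch of two of these checks follows: moment-based skewness and excess kurtosis for normality screening, and Cronbach's alpha for the reliability of a multi-item construct. The paper does not state its cut-offs; values such as |skewness| < 2, |excess kurtosis| < 7, and alpha ≥ 0.7 are commonly cited rules of thumb, and the data below are synthetic.

```python
import numpy as np


def skewness(x) -> float:
    """Moment-based sample skewness, m3 / m2**1.5."""
    d = np.asarray(x, dtype=float)
    d = d - d.mean()
    return float(np.mean(d**3) / np.mean(d**2) ** 1.5)


def excess_kurtosis(x) -> float:
    """Moment-based excess kurtosis, m4 / m2**2 - 3 (0 for a normal distribution)."""
    d = np.asarray(x, dtype=float)
    d = d - d.mean()
    return float(np.mean(d**4) / np.mean(d**2) ** 2 - 3.0)


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for one construct.

    `items` is a respondents x items matrix of scores for that construct:
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score).
    """
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1.0 - item_var / total_var))


# Hypothetical three-item construct: a shared trait plus item-level noise.
rng = np.random.default_rng(42)
trait = rng.normal(size=500)
scores = trait[:, None] + rng.normal(scale=0.5, size=(500, 3))
print(round(skewness(scores[:, 0]), 2), round(excess_kurtosis(scores[:, 0]), 2))
print(round(cronbach_alpha(scores), 2))
```

Statistical packages may apply small-sample bias corrections to skewness and kurtosis, so their values can differ slightly from these moment-based estimates.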

Regression analysis.

Stepwise regression was used to select the independent variables and obtain the optimal model. Tolerance values are close to 1, so the corresponding variance inflation factors (VIF = 1/tolerance) are also close to 1, far below commonly used cut-offs such as 10; the model therefore shows no obvious collinearity problem. Five variables entered the final model: professionalism (β = 0.351, P < 0.001), risk (β = −0.231, P < 0.001), brand trust (β = 0.211, P < 0.001), response speed (β = 0.103, P < 0.05), and predictability (β = 0.103, P < 0.05). H1, H2, H3, H6, and H7 are therefore supported, while H4, H5, and H8 are not. The regression results are shown in Table 6.
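The collinearity check and the standardized coefficients (β) can be illustrated with ordinary least squares. The sketch below uses NumPy only: it z-scores all variables so that the slopes are standardized βs, and computes VIF_j = 1/(1 − R_j²) by regressing each predictor on the others (tolerance is 1/VIF). It does not implement the stepwise selection itself, and the data are synthetic, not the survey responses.

```python
import numpy as np


def zscore(a):
    """Standardize columns to mean 0 and (sample) standard deviation 1."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0, ddof=1)


def standardized_betas(X, y):
    """OLS slopes after z-scoring predictors and outcome (no intercept needed)."""
    beta, *_ = np.linalg.lstsq(zscore(X), zscore(y), rcond=None)
    return beta


def vif(X):
    """VIF_j = 1 / (1 - R_j^2), regressing predictor j on all the others."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1.0 - resid @ resid / np.sum((target - target.mean()) ** 2)
        out[j] = 1.0 / (1.0 - r2)
    return out


# Synthetic, nearly uncorrelated predictors: VIFs and tolerances near 1.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 3))
y = 0.6 * X[:, 0] - 0.3 * X[:, 1] + rng.normal(scale=0.5, size=500)
print(np.round(standardized_betas(X, y), 2))
print(np.round(vif(X), 2), np.round(1 / vif(X), 2))
```

Because every variable is centered after z-scoring, dropping the intercept leaves the slopes unchanged.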

The model verification shows that, among chatbot-related variables, professionalism, response speed, and predictability positively affect users’ trust in chatbots. Among environment-related variables, brand trust positively affects users’ trust in chatbots, and risk negatively affects it. Professionalism has the largest regression coefficient, indicating the greatest influence on trust, while predictability and response speed have the smallest coefficients, indicating the least influence.

Robustness test.

To check the robustness of the regression results, we ran enter-method regressions on the whole sample (all predictors) and on a subsample model (N = 500, after removing the ease-of-use, human-likeness, and human support variables). Professionalism (whole sample: β = 0.331, P < 0.001; subsample: β = 0.351, P < 0.001), risk (whole sample: β = −0.226, P < 0.001; subsample: β = −0.231, P < 0.001), brand trust (whole sample: β = 0.207, P < 0.001; subsample: β = 0.211, P < 0.001), response speed (whole sample: β = 0.097, P < 0.05; subsample: β = 0.103, P < 0.05), and predictability (whole sample: β = 0.114, P < 0.05; subsample: β = 0.103, P < 0.05) were significantly related to the dependent variable, trust, whereas human-likeness (whole sample: β = −0.071, P = 0.081), ease of use (whole sample: β = 0.067, P = 0.195), and human support (whole sample: β = −0.027, P = 0.422) were not. These results are consistent with the original results, which confirms their robustness.
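The robustness comparison amounts to checking that each retained predictor keeps its sign and significance across the two specifications, and that the removed predictors were non-significant in the whole-sample model. The sketch below encodes the coefficients reported in this subsection, taking the subsample coefficient for risk as −0.231, the value reported in the regression analysis. `significant` and `robustness_check` are hypothetical helpers, and treating a reported bound such as “P < 0.05” as significant at α = 0.05 is our assumption.

```python
ALPHA = 0.05

# (beta, p) as reported in the text; "<0.001" stands for "P < 0.001".
FULL_MODEL = {
    "professionalism": (0.331, "<0.001"),
    "risk": (-0.226, "<0.001"),
    "brand trust": (0.207, "<0.001"),
    "response speed": (0.097, "<0.05"),
    "predictability": (0.114, "<0.05"),
    "human-likeness": (-0.071, "0.081"),
    "ease of use": (0.067, "0.195"),
    "human support": (-0.027, "0.422"),
}
REDUCED_MODEL = {
    "professionalism": (0.351, "<0.001"),
    "risk": (-0.231, "<0.001"),
    "brand trust": (0.211, "<0.001"),
    "response speed": (0.103, "<0.05"),
    "predictability": (0.103, "<0.05"),
}


def significant(p: str, alpha: float = ALPHA) -> bool:
    """Read an exact p-value or an upper bound such as "<0.05"."""
    if p.startswith("<"):
        return float(p[1:]) <= alpha
    return float(p) < alpha


def robustness_check(full, reduced, alpha=ALPHA):
    """Return (retained_ok, dropped_ok).

    retained_ok: every variable kept in the reduced model has the same sign
    and is significant in both specifications.
    dropped_ok: every removed variable was non-significant in the full model.
    """
    retained_ok = all(
        (full[v][0] > 0) == (reduced[v][0] > 0)
        and significant(full[v][1], alpha)
        and significant(reduced[v][1], alpha)
        for v in reduced
    )
    dropped_ok = all(not significant(full[v][1], alpha) for v in full if v not in reduced)
    return retained_ok, dropped_ok


print(robustness_check(FULL_MODEL, REDUCED_MODEL))  # (True, True)
```

A sign flip in any retained coefficient, for example a positive risk coefficient in one specification, would make the first flag `False`.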

Difference analysis.

This part uses independent-samples t-tests and one-way analysis of variance (ANOVA) to examine whether trust differs significantly across the samples’ demographic variables and task characteristics. Independent-samples t-tests were used for gender and task characteristics, and one-way ANOVA was used for age, education background, and personality.

The results show no significant differences in trust in chatbots by task characteristics (P = 0.998 > 0.05), age (P = 0.849 > 0.05), or education level (P = 0.922 > 0.05). Trust in chatbots did differ significantly by gender and by personality (extraversion, conscientiousness, and openness). For gender (P = 0.037 < 0.05), male users reported higher trust in chatbots than female users.
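For reference, the two test statistics used here can be computed directly. This NumPy sketch returns the pooled-variance independent-samples t statistic and the one-way ANOVA F statistic with their degrees of freedom; p-values would then come from the t and F distributions, for example via `scipy.stats.ttest_ind` and `scipy.stats.f_oneway`. The scores are toy numbers, not the study’s data.

```python
import numpy as np


def pooled_t(a, b):
    """Independent-samples t statistic with pooled variance; returns (t, df)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * a.var(ddof=1) + (n2 - 1) * b.var(ddof=1)) / (n1 + n2 - 2)
    t = (a.mean() - b.mean()) / np.sqrt(sp2 * (1 / n1 + 1 / n2))
    return float(t), n1 + n2 - 2


def one_way_f(*groups):
    """One-way ANOVA F statistic; returns (F, df_between, df_within)."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand = np.concatenate(groups).mean()
    ss_between = sum(len(g) * (g.mean() - grand) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    f = (ss_between / (k - 1)) / (ss_within / (n - k))
    return float(f), k - 1, n - k


# Toy trust scores (not the study's data).
print(pooled_t([5, 6, 7], [3, 4, 5]))              # t = sqrt(6), about 2.449; df = 4
print(one_way_f([5, 6, 7], [3, 4, 5], [1, 2, 3]))  # (12.0, 2, 6)
```

With two groups, the ANOVA F equals the square of the pooled t statistic, which is a quick consistency check between the two functions.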

The moderating effect analysis.

Because H4, H5, and H8 were not supported, their moderation hypotheses were not tested. We then tested the moderation hypotheses attached to H1, H2, H3, H6, and H7, that is, whether trust in technology and privacy concerns moderate the effects of professionalism, response speed, predictability, brand trust, and risk on users’ trust.

The results show that trust in technology has no significant moderating effect on any of the variables: professionalism × trust in technology (β = 0.041, P = 0.333), response speed × trust in technology (β = −0.064, P = 0.262), predictability × trust in technology (β = −0.023, P = 0.660), brand trust × trust in technology (β = −0.052, P = 0.441), and risk × trust in technology (β = 0.016, P = 0.784). H1b, H2b, H3b, H6b, and H7b are therefore not supported. Privacy concerns moderate the effects of the environment-related factors brand trust (β = 0.097, P < 0.05) and risk (β = 0.104, P < 0.05), so H6a and H7a are supported. For the chatbot-related factors, professionalism (β = 0.037, P = 0.303), response speed (β = 0.036, P = 0.347), and predictability (β = −0.059, P = 0.103) show no moderating effect, so H1a, H2a, and H3a are not supported. The results of hypothesis testing are summarized in Table 7, and the moderating effect of privacy concerns is shown in Fig. 4.
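The moderation tests above rest on the usual interaction-term approach: regress trust on the predictor, the moderator, and their product. The sketch below is our illustration under stated assumptions: it mean-centers the predictor and moderator, fits by OLS with NumPy, and reports unstandardized coefficients (standardize the inputs first to obtain βs comparable to those reported). The simple slope b1 + b3·m gives the predictor’s effect at a chosen moderator level. `moderation` and `simple_slope` are hypothetical helpers, and the data are synthetic, with a built-in interaction of 0.3.

```python
import numpy as np


def moderation(x, m, y):
    """Fit y = b0 + b1*xc + b2*mc + b3*xc*mc with mean-centered x and m.

    Returns the coefficient array [b0, b1, b2, b3]; b3 is the moderation effect.
    """
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    xc, mc = x - x.mean(), m - m.mean()
    design = np.column_stack([np.ones_like(xc), xc, mc, xc * mc])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef


def simple_slope(coef, m_centered):
    """Effect of x on y at a given centered moderator value: b1 + b3 * m."""
    return coef[1] + coef[3] * m_centered


# Synthetic data with a known interaction of 0.3 (not the study's data).
rng = np.random.default_rng(1)
x = rng.normal(size=2000)  # a predictor, e.g. brand trust
m = rng.normal(size=2000)  # a moderator, e.g. privacy concerns
y = 1 + 0.5 * x + 0.2 * m + 0.3 * x * m + rng.normal(scale=0.5, size=2000)
coef = moderation(x, m, y)
sd_m = m.std(ddof=1)
print(np.round(coef, 2))
print(round(simple_slope(coef, -sd_m), 2), round(simple_slope(coef, sd_m), 2))
```

Centering does not change the interaction coefficient; it only makes b1 interpretable as the predictor’s effect at the average moderator level.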

5 Summary

5.1 Conclusions

We found that the professionalism, response speed, and predictability of chatbots have a positive impact on users’ trust in chatbots, which supports the corresponding hypotheses.

Among environment-related factors, brand trust and risk are significantly related to users’ trust in chatbots, brand trust positively and risk negatively, which supports the corresponding hypotheses. Because human customer service and chatbot customer service occupy relatively independent positions, human support does not significantly change users’ trust in chatbot customer service.

Although trust in technology can reflect the personal differences of users to a certain extent, our results showed that trust in technology is not a moderating variable.

The relationship between environment-related factors (brand trust, risk, etc.) and trust is moderated by privacy concerns, while the effects of chatbot-related factors (professionalism, response speed, predictability, etc.) are not. Moreover, privacy concerns weaken the positive relationship between brand trust and trust in chatbots and strengthen the negative relationship between risk and trust in chatbots. Enterprises therefore need to strengthen user privacy protection mechanisms, establish clear and sufficient policies, and reduce users’ privacy concerns. The findings also suggest that, for users with high privacy concerns, organizations should focus on improving environment-related factors rather than chatbot-related factors, that is, on improving brand management and users’ trust in the brand, or on reducing users’ perceived risk in using chatbots. Making chatbots more professional, however, is an approach that works for all users, whether their privacy concerns are high or low.

5.2 Contributions

Theoretically, this study adds to knowledge of how trust is built and enhanced. It also suggests that models of interaction between users and chatbots need to take trust into account and to treat, as a whole, the process by which human-computer trust creates value for all parties in human-computer interaction.

In practice, the findings of this study indicate directions for improving chatbots, provide insights into enhancing users’ trust in chatbots, and offer practical guidance for chatbot development.

5.3 Limitations and Further Research

Trust and distrust are relatively independent constructs that can coexist [32]. Dividing factors into hygiene factors and motivators according to Herzberg’s two-factor theory, and examining their correlations with trust and with distrust, may yield valuable findings. Users’ trust in chatbots should also be measured from several angles, such as functionality, helpfulness, and reliability, or cognitive versus affective trust.

The object of this study is Taobaoxiaomi, which is representative of current e-commerce chatbots and other task-oriented chatbots. However, chatbots also exist in physical and virtual forms and in task-oriented and non-task-oriented varieties, and newer chatbots already offer better emotional interaction, such as the Jingdongzhilian cloud intelligent emotional customer service. As technology develops and usage scenarios change, the factors influencing the interaction between users and chatbots will change, and theories and models of human-machine trust should be refined over time.

References

Følstad, A., Brandtzæg, P., et al.: Chatbots and the New World of HCI. Interactions 24(4), 38–42 (2017)

Følstad, A., Nordheim, C.B., Bjørkli, C.A.: What makes users trust a Chatbot for customer service? An exploratory interview study. In: The Fifth International Conference on Internet Science – INSCI 2018 (2018). https://doi.org/10.1007/978-3-030-01437-7_16

Grand View Research: Chatbot Market Size Worth $2,485.7 Million By 2028 | CAGR: 24.9% (April 2021). https://www.grandviewresearch.com/press-release/global-chatbot-market. Accessed 29 May 2021

Thompson, C.: May A.I. help you? New York Times (18 November 2018). https://www.nytimes.com/interactive/2018/11/14/magazine/tech-design-ai-chatbot.html

Luo, X., Tong, S., Fang, Z., et al.: Frontiers: machines vs. humans: the impact of artificial intelligence Chatbot disclosure on customer purchases. Mark. Sci. 38(6), 937–947 (2019)

Wilson, H.J., Daugherty, P.R., Morini-Bianzino, N.: The jobs that artificial intelligence will create. MIT Sloan Manage. Rev. 58(4), 14 (2017)

Froehlich, A.: Pros and cons of chatbots in the IT helpdesk. InformationWeek. https://www.informationweek.com/strategic-cio/it-strategy/pros-and-cons-of-chatbots-in-the-it-helpdesk/a/d-id/1332942. Accessed 18 Oct 2018

Dietvorst, B.J., Simmons, J.P., Massey, C.: Overcoming algorithm aversion: people will use imperfect algorithms if they can (even slightly) modify them. Manage. Sci. 64(3), 1155–1170 (2018)

Kestenbaum, R.: Conversational commerce is where online shopping was 15 years ago - Can it also become ubiquitous? Forbes (27 June 2018). https://www.forbes.com/sites/Richard%20Kestenbaum/2018/06/27/shopping-by-voice-is-small-now-but-it-has-huge-potential/?sh=40e52c907ba1

Van den Broeck, E., Zarouali, B., Poels, K.: Chatbot advertising effectiveness: when does the message get through? Comput. Hum. Behav. 98, 150–157 (2019)

Corritore, C.L., Kracher, B., Wiedenbeck, S.: On-line trust: concepts, evolving themes, a model. Int. J. Hum. Comput. Stud. 58(6), 737–758 (2003)

Rotter, J.B.: A new scale for the measurement of interpersonal trust. J. Pers. 35(4), 651–665 (1967)

Arrow, K.J.: The Limits of Organization. Norton, New York (1974)

Baker, A.L., Phillips, E.K., Ullman, D., et al.: Toward an understanding of trust repair in human-robot interaction: current research and future directions. ACM Trans. Interact. Intell. Syst. 8(4), 1–30 (2018)

Mayer, R.C., Davis, J.H., Schoorman, F.D.: An integrative model of organizational trust. Acad. Manag. Rev. 20(3), 709–734 (1995)

Rousseau, D.M., Sitkin, S.B., Burt, R.S., et al.: Not so different after all: a cross-discipline view of trust. Acad. Manag. Rev. 23(3), 393–404 (1998)

Glikson, E., Woolley, A.W.: Human trust in artificial intelligence: review of empirical research. Acad. Manag. Ann. 14(2), 627–660 (2020)

Coeckelbergh, M.: Can we trust robots? Ethics Inf. Technol. 14(1), 53–60 (2012)

Desai, M., Stubbs, K., Steinfeld, A., et al.: Creating Trustworthy Robots: Lessons and Inspirations from Automated Systems (2009)

Hancock, P.A., Billings, D.R., Schaefer, K.E., et al.: A meta-analysis of factors affecting trust in human-robot interaction. Hum. Factors 53(5), 517–527 (2011)

Nordheim, C.B.: Trust in Chatbots for customer service–findings from a questionnaire study (2018)

Ho, C.C., MacDorman, K.F.: Revisiting the uncanny valley theory: developing and validating an alternative to the godspeed indices. Comput. Hum. Behav. 26(6), 1508–1518 (2010)

McKnight, D.H., Carter, M., Thatcher, J.B., et al.: Trust in a specific technology: an investigation of its components and measures. ACM Trans. Manag. Inf. Syst. 2(2), 12 (2011)

Nordheim, C.B., Følstad, A., Bjørkli, C.A.: An initial model of trust in Chatbots for customer service: findings from a questionnaire study. Interact. Comput. 31(3), 317–335 (2019)

Keeney, R.L.: Value-Focused Thinking: A Path to Creative Decisionmaking. Harvard University Press, Cambridge (1992)

Keeney, R.L.: Creativity in MS/OR: value-focused thinking—creativity directed toward decision making. Interfaces 23(3), 62–67 (1993)

McKnight, D.H., Choudhury, V., Kacmar, C.: Developing and validating trust measures for e-commerce: an integrative typology. Inf. Syst. Res. 13(3), 344–359 (2002)

Drevin, L., Kruger, H.A., Steyn, T.: Value-focused assessment of ICT security awareness in an academic environment. Comput. Secur. 26(1), 36–43 (2007)

Deng, C.H., Lu, Y.B.: Research on VFT-based trust construction framework for mobile commerce. Sci. Technol. Manag. Res. 03, 185–188 (2008)

Keeney, R.L.: Value-focused Thinking. Harvard University Press, Cambridge (1992)

Sheng, H., Nah, F.H., Siau, K.: Strategic implications of mobile technology: a case study using value-focused thinking. J. Strateg. Inf. Syst. 14(3), 269–290 (2005)

Lin, H., et al.: An empirical study on the difference of influencing factors between trust and distrust in consumers’ first online shopping. Mod. Inf. 35(4), 5 (2015)

Acknowledgments

This work is supported by the 2019 project “Digital Transformation in China and Germany: Strategies, Structures and Solutions for Ageing Societies” (GZ 1570), by the Research Project of Shanghai Science and Technology Commission (No. 20dz2260300), and by the Fundamental Research Funds for the Central Universities.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Lv, Y., Hu, S., Liu, F., Qi, J. (2022). Research on Users’ Trust in Customer Service Chatbots Based on Human-Computer Interaction. In: Meng, X., Xuan, Q., Yang, Y., Yue, Y., Zhang, ZK. (eds) Big Data and Social Computing. BDSC 2022. Communications in Computer and Information Science, vol 1640. Springer, Singapore. https://doi.org/10.1007/978-981-19-7532-5_19

DOI: https://doi.org/10.1007/978-981-19-7532-5_19

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-7531-8

Online ISBN: 978-981-19-7532-5

eBook Packages: Computer Science, Computer Science (R0)