Abstract

Medical experts analyze brain MR images to segment the tumor area. The resulting report may vary with the machine used or the experience of the operator. Automating brain tumor segmentation from MRIs is therefore needed to maintain uniformity and to give the doctor a highly accurate report on which to base the diagnosis of the patient. Many researchers have trained artificial neural networks (ANNs) to segment tumorous cells in MR images, and multiple state-of-the-art ANN-based methods have been proposed with promising results. Motivated by the architecture and performance of neural networks, this chapter discusses the working of ANNs and their application in brain tumor segmentation.

Introduction

Nowadays, artificial intelligence (AI) has become part of almost every field, where it is used to automate work and to improve accuracy and precision. In the medical field, there are still many areas in which AI can play a vital role in better diagnosis. Brain tumor segmentation is one such emerging area, in which many researchers are working to automate segmentation based on magnetic resonance imaging (MRI). In a broad sense, brain tumor segmentation means identifying the presence or absence of a tumor in an MR image. It is not restricted to that, however: an AI-based algorithm can also identify the exact area of the tumor, which helps doctors reach a better diagnosis. To date, various automatic and semi-automatic methods have been proposed for the segmentation process [1,2,3]. In the fully automatic approach [4, 5], the seed pixels are selected automatically by the algorithm, which requires a very high computation time. In the semi-automatic approach [6,7,8], users manually select the Region of Interest (RoI) that is given as input to the algorithm; the computation time is therefore lower than in the fully automatic approach. Artificial Neural Networks (ANNs) are widely used by researchers to automate or semi-automate brain tumor segmentation with higher accuracy. An ANN is modeled on the structure and working of the brain, as shown in Fig. 12.1. The dendrites correspond to the input layer of the ANN, which takes the input and passes it to the neuron; the neuron processes it and sends the result to the output layer. In Fig. 12.1b, three inputs, each with a specific weight, are given to the neuron, which processes the information and provides the result.
Figure 12.1b represents the structure of a neural network with no hidden layer, also called a perceptron (or single-layer neural network) [9,10,11,12].

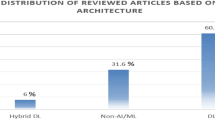

ANNs are used to extract information from medical images in order to segment and classify brain tumors in MRIs. ANN-based methods produce better results than classical machine learning methods. Two main approaches are used for segmentation and classification: patch-based segmentation and semantic segmentation [13,14,15,16,17].

Concept of ANN and Activation Function

A basic, single-layer neural network comprises an input layer, a neuron, and an output layer. The neuron processes the information given by the input layer on the basis of the assigned weights and provides the output y′. Every neuron performs two steps: first, it computes the weighted sum of the input values, and second, it applies the activation function to that sum, as shown in Fig. 12.2. In step 1, the weighted sum of the inputs, denoted S, is computed as shown in Eq. 12.1, where n is the number of features (x1, x2, …, xn).

Step 2 is the application of the activation function, which decides, based on the value of S, whether the neuron is activated or remains deactivated. During forward propagation from left to right, the activation function decides which of the subsequent neurons take part in predicting the final result y′, as shown in Fig. 12.3. The predicted result y′ is then compared with the actual value y for that input (a single row of the dataset), and the weights are adjusted to minimize the difference between y′ and y, represented by the cost C as shown in Eq. 12.2. The difference is squared to remove the negative sign. The process of adjusting the weights continues until the value of C becomes almost zero.
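From the definitions above, Eqs. 12.1 and 12.2 can be written as follows. This is a reconstruction from the surrounding text: \(w_i\) denotes the weight assigned to input \(x_i\) (a symbol assumed here), and the factor 1/2 is chosen to agree with the value C = 1/2 obtained in the AND example of the threshold function.

\[
S = \sum_{i=1}^{n} w_i x_i \tag{12.1}
\]

\[
C = \frac{1}{2}\left( y' - y \right)^{2} \tag{12.2}
\]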

This process of repeatedly adjusting the weights to minimize C, that is, the Mean Squared Error (MSE), is called backpropagation [18, 19].
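A single weight adjustment can be sketched as follows. This is a minimal illustration and not the chapter's own implementation: it assumes a sigmoid activation (so that the cost is differentiable), the cost C = ½(y′ − y)², a learning rate of 0.5, and hypothetical input and weight values. It only shows that one gradient-descent update reduces C for the given row.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def forward(weights, inputs):
    # Weighted sum S of the inputs (Eq. 12.1), followed by the activation.
    s = sum(w * x for w, x in zip(weights, inputs))
    return sigmoid(s)

def cost(y_pred, y_true):
    # Squared-error cost with factor 1/2 (assumed form of Eq. 12.2).
    return 0.5 * (y_pred - y_true) ** 2

def update(weights, inputs, y_true, lr=0.5):
    """One gradient-descent step: w_i <- w_i - lr * dC/dw_i."""
    y_pred = forward(weights, inputs)
    # Chain rule for a sigmoid neuron: dC/dw_i = (y' - y) * y' * (1 - y') * x_i
    delta = (y_pred - y_true) * y_pred * (1.0 - y_pred)
    return [w - lr * delta * x for w, x in zip(weights, inputs)]

# Hypothetical row: the first entry is the bias unit, fixed at 1.
inputs, y_true = [1.0, 0.0, 1.0], 0.0
weights = [0.3, -0.2, 0.4]  # hypothetical starting weights

before = cost(forward(weights, inputs), y_true)
weights = update(weights, inputs, y_true)
after = cost(forward(weights, inputs), y_true)
print("C before:", before, "C after:", after)
```

Repeating this update over all rows and many passes is what drives C toward zero.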

Activation Function (φ)

The activation function determines whether a particular neuron contributes to the prediction of the result. The activation function φ is applied to the value of S. Many activation functions are available, and the choice depends on the problem [20,21,22,23,24,25].

Threshold Function

The threshold function is used for binary classification problems, in which the result is one of two classes (e.g., 0 or 1, A or B); it cannot be used for multi-class problems. Its graph is shown in Fig. 12.4, in which the y-axis represents the predicted output y′ and the x-axis represents the weighted sum of the inputs. Let us take the problem of computing the AND operation using a neural network with the threshold function. Considering the truth table of the AND operation in Table 12.1, we design a neural network that predicts the result on the basis of the inputs and weights.

In Fig. 12.5, the first node is the bias unit and the other two nodes are the inputs; the weights, shown in blue, are 10 on each connection. When the first row of Table 12.1 is given as input to the network, the neuron calculates S and then applies the threshold activation function to obtain y′. With the bias unit equal to 1 and both inputs equal to 0, S = 10·1 + 10·0 + 10·0 = 10.

The activation function is now applied to the value of S. According to Fig. 12.4, \(\varphi \left( {10} \right)\) equals 1, so y′ for this input is 1. According to Table 12.1, however, it must be 0.

Therefore, according to Eq. 12.2, C = 1/2, which is very high. To minimize C, backpropagation is performed to adjust the weights, as shown in Fig. 12.6.

With the new weights, y′ is calculated again for the first row, which now gives S = −30. The activation function is then applied to this value. According to Fig. 12.4, \(\varphi \left( { - 30} \right)\) equals 0, so y′ for this input is 0. Since the actual value of y for this input is also 0, we keep these weights and calculate the value for the next set of inputs (second row), as shown in Fig. 12.7.

Again, S and \(\varphi \left( S \right)\) are calculated to compute y′; for the second row, S = −10. According to Fig. 12.4, \(\varphi \left( { - 10} \right)\) equals 0, so y′ for this input is 0. Since the actual value of y for this input is also 0, we keep these weights and calculate the value for the next set of inputs (third row).

For the third row, S is again −10, and the activation function is applied to it. The value of \(\varphi \left( { - 10} \right)\) is 0, so y′ for this input is 0. Since the actual value of y for this input is also 0, we keep these weights and calculate the value for the last set of inputs (final row).

For the final row, S = 10, and the activation function is applied to it. According to Fig. 12.4, \(\varphi \left( {10} \right)\) equals 1, so y′ for this input is 1. Since the actual value of y for this input is also 1, the model correctly predicts the result of the AND operation for all four rows.
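The walkthrough above can be reproduced with a few lines of Python. This is a sketch under stated assumptions: the bias unit is fixed at 1, the threshold function switches at S = 0, and the adjusted weights (−30, 20, 20) are inferred from the values S = −30, −10, −10, and 10 quoted in the text rather than read from Fig. 12.6. The function names are illustrative.

```python
def threshold(s):
    # Step function: 1 when S >= 0, else 0 (switching point at 0 is assumed).
    return 1 if s >= 0 else 0

def predict(weights, x1, x2):
    bias_w, w1, w2 = weights
    s = bias_w * 1 + w1 * x1 + w2 * x2  # bias unit has the constant value 1
    return s, threshold(s)

# Truth table of the AND operation: ((x1, x2), y)
AND_TABLE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

initial = (10, 10, 10)    # weights before adjustment (Fig. 12.5)
adjusted = (-30, 20, 20)  # inferred from the S values stated in the text

for name, weights in [("initial", initial), ("adjusted", adjusted)]:
    for (x1, x2), y in AND_TABLE:
        s, y_pred = predict(weights, x1, x2)
        print(name, (x1, x2), "S =", s, "y' =", y_pred, "y =", y)
```

With the initial weights the first row is misclassified (y′ = 1 while y = 0); with the adjusted weights all four rows match the truth table.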

Sigmoid Function

The sigmoid function works in the same way as the threshold function discussed in the previous section; the only difference is the shape of its graph, shown in Fig. 12.8. In the figure, the y-axis represents the predicted output y′ and the x-axis represents the weighted sum of the inputs.

Here we look at the sigmoid function for the same AND logic, using the weighted neural network shown in Fig. 12.7. When the first row of Table 12.1 is given as input to the neural network, the neuron calculates the values of \(S\) and \(\varphi \left( S \right)\).

Here \(S = - 30\), that is, \(x = - 30\). According to the sigmoid graph in Fig. 12.8, this value lies far to the left of 0; therefore, \(\varphi \left( x \right)\) is approximately 0, that is, y′ ≈ 0. Since the actual label y for this row is also 0, the model works well with these weights. Similarly, the second row is given as input to the neural network, and the values of \(S\) and \(\varphi \left( S \right)\) are calculated.

For the second row, \(S = - 10\), so \(x\) is still to the left of 0 on the sigmoid graph; therefore, \(\varphi \left( x \right)\) is close to 0, that is, y′ ≈ 0. Following the same process, the output is predicted for the third input row.

Again, the value of \(x\) is to the left of 0; therefore, \(\varphi \left( x \right)\) is close to 0, that is, y′ ≈ 0. Finally, we calculate the output for the last input row.

In this case, \(S = 10\), that is, \(x = 10\), which lies to the right of 0 on the sigmoid graph. Therefore, \(\varphi \left( x \right)\) is close to 1, that is, y′ ≈ 1.
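The readings taken from the sigmoid graph can be checked numerically. The sketch below uses the standard definition φ(x) = 1 / (1 + e^(−x)) and assumes the same weights as in the threshold example (−30 for the bias unit and 20 for each input, inferred from the S values in the text).

```python
import math

def sigmoid(s):
    # Standard logistic function: maps any real S into the open interval (0, 1).
    return 1.0 / (1.0 + math.exp(-s))

weights = (-30, 20, 20)  # (bias weight, w1, w2), inferred from the text
rows = [(0, 0), (0, 1), (1, 0), (1, 1)]  # inputs of the AND truth table

for x1, x2 in rows:
    s = weights[0] * 1 + weights[1] * x1 + weights[2] * x2
    print((x1, x2), "S =", s, "phi(S) =", sigmoid(s))
```

Unlike the threshold function, the sigmoid never returns exactly 0 or 1; its output is read as a value close to one of the two classes.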

Hyperbolic Tangent (tanh)

This activation function is very similar to the sigmoid function; the only difference is that it scales any input value into the range −1 to 1, whereas the sigmoid function scales the input into the range 0 to 1. If the input is a large positive number, the output is close to 1, and if the input is a large negative number, the output is close to −1, as shown in Fig. 12.9.
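The difference in output range can be seen by evaluating both functions on the same inputs. The sketch below uses `math.tanh` from the Python standard library; the sample inputs are arbitrary. It also checks the standard identity tanh(x) = 2·sigmoid(2x) − 1, which shows that tanh is a rescaled and shifted sigmoid.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    # tanh output lies in (-1, 1); sigmoid output lies in (0, 1).
    print(x, "tanh =", round(math.tanh(x), 4), "sigmoid =", round(sigmoid(x), 4))

# tanh expressed through the sigmoid function.
x = 1.5
print(math.tanh(x), 2.0 * sigmoid(2.0 * x) - 1.0)
```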

Rectifier Function (ReLU)

ReLU propagates only positive input: if the value of \(S\) is greater than 0, where \(S\) is the weighted sum of the inputs as in Eq. 12.1, the value is passed through unchanged; otherwise the output is 0. The graph of ReLU in Fig. 12.10 shows that if \(x > 0\), then \(\varphi \left( x \right)\) is equal to \(x\), where \(x = S\).

ReLU is one of the most popular activation functions in neural networks with multiple layers and can be considered the default choice for them. The alternatives have drawbacks: the threshold activation function can only be used for binary classification problems, and the sigmoid and tanh activation functions suffer from the vanishing gradient problem [26] when the weights are adjusted during backpropagation.
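The vanishing gradient problem can be illustrated by comparing derivatives. The sketch below is illustrative only; it uses the standard derivatives φ′(x) = φ(x)(1 − φ(x)) for the sigmoid and 1 − tanh²(x) for tanh, and takes the ReLU derivative at 0 to be 0 by convention (an assumption). The sample inputs and the ten-layer depth are arbitrary.

```python
import math

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # Derivative of ReLU; the value at x == 0 is taken as 0 by convention.
    return 1.0 if x > 0 else 0.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # never larger than 0.25

def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2  # never larger than 1

for x in (-10.0, -1.0, 0.5, 10.0):
    print(x, relu(x), relu_grad(x), sigmoid_grad(x), tanh_grad(x))

# Backpropagation multiplies one such derivative per layer. Even in the best
# case, ten sigmoid layers scale the gradient by at most 0.25 ** 10.
print(0.25 ** 10)
```

For large positive or negative inputs the sigmoid and tanh derivatives approach 0, whereas the ReLU derivative stays at 1 for every positive input.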

Steps Involved in ANN

Here, we discuss the basic steps required to apply an ANN to a particular problem.

Step 1: Randomly assign the weights of the first layer. The weights should be close to 0 but not equal to 0, for example in the range from −0.5 to +0.5.

Step 2: Feed the first row of the dataset to the input layer. The number of nodes in the input layer should equal the number of independent inputs, that is, the number of features (columns).

Step 3: Propagate through the network from left to right, applying the weights and the activation function, until the predicted output y′ is obtained. This process is called forward propagation.

Step 4: Calculate the difference between the predicted y′ and the actual y, and adjust the weights from the last layer to the first layer, that is, from right to left. The weights should be adjusted in such a way that the error is minimized. This process of adjusting the weights from right to left is called backpropagation.

Step 5: Repeat steps 2 to 4 for the remaining rows. The weights can be updated either after every single input or after a batch of inputs, once the error of that batch has been calculated.

Step 6: After one epoch, the process can be repeated for multiple epochs until the error reaches a minimum, ideally the global minimum. Here, an epoch means one complete pass of all the data through the network, after which we have a neural network with updated weights.
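The six steps above can be sketched as a small training loop. This is a minimal illustration under stated assumptions, not a reference implementation: it trains a single sigmoid neuron on the AND truth table, uses the cost C = ½(y′ − y)², updates the weights after every row, and the learning rate, epoch count, and random seed are arbitrary choices.

```python
import math
import random

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def predict(weights, features):
    x = [1.0] + list(features)  # bias unit fixed at 1
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)))

def train(rows, epochs=5000, lr=1.0, seed=0):
    rng = random.Random(seed)
    n_features = len(rows[0][0])
    # Step 1: small random weights in [-0.5, 0.5]; index 0 is the bias weight.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_features + 1)]
    for _ in range(epochs):                # Step 6: repeat for several epochs
        for features, y in rows:           # Steps 2 and 5: one row at a time
            x = [1.0] + list(features)
            y_pred = predict(weights, features)  # Step 3: forward propagation
            # Step 4: gradient of C with respect to S for a sigmoid neuron,
            # followed by the weight update from the output back to the inputs.
            delta = (y_pred - y) * y_pred * (1.0 - y_pred)
            weights = [w - lr * delta * xi for w, xi in zip(weights, x)]
    return weights

AND_ROWS = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights = train(AND_ROWS)
for features, y in AND_ROWS:
    print(features, "y' =", round(predict(weights, features), 3), "y =", y)
```

Updating after every row corresponds to the first option in step 5; accumulating `delta` over several rows before updating would give the batch variant.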

Application of ANN in Brain Tumor Segmentation

Image segmentation is defined as the process of partitioning an image to isolate the area of interest. It has a wide range of applications in medical science, chiefly brain tumor segmentation, tissue classification, fracture detection, tumor volume calculation, lung tumor segmentation, and many more. A brain tumor is an abnormal growth of tissue of arbitrary shape. It can be malignant or benign; only malignant cells are cancerous, multiplying or growing at a very high rate. MRI is considered a significant advance in the field of brain tumor segmentation. MRIs provide significant information about the brain tissues through different modalities such as FLAIR, T1, T1c, and T2. The various modalities, together with the segmented mask of an MR image, are shown in Fig. 12.11 as per the BraTS 2013 dataset [27]. These MRIs are analyzed by a radiologist, who segments the brain tumor manually or on the basis of prior knowledge and generates a report. Such reports are prone to human error; moreover, manual segmentation of brain tumors from MR images is a very tedious task. Because these reports play a pivotal role in the diagnosis of the patient, the accuracy of brain tumor segmentation and the uniformity of reports across different MRI machines are two major issues in medical science.

Considering the above requirements, an automated approach for segmenting brain tumors in MR images with high accuracy is indispensable. Many researchers have therefore contributed various semi-automated and fully automated methods for brain tumor segmentation. With the evolution of machine learning, researchers have proposed methods with remarkable results. Support vector machines, random forests, KNN, and K-means clustering are among the most widely used machine learning algorithms for brain tumor segmentation. However, the accuracy of machine learning based models leaves considerable room for improvement; moreover, even when large amounts of data are provided, their accuracy tends to stagnate. With the growing availability of high-quality MRI data, neural networks have become an emerging approach among researchers.

Artificial neural networks have been adopted by researchers as a promising approach for automatic brain tumor segmentation. They are used for pixel-wise binary classification, that is, tumor or non-tumor [28,29,30]. Moreover, multi-class classification models based on ANNs achieve higher accuracy than various well-performing machine learning methods. An ANN is a complex network of artificial neurons and multiple hidden layers. The input given to the input layer traverses the web of hidden layers before producing the output. Forward and backward propagation are used to fine-tune the network for better accuracy, that is, to minimize the loss. In [31], the authors proposed a novel method for brain tumor segmentation by fusing SVM and ANN. The segmented brain tumor in MR images is shown in Fig. 12.12.
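Pixel-wise binary classification can be pictured with a toy example. The sketch below is purely illustrative and is not any of the cited methods: the "image" is synthetic, each pixel is assumed to carry four normalized intensities (FLAIR, T1, T1c, T2), and the weights and bias are hand-picked hypothetical values rather than learned ones. A single sigmoid neuron scores each pixel, and a cutoff of 0.5 turns the scores into a tumor/non-tumor mask.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def segment(image, weights, bias, cutoff=0.5):
    """Return a binary mask (1 = tumor, 0 = non-tumor), one decision per pixel."""
    mask = []
    for row in image:
        mask_row = []
        for pixel in row:  # pixel = (FLAIR, T1, T1c, T2), each in [0, 1]
            s = bias + sum(w * v for w, v in zip(weights, pixel))
            mask_row.append(1 if sigmoid(s) >= cutoff else 0)
        mask.append(mask_row)
    return mask

# Synthetic 2 x 3 image: the "tumor" pixels are simply brighter in three channels.
healthy = (0.2, 0.5, 0.2, 0.3)
tumor = (0.9, 0.4, 0.8, 0.9)
image = [[healthy, tumor, tumor],
         [healthy, healthy, tumor]]

weights = (4.0, -1.0, 4.0, 3.0)  # hypothetical, hand-picked for this toy data
bias = -5.0

mask = segment(image, weights, bias)
for mask_row in mask:
    print(mask_row)
```

In practice the weights are learned by forward and backward propagation on labeled MR images, and the networks contain hidden layers and richer features than raw intensities.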

The feed-forward neural network (FNN) is one of the simplest ANNs used for brain tumor segmentation [32,33,34,35]. Information flows from the input layer to the output layer, traversing multiple hidden layers. An FNN model is trained using forward and backward propagation. A feed-forward neural network [36] has been shown to outperform some standard machine learning algorithms such as Bayesian classifiers and KNN, with an accuracy of 80%. In [37], the authors proposed a brain tumor segmentation method based on a feedback pulse-coupled neural network, with image features extracted using the discrete wavelet transform (DWT). Dimensionality was also reduced with principal component analysis to speed up the computation. Shenbagarajan et al. [38] proposed a novel model for brain tumor segmentation that integrates a region-based active contour model for segmentation with an ANN for classification.

Conclusion

In this chapter, we discussed the concept and importance of artificial neural networks in solving complex problems. The role and types of activation functions were discussed in detail, with examples, along with which activation function should be used for a particular problem, since the activation function plays a major role in the design of any neural network. Artificial neural networks play a vital role in automating the segmentation of medical images, for example, in brain tumor segmentation. With this automation, uniformity can be maintained in the results derived from MR images, so that doctors can diagnose patients more reliably. The concepts of forward propagation and backpropagation were also discussed. In the future, deep learning and machine learning algorithms can be applied in other medical areas that remain largely unexplored, to make useful contributions to the medical field.

References

1. Clark, M.C., Hall, L.O., Goldgof, D.B., Velthuizen, R., Murtagh, F.R., Silbiger, M.S.: Automatic tumor segmentation using knowledge based techniques. IEEE Trans. Med. Imaging 17(2), 187–201 (1998)

2. Lynn, M., Lawerence, O.H., Demitry, B., Goldgof, R.M.F.: Automatic segmentation of non-enhancing brain tumors. Artif. Intell. Med. 21, 43–63 (2001)

3. Wang, T., Cheng, I., Basu, A.: Fluid vector flow and applications in brain tumor segmentation. IEEE Trans. Biomed. Eng. 56(3), 781–789 (2009)

4. Salah, M.B., et al.: Fully automated brain tumor segmentation using two MRI modalities. In: International Symposium on Visual Computing. Springer, Berlin (2013)

5. Alqazzaz, S., et al.: Automated brain tumor segmentation on multi-modal MR image using SegNet. Computat. Visual Media 5(2), 209–219 (2019)

6. Dubey, R.B., et al.: Semi-automatic segmentation of MRI brain tumor. ICGST-GVIP J. 9(4), 33–40 (2009)

7. Lim, K.Y., Mandava, R.: A multi-phase semi-automatic approach for multisequence brain tumor image segmentation. Expert Syst. Appl. 112, 288–300 (2018)

8. Guo, X., Schwartz, L., Zhao, B.: Semi-automatic segmentation of multimodal brain tumor using active contours. Multimodal Brain Tumor Segmentation 27 (2013)

9. Raudys, Š.: Evolution and generalization of a single neurone: I. Single-layer perceptron as seven statistical classifiers. Neural Netw. 11(2), 283–296 (1998)

10. Shynk, J.J.: Performance surfaces of a single-layer perceptron. IEEE Trans. Neural Netw. 1(3), 268–274 (1990)

11. Auer, P., Burgsteiner, H., Maass, W.: A learning rule for very simple universal approximators consisting of a single layer of perceptrons. Neural Netw. 21(5), 786–795 (2008)

12. Marcialis, G.L., Roli, F.: Fusion of multiple fingerprint matchers by single-layer perceptron with class-separation loss function. Pattern Recogn. Lett. 26(12), 1830–1839 (2005)

13. Kao, P.-Y., et al.: Improving patch-based convolutional neural networks for MRI brain tumor segmentation by leveraging location information. Front. Neurosci. 13, 1449 (2020)

14. Cordier, N., et al.: Patch-based segmentation of brain tissues. MICCAI challenge on multimodal brain tumor segmentation. IEEE (2013)

15. Sharif, M., et al.: A unified patch based method for brain tumor detection using features fusion. Cogn. Syst. Res. 59, 273–286 (2020)

16. Rezaei, M., et al.: A conditional adversarial network for semantic segmentation of brain tumor. In: International MICCAI Brainlesion Workshop. Springer, Cham (2017)

17. Pereira, S., Alves, V., Silva, C.A.: Adaptive feature recombination and recalibration for semantic segmentation: application to brain tumor segmentation in MRI. In: International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham (2018)

18. Hecht-Nielsen, R.: Theory of the backpropagation neural network. Neural Networks for Perception, pp. 65–93. Academic Press (1992)

19. Li, J., et al.: Brief introduction of back propagation (BP) neural network algorithm and its improvement. In: Advances in Computer Science and Information Engineering, pp. 553–558. Springer, Berlin (2012)

20. Plagianakos, V.P., Vrahatis, M.N.: Training neural networks with threshold activation functions and constrained integer weights. In: Proceedings of the IEEE-INNS-ENNS International Joint Conference on Neural Networks. IJCNN 2000. Neural Computing: New Challenges and Perspectives for the New Millennium, vol. 5. IEEE (2000)

21. Huang, G.-B., et al.: Can threshold networks be trained directly? IEEE Trans. Circuits Syst. II Express Briefs 53(3), 187–191

22. Jamel, T.M., Khammas, B.M.: Implementation of a sigmoid activation function for neural network using FPGA. In: 13th Scientific Conference of Al-Ma’moon University College, vol. 13 (2012)

23. Pratiwi, H., et al.: Sigmoid activation function in selecting the best model of artificial neural networks. J. Phys. Conf. Series 1471(1) (2020)

24. Sartin, M.A., Da Silva, A.C.R.: Approximation of hyperbolic tangent activation function using hybrid methods. In: 2013 8th International Workshop on Reconfigurable and Communication-Centric Systems-on-Chip (ReCoSoC). IEEE (2013)

25. Agarap, A.F.: Deep learning using rectified linear units (relu). arXiv preprint arXiv:1803.08375 (2018)

26. Roodschild, M., Sardiñas, J.G., Will, A.: A new approach for the vanishing gradient problem on sigmoid activation. Prog. Artif. Intell. 9(4), 351–360 (2020)

27. Hu, K., et al.: Brain tumor segmentation using multi-cascaded convolutional neural networks and conditional random field. IEEE Access 7, 92615–92629 (2019)

28. Kharrat, A., et al.: A hybrid approach for automatic classification of brain MRI using genetic algorithm and support vector machine. Leonardo J. Sci. 17(1), 71–82 (2010)

29. Zacharaki, E.I., et al.: Classification of brain tumor type and grade using MRI texture and shape in a machine learning scheme. Magn. Reson. Med. Official J. Int. Soc. Magn. Reson. Med. 62(6), 1609–1618 (2009)

30. El-Dahshan, E.-S., Hosny, T., Salem, A.-B.: Hybrid intelligent techniques for MRI brain images classification. Digit. Signal Process. 20(2), 433–441 (2010)

31. Chithambaram, T., Perumal, K.: Brain tumor segmentation using genetic algorithm and ANN techniques. In: 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI). IEEE (2017)

32. Kavitha, A.R., Chellamuthu, C., Rupa, K.: An efficient approach for brain tumour detection based on modified region growing and neural network in MRI images. In: Int. Conf. Comput. Electron. Electr. Technol. (ICCEET) 1087–1095 (2012)

33. Damodharan, S., Raghavan, D.: Combining tissue segmentation and neural network for brain tumor detection. Int. Arab J. Inform. Technol. 12(1), 42–52 (2015)

34. Wang, S., Zhang, Y., Dong, Z., Du, S., Ji, G., Yan, J., Yang, J., Wang, Q., Feng, C., Phillips, P.: Feed-forward neural network optimized by hybridization of PSO and ABC for abnormal brain detection. Int. J. Imag. Syst. Technol. 25(2), 153–164 (2015)

35. Muhammad, N., Fazli, W., Sajid, A.K.: A simple and intelligent approach for brain MRI classification. J. Intell. Fuzzy Syst. 28(3), 1127–1135 (2015)

36. El-Dahshan, El-Sayed, A., et al.: Computer-aided diagnosis of human brain tumor through MRI: a survey and a new algorithm. Expert Syst. Appl. 41(11), 5526–5545 (2014)

37. Damodharan, S., Raghavan, D.: Combining tissue segmentation and neural network for brain tumor detection. Int. Arab J. Inform. Technol. (IAJIT) 12(1) (2015)

38. Shenbagarajan, A., Ramalingam, V., Balasubramanian, C., Palanivel, S.: Tumor diagnosis in MRI brain image using ACM segmentation and ANN-LM classification techniques. Indian J. Sci. Technol. 9(1)

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Verma, A. (2023). ANN: Concept and Application in Brain Tumor Segmentation. In: Kadyan, V., Singh, T.P., Ugwu, C. (eds) Deep Learning Technologies for the Sustainable Development Goals. Advanced Technologies and Societal Change. Springer, Singapore. https://doi.org/10.1007/978-981-19-5723-9_12

DOI: https://doi.org/10.1007/978-981-19-5723-9_12

Publisher Name: Springer, Singapore

Print ISBN: 978-981-19-5722-2

Online ISBN: 978-981-19-5723-9

eBook Packages: Computer Science, Computer Science (R0)