Abstract

In this paper, heterogeneous comprehensive learning particle swarm optimization (HCLPSO) is proposed for tuning a multivariable proportional integral derivative (PID) controller for the Wood and Berry system. The simulation study covers both the decentralized and the centralized PID controller. For comparison, results from tuning the multivariable PID controller with the particle swarm optimization (PSO) algorithm are considered. The objective is to minimize the integral absolute error (IAE) of the system. MATLAB/SIMULINK is used to simulate the system and the algorithms. The statistical performance of the algorithms (best value, mean value, and standard deviation) is evaluated over ten independent runs, each with its own initial conditions. It is observed that HCLPSO gives more consistent performance than the PSO algorithm.

1 Introduction

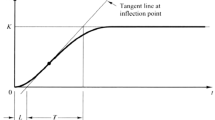

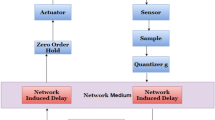

Most modern industrial processes are multiple-input multiple-output (MIMO) systems. It is difficult to obtain the desired output from such systems, so a suitable controller and tuning method are needed. Most MIMO systems are controlled with PID controllers [1]. The PID controller [2] is the most widely used controller in industrial applications; it uses a feedback loop to regulate the controlled variables. PID stands for proportional, integral, and derivative: the proportional term acts on the present value of the error, the integral term on the error accumulated over a given interval, and the derivative term on the rate of change of the error. In this work, decentralized and centralized PID controllers are tuned for a MIMO system. A decentralized controller [3] uses n PID controllers, whereas a centralized controller [4] uses n × n PID controllers. The tuning of PID controllers with various algorithms in engineering applications is reported in [5].

Recently, the heterogeneous comprehensive learning particle swarm optimization (HCLPSO) algorithm [6] has been shown to perform very well. The tuning of a PID controller for a SISO system with HCLPSO was presented in [7]. In this paper, HCLPSO is used to tune the PID controller for a MIMO system. Among population-based tuning algorithms, HCLPSO has a learning capability that helps it converge and give consistent results. In HCLPSO, the population is divided into two subpopulations, one for exploration and one for exploitation, and good results depend on balancing the two. This paper focuses on minimizing the integral absolute error (IAE) and on the performance analysis of the PID controller for the binary distillation column plant described by Wood and Berry [8].
The PID controller continuously calculates the error, which is the difference between the desired set point and the measured process variable, and applies a correction based on the proportional, integral, and derivative terms. For the performance analysis, the results of HCLPSO are compared with those of PSO.
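
In symbols, and assuming the common parallel (non-interacting) PID form, which this paper does not state explicitly, the error, the control law, and the IAE criterion can be written as follows. Here $r(t)$ is the set point, $y(t)$ the measured process variable, $u(t)$ the controller output, and $T$ the simulation horizon; all of these symbols are introduced here for illustration.

$$
e(t) = r(t) - y(t), \qquad
u(t) = K_p\,e(t) + K_i \int_0^t e(\tau)\,d\tau + K_d\,\frac{de(t)}{dt}, \qquad
\mathrm{IAE} = \int_0^T \lvert e(t) \rvert \,dt
$$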

2 MIMO System

A MIMO system has multiple inputs and multiple outputs and requires suitable control techniques to achieve good performance. It is more difficult to control than a SISO system. We use the 2 × 2 binary distillation column plant described by Wood and Berry.

The generalized transfer function for the MIMO system is given below,
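
In the usual notation, assuming a square plant with $n$ inputs $u_j$ and $n$ outputs $y_i$ (symbols chosen here for illustration), the transfer function matrix has the form

$$
Y(s) = G(s)\,U(s), \qquad
G(s) = \begin{bmatrix}
g_{11}(s) & \cdots & g_{1n}(s) \\
\vdots & \ddots & \vdots \\
g_{n1}(s) & \cdots & g_{nn}(s)
\end{bmatrix}
$$

where $g_{ij}(s)$ relates the $j$th input to the $i$th output.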

2.1 Wood and Berry System

Wood and Berry derived a mathematical model of a binary distillation column plant. A typical column plant consists of a vertical column, reboiler, condenser, and reflux drum, with one feed stream and two product streams. The plant requires at least four feedback control loops, which control the distillate concentration, the bottom concentration, the reboiler level, and the reflux drum level. Each control loop requires at least one input and one output, so the plant is treated as a MIMO system.

The derived transfer function for the column plant is given by
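
The model as it is commonly quoted from [8] is given here, with time in minutes. The symbol names are chosen here for illustration: $x_D$ and $x_B$ are the distillate and bottom compositions, $R$ is the reflux flow rate, and $S$ is the reboiler steam flow rate.

$$
\begin{bmatrix} x_D(s) \\ x_B(s) \end{bmatrix} =
\begin{bmatrix}
\dfrac{12.8\,e^{-s}}{16.7s+1} & \dfrac{-18.9\,e^{-3s}}{21s+1} \\[2ex]
\dfrac{6.6\,e^{-7s}}{10.9s+1} & \dfrac{-19.4\,e^{-3s}}{14.4s+1}
\end{bmatrix}
\begin{bmatrix} R(s) \\ S(s) \end{bmatrix}
$$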

2.2 Decentralized Controller

A decentralized controller uses n controllers, one per loop. For the Wood and Berry system, we use two PID controllers. Decentralized control is the structure most frequently used in industry, and it requires less computational time than a centralized controller. The Simulink model for the decentralized controller is shown in Fig. 1. The general transfer function for the decentralized controller is given by,
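
Assuming parallel-form PID elements (a form this paper does not state explicitly), the decentralized controller is the diagonal matrix

$$
K(s) = \mathrm{diag}\bigl(k_1(s), \ldots, k_n(s)\bigr), \qquad
k_i(s) = K_{p,i} + \frac{K_{i,i}}{s} + K_{d,i}\,s
$$

The Python sketch below is not the Simulink model used in this work. It is a minimal forward-Euler simulation of two such loops closed around the commonly quoted Wood and Berry model, and it returns the IAE summed over both outputs, as a tuning algorithm might use it for a cost. The function name `simulate_iae`, the step size, the horizon, the unit set-point step on the first output, and the summed-IAE objective are all assumptions made for illustration.

```python
from collections import deque

# First-order-plus-dead-time elements (gain, time constant, dead time), in
# minutes, as commonly quoted for the Wood and Berry column (assumed here).
WOOD_BERRY = [
    [(12.8, 16.7, 1.0), (-18.9, 21.0, 3.0)],
    [(6.6, 10.9, 7.0), (-19.4, 14.4, 3.0)],
]


def simulate_iae(gains, setpoint=(1.0, 0.0), t_end=200.0, dt=0.05):
    """Return the IAE summed over both loops for decentralized PID gains.

    gains: [(Kp1, Ki1, Kd1), (Kp2, Ki2, Kd2)], one parallel-form PID per loop.
    """
    n = 2
    # One state and one input-delay buffer per transfer function element.
    state = [[0.0] * n for _ in range(n)]
    delay = [[deque([0.0] * round(WOOD_BERRY[i][j][2] / dt)) for j in range(n)]
             for i in range(n)]
    integral = [0.0] * n
    prev_error = list(setpoint)  # outputs start at zero, so e(0) = setpoint
    iae = 0.0
    for _ in range(round(t_end / dt)):
        y = [sum(state[i]) for i in range(n)]
        error = [setpoint[i] - y[i] for i in range(n)]
        u = []
        for i, (kp, ki, kd) in enumerate(gains):
            integral[i] += error[i] * dt
            derivative = (error[i] - prev_error[i]) / dt
            u.append(kp * error[i] + ki * integral[i] + kd * derivative)
        prev_error = error
        for i in range(n):
            for j in range(n):
                k, tau, _ = WOOD_BERRY[i][j]
                delay[i][j].append(u[j])
                u_delayed = delay[i][j].popleft()  # input from one dead time ago
                state[i][j] += dt * (k * u_delayed - state[i][j]) / tau
        iae += sum(abs(e) for e in error) * dt
    return iae


# Illustrative conservative PI gains, not results from this paper.
print(simulate_iae([(0.375, 0.0452, 0.0), (-0.075, -0.00318, 0.0)]))
```

An optimizer such as PSO or HCLPSO would call a function of this kind once per candidate gain set and keep the candidates with the lowest returned value.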

2.3 Centralized Controller

A full multivariable controller for a MIMO system is called a centralized controller. Such a controller can give satisfactory responses for the system. It requires n × n controllers, whereas the decentralized controller requires only n. In this structure, the PID controllers are coupled to each other across the loops to obtain the desired output. We can design the full controller matrix and find the controller parameter values with a suitable tuning method. The Simulink model for the centralized controller is shown in Fig. 2.

The general form of the n × n multivariable PID controller is given by,
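
Assuming parallel-form PID elements (a form this paper does not state explicitly), the full controller matrix can be written as

$$
K(s) = \begin{bmatrix}
k_{11}(s) & \cdots & k_{1n}(s) \\
\vdots & \ddots & \vdots \\
k_{n1}(s) & \cdots & k_{nn}(s)
\end{bmatrix}, \qquad
k_{ij}(s) = K_{p,ij} + \frac{K_{i,ij}}{s} + K_{d,ij}\,s
$$

For n = 2 this gives 4 PID elements and 12 gains to tune, against 2 elements and 6 gains for the decentralized structure. The Python sketch below shows one way a flat decision vector, as a swarm particle might hold it, could be unpacked into per-element gains for either structure. The function name `unpack_gains` and the ordering of the gains within the vector are assumptions made for illustration.

```python
def unpack_gains(vector, n, centralized):
    """Map a flat list of PID gains to an n x n grid of (Kp, Ki, Kd) tuples.

    Assumed ordering: three gains per PID element, elements in row-major
    order. Off-diagonal elements of a decentralized controller are zero.
    """
    count = n * n if centralized else n
    if len(vector) != 3 * count:
        raise ValueError(f"expected {3 * count} gains, got {len(vector)}")
    triples = [tuple(vector[3 * k:3 * k + 3]) for k in range(count)]
    zero = (0.0, 0.0, 0.0)
    if centralized:
        return [[triples[i * n + j] for j in range(n)] for i in range(n)]
    return [[triples[i] if i == j else zero for j in range(n)] for i in range(n)]


decentralized = unpack_gains([1, 2, 3, 4, 5, 6], n=2, centralized=False)
centralized = unpack_gains(list(range(12)), n=2, centralized=True)
print(decentralized[0][0], decentralized[0][1], centralized[1][0])
```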

3 HCLPSO Algorithm

In HCLPSO, the swarm is divided into two heterogeneous subpopulations. The first is enhanced for exploration and the second for exploitation, and each subpopulation focuses only on its own task. In both subpopulations, the exemplar is generated using the comprehensive learning (CL) strategy with a learning probability. In the exploration subpopulation, exemplars are generated from the personal best experiences of that subpopulation only, whereas in the exploitation subpopulation they are generated from the personal best experiences of the entire swarm. Because the exploration subpopulation learns only from its own members, diversity is retained. The velocity of a particle in the exploitation-enhanced subpopulation is updated using the following equation:
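
Consistent with the symbols defined below and with the formulation in [6], the update can be written as follows; the exact typography is an assumption.

$$
V_i^d = \omega\,V_i^d
+ c_1\,\mathrm{rand1}^d\,\bigl(\mathrm{Pbest}_{f_i(d)}^d - X_i^d\bigr)
+ c_2\,\mathrm{rand2}^d\,\bigl(\mathrm{Gbest}^d - X_i^d\bigr)
$$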

- $d$: dimension
- $V_i^d$: updated velocity of the ith particle
- $\omega$: inertia weight
- $c_1, c_2$: acceleration factors
- $\mathrm{rand1}^d, \mathrm{rand2}^d$: uniform random numbers between 0 and 1
- $X_i^d$: dth value of the ith particle in the population
- $\mathrm{Pbest}_{f_i(d)}^d$: best position of the ith particle
- $\mathrm{Gbest}^d$: best position of the whole swarm population

where $f_i = [f_i(1), f_i(2), \ldots, f_i(D)]$ indicates whether the ith particle follows its own pbest or another particle's pbest in each dimension. This choice is made according to the learning probability value ($pc_i$).
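
The exemplar choice and the velocity update can be sketched in Python as follows. This is an illustration under stated assumptions, not the implementation used in this work. The function names, the tournament between two randomly drawn particles (taken from the comprehensive learning strategy of [6]), the clamping to a maximum velocity, and all numeric values in the usage lines are assumptions. The `pool` argument holds the indices a particle may learn from: its own subpopulation for exploration, the whole swarm for exploitation.

```python
import random


def choose_exemplars(i, dims, pbest_cost, pc, pool, rng):
    """Pick, for each dimension d, the particle f_i(d) whose pbest is followed.

    With probability pc the dimension follows the better of two particles
    drawn at random from pool; otherwise it follows particle i's own pbest.
    """
    exemplars = []
    for _ in range(dims):
        if rng.random() < pc:
            a, b = rng.sample(pool, 2)
            exemplars.append(a if pbest_cost[a] <= pbest_cost[b] else b)
        else:
            exemplars.append(i)
    return exemplars


def update_velocity(v, x, pbest, gbest, exemplars, w, c1, c2, v_max, rng):
    """Exploitation-subpopulation update for one particle, clamped to v_max."""
    new_v = []
    for d in range(len(x)):
        step = (w * v[d]
                + c1 * rng.random() * (pbest[exemplars[d]][d] - x[d])
                + c2 * rng.random() * (gbest[d] - x[d]))
        new_v.append(max(-v_max, min(v_max, step)))
    return new_v


rng = random.Random(0)
pbest = [[0.2, 0.4], [0.6, 0.1], [0.9, 0.9]]  # pbest positions of 3 particles
pbest_cost = [3.0, 1.0, 2.0]                  # lower is better (for example IAE)
gbest = pbest[1]                              # particle 1 has the lowest cost
f_0 = choose_exemplars(0, 2, pbest_cost, pc=0.25, pool=[0, 1, 2], rng=rng)
v_0 = update_velocity([0.0, 0.0], [0.5, 0.5], pbest, gbest, f_0,
                      w=0.7, c1=1.5, c2=1.5, v_max=0.2, rng=rng)
print(f_0, v_0)
```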

4 Parameter Analysis

For the decentralized controller, we carried out a parameter analysis. HCLPSO has several tuning parameters, such as the learning probability, the grouping ratio, and the maximum velocity of the particles. The effect of each parameter was analyzed by varying it while keeping the other two constant. To find the effect of the learning probability on the performance of HCLPSO, the velocity is kept constant. The statistical performance (best value, mean value, and standard deviation) over ten independent runs for velocity = 0.15 with learning probability = 0.25 and for velocity = 0.15 with learning probability = 0.15 is given in Table 1.
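
As an illustration of how such statistics could be computed from the final cost of each run, a minimal Python sketch follows. The function name `summarize_runs` and the use of the sample standard deviation are assumptions, and the ten values are hypothetical placeholders, not results from this paper.

```python
import statistics


def summarize_runs(final_costs):
    """Best, mean, and sample standard deviation of final costs from repeated runs."""
    return {
        "best": min(final_costs),
        "mean": statistics.mean(final_costs),
        "std": statistics.stdev(final_costs),
    }


# Hypothetical final IAE values from ten independent runs, for illustration only.
runs = [10.2, 10.4, 10.1, 10.3, 10.2, 10.6, 10.1, 10.2, 10.5, 10.4]
print(summarize_runs(runs))
```

A smaller standard deviation across runs is what the text refers to as more consistent performance.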

From Table 1, we infer that:

- If the learning probability is decreased, the standard deviation increases and the output does not converge.
- If the learning probability is increased, the standard deviation decreases, the output converges, and the performance is consistent.

To find the effect of the maximum velocity on the performance of the HCLPSO algorithm, the learning probability is kept constant. The statistical performance (best value, mean value, and standard deviation) for velocity = 0.25 with learning probability = 0.2 and for velocity = 0.2 with learning probability = 0.2 is given in Table 2.

From Table 2, we infer that:

1. Decreasing the velocity of the particle gives a better optimal solution.
2. From the above analysis, we conclude that the best optimal solution and system consistent

5 Results

The parameter analysis shows that the best solutions are obtained for particular parameter values. After several trials and runs, we obtained suitable parameters for the design of the centralized and decentralized PID controllers with the HCLPSO algorithm.

For the decentralized controller, the parameters are learning probability (pc) = 0.25, grouping ratio (g1) = 0.3, and velocity (Vmax) = 0.2. The results obtained for the decentralized controller using the HCLPSO algorithm for various numbers of function evaluations are reported in Table 3.

For the centralized controller, the parameters are learning probability (pc) = 0.25, grouping ratio (g1) = 0.3, and velocity (Vmax) = 0.1. The results obtained for the centralized controller using the HCLPSO algorithm are reported in Table 4.

From Tables 3 and 4, the best performance obtained by the HCLPSO and PSO algorithms is similar, but HCLPSO gives more consistent performance than PSO when the number of function evaluations is higher, owing to its learning ability. The convergence characteristics of both algorithms are reported in Figs. 3 and 4, which show that both algorithms converge to their optimal values.

6 Conclusions

- From Table 1, we conclude that increasing the learning probability gives more consistent results with a smaller deviation.
- From Tables 3 and 4, we conclude that PSO and HCLPSO give nearly the same optimal solution, but HCLPSO gives a smaller standard deviation as the number of function evaluations increases. Because HCLPSO has a learning probability, it gives a more consistent solution for larger numbers of function evaluations.
- From Tables 3 and 4, we conclude that the centralized controller gives a better optimal solution than the decentralized controller.

References

1. Juang JG, Huang MT, Liu WK (2008) PID control using presearched genetic algorithms for a MIMO system. IEEE Trans Syst Man Cybern Part C (Appl Rev) 38(5):716–727

2. Ochi Y, Yokoyama N (2012) PID controller design for MIMO systems by applying balanced truncation to integral-type optimal servomechanism. IFAC Proc Vol 45(3):364–369

3. Hajare VD, Patre BM (2015) Decentralized PID controller for TITO systems using characteristic ratio assignment with an experimental application. ISA Trans 59:385–397

4. Dhanya Ram V, Chidambaram M (2015) Simple method of designing centralized PI controllers for multivariable systems based on SSGM. ISA Trans 56:252–260

5. Willjuice Iruthayarajan M, Baskar S (2009) Evolutionary algorithms based design of multivariable PID controller. Expert Syst Appl 36(5):9159–9167

6. Lynn N, Suganthan PN (2015) Heterogeneous comprehensive learning particle swarm optimization with enhanced exploration and exploitation. Swarm Evol Comput 24:11–24

7. Muniraj R, Willjuice Iruthayarajan M (2018) Tuning of robust PID controller with filter for PLL system using HCLPSO algorithm. Int J Pure Appl Math 118(16):181–197

8. Wood R, Berry M (1973) Terminal composition control of a binary distillation column. Chem Eng Sci 28(9):1707–1717

Acknowledgements

We thank the college management for providing the lab facility to do the project work.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Jeyaraman, T., Joelpraveenkumar, D., Kaliraj, M., Chandar, M.K., Iruthayarajan, M.W. (2022). Tuning of MIMO PID Controller Using HCLPSO Algorithm. In: Subramani, C., Vijayakumar, K., Dakyo, B., Dash, S.S. (eds) Proceedings of International Conference on Power Electronics and Renewable Energy Systems. Lecture Notes in Electrical Engineering, vol 795. Springer, Singapore. https://doi.org/10.1007/978-981-16-4943-1_35

DOI: https://doi.org/10.1007/978-981-16-4943-1_35

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-4942-4

Online ISBN: 978-981-16-4943-1

eBook Packages: Energy, Energy (R0)