Abstract

We proposed an ADMM-like splitting method in [11] for solving convex minimization problems with linear constraints and multi-block separable objective functions. Its proximal parameter is required to be sufficiently large to theoretically ensure convergence, even though a smaller value of this parameter is preferred for numerical acceleration. Empirically, this method has been applied to various applications with relaxed restrictions on the parameter, yet no rigorous theory is available to guarantee convergence in that regime. In this paper, we identify the optimal (smallest) proximal parameter for this method and clarify some ambiguity in selecting this parameter for implementation. For succinctness, we focus on the case where the objective function is the sum of three functions and show that the optimal proximal parameter is 0.5. This optimal proximal parameter generates positive indefiniteness in the regularization of the subproblems, and thus its convergence analysis is significantly different from those for existing methods of the same kind in the literature, which all require positive definiteness (or positive semi-definiteness plus additional assumptions) of the regularization. We establish the convergence and estimate the convergence rate in terms of iteration complexity for the improved method with the optimal proximal parameter.

Bingsheng He—He was supported by the NSFC Grant 11471156. // Xiaoming Yuan—He was supported by the General Research Fund from Hong Kong Research Grants Council: 12300317.

1 Introduction

Our purpose is to find the optimal (smallest) proximal parameter for the splitting method in [11] for separable convex programming models. To expose our main idea and technique more clearly, we focus on the special convex minimization problem with linear constraints and a separable objective function that can be represented as the sum of three functions without coupled variables:

\[ \min \bigl\{ \theta_1(x) + \theta_2(y) + \theta_3(z) \;\big|\; Ax + By + Cz = b,\ x\in \mathcal{X},\ y\in \mathcal{Y},\ z\in \mathcal{Z} \bigr\}, \tag{1} \]

where \(A\in \mathfrak {R}^{m\times n_1}\), \(B\in \mathfrak {R}^{m\times n_2}\), \(C\in \mathfrak {R}^{m\times n_3}\); \(b \in \mathfrak {R}^m\); \(\mathcal{X}\subset \mathfrak {R}^{n_1}\), \(\mathcal{Y}\subset \mathfrak {R}^{n_2}\) and \(\mathcal{Z}\subset \mathfrak {R}^{n_3}\) are closed convex sets; and \(\theta _i:\mathfrak {R}^{n_i}\rightarrow \mathfrak {R}\) (\(i=1,2,3\)) are closed convex but not necessarily smooth functions. Such a model may arise from a concrete application in which one of the functions represents a data-fidelity term while the other two account for various regularization terms. We refer to, e.g., [16, 20,21,22,23], for some applications of (1). The solution set of (1) is assumed to be nonempty throughout.

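To recall the splitting method in [11] for the model (1), we start from the augmented Lagrangian method (ALM) that was originally proposed in [15, 18]. Let the Lagrangian and augmented Lagrangian functions of (1) be given, respectively, by

\[ L(x,y,z,\lambda) = \theta_1(x) + \theta_2(y) + \theta_3(z) - \lambda^T(Ax + By + Cz - b) \tag{2} \]

and

\[ \mathcal{L}_{\beta}(x,y,z,\lambda) = L(x,y,z,\lambda) + \frac{\beta}{2}\Vert Ax + By + Cz - b\Vert^2. \tag{3} \]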

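In (2) and (3), \(\lambda \in \mathfrak {R}^m\) is the Lagrange multiplier; and in (3), \(\beta >0\) is the penalty parameter. When the three-block separable convex minimization model (1) is purposively regarded as a generic convex minimization model and its objective function is treated as a whole, the ALM in [15, 18] can be applied directly and the resulting iterative scheme is

\[ \begin{aligned} (x^{k+1},y^{k+1},z^{k+1}) &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x,y,z,\lambda^k) \;\big|\; x\in \mathcal{X},\ y\in \mathcal{Y},\ z\in \mathcal{Z} \bigr\}, && \text{(1.4a)}\\ \lambda^{k+1} &= \lambda^k - \beta (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b). && \text{(1.4b)} \end{aligned} \]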

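If two functions in the objective are treated together and two variables in the constraints are grouped accordingly, the alternating direction method of multipliers (ADMM) in [5] can also be directly applied to (1). The resulting iterative scheme reads as

\[ \begin{aligned} x^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x,y^k,z^k,\lambda^k) \;\big|\; x\in \mathcal{X} \bigr\}, && \text{(1.5a)}\\ (y^{k+1},z^{k+1}) &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k+1},y,z,\lambda^k) \;\big|\; y\in \mathcal{Y},\ z\in \mathcal{Z} \bigr\}, && \text{(1.5b)}\\ \lambda^{k+1} &= \lambda^k - \beta (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b). && \text{(1.5c)} \end{aligned} \]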

Unless the functions and/or coefficient matrices in (1) are special enough, direct applications of the ALM (1.4) and the ADMM (1.5) are usually not preferred because the (x, y, z)-subproblem in (1.4a) and the (y, z)-subproblem in (1.5b) may still be too difficult (even when the functions \(\theta _i\) per se are relatively easy). Therefore, generally the three-block model (1) should not be treated as a one-block or two-block case and the ALM (1.4) or ADMM (1.5) should not be applied directly.

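On the other hand, for specific applications of the model (1), functions in its objective usually have their own physical explanations and mathematical properties. Thus, it is usually necessary to treat them individually to design more efficient algorithms. More accurately, we are interested in an algorithm that handles these functions \(\theta _i\) individually in its iterative scheme. A natural idea is to split the subproblem in the original ALM (1.4) in the Jacobian or Gaussian manner; the corresponding schemes are as follows:

\[ \begin{aligned} x^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x,y^k,z^k,\lambda^k) \;\big|\; x\in \mathcal{X} \bigr\},\\ y^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k},y,z^k,\lambda^k) \;\big|\; y\in \mathcal{Y} \bigr\},\\ z^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k},y^{k},z,\lambda^k) \;\big|\; z\in \mathcal{Z} \bigr\},\\ \lambda^{k+1} &= \lambda^k - \beta (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b), \end{aligned} \tag{6} \]

and

\[ \begin{aligned} x^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x,y^k,z^k,\lambda^k) \;\big|\; x\in \mathcal{X} \bigr\},\\ y^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k+1},y,z^k,\lambda^k) \;\big|\; y\in \mathcal{Y} \bigr\},\\ z^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k+1},y^{k+1},z,\lambda^k) \;\big|\; z\in \mathcal{Z} \bigr\},\\ \lambda^{k+1} &= \lambda^k - \beta (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b). \end{aligned} \tag{7} \]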

All the subproblems in (6) and (7) are easier than the original problem (1); only one function in its objective and a quadratic term are involved in the x-, y-, z-subproblems. But, as shown in [1, 8], neither of the schemes (6) and (7) is necessarily convergent. Therefore, although schemes such as (6) and (7) can be easily generated, their lack of convergence calls for more meticulous theoretical study and algorithmic design techniques for the three-block case (1). The results in [1, 8] also show that designing augmented-Lagrangian-based splitting algorithms for the three-block case (1) is significantly different from that for the one- or two-block case, and that the three-block case needs to be discussed separately despite the rich literature on the ALM and ADMM.

Despite their lack of convergence, the schemes (6) and (7) may work well empirically; see, e.g., [20, 22, 23]. It is thus interesting to design an augmented-Lagrangian-based splitting method whose iterative scheme is analogous to (6), (7), or a fusion of both, while both its theoretical convergence and empirical efficiency can be ensured. The method in [11] is one such method; its iterative scheme for (1) reads as

where the parameter \(\mu \) is required to satisfy \(\mu \ge 2\) in [11]. The scheme (1.8) is simple in the sense that each of the x-, y-, and z-subproblems involves just one function from (1) in its objective. Its efficiency has been verified in [11] on some sparse and low-rank models and image inpainting problems. It was also used in [2] for solving a dimensionality reduction problem on physical space.

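It is easy to see that the scheme (1.8) can be rewritten as

\[ \begin{aligned} x^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x,y^k,z^k,\lambda^k) \;\big|\; x\in \mathcal{X} \bigr\}, && \text{(1.9a)}\\ &\left\{ \begin{aligned} y^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k+1},y,z^k,\lambda^k) + \tfrac{\tau \beta}{2}\Vert B(y-y^k)\Vert^2 \;\big|\; y\in \mathcal{Y} \bigr\},\\ z^{k+1} &= \arg\min \bigl\{ \mathcal{L}_{\beta}(x^{k+1},y^k,z,\lambda^k) + \tfrac{\tau \beta}{2}\Vert C(z-z^k)\Vert^2 \;\big|\; z\in \mathcal{Z} \bigr\}, \end{aligned} \right. && \text{(1.9b)}\\ \lambda^{k+1} &= \lambda^k - \beta (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b), && \text{(1.9c)} \end{aligned} \]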

with \(\tau =\mu -1\) and thus \(\tau \ge 1\) as shown in [11]. The scheme (1.9) shows more clearly that it is a mixture of the augmented-Lagrangian-based splitting schemes (6) and (7), in which the x- and (y, z)-subproblems are updated in alternating order while the (y, z)-subproblem is further split in parallel so that parallel computation can be applied to the resulting y- and z-subproblems. Recall the lack of convergence of (6) and (7). Thus, it is necessary to regularize the split y- and z-subproblems appropriately in (1.9) to ensure the convergence. Indeed, the terms \(\frac{\tau \beta }{2}\Vert B(y-y^k)\Vert ^2\) and \(\frac{\tau \beta }{2}\Vert C(z-z^k)\Vert ^2\) in (1.9) can be regarded as proximal regularization terms with \(\tau \) as the proximal parameter.
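The relation \(\tau = \mu - 1\) can be checked by completing the square in the y-subproblem. The sketch below assumes that the y-subproblem of (1.8) is written with an intermediate multiplier, denoted here by \(\lambda^{k+\frac12}\) (a symbol introduced only for this illustration), and a proximal term weighted by \(\mu \beta\); the z-subproblem is analogous.

```latex
\begin{aligned}
r^k &:= Ax^{k+1} + By^k + Cz^k - b, \qquad
\lambda^{k+\frac12} := \lambda^k - \beta r^k, \\[2pt]
\mathcal{L}_{\beta}(x^{k+1},y,z^k,\lambda^k) + \tfrac{\tau\beta}{2}\|B(y-y^k)\|^2
 &= \theta_2(y) - (\lambda^k)^T By
    + \tfrac{\beta}{2}\|B(y-y^k) + r^k\|^2
    + \tfrac{\tau\beta}{2}\|B(y-y^k)\|^2 + \text{const} \\
 &= \theta_2(y) - (\lambda^k - \beta r^k)^T By
    + \tfrac{(1+\tau)\beta}{2}\|B(y-y^k)\|^2 + \text{const} \\
 &= \theta_2(y) - (\lambda^{k+\frac12})^T By
    + \tfrac{\mu\beta}{2}\|B(y-y^k)\|^2 + \text{const},
 \qquad \mu = 1 + \tau .
\end{aligned}
```

Here "const" collects terms independent of y. Under this reading, the requirement \(\mu \ge 2\) corresponds exactly to \(\tau \ge 1\).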

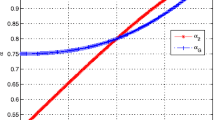

On the other hand, with fixed \(\beta \), the proximal parameter \(\tau \) determines the weight of the proximal terms in the subproblems (1.9b), and its reciprocal plays the role of a step size for an algorithm implemented internally to solve the subproblems (1.9b). We hence prefer smaller values of \(\tau \) whenever the convergence of (1.9) can be theoretically guaranteed. As mentioned, in [11], we have shown that the condition \(\tau \ge 1\) is sufficient to ensure the convergence of (1.9). Numerically, however, as shown in [11] and also in [2] (see Section V, Part B, Pages 3247–3248), it has been observed that values very close to 1 are preferred for \(\tau \). For example, \(\mu =2.01\), i.e., \(\tau =1.01\), was recommended in [11] and used in [2] to obtain faster convergence. This raises the question of finding the optimal (smallest) value of \(\tau \) that can ensure the convergence of (1.9). The main purpose of this paper is to rigorously prove that the optimal value of \(\tau \) is 0.5 for the method (1.9). That is, any \(\tau >0.5\) ensures the convergence of (1.9), while any \(\tau \in (0,0.5)\) may yield divergence.

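Note that, because of our analysis in [1], without loss of generality, we can just assume \(\beta \equiv 1\). That is, the augmented Lagrangian function defined in (3) is reduced to

\[ \mathcal{L}(x,y,z,\lambda) = \theta_1(x) + \theta_2(y) + \theta_3(z) - \lambda^T(Ax + By + Cz - b) + \frac{1}{2}\Vert Ax + By + Cz - b\Vert^2, \tag{1.10} \]

and the iterative scheme of (1.9) is now simplified as

\[ \begin{aligned} x^{k+1} &= \arg\min \bigl\{ \mathcal{L}(x,y^k,z^k,\lambda^k) \;\big|\; x\in \mathcal{X} \bigr\}, && \text{(1.11a)}\\ &\left\{ \begin{aligned} y^{k+1} &= \arg\min \bigl\{ \mathcal{L}(x^{k+1},y,z^k,\lambda^k) + \tfrac{\tau}{2}\Vert B(y-y^k)\Vert^2 \;\big|\; y\in \mathcal{Y} \bigr\},\\ z^{k+1} &= \arg\min \bigl\{ \mathcal{L}(x^{k+1},y^k,z,\lambda^k) + \tfrac{\tau}{2}\Vert C(z-z^k)\Vert^2 \;\big|\; z\in \mathcal{Z} \bigr\}, \end{aligned} \right. && \text{(1.11b)}\\ \lambda^{k+1} &= \lambda^k - (Ax^{k+1} + By^{k+1} + Cz^{k+1} - b). && \text{(1.11c)} \end{aligned} \]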

The rest of this paper is organized as follows. We recall some preliminaries in Sect. 2. In Sect. 3, we show why positive indefiniteness occurs in the proximal regularization for the scheme (1.11) when \(\tau > 0.5\). Then, we provide an explanation in the prediction-correction framework for (1.11) in Sect. 4; and focus on analyzing an important quadratic term in Sect. 5 that is the key for conducting convergence analysis for (1.11). The convergence of (1.11) with \(\tau > 0.5\) is proved in Sect. 6; and the divergence of (1.11) with \(\tau \in (0,0.5)\) is shown in Sect. 7 by an example. We estimate the worst-case convergence rate in terms of iteration complexity for the scheme (1.11) in Sect. 8. Finally, we make some conclusions in Sect. 9.

2 Preliminaries

In this section, we recall some preliminary results for further analysis. First of all, a pair of \(\bigl ((x^*,y^*,z^*),\lambda ^*\bigr )\) is called a saddle point of the Lagrangian function defined in (2) if it satisfies the inequalities

Or, we can rewrite these inequalities as

Indeed, a saddle point of the Lagrangian function defined in (2) can also be characterized by the following variational inequality:

We call (x, y, z) and \(\lambda \) the primal and dual variables, respectively.

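The optimality condition of the model (1) can be characterized by the monotone variational inequality:

\[ w^*\in \Omega, \quad \theta(u) - \theta(u^*) + (w - w^*)^T F(w^*) \ge 0, \quad \forall \, w\in \Omega, \tag{14a} \]

where

\[ u = \begin{pmatrix} x\\ y\\ z \end{pmatrix}, \quad w = \begin{pmatrix} x\\ y\\ z\\ \lambda \end{pmatrix}, \quad F(w) = \begin{pmatrix} -A^T\lambda \\ -B^T\lambda \\ -C^T\lambda \\ Ax + By + Cz - b \end{pmatrix}, \tag{14b} \]

and

\[ \theta(u) = \theta_1(x) + \theta_2(y) + \theta_3(z), \qquad \Omega = \mathcal{X}\times \mathcal{Y}\times \mathcal{Z}\times \mathfrak{R}^m. \tag{14c} \]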

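We denote by \(\Omega ^*\) the solution set of (14). Note that the operator F in (14b) is affine with a skew-symmetric matrix and thus we have

\[ (w - \tilde{w})^T \bigl( F(w) - F(\tilde{w}) \bigr) \equiv 0, \quad \forall \, w, \tilde{w}. \tag{15} \]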
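The skew-symmetry property of F can be checked numerically. The following is a minimal sketch, assuming F has the standard form \(F(w) = (-A^T\lambda, -B^T\lambda, -C^T\lambda, Ax+By+Cz-b)\); the dimensions and the random data are arbitrary choices made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n1, n2, n3 = 4, 3, 2, 5  # illustrative sizes, not from the paper
A, B, C = (rng.standard_normal((m, n)) for n in (n1, n2, n3))
b = rng.standard_normal(m)

def F(w):
    """Assumed affine operator: F(w) = (-A^T lam, -B^T lam, -C^T lam, Ax+By+Cz-b)."""
    x, y, z, lam = np.split(w, [n1, n1 + n2, n1 + n2 + n3])
    return np.concatenate(
        [-A.T @ lam, -B.T @ lam, -C.T @ lam, A @ x + B @ y + C @ z - b]
    )

# Two arbitrary points w and w_tilde in R^{n1+n2+n3+m}
w = rng.standard_normal(n1 + n2 + n3 + m)
w_tilde = rng.standard_normal(n1 + n2 + n3 + m)

# Skew-symmetry of the linear part makes this inner product vanish
gap = (w - w_tilde) @ (F(w) - F(w_tilde))
print(f"(w - w~)^T (F(w) - F(w~)) = {gap:.2e}")
```

Up to rounding error the printed value is zero, which is the reason \((w-\tilde{w})^T F(\tilde{w})\) and \((w-\tilde{w})^T F(w)\) can be used interchangeably in the analysis.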

3 The Positive Indefiniteness of (1.11) with \(\tau >0.5\)

In this section, we revisit the scheme (1.11) from the variational inequality perspective; and show that it can be represented as a proximal version of the direct application of ADMM (1.5) but the proximal regularization term is not positive definite for the case of \(\tau >0.5\). The positive indefiniteness of the proximal regularization excludes the application of a vast set of known convergence results in the literature of ADMM and its proximal versions, because they all require positive definiteness or semi-definiteness (plus additional assumptions on the model (1)) for the proximal regularization term to validate the convergence analysis.

Let us first take a look at the optimality conditions of the subproblems in (1.11). Note that the subproblems in (1.11b) are specified as

and

Thus, the optimality condition of the y-subproblem in (1.11b) can be written as \(y^{k+1}\in \mathcal{Y}\) and

or equivalently: \(y^{k+1}\in \mathcal{Y}\) and

Similarly, the optimality condition of the z-subproblem in (1.11b) can be written as \(z^{k+1}\in \mathcal{Z}\) and

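Then, with (1.11c), we can rewrite the inequalities (17a) and (17b) as \( (y^{k+1}, z^{k+1})\in \mathcal{Y}\times \mathcal{Z}\) and

\[ \theta_2(y) - \theta_2(y^{k+1}) + \theta_3(z) - \theta_3(z^{k+1}) + \begin{pmatrix} y - y^{k+1}\\ z - z^{k+1} \end{pmatrix}^T \left\{ \begin{pmatrix} -B^T\lambda^{k+1}\\ -C^T\lambda^{k+1} \end{pmatrix} + D_0 \begin{pmatrix} y^{k+1} - y^k\\ z^{k+1} - z^k \end{pmatrix} \right\} \ge 0, \quad \forall \, (y,z)\in \mathcal{Y}\times \mathcal{Z}, \tag{18} \]

where

\[ D_0 = \begin{pmatrix} \tau B^TB & -B^TC\\ -C^TB & \tau C^TC \end{pmatrix}. \tag{19} \]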

Obviously, \(D_0\) is positive semidefinite and indefinite when \(\tau \ge 1\) and \(\tau \in (0,1)\), respectively.
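The sign pattern of \(D_0\) can be illustrated numerically. This is a minimal sketch, assuming \(D_0\) has the block form \(\begin{pmatrix} \tau B^TB & -B^TC\\ -C^TB & \tau C^TC\end{pmatrix}\), which is what one obtains by comparing the optimality conditions of (1.11b) with those of the joint (y, z)-subproblem. The random matrix and the choice \(C = B\) are illustrative assumptions; taking \(C = B\) makes the ranges of B and C coincide, so the indefiniteness for \(\tau < 1\) shows up clearly.

```python
import numpy as np

def D0(B, C, tau):
    """Assumed block form of the proximal matrix D_0 in (19)."""
    return np.block([[tau * B.T @ B, -B.T @ C],
                     [-C.T @ B, tau * C.T @ C]])

rng = np.random.default_rng(0)
B = rng.standard_normal((5, 3))  # full column rank with probability one
C = B.copy()                     # illustrative choice: identical ranges

# Smallest eigenvalue of the symmetric matrix D_0 for several tau
min_eig = {tau: np.linalg.eigvalsh(D0(B, C, tau)).min()
           for tau in (0.75, 1.0, 1.5)}

for tau, val in min_eig.items():
    print(f"tau = {tau}: smallest eigenvalue of D_0 = {val:.3e}")
```

For \(\tau = 0.75\) the smallest eigenvalue is negative (indefinite), for \(\tau = 1\) it is zero up to rounding (positive semidefinite), and for \(\tau = 1.5\) it is positive in this instance.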

Then, it is easy to see that the scheme (1.11) can be rewritten as

Comparing (3.5b) with (1.5b) (note that \(\beta =1\)), we see that the scheme (1.11) can be symbolically represented as a proximal version of (1.5) in which the (y, z)-subproblem is proximally regularized by a proximal term. But the difficulty is that \(D_0\) defined in (19) is positive indefinite when \(\tau \in (0.5,1)\). Indeed, our analysis in [11] requires \(\tau \ge 1\) and thus the positive semidefiniteness of \(D_0\) is ensured. For this case, the convergence analysis is relatively easy because it can follow some techniques used for the proximal point algorithm, which originated in [17, 19]. For the case where \(\tau \) is relaxed to \(\tau >0.5\) and hence the matrix \(D_0\) in (19) is positive indefinite, the analysis in [11] and elsewhere in the literature is not applicable, and more sophisticated techniques are needed for proving the convergence of the scheme (1.11) with \(\tau >0.5\).

4 A Prediction-Correction Explanation of (1.11)

In this section, we show that the scheme (1.11) can be expressed by a prediction-correction framework. This prediction-correction explanation is only for the convenience of theoretical analysis and there is no need to follow this prediction-correction framework to implement the scheme (1.11).
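A direct implementation of (1.11) only needs the three subproblem solvers and the multiplier update. The following is a minimal sketch under strong simplifying assumptions that are not part of the paper: \(\theta_i(u) = \frac{1}{2}\Vert u - a_i\Vert^2\) with hypothetical data vectors `a1`, `a2`, `a3`, and \(\mathcal{X}, \mathcal{Y}, \mathcal{Z}\) equal to the whole space, so that each subproblem reduces to a linear system. The function name `splitting_method` is likewise illustrative.

```python
import numpy as np

def splitting_method(A, B, C, b, a1, a2, a3, tau=0.6, iters=200):
    """Scheme (1.11) with beta = 1 for theta_i(u) = 0.5*||u - a_i||^2 (assumed)."""
    n1, n2, n3 = A.shape[1], B.shape[1], C.shape[1]
    y, z, lam = np.zeros(n2), np.zeros(n3), np.zeros(b.shape[0])
    # System matrices of the three strongly convex quadratic subproblems
    Hx = np.eye(n1) + A.T @ A
    Hy = np.eye(n2) + (1.0 + tau) * (B.T @ B)
    Hz = np.eye(n3) + (1.0 + tau) * (C.T @ C)
    for _ in range(iters):
        # (1.11a): x-subproblem uses (y^k, z^k, lam^k)
        x = np.linalg.solve(Hx, a1 + A.T @ (lam - B @ y - C @ z + b))
        r = lam - A @ x + b
        # (1.11b): y- and z-subproblems in parallel, both using the old (y^k, z^k)
        y_new = np.linalg.solve(Hy, a2 + B.T @ (r - C @ z) + tau * (B.T @ (B @ y)))
        z_new = np.linalg.solve(Hz, a3 + C.T @ (r - B @ y) + tau * (C.T @ (C @ z)))
        y, z = y_new, z_new
        # (1.11c): multiplier update
        lam = lam - (A @ x + B @ y + C @ z - b)
    return x, y, z, lam

# Scalar toy instance: min 0.5*((x-1)^2 + (y-2)^2 + (z-3)^2)  s.t.  x + y + z = 0
one = np.array([[1.0]])
x, y, z, lam = splitting_method(one, one, one, np.array([0.0]),
                                np.array([1.0]), np.array([2.0]), np.array([3.0]))
print(x, y, z, lam)
```

For the scalar toy instance, the KKT conditions give \(x=-1\), \(y=0\), \(z=1\) and \(\lambda=-2\), which the iterates approach with \(\tau = 0.6\). Note that only \((y^k, z^k, \lambda^k)\) is carried from one iteration to the next.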

In the scheme (1.11), we see that \(x^{k}\) is not needed to generate the next \((k+1)\)-th iterate; only \((y^k, z^k,\lambda ^k)\) are needed. Thus, we call x the intermediate variable; and \((y,z,\lambda )\) essential variables. To distinguish their roles, accompanied with the notation in (14b), we additionally define the notation

Moreover, we introduce the auxiliary variables \(\tilde{w}^k= (\tilde{x}^k,\tilde{y}^k,\tilde{z}^k,\tilde{\lambda }^k)\) defined by

where \((x^{k+1},y^{k+1},z^{k+1})\) is the iterate generated by the scheme (1.11) from the given one \((y^k,z^k,\lambda ^k)\). Using these notations, we have

Now, we interpret the optimality conditions of the subproblems in (1.11) by using the auxiliary variables \(\tilde{w}^k\). First, ignoring some constant terms, the subproblem (1.11a) is equivalent to

and its optimality condition can be rewritten as

Using (23), \(y^{k+1}=\tilde{y}^k\) and \(z^{k+1}=\tilde{z}^k\), the inequalities (17a) and (17b) can be written as

and

respectively. Thus, the inequality (18) becomes \((\tilde{y}^k,\tilde{z}^k)\in \mathcal{Y}\times \mathcal{Z}\) and

Note that the equality \(\tilde{\lambda }^k = \lambda ^k- (Ax^{k+1} + By^k + Cz^k -b)\) in (22) can be written as the variational inequality form

Therefore, it follows from the inequalities (24a), (24b) and (24c) that the auxiliary variable \(\tilde{w}^k= (\tilde{x}^k,\tilde{y}^k,\tilde{z}^k,\tilde{\lambda }^k)\) defined in (22) satisfies the following variational inequality.

We call the auxiliary variable \(\tilde{w}^k= (\tilde{x}^k,\tilde{y}^k,\tilde{z}^k,\tilde{\lambda }^k)\) the predictor. Using (23), the update form (1.11c) can be represented as

Recall that v in (21) denotes the essential variables for the scheme (1.11). The new essential variables of (1.11), \(v^{k+1} =(y^{k+1}, z^{k+1},\lambda ^{k+1})\), are updated by the following scheme:

Overall, the scheme (1.11) can be explained by a prediction-correction framework which generates a predictor characterized by the step (4.5) and then corrects it by the step (4.6). As we shall show, the inequality (4.5) indicates the discrepancy between \({\tilde{w}}^k\) and a solution point of the variational inequality (14) and it plays an important role in the convergence analysis for the scheme (1.11). Indeed, we can further investigate the inequality (4.5) and derive a new right-hand side that is more preferred for establishing the convergence. For this purpose, let us define a matrix as

which is positive definite for any \(\tau >0\) when B and C are both full column rank. Then, for the matrices Q and M defined in (4.5b) and (4.6b), respectively, it obviously holds that

In the following lemma, we further analyze the right-hand side of (4.5) and show more explicitly the difference of the proof for the convergence of (1.11) with \(\tau > 0.5\) from that with \(\tau \ge 1\) in [11].

Lemma 25.1

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then, \(\tilde{w}^k\in \Omega \) and

where

Proof

Using \(Q=HM\) (see (28)) and the relation (4.6a), the right-hand side of (4.5a) can be written as

and hence we have

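Applying the identity

\[ (a-b)^T H (c-d) = \frac{1}{2}\bigl\{ \Vert a-d\Vert_H^2 - \Vert a-c\Vert_H^2 \bigr\} + \frac{1}{2}\bigl\{ \Vert c-b\Vert_H^2 - \Vert d-b\Vert_H^2 \bigr\} \]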

to the right-hand side of (31) with

we obtain

For the last term of (32), we have

Substituting (33) into (32), we get

Recall that \((w- \tilde{w}^k)^T F(\tilde{w}^k) = (w- \tilde{w}^k)^T F(w)\) (see (15)). Using this fact, the assertion of this lemma follows from (31) and (34) directly. \(\square \)

When G given in (30) is positive definite, as shown in [11], it is relatively easier to use the assertion (29) to prove the global convergence and estimate its worst-case convergence rate in terms of iteration complexity, see, e.g., [7, 14] for details and [6] (Sections 4 and 5 therein) for a tutorial proof. For the matrix G given in (30), since \(HM=Q\) and \(M^THM = M^TQ\), we have

Then, using (4.5b) and the above equation, we have

By using the notation \(D_0\) (see (19)), the matrix G can be rewritten as

Obviously, the proximal matrix \(D_0\) in (19) can be rewritten as

Therefore, for \(\tau \in (\frac{1}{2}, 1)\), G is positive indefinite because the matrix \(D_0\) is indefinite. The positive indefiniteness of G is indeed the main difficulty of proving the convergence of the scheme (1.11) with \(\tau > 0.5\); and we need to look into the quadratic term \((v^k-\tilde{v}^k)^T G(v^k-\tilde{v}^k)\) more closely.

5 Investigation of the Quadratic Term \({\boldsymbol{ (v^k-\tilde{v}^k)^T G(v^k-\tilde{v}^k)}}\)

As mentioned, the key point of proving the convergence of the scheme (1.11) with \(\tau > 0.5\) is to analyze the quadratic term \((v^k-\tilde{v}^k)^T G(v^k-\tilde{v}^k)\) which is not guaranteed to be positive. In this section, we focus on investigating this term and show that

where \(\psi (\cdot ,\cdot )\) and \(\varphi (\cdot ,\cdot )\) are both non-negative functions. The first two terms \(\psi (v^k,v^{k+1}) - \psi (v^{k-1},v^k)\) in the right-hand side of (37) can be manipulated consecutively between iterates and the last term \(\varphi (v^k, v^{k+1})\) should be such an error bound that can measure how much \(w^{k+1}\) fails to be a solution point of (14). If we find such functions that guarantee the assertion (37), then we can substitute it into (29) and get the inequality

As we shall show, all the components of the right-hand side of (38) in parentheses should be positive to establish the convergence and convergence rate of (1.11). It is indeed this requirement that implies our restriction of \(\tau > 0.5\). We show the details in Theorem 26.5, preceded by several lemmas. For similar techniques in the convergence analysis of the ADMM, we refer to, e.g., [4, 9, 10, 12].

Lemma 26.1

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then we have

Proof

First, according to (35), we have

and thus

For the term \(\Vert \lambda ^k - \tilde{\lambda }^k\Vert ^2\) in the right-hand side of the above equation, because \(\tilde{x}^k = x^{k+1}\),

we have

Finally, by a manipulation, we get

The lemma is proved. \(\square \)

For further analysis, we will divide the crossing term \(2(\lambda ^k -\lambda ^{k+1})^T \bigl ( B(y^k-y^{k+1}) + C(z^k - z^{k+1}) \bigr )\) in the right-hand side of (39) into two parts and give their lower bounds by quadratic terms.

Lemma 26.2

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then we have

where

with

Proof

Recall (18). It holds that

Analogously, for the previous iteration, we have

Setting \((y,z)=(y^k,z^k)\) and \((y,z)=(y^{k+1},z^{k+1})\) in (43) and (44), respectively, and adding them, we get

Consequently, we have

Thus, using the Cauchy-Schwarz inequality, from (45) we obtain

where the last inequality is because of the Cauchy-Schwarz inequality. Manipulating the right-hand side of (46) recursively and using the notation of \(\psi (\cdot ,\cdot )\) (see (41)), we get (40) and the lemma is proved. \(\square \)

In addition to (40), we need to bound the term \((\lambda ^k -\lambda ^{k+1})^T \bigl ( B(y^k-y^{k+1}) + C(z^k - z^{k+1}) \bigr )\) by another quadratic term. This is done by the following lemma.

Lemma 26.3

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then, for \(\tau \in (0.5,1)\), we have

Proof

Set \(\delta = \tau -\frac{1}{2}\). Because \(\tau \in (0.5,1)\), we have \(\delta \in (0,0.5)\). Using the Cauchy-Schwarz inequality twice, we get

Since \(\delta \in (0,0.5)\), we have

and thus

Substituting \(\delta =\tau - \frac{1}{2}\) in the above inequality, we get (47) and the lemma is proved. \(\square \)

Recall that we want to bound the quadratic term \((v^k-\tilde{v}^k)^T G(v^k-\tilde{v}^k)\) in the form of (37). Our previous analysis enables us to achieve it; and this is the basis of the convergence analysis to be shown soon.

Lemma 26.4

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then, for \(\tau \in (0.5, 1)\), we have

where \(\psi (v^k,v^{k+1})\) is defined in (41) and

Proof

Substituting (40) and (47) into (39), we get

The assertion of this lemma follows from the definition of \(\varphi (v^k,v^{k+1})\) directly. \(\square \)

Finally, substituting (48) into (29), we obtain the following theorem directly. This theorem plays a fundamental role in proving the convergence of (1.11) with \(\tau >0.5\).

Theorem 26.5

Let \(\{w^k\}\) be the sequence generated by (1.11) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then we have

where \(\psi (v^k,v^{k+1})\) and \(\varphi (v^k,v^{k+1})\) are defined in (41) and (49), respectively.

6 Convergence

As mentioned, proving the convergence of the scheme (1.11) with \(\tau >0.5\) essentially relies on Theorem 26.5. With Theorem 26.5, the remaining part of the proof is routine. In this section, we present the convergence of the scheme (1.11) with \(\tau >0.5\); a lemma is first proved to show the contraction property of the sequence generated by (1.11).

Lemma 27.1

Let \(\{w^k\}\) be the sequence generated by (1.11) with \(\tau >0.5\) for the problem (1). Then we have

where \(\psi (v^k,v^{k+1})\) and \(\varphi (v^k,v^{k+1})\) are defined in (41) and (49), respectively.

Proof

Setting \(w=w^*\) in (50) and using

we obtain the assertion (51) immediately. \(\square \)

Theorem 27.2

Let \(\{w^k\}\) be the sequence generated by (1.11) with \(\tau >0.5\) for the problem (1). Then the sequence \(\{v^k\}\) converges to a \(v^{\infty } \in \mathcal{V}^*\) when B and C are both full column rank.

Proof

First, it follows from (51) and (49) that

Summing the last inequality over \(k=1,2,\ldots \), we obtain

and thus

For an arbitrarily fixed \(v^*\in \mathcal{V}^*\), it follows from (51) that, for any \(k>1\), we have

Thus the sequence \(\{v^k\}\) is bounded. Because M is non-singular, according to (4.6), \(\{\tilde{v}^k\}\) is also bounded. Let \(v^{\infty }\) be a cluster point of \(\{\tilde{v}^k\}\) and \(\{\tilde{v}^{k_j}\}\) be the subsequence of \(\{\tilde{v}^k\}\) converging to \(v^{\infty }\). Let \(x^{\infty }\) be the vector induced by the given \((y^{\infty }, z^{\infty }, \lambda ^{\infty }) \in \mathcal{V}\). Then, it follows from (31) that

which means \({w}^{\infty }\) is a solution point of (14) and its essential part \(v^\infty \in \mathcal{V}^*\). Since \(v^{\infty }\in \mathcal{V}^*\), it follows from (53) that

Together with (52), it is impossible that the sequence \(\{v^k\}\) has more than one cluster point. Thus \(\{v^k\}\) converges to \(v^{\infty }\) and the proof is complete. \(\square \)

Remark 27.3

Note that the convergence of (1.11) with \(\tau >0.5\) in terms of the sequence \(\{v^k\}\) is proved in Theorem 27.2 under the assumption that both B and C are full column rank. Without this assumption, weaker convergence results in terms of \(\{By^k,Cz^k\}\) can be derived. We refer to Sect. 6 in [11] for details.

7 The Optimality of \({\boldsymbol{ \tau =0.5}}\)

We have proved the convergence of (1.11) with \(\tau > 0.5\); the key is to ensure the nonnegativity of the coefficients in the right-hand side of (50). In this section, we show by an example that any \(\tau \in (0,0.5)\) may yield divergence of (1.11). Hence, \(\tau =0.5\) is the watershed, or optimal value, to ensure the convergence of (1.11).

For any given \(\tau <0.5\), we take \(\epsilon =0.5-\tau >0\) and consider the problem

which is a special case of the model (1). Obviously, the solution of this problem is \(x=y=z=0\).

The augmented Lagrangian function of the problem (55) with a penalty parameter of 1 is

and the iterative scheme (1.11) for (55) is

Since \(\mathcal{X} = \{0\}\), we have \(x^{k+1} \equiv 0\). Ignoring constant terms in the objective function of the subproblems, the recursion (56) becomes

Further, it follows from (57) that

Thus, the iterative scheme for \(v=(y,z,\lambda )\) can be written as

Without loss of generality, we can take \(y^0=z^0\) and thus \(y^k\equiv z^k\), for all \(k>0\). Using this fact and \(\tau +\epsilon =0.5\), we get

With elementary manipulations, we obtain

which can be written as

Let \(f_1(\tau )\) and \(f_2(\tau ) \) be the two eigenvalues of the matrix \(P(\tau )\). Then we have

and

Certainly, the scheme (60) is divergent if the absolute value of one of the eigenvalues of the matrix \( P(\tau )\) is greater than 1. Indeed, it holds that \(f_2(\tau )<-1\) for any \(\tau \in (0,0.5)\). To see this assertion, we notice that

Hence, the scheme (1.11) is not necessarily convergent for any \(\tau \in (0,0.5)\).
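The threshold behaviour at \(\tau = 0.5\) can also be observed on a very small instance. The sketch below is not necessarily the paper's example (55); it assumes a toy problem chosen for illustration, with \(\theta_1=\theta_2=\theta_3=0\), \(\mathcal{X}=\{0\}\), \(\mathcal{Y}=\mathcal{Z}=\mathfrak{R}\) and the constraint \(x+y+z=0\). The closed-form updates follow from setting the derivatives of the y- and z-subproblems in (1.11b) to zero; the function names are hypothetical.

```python
import numpy as np

def run_scheme(tau, iters=200, y0=1.0, z0=1.0, lam0=0.0):
    """Scheme (1.11) on the assumed toy problem; returns |y + z| after `iters` steps."""
    y, z, lam = y0, z0, lam0
    for _ in range(iters):
        # x-subproblem: X = {0} forces x^{k+1} = 0
        # y- and z-subproblems: both use the old iterate (y^k, z^k, lam^k)
        y_new = (lam - z + tau * y) / (1.0 + tau)
        z_new = (lam - y + tau * z) / (1.0 + tau)
        y, z = y_new, z_new
        # multiplier update (1.11c) with x = 0 and b = 0
        lam = lam - (y + z)
    return abs(y + z)

def spectral_radius(tau):
    """Spectral radius of the recursion for (y, lam) restricted to y = z."""
    a = 1.0 - tau
    P = np.array([[-a, 1.0], [2.0 * a, -a]]) / (1.0 + tau)
    return max(abs(np.linalg.eigvals(P)))

for tau in (0.4, 0.6):
    print(f"tau = {tau}: rho(P) = {spectral_radius(tau):.3f}, "
          f"|y + z| after 200 iterations = {run_scheme(tau):.3e}")
```

On this instance the eigenvalues of the restricted recursion are \(\bigl(-(1-\tau) \pm \sqrt{2(1-\tau)}\bigr)/(1+\tau)\), so the spectral radius exceeds 1 exactly when \(\tau < 0.5\). The constraint violation therefore blows up for \(\tau = 0.4\) and vanishes for \(\tau = 0.6\).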

8 Convergence Rate

In this section, we derive a worst-case O(1/t) convergence rate in terms of iteration complexity for the scheme (1.11) with \(\tau >0.5\), where t is the iteration counter. Hence, although the condition \(\tau \ge 1\) in [11] is now relaxed to \(\tau >0.5\), the same convergence rate result in [11] remains valid for the scheme (1.11). For similar analysis, we refer to [11, 13].

First of all, recall (14). If we find \(\tilde{w}\) satisfying the inequality

then \(\tilde{w}\) is a solution point of (14). As mentioned in (15), we have \( (w-\tilde{w})^T F(\tilde{w}) = (w-\tilde{w})^T F(w)\). Thus, a solution point \(\tilde{w}\) of (14) can be also characterized by

Therefore, as in [3], for given \(\epsilon >0\), \(\tilde{w} \in {\Omega }\) is called an \(\epsilon \)-approximate solution of VI\(({\Omega },F,\theta )\) if it satisfies

where

In the following, we show that based on the first t iterates generated by the scheme (1.11) with \(\tau >0.5\), we can find an approximate solution of (14), denoted by \(\tilde{w} \in \Omega \), such that

where \(\epsilon =O(1/t)\). That is, a worst-case O(1/t) convergence rate is established for the scheme (1.11) with \(\tau >0.5\). Theorem 26.5 is still the basis for the analysis in this section.

Theorem 29.1

Let \(\{w^k\}\) be the sequence generated by (1.11) with \(\tau >0.5\) for the problem (1) and \(\tilde{w}^k\) be defined by (22). Then for any integer t, we have

where

and \(\psi (v^0,v^{1})\) is defined in (41) and thus

Proof

First, it follows from (50) that

Thus, we have

Summing the inequality (65) over \(k=1,2, \ldots , t\), we obtain

and thus

Since \(\theta (u)\) is convex and

we have that

Substituting it into (66), the assertion of this theorem follows directly. \(\square \)

For a given compact set \( \mathcal{D}_{(\tilde{w})} \subset \Omega \), let

where \(v^0=(y^0,z^0,\lambda ^0)\) and \(v^1=(y^1,z^1,\lambda ^1)\) are the initial and the first generated iterates, respectively. Then, after t iterations of the scheme (1.11), the point \(\tilde{w}_t \in \Omega \) defined in (64) satisfies

which means \({\tilde{w}}_t\) is an approximate solution of VI(\(\Omega ,F,\theta \)) with an accuracy O(1/t) (recall (62)). That is, a worst-case O(1/t) convergence rate is established for the scheme (1.11) with \(\tau >0.5\). Since \({\tilde{w}}_t\) defined in (64) is the average of all iterates of (1.11), this convergence rate is in the ergodic sense.
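The mechanism behind the ergodic O(1/t) bound is a telescoping sum followed by a convexity argument. The following schematic derivation is a sketch only: \(E_k(w)\) is a placeholder introduced here for the nonnegative quantity that collects the H-norm distance \(\Vert v - v^k\Vert_H^2\) and the term \(\psi(v^{k-1},v^k)\), with the exact constants being those of (50); the nonnegative \(\varphi\)-term is simply dropped.

```latex
\begin{aligned}
&\text{Per-iteration inequality (schematic form of (50)), for all } w\in\Omega:\\
&\qquad \theta(u) - \theta(\tilde{u}^k) + (w-\tilde{w}^k)^T F(w) \;\ge\; E_{k+1}(w) - E_k(w),
  \qquad E_k(w)\ge 0 .\\[4pt]
&\text{Summing over } k=1,\ldots,t \text{ (telescoping) and dividing by } t:\\
&\qquad \theta(u) - \frac{1}{t}\sum_{k=1}^{t}\theta(\tilde{u}^k)
   + \Bigl(w - \frac{1}{t}\sum_{k=1}^{t}\tilde{w}^k\Bigr)^T F(w)
   \;\ge\; \frac{E_{t+1}(w) - E_1(w)}{t} \;\ge\; -\frac{E_1(w)}{t}.\\[4pt]
&\text{With } \tilde{w}_t := \frac{1}{t}\sum_{k=1}^{t}\tilde{w}^k
  \text{ and convexity, } \theta(\tilde{u}_t)\le \frac{1}{t}\sum_{k=1}^{t}\theta(\tilde{u}^k):\\
&\qquad \theta(\tilde{u}_t) - \theta(u) + (\tilde{w}_t - w)^T F(w) \;\le\; \frac{E_1(w)}{t},
  \qquad \forall\, w\in\Omega .
\end{aligned}
```

Taking the supremum of \(E_1(w)\) over a compact set of w then yields a bound of order 1/t that depends only on the initial iterates \(v^0\) and \(v^1\).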

9 Conclusions

We revisit the splitting method proposed in [11] for solving separable convex minimization models, and show that its optimal proximal parameter is 0.5 when the objective function is the sum of three functions. This optimal proximal parameter offers the possibility of immediate numerical acceleration, which can be easily verified by the examples tested in [2, 11] and others. For succinctness, we omit the presentation of numerical results. Meanwhile, more sophisticated techniques are required for the convergence analysis because this optimal proximal parameter generates positive indefiniteness in the proximal regularization term. We establish the convergence and estimate the worst-case convergence rate in terms of iteration complexity for the improved version of the method in [11] with the optimal proximal parameter. This work is inspired by the analysis in our recent work [9, 10] for the augmented Lagrangian method and alternating direction method of multipliers.

References

C.H. Chen, B.S. He, Y.Y. Ye, X.M. Yuan, The direct extension of ADMM for multi-block convex minimization problems is not necessarily convergent. Math. Program. 155, 57–79 (2016)

E. Esser, M. Möller, S. Osher, G. Sapiro, J. Xin, A convex model for non-negative matrix factorization and dimensionality reduction on physical space. IEEE Trans. Image Process. 21(7), 3239–3252 (2012)

F. Facchinei, J.S. Pang, Finite-Dimensional Variational Inequalities and Complementarity Problems, Vol. I (Springer Series in Operations Research, Springer, 2003)

R. Glowinski, Numerical Methods for Nonlinear Variational Problems (Springer, New York, Berlin, Heidelberg, Tokyo, 1984)

R. Glowinski, A. Marrocco, Sur l’approximation par éléments finis d’ordre un et la résolution par pénalisation-dualité d’une classe de problèmes de Dirichlet non linéaires. Revue Fr. Autom. Inform. Rech. Opér., Anal. Numér. 2, 41–76 (1975)

B.S. He, PPA-like contraction methods for convex optimization: a framework using variational inequality approach. J. Oper. Res. Soc. China 3, 391–420 (2015)

B.S. He, H. Liu, Z.R. Wang, X.M. Yuan, A strictly contractive Peaceman-Rachford splitting method for convex programming. SIAM J. Optim. 24, 1011–1040 (2014)

B.S. He, L.S. Hou, X.M. Yuan, On full Jacobian decomposition of the augmented Lagrangian method for separable convex programming. SIAM J. Optim. 25(4), 2274–2312 (2015)

B.S. He, F. Ma, X.M. Yuan, Indefinite proximal augmented Lagrangian method and its application to full Jacobian splitting for multi-block separable convex minimization problems. IMA J. Numer. Anal. 75, 361–388 (2020)

B.S. He, F. Ma, X.M. Yuan, Optimally linearizing the alternating direction method of multipliers for convex programming. Comput. Optim. Appl. 75(2), 361–388 (2020)

B.S. He, M. Tao, X.M. Yuan, A splitting method for separable convex programming. IMA J. Numer. Anal. 35, 394–426 (2014)

B.S. He, H. Yang, Some convergence properties of a method of multipliers for linearly constrained monotone variational inequalities. Oper. Res. Lett. 23, 151–161 (1998)

B.S. He, X.M. Yuan, On the \(O(1/t)\) convergence rate of the alternating direction method. SIAM J. Numer. Anal. 50, 700–709 (2012)

B.S. He, X.M. Yuan, On non-ergodic convergence rate of Douglas-Rachford alternating directions method of multipliers. Numer. Math. 130, 567–577 (2015)

M.R. Hestenes, Multiplier and gradient methods. J. Optim. Theory Appl. 4, 303–320 (1969)

K.C. Kiwiel, C.H. Rosa, A. Ruszczyński, Proximal decomposition via alternating linearization. SIAM J. Optim. 9, 668–689 (1999)

B. Martinet, Régularisation d’inéquations variationnelles par approximations successives. Rev. Francaise d’Inform. Recherche Oper. 4, 154–159 (1970)

M.J.D. Powell, A method for nonlinear constraints in minimization problems, in Optimization, ed. by R. Fletcher (Academic Press, New York, NY, 1969), pp. 283–298

R.T. Rockafellar, Monotone operators and the proximal point algorithm. SIAM J. Cont. Optim. 14, 877–898 (1976)

M. Tao, X.M. Yuan, Recovering low-rank and sparse components of matrices from incomplete and noisy observations. SIAM J. Optim. 21, 57–81 (2011)

R. Tibshirani, M. Saunders, S. Rosset, J. Zhu, K. Knight, Sparsity and smoothness via the fused lasso. J. R. Stat. Soc. 67, 91–108 (2005)

X. Zhou, C. Yang, W. Yu, Moving object detection by detecting contiguous outliers in the low-rank representation. IEEE Trans. Pattern Anal. Mach. Intell. 35, 597–610 (2013)

Z. Zhou, X. Li, J. Wright, E.J. Candes, Y. Ma, Stable principal component pursuit, in Proceedings of international symposium on information theory, Austin, Texas, USA (2010)

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

He, B., Yuan, X. (2021). On the Optimal Proximal Parameter of an ADMM-like Splitting Method for Separable Convex Programming. In: Tai, XC., Wei, S., Liu, H. (eds) Mathematical Methods in Image Processing and Inverse Problems. IPIP 2018. Springer Proceedings in Mathematics & Statistics, vol 360. Springer, Singapore. https://doi.org/10.1007/978-981-16-2701-9_8

DOI: https://doi.org/10.1007/978-981-16-2701-9_8

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-2700-2

Online ISBN: 978-981-16-2701-9

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)