Abstract

This paper proposes an automated technique to segment the retinal blood vessels from funduscopic images. An Adaptive Line Structuring Element (ALSE) [12] is used for initial segmentation, but the process introduces a large number of noisy objects along with the vessel structure. Fortunately, these noisy objects are relatively isolated structures compared with the blood vessels, so a suitably Scaled Grid can be used to separate them from their neighborhood. When an object falls fully inside a block of the grid, it is considered noise and is eliminated, whereas objects that pass over the boundary of a block are preserved. The scale of the grid is increased iteratively so that all isolated objects are eventually identified and eliminated without any loss of the actual vessel structure. To measure performance, Accuracy, Sensitivity and Specificity are calculated and compared with those of recent algorithms proposed in the literature.



Keywords

- Adaptive Line Structuring Element

- Medical image processing

- Retinal image

- Diabetic Retinopathy

- Mathematical morphology

1 Introduction

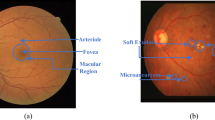

An ophthalmoscope or fundoscope is used to visualize the anatomical structure of the retina, including the blood vessels and the optic nerve, for the diagnosis and prognosis of retinal pathologies. Changes in the characteristics of blood vessels, such as diameter, tortuosity or branching angles, are indications of ophthalmic diseases. The success of treatment for Diabetic Retinopathy and other ophthalmic disorders depends on timely monitoring of changes in retinal anatomy. Many recent studies in the medical literature [1, 2] find a high correlation between coronary and cardiovascular diseases and morphological changes in retinal blood vessels. Manual scanning of retinal images to detect pathological changes requires specialized training and skill and is therefore expensive in both time and cost. A better alternative is a reliable, automated method to segment the retinal blood vessels, which could help in the early detection of morphological variations in the fundus vasculature. In the last decade, significant research effort has been directed towards the automated detection of pathological changes in retinal blood vessels; a detailed review of retinal vessel segmentation can be found in [3]. These approaches can be classified into two broad categories: supervised methods and unsupervised or rule-based methods. Rule-based methods can be further divided into techniques based on morphological image processing [4, 10, 12, 13], matched filtering [5], model-based algorithms [6] and multiscale analysis [7]. Supervised approaches [8, 9] use training and test images to classify blood vessels against the noisy background based on selected features.

This paper proposes an unsupervised technique to extract the retinal vasculature from fundus images using adaptive mathematical morphological operations. In [12], the authors proposed a novel mathematical morphology based method to extract the vessel structure using Adaptive Line Structuring Elements (ALSE). By rotating a straight-line structuring element, collecting the maximum response at each pixel, and increasing the length of the line at each step, the vessel structure can be extracted with minimal loss. The rationale is that a curved line can be approximated by a set of connected straight lines of assorted lengths. This approach extracts the vessel structure effectively but faces two major difficulties when applied in practice to retinal blood vessel segmentation. Firstly, fundoscopic images generally contain a large number of noisy pixels and many pathological objects that resemble blood vessels in intensity and shape and sometimes lie close to them. ALSE mistakenly matches these unwanted objects as blood vessels and consequently extracts them. Secondly, as the size of the Line Structuring Element (LSE) increases, the curved shape of the blood vessels is lost and the finer arterioles and venules are broken into isolated linear artifacts. In this paper, we aim to address these shortcomings of the ALSE process. Through an effective choice of noise elimination technique, this paper presents a novel unsupervised method to segment the blood vessel structure in both physiological and pathological fundoscopic images.

Three observations help overcome the shortcomings of the ALSE process. (i) The length (or scale) of the line structuring element must be limited to a suitable size so that the curvature of the vessels is preserved and small-scale vessels are not detached from the continuous vessel structure. This requires keeping the number of iterations in the ALSE process small. A small LSE preserves vessel shape and structure but cannot eliminate noisy and unwanted objects from the resultant image, which leads to the second observation. (ii) At a limited scale of observation, most noise and other pathological objects are isolated from, and discontinuous with, the main vessel structure. Hence, all isolated, discontinuous objects at that scale can be safely eliminated without harming the main vessel structure. Lastly, (iii) objects that lie close to the main vessel structure and are similar (but not identical) in color can be separated from it by suitable contrast-stretching algorithms. Once separated, these objects can be treated in the same manner as described in the second observation.

For practical implementation of the above observations, the following steps are applied in succession:

- (A) Preprocess the gray-scale image with mathematical morphological operations, eliminating small-scale noisy objects and bridging small gaps in the vessels.

- (B) Apply an ALSE process with a limited structuring-element size, which does not deform the shape of the vessels but extracts the full vessel structure together with noise. A suitable adaptive threshold then converts the resultant image into a binary image.

- (C) Place a Scaled Grid, starting from a minimum size, on the binary image obtained in step (B). Any object that falls fully inside a block of the grid is eliminated, while objects that fall on a block boundary are preserved. The grid size is increased iteratively and the same procedure is repeated to eliminate the unwanted noisy objects.

The final binary image gives a clear view of the retinal blood vessels without loss of information.

The rest of this paper is organized as follows. Sect. 2 describes the ALSE process and the proposed Scaled Grid-based noise elimination algorithm. Section 3 presents the experimental results and compares the performance of our algorithm with other methods proposed in the literature, and Sect. 4 concludes the paper.

2 Proposed Algorithm

2.1 Preprocessing of Retinal Image

In the color (RGB) fundus image (\( I_{RGB} \)), the Green channel (G) has the highest contrast, while the Blue channel (B) has very low contrast and the most noise; the retinal image is also generally saturated in the Red channel (R). Hence, the Green channel is given the greatest weight when converting the RGB image into a gray-level fundus image (\( I_{gray} \)), using Eq. (1).
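Eq. (1) is not reproduced in this text, so the sketch below only illustrates the idea of a green-weighted gray conversion. The function name `to_gray` and the weights (0.1, 0.8, 0.1) are hypothetical placeholders chosen to emphasise G; they are not the coefficients of Eq. (1).

```python
import numpy as np


def to_gray(rgb, weights=(0.1, 0.8, 0.1)):
    """Weighted RGB-to-gray conversion emphasising the green channel.

    The weights are HYPOTHETICAL placeholders; the actual weighting is
    the one defined by Eq. (1).
    """
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                          # normalise so gray stays in the input range
    return rgb[..., :3].astype(float) @ w    # (H, W, 3) @ (3,) -> (H, W)
```

With these placeholder weights a pure-green pixel of value 100 maps to 80, while red and blue contribute only 10% each.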

Kundu et al. [12] and others [10] observed that the diameters of retinal blood vessels in fundus images range from 3 to 7 pixels. Accordingly, objects of size 3 pixels or less are eliminated from \( I_{gray} \) using the morphological Top-Hat operation of Eq. (2), in which a morphological opening (\( \gamma_{{S_{d3} }} \)) followed by a closing (\( \varphi_{{S_{d8} }} \)) is performed with disc-shaped structuring elements of diameter 3 and 8 pixels, respectively. The resultant image (\( I_{rst} \)) retains objects larger than 3 pixels, and the closing bridges small gaps between the finer vessels.
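A minimal sketch of this preprocessing step. Since Eq. (2) itself is not shown here, it follows the prose description only: an opening with a disc of diameter 3 pixels, then a closing with a disc of diameter 8 pixels. It uses SciPy's `ndimage.grey_opening` and `ndimage.grey_closing`; the `disc` helper is hypothetical, and the input is assumed to show vessels as bright structures (for example an inverted green channel).

```python
import numpy as np
from scipy import ndimage


def disc(diameter):
    """Hypothetical helper: flat disc footprint of the given diameter."""
    c = (diameter - 1) / 2
    yy, xx = np.mgrid[:diameter, :diameter]
    return (yy - c) ** 2 + (xx - c) ** 2 <= (diameter / 2) ** 2


def preprocess(gray):
    """Opening with a 3-pixel disc, then closing with an 8-pixel disc.
    ASSUMPTION: vessels are bright in `gray` (e.g. an inverted green channel)."""
    gray = np.asarray(gray, dtype=float)
    # opening removes bright details that the 3-pixel disc cannot fit into
    opened = ndimage.grey_opening(gray, footprint=disc(3))
    # closing fills dark gaps narrower than the 8-pixel disc
    return ndimage.grey_closing(opened, footprint=disc(8))
```

A single bright pixel on a dark background does not survive the opening, while a bright strip at least 3 pixels wide does.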

2.2 Segmentation of the Retinal Vessel Structure from the Background

For the initial segmentation of blood vessels from \( I_{rst} \), mathematical morphological operations are applied with an Adaptive Line Structuring Element (ALSE). The ALSE has two parameters: size or span (\( i \)) and angle (\( \theta^\circ \)). The ALSE (\( S_{\theta^\circ }^{i} \)) is a one-dimensional array of ones of length \( i \) pixels; its middle pixel is taken as the center, and its orientation is given by the angle \( \theta^\circ \). For a specific length \( i \), starting from 3 pixels, a morphological opening of \( I_{rst} \) is computed with \( S_{\theta^\circ }^{i} \) as the structuring element while \( \theta^\circ \) is increased from \( 0^\circ \) to \( 180^\circ \) in increments of \( 9^\circ \). After a complete \( 180^\circ \) rotation, the maximum response over all angles, \( I^{i} \)(x, y), is collected at each pixel. In each iteration, the length \( i \) of the ALSE is increased by 1 pixel, from 3 pixels up to 7 pixels, since vessel diameters lie between 3 and 7 pixels. Lengths greater than 7 pixels can distort the original vessel structure, as mentioned in the introduction; this was a drawback of the method of Kundu et al. [12].

These operations are given formally by Eqs. (3) and (4), and Fig. 1 illustrates the working of the ALSE algorithm.

(a) Rotation of the ALSE (\( S_{\theta^\circ }^{7} \)) of size 7 pixels, each pixel having value one. (b) (i) and (ii): where \( S_{\theta^\circ }^{7} \) does not match the image pixels, they are not considered vessel structure and are eliminated. (b) (iii): where \( S_{\theta^\circ }^{7} \) matches the image pixels, they are considered vessel structure and are preserved.
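Eqs. (3) and (4) are not reproduced in this text, so the sketch below is one hypothetical reading of the ALSE description: for each length i from 3 to 7 pixels, gray-scale openings with a line footprint at angles 0°, 9°, …, 171° (180° repeats 0° for a line) are combined by a pixel-wise maximum, and the result for one length is fed into the next. The helper names `line_footprint` and `alse`, the chaining between lengths, and the rounding used to rasterise the line are all assumptions.

```python
import numpy as np
from scipy import ndimage


def line_footprint(length, angle_deg):
    """Hypothetical rasterisation of S_theta^i: `length` samples along a line
    through the centre of an odd-sized square box, rounded to pixels."""
    size = length if length % 2 else length + 1   # odd box so the centre is a pixel
    c = size // 2
    t = np.linspace(-(length - 1) / 2, (length - 1) / 2, length)
    theta = np.deg2rad(angle_deg)
    cols = np.floor(c + t * np.cos(theta) + 0.5).astype(int)
    rows = np.floor(c - t * np.sin(theta) + 0.5).astype(int)  # rows grow downwards
    fp = np.zeros((size, size), dtype=bool)
    fp[rows, cols] = True
    return fp


def alse(img, lengths=range(3, 8), step_deg=9):
    """For each length i: pixel-wise maximum of openings over all angles.
    ASSUMPTION: each length is applied to the output of the previous one."""
    out = np.asarray(img, dtype=float)
    for i in lengths:
        responses = [ndimage.grey_opening(out, footprint=line_footprint(i, a))
                     for a in range(0, 180, step_deg)]
        out = np.max(responses, axis=0)
    return out
```

Because every opening is anti-extensive, the output never exceeds the input. A single bright pixel, into which no line of length 3 or more fits, is removed, while a straight bright segment aligned with one of the sampled angles survives.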

2.3 Enhancement of Vessel’s Structure Using Difference of Gaussian

When the vessel structure in the resultant image (\( I^{7} \)) is segmented, it is accompanied by numerous noisy pixels, some of which lie close to the vessels and have almost the same gray levels. To separate these noisy pixels from neighboring vessels by increasing the contrast, a Difference of Gaussians (DoG) is applied. The DoG acts as a band-pass filter that preserves spatial information while increasing the contrast between vessels and neighboring noise.

To apply the DoG, the grayscale retinal image (\( I^{7} \)) is convolved with Gaussian kernels of two different variances, \( \sigma_{1}^{2} \) and \( \sigma_{2}^{2} \), and the difference between the two convolved images is taken, as shown in Eq. (5).
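Eq. (5) is not shown here; from the description it is the difference of two Gaussian-smoothed copies of \( I^{7} \). A minimal sketch using SciPy's `ndimage.gaussian_filter`, with hypothetical default values σ1 = 1 and σ2 = 2 (the paper's values are not given in this text):

```python
import numpy as np
from scipy import ndimage


def dog(img, sigma1=1.0, sigma2=2.0):
    """Difference of Gaussians: (G_sigma1 * I) - (G_sigma2 * I).
    sigma1 and sigma2 are HYPOTHETICAL defaults, not the paper's values."""
    img = np.asarray(img, dtype=float)
    return ndimage.gaussian_filter(img, sigma1) - ndimage.gaussian_filter(img, sigma2)
```

With σ1 < σ2, a thin bright structure such as a vessel gives a positive response at its center, while flat regions give a response near zero, which is the band-pass behavior described above.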

2.4 Elimination of Background Noise Using Scaled Grid

At this stage, a local threshold is applied to the resultant image (\( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \)) to separate the vessel structure from the background; the binary image thus produced is denoted by \( I_{binary}^{r} \). This is done by dividing \( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \) into a number of blocks and applying Otsu's threshold [15] to each block.
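A sketch of block-wise Otsu thresholding, written in plain NumPy so that it stays self-contained. The names `otsu_threshold` and `local_otsu`, the block size of 64 pixels, the 256-bin histogram, and the choice to leave constant blocks as background are assumptions for illustration; this text does not specify them.

```python
import numpy as np


def otsu_threshold(values, nbins=256):
    """Otsu's threshold: the histogram split that maximises between-class variance."""
    hist, edges = np.histogram(values, bins=nbins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                   # pixel count in class 0 (bins <= k)
    w1 = w0[-1] - w0                       # pixel count in class 1 (bins > k)
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)           # class means, guarding empty classes
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2   # proportional to between-class variance
    return edges[np.argmax(between) + 1]   # upper edge of the last class-0 bin


def local_otsu(img, block=64):
    """Apply Otsu's threshold independently to each block (HYPOTHETICAL size).
    Constant blocks carry no threshold information and stay background."""
    img = np.asarray(img, dtype=float)
    out = np.zeros(img.shape, dtype=bool)
    for r in range(0, img.shape[0], block):
        for c in range(0, img.shape[1], block):
            tile = img[r:r + block, c:c + block]
            if tile.max() > tile.min():
                out[r:r + block, c:c + block] = tile >= otsu_threshold(tile)
    return out
```

Thresholding per block lets the cut-off follow slow illumination changes across the fundus, which a single global threshold cannot do.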

The main difficulty in extracting the vessel structure from a retinal image is the similarity of gray levels between the blood vessels and noise. Although the DoG separates noise in the neighborhood of the vessel structure, numerous noisy pixels and small objects remain.

A useful property of these noisy pixels is that, compared with the blood vessels, they form small, isolated structures. Hence, a suitable boundary can isolate each noisy structure from its neighborhood. For this purpose, a sequence of virtual grids, \( I_{grid}^{i} \), is superimposed on the binary image \( I_{binary}^{r} \). The side length \( i \) of each square block in \( I_{grid}^{i} \) starts at 3 pixels and increases gradually. The one-pixel-thick boundary of each block is set to one, and its interior is set to zero.

The \( i \)th grid, \( I_{grid}^{i} \), is superimposed on (logically multiplied with) the image \( I_{binary}^{r} \) to obtain the parts of objects that fall on the block boundaries. The resulting overlapped image is denoted by \( I_{boundary\_obj}^{i} \), as shown in Eq. (6).

Isolated objects inside the boundary of a block are considered noise and must be eliminated. To distinguish objects that fall fully inside a block (isolated objects) from objects that pass over the boundary into the next block, the following sequence of logical operations is applied:

- Step 1: The complement of the grid (\( I_{ \sim grid}^{i} \)) is superimposed on \( I_{binary}^{r} \); the resultant image, \( I_{iso}^{i} \), contains all objects falling inside a block.

- Step 2: A morphological dilation is applied to each block (window) of \( I_{iso}^{i} \) using a disk-shaped structuring element (\( s_{r1} \)) of radius 1 pixel, forming the dilated image \( \delta I_{iso}^{i} \).

- Step 3: The dilated image \( \delta I_{iso}^{i} \) is superimposed on \( I_{boundary\_obj}^{i} \); the resultant image, \( I_{{iso^{ \wedge } boundary\_obj}}^{i} \), contains the objects that lie inside a block and continue over its boundary into the adjacent block.

- Step 4: If the boundary of a block in \( I_{{iso^{ \wedge } boundary\_obj}}^{i} \) contains a non-zero value, the object inside the block continues into the adjacent window, so it is preserved. If the boundary contains no non-zero value, the object is isolated; it is treated as noise and the window is filled with 0. In this way, noisy isolated objects are eliminated. The above process is given by Eqs. (7), (8) and (9).

$$ I_{boundary\_obj}^{i} = I_{binary}^{r} \wedge I_{grid}^{i} \tag{6} $$

$$ I_{iso}^{i} = I_{binary}^{r} \wedge I_{ \sim grid}^{i} \tag{7} $$

$$ \delta I_{iso}^{i} = I_{iso}^{i} \oplus s_{r1} \tag{8} $$

$$ I_{{iso^{ \wedge } boundary\_obj}}^{i} = \delta I_{iso}^{i} \wedge I_{boundary\_obj}^{i} \tag{9} $$

This method retains the parts of the vessel structure that lie on the boundaries of the grid for a particular window size. A large noisy object may be taken as part of a vessel at a small window size, but as the window size is gradually increased, such large noisy objects also fall fully inside a window and are eliminated by the same procedure. At the end of this process, the resultant image contains only the retinal blood vessel structure. The technique is illustrated in Figs. 2 and 3.

(a) 5 × 5 pixel window grid (\( I_{grid}^{5} \)) superimposed on the image of Fig. 2(b); isolated objects that fall fully inside a window are eliminated. (b) 7 × 7 pixel window grid (\( I_{grid}^{7} \)) superimposed on the image of Fig. 3(a), from which the isolated objects falling inside 5 × 5 pixel windows have already been eliminated.
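The sketch below strings Eqs. (6) to (9) and Step 4 together into a per-scale pass and repeats it for grid sizes 3, 5, 7 and 9, matching the 3 × 3 to 9 × 9 range reported in Sect. 3. Several details are assumptions rather than the authors' specification: adjacent blocks share their one-pixel border (so grid lines fall every i - 1 pixels), the image border is treated as a grid line, the radius-1 disc is taken to be a 3 × 3 cross from SciPy's `ndimage.generate_binary_structure(2, 1)` and is applied per block as Step 2 describes, and only a block's interior is cleared when Step 4 finds nothing on its boundary. The names `make_grid`, `grid_pass` and `scaled_grid` are hypothetical.

```python
import numpy as np
from scipy import ndimage


def make_grid(shape, i):
    """I_grid^i: ones on block borders, zeros inside. ASSUMPTION: blocks of side i
    share one-pixel borders (lines every i - 1 pixels); the image edge is a line."""
    step = i - 1
    grid = np.zeros(shape, dtype=bool)
    grid[::step, :] = True
    grid[:, ::step] = True
    grid[-1, :] = True
    grid[:, -1] = True
    return grid


def grid_pass(binary, i):
    """One scale of the noise elimination: Eqs. (6)-(9) followed by Step 4."""
    binary = np.asarray(binary, dtype=bool)
    boundary_obj = binary & make_grid(binary.shape, i)      # Eq. (6)
    cross = ndimage.generate_binary_structure(2, 1)        # radius-1 disc (3 x 3 cross)
    out = binary.copy()
    step = i - 1
    rows, cols = binary.shape
    for r0 in range(0, rows - 1, step):
        for c0 in range(0, cols - 1, step):
            r1, c1 = min(r0 + step, rows - 1), min(c0 + step, cols - 1)
            # Eq. (7) restricted to this block: object pixels strictly inside it
            window = np.zeros((r1 - r0 + 1, c1 - c0 + 1), dtype=bool)
            window[1:-1, 1:-1] = binary[r0 + 1:r1, c0 + 1:c1]
            if not window.any():
                continue
            dilated = ndimage.binary_dilation(window, structure=cross)   # Eq. (8)
            touching = dilated & boundary_obj[r0:r1 + 1, c0:c1 + 1]      # Eq. (9)
            if not touching.any():
                # Step 4: nothing continues across the border, so clear the interior
                out[r0 + 1:r1, c0 + 1:c1] = False
    return out


def scaled_grid(binary, sizes=(3, 5, 7, 9)):
    """Apply grid_pass at increasing block sizes (3 x 3 up to 9 x 9 pixels)."""
    out = np.asarray(binary, dtype=bool)
    for i in sizes:
        out = grid_pass(out, i)
    return out
```

Deciding per block, as Step 4 does, means that a block containing both a vessel that crosses its border and a separate noise blob is kept whole at that scale; the blob is removed only at a larger scale where it ends up alone in a block. A per-object variant based on connected components would avoid this, at the cost of departing from the description above.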

3 Experiment

3.1 Results and Discussions

The proposed algorithm is tested on the freely available DRIVE database [14]. This database contains 40 fundus images, each with a corresponding mask and a manually segmented ground truth image, of size 584 × 565 pixels.

Figure 4(b) shows the gray-scale image \( I_{gray} \) obtained by applying Eq. (1) to the original RGB image (\( I_{RGB} \)). Next, the Top-Hat transformation of Eq. (2) is applied to \( I_{gray} \), giving the image \( I_{rst} \) shown in Fig. 4(c), which still contains a large amount of noise with intensity very similar to that of the vessels. To segment the vessel structure from the background, the Adaptive Line Structuring Element (ALSE) of Eqs. (3) and (4) is then applied to \( I_{rst} \); the output image \( I^{7} \) is shown in Fig. 4(d). To increase the contrast between the vessel structure and neighboring noise, a DoG is applied to \( I^{7} \), and the output image (\( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \)) is shown in Fig. 5(a). Otsu's threshold is then applied locally to \( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \) to segment the vessels from the background; Fig. 5(b) shows the resulting binary retinal image (\( I_{binary}^{7} \)).

(a) Output image \( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \) after applying DoG to \( I^{7} \). (b) Binary retinal image \( I_{binary}^{7} \) after applying Otsu's threshold locally to \( \Upgamma_{{\sigma_{1} ,\sigma_{2} }} \). (c) Image \( \delta I_{iso}^{7} \), obtained by dilating \( I_{iso}^{7} \) with \( s_{r1} \). (d) Output image \( I_{{iso^{ \wedge } boundary\_obj}}^{7} \), which contains the boundary objects common to \( \delta I_{iso}^{7} \) and \( I_{boundary\_obj}^{7} \).

To find the isolated objects of \( I_{binary}^{7} \), the complemented grid image (\( I_{ \sim grid}^{7} \)) is combined with \( I_{binary}^{7} \) by a logical AND, and the resultant image \( I_{iso}^{7} \) is dilated by \( s_{r1} \) to form \( \delta I_{iso}^{7} \) (see Fig. 5(c)). Figure 5(d) shows the image \( I_{{iso^{ \wedge } boundary\_obj}}^{7} \), formed by superimposing \( \delta I_{iso}^{7} \) on \( I_{boundary\_obj}^{7} \). This image contains only those parts of \( I_{binary}^{7} \) that are boundary objects common to \( \delta I_{iso}^{7} \) and \( I_{boundary\_obj}^{7} \).

Blocks whose boundaries contain non-zero values in this image are considered part of the vessel structure and left untouched, while the other blocks are filled with zero. The window size is then increased and the same procedure is repeated until no isolated structures remain in the image. The resultant images after applying grids of different sizes (3 × 3 to 9 × 9 pixels) are shown in Fig. 6(a), 6(b), 6(c) and 6(d); the noise-free vessel structure is obtained at a block size of 9 × 9 pixels (see Fig. 6(d)).

3.2 Performance Measurement

The performance of our algorithm is measured on the DRIVE data set by comparing each segmented image with the given ground truth image pixel by pixel. A pixel segmented as vessel is a true positive (TP) if it is also a vessel pixel in the ground truth, and a false positive (FP) if it is background in the ground truth. Likewise, a pixel segmented as background is a true negative (TN) if it is background in the ground truth, and a false negative (FN) otherwise. To compare the performance of the proposed technique with existing techniques, we calculated the Accuracy (Accu), Sensitivity (Sen) and Specificity (Spf), defined as follows:

$$ Accu = \frac{TP + TN}{TP + TN + FP + FN} $$

$$ Sen = \frac{TP}{TP + FN} $$

$$ Spf = \frac{TN}{TN + FP} $$

The Accuracy (Accu), Sensitivity (Sen) and Specificity (Spf) of the proposed technique are calculated on all images of the DRIVE database, and their average values are compared with those of recent existing methods in Table 1.
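The pixel-wise counts and the three measures can be computed directly from boolean images. A minimal sketch; the name `seg_metrics` is hypothetical, and the optional `mask` argument, which restricts the counts to the field of view given by the DRIVE masks, is an assumption, since this text does not say whether the measures are computed over the whole image or only inside the mask.

```python
import numpy as np


def seg_metrics(seg, gt, mask=None):
    """Accuracy, Sensitivity and Specificity of a binary vessel map `seg`
    against ground truth `gt`; True/1 marks a vessel pixel in both."""
    seg = np.asarray(seg, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    if mask is not None:                  # ASSUMPTION: optional field-of-view mask
        m = np.asarray(mask, dtype=bool)
        seg, gt = seg[m], gt[m]
    tp = np.sum(seg & gt)                 # vessel in both
    tn = np.sum(~seg & ~gt)               # background in both
    fp = np.sum(seg & ~gt)                # vessel in seg, background in gt
    fn = np.sum(~seg & gt)                # background in seg, vessel in gt
    return {
        "Accu": (tp + tn) / (tp + tn + fp + fn),
        "Sen": tp / (tp + fn),
        "Spf": tn / (tn + fp),
    }
```

For example, with one TP, one FP, one FN and three TN pixels, Accu = 4/6, Sen = 0.5 and Spf = 0.75.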

4 Conclusion

In this paper, a novel technique is proposed to segment the vessel structure from retinal (fundoscopic) images. The openings used in the ALSE step are anti-extensive and the scaled-grid stage only removes pixels, so no new objects or structures are introduced into the fundus image apart from the small gaps bridged during preprocessing. Noise elimination using the scaled grid is a fast and efficient step. The Accuracy, Sensitivity and Specificity of the output images are measured against the ground truth images of the DRIVE database (manual-1 and manual-2). The average Accuracy, Sensitivity and Specificity of the proposed method are 95.21%, 72.83% and 98.35%, respectively, which appear to be better than those of previously proposed unsupervised techniques. The experimental results show that the proposed technique, based on mathematical morphology and a uniform grid, is effective at extracting both large and small vessels simultaneously. It enhances the vessel structure and suppresses noise without changing the original structure, so low-contrast vessels are also detected accurately.

References

[1] Wong, T.Y., Klein, R., Sharrett, A.R., et al.: Retinal arteriolar narrowing and risk of coronary heart disease in men and women. JAMA: J. Am. Med. Assoc. 287(9), 1153–1159 (2002). http://jama.amaassn.org/content/287/9/1153.abstract

[2] Gelman, R., Martinez-Perez, M.E., Vanderveen, D.K., et al.: Diagnosis of plus disease in retinopathy of prematurity using retinal image multiscale analysis. Invest. Ophthalmol. Vis. Sci. 46(12), 4734–4738 (2005)

[3] Fraz, M.M., Barman, S.A., Remagnino, P., et al.: Blood vessel segmentation methodologies in retinal images – a survey. Comput. Methods Prog. Biomed. 108, 407–433 (2012)

[4] Fraz, M.M., Barman, S.A., Remagnino, P., et al.: An approach to localize the retinal blood vessels using bit planes and centerline detection. Comput. Methods Prog. Biomed. 108(2), 600–616 (2011)

[5] Hoover, A.D., Kouznetsova, V., Goldbaum, M.: Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med. Imaging 19(3), 203–210 (2000)

[6] Al-Diri, B., Hunter, A., Steel, D.: An active contour model for segmenting and measuring retinal vessels. IEEE Trans. Med. Imaging 28(9), 1488–1497 (2009)

[7] Sofka, M., Stewart, C.V.: Retinal vessel centerline extraction using multiscale matched filters, confidence and edge measures. IEEE Trans. Med. Imaging 25(12), 1531–1546 (2006)

[8] Ricci, E., Perfetti, R.: Retinal blood vessel segmentation using line operators and support vector classification. IEEE Trans. Med. Imaging 26(10), 1357–1365 (2007)

[9] Lupascu, C.A., Tegolo, D., Trucco, E.: FABC: retinal vessel segmentation using AdaBoost. IEEE Trans. Inf. Technol. Biomed. 14(5), 1267–1274 (2010)

[10] Samanta, S., Saha, S.K., Chanda, B.: A simple and fast algorithm to detect the fovea region in fundus retinal image. In: Second International Conference on Emerging Applications of Information Technology, pp. 206–209. IEEE Xplore (2011)

[11] Azzopardi, G., Strisciuglio, N., Vento, M., Petkov, N.: Trainable COSFIRE filters for vessel delineation with application to retinal images. Med. Image Anal. 19(1), 46–57 (2015)

[12] Kundu, A., Chatterjee, R.K.: Morphological scale-space based vessel segmentation of retinal image. In: Annual IEEE India Conference (INDICON), pp. 986–990 (2012)

[13] Mondal, R., Chatterjee, R.K., Kar, A.: Segmentation of retinal blood vessels using adaptive noise island detection. In: Fourth International Conference on Image Information Processing (ICIIP), pp. 1–5 (2017)

[14] DRIVE: Digital Retinal Images for Vessel Extraction. http://www.isi.uu.nl/Research/Databases/DRIVE/. Accessed 30 June 2012

[15] Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)


Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Nandy, R.S., Chatterjee, R.K., Das, A. (2020). Segmentation of Blood Vessels from Fundus Image Using Scaled Grid. In: Bhattacharjee, A., Borgohain, S., Soni, B., Verma, G., Gao, XZ. (eds) Machine Learning, Image Processing, Network Security and Data Sciences. MIND 2020. Communications in Computer and Information Science, vol 1240. Springer, Singapore. https://doi.org/10.1007/978-981-15-6315-7_18


DOI: https://doi.org/10.1007/978-981-15-6315-7_18


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-6314-0

Online ISBN: 978-981-15-6315-7

eBook Packages: Computer Science, Computer Science (R0)