Abstract

Light-emitting diode (LED)-based photoacoustic imaging (PAI) systems have drastically reduced the installation and operational cost of the modality. However, LED-based PAI systems not only inherit the problems of optical and acoustic attenuation encountered by PAI in general but also suffer from a low signal-to-noise ratio (SNR) and relatively shallow imaging depths. This necessitates computational signal and image analysis methodologies that can alleviate these problems. In this chapter, we outline different classes of signal-domain and image-domain processing algorithms aimed at improving the SNR and enhancing the visual image quality of LED-based PAI. The image processing approaches discussed herein encompass pre-processing and noise reduction techniques, morphological and scale-space-based image segmentation, and deformable (active contour) models. Finally, we provide a preview of a state-of-the-art multimodal ultrasound-photoacoustic image quality improvement framework, which can effectively enhance the quantitative imaging performance of PAI systems. The authors firmly believe that innovative signal processing methods will accelerate the adoption of LED-based PAI systems for radiological applications in the near future.

1 Introduction

Photoacoustic imaging (PAI) emerged in the early 2000s as a novel non-invasive and non-ionizing imaging method. It harnesses the advantages of optical and ultrasound imaging to provide high-contrast responses characteristic of functional and molecular attributes without sacrificing resolution (at depths of millimeters to centimeters) in highly optically scattering biological tissues. In PAI, acoustic waves are generated by the absorption of short-pulsed electromagnetic waves and are then detected using sensors such as piezoelectric detectors, hydrophones, or micro-machined detectors. The term photoacoustic (also optoacoustic) imaging refers to modalities that use visible or near-infrared light pulses for illumination, whereas the use of electromagnetic waves in the radiofrequency or microwave range is referred to as thermoacoustic imaging. Research efforts in PAI have been directed towards developing new hardware components and inversion methodologies that increase imaging speed, depth, and resolution, as well as towards investigating potential biomedical applications. Further, the unique capabilities of recently developed small-animal imaging systems and volumetric scanners have opened up the largely unexplored domain of post-reconstruction image analysis. Despite these advantages and the massive growth in PAI modalities, PAI is still used mostly in research and preclinical studies, owing to the high cost of the instrumentation and to so-called limited-view effects, which result in sub-optimal imaging performance and limited quantification capabilities. Significant limitations also remain in terms of inadequate penetration depth and the lack of a high-resolution anatomical layout of whole cross-sectional areas, which encumber its application in the clinical domain.

With the emergence of light-emitting diode (LED)-based PAI modalities, the operational cost of imaging systems has been drastically reduced, and the instrumentation has become compact and portable while maintaining an imaging depth of nearly 40 mm, with a significant improvement in resolution as well. Being cost-effective and more stable than standard optical parametric oscillator (OPO)-based systems, LED-based systems have made PAI technology more accessible and opened it to new application domains. Several early clinical studies using PAI have recently been reported, e.g., gastrointestinal imaging [1], brain resection guidance [2], and rheumatoid arthritis imaging [3]. Further, its capability for real-time monitoring of disease biomarkers makes it a promising tool for the longitudinal monitoring of circulating tumour cells, heparin, and lithium [4]. However, this modality, and LED-based PAI in particular, is still characterized by a low signal-to-noise ratio (SNR) and lower image saliency than several clinically adopted imaging modalities. Therefore, enhancing the SNR in both the signal and image domains, together with image enhancement and pre-processing of PA images, is of significant interest for obtaining clinically relevant information and for characterizing different tissue types based on their morphological and functional attributes. In this context, this chapter describes the use of signal and image analysis, in conjunction with imaging and post-processing techniques, to improve the quality of PA images and enable optimized workflows for biological, preclinical, and clinical imaging.

This chapter illustrates relevant signal and image analysis techniques and is organized as follows. Section 2 introduces a generalized PAI system and describes the different aspects of the imaging instrumentation. Section 3 discusses signal processing techniques, e.g., ensemble empirical mode decomposition, wavelet-based denoising, Wiener deconvolution using the channel impulse response, and principal component analysis, that increase the SNR of the acquired acoustic signals prior to reconstruction. Section 4 covers image analytics applied at different stages of post-processing, ranging from noise removal to the segmentation of different biological features. Various spatial-domain and frequency-domain techniques that significantly increase the SNR of PA images are discussed. In addition, different biological structures can be segmented based on their structural and functional properties through numerous approaches, e.g., morphological processing, feature-based segmentation, clustering techniques, and deformable models. Together, these methods deepen the understanding of PA image analysis and help identify problem-specific processing methodologies in the application domain. Finally, Sect. 5 covers advanced solutions that improve image quality by correcting optical and acoustic inaccuracies arising from practical limitations and approximations in the PAI modality; different experimental and algorithmic approaches are discussed in light of recent findings in PA research.
In summary, this chapter provides a holistic overview of LED-based PA signal and image processing at various stages, from acoustic signal acquisition to the post-reconstruction processing of PA images, through different computational algorithms, and outlines prospective research directions for improving the efficacy of PA imaging systems in clinical settings.

2 Block Diagram of Imaging and Signal Acquisition

A generalized schematic of a PAI system is shown in Fig. 1. Generally, a light source with a nanosecond pulse duration (Fig. 1 shows a laser diode-based PAI system), a repetition rate from 10 Hz to several kHz, and a wavelength in the visible to NIR range is used to irradiate the sample under observation. Importantly, the laser energy exposure must be kept within the maximum permissible limit given by the ANSI standard. Due to the thermoelastic effect, the absorbing tissue compartments produce characteristic acoustic responses, which are acquired using an ultrasound transducer unit. The transducer, working in the range of several MHz, can be placed adjacent to the sample to capture these PA signals and convert them into electrical signals. These are transferred to the data acquisition unit, which is directly connected to and controlled by the host PC for post-processing, reconstruction, and storage of the signal and image data for further offline processing if required.

Before acquisition by the data acquisition system, these PA signals are amplified because of their low amplitude and filtered to reduce the effect of noise. This noise usually combines electronic noise, system thermal noise, and, most importantly, measurement noise arising from the highly scattering tissue medium, in which the acoustic waves undergo multiple attenuation events before reaching the ultrasound transducer [5]. These noise sources degrade the signal strength and, eventually, the quality of PA images. Therefore, a significant amount of signal processing, at both the hardware and software levels, needs to be carried out to mitigate this challenge. These techniques are discussed in the following sections.

3 Signal Domain Processing of PA Acquisitions

In most cost-effective PA imaging systems, researchers use low-energy pulsed laser diodes (PLDs) or LEDs, which significantly reduce the signal strength and hence degrade the SNR and the quality of reconstructed images. Such imaging set-ups need substantially improved signal processing to enhance the SNR so that reconstruction eventually yields good-quality PA images. With recent scientific and technological advances, several researchers have approached this problem from different perspectives. Zhu et al. introduced a low-noise preamplifier in the LED-PAI signal acquisition path to increase the sensitivity of PA reception, followed by two-step signal averaging: 64 times in the data acquisition unit and 6 times on the host PC, for a total of 384 averages. Assuming the noise is uncorrelated between acquisitions, this improves the SNR by the square root of the total number of averages, i.e., by a factor of about \( \sqrt{384} \approx 19.6 \) [6]. They also demonstrated this SNR improvement strategy through phantom experiments and in vivo imaging of the vasculature of a human finger. However, such a technique can also lead to the loss of high-frequency information originating from small and subtle structures in LED-PAI.
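The square-root rule can be checked numerically. The sketch below is a synthetic experiment, not the set-up of [6]: the pulse shape, noise level, and record length are hypothetical, and the noise is assumed to be white and independent between acquisitions.

```python
import numpy as np

def snr_db(clean, estimate):
    """SNR (dB) of an estimate relative to a known clean trace."""
    noise = estimate - clean
    return 10 * np.log10(np.sum(clean ** 2) / np.sum(noise ** 2))

rng = np.random.default_rng(0)
t = np.linspace(0.0, 10e-6, 2000)              # 10 us record (hypothetical)
u = (t - 5e-6) / 0.2e-6
clean = -u * np.exp(-u ** 2)                   # hypothetical bipolar PA pulse
sigma = 2.0                                    # noise std, well above the pulse amplitude

def acquire(n_avg):
    """Mean of n_avg independent noisy acquisitions of the same pulse."""
    frames = clean + sigma * rng.standard_normal((n_avg, t.size))
    return frames.mean(axis=0)

single = acquire(1)
# Two-stage averaging: 64x in the DAQ, then 6 such averages on the host PC
two_stage = np.mean([acquire(64) for _ in range(6)], axis=0)

gain_db = snr_db(clean, two_stage) - snr_db(clean, single)
predicted_db = 20 * np.log10(np.sqrt(64 * 6))  # amplitude gain sqrt(384), about 19.6
print(f"measured {gain_db:.1f} dB vs predicted {predicted_db:.1f} dB")
```

Because the six host-side averages each combine 64 frames, the result is identical to a single 384-frame average; splitting it into two stages only changes where the computation happens.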

Among other signal enhancement techniques, conventional approaches include ensemble empirical mode decomposition (EEMD), wavelet-based denoising, Wiener deconvolution using the channel impulse response, and principal component analysis (PCA) of the received PA signals [5]. EEMD is a time-frequency analysis technique that sifts an ensemble of white-noise-added copies of the data into intrinsic mode functions (IMFs) and then averages the corresponding IMFs over a sufficient number of trials, so that the added noise cancels out [7]. In this mechanism, the added white noise provides a uniform reference frame in time-frequency space. The advantage over the classical empirical mode decomposition technique is that the EEMD scales can be separated without any a priori knowledge. However, it is challenging to specify an appropriate criterion for selecting the IMFs to be used for PA image reconstruction.

In wavelet-based denoising, although the acoustic signal can be separated from the background noise with significant accuracy, the thresholding parameter must be optimized to suppress undesired noise while preserving signal details. Moreover, wavelet-based denoising requires prior knowledge of the signal and the noise environment, because the choice of wavelet function and threshold depends on their characteristics. One way to overcome this difficulty is to make the process parametric and adaptive [8, 9]. These works introduced polynomial thresholding operators, parameterized according to the signal environment, that can reproduce both soft and hard thresholding. Such a methodology not only provides additional degrees of freedom for preserving signal details in a noisy environment but also adaptively approaches the optimal parameter values through least-squares optimization of the polynomial coefficients. However, such a heuristic search for optimal threshold values is cumbersome in the LED-PAI modality and increases the computational burden of the overall denoising process.
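As an illustration of the basic, non-adaptive scheme, the sketch below applies soft thresholding to a multilevel Haar wavelet decomposition. The Haar wavelet, the median-absolute-deviation noise estimate, and the universal threshold \( \sigma\sqrt{2\ln N} \) are assumptions chosen for simplicity; this is not the polynomial thresholding of [8, 9]. Only NumPy is used, and the signal length must be divisible by \( 2^{levels} \).

```python
import numpy as np

def haar_dwt(x, levels):
    """Multilevel orthonormal Haar DWT: [approx, coarsest detail, ..., finest detail]."""
    a = np.asarray(x, dtype=float)
    details = []
    for _ in range(levels):
        even, odd = a[0::2], a[1::2]
        details.append((even - odd) / np.sqrt(2))   # detail (high-pass) coefficients
        a = (even + odd) / np.sqrt(2)               # approximation (low-pass) coefficients
    return [a] + details[::-1]

def haar_idwt(coeffs):
    """Inverse of haar_dwt."""
    a = coeffs[0]
    for d in coeffs[1:]:
        out = np.empty(2 * a.size)
        out[0::2] = (a + d) / np.sqrt(2)
        out[1::2] = (a - d) / np.sqrt(2)
        a = out
    return a

def soft_threshold(c, lam):
    """Shrink coefficients towards zero by lam (soft thresholding)."""
    return np.sign(c) * np.maximum(np.abs(c) - lam, 0.0)

def wavelet_denoise(x, levels=5):
    coeffs = haar_dwt(x, levels)
    sigma = np.median(np.abs(coeffs[-1])) / 0.6745   # robust noise estimate, finest scale
    lam = sigma * np.sqrt(2 * np.log(len(x)))        # universal threshold (assumption)
    shrunk = [coeffs[0]] + [soft_threshold(d, lam) for d in coeffs[1:]]
    return haar_idwt(shrunk)

rng = np.random.default_rng(1)
n = np.arange(1024)
clean = np.exp(-((n - 512) / 20.0) ** 2) * np.sin(2 * np.pi * n / 40)  # hypothetical burst
noisy = clean + 0.2 * rng.standard_normal(n.size)
denoised = wavelet_denoise(noisy)
mse_noisy = np.mean((noisy - clean) ** 2)
mse_denoised = np.mean((denoised - clean) ** 2)
print(f"MSE noisy {mse_noisy:.4f}, denoised {mse_denoised:.4f}")
```

Swapping `soft_threshold` for a hard threshold, or the fixed universal threshold for a data-driven one, is exactly where the adaptive schemes discussed above differ.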

Another category of methods follows deconvolution-based strategies to restore the signal content and suppress noise. Wiener deconvolution plays a vital role in reducing noise by equalizing the phase and amplitude characteristics of the transducer response function [10]. This technique can substantially reduce both noise and signal degradation, provided that the transducer impulse response is accurately known; otherwise, it may introduce additional artifacts that make the signal difficult to interpret. The algorithm also depends strongly on accurate estimation of the signal and noise correlation functions (equivalently, their power spectra), which directly controls the SNR of the output. Where the transducer response function and noise power are not known a priori, researchers estimate the response function probabilistically using Bayesian or maximum a posteriori (MAP) approaches, which in turn increases the computational cost of signal recovery. In the PCA mechanism, the algorithm searches for principal components along mutually orthogonal directions; however, it often yields insignificant improvements because of its underlying assumption that PA energy accounts for more than 75% of the total energy of the detected signals, which is not always the case [5].
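A minimal frequency-domain sketch of Wiener deconvolution is shown below, assuming that the transducer impulse response h is known and that the noise-to-signal power ratio is a constant `nsr` (both hypothetical here). The estimate is \( \hat{X}(f) = H^{*}(f)Y(f)/(|H(f)|^{2} + \mathrm{nsr}) \); the conjugate \( H^{*} \) equalizes the transducer phase, which is why the restored peak moves back to the true source position.

```python
import numpy as np

def wiener_deconvolve(y, h, nsr):
    """Wiener deconvolution of y by impulse response h.
    nsr: assumed constant noise-to-signal power ratio (regularizes weak bands)."""
    n = len(y)
    H = np.fft.rfft(h, n)
    G = np.conj(H) / (np.abs(H) ** 2 + nsr)        # Wiener filter
    return np.fft.irfft(G * np.fft.rfft(y), n)

# Hypothetical transducer response: Gaussian-windowed tone with a 30-sample delay
k = np.arange(64)
h = np.exp(-((k - 30) / 6.0) ** 2) * np.cos(2 * np.pi * 0.1 * (k - 30))

n = 512
x = np.zeros(n)
x[200] = 1.0                                       # point absorber at sample 200
rng = np.random.default_rng(3)
# Circular convolution with h plus white measurement noise
y = np.fft.irfft(np.fft.rfft(x) * np.fft.rfft(h, n), n) + 0.01 * rng.standard_normal(n)

x_hat = wiener_deconvolve(y, h, nsr=1e-2)
print("raw peak:", np.argmax(y), "restored peak:", np.argmax(x_hat))
```

Raising `nsr` suppresses noise amplification in bands where \( |H| \) is small at the cost of a broader restored peak; if h is wrong, the phase equalization is wrong too, which is the failure mode described above.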

Recently, researchers have been exploring adaptive filtering mechanisms, which do not require prior knowledge of the signal and noise parameters and can yield significant noise reduction. The underlying assumption of such methods is that the wideband noise is uncorrelated between time points separated by a short delay, whereas the PA signal remains correlated, an assumption consistent with the physics of LED-PAI signal generation [11, 12]. Moreover, these techniques are attractive because of their low computational cost and reduced sensitivity to tuning parameters. One technique that can significantly increase the SNR is the adaptive noise canceller (ANC). However, ANC requires a reference signal that is strongly correlated with the noise, which is hard to obtain in a real-time environment. This challenge can be addressed by another form of ANC, the adaptive line enhancer (ALE), in which the reference signal is obtained by applying a de-correlation delay to the noisy signal [13]. The reference signal is thus a delayed version of the primary (input) signal instead of being derived separately. The delay de-correlates the noise, so that the adaptive filter (used as a linear prediction filter) cannot predict the noise while still predicting the signal of interest. The filter output is therefore an estimate of the signal of interest; it is subtracted from the primary input to form the error signal, which is then used to adapt the filter weights so as to minimize the error. The weights are updated with the least mean square (LMS) adaptation algorithm. The time-domain computation of the ALE can be summarized as follows.
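In the standard LMS form (the factor 2 in the weight update is an assumed convention; some texts absorb it into \( \mu \)), these relations can be written as:

\[ x(n) = pa(n) + noi(n), \qquad r(n) = x(n-d) \]
\[ y(n) = \sum_{k=0}^{L-1} w_{k}(n)\, r(n-k), \qquad e(n) = x(n) - y(n) \]
\[ w_{k}(n+1) = w_{k}(n) + 2\mu\, e(n)\, r(n-k), \qquad k = 0, 1, \ldots, L-1 \]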

where \( x\left( n \right) \) is the primary input signal corresponding to an individual element of the ultrasound (US) transducer array, which consists of a PA signal component [\( pa\left( n \right) \)] and a wideband noise component [\( noi\left( n \right) \)]. The reference input signal \( r\left( n \right) \) is the primary input signal delayed by d samples. The output \( y\left( n \right) \) of the adaptive filter represents the best estimate of the desired response, and \( e\left( n \right) \) is the error signal at each iteration. \( w_{k} \left( n \right) \) represents the adaptive filter weights, and L represents the adaptive filter length. The filter output is a linear combination of past values of the reference input. Three parameters determine the performance of the LMS-ALE algorithm for a given application [14]: the adaptive filter length (L), the de-correlation delay (d), and the LMS convergence parameter (\( \mu \)). The performance of the LMS-ALE is characterized by its adaptation rate, excess mean squared error (EMSE), and frequency resolution.

The convergence of the mean square error (MSE) towards its minimum value is a commonly used performance measure for adaptive systems. The MSE of the LMS-ALE converges geometrically with a time constant \( \tau_{mse} \) given by:
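For an LMS update of the form \( w_{k}(n+1) = w_{k}(n) + 2\mu e(n) r(n-k) \) (an assumed convention), the standard approximation for the slowest mode is:

\[ \tau_{mse} \approx \frac{1}{4\mu\lambda_{\min}} \]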

where \( \lambda_{\min} \) is the minimum eigenvalue of the input vector autocorrelation matrix. Because \( \tau_{mse} \) is inversely proportional to \( \mu \), a large \( \tau_{mse} \) (slow convergence) corresponds to a small \( \mu \). The EMSE \( \xi_{mse} \), which results from the noisy estimate of the MSE gradient used by the LMS algorithm, is approximately given by:
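For an LMS update with step \( 2\mu \) and a small \( \mu \) (assumed conditions), the usual approximation expresses the EMSE relative to the minimum MSE \( \xi_{\min} \) of the optimal (Wiener) predictor, a symbol introduced here:

\[ \xi_{mse} \approx \mu L \lambda_{av}\, \xi_{\min} \]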

where, \( \lambda_{av} \) is the average eigenvalue of the input vector autocorrelation matrix. EMSE can be calibrated by choosing the values of \( \mu \) and L. Smaller values of \( \mu \) and L reduce the EMSE while larger values increase the EMSE. The frequency resolution of the ALE is given by:
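Because an L-tap filter spans \( L/f_{s} \) seconds of the input, its resolution is approximately the reciprocal of that span:

\[ f_{res} \approx \frac{f_{s}}{L} \]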

where \( f_{s} \) is the sampling frequency. Clearly, \( f_{res} \) can be controlled by L. However, there is a design trade-off between the EMSE and the speed of convergence: larger values of \( \mu \) result in faster convergence at the cost of steady-state performance. Improper selection of \( \mu \) may therefore either slow convergence unnecessarily (\( \mu \) too small) or increase the steady-state EMSE (\( \mu \) too large). In practice, one can choose a larger \( \mu \) at the beginning for faster convergence and then switch to a smaller \( \mu \) for a better steady-state response. Similarly, there is an optimum filter length L for each case, because a larger L results in higher algorithm noise while a smaller L implies poorer filter characteristics. Because the noise component of the delayed signal cannot be predicted while the desired signal is phase-aligned with the primary input, the signal components cancel at the summing point, producing a minimum error signal that is mainly composed of the noise component of the input signal.
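The recursion can be sketched in NumPy as follows. This is an illustrative implementation under assumed settings (L = 32, d = 1, \( \mu = 10^{-3} \), and a \( 2\mu \) update convention), not code from [13, 14]. Because the ALE enhances components that remain predictable after the delay d, the demonstration uses a narrowband synthetic tone buried in white noise rather than a measured PA trace.

```python
import numpy as np

def lms_ale(x, L=32, d=1, mu=1e-3):
    """LMS adaptive line enhancer (illustrative sketch).

    x  : primary input (signal + wideband noise) from one transducer element
    L  : adaptive filter length; d : de-correlation delay in samples
    mu : LMS step size (the update uses 2*mu, an assumed convention)
    Returns y (enhanced output) and e (error signal).
    """
    x = np.asarray(x, dtype=float)
    w = np.zeros(L)
    y = np.zeros_like(x)
    e = np.zeros_like(x)
    for n in range(len(x)):
        idx = n - d - np.arange(L)                     # r(n-k) = x(n-d-k), k = 0..L-1
        r = np.where(idx >= 0, x[np.maximum(idx, 0)], 0.0)  # zeros before the record starts
        y[n] = w @ r                                   # prediction of the correlated part
        e[n] = x[n] - y[n]                             # error: mostly unpredictable noise
        w += 2 * mu * e[n] * r                         # LMS weight update
    return y, e

rng = np.random.default_rng(4)
n = np.arange(5000)
s = np.sin(2 * np.pi * 0.05 * n)                       # hypothetical predictable component
x = s + rng.standard_normal(n.size)                    # primary input, unit-variance white noise
y, e = lms_ale(x, L=32, d=1, mu=1e-3)
print("MSE before:", np.mean((x[-2000:] - s[-2000:]) ** 2),
      "after:", np.mean((y[-2000:] - s[-2000:]) ** 2))
```

The metrics are taken over the last 2000 samples so that the initial convergence transient, governed by \( \tau_{mse} \), does not dominate the comparison.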

Moreover, researchers have proposed signal-domain analysis to retrieve the acoustic properties of the object to be reconstructed from characteristic features of the detected PA signals prior to image reconstruction. In this method, the signals are transformed into the Hilbert domain to facilitate analysis while retaining the critical signal features that originate from absorption at the boundary. The spatial and acoustic propagation properties are strongly correlated with alterations in the PA signal, and the size of an object governs the time of flight of the PA signal from the object to the detectors. The relationship between object shape and signal arrival delay exists partly because a lower speed of sound (SoS) within the object delays the arrival of the signal, and vice versa. A simple low-dimensional model proposed by Lutzweiler et al. can predict the corresponding time of arrival given the known phantom shape or SoS (Fig. 2) [15]. Based on the same assumption, the inverse problem of obtaining the unknown acoustic parameters can be solved from the extracted signal features. Lutzweiler et al. applied this signal-domain approach to the segmentation of PA images, addressing the heterogeneous optical and acoustic properties encountered in tomographic reconstruction. Later in this chapter, we discuss the use of image analytics to improve visual quality.
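The Hilbert-domain step can be illustrated with a toy single-detector example. The sketch below is not the algorithm of [15], which optimizes a functional over SoS parameters using many detectors; it only shows how a unipolar envelope H is obtained from a bipolar signal S and how the envelope peak yields a time of flight (TOF) that, for an assumed known distance, gives an SoS estimate. The sampling rate, distance, and pulse width are hypothetical, and the analytic signal is built with NumPy's FFT following the same construction as `scipy.signal.hilbert`.

```python
import numpy as np

def analytic_signal(s):
    """Analytic signal via FFT (same construction as scipy.signal.hilbert)."""
    n = len(s)
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(s) * weights)

fs = 40e6                        # sampling rate in Hz (hypothetical)
c_true = 1500.0                  # speed of sound in m/s (hypothetical)
dist = 0.02                      # absorber-to-detector distance in m (assumed known)
t = np.arange(2048) / fs
t0 = dist / c_true               # true time of flight (about 13.3 us)
u = (t - t0) / 0.1e-6
S = -u * np.exp(-u ** 2)         # bipolar signal from an absorbing boundary
H = np.abs(analytic_signal(S))   # unipolar envelope
tof = t[np.argmax(H)]            # envelope peak marks the arrival time
c_est = dist / tof
print(f"TOF = {tof * 1e6:.2f} us, estimated SoS = {c_est:.1f} m/s")
```

Picking the peak of the bipolar signal S directly would bias the arrival time by a fraction of the pulse width, because S crosses zero at the true arrival; the envelope removes that ambiguity.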

Adapted with permission from [15]

Signal domain analysis of PA (optoacoustic) signals. a At the absorbing boundary (black cross) of the numerical phantom, strong signals are detected at detector locations [(1) and (2)] with a tangential integration arc (dashed black line). Opposite detectors provide partially redundant information and, consequently, information on the SoS. Accordingly, boundary signals (white cross) with direct (1′) and indirect (3) propagation provide information on the location of a reflecting boundary (white dashed line). b The corresponding sinogram, with signal features corresponding to those in the image domain in (a). c The workflow of the proposed algorithm: instead of performing reconstructions (red) with a heuristically assumed SoS map, signal domain analysis (green) is performed prior to reconstruction. Unipolar signals H are generated from the measured bipolar signals S by applying a Hilbert transformation with respect to the time variable. The optimized SoS parameters are obtained by retrieving characteristic features in the signals, via maximizing the low-dimensional functional f, which depends on the acoustic parameters m through the TOF and on the signals H. Subsequently, only a single reconstruction with an optimized SoS map has to be performed. Conversely, for image domain methods (pale blue), the computationally expensive reconstruction procedure has to be performed multiple times as part of the optimization process.

4 Image Processing Applications

After image reconstruction, PA images need to be further processed in both the spatial and transform domains for better visual perception of subtle features within the object and, at a more advanced level, to classify or cluster different regions based on the morphological and functional attributes of the image. The approaches in this domain range from pre-processing, object recognition, and segmentation based on morphological attributes and feature-based methodologies to the clustering of various regions within the object and image super-resolution techniques; they are detailed in the following subsections.

4.1 Pre-processing and Noise Removal

Pre-processing in the image domain mainly targets intensity enhancement of LED-PAI images and noise filtering through spatial- and frequency-domain techniques. Although the choice of pre-processing steps is subjective, it is essential to readjust the dynamic range of intensity and contrast for better interpretation and perception of PA images, which further helps in identifying significant regions or structures within the sample of interest. Reconstructed grayscale PA images generally have low contrast, with a histogram concentrated within a narrow range of gray levels. Therefore, the gray levels need to be substantially rescaled to increase the dynamic range of intensities and, eventually, enhance the contrast at both global and local scales. Although intensity transformations (gamma, logarithmic, and exponential functions) play a crucial role in the intensity rescaling process, the transformation that provides the best enhancement is modality dependent.

Let \( f\left( {x,y} \right) \) denote a reconstructed PA image, while \( f\left( {x,y, t} \right) \) corresponds to successive time frames of PA images and \( g\left( {x,y} \right) \) is the intensity-enhanced PA image. The gamma transformation, \( s = cr^{\gamma } \), maps an input intensity value r to an output intensity s with the power-law factor \( \gamma \). This law works well in a general sense because many imaging and display devices have a power-law intensity response. However, the exact selection of \( \gamma \) is instrument specific and also depends on the image reconstruction methodology. Another frequently used technique is the logarithmic transformation, \( s = c{ \log }\left( {1 + r} \right) \), which expands the intensity range of dark pixels while compressing the intensity range of bright pixels of the input PA image. The opposite is true for the exponential transformation. These two intensity transformations are experiment specific and are used to highlight significant areas or regions on a case-by-case basis. More generally, intensity transformations are limited in use and can bring out image details only at a crude level. Histogram equalization, on the other hand, works at both global and local scales, stretching out the dynamic range of the gray levels. The transformation function for histogram equalization at a global scale can be written as,
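With r and s taking values in [0, L], where L is the maximum gray level as defined below, the standard cumulative-distribution mapping is:

\[ s = T(r) = L \int_{0}^{r} p_{r}(\omega)\, d\omega \]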

where, \( p_{r} \left( r \right) \) denotes the probability density function of input intensities, L is the maximum gray level value and \( \omega \) is a dummy integration variable. In the discrete domain, the above expression is reduced to,
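Replacing the integral by a cumulative sum over the normalized histogram, with the pixel counts and \( MN \) as defined below, gives (in practice the result is rounded to the nearest integer gray level):

\[ s_{k} = T(r_{k}) = L \sum_{j=0}^{k} p_{r}(r_{j}) = \frac{L}{MN} \sum_{j=0}^{k} n_{j}, \qquad k = 0, 1, \ldots, L \]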

where \( n_{k} \) is the number of pixels having gray level \( r_{k} \) and \( MN \) is the total number of pixels in the input PA image. Although global histogram processing increases the contrast level significantly, several subtle features in the imaging medium often cannot be adequately distinguished from their neighborhood background because their gray levels are close. To mitigate this effect, researchers apply local histogram processing, which performs histogram equalization on relatively small regions (sub-images). As at the global scale, local histogram equalization stretches the intensity levels within each sub-image, thereby enhancing subtle structural features at those locations.
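A minimal NumPy sketch of the gamma transformation and of global histogram equalization is given below. It implements the discrete mapping \( s_{k} = (L/MN)\sum_{j \le k} n_{j} \) with L as the maximum gray level (255 for 8-bit data, an assumption); the synthetic low-contrast image is hypothetical. A local variant would apply the same equalization function to each sub-image.

```python
import numpy as np

def gamma_transform(img, gamma, c=1.0):
    """Power-law mapping s = c * r**gamma, with r normalized to [0, 1]."""
    r = img.astype(float) / img.max()
    return c * r ** gamma

def histogram_equalize(img, L=255):
    """Global histogram equalization of an integer image with levels 0..L:
    s_k = round(L * sum_{j<=k} n_j / (M*N))."""
    n_k = np.bincount(img.ravel(), minlength=L + 1)   # pixel count per gray level
    cdf = np.cumsum(n_k) / img.size                   # cumulative fraction of pixels
    lut = np.round(L * cdf).astype(img.dtype)         # look-up table r_k -> s_k
    return lut[img]

rng = np.random.default_rng(2)
img = rng.integers(100, 121, size=(128, 128)).astype(np.uint8)  # narrow histogram
eq = histogram_equalize(img)
print("input range:", img.min(), img.max(), "equalized range:", eq.min(), eq.max())
```

The look-up table makes the cost independent of the mapping's complexity: the histogram is computed once, and each pixel is then remapped by a single indexing operation.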

For noise removal, the PA image \( f\left( {x,y} \right) \) undergoes filtering operations chosen according to the PA imaging instrumentation and the type of noise that degrades image quality. Filtering can be performed in either the spatial or the frequency domain, and the choice depends on whether the noise is additive or convolutive. In general, additive noise can be handled by spatial-domain filtering, whereas convolutive noise is better handled in the frequency (transform) domain. Spatial filtering is performed by convolving the image with a filter kernel \( h\left( {x,y} \right) \), the generalized form of which is presented below,
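For a kernel of odd size \( m \times n \), writing \( a = (m-1)/2 \) and \( b = (n-1)/2 \) (notation introduced here), the standard discrete form is:

\[ g(x,y) = h(x,y) \otimes f(x,y) = \sum_{i=-a}^{a} \sum_{j=-b}^{b} h(i,j)\, f(x-i,\, y-j) \]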

where \( \otimes \) denotes convolution, i.e., linear spatial filtering of an image of size \( M \times N \) with a kernel of size \( m \times n \), and \( \left( {x,y} \right) \) are varied so that the origin of the kernel \( h\left( {x,y} \right) \) visits every pixel in \( f\left( {x,y} \right). \) For correlation-based filtering, the negative signs in the above equation are replaced by positive signs. Depending on the type of noise that corrupts the image content or produces artifacts in the PA images, the filter can be a low-pass filter (Gaussian, simple averaging, weighted averaging, or the nonlinear median filter) or a high-pass filter (first-order derivative, Sobel, Laplacian, or Laplacian of Gaussian). Details of these filtering kernels and related operations are described in [16, 17]. In general, Gaussian smoothing reduces noise that follows a Gaussian distribution, simple averaging suppresses random noise globally at the cost of blurring, and median filtering reduces salt-and-pepper noise in reconstructed PA images. While low-pass filters reduce the effect of high-frequency noise, high-pass filtering is performed to preserve edge and boundary information as well as subtle high-frequency structures in PA images. A related class of operations that restores high-frequency edge information lost to smoothing comprises unsharp masking and high-boost filtering, the expressions for which are presented below,
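In the usual formulation, a mask is obtained by subtracting a blurred copy of the image from the original, and a weighted version of the mask is added back:

\[ g_{mask}(x,y) = f(x,y) - \bar{f}(x,y), \qquad g(x,y) = f(x,y) + k\, g_{mask}(x,y) \]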

where \( \bar{f}\left( {x,y} \right) \) is a blurred version of the input image \( f\left( {x,y} \right) \); for unsharp masking, \( k = 1 \), whereas \( k > 1 \) corresponds to high-boost filtering. However, the above filters act uniformly across the image, irrespective of changes in local statistics; adaptive filters such as the adaptive local noise-reduction filter and the adaptive median filter address this limitation [16]. Apart from these generalized filtering approaches, a special class of techniques controls intensity values using fuzzy statistics, which allows them to handle the inexactness of gray levels with improved performance [18]. The fuzzy histogram is computed by associating fuzziness with the frequency of occurrence \( h\left( i \right) \) of each gray level i as follows,
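One way to write this, assuming that each pixel's fuzzy intensity \( \tilde{I}(x,y) \) contributes its membership value to every gray level i (typically through a triangular membership function centred on the crisp intensity), is:

\[ h(i) = \sum_{x} \sum_{y} \mu_{\tilde{I}(x,y)}(i) \quad \text{for every gray level } i \]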

where, \( \mu_{{\tilde{I}\left( {x,y} \right)}} \) is the fuzzy membership function. This is followed by the partitioning of the histogram based on the local maxima and dynamic histogram equalization of these sub-histograms. The modified intensity level corresponding to j-th intensity level on the original image is given by,

where \( h\left( k \right) \) denotes the value of the fuzzy histogram at the k-th intensity level and \( M_{i} \) is the total population count within the i-th partition of the fuzzy histogram. In the last stage, the final image is obtained by normalizing the image brightness to compensate for the difference in mean brightness between the input and output images. This technique not only provides better contrast enhancement but also preserves the mean image brightness, at a reduced computational cost.
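The spatial filters discussed above can be sketched with NumPy alone. In this sketch the blurred image \( \bar{f} \) used for unsharp masking and high-boost filtering is taken to be a Gaussian-smoothed copy, which is an assumption (any low-pass filter can serve), and borders are handled by edge replication. `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gaussian_blur(f, sigma=1.0):
    """Separable Gaussian low-pass filter with edge-replicated borders."""
    r = max(1, int(3 * sigma))
    k = np.exp(-np.arange(-r, r + 1) ** 2 / (2 * sigma ** 2))
    k /= k.sum()                                     # unit DC gain

    def conv(v):
        return np.convolve(v, k, mode="valid")

    p = np.pad(f.astype(float), r, mode="edge")
    return np.apply_along_axis(conv, 0, np.apply_along_axis(conv, 1, p))

def median3(f):
    """3x3 median filter (nonlinear), effective against salt-and-pepper noise."""
    p = np.pad(f, 1, mode="edge")
    return np.median(sliding_window_view(p, (3, 3)), axis=(-2, -1))

def high_boost(f, k=1.0, sigma=1.0):
    """g = f + k * (f - f_bar); k = 1 gives unsharp masking, k > 1 high-boost."""
    f = f.astype(float)
    return f + k * (f - gaussian_blur(f, sigma))

img = np.zeros((32, 32))
img[:, 16:] = 1.0                                    # vertical step edge
img[8, 4] = 1.0                                      # isolated impulse ("salt")
clean = median3(img)                                 # removes the impulse, keeps the edge
sharp = high_boost(clean, k=1.5)                     # overshoot/undershoot at the edge
```

Running the median filter before sharpening matters: high-boost filtering amplifies isolated impulses just as it amplifies edges.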

Frequency-domain techniques are also essential for denoising PA images, as they can efficiently reduce the effect of convolutive noise. Periodic noise, or noise confined to specific frequency bands, can also be reduced through frequency-domain filtering. Moreover, such transform-domain filtering can reduce the effect of degradation sources that obscure image details after reconstruction. The general block diagram of the frequency-domain filtering technique is depicted in Fig. 3.

The input PA images are transformed into the frequency domain, a filtering kernel is applied, and the images are finally transformed back to the spatial domain. This frequency-domain approach reveals the noise frequencies and their strength, which enables frequency-selective filtering of the PA images. Several filtering kernels can significantly reduce noise, such as Butterworth and Gaussian low- and high-pass filters, band-pass and notch filters, and homomorphic filters. When the image \( f\left( {x,y} \right) \) contains multiplicative components (for example, an illumination-like term multiplying the structure of interest), homomorphic filtering takes the logarithm of the image so that these components become additive and can then be separated in the frequency domain [16]. A commonly used form of homomorphic filtering based on a Gaussian high-pass function has the following transfer function,
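In the standard Gaussian form, with the symbols defined below, the transfer function reads (typically \( \gamma_{L} < 1 \) and \( \gamma_{H} > 1 \)):

\[ H(u,v) = \left( \gamma_{H} - \gamma_{L} \right) \left[ 1 - e^{-c\, D^{2}(u,v)/D_{0}^{2}} \right] + \gamma_{L} \]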

where \( D_{0} \) is the cut-off frequency, \( D\left( {u,v} \right) \) is the distance between the coordinates \( \left( {u,v} \right) \) and the center frequency at \( \left( {0,0} \right) \), c controls the steepness of the filter slope, and \( \gamma_{H} \) and \( \gamma_{L} \) are the high- and low-frequency gains. This transfer function simultaneously compresses the dynamic range of intensities and enhances the contrast, thereby preserving high-frequency edge information while reducing noisy components. Another form of this filter, given in [17], has the transfer function,

where, the high frequency and low-frequency gains are manipulated by following rules,

Although pre-processing of PA images in the spatial and frequency domains significantly improves image quality and enhances contrast, the selection of a proper filter function is largely subjective, and the parametrization of the filter is specific to the PAI system. Therefore, the filter function should be chosen carefully and its parameters optimized, either heuristically or iteratively, to further reduce artifacts and noise in reconstructed PA images.
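A compact NumPy sketch of homomorphic filtering with the Gaussian high-emphasis transfer function \( H(u,v) = (\gamma_{H}-\gamma_{L})[1 - e^{-cD^{2}(u,v)/D_{0}^{2}}] + \gamma_{L} \) is given below. The default parameter values are hypothetical and would need tuning for a given PAI system; `log1p` and `expm1` are used so that zero-valued pixels are handled safely.

```python
import numpy as np

def homomorphic_filter(f, d0=30.0, c=1.0, gamma_l=0.5, gamma_h=2.0):
    """Homomorphic filtering with a Gaussian high-emphasis transfer function.
    Parameter defaults are hypothetical and must be tuned per system."""
    z = np.log1p(f.astype(float))                    # log: products become sums
    Z = np.fft.fftshift(np.fft.fft2(z))              # centred spectrum
    M, N = f.shape
    u = np.arange(M) - M // 2                        # frequency offsets from the centre
    v = np.arange(N) - N // 2
    D2 = u[:, None] ** 2 + v[None, :] ** 2           # squared distance D^2(u, v)
    H = (gamma_h - gamma_l) * (1.0 - np.exp(-c * D2 / d0 ** 2)) + gamma_l
    s = np.real(np.fft.ifft2(np.fft.ifftshift(H * Z)))
    return np.expm1(s)                               # undo the logarithm

img = np.random.default_rng(5).random((64, 64)) * 100.0   # hypothetical image
out = homomorphic_filter(img, d0=10.0)
```

Setting \( \gamma_{L} = \gamma_{H} = 1 \) makes H identically 1 and returns the input unchanged, which is a convenient sanity check when tuning the other parameters.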

4.2 Segmentation of Objects in PA Images

Post-reconstruction image analysis is an integral part of PAI, as it helps in understanding different sub-regions through their morphological features. In functional imaging, it also helps to reduce artifacts and noise that would otherwise corrupt the estimated functional parameters. Segmentation is challenging because of the relatively low intrinsic contrast of background structures and the added complexity of limited-view problems in LED-PAI; various researchers have therefore addressed the segmentation of objects in PA images with different algorithms, which are detailed in the following sections.

4.2.1 Morphological Processing

Classical image processing approaches for edge detection and segmentation of objects of different shapes and sizes can be used to segment any object of interest in PA images. In this context, the Sobel operator (which approximates the gradient of the image intensity function), the Canny edge detector (which includes a feature synthesis step from fine to coarse scales), and combinations of morphological opening and closing operators (with a structuring element of a specific size) can help identify object edges and boundaries. However, the parameters of these operators are specific to the LED-PAI system, because the contrast between object and background varies significantly and the intra-object intensity distribution is modality dependent. Alternatively, anisotropic diffusion can estimate object boundaries in PA images through a scale-space adaptive smoothing function [19]. The underlying scale space is obtained by successively smoothing the original PA image \( I_{0} \left( {x,y} \right) \) with a Gaussian kernel \( G\left( {x,y;t} \right) \) of variance t (the scale-space parameter), producing a family of progressively more blurred images [20]. This family can equivalently be modelled as the solution of the linear diffusion (heat) equation,

$$ \frac{\partial I}{\partial t} = \Delta I = I_{xx} + I_{yy} $$
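with the initial condition \( I\left( {x,y,0} \right) = I_{0} \left( {x,y} \right) \), the original image. Anisotropic diffusion (AD) replaces the constant diffusivity with a space- and scale-dependent conduction coefficient \( c\left( {x,y,t} \right) \), so that the AD equation can be written as,

$$ \frac{\partial I}{\partial t} = {\text{div}}\left( {c\left( {x,y,t} \right)\nabla I} \right) = c\left( {x,y,t} \right)\Delta I + \nabla c \cdot \nabla I $$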
For a constant \( c\left( {x,y,t} \right) = c \), the above diffusion equation becomes isotropic,

$$ \frac{\partial I}{\partial t} = c\,\Delta I $$
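Perona and Malik showed that the gradient of the brightness function gives the simplest estimate of edge positions, with excellent results [19]. The conduction coefficient is therefore chosen as a function of the gradient magnitude,

$$ c\left( {x,y,t} \right) = g\left( {\left\| {\nabla I\left( {x,y,t} \right)} \right\|} \right) $$

where g is a non-negative, monotonically decreasing function with g(0) = 1, for example \( g\left( {\left\| {\nabla I} \right\|} \right) = e^{{ - \left( {\left\| {\nabla I} \right\|/K} \right)^{2} }} \) or \( g\left( {\left\| {\nabla I} \right\|} \right) = 1/\left( {1 + \left( {\left\| {\nabla I} \right\|/K} \right)^{2} } \right) \), with K an edge-magnitude constant.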
Anisotropic diffusion smooths intra-region details while leaving the edge information largely intact. A 2D network structure of 8 neighboring nodes is considered for diffusion conduction. Because of the low intrinsic contrast of background structures in the PA modality, anisotropic diffusion filtering is often used to produce a rough estimate of the object boundary, which can then serve as the seed contour for the active contour technique (described later in this chapter) that localizes and segments the boundary of the object under observation.
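The discrete diffusion above can be sketched as follows, assuming the exponential conduction function \( g = e^{{ - \left( {\left\| {\nabla I} \right\|/K} \right)^{2} }} \) (here `kappa` plays the role of K) and an explicit time step `dt`. Each of the 8 neighbors contributes a directional difference weighted by its conduction coefficient; weighting the diagonal differences by 1/2 is a common convention and an assumption here, as are the default parameter values.

```python
import numpy as np

def anisotropic_diffusion(img, n_iter=20, kappa=0.1, dt=0.1):
    """Perona-Malik diffusion on an 8-neighbour stencil (explicit scheme).
    kappa (the constant K), dt and n_iter are illustrative assumptions."""
    I = np.asarray(img, dtype=float).copy()
    # (row offset, col offset, weight); diagonal differences are weighted by
    # 1/d^2 = 1/2, an assumed but common convention for 8-neighbour schemes.
    offsets = [(-1, 0, 1.0), (1, 0, 1.0), (0, -1, 1.0), (0, 1, 1.0),
               (-1, -1, 0.5), (-1, 1, 0.5), (1, -1, 0.5), (1, 1, 0.5)]
    rows, cols = I.shape
    for _ in range(n_iter):
        padded = np.pad(I, 1, mode="edge")        # zero-flux image boundary
        update = np.zeros_like(I)
        for dr, dc, w in offsets:
            neighbour = padded[1 + dr:1 + dr + rows, 1 + dc:1 + dc + cols]
            grad = neighbour - I                   # directional difference
            cond = np.exp(-(grad / kappa) ** 2)    # g(|grad I|): ~0 across strong edges
            update += w * cond * grad
        I += dt * update                           # dt * 6 < 1 keeps the scheme stable
    return I
```

Flat regions (small differences, conduction close to 1) are averaged, while differences much larger than `kappa` barely diffuse, so a step edge keeps its contrast as the noise around it is reduced.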

In another work, researchers showed that low-level structures in images can be segmented with a multiscale approach that integrates the detection of edges and regions without restrictive models of geometry or homogeneity [20]. Here, a vector field is created from the neighborhood of a pixel, whose size and spatial scale are determined heuristically by a homogeneity parameter, and the scale is then integrated into a nonlinear transform that makes structure explicit in the transformed domain. The computation of scale-space parameters is adaptive on a pixel-by-pixel basis. While this methodology can identify structures at low resolution and intensity levels without any smoothing, even at coarse scales, another technique segments boundaries by using the color and texture information of images to track changes in direction, creating a vector flow [21]. Such an edge-flow method detects a boundary where two opposite directions of flow meet at a given location in the stable state. However, it depends strongly on color information and requires a user-defined scale as a control parameter. Since reconstructed PA images lack color information, the edge-flow map has to be extracted solely from edge and texture information.

Recently, Mandal et al. developed a new segmentation method that integrates multiscale edge flow, scale-space diffusion, and morphological image processing [22]. The method draws inspiration from edge-flow methods but circumvents the lack of color information by using a modified subspace sampling method for edge detection, and it iteratively reinforces edge strengths across scales. By integrating anisotropic diffusion and scale-space-dependent morphological processing, followed by curve fitting to link the detected boundary points, it reduces the number of parameters that must be defined to segment the boundary between the imaged biological tissue and the acoustic coupling medium. The edge-flow algorithm defines a vector field such that the flow is always directed towards the boundary from both its sides, and the relative directional differences are used to compute the gradient vector. The gradient vector strengthens the edge locations and tracks the direction of the flow along the x and y directions. The search function looks for sharp changes from positive to negative flow direction, and whenever it encounters such a change, the pixel is labelled as an edge point. The primary factor determining edge strength is the magnitude of the change in direction of the flow vector, which is reflected as edge intensity in the final edge map. The vector field is generated explicitly from fine to coarse scales, whereas the multiscale vector conduction proceeds implicitly from coarse to fine scales. The algorithm thus localizes edges at the finer scales, preserving only the edges that persist across several scales and suppressing features that disappear rapidly as the scale increases.
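The core idea of detecting an edge where the flow field reverses direction can be illustrated with a deliberately simplified stand-in. This is not the algorithm of Mandal et al. [22]: instead of their subspace sampling and multiscale conduction, the hypothetical function below uses the gradient of the smoothed edge-magnitude map as the flow field (it points towards the nearest edge from both sides) and labels a pixel as an edge point where the flow changes from positive to negative along x or y. The edge strength is taken as the magnitude of that change, and the single scale `sigma` is an assumption.

```python
import numpy as np
from scipy import ndimage

def edge_flow_sign_change(img, sigma=1.5):
    """Simplified, single-scale illustration (not the method of [22]):
    mark pixels where a gradient-derived flow field flips from + to -."""
    smoothed = ndimage.gaussian_filter(np.asarray(img, dtype=float), sigma)
    gy, gx = np.gradient(smoothed)
    magnitude = np.hypot(gx, gy)
    # Assumed flow field: points uphill on the edge-magnitude map,
    # i.e. towards the nearest edge from both of its sides.
    fy, fx = np.gradient(magnitude)
    edges = np.zeros(smoothed.shape, dtype=bool)
    strength = np.zeros(smoothed.shape)
    # Along x: flow to the right at column j and to the left at column j + 1.
    mask_x = (fx[:, :-1] > 0) & (fx[:, 1:] < 0)
    edges[:, :-1] |= mask_x
    strength[:, :-1] = np.where(mask_x, fx[:, :-1] - fx[:, 1:], 0.0)
    # Along y: flow downwards at row i and upwards at row i + 1.
    mask_y = (fy[:-1, :] > 0) & (fy[1:, :] < 0)
    edges[:-1, :] |= mask_y
    strength[:-1, :] = np.maximum(strength[:-1, :],
                                  np.where(mask_y, fy[:-1, :] - fy[1:, :], 0.0))
    return edges, strength
```

For a vertical step edge, the flow points right on the dark side and left on the bright side, so only the column where the two flows meet is labelled.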

In LED-PAI, the reconstructed images often contain a noisy background due to low SNR, limited view, and shortcomings of inversion methods. Additionally, signals originate from impurities or inhomogeneities within the coupling medium. Such noise is often strong enough to be detected as true edges by edge detection algorithms. Anisotropic diffusion is therefore useful to further clean up the image, as it smooths the image without suppressing the edges. Thereafter, non-linear morphological processing is applied to the binary (diffused) edge mask. Mandal et al. used a sub-pixel sampling approach (0.5 px), which renders the operation redundant beyond the second scale level [22]. Furthermore, PA images commonly contain small edge clusters and open contours, so edge linkers tend to perform poorly in producing an ideal segmentation. The proposed method therefore first computes the centroids of the edge clusters and then iteratively fits a geometric pattern (a deformable ellipse) through a set of parametric operations. The method is deterministic and requires minimal human intervention, so the algorithm is expected to help automate LED-based PA image segmentation, an important step towards quantitative imaging applications.
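The final geometric-fitting step can be illustrated with a generic algebraic least-squares ellipse fit to the edge-cluster centroids. This is a swapped-in, non-iterative technique used only for illustration; the iterative, parametric deformable-ellipse procedure of [22] is not reproduced here. The points are centered before fitting so that the assumed conic form \( Ax^{2} + Bxy + Cy^{2} + Dx + Ey = 1 \) does not pass through the origin.

```python
import numpy as np

def fit_ellipse(points):
    """Generic algebraic least-squares ellipse fit (illustrative stand-in).
    Returns (center, semi_axes) with the larger semi-axis first."""
    pts = np.asarray(points, dtype=float)
    mean = pts.mean(axis=0)
    x, y = (pts - mean).T              # centering keeps the origin inside the ellipse
    design = np.column_stack([x ** 2, x * y, y ** 2, x, y])
    (A, B, C, D, E), *_ = np.linalg.lstsq(design, np.ones_like(x), rcond=None)
    if B ** 2 - 4 * A * C >= 0:
        raise ValueError("fitted conic is not an ellipse")
    Q = np.array([[A, B / 2], [B / 2, C]])       # quadratic part of the conic
    x0 = np.linalg.solve(2 * Q, [-D, -E])        # gradient of the conic vanishes here
    s = 1.0 - 0.5 * (D * x0[0] + E * x0[1])      # about x0 the conic reads u^T Q u = s
    semi_axes = np.sqrt(s / np.linalg.eigvalsh(Q))   # eigvalsh is ascending
    return x0 + mean, semi_axes
```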

4.2.2 Clustering Through Statistical Learning

Pattern recognition and statistical learning procedures play an essential role in segmentation, by clustering the different tissue structures in LED-PAI according to their intensity profiles, which are directly associated with the wavelength-specific absorption of optical radiation and the resulting emission of acoustic waves. Depending on the constituents at the vascular and cellular levels, various structures and regions can be segmented from the structural and functional attributes of PA images using machine learning-based approaches. Guzmán-Cabrera et al. demonstrated an entropy-based segmentation technique for identifying and localizing the tumor area based on different textural features [23]. The local entropy within a window \( M_{k} \times N_{k} \) can be computed as,

$$ E_{k} = - \sum\limits_{i} {P_{i} \log_{2} P_{i} } ,\quad P_{i} = \frac{{n_{i} }}{{M_{k} \times N_{k} }} $$
where \( \Omega_{k} \) is the local region, \( P_{i} \) is the probability of grayscale \( i \) within it, and \( n_{i} \) is the number of pixels with grayscale \( i \). The contrast image is then converted into a texture-based image, in which the bottom texture level represents a background mask. This mask is used as the contrast mask to create the top-level textures, yielding a segmentation of different classes of objects and a region-based quantification of tumor areas.
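The local entropy defined above can be computed directly, as in the following sketch. It assumes an integer-valued (quantized) grayscale image, a square k × k window (so \( M_{k} = N_{k} = k \)), reflective padding at the borders, and base-2 logarithms; these choices are assumptions, and the loop-based implementation favors clarity over speed.

```python
import numpy as np

def local_entropy(img, k=3):
    """Local Shannon entropy (bits) over k x k windows of a quantized image.
    Window size, padding mode and log base are assumptions."""
    img = np.asarray(img)
    r = k // 2
    padded = np.pad(img, r, mode="reflect")
    out = np.zeros(img.shape, dtype=float)
    for row in range(img.shape[0]):
        for col in range(img.shape[1]):
            window = padded[row:row + k, col:col + k]       # Omega_k around (row, col)
            _, counts = np.unique(window, return_counts=True)   # n_i per grayscale
            p = counts / window.size                        # P_i = n_i / (M_k N_k)
            out[row, col] = -np.sum(p * np.log2(p))
    return out
```

A uniform window gives zero entropy, while a 3 × 3 window of a two-level checkerboard (five pixels of one level, four of the other) gives just under 1 bit.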

In another study, Raumonen and Tarvainen developed a vessel segmentation algorithm within a probabilistic framework, in which additional voxels are classified as vessel as the classification parameters are monotonically decreased [24]. The procedure sweeps uniformly over the parameter space, producing an image in which the intensity is replaced by a confidence (reliability) value indicating how likely the voxel is to belong to a vessel. The framework starts by smoothing the PA images, followed by clustering, vessel segmentation of the clusters, and finally filling gaps in the segmented image. A small ball-supported kernel is convolved with the reconstructed PA images to smooth out noise, followed by threshold filtering. Clustering uses a region growing procedure in which the vessel structures are labelled as connected components. A large starting intensity and a large neighbor intensity lead to voxels being classified as vessel with high reliability; decreasing these values increases the number of voxels classified as vessel, but with lower reliability. After clustering, each vessel network is segmented into smaller segments without bifurcations. Finally, gaps in the segmented vessel data are filled: potential breakpoints are identified and bridged based on a threshold on the gap length and a threshold on the angle between the tip directions.
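The two-threshold region growing and the parameter sweep that turns intensities into reliability values can be sketched as follows. This is a simplified, hypothetical illustration rather than the published method of [24]: region growing is implemented as hysteresis thresholding (connected components above the neighbor threshold that contain at least one voxel above the starting threshold), the smoothing, bifurcation-splitting and gap-filling steps are omitted, and reliability is taken as the fraction of swept threshold pairs that label a voxel as vessel. It works on 2D or 3D arrays.

```python
import numpy as np
from scipy import ndimage

def grow_vessels(img, t_start, t_neigh):
    """Hypothetical region growing: keep connected regions with intensity
    >= t_neigh that contain at least one seed voxel with intensity >= t_start."""
    candidates = img >= t_neigh
    labels, _ = ndimage.label(candidates)                 # connected components
    seed_labels = np.unique(labels[(img >= t_start) & candidates])
    seed_labels = seed_labels[seed_labels > 0]
    return np.isin(labels, seed_labels)

def reliability_map(img, thresholds):
    """Assumed reliability: fraction of (t_start >= t_neigh) threshold pairs
    for which a voxel is classified as vessel."""
    votes = np.zeros(img.shape, dtype=float)
    n_pairs = 0
    for t_start in thresholds:
        for t_neigh in thresholds:
            if t_neigh > t_start:
                continue
            votes += grow_vessels(img, t_start, t_neigh)
            n_pairs += 1
    return votes / n_pairs
```

Bright voxels connected to a strong seed survive every threshold pair and receive reliability 1, whereas dim, isolated voxels are only accepted for the most permissive pairs.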

Furthermore, statistical learning procedures have been shown to perform well in this context [25], opening a new direction in LED-PAI research towards automatic segmentation and characterization of pathological structures. Different learning techniques, including supervised, unsupervised, and deep neural network approaches, can be used for segmentation and classification of breast cancer, substantially improving segmentation accuracy at the cost of increased computation. In a nutshell, Bayesian decision theory quantifies the trade-offs between classification decisions using probability theory. Considering a two-class problem with n features and feature vector \( {\text{X}} = \left[ {{\text{X}}_{1} ,{\text{X}}_{2} , \ldots ,{\text{X}}_{\text{n}} } \right]^{\text{T}} \), Bayes' theorem gives the relationship,

$$ {\text{p}}\left( {\upomega_{\text{i}} /{\text{x}}} \right) = \frac{{{\text{p}}\left( {{\text{x}}/\upomega_{\text{i}} } \right){\text{P}}\left( {\upomega_{\text{i}} } \right)}}{{{\text{P}}\left( {\text{x}} \right)}},\quad {\text{P}}\left( {\text{x}} \right) = \sum\limits_{{\text{j}} = 1}^{2} {{\text{p}}\left( {{\text{x}}/\upomega_{\text{j}} } \right){\text{P}}\left( {\upomega_{\text{j}} } \right)} $$
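where \( {\text{p}}\left( {\upomega_{\text{i}} /{\text{x}}} \right) \) is the posterior, \( {\text{P}}\left( {\upomega_{\text{i}} } \right) \) the prior, \( {\text{p}}\left( {{\text{x}}/\upomega_{\text{i}} } \right) \) the likelihood, and \( {\text{P}}\left( {\text{x}} \right) \) the evidence. Based on the various statistical and morphological features, the decision can be made as,

$$ {\text{decide }}\upomega_{1} {\text{ if }}{\text{p}}\left( {\upomega_{1} /{\text{x}}} \right) > {\text{p}}\left( {\upomega_{2} /{\text{x}}} \right),{\text{ otherwise decide }}\upomega_{2} $$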
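As a minimal illustration of the Bayes decision rule, the sketch below assumes a single scalar feature (for example, a pixel intensity) with Gaussian class-conditional likelihoods; the Gaussian model, the function names, and the numbers in the usage example are assumptions for illustration only.

```python
import math

def gaussian_pdf(x, mean, std):
    """Assumed class-conditional likelihood p(x / w_i): a 1D Gaussian."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def bayes_decide(x, params, priors):
    """Return (index of the class with the largest posterior, posteriors).
    params: list of (mean, std) per class; priors: list of P(w_i)."""
    joint = [gaussian_pdf(x, m, s) * p for (m, s), p in zip(params, priors)]
    evidence = sum(joint)                        # P(x)
    posteriors = [j / evidence for j in joint]   # p(w_i / x)
    best = max(range(len(posteriors)), key=posteriors.__getitem__)
    return best, posteriors
```

For instance, with hypothetical parameters `[(0.2, 0.1), (0.7, 0.1)]` and priors `[0.8, 0.2]`, a feature value of 0.45 lies midway between the two means, so the likelihoods are equal and the larger prior decides in favor of the first class.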
At a somewhat higher level, the support vector machine (SVM) is a maximum-margin classifier: it places the separating hyperplane so that its distance to the nearest samples of each class (the margin) is maximized, and kernel functions extend it to non-linear decision boundaries. Consider a two-class problem in which the region of interest in the PA image belongs to one class and all other areas form the other class. Let \( \left\{ {{\text{x}}_{1} ,{\text{x}}_{2} , \ldots ,{\text{x}}_{\text{n}} } \right\} \) be the data set and let \( {\text{y}}_{\text{i}} \in \left\{ { + 1, - 1} \right\} \) be the class label of \( {\text{x}}_{\text{i}} \). Then,

(a) The decision boundary should be as far away from the data of both classes as possible. The distance between the origin and the line \( w^{T} x = k \) is

$$ Distance = \frac{\left| k \right|}{\left\| w \right\|} \tag{26} $$

so the margin between the two supporting hyperplanes \( w^{T} x + w_{0} = \pm 1 \) is \( m = \frac{2}{\left\| w \right\|} \), which has to be maximized subject to \( y_{i} \left( {w^{T} x_{i} + w_{0} } \right) \ge 1 \) for all i.

(b) Maximizing m is equivalent to minimizing \( \frac{1}{2}w^{T} w \) under these constraints, for which the Lagrangian objective function is

$$ J\left( {w,w_{0} ,\alpha } \right) = \frac{1}{2}w^{T} w - \sum\limits_{i} {\alpha_{i} \left\{ {y_{i} \left( {w_{0} + w^{T} x_{i} } \right) - 1} \right\}} ,\quad \alpha_{i} \ge 0 \tag{27} $$

(c) Setting the derivatives of J with respect to \( w \) and \( w_{0} \) to zero gives \( w = \sum\nolimits_{i} {\alpha_{i} y_{i} x_{i} } \) and \( \sum\nolimits_{i} {\alpha_{i} y_{i} } = 0 \); the multipliers \( \alpha_{i} \) are obtained by solving the resulting dual problem and are non-zero only for the support vectors.

(d) To classify a new sample z, compute \( w^{T} z + w_{0} \) and assign z to class 1 if the result is positive, and to class 2 otherwise.
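Steps (a) to (d) can be sketched for a small, linearly separable data set by solving the dual of the hard-margin problem with SciPy's general-purpose SLSQP optimizer. This is an illustrative assumption (a dedicated SVM library would normally be used for real PA feature sets), and the offset \( w_{0} \) is placed midway between the closest samples of the two classes, which holds for the hard-margin solution.

```python
import numpy as np
from scipy.optimize import minimize

def train_hard_margin_svm(X, y):
    """Solve the hard-margin SVM dual with SLSQP (illustrative, small data only)
    and recover w = sum_i alpha_i y_i x_i and the offset w0."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n = len(y)
    Q = (y[:, None] * y[None, :]) * (X @ X.T)        # Q_ij = y_i y_j x_i . x_j
    # Dual: maximize sum(a) - 0.5 a^T Q a, i.e. minimize its negative.
    objective = lambda a: 0.5 * a @ Q @ a - a.sum()
    gradient = lambda a: Q @ a - np.ones(n)
    constraint = {"type": "eq", "fun": lambda a: a @ y, "jac": lambda a: y}
    result = minimize(objective, np.zeros(n), jac=gradient, method="SLSQP",
                      bounds=[(0.0, None)] * n, constraints=[constraint])
    alpha = result.x
    w = (alpha * y) @ X                               # step (c)
    scores = X @ w
    # Hard margin: the hyperplane lies midway between the closest samples.
    w0 = -0.5 * (scores[y > 0].min() + scores[y < 0].max())
    return w, w0

def predict(w, w0, Z):
    """Step (d): +1 (class 1) if w^T z + w0 > 0, otherwise -1 (class 2)."""
    return np.where(np.asarray(Z, dtype=float) @ w + w0 > 0, 1, -1)
```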

Although the SVM is well capable of segmenting the region of interest, its training requires prior knowledge and annotation of the region of interest, since it works in a supervised manner. In contrast, the K-means clustering approach is purely unsupervised and clusters regions relatively quickly according to their statistical and image-based feature sets. The main advantages of K-means clustering are its simplicity and computational speed. Its disadvantage is that, because of the random initialization, it does not always give the same result: it minimizes the intra-cluster variance but does not guarantee a global minimum. For a 2-class clustering problem, two points are initially taken at random as cluster centers. Each sample is then assigned to its nearest cluster, which can be found using different distance measures, e.g., the Euclidean, city-block, or Mahalanobis distance. The simplest is the Euclidean distance,

$$ d\left( {{\mathbf{x}},{\mathbf{y}}} \right) = \sqrt {\sum\limits_{{\text{i}} = 1}^{\text{n}} {\left( {{\text{x}}_{\text{i}} - {\text{y}}_{\text{i}} } \right)^{2} } } $$

where \( {\text{x}}_{\text{i}} \) and \( {\text{y}}_{\text{i}} \) are the ith coordinates of the sample and of the cluster center, and n is the number of features (coordinates). The new center \( {\mathbf{m}}_{\text{k}} \) of the kth cluster is obtained by,

$$ {\mathbf{m}}_{\text{k}} = \frac{1}{{{\text{p}}_{\text{k}} }}\sum\limits_{{{\mathbf{x}} \in {\text{c}}_{\text{k}} }} {\mathbf{x}} $$
where \( {\text{p}}_{\text{k}} \) is the number of points in the kth cluster \( {\text{c}}_{\text{k}} \). To include a degree of belongingness, the fuzzy c-means (FCM) approach is often more accurate than K-means clustering. FCM differs from K-means in that each data point has a degree of membership in every cluster rather than belonging entirely to one cluster. Let \( {\text{X}} = \left\{ {{\text{x}}_{1} ,{\text{x}}_{2} , \ldots ,{\text{x}}_{\text{n}} } \right\} \) be a set of n data samples to be classified into c classes, where each \( {\text{x}}_{\text{i}} \) is an m-dimensional feature vector. The membership value of the kth data point in the ith class is denoted \( \upmu_{\text{ik}} \), with the constraints

$$ \upmu_{\text{ik}} \in \left[ {0,1} \right],\quad \sum\limits_{{\text{i}} = 1}^{\text{c}} {\upmu_{\text{ik}} } = 1\quad {\text{for all k}} = 1, \ldots ,{\text{n}} $$
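The objective function is,

$$ {\text{J}}\left( {\upmu ,{\text{v}}} \right) = \sum\limits_{{\text{k}} = 1}^{\text{n}} {\sum\limits_{{\text{i}} = 1}^{\text{c}} {\left( {\upmu_{\text{ik}} } \right)^{\text{b}} {\text{d}}_{\text{ik}}^{2} } } $$

where b > 1 is the index of fuzziness and \( {\text{d}}_{\text{ik}} \) is the Euclidean distance between the kth sample \( {\text{x}}_{\text{k}} \) and the ith cluster center \( {\text{v}}_{\text{i}} \). Hence, as in (23), \( {\text{d}}_{\text{ik}} \) is given by,

$$ {\text{d}}_{\text{ik}} = \left\| {{\text{x}}_{\text{k}} - {\text{v}}_{\text{i}} } \right\| = \sqrt {\sum\limits_{{\text{j}} = 1}^{\text{m}} {\left( {{\text{x}}_{\text{kj}} - {\text{v}}_{\text{ij}} } \right)^{2} } } $$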
Minimizing the objective function with respect to µ and v leads to the following update equations. The ith cluster center is calculated by,

$$ {\text{v}}_{\text{i}} = \frac{{\sum\nolimits_{{\text{k}} = 1}^{\text{n}} {\left( {\upmu_{\text{ik}} } \right)^{\text{b}} {\text{x}}_{\text{k}} } }}{{\sum\nolimits_{{\text{k}} = 1}^{\text{n}} {\left( {\upmu_{\text{ik}} } \right)^{\text{b}} } }} $$

and the membership values are

$$ \upmu_{\text{ik}} = \frac{{\left( {1/{\text{d}}_{\text{ik}} } \right)^{{2/\left( {{\text{b}} - 1} \right)}} }}{{\sum\nolimits_{{\text{r}} = 1}^{\text{c}} {\left( {1/{\text{d}}_{\text{rk}} } \right)^{{2/\left( {{\text{b}} - 1} \right)}} } }} $$

An iterative optimization algorithm is then used to find the optimum partition matrix µ. The step-by-step procedure is given below,

(a) Initialize the partition matrix \( {\varvec{\upmu}}^{\left( 0 \right)} \) randomly.

(b) For r = 0, 1, 2, …:

(c) Calculate the c cluster center vectors \( {\mathbf{v}}_{\text{i}}^{{\left( {\text{r}} \right)}} \) using \( {\varvec{\upmu}}^{{\left( {\text{r}} \right)}} \).

(d) Update the partition matrix to \( {\varvec{\upmu}}^{{\left( {{\text{r}} + 1} \right)}} \) using the cluster center values. If \( \left\| {{\varvec{\upmu}}^{{\left( {{\text{r}} + 1} \right)}} - {\varvec{\upmu}}^{{\left( {\text{r}} \right)}} } \right\|_{\text{F}} \le \upvarepsilon \), where ε is the tolerance level and \( \left\| \cdot \right\|_{\text{F}} \) is the Frobenius norm, stop; otherwise set \( {\text{r}} = {\text{r}} + 1 \) and return to step (c).

After the optimized partition matrix is obtained, each data point is assigned to the class for which its membership value is highest.
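The two clustering procedures can be sketched in a few lines of NumPy. The K-means function uses Euclidean distances and, unless initial centers are supplied, random initialization; the fuzzy c-means function follows steps (a) to (d) above, with memberships stored as a c × n matrix. Function names, default parameters, and the optional initializations (added so that results can be reproduced) are assumptions.

```python
import numpy as np

def kmeans(X, k, init=None, n_iter=100, seed=0):
    """K-means with Euclidean distance; random initial centers unless `init` is given."""
    X = np.asarray(X, dtype=float)
    if init is None:
        centers = X[np.random.default_rng(seed).choice(len(X), size=k, replace=False)]
    else:
        centers = np.asarray(init, dtype=float)
    for _ in range(n_iter):
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)                       # nearest cluster
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def fuzzy_cmeans(X, c, b=2.0, eps=1e-5, max_iter=300, mu0=None, seed=0):
    """Fuzzy c-means following steps (a)-(d); returns (mu, v), mu of shape (c, n)."""
    X = np.asarray(X, dtype=float)
    if mu0 is None:
        mu = np.random.default_rng(seed).random((c, len(X)))   # step (a)
    else:
        mu = np.asarray(mu0, dtype=float).copy()
    mu = mu / mu.sum(axis=0)                 # memberships of each sample sum to 1
    for _ in range(max_iter):                # step (b): r = 0, 1, 2, ...
        w = mu ** b
        v = (w @ X) / w.sum(axis=1, keepdims=True)         # step (c): centers v_i
        d = np.linalg.norm(X[None, :, :] - v[:, None, :], axis=2)   # d_ik, (c, n)
        inv = np.fmax(d, 1e-12) ** (-2.0 / (b - 1.0))      # (1/d_ik)^(2/(b-1))
        new_mu = inv / inv.sum(axis=0)                     # step (d): memberships
        done = np.linalg.norm(new_mu - mu) <= eps          # Frobenius norm of change
        mu = new_mu
        if done:
            break
    w = mu ** b
    v = (w @ X) / w.sum(axis=1, keepdims=True)             # centers for the final mu
    return mu, v
```

The final class of each sample is then `mu.argmax(axis=0)`, i.e. the class with the highest membership value.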

In more recent work, Zhang et al. demonstrated deep-learning procedures for segmenting the tumor area in breast PA images [25]. Deep learning is a broad and rapidly advancing area of artificial intelligence whose mathematical formulation is beyond the scope of this chapter. In short, deep learning reduces the labor of manually computing features and mitigates the curse of feature dimensionality, while providing a powerful and robust decision mechanism. Zhang et al. applied different deep learning networks, such as AlexNet and GoogLeNet, to PA images and established their efficacy in segmenting the breast tumor area [25]. The final contour selection was then implemented using a dynamic-programming-based active contour model, which is elaborated in the next section.

4.2.3 Deformable Segmentation

Segmentation of the region of interest through deformable models plays a significant role in PAI, as it is often necessary to delineate a region from an inhomogeneous background. One example of such a deformable model is the active contour (AC) algorithm, which adjusts the boundary based on parameters such as energy, entropy, and class levels. AC models can be formulated with geodesic and level-set methods. Here, we focus on an improved snake-based AC method that uses a greedy approach [13]. The idea is to fit an energy-minimizing spline to the boundary, driven by internal and external image forces, and to find the curve for which the weighted sum of internal and external energies is minimum. The basic equation can be formulated as,

$$ E_{snake} = \int_{0}^{1} {\left[ {E_{\text{int}} \left( {v\left( s \right)} \right) + E_{ext} \left( {v\left( s \right)} \right)} \right]ds} $$
where the position of the snake is represented by a planar curve \( v\left( s \right) = \left( {x\left( s \right),y\left( s \right)} \right) \), \( E_{\text{int}} \) is the internal energy, which smooths the boundary during deformation, and \( E_{ext} \) represents the external energies, which push the snake towards the desired object. The seed contour for the initial labeling can be identified with the segmentation techniques discussed earlier in this chapter. The coordinates of the seed contour are transformed into polar form \( \left( {\rho ,\theta } \right) \), and the contour is represented by a set of discrete polar coordinates \( v_{i} = \left( {\rho_{i} ,\theta_{i} } \right){\text{ for }}i = 0,1,2, \ldots ,\left( {n - 1} \right) \), where \( \theta_{i} = i \times \theta_{s} \). For example, the quantization step size for the angle \( \theta \) is \( \theta_{s} = 1^{ \circ } \) (n = 360), and that for \( \rho \) is \( \rho_{\text{s}} = 1 \) pixel. The energy function of this model is given by,

As shown in Fig. 4, for each point \( v_{i} ,\;i = 0,1,2, \ldots ,\left( {n - 1} \right) \), the energies at the points \( \Omega_{i} = \left\{ {v_{i}^{ - } ,v_{i} ,v_{i}^{ + } } \right\} \) are calculated, and \( v_{i} \) is moved to the point with the minimum energy among these three, where \( v_{i}^{ - } \) and \( v_{i}^{ + } \) are the two discrete points adjacent to \( v_{i} \) in the radial direction. This operation is repeated until the number of moved contour points is sufficiently small or the number of iterations exceeds a predefined threshold. The energy terms are as follows: \( E_{cont} \) is the internal continuity energy, which keeps the contour continuous; \( E_{curv} \) is the internal curvature energy, which smooths the periphery; \( E_{image} \) is the external image force, which depends on the image intensities; and \( E_{grow} \) is the external grow energy, which expands the contour from the center towards the boundary. Mathematically, they can be represented as [26, 27],

where, \( \bar{d} = \sum {\frac{{\left| {v_{t} - v_{t - 1} } \right|}}{n}} \,\,{\text{and}}\,\,\bar{\rho } = \sum {\frac{{\left| {\rho_{t} - \rho_{t - 1} } \right|}}{n}} \)

where, \( \bar{I}_{{v_{j} }} = \frac{1}{k \times k}\sum\limits_{{v_{i} \in \,\Psi _{{v_{j} }} }} {I\left( {v_{i} } \right)} \,\,{\text{and}}\,\,\bar{I}_{origin} = \frac{1}{k \times k}\sum\limits_{{v_{i} \in \,\Psi _{0} }} {I\left( {v_{i} } \right)} \, \)

\( \Psi_{{v_{j} }} \) and \( \Psi_{0} \) are two \( k \times k \) (e.g., k = 3) sub-blocks centered at \( v_{j} \) and at the centroid of the contour, respectively. The energy decreases at \( v_{i}^{ + } \) if both sub-blocks have approximately the same intensity, resulting in an outward movement of the contour; the movement stops when the sub-blocks have different intensities. The threshold T determines the range within which the intensity is allowed to change. e is a negative constant: a small magnitude of e imposes stronger shape restrictions on the algorithm, whereas a large magnitude nullifies the effect of the image energy, so that the contour can exceed the actual boundary.
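A simplified version of the greedy radial update can be sketched as follows. Because the exact energy terms of [26, 27] are not reproduced here, the sketch keeps only two hypothetical stand-ins: a curvature term \( w_{curv} \left( {\rho_{i - 1} - 2\rho + \rho_{i + 1} } \right)^{2} \) and the grow term described above, which adds the negative constant e to the outward candidate \( v_{i}^{ + } \) when its k × k block mean differs from the block mean at the contour center by at most T. The continuity and image terms are omitted, the center is kept fixed, and all parameter values are assumptions.

```python
import numpy as np

def block_mean(img, row, col, k=3):
    """Mean of the k x k sub-block centered at (row, col), clipped at the borders."""
    r = k // 2
    return img[max(row - r, 0):row + r + 1, max(col - r, 0):col + r + 1].mean()

def greedy_polar_snake(img, center, rho0, n=36, T=0.5, e=-1.0, w_curv=0.1, max_iter=200):
    """Simplified greedy radial snake: hypothetical curvature + grow energies only."""
    img = np.asarray(img, dtype=float)
    cy, cx = center
    theta = np.arange(n) * 2 * np.pi / n                  # theta_i = i * theta_s
    rho = np.full(n, float(rho0))
    origin_mean = block_mean(img, int(round(cy)), int(round(cx)))   # Psi_0 (fixed)
    for _ in range(max_iter):
        moved = 0
        for i in range(n):
            prev_r, next_r = rho[i - 1], rho[(i + 1) % n]
            best_r, best_e = rho[i], np.inf
            # Candidates v_i, v_i^-, v_i^+ (staying first, so ties keep the point).
            for cand in (rho[i], rho[i] - 1, rho[i] + 1):
                row = int(round(cy + cand * np.sin(theta[i])))
                col = int(round(cx + cand * np.cos(theta[i])))
                if cand < 1 or not (0 <= row < img.shape[0] and 0 <= col < img.shape[1]):
                    continue
                energy = w_curv * (prev_r - 2 * cand + next_r) ** 2   # curvature stand-in
                # Grow stand-in: reward v_i^+ while its block matches the center block.
                if cand > rho[i] and abs(block_mean(img, row, col) - origin_mean) <= T:
                    energy += e
                if energy < best_e:
                    best_r, best_e = cand, energy
            if best_r != rho[i]:
                rho[i] = best_r
                moved += 1
        if moved == 0:                                    # no contour point moved
            break
    return rho, theta
```

Started from a small circle inside a bright disk, the contour grows outward one radial step per iteration and stops where the 3 × 3 blocks begin to straddle the disk boundary.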

5 Reconstructed PA Image Quality Improvement Using a Multimodal Framework

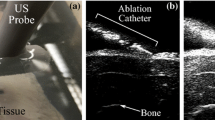

Biological tissues show significant depth-dependent optical fluence loss and acoustic attenuation. Correcting for these optical and acoustic variations is critical for quantitative imaging performance [28, 29]. Several techniques have been proposed to alleviate this problem, including the use of exogenous contrast agents, computing differences in the spatial characteristics of the absorption coefficient over different length scales [30, 31], using multiple optical sources together with non-iterative reconstruction, and using context encoding within a machine learning framework [32]. Most of these methods model light transport assuming a homogeneous medium [33]. For heterogeneous media, intrinsic (segmented) priors [34] and extrinsic priors obtained by combining PA with diffuse optical tomography [35], acousto-optical imaging [36], and other imaging modalities have been investigated. However, these methods require additional computational resources and often hardware support for multimodal imaging. On the other hand, most current state-of-the-art PA imaging systems are equipped with hybrid ultrasound (US) imaging capabilities. There is therefore increasing interest in employing the co-registered US image to improve the performance of quantitative PA imaging [37, 38]. Integrated PA-US imaging can additionally be used to correct for small speed-of-sound (SoS) changes. Mandal et al. aimed to correct for optical and acoustic inaccuracies in PA imaging using extrinsic imaging priors obtained by segmenting concurrently acquired high-frequency US images [38]. The US prior is used to create a localized fluence map and to apply the correct SoS during advanced beamforming. Figure 5 depicts the process diagram.
The method outlined in [38] shows that multimodal priors can significantly improve the quantification of PA signals, and that further computer vision methods can be employed to enhance performance. Related work by Naser et al. [39] has further shown that combining finite-element-method (FEM) based local fluence correction (LFC) with SNR regularization can accurately estimate oxygen saturation (SO2) in tissue. Although a detailed discussion of tissue oxygenation measurement is beyond the scope of this chapter, readers should note that quantitative measurement is only possible with an accurate estimate of the tissue absorption profile. In both studies, the B-mode US images provided a means for surface segmentation and a starting point for building the FEM mesh.

Reprinted with permission from [38]

The algorithmic workflow for multimodal prior based image correction for small animal PA imaging.

The PA signal received from a high-frequency linear array system is often unsuitable for segmenting anatomical structures. Therefore, the co-registered B-mode US signal is used as a reference frame to segment skin boundaries and to delineate organ structures and tumor masses. The segmented prior information from the US image is then used to iteratively correct the PA images. A two-step approach generates the US priors: (1) the skin line is detected using graph cuts [40, 41], and (2) internal structures are detected using active contour models (Fig. 5) [27, 42]. The lazy snapping method, based on graph cuts, separates coarse- and fine-scale processing and improves object specification and boundary detection even under low-contrast conditions. Its robustness at low SNR and its convergence speed make it well suited for skin line detection in PA-US images. Active contour methods have been described in detail earlier in this chapter; modified AC segmentation methods have been used extensively to enhance the visual quality of PA images, and they performed efficiently both for whole-body tomographic images and for 2D linear array geometries. Since the majority of commercial LED-PAI systems use linear array geometry for signal acquisition, the outlined methods are translatable to such instruments with few modifications.

The algorithmic workflow consists of acquiring the PA signal and beamforming it with the delay-and-sum algorithm. Automatic SoS estimation is implemented based on prior temperature information [42]. The images are spectrally unmixed using 10 optical wavelengths to estimate the tissue oxygenation profile. The US images are segmented individually and superimposed on the PA image, using a deformable active contour (snakes) model for the segmentation. The segmented tissue boundary serves as the starting point for the model, followed by iterative segmentation of the tumor region using multiscale edge detectors.
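The delay-and-sum step can be sketched for a linear array lying along z = 0. The sketch assumes a single, uniform SoS c, nearest-sample (rounded) delays, one-way time of flight (as appropriate for PA signals, where the source itself emits the wave), and no apodization; the function and argument names are hypothetical.

```python
import numpy as np

def delay_and_sum(rf, elem_x, fs, c, grid_x, grid_z):
    """One-way delay-and-sum of PA channel data rf (n_elem x n_samples) recorded by
    a linear array at z = 0; uniform SoS c and rounded delays are assumptions."""
    rf = np.asarray(rf, dtype=float)
    elem_x = np.asarray(elem_x, dtype=float)
    n_elem, n_samp = rf.shape
    X, Z = np.meshgrid(grid_x, grid_z)                           # (nz, nx)
    # Pixel-to-element distance for every pixel and element: (nz, nx, n_elem).
    dist = np.sqrt((X[..., None] - elem_x) ** 2 + Z[..., None] ** 2)
    idx = np.rint(dist / c * fs).astype(int)                     # one-way delay in samples
    valid = idx < n_samp
    samples = rf[np.arange(n_elem), np.where(valid, idx, 0)]     # gather delayed samples
    return np.where(valid, samples, 0.0).sum(axis=-1)            # coherent sum
```

Replacing the single `c` with a segmentation-dependent time of flight (for example, separate SoS values for coupling medium and tissue) is where a two-compartment SoS model would enter.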

The (segmented) prior information from the US image is used to delineate the tumor mass and to improve the fidelity of the optical fluence and multiparametric SoS fitting. Based on the segmented US mask (Fig. 6a), the decay of the light fluence can be modelled accurately. The resulting fluence field is used to compensate for the depth-dependent decrease in the PA signal (Fig. 6b–d). Additionally, given prior information about the tissue and coupling-medium background, a two-compartment model for SoS calibration can be implemented that fits separate SoS values for the object and the background. In summary, the multimodal segmentation framework helps address both optical and acoustic attenuation, providing improved visual image quality and more quantitative imaging performance in vivo. Such multimodal methods can be combined with key image processing techniques and imaging physics to achieve better LED-PAI performance. Notably, the small form factor and ease of handling of LED-based illumination arrays may make LED-PAI a modality of choice for exploring such multimodal imaging, especially as it moves into radiological imaging.
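The idea of dividing the PA image by a fluence field derived from the segmented US mask can be illustrated with a much simpler model than the FEM approach of [38]. The sketch assumes illumination from the top of the image, treats each column as an A-line, takes the first tissue pixel in each column as the skin line, and models fluence as \( e^{{ - \mu_{eff} d}} \) with the diffusion-approximation effective attenuation \( \mu_{eff} = \sqrt {3\mu_{a} \left( {\mu_{a} + \mu_{s}^{\prime } } \right)} \). In practice the optical properties and pixel size would have to come from calibration or literature; the values in the test are hypothetical.

```python
import numpy as np

def effective_attenuation(mu_a, mu_s_prime):
    """Diffusion-approximation effective attenuation: sqrt(3 mu_a (mu_a + mu_s'))."""
    return np.sqrt(3.0 * mu_a * (mu_a + mu_s_prime))

def fluence_correct(pa, tissue_mask, mu_eff, pixel_size):
    """Simplified 1D stand-in for FEM fluence correction: divide PA amplitudes by
    exp(-mu_eff * depth below the segmented skin line), column by column.
    Assumes illumination from row 0 and consistent units (e.g. 1/mm and mm)."""
    pa = np.asarray(pa, dtype=float)
    mask = np.asarray(tissue_mask, dtype=bool)
    fluence = np.ones_like(pa)
    for col in range(pa.shape[1]):
        rows = np.nonzero(mask[:, col])[0]
        if rows.size == 0:
            continue                                  # no tissue in this A-line
        surface = rows[0]                             # first tissue pixel = skin line
        depth = (rows - surface) * pixel_size
        fluence[rows, col] = np.exp(-mu_eff * depth)
    corrected = np.where(mask, pa / fluence, pa)      # only tissue pixels are corrected
    return corrected, fluence
```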

Adapted in parts from [38]

Fluence correction improves the CNR performance and quantitative information of PA images: a segmented ultrasound image, b reference MRI image for validation, c co-registered PA-US image, d fluence field map generated by the FEM method with US prior information, e PA image without correction, and f PA image after correction.

6 Summary

The last couple of decades have seen rapid developments in biomedical PA imaging, with the evolution of state-of-the-art small animal imaging scanners and experimental clinical hand-held platforms. The technology has graduated from engineering laboratories to commercial products for pre-clinical imaging, and further into biomedical and translational imaging platforms. So far, development in PA imaging has focused primarily on hardware improvements and on solving complex inverse problems. More recently, researchers have shown the applicability of image analysis to current state-of-the-art PA imaging instrumentation, and post-reconstruction signal and image processing methods are increasingly becoming practical tools for improving the visual image quality of PA imaging. The interplay between imaging physics and image analysis, as illustrated in this chapter, has led to new methods for quantitative inversion and parameter self-calibration, resolution enhancement, and accurate mapping of fluence and acoustic heterogeneities. LED-based PA systems are still at a nascent stage, and these developments in PA signal processing will accelerate the growth and clinical adoption of LED-PAI. However, several challenges (e.g., low SNR, reduced imaging depth, and errors in multimodal image registration due to the high signal-averaging requirement) remain in applying intelligent image processing techniques to LED-PAI images. In the future, these advances will help enable quantitative molecular and oncological imaging using multispectral LED-PAI [43, 44]. This opens up the possibility of many new developments, including machine learning (ML) based algorithms for parameter estimation and image enhancement. ML-based algorithms can be highly useful for improved reconstruction, identification, and segmentation of organs and vascular structures [45].
Finally, the relatively low cost, accessibility, and low-profile form factor of LED-based PA systems are likely to accelerate their use in computational PA imaging and to encourage further development of signal and image processing methods.

Notes

1. ANSI: American National Standards Institute

References

Y. Zhang, M. Jeon, L.J. Rich, H. Hong, J. Geng, Y. Zhang, S. Shi, T.E. Barnhart, P. Alexandridis, J.D. Huizinga, Non-invasive multimodal functional imaging of the intestine with frozen micellar naphthalocyanines. Nat. Nanotechnol. 9(8), 631–638 (2014)

M.F. Kircher, A. De La Zerda, J.V. Jokerst, C.L. Zavaleta, P.J. Kempen, E. Mittra, K. Pitter, R. Huang, C. Campos, F. Habte, A brain tumor molecular imaging strategy using a new triple-modality MRI-photoacoustic-Raman nanoparticle. Nat. Med. 18(5), 829–834 (2012)

J. Jo, G. Xu, Y. Zhu, M. Burton, J. Sarazin, E. Schiopu, G. Gandikota, X. Wang, Detecting joint inflammation by an LED-based photoacoustic imaging system: a feasibility study. J. Biomed. Opt. 23(11), 110501 (2018)

C.M. O’Brien, K. Rood, S. Sengupta, S.K. Gupta, T. DeSouza, A. Cook, J.A. Viator, Detection and isolation of circulating melanoma cells using photoacoustic flowmetry. J. Vis. Exp. 57 (2011)

M. Zafar, R. Manwar, K. Kratkiewicz, M. Hosseinzadeh, A. Hariri, S. Noei, M. Avanaki, Photoacoustic signal enhancement using a novel adaptive filtering algorithm, in Proceedings of SPIE BiOS, vol. 10878 (2019)

Y. Zhu et al., Light emitting diodes based photoacoustic imaging and potential clinical applications. Sci. Rep. 8(1), 9885 (2018)

Z. Wu, N.E. Huang, Ensemble empirical mode decomposition: a noise-assisted data analysis method. Adv. Adapt. Data Anal. 1(01), 1–41 (2009)

X. Jing et al., Adaptive wavelet threshold denoising method for machinery sound based on improved fruit fly optimization algorithm. Appl. Sci. 6(7), 199 (2016)

C.B. Smith, A. Sos, A. David, A wavelet-denoising approach using polynomial threshold operators. IEEE Signal Process. Lett. 15, 906–909 (2008)

C.V. Sindelar, N. Grigorieff, An adaptation of the Wiener filter suitable for analyzing images of isolated single particles. J. Struct. Biol. 176(1), 60–74 (2011)

J. Xia, J. Yao, L.V. Wang, Photoacoustic tomography: principles and advances. Electromagn. Waves (Cambridge, Mass.), 147, 1 (2014)

Z. Yu, H. Li, P. Lai, Wavefront shaping and its application to enhance photoacoustic imaging. Appl. Sci. 7(12), 1320 (2017)

K. Basak, X.L. Deán-Ben, S. Gottschalk, M. Reiss, D. Razansky, Non-invasive determination of murine placental and foetal functional parameters with multispectral optoacoustic tomography. Light Sci. Appl. 8(1), 1–10 (2019)

J.R. Zeidler, Performance analysis of LMS adaptive prediction filter. Proc. IEEE 78(12) (1990)

C. Lutzweiler, R. Meier, D. Razansky, Optoacoustic image segmentation based on signal domain analysis. Photoacoustics 3, 151–158 (2015)

R.C. Gonzalez, R.E. Woods, Digital Image Processing, 4th edn. (Pearson, 2018)

M. Petrou, C. Petrou, Image Processing: The Fundamentals (Wiley, 2010)

D. Sheet, H. Garud, A. Suveer, M. Mahadevappa, J. Chatterjee, Brightness preserving dynamic fuzzy histogram equalization. IEEE Trans. Consum. Electron. 56(4), 2475–2480 (2010)

P. Perona, J. Malik, Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12(7), 629–639 (1990)

M. Tabb, N. Ahuja, Multiscale image segmentation by integrated edge and region detection. IEEE Trans. Image Process. 6(5), 642–655 (1997)

W.Y. Ma, B.S. Manjunath, Edgeflow: a technique for boundary detection and image segmentation. IEEE Trans. Image Process. 9(8), 1375–1388 (2000)

S. Mandal, V.P. Sudarshan, Y. Nagaraj, X.L. Deán-Ben, D. Razansky, Multiscale edge detection and parametric shape modeling for boundary delineation in optoacoustic images. in Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (2015), pp. 707–710

R. Guzmán-Cabrera, J.R. Guzmán-Sepúlveda, A. González-Parada, M. Torres-Cisneros, A system for medical Photoacoustic image processing. Pensee J. 75(12), 374–381 (2013)

P. Raumonen, T. Tarvainen, Segmentation of vessel structures from photoacoustic images with reliability assessment. Biomed. Opt. Express 9(7), 328251 (2018)

J. Zhang, B. Chen, M. Zhou, H. Lan, F. Gao, Photoacoustic image classification and segmentation of breast cancer: a feasibility study. IEEE Access 7, 5457–5466 (2019)

K. Basak, R. Patra, M. Manjunatha, P.K. Dutta, Automated detection of air embolism in OCT contrast imaging: anisotropic diffusion and active contour-based approach. in Proceedings of the 3rd International Conference on Emerging Applications of Information Technology (2012), pp. 110–115. https://doi.org/10.1109/eait.2012.6407874

M. Kass, A. Witkin, D. Terzopoulos, Snakes: active contour models. Int. J. Comput. Vis. 1(4), 321–331 (1988)

S.L. Jacques, Coupling 3D Monte Carlo light transport in optically heterogeneous tissues to photoacoustic signal generation. Photoacoustics 2(4), 137–142 (2014)

B. Cox, J.G. Laufer, S.R. Arridge et al., Quantitative spectroscopic photoacoustic imaging: a review. J. Biomed. Opt. 17, 061202 (2012)

A. Rosenthal, D. Razansky, V. Ntziachristos, Quantitative optoacoustic signal extraction using sparse signal representation. IEEE Trans. Med. Imaging 28(12), 1997–2006 (2009)

T. Jetzfellner, A. Rosenthal, A. Buehler et al., Optoacoustic tomography with varying illumination and non-uniform detection patterns. J. Opt. Soc. Am. A 27(11), 2488–2495 (2010)

T. Kirchner, J. Gröhl, L. Maier-Hein, Context encoding enables machine learning-based quantitative photoacoustics. J. Biomed. Opt. 23(5), 056008 (2018)

J. Laufer, B. Cox, E. Zhang et al., Quantitative determination of chromophore concentrations from 2D photoacoustic images using a nonlinear model-based inversion scheme. Appl. Opt. 49(8), 1219–1233 (2010)

S. Mandal, X.L. Dean-Ben, D. Razansky, Visual quality enhancement in optoacoustic tomography using active contour segmentation priors. IEEE Trans. Med. Imaging 35, 2209–2217 (2016)

A.Q. Bauer, R.E. Nothdurft, J.P. Culver et al., Quantitative photoacoustic imaging: correcting for heterogeneous light fluence distributions using diffuse optical tomography. J. Biomed. Opt. 16(9), 096016 (2011)

K. Daoudi, A. Hussain, E. Hondebrink et al., Correcting photoacoustic signals for fluence variations using acousto-optic modulation. Opt. Express 20(13), 14117–14129 (2012)

M.A. Naser, D.R. Sampaio, N.M. Munoz et al., Improved photoacoustic-based oxygen saturation estimation with SNR-regularized local fluence correction. IEEE Trans. Med. Imaging 38(2), 561–571 (2018)

S. Mandal, M. Mueller, D. Komljenovic, Multimodal priors reduce acoustic and optical inaccuracies in photoacoustic imaging. in Photons Plus Ultrasound: Imaging and Sensing 2019, vol. 10878 (International Society for Optics and Photonics, 2019), p. 108781M

M.A. Naser et al., Improved photoacoustic-based oxygen saturation estimation with SNR-regularized local fluence correction. IEEE Trans. Med. Imaging 38(2), 561–571 (2018)

Y. Li, J. Sun, C.K. Tang et al., Lazy snapping. ACM Trans. Graph. (ToG) 23(3), 303–308 (2004)

J. Shi, J. Malik, Normalized cuts and image segmentation. Dep. Pap. (CIS) 107 (2000)

S. Mandal, E. Nasonova, X.L. Dean-Ben et al., Fast calibration of speed-of-sound using temperature prior in whole-body small animal optoacoustic imaging. in Photonics West—Biomedical Optics. (2015), p. 93232Q

K.S. Valluru, K.E. Wilson, J.K. Willmann, Photoacoustic imaging in oncology: translational preclinical and early clinical experience. Radiology 280(2), 332–349 (2016)

V. Ermolayev, X.L. Dean-Ben, S. Mandal et al., Simultaneous visualization of tumour oxygenation, neovascularization and contrast agent perfusion by real-time three-dimensional optoacoustic tomography. Eur. Radiol. 26(6), 1843–1851 (2016)

S. Mandal, A.B. Greenblatt, J. An, Imaging intelligence: AI is transforming medical imaging across the imaging spectrum. IEEE Pulse (2018)

Acknowledgements

The authors acknowledge the support of several students and research scholars (PS Viswanath, XL Dean Ben, Jayaprakash, V Periyasamy, HT Garud) and faculty mentors (D Razansky, PK Dutta, D Komljenovic, M Pramanik) whose work and/or comments have contributed directly or indirectly to this chapter.


Copyright information

© 2020 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Basak, K., Mandal, S. (2020). Multiscale Signal Processing Methods for Improving Image Reconstruction and Visual Quality in LED-Based Photoacoustic Systems. In: Kuniyil Ajith Singh, M. (eds) LED-Based Photoacoustic Imaging. Progress in Optical Science and Photonics, vol 7. Springer, Singapore. https://doi.org/10.1007/978-981-15-3984-8_6


DOI: https://doi.org/10.1007/978-981-15-3984-8_6


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-3983-1

Online ISBN: 978-981-15-3984-8
