Abstract

Extraction of blood vessels from retinal fundus images is a primary phase in the diagnosis of several eye disorders, including diabetic retinopathy, a leading cause of vision impairment among working-age adults globally. Since manual detection of blood vessels by ophthalmologists becomes progressively more difficult as the number of images grows, automated vessel detection algorithms provide an efficient and cost-effective alternative. This paper aims to provide an efficient and highly accurate algorithm for the extraction of retinal blood vessels. The proposed algorithm uses morphological processing, background elimination, and neighborhood comparison for preliminary detection of the vessels. Detection and removal of the fovea and bottom-hat filtering are then performed to improve the accuracy, which is calculated as a percentage with respect to ground truth images.

Keywords

- Diabetic retinopathy

- Vessel extraction

- Fovea detection

- Fundus image

- Bottom-hat filter

- Neighborhood comparison

1 Introduction

According to the World Health Organization (WHO), diabetic retinopathy (DR) is one of the major causes of blindness and occurs due to long-term damage to the blood vessels in the retina. It is a microvascular disorder that results from prolonged, uncontrolled diabetes mellitus and accounts for 2.6% of global blindness [7]. Early detection, screening, and suitable treatment have been shown to avert blindness in patients with retinal complications of diabetes. Retinal fundus images are extensively used by ophthalmologists for detecting and monitoring the progression of eye disorders such as DR, neoplasm of the choroid, glaucoma, multiple sclerosis, and age-related macular degeneration (AMD) [9]. Fundus photographs are captured using cameras based on charge-coupled devices (CCDs), which image the retina, the light-sensitive layer of tissue on the interior surface of the eye [1, 9]. The key structures that can be visualized on a fundus image are the central and peripheral retina, the optic disk, and the macula [1, 2]. These images provide information about the normal and abnormal features in the retina. The normal features include the optic disk, fovea, and vascular network [9]. The abnormal features caused by DR include microaneurysms, hard exudates, cotton-wool spots, hemorrhages, and neovascularization of blood vessels [3, 4]. Detection and segmentation of retinal vessels from fundus images is an important stage in the classification of DR [5]. However, manual detection is extremely challenging because the blood vessels in these images have a complex arrangement and poor local contrast, and the manual measurement of vessel features such as length, width, branching pattern, and tortuosity is cumbersome. As a result, the period of diagnosis lengthens and the efficiency of ophthalmologists decreases as the number of images increases [4].
Hence, automated methods for extracting and measuring the retinal vessels in fundus images are required to save the workload of the ophthalmologists and to aid in quicker and more efficient diagnosis.

The paper is organized as follows: Sect. 2 reviews previous algorithms for segmentation of retinal features and detection of diabetic retinopathy. Section 3 describes the proposed algorithm, which automates the process of retinal blood vessel detection. Section 4 provides the results of applying the algorithm to the DRIVE database in terms of accuracy of detection, sensitivity, and specificity. A comparison is provided between the average accuracies attained using existing algorithms and that of the proposed algorithm. In this comparison, the proposed algorithm provides the highest accuracy of detection, with a mean of 96.13%.

2 Literature Survey

Over the past decade, many image analysis methods based on image processing and machine learning techniques have been suggested to interpret retinal fundus images. Chaudhuri et al. [3] proposed an algorithm that approximated intensity profiles by means of a Gaussian curve. Gray-level profiles were derived along the direction perpendicular to the length of the vessel. Staal et al. proposed an algorithm for automated vessel segmentation in two-dimensional retinal color images, based on extraction of image ridges, which coincide approximately with vessel centerlines [11]. Chang et al. proposed a technique for the segmentation of retinal blood vessels [2] that overcame a problem of the method of Ricci and Perfetti [8], which erroneously classified non-vessel pixels near the actual blood vessels as vessel pixels. Fraz et al. proposed a hybrid method that uses the derivative of Gaussian for vessel centerline detection and then combines the centerlines with vessel shape and orientation maps [4]. Zhang et al. proposed a method based on Derivative of Gaussian (DoG) filters and matched filters for vessel detection [12]. Soares et al. used Gabor filters at various scales to detect features of interest, followed by a Bayesian classifier for accurate classification of features [10]. Salazar-Gonzalez et al. proposed a method based on the graph cut technique and Markov random field image reconstruction for segmentation of the optic disk and blood vessels [9]. Miri et al. proposed a method based on the curvelet transform to enhance vessel edges, followed by morphological operations to segment the vessels [6]. Marin et al. proposed a method based on neural network schemes for classification of pixels and a seven-dimensional vector for pixel representation [5].

3 Algorithm

3.1 Preprocessing

-

3.1.1

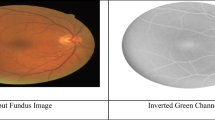

The green channel \(\left( {I_{g} } \right)\) from the RGB image is extracted, as all the essential features are prominent in this channel.

3.2 Vessel Enhancement

3.2.1 Bottom-hat filtering is applied on \(I_{g}\) to preserve the sharp bottoms and enhance contrast. The bottom-hat transform \(I_{1}\) is obtained as the difference between the morphological closing of \(I_{g}\), using a disk structuring element S, and \(I_{g}\), as in Eq. (1):

$$I_{1} = \left( {I_{g} \bullet S} \right) - I_{g} \tag{1}$$

3.2.2 Contrast-limited adaptive histogram equalization (CLAHE) is performed on image \(I_{1}\), and the result is filtered with a 3 × 3 median filter to remove spurious non-vessel pixels, generating image \(I_{2}\).

3.2.3 \(I_{2}\) is then binarized using Otsu’s threshold to generate image \(I_{3}\).
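The bottom-hat and Otsu steps can be sketched in NumPy as follows. This is a minimal illustration and not the authors' implementation: the function names (`disk`, `dilate`, `erode`, `bottom_hat`, `otsu_threshold`) are hypothetical, the structuring-element radius is left to the caller because the source does not state it, and the CLAHE and median-filter steps of 3.2.2 are omitted.

```python
from functools import reduce

import numpy as np


def disk(radius):
    """Boolean disk-shaped structuring element S of the given radius."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius


def _shifted_views(img, footprint, pad_value):
    """Yield one shifted view of img per active offset of a square footprint."""
    r = footprint.shape[0] // 2
    h, w = img.shape
    padded = np.pad(img, r, mode="constant", constant_values=pad_value)
    for dy, dx in zip(*np.nonzero(footprint)):
        yield padded[dy:dy + h, dx:dx + w]


def dilate(img, footprint):
    # Pad with the minimum so the border never wins the maximum.
    return reduce(np.maximum, _shifted_views(img, footprint, img.min()))


def erode(img, footprint):
    # Pad with the maximum so the border never wins the minimum.
    return reduce(np.minimum, _shifted_views(img, footprint, img.max()))


def bottom_hat(i_g, footprint):
    """Closing (dilation, then erosion) of I_g minus I_g itself."""
    i_g = np.asarray(i_g, dtype=float)
    closed = erode(dilate(i_g, footprint), footprint)
    return closed - i_g


def otsu_threshold(img, bins=256):
    """Histogram level that maximises the between-class variance."""
    hist, edges = np.histogram(img, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist)                    # pixels at or below each bin
    w1 = w0[-1] - w0                        # pixels above each bin
    s0 = np.cumsum(hist * centers)
    m0 = s0 / np.maximum(w0, 1)             # mean of the lower class
    m1 = (s0[-1] - s0) / np.maximum(w1, 1)  # mean of the upper class
    between = w0 * w1 * (m0 - m1) ** 2
    return centers[np.argmax(between)]
```

Because closing can only raise intensities, the bottom-hat output is non-negative and is large where dark, thin structures such as vessels were filled in; thresholding it with `img > otsu_threshold(img)` then gives a binary vessel candidate map.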

3.3 Separation of Image Background

3.3.1 The background \(I_{B}\) of image \(I_{g}\) is separated using a disk averaging filter of radius 30 units, corresponding to the size of the optic disk, the largest feature in the retinal fundus image.

3.3.2 The contrast of the image \(I_{g}\) is enhanced by scanning the entire image with a 9 × 9 window. For each 9 × 9 region, the average of the pixel intensities in the window is calculated. The pixels whose intensities are less than the average intensity are suppressed, generating the enhanced image \(I_{4}\).

3.3.3 The enhanced image \(I_{4}\) is subtracted from the background \(I_{B}\) to obtain image \(I_{5}\).

3.3.4 Pixel intensities in \(I_{5}\) are amplified to obtain image \(I_{6}\).
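A rough NumPy sketch of the background-separation steps is given below. Several details are assumptions, since the source does not fix them: the 9 × 9 window is taken to slide over every pixel, "suppressed" is taken to mean set to zero, negative differences are clipped to zero, and the amplification factor `gain` is a hypothetical parameter. The function names are illustrative only.

```python
import numpy as np


def disk(radius):
    """Boolean disk-shaped footprint of the given radius."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius


def local_mean(img, footprint):
    """Mean over the footprint around each pixel, with edge replication."""
    r = footprint.shape[0] // 2
    h, w = img.shape
    padded = np.pad(img.astype(float), r, mode="edge")
    total = np.zeros((h, w))
    # Accumulate one shifted view per active footprint offset.
    for dy, dx in zip(*np.nonzero(footprint)):
        total += padded[dy:dy + h, dx:dx + w]
    return total / footprint.sum()


def separate_background(i_g, bg_radius=30, window=9, gain=2.0):
    """Return (I_B, I_4, I_5, I_6) for a green-channel image I_g."""
    i_g = np.asarray(i_g, dtype=float)
    # 3.3.1: disk averaging filter gives the background estimate I_B.
    i_b = local_mean(i_g, disk(bg_radius))
    # 3.3.2: pixels darker than their 9 x 9 local average are suppressed.
    avg = local_mean(i_g, np.ones((window, window), dtype=bool))
    i_4 = np.where(i_g < avg, 0.0, i_g)
    # 3.3.3: I_5 = I_B - I_4, keeping only non-negative responses (assumption).
    i_5 = np.clip(i_b - i_4, 0.0, None)
    # 3.3.4: amplify and keep within an 8-bit intensity range (assumption).
    i_6 = np.clip(gain * i_5, 0.0, 255.0)
    return i_b, i_4, i_5, i_6
```

With these choices, dark vessel pixels are zeroed in \(I_{4}\), so the difference \(I_{B} - I_{4}\) is large exactly at those pixels and close to zero on the smooth background.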

3.4 Vessel Reconstruction

3.4.1 The images \(I_{3}\) and \(I_{6}\) are compared, and points that appear as edge points in both images are taken as vessel pixels in the image \(I_{7}\).

3.4.2 The bridge morphological operation is applied to \(I_{7}\) to eliminate discontinuities in the detected vessels, generating image \(I_{8}\).
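The comparison and bridging steps might look like the sketch below. It assumes that "common edge points" means a pixel-wise logical AND of the two images after \(I_{6}\) is thresholded at a hypothetical `level`, and it replaces the bridge operation with a simplified stand-in that fills an unset pixel only when two directly opposite neighbours are set. Tools such as MATLAB's `bwmorph` offer a more general `'bridge'` option; the source does not say which implementation was used.

```python
import numpy as np


def common_vessel_points(i_3, i_6, level=0):
    """I_7: pixels marked in the binary image I_3 and above `level` in I_6."""
    return np.logical_and(np.asarray(i_3) > 0, np.asarray(i_6) > level)


def bridge_opposite(mask):
    """Simplified bridging: fill one-pixel gaps between opposite neighbours."""
    p = np.pad(np.asarray(mask, dtype=bool), 1,
               mode="constant", constant_values=False)
    center = p[1:-1, 1:-1]
    gap = (
        (p[1:-1, :-2] & p[1:-1, 2:])    # left and right
        | (p[:-2, 1:-1] & p[2:, 1:-1])  # up and down
        | (p[:-2, :-2] & p[2:, 2:])     # upper-left and lower-right
        | (p[:-2, 2:] & p[2:, :-2])     # upper-right and lower-left
    )
    return center | gap
```

Requiring agreement between the two images removes responses that only one branch of the pipeline produces, at the cost of small breaks in thin vessels, which is why a gap-filling step follows.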

3.5 Removal of Fovea

3.5.1 \(I_{g}\) is closed with a structuring element S to obtain \(I_{close}\) using Eq. (2):

$$I_{close} = I_{g} \bullet S \tag{2}$$

3.5.2 \(I_{9}\) is generated through

where \(I_{r}\) is the red channel of the RGB image.

3.5.3 \(I_{ad} = adjust\left( {I_{9} } \right)\), where \(I_{ad}\) is obtained by mapping the intensities of the grayscale image \(I_{9}\) to new values such that 1% of the data is saturated at the low and high intensities of \(I_{9}\).

3.5.4 The image \(I_{ad}\) is filtered with a disk structuring element of size 10 units to obtain image \(I_{back}\).

3.5.5 Image \(I_{10}\) is obtained by adding the closed image \(I_{close}\) and the background image \(I_{back}\) using Eq. (4):

$$I_{10} = I_{close} + I_{back} \tag{4}$$

3.5.6 Image \(I_{11}\) is obtained by

where \(I_{mask}\) is the retinal mask. Pixel values greater than 1 are then clipped to 1.

3.5.7 Image \(I_{12}\) is generated by Eq. (6):

3.5.8 A 40 × 40 mask \(M_{fovea}\), derived from a standard retinal scan, is used as a template, and the coordinates of the center pixel of the region in image \(I_{12}\) with the closest match to the template are taken as the centroid of the fovea \(\left( {x_{fovea} ,y_{fovea} } \right)\).

3.5.9 The image \(I_{12}\) is scanned to determine the coordinates of each fovea pixel \(\left( {x,y} \right)\), where each such point satisfies the equation:

$$\left( {\left( {x_{fovea} - x} \right)^{2} + \left( {y_{fovea} - y} \right)^{2} } \right) \le D_{fovea}^{2} \tag{7}$$

All such pixels are raised to the maximum intensity, and all other pixels are suppressed, in the binary image \(I_{fov}\).

3.5.10 The final vessel-extracted image \(I_{vess}\) is obtained by,

Figure 1 illustrates the outputs of each stage of the algorithm for one of the images from the database.
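Steps 3.5.8 and 3.5.9 can be illustrated with the following NumPy sketch. It assumes that the "closest match" is the window with the smallest sum of squared differences to the template, and that x indexes columns and y indexes rows; neither choice is stated in the source. The names `locate_template` and `fovea_mask` are hypothetical, and the value of \(D_{fovea}\) must be supplied by the caller.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def locate_template(img, template):
    """Centre (x, y) of the window with the smallest sum of squared differences."""
    img = np.asarray(img, dtype=float)
    template = np.asarray(template, dtype=float)
    win = sliding_window_view(img, template.shape)     # (rows, cols, th, tw) view
    # SSD = sum(w^2) - 2 * sum(w * t) + sum(t^2), evaluated without copying windows.
    energy = np.einsum("ijkl,ijkl->ij", win, win)
    cross = np.einsum("ijkl,kl->ij", win, template)
    ssd = energy - 2.0 * cross + (template ** 2).sum()
    row, col = np.unravel_index(np.argmin(ssd), ssd.shape)
    th, tw = template.shape
    return int(col + tw // 2), int(row + th // 2)


def fovea_mask(shape, x_fovea, y_fovea, d_fovea):
    """Binary image I_fov: True where a pixel satisfies Eq. (7)."""
    y, x = np.ogrid[:shape[0], :shape[1]]
    return (x_fovea - x) ** 2 + (y_fovea - y) ** 2 <= d_fovea ** 2
```

A typical use would be `x, y = locate_template(i_12, m_fovea)` followed by `i_fov = fovea_mask(i_12.shape, x, y, d_fovea)`, where `i_12`, `m_fovea`, and `d_fovea` stand for the quantities named in the steps above.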

4 Results

The algorithm was applied on the DRIVE database and the accuracy of detection was calculated for each image, using the ground truth images provided in the database, with the help of the following concepts [4]:

- True Positives refer to vessel pixels that are correctly identified. TP is the number of pixels that have a high intensity value in both the algorithm output and the ground truth.

- True Negatives refer to non-vessel pixels that are correctly left unmarked. TN is the number of pixels that have low intensity in both the algorithm output and the ground truth image.

- False Positives refer to non-vessel pixels that are incorrectly identified as vessel pixels. FP is the number of pixels that have high intensity in the algorithm output but low intensity in the ground truth image.

- False Negatives refer to vessel pixels that are incorrectly missed. FN is the number of pixels that have low intensity in the algorithm output but high intensity in the ground truth image.

- Sensitivity, also called the true positive rate (TPR), refers to the proportion of positives correctly identified. It is calculated as

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

- Specificity, also called the true negative rate, refers to the proportion of negatives correctly identified. It is calculated as

$$\text{Specificity} = \frac{TN}{TN + FP}$$

- Accuracy refers to the proportion of all pixels that are correctly classified and is calculated as

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$
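The measures defined above can be computed from a binary algorithm output and a binary ground truth image as in this short sketch. The function name `vessel_metrics` is hypothetical, the counts run over every pixel of the arrays (restricting them to a field-of-view mask, if desired, is left to the caller), and both classes are assumed to be present so that no denominator is zero.

```python
import numpy as np


def vessel_metrics(pred, truth):
    """Sensitivity, specificity, and accuracy from two binary images."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)    # vessel in both
    tn = np.count_nonzero(~pred & ~truth)  # non-vessel in both
    fp = np.count_nonzero(pred & ~truth)   # marked, but not in ground truth
    fn = np.count_nonzero(~pred & truth)   # in ground truth, but missed
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }
```

Because vessel pixels are a small fraction of a fundus image, accuracy is dominated by the true negatives, so it is worth reading it together with sensitivity.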

Application of the algorithm to the DRIVE database images yielded detection accuracies in the range of 95–97%, with a mean of 96.13%. Table 1 lists the individual accuracy of detection for each of the images. Table 2 tabulates the average accuracies of existing methods along with that of the proposed method.

5 Conclusion

The proposed algorithm extracts the retinal vessels in a fundus scan with consistent accuracy, in the range of 95–97% on the DRIVE database. The resulting vessel-extracted image can be used as a tool for classification of various eye disorders such as diabetic retinopathy (DR). This algorithm provides an integrated approach to a vital step in the diagnostic procedure of DR. The high accuracy of the proposed algorithm can thus improve the accuracy of detection of DR when used in conjunction with other relevant algorithms for detection of exudates, hemorrhages, and other retinal abnormalities.

References

1. Abramoff, Michael, Garvin, Mona, Sonka, Milan: Retinal Imaging and Image Analysis, IEEE Transactions on Medical Imaging, Jan. 2010.

2. Chang, Samuel H., Gong, Leiguang, Li, Maoqing, Hu, Xiaoying, Yan, Jingwen: Small Retinal Vessel Extraction Using Modified Canny Edge Detection, International Conference on Audio, Language and Image Processing, 2008.

3. Chaudhuri, S., Chatterjee, S., Katz, N., Nelson, M., Goldbaum, M.: Detection of Blood Vessels in Retinal Images Using Two-Dimensional Matched Filters, IEEE Transactions on Medical Imaging, vol. 8, no. 3, Sept. 1989.

4. Fraz, M.M., Barman, S., Remagnino, P., Hoppe, A., Basit, A., Uyyanonvara, B., Rudnicka, A.R., Owen, C.G.: An Approach to Localize the Retinal Blood Vessels Using Bit Planes and Centerline Detection, Comp. Methods and Progs in Biomed., vol. 108, no. 2, 2012.

5. Marin, D., Aquino, A., Gegúndez-Arias, M.E., Bravo, J.M.: A New Supervised Method for Blood Vessel Segmentation in Retinal Images by Using Gray-Level and Moment Invariants-Based Features, IEEE Trans. Med. Imaging, vol. 30, no. 1, pp. 146–158, 2011.

6. Miri, M.S., Mahloojfar, A.: Retinal Image Analysis Using Curvelet Transform and Multistructure Elements Morphology by Reconstruction, IEEE Trans. on Biomed. Eng., vol. 58, pp. 1183–1192, May 2011.

7. Prevention of Blindness from Diabetes Mellitus, Report of a WHO consultation in Geneva, Switzerland, Nov. 2005.

8. Ricci, Elisa, Perfetti, Renzo: Retinal Blood Vessel Segmentation Using Line Operators and Vector Classification, IEEE Trans. on Medical Imaging, vol. 26, no. 10, Oct. 2007.

9. Salazar-Gonzalez, A., Kaba, D., Li, Y., Liu, X.: Segmentation of Blood Vessels and Optic Disc in Retinal Images, IEEE Journal of Biomedical and Health Informatics, 2014.

10. Soares, J.V.B., Leandro, J.J.G., Cesar, R.M., Jelinek, H.F., Cree, M.J.: Retinal Vessel Segmentation Using the 2-D Gabor Wavelet and Supervised Classification, IEEE Trans. Med. Imaging, vol. 25, no. 9, pp. 1214–1222, 2006.

11. Staal, Joes, Abramoff, Michael, Niemeijer, Meindert, Viergever, Max, van Ginneken, Bram: Ridge-Based Vessel Segmentation in Color Images of the Retina, IEEE Transactions on Medical Imaging, vol. 23, no. 4, Apr. 2004.

12. Zhang, B., Zhang, L., Karray, F.: Retinal Vessel Extraction by Matched Filter with First Order Derivative of Gaussian, Comp. in Bio. and Med., vol. 40, no. 4, 2010.

© 2018 Springer Nature Singapore Pte Ltd.

Cite this paper

Banerjee, A., Bhattacharjee, S., Latib, S. (2018). Retinal Vessel Extraction and Fovea Detection Using Morphological Processing. In: Pattnaik, P., Rautaray, S., Das, H., Nayak, J. (eds) Progress in Computing, Analytics and Networking. Advances in Intelligent Systems and Computing, vol 710. Springer, Singapore. https://doi.org/10.1007/978-981-10-7871-2_30

DOI: https://doi.org/10.1007/978-981-10-7871-2_30

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7870-5

Online ISBN: 978-981-10-7871-2

eBook Packages: Engineering (R0)