Abstract

Array pattern synthesis is important in most communication and radar systems. It involves determining the array configuration that produces a desired radiation pattern. Low-sidelobe narrow beams are very useful for point-to-point communication and high-resolution radars. In this chapter, two evolutionary computing techniques, the cuckoo search algorithm (CSA) and accelerated particle swarm optimization (APSO), are used. The algorithms achieve the desired amplitude levels with element spacings d = 0.40λ and 0.45λ. The main objective is to generate patterns with a fixed beamwidth and an acceptable sidelobe level. The results are compared with the conventional Taylor method. The array patterns are numerically computed for an array of 100 elements.

Keywords

- Sum pattern

- Sidelobe

- Antenna array

- Taylor

- Cuckoo search algorithm (CSA)

- Accelerated particle swarm optimization (APSO)

1 Introduction

Array antennas are of great importance because their radiators can provide beam scanning with enhanced gain and directivity [1]. For a desired pattern, an appropriate weighting vector is used. The major advantage of an array is that the mainlobe direction and the sidelobe level of the radiation pattern are controllable: they are functions of the magnitude and phase of the excitation current and of the position of each array element [2].
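As a small illustration of this phase control, the following is a minimal sketch assuming elements at x_n = nd along the x-axis and complex weights w_n = A_n exp(−j2πn(d/λ)u0). The progressive phase moves the main lobe from broadside to u0 = sin θ0. The function names and the 20-element, d = 0.45λ example are hypothetical.

```python
import cmath
import math


def array_factor(weights, d_over_lambda, u):
    """AF(u) = sum_n w_n * exp(j*2*pi*n*(d/lambda)*u) for elements at x_n = n*d, n = 0..N-1."""
    return sum(w * cmath.exp(2j * math.pi * n * d_over_lambda * u)
               for n, w in enumerate(weights))


def steering_weights(amplitudes, d_over_lambda, u0):
    """Attach the progressive phase -2*pi*n*(d/lambda)*u0 so the main lobe points at u0."""
    return [a * cmath.exp(-2j * math.pi * n * d_over_lambda * u0)
            for n, a in enumerate(amplitudes)]


# Hypothetical example: uniform 20-element array, d = 0.45 wavelength, steered to u0 = 0.3.
us = [-1.0 + i / 1000 for i in range(2001)]
weights = steering_weights([1.0] * 20, 0.45, u0=0.3)
peak_u = max(us, key=lambda u: abs(array_factor(weights, 0.45, u)))
print(f"main lobe at u = {peak_u:.3f}")  # prints: main lobe at u = 0.300
```

With zero additional phase (u0 = 0), as used later in this chapter, the same weights reduce to real amplitudes and the beam stays at broadside.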

Various analytical and numerical techniques have been developed to meet this challenge [3]. Analytical techniques tend to converge to local rather than global optima, and evolutionary techniques can optimize further than such mathematical techniques [4]. Hence, evolutionary techniques are needed to achieve the desired patterns with minimum sidelobe level [5].

The most widely used optimization techniques in array pattern synthesis are steepest descent algorithms. In this chapter, an effective method based on the Cuckoo Search Algorithm (CSA) [6] and Accelerated Particle Swarm Optimization (APSO) [7] is proposed for synthesizing a linear antenna array. As a search and optimization algorithm, CSA has gained increasing attention and has found a wide range of applications.

In this chapter, CSA and APSO are applied to array synthesis to control the desired pattern of a linear array with element spacings of 0.40λ and 0.45λ; the excitation amplitudes of the individual elements are computed with no additional phase.

2 Array Formulation and Fitness Calculation

2.1 Linear Array

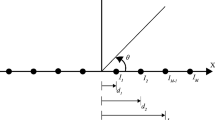

Because of its simple design, the linear array is the geometry most commonly synthesized for communication problems [8]. This geometry is shown in Fig. 1. Consider a linear array of N isotropic antennas in which all the elements are identically spaced at a distance d from one another along the x-axis [9].

The free-space far-field pattern E(u) is given by

where

- \( A_{n} \): excitation of the nth element on either side of the array
- K: wave number = 2π/λ
- λ: wavelength
- θ: angle between the line of observation and broadside
- \( \theta_{0} \): scan angle
- d: spacing between the radiating elements
- u: sin θ
- \( u_{0} \): sin θ0

The normalized far field in dB is given as

The excitation amplitudes are the parameters to be optimized, with the objective of reducing the sidelobe level. Equation (1) is used to compute the far-field pattern from the current amplitude excitations \( A_{n} \) of all the elements, with element spacings d = 0.40λ and 0.45λ and zero additional phase.
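A minimal sketch of the pattern computation, assuming a symmetric array of 2N elements with the common even-element form E(u) = 2 Σ_{n=1}^{N} A_n cos[K(2n − 1)(d/2)(u − u0)] for Eq. (1), and the usual normalization 20 log10(|E(u)|/|E(u)|max) for the dB pattern. Both forms are assumptions consistent with the definitions above, and the function names are hypothetical.

```python
import math


def array_factor(amps, d_over_lambda, u, u0=0.0):
    """E(u) for a symmetric linear array with 2 * len(amps) isotropic elements.

    Assumed form (a common symmetric, even-N expression, not taken from the chapter):
        E(u) = 2 * sum_{n=1}^{N} A_n * cos(K * (2n - 1) * (d / 2) * (u - u0)),
    where K = 2*pi/lambda, so K * d / 2 = pi * (d / lambda).
    amps[n - 1] holds A_n, the excitation of the nth element on either side.
    """
    psi = math.pi * d_over_lambda * (u - u0)
    return 2.0 * sum(a * math.cos((2 * n - 1) * psi)
                     for n, a in enumerate(amps, start=1))


def normalized_pattern_db(amps, d_over_lambda, u0=0.0, num_points=2001, floor_db=-100.0):
    """Sample u = sin(theta) on [-1, 1]; return (u values, 20*log10(|E(u)| / max|E(u)|))."""
    us = [-1.0 + 2.0 * i / (num_points - 1) for i in range(num_points)]
    mags = [abs(array_factor(amps, d_over_lambda, u, u0)) for u in us]
    peak = max(mags)
    # Clamp exact nulls to floor_db so log10 is never taken of zero.
    db = [max(20.0 * math.log10(m / peak), floor_db) if m > 0 else floor_db
          for m in mags]
    return us, db


# 100-element uniform array (50 amplitudes per side), d = 0.45 wavelength, broadside.
us, db = normalized_pattern_db([1.0] * 50, 0.45)
```

For this uniform example the peak of 0 dB sits at broadside (u = 0); an optimizer changes only the list of amplitudes passed in.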

The optimization aims to minimize the sidelobe level of the radiation pattern without disturbing the gain of the main beam. The problem of minimizing the maximum sidelobe level (SLL) of the pattern for a prescribed beamwidth and a given element spacing is solved using the fitness function, which yields an appropriate set of element amplitudes with reduced sidelobe levels.

Thus, the fitness function is formulated as
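A minimal sketch of such a fitness, assuming a broadside symmetric array with E(u) = 2 Σ A_n cos[(2n − 1)π(d/λ)u] as the form of Eq. (1), a main beam bounded by the first nulls on either side of the peak, and a hypothetical linear penalty when the null-to-null beamwidth (in u = sin θ) exceeds a prescribed value. The penalty form and its weight are illustrative, not the chapter's own fitness expression.

```python
import math


def pattern_db(amps, d_over_lambda, num_points=4001):
    """Normalized broadside pattern in dB on u = sin(theta) in [-1, 1].

    Assumed form of Eq. (1) for a symmetric array of 2 * len(amps) elements:
        E(u) = 2 * sum_n A_n * cos((2n - 1) * pi * (d / lambda) * u).
    """
    us = [-1.0 + 2.0 * i / (num_points - 1) for i in range(num_points)]
    mags = [abs(2.0 * sum(a * math.cos((2 * n - 1) * math.pi * d_over_lambda * u)
                          for n, a in enumerate(amps, start=1)))
            for u in us]
    peak = max(mags)
    return us, [20.0 * math.log10(max(m / peak, 1e-12)) for m in mags]


def main_beam_edges(db, peak_idx):
    """Indices of the first local minima (first nulls) on either side of the peak."""
    left = peak_idx
    while left > 0 and db[left - 1] < db[left]:
        left -= 1
    right = peak_idx
    while right < len(db) - 1 and db[right + 1] < db[right]:
        right += 1
    return left, right


def fitness(amps, d_over_lambda, max_fnbw_u, penalty=100.0):
    """Hypothetical fitness: peak sidelobe level in dB, plus a penalty when the
    null-to-null beamwidth in u exceeds the prescribed max_fnbw_u."""
    us, db = pattern_db(amps, d_over_lambda)
    peak_idx = db.index(max(db))
    left, right = main_beam_edges(db, peak_idx)
    sll = max(db[:left] + db[right + 1:])  # highest level outside the main beam
    fnbw = us[right] - us[left]
    return sll + penalty * max(0.0, fnbw - max_fnbw_u), sll, fnbw


# Uniform 100-element array (50 amplitudes per side), d = 0.45 wavelength.
f, sll, fnbw = fitness([1.0] * 50, 0.45, max_fnbw_u=0.05)
```

For the uniform array this returns a peak sidelobe level near −13.3 dB, the familiar uniform-array value; an optimizer such as CSA or APSO would search over the amplitudes to push it toward the −35 dB target while the penalty holds the beamwidth.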

3 Optimization Techniques

3.1 Cuckoo Search Algorithm (CSA)

The CSA mimics the natural behavior of cuckoo birds [10]; its principle is based on the reproduction strategy of cuckoos. The algorithm rests on three idealized assumptions:

1. Each cuckoo lays one egg at a time and dumps it in a randomly chosen nest.
2. The best nests, with high-quality eggs, are carried over to the next generation.
3. The number of host nests is fixed, and a host discovers an alien egg with a probability Pa between 0 and 1.

Random-walk style search is implemented by Lévy flights [11]. Having only a single parameter (Pa) makes the Cuckoo Search Algorithm simpler than other agent-based metaheuristic algorithms. The new generation of excitation current amplitudes is determined from the best nest. The updating procedure is given in Eq. (3).

The Lévy flight equation is a stochastic equation for a random walk: the next position depends on the current position and on the transition probability (the second term in the equation). Here α is the step size, generally α = 1, and the product is taken element-wise.
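For reference, Yang and Deb [6] write the cuckoo search update and its Lévy-distributed step as follows. It is assumed here that the chapter's Eq. (3) takes this standard form, with \( x_i^{(t)} \) the ith nest (here, a vector of excitation amplitudes) at generation t and \( \oplus \) denoting entry-wise multiplication. Note that λ and u in this block are the Lévy exponent and step length, not the wavelength and sin θ of Sect. 2.

```latex
x_i^{(t+1)} = x_i^{(t)} + \alpha \oplus \mathrm{L\acute{e}vy}(\lambda),
\qquad
\mathrm{L\acute{e}vy} \sim u = t^{-\lambda}, \quad 1 < \lambda \le 3
```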

Here, the term \( t^{-\lambda} \) refers to the fractal dimension of the step size, and the discovery probability \( P_a \) is taken as 0.25 in this chapter [12].
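A minimal sketch of cuckoo search as described above. It assumes Lévy steps drawn with Mantegna's algorithm (exponent 1.5) and scaled by each nest's distance to the best nest, a common implementation choice whose small α = 0.01 differs from the α = 1 quoted in the text. The abandonment step rebuilds a fraction Pa = 0.25 of the egg components by a random walk along the difference of two other nests. All names are hypothetical, and a sphere test function stands in for the array fitness to keep the example fast.

```python
import math
import random


def levy_step(dim, rng, beta=1.5):
    """Draw a dim-dimensional Lévy-flight step using Mantegna's algorithm."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return [rng.gauss(0.0, sigma) / max(abs(rng.gauss(0.0, 1.0)), 1e-12) ** (1 / beta)
            for _ in range(dim)]


def cuckoo_search(objective, dim, lb, ub, n_nests=15, pa=0.25, alpha=0.01,
                  iterations=300, seed=0):
    """Minimize `objective` over the box [lb, ub]^dim with a basic cuckoo search."""
    rng = random.Random(seed)

    def clip(x):
        return [min(max(v, lb), ub) for v in x]

    nests = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_nests)]
    fit = [objective(x) for x in nests]
    best_i = min(range(n_nests), key=fit.__getitem__)
    best, best_f = nests[best_i][:], fit[best_i]

    for _ in range(iterations):
        # Lévy flights: entry-wise Lévy step scaled by the distance to the best nest.
        for i in range(n_nests):
            step = levy_step(dim, rng)
            cand = clip([x + alpha * s * (x - b) for x, s, b in zip(nests[i], step, best)])
            f = objective(cand)
            if f < fit[i]:  # greedy replacement keeps the better egg
                nests[i], fit[i] = cand, f
        # Discovery: each component is abandoned with probability pa and rebuilt
        # by a random walk along the difference of two randomly chosen nests.
        for i in range(n_nests):
            j, k = rng.randrange(n_nests), rng.randrange(n_nests)
            r = rng.random()
            cand = clip([x + r * (xj - xk) if rng.random() < pa else x
                         for x, xj, xk in zip(nests[i], nests[j], nests[k])])
            f = objective(cand)
            if f < fit[i]:
                nests[i], fit[i] = cand, f
        # Keep track of the best nest found so far.
        i = min(range(n_nests), key=fit.__getitem__)
        if fit[i] < best_f:
            best, best_f = nests[i][:], fit[i]
    return best, best_f


# Usage on a stand-in test function (sphere), not the array fitness.
best, best_f = cuckoo_search(lambda x: sum(v * v for v in x), dim=3, lb=-5.0, ub=5.0)
```

For the array problem, the objective would instead return the peak sidelobe level of the pattern produced by the amplitude vector x, with dim equal to the number of amplitudes per side.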

3.2 Accelerated Particle Swarm Optimization

The standard PSO uses both the individual (personal) best and the current global best, whereas APSO uses only the global best [13]. This simplification accelerates the search and reduces the iteration time.

APSO also decreases the randomness as the iterations proceed. It starts by initializing a swarm of particles with random positions and velocities. The fitness of each particle is evaluated and the global best (gbest) is determined [14].

The position of every particle is then updated, and the process is repeated until the optimum gbest is obtained. Among the advantages of APSO over traditional optimization techniques are its ability to find a balance between global and local exploration of the search space and its implicit parallelism.

Here, w is the inertia coefficient of the particle, which plays a vital role in PSO. \( Vel_{n}(t+1) \) is the particle's present velocity, \( Vel_{n}(t) \) its earlier velocity, \( X_{n}(t+1) \) its present position and \( X_{n}(t) \) its earlier position. \( r_1 \) and \( r_2 \) are random numbers uniformly distributed in [0, 1] [15].

\( c_1 \) and \( c_2 \) are the acceleration constants that control the relative influence of pbest and gbest; \( pbest_n \) is the present personal best of particle n and gbest is the present global best.
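With the symbols defined above, the standard PSO velocity and position updates are commonly written as follows, given for reference; APSO replaces them with the simplified update described in the rest of this section.

```latex
\begin{aligned}
Vel_n(t+1) &= w\,Vel_n(t) + c_1 r_1 \bigl(pbest_n - X_n(t)\bigr) + c_2 r_2 \bigl(gbest - X_n(t)\bigr) \\
X_n(t+1) &= X_n(t) + Vel_n(t+1)
\end{aligned}
```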

The algorithm is defined in four steps and terminates when the exit criteria are met. The velocity vector is produced by the following expression.

Here \( c_{n} \) is a random number in (0, 1).

The position vector is modified using the following expression

Combining the above two equations yields the following expression.

Typical APSO parameter values are \( \alpha \) = 0.1–0.4 and \( \beta \) = 0.1–0.7; here \( \alpha \) = 0.2 and \( \beta \) = 0.5.

\( \gamma \) is a control parameter, set to 0.9, that reduces the randomness as the iteration number increases.
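A minimal sketch of the accelerated PSO update using the parameter values above (α = 0.2, β = 0.5, γ = 0.9). It assumes the combined form x ← (1 − β)x + βg* + α_t(c_n − 0.5)(ub − lb) with α_t = α0γ^t; centring c_n at zero and scaling by the search range are assumptions, and the function and argument names are hypothetical. A sphere test function again stands in for the array fitness.

```python
import random


def apso(objective, dim, lb, ub, n_particles=20, alpha0=0.2, beta=0.5,
         gamma=0.9, iterations=100, seed=0):
    """Minimize `objective` over [lb, ub]^dim with a basic accelerated PSO (global best only)."""
    rng = random.Random(seed)
    xs = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n_particles)]
    fs = [objective(x) for x in xs]
    i = min(range(n_particles), key=fs.__getitem__)
    g_best, g_f = xs[i][:], fs[i]

    for t in range(iterations):
        alpha = alpha0 * gamma ** t  # randomness shrinks as the iterations proceed
        for i in range(n_particles):
            # x <- (1 - beta) x + beta g* + alpha (c_n - 0.5)(ub - lb), clipped to the box
            xs[i] = [min(max((1 - beta) * x + beta * g
                             + alpha * (rng.random() - 0.5) * (ub - lb), lb), ub)
                     for x, g in zip(xs[i], g_best)]
            fs[i] = objective(xs[i])
            if fs[i] < g_f:  # only the global best is remembered, no personal bests
                g_best, g_f = xs[i][:], fs[i]
    return g_best, g_f


best, best_f = apso(lambda x: sum(v * v for v in x), dim=3, lb=-5.0, ub=5.0)
```

Dropping the personal bests is what makes each particle update a single line; the price is that the swarm collapses onto gbest quickly, which is why the shrinking α term is needed to keep some exploration early on.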

4 Results

The cuckoo search algorithm and accelerated particle swarm optimization are applied to evaluate the amplitude distributions required to produce sum patterns with a sidelobe level of –35 dB. The patterns are numerically computed for an array of N = 100 elements with element spacings d = 0.40λ and 0.45λ. As the number of elements in the array is increased, the null-to-null beamwidth is found to decrease (Figs. 2, 3, 4 and 5).
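The beamwidth trend can be checked for the simplest case, a uniformly excited broadside array, whose first nulls fall at u = ±λ/(Nd), so that the null-to-null width in u = sin θ is 2λ/(Nd). A low-sidelobe taper widens the main beam relative to this case, but the sketch shows the 1/N dependence and the narrowing with larger d. The function names are hypothetical.

```python
import math


def uniform_af(m_total, d_over_lambda, u):
    """|AF| of a uniformly excited broadside array of m_total isotropic elements."""
    x = math.pi * d_over_lambda * u
    if abs(math.sin(x)) < 1e-15:
        return float(m_total)  # limit of sin(m x) / sin(x) at x = 0
    return abs(math.sin(m_total * x) / math.sin(x))


def null_to_null_width_u(m_total, d_over_lambda, step=1e-5):
    """Walk outward from broadside to the first null and return the null-to-null width in u."""
    u, prev = 0.0, uniform_af(m_total, d_over_lambda, 0.0)
    while True:
        cur = uniform_af(m_total, d_over_lambda, u + step)
        if cur > prev:  # |AF| started rising again, so u is the first null
            return 2.0 * u
        u, prev = u + step, cur


for m in (20, 50, 100):
    for d in (0.40, 0.45):
        print(f"N = {m:3d}, d = {d:.2f} wavelength: "
              f"numeric {null_to_null_width_u(m, d):.4f}, closed form {2 / (m * d):.4f}")
```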

5 Conclusion

The synthesis of uniform linear arrays for sidelobe level reduction is considered in the present work. The algorithms are found to be useful for generating the desired radiation pattern, and the method can be applied to multi-objective array problems with a specified number of constraints. The sidelobe level is reduced to –35 dB, and the beamwidth remains unaltered after the sidelobe level is reduced. The results are useful in communication and radar systems where the mitigation of EMI is a major concern. The rise of the far-out sidelobes is not a problem in the systems of present interest.

References

[1] G. S. N. Raju, Antennas and Wave Propagation, 3rd ed. Pearson Education, 2005.

[2] B. D. Steinberg, Principles of Aperture and Array System Design. New York: John Wiley and Sons, 1976.

[3] H. Unz, "Linear arrays with arbitrarily distributed elements," IEEE Transactions on Antennas and Propagation, 1960, 1(8), pp. 222–223.

[4] R. Harrington, "Sidelobe reduction by non-uniform element spacing," IRE Transactions on Antennas and Propagation, vol. 9, no. 2, pp. 187–192, 1961.

[5] V. V. S. S. S. Chakravarthy, P. S. R. Chowdary, Ganapati Panda, Jaume Anguera, Aurora Andújar, and Babita Majhi, "On the linear antenna array synthesis techniques for sum and difference patterns using flower pollination algorithm," Arabian Journal for Science and Engineering, Aug. 2017.

[6] X.-S. Yang and S. Deb, "Engineering optimization by cuckoo search," Int. J. Mathematical Modelling and Numerical Optimization, vol. 1, no. 4, pp. 330–343, 2010.

[7] Amir Hossein Gandomi, Gun Jin Yun, Xin-She Yang, and Siamak Talatahari, "Chaos-enhanced accelerated particle swarm optimization," Commun. Nonlinear Sci. Numer. Simulat., vol. 18, pp. 327–340, 2013.

[8] Khairul Najmy Abdul Rani, Mohd. Fareq Abd Malek, and Neoh Siew-Chin, "Nature-inspired cuckoo search algorithm for side lobe suppression in a symmetric linear antenna array," Radioengineering, vol. 21, no. 3, Sept. 2012.

[9] Haffane Ahmed and Hasni Abdelhafid, "Cuckoo search optimization for linear antenna arrays synthesis," Serbian Journal of Electrical Engineering, vol. 10, no. 3, pp. 371–380, Oct. 2013.

[10] K. N. Abdul and F. Malek, "Symmetric linear antenna array geometry synthesis using cuckoo search metaheuristic algorithm," 17th Asia-Pacific Conference on Communications (APCC), 2–5 Oct. 2011.

[11] Urvinder Singh and Munish Rattan, "Design of linear and circular antenna arrays using cuckoo optimization algorithm," Progress in Electromagnetics Research C, vol. 46, pp. 1–11, 2014.

[12] M. Khodier and M. Al-Aqeel, "Linear and circular array optimization: a study using particle swarm intelligence," Progress in Electromagnetics Research B, vol. 15, pp. 347–373, 2009.

[13] Majid M. Khodier and Christos G. Christodoulou, "Linear array geometry synthesis with minimum sidelobe level and null control using particle swarm optimization," IEEE Transactions on Antennas and Propagation, vol. 53, no. 8, Aug. 2005.

[14] N. Pathak, G. K. Mahanti, S. K. Singh, J. K. Mishra, and A. Chakraborty, "Synthesis of thinned planar circular array antennas using modified particle swarm optimization," Progress in Electromagnetics Research Letters, vol. 12, pp. 87–97, 2009.

[15] Pinar Civicioglu and Erkan Besdok, "A conceptual comparison of the cuckoo-search, particle swarm optimization, differential evolution and artificial bee colony algorithms," Springer Science+Business Media B.V., 2011.

Copyright information

© 2018 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Vamshi Krishna, M., Raju, G.S.N., Mishra, S. (2018). Synthesis of Linear Antenna Array Using Cuckoo Search and Accelerated Particle Swarm Algorithms. In: Anguera, J., Satapathy, S., Bhateja, V., Sunitha, K. (eds) Microelectronics, Electromagnetics and Telecommunications. Lecture Notes in Electrical Engineering, vol 471. Springer, Singapore. https://doi.org/10.1007/978-981-10-7329-8_86

DOI: https://doi.org/10.1007/978-981-10-7329-8_86

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-7328-1

Online ISBN: 978-981-10-7329-8

eBook Packages: Engineering, Engineering (R0)