Abstract

Wavelet transform provides excellent energy compaction and exploits redundancy for better image compression. This paper analyses the performance of wavelet-based image compression techniques, namely set partitioning in hierarchical trees (SPIHT), set partitioned embedded block (SPECK) and JPEG2000. Image quality measured as peak signal-to-noise ratio and computational complexity, i.e. encoding and decoding times, is calculated for these techniques. Simulation results show that JPEG2000 outperforms other state-of-the-art wavelet image codecs (SPIHT and SPECK) in coding efficiency and computational complexity. Simulation is carried out on various images of different dimensions at different bit rates using MATLAB software.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

- JPEG2000

- Set partitioning in hierarchical trees (SPIHT)

- Set partitioned embedded block coder (SPECK)

- Wavelet transform

1 Introduction

For computer applications before transmission, the redundant data needs to be compressed in order to reduce storage. In image compression, the highly correlated neighbouring pixels are discarded by reducing the redundant information in an image. Image compression techniques are becoming more complex with the improvement in efficiency. Image can be transformed using discrete cosine transform (DCT) [1] and discrete wavelet transform (DWT) [2, 3]. Joint Photographic Experts Group developed (JPEG) coder [4,5,6] uses DCT and generates energy-compacted spectral components. Wavelet transform offers excellent energy compaction and eliminates redundant information present in an image. Shapiro [7] introduced EZW which is extremely fast, very effective and produces embedded bit stream. The performance of EZW is further enhanced by presenting a more efficient and faster technique known as set partitioning in hierarchical trees (SPIHT) developed by Said and Pearlman which is simple technique and offers excellent rate–distortion performance [8]. A. Islam and W. Pearlman proposed block-based wavelet transform coding technique with low complexity known as set partitioning embedded block (SPECK) [9]. JPEG2000 [10] provides higher compression efficiency which is faster than zerotree coders but it needs extra memory allocation. However, for high resolution images, SPIHT and SPECK need large memory bank which in turn increases the cost of hardware. The paper is organized as follows: Sect. 2 contains overview of the wavelet-based compression techniques, namely SPIHT, SPECK and JPEG2000. In Sect. 3, results are given. We conclude the paper in Sect. 4.

2 Overview of Wavelet-Based Compression Techniques

2.1 SPIHT

Set partition coding (SPC) has high energy compaction and zerotree properties of wavelet decomposition. This gives bitstream which is embedded in nature. This means that coding algorithm can be stopped at any time. SPIHT transmits decision by assigning bits. The pixel values are compared with the threshold using the following function:

where 1 and 0 indicates the significance and insignificance of sets with respect to threshold.

The set partitioning rules are defined as:

Ộ(i, j) = A set of the co-ordinates of offsprings of node (i, j); Ḋ(i, j) = A set of the co-ordinates of descendants of node (i, j); Ḷ(i, j) = A set of co-ordinates of descendants excluding Ộ (i, j); Ḷ(i, j) = Ḋ(i, j) − Ộ(i, j).

SPIHT comprises two passes: sorting and refinement and consists of three lists to keep track of pixel values [8]. LIP is the list of insignificant pixels which has all the pixels of LL band (highest energy band). LIS is the list of insignificant sets that consist of sets with descendants only excluding tree root. LSP is the list of significant pixels that contains significant coefficients of all passes. During sorting pass or significance map testing, all the pixels in LIP are tested for significance. If they are significant, an output bit is generated and a sign bit is given. The significant pixels are now sent to LSP. Now, tree sets with descendants are checked for significance. If they are found significant, they are detached from the list and then partitioned into four offsprings. These offsprings are tested and if found significant, they are sent to LSP otherwise they are sent to LIP. During refinement pass, those pixels which were significant in the previous sorting pass are refined and output the most significant bit of the pixel value [11,12,13].

2.2 SPECK

Set partitioned embedded block coding (SPECK) [9] is the block-based efficient state-of-the-art algorithm which has only two lists, namely LIS and LSP. There are three coding steps in SPECK algorithm: initialization, sorting and refinement. Initially, a threshold is set. The image is transformed using DWT and partitioned into a set Ṥ which consists of root node of the pyramid and a set Ḯ which consists of remaining coefficients. Initially, Ṥ block coefficients are kept in LIS and LSP is empty. During the sorting pass, significance of Ṥ and Ḯ sets is checked. If Ṥ is found to be significant, it is partitioned into four equal sized subsets added to LIS. All subsets of 1 × 1 are checked for significance and if found significant, the sign bit is coded. These subsets are then added to LSP. If the set Ḯ becomes significant, it is divided into octave band partitioning. A total of four sets are generated: three Ṥ sets and one Ḯ set. When all sets are partitioned and made significant, the refinements pass starts which refines the quantized pixel values of LSP that are found significant in the earlier passes. Now, threshold is reduced in a step of two and the process is repeated until the desired bit rate is attained. Because of the linked lists of SPECK, huge memory is required that increases its complexity [14, 15].

2.3 JPEG2000

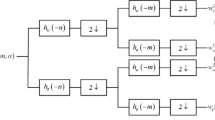

JPEG2000 [10] was developed by Joint Photographic Experts Group (JPEG) committee. It exploits the features of discrete wavelet transform (DWT), a benchmark in image compression and introduced several new features. These new features such as better low bit rate performance, grey and colour image compression, lossless and lossy compression, large images and image components, large dynamic range of pixels, progressive transmission, error resiliency, image security are effective for vast applications. The block diagram of JPEG2000 encoder is given in Fig. 1.

JPEG2000 encoder performs three steps in compression: discrete wavelet transform (DWT), quantization and entropy encoding. Each component after multicomponent transformation is decomposed by DWT in different subbands which are then independently quantized. These quantized subbands are broken into equal sized small code blocks, generally 32 × 32, 64 × 64. These code blocks produce compressed bitstreams after independently entropy encoded by Tier-1 and Tier-2 coding engines. In Tier-1 coding process, each code block is divided into n bit planes, where n is the precision of number of elements in code blocks. They are encoded from nth bit plane to first bit plane by fractional bit plane coding using embedded block truncation optimization technique (EBCOT) [16] algorithm in three coding passes such that if a part of one bit plane is being encoded, other bit planes do not overlap. The three coding passes of EBCOT are significance propagation, magnitude refinement and clean-up pass. EBCOT generates context data and binary decision values which are coded by binary arithmetic coding (known as MQ-coder) and produce compressed bits for all code blocks. In Tier-2 coding, for each code block, a layer has all the coding passes from all consecutive bit planes and block summary information has length of compressed bitstreams, zero bit plane data and the number of coding passes data. Tag trees (quadtrees) are used to represent the layer and block summary data for all code blocks as compressed file header.

3 Simulation Results

The wavelet-based image compression techniques SPIHT, SPECK and JPEG2000 are simulated on MATLAB software. The results are tabulated as follows, and PSNR, encoding and decoding times are plotted against different bit rates for Lena and Barbara images (Tables 1 and 2).

Figures 2 and 3 compare the coding performance (PSNR) (in dB) of SPIHT, SPECK and JPEG2000 algorithms for Lena image of dimension 256 × 256 and 512 × 512 against different bit rates. Figures 4 and 5 compare the PSNR (in dB) values of SPIHT, SPECK and JPEG2000 for Barbara image of 256 × 256 and 512 × 512 size, respectively. PSNR values (in dB) for JPEG2000 are best at all bit rates for these dimensions (Tables 3, 4, 5 and 6).

The encoding time is defined as the total time elapsed in wavelet transforming an image and then encoding the transformed coefficients. The decoding time is defined as the time elapsed in decoding the bitstream to reconstruct the image at a given bit rate. From Figs. 6, 7, 8 and 9, it is seen that at low bit rate, SPIHT and SPECK have small values of the encoding and decoding times for Lena and Barbara image of 256 × 256 and 512 × 512 but at high bit rate, JPEG2000 outperforms them.

Figures 6 and 7 show the encoding and decoding times (in sec) for SPIHT, SPECK, JPEG2000 for Lena image of size 256 × 256 and 512 × 512 against different bit rates, and Figs. 8 and 9 show the encoding and decoding times (in sec) for Barbara image of size 256 × 256 and 512 × 512, respectively.

4 Conclusion

This paper compares the set partition coding algorithms (viz. SPIHT and SPECK) and JPEG2000. The simulation results indicate that JPEG2000 technique is fast compared to set partition coding algorithms. The encoding and decoding times for JPEG2000 are small compared to other algorithms. The PSNR values of JPEG2000 are better for a given bit rate for Lena and Barbara images of 256 × 256 and 512 × 512.

References

Ahmed N., Natarajan T. and Rao K. R..: Discrete cosine transform: IEEE Transactions on Computers, vol. 23 no. 1, pp. 90–93 (1974)

Calderbank A. R., Daubechies I., Sweldens W., and Yeo B.L.: Lossless Image Compression Using Integer to Integer Wavelet Transforms, in Proc.: IEEE Int. Conf. Image Processing, vol. 1, Santa Barbara, CA, opp. 596–599. (1997)

Antonini M., Barlaud, M., Mathieu, P., and Daubechies, I.: Image coding using the wavelet transform, IEEETrans. on Image Processing, 1, 205–220, (1992)

Wallace G.K., The JPEG still picture compression standard: IEEE Transaction Consumer Electronics, vol. 38, no. 1, Feb (1992)

ISO/IEC 1091 8 (JPEG), Information Technology-Digital Compression and Coding of Continuous-Tone Still Images.”

Pennebaker W. B. and. Mitchell J. L.: JPEG: Still Image Compression Standard:. Van Nostrand Reinhold, New York (1993)

Shapiro J. M.: Embedded image coding using zerotrees of wavelet coefficients: IEEE Trans. Signal Process., vol. 41, pp. 3445–3462 (1993)

Said A. and Pearlman W. A.: A new, fast, and efficient image codec based on set partitioning in hierarchical trees: IEEE Trans. Circuits Syst. Video Technol., vol. 6, no. 3, pp. 243–250 (1996)

A. Islam and W. A. Pearlman: Embedded and efficient low-complexity hierarchical image coder: IEEE Transactions on circuits and systems for video technology, vol. 14, no. 11 (2004)

Taubman D.: High performance scalable image compression with EBCOT: IEEE Trans. Image Processing, vol. 9, pp. 1158–1170 (2000)

J.Z. Gang, G.X. Dong, L.L. Sheng: a fast image compression algorithm based on SPIHT: IEEE international conference (2009)

Akter M., Reaz M. B. I., Mohd-Yasin F., Choong F.: A modified set partitioning in hierarchical trees algorithm for real-time image compression: J. Commun. Technol. Electron., vol. 53, no. 6, pp. 642–650 (2008)

Pan H., Siu W.-C., and Law N.-F., A fast and low memory image coding algorithm based on lifting wavelet transform and modified SPIHT: Signal Process., Image Commun., vol. 23, no. 3, pp. 146–161 (2008)

Baojun H and Yan L.: An Improved SPECK Image Coding Algorithm.: IEEE Fifth International Conference on Information Assurance and Security, pp 227–229 (2009)

Senapati R. K., Pati U. C., Mahapatra K. K.: Listless block tree set partitioning algorithm for very low bit rate embedded image compression, Int. J. Electron. Commun., vol. 66, no. 12, pp. 985–995 (2012)

Taubman D., Ordentlich E., Weinberger M. and Seroussi G.: Embedded Block Coding in JPEG2000 (2001)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Bano, N., Alam, M., Ahmad, S. (2018). Performance Evaluation of Wavelet-Based Image Compression Techniques. In: Singh, R., Choudhury, S., Gehlot, A. (eds) Intelligent Communication, Control and Devices. Advances in Intelligent Systems and Computing, vol 624. Springer, Singapore. https://doi.org/10.1007/978-981-10-5903-2_79

Download citation

DOI: https://doi.org/10.1007/978-981-10-5903-2_79

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-5902-5

Online ISBN: 978-981-10-5903-2

eBook Packages: EngineeringEngineering (R0)