Abstract

Many systems experience gradual degradation while simultaneously being exposed to a stream of random shocks of varying magnitude that eventually cause failure when a shock exceeds the residual strength of the system. This failure mechanism is found in diverse fields of application. Lee and Whitmore (Shock-degradation failure processes and their survival distributions. Manuscript submitted for journal publication, 2016) presented a family of system failure models in which shock streams that follow a Fréchet process are superimposed on a degrading system described by a stochastic process with stationary independent increments. They referred to them as shock-degradation failure models. In this article, we discuss applications of these models and investigate practical issues and extensions that help to make these models more accessible and useful for studies of system failure. This family has the attractive feature of defining the failure event as a first passage event and the time to failure as a first hitting time (FHT) of a critical threshold by the underlying stochastic process. FHT models have found use in many real-world settings because they describe the failure mechanism in a realistic manner and also naturally accommodate regression structures. This article discusses a variety of data structures for which this model is suitable, as well as the estimation methods associated with them. The data structures include conventional censored survival data, data sets that combine readings on system degradation and failure event times, and data sets that include observations on the timing and magnitudes of shocks. This assortment of data structures is readily handled by threshold regression estimation procedures. Predictive inferences and risk assessment methods are also available. This article presents three case applications related to osteoporotic hip fractures in elderly women, divorces for cohorts of Norwegian couples, and deaths of cystic fibrosis patients. This article closes with discussion and concluding remarks.


Keywords

- Applications

- Degradation process

- Fréchet process

- Shock stream

- Strength process

- Survival distribution

- Threshold regression

1 Introduction

Many systems experience gradual deterioration while being exposed simultaneously to a stream of random shocks of varying magnitude that eventually cause failure when a shock exceeds the residual strength of the system. This basic situation is found in many fields of application as illustrated by the following examples:

1. An equipment component experiences normal wear and tear during use but is also exposed to mechanical shocks from random external forces of varying intensity. The weakening component finally fails when a sufficiently strong shock arrives to break it.

2. A new business may experience gradual financial erosion or strengthening through time while experiencing shocks or disturbances from economic events that impact its business sector. The business fails if an external shock forces it into bankruptcy.

3. The human skeleton weakens with age and disease but is also exposed to occasional traumatic events such as blunt force injuries, stumbles or falls. The skeleton ‘fails’ when the trauma produces a skeletal fracture.

4. Many lung diseases such as chronic obstructive pulmonary disease (COPD) or cystic fibrosis involve a progressive deterioration of lung function. The time course of these diseases is punctuated by occasional acute exacerbations, brought on by infections or other assaults, that create moments of life-threatening crisis for the patient. A sufficiently weak patient dies when an exacerbation overwhelms the body’s defenses.

5. The cohesion of a marriage may gradually weaken or strengthen through time while simultaneously being subjected to stresses or disturbances of varying severity, such as financial problems, alcoholism or infidelity. The marriage fails if a shock is sufficient to break the marriage bond.

In this article, we consider practical applications of a family of shock-degradation failure models that were initially described in [7]. This family is one in which shock streams are superimposed on a degrading underlying condition for the system and failure occurs when a shock causes the weakened system to fail. The family of models has already found several applications, which will be introduced later. These shock-degradation models have the attractive feature of defining the failure event and failure time as the first hitting time (FHT) of a threshold by the underlying stochastic process. Such FHT models are useful in practical applications because, first, they usually describe the failure mechanism in a realistic manner and, second, they naturally accommodate regression structures that subsequently can be analyzed and interpreted using threshold regression methods. The family encompasses a wide class of underlying degradation processes, as demonstrated in [7]. The shock stream itself is assumed to be generated by a Fréchet process that we describe momentarily.

2 Shock-Degradation Failure Models

We now describe the two principal components of this family of shock-degradation failure models.

2.1 The Shock Process

The kinds of shocks that impact a system require careful definition so that the potential user of the model will know whether it is suitable for a particular application or may require some extension or modification (of which there are many). As illustrated by the examples presented in the introduction, a shock refers to any sudden and substantial force applied to the system. The shock may be a sharp physical force, a sudden and significant economic disturbance, a physiological reaction to trauma or assault, or an instance of major emotional distress, depending on the context. We consider only shocks that create a momentary negative excursion or displacement in the condition of the system, with the system returning to its previous state or condition after the shock is absorbed. The shocks are therefore not cumulative in their impact. For example, if a fall doesn’t produce a bone fracture then there is no lasting damage. The shock is also concentrated at a ‘moment in time’. This moment may be a few minutes, hours or days depending on the time scale of the application. For example, a COPD exacerbation may work itself out over a few days or weeks but this interval is just a ‘moment’ when considered over a life span of years.

To define a Fréchet shock process, we start with any partition of the half-open time interval (0, s] into n intervals \((0,t_{1}],\ldots,(t_{n-1},t_{n}]\), where \(0 = t_{0} <t_{1} <\cdots <t_{n} = s\). The largest interval in the time partition is denoted by \(\Delta =\max _{j}(t_{j} - t_{j-1})\). Let \(V_{j}\) be a positive value associated with interval \((t_{j-1},t_{j}]\). Assume that \(V_{1},\ldots,V_{n}\) are mutually independent draws, where \(V_{j}\) has the following cumulative distribution function (c.d.f.):

$$\displaystyle{ P(V_{j} \leq v) = G(v)^{t_{j}-t_{j-1}}. }$$(11.1)

Here G(v) is the c.d.f. of a Fréchet distribution, which has the following mathematical form [3]:

$$\displaystyle{ G(v) =\exp \left [-\left ( \frac{\alpha } {v}\right )^{\beta }\right ]\quad \mbox{ for}\ v> 0,\ \alpha> 0,\ \beta> 0. }$$(11.2)

The Fréchet process {V(t), 0 < t ≤ s} associated with the generating distribution in (11.2) is the limiting sequence of \(V_{1},\ldots,V_{n}\) as the norm of the time partition Δ tends to 0 (and n increases accordingly). The process can be extended analytically to the whole positive real line by allowing s to increase without limit. At its essence, the process {V(t)} describes a stream of local maxima in the sense that the maximum of V(t) for all t in any interval (a, b] has the c.d.f. \(G(v)^{b-a}\). Thus, the process is defined by:

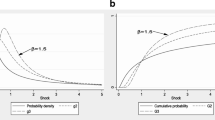

Lee and Whitmore [7] pointed out a number of properties of the Fréchet shock process. Importantly, they noted that scale parameter α is the exp(−1)th fractile or 37th percentile of the maximum shock encountered in one unit of time, irrespective of the value of shape parameter β. They also showed that larger values of β tighten the distribution of shock magnitudes about this fixed percentile.
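
The defining property of the shock process can be illustrated with a short Python sketch. It assumes the standard Fréchet form G(v) = exp[−(α/v)^β] and the interval-maximum c.d.f. G(v)^(b−a) described above; the function names `frechet_max_cdf` and `sample_interval_maxima` are illustrative only and do not come from [7].

```python
import math
import random


def frechet_max_cdf(v, alpha, beta, length=1.0):
    """P(maximum shock over an interval of the given length <= v) = G(v)**length."""
    if v <= 0:
        return 0.0
    return math.exp(-length * (alpha / v) ** beta)


def sample_interval_maxima(alpha, beta, s, n, rng):
    """Draw V_1..V_n for an equal partition of (0, s] by inverting G(v)**dt."""
    dt = s / n
    draws = []
    for _ in range(n):
        u = rng.random()
        while u == 0.0:          # keep u strictly inside (0, 1)
            u = rng.random()
        # Solve u = exp(-dt * (alpha / v)**beta) for v.
        draws.append(alpha * (-math.log(u) / dt) ** (-1.0 / beta))
    return draws


# alpha is the exp(-1) fractile of the unit-time maximum, whatever beta is.
p_at_alpha = frechet_max_cdf(2.0, alpha=2.0, beta=3.0, length=1.0)

# One simulated year split into 365 daily interval maxima (hypothetical values).
daily_maxima = sample_interval_maxima(1.0, 2.0, s=1.0, n=365, rng=random.Random(0))
yearly_maximum = max(daily_maxima)
```

Because the interval maxima are independent, the maximum of the daily draws has the same distribution as a single draw for the whole year, which is the consistency property that lets the partition be refined without changing the process.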

2.2 The Degradation Process

The shock-degradation model assumes that the system of interest has an initial strength \(y_{0}> 0\) and that this strength degrades over time. The system strength process is denoted by {Y(t), t ≥ 0} and the degradation process is denoted by {W(t), t ≥ 0}. The model assumes {W(t)} is a stochastic process with stationary independent increments and initial value W(0) = 0. It also assumes that the degradation process has a cumulant generating function (c.g.f.) defined on an open set \(\boldsymbol{\mathcal{Z}}\), denoted by κ(u) = ln E{exp[uW(1)]}. The system strength process at time t is \(Y (t) = y_{0}\exp [W(t)]\). This type of degradation process is one in which the system strength changes in increments that are proportional to the residual strength at any moment. As a result, the system strength Y(t) never reaches a point of zero strength but may approach it asymptotically. Therefore, in this model setting, the system cannot fail through degradation alone. The failure occurs when a shock exceeds the residual strength.

Lee and Whitmore [7] considered the following two families of stochastic processes that possess stationary independent increments as important illustrations of the class:

- Wiener diffusion process. A Wiener diffusion process {W(t), t ≥ 0}, with W(0) = 0, having mean parameter μ, variance parameter \(\sigma ^{2} \geq 0\) and c.g.f.:

$$\displaystyle{ \kappa (u) =\ln E\{\exp [uW(1)]\} = u(\mu +u\sigma ^{2}/2). }$$(11.4)

- Gamma process. A gamma process {X(t), t ≥ 0}, with X(0) = 0, having shape parameter ζ > 0 and scale parameter η > 0. Define W(t) as the negative of X(t), that is, W(t) = −X(t) in order to assure that the sample paths of {W(t)} are monotonically decreasing. The c.g.f. of W(t), as a negative-valued gamma process, has the form:

$$\displaystyle{ \kappa (u) =\ln E\{\exp [uW(1)]\} =\zeta \ln \left ( \frac{\eta } {\eta +u}\right )\quad \mbox{ for}\ \eta + u> 0. }$$(11.5)

Lee and Whitmore [7] also considered the following family of models in which degradation has a smooth deterministic trajectory:

- Deterministic exponential process. System strength follows a deterministic exponential time path of the form \(Y (t) = y_{0}\exp (\lambda t)\) where λ denotes the exponential rate parameter.

This family is simple but provides an adequate practical representation for some important applications.

2.3 The Shock-Degradation Survival Distribution

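Lee and Whitmore [7] showed that their shock-degradation model has the following survival distribution:

$$\displaystyle{ \overline{F}(s) = P(S> s) = E_{\boldsymbol{\mathcal{C}}}\left \{\exp [-cQ(s)]\right \}, }$$(11.6)

where S denotes the survival time,

$$\displaystyle{ c = \left (\frac{\alpha } {y_{0}}\right )^{\beta }\quad \mbox{ and}\quad Q(s) =\int _{ 0}^{s}\exp [-\beta W(t)]\,dt. }$$(11.7)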

The notation \(E_{\boldsymbol{\mathcal{C}}}\) denotes an expectation over the set of degradation sample paths \(\boldsymbol{\mathcal{C}} =\{ W(t): 0 \leq t \leq s,W(0) = 0\}\). The random quantity Q(s) is seen to be a stochastic integral of the strength process, raised to power −β, over time interval (0, s]. The authors went on to show how the survival function can be expanded in a series involving expected moments of Q(s) and derived exact formulas for these moments. Both a Taylor series expansion and an Euler product-limit series expansion were considered. The authors pointed out that the conditions for the Fubini-Tonelli Theorem, governing the interchange of integration and expectation operators for the infinite series implicit in (11.6), fail to hold. Thus, they proposed the use of the following finite expansions as approximations for the survival function \(\overline{F}(s)\):

- Taylor series kth-order expansion

$$\displaystyle{ \overline{F}_{k}(s) =\exp (-cm_{1})\left [\sum _{\ell=0}^{k}(-1)^{\ell}\frac{c^{\ell}} {\ell!} m_{\ell}^{{\ast}}\right ]. }$$(11.8)

- Euler product-limit kth-order expansion

$$\displaystyle{ \overline{F}_{k}(s) =\exp (-cm_{1})\left [\sum _{\ell=0}^{k}(-1)^{\ell} \frac{k!} {\ell!(k-\ell)!}\left ( \frac{c} {k}\right )^{\ell}m_{\ell}^{{\ast}}\right ]. }$$(11.9)

Here \(m_{1} = E_{\boldsymbol{\mathcal{C}}}[Q(s)]\) is the expected first moment of Q(s) and \(m_{\ell}^{{\ast}} = E_{\boldsymbol{\mathcal{C}}}[Q_{{\ast}}(s)^{\ell}],\ell= 0,1,2,\ldots,\) are the expected central moments of Q(s), with Q ∗(s) = Q(s) − m 1. By definition, m 0 ∗ = 1 and m 1 ∗ = 0.
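
As a rough illustration of how the two finite expansions are evaluated once the moments are in hand, the following Python sketch codes (11.8) and (11.9) directly. The moment values passed in are placeholders supplied by the caller; the exact moment formulas of [7] are not reproduced here, and the function names are hypothetical.

```python
import math


def taylor_survival(c, m1, central_moments, k):
    """Evaluate (11.8); central_moments[l] holds m_l^* for l = 0..k."""
    total = sum(
        (-1) ** l * c ** l / math.factorial(l) * central_moments[l]
        for l in range(k + 1)
    )
    return math.exp(-c * m1) * total


def euler_survival(c, m1, central_moments, k):
    """Evaluate (11.9); k must be at least 1 because of the (c / k) factor."""
    if k < 1:
        raise ValueError("k must be >= 1 for the Euler product-limit expansion")
    total = sum(
        (-1) ** l * math.comb(k, l) * (c / k) ** l * central_moments[l]
        for l in range(k + 1)
    )
    return math.exp(-c * m1) * total


# Hypothetical inputs: m_0^* = 1 and m_1^* = 0 by definition, m_2^* = 0.5 assumed.
moments = [1.0, 0.0, 0.5]
taylor_2 = taylor_survival(0.2, 3.0, moments, k=2)
euler_2 = euler_survival(0.2, 3.0, moments, k=2)
```

When all central moments beyond order zero vanish, as in the deterministic case, both expansions collapse to the leading factor exp(−cm₁).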

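Lee and Whitmore [7] also showed that the following log-survival function is a lower bound for the logarithm of the exact survival function (11.6):

$$\displaystyle{ \ln \overline{F}(s) \geq \ln \overline{F}_{L}(s) = -cm_{1} = -c\left [\frac{e^{\kappa s} - 1} {\kappa } \right ], }$$(11.10)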

where κ is short-hand for κ(−β), the c.g.f. evaluated at −β. This lower bound is quite tight for degradation processes with modest variability, especially for survival time below the median. Note that this lower-bound survival function is the leading expression exp(−cm 1) found in approximations (11.8) and (11.9).
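
The source states this bound without showing the route. One way to see it, offered here as a sketch and assuming that Q(s) is the integral of exp[−βW(t)] over (0, s], is to apply Jensen's inequality to the convex function exp(−cq) and then use the identity E{exp[uW(t)]} = exp[tκ(u)], which holds for processes with stationary independent increments:

$$\displaystyle{ \overline{F}(s) = E_{\boldsymbol{\mathcal{C}}}\left \{\exp [-cQ(s)]\right \} \geq \exp \left \{-cE_{\boldsymbol{\mathcal{C}}}[Q(s)]\right \} =\exp (-cm_{1}), }$$

$$\displaystyle{ m_{1} =\int _{ 0}^{s}E\left \{\exp [-\beta W(t)]\right \}\,dt =\int _{ 0}^{s}\exp (\kappa t)\,dt = \frac{e^{\kappa s} - 1} {\kappa } \quad \mbox{ for}\ \kappa \neq 0. }$$

In the limiting case κ = 0 the integral reduces to m₁ = s, so the lower bound becomes the exponential survival function exp(−cs).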

As pointed out by Lee and Whitmore [7], both the exact survival function (11.6) and its lower bound in (11.10) are over-parameterized when they are estimated from censored survival data alone. Such data cannot be informative about every feature of the degradation and shock processes. Readings on the underlying degradation process {W(t)} or shock process {V (t)} individually are needed to estimate and validate the full model. This insight is completely reasonable because survival outcomes alone have limited information content when it comes to separating the influences of degradation and shocks in the failure mechanism.

A number of simple extensions for the shock-degradation model make it quite adaptable to practical application. The model can be modified to include a power transformed time scale, an analytical time scale or an exogenous cure rate, as may be required by a particular application. For example, a geometric Wiener degradation model can have an endogenous cure rate if the underlying process is one of growing strength rather than degrading strength (as in the social context of marriage dissolution, for example, or the survival of a business organization). An exogenous cure rate, on the other hand, recognizes that a subgroup of individuals under study may not be susceptible to failure. Our case applications demonstrate a power transformed time scale as well as cure rates of both the exogenous and endogenous variety.

Next, we present a few properties of the lower bound for the survival function given in (11.10) that are useful in understanding the behavior of the shock-degradation model where the degradation process is not highly variable.

- Hazard function. The hazard function corresponding to the lower-bound survival function (11.10) is:

$$\displaystyle{ h_{L}(s) = c\exp (\kappa s). }$$(11.11)

Thus, the hazard is increasing, constant or decreasing exponentially according to whether κ is positive, 0 or negative, respectively. As \(h_{L}(0) = c = (\alpha /y_{0})^{\beta }\), we see quite reasonably that the initial risk of failure depends on \(\alpha /y_{0}\), the ratio of the 37th percentile shock to the initial strength of the system, modulated by the shock shape parameter β.

- Probability density function. The logarithm of the probability density function (p.d.f.) corresponding to the lower-bound survival function (11.10) is:

$$\displaystyle{ \ln f_{L}(s) =\ln h_{L}(s) +\ln \overline{F}_{L}(s) =\ln (c) +\kappa s - c\left [\frac{e^{\kappa s} - 1} {\kappa } \right ]. }$$(11.12)

The density function has a positive density of c at the origin and steadily declines as s increases if κ ≤ c. If κ > c, the density function has a single mode at ln(κ/c)/κ.

- Cure rate. The lower-bound survival function in (11.10) has a positive probability of never failing if parameter κ < 0; specifically, ln P(S = ∞) = c/κ.
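
The three properties above translate into a few lines of Python. This is a minimal sketch under the lower-bound form ln F̄_L(s) = −c(e^{κs} − 1)/κ implied by (11.12); all function names are illustrative, and `kappa_wiener` assumes the Wiener c.g.f. (11.4) evaluated at u = −β.

```python
import math


def kappa_wiener(beta, mu, sigma):
    """Wiener c.g.f. (11.4) evaluated at u = -beta."""
    return -beta * mu + 0.5 * beta ** 2 * sigma ** 2


def log_survival_lb(s, c, kappa):
    """Lower-bound log-survival: -c * (exp(kappa * s) - 1) / kappa."""
    if kappa == 0.0:
        return -c * s  # limiting case as kappa tends to 0
    return -c * math.expm1(kappa * s) / kappa


def hazard_lb(s, c, kappa):
    """Hazard (11.11) of the lower-bound survival function."""
    return c * math.exp(kappa * s)


def density_mode(c, kappa):
    """Mode of the lower-bound density; the density peaks at 0 when kappa <= c."""
    return math.log(kappa / c) / kappa if kappa > c else 0.0


def cure_probability(c, kappa):
    """P(S = infinity) = exp(c / kappa) when kappa < 0, and 0 otherwise."""
    return math.exp(c / kappa) if kappa < 0 else 0.0
```

Using `math.expm1` keeps the survival calculation accurate when κs is close to zero, which is the regime of the small κ values that arise in the case applications.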

3 Data Structures

Practical applications of shock-degradation models present a wide variety of data structures. We now elaborate on some of these data structures and their associated measurement and observation challenges.

3.1 Direct Readings on the Degradation Process

We first consider the topic of obtaining direct readings on the degradation process. As pointed out by Lee and Whitmore [7], readings on their shock-degradation process are unlikely to be disturbed significantly by the shock process when the readings are gathered over brief moments of time. Put differently, large shocks occur so infrequently in the time continuum that they are rarely encountered when making one or a few observations on the underlying degradation process in a limited observation period. As a practical illustration, a patient suffering from COPD may only have an acute exacerbation a few times each year so the patient’s lung condition on any random day of the year is likely to be close to its chronic stable level. Moreover, in actual clinical practice, a patient visit for spirometric testing is usually only scheduled when the patient’s condition is known to be stable.

To see the point mathematically, equation (11.1) gives the following probability for the maximum shock V observed in a time interval of length Δt > 0:

$$\displaystyle{ P(V \leq v) = G(v)^{\Delta t} =\exp \left [-\Delta t\left ( \frac{\alpha } {v}\right )^{\beta }\right ]. }$$(11.13)

For fixed values of α, β and v > 0, this formula gives a probability that approaches 1 as Δt approaches zero. Thus, in this limiting sense, the probability of a material shock is vanishingly small if the process is observed at any arbitrary moment of time and, moreover, the probability of any material shock remains vanishingly small if shocks are observed for any finite number of such moments. Relaxing this theoretical statement to accept that Δt may not be vanishingly small, the mathematics implies that no shock of practical significance will be present in any finite number of observation intervals if each interval is sufficiently short. To illustrate the point numerically, consider a shock level v equal to α, the 37th percentile, and set β to 1. If the unit time is, say, one calendar year and Δt = 1/365 (representing one day) then P(V ≤ v) = 0.997. Thus, on only 3 occasions in 1000 will the maximum shock on that day exceed the 37th percentile maximum shock for a year. The practical lesson of this result is that significant shocks in a shock-degradation process occur sparingly and are rarely discovered at random moments of observation.
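
The numerical illustration can be checked in a few lines of Python. The sketch assumes the Fréchet form P(V ≤ v) = exp[−Δt(α/v)^β]; the function name is illustrative.

```python
import math


def prob_max_shock_below(v, alpha, beta, dt):
    """P(maximum shock in an interval of length dt <= v)."""
    return math.exp(-dt * (alpha / v) ** beta)


# Shock level equal to alpha, beta = 1, one day out of a 365-day year.
p_day = prob_max_shock_below(v=1.0, alpha=1.0, beta=1.0, dt=1.0 / 365.0)
print(round(p_day, 3))  # 0.997
```

With v = α the result does not depend on β, so the same daily probability applies for any shape parameter at this shock level.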

3.2 Observations on Failure Times, System Strength and Shocks

The preceding section has focussed on the survival function, which is an essential mathematical element for analyzing censored survival data for systems. Yet, actual applications frequently offer opportunities to observe and measure the underlying strength or degradation of the system at time points prior to failure, at failure, or at withdrawal from service or end of study. Observations on the strength process refer to measurements of the intrinsic strength of the system at moments of relative stability which are little influenced by shocks, as we noted earlier. Thus, in general, applications may involve censored survival data that are complemented by periodic readings on the underlying condition of the system.

To expand on the types of observation on degradation that may be available, we mention that degradation readings might be feasible for all systems under study. Alternatively, they may be available only for survivors because failure may destroy the system and make any reading on residual strength of the failing system impossible. In contrast, readings may be available only for failing items because measurement of residual strength may require a destructive disassembly of a system, which will not be feasible for systems still in service.

Our model assumes that strength and, therefore, degradation follows a one-dimensional process with failure being triggered when system strength is forced to zero by a shock. In reality, however, strength is usually a complex multidimensional process. Moreover, readings on the underlying strength may be unavailable. Rather, investigators may only have access to readings on a marker or surrogate process so that only indirect measurements of degradation are at hand. The marker process might be highly correlated with the actual degradation process but a high correlation is not assured. For example, electromigration of metallic material in the presence of an electrical field is a spatially complex degradation process that can cause failure of electronic components such as integrated circuits. This degradation process might be monitored by a single performance measure (for example, transported mass) that only captures the phenomenon in a crude manner. An alternative scenario is one in which we estimate a surrogate strength process that is a composite index (a linear regression function, say) constructed from readings on one or more observable marker processes that can be monitored through time. The practical realities of the context determine what can be done to produce an adequate model for the degradation process in the actual application.

Opportunities occasionally arise to observe and measure shocks in a variety of ways. For example, in COPD, various markers describe the magnitude of an acute exacerbation including severity of symptoms, recovery time, and the like. As a practical matter, however, shocks are often not observed if they are minor. In this situation, it is a left-truncated shock distribution that is observed and the truncation point may need to be estimated from the data. Again, considering COPD exacerbations as an illustration, these may not be measured unless they are above a threshold of severity; for instance, severe enough to require prescription medication or hospitalization.

The most general data situation encountered in practical application is one in which survival times and readings on degradation are jointly observed for individual systems. The data record for each individual system is a longitudinal one that consists of a sequence of readings (none, one, two, or more) on the strength or degradation process, gathered at irregularly spaced time points, together with a survival outcome (either a censoring time or a failure time). The readings on the strength or degradation process may be observations on the actual process or on one or more marker processes. A regression structure can be introduced if the setting provides data on relevant covariates for system parameters. In this situation, maximum likelihood estimation can be used. The evolving state or condition of a system often possesses the Markov property, which allows a tidy handling of longitudinal data under the threshold regression approach. Published methods for threshold regression with longitudinal data found in [5] have immediate application in this situation, as one of our later case applications will demonstrate. Applications that involve joint observation of survival and degradation require mathematical extensions of previous results [6, 8]. In the next section, we summarize pertinent results from [7].

4 Joint Observation of Survival and Degradation

We now consider the data elements found in a longitudinal record consisting of periodic readings on system degradation, ending with either a failure event or a survival event (that is, a right-censored failure event). Our line of development follows the method of Markov decomposition proposed in [5]. We limit our study now to the lower-bound survival function in (11.10). The likelihood function for a longitudinal record will be a product of conditionally independent events of two types. The first type is a failure event. In this event, the system has an initial strength, say y 0, and then fails at time s later. The likelihood of this event is given by a p.d.f. like that in (11.12). The second type is a survival event; more precisely, an event in which the system has an initial strength, say y 0, survives beyond a censoring time s (that is, S > s) and has a recorded strength at time s of, say, y > 0. Note that y = Y (s) = y 0exp[W(s)] = y 0exp(w), where w denotes the amount of degradation corresponding to strength y. Thus, this second event involves the following set of strength sample paths: \(\boldsymbol{\mathcal{C}}^{{\ast}} =\{ Y (t): 0 \leq t \leq s,Y (0) = y_{0},Y (s) = y = y_{0}\exp (w)\}\); in other words, the sample paths are pinned down at two end points but are otherwise free to vary. The likelihood of this second type of event is \(P\left \{S> s,W(s) \in [w,w + dw]\right \}\). This probability can be factored into the product of a conditional survival probability and the p.d.f. for degradation level w at time s, as follows:

$$\displaystyle{ P\left \{S> s,W(s) \in [w,w + dw]\right \} = \overline{F}(s\vert w)\,g(w)\,dw. }$$(11.14)

The p.d.f. g(w) is known from the specified form of the degradation process. The conditional survival function \(\overline{F}(s\vert w)\) is less straightforward and has yet to be derived in a general form for the shock-degradation model. Lee and Whitmore [7] presented a more limited but very useful mathematical result which we now employ. For a degradation process with modest variability, they noted that a lower bound on the survival function is quite tight. For a pinned degradation process, they showed:

$$\displaystyle{ \ln \overline{F}(s\vert w) \geq \ln \overline{F}_{L}(s\vert w) = -cE_{\boldsymbol{\mathcal{C}}^{{\ast}}}\left [Q(s\vert w)\right ], }$$(11.15)

where c and Q(s) are defined as in (11.7). Expectation \(E_{\boldsymbol{\mathcal{C}}^{{\ast}}}\left [Q(s\vert w)\right ]\) does not have a general closed form for the family of degradation processes with stationary independent increments. However, Lee and Whitmore [7] derived a closed form for \(\ln \overline{F}_{L}(s\vert w)\) in the important case where the degradation process is a Wiener process. The derivation builds on properties of a Brownian bridge process. Their formula for the lower bound of the survival function for a pinned Wiener process is as follows:

where

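and Φ(⋅) denotes the standard normal c.d.f. The p.d.f. of the degradation level W(s) at time s in the Wiener case is given by

$$\displaystyle{ g(w) = \frac{1} {\sqrt{2\pi \sigma ^{2 } s}}\exp \left [-\frac{(w -\mu s)^{2}} {2\sigma ^{2}s} \right ]. }$$(11.17)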

Thus, (11.16) and (11.17) are the two components required for evaluating the joint probability in (11.14) for lower-bound survival of a system beyond a censoring time s (that is, S > s) with degradation level w at time s.
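
For readers who want to evaluate the pinned lower bound numerically rather than through the closed form of [7], the following Python sketch integrates the Brownian bridge moment directly. It assumes Q(s|w) is the integral of exp[−βW(t)] over (0, s] with W(0) = 0 and W(s) = w, and it uses the standard facts that a pinned Wiener process has conditional mean wt/s and conditional variance σ²t(s − t)/s at time t, neither of which depends on the drift μ. The function names and the Simpson-rule integration are choices made for this illustration, not the authors' method.

```python
import math


def bridge_moment(t, s, w, beta, sigma):
    """E{exp[-beta * W(t)] | W(0) = 0, W(s) = w} for a Wiener process."""
    mean = w * t / s
    var = sigma ** 2 * t * (s - t) / s
    return math.exp(-beta * mean + 0.5 * beta ** 2 * var)


def expected_q_pinned(s, w, beta, sigma, n=2000):
    """Composite Simpson approximation to E[Q(s | w)]."""
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals
    h = s / n
    total = bridge_moment(0.0, s, w, beta, sigma) + bridge_moment(s, s, w, beta, sigma)
    for j in range(1, n):
        weight = 4.0 if j % 2 else 2.0
        total += weight * bridge_moment(j * h, s, w, beta, sigma)
    return total * h / 3.0


def log_survival_lb_pinned(s, w, c, beta, sigma):
    """Lower-bound conditional log-survival: -c * E[Q(s | w)]."""
    return -c * expected_q_pinned(s, w, beta, sigma)
```

A quick sanity check is the zero-variance case, where the pinned path is the straight line wt/s and the integral has the elementary value s(1 − e^{−βw})/(βw).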

5 Case Applications

We next present three case applications of shock-degradation models. The first application concerns osteoporotic hip fractures and has been published elsewhere. We summarize it briefly to demonstrate the wide range of potential applications. The second application looks at Norwegian divorces. It has not been published previously so we present more details on its development and findings. The third application considers survival times for cystic fibrosis patients. A deterministic version of the shock-degradation model was previously published for this case. We present an extension of the published model that incorporates explicitly the stochastic time course of patient lung function.

5.1 Osteoporotic Hip Fractures

Osteoporotic hip fractures in elderly women were studied by He et al. [4] using this shock-degradation model in conjunction with threshold regression. They studied times to first and second fractures. The underlying strength process in their model represented skeletal health. The shock process represented external traumas, such as falls and stumbles, which taken together with chronic osteoporosis, might trigger a fracture event. Threshold regression was used to associate time to fracture with baseline covariates of study participants.

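The system strength model used by He et al. [4] is the deterministic exponential process that we described in Sect. 11.2.2, for which the log-survival function is:

$$\displaystyle{ \ln \overline{F}(s) = -\left (\frac{\alpha } {y_{0}}\right )^{\beta }\left [\frac{1 -\exp (-\beta \lambda s)} {\beta \lambda } \right ]. }$$(11.18)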

The authors equated parameters ln(y 0) and λ to linear combinations of covariates while fixing the remaining parameters. The data set consists of censored times to first and second fractures. The models were fitted by maximum likelihood methods using (11.18). Technical details and discussion of the study findings can be found in the original publication.

5.2 Norwegian Divorces

Extensive data on the durations of marriages contracted in Norway are found in [9]. The data for the 1960, 1970 and 1980 marriage cohorts were presented and carefully analyzed by Aalen et al. [1]. They used the data to demonstrate various statistical concepts, models and techniques related to time-to-event data analysis. We use the same cohort data here to demonstrate the shock-degradation model. In this example, only censored survival data for the marriages are available. The data set has no longitudinal measurements on the strength of the marital unions themselves. Recall our explanation in the introduction that marriage might be viewed as a shock-degradation process in which some marriages actually tend to strengthen through time. In our model, a marriage is subjected to a stream of minor and major shocks and, if the marriage fails, the failure will occur at the moment of a shock that exceeds the residual strength of the marital bond. The data appear in Table 5.2 of [1]. The marriage duration numbers represent completed years of marriage. The midpoints of the yearly intervals are taken as the durations for divorces that occurred in the year. The marriage cohorts ignore cases lost to follow-up because of death or emigration. Marriages lasting more than 35, 25 and 15 years for the 1960, 1970 and 1980 cohorts are censored. The respective sample sizes are 23,651, 29,370 and 22,230 for these three cohorts.

We use the maximum likelihood method to estimate our model. We assume at the outset that the degradation process is a Wiener diffusion process for which \(\kappa =\kappa (-\beta ) = -\beta \mu +\beta ^{2}\sigma ^{2}/2\). We use the lower-bound log-survival function (11.10) and its corresponding log-hazard function (11.11) in the sample likelihood calculation. We expected this bound to be reasonably tight to the actual survival function in this application and our sensitivity analysis discussed shortly justifies the approach. We incorporate regression functions for model parameters. Parameters are made to depend on linear combinations of covariates with logarithmic link functions being used for positive-valued parameters. As only censored survival data are available, some parameters of the process model cannot be estimated. Referring to the survival function in (11.10) for the Wiener case, we see immediately that μ and σ for the process cannot be estimated separately because they enter only through κ. Hence, we can only estimate the parameter \(\xi =\mu -\beta \sigma ^{2}/2\), for which κ = −βξ. Further, the survival function depends on α and \(y_{0}\) only through the ratio \(\alpha /y_{0}\). In terms of logarithms, the ratio becomes \(\ln (\alpha ) -\ln (y_{0})\). Thus, these two logarithmic regression functions cannot be distinguished mathematically and are not estimable by maximum likelihood unless the regression functions depend on different sets of covariates. This mathematical result points out the practical reality that censored survival data cannot separate the effect of a covariate on the degradation process from its effect on the scale parameter α of the shock process when the covariates act multiplicatively, as implied by the use of log-linear regression functions. We therefore do not estimate α but rather set its value to 1. As α is the 37th percentile of the shock distribution irrespective of β, setting α to 1 makes this percentile the unit measure for the latent degradation process. Even with α set to 1, parameters β, \(y_{0}\) and ξ cannot be independently estimated from censored survival data if all three have intercept terms. We therefore fix one more parameter and choose to set ξ = 0.001. A positive value is chosen in anticipation that some marriages are not prone to fail. The magnitude 0.001 is chosen to produce a convenient scaling of the parameter values. In the end, therefore, only parameters β and \(y_{0}\) will be estimated for the principal model. In addition, however, we also wish to extend the model using a power transformation of the time scale, that is, a transformation of form \(s = t^{\gamma }\) with γ > 0. We estimate power exponent γ using a logarithmic link function. The power transformation implies that marriage breakdown occurs on a time scale that is accelerating or decelerating relative to calendar time depending on the value of exponent γ.

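Given the preceding specifications for the model, the sample log-likelihood function in this case application has the form:

$$\displaystyle{ \ln L(\boldsymbol{\theta }) =\sum _{i\in N}\ln \overline{F}_{L}(s_{i}) +\sum _{i\in N_{1}}\left [\ln h_{L}(s_{i}) +\ln (\gamma ) + (\gamma -1)\ln (t_{i})\right ]. }$$(11.19)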

Here \(\boldsymbol{\theta }\) denotes the vector of regression coefficients. Index set N includes all couples in the sample data set and index set \(N_{1}\) is the subset of couples for whom divorce durations are observed. The observed or censored marriage duration for couple i is denoted by \(t_{i}\) and \(s_{i} = t_{i}^{\gamma }\) is the power-transformed duration. The terms in the first sum of (11.19) are log-survival probabilities. Those in the second sum are log-hazard values, taken together with the values \(\ln (\gamma ) + (\gamma -1)\ln (t_{i})\) which represent the log-Jacobian terms for the power transformation. The log-survival probabilities and log-hazard values are based on formulas for the lower-bound found in (11.10) and (11.11), respectively.
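
The structure of this log-likelihood can be sketched in Python as follows. The sketch makes several simplifying assumptions that should be read as such: it omits the regression structure (β, y₀ and γ are passed as plain numbers rather than log-linear functions of covariates), it fixes α = 1 and ξ = 0.001 as described above so that κ = −βξ, and the function and argument names are hypothetical.

```python
import math


def log_likelihood(durations, divorced, beta, y0, gamma, xi=0.001, alpha=1.0):
    """Lower-bound log-likelihood for censored durations on a power time scale."""
    c = (alpha / y0) ** beta
    kappa = -beta * xi  # Wiener case: kappa(-beta) = -beta * xi
    total = 0.0
    for t, failed in zip(durations, divorced):
        s = t ** gamma  # power-transformed duration
        # Log-survival term, contributed by every couple.
        total += -c * math.expm1(kappa * s) / kappa
        if failed:
            # Log-hazard plus the log-Jacobian of the time transformation.
            total += math.log(c) + kappa * s
            total += math.log(gamma) + (gamma - 1.0) * math.log(t)
    return total


# Hypothetical toy data: one divorce at 7.5 years, one marriage censored at 35.
toy_value = log_likelihood([7.5, 35.0], [True, False], beta=4.9, y0=3.9, gamma=1.7)
```

In practice this function would be handed to a numerical optimizer, with the parameters expressed on the log scale so that positivity is preserved.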

Table 11.1 presents the threshold regression results for the Norwegian marriage duration data. The parameters β and γ are made to depend on indicator variables for the marriage cohort through log-linear regression functions. The assumption is that socio-economic trends in Norway may have changed marriage stability over these three decades. The estimate for the initial marital strength \(y_0\) is 3.871. This value is in units of the 37th percentile annual maximum shock. In other words, the initial marital strength is just under four modest shocks away from divorce. Estimates of β, the shape parameter for shocks, vary slightly (but significantly) across the cohorts, being 4.967 in 1960, 4.655 in 1970 and 4.744 in 1980. The magnitudes of this parameter suggest that modest shocks are numerous but extreme shocks that threaten a marriage are rare. For example, given the estimate for β in 1960 (4.967), the initial marital strength of 3.871 represents the 99.9th percentile of the maximum annual shock.

Estimates of the power exponent γ for the time scale transformation also vary moderately (but significantly) across the cohorts, being 1.693 in 1960, 1.746 in 1970 and 2.106 in 1980. The estimates indicate an accelerating analytical time. The implication is that shock patterns will occur on a more compressed time scale as the marriage lengthens, for those marriages that eventually end in divorce. Interestingly, the power exponent increases with the decade, suggesting that the shock patterns are accelerating across cohorts (so extreme shocks of given size occur more frequently in calendar time). Finally, the proportions of marriages that would eventually end in divorce if the time horizon were extended indefinitely (and spousal deaths are ignored) are 0.215 for 1960, 0.326 for 1970 and 0.290 for 1980.

We examined the asymptotic correlation coefficients of the parameter estimates for signs of multicollinearity. The output shows one large correlation coefficient, namely, −0.998 for the intercepts of \(\ln(\beta)\) and \(\ln(y_0)\). This large value indicates that the errors in these two estimates are almost perfectly offsetting; in other words, the pattern of shocks and initial marital strength are mathematically difficult to distinguish when only censored survival data are available.
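
The percentile and long-run divorce proportions quoted above can be checked from the reported estimates with a few lines of Python. This is a sketch under stated assumptions: the Fréchet distribution function is taken in its standard form with α = 1, and the eventual failure probability is taken as the limit of the assumed lower-bound survival function, \(1 - \exp\{-(\alpha/y_0)^{\beta}/(\beta\xi)\}\) with ξ = 0.001. Neither formula is reproduced in this section, and the function names are hypothetical.

```python
import math


def frechet_cdf(x, alpha, beta):
    """Standard Frechet distribution function exp{-(x/alpha)^(-beta)}."""
    return math.exp(-((x / alpha) ** (-beta)))


def eventual_failure_prob(beta, y0, xi=0.001, alpha=1.0):
    """Limit of 1 - F_L(s) as s grows, valid when kappa = -beta*xi is negative (assumed form)."""
    return 1.0 - math.exp(-((alpha / y0) ** beta) / (beta * xi))


y0_hat = 3.871
beta_hat = {1960: 4.967, 1970: 4.655, 1980: 4.744}

# Percentile of the maximum annual shock that equals the initial marital strength (1960 cohort)
pct_1960 = frechet_cdf(y0_hat, 1.0, beta_hat[1960])

# Long-run proportion of marriages ending in divorce, by cohort
proportions = {cohort: eventual_failure_prob(b, y0_hat) for cohort, b in beta_hat.items()}

print(round(pct_1960, 4), {c: round(p, 3) for c, p in proportions.items()})
```

Under these assumptions the computed values should fall close to the 99.9th percentile and to the cohort proportions reported in the text.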

Figure 11.1 compares the Kaplan-Meier plots and the fitted shock-degradation survival functions for marriage duration in the Norwegian marriage cohorts. The fits are quite good, considering that only covariates for the marriage cohort are taken into account. The slight lack of fit that does appear for the shock-degradation model may be produced in part by administrative artifacts that cause the timing of a divorce decree to deviate from our theoretical survival model. Under Norwegian law, a divorce can be granted under several conditions. Spouses can divorce after legal separation for one year or without legal separation if they have lived apart for two years. Divorce can also be granted in cases of abuse or if spouses are closely related. These laws have the effect of lengthening the recorded marriage duration beyond the first hitting time for the threshold. Likewise, administrative and judicial delays may further delay divorce decrees and, hence, lengthen duration. We do not try to model these administrative influences.

We conducted a sensitivity analysis on the model to see if the variance parameter \(\sigma^{2}\) of the Wiener process might differ from zero and could be estimated. As the model is already parameter rich for a case application that uses only censored survival data, we take the fit of the lower-bound survival model as a starting point. We then fix the regression coefficients for the β parameter at their lower-bound fitted values. Next, we set aside the fixed value of 0.001 for ξ and allow μ and σ to vary in the model (recall that \(\xi = \mu - \beta\sigma^{2}/2\)). A 4th-order Euler product-limit approximation for the true survival function is used. Starting with μ = 0.001 and σ = 0, the sample log-likelihood begins to decline slowly as σ increases away from zero, which indicates that the lower-bound fitted model cannot be improved. Specifically, the sample log-likelihood for the fitted lower-bound model in Table 11.1 is −94,783.6. This log-likelihood declines to −94,787.2 as σ increases from 0 to 0.009. The estimate of μ simultaneously increases from 0.001 to 0.00117. Estimates of the other parameters, \(\ln(y_0)\) and the regression coefficients for \(\ln(\gamma)\), change little as μ and σ change. As anticipated by the theoretical analysis in Lee and Whitmore [7], the numerical analysis reaches a breakdown point as the variability of the degradation process increases. In this case, the breakdown occurs just beyond σ = 0.009, which is a modest level of variability in this case setting.
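
As an illustrative check that is not part of the reported analysis, the composite parameter ξ can be evaluated at the end point of this sensitivity path. The calculation below uses the 1960 estimate of β (4.967) as a representative value; that choice is an assumption made only for the arithmetic.

```latex
\[
\xi = \mu - \frac{\beta\sigma^{2}}{2}
    \approx 0.00117 - \frac{4.967 \times (0.009)^{2}}{2}
    \approx 0.00117 - 0.00020
    \approx 0.00097 .
\]
```

The result stays close to the fixed value of 0.001, which suggests that the joint movement of μ and σ along the path leaves ξ nearly unchanged.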

5.3 Survival Times for Cystic Fibrosis Patients

Aaron et al. [2] used the shock-degradation model, together with threshold regression, to assess one-year risk of death for cystic fibrosis (CF) patients. Their data were drawn from the Canadian registry of cystic fibrosis patients. They used the version of the model having a deterministic exponential degradation trajectory. The authors developed a CF health index composed of risk factors for chronic CF health, including clinical measurements of lung function. This CF health index was the strength process in their model. The shock process represented acute exacerbations, both small and large, to which CF patients are exposed. Death is triggered when an exacerbation overwhelms the residual health of a CF patient. The authors' modeling focussed on short-term risk of death and produced a risk scoring formula that could be used for clinical decision making.

We extend the model in Aaron et al. [2] and consider the estimation of a stochastic degradation process for cystic fibrosis. For this task, we have created a synthetic data set that imitates data from a CF registry sufficiently well to demonstrate the technicalities of our modeling approach. Briefly, our synthetic data set has 2939 cystic fibrosis patients who have lung function measured during regular clinic visits that are usually about one year apart. Patients are assumed to be stable during these scheduled visits, that is, they are not experiencing an acute exacerbation at the time of the visit. Our synthetic data set has 40,846 scheduled clinic visits for all patients combined and includes 532 patient deaths. As shown by Aaron et al. [2], the health status of a cystic fibrosis patient is affected by many risk factors but the most important by far is the lung measurement forced expiratory volume in one second (FEV1), expressed as a fraction or percentage of the same measurement for a healthy person of the same height, age and sex. We refer to the fractional value as FEV1pred, which stands for FEV1 predicted. We take the patient’s FEV1pred as our strength measure and assume that its logarithm follows a Wiener diffusion process. Again, following Aaron et al. [2], we note that each patient has a longitudinal record of regular lung function measurements. We use the Markov decomposition approach to decompose each longitudinal record into a series of individual records as described in [5]. At each scheduled clinic visit, the patient either survives until the next scheduled visit or dies before the next visit. We therefore can estimate our Wiener shock-degradation model using maximum likelihood methods incorporating both censored survival times and degradation readings. We use the lower-bound results found in (11.16) and (11.17) to implement the maximum likelihood estimation, imitating the procedure in [2]. 
The parameters to be estimated by threshold regression methods for this shock-degradation model include α and β for the shock process and μ and σ for the Wiener degradation process. Note that \(y_0\) and \(w\) are known because the strength process \(Y(t)\) is equated with the patient's evolving FEV1pred levels.
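
The decomposition of a longitudinal record into visit-to-visit records can be sketched in Python as follows. This is only an illustration of the idea, not the procedure of [5]: the record layout, the names `IntervalRecord` and `markov_decompose`, and the rule that a final visit with no later visit and no recorded death contributes no record are all assumptions.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class IntervalRecord:
    patient_id: str
    y0: float            # FEV1pred at the visit that opens the interval
    s: float             # years from that visit to the next visit or to death
    w: Optional[float]   # log-FEV1pred at the next visit; None if the patient died first
    died: bool


def markov_decompose(
    patient_id: str,
    visit_times: Sequence[float],
    fev1pred: Sequence[float],
    death_time: Optional[float] = None,
) -> List[IntervalRecord]:
    """Split one patient's longitudinal record into one record per clinic visit."""
    records: List[IntervalRecord] = []
    last = len(visit_times) - 1
    for j, (t, y) in enumerate(zip(visit_times, fev1pred)):
        if j < last:
            # Patient survives to the next scheduled visit and has a new reading there
            w_next = math.log(fev1pred[j + 1])
            records.append(IntervalRecord(patient_id, y, visit_times[j + 1] - t, w_next, False))
        elif death_time is not None:
            # Patient dies after the final visit and before any further visit
            records.append(IntervalRecord(patient_id, y, death_time - t, None, True))
        # Assumption: a final visit with no follow-up information yields no record
    return records
```

Records with `died` equal to `False` would correspond to the survival-plus-reading contributions to the likelihood, and records with `died` equal to `True` to the death contributions.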

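The log-likelihood function to be maximized in this case application is the following:

\[
\ln L(\boldsymbol{\theta}) = \sum_{i \in N_{0}} \left[ \ln \overline{F}_{L}(s_{i} \vert w_{i}) + \ln g(w_{i}) \right] + \sum_{i \in N_{1}} \ln f_{L}(s_{i})
\]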

Here \(\boldsymbol{\theta }= (\alpha,\beta,\mu,\sigma )\) denotes the vector of parameters to be estimated. Index set \(N_0\) includes all clinic visits \(i\) in which the patient survives until the next scheduled clinic visit at time \(s_i\) and has a log-FEV1pred reading of \(w_i\) on that next visit. Index set \(N_1\) is the set of clinic visits \(i\) in which the patient dies at time \(s_i\) after the visit (and before the next scheduled visit). Quantities \(\ln \overline{F}_{L}(s_{i}\vert w_{i})\) and \(\ln g(w_{i})\) are calculated from formulas (11.16) and (11.17), respectively. Quantity \(\ln f_{L}(s_{i})\) is calculated from formula (11.12).

Table 11.2 presents the parameter estimates. The sample size is n = 40,846 clinic visits. The table shows logarithmic estimates for α, β and σ. The maximum likelihood estimates for α and β are 0.130 and 3.72, respectively. As α represents the 37th percentile of maximum annual shocks for a Fréchet process, our estimate for α suggests that cystic fibrosis patients have acute exacerbations that are more severe than a decline of 13 percentage points in FEV1pred in about 2 of every 3 years on average. Given the estimates for both parameters, one can calculate from (11.2) that the 90th percentile of the most severe annual exacerbation of a cystic fibrosis patient is about 24 percentage points of FEV1pred. The estimate of μ for the Wiener degradation process suggests that FEV1pred declines about 4.48% annually in cystic fibrosis patients. The estimate for σ is 0.236. This value indicates that log-FEV1pred varies annually by this amount, which is equivalent to average annual variation of about 27% in FEV1pred. Finally, our estimation routine also generates an estimate of the asymptotic correlation matrix of the parameter estimates (not shown). The matrix is helpful in checking for multicollinearity. The only correlation coefficient that is moderately large is that for the estimates of \(\ln(\alpha)\) and \(\ln(\beta)\), which is 0.65. This value shows that estimation errors for these two parameters tend to lie in the same direction, that is, they tend to be positive or negative together.
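
The interpretive figures in this paragraph can be reproduced from the reported estimates. The sketch below assumes that (11.2), which is not reproduced in this section, is the standard Fréchet distribution function \(\exp\{-(x/\alpha)^{-\beta}\}\), so that its quantile function is \(\alpha(-\ln p)^{-1/\beta}\). The function name `frechet_quantile` is hypothetical.

```python
import math


def frechet_quantile(p, alpha, beta):
    """Quantile of the standard Frechet form exp{-(x/alpha)^(-beta)} at probability p."""
    return alpha * (-math.log(p)) ** (-1.0 / beta)


alpha_hat, beta_hat, sigma_hat = 0.130, 3.72, 0.236

# Probability that the maximum annual shock exceeds alpha (about 2 years in 3)
p_exceed_alpha = 1.0 - math.exp(-1.0)

# 90th percentile of the most severe annual exacerbation
q90 = frechet_quantile(0.90, alpha_hat, beta_hat)

# Annual variation in FEV1pred implied by sigma on the log scale
annual_variation = math.exp(sigma_hat) - 1.0

print(round(p_exceed_alpha, 3), round(q90, 3), round(annual_variation, 3))
```

Under this assumption the three quantities should agree, after rounding, with the "2 of every 3 years", "about 24 percentage points" and "about 27%" statements in the text.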

6 Discussion and Concluding Remarks

As we have illustrated, many systems in diverse fields of application degrade with time, asymptotically approaching a point of zero strength. These systems are often simultaneously exposed to a stochastic stream of shocks that momentarily weaken the system as they arrive. These systems ultimately fail when an incident shock exceeds the residual strength of the system. Thus, degradation is a contributing factor to failure but it is a shock that delivers the coup de grâce. Our illustrations also show that in certain circumstances a system that is strengthening rather than deteriorating with time may also fail because a sufficiently severe shock overwhelms its strength.

The survival function in (11.6) is based on a system strength process whose logarithm has stationary independent increments. This model formulation happens to be mathematically conjugate with the Fréchet process and, hence, yields a tidy mathematical form. If the geometric aspect is dropped in favor of a strength process that itself has stationary independent increments, then some tractable forms of the survival function can still be derived. A deterministic linear strength process is a simple example. We do not develop or explore this alternative class of models here.

The shock stream assumed in our stochastic model does not produce cumulative damage, by assumption. In reality, however, there are shock systems that generate cumulative damage, such as fluctuating stresses that produce crack propagation in materials. A practical approximation to a cumulative damage process can be obtained within our modeling approach by assuming that the degradation process itself in our model is a monotone stochastic process, like the gamma process for example. Again, however, it would be a shock, superimposed on cumulative damage, that causes failure.

References

[1] Aalen OO, Borgan O, Gjessing HK (2008) Survival and event history analysis: a process point of view. Statistics for biology and health. Springer, New York

[2] Aaron SD, Stephenson AL, Cameron DW, Whitmore GA (2015) A statistical model to predict one-year risk of death in patients with cystic fibrosis. J Clin Epidemiol 68(11):1336–1345. doi: 10.1016/j.jclinepi.2014.12.010

[3] Gumbel EJ (1953) Probability table for the analysis of extreme-value data: introduction. Applied mathematics series, vol 22. National Bureau of Standards, United States Department of Commerce, Washington, DC, pp 1–15

[4] He X, Whitmore GA, Loo GY, Hochberg MC, Lee M-LT (2015) A model for time to fracture with a shock stream superimposed on progressive degradation: the Study of Osteoporotic Fractures. Stat Med 34(4):652–663. doi: 10.1002/sim.6356

[5] Lee M-LT, Whitmore GA (2006) Threshold regression for survival analysis: modeling event times by a stochastic process reaching a boundary. Stat Sci 21:501–513

[6] Lee M-LT, Whitmore GA (2010) Proportional hazards and threshold regression: their theoretical and practical connections. Lifetime Data Anal 16:196–214

[7] Lee M-LT, Whitmore GA (2016) Shock-degradation failure processes and their survival distributions, manuscript under review

[8] Lee M-LT, Whitmore GA, Rosner BA (2010) Threshold regression for survival data with time-varying covariates. Stat Med 29:896–905

[9] Mamelund S-E, Brunborg H, Noack T. Divorce in Norway 1886–1995 by calendar year and marriage cohort. Technical report 97/19. Statistics Norway

Acknowledgements

Mei-Ling T. Lee is supported in part by NIH Grant R01 AI121259. We thank research colleagues in the fields of osteoporosis, cystic fibrosis and chronic obstructive pulmonary disease for making us aware of the important role that physical traumas and acute exacerbations play in initiating critical medical events in their fields. Given our previous awareness of the importance of shock processes in causing failure in engineering systems, it was not difficult for us to see the shock-degradation process as a general failure mechanism and to anticipate its natural extension to social and economic systems as well.

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Lee, ML.T., Whitmore, G.A. (2017). Practical Applications of a Family of Shock-Degradation Failure Models. In: Chen, DG., Lio, Y., Ng, H., Tsai, TR. (eds) Statistical Modeling for Degradation Data. ICSA Book Series in Statistics. Springer, Singapore. https://doi.org/10.1007/978-981-10-5194-4_11

DOI: https://doi.org/10.1007/978-981-10-5194-4_11

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-5193-7

Online ISBN: 978-981-10-5194-4

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)