Abstract

This chapter gives an overview of how the analytic hierarchy process (AHP) methodology can be used to elicit patients’ preferences and presents case studies showing how this methodology may inform HTA and HTA-based decisions. Together with external scientific knowledge and physicians’ experience, patients’ preferences are one of the tenets of evidence-based medicine (Sackett et al., BMJ 312:71, 1996). For data on patients’ preferences to be considered robust evidence for decision-making, they should be generated in a methodologically sound, structured, and transparent way. AHP is a multiple-criteria decision analysis (MCDA) method that can be used to elicit patients’ preferences for specific treatment characteristics or outcomes assessed in HTA. The steps in conducting an AHP are described. AHP follows transparent mathematical rules for data analysis but has its own methodological challenges and opportunities, which this chapter discusses. Examples of how AHP may be used in HTA and decision-making are provided and discussed.

1 Introduction

This chapter gives an overview of how the analytic hierarchy process (AHP) methodology can be used to elicit patients’ preferences and presents case studies showing how this methodology may inform HTA and HTA-based decisions. Together with external scientific knowledge and physicians’ experience, patients’ preferences are one of the tenets of evidence-based medicine (Sackett et al. 1996). For data on patients’ preferences to be considered robust evidence for decision-making, they should be generated in a methodologically sound, structured, and transparent way. AHP is a multiple-criteria decision analysis (MCDA) method that can be used to elicit patients’ preferences for specific treatment characteristics or outcomes assessed in HTA. The steps in conducting an AHP are described. AHP follows transparent mathematical rules for data analysis but has its own methodological challenges and opportunities, which this chapter discusses. Examples of how AHP may be used in HTA and decision-making are provided and discussed.

2 A Role for AHP in Patient Preference Elicitation

A number of recent reviews and pilot applications in HTA have pointed to the potential of MCDA methods to guide healthcare decision-making or to obtain structured information on patient preferences (Marsh et al. 2014; Maruthur et al. 2015; Danner et al. 2011; Ho et al. 2015). The AHP, developed by the mathematician Thomas L. Saaty in the 1970s (Saaty 1977), is one of several MCDA approaches that can be used to elicit preferences and to measure the relative importance of decision criteria to decision-makers, including patients (Hummel et al. 2014; Dolan 2010). Recent reviews (Adunlin et al. 2015a; Marsh et al. 2014) suggest that AHP is the most frequently used MCDA technique.

While DCE (Chap. 10) is rooted in random utility theory, AHP is a decision-analytic approach. It decomposes a decision problem into its basic elements and structures them, asks decision-makers to value these elements relative to each other, and then combines these judgments into composite value information on the alternatives or criteria that make up the decision problem. Unlike DCE, AHP does not assess value in monetary or utility units; instead, it assigns relative values to its elements, generating relative importance weights. Saaty proposed AHP to facilitate complex decision-making, especially for groups of decision-makers (Saaty 1977, 1994). According to Whitaker (2007, p. 859), the AHP in group decision processes “tends to give better results because of the broader knowledge available and also because of the possibility of debates that may arise and change people’s understanding.” While DCE assumes that patients try to maximize their utility each time they make a choice, AHP does not rely on a normative theory that predicts choices; it acknowledges that individuals, in terms of bounded rationality, often deviate from the basic assumptions of rationality (Simon 1978; Kinoshita 2005). The goal of AHP is rather to structure a decision and make values and preferences transparent, enabling informed and, ideally, more rational decisions. This is why AHP is considered a “descriptive” rather than a “normative” theory.

In healthcare, AHP is often used to elicit preferences from experts (clinicians, administrators) to support structured and transparent decision-making or planning processes (Benaim et al. 2010). To a lesser extent, it has been used to elicit preferences from patients, sometimes in larger samples (Dolan et al. 2013b; Kuruoglu et al. 2015) and sometimes in small patient group surveys (Danner et al. 2011). Eliciting preferences from patients differs in several ways from eliciting them from other decision-makers. First, patients are the direct consumers of the decisions they take regarding their own health. Decision-relevant criteria often cause anxiety and involve uncertainty, for example about the risk of side effects, which affects the decision heuristics patients use. Second, information asymmetries dominate the decision situation: physicians are usually well informed about the evidence and have professional experience, whereas patients are less well informed and lack professional experience. At the same time, health illiteracy, especially regarding statistical information such as risks or probabilities, is prevalent among both physicians and patients, and communication about these issues remains inadequate (Envisioning Health Care 2020, 2011).

3 Steps in Developing an AHP Preference Elicitation

Similar to the recommendations published by the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) for the development of a DCE study (Bridges et al. 2011; Johnson et al. 2013), an AHP should be carefully prepared and well designed, as depicted in Fig. 11.1.

In line with the ISPOR recommendations, a literature search, expert interviews, as well as qualitative work with patients should be employed to select and refine decision-relevant criteria and subcriteria.

4 How Does AHP Work?

The AHP elicits preferences through pairwise comparisons between decision criteria and between treatment alternatives (Saaty 1977; Dolan 1989). AHP first structures a decision as a hierarchy (Fig. 11.2). The objective of the decision is positioned at the highest level (e.g., to weigh the benefits and risks of alternative treatments), followed by the relevant decision criteria (e.g., effectiveness or side effects of alternative treatments) and clusters of subcriteria at the next level(s) (e.g., reduction of different symptoms to specify the effectiveness criterion). The treatment alternatives are placed at the lowest level of the hierarchy but need not be part of the preference elicitation process. The elements of the hierarchy should be comprehensive, giving a complete picture of the decision situation. Lower-level elements should be independent of the next higher-level elements, and elements at the same level should ideally not overlap. Also, the comparison between two criteria in a cluster should be independent of any third criterion in that cluster.

AHP is used either in a more comprehensive assessment, to prioritize or rank alternative treatment options based on preferences for decision criteria and alternatives, or in a reduced form, simply to elicit respondents’ preferences for different decision criteria and measure their relative importance (Angelis and Kanavos 2016). For a cluster of n elements, the number of pairwise comparisons at that level of the hierarchy is n(n − 1)/2 (see blue and green lines in Fig. 11.2). In each pairwise comparison, respondents express how strongly they prefer one criterion, subcriterion, or alternative over the other. The strength of preference is usually measured on a two-sided nine-point ratio scale. While each point on the scale has a verbal (ordinal) interpretation to facilitate judgments, the numerical (ratio scale) values are used in the AHP weight calculations (see Fig. 11.3).
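The counting rule above can be checked with a short script. The Python sketch below represents a hypothetical three-criterion hierarchy as nested dictionaries (all criterion names are illustrative assumptions, not taken from any study) and applies n(n − 1)/2 to every cluster with at least two elements.

```python
# Hypothetical hierarchy: goal -> criteria -> cluster of subcriteria.
# All names are illustrative placeholders, not taken from any study.
hierarchy = {
    "Choose a treatment": {
        "Effectiveness": ["Symptom A reduced", "Symptom B reduced", "Symptom C reduced"],
        "Side effects": ["Nausea", "Fatigue"],
        "Convenience": [],  # no subcriteria, so no comparisons below this node
    }
}


def comparisons_per_cluster(n: int) -> int:
    """Pairwise comparisons needed among the n elements of one cluster: n(n - 1)/2."""
    return n * (n - 1) // 2


def count_comparisons(tree: dict) -> dict:
    """Map each node with two or more children to its number of pairwise comparisons."""
    counts = {}
    for goal, criteria in tree.items():
        counts[goal] = comparisons_per_cluster(len(criteria))
        for criterion, subcriteria in criteria.items():
            if len(subcriteria) >= 2:
                counts[criterion] = comparisons_per_cluster(len(subcriteria))
    return counts


counts = count_comparisons(hierarchy)
print(counts)                # {'Choose a treatment': 3, 'Effectiveness': 3, 'Side effects': 1}
print(sum(counts.values()))  # 7
```

Because the count grows quadratically, a single cluster of nine elements already requires 36 comparisons, which is one reason to keep clusters small.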

When criteria i and j are compared, choosing “1” on the scale means that criterion i is equally important to, or equally preferred by the patient as, criterion j; 3 means that i is moderately more important than j, 5 much more important, 7 very much more important, and 9 extremely more important. The intermediate values 2, 4, 6, and 8 may also be chosen. If alternatives are part of the procedure, respondents compare two alternatives at a time with respect to their performance on a specific (sub)criterion.
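To make the scale concrete, the following Python sketch maps the verbal anchors listed above to their numerical values and converts one two-sided judgment into the matrix entry a_ij. The dictionary keys and the function name `judgment_to_ratio` are illustrative assumptions; survey tools encode the scale in their own ways.

```python
# Verbal anchors of the nine-point scale as described in the text;
# the intermediate values 2, 4, 6, and 8 have no separate label here.
SCALE = {
    "equally important": 1,
    "moderately more important": 3,
    "much more important": 5,
    "very much more important": 7,
    "extremely more important": 9,
}


def judgment_to_ratio(value: int, i_preferred: bool) -> float:
    """Convert one judgment comparing criteria i and j into the entry a_ij.

    value: the integer 1..9 selected on the scale.
    i_preferred: True if the respondent leaned towards criterion i,
    False if towards criterion j (a_ij is then the reciprocal).
    """
    if value not in range(1, 10):
        raise ValueError("scale values must be integers from 1 to 9")
    return float(value) if i_preferred else 1.0 / value


# "Criterion j is much more important than criterion i" gives a_ij = 1/5.
print(judgment_to_ratio(SCALE["much more important"], i_preferred=False))  # 0.2
```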

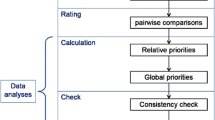

Based on the pairwise comparisons, the AHP uses a direct mathematical approach to calculate “importance” weights for each of the included criteria and alternatives. All comparisons from the AHP survey are first entered into a comparison matrix \( A = [a_{ij}] \). Values above the main diagonal are the results of the actual pairwise comparisons, values below it are their reciprocals (\( a_{ji} = 1/a_{ij} \)), and the diagonal elements equal 1. Local importance weights are calculated using the principal right eigenvector approach (Saaty 1977; Dolan 1989); for a reciprocal matrix, this eigenvector represents the vector of weights \( w \) of the included criteria or subcriteria. The calculation is based on the following matrix equation: for a nonnegative reciprocal comparison matrix \( A \), multiplying \( A \) by its principal right eigenvector \( w \) gives the matrix’s maximal eigenvalue \( \lambda_{\max} \) multiplied by \( w \), i.e., \( A w = \lambda_{\max} w \). Based on this relationship, the right eigenvector may be estimated for each matrix, for example by using the matrix multiplication method (Dolan 1989). In practical terms, this process is “a simple averaging process by which the final weights are the average of all possible ways of comparing the scores on the pairwise comparisons” (Hummel et al. 2014). Alternative calculation and analysis modes may be used (Ishizaka and Labib 2009; Dolan 1989). AHP weight vectors may well be calculated in Microsoft Excel, and the calculation is also supported by professional software (e.g., Expert Choice Comparion, http://expertchoice.com/comparion/, or SuperDecisions, www.superdecisions.com). For a comprehensive list of available software, see Hummel et al. (2014).
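The following Python sketch shows one way to carry out this calculation without external libraries. It fills the reciprocal matrix from upper-triangle judgments and approximates the principal right eigenvector by repeated matrix multiplication (power iteration), normalizing the weights to sum to 1. The helper names and example judgments are hypothetical. In practice, dedicated AHP software or a linear-algebra library would normally be used.

```python
def reciprocal_matrix(n, upper):
    """Build A = [a_ij] from judgments {(i, j): a_ij} given for i < j.

    The diagonal is 1 and entries below it are reciprocals: a_ji = 1 / a_ij.
    """
    A = [[1.0] * n for _ in range(n)]
    for (i, j), a in upper.items():
        A[i][j] = float(a)
        A[j][i] = 1.0 / a
    return A


def principal_eigenvector(A, tol=1e-12, max_iter=1000):
    """Approximate the normalized principal right eigenvector w and lambda_max.

    Power iteration: multiply A by the current w and rescale so the weights
    sum to 1, until successive vectors differ by less than tol.
    """
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
        total = sum(Aw)
        w_next = [x / total for x in Aw]
        converged = max(abs(a - b) for a, b in zip(w_next, w)) < tol
        w = w_next
        if converged:
            break
    # Estimate lambda_max from A w = lambda_max w by averaging the n ratios.
    Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
    lambda_max = sum(Aw[i] / w[i] for i in range(n)) / n
    return w, lambda_max


# Hypothetical judgments for three criteria (0 = effectiveness, 1 = side effects, 2 = convenience).
A = reciprocal_matrix(3, {(0, 1): 3, (0, 2): 5, (1, 2): 3})
w, lam = principal_eigenvector(A)
print([round(x, 3) for x in w], round(lam, 3))
```

For a perfectly consistent matrix (for example, \( a_{12} = 2 \), \( a_{13} = 6 \), \( a_{23} = 3 \)) the same code returns weights proportional to 6 : 3 : 1 and \( \lambda_{\max} = n = 3 \).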

Data from groups of AHP respondents may be aggregated in two ways. Group weights may be calculated as the mean of the individual weights derived from each respondent’s judgments (aggregation of individual priorities, AIP), or AHP weights may be calculated for the group from the geometric mean of all individual judgments (aggregation of individual judgments, AIJ) (Forman and Peniwati 1998). The choice of aggregation method should depend on the specific decision context. If the group is considered one entity striving for consensus, AIJ is usually preferred. If the focus is on eliciting the individual preferences of a heterogeneous group of decision-makers, AIP is preferred. The latter is likely more relevant in patient preference research, where heterogeneous groups of individuals are surveyed.
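The difference between the two aggregation rules can be illustrated with two hypothetical respondents comparing two criteria. The Python sketch below uses our own function names and approximates the eigenvector by power iteration. It computes AIJ weights from the element-wise geometric mean of the individual matrices, and AIP weights as the arithmetic mean of the individual weight vectors (the arithmetic mean is one possible choice for AIP).

```python
from math import prod


def eigen_weights(A, iters=500):
    """Normalized principal right eigenvector of A, approximated by power iteration."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iters):
        Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
        total = sum(Aw)
        w = [x / total for x in Aw]
    return w


def aggregate_aij(matrices):
    """AIJ: element-wise geometric mean of all judgments, then one weight vector for the group."""
    m, n = len(matrices), len(matrices[0])
    G = [[prod(M[i][j] for M in matrices) ** (1.0 / m) for j in range(n)] for i in range(n)]
    return eigen_weights(G)


def aggregate_aip(matrices):
    """AIP: one weight vector per respondent, then the arithmetic mean of these vectors."""
    vectors = [eigen_weights(M) for M in matrices]
    n = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(n)]


# Two hypothetical respondents comparing criterion 0 with criterion 1.
respondent_1 = [[1.0, 9.0], [1 / 9, 1.0]]  # criterion 0 extremely more important
respondent_2 = [[1.0, 1.0], [1.0, 1.0]]    # both criteria equally important
group = [respondent_1, respondent_2]

print("AIJ:", [round(x, 2) for x in aggregate_aij(group)])  # AIJ: [0.75, 0.25]
print("AIP:", [round(x, 2) for x in aggregate_aip(group)])  # AIP: [0.7, 0.3]
```

The geometric mean of the judgments 9 and 1 is 3, so AIJ yields weights of 0.75 and 0.25. Averaging the individual weight vectors (0.9, 0.1) and (0.5, 0.5) yields 0.7 and 0.3.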

Finally, AHP allows calculation of a consistency ratio (CR), which measures the logical consistency of the pairwise judgments within a cluster. The concept of consistency relies on two basic assumptions of the AHP: the transitivity of preferences (i.e., if A > B, meaning A is preferred to B, and B > C, then A > C) and the reciprocity of judgments. While transitivity is a necessary condition for consistency, AHP does not require preferences to be perfectly transitive. Technically, the CR compares the consistency index (CI) of a matrix with the average consistency index of a simulated set of reciprocal but entirely random pairwise comparison matrices (the so-called random index, RI). The consistency index of an n × n matrix A is \( CI=\frac{\lambda_{\max }- n}{n-1} \), and the CR is defined as \( CR = CI/RI \). The closer CI is to RI, the higher the CR and the greater the probability that the judgments in a comparison matrix result from a completely random decision process. The smaller CI is relative to RI, the smaller the CR and the higher the probability that the judgments result from a consistent decision process. For details on calculating the consistency ratio and its components, refer to the literature (Saaty 2000; Dolan et al. 1989).
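The CI and CR formulas above can be sketched in Python as follows. The random index values are those commonly reported from Saaty’s simulations for n = 3 to 10; other simulation studies report slightly different values. Matrices of order 1 or 2 are treated as perfectly consistent, because every 2 × 2 reciprocal matrix is. The example judgments are hypothetical.

```python
# Random index (RI) values commonly cited from Saaty's simulations of random reciprocal matrices.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def lambda_max(A, iters=500):
    """Principal eigenvalue of a positive reciprocal matrix via power iteration."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iters):
        Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
        total = sum(Aw)
        w = [x / total for x in Aw]
    Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
    return sum(Aw)  # w sums to 1, so sum(A w) = lambda_max * sum(w) = lambda_max


def consistency_ratio(A):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1)."""
    n = len(A)
    if n < 3:
        return 0.0  # 1 x 1 and 2 x 2 reciprocal matrices are always consistent
    ci = (lambda_max(A) - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


mild = [[1, 3, 5], [1 / 3, 1, 3], [1 / 5, 1 / 3, 1]]      # slightly inconsistent
circular = [[1, 9, 1 / 9], [1 / 9, 1, 9], [9, 1 / 9, 1]]  # 1 > 2 > 3 > 1: intransitive
print(round(consistency_ratio(mild), 3), round(consistency_ratio(circular), 2))  # 0.033 6.13
```

The intransitive matrix produces a CR far above 1, meaning it is less consistent than the average random matrix.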

5 Using AHP to Elicit Patients’ Preferences in Healthcare Policy and HTA

There is growing awareness that the views and preferences of patients, as primary consumers of health interventions, should be taken into account at various levels and steps of decision-making. Involving patients early in HTA and decision processes may increase the legitimacy of final decisions. Knowing patient preferences can further help determine which health technologies, interventions, and types of services should be offered to patients, and may also increase patients’ adherence to them. Technically, AHP has the advantage of allowing preference weights to be calculated directly for individual patients, which is beneficial if AHP is to be integrated into decision aids, for example. Aggregated preference information, on the other hand, can feed into decisions to prioritize interventions at other decision levels. Since current overviews of AHP applications can be found in Schmidt et al. (2015) and Adunlin et al. (2015b), a sample of recent publications is used here to demonstrate how AHP may enable the uptake of patient preference information into HTA-based decision processes.

5.1 Example 1: Health Policy Decisions—Uptake of Preventive Screening Measures

Three recent AHP studies elicited patient preferences for colorectal cancer screening interventions in different settings and using different survey modes (Xu et al. 2015; Hummel et al. 2013; Dolan et al. 2013a, b). Two studies were conducted in the USA and one in the Netherlands. The surveys were administered as a paper-and-pencil questionnaire (Xu et al. 2015), as an online survey (Hummel et al. 2013), or in personal interviews (Dolan et al. 2013a, b). While Xu et al. (2015) limited their study population to individuals who had undergone screening before, the other studies applied no such restriction. All studies concluded that patient preference information is indispensable, especially for understanding why certain screening programs achieve better uptake than others.

5.1.1 Study Findings and Insights

All studies identified clinical outcome criteria, such as “prevention of cancer” or the “sensitivity” or “accuracy” of the screening method in detecting cancer, as well as the “safety/complication frequency/side effects” of the test, as most relevant. The studies also suggest that process-related screening characteristics, such as the frequency of the test or the complexity of test preparation (“convenience of test” or “logistics”), play an important role when patients ultimately decide whether or not to undergo a test. Therefore, when offering a specific screening test to a population, it is important to inform patients adequately about test characteristics to ensure uptake.

5.1.2 Methodological Insights

Only 167 of the 650 patients (26%) who returned a completed questionnaire in the AHP study by Hummel et al. (2013) provided consistent responses, defined as a consistency ratio below 0.3. Dolan et al. (2013a, b) and Xu et al. (2015) included 379 of 484 patients (78%) and 667 of 954 patients (70%), respectively, with a consistency ratio below 0.15 in their analyses. Including inconsistent respondents in AHP analyses might bias study results; excluding them, on the other hand, might put the external validity of a study at stake. AHP studies should therefore explore the effects of excluding inconsistent respondents in sensitivity analyses, as done by Hummel et al. (2013). In addition, the demographic or disease-related characteristics of included participants may be compared with those of the overall target population to explore reasons for inconsistency. Technical reasons for inconsistency identified by the authors were the complexity and the large number of pairwise comparisons, and the lack of an option for patients to revise inconsistent judgments. While Xu et al. state that, because of the individuality of patient preferences, patient priorities were aggregated using the AIP method described above, the other studies do not explicitly report the chosen aggregation mode.
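A sensitivity analysis of the kind recommended above can be sketched by computing group weights with and without respondents whose CR exceeds a chosen threshold. The Python sketch below assumes AIP aggregation, power iteration for the eigenvector, Saaty’s RI of 0.58 for 3 × 3 matrices, and three hypothetical respondents. It is an illustration only, not a reconstruction of the analyses in the cited studies.

```python
def eigen(A, iters=500):
    """Return (normalized principal eigenvector, lambda_max) via power iteration."""
    n = len(A)
    w = [1.0 / n] * n
    for _ in range(iters):
        Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
        total = sum(Aw)
        w = [x / total for x in Aw]
    Aw = [sum(A[i][k] * w[k] for k in range(n)) for i in range(n)]
    return w, sum(Aw)


def consistency_ratio(A, ri=0.58):  # RI = 0.58 is Saaty's value for 3 x 3 matrices
    _, lam = eigen(A)
    n = len(A)
    return ((lam - n) / (n - 1)) / ri


def aip_weights(matrices):
    """Arithmetic mean of the individual weight vectors (AIP)."""
    vectors = [eigen(M)[0] for M in matrices]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]


def sensitivity(matrices, threshold):
    """AIP weights for all respondents vs. only those with CR below the threshold."""
    kept = [M for M in matrices if consistency_ratio(M) < threshold]
    return aip_weights(matrices), aip_weights(kept), len(kept)


respondents = [
    [[1, 2, 6], [1 / 2, 1, 3], [1 / 6, 1 / 3, 1]],  # perfectly consistent
    [[1, 3, 5], [1 / 3, 1, 3], [1 / 5, 1 / 3, 1]],  # mildly inconsistent (CR about 0.03)
    [[1, 9, 1 / 9], [1 / 9, 1, 9], [9, 1 / 9, 1]],  # intransitive (CR far above 0.3)
]
all_w, kept_w, n_kept = sensitivity(respondents, threshold=0.15)
print(n_kept, [round(x, 2) for x in all_w], [round(x, 2) for x in kept_w])
```

Here the intransitive respondent pulls the weights towards equality. Reporting both sets of weights makes the effect of the exclusion rule visible to readers.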

5.2 Example 2: Health Policy Decisions—Drug Reimbursement or Approval

The German Institute for Quality and Efficiency in Health Care (IQWiG) regularly assesses the added benefit of new drugs seeking reimbursement (Chap. 25). These HTAs focus on clinical outcomes measuring mortality, morbidity, side effects, and quality of life. IQWiG conducted two pilot preference elicitation studies in which patients valued the importance of treatment outcomes in different indications, to test whether this information could be used to prioritize outcome-specific HTA results. One of these studies used AHP (Danner et al. 2013); the other used a choice-based conjoint analysis (Gerber-Grote et al. 2014). In addition, a DCE study that identified patient preferences for outcomes and characteristics of lung cancer treatments was recently submitted by a pharmaceutical company to support the benefit assessment of the lung cancer drug afatinib (Muhlbacher and Bethge 2015; Gemeinsamer Bundesausschuss (G-BA) 2014).

5.2.1 Study Findings and Insights

The IQWiG AHP study, in the indication of depression, was conducted in group settings, separately with patients and clinicians (Danner et al. 2013). Patients valued treatment outcomes differently from clinicians: they considered fast response to treatment most important, whereas the clinical experts considered remission and avoidance of relapse most important. Patients also rated the quality-of-life dimensions cognitive function, reduction of anxiety, and social function higher than the experts did. The DCE lung cancer study (Muhlbacher and Bethge 2015) found that the clinical endpoint progression-free survival and the reduction of tumor-specific symptoms such as coughing, shortness of breath, and pain were the most relevant outcomes and of comparable importance to patients.

5.2.2 Methodological Insights

The IQWiG pilot projects suggest that AHP or other preference methods may be used to generate weights for, or to prioritize, outcome-specific HTA results. Yet no gold standard for preference elicitation exists, and methods like AHP or DCE need further research and testing in practical applications to learn more about their specific characteristics and their suitability in different settings. Using patients as the target population in these assessments seems legitimate, since their preferences as “consumers” of healthcare interventions likely differ from those of their physicians, the general public, or other HTA stakeholders (Muhlbacher and Juhnke 2013; Danner et al. 2011). The German AHP study further points to the potential of group studies with patients, since the group setting facilitates the exchange of information and experience. Group interaction is also likely to increase the consistency of judgments by avoiding judgmental errors or misunderstandings. On the other hand, group studies may suffer from dominant individuals leading the discussion (Thokala et al. 2016). In the DCE lung cancer study, the outcomes for which patient preferences were elicited included progression-free survival, a surrogate endpoint for overall survival. In the respective hearing, IQWiG stated that, in its view and in accordance with many other regulatory and HTA bodies, overall survival, the (most) important patient-relevant endpoint, had not been included in the preference elicitation task (Gemeinsamer Bundesausschuss (G-BA) 2014). It is thus important to select outcomes or criteria for preference elicitation that are accepted within the specific HTA decision context.

Eliciting patients’ relative judgments may be an important tool to inform and support authorities’ prioritization of outcome-specific evidence or of benefits and risks preceding approval or reimbursement decisions. This use was also highlighted by the FDA in its recently released guidance on patient preference information (FDA Center for Devices and Radiological Health 2016).

6 Where and How Should Information on Patient Preferences Inform HTA?

As the examples above suggest, AHP may be used at different levels of decision-making. While a range of stated preference or multi-criteria decision-analytic methods may be used to elicit preferences, methods like DCE or AHP have the advantage of forcing patients to make trade-offs between criteria. A potential advantage of AHP is its ability to calculate weights for individual decision criteria directly, in contrast to DCE, where this is only possible indirectly via the ranges of attribute levels and therefore depends on the chosen levels. Compared with DCE, AHP is less easily applicable for generating utilities or exchange rates (e.g., in monetary units) and may not be readily usable in cost-utility analyses to support resource allocation. A study by Reddy et al. (2015), however, uses AHP as an alternative to the time trade-off (TTO) to calculate utilities for EQ-5D health states from ordinal AHP preference data. The authors conclude that the described method “… offers the potential to convert ordinal preference data into cardinal utilities,” being “simpler than TTO (time trade off) studies to carry out….” Whether AHP will play a role in such applications in the future remains to be seen; DCE or other conjoint analysis techniques are currently preferred in these instances.

7 Practical and Methodological Issues with AHP

In line with the most recent ISPOR recommendations, an AHP, like any MCDA study, should be carefully developed and should follow the steps recently suggested by the ISPOR MCDA task force (Thokala et al. 2016; Marsh et al. 2016). Careful selection and refinement of decision criteria, the combination of quantitative and qualitative elements, and transparent documentation and calculation of importance weights are essential (Marsh et al. 2016). The practical aspects of an AHP, such as survey format or administration, depend on the target population and the study objective. Both an AHP group setting and an interviewer-assisted questionnaire can facilitate the generation of combined qualitative and quantitative information on patient preferences. In the group setting, patients provide judgments, discuss the individual judgments in the group, and may finally revise their individual judgments. While the group setting might suffer from influential or dominant participants, its qualitative component provides insights into patients’ reasoning and decision-making processes. The same may be achieved in an interviewer-assisted setting if patients are asked to think aloud while providing judgments. Individual online or paper-and-pencil surveys, on the other hand, are limited to quantitative information, and inconsistency might be higher. Striking a balance between internal consistency (excluding inconsistent respondents) and external validity (including all respondents) is a challenge. One option to reduce inconsistency is to use online tools, such as those offered by the Expert Choice software, that ask participants to verify their judgments in case of high inconsistency. Researchers will have to define which setting (group or individual, interviewer-assisted or not, online or in-person) and which type of information (qualitative and/or quantitative) they need.

In the literature, consistency ratio thresholds from 0.1 to 0.3 have been used to identify inconsistent respondents. While a CR of 0.1 might be a good threshold in a group setting, it is likely too strict for large individual surveys, where a limit of 0.2 or 0.3 seems reasonable. There is as yet no agreement on which thresholds to use in preference studies.
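Because no threshold has been agreed upon, it can help to report how many respondents would be retained under each candidate cutoff. The short Python sketch below does this for a list of hypothetical CR values. Both the values and the strict “below the threshold” rule are assumptions for illustration.

```python
def retained_share(crs, threshold):
    """Share of respondents whose consistency ratio lies below the threshold."""
    return sum(cr < threshold for cr in crs) / len(crs)


# Hypothetical consistency ratios of ten respondents.
crs = [0.04, 0.06, 0.08, 0.09, 0.12, 0.15, 0.18, 0.25, 0.33, 0.50]

for threshold in (0.1, 0.2, 0.3):
    print(f"CR < {threshold}: {retained_share(crs, threshold):.0%} retained")
# CR < 0.1: 40% retained
# CR < 0.2: 70% retained
# CR < 0.3: 80% retained
```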

The face validity of an AHP study may be increased by following rigorous steps in developing the design (e.g., including a comprehensive set of relevant criteria and ensuring the independence of criteria at each level) and by using qualitative elements to verify the generated quantitative information (Marsh et al. 2016). Convergent validity could also be tested by assessing preferences with different preference elicitation methods. There is some debate about the reliability of AHP, since interviewing the same group of patients at different points in time might lead to different findings. This is likely true, because preferences depend on patient characteristics, and these change over time. Hence, a clear definition of the study population is important.

AHP has been subject to a range of methodological criticisms. Probably the issue raised most frequently in recent years is rank reversal, which may be observed in AHP and other MCDA methods when an identical copy of an alternative or a new but non-discriminating criterion is added to the decision hierarchy (Maleki and Zahir 2013). Several methodological recommendations (e.g., comprehensiveness of the AHP hierarchy, relevance of included criteria) and analysis modes (e.g., the ideal mode of eigenvector normalization) to prevent or minimize the risk of rank reversal have been proposed and are frequently applied (e.g., Wang and Elhag 2006; Hummel et al. 2014; Ishizaka and Labib 2009). Other issues are the appropriateness of the AHP judgment scale and the search for other, more appropriate scales to reflect respondents’ values. Several publications discuss the AHP ratio scale and its potential limitations (e.g., it is not continuous, it is bounded, or it may not appropriately represent verbal judgments) (e.g., Dong et al. 2008; Hummel et al. 2014). Other scales that avoid these potential weaknesses have been developed and are being explored (e.g., Lootsma or geometric scales, other continuous scales, and smaller or wider scales), but the AHP scale is still the most widely used. Finally, the relatively “abstract” pairwise comparison of individual criteria in AHP, although it makes the procedure easy and transparent, has been criticized. In comparison, a DCE, which presents entire choice sets to respondents, appears to be a more realistic and holistic approach that is easier to understand for patients, who are used to choosing between treatment alternatives. However, recent AHP studies suggest that AHP is feasible for patients once they understand the type of task they have to perform, including the AHP judgment scale (Danner et al. 2016).
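The following Python sketch illustrates rank reversal and the effect of the ideal mode mentioned above with a constructed example, in the spirit of classic rank-reversal demonstrations. For simplicity, it starts from hypothetical ratio-scale scores of each alternative on each criterion rather than from full pairwise matrices. For a perfectly consistent matrix with \( a_{ij} = s_i/s_j \), the principal eigenvector is proportional to the scores s, so the two approaches are equivalent. “Distributive” normalization divides by the sum of the scores under a criterion; “ideal” normalization divides by the maximum score. All numbers and criterion names are illustrative assumptions.

```python
def local_priorities(scores, mode):
    """Normalize one criterion's scores: 'distributive' divides by the sum, 'ideal' by the maximum."""
    denom = sum(scores.values()) if mode == "distributive" else max(scores.values())
    return {alt: s / denom for alt, s in scores.items()}


def global_priorities(table, weights, mode):
    """Weighted sum of local priorities over all criteria."""
    totals = {}
    for criterion, scores in table.items():
        for alt, p in local_priorities(scores, mode).items():
            totals[alt] = totals.get(alt, 0.0) + weights[criterion] * p
    return totals


weights = {"c1": 1 / 3, "c2": 1 / 3, "c3": 1 / 3}
# Hypothetical ratio-scale performance scores of alternatives A, B, C on each criterion.
table = {
    "c1": {"A": 1, "B": 9, "C": 1},
    "c2": {"A": 9, "B": 1, "C": 1},
    "c3": {"A": 8, "B": 9, "C": 1},
}
# Add D, an identical copy of B, under every criterion.
with_copy = {c: {**scores, "D": scores["B"]} for c, scores in table.items()}

for mode in ("distributive", "ideal"):
    before = global_priorities(table, weights, mode)
    after = global_priorities(with_copy, weights, mode)
    print(mode, "A above B before:", before["A"] > before["B"], "after:", after["A"] > after["B"])
# distributive A above B before: False after: True
# ideal A above B before: False after: False
```

Under distributive normalization, the copy D dilutes B’s share under every criterion, and A overtakes B. Under ideal normalization, the maximum under each criterion is unchanged, so the ranking of A and B is preserved.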

8 Conclusion

As with any method used in HTA or other health-related assessments, AHP is not suited to all kinds of decision-making in healthcare. We consider AHP a very valuable preference elicitation tool, especially for enriching decision aids or prioritizing criteria, endpoints, or alternatives in complex, preference-sensitive, and HTA-based decision-making. However, we also advise caution in its application. There are methodological challenges, so it is important to present them and account for them transparently (e.g., in sensitivity analyses). It should be noted that there is no “gold standard” approach for patient preference elicitation, and that research is a dynamic and ongoing process.

References

Adunlin G, Diaby V, Montero AJ, Xiao H. Multicriteria decision analysis in oncology. Health Expect. 2015a;18:1812–26. doi:10.1111/hex.12178.

Adunlin G, Diaby V, Xiao H. Application of multicriteria decision analysis in health care: a systematic review and bibliometric analysis. Health Expect. 2015b;18(6):1894–905. doi:10.1111/hex.12287.

Angelis A, Kanavos P. Value-based assessment of new medical technologies: towards a robust methodological framework for the application of multiple criteria decision analysis in the context of health technology assessment. PharmacoEconomics. 2016;34(5):435–46. doi:10.1007/s40273-015-0370-z.

Benaim C, Perennou DA, Pelissier JY, Daures JP. Using an analytical hierarchy process (AHP) for weighting items of a measurement scale: a pilot study. Rev Epidemiol Sante Publique. 2010;58:59–63. doi:10.1016/j.respe.2009.09.004.

Envisioning Health Care 2020. Better doctors, better patients, better decisions. Cambridge MA: MIT Press; 2011.

Bridges JF, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, et al. Conjoint analysis applications in health—a checklist: a report of the ISPOR good research practices for conjoint analysis task force. Value Health. 2011;14:403–13. doi:10.1016/j.jval.2010.11.013.

FDA Center for Devices and Radiological Health. Patient Preference Information—Voluntary Submission, Review in Premarket Approval Applications, Humanitarian Device Exemption Applications, and De Novo Requests, and Inclusion in Decision Summaries and Device Labeling. Guidance for Industry, Food and Drug Administration Staff, and Other Stakeholders. http://www.fda.gov/downloads/%20MedicalDevices/DeviceRegulationandGuidance/GuidanceDocuments/UCM446680.pdf (2016). Accessed 29 Aug 2016.

Danner M, Gerber-Grote A, Volz F, Wiegard B, Hummel JM, Ijzerman MJ, et al. Analytic Hierarchy Process (AHP)—Pilotprojekt zur Erhebung von Patientenpräferenzen in der Indikation Depression [Analytic hierarchy process (AHP): pilot project on eliciting patient preferences in the indication depression]. 2013. https://www.iqwig.de/download/Arbeitspapier_Analytic-Hierarchy-Process_Pilotprojekt.pdf. Accessed: 11 Nov 2016.

Danner M, Hummel JM, Volz F, van Manen JG, Wiegard B, Dintsios CM, et al. Integrating patients’ views into health technology assessment: analytic hierarchy process (AHP) as a method to elicit patient preferences. Int J Technol Assess Health Care. 2011;27:369–75. doi:10.1017/S0266462311000523.

Danner M, Vennedey V, Hiligsmann M, Fauser S, Gross C, Stock S. How well can analytic hierarchy process be used to elicit individual preferences? Insights from a survey in patients suffering from age-related macular degeneration. Patient. 2016;9(5):481–92. doi:10.1007/s40271-016-0179-7.

Dolan JG. Medical decision making using the analytic hierarchy process: choice of initial antimicrobial therapy for acute pyelonephritis. Med Decis Mak. 1989;9:51–6.

Dolan JG. Multi-criteria clinical decision support: a primer on the use of multiple criteria decision making methods to promote evidence-based, patient-centered healthcare. Patient. 2010;3:229–48. doi:10.2165/11539470-000000000-00000.

Dolan JG, Boohaker E, Allison J, Imperiale TF. Can streamlined multicriteria decision analysis be used to implement shared decision making for colorectal cancer screening? Med Decis Mak. 2013a;34:746–55. doi:10.1177/0272989X13513338.

Dolan JG, Boohaker E, Allison J, Imperiale TF. Patients’ preferences and priorities regarding colorectal cancer screening. Med Decis Mak. 2013b;33:59–70. doi:10.1177/0272989X12453502.

Dolan JG, Isselhardt Jr BJ, Cappuccio JD. The analytic hierarchy process in medical decision making: a tutorial. Med Decis Mak. 1989;9:40–50.

Dong Y, Xu Y, Li H, Dai M. A comparative study of the numerical scales and the prioritization methods in AHP. Eur J Oper Res. 2008;186:229–42. doi:10.1016/j.ejor.2007.01.044.

Forman E, Peniwati K. Aggregating individual judgements and priorities with the analytic hierarchy process. Eur J Oper Res. 1998;108. doi:10.1016/S0377-2217(97)00244-0.

Gemeinsamer Bundesausschuss (G-BA). Mündliche Anhörung gemäß 5. Kapitel § 19 Abs. 2 Verfahrensordnung des Gemeinsamen Bundesausschusses—hier: Wirkstoff Afatinib [Oral hearing pursuant to Chapter 5, § 19(2) of the Rules of Procedure of the Federal Joint Committee: active substance afatinib]. 2014. https://www.g-ba.de/downloads/91-1031-87/2014-03-25_Wortprotokoll_end_Afatinib.pdf. Accessed: 6 Oct 2016.

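Gerber-Grote A, Dintsios CM, Scheibler F, Schwalm A, Wiegard B, Mühlbacher A, et al. Wahlbasierte Conjoint-Analyse—Pilotprojekt zur Identifikation, Gewichtung und Priorisierung multipler Attribute in der Indikation Hepatitis C [Choice-based conjoint analysis: pilot project on the identification, weighting, and prioritization of multiple attributes in the indication hepatitis C]. 2014.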

Ho MP, Gonzalez JM, Lerner HP, Neuland CY, Whang JM, McMurry-Heath M, et al. Incorporating patient-preference evidence into regulatory decision making. Surg Endosc. 2015;29:2984–93. doi:10.1007/s00464-014-4044-2.

Hummel JM, Bridges JF, IJzerman MJ. Group decision making with the analytic hierarchy process in benefit-risk assessment: a tutorial. Patient. 2014;7:129–40.

Hummel JM, Steuten LG, Groothuis-Oudshoorn CJ, Mulder N, IJzerman MJ. Preferences for colorectal cancer screening techniques and intention to attend: a multi-criteria decision analysis. Appl Health Econ Health Policy. 2013;11:499–507. doi:10.1007/s40258-013-0051-z.

Ishizaka A, Labib A. Analytic hierarchy process and expert choice: benefits and limitations. OR Insight. 2009;22:201–20.

Johnson FR, Lancsar E, Marshall D, Kilambi V, Muhlbacher A, Regier DA, et al. Constructing experimental designs for discrete-choice experiments: report of the ISPOR conjoint analysis experimental design good research practices task force. Value Health. 2013;16:3–13. doi:10.1016/j.jval.2012.08.2223.

Kinoshita E. Why we need AHP/ANP instead of utility theory in today’s complex world—AHP from the perspective of bounded rationality. 2005. http://citeseerx.ist.psu.edu/viewdoc/download;jsessionid=C1A22937FCE9669436D827A37E0A1689?doi=10.1.1.333.5543&rep=rep1&type=pdf. Accessed: 6 Oct 2016.

Kuruoglu E, Guldal D, Mevsim V, Gunvar T. Which family physician should I choose? The analytic hierarchy process approach for ranking of criteria in the selection of a family physician. BMC Med Inform Decis Mak. 2015;15:63. doi:10.1186/s12911-015-0183-1.

Maleki H, Zahir S. A comprehensive literature review of the rank reversal phenomenon in the analytic hierarchy process. J Multi-Criteria Decis Anal. 2013;20:141–55. doi:10.1002/mcda.1479.

Marsh K, Lanitis T, Neasham D, Orfanos P, Caro J. Assessing the value of healthcare interventions using multi-criteria decision analysis: a review of the literature. PharmacoEconomics. 2014;32:345–65. doi:10.1007/s40273-014-0135-0.

Marsh K, IJzerman M, Thokala P, Baltussen R, Boysen M, Kalo Z, et al. Multiple criteria decision analysis for health care decision making: emerging good practices: report 2 of the ISPOR MCDA emerging good practices task force. Value Health. 2016;19:125–37. doi:10.1016/j.jval.2015.12.016.

Maruthur NM, Joy SM, Dolan JG, Shihab HM, Singh S. Use of the analytic hierarchy process for medication decision-making in type 2 diabetes. PLoS One. 2015;10:e0126625. doi:10.1371/journal.pone.0126625.

Muhlbacher AC, Bethge S. Patients’ preferences: a discrete-choice experiment for treatment of non-small-cell lung cancer. Eur J Health Econ. 2015;16(6):657–70. doi:10.1007/s10198-014-0622-4.

Muhlbacher AC, Juhnke C. Patient preferences versus physicians’ judgement: does it make a difference in healthcare decision making? Appl Health Econ Health Policy. 2013;11:163–80. doi:10.1007/s40258-013-0023-3.

Reddy BP, Adams R, Walsh C, Barry M, Kind P. Using the analytic hierarchy process to derive health state utilities from ordinal preference data. Value Health. 2015;18:841–5. doi:10.1016/j.jval.2015.05.010.

Saaty TL. A scaling method for priorities in hierarchical structures. J Math Psychol. 1977;15. doi:10.1016/0022-2496(77)90033-5.

Saaty TL. Highlights and critical points in the theory and application of the analytic hierarchy process. Eur J Oper Res. 1994;74. doi:10.1016/0377-2217(94)90222-4.

Saaty TL. Fundamentals of decision making and priority theory with the analytic hierarchy process. Pittsburgh, PA: RWS Publications; 2000.

Sackett DL, Rosenberg WM, Gray J, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312:71.

Schmidt K, Aumann I, Hollander I, Damm K, von der Schulenburg JM. Applying the analytic hierarchy process in healthcare research: a systematic literature review and evaluation of reporting. BMC Med Inform Decis Mak. 2015;15(1):112. doi:10.1186/s12911-015-0234-7.

Simon HA. Rationality as process and as product of thought. Am Econ Rev. 1978;68:1–16.

Thokala P, Devlin N, Marsh K, Baltussen R, Boysen M, Kalo Z, et al. Multiple criteria decision analysis for health care decision making: an introduction: report 1 of the ISPOR MCDA emerging good practices task force. Value Health. 2016;19:1–13. doi:10.1016/j.jval.2015.12.003.

Wang Y-M, Elhag TMS. An approach to avoiding rank reversal in AHP. Decis Support Syst. 2006;42:1474–80. doi:10.1016/j.dss.2005.12.002.

Whitaker R. Validation examples of the analytic hierarchy process and analytic network process. Math Comput Model. 2007;46:840–59. doi:10.1016/j.mcm.2007.03.018.

Xu Y, Levy BT, Daly JM, Bergus GR, Dunkelberg JC. Comparison of patient preferences for fecal immunochemical test or colonoscopy using the analytic hierarchy process. BMC Health Serv Res. 2015;15:175. doi:10.1186/s12913-015-0841-0.

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this chapter

Cite this chapter

Danner, M., Gerber-Grote, A. (2017). Analytic Hierarchy Process. In: Facey, K., Ploug Hansen, H., Single, A. (eds) Patient Involvement in Health Technology Assessment. Adis, Singapore. https://doi.org/10.1007/978-981-10-4068-9_11

DOI: https://doi.org/10.1007/978-981-10-4068-9_11

Publisher Name: Adis, Singapore

Print ISBN: 978-981-10-4067-2

Online ISBN: 978-981-10-4068-9

eBook Packages: Medicine, Medicine (R0)