Abstract

LIDAR integrates digital camera, global positioning system (GPS), inertial navigation system, laser ranging and other advanced technology,using GPS to control the attitude of flying platform, obtaining the distance information between receiver and landmark via laser, capturing the image information by digital camera. At last, the distance is expressed as the 3D coordinates of the target landmark, and the image information is expressed as the surface characteristics on the target landmark. In this paper, firstly, the 3D coordinates are speckle noise filtered to realize point cloud de-noising and classification; then the point cloud of classification is plain rasterized to achieve the transformation from plane coordinates to image coordinates, and the image coordinates is processed to be corresponding elevation of grid unit by incremental insertion and median filter, at the same, the elevation is filled to the corresponding grid to generate DSM depth image by Gray-Scale transformation. Finally, the SOBEL detection operator is quickly and efficiently used to extract the building boundary characteristics which are applying in digital city construction, three dimensional entity model construction, urban planning and construction.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Preface

LIDAR integrates digital camera, global positioning system (GPS), inertial navigation system, laser ranging and other advanced technology, using GPS to control the attitude of flying platform, obtaining the distance information between receiver and landmark via laser and the distance is quickly and efficiently expressed to be 3D coordination information of the measured object by coordination transformation. The massive data obtained by this method are the basics in feature extraction of landmark. The building is an important symbol of city, its boundary information are important applied in the urban planning and construction, disaster prevention, three-dimensional digital simulation and many other fields. Therefore, how to quickly and accurately extract the building boundary information from the massive LIDAR point cloud data [5] has become a hot research topic at present.

Guo et al., University of Tokyo, Japan [1] combine the high resolution satellite images and airborne laser scanning data to extract buildings. You et al. [2] interpolate and encrypt the 3D point cloud data to generate DSM depth image and extract the building roof boundary by image data segmentation, boundary extraction and normalization and many other steps. Zeng et al. [9] build TIN model using the discrete point cloud data obtained from LIDAR, filter the boundary line of the whole triangulation network by using the spatial geometric relationship of the TIN model point, and obtain the initial boundary point; regard the point that change significantly on the boundary slope as turning point; further, expand the turning point in the range of point cloud to detect all the expansion points in the boundary; at last, use the expansion point to construct the building roof boundary. In the point cloud de-noising, Xiao et al. [10] put forward the multi-temporal polarization SAR data speckle noise filtering algorithm based on scattering model. The method uses the multi-temporal polarization SAR data to realize the speckle noise suppression on the basis of keeping the main scattering characteristic of the pixel.

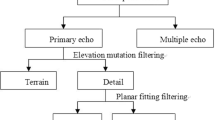

Based on the de-noising and classification of LIDAR point clouds, this paper rasterizes the classified building point cloud to construct DSM depth image [4], then uses edge detection operator to extract the building boundary and obtains the building’s total external contour. The process of building boundary extraction is shown in Fig. 1:

2 Point Cloud Denoising and Classification

2.1 Principle and Method of Point Cloud Denoising

By using speckle noise filtering of multi-temporal point cloud, the speckle noise can be effectively removed. De-noising principle: choose the pixel that is same in the main scattering of the central pixel in the window, and averagely suppress the speckle noise through the covariance. The realized process: obtain the polar covariance matrix from the polar SAR image scattering matrix; decompose the polar covariance matrix in the way of Freeman-Durden to get 3 scattering components including surface scattering, volume scattering and double scattering; classify the pixel by using unsupervised classification; choose 9 × 9 moving window to do the speckle noise filter processing in the basic of classification, choose the pixel that is same in the main scattering of the central pixel in the window, and averagely suppress the speckle noise through the covariance. In order to keep up the point cloud features, remain the central pixel which has the max total power and is surface scattering or double scattering in the moving window. This can effectively remove the noise, but also maintain the edge texture information and the characteristics of the target point.

2.2 Classification Principle of Point Cloud Data

The principle of classification of the building: firstly, the landmark is divided into separate and small objects. The smaller division values are, the more careful in the subdivision. Then disengage the trees by normalized difference vegetation index and distinguish the roads and the buildings by using rectangular degree tool. Lastly, combine the nearby elements into an object and disengage the building point cloud (Figs. 2 and 3).

3 DSM Grayscale Image Generation

3.1 The Principle of Generating DSM Depth Image

DSM (Digital Surface Model) depth image is a kind of raster data, which has the advantage of two-dimensional plane reflecting three-dimensional features. LIDAR acquires discrete vector point. In order to generate the DSM, the horizontal coordinate (X, Y) of discrete vector point needs to be transformed to image coordinates (I, J), and realize the grid transformation of the vector point cloud. In raster data, each pixel (I, J) has the corresponding elevation value. In the actual operation, there will be a pixel corresponding a number of LIDAR points, on this occasion, use the median filtering algorithm to calculate elevation value which is regarded as the pixel value; When a pixel does not correspond to any LIDAR point, use the incremental inserting algorithm to insert the pixel value in order not to introduce a new elevation. In the end, the elevation value is filled to the corresponding grid to generate DSM depth image and grid transformation Digital Surface Model (DSM).

3.2 The Step of Generating DSM

In order to achieve the grid transformation of the vector point quickly, first of all, convert the plane coordinate of the point cloud that converts the X direction of the plane coordinate system into I direction and the Y direction of the plane coordinate system into J direction. Conversion formula as formula (1.1)

S is grid resolution in the formulate above and S = 1/√n, n is the original point cloud density of the LIDAR. This can insure that 1 m in the X direction respondent to 1/step = √n in the I direction on the grid. (It is the same to the Y direction.) For the target area, every square metre correspondent with the grid of √n * √n = n. And the target areas exactly have n vector points per square metre. It is that n points corresponding to n grid. When a pixel corresponding to multiple point, calculate the pixel corresponds to the elevation values by using the median filtering method. Implementation Methods: Median filtering calculations usually use the mid-value among the height of every point as the height of the grid. Assuming that the window contains n points, order the elevation values from small to large, and then get the sequence of the median as the mid-value. And consider this value as the output value of the median filter (Figs. 4, 5 and 6).

When a pixel corresponding to no point, in order not to introduce new elevation values, we adopt point-by-point interpolation [11] to find out the elevation values that correspond to the pixels. Implementation Methods: Take the adopt which need interpolation as the center of the field, choose the nearby eight points in order to determine the scope of the neighborhood, then calculate the height of the current point through the interpolation arithmetic with the point that falls within the neighborhood in this range. Point by point interpolation model can be expressed as (Figs. 7 and 8):

In order to generate gray image, firstly, read the maximum of x, y coordinates of building point cloud to get the data range; then, determine the side length of the plane grid in the range of point cloud data to create an empty two-dimensional matrix and fill the plane vector point into the related grid. In the end, read the points’ elevation and the elevation maximum of building point cloud, i.e. Zmax and Zmin. According to gray conversion formula, the gray value related to elevation value. Conversion formula:

G(I, J) is the gray value of the point (I, J), Z(I, J) is the elevation value in DSM. The higher the elevation is, the greater the gray value is, the results on the image that will be brighter (Fig. 9).

4 Building Boundary Detection

4.1 Edge Detection Operator [6, 8]

Boundary is the most prominent position in the change of the gray level in an image, it is also the important feature that image segmentation depends on, the foundation and key information resources of the shape and texture characteristics. The boundary features of the image are also a key position of the image matching. There are many classical algorithms in edge detection. Sobel edge detection operator is a typical algorithm of first derivative which can not only eliminate the noise but can also smooth it. Roberts’s edge detection operators finds the edge using the principle of partial difference, and detect the edge position through the gradient change of the two adjacent pixels in the diagonal direction. So the detection effect in the vertical direction is better than the oblique detection. Prewitt edge detection operator also use first differential to carry on the edge detection, it carrys on the detection using the extreme value that is acquired among the gray-value difference of the adjacent point that is located on the up and down or so of the pixel point. Thus it can smooth the noise to some extent. Laplace edge detection operator is a kind of second differential operator, and it is suitable for the situation that only cares about the edge position and don’t consider the surrounding gray level difference by using the properties of isotropy. Laplace edge detection operator does well in the procession of the noiseless images because it responses strongly to the independent pixel response. Candy edge detection operator is a kind of edge detection operator that calculates the gradient magnitude and direction through the finite difference. First of all, it does the convolution operation for the images to reduce the noises by carrying out the Gaussian filter, then inhibit the maximum of the gradient’s size, and keep the biggest point during the gradient change, finally adopt the double threshold algorithm for testing and the edge of the connection.

4.2 Sobel Operator Theory and Testing Results [3]

Sobel operator is adopted for edge detection through the practical comparisons on a variety of edge detections [5, 7]. It’s strength is: Detect the edge points and suppress the noise effectively at the same time, the detection of the rear edge width is two pixelsat least. The sobel is obtained through the convolution of two convolution kernel g1(x, y) and g2(x, y) that is carried out on the original image f(x, y). It’s mathematical expression is:

In fact, the algorithms of the Sobel edge operator are carrying on the weighted average firstly, and then carry on the differential operation.

We can use difference instead of the first order partial derivative operator, it’s computing method is:

The calculation template of the sobel operator’s vertical direction and horizontal direction is as shown in figure, the former can detect the image edge of the horizontal direction, and the later can detect the image edge of the vertical direction, in the course of practical application, each pixel of the image is done with the two convolution kernels for convolution operation, and get the maximize as a result to put out, and the calculation result is an image that reflects the edge gradient (Fig. 10 and Tables 1 and 2).

5 Conclusions

As an important symbol of a city, buildings play a vital role in the process of urban digitization. The extraction of the building boundary can help people to grasp the building area grandly, provides the basis for urban planning and the construction of 3D models. Our paper generates DSM depth image based on the de-noising of point cloud classification the rasterization of plane coordinates and the conversion of height gray, finally extracts the complete building boundary. Through the analysis of this thesis, we can see:

-

a.

LIDAR technology can process the acquisition of the building’s point cloud efficiently and quickly.

-

b.

It is a good way to retain boundary information using the above methods for building boundary extraction.

-

c.

The selection of multi-resolution mesh influences the effect of the boundary extraction. At the same time, it will produce serrated burrs in the process of extracting boundary that need to be further optimization for practical application.

References

Guo, T., Yoshifumi Y.: Combining high resolution satellite imagery and airborne laser scanning data for generating Bareland and DEM in urban areas. In: International Workshop on Visualization and Animation of Landscape, Kunming, China, 26–28 February 2002. XXX IV Part: 5/W3

You, H., Su, L., Li, S.: Automatic extraction of DSM data from airborne 3D imaging instrument. J. Wuhan Univ. 27(4), 408–413 (2002)

Cui, J., Sui, L., Xu, H., Zhao, D.: LiDAR data building extraction based on edge detection algorithm. J. Surv. Mapp. Sci. Technol. 02, 98–100 (2008)

Wang, B.: The Extraction of LiDAR Point Cloud Data Based on the Edges of the Building. Chang’an University, Xi’an (2014)

Wei, Z., Yang, B., Li, Q.: Fast extraction of the boundary of the building in the vehicle borne laser scanning point cloud. J. Remote Sens. 02, 286–296 (2012)

Meng, F., Li, H., Wu, K.: Building feature line LIDAR point cloud data extraction. Sci. Surv. Mapp. (15405), 97–99+108 (2008)

Zhao, Y.: Research on Urban Building Extraction Based on Airborne LIDAR Data. Jilin University, Changchun (2011)

Yang, P.: Research on Building Extraction and Modeling Based on Airborne LiDAR Point Cloud Data. Liaoning Technical University, Fuxin (2013)

Zeng, Q., Mao, J., Li, X., Liu, X.: Roof boundary extraction of building LiDAR point cloud. J. Wuhan Univ. (Inf. Sci. Ed.) 3404, 383–386 (2009)

Xiao, K., Wang, Zuo, T., Song, C., Yang, R., Han, X., Wang, Z.: The model of multiple phase polarization SAR speckle noise filtering algorithm. Surv. Mapp. Sci. 2, 99–103+124 (2014)

Lan, Y., Wang, M., Liu, S., Dai, X.: Point by point interpolation method to establish the DEM study. Sci. Surv. Mapp. 1, 214–216 (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Wang, M., Yang, G., Zhang, X., Lu, L. (2017). Research on Rapid Extraction Method of Building Boundary Based on LIDAR Point Cloud Data. In: Yuan, H., Geng, J., Bian, F. (eds) Geo-Spatial Knowledge and Intelligence. GRMSE 2016. Communications in Computer and Information Science, vol 698. Springer, Singapore. https://doi.org/10.1007/978-981-10-3966-9_46

Download citation

DOI: https://doi.org/10.1007/978-981-10-3966-9_46

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3965-2

Online ISBN: 978-981-10-3966-9

eBook Packages: Computer ScienceComputer Science (R0)