Abstract

How to build a good appearance descriptor for tracking target is a basic challenge for long-term robust tracking. In recent research, many tracking methods pay much attention to build one online appearance model and updating by employing special visual features and learning methods. However, one appearance model is not enough to describe the appearance of the target with historical information for long-term tracking task. In this paper, we proposed an online adaptive multiple appearances model to improve the performance. Building appearance model sets, based on Dirichlet Process Mixture Model (DPMM), can make different appearance representations of the tracking target grouped dynamically and in an unsupervised way. Despite the DPMM’s appealing properties, it characterized by computationally intensive inference procedures which often based on Gibbs samplers. However, Gibbs samplers are not suitable in tracking because of high time cost. We proposed an online Bayesian learning algorithm to reliably and efficiently learn a DPMM from scratch through sequential approximation in a streaming fashion to adapt new tracking targets. Experiments on multiple challenging benchmark public dataset demonstrate the proposed tracking algorithm performs 22 % better against the state-of-the-art.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

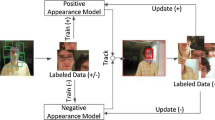

Object tracking plays an important role in numerous vision applications, such as motion analysis, activity recognition, visual surveillance and intelligent user interfaces. However, while much progress has been made in recent years, it is still a challenging problem to track a moving object in a long term in the real-world because of the variations of tracking environment such as view port exchanging, illuminance varying, and etc. For visual tracking problem, an appearance model is used to represent the target object and predicted the likely states of tracking target in future frame [1]. However, using one appearance model is not suitable to describe all the historical appearance information, especially for long term tracking task. So we mainly focused on building multiple appearance models dynamically in an unsupervised way in order to adapt the changing appearance of tracking target (Fig. 1).

In this paper, we used Bayesian non-parametric clustering method to cluster multiple different appearances dynamically. It can cover more aspects of the target appearance to make the proposed algorithm more robust to abrupt appearance changes, and the number of clusters can be inferred from the observation. Among the different probabilistic models, Bayesian non-parametric method has several properties which suit the object tracking application well. In particular, DPMM represents mixture distributions with an unbounded number of components where the complexity of the model adapts to the observed data. This property is important for building multiple appearance models dynamically. In general, the number of appearances is uncertain and varying over time.

However, despite the appealing properties of DPMM, it characterized by computationally intensive inference procedures, which often based on Gibbs samplers [2]. While Gibbs sampling can be an appropriate inference mechanism when execution time is not an issue. It is not applicable in visual tracking, as it needs more faster inference. In [3] a variational inference method which maximizes a lower bound to the true underlying distribution and after each iteration, the obtained parameters define a distribution which approximates the true one in a properly defined way. However, variational inference method is extremely vulnerable to local optima for non-convex unsupervised learning problems, and is frequently yielding poor solutions.

In visual tracking literature, the appearance model based on BNP is not applied as usual as the parametric methods [4]. The main strategy of the BNP tracking methodology is based on three aspects shown as follows: we need to solve (1) how to represent the observation of tracking target by Bayesian non-parametric models, (2) how to create multiple appearance models dynamically without knowledge of cluster numbers and model parameters to adapt in tracking environment variation, (3) how to update multiple appearance models effectively and reliably in tracking process.

Our proposed algorithm is mostly inspired by [5, 6] which are online Bayesian learning algorithm to estimate DP mixture models. This method does not require random initialization like Gibbs samplers. Instead, it can reliably and efficiently learn a DPMM from scratch through sequential approximation in a single pass. The algorithm takes data in a streaming fashion, and thus can be easily adapted to new tracking target.

The rest of the paper is organized as follows: Sect. 2 reviews some of the related work. Section 3 reviews Bayesian non-parametric model on which our proposed model based. Section 4 introduces multiple appearances modeling and representation, and proposes related probabilistic distributions which can describe the generation process of tracking features. Section 5 introduces an online sequential Bayesian method to build multiple appearance models. Section 6 presents the framework of the proposed tracking algorithm. Section 7 reports the experimental results. Section 8 makes the conclusion of the paper.

2 Related Work

There is a rich literature in object tracking approatches [7]. As a main component in tracking algorithms, tracking target appearance modeling plays a key role in tracking performance. A good appearance representation should have strong description or discrimination power to distinguish the target from the background. In order to adapt to the appearance variations of the target during tracking, there are many adaptive appearance models have been proposed for object tracking including both generative and discriminative methods.

For generative appearance modeling methods, Jepson et al. [8] learn a Gaussian mixture model via an online expectation maximization algorithm to account for target appearance variations during tracking. Incremental subspace methods have also been used for online object representation [9]. This method uses target observations obtained online to learn a linear subspace for object representation. Since the appearance of a target in a long-time interval may be quite different, these generative models may not describe the appearance variations of the target well.

For discriminative appearance modeling methods, Avidan et al. [10] use online boosting method for tracking. They proposed an ensemble tracking framework to construct a strong classifier to distinguish the target from the background. Babenko et al. [11] use Multiple Instance Learning (MIL) instead of traditional supervised learning to avoid the inaccuracy accumulation problem caused by self-learning. In these methods, tracking is usually treated as a binary classification problem. In order to train and update the classifiers, samples usually needs to be correctly labeled, which may not be available in many real tracking applications.

The most related methods to our model is [12], which proposed the original Adaptive Multiple Appearance Model (AMAM) framework to maintain not only one appearance model as many other tracking methods but appearance model set to describe all historical appearances of the tracking target during a long term tracking task. This method employed DPMM to build multiple appearance models unsupervised to tackle drifting problem, and experiment in several public datasets shows that this tracker has high tracking performance compared with several other state-of-the-art. In order to infer the number of different appearances underlying tracking observations, this tracker resorts to Gibbs sampler [2] for approximate inference and also requires random initialization of components. However, as this sampler needs to maintain the entire configuration, the computational complexity of this tracker is quite high, which limits its applications in real-time scenarios.

Compared with the tracking methods as described above, our proposed method shows three mainly characteristics in dealing with appearance variations of the target. Firstly, our method can cluster multiple different appearances dynamically, and the number of clusters can be inferred from the tracking observation. Secondly, in our method, different kinds of tracking target appearances can be modeled by new model or constructed appearance models. It covered various target appearances, which made the proposed method more robust to abrupt appearance changes. Finally, our method begins with an empty model and progressively refines the models as tracking observation come in, adding new appearance models on the fly when needed.

3 Bayesian Non-parametric Model

The Dirichlet process (DP) introduced in [14], is a popular nonparametric stochastic process that defines a distribution over probability distributions. The DP is parameterized by a base distribution H which has corresponding density \(h(\mu )\), and a positive scaling parameter \(\alpha >0\). We denote a DP as follows:

The DP is most commonly used as a prior distribution on the parameters of a mixture model when the number of mixture components is uncertain. Such a model is called a Dirichlet process mixture model (DPMM) which can be specified as:

Let \(z_{i}\) indicate the subset, or cluster, associated with the \(i^{th}\) observation, the DP mixture model can also be modeled by using the Chinese Restaurant Process (CRP) representation [17] of the DP, leading to the followings:

So, a model equivalent to the DPMM using the CRP can be specified as:

4 Multiple Appearance Modeling and Representation

In this section, our goal is to develop a probabilistic method to cluster multiple different appearances unsupervised, which can cover aspects of the target appearance. In order to do so, we represent motion features (e.g. HOG, color feature etc.) using histograms, and then, quantize motion feature values of tracking observations to 20 or more levels, which is a common practice for similar histogram-based descriptors, such as [13]. Thus, considering N tracking observations \(X= \{X_{i}\}_{i=1}^{N}\), which can be clustered into K clusters or different appearances, and each \(X_{i}=\{x_{i }\}_{i=1}^{D}\) represents a quantized D dimensional motion feature, and \(x_{i}\) is the corresponding histogram quantized bin counts, which is a quantized integer. With the new tracking observation arrival, the number of clusters became to be varies. Given the cluster assignment for \(i^{th}\) each observation \(X_{i}\), its likelihood for that cluster is \(F(X_{i} | \theta _{k} )\), while the \(\theta _{1:K}\) are drawn from the base distribution of DPMM.

4.1 Exponential Family and Sufficient Statistics

In order to describe motion feature X which is the collection of small integers of histograms and the histogram bin counts, we adopt component distributions of which are members of the exponential family distributions. The base measure of the DPMM will be the conjugate prior, because it has many well-known properties, which can admit efficient inference algorithms. Thus, in this paper, we will consider to describe the distributions as follows:

where a is the log-partition function. We take H to be in the corresponding conjugate family:

where the sufficient statistics are given by the vector \((\theta ^{T},-\alpha (\theta ))\), and \(\lambda = (\lambda _{1}^{T},\lambda _{2})\).

4.2 Model Representation

Specifically, we choose Multinomial distribution \(F(X_{i}|\theta )\), which we denote \(Mult(\theta _k;n) \theta _{k}=(p_{1},\cdots ,p_{D})\), is a discrete distribution over D dimensional non-negative integer vectors \(X_{i}=(x_{1},x_{2},\cdots x_{D})\) where \(\sum _{i=1}^{D} x_i=n\). The probability mass function is given as follows:

The cluster prior \(H(\theta | \lambda )\) is represented by a Dirichlet distribution which is conjugate to \(F(X_{i} | \theta )\). We denote cluster prior \(H(\theta | \lambda ) \) as follows, which is a Dirichlet distribution and is conjugate to \(F(X_{i} | \theta )\).

where the normalizing constant is the multinomial Beta function. Because \(H(\lambda )\) is conjugate to \(F(\theta )\), then the marginal joint distribution can be obtained by integrating out \((p_{1},\cdots ,p_{D})\) as follows:

where \(A= \Sigma _{i} \lambda _{i}\) and \(N= \Sigma _{i}n_{i}\), and where \(n_{i}=\) number of \(x_{i}\)’s with value i.

4.3 Multiple Appearance Modeling

When the number of clusters K is estimated, the multiple appearance model can be built. Considering all of the model parameters, which is comprised of the model parameters \(\theta _{1:K}\) and the cluster indicator \(z_{1:N}\), the joint distribution of this Bayesian Non-parametric mixture model can be written as in Eq. (4).

Here, \(z_{i} \in \{1\cdots K\}\) with \(i \in \{1\cdots N\}\) indicates the cluster label of the observation \(X_{i}\) and \(\theta _{k}\) are the parameters for the k-th appearance model. The target of our proposed method is to infer the joint posterior distribution \(p(\theta _{1:K},z_{1:N} |X_{1:N} )\) unsupervised and dynamical, then we can get the parameters \(\theta _{1:K}\) of multiple appearance models.

5 Online Sequential Approximation

In order to infer the joint posterior distribution \(p(\theta _{1:K},z_{1:N} |X_{1:N} )\), we can initialize the components randomly, and then resort to Gibbs sampler for approximate inference [12]. However, this method needs to maintain the entire configuration, so the computational complexity of this tracker is rather high, which limits its applications in real-time scenarios.

We improved it by using an online sequential variational approximation method to learn a DPMM from scratch through sequential approximation in a streaming, which is easily adapted to new observation.

By marginalizing out the cluster assignment \(z_{1:N}\), we obtain the posterior distribution \(p(\theta | X_{1:N})\):

In order to compute the distribution above, it requires enumerating all possible partitions \(z_{1: N}\), which grows exponentially as n increases. To tackle this difficulty, we resort to variational approximation [6] to choose a tractable distribution to approximate \(p(\theta | X)\) as follows:

We begin our tracker with one appearance model (i.e. \(K = 1\)) and progressively refine the model as samples come in, adding new appearance models on the fly when needed. Specifically, when we have \(\rho =(\rho _{1},\rho _{2},\cdots ,\rho _{i})\) and \(v^{(i)}=(v_{1}^{(i)},v_{2}^{(i)},\cdots ,v_{K}^{(i)})\) after processing i frames. To determine \(X_{i+1}\), we can use either of the K existing appearance models or generate a new model \(\theta _{K+1}\). Then the posterior distribution of \(z_{i+1},\theta _{1},\cdots ,\theta _{K+1}\) given \(x_{1},\cdots ,x_{i+1}\) is

Using the tractable distribution \(q(\theta | \rho ,\upsilon )\) to approximate the posterior \(p(z_{i+1},\theta _{1:K+1} | X_{1:i} )\), we get the following:

Then, for our model, the optimal setting of \(q_{i+1}\) and \(v^{(i+1)}\) minimizes the Kullback-Leibler divergence between \(q(z_{i+1} ,\theta _{1:K+1} | \rho _{1:i+1} ,v^{(i+1)} )\) and the approximate posterior in Eq. (8) are given as follows:

with \( \omega _{k}^{(i)}=\sum _{j=1}^i \rho _{j}(k)\), and

Algorithm 1 illustrates the basic flow of our algorithm. More details can be found in [5]. The implementation of this algorithm is under the circumstance where H and F are exponential family distributions that form a conjugate pair. In such cases, base measure h and posterior measures \(v_{k}\) can be represented by natural parameter denoted by \(\lambda \) and \(\zeta _{k}\).

6 Proposed Tracking Algorithm

Given the observation set of the target \(X_{1:t}=[X_{1},\ldots ,X_{t}]\) up to time t, where each \(X_t\) represents a quantized HOG target feature at time t, the target state \(s_{t}\)(motion parameter set) can be determined by the maximum a posteriori(MAP) estimation as follows:

where \(p(s_{t}|X_{1:t})\) can be inferred by the Bayesian theorem in a recursive manner (with Markov assumption)

where \(p(s_{t}|X_{1:t-1})=\int p(s_{t}|s_{t-1})p(s_{t-1}|X_{1:t-1})ds_{t-1}\). The tracking process is governed by a dynamic model, i.e. \(p(s_{t}|s_{t-1})\), and an observation model, i.e. \(p(X_{t}|s_{t})\).

A particle filter method [15] is adopted here to estimate the target state. In the particle filter, \(p(s_{t}|X_{1:t})\) is approximated by a finite set of samples with important weights. Let \(s_{t}=[l_{x},l_{y},\theta ,s,\alpha ,\phi ]\), where \(l_{x},l_{y},\theta ,s,\alpha ,\phi \) denote x, y translations, rotation angle, scale, aspect ratio, and skew respectively. We approximate the motion of a target between two consecutive frames with affine transformation. The state transition is formulated as \(p(s_{t}|s_{t-1})=N(s_{t};s_{t-1},\sum )\) where \(\sum \) is the covariance matrix of six affine parameters. The observation model \(p(X_{t}|s_{t})\) denotes the likelihood of the observation \(X_{t}\) at state \(s_{t}\). The Noisy-OR (NOR) [17] model is adopted for doing this:

where \(\zeta ^{k},k\in (1,2,\ldots ,K)\) represents the multiple appearance model parameters learned from Algorithm 1. The equation above has the desired property that if one of the appearance models has a high probability, the resulting probability will be high as well. Algorithm 2 illustrates the basic flow of our tracking algorithm.

Figure 2 shows how the online adaptive multiple appearances model working. These small face images show the appearance instance belong to each appearance model and the historical instances while tracking. The red rectangle in main frame is the tracking result based on our proposed model, and the green one is the ground truth. With the new tracking observation arrival, the number of clusters became varies.

7 Experiments

To evaluate our tracker, we compared the proposed tracker with 10 latest algorithms using 10 challenging public tracking datasets introduced by [20]. When evaluating the trackers, there are several problems should be discussed. We followed the evaluation methods from [20]. As object tracking is a traditional problem in computer vision, these trackers have quite different frameworks, so that all of them have advantage and disadvantage when meeting different challenges like occlusion and etc. Table 1 shows all the trackers (including our proposed algorithm) and their features and models. Note that in our proposed algorithm the HOG feature can be replaced by other features.

One thing to emphasis is that all the trackers are running with adjusted parameters or simply use the parameters given by their publication for fair evaluation.

As mentioned before, a tracker might face tons of problems listed below in a real usage. According to the [20, 29], we divided these variation into six groups and analyzed some datasets by using this division. In the Table 2, we also add a short form of each challenge on each datasets. Here, the OCC stands for Occlusion, IV stands for Illumination Variation, R stands for Rotation which contains in-place rotation and out-of-place rotation, SV stands for Scale Variation while BC stands for Background Clutters.

One general problem for tracking is that the object may be occluded by other objects for several seconds. While in the dataset Bolt, the main object Bolt just kept the sportsman near him out in some of the frames and this will lead trackers to track on the sportsman near Bolt.

This method we proposed didn’t limited any certain kind of features for tracking task. Better features can get better tracking results. We simply applied HOG feature to implement.

It’s common to use Center Location Error (CE [20]) and Overlap Score (OS [29]), to estimate the performance of the tracker. OS is calculated by the formula \(score = \frac{area(ROI_{T}\cap ROI_{G})}{area(ROI_{T}\cup ROI_{G})}\). In the experiment, the \(area(ROT_{T})\) is the area of bounding box of tracking, and the \(area(ROT_G)\) is the area of the ground truth. The CE is the Euclidean distance between the centers of tracking bounding box and the ground truth.

7.1 Online AMAM vs. Original AMAM [12]

In the previous sections, we compared our new proposed tracking method with AMAM tracking method [12]. As online method benefits the predicting speed on a long-term object, we compared these two methods in the time consumption. Figure 4(a) illustrates the DPMM time consumption of each frame in Trellis for both OAMAM method and AMAM method. It’s obvious that AMAM method has a quite unaffordable time cost tracking for a long time while our online method performs relatively stable.

Besides the time cost, they have a slightly difference in forming appearance models during the tracking task. Figure 4(b) shows the amount of appearance models for both methods in every frame in dataset Trellis.

7.2 Qualitative Comparison

Our tracker has a robust performance while solving different challenges in different video sequences. Typical background problem can be seen in MountainBike, Crossing. In the Fig. 3 in Crossing, when a car was passing by the pedestrian, they shared similar dark colors in the frame 31 and result in the ASLA, Struck, and TLD’s failure in tracking. In the frame 73 to frame 85 the target pedestrian blurred himself with the dark shade and only Struck, MIL and our tracker catched the target successfully(even Struck failed to track the pedestrian in the frame 31). In MountainBike, our tracker still performed well while the target was on the grass or dark shade in frame 62(VTD lost the target entirely from this frame), frame 150, frame 199, and frame 225. During the whole period of these two video sequences, our tracker tracked the target perfectly and constantly performed better than other trackers.

At the same time, there are view port varying problem in Bolt and rotation challenge in David2. In Bolt, the view port of the camera varied three times. It firstly lied in frame 97, as shown in the Fig. 3 Bolt was running towards the camera. The Second variation lied in frame 137 while Bolt was running parallel to the camera. The third variation lied in frame 252 while Bolt running away from the camera. Most of the trackers lost the target at the first stage, except four were still catching the Bolt. Only three trackers tracked Bolt successfully at the second stage. At the last stage, only our tracker was still working. In David2, there were abundant in-plane rotations and out-of-plane rotations. During the out-of-plane rotation(from the frame 79 to 115), half of the trackers had high CE rate even they did not lost the target.

In Trellis and Car4, there are significant illumination variations. In Trellis, The illumination of target varied from all dark to half dark during frame 139 to frame 213, and changed to bright in the frame 230. All the bounding box of these frames is shown in Fig. 3. In the frame 282 we could clearly find that only two trackers (ours and MIL) succeed in tracking the target while others drifted away because of the dark background. In Car4, the video sequences undergo serious illumination changes when the vehicle ran through a tunnel or under trees. At the frame 182, most of trackers performed well except two trackers fail to track the vehicle. But in the frame 207, 6 trackers enlarged its bounding box and drift away in frame 233 while the vehicle ran outside the tunnel. After the frame 490 and passed several trees and billboards, only 4 trakers including our tracker, MIL, ASLA and VTD were succeed in tracking target, and only our tracker didn’t falsely enlarge its bounding box comparing to the ground truth.

We employed the protocol above to finish a comparison and analyzed all the data after evaluating. By adopting the OPE evaluation matrics, we compared the performance of trackers in all testing datasets with the same testing result shown in Fig. 5. From Fig. 5, we found that our tracking method outperforms state-of-art in the OPE evaluation on these 10 datasets. In the plot we can also infer that our tracking method is approximately 22 % better than the second best tracking method.

8 Conclusion

In this paper, we proposed a new online adaptive multiple appearances model for long-term tracking. This approach remained more historical information on appearances of tracking target to avoid the target drifting or lost during the tracking caused by varying illumination or pose changing. We employed HOG to build the basic appearance representation of the tracking target in our algorithm framework. Multiple appearances representations were grouped unsupervised and dynamically by an online sequential approximation BNP learning method. Tracking result can be selected from candidate targets, which were predicted by trackers based on those appearance models, by using Noisy-OR method. Experiments on public datasets show that, our tracker has low variation (less than 0.002), low time cost for real-time tracking, and high tracking performance (22 % better than other 10 trackers in average) when compared to the state-of-the-art.

References

Li, Y., Ai, H., Yamashita, T., Lao, S., Kawade, M.: Tracking in low frame rate video: a cascade particle filter with discriminative observers of different life spans. IEEE Trans. Pattern Anal. Mach. Intell. 30(10), 1728–1740 (2008)

An, S., An, D.: Stochastic relaxation, Gibbs distributions, the Bayesian restoration of images. IEEE Trans. Pattern Anal. Mach. Intell. 6, 721–741 (1984)

Blei, D.M., Jordan, M.I.: Variational inference for dirichlet process mixtures. Bayesian Anal. 1(121–144), 1 (2005)

Stauffer, C., Grimson, W.E.L., Adaptive background mixture models for real-time tracking. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition, vol. 2, pp. 246–252. IEEE, Los Alamitos, August 1999

Lin, D.: Online Learning of nonparametric mixture models via sequential variational approximation. In: Proceedings of NIPS (2013)

Ulker, Y., Gunsel, B., Cemgil, A.T.: Sequential Monte Carlo samplers for Dirichlet process mixtures. In: AISTATS 2010 (2010)

Cannons, K.: A review of visual tracking. Department of Computer Science, York Univ., Toronto, ON, Canada, Technical report CSE-2008–07 (2008)

Jepson, A.D., Fleet, D.J., El-Maraghi, T.F.: Robust online appearance models for visual tracking. IEEE Trans. Pattern Anal. Mach. Intell. 25(10), 1296–1311 (2003)

Ross, D., Lim, J., Lin, R.-S., Yang, M.-H.: Incremental learning for robust visual tracking. IJCV 77(1–3), 125–141 (2008)

Avidan, S.: Ensemble tracking. PAMI 29(2), 261–271 (2007)

Babenko, B., Yang, M.-H., Belongie, S.: Visual tracking with online multiple instance learning. In: CVPR, pp. 983–990 (2009)

Tang, S., Zhang, L., Chi, J., Wang, Z., Ding, G.: Adaptive multiple appearances model framework for long-term robust tracking. In: Ho, Y.-S., Sang, J., Ro, Y.M., Kim, J., Wu, F. (eds.) PCM 2015. LNCS, vol. 9314, pp. 160–170. Springer, Heidelberg (2015). doi:10.1007/978-3-319-24075-6_16

Lowe, D.G.: Object recognition from local scale-invariant features. In: Proceedings of ICCV, pp. 1150–1157 (1999)

Ferguson, T.: A Bayesian analysis of some nonparametric problems. Ann. Stat. 1(2), 209–230 (1973)

Isard, M., Blake, A.: CONDENSATION-conditional density propagation for visual tracking. Int. J. Comput. Vis. 29(1), 5–28 (1998)

Pitman, J.: Combinatorial Stochastic Processes. Lecture Notes in Mathematics, vol. 1875. Springer, Heidelberg (2006)

Viola, P., Platt, J.C., Zhang, C.: Multiple instance boosting for object detection. In: Proceeding of Neural Information Processing Systems, pp. 1417–1426 (2005)

Bernardo, J.M., Smith, A.F.M.: Bayesian Theory. Wiley, New York (1994)

Bissacco, A., Yang, M., Soatto, S.: Detecting humans via their pose. Adv. Neural Inf. Process. Syst. 19, 169 (2007)

Wu, Y., Lim, J., Yang, M.: Online Object Tracking: a benchmark. In: Proceeding IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 2411–2418 (2013)

Babenko, B., Yang, M., Belongie, S.: Robust object tracking with online multiple instance learning. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 33(8), 1619–1632 (2011)

Oron, S., Bar-Hillel, A., Levi, D., Avidan, S.: Locally orderless tracking. In: CVPR (2012)

Jia, X., Lu, H., Yang, M.: Visual tracking via adaptive structural local sparse appearance model. In: CVPR (2012)

Kwon, J., Lee, K.M.: Visual tracking decomposition. In: CVPR (2010)

Bao, C., Wu, Y., Ling, H., Ji, H.: Real time robust L1 tracker using accelerated proximal gradient approach. In: CVPR (2012)

Grabner, H., Grabner, M., Bischof, H.: Real-time tracking via online boosting. In: BMVC (2006)

Hare, S., Golodetz, S., Saffari, A., Vineet, V., Cheng, M.M., Hicks, S., Torr, P.: Struck: structured output tracking with kernels. In: ICCV (2011)

Zhang, T., Ghanem, B., Liu, S., Ahuja, N.: Robust visual tracking via multi-task sparse learning. In: CVPR (2012)

Everingham, M., Van Gool, L., Williams, C.K.I., et al.: The Pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 88(2), 303–338 (2010)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Tang, S., Zhang, L., Tan, X., Yan, J., Ding, G. (2016). Online Adaptive Multiple Appearances Model for Long-Term Tracking. In: Tan, T., Li, X., Chen, X., Zhou, J., Yang, J., Cheng, H. (eds) Pattern Recognition. CCPR 2016. Communications in Computer and Information Science, vol 662. Springer, Singapore. https://doi.org/10.1007/978-981-10-3002-4_42

Download citation

DOI: https://doi.org/10.1007/978-981-10-3002-4_42

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-3001-7

Online ISBN: 978-981-10-3002-4

eBook Packages: Computer ScienceComputer Science (R0)