Abstract

Preprocessing the noisy sinogram before reconstruction is an effective and efficient way to address the low-dose X-ray computed tomography (CT) problem. The objective of this paper is to develop a low-dose CT image reconstruction method based on a statistical sinogram smoothing approach. The proposed method is cast into a variational framework, and its solution is obtained by minimizing an energy functional that consists of two terms, viz., a data fidelity term and a regularization term. The data fidelity term is obtained by minimizing the negative log likelihood of the signal-dependent Gaussian probability distribution that models the noise in low-dose X-ray CT. The regularization term is a nonlinear CONvolutional Virtual Electric Field Anisotropic Diffusion (CONVEF-AD) based filter, which is an extension of the Perona–Malik (P–M) anisotropic diffusion filter. Its main task is to address the ill-posedness of the problem defined by the first term alone. The proposed method can deal with both signal-dependent and signal-independent Gaussian noise, i.e., mixed noise. For experimentation, two different sinograms generated from test phantom images are used, and the performance of the proposed method is compared with that of existing methods. The results show that the proposed method outperforms several recent approaches and is capable of removing the mixed noise in low-dose X-ray CT.


Keywords

- X-ray computed tomography

- Statistical sinogram smoothing

- Image reconstruction algorithm

- Noise reduction

- Anisotropic diffusion

1 Introduction

Nowadays, X-ray computed tomography (CT) is one of the most widely used medical imaging modalities for clinical applications such as diagnosis and image-guided interventions. Recent advances in medical imaging have improved physicians' understanding of diseases and the treatment of patients. However, X-ray radiation also has potentially harmful effects, including a lifetime risk of genetic, cancerous, and other diseases caused by the overuse of diagnostic imaging [1]. Efforts should therefore be made to reduce the radiation dose in medical applications. To this end, many algorithms for CT radiation dose reduction have been developed during the last two decades [1–3]. Among them, preprocessing the noisy and undersampled sinogram with statistical iterative methods has shown great potential to reduce the radiation dose while maintaining image quality, as compared with the filtered backprojection (FBP) reconstruction algorithm. For sinogram smoothing, it is important to choose an effective regularization term (prior) from the widely studied ones [4–6]. The main aim of a regularization prior is to reduce noise while preserving edges, thereby retaining the important diagnostic information. However, a drawback of the regularization term is over-penalization of the image or its gradient, which may lead to the loss of fine structures and details. To address this drawback, several priors, including smoothing and edge-preserving regularization terms, and iterative algorithms have been studied with varying degrees of success to obtain high-quality CT images from low-dose projection data.
In view of these problems, Perona and Malik (P–M) [8] developed an anisotropic diffusion process based on a nonlinear partial differential equation (PDE) for image smoothing that preserves small structures. In this process, the diffusion strength is controlled by the gradient magnitude in order to preserve edges. Ghita and Whelan [9] addressed the over-smoothing problem of the P–M model by implementing anisotropic diffusion with a Gradient Vector Flow (GVF) field. However, this approach produces an undesirable staircase effect around smooth edges. Because of this effect, it cannot remove isolated noise accurately, and it falsely detects as edges the boundaries between blocks that actually belong to the same smooth area. Moreover, because the sinogram data contain mixed noise, i.e., signal-dependent and signal-independent Gaussian noise, these methods cannot be applied directly. GVF fields also cannot reliably locate edges when images are corrupted by extraneous or Gaussian noise, so their denoising of images with mixed noise remains unsatisfactory.

In this work, low-dose CT image reconstruction is improved by modifying the CONVEF-based P–M approach, which is used as a prior in the denoising process. The proposed modified CONVEF-AD serves as a regularization (smoothing) term for low-dose sinogram restoration that deals with mixed (Poisson + Gaussian) noise as well as with ill-posedness. The proposed reconstruction model offers several desirable properties, such as better noise removal, lower computational time, and preservation of edges and other structures; it also effectively overcomes the staircase effect and performs well in low-dose X-ray CT image reconstruction. The results are compared with some recent state-of-the-art methods [5, 6].

The rest of the paper is organized as follows. Section 2 presents the methods and models used in this work. Section 3 presents the proposed variational framework for sinogram restoration using the CONVEF-AD regularized statistical image reconstruction method. Section 4 discusses the results of simulation experiments with the proposed CONVEF-AD reconstruction method on the simulated Shepp–Logan and CT phantom data. Conclusions are given in Sect. 5.

2 Methods and Models

Noise modeling of the projection (sinogram) data, specifically for low-dose CT, is essential for statistics-based sinogram restoration algorithms. Low-dose (low-mAs) CT sinogram data usually contain severe noise, which causes serious artifacts in the reconstructed images. This noise combines a signal-dependent Poisson component and a signal-independent Gaussian component, and the sample mean and variance of the data follow a nonlinear relationship. Several statistical sinogram smoothing approaches are available in the literature to address this issue, such as penalized weighted least squares (PWLS) [7] and Poisson likelihood (PL) [3] methods [5]. However, these methods often suffer from noticeable resolution loss, especially when a constant noise variance is assumed over all sinogram data [1–3].

The formation of X-ray CT images can be modeled approximately by a discrete linear system as follows:

\[ g = Af, \tag{1} \]

where \(f = (f_{1}, f_{2}, \ldots, f_{N})^{T}\) is the original image vector to be reconstructed, N is the number of voxels (pixels), the superscript T denotes the transpose, \(g = (g_{1}, g_{2}, \ldots, g_{M})^{T}\) is the measured projection data vector, M is the total number of sampling points in the projection data, and \(A = \{a_{ij}\}\), i = 1, 2, …, M, j = 1, 2, …, N, is the M × N system matrix that relates f to g. The value of \(a_{ij}\) is commonly calculated as the intersection length of projection ray i with pixel j.
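As a minimal sketch of the discrete model \(g = Af\), the following Python snippet builds a hypothetical system matrix for a 2 × 2 image probed by two horizontal and two vertical rays, with every intersection length assumed to be 1. Real scanners use much larger, sparse matrices computed from the scan geometry; this toy geometry is only an illustration.

```python
import numpy as np

# Hypothetical toy geometry: a 2 x 2 image (N = 4 pixels in raster order)
# probed by 2 horizontal and 2 vertical rays (M = 4 rays).
# Each ray crosses two pixels with an assumed intersection length of 1.0.
A = np.array([
    [1.0, 1.0, 0.0, 0.0],  # ray 1: top row      (pixels 0, 1)
    [0.0, 0.0, 1.0, 1.0],  # ray 2: bottom row   (pixels 2, 3)
    [1.0, 0.0, 1.0, 0.0],  # ray 3: left column  (pixels 0, 2)
    [0.0, 1.0, 0.0, 1.0],  # ray 4: right column (pixels 1, 3)
])

f = np.array([0.1, 0.2, 0.3, 0.4])  # pixel attenuation values (raster order)
g = A @ f                           # line integrals, i.e. the projection data
print(g)
```

Each entry of `g` is the sum of the pixel values along one ray, weighted by the intersection lengths in the corresponding row of `A`.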

It has been shown in [3, 10] that two principal sources of noise arise during CT data acquisition: X-ray quanta noise (signal-dependent, following a compound Poisson distribution) and system electronic background noise (signal-independent, following a Gaussian or normal distribution with zero mean). However, it is numerically difficult to implement these models directly for data noise simulation, and several reports have discussed approximating this model by a Poisson model [4]. In practice, the measured transmission data \(N_i\) can be assumed to follow statistically a Poisson distribution superimposed on Gaussian-distributed electronic background noise [2]:

\[ N_i \sim \text{Poisson}(\tilde{N}_i) + \text{Normal}(m_e, \sigma_e^{2}), \tag{2} \]

where \(m_{e}\) and \(\sigma_{e}^{2}\) are the mean and variance of the Gaussian electronic background noise and \(\tilde{N}_{i}\) is the mean of the Poisson distribution. In practice, the mean \(m_{e}\) of the electronic noise is generally calibrated to zero (i.e., ‘dark current correction’), and the associated variance changes slightly with the settings of tube current, voltage, and duration on the same CT scanner [5]. Hence, within a single scan, the variance of the electronic background noise can be considered uniform.
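The noise model of Eq. (2) can be simulated as in the following sketch. It assumes the Beer–Lambert relation \(\tilde{N}_i = N_0 \exp(-g_i)\) between the ideal line integral and the mean photon count, a single incident intensity `N0` for all rays, and illustrative values for `N0` and \(\sigma_e^{2}\); none of these values come from the paper, and `simulate_low_dose` is a hypothetical helper name.

```python
import numpy as np

def simulate_low_dose(g_clean, N0=1e4, sigma_e2=10.0, m_e=0.0, seed=0):
    """Simulate Poisson + Gaussian transmission data and log-transform it.

    Assumes the Beer-Lambert mean count N~_i = N0 * exp(-g_i); N0 and
    sigma_e2 are illustrative values, not parameters from the paper.
    """
    rng = np.random.default_rng(seed)
    mean_counts = N0 * np.exp(-g_clean)                   # \tilde{N}_i
    counts = (rng.poisson(mean_counts)                    # quantum noise
              + rng.normal(m_e, np.sqrt(sigma_e2), g_clean.shape))  # electronic noise
    counts = np.maximum(counts, 1e-3)                     # keep the log well defined
    return np.log(N0 / counts)                            # noisy sinogram g_i
```

Lowering `N0` mimics a lower tube current: the relative Poisson fluctuation grows and the electronic noise becomes comparatively more important.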

Based on the noise model in Eq. (2), the calibrated and log-transformed projection data approximately follow a Gaussian distribution but may contain some isolated noise points. After these noise points are removed from the projection data, the sample mean and variance of the data are related as follows [6]:

where \(N_{0i}\) is the incident X-ray intensity, \(\tilde{g}_{i}\) is the mean of the log-transformed ideal sinogram datum \(g_{i}\) on path i, and \(\sigma_{e}^{2}\) is the variance of the background Gaussian noise. In the implementation, the sample mean \(\tilde{g}_{i}\) can be approximated by averaging over the neighboring 3 × 3 window. The parameters \(N_{0i}\) and \(\sigma_{e}^{2}\) can be measured as part of the standard routine calibration of CT systems [3].
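The mean–variance relation can be sketched as follows, assuming Eq. (3) takes the form widely used in the low-dose CT literature, \(\sigma_i^{2} = \frac{1}{N_{0i}} \exp(\tilde{g}_i)\big[1 + \frac{1}{N_{0i}} \exp(\tilde{g}_i)(\sigma_e^{2} - 1.25)\big]\), with a single \(N_0\) for all rays. The sample mean \(\tilde{g}_i\) is the 3 × 3 neighborhood average described above; replicating the border values is an implementation choice, and both function names are hypothetical.

```python
import numpy as np

def box_mean_3x3(y):
    """Mean over each sample's 3 x 3 neighborhood (border values replicated)."""
    p = np.pad(y, 1, mode="edge")
    h, w = y.shape
    acc = np.zeros_like(y, dtype=float)
    for di in range(3):
        for dj in range(3):
            acc += p[di:di + h, dj:dj + w]
    return acc / 9.0

def sinogram_variance(y, N0, sigma_e2):
    """Assumed mean-variance relation for log-transformed low-dose data."""
    y_mean = box_mean_3x3(y)            # sample mean \tilde{g}_i
    e = np.exp(y_mean) / N0
    return e * (1.0 + e * (sigma_e2 - 1.25))
```

The variance grows with the attenuation along the ray (through \(\exp(\tilde{g}_i)\)), which is why rays through dense structures are the noisiest in a low-dose sinogram.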

3 The Proposed Model

The sinogram smoothing problem is formulated in the variational framework as the energy minimization

\[ f^{*} = \arg\min_{f} E(f), \tag{4} \]

where the energy functional is defined as follows:

\[ E(f) = E_{1}(f) + \lambda E_{2}(f). \tag{5} \]

In Eq. (5), \(E_{1}(f)\) represents the data fidelity (equivalently, data fitting, data mismatch, or data energy) term, which models the statistical relationship between the estimated projection data f and the measurement g. \(E_{2}(f)\) is the regularization (equivalently, prior, penalty, or smoothness energy) term. The balancing parameter \(\lambda\) controls the degree of influence of the prior relative to the fit between the estimated and the measured projection data.
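For reference, if the sinogram samples are assumed to be mutually independent Gaussian variables with mean \(f_i\) and signal-dependent variance \(\sigma_i^{2}\) (the mean–variance relation of Eq. (3)), the likelihood and the resulting data fidelity term take the weighted least-squares form below. This is a sketch under that independence assumption rather than a copy of the paper's Eqs. (6) and (7); \(\Sigma\) is a symbol introduced here for the diagonal variance matrix, and \(C\) collects the terms that do not depend on \(f\) when the variances are held fixed.

```latex
\begin{aligned}
P(g \mid f) &= \prod_{i=1}^{M} \frac{1}{\sqrt{2\pi\sigma_i^{2}}}
  \exp\!\left(-\frac{(g_i - f_i)^{2}}{2\sigma_i^{2}}\right), \\
-\log P(g \mid f) &= \sum_{i=1}^{M} \frac{(g_i - f_i)^{2}}{2\sigma_i^{2}} + C, \\
E_1(f) &= \tfrac{1}{2}\,(g - f)^{T}\,\Sigma^{-1}\,(g - f),
  \qquad \Sigma = \operatorname{diag}(\sigma_1^{2}, \ldots, \sigma_M^{2}).
\end{aligned}
```

Samples with large variance (noisy, highly attenuated rays) receive small weights \(1/\sigma_i^{2}\), so the regularization term dominates there.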

To obtain the data fidelity term, the joint probability distribution function (pdf) of the measured data is first expressed as [10]:

where \(g = (g_{1}, g_{2}, \ldots, g_{M})^{T}\) is the measured projection data vector. Taking the negative logarithm and ignoring the constant terms, the data fidelity term can be written as:

In the Markov random field (MRF) framework, the smoothness energy is given by the negative log likelihood of the prior [4]. This paper uses a nonlinear CONVEF-based P–M anisotropic diffusion regularization term for two reasons: (i) the integral nature of edge-preserving MRF priors is not well suited to the high continuity of the sparse measured data; (ii) the PDE-based AD model can reach the solution in less computational time. The smoothness function is therefore defined as follows [10]:

where \(\phi(\|\nabla f\|^{2})\) is a function of the squared gradient norm of the image that defines the corresponding energy. The solution of the proposed sinogram smoothing problem in Eq. (4) is obtained by substituting Eqs. (7) and (8) into Eq. (4):

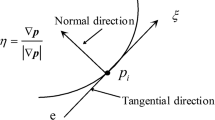
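A discrete version of the total energy \(E(f) = E_1(f) + \lambda E_2(f)\) can be sketched as follows. It assumes the Gaussian weighted least-squares data term and the potential \(\phi(s) = \frac{k^{2}}{2}\log(1 + s/k^{2})\), whose derivative \(2\phi'(s) = 1/(1 + s/k^{2})\) is the rational P–M diffusivity. Both choices, the forward differences with a zero difference at the last row and column, and the names `phi` and `energy` are assumptions for illustration.

```python
import numpy as np

def phi(s, k):
    """Assumed potential phi(s) = (k^2/2) * log(1 + s/k^2), with s = |grad f|^2."""
    return 0.5 * k**2 * np.log1p(s / k**2)

def energy(f, g, var, lam=1.0, k=1.0):
    """E(f) = E1(f) + lam * E2(f) on a discrete grid."""
    e1 = np.sum((g - f) ** 2 / (2.0 * var))            # weighted data fidelity
    fx = np.diff(f, axis=1, append=f[:, -1:])          # forward differences; the last
    fy = np.diff(f, axis=0, append=f[-1:, :])          # column/row difference is zero
    e2 = np.sum(phi(fx**2 + fy**2, k))                 # smoothness energy
    return e1 + lam * e2
```

Because \(\phi\) grows only logarithmically for large gradients, strong edges are penalized far less than under a quadratic smoothness term.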

The functional \(E(f)\) is defined on the set of positive functions \(f \in BV(\Omega)\) such that \(\log f \in L^{1}(\Omega)\). Minimizing Eq. (9) with the Euler–Lagrange equation combined with gradient descent gives the solution:

where div and \(\nabla\) are the divergence and gradient operators, respectively, and \(f_{(t = 0)} = f_{0}\) is the initial condition given by the noisy image. The diffusion coefficient \(c(\cdot)\) is a nonnegative, monotonically decreasing function of the image gradient. It is generally chosen as:

where k is a conductance parameter, also known as the gradient magnitude threshold, that controls the rate of diffusion and the level of contrast at the boundaries. The scale spaces generated by these functions are different; the diffusion coefficient \(c\) favors high-contrast edges over low-contrast ones. A small value of k preserves small edges and other fine details, but its smoothing effect is weak. Conversely, a large value of k gives better denoising but loses small edges and fine details. As reported in the literature [3, 12], the second term in Eq. (9) is a nonlinear AD prior that detects edges in multi-scale space, where the diffusion process is controlled by the gradient of the image intensity in order to preserve edges. However, although the P–M diffusion model can remove isolated noise and preserve edges to some extent, it cannot preserve edge details effectively and accurately, which leads to a blocky staircase effect. Moreover, it gives poor results for very noisy images, and high-gradient areas of the image get smoothed out, which affects fine edges. Therefore, stronger diffusion cannot be allowed for removing noise along edges.
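The two classical P–M diffusivities are sketched below; either could play the role of Eq. (11). The snippet also sketches an adaptive choice of k for Eq. (16), assuming the scaled median absolute deviation used in robust anisotropic diffusion, \(k = 1.4826\,\mathrm{median}(\lvert x - \mathrm{median}(x)\rvert)\) with \(x\) the gradient magnitudes; the factor 1.4826 makes the MAD consistent with the standard deviation for Gaussian data. The helper names and the floor `eps`, which keeps k positive on flat images, are hypothetical.

```python
import numpy as np

def c_exp(grad_mag, k):
    """P-M diffusivity exp(-(|grad f|/k)^2); favors high-contrast edges."""
    return np.exp(-(np.asarray(grad_mag, dtype=float) / k) ** 2)

def c_rational(grad_mag, k):
    """P-M diffusivity 1 / (1 + (|grad f|/k)^2); favors wide regions."""
    return 1.0 / (1.0 + (np.asarray(grad_mag, dtype=float) / k) ** 2)

def mad_scale(x):
    """Robust scale estimate 1.4826 * median(|x - median(x)|)."""
    x = np.asarray(x, dtype=float).ravel()
    return 1.4826 * np.median(np.abs(x - np.median(x)))

def adaptive_k(f, eps=1e-8):
    """Hypothetical adaptive conductance: scaled MAD of the gradient magnitude."""
    gy, gx = np.gradient(np.asarray(f, dtype=float))
    return max(mad_scale(np.hypot(gx, gy)), eps)

# Effect of k at a fixed gradient magnitude of 1:
print(c_exp(1.0, 0.5))   # small k: diffusion nearly stopped across this gradient
print(c_exp(1.0, 5.0))   # large k: this gradient is smoothed
```

With a gradient magnitude of 1, `c_exp(1.0, 0.5)` is about 0.018 while `c_exp(1.0, 5.0)` is about 0.96, which matches the trade-off described above.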

To address these limitations of the AD method, a CONVEF-based P–M anisotropic diffusion process is introduced as the second term in Eq. (8). This term is based on an expanded form of the P–M model [11], defined as:

where the first term in Eq. (12) is an inverse diffusion term that enhances or sharpens boundaries and the second term is a Laplacian term that smooths relatively flat regions; this split follows from the product rule \(\operatorname{div}(c\nabla f) = \nabla c \cdot \nabla f + c\,\Delta f\). Because the GVF field essentially implements a weighted gradient diffusion process, it can replace \(\nabla f\) in the inverse diffusion term to improve the denoising effect. We therefore use a new version of the CONVEF-AD based P–M equation, defined as:

where \(f_{0}\) is the input noisy image, \(f_{t-1}\) is the updated f at iteration t − 1, IN(\(f_{0}\)) is the Poisson or Gaussian estimator defined in [4, 11], med is the median filter, and \(E_{\text{CONVEF}}\) denotes the Convolutional Virtual Electric Field, defined as follows [12]:

where \(r_{h} = \sqrt{a^{2} + b^{2} + h}\) is the modified distance metric used in the kernel, the variable h plays a significant role in multi-scale filtering, (a, b) denotes the pixel position in the virtual electric field, and q denotes the magnitude of the image edge map. The signal-to-noise ratio (SNR), the smoothness, and the quality of the image depend directly on the parameter h. Finally, the proposed model is obtained by incorporating the modified CONVEF-AD based P–M Eq. (13) into the variational framework of sinogram smoothing in Eq. (10). Applying the Euler–Lagrange equation combined with gradient descent minimization, Eq. (10) reads:

The proposed model in Eq. (15) can deal with mixed-noise sinogram data through the CONVEF-AD regularization term. The value of k is set to \(\sigma_{e}\), which represents the median absolute deviation (MAD) of the image gradient. To make k adaptive (automatically selected), it is computed with the following formula:

For digital implementation, Eq. (15) can be discretized using finite-difference schemes [4]. After discretization, the proposed CONVEF-AD model reads:

where the index n denotes the iteration number. Von Neumann analysis [4] shows that the discretized scheme in Eq. (17) is stable if \(\Delta t/(\Delta f)^{2} \le 1/4\). If the grid size is set to \(\Delta f = 1\), then \(\Delta t \le 1/4\).
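One explicit iteration of a discretized scheme of this kind can be sketched as follows. It assumes the gradient-descent form \(\partial f/\partial t = (g - f)/\sigma^{2} + \lambda\,\mathrm{div}(c\nabla f)\), the classical four-neighbor P–M discretization with the rational diffusivity, and unit grid spacing; plain P–M diffusion stands in for the full CONVEF-AD term, and all names are hypothetical. The step-size check \(\Delta t\,(4\lambda + 1/\min_i \sigma_i^{2}) \le 1\) keeps every update a convex combination of the current value, its four neighbors, and the data, because the diffusivity lies in (0, 1]; without the data term and with λ = 1 it reduces to the bound Δt ≤ 1/4 given above.

```python
import numpy as np

def pm_diffusivity(d, k):
    """Rational P-M diffusivity evaluated on a neighbor difference d."""
    return 1.0 / (1.0 + (d / k) ** 2)

def explicit_step(f, g, var, k, dt=0.2, lam=1.0):
    """One explicit step of df/dt = (g - f)/var + lam * div(c grad f).

    Plain P-M diffusion stands in for the full CONVEF-AD term (assumption).
    """
    # Sufficient stability condition: all update weights stay non-negative.
    if dt * (4.0 * lam + 1.0 / np.min(var)) > 1.0:
        raise ValueError("time step too large for a stable explicit update")
    p = np.pad(f, 1, mode="edge")                 # replicate borders (Neumann)
    diffs = (p[:-2, 1:-1] - f, p[2:, 1:-1] - f,   # north, south neighbors
             p[1:-1, :-2] - f, p[1:-1, 2:] - f)   # west, east neighbors
    div_term = sum(pm_diffusivity(d, k) * d for d in diffs)
    return f + dt * ((g - f) / var + lam * div_term)
```

Note that realistic sinogram variances can be small (on the order of \(10^{-3}\) for the variance relation above), so the data term, not the diffusion term, usually limits the admissible time step in such a sketch.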

4 Results and Discussion

In this work, two test cases, shown in Figs. 1a and 2a, are used to validate the performance of the proposed CONVEF-AD based sinogram smoothing method for low-dose CT reconstruction. Both are computer-generated mathematical phantoms: one is the modified Shepp–Logan head phantom and the other is a CT phantom. The simulations were run in MATLAB R2013b on a PC with an Intel(R) Core(TM) i5 CPU 650 @ 3.20 GHz, 4.00 GB RAM, and a 64-bit operating system. The parameters used for generating and reconstructing the two test cases are as follows. Both test cases are of size 128 × 128 pixels, and 120 projection angles were used. The noisy low-dose sinogram data were simulated with Eq. (2), so the noise is mixed, i.e., both signal-dependent and signal-independent Gaussian, and the sinograms were assumed to have 128 radial bins and 128 angular views evenly spaced over 180°. Mixed noise of magnitude 10 % was added to the sinogram data, and a fan-beam imaging geometry was used. The noise-free sinograms, shown in Figs. 1b and 2b, were generated by applying the Radon transform. Isolated noise points were then removed from the noisy sinograms, shown in Figs. 1c and 2c, by applying a \(3 \times 3\) median filter, and the output was chosen as the initial estimate. The proposed model has several advantages over the Gradient Vector Flow (GVF) and Inverse-GVF methods, such as improved numerical stability and efficient estimation of high-order derivatives. Therefore, the proposed model introduced in Eq. (13) can effectively remove the mixed noise, i.e., the signal-dependent and signal-independent components present in low-dose sinogram data, at an acceptable computational cost. The mean and variance are computed using Eq. (3), and the diffusion coefficient is then calculated using Eq. (11). In this experiment, the INGVF field parameters of the proposed model are set to 0.2.
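The initial estimate obtained with the 3 × 3 median filter can be sketched in NumPy as below. Border handling by edge replication is an assumption, `median3x3` is a hypothetical helper name, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median3x3(sino):
    """3 x 3 median filter with replicated borders (initial estimate f_0)."""
    padded = np.pad(sino, 1, mode="edge")
    windows = sliding_window_view(padded, (3, 3))   # shape (H, W, 3, 3)
    return np.median(windows, axis=(-2, -1))
```

An isolated outlier occupies only one of the nine samples in each window, so the median discards it, while a locally linear ramp passes through unchanged.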
In our study, the whole algorithm is run for 100 iterations, because the visual result hardly changes with further iterations, and within each iteration CONVEF-AD is run for three iterations. The CONVEF parameters are n = 5 and h = 15, and the kernel size is set to one fourth of the test image size. In Eq. (14), E is calculated before the evolution of the image, so only \(\nabla f\) has to be computed directly from the estimated image, whereas the original Eq. (12) requires the second-order derivative. The balancing parameter λ was set to 1 for each test case, and the conductance parameter k (kappa) used by the proposed CONVEF-AD based prior was calculated with Eq. (16) within each iteration of sinogram smoothing. The estimated image is updated pixel by pixel using Eq. (17) until relative convergence is reached. The images reconstructed by the different algorithms are shown in Figs. 1d–f and 2d–f, respectively. The figures show that the proposed method performs better in terms of noise removal as well as preservation of weak edges and fine structural details. The proposed method also reduces the streaking artifacts, and its results are close to the original phantoms. Graphs of the quantitative measures SNR, RMSE, correlation parameter (CP), and mean structural similarity index (MSSIM) for the different algorithms are shown in Fig. 3a–d for both test cases. Figure 3a shows that the SNR values of the proposed method are always higher than those of the other algorithms, namely the Total Variation (TV) [5] and Anisotropic Diffusion (AD) [6] priors with traditional filtered backprojection (FBP), which indicates that the CONVEF-AD with FBP framework significantly improves reconstruction quality.
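The CONVEF field with the reported settings (n = 5, h = 15) can be sketched as below. The kernel form \(-(a, b)/r_h^{\,n+1}\), with \(r_h = \sqrt{a^{2} + b^{2} + h}\) as defined for Eq. (14), is an assumption modeled on a virtual electric field whose strength decays with the modified distance; the sign convention (field pointing toward edge pixels), the FFT-based linear convolution, and the function names are also assumptions. A kernel one fourth the width of a 128 × 128 image is about 32 pixels wide, so `radius=16` (a 33 × 33 kernel) is a natural choice in this sketch.

```python
import numpy as np

def convef_kernel(radius, n=5, h=15.0):
    """Assumed kernel components K_x = -a / r_h^(n+1), K_y = -b / r_h^(n+1)."""
    b, a = np.mgrid[-radius:radius + 1, -radius:radius + 1].astype(float)  # b: rows, a: columns
    r_h = np.sqrt(a**2 + b**2 + h)        # modified distance, never zero for h > 0
    denom = r_h ** (n + 1)
    return -a / denom, -b / denom

def conv2_same(img, ker):
    """Linear 2-D convolution via zero-padded FFT, cropped to img.shape (odd kernel)."""
    H, W = img.shape
    kh, kw = ker.shape
    shape = (H + kh - 1, W + kw - 1)
    full = np.fft.irfft2(np.fft.rfft2(img, shape) * np.fft.rfft2(ker, shape), shape)
    r0, c0 = kh // 2, kw // 2
    return full[r0:r0 + H, c0:c0 + W]

def convef_field(q, radius=16, n=5, h=15.0):
    """Convolve the edge map q with both kernel components."""
    kx, ky = convef_kernel(radius, n, h)
    return conv2_same(q, kx), conv2_same(q, ky)
```

Because the field is a convolution of the edge map with a fixed kernel, it can be computed once per outer iteration with two FFT convolutions, which is consistent with the remark above that E is calculated before the evolution of the image.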
Figure 3b shows that the RMSE values of the proposed method are lower than those of the other methods, which indicates that CONVEF-AD with FBP performs better. Figure 3c shows that the CP values of the CONVEF-AD with FBP method are higher and close to unity, which indicates that this framework also preserves fine edges and detailed structures well during reconstruction. Figure 3d shows that the MSSIM values of the proposed method are higher, which indicates better reconstruction that preserves the luminance, contrast, and other details of the image. Table 1 lists the SNR, RMSE, CP, and MSSIM values for both test cases. These values indicate that the proposed method produces images of better quality than the other reconstruction methods considered.
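For reference, the two simplest measures can be computed as follows. These are common textbook definitions (SNR in decibels relative to the reference image), assumed here; the exact definitions behind Fig. 3 and Table 1 may differ, and CP and MSSIM are omitted. Higher SNR and lower RMSE both indicate closer agreement with the reference phantom.

```python
import numpy as np

def rmse(ref, est):
    """Root-mean-square error between a reference and an estimate."""
    ref = np.asarray(ref, dtype=float)
    est = np.asarray(est, dtype=float)
    return float(np.sqrt(np.mean((ref - est) ** 2)))

def snr_db(ref, est):
    """SNR in dB: reference energy over error energy (assumed definition)."""
    ref = np.asarray(ref, dtype=float)
    est = np.asarray(est, dtype=float)
    return float(10.0 * np.log10(np.sum(ref**2) / np.sum((ref - est) ** 2)))
```

For example, an estimate that is off by 0.1 everywhere on a unit-valued image has an RMSE of 0.1 and an SNR of 20 dB.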

Fig. 1 Reconstruction results for the modified Shepp–Logan phantom from noisy sinogram data, obtained with comparable standard methods. a Original Shepp–Logan phantom, b noise-free sinogram, c noisy sinogram, d reconstructed image by TV+FBP, e reconstructed image by AD+FBP, and f reconstructed image by CONVEF_AD+FBP

5 Conclusions

This paper proposes an efficient method for low-dose X-ray CT reconstruction using statistical sinogram restoration. The proposed method is formulated in a variational framework, and its solution, based on the minimization of an energy functional, consists of two terms, viz., a data fidelity term and a regularization function. The data fidelity term was obtained by minimizing the negative log likelihood of the noise distribution, modeled by Gaussian and Poisson distributions, which describes the noise in low-dose X-ray CT. The regularization term is a nonlinear CONVEF-AD based filter, which is a version of the Perona–Malik (P–M) anisotropic diffusion filter. The proposed method is capable of dealing with mixed noise, and the comparative performance evaluation shows that it removes mixed noise in low-dose X-ray CT better than the other methods considered.

References

[1] Lifeng Yu, Xin Liu, Shuai Leng, James M. Kofler, Juan C. Ramirez-Giraldo, Mingliang Qu, Jodie Christner, Joel G. Fletcher, Cynthia H. McCollough: Radiation Dose Reduction in Computed Tomography: Techniques and Future Perspective. Imaging in Medicine, Future Medicine, 1(1), 65–84 (2009).

[2] Hao Zhang, Jianhua Ma, Jing Wang, Yan Liu, Hao Han, Hongbing Lu, William Moore, Zhengrong Liang: Statistical Image Reconstruction for Low-Dose CT Using Nonlocal Means-Based Regularization. Computerized Medical Imaging and Graphics, Computerized Medical Imaging Society, 38(6), 423–435 (2014).

[3] Yang Gao, Zhaoying Bian, Jing Huang, Yunwan Zhang, Shanzhou Niu, Qianjin Feng, Wufan Chen, Zhengrong Liang, Jianhua Ma: Low-Dose X-Ray Computed Tomography Image Reconstruction with a Combined Low-mAs and Sparse-View Protocol. Optics Express, 22(12), 15190–15210 (2014).

[4] Rajeev Srivastava, Subodh Srivastava: Restoration of Poisson noise corrupted digital images with nonlinear PDE based filters along with the choice of regularization parameter estimation. Pattern Recognition Letters, 34(10), 1175–1185 (2013).

[5] Xueying Cui, Zhiguo Gui, Quan Zhang, Yi Liu, Ruifen Ma: The statistical sinogram smoothing via adaptive-weighted total variation regularization for low-dose X-ray CT. Optik - International Journal for Light and Electron Optics, 125(18), 5352–5356 (2014).

[6] A. M. Mendrik, E.-J. Vonken, A. Rutten, M. A. Viergever, B. van Ginneken: Noise Reduction in Computed Tomography Scans Using 3-D Anisotropic Hybrid Diffusion With Continuous Switch. IEEE Transactions on Medical Imaging, 28(10), 1585–1594 (2009).

[7] Jing Wang, Hongbing Lu, Junhai Wen, Zhengrong Liang: Multiscale Penalized Weighted Least-Squares Sinogram Restoration for Low-Dose X-Ray Computed Tomography. IEEE Transactions on Biomedical Engineering, 55(3), 1022–1031 (2008).

[8] P. Perona, J. Malik: Scale-space and edge detection using anisotropic diffusion. IEEE Transactions on Pattern Analysis and Machine Intelligence, 12(7), 629–639 (1990).

[9] Ovidiu Ghita, Paul F. Whelan: A new GVF-based image enhancement formulation for use in the presence of mixed noise. Pattern Recognition, 43(8), 2646–2658 (2010).

[10] Shanzhou Niu et al.: Sparse-View X-Ray CT Reconstruction via Total Generalized Variation Regularization. Physics in Medicine and Biology, 59(12), 2997–3017 (2014).

[11] Hongchuan Yu: Image Anisotropic Diffusion based on Gradient Vector Flow Fields. In: Computer Vision – ECCV 2004, Lecture Notes in Computer Science, vol. 3023, 288–301 (2004).

[12] Y, Zhu C, Zhang J, Jian Y: Convolutional Virtual Electric Field for Image Segmentation Using Active Contours. PLoS ONE, 9(10), 1–10 (2014).


Copyright information

© 2017 Springer Science+Business Media Singapore

About this paper

Cite this paper

Tiwari, S., Srivastava, R., Arya, K.V. (2017). A Nonlinear Modified CONVEF-AD Based Approach for Low-Dose Sinogram Restoration. In: Raman, B., Kumar, S., Roy, P., Sen, D. (eds) Proceedings of International Conference on Computer Vision and Image Processing. Advances in Intelligent Systems and Computing, vol 459. Springer, Singapore. https://doi.org/10.1007/978-981-10-2104-6_6


DOI: https://doi.org/10.1007/978-981-10-2104-6_6


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-2103-9

Online ISBN: 978-981-10-2104-6

eBook Packages: Engineering, Engineering (R0)