Abstract

Support Vector Machine (SVM) classifiers are binary classifiers in nature, which have to be coupled/assembled to solve multi-class problems. One-Versus-Rest (1-v-r) is a fast and accurate method for SVM multiclass classification. This paper investigates the effect of output grouping on multiclass classification with SVM and offers an even faster version of 1-v-r based on our output grouping algorithm.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Support Vector Machine (SVM) is a popular supervised machine learning algorithm for solving problems in classification and regression. An SVM takes a set of training samples (known observations belonging to a certain class/category) and yields a set of decision functions to determine which class a test sample (unknown observation) belongs to [1].

However a novel SVM classifier only solves two-class problems. To solve a multi-class problem, multiple binary SVM classifiers are coupled or assembled as a multi-class SVM. Such a decomposition method plays an important role on the multi-class SVM’s generalization performance and time consumption. One-Versus-One (1-v-1), One-Versus-Rest (1-v-r) and ECOC are three of the most commonly used ensemble methods [2, 3]. Empirical result shows 1-v-r is the fastest method in predicting unknown samples [4–6] with good performance.

This paper and investigates the effect of output (class) grouping, which were previously researched in neural networks [7–9], in multiclass SVM classifiers. Finally we offer an even faster version of 1-v-r with the help of the output-grouping algorithm.

2 Background

Output grouping is a learning strategy being applied in neural networks to improve the accuracy and reduce training cost. The strategy is based on observation of class correlation. In previous study in neural networks, strong correlation between two classes \( i,j \) implies high similarity. Then \( i,j \) can be trained together for better precision [8].

2.1 One-Versus-Rest (1-v-R)

The idea of 1-v-R classification is to build N classifiers for each of the classes. In the training process all the training data is used for every one of the N classifiers. If a classifier is built for class i then the classifier is trained with the samples that belong to class i against the rest of the samples from the training data set.

Once the model is built, an unknown sample goes through all the N classifiers. Each classifier decides whether or not this sample belongs to the corresponding class. Ideally one and only one classifier should respond ‘yes’ to the unknown sample and the corresponding class will be the class that the sample belongs to, otherwise there is either a clash or a total miss. Under such a circumstance the unknown sample goes to the class with the most training samples.

2.2 Output Grouping

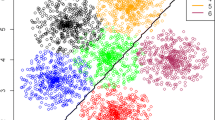

For SVM classifiers, if samples from two classes are ‘mixed’ together then it is difficult for SVM to draw a line or plane to distinguish the two classes. In the case of 1-v-r, suppose a class \( i \) needs to be distinguished from the remaining classes (the ‘rest’). If another class \( j \) among the rest is highly similar to \( i \) then it will also be difficult to tell \( i \) and the rest apart, even if other classes among the rest (excluding class \( j \)) are easy to be separated from \( i \). As a result, such interaction, correlation or similarity between classes \( i \) and \( j \) would contribute to a complex but less accurate model.

We tested this hypothesis using the UCI Iris dataset. We duplicated all samples from class 3 and labelled them as class 4, train a linear SVM model using 1-v-r method, ran a prediction on training set and ran a 10-fold cross prediction with classifier and the data set. We then merged samples from classes 3 and 4 and labelled them as a new class (grouping), trained and validated an additional 3-versus-4 classifier and merged the results from both classifiers (Table 1).

If the classifier cannot tell 3 and 4 apart, then the best chance of classification error between 3 and 4 should be 50 % (random guessing). Then the cross validated accuracy should be around 71 % instead of 65.86 %. In other words, the strong similarity between class 3 and 4 should not but has affected the performance of other classification tasks. While with class 3 and 4 grouped, we seem to eliminate such undesirable interference.

2.3 Output-Grouping-One-Versus-Rest (OG) Algorithm

We propose an algorithm, OG, which aggregates outputs (classes) by their ‘similarity’ in groups, then treats the groups as new classes and performs 1-v-r learning.

The algorithm has six components: (1) similarity scorer, (2) class grouper, (3) 1-v-r trainer, (4) intra-group classifier trainer, (5) 1-v-r predictor and (6) intra-group predictor (Figs. 1 and 2; Table 2).

OG attempts to group “hard-to-tell-apart” classes together so that it should be able to reduce the model complexity, improved the accuracy and further increase the prediction speed. However the speed-up is at the cost of an additional training/prediction, grouping and result merging.

3 Experiments

The OG was evaluated on seven different data sets: UCI Iris Plant, UCI Glass Identification, UCI Vowel, Statlog Handwritten Letters, Statlog Satimage, USPS and MNIST [11]. The values in each data set have been scaled to [0, 1].

Each data set was trained and tested with five multiclass SVM algorithms: OG, OGPG, 1-v-r, 1-v-1 and ECOC [11]. OG is the implementation of our proposed algorithm under the scikit-learn framework, while 1-v-r, 1-v-1 and ECOC are the existing implementations in scikit-learn [12]. All the algorithms use SVC [2] to train and test the SVM models.

To further address the effectiveness of grouping, we introduce a variant of OG, OG with pre-computed group (OGPG), by supplying the algorithm pre-computed groups.

The experiment for each method ran 50 times for the averaged measurements. Each time the model was trained by randomly-spit 2/3 of the data set and validated on the remaining 1/3 (Table 3).

4 Results

The accuracy is close for all algorithms except for ECOC in some occasions across all the datasets. OG and OGPG perform slightly better than 1-v-r algorithm on smaller datasets (iris, glass and vowel). However we cannot conclude which algorithm is the best in terms of accuracy (Table 4).

On large data sets (satimage, usps and mnist) OG and OGPG slightly reduced the training time and significantly reduced the prediction time by 0.8–29.2 % and 5.4–30.6 % respectively, compared with 1-v-r method. OGPG is the fastest in terms of prediction, seconded by OG.

On smaller data sets, OG and OGPG’s worse speed performance were caused by the fact that the overhead of additional grouping and merging dominates the time consumption of SVM training and prediction.

Even though OG performs an additional training/prediction to determine the grouping on another 33–50 % of the training set, its training time is still less than 1-v-r. The model size is also slightly decreased for all data sets except for usps and mnist.

5 Discussions and Conclusions

To our disappointment neither OG nor OGPG did improve the accuracy. Our speculation during the experiment shows that the accuracy tends to be saturated at a certain point when the number of training samples is large enough or the parameters of SVM are fairly well optimised.

We may conclude that OG and OGPG improve the speed performance of 1-v-r method even at the cost of performing additional classification tasks.

Our findings show that output grouping is effective at reducing the training complexity of 1-v-r multi-class classification tasks using support vector machines. Nevertheless output grouping can be used to boost the prediction speed of multiclass SVMs with no extra cost. We are now investigating the cause of the saturated accuracy and working on combining input and output grouping methods [8, 9, 13–16] for better classification accuracy.

References

Chang C-C, Lin C-J (2011) Libsvm. ACM Trans Intell Syst Technol 2(3):1–27

svm@scikit-learn.org (2016). Available: http://scikit-learn.org/stable/modules/svm.html

Hsu C-W, Lin C-J (2002) A comparison of methods for multi-class support vector machines. IEEE Trans Neural Networks 13(2):415–425

Mehra N, Gupta S (2013) Survey on multiclass classification methods. Int J Comput Sci Inf Technol 4(4):572–576

Rifkin R (2008) Multiclass classification. Lect Slides. February

Tewari A, Bartlett PL (2007) On the Consistency of multiclass classification methods. J Mach Learn Res 8:1007–1025

Guan S-U, Li S (2002) Parallel growing and training of neural networks using output parallelism. IEEE Trans Neural Netw 13(3):542–550

Yang S, Guan S-U, Guo SJ, Zhao LF, Li WF, Xue HX (2013) Neural Network output partitioning based on correlation. J Clean Energy Technol 1(4):342–345

Yang S, Guan SU, Li WF, Zhao LF (2013) Low-interference output partitioning for neural network training. J Clean Energy Technol 1(4)

LIBSVM data: classification, regression, and multi-label. Available https://www.csie.ntu.edu.tw/~cjlin/libsvmtools/datasets/. Accessed on 03 Feb 2016

Dietterich TG, Bakiri G (1994) Solving multiclass learning problems via error-correcting output codes. J Artif Intell Res 2:263–286

multiclass@scikit-learn.org (2016). Available: http://scikit-learn.org/stable/modules/multiclass.html

Guo SJ, Guan S-U, Yang S, Li WF, Zhao LF, Song JH (2013) Input partitioning based on correlation for neural network learning. J Clean Energy Technol 1(4):335–338

Guo S, Guan S-U, Li W, Man KL, Liu F, Qin AK (2013) Input space partitioning for neural network learning. Int J Appl Evol Comput 4(2):56–66

Guo S, Guan SU, Li W, Zhao L, Song J, Cao M (2012) Promotion-based input partitioning of neural network. In: International conference on computer, communication, automation and control 2012 (CCAC 2012)

Guo S, Guan SU, Li W, Zhao L, Song J, Cao M (2014) Promotion-based input partitioning of neural network. In: Proceedings of the 9th international symposium on linear drives for industry applications, vol 3, pp 179–186

Bordes A, Ertekin S, Weston J, Bottou L (2005) Fast kernel classifiers with online and active learning. J Mach Learn Res 6:1579–1619

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer Science+Business Media Singapore

About this paper

Cite this paper

Zhao, X., Guan, S., Man, K.L. (2016). An Output Grouping Based Approach to Multiclass Classification Using Support Vector Machines. In: Park, J., Jin, H., Jeong, YS., Khan, M. (eds) Advanced Multimedia and Ubiquitous Engineering. Lecture Notes in Electrical Engineering, vol 393. Springer, Singapore. https://doi.org/10.1007/978-981-10-1536-6_51

Download citation

DOI: https://doi.org/10.1007/978-981-10-1536-6_51

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-1535-9

Online ISBN: 978-981-10-1536-6

eBook Packages: Computer ScienceComputer Science (R0)