Abstract

In this paper, a hybrid technique is presented to derive Pareto optimal solutions of a multi-objective linear fractional programming problem (MOLFPP). Taylor series approximation, together with a hybrid technique combining the weighting and \( \epsilon \)-constraint methods, is applied to solve the MOLFPP. The approach maintains both the priority of the objectives and the achievement of the values aspired to by the decision maker (DM) while producing Pareto optimal solutions. An illustrative numerical example demonstrates the proposed method and, to justify its effectiveness, the results are compared with those of the existing fuzzy max–min operator method.


Keywords

- Multi-objective linear fractional programming

- Taylor series approximation

- Hybrid method

- Fuzzy programming

- Pareto optimal solution

1 Introduction

Fractional programming belongs to the class of mathematical programming and deals with the optimization of a function given as the ratio of two linear or nonlinear functions. Common practical instances of such objectives are profit/cost, cost/time, output/employee, inventory/sale, risk assets/capital, debt/equity, and so forth. A linear fractional programming problem (LFPP), introduced by Martos [1], optimizes an objective function that is the ratio of two affine functions (i.e., linear plus constant) subject to \( x \in \Omega \), where \( \Omega \) is defined by a set of linear constraints.
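In a commonly used notation, such an LFPP can be sketched as below for the maximization case. The symbols \( c, d, \alpha, \beta, A \) and \( b \) are assumptions made for illustration, and the denominator is assumed to be positive on \( \Omega \).

$$ \begin{aligned} & {\text{Max}}\; f(x) = \frac{c^{T} x + \alpha }{d^{T} x + \beta } \\ & {\text{subject to}} \\ & x \in \Omega = \left\{ x \in {\mathbb{R}}^{n} : Ax \le b,\; x \ge 0 \right\} \\ \end{aligned} $$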

In numerous real-world decision-making situations, several fractional objectives must be optimized simultaneously subject to a common set of constraints. Such optimization problems are known as multi-objective fractional programming or ratio optimization problems. Research activity in this field has accelerated considerably in recent years because of its wide range of applications in important fields such as engineering, economics, information theory, finance, management science, marine transportation, water resources, corporate planning, and so forth.

Charnes and Cooper [2] proposed a variable transformation technique that solves an LFPP with one additional constraint and one additional variable. Stancu-Minasian [3] discussed various methods and theoretical concepts of fractional programming. Costa [4] proposed an algorithm to solve a MOLFPP that successively divides the non-dominated region to find the maximum value of the weighted sum of the objectives. Mishra [5] used the weighting method to solve a bi-level LFPP. Toksar [6] proposed an approach to solve a fuzzy MOLFPP in which the membership functions are linearized using Taylor series expansion. Ojha and Ota [7] used a hybrid method to solve a multi-objective geometric programming problem. Valipour et al. [8] proposed a parametric approach with the weighting sum method to solve a MOLFPP. Ojha and Biswal [9] used the \( \epsilon \)-constraint method to produce a set of Pareto optimal solutions of a multi-objective geometric programming problem. References [10–12] discuss basic concepts and many solution methods for multi-objective optimization problems.

This paper is organized as follows: Sect. 2 reviews the basics of multi-objective optimization and some techniques for its solution. The concept of fuzzy programming, with details of the fuzzy max–min operator method, is discussed in Sect. 3. Section 4 describes the proposed method to solve a MOLFPP. A numerical example with its solution and some remarks is presented in Sect. 5, and Sect. 6 contains the conclusions.

2 Multi-objective Optimization Problem

A multi-objective optimization problem (MOOP) can be mathematically stated as follows:

$$ \begin{aligned} & {\text{Max}}\; \left\{ f_{1} (x), f_{2} (x), \ldots ,f_{k} (x) \right\} \\ & {\text{subject to}} \\ & x \in \Omega \\ \end{aligned} $$(1)

where \( \Omega \) is the nonempty compact feasible region. Usually, no single solution attains the individual optimum of every objective simultaneously, which gives rise to the concept of Pareto optimal solutions.

Definition

(See [11]) \( x^{*} \in \Omega \) is a Pareto optimal solution of the maximization MOOP (1) if there does not exist another feasible solution \( \bar{x} \in \Omega \) such that \( f_{i} (\bar{x}) \ge f_{i} \left( {x^{*} } \right) \forall i \) and \( f_{j} (\bar{x}) > f_{j} \left( {x^{*} } \right) \) for at least one \( j \).
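The dominance test behind this definition can be illustrated on a finite list of objective vectors. The following is a minimal sketch, assuming every objective is maximized as in the methods of this paper; the function names and the sample vectors are hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if vector a dominates b when every objective is maximized."""
    no_worse = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the vectors that no other vector in the list dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective vectors (f1, f2), used only for illustration.
vectors = [(3.0, 1.0), (2.0, 2.0), (1.0, 3.0), (1.5, 1.5), (2.0, 1.0)]
front = pareto_front(vectors)
print(front)
```

Here (1.5, 1.5) is dominated by (2.0, 2.0) and (2.0, 1.0) by (3.0, 1.0), so only three vectors remain in the front.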

A set of Pareto optimal solutions, which includes the most preferred (best compromise) solution, can be generated using an appropriate method.

2.1 Weighting Sum Method

In this method [10, 11], nonnegative, normalized weights are assigned to the objectives according to their importance to the DM: a larger weight represents greater importance and a smaller weight lesser importance. The weighted sum is then optimized over the feasible region, and a set of Pareto optimal solutions is generated by varying the weights. In other words, the method scalarizes the vector optimization problem, converting a multi-objective problem into a single-objective one. Mathematically, it can be stated for the MOOP (1) as

$$ \begin{aligned} & {\text{Max}}\; \sum\limits_{i = 1}^{k} w_{i} f_{i} (x) \\ & {\text{subject to}} \\ & x \in \Omega , \quad w_{i} \ge 0, \quad \sum\limits_{i = 1}^{k} w_{i} = 1 \\ \end{aligned} $$(2)

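Every solution obtained with strictly positive weights is Pareto optimal. When the problem is convex, every Pareto optimal solution can be obtained for some choice of weights, whereas for a nonconvex problem some Pareto optimal solutions cannot be reached by this method.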
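The effect of varying the weights can be sketched on a finite list of candidate objective vectors. This is only an illustration under that simplifying assumption: the method itself optimizes over the whole feasible region, and the function name and data below are hypothetical.

```python
def weighted_sum_choice(vectors, weights):
    """Return the vector maximizing sum_i w_i * f_i over a finite candidate list."""
    if any(w < 0 for w in weights) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be nonnegative and sum to 1")
    return max(vectors, key=lambda f: sum(w * fi for w, fi in zip(weights, f)))


# Hypothetical objective vectors (f1, f2) of three candidate solutions.
candidates = [(3.0, 1.0), (2.2, 2.2), (1.0, 3.0)]

# Shifting weight from f1 to f2 moves the selected solution along the front.
for w in [(0.8, 0.2), (0.5, 0.5), (0.2, 0.8)]:
    print(w, weighted_sum_choice(candidates, w))
```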

2.2 ϵ-Constraint Method

This preference-based method [10, 11, 13] treats one objective as the highest-priority objective and converts the remaining objectives, together with their goals, into constraints; i.e., it maximizes one objective while maintaining a minimum acceptable level for each of the other objective functions. Mathematically, it can be stated as

$$ \begin{aligned} & {\text{Max}}\; f_{s} (x) \\ & {\text{subject to}} \\ & f_{i} (x) \ge \epsilon_{i} , \quad i = 1,2, \ldots ,k, \; i \ne s \\ & \epsilon_{i}^{L} \le \epsilon_{i} \le \epsilon_{i}^{U} \\ & x \in \Omega \\ \end{aligned} $$(3)

where \( f_{s} (x) \) is the highest-priority function and \( \epsilon_{i}^{L} \), \( \epsilon_{i}^{U} \) are, respectively, the relative minimum and maximum values of the objective \( f_{i} (x) \) with respect to the other objectives. Substituting different values of \( \epsilon_{i} \in \left[\epsilon_{i}^{L} , \epsilon_{i}^{U} \right] \), a set of Pareto optimal solutions of (1) can be generated by solving (3).
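A small sketch of this idea on a finite candidate list is given below: the prioritized objective is maximized among the candidates that meet the lower bounds on the other objectives. Restricting the search to a finite list, as well as the function name and data, are assumptions made for illustration only.

```python
def epsilon_constraint_choice(vectors, s, eps):
    """Maximize objective s among vectors with f_i >= eps[i] for each bounded i.

    eps maps the index of every non-prioritized objective to its lower bound.
    Returns None when no candidate satisfies the bounds.
    """
    feasible = [f for f in vectors
                if all(f[i] >= bound for i, bound in eps.items())]
    if not feasible:
        return None
    return max(feasible, key=lambda f: f[s])


# Hypothetical objective vectors (f1, f2); f1 (index 0) is prioritized.
candidates = [(3.0, 1.0), (2.2, 2.2), (1.0, 3.0)]

# Raising the bound on f2 trades away some of f1.
for bound in (0.5, 2.0, 2.5):
    print(bound, epsilon_constraint_choice(candidates, s=0, eps={1: bound}))
```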

2.3 Hybrid Method

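This method [10] accounts simultaneously for the priority of the objective functions, by assigning weights ‘\( w_{i} \)’, and for the achievement of the minimum objective values aspired to by the DM. Mathematically, it can be stated as

$$ \begin{aligned} & {\text{Max}}\; \sum\limits_{i = 1}^{k} w_{i} f_{i} (x) \\ & {\text{subject to}} \\ & f_{i} (x) \ge \bar{f}_{i} , \quad i = 1,2, \ldots ,k \\ & x \in \Omega , \quad w_{i} \ge 0, \quad \sum\limits_{i = 1}^{k} w_{i} = 1 \\ \end{aligned} $$(4)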

where \( \bar{f}_{i} \) is the aspired value for the objective \( f_{i} (x) \).
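The combination of weights and aspired values can be sketched on a finite candidate list as follows. The function name and the data are hypothetical, and the finite list stands in for the feasible region purely for illustration.

```python
def hybrid_choice(vectors, weights, aspired):
    """Maximize the weighted sum among vectors meeting every aspired value."""
    feasible = [f for f in vectors
                if all(fi >= a for fi, a in zip(f, aspired))]
    if not feasible:
        return None
    return max(feasible, key=lambda f: sum(w * fi for w, fi in zip(weights, f)))


# Hypothetical objective vectors (f1, f2) of three candidate solutions.
candidates = [(3.0, 1.0), (2.2, 2.2), (1.0, 3.0)]
weights = (0.8, 0.2)

# With no real aspiration the weights alone decide; with f2 >= 2.0 required,
# the first candidate is excluded even though the weights favor f1.
unconstrained = hybrid_choice(candidates, weights, aspired=(0.0, 0.0))
constrained = hybrid_choice(candidates, weights, aspired=(1.0, 2.0))
print(unconstrained, constrained)
```

The same weights lead to different solutions once an aspired value is imposed, which is the behavior the hybrid method relies on.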

3 Fuzzy Programming

Zadeh developed fuzzy set theory in 1965, which expresses imprecise information in a precise mathematical form. Zimmermann [14] proposed the fuzzy max–min operator method, based on the concept of Bellman and Zadeh [15], to solve various multi-objective optimization problems. Each fuzzy set is associated with a membership function whose domain and range are the set of decision variables and [0, 1], respectively. An appropriate membership function is selected to determine the optimal solution. According to Zimmermann’s fuzzy technique, the best preferred optimal solution of a MOOP can be obtained using the following steps:

-

Step-1:

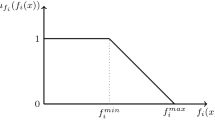

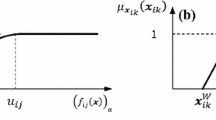

Determine the best and worst values, i.e., the aspired \( \left( {f_{i}^{\hbox{max} } } \right) \) and acceptable \( \left( {f_{i}^{\hbox{min} } } \right) \) values, respectively, for each objective function \( f_{i} (x), i = 1, \ldots ,k \) satisfying \( f_{i}^{\hbox{min} } \le f_{i} (x) \le f_{i}^{\hbox{max} } \), for \( x \in \Omega \).

-

Step-2:

Define following linear fuzzy membership function \( \mu_{i} (x) \) for each objective function \( f_{i} (x) \) to derive the best preferred optimal solution of the MOOP as

$$ \mu_{i} (x) = \left\{ {\begin{array}{*{20}l} {0,} \hfill & {f_{i} (x) \le f_{i}^{\hbox{min} } } \hfill \\ {\frac{{f_{i} (x) - f_{i}^{\hbox{min} } }}{{f_{i}^{\hbox{max} } - f_{i}^{\hbox{min} } }},} \hfill & {f_{i}^{\hbox{min} } \le f_{i} (x) \le f_{i}^{\hbox{max} } } \hfill \\ {1,} \hfill & {f_{i} (x) \ge f_{i}^{\hbox{max} } } \hfill \\ \end{array} } \right. $$(5) -

Step-3:

Construct the following crisp model to obtain the optimal solution as follows:

$$ \begin{aligned} {\text{Max}} & \left\{ {\mathop {\hbox{min} }\limits_{1 \le i \le k} \mu_{i} (x)} \right\} \\ & {\text{subject to}} \\ & x \in \Omega \\ \end{aligned} $$(6) -

Step-4:

The above crisp model can be transformed into an equivalent mathematical programming problem as follows:

$$ \begin{aligned} & {\text{Max}}\,\beta \\ & {\text{subjectto}} \\ \mu_{i} (x) = & \frac{{f_{i} (x) - f_{i}^{\hbox{min} } }}{{f_{i}^{\hbox{max} } - f_{i}^{\hbox{min} } }} \ge \beta , \quad i = 1,2, \ldots ,k \\ & x \in \Omega \\ \end{aligned} $$(7)where ‘\( \beta \)’ is an auxiliary variable assumed as the value of \( \mathop {\hbox{min} }\limits_{1 \le i \le k} \,\mu_{i} (x) \). The constraints \( \mu_{i} (x) \ge \beta \) can be replaced by \( f_{i} (x) - \beta \left( {f_{i}^{\hbox{max} } - f_{i}^{\hbox{min} } } \right) \ge f_{i}^{\hbox{min} } , i = 1,2, \ldots ,k \) for simplicity.

-

Step-5:

Solve the above maximization problem to obtain the best preferred optimal solution of the MOOP and evaluate the optimal objective values at this solution.
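The steps above can be sketched on a finite list of candidate objective vectors: each objective is mapped to its linear membership value and the candidate with the largest minimum membership is selected. Searching a finite list instead of solving the mathematical program, as well as the names and data, are assumptions made for illustration.

```python
def membership(value, f_min, f_max):
    """Linear membership: 0 at or below f_min, 1 at or above f_max."""
    if value <= f_min:
        return 0.0
    if value >= f_max:
        return 1.0
    return (value - f_min) / (f_max - f_min)


def max_min_choice(vectors, f_mins, f_maxs):
    """Return the vector with the largest minimum membership, and that value."""
    def beta(f):
        return min(membership(v, lo, hi)
                   for v, lo, hi in zip(f, f_mins, f_maxs))
    best = max(vectors, key=beta)
    return best, beta(best)


# Hypothetical objective vectors and acceptable/aspired levels.
candidates = [(3.0, 1.0), (2.0, 2.0), (1.0, 3.0), (2.5, 1.5)]
best, beta_value = max_min_choice(candidates, f_mins=(1.0, 1.0), f_maxs=(3.0, 3.0))
print(best, beta_value)
```

The extreme candidates score zero on one objective, so the balanced candidate is returned; this matches the single compromise solution the max–min operator produces.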

4 Proposed Method to Solve MOLFPP

A MOLFPP can be mathematically formulated as
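In a commonly used notation, a MOLFPP with \( k \) fractional objectives to be maximized can be sketched as below. The coefficient symbols \( c_{i}, d_{i}, \alpha_{i}, \beta_{i}, A \) and \( b \) are assumptions made for illustration, and each denominator is assumed to be positive on \( \Omega \).

$$ \begin{aligned} & {\text{Max}}\; \left\{ f_{1} (x), f_{2} (x), \ldots ,f_{k} (x) \right\}, \quad f_{i} (x) = \frac{c_{i}^{T} x + \alpha_{i} }{d_{i}^{T} x + \beta_{i} }, \quad i = 1,2, \ldots ,k \\ & {\text{subject to}} \\ & x \in \Omega = \left\{ x \in {\mathbb{R}}^{n} : Ax \le b,\; x \ge 0 \right\} \\ \end{aligned} $$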

Solution approach

Maximize the fractional objective function \( f_{i} (x) \) for each ‘\( i \)’ separately on the feasible region of constraints \( \Omega \) to obtain their individual optimal solutions.

The variable transformation technique [2] can be applied to obtain the individual optimal solution of each fractional objective. For the \( k \)th objective function \( f_{k} (x) \), this technique introduces new variables \( y_{j} \) and \( z \) and converts the fractional problem into a linear program.
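For reference, the transformation of [2] is usually written as follows. The notation is assumed here for illustration: \( c_{k}, d_{k}, \alpha_{k}, \beta_{k} \) are the coefficients of \( f_{k} (x) = (c_{k}^{T} x + \alpha_{k})/(d_{k}^{T} x + \beta_{k}) \), the feasible region is \( \Omega = \{ x : Ax \le b, x \ge 0 \} \) with a positive denominator on \( \Omega \), and the substitution is \( z = 1/(d_{k}^{T} x + \beta_{k}) \), \( y = zx \).

$$ \begin{aligned} & {\text{Max}}\; c_{k}^{T} y + \alpha_{k} z \\ & {\text{subject to}} \\ & Ay - bz \le 0 \\ & d_{k}^{T} y + \beta_{k} z = 1 \\ & y \ge 0, \quad z \ge 0 \\ \end{aligned} $$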

If \( \left( {y_{j} ,z} \right) \) is the optimal solution of the transformed problem, then \( \left( {x_{j} } \right) = \left( {\frac{{y_{j} }}{z}} \right) \) is taken as the individual optimal solution of the \( k \)th objective function \( f_{k} (x) \).
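The relation between \( x \) and \( (y, z) \) can be checked numerically. The sketch below assumes an objective of the form \( (c \cdot x + \alpha)/(d \cdot x + \beta) \) with hypothetical coefficients; it maps a point to the transformed variables, recovers it by \( x_{j} = y_{j}/z \), and confirms that the ratio equals the linear expression \( c \cdot y + \alpha z \). It does not solve the linear program itself.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def to_yz(x, d, beta):
    """Map x to (y, z) with z = 1 / (d.x + beta) and y = z * x."""
    denom = dot(d, x) + beta
    if denom <= 0:
        raise ValueError("denominator must be positive")
    z = 1.0 / denom
    return [z * xj for xj in x], z


def to_x(y, z):
    """Recover x from the transformed variables: x_j = y_j / z."""
    return [yj / z for yj in y]


# Hypothetical coefficients of one fractional objective and a test point.
c, alpha = [1.0, 2.0], 1.0
d, beta = [1.0, 1.0], 2.0
x = [1.0, 1.0]

y, z = to_yz(x, d, beta)
ratio_value = (dot(c, x) + alpha) / (dot(d, x) + beta)   # original objective
linear_value = dot(c, y) + alpha * z                      # transformed objective
normalization = dot(d, y) + beta * z                      # equals 1 by construction
print(y, z, ratio_value, linear_value, normalization)
```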

Let \( X_{1}^{*} ,X_{2}^{*} , \ldots ,X_{k}^{*} \) be the individual optimal solutions of the objective functions \( f_{1} (x),f_{2} (x), \ldots ,f_{k} (x) \), respectively, where \( X_{i}^{*} = \left( {x_{i}^{(j)*}, j = 1,2, \ldots ,n} \right), i = 1,2, \ldots ,k. \)

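Evaluate the relative minimum and maximum objective values, i.e., \( \epsilon_{i}^{L} \) and \( \epsilon_{i}^{U} \), respectively, for the \( i \)th objective function as

$$ \epsilon_{i}^{L} = \mathop {\min }\limits_{1 \le j \le k} f_{i} \left( {X_{j}^{*} } \right), \quad \epsilon_{i}^{U} = \mathop {\max }\limits_{1 \le j \le k} f_{i} \left( {X_{j}^{*} } \right) = f_{i} \left( {X_{i}^{*} } \right), \quad i = 1,2, \ldots ,k $$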
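This evaluation amounts to building a payoff table: every objective is evaluated at every individual optimal solution, and the row minimum and maximum give the bounds. A minimal sketch follows; the two fractional objectives and the points are hypothetical (the points maximize the respective objectives over the illustrative region \( x_{1} + x_{2} \le 2, x \ge 0 \)) and are not the paper's example.

```python
def payoff_bounds(objectives, optima):
    """Return (eps_L, eps_U) for each objective over the individual optima."""
    table = [[f(x) for x in optima] for f in objectives]
    return [(min(row), max(row)) for row in table]


# Hypothetical linear fractional objectives.
def f1(x):
    return (x[0] + 2 * x[1] + 1) / (x[0] + x[1] + 2)


def f2(x):
    return (2 * x[0] + x[1] + 1) / (x[0] + x[1] + 2)


# Hypothetical individual optimal solutions X1* (for f1) and X2* (for f2).
optima = [(0.0, 2.0), (2.0, 0.0)]

bounds = payoff_bounds([f1, f2], optima)
print(bounds)
```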

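Approximate each fractional objective function \( f_{i} (x) \) by its first-order Taylor series expansion about its individual optimal solution \( X_{i}^{*} \), denoted \( \hat{f}_{i} (x) \), as

$$ f_{i} (x) \cong \hat{f}_{i} (x) = f_{i} \left( {X_{i}^{*} } \right) + \sum\limits_{j = 1}^{n} \left( {x_{j} - x_{i}^{(j)*} } \right)\left. {\frac{{\partial f_{i} (x)}}{{\partial x_{j} }}} \right|_{{x = X_{i}^{*} }} $$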
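For a linear fractional function the gradient has a closed form, so the linearization can be computed directly. The sketch below assumes an objective \( (c \cdot x + \alpha)/(d \cdot x + \beta) \) with hypothetical coefficients and returns the constant term and coefficients of the resulting linear function.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def linearize(c, alpha, d, beta, x0):
    """First-order Taylor expansion of (c.x + alpha) / (d.x + beta) about x0.

    Returns (const, grad) such that the approximation is const + grad.x.
    """
    num = dot(c, x0) + alpha
    den = dot(d, x0) + beta
    # Quotient rule: d/dx_j (N/D) = (c_j * D - d_j * N) / D**2
    grad = [(cj * den - dj * num) / den ** 2 for cj, dj in zip(c, d)]
    const = num / den - dot(grad, x0)
    return const, grad


# Hypothetical coefficients and expansion point (an assumed individual optimum).
c, alpha = [1.0, 2.0], 1.0
d, beta = [1.0, 1.0], 2.0
x0 = [0.0, 2.0]

const, grad = linearize(c, alpha, d, beta, x0)
value_at_x0 = const + dot(grad, x0)   # matches the fractional objective at x0
print(const, grad, value_at_x0)
```

The approximation is exact at the expansion point and degrades away from it, which is why the objective values reported for the approximated problem only approximately satisfy the aspired levels.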

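As each fractional objective function is transformed into a nonfractional linear function, the MOLFPP is converted into a single-objective linear programming problem (SOLPP) using the hybrid method, i.e., the combination of the weighting sum and \( \epsilon \)-constraint methods, which can be mathematically formulated as

$$ \begin{aligned} & {\text{Max}}\; \sum\limits_{i = 1}^{k} w_{i} \hat{f}_{i} (x) \\ & {\text{subject to}} \\ & \hat{f}_{i} (x) \ge \epsilon_{i} , \quad \epsilon_{i}^{L} \le \epsilon_{i} \le \epsilon_{i}^{U} , \quad i = 1,2, \ldots ,k \\ & x \in \Omega , \quad w_{i} \ge 0, \quad \sum\limits_{i = 1}^{k} w_{i} = 1 \\ \end{aligned} $$

where \( \hat{f}_{i} (x) \) denotes the first-order Taylor approximation of \( f_{i} (x) \) about \( X_{i}^{*} \).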

Substituting different values of \( \epsilon_{i} \), i.e., the minimum aspired values to be achieved by the objectives \( f_{i} (x) \), for different weights \( w_{i} \), a set of Pareto optimal solutions can be generated by solving the above problem, from which the DM can choose the most preferred optimal solution by comparing the objective values.

5 Numerical Example

Consider the following multi-objective linear fractional programming problem:

Individual optimal solutions of the objectives are obtained as

Using the criteria of the proposed method, relative minimum and maximum values of the objectives are obtained as

Fractional objectives are approximated by linear functions as

The multi-objective LFPP is transformed into the following single-objective LPP using the proposed method as

subject to

Substituting different weights ‘\( w_{i} \)’ according to the priority of the objectives and different aspired objective values \( \epsilon_{i} \), a set of Pareto optimal solutions \( \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) \) is generated by solving the above problem and is listed in Table 1.

5.1 Result Due to Fuzzy Method

The relative minimum and maximum values of the objectives are the worst (acceptable) and best (aspired) values, respectively, i.e.,

For approximated linear objectives: Since the fractional objectives are approximated by linear objectives, the linear membership functions are constructed as defined in Sect. 3 (Step-2) and the corresponding optimization problem of the fuzzy max–min operator method is solved as defined in Sect. 3 (Step-4). The best preferred optimal solution is obtained as \( x^{*} = \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) = (0.7718,0.1411,0.0871) \). The values of the given fractional objective functions at this solution are \( f_{1} \left( {x^{*} } \right) = 2.2130 \) and \( f_{2} \left( {x^{*} } \right) = 1.1504 \).

For given fractional objectives: Constructing the membership functions with the given fractional objectives and solving the corresponding crisp model as defined in Sect. 3, the best preferred optimal solution is obtained as \( x^{*} = \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) = (0.7790,0.1052,0.1159) \). The values of the given fractional objective functions at this solution are \( f_{1} \left( {x^{*} } \right) = 2.0842 \) and \( f_{2} \left( {x^{*} } \right) = 1.2033 \).

Remark 1

Fixing the weights ‘\( w_{i} \)’ and varying the aspired objective values ‘\( \epsilon_{i} \)’ in the range \( \left[\epsilon_{i}^{L} ,\epsilon_{i}^{U} \right] \), a set of Pareto optimal solutions \( (x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} ) \) is generated; the solutions coincide for the weights \( (1,0) \) and \( (0.8,0.2) \), and likewise for the weights \( (0.2,0.8) \) and \( (0,1) \). \( f_{1}^{*} \) and \( f_{2}^{*} \) are the values of the fractional objectives evaluated at \( \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) \). Since the fractional objectives are approximated by linear functions, \( f_{i}^{*} \,{\succcurlyeq}\,\epsilon_{i} (i = 1,2) \) is satisfied, where ‘\( { \succcurlyeq } \)’ represents “greater than or approximately equal to.” As \( f_{i}^{*} \in \left[\epsilon_{i}^{L},\epsilon_{i}^{U}\right] \) for each \( \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) \), the results support the correctness of the proposed method. The DM can choose one Pareto optimal solution from Table 1 as the most preferred optimal solution, according to the priorities of the practical decision-making situation. If the DM is still unsatisfied, more Pareto optimal solutions \( \left( {x_{1}^{*} ,x_{2}^{*} ,x_{3}^{*} } \right) \) can be generated by substituting further aspired objective values ‘\( \epsilon_{i} \)’ within the specified range \( \left[\epsilon_{i}^{L},\epsilon_{i}^{U}\right] \).

Remark 2

The highlighted objective values in Table 1 obtained by the proposed method are close to the objective values obtained by the fuzzy max–min operator method, for both the fractional and the approximated linear objective functions. Moreover, the proposed method provides the DM with a set of solutions from which to choose the best preferred optimal solution according to the requirements of the system, whereas the fuzzy method yields a single solution and leaves no choice. This justifies the use of the proposed method.

6 Conclusions

In this paper, fractional objectives are converted into linear functions using Taylor series approximation, and a hybrid method that combines the ideas of “priority of objectives” and “achievement of possible aspired objective values”, drawn from the weighting sum and \( \epsilon \)-constraint methods, is implemented to generate a set of Pareto optimal solutions of a MOLFPP. As the proposed method offers numerous options to the DM, one solution can be selected as the most preferred optimal solution. LINGO and MATLAB were used for the computations in the numerical example. Comparison of the results with those of the existing fuzzy max–min operator method supports the effectiveness of the proposed method.

References

1. Martos, B.: Hyperbolic programming. Publ. Res. Inst. Math. Sci. 5, 386–407 (1960)

2. Charnes, A., Cooper, W.W.: Programming with linear fractional functionals. Naval Res. Logist. Q. 9, 181–186 (1962)

3. Stancu-Minasian, I.M.: Fractional Programming: Theory, Methods and Applications. Kluwer Academic Publishers (1997)

4. Costa, J.P.: Computing non-dominated solutions in MOLFP. Eur. J. Oper. Res. 181, 1464–1475 (2007)

5. Mishra, S.: Weighting method for bi-level linear fractional programming problems. Eur. J. Oper. Res. 183, 296–302 (2007)

6. Toksar, M.D.: Taylor series approach to fuzzy multiobjective linear fractional programming. Inform. Sci. 178, 1189–1204 (2008)

7. Ojha, A.K., Ota, R.R.: A hybrid method for solving multi-objective geometric programming problem. Int. J. Math. Oper. Res. 7, 119–137 (2015)

8. Valipour, E., Yaghoobi, M.A., Mashinchi, M.: An iterative approach to solve multiobjective linear fractional programming problems. Appl. Math. Model. 38, 38–49 (2014)

9. Ojha, A.K., Biswal, K.K.: Multi-objective geometric programming problem with ϵ-constraint method. Appl. Math. Model. 38, 747–758 (2014)

10. Collette, Y., Siarry, P.: Multiobjective Optimization: Principles and Case Studies. Springer (2003)

11. Miettinen, K.M.: Nonlinear Multiobjective Optimization. Kluwer Academic Publisher (2004)

12. Ehrgott, M.: Multicriteria Optimization. Springer (2005)

13. Haimes, Y.Y., Lasdon, L.S., Wismer, D.A.: On a bicriterion formulation of problems of integrated system identification and system optimization. IEEE Trans. Syst. Man Cybern. 1, 296–297 (1971)

14. Zimmermann, H.-J.: Fuzzy programming and linear programming with several objective functions. Fuzzy Sets Syst. 1, 45–55 (1978)

15. Bellman, R.E., Zadeh, L.A.: Decision-making in a fuzzy environment. Manag. Sci. 17, B-141 (1970)

Acknowledgments

The authors are grateful to the Editor and the anonymous referees for their valuable comments and suggestions to improve the quality of presentation of the paper.


© 2016 Springer Science+Business Media Singapore

About this paper

Cite this paper

Nayak, S., Ojha, A.K. (2016). An Approach to Solve Multi-objective Linear Fractional Programming Problem. In: Pant, M., Deep, K., Bansal, J., Nagar, A., Das, K. (eds) Proceedings of Fifth International Conference on Soft Computing for Problem Solving. Advances in Intelligent Systems and Computing, vol 436. Springer, Singapore. https://doi.org/10.1007/978-981-10-0448-3_59


DOI: https://doi.org/10.1007/978-981-10-0448-3_59


Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-0447-6

Online ISBN: 978-981-10-0448-3

eBook Packages: Engineering, Engineering (R0)