Abstract

Finite field arithmetic in residue number system (RNS) necessitates modular reductions, which can be carried out with RNS Montgomery algorithm. By transforming long-precision modular multiplications into modular multiplications with small moduli, the computational complexity has decreased much. In this work, two implementation methods of RNS Montgomery algorithm, residue recovery as well as parallel base conversion, are reviewed and compared. Then, we propose a new residue recovery method that directly employs binary system rather than mixed radix system to perform RNS modular multiplications. This improvement is appropriate for a series of long-precision modular multiplications with variant operands, in which it is more efficient than parallel base conversion method.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Modular multiplication is essential for popular Public Key cryptography that is defined in a finite field: RSA, Diffie-Hellman, and elliptic curve cryptography. In fact, a cryptosystem with enough security level often necessitates frequent large-operand modular multiplications.

As is known, additions, subtractions, and multiplications are parallel and carry-free in residue number system (RNS) [1–4]. Therefore, RNS arithmetic accelerates long-precision operations. However, modular reduction in RNS is harder than that in binary system.

An effective way to tackle RNS modular multiplication is to extend Montgomery algorithm from binary system to RNS [5–12]. Regular Montgomery algorithm breaks modular reductions in binary system into sequential additions and right shifts [13, 14], while the RNS Montgomery modular multiplication utilizes parallelism of RNS. As far as cryptography is concerned, the parallelism also provides natural immunity to side attacks, when the integral information is distributed into several channels. Besides, Phillips et al. [15] have proposed an algorithm directly based on the chinese remainder theorem, in which the constants are also represented in RNS.

In this paper, we will discuss the less-efficient RNS Montgomery algorithm with residue recovery [7, 10, 16], after an overview of the parallel base conversion method. It has been found that the usual residue recovery method can be built on binary system and RNS, without transformation into mixed radix system.

The remaining part of this paper is organized as follows: Sect. 2 reviews the RNS Montgomery algorithm with parallel base conversion; Sect. 3 discusses the RNS Montgomery algorithm with residue recovery; in Sect. 4, we propose our residue recovery method with binary system; and the last section concludes this paper.

2 RNS Montgomery Algorithm with Parallel Base Conversion

There are several approaches to implement RNS Montgomery modular multiplications, all of which obtain the quotients in parallel and then perform modular reduction of \( M^{- 1} \bmod P \) in an auxiliary RNS [5–9, 11, 17–19]. Among these methods, the parallel base conversion algorithm is the most efficient in computation.

2.1 Montgomery Algorithm

Usually, Montgomery algorithm is implemented in an interleaved form, and the multiplier is counted bit by bit while the multiplicand enters as a whole [14].

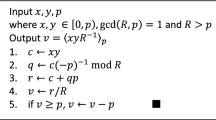

Algorithm 1 Montgomery algorithm [13] |

|---|

Input: Integers A, B, and P satisfy \( 0 \leq A < 2^{n} \), \( 0 \leq B < P \), \( 2^{n - 1} < P < 2^{n} \), and \( {\text{GCD}}(P,2) = 1 \). Let \( R = 2^{n} \) and \( R^{- 1} \) be the modular inverse of R modulo P. Then, \( RR^{- 1} - P\rho = 1 \), or \( \rho = (- P^{- 1}) \) mod R. |

Output: \( S \equiv A \cdot B \cdot R^{- 1} \) (mod P), where 0 ≤ S < 2P. |

1: \( T = A \cdot B; \) |

2: \( Q = T\rho \,\bmod \,R \); |

3: \( S = (T + PQ)/R \); |

4: return S. |

2.2 RNS Montgomery Algorithm with Parallel Base Conversion

At Line 1, \( P^{- 1} = {\text{RNS}}\left({(p_{1})_{{m_{1}}}^{- 1},(p_{2})_{{m_{2}}}^{- 1}, \ldots,(p_{n})_{{m_{n}}}^{- 1}} \right). \)

At Line 3, \( M^{- 1} = \left({|M^{- 1} |_{{m_{n + 1}}},|M^{- 1} |_{{m_{n + 2}}}, \ldots,|M^{- 1} |_{{m_{2n}}}} \right) \). There are two base conversions in such an RNS Montgomery modular multiplication: (1) Conversion of \( Q = (q_{1},q_{2}, \ldots,q_{n}) \) from \( \Upomega \) to \( Q^{\prime} = (q_{n + 1},q_{n + 2}, \ldots,q_{2n}) \) in \( \Upgamma \); (2) Conversion of \( R^{\prime} = (r_{n + 1},r_{n + 2}, \ldots,r_{2n}) \) from \( \Upgamma \) to \( R = (r_{1},r_{2}, \ldots,r_{n}) \) in \( \Upomega \).

Algorithm 2 RNS Montgomery algorithm with parallel base conversion [5, 7] |

|---|

Input: The moduli for residue number system \( \Upomega \) are \( \{m_{1},m_{2}, \ldots,m_{n} \} \), with \( M = \prod\nolimits_{i = 1}^{n} m_{i} \). Meanwhile, an auxiliary residue number system \( \Upgamma \) are defined by the other moduli \( \{m_{n + 1},m_{n + 2}, \ldots,m_{2n} \} \), with \( N = \prod\nolimits_{i = n + 1}^{2n} m_{i} \). Integers A, B, and P are represented in \( \Upomega\,\bigcup\,\Upgamma \) as \( A = (a_{1},a_{2}, \ldots,a_{2n}) \), \( B = (b_{1},b_{2}, \ldots,b_{2n}) \), \( P = (p_{1},p_{2}, \ldots,p_{2n}) \). Additionally, \( A \cdot B < M \cdot P \), \( 2P < M < N. \) |

Output: \( R \equiv A \cdot B \cdot M^{- 1} (\bmod \;P) \) with R < 2P. |

1: \( Q = (A \times B) \times (- p^{- 1}) :\Upomega; \) |

2: \( Q^{\prime} \leftarrow Q:\Upgamma \leftarrow \Upomega; \) |

3: \( R^{\prime} = (A \times B + P \times Q^{\prime}) \times M^{- 1} :\,\Upgamma; \) |

4: \( R \leftarrow R^{\prime} : \Upomega \leftarrow \Upgamma. \) |

5: return \( R \bigcup R^{\prime} :\Upomega \bigcup \Upgamma. \) |

Set \( M_{j} = M/m_{j} \), \( \sigma_{i} = \left| {q_{i} \left| {M_{i}^{- 1}} \right|_{{m_{i}}}} \right|_{{m_{i}}} \), and \( G_{i,j} = \left| {M_{j}} \right|_{{m_{n + i}}} \), then

where q j is obtained as modulo m j , and the integer factor α comes from the improved chinese remainder theorem [20]. Suppose that the moduli m i have k binary bits, i.e., \( 2^{k - 1} < m_{i} < 2^{k} \), for \( i = 1,2, \ldots,n \). With \( M_{i} = M/m_{i} \), the chinese remainder theorem can be written as

where \( \sigma_{i} = \left| {x_{i} M_{i}^{- 1}} \right|_{{m_{i}}} \), and the indefinite factor α can be fixed by an approximation method [5, 8] or by an extra modulus [7, 20].

3 RNS Montgomery Algorithm with Residue Recovery

Although a direct map of Montgomery algorithm to RNS suffers from a problem in losing residues, it is a good guide or bridge for subsequent algorithms.

Algorithm 3 Direct map of Montgomery algorithm in residue number system [16] |

|---|

Input: The integers A, B, and P are represented with residue number system \( \Upomega \): \( \{m_{1},m_{2}, \ldots,m_{n} \} \), with \( M = \prod\nolimits_{i = 1}^{n} m_{i} \) and \( M_{i} = M/m_{i} \). \( A = (a_{1},a_{2}, \ldots,a_{n}) \), \( B = (b_{1},b_{2}, \ldots,b_{n}) \), and \( P = (p_{1},p_{2}, \ldots,p_{n}) \). Meanwhile, A is also expressed in the mixed radix system as \( A = a_{1} + a_{2} \cdot m_{1} + \cdots + a_{n} \cdot \prod\nolimits_{i = 1}^{n - 1} m_{i} \). In addition, \( 0 \le A < \frac{{m_{n} - 1}}{2} \cdot \frac{M}{{m_{n}}} \), 0 ≤ B < 2P, \( 0 < P < \frac{M}{{\mathop {\max}\nolimits_{1 \le i \le n} \{m_{i} \}}} \). |

Output: \( R = A \cdot B \cdot M^{- 1} = {\text{RNS}}\left({r_{1},r_{2}, \ldots,r_{n}} \right) \). |

1: \( R: = (0,0, \ldots,0) \); |

2: for i = 0 to \( n - 1 \) do |

3: \( q_{i}^{\prime} : = (r_{i} + a_{i}^{\prime} \cdot b_{i})(m_{i} - p_{i})_{i}^{- 1} \bmod m_{i} \); |

4: \( R: = R + a_{i}^{\prime} \cdot B + q_{i}^{\prime} \cdot P \); |

5: \( R: = R \div m_{i} \); |

6: end for |

7: return R. |

3.1 Direct Map of Montgomery Algorithm into RNS

At Line 3, we make sure that

Given that \( R\bmod m_{i} = r_{i} \), \( B\bmod m_{i} = b_{i} \), and \( P\bmod m_{i} = p_{i} \), we have

Therefore, at Line 4 of the ith iteration, R is a multiple of m i . At the end of all iterations, as is shown in [7, 16], we have

Notice that \( M = m_{1} \cdot m_{2} \cdots m_{n} \) and \( A = \sum\nolimits_{i = 1}^{n} a_{i}^{\prime} \prod\nolimits_{j = 1}^{i - 1} m_{i} \), the above equation yields \( R \equiv A \cdot B \cdot M^{- 1} (\bmod \;P) \). At the last step of each loop, R will keep below 3P, which can be examined by induction [16]. Given that \( R_{n - 1} < 3P \) and \( A < \frac{{m_{n} - 1}}{2} \cdot \frac{M}{{m_{n}}} \), it should yield [16] \( R_{n} < 2P \).

However, there is a problem hidden at line L5: The residue \( r_{i} \) gets lost when \( r_{i} \div m_{i} \) appears [7]. In RNS, \( r_{i}/m_{j} \) is equivalent to \( r_{i} \times (m_{j})_{{m_{i}}}^{- 1} \bmod \;m_{i} \). However, \( (m_{i})_{{m_{i}}}^{- 1} \) does not exist because of \( m_{i} \times (m_{i})_{{m_{i}}}^{- 1} \equiv 0(\bmod \;m_{i}) \) (The product of an integer and its inverse modular m i should be equal 1 modulo m i ). Therefore, one more residue becomes meaningless after each cycle of the loop.

3.2 RNS Montgomery Algorithm with Residue Recovery

With the improved chinese remainder theorem [20], the lost residue can be recovered by the help of the redundant residue \( r_{n + 1} \). At Line 4,

Algorithm 4 RNS Montgomery algorithm with residue recovery [16] |

|---|

Input: The group of moduli: \( \{m_{1},m_{2}, \ldots,m_{n} \} \bigcup \{m_{n + 1} \} \) defines the residue number system \( \Upomega \), with \( M = \prod\nolimits_{i = 1}^{n} m_{i} \) and \( M_{i} = M/m_{i} \). A is represented in mixed radix system: \( A = a_{1}^{\prime} + \sum\nolimits_{i = 2}^{n} a_{i}^{\prime} \prod\nolimits_{j = 1}^{i - 1} m_{j} \). Meanwhile, \( B = (b_{1},b_{2}, \ldots,b_{n},b_{n + 1}) \) and \( P = (p_{1},p_{2}, \ldots,p_{n},p_{n + 1}) \). Also, \( A < \frac{{m_{n} - 1}}{2} \cdot \frac{M}{{m_{n}}} \), B < 2P. |

Output: \( R \equiv A \cdot B \cdot M^{- 1} (\bmod \;P) \). |

1: \( R: = (0,0, \ldots,0) \); |

2: for i = 1 to n do |

3: \( q_{i}^{\prime} : = (r_{i} + a_{i}^{\prime} \cdot b_{i})\left| {(m_{i} - p_{i})^{- 1}} \right|_{{m_{i}}} \bmod \;m_{i} \); |

4: \( R^{\prime} : = R + a_{i}^{\prime} \cdot B + q_{i}^{\prime} \cdot P \); |

5: \( R: = R^{\prime} \div m_{i} \); |

6: \( r_{i} : = {\text{restoreRNS}}(r_{1}, \ldots,r_{i - 1},r_{i + 1}, \ldots,r_{n + 1}) \); |

7: end for |

8: return R. |

At Line 5, \( R \div m_{i} \) is conducted for \( j \ne i,j \in \{1,2, \ldots,n + 1\} \) by multiplying the modular inverse, i.e., \( r_{j} : = r_{j} \cdot |m_{i}^{- 1} |_{{m_{j}}} \bmod m_{j} \) for j ≠ i.

At Line 6, the function ‘restore RNS’ just restores the lost residue r i at Line 5. Set \( \sigma_{i,j} = \left| {r_{j} \cdot \left| {\left({\frac{{M_{t,i}}}{{m_{j}}}} \right)^{- 1}} \right|_{{m_{j}}}} \right|_{{m_{j}}} \), then r i is obtained by the improved chinese remainder theorem as follows:

where

Taking the computation of a basic modular multiplication with m i as the unit, i.e., \( a_{i} \cdot b_{i} \bmod m_{i} \), then there are \( (6n + 5) \) modular multiplications at each iteration of the above algorithm. Then, a total Montgomery modular multiplication with residue recovery costs \( 6n^{2} + 5n \) basic modular multiplications.

4 Proposed Algorithm with Residue Recovery

The idea of RNS Montgomery algorithm is to keep the final result below some constants such as 2P with parallel or sequential modular reductions. The aforementioned residue recovery method performs sequential k-bit modular multiplications, in which one operand should be represented in mixed radix system. However, it is not convenient for conversions among binary system, RNS, and mixed radix system. Therefore, we propose a new residue recovery algorithm that avoids the use of mixed radix system.

4.1 Initial Algorithm

Algorithm 5 Proposed RNS Montgomery algorithm with residue recovery I |

|---|

Input: The residue number system \( \Upomega \) has a group of moduli: \( \{m_{1},m_{2}, \ldots,m_{n} \} \bigcup \{m_{n + 1} \} \), where \( m_{1} = 2^{t} \), \( 2^{k - 1} < m_{i} < m_{j} < 2^{k} \) for \( 2 \le i < j \le n + 1 \), and the integer \( t \le k - 1 \). All the moduli are relatively prime, with \( M = \prod\nolimits_{i = 1}^{n} m_{i} \) and \( M_{i} = M/m_{i} \). Also, \( A = \sum\nolimits_{i = 1}^{n} A_{i} \cdot 2^{(i - 1)t} \), where \( 0 \le A_{i} \le 2^{t} - 1 \) and \( A < 2^{nt - 1} \). B and P are represented in residue number system: \( B = (b_{1},b_{2}, \ldots,b_{n},b_{n + 1}) \), and \( P = (p_{1},p_{2}, \ldots,p_{n},p_{n + 1}) \), with \( T = 2^{nt} \), A < T, P < T, and B < 2P. |

Output: \( R \equiv A \cdot B \cdot T^{- 1} (\bmod \;P) \), where 0 ≤ R < 2P. |

1: \( R: = (0,0, \ldots,0) \); |

2: for i = 1 to n do |

3: \( q_{i}^{\prime} : = (r_{1} + A_{i} \cdot b_{i})\left| {(m_{1} - p_{1})^{- 1}} \right|_{{m_{1}}} \bmod \;m_{1} \); |

4: \( R^{\prime} : = R + A_{i} \cdot B + q_{i}^{\prime} \cdot P \); |

5: \( R: = R^{\prime} \div m_{1} \); |

6: \( r_{1} : = {\text{restoreRNS}}(r_{2},r_{3}, \ldots,r_{n + 1}) \); |

7: end for |

8: return R. |

With i = 1,

Assuming R < 3P at the end of the ith iteration, then the (i + 1)-th iteration yields

Then, the induction shows that R < 3P at last. However, we expect to get the final result within a smaller range [0, 2P). As

we can set

The above equation yields \( A_{n} < 2^{t - 1} - 1 \). Notice that \( A - A_{n} \cdot 2^{(n - 1)t} < 1 \cdot 2^{(n - 1)t} \), then as long as \( A < (A_{n} + 1) \cdot 2^{(n - 1)t} < 2^{nt - 1} \), there will be R < 2P.

At Line 6, we have

where \( \sigma_{1,j} = \left| {r_{j} \cdot \left| {\left({\frac{{M_{1}}}{{m_{j}}}} \right)^{- 1}} \right|_{{m_{j}}}} \right|_{{m_{j}}} \), and

4.2 Improved Algorithm

While Algorithm 5 is more efficient than Algorithm 4, it still requires more sequential steps to compute one RNS Montgomery modular multiplication than parallel base conversion method with Algorithm 2. However, we found out that a little revision will double the efficiency of our proposal, which is shown in Algorithm 6.

At first glance, Algorithm 6 differs from Algorithm 5 in the choice of t and \( m_{n + 1} \). By setting t = 2k rather than t = k, the number of loops is reduced by a half, while the computational complexity only increases a little. The range of m 1 then gets much larger than \( 2^{k} \) and other RNS moduli, but it does not impact the validity of the algorithm.

By setting \( m_{n + 1} = 2^{s} - 1 \), then the modular reduction in \( x \cdot y\,\,\bmod m_{n + 1} \) can be simplified. Take Eq. 6 as example, set \( x = \sigma_{i,j} < 2^{k} \), \( y = \left| {\frac{{M_{i}}}{{m_{j}}}} \right|_{{m_{n + 1}}} < 2^{s} \). Since \( k \gg s \), one can set \( l = \left\lceil {k/s} \right\rceil \), and there will be \( x = x_{l \cdot s - 1..0} \), with \( x_{i} = 0 \) for \( i \geq k \). As \( 2^{j \cdot s} \bmod (2^{s} - 1) = 1 \), then

Furthermore, the additions modulo \( 2^{s} - 1 \) can be simplified by setting the highest carry out as the lowest carry in. Assuming that \( x^{\prime} = x\bmod m_{n + 1} \), then \( x \cdot y\bmod m_{n + 1} = x^{\prime} \cdot y\bmod (2^{s} - 1) \) can be computed by one s × s-bit multiplication and one s-bit addition.

The sequential steps and computational complexity of Algorithm 6 can be measured as follows:

Algorithm 6 Proposed RNS Montgomery algorithm with residue recovery II |

|---|

Input: RNS \( \Upomega :\{m_{1},m_{2}, \ldots,m_{n} \} \bigcup \{m_{n + 1} \} \), where t = 2k, \( m_{1} = 2^{t} \), \( 2^{k - 1} < m_{i} < m_{j} < 2^{k} \) for \( 2 \le i < j \le n \), \( m_{n + 1} = 2^{s} - 1 \), \( s \ll k \). \( {\text{GCD}}(m_{i},m_{j}) = 1 \) for \( i \ne j \). \( M = \prod\nolimits_{i = 1}^{n} m_{i} \), \( M_{i} = M/m_{i} \). \( n^{\prime} = \left\lceil {n/2} \right\rceil \), \( A = \sum\nolimits_{i = 1}^{n\prime} A_{i} \cdot 2^{(i - 1)t} \), where \( 0 \le A_{i} \le 2^{t} - 1 \) and \( A < 2^{{n^{\prime} t - 1}} \). B and P are represented in residue number system: \( B = (b_{1},b_{2}, \ldots,b_{n},b_{n + 1}) \), and \( P = (p_{1},p_{2}, \ldots,p_{n},p_{n + 1}) \), with \( T = 2^{{2n^{\prime} t}} \), A < T, P < T, and B < 2P. |

Output: \( R \equiv A \cdot B \cdot T^{- 1} (\bmod \;P) \), where \( 0 \le R < 2P \). |

1: \( R: = (0,0, \ldots,0) \); |

2: for i = 1 to \( n^{\prime} \) do |

3: \( R^{\prime} : = R + A_{i} \cdot B \); |

4: \( q_{i}^{\prime} : = q_{1}^{\prime} \cdot \left| {(m_{1} - p_{1})^{- 1}} \right|_{{m_{1}}} \quad \bmod \,m_{1} \); |

5: \( R^{\prime \prime} : = R^{\prime} + q_{i}^{\prime} \cdot P \); |

6: \( R: = R^{\prime \prime} \div m_{1} \); |

7: \( r_{1} : = {\text{restoreRNS}}(r_{2},r_{3}, \ldots,r_{n + 1}) \); |

8: end for |

9: return R. |

-

1.

Assuming that the complexity of a k-bit modular multiplication is 1, and the complexity of a k × k multiplication is 1/3. As is well known, modular multiplication by Montgomery algorithm and Barrett modular reduction requires three multiplications. In addition, the computational complexity of a k × 2k multiplication is 2/3, and the complexity of an s-bit modular multiplication is \( \varepsilon, \varepsilon \ll 1 \).

-

2.

Neglecting the complexity of modular additions and modular subtractions, since it is small compared with modular multiplications.

-

3.

The computation of \( A_{i} \cdot B \) requires one sequential modular multiplication, and the total complexity is (n + ɛ) k-bit modular multiplications.

-

4.

The determination of \( q_{i}^{\prime} \) requires 2/3 sequential modular multiplication, and the total complexity is just 2/3. At Line 4, the value \( (m_{1} - p_{1})^{- 1} \) can be precomputed.

-

5.

The computation of \( q_{i}^{\prime} \cdot P \) requires 5/3 sequential modular multiplications, and the total complexity is \( (5n/3 + 2\varepsilon) \) k-bit modular multiplications. The increased computational complexity of 2/3 and ɛ corresponds to the modular reduction in \( q_{i}^{\prime} \) to k-bit and s-bit moduli \( m_{i} \), \( i = 2,3, \ldots,n + 1 \).

-

6.

The computation of \( R_{i}^{\prime \prime} \div m_{1} \) can be performed by modular multiplications of \( m_{1}^{- 1} = 2^{- t} \) modulo \( m_{i} \). It requires one sequential modular multiplication, and the total complexity is \( (n + \varepsilon) \) k-bit modular multiplications.

-

7.

As are shown in Eqs. (5) and (6), the recovery of residue r 1 after division of m 1 requires \( (2/3 + \varepsilon) \) sequential modular multiplication, and the total complexity is \( (2n/3 + 2 \varepsilon) \) k-bit modular multiplications. With i = 1 in Eq. 5, modular reduction over m 1 can be performed by truncation instead of multiplications, which leads to the complexity of 2/3 rather than 1.

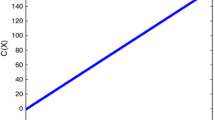

In total, Algorithm 6 merely needs

sequential modular multiplications, and the total computational complexity is about

k-bit modular multiplications.

4.3 Comparison with Parallel Conversion Method

By contrast, the parallel base conversion method in Algorithm 2 will need about 2n + 8 sequential k-bit modular multiplications [7, 15], and the total computational complexity is about \( (2n^{2} + 10n + 4) \) k-bit modular multiplications. While this result seems better than our proposals, it should be noticed that all the inputs of Algorithm 2 should be in residue number system. If only one input lies in RNS, the other should be transformed from binary number system to RNS [8], then the parallel base conversion method will require 3n + 8 sequential k-bit modular multiplications and \( (3n^{2} + 9n + 4) \) k-bit modular multiplications. Therefore, if one input of RNS modular multiplication keeps in binary number system, our proposed Algorithm 6 will be better than parallel base conversion method. The above comparison is shown in Table 1, where ‘Seq. Num. Mod-mult’ denotes sequential number of k-bit modular multiplications and `Tot. Num. Mod-mult’ denotes the total number of k-bit modular multiplications.

If there are a series of modular multiplications by variant long-precision integers, i.e., \( \tau_{1},\tau_{2}, \ldots,\tau_{h} \), with h be some regular index. The modulus is denoted as P. All \( \tau_{i} \) and P are long-precision binary numbers, e.g., between \( (2^{L - 1},2^{L}] \), with \( L \gg 1 \). Then, we intend to compute \( R = \prod\nolimits_{i = 1}^{h} \tau_{i} \bmod P \).

We just need \( \tau_{1} \) and P in RNS \( \Upomega :\{m_{1},m_{2}, \ldots,m_{n} \} \), where \( \tau_{1} = U_{\text{rns}}^{(0)} = (u_{1},u_{2}, \ldots,u_{n}) \), \( P = (p_{1},p_{2}, \ldots,p_{n}) \). By the new residue recovery method `MR’(Algorithm 6), one gets

At the last step, there is

Suppose that \( c = T^{h} \bmod P \) has been precomputed, then

If it is necessary, R can also be converted from RNS to binary system by the chinese remainder theorem:

where r i is the ith component of R, and α is obtained with the aforementioned approximation method [8].

Nevertheless, the proposed method is not less efficient than parallel conversion method [7] for modular exponentiation, in which the computational complexity can be greatly decreased in the latter since only one RNS-to-binary conversion with the base number is enough.

5 Conclusion

Long-precision modular multiplications can be performed in RNS with RNS Montgomery algorithm, for which there are mainly two implementation methods: parallel base conversion as well as residue recovery. The first method performs modular reductions with respect to the dynamic range in parallel, and base conversions between two RNSs are required. The second method, however, performs modular reduction in a number of sequential steps and requires more sequential steps.

Then, we propose a new residue recovery method that is based on binary system rather than mixed radix system, which is supposed to suit modular multiplications of many various large integers. In this case, it is more efficient than parallel base conversion method since little binary-to-RNS conversion is required.

References

Soderstrand, M., Jenkins, W., Jullien, G., Taylor, F. (eds.): Residue Number System Arithmetic: Modern Applications in Signal Processing, pp. 1–185, IEEE Press, New York (1986)

Mohan, P.A.: Residue Number Systems: Algorithms and Architectures. Kluwer Academic Publishers, Boston (2002)

Wu, A.: Overview of Residue Number Systems. National Taiwan University, Taipei (2002)

Taylor, F.: Residue arithmetic: a tutorial with examples. Computer 17(5), 50–62 (1984)

Posch, K., Posch, R.: Modulo reduction in residue number system. IEEE Trans. Parallel Distrib. Syst. 6, 449–454 (1995)

Ciet, M., Neve, M., Peeters, E., Quisquater, J.: Parallel FPGA implementation of RSA with residue number systems-can side-channel threats be avoided? In: 46th IEEE International Midwest Symposium on Circuits and Systems, vol. 2. pp. 806–810 (2003)

Bajard, J., Didier, L., Kornerup, P.: An RNS Montgomery modular multiplication algorithm. IEEE Trans. Comput. 47, 766–776 (1998)

Kawamura, S., Koike, M., Sano, F., Shimbo, A.: Cox-rower architecture for fast parallel Montgomery multiplication. In Preneel, B. (ed.) Advances in Cryptology-EuroCrypt’00. Volume 1807 of Lecture Notes in Computer Science, pp. 523–538, Springer-Verlag, Berlin (2000)

Nozaki, H., Motoyama, M., Shimbo, A., Kawamura, S.: Implementation of RSA algorithm based on RNS Montgomery modular multiplication. In: Third International Workshop on Cryptographic Hardware and embedded systems. Volume 2162 of Lecture Notes in Computer Science, pp. 364–376. Springer, Berlin (2001)

Bajard, J., Didier, L., Kornerup, P.: Modular multiplication and base extensions in residue number systems. In: 15th IEEE Symposium on Computer Arithmetic, pp. 59–65 (2001)

Bajard, J., Imbert, L.: A full RNS implementation of RSA. IEEE Trans. Comput. 53, 769–774 (2004)

Bajard, J., Imbert, L., Liardet, P., Yannick, T.: Leak resistant arithmetic. In: Cryptographic Hardware and Embedded Systems (CHES 2004). Volume 3156 of Lecture Notes in Computer Science, pp. 62–75 Springer, Berlin (2004)

Montgomery, P.: Modular multiplication without trial division. Math. Comput. 44, 519–521 (1985)

Orup, H.: Simplifying quotient determination in high-radix modular multiplication. In: 12th IEEE Symposium on Computer Arithmetic, pp. 193–199 (1995)

Phillips, B., Kong, Y., Lim, Z.: Highly parallel modular multiplication in the residue number system using sum of residues reduction. Appl. Algebra Eng. Commun. Comput. 21, 249–255 (2010)

Bajard, J., Didier, L., Kornerup, P.: An RNS Montgomery modular multiplication algorithm. In: 13th IEEE Sympsoium on Computer Arithmetic, pp. 234–239 (1997)

Yang, T., Dai, Z., Yang, X., Zhao, Q.: An improved RNS Montgomery modular multiplier. In: 2010 International Conference on Computer Application and System Modeling (ICCASM 2010), Vol. 10, pp. 144–147

Guillermin, N.: A high speed coprocessor for elliptic curve scalar multiplications over \( {\mathbb{F}}_{p} \). In: Cryptographic Hardware and Embedded Systems, CHES 2010. Vol. 6225 Lecture Notes in Computer Science, pp. 48–64 (2010)

Wu, T., Liu, L.: Elliptic curve point multiplication by generalized Mersenne numbers. J. Electro. Sci. Technol 10(3), 199–208 (2012)

Shenoy, A., Kumaresan, R.: Fast base extension using a redundant modulus in RNS. IEEE Trans. Comput. 38, 292–297 (1989)

Acknowledgments

The authors would like to thank the editor and the reviewers for their comments. This work was partly supported by the National High Technology Research and Development Program of China (No.2012AA012402), the National Natural Science foundation of China (No.61073173), and the Independent Research and Development Program of Tsinghua University (No. 2011Z05116).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer India

About this paper

Cite this paper

Wu, T., Li, S., Liu, L. (2014). Improved RNS Montgomery Modular Multiplication with Residue Recovery. In: Patnaik, S., Li, X. (eds) Proceedings of International Conference on Soft Computing Techniques and Engineering Application. Advances in Intelligent Systems and Computing, vol 250. Springer, New Delhi. https://doi.org/10.1007/978-81-322-1695-7_27

Download citation

DOI: https://doi.org/10.1007/978-81-322-1695-7_27

Published:

Publisher Name: Springer, New Delhi

Print ISBN: 978-81-322-1694-0

Online ISBN: 978-81-322-1695-7

eBook Packages: EngineeringEngineering (R0)