Abstract

We will begin by a quick but thorough look at the effects of fault s, error s and failure s, caused by terrestrial neutron s originating from cosmic rays , on the terrestrial electronic systems in the variety of industries. Mitigation measures, taken at various levels of design hierarchy from physical to systems level against neutron-induced adverse effects, are then introduced. Challenges for retaining robustness under future technology development are also discussed. Such challenges in mitigation approaches are featured for SRAMs (Static Random Access Memories), FFs (Flip-Flops), FPGAs (Field Programmable Gate Arrays) and computer systems as exemplified in the following articles: (i) Layout aware neutron-induced soft-error simulation and fault tolerant design techniques are introduced for 6T SRAMs. The PNP layout instead of conventional NPN layout is proposed and its robustness is demonstrated by using the MONTE CARLO simulator PHITS. (ii) RHBD (Radiation-Hardened By Design) FFs hardened by using specially designed redundant techniques are extensively evaluated. BCDMR (Bistable Cross-Coupled Dual Modular Redundancy) FFs is proposed in order to avoid MCU (Multi-Cell Upset) impacts on FF reliability. Its robustness is demonstrated thorough a set of neutron irradiation tests. (iii) CGRA (Coarse-Grained Reconfigurable Architecture) is proposed for an FPGA-chip-level tolerance. Prototype CGRA-FPGA chips are manufactured and their robustness is demonstrated under alpha particle/neutron irradiation tests. (iv) Simulation techniques for failures in heterogeneous computer system with memory hierarchy consisting of a register file, an L1 cache, an L2 cache and a main memory are also proposed in conjunction with masking effects of faults/errors.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Terrestrial neutron

- Soft-error

- Simulation

- SRAM

- BCDMR

- Flip-Flop

- ALU

- CGRA

- Heterogeneous computer system

- Register file

- Cache

- Mitigation measures

1 Fundamentals and Highlights in Radiation-Induced Soft-Errors

Eishi H. Ibe, Exapalette, LLC

1.1 Hierarchy of Faulty Conditions of an Electronic System

Reliability is gaining monumental spotlights as the foremost property that is indispensable for the overall worth of electronic products, in particular, with respect to radiation hardness at the ground [1,2,3]. Once failures take place in electronic systems in the market, the news is spread over the world immediately though the internet and massmedia and the products or even the vender companies may, in the worst case, lose their business chance for a long period of time.

It is believed that the failures should have some sequential steps of symptoms to result in failures in almost all the troubles. In the most electronic systems, the symptom starts with a simple fault in the substrate of a circuit board in the system. Before the fault would grow the fatal failure, there should be many kinds of symptoms towards failures in a variety of situations. In many cases, a substantial part of faults may be disappeared or eliminated during propagation by a certain logical/timing masking effects as illustrated in Fig. 3.1, but some may be captured and fixed in memory elements such as SRAM, DRAM, flash memory, flip-flops, and so on. Once these faults are fixed in the memory elements, they have a lot more chance to cause the system failed.

It is important, therefore, to detect the faults, error s, and onsets of the failure, and eliminate them at the early stage to prevent fatal failure.

In the present chapter, we will show the basic understandings and examples of countermeasures against evolving threat of soft-error in electronic systems induced by terrestrial neutron s in VLSI devices and systems.

1.2 Sources of Neutrons in the Field and Fundamentals of Terrestrial Neutrons

In space applications, primary cosmic-ray (electrons, protons, and heavy ions)-induced soft-errors and hard-error s (permanent errors by which the device is mechanically destructed) are major concerns in reliability, which may cause failures and eventually determine the life of a space craft [4].

Meanwhile, when energetic cosmic ray protons enter into the atmosphere (troposphere and stratosphere) of the Earth, some protons undergo nuclear spallation reaction with nuclei (mainly nitrogen and oxygen nuclei) in the atmosphere to produce a number of light particles or secondary cosmic rays including neutrinos, photons, electrons, muons, pions, protons, and neutrons. As the cosmic rays are deflected by Heliomagnetic field or the Sun’s activity whose intensity has about 11-year cycle, strength of neutrons at the ground has also about 11-year cycle [5]. The neutron flux at the ground is the lowest, during the solar maximum (states when the Sun is the most active), while it is the highest at the solar minimum (states when the Sun is the least active).

Since a secondary neutron causes a cascade of spallation reactions in the atmosphere, it produces a shower of secondary particles and radiations that reaches the ground of the Earth. As the thick air layer over the ground can shield neutrons, strength (both of flux and energy) of neutrons depends upon altitude with slight dependency on atmospheric pressure [6]. Compared to neutron flux at the avionics altitude (about 10,000 m), therefore, the neutron flux at terrestrial altitude is much lower by a factor of 100–300.

The cumulative flux, which is the sum of total flux from the minimum neutron energy determined by some practical reason (in some standards like JESD89A [7] as below, for example) to the maximum neutron energy, is summarized in Fig. 3.2 with respect to of the terrestrial neutron flux estimated at the NYC sea level [1]. Figure 3.2a can be used for evaluation of the effects of thermal (about 25 meV) or low-energy (<1 MeV) neutrons, and Fig. 3.2b can be used for evaluation of the effects of high-energy (>1 MeV) neutrons. The total flux beyond 10 meV can be estimated 13 n/cm2/h from Fig. 3.2b, which is consistent with JESD89A setting [7]. It is noteworthy that the energy of terrestrial neutrons ranges widely from thermal (25 meV) to high-energy (>1 GeV) and its total flux is about 50 n/cm2/h at the ground including NYC sea level.

Cumulative neutron flux at the NYC sea level. a Low-energy (<1 MeV) neutrons, b high-energy (>1 MeV) neutrons (2015©IEEE [1])

Neutrons themselves are not charged particles, thus do not interact directly with matters except for very limited cases such as nuclear reactions and as summarized in Table 3.1. Only some charged particles, which can be produced as a consequence of nuclear reaction depending on the type of reaction, may cause SEEs . Since the natural abundances of the target nuclei in (i) nuclear fission reaction (235U/238U) are negligibly low, the reactions (ii) neutron capture reaction and (iii) spallation nuclear reaction only matter in the terrestrial fields.

In particular, the reaction mechanism (iii) has major roles in SEEs in the terrestrial fields, and therefore, the neutron reaction (iii) is focused in the present chapter. Only when 10B is contained in the matter, reaction mechanism (ii) may be of concern as explained in Sect. 3.1.6(iv).

Energetic charged particles including secondary particles from the nuclear reactions above interact with matters via Coulomb interaction mechanism.

Namely, while an energetic charged particle passes through the material, an orbital electron (conduction-band electron in solid) is stripped from its orbit by Coulombic interaction with the impinging ion, leaving electron-hole pairs (deposited energy of 3.6 eV is required to produce one-pair of electron-hole pair in Si) along with the trajectory of the impinging ion as illustrated in Fig. 3.3a. This process is called “direct ionization” or “charge deposition”.

The original sum of charges of electrons or holes is called “deposited charge”.

Some or most parts of electrons and holes recombine to disappear in particular in metals and dielectrics because mobility of the carrier is very high (electrons in metals) or low (holes in dielectrics). Some part of electrons and holes can survive from recombination in semiconductor materials under a certain level of potential gradient. (This can take place even in dielectrics under very high potential field.)

Some part of the deposited charges generated in the semiconductor materials can be collected by applying a certain level of potential gradient as illustrated in Fig. 3.3b. This process is called “charge collection” (to the electrode).

1.3 Generation of Faults: Origin of Errors and Failure

There are number of sources of faults in electronic devices and circuits such as SET (SNT and MNT) , power disturbances [3], and EMI [3] as summarized in Table 3.2 and they play very crucial roles in reliability in the electronic systems for the ground applications.

SET is a single transient due to charge collected to a diffusion region in the chip by an energetic particle impingement. Pulse width is below a few nano-second, and can long more than two clock pulses, which may require special and additional design consideration [8].

When SETs take place in multiple diffusion regions, the phenomenon is called MNT (Multiple Node Transient) that may corrupt the protective functions in the redundant circuitries [9].

Cross talk is noise propagation between close wires via parasitic capacitance [10]. Disturbance in power supply [3], and EMI [3, 11] are very important fault sources in electronic systems.

The most important characteristics of faults caused by a single event effect compared to other fault sources mentioned above is that they are initially only localized in a single well or substrate. In other words, it can be said that SET has an internal origin in the transistor structure while other sources have external origins outside transistors. Keeping this difference in mind, we will focus only on faults caused by a single event effect in this book. Please have a look at [3] for details of the last two mechanisms, power disturbance and EMI.

1.4 Transformation of Faults to Errors and Failures

As an SET is a single rapid (pico- to nano- second order) pulse noise in the substrate or well so that it is very difficult, in general, to detect and locate it in the device. Suppression of them to low enough level is also very difficult, but they do not always transform to “error”. Three important concepts of faulty conditions of a system are explained in [1].

An error is an incorrect state in a digital circuit node that could be caused by a data flip in a memory element like an SRAM (Static Random Access Memory) [12], a DRAM (Dynamic Random Access Memory) [13], a flash memory [14], and an FF (Flip-Flop) [15] or an extra delay in a circuit [16]. When an error is caused by ionizing radiation, it is called SEU (Single Event Upset ) or SE (Soft-Error) [7]. When an SEU causes randomly distributed multiple errors, it is also called an MCU (Multi-Cell Upset) [7]. Failure is a malfunction or dysfunction of a system. It is to be noted that a fault may or may not give rise to an error and an error may or may not result in a failure; A fault may or may not be strong enough to cause a memory bit to flip; An errors in a circuit may or may not reflect in the arithmetic results on a system. Furthermore, it is possible to reduce the probability of escalation of faulty conditions. It is therefore very important to characterize the nature of faulty conditions and their behavior in escalation from lower levels to higher levels of hierarchy.

Whether an error causes a failure or not depends on its location and the particular function that the system happens to be performing. Only when an error(s) propagates to the final output and cause malfunction of the system, we call this consequence as “ failure ”. An error does not always cause a system failure, because it may disappear or may be masked during propagation in the chip or board by some masking effects. The real failure rate of a system, therefore, cannot be estimated from the total sum of memory SEUs or SERs (SE Rates) in the system. In order to estimate realistic failure rate of a computer system can be obtained through computer simulation and extended in Sect. 3.5. Some mitigation techniques like parity [17], ECC (Error Correction Code ) [18], and interleaving techniques [19] may be applied to reduce SER. In the parity technique, one extra bit is added to a word (a set of bits) that expresses a character like numbers and alphabets. The data in the extra bit is set according to whether the number of “1” in the word is odd or even. When an SBU takes place in the word, the datum in the extra bit and the word become inconsistent and thus the SBU can be detected. In the ECC, more extra bits are added to a word to correct the error(s) in the word. To reduce speed, area and power penalties, the simplest scheme, Hamming code [18], where an SBU can be corrected based on the coding theory, is commonly applied. Two or more errors in the same word, which is called an MBU (Multi-Bit Upset) cannot be corrected by the Hamming code. Interleaving technique, by which the intervals between the bit in the same word are made wide enough compared to the penetrating ion range, is applied to reduce MBUs.

Failures include shut-down, abnormal operation of the system. Incorrect calculation by using supercomputers can also be categorized into failure. Failure is not recovered by system itself without physical or economic damages.

1.5 Fundamentals of CMOS Semiconductor Devices

In order in primary importance to understand the physical aspects of soft errors in relation to real MOSFET structure. A brief look at the structsure of CMOS (Complementary Metal Oxide Semiconductor) devices [20], which are commonly recognized as the most vulnerable semiconductor structure to neutron-induced soft-error, such as SRAMs, FFs and most logic circuits is given here. CMOS circuits are basically built on the identical striped structure of p and n- dual wells as illustrated in Fig. 3.4a for one bit of an SRAM or one OR gate. Unlike dual well structure (this structure is often called “bulk”) as illustrated in Fig. 3.4b, triple-well structure has an additional deep n-well to isolate Si-body from electrical disturbance in the substrate. It is recognized that the triple-well structure have some benefit for soft-error resilience because the volume of charge collection is limited and reduced above the deep n-well.

In SOI (Silicon On Insulator ) devices [20], BOXs (Buried-OXides) [21] are made under the dual wells to isolate the Si-body vertically and completely from the substrate. Isolation oxides such as Shallow Trench Isolation (STI) oxides are also made to isolate each node in lateral direction for such a structure. The SOI structure, therefore, has more advantages in soft-error resilience than triple-well structure does.

Further challenges have been made on the SOI structure: When the thickness of the SOI layer is thinner than the depth of depletion region in the SD (Source-Drain) channel, the structure is called FD (Fully Depleted) SOI [22]. Meanwhile, when the thickness of the SOI layer is thicker than the depletion region, the structure is called as PD (Partially Depleted) SOI [23]. Since the upper surface of BOX in FDSOI is covered fully with the depletion region, parasitic capacitance can be largely reduced compared to bulk/PDSOI devices, resulting in steep sub-threshold characteristics, reduction in latency, and power consumption.

Sugii et al. develop Silicon on Thin BOX (SOTB) [24] structure by which backgate bias can be applied in Silicon substrate below the bottom of thin (about 10 nm thickness) BOX in order to control Vth [24] (see Sect. 3.3 for more details). This structure is also called as double-gate structure because the Si-body is sandwiched by the conventional gate and the backgate.

The structure of Si-body has been changed continuously year by year as mentioned above, and will also be changed even drastically in the future. The challenges to enhance the resilience of CMOS devices, however, have to be started continuously and simply with Si-body structure because it is the initial condition of the failures regardless of the memory or logical origins.

1.6 Effects of Ionizing Radiation on Semiconductor Circuits

-

(i)

Soft-Error

As first found in alpha particle-induced soft-error mechanism [25], a high-energy single charged particle that hits a semiconductor generates a number of electron-hole pairs along its trajectory as illustrated in Fig. 3.5a, which is similar to Fig. 3.2a used for general material. When a charged particle penetrates into a depletion region at the bottom of the storage node of semiconductor memory cell (diffusion region), electrons flow into the off-state storage node (with “high (1)” potential for an n+ node, for example) in accordance with electromagnetic field there as illustrated in Fig. 3.5a. The mechanism shown in Fig. 3.5b is again called charge collection mechanism. The potential in the node is eventually lowered down by charge collection below the threshold potential between “1” and “0”, resulting in an SEU or a soft-error to flip the data from “1” to “0”. The flipped state can be recovered without any physical damages in the semiconductor device simply by rewriting data. This is why the phenomenon is called “soft”-error. In addition, charge collection is intensified by funneling effect [26], in which electrostatic field in the depletion region is elongated beneath the original depletion region by flowing electrons themselves and more electrons than initially contained in the depletion region are collected. Charge collection may cause SEU in both DRAM and SRAM [12] when the collected charge exceeds the critical charge Qcrit, which can be estimated by

where,

- C s :

-

storage node capacitance;

- V cc :

-

supply voltage.

The principal metric that stands for the vulnerability of semiconductors is SER (Soft Error Rate), which is calculated by

The unit of SER is FIT (Failure In Time : number of events/109 h). Another unit, FIT/Mbit (of memories), is commonly used referring to the SER per memory bit capacity.

-

(ii)

Error modes other than soft-error

Unlike soft-error, there are other error modes that cannot be recovered by rewriting.

SEFI (Single Event Functional Interrupt) is an error mode in logic circuits by data flips in FFs contained in the logic circuits [27]. State in an FF can be flipped in two ways by an ion hit. One is capture of an SET from the input of an FF. The other is data flip by direct hit into an FF storage node by ionizing radiation. An SEFI may be related to the FF behavior but is not understood fully. It is said that it cannot be recovered by rewriting of memory data, but can be recovered by resetting FF data to default values [1].

SEL (Single Event Latchup) is a phenomenon in which a pnpn switch is turned on resulting in an Idd current increase accompanying a number of circuit errors [28]. SEL cannot be recovered by re-writing but can be recovered by power-cycling (power off and then power-on) [7]. SEB (Single Event Burnout) and SEGR (Single Event Gate Rupture) are permanent destructive modes of radiation effects in power devices [29,30,31].

-

(iii)

The influence of scaling on radiation-induced faults or SER

Scaling (shrinking) the feature size (linewidth and spacing) has been the key to reduce power consumption, increase density and performance of LSIs, and, therefore intensely pursued for many decades [32]. From a viewpoint of SEE, however, there are some conflicting impacts by scaling

-

(1)

The smaller a memory size becomes, the lower the probability to be hit by an energetic particle, resulting in reduction of SER.

-

(2)

In addition, volume for charge collection become smaller, the amount of collected charge gets smaller compared to Qcrit, resulting in reduction of SER.

-

(3)

Capacitance of a storage node Cs is, in principle, in proportion to the area of a storage node. Scaling of memory cell, therefore in general causes decrease in the capacitance and worsens susceptibility of a memory cell due to decrease in Qcrit (∝CsVcc).

-

(4)

The distance between adjacent storage nodes is shortened by scaling, which causes “charge-sharing” [33] between multiple nodes. This causes an increase in MCU ratio to total SEUs.

-

(5)

The distance between pn-junctions is also shortened, which causes increase in susceptibility to bipolar effects including latchup. Such bipolar effects are becoming dominant in CMOSFET (CMOS Field Effect Transistor) circuits as a result of scaling (see Sect. 3.1.7 for more details).

The impacts (1) and (2) are beneficial for SER reduction. The impacts (3)–(5) are adverse effects. They are in a trade-off relation and, therefore, SER may increase or decrease depending on which mechanism is dominant.

Next we will discuss how SER at which errors occur in a device, is estimated. When a single particle penetrates into a device(s), it can cause multiple transients or multiple errors. By definition, physical consequence due to one single particle including neutron is called an SEE . When an SEE causes an error(s), we call this phenomenon SEU. Therefore, SEU can consist of multiple errors. Important thing is that SER is defined by the number of SEUs, not by the number of errors.

Another important quantity used in this chapter is SEU cross section σseu that is defined by

where,

- N seu :

-

the number of SEUs (not errors!)/count;

- Φp:

-

fluence of particles (neutrons)/(n/cm2).

Fluence means the total number of particle passed through a unit area.

σseu can be measured in experiments using radio-isotopes or accelerators [1] and one can calculate SER by using the σseu as follows:

where,

- <σseu>:

-

is an average of σseu over particle energies to adjust for actual field (or packaging) environment;

- ϕp:

-

flux of the particle/(count h−1 cm−2);

- FIT:

-

Failure In Time, SER in 109 h.

Flux means the number of particles that pass through a unit area per unit time.

1.7 Bipolar Action: A Newly Found Mode of Radiation-Induced Faults

As explained in Sect. 3.1.5, electron-hole pairs are produced along the trajectory of a high-energy particle when it passes through a semiconductor device. If the particle passes through the depletion region of the pn-junction under an off-state n-diffusion at the potential Vcc, electrons in the depletion region are collected to the n-diffusion region to cause a single event fault or a SEU and holes are repulsed out of the depletion region.

Ibe et al. pointed out that, in addition to the charge collection described so far, there is a novel soft-error mechanism, which they called Multi-Coupled Bipolar Interaction (MCBI) [34]. When a high-energy charged particle passes through the pn-junctions, not below the diffusion region, for example, in the side wall of the p-well in the triple-well CMOS structure as shown in Fig. 3.6a, direct ionization or charge deposition, by which electron-hole pairs are produced along with the track, similarly to Fig. 3.5a. Then, as illustrated in Fig. 3.6b electrons produced in the well flow out of the well by the same funneling mechanism as the conventional soft-error mechanism, leaving the holes in the well. These holes can make the well potential high enough to turn the parasitic npn transistor in the well “on” as illustrated in Fig. 3.6c. As a substantial number of nodes are contained and flipped in a single common well, the “high” nodes in the MCBI region can be flipped simultaneously to cause MNT or MCU.

Physical phenomena in basic nMOSFET structure induced by a high-energy charged particle. a Charged particle penetrates through pn-junctions on the side wall of the p-well and generate electron-hole pairs. b Electrons flow out of the p-well and holes remain in the p-well. c Holes elevate the potential in the p-well, resulting in turning the parasitic transistor on to cause soft-error

1.8 A Perspective of Progresses in Research and Engineering of Radiation-Induced Soft Errors

In this subsection, a historical review will be given regarding how technology challenges were encountered in the issue of radiation-induced soft-errors in LSIs and how engineering have solved them. Ever since alpha particle soft error was first discovered in DRAMs in 1979, a few distinctive paradigm shifts have been experienced.

-

(i)

First paradigm (1979–1990s) where SRAM design rule and density are >250 nm and <64 kbits, respectively: It is well known that alpha particle-induced soft error in DRAM was discovered by May et al. in 1979 [25]. In the same year, the possibility of soft-error due to terrestrial neutron is pointed out by Ziegler and Lanford [35]. As the impact of alpha particles on DRAMs appeared to be most devastating at that time, engineering attention was focused on alpha particles. By the early 1990s, alpha particle soft error in DRAMs was overcome by several effective countermeasures such as stacked or trench capacitors to enhance storage capacitance [36], triple-well structure, usage of purified low-alpha materials, and shielding by package materials [37,38,39]. Thus, soft errors in LSIs for terrestrial applications did not get much attention until the late 1990s.

-

(ii)

Second paradigm (late 1990s–2000) was experienced when SRAM design rule and density were around 130 nm and 128 k–4 Mbits, respectively: The terrestrial neutron-induced SER of SRAM was found to have become much higher than DRAMs [40]. It is understood that SER in DRAMs, which have embedded capacitors to keep high Qcrit, naturally has decreased thanks to the beneficial effects of scaling as mentioned previously in Sect. 3.1.3 (iii) (1) and (2). Meanwhile, SRAMs do not implement any artificial capacitor, and, therefore, SER in SRAMs has drastically increased by the adverse effect mentioned in Sect. 3.1.3 (iii)(3).

-

(iii)

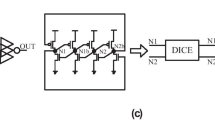

Third paradigm (2000–2005) was experienced when SRAM design rule and density were around 90 nm and 8 Mbits, respectively: Filing neutron irradiation data report to users was mandatory for memory venders, and this triggered discussions on neutron standard testing methods worldwide. As a consequence, JESD89 [41] for neutron, proton and α-ray SER testing method were issued as the de facto soft-error testing standard in 2001. In the third paradigm shift, concerns on neutron soft-error further spread over two directions from around 2004. One direction was concerns about MCU that emerged from about 130 nm process due to bipolar effects [42] and charge-sharing effects [43]. When a MCU takes place in more than two bits in the same word of SRAM, it cannot be recovered by using EDAC (Error Detection and Correction) or ECC (Error Checking and Correction) to result in system crash. EDAC or ECC can detect two-bit errors and correct one-bit error, but cannot correct two-bit errors in the same word (MBUs). It was found that almost all MCUs had taken place in one single MOSFET well and been aligned along with the only two adjacent bit lines. Based on this finding, newly found problem of neutron-induced MCUs in SRAMs was basically overcome by applying both ECC and interleaving with a small interval [34]. Another direction was to address concerns about single event transient (noise) in sequential and combinational logic devices. SERs (Soft-Error Rates) in flip-flops were predicted to be close to that in SRAM beyond 90 nm design rule [44], but there had been no effective detection and correction methods in logic devices except for redundancy techniques. Moreover, obvious threats of common-node failures or those due to MNT had been found in most space redundancy techniques, like TMR [45] and DICE (Dual-Interlocked storage Cell) [46]. If an MNT happens in two modules of TMR or two input nodes of DICE, recovery mechanism fails and may result in SDC (Silent Data Corruption) that cause unrecognizable system failure [47]. Uemura et al. [48] and Lee et al. [49] proposed ideas to harden DICE-like flip-flops by changing layout of nodes independently in 2010.

In addition to the two distinct directions described above, Baumann et al. pointed out soft-error caused by thermal neutron caption reaction by 10B that has natural abundance of 19.9% in Boron contained in the BPSG (Boron Phosphor Silicate Glass) used for the planarization of wafer surface [50]. When a thermal neutron, mostly of cosmic origins, which has a typical energy of 25 meV in equilibrium with atmospheric molecules, is captured by a 10B nucleus, a He ion (1.47 MeV) and a 7Li ion (0.84 MeV) are released to cause soft-errors by ionization. This type of soft-error seemed to have been overcome by changing the planarization process from BPSG to CMP (Chemical Mechanical Polishing), which does not use Boron.

-

(iv)

Fourth paradigm (2006–2009) was experienced where SRAM design rule and density were around 65 nm and 16 Mbits, respectively: Discussions on revision of JESD89 was started in 2003 because the original JESD89 had a number of limitations: the only testing facility assigned as the standard facility was the spallation neutron source in Los Alamos National Laboratory, for example. The new version JESD89A [7] was issued in 2006 with more practicable and reliable testing and analysis methods including quasi-monoenergetic neutron test method. Differential spectrum of terrestrial neutron was revised and a certain number of neutron irradiation facilities were added as the standard facilities. IEC60749-38 standard that is consistent with JESD89A was issued in 2008 as the de jure standard [51].

-

(v)

Fifth paradigm (2010) was experienced when SRAM design rule and density were <40 nm and >32 Mbits, respectively: the impact of soft-errors spread over large electronic systems. For big data-centers [52] or exa-scale supercomputers [53], power reduction had been one of the most important design issues. The space redundancy techniques that require large areas and power overheads would not be therefore applied to such big systems, in principle. Real-time (safety critical) systems like avionics or micro-control units in automobiles are also becoming under serious concerns and in-depth studies were widely undertaken [54, 55]. AEC Q100 G [56] and ISO26262 [57] for automobiles were also issued in 2008 and 2011, respectively.

With a common recognition that soft-error-induced system failure cannot be suppressed to satisfactory level by applying mitigation techniques only to a single design stack layer (device, circuit, chip, board, firmware, OS, middleware, and so on), communications between and combined mitigation techniques among stack layers have been encouraged as unavoidable direction [58,59,60,61]. In reality, such collaboration has turned out to be very difficult since the basic engineering skills in each stack layer are essentially and significantly different. Most engineers/researcher cannot expand their specialties beyond their own stack layers. Novel strategies to overcome this situation are needed to be explored and being proposed. Built-in communication scheme among the stack layers has been proposed by Ibe et al. in their LABIR (inter-Layer Built-In Reliability) concept [62, 63]. Evans et al. have proposed the RIIF (Reliability Information Interchange Format) as common format or protocol to be used in system design among stack layers [64].

In the possible sixth paradigm shift, other terrestrial particles, like muons, low-energy neutrons, protons, are being pointed out as a possible SER threat at the ground [65]. Alpha particle with VLA (Very Low-Alpha)-level package can again cause soft-errors in SRAMs [66]. Sub-100 nm SRAMs have a substantial susceptibility for soft-error due to terrestrial muons [67]. Low (thermal) energy neutron causes soft-error due to neutron capture reaction of 10B in device without BPSG processes [68, 69]. Concerns on electrons (beta rays) and gamma rays have been boosted after the severe accident in Fukushima-1 nuclear power plants [70, 71].

1.9 Spreading Concerns on Failures in Industries

Table 3.3 summarizes recent concerns in various fields of industry related to single event effects with applications, possible root causes, and observable failure symptoms [72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88].

In avionics, TMR is applied to critical components to avoid fatal failures and faults can be found through error flags from TMRs [72]. IGBTs (Insulated Gate Bipolar Transistors) are used in trains [73], automobiles [29,30,31] and avionics for inverters. They are subject to destructive SEE mode, SEB, due to electron avalanche by a charged particle penetration in pnp structures. SEEs in servers [74, 75] and routers [76] have been main concerns in networks because they use a tremendous number of memories, in particular, SRAMs. Power supplies for network systems like DC–DC converters are also vulnerable to terrestrial radiation in the destructive mode [77].

In large-scale supercomputers like TITAN in ORNL (Oak Ridge National Laboratory) , it has been reported that about 10 errors take place in a day [78]. In particular, SDC s by which wrong results are obtained without any error evidences after extremely massive and costly calculations [79, 80], is one of the most serious concern in resilience. DUEs (Detected Unrecoverable Errors) are also major concern in microprocessors, in particular, for the systems that are not allowed to stop. It is said that the rate of DUEs is higher than that of SDC in cache memory [81]. Capture and recovery of SDCs and DUEs impacts is top-priority issue in real time, safety critical systems.

Concerns about automobile systems reliability are spreading rapidly and widely, on the brake by wire [82], power steering [83], engine control [84], communication protocol in a car like CAN (Controller Area Network) [85] and LIN (Local Interconnect Network) [86]. GPUs (Graphic Processing Units) or GPGPUs (General-Purpose GPU) are being widely used for many purposes including nodes for supercomputers thank to its high ability in parallel computing. Rech et al. made a neutron beam testing at ISIS for 40 nm GeForce GTX480 and showed high susceptibility of GPUs to terrestrial neutrons [87]. Pedestrian detection at night is one of the most important safety requirements (“Five star” in Euro NCAP rating) where GPUs are used in future.

Even portable digital applications, like smartphones and tablet PCs, have been tested under neutron irradiation [88]. Obviously, no electronic equipment is free from threat due to SEE by terrestrial radiations, these days.

There are a number of fault/error/failure modes that reflect their root-cause mechanisms. Prediction and estimation techniques of faulty conditions and their rates of occurrence have a primary importance in designing devices, circuits and electronic systems. Detection techniques of faulty conditions must be advanced to know the nature of the faults/errors/failures based on their underlying physical mechanisms and to validate the prediction and estimation techniques. Cost-effective resilient techniques may be assigned according to each nature of the failure and application. The following sections introduce some examples of prediction/estimation, detection and classification techniques of fault/error/failures.

1.10 Conventional and Advanced Mitigation Techniques

As explained in Sects. 3.1.5 and 3.1.6, an SET takes places only in well/substrate region. Therefore, strategies to mitigate soft-errors in devices or failures in electronic systems boil down to simple strategies as summarized in Table 3.4 with some typical examples [1, 24, 89,90,91,92,93,94,95,96,97,98]:

-

Restriction of production of faults in wells or acceleration of recombination of electron-hole pairs.

Implantation of catalyst element like Au in wells is possible choice.

-

Restriction of production or collection of charges in the relevant volume. Triple-well structure is among such techniques. Volume of the triple well and depth of STI (Shallow Trench Isolation) can be important factor to reduce charge production and collection. Layout of pMOS and nMOS is also one of key design points in order to reduce MCUs in memory cells (see Sect. 3.2).

-

Recovery of errors in memory elements. ECC for memories and DICE, BISER (Built-In Soft Error Resilience), BCDMR (Bistable Cross-Coupled Dual Modular Redundancy) for FFs are among such techniques (see Sect. 3.3 for more details).

Failures take place when an error propagates to the final output of an electronic system. Therefore mitigation techniques for failures are

-

Enhancing masking effect to prevent propagation of errors in the circuitry.

-

Space redundancy techniques to cancel out errors. TMR (see Sects. 3.3 and 3.4) and DMR (see Sect. 3.3) are typical methods. DCC (Duplication + Comparison + Checkpointing) [98] is an extended version of DMR. Lock-step operation of dual processors is mainly applied to automobile MCUs (Micro-Control Units).

Power consumption is one of major metrics that determine the mitigation techniques to be applied in large-scale systems like data-centers and supercomputers [53, 58, 59, 79, 80]. “Dark silicon” refers such constraints on performance and reliability due to power consumption [52]. Space redundancy techniques such as TMR and DMR may not be applied to such large big data systems in order to avoid large power consumption.

MCU may cause deactivation of space redundancy technique by simultaneous errors in redundant nodes. Layout design of redundant node must be carefully conducted (see Sect. 3.3).

-

Time-redundancy techniques to cancel out errors. RAZOR [99] is one of simple solutions for FFs. RCC (Replication + Comparison + Checkpointing) is a typical time redundancy technique for an extra-large electronic systems from the viewpoint of suppressing power consumption, but concerns are addressed on even such time-redundancy techniques because error rate may exceed checkpointing frequency [79].

-

FPGA (Field Programmable Gate Array) s are being widely used in a variety of applications due to their versatility and adjustability in programming [100]. Programs for operation of electronic systems are initially written and fixed in CRAM (Configuration RAM). Errors in CRAM may cause fatal failure due to malfunction of operation. Prompt detection of errors in CRAM and reconfiguration of CRAM are key techniques for reliability in FPGA (refer to Sect. 3.4).

Shimbo et al. have recently developed non-stop recovery CRAM system by using an embedded controller into FPGA array [101]. This kind of approaches seems to be promising in future.

-

Reliability for the entire computer system is far more difficult to achieve and challenging because of its complexity and dependence on applications (refer to Sect. 3.5).

1.11 Symptom-Driven System Resilient Techniques

Ibe et al. [63, 64] proposed the LABIR approach that is a recovery method driven by a hardware error symptom . Symptom-driven techniques originally meant that predetermined optimization process for isolation of faulty conditions in electronic systems were initiated upon capturing any pretabulated “symptoms” of faulty conditions in OS [102, 103]. This approach utilizes the so-called fuzzy/intelligent expert systems. Unlike this approach, Ibe’s proposal is specific only to SEEs which evolve in a well as a detectable symptom. In this scheme, the recovery process is triggered by detecting the symptom before it grows to error/failure and the system is recovered in CPU or application level by means of time-redundancy techniques such as checkpointing and rollback [104]. It can avoid large area, power overheads because it does not apply space redundancy techniques such as TMR. In general, since time-redundancy techniques always apply the same procedure twice and make comparison, speed penalty is inevitable. Since terrestrial neutron-induced soft-errors and resulting symptoms do not take place so often (one per hour even for the largest super computers [105]), this approach can be adopted with a very small speed penalty. Software symptom based approaches are also proposed on the basis that only errors that affect execution of instructions have to be taken care of, while other errors can be left “don’t care” In this section, possible examples for such approaches are introduced.

-

(i)

Hardware symptom

LABIR proposes interactive or communicative mitigation techniques in which a recovery action such as rollback to the checkpoint is triggered when a layer finds any error symptom, not necessarily error or fault itself.

As illustrated in Fig. 3.7, immediately after capturing such a symptom, errors or failures can be removed by a rollback-and-replicate operation in CPU level of the chip. In the case of the example in Fig. 3.7, sense amplifiers are applied to detect slight potential differences between adjacent two p-wells, so that other source of noises like EMI and noise in power supply line [105] propagate in wider area than soft-error over many wells so that they can be eliminated as well by the differential method between adjacent wells. BIST (Built—In Self-Test) [106], Built-In Current (Pulse) Sensor (BICS [90, 107], BIPS)), SAW (Surface Acoustic Wave)-based symptom detector [81], on-chip monitor [108] can be used for such kind of technique. By using BIPS, a pulse propagated from a zone penetrated by a charged particle in p-well can be detected in Idd line by using an embedded current or pulse sensor. In the SAW-based symptom detector, the surface acoustic wave generated by a hazardous event at any location in the semiconductor device is captured by micro-cantilever type detectors. The location of charged particle penetration is calculated by the capture time difference among the detectors. In the on-chip monitor approach, multiple micro-pulse monitors are embedded in the peripheral area of a chip to monitor noises in selected locations.

-

(ii)

Software symptom

Li et al. has proposed a software–hardware co-designed resilient super-scalar system where failure symptom is detected mainly by software and recovery and diagnosis (software bug/permanent error/transient) are made during rollback from checkpoint [109]. Reconfiguration is made in fine grains based on the diagnosis. Fault injection is applied to Solaris-9 OS with the UltraSparc-III ISA. Application and OS crashes and hang-ups are monitored and classified by injected component. These authors have reported that a large fraction of the faults results in OS failures so that and that recovery methods for OSs must be developed.

Hari et al. proposed a symptom detector to detect fault including SDC in software behavior [110].

Wang et al. has proposed SBRM (Symptom Based Redundant Multithreading) where symptom of failure is monitored on software like deadlock, exception handling, miss-instruction in control flow, and error in the cache/translation look-aside buffer [97]. Instructions are executed simultaneously in two threads. Symptom monitoring is applied only to the redundant thread and results in two threads are compared. If the results do not match, an error signal is delivered and restore process is initiated. Checkpointing supported with input replication, symptom detector, and control logic for pipeline flush is used for restore process. Fault injection in the RTL model of Alpha microprocessor is made while SPEC2000 Integer benchmarks operated. By choosing deadlock and exception handling as symptoms, it has been demonstrated that 75% of errors can be recovered with a speed loss of only 2%.

1.12 Challenges in the Near Future

Table 3.5 shows future trends, conventional approaches against failures, challenges, and global standards being worked out relevant to the fault/error/failure modes actually encountered in various industry sectors.

In safety critical system or real-time system like avionics and automobiles, weight saving in structural materials is one of major trends to reduce fuel and power consumption. Usage of GPUs will be extended to much wider areas [87]. Millisecond recovery is one of the most challenging themes in real-time systems. In railway systems, further computerization will proceed to realize cost effective, connected, and resilient railway systems [111]. In exa-scale supercomputer system, paradigm shifts in dependability techniques including HW and SW must be realized to avoid failures due to very frequent errors including SDC and DUE (Detected Unrecoverable Error) [112].

In network system as well as supercomputer system, large power consumption will be a serious bottleneck for selection of mitigation techniques.

PDA (Personal Digital Assistance) s or smartphones are being increasingly utilized to control in-house digital appliances remotely. Noise disturbances and malicious attacks must be considered as possible causes of serious accidents [113].

Best combination of mitigation techniques as in LABIR/Cross layer reliability should vary by industry and need to be extensively pursued as well as development of novel key technologies. A list of global standards with their objects and category types such as IEC61508, ISO26262, JESD89A, and so on are listed in Table 3.5.

In addition, it is predicted by SRAM soft-error simulation that further scaling may have significant impacts of processor/computer system design [112]. Namely,

-

As scaling proceeds, Qcrit will decrease below 1 fC or even below 0.1 fC. This causes an extensive increase in area affected by an SEE: For 130 nm design rule, 100 bit × 100 bit SRAM memory matrix can be affected by an SEE, while 1000 bit × 1000 bit SRAM memory matrix would be affected by an SEE beyond 22 nm design rule. When the SRAM has six transistors, the area containing 6M transistors would be affected by an SEE for 22 nm design rule, resulting in unprecedented difficulty in reliability design of processor/computer systems. New breakthrough technologies are obviously required.

2 Soft-Error Tolerant SRAM Cell Layout

Shusuke Yoshimoto, Kobe University

Masahiko Yoshimoto, Kobe University

Hiroshi Kawaguchi, Kobe University

2.1 Introduction

Nano-scaled integrated circuits are susceptible to particle-induced single event effect (SEE) because of their low signal charge and noise margins [31, 65, 113, 114]. Particularly the effect of multi-cell upsets (MCUs), in which a single event results in simultaneous errors in more than one memory cells, have been closely investigated. MCUs are caused by collection of charges produced by secondary ions in neutron-induced nuclear reaction [1]. The ratio of MCUs to single event upsets (SEUs) is predicted to increase drastically in nano-scaled SRAM s [2, 33, 43, 114].

Figure 3.8 shows a schematic and a layout of a general 6T SRAM cell with a typical 65-nm CMOS logic design rule. In the design, the sizes of the transistors are relaxed to suppress threshold voltage variation so that the cell area is about twice as large as a commercial 65-nm 6T cell [115]. The 6T cell consists of PMOS load transistors (PL0, PL1), NMOS driver transistors (ND0, ND1) and access transistors (NA0, NA1). A wordline (WL) and two bitlines (BL, BLN) are horizontally and vertically connected among cells, respectively. In the layout of the general 6T cell, the PMOS transistors are centered in the memory cell; this structure is called an NMOS-PMOS-NMOS (NPN) layout hereafter.

Figure 3.9 shows sensitive nodes in the general 6T cell layout: a low-state (“L”) PMOS diffusion and a high-state (“H”) NMOS diffusion. We have observed that the NMOS has a four-times larger SEU cross section than a PMOS for a wide range of supply voltages (see Fig. 3.10) [116]. The simulation results come from an iRoC TFIT soft-error simulator [117] with a database of a generic 65-nm bulk CMOS process [118].

In this subsection, we present a neutron-induced soft-error rate simulation tool and two types of soft-error tolerant SRAM cell layouts. Results show that the proposed memory cell layouts favorably affects their reliability; they enhance the effectiveness of single error correcting-double error detecting ECC (SEC-DED ECC).

2.2 Neutron-Induced Soft-Error Rate Simulator

Figure 3.11 illustrates a flow chart of our neutron-induced soft error simulator [116] using PHITS, the Particle and Heavy Ion Transport Code System [119]. The input data for PHITS are neutron spectrum data, device structure data, and nucleus reaction models. The cosmic-ray neutron spectrum is calculated using an Excel-based Program for calculating Atmospheric Cosmic-ray Spectrum (EXPACS) [120], as shown in Fig. 3.12. The device structure is constructed as presented in Fig. 3.13.

Cosmic-ray neutron flux normalized to ground level in New York City. Flux is calculated with EXPACS [120]

Device structure based on a 65-nm general 6T SRAM cell layout (logic rule basis) [120]

The device structure includes the cell property (width, height, and position of sensitive nodes), a cell-to-cell pitch in a cell array, and data patterns. The cell height, width, and position of the sensitive nodes are modeled as presented in the figure. The diffusion area is derived from the transistor width and the cell height. The clearances between diffusion edges are all equal.

The PHITS can export secondary particle dump files presented in Fig. 3.14. In our simulation, the dump files are generated in respect to NMOS and PMOS sensitive volumes in an SRAM cell. The dump file includes nucleus reaction IDs (event numbers), atomic weight, geometry points (X, Y, Z), velocity vectors (dX, dY, dZ), and energy in the point (E). The deposit energy (Edeposit) is calculated with the dump files in the respective sensitive nodes. The deposit charge (Qdeposit) is calculated using the following equation, in which e is the elementary charge:

When a secondary particle deposits more charge than the critical charge (Qcrit) to at least one memory cell, that particle is classified as a single event upset (SEU) particle. Finally, single-bit-upset and multi-cell-upset soft-error rates (SBU and MCU SERs) are calculated at every SEU particle event.

2.3 PMOS-NMOS-PMOS (PNP) 6T Cell Layout

The proposed 6T cell is designed as a PMOS-NMOS-PMOS (PNP) layout in Fig. 3.15. The NMOS-centered 6T layout has the same transistors as the general one. The WLs and the BLs are assigned in horizontal and vertical directions, respectively.

Figure 3.16a shows an SRAM cell array using the general NPN 6T layout. In the conventional 6T SRAM, the sensitive NMOS nodes are in the same P-well in the horizontal direction; horizontal upsets can be easily incurred. In contrast, the proposed PNP 6T cell can lower a horizontal MCU rate because the NMOS-centered layout can separate the horizontally adjacent NMOS sensitive nodes with the N-well as shown in Fig. 3.16b.

The proposed layout has the same schematics and the cell area on the 65-nm logic rule basis, so that the proposed design can be implemented only by replacing its cell layout. Note that shared contacts, which are commonly used in an industrial SRAM rule, cannot be applied to the proposed 6T cell layout. This drawback incurs a certain area overhead in the SRAM rule basis design.

We designed and fabricated 1-Mb SRAM test chips consisting of 256-Kb macros of four types (NPN layout with twin well, PNP layout with twin well, NPN layout with triple well, and PNP layout with triple well). Figure 3.17 presents an experimental setup for a neutron-accelerated test. The neutron irradiation experiment was conducted at The Research Center for Nuclear Physics (RCNP), Osaka University. Spallation neutron beam generated by the 400-MeV proton beam irradiates a board under test (BUT) 7892-mm far from a tungsten target, on which three sample chips are placed in a BUT, for 30 h. The neutron flux is normalized to 13 cph/cm2 above 10 MeV at ground level in New York City [7], which incorporates scattering effect [121], attenuation effect [44], and board screening effect [122].

As presented in Fig. 3.18, an MCU SER in the vertical direction is called MCUBL=1 in this paper, and an MCU SER in the horizontal direction is called MCUBL>1. The MCUBL>1 is more important for designers to adopt the interleaving and/or ECC strategy.

Figure 3.19a–d illustrate measured MCU SER in the four data patterns at the supply voltage of 1.2 V. When using the CHB (checkerboard), CS (column stripe), and RS (raw stripe) patterns, the MCUBL>1 in the PNP 6T SRAM can be suppressed by 86–98% compared to the general NPN layout. The proposed PNP layout separates NMOSs from adjacent ones in the horizontal direction, which reduces the MCUBL>1 SER. In the ALL0 (all zero) pattern, the MCUBL>1 even in the general NPN cells is low in nature because the sensitive nodes are not horizontally adjacent in a single bitline. As a result, only 67% improvement is observed in the MCU SER. The proposed PNP layout with the dual-well structure achieves MCUBL>1 SERs of 5.78, 4.58, 9.48, and 4.70 FIT/Mb in the CHB, ALL0, CS and RS patterns and the PNP layout with the triple-well structure achieves MCUBL>1 SERs of 5.78, 4.58, 18.96, and 3.13 FIT/Mb.

2.4 N-P Reversed 6T Cell Layout

To scale CMOS transistors down to a 45-nm process or less, it is important to use compressive and tensile strain engineering for PMOS and NMOS, respectively, thereby increasing the drain current. Particularly, for a PMOS, embedded SiGe (eSiGe) in a source and drain boosts its saturation current (Isatp). The strain engineering is thereby more effective for PMOS (Isatp) than for NMOS (Isatn). Current enhancement using eSiGe strain for the PMOS increases more effective with the process scaling: +30% and +45% in 45-nm and 22-nm processes [123, 124]. Figure 3.20 shows the trend of the saturation current ratio of an NMOS to a PMOS (=Isatn/Isatp) along with a process node [125, 126]. The ratio approaches becomes unity when Isatp is comparable to Isatn at a 22-nm node. As previously described, the SEU cross section of the PMOS is 1/4 of that of the NMOS. Therefore, the conventional 6T cell suffers from the shortcomings of the soft-error vulnerability in the NMOS driver transistors. To cope with this NMOS problems and to leverage the PMOS benefit, we propose using PMOS access and driver transistors instead: an N-P (NMOS-PMOS) reversed structure. Soft-error tolerance is enhanced in the proposed N-P reversed 6T SRAM cell in future 22-nm node or advanced ones.

Figures 3.21a and 3.21b depict a schematic and a layout of the proposed NMOS-PMOS (N-P) reversed 6T SRAM cell, respectively. The 6T cell consists of NMOS load transistors (NL0 and NL1), PMOS driver transistors (PD0 and PD1), and PMOS access transistors (PA0 and PA1). The number of transistors and the poly-gate alignment are similar as the conventional one, although the N- and P-diffusions are swapped. The shared contacts are applicable to both 6T cells.

Therefore, the proposed cell has no area overhead over the conventional one. In a read operation, either bitline (BL or BLN in Fig. 3.21a) is pulled up by a cell current flowing through a PMOS access transistor. Generally, the read current of the proposed cell is degraded because Isatp is smaller than Isatn. However, as presented in Fig. 3.20, the saturation current ratio of Isatn to Isatp becomes smaller in the scaled process. Moreover, the random dopant fluctuation of the PMOS is less than that of the NMOS. Consequently, the cell current of the proposed cell will be larger than that of a conventional cell.

The device structure includes the cell property (width, height, and position of sensitive nodes), a cell-to-cell pitch in a cell array, and data patterns. The cell height, width, and position of the sensitive nodes are modeled as presented in Fig. 3.22. The ratio of the cell height to the cell width is set as 3.5. Each transistor width is doubled from the process node to suppress threshold voltage variation. The diffusion area is derived from the transistor width and the cell height. The clearances between diffusion edged are all equal. The parameters are presented in Table 3.6.

Models of SRAM cell width, height, and positions of sensitive nodes in 22-nm to 130-nm processes using parameters in Table 3.6

Figure 3.23a–d show neutron-induced soft-error simulation results obtained using CHB, ALL0, CS, and RS patterns. Although the critical charge is decreasing, the SBU SER is also decreasing with process scaling thanks to the smaller critical area. The MCU SER exhibits a similar tendency at the 65-nm and less nodes.

The proposed N-P reversed cell has 50% smaller NMOS diffusion area in our definition. Therefore, its SBU and MCU SERs are reduced by 11–51% and 34–70%, respectively, at the 22-nm node. Particularly for the column stripe pattern, the MCU SER is improved by 70% (but the SBU SER is decreased by only 11%) because NMOS diffusions in the conventional cells share the same p-substrate in the vertical direction and vertical MCUs occur easily. However, in the row stripe pattern, the MCU SER improvement is the smallest, 34% (but the SBU SER improvement is the largest, 51%), because the distance from a sensitive NMOS node to another in the conventional cells is the longest among the four patterns.

3 Radiation-Hardened Flip-Flops

Kazutoshi Kobayashi, Kyoto Institute of Technology

Jun Furuta, Kyoto Institute of Technology

Radiation-hardened flip-flops (FFs) are used to mitigate unwanted flips by radiation strikes. Here we first deal with several redundant FFs and then move to non-redundant ones. Finally, we discuss device/process/circuit-level mitigation techniques for nano-scaled CMOS.

3.1 Approaches for Radiation-Hardened Flip-Flops

It is mandatory to prevent soft errors in storage circuits such as SRAMs, latches and flip-flops. Aggressive process scaling relieves soft errors rates per bit. But semiconductor chips are utilized in the fields demanding high reliabilities such as automotive, aerospace and medical devices. It is mandatory to reduce soft error rates as small as possible.

Redundant flip-flops mitigate soft errors to prepare extra circuit elements. Usually, three storage cells are prepared. It is possible to correct an error in a storage cell by majority voting. Since redundant flip-flops have large area, delay and power overhead, non-redundant flip-flops are used to reduce soft error rates by adding extra circuit elements with smaller penalties.

Device and process approaches are also effective to reduce soft error rates. Silicon-On-Insulator (SOI) is one promising candidate. Compared with conventional bulk technologies, SOI is strong against soft errors since Buried-Oxide (BOX) layers prevent charge collections.

Those mitigation techniques are introduced in this section.

3.2 Redundant-Structured Flip-Flops

3.2.1 Triple Modular Redundancy Flip-Flop

Triple Modular Redundancy Flip-Flop (TMR-FF ) mitigates soft errors by majority voting. Three storage elements are prepared. Even if one of them is flipped due to a radiation strike, it outputs the correct value by majority voting. Figure 3.24 depicts a typical TMR-FF which contains tripled latches and a majority voter. If one wishes to build a TMR-FF utilizing cells available out there, the majority voter can be constructed with combinations of conventional logic gates as shown in Fig. 3.25a, or using a PDK (Process Design Kit) that includes a special logic cell typically called MAJ as shown in Fig. 3.25b.

TMR-FF is immune from Single Bit Upsets (SBUs) but can fail against Multi-Cell Upsets (MCUs). If two storage elements are flipped, it outputs an incorrect value. Due to the aggressive process scaling, the probability of MCUs is getting higher and higher [127]. It is caused by the fact that ranges of radiation particles are not scaled, while the sizes of storage elements are scaled. If a particle strikes a storage node, generated charge is shared with neighbors. Parasitic bipolar effects [128] also elevate well potential, which turn on parasitic bipolar transistors. To mitigate MCUs, layout-level techniques are effective.

Figure 3.26 shows an example which interleaves the storage elements in the TMR-FF that must not be flipped at the same time [129]. If two storage elements are flipped, it outputs an incorrect value. Due to the aggressive process scaling, the probability of MCUs is getting higher and higher [127]. It is caused by the fact that ranges of radiation particles are not scaled, while the sizes of storage elements are scaled. If a particle strikes a storage node, generated charge is shared with neighbors. Parasitic bipolar effects [128] also elevate well potential, which turn on parasitic bipolar transistors. To mitigate MCUs, layout-level techniques are effective.

Interleaving storage elements on the TMR-FF that must not be flipped at the same time. a w/o interleaving. b w/interleaving. SL0 and ML0 denote Master Latch 0 and Slave Latch 0 in Fig. 3.24

3.2.2 Dual-Interlocked Storage Cell (DICE) Flip-Flop

DICE stands for Dual-Interlocked storage cell. It is utilized in SoCs for High-Performance Computers (HPCs) [46, 130, 131].

The DICE structure as shown in Fig. 3.27a mitigates soft errors by duplicating latches implemented by the half C-elements and the clocked half C-elements as shown in Fig. 3.27b. The input and output signals of these half C-elements have cross-coupled connections to be automatically recovered from a flip on a single node. On the other hand, redundant FFs such as TMR, BISER [132] and BCDMR [133] mitigates soft errors by majority voting among three storage cells, in which a flipped node is left until the next clock signal is injected to supply an unflipped new value.

Compared with these majority-voter-based structures, the DICE structure is area-efficient since latches are not triplicated but duplicated.

3.2.3 Dual-Modulared-Redundancy Flip-Flop

Several dual-modulared-Redundancy (DMR) FFs are developed to achieve equivalent SEU tolerance to the TMR-FF. The DMR FFs explained here have two latches, a cross-coupled inverter called a keeper and C-elements. Compared with the TMR-FF with triplicated latches and voters, the DMR FFs has less area/power/delay penalties. Built-In Soft Error Resilience (BISER ) FF [132] is developed by Intel and Stanford Univ. It consists of dual-modulated latches, C-elements and weak keepers as shown in Fig. 3.28a. As shown in Fig. 3.28b, the C-element and the weak keeper keep the previous value even when one of duplicated latches is flipped. Therefore, BISER FF eliminates an unexpected flip caused by an SBU.

Bistable Cross-Coupled Dual Modular Redundancy (BCDMR ) FF [133] is a modified version of the BISER FF with the functionality which was developed at Kyoto Univ. and Kyoto Inst. of Tech. It has duplicated C-elements with cross-coupled connection as shown in Fig. 3.29, which protects the slave latches from simultaneous flips caused by an SET in the C-element. In the BISER structure, duplicated slave latches are simultaneously flipped when a particle hits on the C-element and an SET pulse is transferred to the slave latches. The probability to capture the SET pulse is increased according to the clock frequencies. On the other hand, in the duplicated C-elements of the BCDMR structure, the SET pulse is only captured by one of the slave latches. Table 3.7 compares area, delay and power of BISER and BCDMR normalized by a conventional DFF. BCDMR FF has the same area with BISER. At 0.5 V, the delay of BISER FF becomes much slower than BCDMR.

In neutron-accelerated tests, BCDMR FF showed over 100× more error resilience than non-redundant FF at clock frequencies up to 100 MHz thanks to the stability given by the cross-coupled dual C-elements [129], while BISER FF became more susceptible to SET at higher clock frequencies [129].

3.3 Non-redundant-Structured Flip-Flops

In this subsection, several non-redundant-structured FFs which pay less area/power/delay penalties but with less robustness against SEUs compared with redundant ones will be introduced.

3.3.1 Reinforcing Charge Collection Flip-Flop

The Reinforcing Charge Collection (RCC) FF [134] was proposed by Intel with dummy transistors to increase critical charge. Figure 3.30 shows an RCC latch schematic. There are two pairs of dummy CMOS transistors between n1 and n2. They are laid out to minimize victim-to-reinforcing diffusion separation as depicted in Fig. 3.31. The OFF device’s diffusion, referred as victim diffusion, collects charge that can flip the stored value. On the other hand, the ON devices diffusion, reffered as reinforcing diffusion, collects charge that can reinforce the stored value. In RCC, those diffusions are laid out besides the dummy gates DN1 and DN2.

3.3.2 Hysteresis Flip-Flop

Hysteresis Flip-Flop (HY-FF) was developed by Broadcom and Vanderbilt Univ. It is a non-redundant FF with additional weak keepers that restricts unwanted flips by radiation strikes [135]. Figure 3.32 shows a schematic diagram of HY-FF. It is non-redundant but its area penalty is relatively large. It is reported in [136] that its radiation hardness is 5× compared with a non-redundant FF and the area of the pulsed hysteresis latch is 108% of a non-redundant FF.

3.4 Device/Process/Circuit-Level Mitigation Techniques for Nano-Scaled CMOS

For nano-scaled CMOS technologies, MCUs, or multiple upsets of signal nodes in close vicinity caused by a single strike of energetic particles, have become more and more pronounced since ranges of radiation particles such as neutrons or alpha particles are not scaled while signal nodes are becoming closer to each other. MCUs may cancel out mitigation effect by redundancy; If two storage elements out of the two among the three in TMR are flipped, it becomes a fault. In nano-scaled CMOS down to 45 or 28 nm, device or process level mitigation techniques are therefore getting increasing attention. Fully Depleted Silicon-On-Insulator (FD-SOI) is one of promising candidates to supplant conventional bulk technologies to cope with. We introduce two FD-SOI processes which have thin BOX (Buried OXide) layers named 65-nm Silicon On Thin BOX (SOTB) and 28-nm Ultra-Thin Body and BOX (UTBB). We also introduce a non-redundant mitigation technique adequate for an anti-soft-error process such as FD-SOI.

3.4.1 Thin BOX FD-SOI Technologies

FD-SOI suppresses the short-channel effect (SCE) and process variations. Two semiconductor companies in Japan and in France are developing similar FD-SOI technologies with thin BOX layers. Sugii et al. from LEAP (Low-Power Electronics Association & Project), an industry consortium in Japan, have developed a 65 nm Thin BOX FD-SOI technology called SOTB [137, 138].

Figure 3.33 shows a cross section of an SOTB transistor. The thickness of the BOX layer is only 10 nm thick so it is possible to control the threshold voltages through backgate biases. Kamohara et al. developed an SoC integrating a 32 bit microprocessor and SRAMs for Internet of Things (IoTs) [139]. ST Microelectronics is also developing another 28 nm Thin BOX FD-SOI technology named UTBB [140]. The thickness of the BOX layers is 25 nm, which is 2.5× thicker than SOTB’s, and has less controllability of threshold voltages than SOTB. Jaquet et al. have implemented a dual-core ARM A9 processor with 2.66 GHz enabled by forward body biases in the UTBB process [141].

FD-SOI technologies have a distinct advantage in soft error immunity, because the bulk Si substrate is separated from the active SOI (Silicon-On-Insulator) regions. Charges generated in the substrate do not penetrate the BOX oxide. SOI The results obtained by the author’s group in spallation neutron irradiation experiment have shown that a conventional TGFF (Transmission Gate FF) on SOTB has the 16× lower SER than a similar TGFF built-in bulk Si [142]. In addition to that, it has been found out that redundant FFs such as DICE, BCDMR and TMR built-in SOTB have no errors during the neutron irradiation [142].

3.4.2 Mitigation Technique Without Redundancy

In the low-SER technologies such as FD-SOIs, non-redundant circuits are enough to suppress SERs. Thus, redundant circuits may not be required. In such technologies, slight difference in critical charge (Qcrit) makes a lot of difference in threshold energy of particles as qualitatively shown in Fig. 3.34. Thus, layout or circuit-level techniques to increase Qcrit without redundancy are good candidates. Figure 3.35 is a non-redundant FF called Tristate-Inverter FF (TIFF) that replaces the transmission gate between master and slave latches on TGFF with a tristate inverter. The area of TIFF is only 5% larger than a Transfer-Gate FF (TGFF), but the critical charge of TIFF is larger than that of TGFF. TIFF has better SERs than TGFF. We fabricated a test chip by 65 nm bulk and FD-SOI processes. Table 3.8 shows the error probabilities by alpha irradiation of TIFF and TGFF. In bulk, the error probabilities are almost equivalent, while in FD-SOI the probability of TIFF is 0.6× of that of TGFF.

4 Soft-Error-Tolerant Reconfigurable Architecture

Yukio Mitsuyama, Kochi University of Technology

Masanori Hashimoto, Osaka University

Takao Onoye, Osaka University

Hiroyuki Kanbara, Advanced Science, Technology & Management Research Institute of KYOTO

Hiroyuki Ochi, Ritsumeikan University

Kazutoshi Wakabayashi, NEC

Hidetoshi Onodera, Kyoto University

4.1 Soft Errors on Reconfigurable Architecture

Recently, the reliability of reconfigurable devices is drawing attentions, since implementing mission-critical applications with high reliability on reconfigurable devices is highly demanded for saving NRE (Non-Recurring Engineering ) costs. Especially, soft errors are one of serious concerns threatening reliability of mission-critical applications. In reconfigurable devices, the reliability of the configuration memory is often considered to be more critical than that of the computed data in data/pipeline registers, since an SEU in the configuration memory damages the functionality until the configuration data is reloaded again; we will refer to this as a permanent error. In the majority of terrestrial applications, on the other hand, requirements for radiation hardness may not be as stringent as in space applications but can vary to such an extent that a single processor architecture would be hard to accommodate.

Coarse-grained reconfigurable architecture s (CGRA) have been studied to fill the performance gap between FPGA and ASIC by reasonably limiting application domains and programmability. From reliability point of view, CGRA is inherently superior in soft error immunity to FPGA, since the amount of configuration bits is by orders of magnitude smaller than that of FPGA. Several groups have so far reported on CGRAs with reliability consideration [143,144,145].

Thus far, while CGRAs have been extensively discussed (e.g., [146,147,148]), their adoption for commercial use has been limited compared to FPGAs. FPGAs have dominated wide areas of applications that are rather popular despite of their large power dissipation and chip area for two major reasons. For one thing, CGRA is basically composed of an array of ALUs (Arithmetic Logical Units) handling multi-bit operands, and is therefore suitable for data-path implementation but not for efficiently implementing one-bit operations that are often used in flag computation, conditional branching and state machines. RTL (Register Transfer Level ) designers and existing behavioral synthesis tools for ASIC and FPGA commonly synthesize data-path circuits that are controlled by state machines. The incompatibility with state machine implementation is, therefore, a significant problem that has prevented CGRA from being widely used. Another reason is that no CGRAs have been provided so far with the full benefit of IP reuse or the standard ANSI C/C++ source codes available for designs. To overcome this issue, several CGRA architectures compatible with state machine implementation have been proposed [149,150,151]. To expand the application domains of CGRAs, an architecture having high compatibility with design automation tools and high flexibility that allows trade-offs between reliability, performance and cost has been highly demanded.

4.2 Proposed Reconfigurable Architecture

4.2.1 Design Concept

The concept of our reconfigurable architecture, which we call Flexible Reliability Reconfigurable Array (FRRA), is based on the above-mentioned recognition of the requirements for flexible reliability in architecture design and compatibility with behavioral synthesis .

-

(1)

Flexible reliability in architecture design

Reliability requirements depend on the application and operating environment, and hence, there is a growing demand for design scheme that would allow flexible choice of countermeasures to prevent reliability degradation. A reconfigurable device is suitable for a spatial redundancy based soft-error-tolerant design , which is applied in mission-critical applications with area costs. In order to achieve the desired level of reliability in a reconfigurable device, it is useful to be able to subject all its basic elements to trade-off between the sensitivity to soft errors and the chip area.

According to the reliability classifications against soft errors, each basic element of our reconfigurable architecture supports several operation modes. When an application is implemented on our reconfigurable device, the operation modes of each basic element are defined by our design tools, which can consider the trade-off between soft-error resilience and hardware cost.

-

(2)

Compatibility with behavioral synthesis

Compatibility with behavioral synthesis requires architectural supports that help provide a trade-off between latency and resource usage (area). Figure 3.36 demonstrates how a C program can be implemented in one cycle and in two cycles. Our architecture supports not only one-cycle implementation of Fig. 3.36a but also multi-cycle implementation. In the two-cycle implementation of Fig. 3.36b, one coarse-grained processing element including two multiplexers and a register, and fine-grained elements for implementing a state machine are necessary. Dynamic reconfiguration of the processing element is controlled by the state machine, which has two states, S0 and S1. In order to show a simple example of two types of implementations, Fig. 3.36 uses a small C program, and may not have been able to show an obvious trade-off between latency and area. In case of a practical C program, a large trade-off could be obtained. Such a trade-off between latency and area obtained by various implementations enables various types of desirable specifications.

In order to achieve this trade-off, the following elements are required: fine-grained elements to implement state machines, coarse-grained elements to perform various types of data processing with dynamic reconfiguration depending on the state signal, register files to save temporal data, and large memories to store large bulk data. Here, although an embedded CPU could be used to control the state of coarse-grained elements, we took the option of using the fine-grained elements built in the chip for pursuing low-latency state control. With these elements, behavioral synthesis allows designers to explore the solution space and select an implementation that satisfies the requirements of the design.

4.2.2 Architecture Design Overview

The proposed architecture is composed of coarse-grained elements as ALU clusters, fine-grained elements as LUT (Look-Up Table ) clusters, register blocks called as REG clusters, and memory blocks called as MEM clusters, where the basic element is noted as cluster. Figure 3.37 shows an example of cluster array, in which an LUT block is composed of a number of LUT clusters. When an application is mapped on the proposed architecture, data-paths are assigned to ALU clusters. Meanwhile, state machines and one-bit operations are assigned to LUT clusters. The temporal intermediate data across the different states could be stored in REG clusters.

The proposed reconfigurable array architecture has two global signals: context signal and state signal. Both of these global signals are generated by designated LUT clusters. The inter-cluster connections are changed according to the context signal, which switches the mapped application or algorithm. An ALU cluster changes its functionality and data-path/flag operands according to the broadcast state signals, while the inter-cluster interconnection is unchanged. All the inter-cluster routings to provide data to clusters are fixed for all the states, and each ALU cluster selects a few data as operands, depending on the state, from all the data delivered to the ALU cluster. Also, a REG cluster selects input data and changes write address depending on the state signal, since the store of intermediate data depends on the state. On the other hand, the functionalities of LUT and MEM clusters are unchanged by the state signal.

ALU, LUT and REG clusters all have an inner structure with three cells as illustrated in Fig. 3.38, while the execution modules (EM) in the cells are specific to each of them. The EMs for ALU, LUT and REG clusters are ALU, LUT and register file, respectively. On the other hand, an MEM cluster includes a single SRAM macro, and hence the structure inside the cluster is different. Several LUT clusters are organized in a two-dimensional array forming a LUT block. ALU, REG, and MEM clusters and LUT blocks are placed in a two-dimensional array. The details of each cluster are explained in Sect. 3.4.2.3.

4.2.3 Details of Reconfigurable Architecture

As shown in Fig. 3.38, an ALU cluster consists of a reconfigurable cell unit for processing various types of operations, a redundancy control unit (RDU) for flexible reliability, a comparing and voting unit, switches and wires. An RCU is composed of a configuration memory switching matrix (ConfSM) and three cells, each of which contains an execution module (EM), register files for storing configuration bits, and voters. In EM, arithmetic operations including multiplication, logic operation, and shift operation are performed.