Abstract

This paper focuses on designing a sampled-data controller for the master–slave synchronization of chaotic neural networks with discrete and distributed time-varying delays in the presence of a constant input delay. The key feature of this work is the use of a delay-interval decomposition approach in constructing a new Lyapunov functional. Combined with the input delay approach and the linear matrix inequality (LMI) method, this allows the desired sampled-data controller gain to be obtained by solving a set of LMIs in MATLAB. Finally, a numerical example is provided to verify the effectiveness of the results.

1 Introduction

Since Pecora and Carroll proposed the master–slave (drive–response) concept in their pioneering work [1], considerable effort has been devoted to the master–slave synchronization of chaotic neural networks with time delays. In practical applications, time delays are unavoidable when neurons transmit signals, and they can cause complicated dynamics and instability in neural networks. To make the results more general, many articles [2, 3] take time delays into account when analyzing chaotic neural networks. Compared with [2], the authors of [3] considered time-varying delays instead of constant delays and obtained less conservative results.

So far, various control schemes have been applied to synchronize chaotic neural networks, including impulsive control [4], adaptive control [5], state feedback control [6], sampled-data control [7], and pinning control [8].

Among these schemes, sampled-data control has been widely adopted because of its distinctive advantages. A sampled-data controller requires little communication channel capacity and has strong anti-interference capability, so it can accomplish the control task more efficiently. The input delay approach [9] models the sampled-data system as a continuous-time system with a time-varying delay, which makes the discrete-time sampled term easier to handle. In [10], sufficient conditions for exponential synchronization were obtained using Lyapunov stability theory, the input delay approach and the LMI technique. However, that work did not take the signal transmission delay into account. To the best of the authors' knowledge, the master–slave synchronization of neural networks with discrete and distributed time-varying delays via sampled-data control in the presence of a constant input delay has received little attention and remains challenging.

Motivated by the above discussion, this paper studies the master–slave synchronization of neural networks with mixed time-varying delays (discrete and distributed delays) under sampled-data control in the presence of a constant input delay. The desired sampled-data controller is obtained by solving a set of LMIs derived from a Lyapunov functional. The input delay approach is also employed, and a decomposition of the delay interval is introduced to reduce the conservatism of the results. The proposed synchronization scheme is verified by simulation.

Notation: Throughout this paper, \( R^{n} \) and \( R^{m \times n} \) denote the \( n \)-dimensional Euclidean space and the set of all \( m \times n \) real matrices, respectively. For symmetric matrices \( X \) and \( Y \), the notation \( X > Y \) (\( X \ge Y \)) means that \( X - Y \) is positive definite (positive semidefinite). \( I \) and \( 0 \) denote the identity matrix and a zero matrix, respectively. The superscript \( T \) denotes matrix transposition, and \( {\text{diag}}\{ \ldots \} \) denotes a block diagonal matrix. \( \left\| \cdot \right\| \) denotes the Euclidean norm of a vector or the spectral norm of a matrix. For an arbitrary matrix \( B \) and symmetric matrices \( A \) and \( C \), \( \left[ {\begin{array}{*{20}c} A & B \\ * & C \\ \end{array} } \right] \) denotes a symmetric matrix in which \( * \) stands for the block implied by symmetry (here \( B^{T} \)). Matrices whose dimensions are not explicitly stated are assumed to have compatible dimensions.

2 Model and Preliminaries

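Consider the following neural network with mixed delays:

\[ \dot{x}(t) = - Cx(t) + Af(x(t)) + Bf(x(t - \tau (t))) + D\int_{t - \sigma (t)}^{t} f(x(s))\,{\text{d}}s + J \tag{1} \]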

where \( x(t) = [x_{1} (t), x_{2} (t), \ldots, x_{n} (t)]^{T} \in R^{n} \) is the state vector and \( f(x(t)) = [f_{1} (x_{1} (t)), f_{2} (x_{2} (t)), \ldots, f_{n} (x_{n} (t))]^{T} \) is the neuron activation function; \( C = {\text{diag}}\{ c_{1}, c_{2}, \ldots, c_{n} \} \) is a diagonal matrix with positive entries; \( A = (a_{ij} )_{n \times n} \), \( B = (b_{ij} )_{n \times n} \) and \( D = (d_{ij} )_{n \times n} \) are, respectively, the connection weight matrix, the discretely delayed connection weight matrix and the distributively delayed connection weight matrix; \( J \in R^{n} \) is a constant external input; \( \tau (t) \) is the discrete time-varying delay, satisfying \( 0 \le \tau (t) \le \tau \) and \( \dot{\tau }(t) \le u \); and \( \sigma (t) \) is the distributed delay, satisfying \( 0 \le \sigma (t) \le \sigma \). Here \( \tau \), \( u \) and \( \sigma \) are known constants.

For the neuron activation functions, the following assumption is made.

Assumption 1

There exist constants \( L_{i}^{ - } \) and \( L_{i}^{ + } \), \( i = 1, 2, \ldots, n \), such that the activation functions satisfy \( L_{i}^{ - } \le \frac{{f_{i} (\vartheta_{1} ) - f_{i} (\vartheta_{2} )}}{{\vartheta_{1} - \vartheta_{2} }} \le L_{i}^{ + } \) for all \( \vartheta_{1}, \vartheta_{2} \in R \) with \( \vartheta_{1} \ne \vartheta_{2} \).
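Assumption 1 is a sector-type bound on the slopes of the activation functions. As a quick numerical sanity check (not part of the original analysis), the Python sketch below estimates the smallest and largest difference quotients of a scalar function over a grid. For \( \tanh \), the activation used in Section 4, both should lie in \( [0, 1] \), consistent with \( L_{i}^{-} = 0 \) and \( L_{i}^{+} = 1 \). The helper name `slope_bounds` and the grid range are illustrative choices. A finite grid can only suggest the bounds, not prove them.

```python
import numpy as np

def slope_bounds(f, lo=-5.0, hi=5.0, num=401):
    """Smallest and largest difference quotient (f(a) - f(b)) / (a - b)
    over all pairs of distinct grid points in [lo, hi]."""
    pts = np.linspace(lo, hi, num)
    a, b = np.meshgrid(pts, pts)
    distinct = a != b                      # exclude the diagonal a == b
    q = (f(a[distinct]) - f(b[distinct])) / (a[distinct] - b[distinct])
    return float(q.min()), float(q.max())

low, high = slope_bounds(np.tanh)
print(low, high)  # both values lie in [0, 1] for tanh
```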

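In this paper, system (1) is regarded as the master system, and the corresponding slave system is designed as

\[ \dot{y}(t) = - Cy(t) + Af(y(t)) + Bf(y(t - \tau (t))) + D\int_{t - \sigma (t)}^{t} f(y(s))\,{\text{d}}s + J + u(t) \tag{2} \]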

where \( D \), \( A \), \( B \) and \( C \) are matrices as in (1), and \( u(t) \in R^{n} \) is the control input to be designed.

Defining the synchronization error as \( e(t) = y(t) - x(t) \), the error system is

\[ \dot{e}(t) = - Ce(t) + Ag(e(t)) + Bg(e(t - \tau (t))) + D\int_{t - \sigma (t)}^{t} g(e(s))\,{\text{d}}s + u(t) \tag{3} \]

where \( g(e(t)) = f(y(t)) - f(x(t)) \).

In this paper, \( t_{k} \) denotes the updating instant of the zero-order hold (ZOH). The updating signal at instant \( t_{k} \) (the signal successfully transmitted from the sampler to the controller and then to the ZOH) is assumed to have experienced a constant transmission delay \( \eta \). The sampling intervals are assumed to be bounded: \( t_{k + 1} - t_{k} = h_{k} \le h \).

Here \( h \) is the largest sampling interval. Hence, for a constant \( d \ge h + \eta \), we have \( t_{k + 1} - t_{k} + \eta \le h + \eta \le d \).

The main aim of this paper is to synchronize the master system (1) and the slave system (2) by means of the following sampled-data controller:

\[ u(t) = Ke(t_{k} - \eta ),\quad t_{k} \le t < t_{k + 1} \tag{4} \]

where \( K \) is the sampled-data feedback gain matrix to be determined.

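Applying control law (4) to the error system (3) gives

\[ \dot{e}(t) = - Ce(t) + Ag(e(t)) + Bg(e(t - \tau (t))) + D\int_{t - \sigma (t)}^{t} g(e(s))\,{\text{d}}s + Ke(t_{k} - \eta ),\quad t_{k} \le t < t_{k + 1} \tag{5} \]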

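Define \( d(t) = t - t_{k} + \eta \) for \( t_{k} \le t < t_{k + 1} \), so that \( t_{k} - \eta = t - d(t) \) and \( 0 \le d(t) \le d \) (in fact \( \eta \le d(t) < h + \eta \)). Then the error system can be rewritten as

\[ \dot{e}(t) = - Ce(t) + Ag(e(t)) + Bg(e(t - \tau (t))) + D\int_{t - \sigma (t)}^{t} g(e(s))\,{\text{d}}s + Ke(t - d(t)) \tag{6} \]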
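The input delay \( d(t) = t - t_{k} + \eta \) is a sawtooth function. It restarts at \( \eta \) at each updating instant and grows linearly until the next one, so \( \eta \le d(t) < h + \eta \). The Python sketch below evaluates \( d(t) \) for a hypothetical aperiodic sampling sequence with \( h_{k} \le h \). The values \( h = 0.21 \) and \( \eta = 0.01 \) are borrowed from Section 4. The random sampling instants and the helper name `input_delay` are illustrative assumptions.

```python
import numpy as np

def input_delay(t, t_k, eta):
    """d(t) = t - t_k + eta, where t_k is the last updating instant not after t."""
    k = np.searchsorted(t_k, t, side="right") - 1   # index of the last t_k <= t
    if k < 0:
        raise ValueError("t precedes the first updating instant")
    return t - t_k[k] + eta

rng = np.random.default_rng(0)
h, eta = 0.21, 0.01
gaps = rng.uniform(0.05, h, size=50)               # hypothetical intervals h_k <= h
t_k = np.concatenate(([0.0], np.cumsum(gaps)))     # updating instants t_0 = 0 < t_1 < ...
ts = np.linspace(0.0, t_k[-1], 5000, endpoint=False)
d = np.array([input_delay(t, t_k, eta) for t in ts])
print(d.min(), d.max())  # eta <= d(t) < h + eta
```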

Next, we briefly introduce the lemmas used in this paper.

Lemma 1

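(Jensen inequality) [11] For any constant symmetric matrix \( \omega > 0 \), scalars \( \alpha < \beta \), and vector function \( \phi :[\alpha ,\beta ] \to R^{n} \) such that the integrals concerned are well defined, the following inequality holds:

\[ (\beta - \alpha )\int_{\alpha }^{\beta } \phi^{T} (s)\,\omega \,\phi (s)\,{\text{d}}s \ge \left( \int_{\alpha }^{\beta } \phi (s)\,{\text{d}}s \right)^{T} \omega \left( \int_{\alpha }^{\beta } \phi (s)\,{\text{d}}s \right) \]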
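Jensen's inequality, \( (\beta - \alpha)\int_{\alpha}^{\beta} \phi^{T}\omega\phi\,{\text{d}}s \ge (\int_{\alpha}^{\beta} \phi\,{\text{d}}s)^{T}\omega(\int_{\alpha}^{\beta} \phi\,{\text{d}}s) \), can be checked numerically on a discretized integral. The sketch below is an illustration, not part of the original proof. It replaces both integrals by trapezoidal sums. Because trapezoidal weights are nonnegative and sum to \( \beta - \alpha \), the discrete analogue of the inequality holds exactly, so the returned gap should be nonnegative up to rounding and zero for constant \( \phi \). The function name `jensen_gap`, the test matrix \( \omega \) and the test function \( \phi \) are hypothetical.

```python
import numpy as np

def jensen_gap(phi, s, omega):
    """(beta - alpha) * int phi^T omega phi ds - (int phi ds)^T omega (int phi ds),
    with both integrals approximated by the trapezoidal rule on the grid s.
    phi has shape (len(s), n): row i is phi(s[i])."""
    ds = np.diff(s)
    w = np.zeros_like(s)
    w[:-1] += ds / 2.0                      # trapezoidal weights, all >= 0
    w[1:] += ds / 2.0
    quad = np.einsum("ij,jk,ik->i", phi, omega, phi)   # phi(s_i)^T omega phi(s_i)
    lhs = (s[-1] - s[0]) * (w @ quad)
    integral = w @ phi                                  # approximates int phi ds
    rhs = integral @ omega @ integral
    return lhs - rhs

rng = np.random.default_rng(1)
M = rng.standard_normal((3, 3))
omega = M @ M.T + 3.0 * np.eye(3)          # symmetric positive definite
s = np.linspace(-1.0, 2.0, 301)            # alpha = -1, beta = 2
phi = np.stack([np.sin(3.0 * s), s**2, np.exp(-s)], axis=1)
print(jensen_gap(phi, s, omega))           # nonnegative
```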

Lemma 2

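(Extended Wirtinger inequality) [12] For any matrix \( Z > 0 \), if \( \varphi \in W[a,b) \) (absolutely continuous with square-integrable derivative) and \( \varphi (a) = 0 \), then the following inequality holds:

\[ \int_{a}^{b} \varphi^{T} (s)Z\varphi (s)\,{\text{d}}s \le \frac{4(b - a)^{2}}{\pi^{2}}\int_{a}^{b} \dot{\varphi }^{T} (s)Z\dot{\varphi }(s)\,{\text{d}}s \]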
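The extended Wirtinger inequality from [12] bounds \( \int_{a}^{b} \varphi^{T} Z \varphi\,{\text{d}}s \) by \( \frac{4(b-a)^{2}}{\pi^{2}} \int_{a}^{b} \dot{\varphi}^{T} Z \dot{\varphi}\,{\text{d}}s \) when \( \varphi(a) = 0 \). The constant is attained by \( \varphi(s) = \sin\left(\frac{\pi (s-a)}{2(b-a)}\right) \). The Python sketch below evaluates both sides with the trapezoidal rule for user-supplied \( \varphi \) and \( \dot{\varphi} \). The helper names and test functions are illustrative assumptions, and the check is numerical rather than a proof.

```python
import numpy as np

def _trapezoid(y, s):
    """Trapezoidal rule for samples y on the grid s."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(s)) / 2.0)

def wirtinger_sides(phi, dphi, Z, a, b, num=20001):
    """Return (int phi^T Z phi, 4 (b - a)^2 / pi^2 * int dphi^T Z dphi) on [a, b].
    phi and dphi map a grid of shape (num,) to arrays of shape (num, n)."""
    s = np.linspace(a, b, num)
    p, dp = phi(s), dphi(s)
    lhs = _trapezoid(np.einsum("ij,jk,ik->i", p, Z, p), s)
    rhs = 4.0 * (b - a) ** 2 / np.pi**2 * _trapezoid(np.einsum("ij,jk,ik->i", dp, Z, dp), s)
    return lhs, rhs

# A vector function with phi(0) = 0 and a positive definite weight Z.
Z = np.array([[2.0, 0.5], [0.5, 1.0]])
lhs, rhs = wirtinger_sides(lambda s: np.stack([s, np.sin(s)], axis=1),
                           lambda s: np.stack([np.ones_like(s), np.cos(s)], axis=1),
                           Z, 0.0, 2.0)
print(lhs <= rhs)  # True

# Extremal case: both sides agree up to quadrature error.
L = 2.0
e_lhs, e_rhs = wirtinger_sides(lambda s: np.sin(np.pi * s / (2 * L))[:, None],
                               lambda s: (np.pi / (2 * L)) * np.cos(np.pi * s / (2 * L))[:, None],
                               np.eye(1), 0.0, L)
```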

Lemma 3

Let \( Y \in R^{n \times n} \) be a constant symmetric positive definite matrix, let \( d \) be a positive scalar such that \( 0 \le d(t) \le d \), and let \( \dot{y}:[ - d,0] \to R^{n} \) be such that the integral below is well defined. Then the integral term \( - d\int_{t - d}^{t} \dot{y}^{T} (\zeta )Y\dot{y}(\zeta )\,{\text{d}}\zeta \) can be estimated as

3 Main Results

In this section, a synchronization criterion is presented that ensures the slave system (2) is synchronized with the master system (1). First, the delay interval \( [ - (\eta + h),0] \) is divided into three parts: \( [ - \eta ,0] \), \( \left[ { - \left( {\eta + \frac{h}{2}} \right), - \eta } \right] \) and \( \left[ { - \left( {\eta + h} \right), - \left( {\eta + \frac{h}{2}} \right)} \right] \).
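In the sampled-data setting, \( d(t) = t - t_{k} + \eta \ge \eta \), so \( -d(t) \) always lies in \( [-(\eta + h), -\eta] \). The decomposition therefore mainly separates the two halves of the sampling interval, and the first piece \( [-\eta, 0] \) corresponds to the constant transmission delay itself. The small Python helper below is a hypothetical illustration rather than part of the criterion. It reports which piece contains \( -d(t) \) for given \( h \) and \( \eta \).

```python
def delay_piece(d_t, h, eta):
    """Index of the piece of [-(eta + h), 0] that contains -d(t):
    0 -> [-eta, 0], 1 -> [-(eta + h/2), -eta], 2 -> [-(eta + h), -(eta + h/2)].
    Boundary points are assigned to the piece closer to 0."""
    if not 0.0 <= d_t <= eta + h:
        raise ValueError("d(t) must lie in [0, eta + h]")
    if d_t <= eta:
        return 0
    if d_t <= eta + h / 2.0:
        return 1
    return 2

h, eta = 0.21, 0.01
print([delay_piece(v, h, eta) for v in (0.01, 0.05, 0.2)])  # [0, 1, 2]
```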

Next, we introduce the notations used in Theorem 1.

Theorem 1

Given a scalar \( \gamma > 0 \), suppose there exist matrices \( P > 0,\;R_{1} > 0,\;R_{2} > 0,\;R_{3} > 0,\;Z_{1} > 0,\;Z_{2} > 0,\;Z_{3} > 0,\;Z_{4} > 0,\;Z_{5} > 0,\;Z_{6} > 0,\;Q > 0,\;W > 0 \), matrices \( G_{1} ,\;G_{2} ,\;G,\;F \), and diagonal matrices \( M \ge 0,\;V_{1} > 0,\;V_{2} > 0 \) such that

then the slave system (2) is synchronized with the master system (1). Moreover, the sampled-data controller gain is given by \( K = G^{ - 1} F \).
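Once the LMIs of Theorem 1 are solved, the gain is recovered from the decision variables \( G \) and \( F \) as \( K = G^{-1} F \). Numerically, it is usually preferable to solve the linear system \( GK = F \) rather than form \( G^{-1} \) explicitly, as in the Python sketch below. The matrices shown are hypothetical placeholders, not the paper's solution, and \( G \) is assumed to be nonsingular.

```python
import numpy as np

def controller_gain(G, F):
    """Return K = G^{-1} F by solving G K = F, assuming G is nonsingular."""
    return np.linalg.solve(np.asarray(G, dtype=float), np.asarray(F, dtype=float))

# Placeholder decision variables (hypothetical values, not from the paper).
G = np.array([[2.0, 0.5], [0.1, 1.5]])
F = np.array([[-3.0, 0.2], [0.4, -2.0]])
K = controller_gain(G, F)
print(np.allclose(G @ K, F))  # True
```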

Proof

Construct the following discontinuous Lyapunov functional for the error system (6):

\( V_{7} (t) \) can be rewritten as

By Lemma 2, \( V_{7} (t) \ge 0 \). Moreover, \( V_{7} (t) \) vanishes at \( t = t_{k} \). Therefore, \( \lim_{t \to t_{k}^{ - }} V(t) \ge V(t_{k} ) \).

Next, we compute the derivative of \( V(t) \) along the trajectories of system (6):

According to Lemma 1

Consequently, the following inequality holds

According to Lemmas 1 and 3, if \( - d(t) \in \left[ { - (\eta + h), - \left( {\eta + \frac{h}{2}} \right)} \right] \), that is, \( d(t) \in \left[ {\eta + \frac{h}{2},\eta + h} \right] \), then the following inequalities hold:

If \( - d(t) \in \left[ { - \left( {\eta + \frac{h}{2}} \right), - \eta } \right] \), that is, \( d(t) \in \left[ {\eta ,\eta + \frac{h}{2}} \right] \), similar inequalities hold.

Based on the error system (6), the following equations hold for any matrices \( G_{1} \) and \( G_{2} \) of appropriate dimensions:

where we choose \( G_{1} = G \) and \( G_{2} = \gamma G \).

In addition, Assumption 1 implies that, for \( j = 1, 2, \ldots, n \),

where \( e_{j} \) denotes the unit column vector whose \( j \)th entry is 1 and whose other entries are 0. Therefore, the following inequality holds for any diagonal matrices \( V_{1} > 0 \) and \( V_{2} > 0 \) of appropriate dimensions:

Substituting (16) and (18) into \( \dot{V}(t) \) and letting \( K = G^{ - 1} F \), we obtain

where

Thus, if (10) and (11) hold, then \( \dot{V}(t) \le - \zeta \left\| {e(t)} \right\|^{2} \) for a sufficiently small scalar \( \zeta > 0 \).

By Lyapunov stability theory, the slave system (2) is synchronized with the master system (1). This completes the proof.
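Applied to \( g(e) = f(x + e) - f(x) \), Assumption 1 gives, componentwise, \( (g_{j} - L_{j}^{-} e_{j})(g_{j} - L_{j}^{+} e_{j}) \le 0 \). This is the scalar fact that typically underlies quadratic sector inequalities weighted by diagonal matrices such as \( V_{1} \) and \( V_{2} \). The Python sketch below checks this sign condition on random hypothetical samples for \( f = \tanh \) with \( L_{j}^{-} = 0 \) and \( L_{j}^{+} = 1 \). It illustrates the constraint only, not the exact matrix form used in the proof.

```python
import numpy as np

def sector_products(x, e, f, L_minus, L_plus):
    """Componentwise (g - L_minus*e) * (g - L_plus*e) with g = f(x + e) - f(x).
    Assumption 1 implies every entry is <= 0."""
    g = f(x + e) - f(x)
    return (g - L_minus * e) * (g - L_plus * e)

rng = np.random.default_rng(2)
x = rng.standard_normal((1000, 2)) * 3.0     # hypothetical master states
e = rng.standard_normal((1000, 2))           # hypothetical synchronization errors
prod = sector_products(x, e, np.tanh, 0.0, 1.0)
print(prod.max() <= 1e-12)  # True
```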

Remark 1

Theorem 1 provides a new synchronization criterion for the master system (1) and the slave system (2), derived by constructing a novel Lyapunov functional. The sufficient conditions are expressed as LMIs, which can be solved efficiently with the MATLAB LMI Control Toolbox.

Remark 2

Owing to the term \( V_{7} (t) \) and the decomposition of the delay interval, the sawtooth structure of the sampling-induced input delay is fully exploited, which is expected to yield less conservative results than existing ones.

4 Numerical Example

In this simulation, the activation functions are chosen as \( f_{1} (s) = f_{2} (s) = \tanh (s) \), and the parameters of the master system (1) and the slave system (2) are chosen as

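Since the slope of \( \tanh \) lies in \( (0,1] \), Assumption 1 holds with \( L_{i}^{ - } = 0 \) and \( L_{i}^{ + } = 1 \), which gives \( L_{1} = 0 \) and \( L_{2} = 0.5I \).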

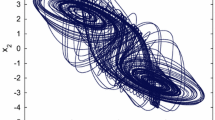

We set \( J = 0 \), the discrete delay \( \tau (t) = \frac{{{\text{e}}^{t} }}{{{\text{e}}^{t} + 1}} \) and the distributed delay \( \sigma (t) = 0.5\sin^{2} (t) \), so that \( \tau = 1 \), \( u = 0.25 \) and \( \sigma = 0.5 \). The initial values of the master and slave systems are \( x(0) = [0.3,\;0.4]^{T} \) and \( y(0) = [ - 0.2,\;0.7]^{T} \), respectively. The chaotic behavior of the master system and of the slave system without control is shown in Figs. 1 and 2, respectively.
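The stated constants follow from the chosen delays. Since \( \tau(t) = {\text{e}}^{t}/({\text{e}}^{t} + 1) \) is the logistic function, \( \tau(t) < 1 \) and \( \dot{\tau}(t) = \tau(t)(1 - \tau(t)) \le 0.25 \). Likewise, \( 0 \le \sigma(t) = 0.5\sin^{2} t \le 0.5 \). The Python sketch below confirms these bounds on a time grid. The grid and the helper name `example_delays` are illustrative choices.

```python
import numpy as np

def example_delays(t):
    """Delays of the numerical example and the derivative of tau(t), for t >= 0."""
    tau = 1.0 / (1.0 + np.exp(-t))      # equals e^t / (e^t + 1), no overflow for large positive t
    tau_dot = tau * (1.0 - tau)         # derivative of the logistic function
    sigma = 0.5 * np.sin(t) ** 2
    return tau, tau_dot, sigma

t = np.linspace(0.0, 50.0, 200001)
tau, tau_dot, sigma = example_delays(t)
print(tau.max() <= 1.0, tau_dot.max() <= 0.25, sigma.max() <= 0.5)  # True True True
```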

Fig. 1 Chaotic behavior of the master system (1)

Using Theorem 1, Table 1 lists the maximum sampling interval \( h \) for different values of the transmission delay \( \eta \). From Table 1, the largest sampling interval is \( h = 0.21 \) when \( \eta = 0.01 \). Solving LMIs (10) and (11) gives the controller gain

With this controller gain, the responses of the control input (4) and the synchronization error (6) are shown in Figs. 3 and 4, respectively. The simulation results show that the designed controller achieves master–slave synchronization.
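The mechanics of the closed loop can be reproduced with a simple forward-Euler simulation. It stores past states so that the discrete delay \( \tau(t) \), the distributed delay \( \sigma(t) \) and the held sample \( e(t_{k} - \eta) \) can all be evaluated. The Python sketch below is not the authors' simulation. The weights \( A \), \( B \), \( D \) and the gain \( K = -5I \) are hypothetical placeholders rather than the example's values, and with these small weights the uncontrolled systems are not chaotic. Sampling is assumed periodic with \( h = 0.1 \). The values of \( \eta = 0.01 \), the delays and the initial values follow Section 4. The sketch compares the final synchronization error with and without control.

```python
import numpy as np

def simulate_sync(C, A, B, D, K, x0, y0, h=0.1, eta=0.01, T=8.0, dt=1e-3):
    """Forward-Euler sketch of master (1), slave (2) and u(t) = K e(t_k - eta).

    Assumptions: f = tanh, J = 0, constant initial functions on t <= 0,
    periodic sampling t_k = k*h, and delays rounded to the time grid.
    Returns the time grid and the error e(t) = y(t) - x(t) for t in [0, T].
    """
    f = np.tanh
    n_steps = int(round(T / dt))
    n_hist = int(round(1.5 / dt))        # history covering tau(t) < 1, sigma(t) <= 0.5 and eta
    per_sample = int(round(h / dt))      # grid steps per sampling interval
    lag_eta = int(round(eta / dt))       # grid steps of transmission delay
    x = np.empty((n_hist + n_steps + 1, len(x0)))
    y = np.empty_like(x)
    x[: n_hist + 1] = x0                 # constant initial functions
    y[: n_hist + 1] = y0

    def rhs(z, p, t):
        """Right-hand side of (1) (without input) at grid index p, time t."""
        lag_tau = int(round(1.0 / (1.0 + np.exp(-t)) / dt))   # tau(t) = e^t / (e^t + 1)
        m = int(round(0.5 * np.sin(t) ** 2 / dt))            # sigma(t) = 0.5 sin^2(t)
        dist = dt * f(z[p - m:p]).sum(axis=0)                # Riemann sum of the distributed term
        return -C @ z[p] + A @ f(z[p]) + B @ f(z[p - lag_tau]) + D @ dist

    for i in range(n_steps):
        p, t = n_hist + i, i * dt
        q = n_hist + (i // per_sample) * per_sample - lag_eta   # grid index of t_k - eta
        u = K @ (y[q] - x[q])                                   # held control signal
        x[p + 1] = x[p] + dt * rhs(x, p, t)
        y[p + 1] = y[p] + dt * (rhs(y, p, t) + u)
    return np.arange(n_steps + 1) * dt, y[n_hist:] - x[n_hist:]

C = np.eye(2)
A = np.array([[0.2, -0.1], [0.1, 0.2]])     # hypothetical weights
B = np.array([[-0.1, 0.1], [0.1, -0.1]])
D = 0.1 * np.eye(2)
x0, y0 = np.array([0.3, 0.4]), np.array([-0.2, 0.7])
t, e_ctrl = simulate_sync(C, A, B, D, -5.0 * np.eye(2), x0, y0)
_, e_free = simulate_sync(C, A, B, D, np.zeros((2, 2)), x0, y0)
print(np.linalg.norm(e_ctrl[-1]), np.linalg.norm(e_free[-1]))
```

Storing the full history keeps the indexing of \( \tau(t) \), \( \sigma(t) \) and \( t_{k} - \eta \) explicit. A production simulation would more likely use a dedicated delay-differential-equation solver.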

5 Conclusions

In this paper, the master–slave synchronization problem has been studied for chaotic neural networks with discrete and distributed time-varying delays in the presence of a constant input delay. Based on Lyapunov stability theory, the input delay method and the decomposition of the delay interval, a new Lyapunov functional has been constructed and less conservative results have been derived. Finally, numerical simulations have demonstrated the advantages and effectiveness of the obtained results.

References

[1] Pecora LM, Carroll TL (1990) Synchronization in chaotic systems. Phys Rev Lett 64:821–824

[2] Yang XS, Zhu QX, Huang CX (2011) Lag stochastic synchronization of chaotic mixed time-delayed neural networks with uncertain parameters or perturbations. Neurocomputing 74:1617–1625

[3] Wu ZG, Park JH, Su HY, Chu J (2012) Discontinuous Lyapunov functional approach to synchronization of time-delay neural networks using sampled-data. Nonlinear Dyn 69:2021–2030

[4] Li XD, Rakkiyappan R (2013) Impulsive controller design for exponential synchronization of chaotic neural networks with mixed delays. Commun Nonlinear Sci Numer Simulat 18:1515–1523

[5] Sun YH, Cao JD (2007) Adaptive lag synchronization of unknown chaotic delayed neural networks with noise perturbation. Phys Lett A 364:277–285

[6] He GG, Shrimali MD, Aihara K (2007) Partial state feedback control of chaotic neural network and its application. Phys Lett A 371:228–233

[7] Wu ZG, Park JH, Su HY, Chu J (2012) Discontinuous Lyapunov functional approach to synchronization of time-delay neural networks using sampled-data. Nonlinear Dyn 69:2021–2030

[8] Li LL, Cao JD (2011) Cluster synchronization in an array of coupled stochastic delayed neural networks via pinning control. Neurocomputing 74:846–856

[9] Fridman E, Seuret A, Richard JP (2004) Robust sampled-data stabilization of linear systems: an input delay approach. Automatica 40:1441–1446

[10] Zhang CK, He Y, Wu M (2010) Exponential synchronization of neural networks with time-varying mixed delays and sampled-data. Neurocomputing 74:265–273

[11] Gu K, Kharitonov VL, Chen J (2003) Stability of time-delay systems. Birkhäuser, Boston

[12] Liu K, Suplin V, Fridman E (2011) Stability of linear systems with general sawtooth delay. IMA J Math Control Inf 27:419–436


Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper

Nie, RX., Sun, ZY., Wang, JA., Lu, Y. (2014). Sampled-Data Synchronization for Chaotic Neural Networks with Mixed Delays. In: Wen, Z., Li, T. (eds) Practical Applications of Intelligent Systems. Advances in Intelligent Systems and Computing, vol 279. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-54927-4_68

DOI: https://doi.org/10.1007/978-3-642-54927-4_68

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-54926-7

Online ISBN: 978-3-642-54927-4

eBook Packages: Engineering, Engineering (R0)