Abstract

Present-day systems for human movement analysis are not portable, have a limited capture volume and require a trained technician to analyze the data. To extend the use and benefits to non-laboratory settings, the acquisition should be robust, reliable and easy to perform. Ideally, data collection and analysis would be automated to the point where no trained technicians are required. Over the last decade several inertial sensor approaches have been put forward that address most of the aforementioned limitations. Advancements in micro-electro-mechanical sensors (MEMS) and orientation estimation algorithms are boosting the use of inertial sensors in motion capture applications. These sensors are currently the most promising option for unrestricted human motion analysis. In this chapter we describe the types of sensors used and give an overview of their use in the biomechanics community (Sect. 16.1), and we provide the basic mathematical background for readers who want to refresh the fundamentals of kinematics (case studies and appendix). The limitations of the individual sensors can be dealt with by exploiting the redundant information available to obtain orientation estimates. There are several different methods to derive orientation from sensor information; we highlight the main groups of algorithms and the various ways in which they use the available data (Sect. 16.3). The chapter furthermore contains two hands-on examples: deriving orientation (case study 1) and extracting joint angles (case study 2, Sect. 16.4).


1 Introduction

Human movement analysis can be defined as the interdisciplinary field that describes, analyzes, and assesses human movement. Movement analysis has become a valuable tool in clinical practice, e.g. instrumented gait analysis for children with cerebral palsy (see also Chap. 2). The data obtained from measuring and analyzing limb movement enable clinicians to assess impaired function and prescribe surgical or rehabilitation interventions. Despite the recognized potential of human movement analysis for the diagnosis of neurological movement disorders, rehabilitation from trauma, and performance enhancement, its use remains restricted to a limited number of specialized medical or rehabilitation centers. This limited uptake is mainly due to the limitations of current motion capture equipment.

The currently available motion capture systems can be divided into vision based and sensor based systems (Zhou and Hu 2007). Vision based systems can be further divided into marker based and markerless systems. The former are considered the gold standard, providing the most accurate measurements. However, on top of the high financial investment required, these systems have additional limitations. Present-day marker based systems are not portable, have a limited capture volume and require a trained technician to analyze the data. The need for a technician stems from the pre-calibration procedure required to convert the marker data into a model representing the subject.

Markerless vision based systems, such as the Kinect, are less costly but still suffer from occlusion and illumination problems and a limited capture volume. Furthermore, the repeatability of their measurements is often limited. Traditional sensor based systems (e.g. acoustic or magnetic motion capture systems) also have a restricted capture volume and are sensitive to environmental conditions such as illumination and air flow (depending on the type of sensor used).

To extend the use and benefits of human motion analysis to non-laboratory settings, the acquisition should be robust, reliable and easy to perform. Cluttered scenes, changing environmental conditions and unrestricted capture volumes are common outside of the laboratory. Ideally, data collection and analysis would be automated to the point where no trained technicians are required. Over the last decade several inertial sensor approaches have been put forward that address most of the aforementioned limitations. Advancements in micro-electro-mechanical sensors (MEMS) and orientation estimation algorithms are boosting the use of inertial sensors in motion capture applications.

Use of magnetic and inertial measurement units (MIMU) is growing in ambulatory human movement analysis. A MIMU consists of a variety of sensors, generally three accelerometers, three gyroscopes and three magnetometers. Fusion of the data from these sensors provides the orientation of the MIMU and therefore of the segment to which it is attached. The popularity of MIMU stems from their low cost, light weight and sourceless orientation: MIMU obtain a reference orientation from the earth's gravitational field and magnetic north. Therefore, MIMU do not need a fixed spatial reference in the lab (usually defined at a force plate center or corner). MIMU are starting to demonstrate their potential in motion analysis applications in robotics, rehabilitation and clinical settings. In addition to being less obtrusive and relatively inexpensive, the main advantage of MIMU is that they are not restricted to a defined capture volume and are relatively easy to use.

The objective of this chapter is to provide an outline of the potential of MIMU in human movement analysis (see Fig. 16.1). To accomplish this objective, the chapter is organized in four main sections. Section 16.1 provides a short overview of inertial sensing approaches used to obtain orientation. This overview is by no means a complete review of the literature on orientation estimation using inertial sensors, but rather an overview of the sensor alternatives that preceded the current popular approach. In Sect. 16.2 you can refresh your knowledge of 3D kinematics and mathematics; the reader is assumed to have prior knowledge in this area, and references are provided for those in search of a more complete introduction to 3D kinematics. In Sect. 16.3 an introduction to orientation estimation algorithms is provided, including a first case study, which addresses extracting orientation from inertial and magnetic sensor data. Section 16.4 is dedicated to a case study on human movement analysis with MIMU: a theoretical basis is provided, followed by a practical example, the estimation of 3D knee joint angles during overground walking. This last case study draws on all the information provided earlier in this chapter and can be used as a guide to perform human movement analysis outside of a specialized laboratory.

Flow diagram of human motion analysis with inertial sensors. When the patient enters the lab, he/she is equipped with inertial sensors (one on each body segment adjacent to the joint under investigation). A two-phase calibration procedure (static and dynamic) precedes the actual data collection and analysis. The last step is to interpret the data.

A summary in layman's terms is provided in text boxes before each technical section in an attempt to improve readability for those lacking a strong mathematical background.

2 Inertial Sensing Approaches

Currently three different sensors are often combined to obtain more accurate and robust orientation estimates: the strengths of accelerometers, gyroscopes, and magnetometers are combined in an attempt to address their individual weaknesses. In this section the types of sensors are described, followed by an overview of their use in the biomechanics community. A case study estimating orientation from accelerometer and magnetometer data concludes this section.

2.1 Type of Sensors

Accelerometers measure linear accelerations, originating either from the earth's gravitational field or from inertial movement. Integrating the acceleration signal over time yields the momentary velocity of the point to which the device is attached, and a second integration yields the spatial displacement of that point, potentially providing an alternative to the measurements of a more expensive position measurement system. Under static and quasi-static conditions the accelerometer can be used as an inclinometer. However, under more dynamic conditions it becomes very difficult, if not impossible, to accurately decompose the signal into its inertial and gravitational components.
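To make the integration problem concrete, the sketch below double-integrates a hypothetical constant acceleration offset of the kind that remains when gravity is not removed perfectly. The sampling rate and offset value are assumptions chosen for illustration; the point is that a constant offset b produces a velocity error b·t and a position error ½·b·t², so displacement estimates degrade quadratically with time.

```python
def integrate_twice(accel, dt):
    """Integrate an acceleration series (m/s^2) twice with the trapezoidal rule.

    Returns (velocity, position) lists that both start at zero.
    """
    vel = [0.0]
    pos = [0.0]
    for i in range(1, len(accel)):
        vel.append(vel[-1] + 0.5 * (accel[i - 1] + accel[i]) * dt)
        pos.append(pos[-1] + 0.5 * (vel[i - 1] + vel[i]) * dt)
    return vel, pos


# Hypothetical scenario: the sensor is at rest, but a residual offset of
# 0.05 m/s^2 remains after gravity removal (roughly a 0.3 degree tilt error).
dt = 0.01        # s, assumed 100 Hz sampling
bias = 0.05      # m/s^2, assumed residual offset
n = 1001         # samples, i.e. 10 s of data
vel, pos = integrate_twice([bias] * n, dt)
print(f"velocity error after 10 s: {vel[-1]:.3f} m/s")  # 0.500 m/s
print(f"position error after 10 s: {pos[-1]:.3f} m")    # 2.500 m
```

After only 10 s the apparent displacement of a sensor that never moved is 2.5 m, which illustrates why pure double integration is restricted to very short intervals.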

Gyroscopes measure angular velocity. Integrating the angular velocity provides the change in angle over time; a tri-axial gyroscope can thus, given initial conditions, track changes in orientation. However, the integrated gyroscope signal is prone to unbounded drift, which limits its use over time. This drift arises because small, temperature-related offsets (spikes) inherent in the gyroscope signal are integrated along with the actual angular velocity. Over time, the integration of these offsets causes the estimated angle to drift further and further away from the actual tilt angle. This drift error is strongly affected by temperature, and much less by velocity or acceleration; gyroscopes can thus be applied in highly dynamic conditions, but only for short periods of time.
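The drift mechanism can be illustrated in the same way. The sketch below integrates the output of a hypothetical gyroscope that is not rotating but reports a small constant offset. The offset value and sampling rate are assumptions, and a real temperature-dependent offset would vary over time, but the mechanism is the same: once integrated, the error accumulates linearly with time.

```python
import math


def integrate_gyro(rates, dt, initial_angle=0.0):
    """Integrate angular velocity samples (rad/s) into an angle (rad).

    Uses simple rectangular (Euler) integration and returns one angle per
    step, including the initial angle.
    """
    angles = [initial_angle]
    for w in rates:
        angles.append(angles[-1] + w * dt)
    return angles


# Hypothetical scenario: the sensor does not rotate, but the gyroscope output
# carries a small offset of 0.002 rad/s (about 0.11 deg/s).
dt = 0.01          # s, assumed 100 Hz sampling
offset = 0.002     # rad/s, assumed offset
n = 6000           # samples, i.e. 60 s of data
angles = integrate_gyro([0.0 + offset] * n, dt)
print(f"apparent rotation after 60 s: {math.degrees(angles[-1]):.2f} deg")  # 6.88 deg
```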

Magnetometers measure the local magnetic field and, in the absence of magnetic disturbances such as nearby ferromagnetic materials, therefore indicate the direction of magnetic north. Magnetometers are often combined with accelerometers, the former providing the heading of the coordinate system and the latter the vertical.

2.2 Historical Overview

Inertial technology was introduced in biomechanics for impact analysis in the 1960s and 1970s. The first studies were done with uni-axial accelerometers, but configurations with three, six, and nine accelerometers were soon considered (Morris 1973). The initial results indicated severe restrictions on the duration of the measurements. More recently, Giansanti et al. investigated the feasibility of reconstructing position and orientation (pose) data from configurations containing six and nine accelerometers, respectively. They concluded that neither configuration was suitable for body segment pose estimation (Giansanti et al. 2003).

In the following decade inertial sensors made their way into motion analysis, in particular into the clinical assessment of gait. Accelerometers were still the preferred sensor type. Willemsen et al. (1991) performed an error and sensitivity analysis to examine the applicability of accelerometers to gait analysis. They concluded that the model assumptions and the limitations of the sensor-to-body attachment were the main sources of error. The model used was a planar (sagittal plane) lower extremity model consisting of rigid links coupled by ideal mechanical joints (e.g. a hinge joint representing the knee). Willemsen et al. (1990) used this two-dimensional model to avoid integration, and thus the troublesome integration drift. They placed four uni-axial accelerometers, organized in two pairs, on each segment. This method was deemed acceptable for slow movements, but considerable errors were reported at higher frequencies (faster movements). Still without additional sensors, Luinge and Veltink applied a Kalman filter (more information on Kalman filters is provided later) to the accelerometer data to improve the orientation estimate (Luinge and Veltink 2004). They estimated the contributions of gravity and of inertial acceleration to the measured acceleration and used these estimates in their subsequent calculations to derive orientation. Previously, low-pass filters (which only let through the low-frequency part of the signal, in this case gravity) were used to remove as much as possible of the unwanted inertial acceleration from the accelerometer data. The filter designed by Luinge and Veltink outperformed these low-pass filters, especially under more dynamic conditions, and may be one of the reasons for the popularity of Kalman filters in current orientation estimation algorithms.
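As an illustration of the low-pass approach that preceded the Kalman filter, the sketch below applies a first-order low-pass filter to a hypothetical vertical accelerometer signal made of gravity plus a 2 Hz movement component. The cutoff frequency and the signal are assumptions, and the sketch is not the filter of Luinge and Veltink. The low-pass output serves as the gravity estimate and the remainder as the inertial acceleration; any movement component close to or below the cutoff would leak into the gravity estimate, which is the weakness mentioned above.

```python
import math


def low_pass(samples, dt, cutoff_hz):
    """First-order IIR low-pass filter (exponential smoothing).

    The smoothing factor alpha is derived from the cutoff frequency of the
    equivalent RC filter.
    """
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out = [samples[0]]
    for x in samples[1:]:
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


# Hypothetical vertical accelerometer signal: gravity plus a 2 Hz movement
# component of 2 m/s^2 amplitude, sampled at 100 Hz for 10 s.
dt = 0.01
g = 9.81
t = [i * dt for i in range(1000)]
acc = [g + 2.0 * math.sin(2.0 * math.pi * 2.0 * ti) for ti in t]

gravity_est = low_pass(acc, dt, cutoff_hz=0.3)              # assumed 0.3 Hz cutoff
inertial_est = [a - ge for a, ge in zip(acc, gravity_est)]  # what remains is "movement"
```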

By the start of this century, both the cost and size of micro-electro-mechanical sensors (MEMS) had dropped considerably. This led to a surge in their application in biomechanics and in research in general, and allowed for novel methods in orientation estimation. In particular, it allowed researchers to combine various sensors and thus exploit their individual strengths. Initially accelerometers and gyroscopes were combined (Williamson and Andrews 2001), and later magnetometers were added (Bachmann 2004). Currently the most popular fusion method is based on a Kalman filter in which the information from all three sensors is taken into account. Accelerometers and magnetometers combined act as an electronic 3D compass. This information can be used to provide the initial condition and to correct the drift error present in the gyroscope estimate. The gyroscopes, in turn, are used to smooth the estimate, which is especially valuable under dynamic conditions. More information on fusion algorithms is provided in Sect. 16.3. Despite the improvements realized by sensor fusion, there is still room and need for improvement. Additional sensors (GPS, Kinect, magnetic sources and sensors) and anatomical constraints (Luinge et al. 2007) are some of the approaches that have been put forward as potential solutions. Most efforts, however, are directed at improving the fusion and filtering algorithms.
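The principle of using the accelerometer/magnetometer compass to bound the gyroscope drift can be sketched with a one-dimensional complementary filter. Note that this is a simpler technique swapped in for illustration, not the Kalman filter discussed in the text; the time constant tau and the test signals are assumptions.

```python
def complementary_filter(gyro_rates, ref_angles, dt, tau=1.0):
    """1D complementary filter fusing a gyroscope rate with an absolute angle reference.

    The integrated gyroscope path is effectively high-pass filtered and the
    reference (e.g. accelerometer/magnetometer angle) low-pass filtered, both
    with time constant tau (s).
    """
    k = tau / (tau + dt)
    angle = ref_angles[0]
    fused = [angle]
    for w, ref in zip(gyro_rates[1:], ref_angles[1:]):
        angle = k * (angle + w * dt) + (1.0 - k) * ref
        fused.append(angle)
    return fused


# Hypothetical static trial: true angle 0.5 rad, gyroscope offset 0.01 rad/s,
# noise-free compass reference, 60 s at 100 Hz.
dt, n = 0.01, 6000
gyro = [0.01] * n
ref = [0.5] * n
fused = complementary_filter(gyro, ref, dt)
gyro_only = 0.5 + sum(w * dt for w in gyro[1:])   # pure integration for comparison
print(f"gyroscope only: {gyro_only:.3f} rad, fused: {fused[-1]:.3f} rad")
```

In this hypothetical trial the gyroscope-only angle drifts by about 0.6 rad over 60 s, whereas the fused estimate settles at the reference angle plus offset·tau (here 0.01 rad). That remaining constant error is one motivation for filters that estimate the gyroscope bias explicitly, such as the Kalman filter.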

Before starting to work with MIMU, an introduction to the algebra related to human movement analysis is given in the appendix. Readers already familiar with this material can proceed to the first practical example at the end of this section. For those in need of more basic or in-depth information, we refer to the following publications (Winter 2004; Vaughan et al. 1999).

2.3 Case Study: Electronic Compass by Fusion of Accelerometer and Magnetometer Data

Kinematic measurement technology allows spatial segment movement to be measured. The type and format of the data obtained depend both on the movement under investigation and on the technology used to record this movement. The type of sensors used and the way in which the information from these sensors is exploited determine the accuracy, reliability, and potential field of application.

This case study consists of determining three unit vectors that are mutually perpendicular (for three vectors A, B, C: A to B, A to C, and B to C). A vector represents the direction and magnitude of the quantity it describes (e.g. gravity, voltage); a unit vector is a vector with magnitude 1, obtained by normalizing a vector, i.e. dividing it by its magnitude to take out the effect of magnitude. These three unit vectors together form a coordinate system from which we can extract orientation. We will use sensor data to obtain two of the three desired vectors, and a mathematical operation (the cross product) to obtain the third.

In the absence of motion it is assumed that the only acceleration measured by the accelerometers is gravity. Accelerometer data can thus be used to obtain a reference (\( \vec{Y} \)) of the global vertical axis, the gravity vector (Kemp et al. 1998). In the absence of ferromagnetic perturbations, we can use a similar construct to obtain a second reference vector (\( \vec{H} \)) from the magnetometer data; this vector points towards magnetic north but, owing to the inclination of the geomagnetic field, is generally not horizontal. Since both gravity and the geomagnetic field are earth bound, we are obtaining sensor orientation with respect to the global or earth reference frame. Data from the accelerometers and magnetometers have to be normalized to obtain unit vectors. Taking the cross product of the unit vectors \( \vec{H} \) and \( \vec{Y} \) gives a third vector (\( \vec{Z} \)), normal to both \( \vec{H} \) and \( \vec{Y} \); because \( \vec{H} \) and \( \vec{Y} \) are not perpendicular, \( \vec{Z} \) has to be normalized again. Consecutive cross products ensure that the obtained system is orthogonal: we obtain \( \vec{X} \) by taking the cross product of \( \vec{Y} \) and \( \vec{Z} \), which makes \( \vec{X} \), \( \vec{Y} \), \( \vec{Z} \) a right-handed set. The obtained vectors can be organized in matrix format to obtain the rotation matrix (see appendix for more information on matrices and vectors). From this matrix we can then extract the Euler angles using the X–Y′–Z″ rotation sequence (see appendix: How to extract rotation angles using Euler convention). A pseudo-code version and numerical example are provided to further clarify this process. Pseudo-code is a simple way to describe the sequence of steps needed to achieve a given goal; the term comes from computer programming, where “pseudo” indicates that the code is not written in any programming language but as a plain sequence of steps.

Solving this for a numerical example gives us:

- Get raw data
- The TechMCS (Technaid, S.L.) provides the data of the individual sensors in the following units: accelerometer data in m/s², gyroscope data in rad/s, magnetometer data in µT, and temperature in degrees Celsius.

Sensor | X | Y | Z
Accelerometer (m/s²) | 9.66E+00 | 1.67E+00 | 3.48E−01
Gyroscope (rad/s) | −1.10E−02 | 6.73E−03 | 1.55E−03
Magnetometer (µT) | −3.18E+01 | −7.91E+00 | −1.83E+01

Temperature: 3.40E+01 °C
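The construction described above can be sketched in a few lines of Python and applied to the static TechMCS sample in the table. The sketch assumes that the accelerometer reports the reaction to gravity (pointing upwards at rest) and stores the resulting global axes as the rows of the matrix; both conventions are assumptions that must be matched to the sensor documentation. The printed values are simply what this sketch computes, not results reported in the chapter.

```python
import math


def normalize(v):
    """Return v divided by its Euclidean norm."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def cross(a, b):
    """Cross product a x b of two 3D vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def electronic_compass(acc, mag):
    """Build an earth-fixed frame from one static accelerometer/magnetometer sample.

    Returns a 3x3 matrix whose rows are the global X (horizontal, towards
    magnetic north), Y (vertical) and Z axes expressed in sensor coordinates.
    """
    y = normalize(acc)               # gravity reference (vertical axis)
    h = normalize(mag)               # magnetic field, generally not perpendicular to y
    z = normalize(cross(h, y))       # horizontal axis normal to both h and y
    x = cross(y, z)                  # unit length already: y and z are orthonormal
    return [x, y, z]


# Static sample from the TechMCS table
acc = [9.66, 1.67, 0.348]        # m/s^2
mag = [-31.8, -7.91, -18.3]      # uT
R = electronic_compass(acc, mag)
for name, row in zip("XYZ", R):
    print(name, ["%.3f" % c for c in row])
```

\( \vec{X} \) ends up along the horizontal component of the magnetic field, because \( \vec{Y} \times (\vec{H} \times \vec{Y}) = \vec{H} - (\vec{Y} \cdot \vec{H})\vec{Y} \) removes the vertical part of \( \vec{H} \).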

The obtained vectors can be organized in matrix format; from this rotation matrix we can then extract the Euler angles (see Sect. 16.2).
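For the X–Y′–Z″ sequence mentioned above, a rotation matrix can be decomposed as sketched below. The sketch assumes R = Rx(α)·Ry(β)·Rz(γ), i.e. rotations about the moving axes applied from left to right, which gives R[0][2] = sin β, R[1][2] = −sin α cos β, R[2][2] = cos α cos β, R[0][1] = −cos β sin γ and R[0][0] = cos β cos γ. If the matrix is built with the opposite convention (e.g. its transpose), the formulas change accordingly.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def euler_xyz(R):
    """Angles (alpha, beta, gamma) such that R = Rx(alpha) Ry(beta) Rz(gamma).

    Intrinsic X-Y'-Z'' sequence; beta lies in [-pi/2, pi/2] and the
    decomposition is singular (gimbal lock) when cos(beta) is close to 0.
    """
    beta = math.asin(max(-1.0, min(1.0, R[0][2])))
    alpha = math.atan2(-R[1][2], R[2][2])
    gamma = math.atan2(-R[0][1], R[0][0])
    return alpha, beta, gamma


# Round trip with arbitrary angles (rad)
R = matmul(matmul(rot_x(0.3), rot_y(-0.2)), rot_z(0.5))
print([round(a, 6) for a in euler_xyz(R)])  # [0.3, -0.2, 0.5]
```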

As mentioned earlier, the method explained above is only valid in static or quasi-static situations. In motion trials, such as gait analysis, we can no longer assume that the acceleration due to movement is negligible compared to gravity. The accelerometer can therefore no longer be used as a standalone inclinometer (providing an attitude reference), and a more elaborate method is needed to obtain orientation with respect to the global reference system.
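Whether a given sample is quasi-static enough for the compass method can be checked heuristically, for instance by requiring the accelerometer norm to be close to gravity and the angular velocity to be small. The value of g and both tolerances below are illustrative assumptions, not values from the chapter.

```python
import math

G = 9.81  # m/s^2, assumed local gravity magnitude


def norm(v):
    """Euclidean norm of a 3D vector."""
    return math.sqrt(sum(c * c for c in v))


def is_quasi_static(acc, gyro, acc_tol=0.3, gyro_tol=0.1):
    """Heuristic test whether one sample can serve as an inclinometer reading.

    acc in m/s^2, gyro in rad/s; acc_tol and gyro_tol are hypothetical thresholds.
    """
    return abs(norm(acc) - G) < acc_tol and norm(gyro) < gyro_tol


# Static sample from the TechMCS table versus a hypothetical gait sample
print(is_quasi_static([9.66, 1.67, 0.348], [-0.011, 0.00673, 0.00155]))  # True
print(is_quasi_static([12.4, 3.1, -1.2], [0.8, -1.5, 0.2]))              # False
```

This check is necessary but not sufficient: a movement whose inertial acceleration happens to leave the accelerometer norm close to g, with little rotation, would pass it.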

3 Orientation Estimation Algorithms

If, as is the case with the MIMU used in our case studies (Technaid 2013), sensor production is not fully automatic, then axis misalignment and cross-axis sensitivity have to be accounted for on top of sensor noise. One of the errors to be expected is drift in the integrated gyroscope signal: as described earlier, small temperature-related offsets are integrated along with the actual angular velocity, so that the estimated angle drifts further and further away from the actual tilt angle over time.

Sensor fusion can be defined as “the conjoint use of various sensors to improve the accuracy of the measurements under situations where one or more sensors of the network are not behaving properly” (Olivares et al. 2011).

These difficulties can be dealt with by exploiting the redundant information available to obtain orientation estimates: orientation can be obtained either by integrating the gyroscope data or by combining the accelerometer and magnetometer data into an electronic compass.

There are several different methods to derive orientation from sensor information; in the following we briefly highlight the main groups of algorithms and the various ways in which they use the available data. A survey of all published methods would be too technical and lengthy for this section. We therefore highlight the two main approaches and briefly explain (one of) the most popular solutions within each approach.

The deterministic approach is based on vector matching. Deriving orientation requires three independent parameters, and two non-parallel vector measurements are sufficient to generate them. This approach was demonstrated earlier when we derived orientation from magnetometer (local magnetic field vector) and accelerometer (gravity vector) data; that example closely corresponds to the TRIAD (tri-axial attitude determination) method. Least-squares alternatives include the QUEST (quaternion estimation) methods, factorized quaternion methods and the q-method (Cheng and Shuster 2005; Shuster 2006). All aforementioned methods are single-frame methods, i.e. they rely only on the data from the current frame to derive orientation in that same frame. The TRIAD method has several limitations: it accepts only two input vectors and is sensitive to the order in which they are presented, and, because it uses data from a single time frame only, it is more sensitive to random error.
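The TRIAD idea can be sketched as follows: a triad is built from the two observations in the sensor frame and from the same two vectors known in the earth frame, and the rotation between the frames follows from the two triads. The reference vectors and the test attitude are hypothetical. The order sensitivity mentioned above is visible in the code: the first vector is matched exactly, while the second only fixes the remaining rotation about the first.

```python
import math


def normalize(v):
    """Return v divided by its Euclidean norm."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def cross(a, b):
    """Cross product a x b of two 3D vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(A):
    return [list(row) for row in zip(*A)]


def triad_frame(v1, v2):
    """Orthonormal triad, stored as matrix columns, built from two non-parallel vectors."""
    t1 = normalize(v1)
    t2 = normalize(cross(v1, v2))
    t3 = cross(t1, t2)
    return transpose([t1, t2, t3])


def triad(b1, b2, r1, r2):
    """TRIAD estimate of R such that r_i is approximately R b_i.

    b1, b2: vectors measured in the sensor frame (e.g. gravity, magnetic field).
    r1, r2: the same vectors expressed in the earth frame.
    b1/r1 is matched exactly; b2/r2 only fixes the rotation about b1.
    """
    Mb = triad_frame(b1, b2)
    Mr = triad_frame(r1, r2)
    return matmul(Mr, transpose(Mb))


# Round trip with a hypothetical attitude built from rotations about z and x
cz, sz = math.cos(0.4), math.sin(0.4)
cx, sx = math.cos(0.2), math.sin(0.2)
R_true = matmul([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]],
                [[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
r_grav, r_mag = [0.0, 0.0, 1.0], [0.6, 0.0, -0.8]   # assumed earth-frame vectors
# Simulated sensor-frame observations: b = R_true^T r
b_grav = [sum(R_true[k][i] * r_grav[k] for k in range(3)) for i in range(3)]
b_mag = [sum(R_true[k][i] * r_mag[k] for k in range(3)) for i in range(3)]
R_est = triad(b_grav, b_mag, r_grav, r_mag)
```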

Sequential approaches, the best known being the Kalman filters, can attain better results by taking advantage of more data and thus reducing the sensitivity to random error. The Kalman filter is a recursive filter: it combines each new measurement with the previous state estimate to improve the estimate of the state of the system and to moderate the noise present in the measurement data. It has long been the most commonly used orientation estimation algorithm (Yun and Bachmann 2006; Sabatini 2006; Roetenberg 2006; Park 2009). The most used version is the extended Kalman filter (EKF), which accounts for a certain degree of non-linearity by linearizing about the current best estimate. If the non-linearity is high, a filter type better suited to cope with non-linearity (e.g. particle filter methods) should be chosen instead. The EKF is also the filter type used to obtain the orientation data in the second case study.

The equations behind the EKF can be separated into two groups: time update or predictor equations, and measurement update or corrector equations (see Fig. 16.2). To be able to remove the drift error present after integrating the gyroscope data, we have to estimate it. Once this drift is removed, the integrated gyroscope signal is closer to the actual rotations and changes in orientation. We furthermore need a reference to identify the drift in the gyroscope signal. Since orientation data are the input to our EKF, this reference is provided by combining the accelerometer and magnetometer data (see case study 1). The filter parameters are adapted to the movement or activity under investigation. The gains are recalculated continuously and indicate the importance (the level of trust) given to each input in the estimate. The initial tuning of the parameters has a strong impact on the performance of the filter, and it is difficult, if not impossible, to find a single configuration that suits both static or slow movements and highly dynamic activities.
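The predictor/corrector structure can be illustrated with a minimal linear Kalman filter for a single tilt angle. This is a simplified stand-in, not the EKF of the TechMCS: the state contains the angle and the gyroscope bias, the time update integrates the bias-corrected gyroscope rate, and the measurement update uses an angle derived from the accelerometer (or compass) as reference. The noise parameters q_angle, q_bias and r_measure are hypothetical tuning values; their ratio determines how much the filter trusts each input.

```python
class TiltKalman:
    """Two-state linear Kalman filter: state = [angle (rad), gyroscope bias (rad/s)].

    Time update: integrate the bias-corrected gyroscope rate.
    Measurement update: correct with an absolute angle reference, e.g. an
    inclination computed from static accelerometer data.
    """

    def __init__(self, q_angle=0.001, q_bias=0.003, r_measure=0.03):
        self.q_angle = q_angle    # process noise of the angle (hypothetical tuning)
        self.q_bias = q_bias      # random-walk noise of the bias (hypothetical tuning)
        self.r = r_measure        # variance of the reference angle (hypothetical tuning)
        self.angle = 0.0
        self.bias = 0.0
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # large initial uncertainty

    def predict(self, rate, dt):
        """Time update (predictor): x <- F x + B u, P <- F P F^T + Q."""
        self.angle += dt * (rate - self.bias)
        (p00, p01), (p10, p11) = self.P
        # F = [[1, -dt], [0, 1]]; Q = diag(q_angle, q_bias) * dt
        self.P = [[p00 - dt * (p01 + p10) + dt * dt * p11 + self.q_angle * dt,
                   p01 - dt * p11],
                  [p10 - dt * p11,
                   p11 + self.q_bias * dt]]

    def correct(self, measured_angle):
        """Measurement update (corrector) with H = [1, 0]."""
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r                      # innovation variance
        k0, k1 = p00 / s, p10 / s             # Kalman gains
        y = measured_angle - self.angle       # innovation
        self.angle += k0 * y
        self.bias += k1 * y
        # P <- (I - K H) P
        self.P = [[(1.0 - k0) * p00, (1.0 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
        return self.angle


# Hypothetical static trial, 20 s at 100 Hz: true angle 0.3 rad, the gyroscope
# reports only its offset of 0.05 rad/s, and the reference angle is noise-free.
kf = TiltKalman()
for _ in range(2000):
    kf.predict(rate=0.05, dt=0.01)
    kf.correct(measured_angle=0.3)
print(f"angle {kf.angle:.3f} rad, estimated bias {kf.bias:.3f} rad/s")
```

In this scenario the estimated bias converges to the gyroscope offset, so the drift is removed rather than merely bounded, which is the role the text assigns to the accelerometer/magnetometer reference.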

Scheme of a Kalman filtering algorithm. Stochastic filters such as the EKF use a model of the sensor measurements (measurement model) to produce an estimate of the system state. Stochastic filtering thus consists of two stages in a loop: a prediction stage of the new state (time update) and an update stage in which this prediction is checked against, and corrected by, the new measurements (measurement update).

The EKF thus balances the strengths and weaknesses of the various sensors to achieve orientation estimates of higher accuracy and reliability. Its most important limitation is that, being an adaptive filter, its behavior depends on the tuning of its parameters and on the motion being analyzed. The data provided and analyzed in this chapter were obtained using the on-board algorithm of the TechMCS MIMU (Technaid, S.L.).

4 Human Movement Analysis with MIMUs

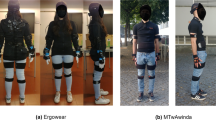

In this section we will start from both raw and orientation data provided by the TechMCS sensors, but you can apply the following to any sensor or system providing this data. The orientation estimation was obtained using the on-board algorithm from the TechMCS MIMU (Technaid, S.L.). The raw sensor data will be used in the sensor-to-body calibration. We will use the sensor orientation data to derive anatomical joint angles. In particular we will look at the right knee joint during normal over-ground walking. Two sensors are placed in an elastic strap and tightened with velcro on the lateral side of the right leg; one on the thigh (1/3 up from the knee joint) and the other on the shank (1/3 down from the knee joint) (see Fig. 16.3). It is important to create a significant, but not uncomfortable, pre-load while attaching the straps to avoid excessive motion artifacts during data collection. The same principles hold for other joints in the human body, as well as other movements. Starting from sensor orientation, we have to obtain anatomical segment orientation. Once we have the orientation of all of the segments involved we can calculate the rotation matrix between two adjacent segments and extract the relevant joint angles.

Two-step calibration procedure to calibrate the MIMU to their respective body segments of the lower limb. The first step (A) consists of maintaining an upright posture with the leg fully extended. In this posture the longitudinal axis of each segment is aligned with the earth gravity vector (vertical axis, in red). The second step (B) determines the second vector (green). We have opted for a planar movement around the hip joint (hip flexion–extension) with a straight leg. During the movement, both shank and thigh move in the same plane with a common flexion–extension axis (dotted green line at the hip). The third calibration axis (black) is then obtained by taking the cross product of the vectors measured in A and B. To correct for any misalignment due to measurement error (e.g. poor execution of the flexion–extension movement), one of the measured axes is subsequently corrected by taking the cross product of the third axis and the other measured axis.

4.1 Sensor-to-Body Calibration

To obtain anatomical segment orientation, a sensor-to-body calibration is required (see Fig. 16.3). The purpose of the calibration is to identify, for each sensor, a constant rotation matrix relating the sensor frame to the anatomical frame of the segment to which it is attached. The ISB recommendations (see appendix) to quantify joint motion are based on systems providing position data (Grood and Suntay 1983; Wu et al. 2002). However, current IMUs and MIMU are unable to provide position data. When only orientations of body segments are available, positions have to be determined by linking segments to each other, using a linked-segment model based on segment orientation and fixed segment lengths (Faber et al. 2010; Van den Noort et al. 2012). Therefore, several calibration methods have been proposed that do not rely on position data of bony anatomical landmarks (Favre et al. 2009; O’Donovan et al. 2007; Picerno et al. 2008). Three main groups can be distinguished: reference posture or static methods, functional methods, and methods requiring additional equipment. The static methods rely on one or several predefined postures and predominantly use accelerometer and magnetometer data. The functional methods add uni-articular joint movements, from which the functional joint axis of rotation is derived using the gyroscope data (Luinge et al. 2007; Jovanov et al. 2005). The calibration method used here belongs to the functional methods and consists of two parts, the first of which is static. The participant, equipped with a sensor on the thigh and shank, is asked to stand still with both legs parallel and knees extended. It is assumed that the longitudinal axis of the segment (\( \vec{Y} \)) coincides with the gravity vector. To obtain this unit vector, the accelerometer data at a specific frame are extracted and normalized.
It is recommended to verify the absence of motion artifacts or amplitude spikes in the chosen frame, or alternatively to average the accelerometer data over a short interval. After doing so, we have obtained the first axis of the anatomical coordinate system (ACS).
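The static step can be sketched as averaging the accelerometer over a short standing interval, with a crude check on the spread of the accelerometer norm to detect motion artifacts or spikes. The threshold max_norm_spread and the synthetic data are assumptions.

```python
import math


def normalize(v):
    """Return v divided by its Euclidean norm."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def longitudinal_axis(acc_samples, start, stop, max_norm_spread=0.2):
    """Unit vector along gravity from a static standing interval.

    acc_samples: list of [ax, ay, az] in m/s^2; samples start..stop-1 are used.
    Raises ValueError if the accelerometer norm varies by more than
    max_norm_spread (m/s^2, hypothetical threshold) within the window.
    """
    window = acc_samples[start:stop]
    norms = [math.sqrt(sum(c * c for c in a)) for a in window]
    if max(norms) - min(norms) > max_norm_spread:
        raise ValueError("motion artifact or spike in calibration window")
    mean = [sum(a[i] for a in window) / len(window) for i in range(3)]
    return normalize(mean)


# Hypothetical static window close to the sample from the TechMCS table
static = [[9.66 + 0.01 * (i % 3), 1.67, 0.348] for i in range(50)]
y_thigh = longitudinal_axis(static, 0, 50)
```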

For the data provided in the previous section this gives us:

Subsequently a functional motion is executed; we have opted for a pure hip flexion without bending the knee (see Fig. 16.3). During this movement, thigh and shank are assumed to move strictly in the sagittal plane, perpendicular to the axis of rotation. For a pure hip flexion–extension motion, the hip flexion–extension axis is parallel to the knee flexion–extension axis. Other movements can also be executed, such as knee flexion–extension or leg abduction–adduction. Here, the mean of the gyroscope data is taken over a single hip flexion motion.
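The functional axis can be sketched analogously from the gyroscope data, by averaging the angular velocity vector over one flexion movement and normalizing it. A single flexion is used because the opposite rotation during extension would cancel the mean. The synthetic flexion and the frame indexing are assumptions: Note 1 gives frames 431 to 450 for the provided dataset, and whether frames are counted from 0 or 1 depends on the file.

```python
import math


def normalize(v):
    """Return v divided by its Euclidean norm."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def functional_axis(gyro_samples, start, stop):
    """Mean angular-velocity direction over one flexion movement.

    gyro_samples: list of [wx, wy, wz] in rad/s; samples start..stop-1 are used.
    """
    window = gyro_samples[start:stop]
    mean = [sum(w[i] for w in window) / len(window) for i in range(3)]
    return normalize(mean)


# Hypothetical flexion: rotation about a fixed axis with a bell-shaped speed profile
axis = normalize([0.05, 0.1, 0.99])
gyro = [[0.0, 0.0, 0.0] for _ in range(431)]
gyro += [[2.0 * math.sin(math.pi * k / 19) * a for a in axis] for k in range(20)]
h_thigh = functional_axis(gyro, 431, 451)   # frames 431..450, assuming 0-based indexing
```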

Applied to the dataset provided (see Note 1), the vectors derived from the dynamic calibration trials are:

The two obtained vectors, \( \vec{Y} \) and \( \vec{H} \), both originate from measurements and can thus be non-perpendicular due to measurement error. Since performing a pure motion can be demanding for patients, the longitudinal vector (derived from the static trial) is chosen as the basis of our calculations. Taking the cross product of \( \vec{Y} \) and \( \vec{H} \) (and normalizing the result), we obtain a third vector \( \vec{X} \) that is orthogonal to both original vectors. To ensure a right-handed orthogonal coordinate system, we then compute the cross product of \( \vec{X} \) and \( \vec{Y} \) to obtain \( \vec{Z} \). \( \vec{H} \) is thus a temporary vector that is subsequently replaced by the corrected vector \( \vec{Z} \) (see Fig. 16.4).
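The orthogonalization described above can be sketched as follows, with the static longitudinal axis kept unchanged and the functional axis used only as a temporary vector. The input vectors are hypothetical, and the axes are returned as rows expressed in sensor coordinates.

```python
import math


def normalize(v):
    """Return v divided by its Euclidean norm."""
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def cross(a, b):
    """Cross product a x b of two 3D vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def anatomical_frame(y_static, h_functional):
    """Anatomical coordinate system from the static and functional calibration.

    y_static: longitudinal axis from the static trial (trusted, kept unchanged).
    h_functional: temporary flexion-extension axis from the functional trial.
    Returns rows [X, Y, Z] expressed in sensor coordinates.
    """
    y = normalize(y_static)                   # base of the calculations
    x = normalize(cross(y, h_functional))     # orthogonal to Y and H
    z = cross(x, y)                           # corrected version of H, unit length
    return [x, y, z]


# Hypothetical calibration vectors that are not exactly perpendicular
R_thigh_cal = anatomical_frame([0.985, 0.170, 0.035], [0.05, 0.10, 0.99])
```

Because \( \vec{Z} \) is the component of \( \vec{H} \) perpendicular to \( \vec{Y} \), it stays close to the measured functional axis while the static axis is preserved exactly.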

In the numerical example we obtain the following matrices for the thigh and shank:

The matrix GRsegment allows us to represent the sensor orientation data provided by the TechMCS in the local coordinate system of the segment to which it is attached. This is done by multiplying, at each frame, the constant calibration matrix GRs_segment by the inverse of the sensor data matrix Rs_segment. The coordinate system (CS) in which the sensor data are obtained does not conform to the ISB guidelines: the output of the TechMCS is a measure of its orientation with respect to a reference frame fixed to the earth. We therefore need to multiply the data by an ISB_conversion matrix to comply with the ISB recommendations (Grood and Suntay 1983; Wu et al. 2002). It was deemed easier to correct this mathematically after data collection and to prioritize optimal IMU-to-segment attachment during the trials.

Applying this to the full data-set gives us the following knee joint angles (see Fig. 16.5)
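Once the segment orientations are available, the joint angles follow from the relative rotation between thigh and shank. The sketch below assumes segment-to-global rotation matrices that already follow the ISB axis convention (X anterior, Y longitudinal, Z mediolateral) and decomposes the relative rotation with a Z–X′–Y″ Cardan sequence, one common choice that mirrors the joint coordinate system of Grood and Suntay (1983). It is not necessarily the exact procedure used to produce Fig. 16.5, and clinical sign conventions (e.g. for left versus right legs) are not handled.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(A, B):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(A):
    return [list(row) for row in zip(*A)]


def knee_angles(R_thigh, R_shank):
    """Cardan angles (deg) of the shank relative to the thigh, Z-X'-Y'' sequence.

    R_thigh, R_shank: segment-to-global rotation matrices (columns are the
    segment X, Y, Z axes in global coordinates). Uses R = Rz(a) Rx(b) Ry(c),
    so R[2][1] = sin(b), R[0][1] = -sin(a)cos(b), R[1][1] = cos(a)cos(b),
    R[2][0] = -cos(b)sin(c), R[2][2] = cos(b)cos(c).
    Returns (flexion-extension, ab/adduction, axial rotation).
    """
    R = matmul(transpose(R_thigh), R_shank)   # shank orientation in thigh frame
    ab_ad = math.asin(max(-1.0, min(1.0, R[2][1])))
    flex_ext = math.atan2(-R[0][1], R[1][1])
    axial = math.atan2(-R[2][0], R[2][2])
    return tuple(math.degrees(v) for v in (flex_ext, ab_ad, axial))


# Hypothetical frame: arbitrary thigh orientation and a known knee rotation
R_thigh = matmul(rot_y(0.7), rot_x(0.1))
R_knee_true = matmul(matmul(rot_z(0.6), rot_x(0.05)), rot_y(-0.1))
R_shank = matmul(R_thigh, R_knee_true)
print(["%.2f" % a for a in knee_angles(R_thigh, R_shank)])  # ['34.38', '2.86', '-5.73']
```

Applied frame by frame to the calibrated thigh and shank orientations, such a function yields time series of the three knee angles.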

5 Conclusion

Inertial/magnetic sensors are relatively robust to environmental factors, to which traditional technologies for movement analysis are sensitive. Fusion algorithms make 3D movement analysis possible, but two main concerns must be taken into account. First, sensor performance and data reliability depend on the appropriate selection of filter parameters for the nature of the movement under analysis. Second, compatibility with position-based systems through the ISB standards is not yet guaranteed, although novel methods for anatomical calibration are being proposed.

MIMU thus offer valuable opportunities for almost unrestricted motion capture and for monitoring health status and activities of daily living, for example in telemedicine applications (Jovanov et al. 2005).

Notes

1. The flexion cycle we have identified lies in the interval between frames 431 and 450 of the datasheet provided.

References

Zhou H, Hu H (2007) Upper limb motion estimation from inertial measurements. J Inf Technol 13(1):1–14

Morris JRW (1973) Accelerometry: a technique for the measurement of human body movements. J Biomech 6:729–736

Giansanti D, Macellari V, Maccioni G, Cappozzo A (2003) Is it feasible to reconstruct body segment 3-D position and orientation using accelerometric data? IEEE Trans Biomed Eng 50(4):476–483

Willemsen ATM, Frigo C, Boom HBK (1991) Lower extremity angle measurement with accelerometers: error and sensitivity analysis. IEEE Trans Biomed Eng 38:1186–1193

Willemsen AT, Van Alste JA, Boom HB (1990) Real-time gait assessment utilizing a new way of accelerometry. J Biomech 23(8):859–863

Luinge H, Veltink PH (2004) Inclination measurement of human movement using a 3-D accelerometer with autocalibration. IEEE Trans Neural Syst Rehabil Eng 12(1):112–121. doi:10.1109/TNSRE.2003.822759

Williamson R, Andrews BJ (2001) Detecting absolute human knee angle and angular velocity using accelerometers and rate gyroscopes. Med Biol Eng Comput 39(3):294–302

Bachmann E (2004) An investigation of the effects of magnetic variations on inertial/magnetic orientation sensors. In: IEEE international conference on robotics and automation, pp 1115–1122

Luinge H, Veltink PH, Baten CTM (2007a) Ambulatory measurement of arm orientation. J Biomech 40(1):78–85. doi:10.1016/j.jbiomech.2005.11.011

Winter DA (2004) The biomechanics and motor control of human movement, 3rd edn. Wiley, New York

Vaughan CL, Davis BL, O’Connor JC (1999) Dynamics of human gait. Kiboho, Cape, p 141

Kemp B, Janssen AJMW, van der Kamp B (1998) Body position can be monitored in 3D using miniature accelerometers and earth-magnetic field sensors. Electroencephalogr Clin Neurophysiol Electromyogr Motor Control 109:484–488

Technaid SL (2013) Spain. www.technaid.com

Olivares A, Górriz JM, Ramírez J, Olivares G (2011) Accurate human limb angle measurement: sensor fusion through Kalman, least mean squares and recursive least-squares adaptive filtering. Meas Sci Technol 22(2):025801. doi:10.1088/0957-0233/22/2/025801

Cheng Y, Shuster MD (2005) Quest and the anti-quest: good and evil attitude estimation. J Astronaut Sci 53(3):337–351

Shuster MD (2006) The quest for better attitudes. J Astronaut Sci 54(3–4):657–683

Yun X, Bachmann ER (2006) Design, implementation, and experimental results of a quaternion-based Kalman filter for human body motion tracking. IEEE Trans Rob 22(6):1216–1227

Sabatini AM (2006) Quaternion-based extended Kalman filter for determining orientation by inertial and magnetic sensing. IEEE Trans Biomed Eng 53(7):1346–1356. doi:10.1109/TBME.2006.875664

Roetenberg D (2006) Inertial and magnetic sensing of human motion. Ph.D. thesis, Twente University, Enschede, p 126

Park EJ (2009) Minimum-order Kalman filter with vector selector for accurate estimation of human body orientation. IEEE Trans Rob 25(5):1196–1201. doi:10.1109/TRO.2009.2017146

Faber GS, Kingma I, Martin Schepers H, Veltink PH, Van Dieën JH (2010) Determination of joint moments with instrumented force shoes in a variety of tasks. J Biomech 43(14):2848–2854. doi:10.1016/j.jbiomech.2010.06.005

Van den Noort JC, Ferrari A, Cutti AG, Becher JG, Harlaar J (2012) Gait analysis in children with cerebral palsy via inertial and magnetic sensors. Med Biol Eng Comput. doi:10.1007/s11517-012-1006-5

Favre J, Aissaoui R, Jolles BM, de Guise JA, Aminian K (2009) Functional calibration procedure for 3D knee joint angle description using inertial sensors. J Biomech 42(14):2330–2335. doi:10.1016/j.jbiomech.2009.06.025

O’Donovan KJ, Kamnik R, O’Keeffe DT, Lyons GM (2007) An inertial and magnetic sensor based technique for joint angle measurement. J Biomech 40:2604–2611

Picerno P, Cereatti A, Cappozzo A (2008) Joint kinematics estimate using wearable inertial and magnetic sensing modules. Gait Posture 28:588–595

Luinge H, Veltink PH, Baten CTM (2007b) Ambulatory measurement of arm orientation. J Biomech 40(1):78–85. doi:10.1016/j.jbiomech.2005.11.011

Jovanov E, Milenkovic A, Otto C, De Groen PC (2005) A wireless body area network of intelligent motion sensors for computer assisted physical rehabilitation. J Neuroeng Rehabil 2(1):6. doi:10.1186/1743-0003-2-6

Grood ES, Suntay WJ (1983) A joint coordinate system for the clinical description of three-dimensional motions: application to the knee. J Biomech Eng Trans ASME 105:136–144

Wu G, Siegler S, Allard P, Kirtley C, Leardini A, Rosenbaum D, Whittle M, D’Lima DD, Cristofolini L, Witte H, Schmid O, Stokes I (2002) ISB recommendation on definitions of joint coordinate system of various joints for the reporting of human joint motion—Part I: ankle, hip, and spine. International Society of Biomechanics. J Biomech 35:543–548

Appendices

Appendix: Theoretical Basis for Human Movement Analysis with Inertial Sensors

Kinematics is the branch of mechanics that describes the motion of points, bodies (objects) and systems of bodies (groups of objects) without consideration of the causes of motion. Kinematics is therefore not concerned with the forces, either external or internal, that cause the movement. It includes the description of linear and angular displacements and their time derivatives: velocities and accelerations. A complete and accurate quantitative description of even the simplest movement requires a huge volume of data and a large number of calculations, resulting in an enormous number of graphic plots. It should therefore be kept in mind that any given analysis may use only a small fraction of the available kinematic variables.

1.1 Cartesian Reference Systems

A reference system is an arbitrarily chosen system with respect to which the position of any point (or rigid body) is described. A Cartesian reference system is formed by three mutually perpendicular axes whose origin is located at their common intersection, spanning the three dimensions of space. Any point in space is therefore located with respect to this reference system by three coordinates, one for each axis: \( \left( {x,\,y,\,z} \right) \) (Fig. 16.6).

Two types of reference systems are commonly defined for human movement analysis:

- Fixed reference system, also called absolute or inertial reference system: a Cartesian reference frame fixed to the world, coinciding with the view of an external observer. Quantities related to global body movements, such as the movement of the body center of mass or trunk bending and rotation, are defined in this reference system.

- Relative reference system, also called segment reference system: a Cartesian reference frame fixed to the moving segment. A variable commonly measured in this reference system is joint movement.

The relative system commonly defined for human movement analysis has its origin at the body center of mass, and its axes \( (X - Y - Z) \) coincide with the main body axes as follows: \( X \) is the anterior axis (or direction), pointing forward; \( Y \) is the vertical axis (or direction), pointing upward; and \( Z \) is the medial–lateral axis (or direction), pointing to the right.

This body-centered reference system also contains the main body planes:

- Sagittal plane: divides any part of the body into right and left portions. It is perpendicular to the \( Z \) (medial–lateral) axis. Flexion and extension take place in the sagittal plane.

- Frontal plane: divides any part of the body into front and back portions. It is perpendicular to the \( X \) (anterior) axis. Abduction and adduction take place in the frontal plane.

- Transverse plane: divides any part of the body into upper and lower portions. It is perpendicular to the \( Y \) (vertical) axis. Internal and external rotation (also called medial and lateral rotation) take place in the transverse plane.

Three-Dimensional Kinematics

2.1 Matrix Notation for Reference Systems

The human musculoskeletal system is composed of a series of jointed links, which are commonly approximated as rigid bodies. Six independent parameters, the degrees of freedom (DOF), are needed to describe the location (\( (x,y,z) \) coordinates with respect to the reference-system axes) and the orientation (\( (\alpha ,\beta ,\gamma ) \) angles with respect to the reference-system planes) of a segment in space. These six coordinates \( (x,y,z,\alpha ,\beta ,\gamma ) \) uniquely define the spatial location and orientation of the segment at any time instant.

Most of the mechanical quantities one has to deal with in motion analysis, such as the linear and angular position, velocity and acceleration of markers and segments, are vectors. Because a vector has both magnitude and direction, the same vector can be described from several different perspectives, depending on the intention or objective of the analysis. Describing a vector from a particular perspective amounts to computing its components in the coordinate system associated with that perspective.

Matrices are a convenient notation for operations involving coordinate systems and vectors. A reference system can be defined by three unit-length vectors, one along each of its axes. These unit coordinate vectors (Fig. 16.7 right) can be expressed as follows, where \( \varvec{i},\varvec{j},\varvec{k} \) are the unit vectors of the \( X \), \( Y \) and \( Z \) axes respectively: \( \varvec{i} = [1,0,0] ,\, \varvec{j} = [0,1,0] ,\, \varvec{k} = [0,0,1] \). Using this notation, the global coordinate system can be expressed by stacking the \( \varvec{i},\varvec{j},\varvec{k} \) vectors into a matrix:

\[ \begin{bmatrix} \varvec{i} \\ \varvec{j} \\ \varvec{k} \end{bmatrix} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \]

2.2 Rotation Matrix

As shown in Fig. 16.7 left, a vector can be expressed as the sum of its component vectors projected onto the \( \varvec{i},\varvec{j},\varvec{k} \) vectors:

\[ \varvec{v} = x\,\varvec{i} + y\,\varvec{j} + z\,\varvec{k} \]

In other words, one can not only describe the same vector from several different perspectives, but also change from one perspective to another depending on the situation and needs. This change of perspective is called vector transformation or axis transformation, and it is carried out through rotation matrices. A rotation matrix expresses compactly the orientation of one reference system, usually a moving one, with respect to another, usually fixed, reference system.

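Transforming a vector from one reference frame to another is equivalent to changing the perspective from which the vector is described. A transformation alters not the vector but its components: writing the same vector in both frames, \( \varvec{v} = x\,\varvec{i} + y\,\varvec{j} + z\,\varvec{k} = x'\,\varvec{i'} + y'\,\varvec{j'} + z'\,\varvec{k'} \), and taking the dot product with each new unit vector gives

\[ \begin{aligned} x' &= \varvec{v}\cdot\varvec{i'} = x\,(\varvec{i}\cdot\varvec{i'}) + y\,(\varvec{j}\cdot\varvec{i'}) + z\,(\varvec{k}\cdot\varvec{i'}) \\ y' &= \varvec{v}\cdot\varvec{j'} = x\,(\varvec{i}\cdot\varvec{j'}) + y\,(\varvec{j}\cdot\varvec{j'}) + z\,(\varvec{k}\cdot\varvec{j'}) \\ z' &= \varvec{v}\cdot\varvec{k'} = x\,(\varvec{i}\cdot\varvec{k'}) + y\,(\varvec{j}\cdot\varvec{k'}) + z\,(\varvec{k}\cdot\varvec{k'}) \end{aligned} \]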

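In these transformations, \( \varvec{i},\varvec{j},\varvec{k} \) are the unit vectors of the \( X - Y - Z \) system, and \( \varvec{i'},\varvec{j'},\varvec{k'} \) are the unit vectors of the \( X' - Y' - Z' \) system. Therefore, the transformation matrix from the global reference frame (frame G) to a particular local reference frame (frame L) can be written as:

\[ {}^{L}\varvec{T}_{G} = \begin{bmatrix} \varvec{i'}\cdot\varvec{i} & \varvec{i'}\cdot\varvec{j} & \varvec{i'}\cdot\varvec{k} \\ \varvec{j'}\cdot\varvec{i} & \varvec{j'}\cdot\varvec{j} & \varvec{j'}\cdot\varvec{k} \\ \varvec{k'}\cdot\varvec{i} & \varvec{k'}\cdot\varvec{j} & \varvec{k'}\cdot\varvec{k} \end{bmatrix}, \qquad \begin{bmatrix} x' \\ y' \\ z' \end{bmatrix} = {}^{L}\varvec{T}_{G} \begin{bmatrix} x \\ y \\ z \end{bmatrix} \]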
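The direction-cosine construction of \( {}^{L}\varvec{T}_{G} \) can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the chapter's own code: the helper `direction_cosine_matrix` is a hypothetical name, and the local frame (the global axes rotated by 30° about the vertical \( Y \) axis) is an assumed example.

```python
import numpy as np

def direction_cosine_matrix(i_loc, j_loc, k_loc):
    """Hypothetical helper: build L_T_G, whose row r holds the dot products
    of local axis r with the global unit vectors i, j, k."""
    i, j, k = np.eye(3)  # global unit vectors [1,0,0], [0,1,0], [0,0,1]
    return np.array([[np.dot(a, g) for g in (i, j, k)]
                     for a in (i_loc, j_loc, k_loc)])

# Assumed local frame: global axes rotated by 30 degrees about the vertical Y axis,
# with each local unit vector written in global coordinates.
t = np.radians(30.0)
i_loc = np.array([np.cos(t), 0.0, -np.sin(t)])
j_loc = np.array([0.0, 1.0, 0.0])
k_loc = np.array([np.sin(t), 0.0, np.cos(t)])

T_LG = direction_cosine_matrix(i_loc, j_loc, k_loc)
v_global = np.array([1.0, 2.0, 3.0])  # components (x, y, z)
v_local = T_LG @ v_global             # components (x', y', z') of the same vector

# The vector itself is unchanged, so its length is the same in both frames.
print(v_local, np.linalg.norm(v_local), np.linalg.norm(v_global))
```

Because the global unit vectors are the identity rows, each row of the result is simply a local axis written in global coordinates; the explicit dot products are kept only to mirror the matrix above.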

In human movement analysis, the local reference frame is typically fixed to a segment or body part, whereas the global reference frame is fixed to the laboratory.

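Similarly, \( {}^{G}\varvec{T}_{L} \), the inverse of \( {}^{L}\varvec{T}_{G} \), can also be derived directly:

\[ {}^{G}\varvec{T}_{L} = \begin{bmatrix} \varvec{i}\cdot\varvec{i'} & \varvec{i}\cdot\varvec{j'} & \varvec{i}\cdot\varvec{k'} \\ \varvec{j}\cdot\varvec{i'} & \varvec{j}\cdot\varvec{j'} & \varvec{j}\cdot\varvec{k'} \\ \varvec{k}\cdot\varvec{i'} & \varvec{k}\cdot\varvec{j'} & \varvec{k}\cdot\varvec{k'} \end{bmatrix} \]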

A special feature of rotation matrices is that they are orthonormal: the vectors that form their rows (and columns) are mutually orthogonal and of unit length. Therefore, the inverse of a rotation matrix is simply its transpose, and the change of direction is straightforward:

\[ {}^{G}\varvec{T}_{L} = \left( {}^{L}\varvec{T}_{G} \right)^{-1} = \left( {}^{L}\varvec{T}_{G} \right)^{T} \]
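The orthonormality property can be checked numerically. The sketch below assumes a global-to-local matrix produced by a 40° rotation of the axes about \( Z \) (an arbitrary example; `rot_z_axes` is a hypothetical helper) and confirms that the transpose equals the inverse and that a global-to-local-to-global round trip recovers the original components.

```python
import numpy as np

def rot_z_axes(theta):
    """Axis-rotation matrix for a frame rotated by theta (radians) about Z."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, s, 0.0],
                     [-s, c, 0.0],
                     [0.0, 0.0, 1.0]])

T_LG = rot_z_axes(np.radians(40.0))   # assumed global -> local matrix
T_GL = T_LG.T                         # local -> global, obtained by transposition

# Orthonormality: rows are orthogonal unit vectors, so the transpose is the inverse.
is_orthonormal = np.allclose(T_LG @ T_LG.T, np.eye(3))
transpose_is_inverse = np.allclose(T_GL, np.linalg.inv(T_LG))

# Round trip global -> local -> global recovers the original components.
v_global = np.array([0.3, -1.2, 0.5])
v_back = T_GL @ (T_LG @ v_global)
print(is_orthonormal, transpose_is_inverse, np.allclose(v_back, v_global))
```

In practice the transpose is preferred over a general matrix inversion because it is exact and cheaper, provided the matrix really is orthonormal (numerically estimated orientations may need re-orthonormalization first).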

A series of transformations can be performed through successive multiplication of the transformation matrices from right to left. Once the transformation matrices from the global reference frame to the local reference frames are known, computing the transformation matrices among the local reference frames is simply a matter of transposition and multiplication. Hence the transformation matrix from one local reference frame (A) to another (B) is obtained by cascading the transformation matrices:

\[ {}^{B}\varvec{T}_{A} = {}^{B}\varvec{T}_{G}\,{}^{G}\varvec{T}_{A} = {}^{B}\varvec{T}_{G} \left( {}^{A}\varvec{T}_{G} \right)^{T} \]
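A short sketch of the cascade between two local frames, assuming hypothetical global-to-local matrices for two segments (for instance a thigh frame A and a shank frame B; the angles are arbitrary). It checks that going from A to B through the cascaded matrix gives the same components as transforming the global vector directly into B.

```python
import numpy as np

def rot_x_axes(theta):
    """Axis-rotation matrix for a frame rotated by theta (radians) about X."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def rot_z_axes(theta):
    """Axis-rotation matrix for a frame rotated by theta (radians) about Z."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

# Assumed global -> local matrices for two segments A and B.
T_AG = rot_z_axes(np.radians(20.0))
T_BG = rot_z_axes(np.radians(-45.0)) @ rot_x_axes(np.radians(10.0))

# A -> B: first A -> G (transpose of T_AG), then G -> B; read right to left.
T_BA = T_BG @ T_AG.T

v_global = np.array([0.2, 0.9, -0.4])
v_A = T_AG @ v_global          # components in frame A
v_B_direct = T_BG @ v_global   # components in frame B, computed directly
v_B_cascade = T_BA @ v_A       # components in frame B, via the cascade
print(np.allclose(v_B_direct, v_B_cascade))
```

The cascaded matrix \( {}^{B}\varvec{T}_{A} \) is itself a rotation matrix; it is the quantity from which relative (joint) angles between two segments are usually extracted.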

2.3 How to Extract Rotation Angles Using Euler Convention

As shown above, the components of a free vector change as the reference frame changes. Figure 16.8 shows two different reference frames: the \( X - Y \) system and the \( X' - Y' \) system. Vector \( \varvec{v} \) can be expressed as \( \varvec{v}(x, y) \) in the \( X - Y \) system and as \( \varvec{v}(x', y') \) in the \( X' - Y' \) system. Taking \( \theta \) as the counterclockwise angle from the \( X \) axis to the \( X' \) axis, the relationships between the two sets of components follow from the geometry:

\[ x' = x\cos\theta + y\sin\theta, \qquad y' = -x\sin\theta + y\cos\theta \]

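In matrix form:

\[ \begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} \]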
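The planar relationship can be sketched as a small function. It assumes, as in the equations above, that \( \theta \) is measured counterclockwise from \( X \) to \( X' \) and that the axes rotate while the vector stays fixed; `rotate_axes_2d` is a hypothetical name.

```python
import numpy as np

def rotate_axes_2d(v, theta):
    """Components of vector v in an X'-Y' frame rotated counterclockwise by
    theta radians from X-Y (the vector stays fixed; the axes rotate)."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, s],
                  [-s, c]])
    return R @ np.asarray(v, dtype=float)

# A vector along X, seen from axes rotated by 90 degrees: X' now points along Y
# and Y' along -X, so the vector's components become approximately (0, -1).
print(rotate_axes_2d([1.0, 0.0], np.pi / 2))

# Rotating the axes never changes the vector's length.
print(np.linalg.norm(rotate_axes_2d([3.0, 4.0], 0.7)))
```

Note the sign pattern: rotating the axes by \( +\theta \) has the same effect on the components as rotating the vector by \( -\theta \), which is why the \( \sin\theta \) term is positive in the first row.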

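It is straightforward to extend these relationships to three-dimensional space. For a rotation by \( \theta \) about the \( Z \) axis, the \( Z \) coordinate is unchanged:

\[ x' = x\cos\theta + y\sin\theta, \qquad y' = -x\sin\theta + y\cos\theta, \qquad z' = z \]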

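In matrix form:

\[ \begin{bmatrix} x' \\ y' \\ z' \end{bmatrix} = \begin{bmatrix} \cos\theta & \sin\theta & 0 \\ -\sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} x \\ y \\ z \end{bmatrix} \]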

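Therefore, the rotation of the axes by \( \theta \) about the \( Z \) axis, from the \( X - Y - Z \) reference system to the \( X' - Y' - Z' \) reference system, is expressed as follows:

\[ \varvec{R}_{Z}(\theta) = \begin{bmatrix} \cos\theta & \sin\theta & 0 \\ -\sin\theta & \cos\theta & 0 \\ 0 & 0 & 1 \end{bmatrix} \]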

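Rotations about the \( X \) and \( Y \) axes are obtained by a similar procedure (again rotating the axes by \( \theta \) in the right-handed sense while the vector stays fixed):

\[ \varvec{R}_{X}(\theta) = \begin{bmatrix} 1 & 0 & 0 \\ 0 & \cos\theta & \sin\theta \\ 0 & -\sin\theta & \cos\theta \end{bmatrix}, \qquad \varvec{R}_{Y}(\theta) = \begin{bmatrix} \cos\theta & 0 & -\sin\theta \\ 0 & 1 & 0 \\ \sin\theta & 0 & \cos\theta \end{bmatrix} \]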
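The three elementary axis-rotation matrices can be written as NumPy functions and sanity-checked. This sketch assumes the axis-rotation (frame) convention used in the matrices above; the function names `rot_x`, `rot_y`, `rot_z` are our own. For a 90° rotation of the axes, the expected components of a fixed vector can be read off geometrically, and each matrix should be orthonormal with determinant +1.

```python
import numpy as np

# Elementary axis-rotation matrices (theta in radians). Each one gives the
# components of a fixed vector in a frame rotated by theta about one axis.
def rot_x(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def rot_y(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def rot_z(theta):
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

# Sanity checks for a 90-degree rotation of the axes:
# about X, Y' points along Z, so a vector along Z has components (0, 1, 0);
# about Y, Z' points along X, so a vector along X has components (0, 0, 1);
# about Z, X' points along Y, so a vector along Y has components (1, 0, 0).
q = np.pi / 2
print(rot_x(q) @ [0, 0, 1], rot_y(q) @ [1, 0, 0], rot_z(q) @ [0, 1, 0])

for R in (rot_x(0.3), rot_y(0.3), rot_z(0.3)):
    # Orthonormal with determinant +1: a proper rotation.
    print(np.allclose(R @ R.T, np.eye(3)), np.isclose(np.linalg.det(R), 1.0))
```

If a library or text uses the opposite (active, vector-rotating) convention, its matrices are the transposes of these; mixing the two conventions is a common source of sign errors in joint angles.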

Once the rotation about each of the three reference-system axes is defined, an arbitrary rotation, which usually involves all three axes, can be seen as a composition of three sequential rotations, which is what Euler's theorem states.

Using Euler's theorem, any arbitrary rotation can be decomposed into three sequential rotations. For example, consider the sequence \( X - Y' - Z'' \). We first rotate about the \( X \) axis by \( \theta_{1} \), which yields the new orientation \( X' - Y' - Z' \). Then a rotation by \( \theta_{2} \) about the new \( Y' \) axis yields the orientation \( X'' - Y'' - Z'' \). A final rotation by \( \theta_{3} \) about the new \( Z'' \) axis yields the final orientation \( X''' - Y''' - Z''' \).

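Combining the three rotation matrices, the coordinates of any point \( \varvec{p}\left( {x,y,z} \right) \) expressed in the \( X - Y - Z \) reference system can be expressed in the \( X''' - Y''' - Z''' \) reference system as follows, where \( \varvec{R}_{X} \), \( \varvec{R}_{Y} \) and \( \varvec{R}_{Z} \) are the elementary axis-rotation matrices and the rightmost matrix is applied first:

\[ \begin{bmatrix} x''' \\ y''' \\ z''' \end{bmatrix} = \varvec{R}_{Z}(\theta_{3})\,\varvec{R}_{Y}(\theta_{2})\,\varvec{R}_{X}(\theta_{1}) \begin{bmatrix} x \\ y \\ z \end{bmatrix} = \varvec{R} \begin{bmatrix} x \\ y \\ z \end{bmatrix} \]

Expanding the former, using the shorthand notation \( c1 = \cos\left( {\theta_{1} } \right) \), \( s2 = \sin\left( {\theta_{2} } \right) \), and so on (each elementary matrix rotating the axes, not the vector):

\[ \varvec{R} = \begin{bmatrix} c2\,c3 & c1\,s3 + s1\,s2\,c3 & s1\,s3 - c1\,s2\,c3 \\ -c2\,s3 & c1\,c3 - s1\,s2\,s3 & s1\,c3 + c1\,s2\,s3 \\ s2 & -s1\,c2 & c1\,c2 \end{bmatrix} \]

From the above matrix, denoting by \( r_{mn} \) the element in row \( m \) and column \( n \) of \( \varvec{R} \), the \( \theta_{1} \), \( \theta_{2} \) and \( \theta_{3} \) angles can be obtained as follows (valid while \( \cos\theta_{2} \ne 0 \)):

\[ \theta_{2} = \arcsin\left( r_{31} \right), \qquad \theta_{1} = \operatorname{atan2}\left( -r_{32},\, r_{33} \right), \qquad \theta_{3} = \operatorname{atan2}\left( -r_{21},\, r_{11} \right) \]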
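The composition and the angle extraction can be verified with a round trip: build \( \varvec{R} \) from known angles and recover them. This is a sketch assuming the axis-rotation convention and the \( X - Y' - Z'' \) sequence described above; the function names and the test angles are hypothetical.

```python
import numpy as np

def rot_x(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def rot_y(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def rot_z(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

def compose_xyz(t1, t2, t3):
    """Sequence X-Y'-Z'': the rightmost matrix (about X) is applied first."""
    return rot_z(t3) @ rot_y(t2) @ rot_x(t1)

def extract_xyz(R):
    """Recover (theta1, theta2, theta3) in radians; valid while cos(theta2) != 0."""
    t2 = np.arcsin(np.clip(R[2, 0], -1.0, 1.0))   # r31 = s2
    t1 = np.arctan2(-R[2, 1], R[2, 2])             # -r32 = s1 c2, r33 = c1 c2
    t3 = np.arctan2(-R[1, 0], R[0, 0])             # -r21 = c2 s3, r11 = c2 c3
    return t1, t2, t3

angles = np.radians([25.0, -40.0, 60.0])   # hypothetical angles for the round trip
R = compose_xyz(*angles)
print(np.degrees(extract_xyz(R)))
```

The `np.clip` guards against round-off pushing \( r_{31} \) slightly outside \([-1, 1]\). When \( \theta_{2} = \pm 90^\circ \) (gimbal lock), \( \theta_{1} \) and \( \theta_{3} \) can no longer be separated and the atan2 expressions become undefined.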

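This example corresponds to the rotation sequence \( X - Y' - Z'' \); however, any other sequence can be used. In total there are 12 valid rotation sequences, obtained by combining the \( X \), \( Y \) and \( Z \) rotations so that no two consecutive rotations share an axis: six Cardan (or Tait-Bryan) sequences with three distinct axes, such as \( X - Y' - Z'' \), and six proper Euler sequences in which the first and last axes coincide, such as \( Z - X' - Z'' \).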
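The count of 12 can be confirmed by enumeration: a sequence is valid when consecutive rotations are about different axes. The snippet below is an illustrative sketch with hypothetical names; it also splits the result into the two families.

```python
from itertools import product

AXES = "XYZ"

def rotation_sequences():
    """All three-axis sequences in which consecutive rotations use different axes."""
    return [a + b + c for a, b, c in product(AXES, repeat=3) if a != b and b != c]

seqs = rotation_sequences()
cardan = [s for s in seqs if len(set(s)) == 3]   # three distinct axes, e.g. XYZ
euler = [s for s in seqs if s[0] == s[2]]         # first and last axes equal, e.g. ZXZ
print(len(seqs), len(cardan), len(euler))         # prints: 12 6 6
```

Sequences such as `XXY` are excluded because two consecutive rotations about the same axis collapse into one, leaving only two independent angles.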

2.4 International Society of Biomechanics Standards

A consequence of Euler's theorem is that the values of the \( \theta_{1} \), \( \theta_{2} \) and \( \theta_{3} \) angles depend on the rotation sequence assumed, which makes comparing data among studies difficult, if not impossible. On the other hand, some rotation sequences represent joint rotations in more clinically relevant terms than others, which makes biomechanical findings easier to apply and interpret, and more accessible to clinicians.
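The sequence dependence can be demonstrated numerically: one orientation matrix, decomposed with two different sequences, yields two different angle triples that both reproduce it exactly. This sketch assumes the axis-rotation convention of the elementary matrices given earlier; the orientation (40°, 20°, 30°) is a hypothetical example, and the \( Z - Y' - X'' \) extraction formulas were derived for this convention rather than taken from the chapter.

```python
import numpy as np

def rot_x(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, s], [0.0, -s, c]])

def rot_y(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, 0.0, -s], [0.0, 1.0, 0.0], [s, 0.0, c]])

def rot_z(t):
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])

# One assumed segment orientation, built with the X-Y'-Z'' sequence
# (40 deg about X, then 20 deg about Y', then 30 deg about Z'').
R = rot_z(np.radians(30.0)) @ rot_y(np.radians(20.0)) @ rot_x(np.radians(40.0))

# X-Y'-Z'' angles, from R = Rz(t3) Ry(t2) Rx(t1): returns (t1, t2, t3).
xyz = np.array([np.arctan2(-R[2, 1], R[2, 2]),
                np.arcsin(R[2, 0]),
                np.arctan2(-R[1, 0], R[0, 0])])

# Z-Y'-X'' angles for the same R, from R = Rx(p3) Ry(p2) Rz(p1): returns (p1, p2, p3).
zyx = np.array([np.arctan2(R[0, 1], R[0, 0]),
                -np.arcsin(R[0, 2]),
                np.arctan2(R[1, 2], R[2, 2])])

print("X-Y'-Z'' angles (deg):", np.degrees(xyz))
print("Z-Y'-X'' angles (deg):", np.degrees(zyx))
```

Both triples describe exactly the same orientation, yet even the angle about the \( Y \) axis differs noticeably between them, which is why a standard sequence is needed before joint angles from different studies can be compared.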

The International Society of Biomechanics (ISB) has made recommendations for the definitions of segment coordinate systems as well as for rotation sequences for reporting joint movement (Grood and Suntay 1983; Wu et al. 2002). The purpose was to present these definitions to the biomechanics community so as to encourage their use, to obtain first-hand feedback, and to facilitate revisions. It was hoped that this process would help the biomechanics community move towards the development and use of a set of widely accepted standards for better communication among research groups, and among biomechanists, physicians, physical therapists, and other interested groups. These recommendations include definitions for major human joints such as the ankle, hip, spine, shoulder, elbow, wrist and hand.

Copyright information

© 2014 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Lambrecht, S., del-Ama, A.J. (2014). Human Movement Analysis with Inertial Sensors. In: Pons, J., Torricelli, D. (eds) Emerging Therapies in Neurorehabilitation. Biosystems & Biorobotics, vol 4. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-38556-8_16

DOI: https://doi.org/10.1007/978-3-642-38556-8_16

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-38555-1

Online ISBN: 978-3-642-38556-8
