Abstract

We present here the core elements of a stochastic optimal foraging theory (SOFT), essentially, a random search theory for ecologists. SOFT complements classic optimal foraging theory (OFT) in that it assumes fully uninformed searchers in an explicit space. Mathematically, the theory quantifies the time spent by a random walker (the forager) on a spatial region delimited by absorbing boundaries (the targets). The walker starts from a given initial position and has no previous knowledge (nor the possibility to gain knowledge) on target/patch locations. Averages on such process can describe the dynamics of an uninformed forager looking for successive targets in a diverse and dynamical spatial environment. The framework provides a means to advance in the study of search uncertainty and animal information use in natural foraging systems.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Classic optimal foraging theory (OFT) assumes fully informed foragers. Hence, animals can recognize a patch instantaneously, knowing in advance the expected patch quality as well as the average travel time between patches [19]. Stephens and Krebs (1986) called such conceptual framework the complete information assumption [47, 48].

Based on simple cases, theoreticians have addressed the problem of incomplete information [47, 48], acknowledging the presence of environmental uncertainty in foraging processes. The key questions are related to how animals obtain information about the environment while foraging [1, 20, 21, 31, 34]. The use of information to both discriminate the properties of a given patch and to figure out large-scale environmental properties have been shown to modify patch-exploitation and patch-leaving strategies [48]. Simple memory rules based on previous environment exploration experiences [32] and potential acquaintance with the travel times between patches [13, 14, 17, 24] also impact on the foraging strategy.

Here we introduce a theoretical framework to study aspects of foraging processes rooted on the assumption of complete lack of knowledge and with the virtue of being spatially explicit (here we address the one-dimensional case). In its core formulation, SOFT quantifies the distance traveled (or equivalently time spent) by a random walker that starts moving from a given initial position within a spatial region delimited by absorbing boundaries. Each time the walker reaches the boundaries, the process starts all over again. Averages on the properties of many walks realizations are aimed to reproduce the dynamics of a forager looking for successive targets in a diverse and dynamical environment. This modeling approach differs from classic theory in a very important point: it switches the patch-encounter problem of foraging theory from the traveling salesman [1] to the random search optimization problem [4, 16, 49, 51].

While useful as analytic simplifications, classic theoretical studies on foraging usually lack the explicit inclusion of space and are not focused on the search optimization problem, in which a forager with limited information explores a landscape to find scarce cues [4, 16, 51]. In OFT patch locations are known in advance and the goal is to find the shortest path connecting them. In SOFT, the locations and travel distances between patches are unknown, and thus the task is to determine an uninformed exploration strategy (which necessarily use some element of randomness), maximizing the number of patch encounters [4, 51]. Out of doubt, the theory described here is at the far end of the spectrum that begins with the mean-field and full-knowledge assumptions of classic OFT [19, 47, 48].

It does not escape to us that the assumption of a foraging animal as a “brainless” random walker (i.e., with no internal states nor sensory or memory capabilities) should be viewed as a first-order approximation to the actual dynamics. Hence it does not represent the ultimate description of animal information use and movement complexity. Nevertheless, memory-less models can be realistic when the searcher looks for dynamic targets that move away from their original location on time scales shorter than typical revisiting times by the searcher. In any case, limiting models are good starting points to think on complex problems and have an extraordinary success in making general scientific predictions. Importantly, they play a complementary role to biologically more specific models and shed light on different aspects of movement phenomena [51]. In this chapter, we hope to demonstrate that a spatially explicit random search theory can serve as the seed for more realistic (yet still simple) models [15] to advance in the study of information use in natural foraging systems. New ideas and results on random searching [2, 9, 29, 41, 49] clearly show that random walk and diffusion theory [35, 43, 44, 51] can better fit the concepts of search and uncertainty in behavioral ecology. Routes to integrate both theories, the classical OFT and the recent SOFT, will be needed in order to properly answer questions about efficiency and uncertainty of animal foraging strategies [3, 4, 51].

2 Some Preliminary Assumptions of the Model

We begin by considering a random searcher looking for point-like target sites in a one-dimensional (1D) search space. We consider a lattice of targets separated by the distance λ, i.e. the targets positions are x = jλ, with j integer. Suppose, initially, that the walker starts from a distance x 0 to the closest target. The walker thus searches for the two nearest (boundary) targets by taking steps of length ℓ from a probability density function (pdf) p(ℓ), which is kept the same for all steps. In Sects. 3–6, every time an encounter occurs the search resets and restarts over again from the same distance x 0 to the last target found. For example, if the position of the n-th target found is, say, x = 10λ, then the next starting point will be 10λ + x 0 or 10λ − x 0. In this sense, the search for any target is statistically indistinguishable from the search for the very first target: in both cases, the closest and farthest targets are, respectively, at initial distances x 0 and λ − x 0 from the searcher, and the pdf p(ℓ) of step lengths is the same. Therefore, without loss of generality we can restrict our analysis to the region 0 ≤ x ≤ λ, with the targets at x = 0 and x = λ being the system absorbing boundaries. This is actually possible since leaps over targets without detection are not allowed in this study. For an interesting account of leapover statistics in the context of Lévy flights, see [27]. As a consequence, in the present framework the overall search trajectory can be viewed as the concatenated sum of partial paths between consecutive encounters. In Sect. 7, the constraint of always starting from the same distance x 0 to the last target found is relaxed, and searches in landscapes with targets heterogeneously distributed are considered (see below). In every case, averages over these partial paths will describe a random search process in an environment whose global density of targets is ρ ∼ 1 ∕ (mean distance between targets) = 1 ∕ λ.

As commented above, at each starting process to find a new target we may or may not assume distinct initial positions of the searcher, x 0. The analysis presented in Sects. 3–6 assumes that the forager always restarts at a fixed x 0 = a. However, in the most general case x 0 can be drawn from a pdf π(x 0). By considering a distribution of x 0 values, the relative distances from the initial position of the searcher to the targets change at each search, thus describing an heterogeneous environment (but of global density 1 ∕ λ). In Sect. 7 we consider various pdfs π(x 0), so to address more realistic foraging situations in which the search landscape presents several degrees of heterogeneity.

In particular, for the case of fixed x 0 = a two limiting situations are considered (see Fig. 1 and [23, 49]). The symmetric (or destructive) condition (i.e. a = λ ∕ 2) represents the situation in which, having located and consumed a food item, there are no other nearby food items available and the forager begins the next search positioned far away and relatively equidistant, on average, from the two closest food items (Fig. 1). The asymmetric (or non-destructive) condition represents the situation where, having located a food item, other nearby items exist, hence the forager begins the next search with a close and a faraway target (see Fig. 1). Non-destructive foraging, with a once-visited item always available for future searches, should be considered as the paradigmatic asymmetric condition. If the foraging dynamics is non-destructive but environmental uncertainty exists (such that the forager may repeatedly loose track of the items outside its perceptual range), it will systematically reproduce the asymmetric condition at each restarted search. Even though the idea of non-destructive stochastic search perfectly maps with the asymmetric condition, caution must be taken with the destructive searches, which can indeed accommodate both symmetric and asymmetric conditions, depending on the landscape structure (see Sect. 7). Importantly, in the context of foraging, the previous definitions of destructive/non-destructive search [49] have led to some misleading criticism [22, 36].

Diagrams showing the two key initial conditions for the one-dimensional stochastic search model: (a) symmetric (destructive), (b) asymmetric (non-destructive). We denote by x 0 = a the forager starting position at each search (a = λ ∕ 2 in the symmetric case and a r v + ε in the asymmetric case). r v denotes the forager’s perceptive range or radius of vision

In our model the pdf p(ℓ) of step lengths ℓ is the same for each statistically independent step of the walk. The normalization condition imposes

Notice that a “negative step length” just means that a step is taken to the left (negative) direction. We study the case in which it is equiprobable for the walker to go either to the left or to the right, so that p(ℓ) = p( − ℓ). In addition, we consider the minimum step length as ℓ 0, resulting in p(ℓ) = 0 for | ℓ | < ℓ 0. An important quantity is the radius of vision r v , i.e. the walker’s perceptive range. Whenever its distance to the nearest site is ≤ r v , it goes straight to the target. Events of finding a target actually lead to truncation of steps, as discussed below. In principle, ℓ 0 and r v are independent parameters. However, in some of our calculations we set \(r_{v} =\ell _{0}\). Here we are interested in the scarcity regime of low-food density, λ ≫ r v and λ ≫ ℓ 0, with the forager’s perception about the search landscape being limited. Hence, searches with stochastic character arise naturally.

We define the efficiency η of the search walk as the ratio between the total number of target sites found, N found, and the total distance traveled by the walker, L tot:

By writing \(L_{\mbox{ tot}} = N_{\mbox{ found}}\langle L\rangle\), where ⟨L⟩ denotes the average distance traveled between two successive target sites found, we obtain

In the following, we work out a closed analytical expression for ⟨L⟩ for any probability density p(ℓ). Nevertheless, the focus of this contribution is on asymptotically power-law Lévy distributions [33] of step lengths. In particular, we focus on Lévy walk and not Lévy flight models. In the former models, jumps are not instantaneous but a time interval related to a finite velocity to complete the jump is involved (see Sect. 5).

3 Calculation of < L > and < | l | >

We start by calculating the average distance ⟨L⟩ traversed by a walker starting at a fixed position x 0 = a until reaching one of the borders located at x = 0 and x = λ. In the foraging process this quantity represents the distance traveled between two successively found target sites. Due to the perceptive range of the forager, we demand that \(r_{v} < a < \lambda - r_{v}\). Here we follow the general method developed by Buldyrev et al. in [10, 11].

Let us consider a walker that finds either the boundary target at x = 0 or x = λ after n steps. The distance traveled in this walk is

where | ℓ i | denotes the length of the i-th step. Since the walker is not in free space, the possibility of truncation of steps makes | ℓ i | dependent on the position x i − 1 from which the step i starts. As a consequence, the last (n-th) step depends upon x 0 = a, since x n − 1 depends on x n − 2, which, in turn, depends on x n − 3, and so on, all the way down to x 0. Therefore, we must have \(L_{n} = L_{n}(a)\) as well.

By averaging over all possible walks that finds a target after n steps, we find

Observe now that n can take any integer value, from 1 to ∞, meaning that the targets at x = 0 or x = λ can be found just at the first step or after an infinitely long number of steps. We should also remark that the probability P n of finding a target after n steps is not uniform, being, instead, dependent on n. Thus, when we average over all possible walks with the same starting point x 0 = a in the interval of length λ, we must take into consideration the different weights of walks with distinct n’s, so that

The above equation implicity assumes the normalization condition \(\sum _{n=1}^{\infty }P_{n} = 1\), so to assure that a target site, either at x = 0 or x = λ, is always found at the end. In this sense, we emphasize that ⟨L⟩ can be also interpreted as the average distance traversed by the searcher in the first-passage-time problem to find a boundary target at either x = 0 or x = λ. We return to this point in Sect. 6.

In order to calculate P n we define \(\rho _{n}(x_{n})\) as the pdf to find the walker between x n and \(x_{n} + dx_{n}\) after n steps. Therefore, the probability that the walker has not yet encountered any of the targets after n steps is given by

Conversely, the complementary probability of finding any of the targets in some step n′ ≥ n + 1 is thus

As a consequence, the probability of finding a target precisely after n steps reads

which, by using (7) and dropping the subindexes in the dummy x n and x n − 1 variables of integration, leads to

Note that \(\rho _{n-1}(x) > \rho _{n}(x)\), since the probability that the walker finds one of the targets grows with increasing n. From (10), we thus interpret \(\rho _{n-1}(x) - \rho _{n}(x)\) as a pdf to encounter a target precisely after n steps.

By combining this fact with (6), we find

which can be conveniently broken into two sums:

The integration from r v to λ − r v takes into account all possible starting points x for the last n-th step. By changing the variable in the first sum, m = n − 1, and adding the n = 0 null term to the second sum (note that, by definition, ⟨L n = 0⟩ = 0), we obtain

By using (5) above, we find

To perform the integral (14), we need to work on ρ n (x) first. We note that, in general,

where we have recovered the subindexes to make explicit the positions of the walker after i and i − 1 steps, respectively x i and x i − 1. The above expression sums over all the possibilities of reaching the site x i from the site x i − 1, by performing a step of length \(\vert x_{i} - x_{i-1}\vert \) with probability \(p(x_{i} - x_{i-1})dx_{i-1}\). By recursively applying (15) down to the very first step, we find n integrals, associated to n − 1 steps, from x 0 up to x n − 1, which denotes the starting point of the last n-th step:

Since the initial position x 0 = a is fixed, with \(r_{v} < a < \lambda - r_{v}\), then from (16) for n = 1,

Above, \(\rho _{0}(x_{0})\) is the pdf to find the walker at zero time steps. Since its initial position is a, then we have

where δ denotes Dirac delta function.

Now, by substituting (16) into (14) we obtain

where, once again, we have recovered the notation x → x n from (14). This expression can be put in a much shorter form if one defines the following integral operator [10, 11]:

so that, by comparing with (15), \(\rho _{1}(x_{1}) = [\mathcal{L}\rho _{0}](x_{1})\), \(\rho _{2}(x_{2}) = [\mathcal{L}\rho _{1}](x_{2}) = [\mathcal{L}[\mathcal{L}\rho _{0}]](x_{2}) \equiv [{\mathcal{L}}^{2}\rho _{0}](x_{2})\), and so on. Using this definition, we rewrite (19) as

In formal analogy to Taylor’s series expansion, we write

where \(\mathcal{I}\) denotes the unitary operator: \([\mathcal{I}\rho ](x) = \rho (x)\). Equation (21) thus becomes

which, with the use of (18), leads to [10, 11]

This closed analytical expression is actually essential to determine the efficiency of the search, according to (3).

Now, in order to deal with (24), we need to calculate the average (modulus) length of a single step starting at x 0 = a in the interval of length λ, ⟨ | ℓ | ⟩(a). As discussed, in the presence of target sites at x = 0 and x = λ there is the possibility of truncation of steps. Thus, the usual average in free space, \(\langle \vert \ell\vert \rangle =\int _{ -\infty }^{\infty }\vert \ell\vert p(\ell)d\ell\), which does not depend on the starting position, must be replaced by

valid for \(r_{v} +\ell _{0} \leq a \leq \lambda - r_{v} -\ell_{0}\). The meaning of this expression becomes clearer if we make the change of variable ℓ = x − a is all above integrals, to obtain

The first two integrals represent flights to the left and to the right which are not truncated by the encounter of a target. The third and fourth represent flights truncated by the encounter of the targets, respectively, at x = 0 and x = λ. In fact, due to the perceptive range or radius of vision, these sites are detected as soon as the walker reaches the respective positions x = r v and x = λ − r v . In addition, since p(ℓ) = 0 if | ℓ | < ℓ 0, then ⟨ | ℓ | ⟩(a) is given only by the second, third and fourth (first, third and fourth) integrals in the case \(r_{v} < a \leq r_{v} +\ell _{0}\) \((\lambda - r_{v} -\ell_{0} \leq a < \lambda - r_{v})\).

4 Discrete Space Calculation

The exact formal expression (24) can be numerically solved through a spatial discretization of the continuous range 0 ≤ x ≤ λ. In order to accomplish it, we consider positions x which are multiple of some discretization length Δx, i.e. x = jΔx, with j = 0, 1, …, M and Δx much smaller than any relevant scale of the problem (ℓ 0, r v , λ). In this case, the targets at x = 0 and x = λ are respectively associated with the indexes j = 0 and j = M = λ ∕ Δx (M is the integer number of intervals of length Δx in which the range 0 ≤ x ≤ λ is subdivided). Similarly, we define \(\ell_{0} = m_{0}\Delta x\) and \(r_{v} = m_{r}\Delta x\), with m 0 and m r integers. The continuous limit is recovered by taking Δx → 0 and M → ∞, with λ = MΔx fixed.

Our first aim is to write (16) in the discrete space. First, the set of continuous variables, \(\{x_{0},x_{1},\ldots ,x_{n-1},x_{n}\}\), denoting, respectively, the position of the searcher after {0, 1, …, n − 1, n} steps, must be replaced by the following set of discrete indices: \(\{i_{0},i_{1},\ldots ,i_{n-1},i_{n}\}\), where \(x_{m} = i_{m}\Delta x\). It thus follows that each integral over a continuous space variable must be changed to a sum over the respective discrete index. The probability \(p(x_{m+1} - x_{m})dx_{m}\) of reaching the site x m + 1 from the site x m by performing the (i m + 1)-th step of length \(\vert x_{m+1} - x_{m}\vert = \vert i_{m+1} - i_{m}\vert \Delta x\) should be replaced by the quantity \(a_{i_{m+1},i_{m}}\), to be determined below. With these considerations in mind, (16) can be discretized to

We observe above that \(a_{i_{m},i_{m}} = 0\) and \(a_{i_{m+1},i_{m}} = a_{i_{m},i_{m+1}}\), since the probabilities of step lengths \(x_{m+1} - x_{m}\) and \(x_{m} - x_{m+1}\) are the same. In addition, we have also taken into account that the lower and upper limits of each integral, respectively x = r v and x = λ − r v , represent extreme positions which must not be considered in the above discrete summation, since at either of these sites the walker already detects a target and gets absorbed.

Notice that (27) has the structure of a sequence of matrix products. Indeed, we can regard the quantities a k, j as the matrix elements [A] k, j of a symmetric matrix A, with null diagonal elements and dimension \((M - 2m_{r} - 1) \times (M - 2m_{r} - 1)\) [note that \(M - 2m_{r} - 1 = (M - m_{r} - 1) - (m_{r} + 1) + 1\)]. Accordingly, \([\rho _{m}]_{i_{m}}\) denotes the i m -th element of the column vector ρ m of dimension M − 2m r − 1. Equation (27) can thus be written in the form

We further observe that, since the property \(\int _{r_{v}}^{\lambda -r_{v}}\delta (x - a)dx = 1\) becomes \(\sum _{j=m_{r}+1}^{M-m_{r}-1}\delta _{j,i_{ a}} = 1\) in the discrete limit, with the initial position index defined as i a = a ∕ Δx, then the Dirac delta relates to the Kronecker delta via

as

Observe now that by the same procedure (5) becomes, in the discrete limit,

In this sense, each element \([\langle L\rangle ]_{i_{a}}\) of the column vector ⟨L⟩ of dimension M − 2m r − 1 represents the average distance traversed by the walker starting at a discrete position i a until reaching one of the borders. By substituting (28) above, we obtain

The assignment of the index i a appears explicitly in (32) by using (18) and (29). Summing over i 0 and applying the symmetry property of matrix A we obtain

Finally, by summing over n we get the discrete equivalent of (24):

where we have renamed the dummy index i n simply by i. I is the \((M - 2m_{r} - 1) \times (M - 2m_{r} - 1)\) unity matrix and (I − A) − 1 is the inverse of the matrix (I − A).

In (34) we observe that [⟨ | ℓ | ⟩] i is determined by first calculating ⟨ | ℓ | ⟩(x) in continuous space from (25) or (26), and next applying the discretization of the parameters x, λ, ℓ 0 and r v , according to the previous prescription.

At last, we also need to determine the matrix elements [A] k, j . We observe that [A] k, j is the discrete representation of the probability p(x − x′)dx′ of performing a step of length between | x − x′ | = | k − j | Δx and | x − x′ | + dx′ = ( | k − j | + 1)Δx. Therefore, by considering

its discrete limit implies

and where [A] j, j = 0 and [A] k, j = 0 if | k − j | < m 0 due to the minimum step length ℓ 0. After the matrix elements [A] k, j are calculated for a given pdf p(ℓ) of step lengths, one must invert the matrix (I − A) so to determine the average distance ⟨L⟩ and the search efficiency η, (34) and (2), respectively, for a searcher starting from \(x_{0} = a = i_{a}\Delta x\). In the following we provide explicit calculations for Lévy random searchers.

5 Lévy Random Searchers

In this section we explicit the calculations for a (power-law) Lévy pdf of step lengths.

Our emphasis is on the the mentioned destructive and non-destructive cases, respectively corresponding to set symmetric and asymmetric initial conditions and identified with the starting positions x 0 = a = λ ∕ 2 and \(x_{0} = a = r_{v} + \Delta x\), as discussed.

For Lévy random walks in free space (i.e., with no a priori spatial truncations), the pdf of step lengths reads

where the theta function Θ( | ℓ | − ℓ 0) = 0 if | ℓ | < ℓ 0 and Θ( | ℓ | − ℓ 0) = 1 otherwise, assures the minimum step length ℓ 0. From (1) the normalization constant is given by:

Actually, the power-law dependence of (37) represents the long-range asymptotical limit of the complete family of Lévy stable distributions of index α = μ − 1 [43, 44]. Moreover, as the second moment of pdf (37) diverges for 1 < μ ≤ 3, the central limit theorem does not hold, and anomalous superduffisive dynamics takes place, governed by the generalized central limit theorem. Indeed, Lévy walks and flights in free space are related to a Hurst exponent [43, 44] H > 1 ∕ 2, whereas Brownian behavior (diffusive walks with H = 1 ∕ 2) emerges for μ > 3. In particular, for Lévy walks one finds H = 1 for 1 < μ ≤ 2, with ballistic dynamics emerging as μ → 1 (the case μ = 2 corresponds to the Cauchy distribution). For μ ≤ 1 the function (37) is not normalizable. Therefore, by varying the single parameter μ in (37) the whole range of search dynamics can be accessed (from Brownian to superdiffusive and ballistic).

We emphasize that these results for faster-than-diffusive dynamics hold in free space or, as in the present context, in the free part of the search path between consecutive target encounters. As one considers the total path as a whole, the truncation of steps by the finding of a statistically large number of target sites generates an effective truncated Lévy distribution [30], with finite moments and emergence of a crossover towards normal dynamics, as justified by the central limit theorem. This issue is discussed in more detail in Sect. 6.

By substituting (37) and (38) into (26), we find, for \(r_{v} +\ell _{0} \leq a \leq \lambda - r_{v} -\ell_{0}\),

and

Discrete space expressions associated with (39) and (40) are readily found by following the prescription of Sect. 4:

and

Moreover, as we mentioned in Sect. 3 (see discussion right after (26)), the results for the remaining intervals (\(r_{v} < a \leq r_{v} +\ell _{0}\) and \(\lambda - r_{v} -\ell_{0} \leq a < \lambda - r_{v}\)) can be obtained straightforwardly. Indeed, we quote them below in the continuous and discrete limits. For \(r_{v} < a \leq r_{v} +\ell _{0}\):

and

and their discrete limits:

and

For \(\lambda - r_{v} -\ell_{0} \leq a < \lambda - r_{v}\):

and

and their discrete limits:

and

These small intervals at the extremes of the search space generally only contribute in an important way when small steps are very frequent, as it happens for μ → 3.

Finally, the matrix A is determined by substituting (37) and (38) into (36), so that

with A ii = 0 and A ij = 0 if | i − j | < m 0.

Substitution of the expressions for \(\langle \vert \ell\vert \rangle _{\iota _{0}}\) in the respective intervals into (34), along with (51), leads to \(\langle L\rangle _{\iota _{0}}\) and, therefore, also to the efficiency η, (3), in the case of Lévy searches.

Figure 2a, b display the efficiency of the symmetric (destructive) (a = λ ∕ 2 or ι0 = M ∕ 2) and asymmetric (non-destructive) (a = r v + Δx or \(\iota _{0} = m_{r} + 1\)) cases, respectively.

Search efficiency, η, versus (power-law) Lévy exponent, μ, for both (a) symmetric (destructive) and (b) asymmetric (non-destructive) initial conditions. In each case, the optimal search strategy respectively corresponds to ballistic (μ → 1) and superdiffusive (μ ≈ 2) dynamics. Notice the striking agreement between the analytical Eqs. (3) and (24) or (34) and numerical results. Simulation parameters: Δx = 0. 2, \(r_{v} =\ell _{0} = 1\), λ = 103, a = λ ∕ 2 (symmetric) and a = 2r v (asymmetric)

It is striking the agreement between the analytical Eqs. (3) and (24) or (34) and numerical results. Obtained from simulations which closely resemble the features of the above search model. The optimal search strategy corresponds to ballistic (μ → 1) and superdiffusive (μ ≈ 2) dynamics, for the symmetric and asymmetric conditions respectively, in agreement with previous mathematical approximations [49].

6 Search Diffusivity

One way to characterize the dynamics generated by the search is by determining how the searcher’s root-mean-square (r.m.s.) distance R, defined as function of averages of the forager’s position x,

depends on time t, number of steps N and number of targets found N found. The asymptotic scaling relation,

implies normal (Brownian) diffusion for the diffusion exponent ν = 1 ∕ 2, superdiffusion with ν > 1 ∕ 2, and ballistic dynamics in the case of ν = 1.

Due to the truncation of steps and the long-term prediction of the central limit theorem (see Sects. 3 and 5), we can anticipate that a crossover should occur between two dynamical regimes during a Lévy random search. There is an initial regime with superdiffusive character due to the Lévy pdf of single step lengths, occurring up to the encounter of the first targets [50]. Then, it follows a subsequent Brownian behavior for the overall search trajectory, which, as discussed, is viewed as the concatenated sum of partial paths between consecutive encounters. Indeed, the initial superdiffusive dynamics could not remain as such indefinitely, once the truncated Lévy pdf presents well-defined (finite) first and second moments.

At this point we should also observe that if the typical time scale of the search is smaller than the crossover time then the foraging process appears as effectively superdiffusive [7].

In the following we discuss the dynamics of a Lévy random searcher in both regimes, starting with the initial superdiffusive one.

6.1 Characterizing First-Passage-Time Diffusivity

As discussed in Sect. 3, since finding a target either at x = 0 or x = λ is essentially a mean first-passage-time problem [38], we can initially ask about the r.m.s. distance associated with the encounter events. In other words, we start by considering the quantities ⟨x⟩fpt and \(\langle {x}^{2}\rangle _{\mbox{ fpt}}\), which represent the average positions x and x 2 over all walks departing from x 0 = a and ending either at x = 0 or x = λ by an encounter event. In fact, by taking into account the radius of vision r v , the detection of targets occurs at x = r v and x = λ − r v , respectively, so that we can actually write

and

Above, p 0(a) and p λ(a) denote, respectively, the probabilities for a walker starting at x 0 = a to find the target site at x = 0 or x = λ. Notice that

since an encounter always happens at the end of the process. By substituting (54)–(56) into the expression

we find the correspondent r.m.s. distance of the first time passage at positions x = 0 or x = λ:

It is clear now that the r.m.s. quantities R and R fpt are not the same. In particular, there is no first-passage-time restriction in the calculation of R. Nevertheless, the dynamics of these two quantities are interrelated. As we show below for Lévy random searchers, the diffusion exponent ν is essentially the same for random search walkers restricted to the interval \(r_{v} < x < \lambda - r_{v}\) and random walkers in free space, for which [18, 42]

We should stress, however, that no search activity takes place in free space, due to the absence of target sites.

The result of (58) still demands the knowledge of p 0 and p λ. For such calculation, we consider initially a walker that starts at x 0 = a and reaches the site x = λ after n steps. Following the approach [10, 11] of the preceding sections, we write

This expression can be understood as follows: first, \(\rho _{n-1}(x_{n-1})dx_{n-1}\) represents the probability for the walker to be located in the interval \([x_{n-1},x_{n-1} + dx_{n-1})\) after n − 1 steps; since, up to this point, no target has been found yet, then \(r_{v} < x_{n-1} < \lambda - r_{v}\). Second, we also have to multiply the probability that the next (n-th) step will reach the target at x = λ and terminate the walk; so, \(P(\ell\geq \lambda - r_{v} - x_{n-1})\) gives the probability that the n-th step has length \(\ell\geq \lambda - r_{v} - x_{n-1}\), and thus certainly finds the target at x = λ (recall that steps of length \(\ell> \lambda - r_{v} - x_{n-1}\) end up truncated). Finally, the integral above sums over all possible values of x n − 1, consistently with this reasoning.

Since all walks are statistically independent, the total probability of walks with any number n of steps that start at x 0 = a and terminate at x = λ is simply a sum of p λ, n over all possibilities:

that is,

Now, by changing the variable m = n − 1, we obtain

Note that the above equation is similar to (14). Thus, from the same procedure detailed in Sect. 3, we find [10, 11]

We now take the discrete limit of (64) by following the general procedure described in Sect. 4. First of all, as before, we set x = iΔx, where \(i = m_{r} + 1,\ldots ,M - m_{r} - 1\). Equation (64) thus becomes

where, now, \(p_{\lambda ,\iota _{0}}\) and \(P_{\iota _{0}}\) are (M − 2m r − 1) ×1 column vectors.

To calculate \(P_{\iota _{0}}\) in (65), we write in the continuous limit

which, after integration, should go through the discretization process, leading to \(P_{\iota _{0}}\) as function of the discrete settings for a, λ, r v and ℓ 0. Analogously to the example of Lévy walks in Sect. 5, we obtain in the continuous and discrete limits, respectively,

and

if \(r_{v} < a \leq \lambda - r_{v} -\ell_{0}\) (or \(m_{r} < \iota _{0} \leq M - m_{r} - m_{0}\)), and \(P(\ell\geq \lambda - r_{v} - a) = P_{\iota _{0}} = 1/2\) otherwise. The same protocol can also be used to calculate p 0(a) (or \(p_{0,\iota _{0}}\) in the discrete limit). However, we can always use (56), so that we actually only need to calculate either p λ or p 0.

In the short-term regime (i.e. first-passage-time diffusivity), we must also comment on the possible validity of (58) to times (or number of steps) in which a boundary target has not been reached yet. In fact, although the calculation described in (54)–(58) explicitly refers to the encounter of extreme sites at x = 0 and x = λ, any two sites at positions \(r_{v} < x_{1} < a\) and \(a < x_{2} < \lambda - r_{v}\) can be assumed in the mean first-passage-time formulation. Thus, one can actually “follow” the dynamics of the searcher as it passes for the first time at positions x 1 or x 2, apart each other by a distance \((x_{2} - x_{1})\). In particular, if the ratio between the initial and total distances, \((a - x_{1})/(x_{2} - x_{1}) = (a - r_{v})/(\lambda - 2r_{v})\), is kept fixed, the evolution of R fpt with the average distance traversed can be determined (see below).

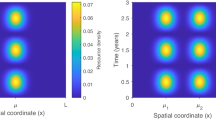

In Fig. 3 we compare the prediction of (58) with results from numerical simulation. By considering unity velocity for the Lévy searcher, the average time to encounter a target is identical to the average distance traversed ⟨L⟩. Thus, we can actually probe the asymptotic relation,

for several distances \((x_{2} - x_{1})\), as discussed above. As Fig. 3 indicates, we have a nice agreement between the analytical and numerical results. Importantly, just as in the case of a Lévy walker in free space [18, 42] (i.e. with no targets present, (59)), we identify ballistic, superdiffusive and Brownian short-term regimes in the respective ranges 1 < μ < 2, 2 < μ < 3 and μ > 3, with (analytical and numerical) diffusion exponents:

R.m.s. distance related to first-passage-time diffusivity, R fpt, defined in (57), versus average distance traveled by the searcher between consecutive encounters, ⟨L⟩, for both (a) symmetric (destructive) and (b) asymmetric (non-destructive) initial conditions. Notice the nice agreement between analytical (solid lines), Eq. (58), and numerical (symbols) results for all values of μ considered. Simulation parameters: Δx = 0. 2, \(r_{v} =\ell _{0} = 1\), λ = 103, a = λ ∕ 2 (symmetric) and a = 2r v (asymmetric). The diffusion exponents ν(μ), defined in (69), assume the values shown in (70), in close agreement with the theoretical prediction [18, 42] for Lévy walks in free space, (59)

Observe that, in this case in which searches and encounters are actually being performed, the effect of hitting the boundaries are more pronounced for intermediate values of μ. Indeed, for μ → 1 and μ → 3 there is a fair agreement between the values of ν given by (59) and (70). On the other hand, for intermediate μ = 2. 5 the value of ν above should be compared with that of the free-space Lévy walker, ν = 0. 75.

6.2 Characterizing Search Dynamics Diffusivity

The dynamics of the long-term regime (i.e., after the encounter of a large number of targets, N found ≫ 1) can be worked through a suitable random-walk mapping. We describe below such approach for the asymmetric (non-destructive) case, in which the walker starts from a fixed distance \(x_{0} = a = r_{v} + \Delta x\) to the closest target, with Δx ≪ r v ≪ λ. Generalization for any x 0 is possible.

We start by recalling that the set of targets are placed at positions x = iλ, where i is an integer (negative, null or positive) number. If the searcher begins the non-destructive walk at \(x_{0} = a = r_{v} + \Delta x\), then it can find either the target at x = 0 or x = λ. When the target at x = λ is encountered, the forager can restart the search walk from x = λ − r v − Δx or x = λ + r v + Δx (in both cases, the distance to the closest site at x = λ remains a = r v + Δx; here we take any of these two possibilities with 50 % probability). After, say, a sequence of N found = 5 encounters, one possible set of visited targets is {λ, λ, 0, − λ, − 2λ}. Notice that after the first target (located at x = λ) is found, the searcher returns to it in the next (i.e. second) encounter, as allowed in the non-destructive case. By recalling that p 0 and p λ denote, respectively, the probabilities to find the closest and farthest targets, and taking into account the radius of vision r v , one generally has four possibilities after the encounter of the first target at x = λ:

-

1.

Restarting from x = λ + r v + Δx and detecting the closest site at x = λ + r v (with probability p 0 ∕ 2).

-

2.

Restarting from x = λ + r v + Δx and detecting the distant site at x = 2λ − r v (with probability p λ ∕ 2).

-

3.

Restarting from x = λ − r v − Δx and detecting the closest site at x = λ − r v (with probability p 0 ∕ 2).

-

4.

Restarting from x = λ − r v − Δx and detecting the distant site at x = r v (with probability p λ ∕ 2).

These events correspond to respective displacements − Δx, (λ − 2r v − Δx), Δx and − (λ − 2r v − Δx). In the limit λ ≫ r v ≫ Δx, the generalization of this result for the possibilities that follow after the encounter of any target leads to a map of the search path onto a distinct random walk, which visits the sites x = iλ with “steps” of approximate length s = − λ, 0 or λ, drawn from the pdf

Now, from the standard theory of random walks [39], with statistically independent steps taken from a pdf of finite first and second moments such as (71), we write the actual r.m.s. distance after N found ≫ 1 “steps” (i.e. N found ≫ 1 targets found) as

where, by using (71), we find ⟨s⟩ = 0, reflecting the equiprobability to move left or right after each encounter, and \(\langle {s}^{2}\rangle = p_{\lambda }{\lambda }^{2}\), so that

Note the presence of Brownian dynamics (diffusion exponent ν = 1 ∕ 2) in the long-term regime, in agreement with the central limit theorem. In 2D or 3D, the rate of convergence to the Brownian diffusive regime may be slower than in 1D. This is so because higher spatial dimensions allow very large steps without encounter truncations. However, if infinite steps would be rigorously allowed, the possibility of non-convergence would exist, even in the long-run. Further analyses are needed to elucidate the robustness of the 1D analysis presented in this section at higher dimensional systems. Also important is to know up to which extent the 1D mapping between random walk steps and target encounters is valid at higher dimensions.

In Fig. 4 we compare the prediction of (73) with results from numerical simulation, with a nice agreement displayed. It is also worth to note that, even though the expected Brownian diffusion exponent, ν = 1 ∕ 2, arises for all values of μ considered, r.m.s. displacement values are larger for Lévy exponents μ closer to the ballistic (μ → 1) strategy.

R.m.s. distance related to the search dynamics diffusivity, R, defined in (52), versus the number of targets found, N found, for asymmetric (non-destructive) initial condition. Notice the nice agreement between analytical (solid lines), (73), and numerical (symbols) results for all values of μ considered, with Brownian diffusion exponent, ν = 1 ∕ 2, as predicted by the central limit theorem (see inset). Simulation details: we let 104 searchers look for 103 targets each. The landscape was configured with 100 targets interspersed by a distance λ. The restarting distance to the last target found is fixed, a = r v + Δx. Simulation parameters: Δx = 0. 2, \(r_{v} =\ell _{0} = 1\) and λ = 103

One last comment regards the connection of the r.m.s. distance, (73), written as function of the number of targets found, with its time dependence. Indeed, as expected from standard theory of random walks [39], both dependences should be asymptotically described by the same diffusion exponent, as in (53). Indeed, this fact can be justified since, on average, the number of targets found grows linearly with time. Therefore, a Brownian search dynamics, R ∼ t 1 ∕ 2, also emerges in the long run.

7 Search in Heterogeneous Landscapes: Distributions of Starting Points

Up to this point, no heterogeneity in the targets landscape has been taken into account. In other words, in each search scenario considered (either asymetric or symmetric), the forager has always restarted the search from the same departing position x 0 = a. Though useful as limiting cases, these situations generally do not correspond to actual search processes, which usually take place in environments with distances to the last target found distributed according to some pdf. Therefore, we now consider (see [37]) a random search model in which diverse degrees of landscape heterogeneity are taken into account by introducing fluctuations in the starting distances to target sites in a 1D search space, with absorbing boundaries separated by the distance λ. The targets’ positions remain fixed at the boundaries of the system. Fluctuations in the starting distances to the targets are introduced by sampling the searcher’s departing position after each encounter from a pdf π(x 0) of initial positions x 0. Importantly, π(x 0) also implies a distribution of starting (a)symmetry conditions with respect to the relative distances between the searcher and the boundary targets.

This approach allows the typification of landscapes that, on average, depress or boost the presence of nearby targets in the search process. Diverse degrees of landscape heterogeneity can thus be achieved through suitable choices of π(x 0).

For example, a pdf providing a distribution of nearly symmetric conditions can be assigned to a landscape with a high degree of homogeneity in the spatial arrangement of targets. In this sense, as discussed in Sect. 2, the destructive search represents the fully symmetric limiting situation, with the searcher’s starting location always equidistant from all boundary targets. On the other hand, a distribution π(x 0) which generates a set of asymmetric conditions is related to a patchy or aggregated landscape. Indeed, in a patchy landscape it is likely that a search process starts with an asymmetric situation in which the distances to the nearest and farthest targets are very dissimilar. Analogously, the non-destructive search corresponds to the highest asymmetric case, in which at every starting search the distance to the closest (farthest) target is minimum (maximum). Finally, a pdf π(x 0) giving rise to an heterogeneous set of initial conditions (combining symmetric and asymmetric situations) can be associated with heterogeneous landscapes of structure in between the homogeneous and patchy cases.

More specifically, the limiting case corresponding to the destructive search can be described by the pdf with fully symmetric initial condition,

This means that every destructive search starts exactly at half distance from the boundary targets, just as considered in the previous sections. In this context, it is possible to introduce fluctuations in x 0 by considering, e.g., a Poisson-like pdf exponentially decaying with the distance to the point at the center of the search space, x 0 = λ ∕ 2:

where \(r_{v} < x_{0} \leq \lambda /2\), A is the normalization constant and \(\pi (x_{0}) = \pi (\lambda - x_{0})\) due to the symmetry of the search space (see also below).

On the other hand, the highest asymmetric (non-destructive) limiting case is represented by

so that every search starts from the point of minimum distance in which the nearest target is undetectable, \(x_{0} = r_{v} + \Delta x\), with Δx ≪ r v , as also previously discussed. Similarly, fluctuations in x 0 regarding this case can be introduced by considering a Poisson-like pdf decreasing with respect to the point \(x_{0} = r_{v}\):

where \(r_{v} < x_{0} \leq \lambda /2\), B is the normalization constant, and \(\pi (x_{0}) = \pi (\lambda - x_{0})\). In (75) and (77), the parameter α controls the range and magnitude of the fluctuations. Actually, the smaller the value of α, the less disperse are the fluctuations around x 0 = λ ∕ 2 and \(x_{0} = r_{v}\), respectively.

In the case that, instead of always departing from the same location after an encounter, the searcher can restart from any initial position x 0 in the range \(r_{v} < x_{0} < \lambda - r_{v}\) chosen from a pdf π(x 0), the fluctuating values of x 0 imply a distribution w(⟨L⟩) of ⟨L⟩(x 0) values. Therefore, the average distance traversed between two successive target sites becomes

where ⟨L⟩min and ⟨L⟩max denote the minimum and maximum values of ⟨L⟩(x 0), respectively. Notice that

Above, the lower symbol in the first integral means that the integrand only contributes to w(⟨L⟩) if \(x_{0}^{{\prime}}\) is associated with a value in the range [⟨L⟩, ⟨L⟩ + d⟨L⟩). Since searches starting at x 0 are statistically indistinguishable from searches starting at λ − x 0 (in both cases the closest and farthest targets are at distances x 0 and λ − x 0 from the starting point), the symmetry of the search space with respect to the position x = λ ∕ 2 implies \(\langle L\rangle (x_{0}) =\langle L\rangle (\lambda - x_{0})\). As a consequence, any given value of ⟨L⟩ can be always obtained for two distinct starting positions x 0, one in each half of the search interval. By denoting these points as \(x_{0}^{{\prime}} = x_{0,A}\) and \(x_{0}^{{\prime}} = x_{0,B}\), with \(r_{v} < x_{0,A} < \lambda /2\) and \(\lambda /2 < x_{0,B} < \lambda - r_{v}\), where \(x_{0,A} = \lambda - x_{0,B}\), we write

which, when substituted into (78), leads to

Next, by dropping the subindices A and B, we obtain

where we have used that \(\pi (x_{0}) = \pi (\lambda - x_{0})\), from which the average efficiency with a distribution of starting positions can be calculated:

or explicitly [37],

In order to analyze the effect of fluctuations in the starting distances, the integral (84) must be evaluated. The detailed calculation of ⟨L⟩(x 0) has been described in Sects. 3 and 4 for any pdf p(ℓ) of step lengths, and in Sect. 5 for Lévy searchers in particular. Nevertheless, no explicit analytic expression for ⟨L⟩(x 0), (24), is known up to the present. This difficulty can be circumvented by successfully performing a multiple regression, so that

as indicated by the nice adjustment shown in Fig. 5c, obtained with N x = 10 and N μ = 8. Thus, the integral (84) can be done using (75) or (77) and (85). Results are respectively displayed in Fig. 5a, b for several values of the parameter α.

(a) Robustness of the ballistic optimal search strategy with respect to fluctuations in the distances to faraway target sites. In the case of Lévy random searchers, the average search efficiency \(\overline{\eta }\), (84), is always highest for μ → 1 (ballistic dynamics), for any value of the parameter α of the Poissonian fluctuations around the maximum allowed distance, x 0 = λ ∕ 2, (75). Cases with uniform and without any (δ-function) fluctuation are also shown. Solid lines are a visual guide. (b) Shift in the optimal search strategy towards an enhanced superdiffusive dynamical regime, as landscapes with distinct degrees of heterogeneity are considered. For Lévy random searchers (solid symbols), \(\overline{\eta }\) is maximized for smaller μopt(α) (faster diffusivity) in the case of wider (larger-α) Poissonian fluctuations in the distances to nearby target sites, (77). Solid lines are a visual guide. Empty symbols locate the maximum \(\overline{\eta }\) obtained from the condition \(f(\mu = \mu _{\mbox{ opt}},\alpha ) = \partial \overline{\langle L\rangle }/\partial \mu \vert _{\mu =\mu _{ \mbox{ opt}}} = 0\), with f(μ, α) given by Eq. (86). (c) Nice adjustment of the average distance ⟨L⟩ traversed between consecutive findings by a Lévy random searcher starting at position x 0. Results were obtained by numerical discretization of (24) (solid lines) and multiple regression (symbols), (85). (d) Determination of the optimal search strategy of Lévy random searchers with Poissonian fluctuations in the distances to nearby targets, (77). The above mentioned condition provides the optimal Lévy exponent, μopt, associated with the strategy of maximum average efficiency. Inset: since strategies with μ ≤ 1 are not allowed (non-normalizable pdf of step lengths), the highest efficiency is always obtained for μ → 1 as fluctuations with α > αcross ≈ 312. 2 are considered, marking the onset of a regime dominated by ballistic optimal search dynamics. We took λ = 103 and r v = 1 in plots (a)–(d)

By considering fluctuations in the starting distances to faraway targets through (75), we notice in Fig. 5a that the efficiency is qualitatively similar to that of the fully symmetric condition, (74). Indeed, in both cases the maximum efficiency is achieved as μ → 1. For 1 < μ < 3 the presence of fluctuations only slightly improves the efficiency. These results indicate that ballistic strategies remain robust to fluctuations in the distribution of faraway targets.

On the other hand, fluctuations in the starting distances to nearby targets, (77), are shown in Fig. 5b to decrease considerably the search efficiency, in comparison to the highest asymmetric case, (76). In this regime, since stronger fluctuations increase the weight of starting positions far from the target at x = 0, the compromising optimal Lévy strategy displays enhanced superdiffusion, observed in the location of the maximum efficiency in Fig. 5b, which shifts from μopt ≈ 2, for the delta pdf and (77) with small α, towards μopt → 1, for larger α (slower decaying π(x 0)). Indeed, both the pdf of (77) with a vanishing α and (76) are very acute at \(x_{0} = r_{v} + \Delta x\).

As even larger values of α are considered, fluctuations in the starting distances to the nearby target become non-local, and (77) approaches the α → ∞ limiting case of the uniform distribution, \(\pi (x_{0}) = {(\lambda - 2r_{v})}^{-1}\) (see Fig. 5b). In this situation, search paths departing from distinct x 0 are equally weighted in (84), so that the dominant contribution to the integral (and to the average efficiency \(\overline{\eta }\) as well) comes from search walks starting at positions near x 0 = λ ∕ 2. Since for these walks the most efficient strategy is ballistic, a crossover from superdiffusive to ballistic optimal searches emerges, induced by such strong fluctuations. Consequently, the efficiency curves for very large α (Fig. 5b) are remarkably similar to that of the fully symmetric case (Fig. 5a).

We can quantify this crossover shift in μopt by defining a function μopt(α) that identifies the location in the μ-axis of the maximum in the efficiency \(\overline{\eta }\), for each curve in Fig. 5b with fixed α. We notice that eventually a compromising solution with μopt(α) > 1 cannot be achieved, and an efficiency function \(\overline{\eta }\) monotonically decreasing with increasing μ arises for α > αcross. In this sense, the value αcross for which such crossover occurs marks the onset of a regime dominated by ballistic optimal search strategies.

The value of μopt for each α can be determined from the condition \(f(\mu = \mu _{\mbox{ opt}},\alpha ) = \partial \overline{\langle L\rangle }/\partial \mu \vert _{\mu =\mu _{ \mbox{ opt}}} = 0\), so that, by considering (77), (84) and (85),

with \(A =\{ 2\alpha {[\exp (-r_{v}/\alpha ) -\exp (-\lambda /(2\alpha ))]\}}^{-1}\). Solutions are displayed in Fig. 5d and also in Fig. 5b as empty symbols, locating the maximum of each efficiency curve. In addition, the crossover value can be determined through \(f(\mu \rightarrow {1}^{+},\alpha = \alpha _{\mbox{ cross}}) = 0\). In the case of the pdf (77), we obtain (Fig. 5d) αcross ≈ 312. 2 for λ = 103 and r v = 1 (regime λ ≫ r v ).

We also note that the scale-dependent interplay between the target density and the range of fluctuations implies a value of αcross which is a function of λ. For instance, a larger λ (i.e., a lower target density) leads to a larger αcross and a broader regime in which superdiffusive Lévy searchers are optimal. In fact, the above qualitative picture holds as long as low target densities are considered.

Moreover, since ballistic strategies lose efficiency in higher dimensional spaces [40] (see Sect. 8), it might be possible that in 2D and 3D the crossover to ballistic dynamics becomes considerably limited. In spite of this, enhanced superdiffusive searches, with 1 < μopt < 2, should still conceivably emerge due to fluctuations in higher-dimensional heterogeneous landscapes.

From these results we conclude that, in the presence of Poissonian-distributed fluctuating starting distances with α ≤ αcross, Lévy search strategies with faster superdiffusive properties, i.e. 1 < μopt ≲ 2, represent optimal compromising solutions. In this sense, as local fluctuations in nearby targets give rise to landscape heterogeneity, Lévy searches with enhanced superdiffusive dynamics actually maximize the search efficiency in aggregate and patchy environments. On the other hand, for strong enough fluctuations with α > αcross, a crossover to the ballistic strategy emerges in order to access efficiently the faraway region where targets are distributed.

A recent numerical study [23], looking at how different starting positions in the target landscape modify optimal Lévy search strategies, found equivalent results. Our more detailed analysis provides further mechanistic explanation linking landscape features with optimal random search strategies.

8 Discussion

Optimal foraging is one of the most extensively studied optimization theory in ecology and evolutionary biology [25, 26, 28, 46, 48]. To fully develop a comprehensive theory, it is necessary to understand separately (but not isolatedly) the contribution and the evolutionary trade-offs of the different components of the foraging process (e.g., search, taxis, patch exploitation, prey handling, digestion times). Indeed, the foraging sequence [45, 46] can be divided in: pre-encounter (search and taxis), and post-encounter (pursuit, handling, digestion, and ingestion) events [8, 12, 45].

The framework developed here is focused exclusively on the search component of the foraging process. SOFT clearly illustrates that neither ballistic nor Lévy strategies should be considered as universal [22, 36], since realistic fluctuations in the targets distribution may induce switches between these two regimes. Most importantly, SOFT shows which basic elements need to be accounted for to perform efficient stochastic searches, and identifies why optimal solutions can change with the environment conditions. In particular, the theory demonstrates that the quantities ⟨L⟩ and ⟨ | ℓ | ⟩ depend on the initial position of the searcher in the landscape (Sect. 3). Indeed, the initial searcher-to-target distances are essential to determine the best stochastic strategies (Sect. 7). When nearby and faraway targets exist, the trade-off between either scanning the closeby targets or exploring new territory for faraway ones needs to be efficiently solved. In such scenarios, stochastic laws governing run and tumble movement patterns come into play and have a clear impact on the search success, with Lévy-like features becoming beneficial [2, 5, 49, 51]. On the other hand, if such a trade-off does not exist, with all the targets being in average faraway, tumbling is not necessary at all, and ballistic strategies emerge as optimal.

In the overall search process, the diffusive properties of the searcher (Sect. 6) need to be considered both between targets (i.e. first passage times) and after encounter dynamics (i.e. flight truncation and reorientation due to encounter). Two regimes exist, a short-term superdiffusive optimal one, between encounters of successive targets, and a long-term Gaussian diffusive one, which describes the sum of the partial paths to find the targets. It is the balance between such diffusivities that sets the conditions for the emergence of particular optimal strategies.

Our main results are expected to hold in larger dimensions [6, 37, 49]. Although the random search problem in 1D is far from a mean field approximation, most of the results are qualitatively valid in higher-dimensions [6]. Indeed, in 2D and 3D the finding of targets does occur with much lower probability: the extra spatial directions yield a larger exploration space, thus smaller efficiencies. However, the straight lines, during single search steps, are radial 1D locomotions, hence, many of the properties observed in a 1D random search are kept at higher dimensions. Moreover, note that the proliferation of directions to go in high dimensional systems, can decrease the effect of fluctuations in target avaliability. In 2D and 3D systems, the influence of π(x 0) on optimal search strategies is expected to be qualitatively similiar but weaker than in 1D systems. Being simple enough to allow a general and complete analysis, the 1D case is thus very useful in establishing maximum limits for the influence of landscape spatio-temporal heterogeneities in random search processes.

Further extensions of the theory should involve a better understanding of the landscape structure contribution to the search efficiency [37] and to the build up of the efficiency curves. In the latter case we can consider the encounter efficiency of nearby and faraway targets separately in order to identify the different contribution of each partial efficiency to the total efficiency. We believe that exciting theoretical advances will be achieved by adequately scaling foraging processes in time and space, seeking a fertile middle ground between the concepts derived from classic OFT and the ones coming from the here presented Stochastic Optimal Foraging Theory (SOFT).

References

D.J. Anderson, Optimal foraging and the traveling salesman. Theor. Popul. Biol. 24, 145–159 (1983)

F. Bartumeus, M.G.E. da Luz, G.M. Viswanathan, J. Catalan, Animal search strategies: a quantitative random-walk analysis. Ecology 86, 3078–3087 (2005)

F. Bartumeus, Lévy processes in animal movement: an evolutionary hypothesis. Fractals 15, 151–162 (2007)

F. Bartumeus, J. Catalan, Optimal search behavior and classic foraging theory. J. Phys. A: Math. Theor. 42, 434002 (2009)

F. Bartumeus, S.A. Levin, Fractal intermittence in locomotion: linking animal behavior to statistical patterns of search. Proc. Natl. Acad. Sci. USA 105, 19072–19077 (2008)

F. Bartumeus, P. Fernández, M.G.E. da Luz, J. Catalan, R.V. Solé, S.A. Levin, Superdiffusion and encounter rates in diluted, low dimensional worlds. Eur. Phys. J. Spec. Top. 157, 157–166 (2008)

F. Bartumeus, J. Catalan, G.M. Viswanathan, E.P. Raposo, M.G.E. da Luz, The influence of turning angles on the success of non-oriented animal searches. J. Theor. Biol. 252, 43–55 (2008)

W.J. Bell, Searching Behaviour: The Behavioural Ecology of Finding Resources (Cambridge University Press, Cambridge, 1991)

O. Bénichou, C. Loverdo, M. Moreau, R. Voituriez, Two-dimensional intermittent search processes: an alternative to Lévy flight strategies. Phys. Rev. E 75, 020102(R) (2006)

S.V. Buldyrev, S. Havlin, A.Ya. Kazakov, M.G.E. da Luz, E.P. Raposo, H.E. Stanley, G.M. Viswanathan, Average time spent by Lévy flights and walks on an interval with absorbing boundaries. Phys. Rev. E 64, 041108 (2001)

S.V. Buldyrev, M. Gitterman, S. Havlin, A.Ya. Kazakov, M.G.E. da Luz, E.P. Raposo, H.E. Stanley, G.M. Viswanathan, Properties of Lévy fights on an interval with absorbing boundaries. Physica A 302, 148–161 (2001)

G.C. Collier, C.K. Rovee-Collier, A comparative analysis of optimal foraging behavior: laboratory simulations, in Foraging Behavior: Ecological, Ethological, and Psychological Approaches, ed. by A.C. Kamil, T.D. Sargent. Garland Series in Ethology (Garland STPM Press, New York, 1981)

I.C. Cuthill, P. Haccou, A. Kacelnick, J.R. Krebs, Y. Iwasa, Starlings exploiting patches: the effect of recent experience on foraging decisions. Anim. Behav. 40, 625–640 (1990)

I.C. Cuthill, P. Haccou, A. Kacelnick, Starlings Sturnus vulgaris exploiting patches: response to long-term changes in travel time. Behav. Ecol. 5, 81–90 (1994)

M.G.E. da Luz, S. Buldyrev, S. Havlin, E. Raposo, H. Stanley, G.M. Viswanathan, Improvements in the statistical approach to random Lévy flight searches. Physica A 295, 89–92 (2001)

M.G.E. da Luz, A. Grosberg, E.P. Raposo, G.M. Viswanathan (eds.), The random search problem: trends and perspectives. J. Phys. A 42(43), 430301 (2009)

A.S. Ferreira, E.P. Raposo, G.M. Viswanathan, M.G.E. da Luz, The influence of the Lévy random search efficiency: fractality and memory effects. Physica A 391, 3234–3246 (2012)

T. Geisel, J. Nierwetberg, A. Zacherl, Accelerated diffusion in Josephson junctions and related chaotic systems. Phys. Rev. Lett. 54, 616–619 (1985)

L.-A. Giraldeau, Solitary foraging strategies, in Behavioural Ecology, ed. by E. Danchin, L.-A. Giraldeau, F. Cézilly (Oxford University Press, Oxford, 2008)

R.F. Green, Bayesian birds: a simple example of Oaten’s stochastic model of optimal foraging. Theor. Popul. Biol. 18, 244–256 (1980)

Y. Iwasa, M. Higashi, N. Iamamura, Prey distribution as a factor determining the choice of optimal foraging strategy. Am. Nat. 117, 710–723 (1981)

A. James, M.J. Planck, R. Brown, Optimizing the encounter rate in biological interactions: ballistic versus Lévy versus Brownian strategies. Phys. Rev. E 78, 051128 (2008)

A. James, M.J. Planck, A.M. Edwards, Assessing Lévy walks as models of animal foraging. J. R. Soc. Interface 8(62), 1233–1247 (2011). doi:10.1098/rsif.2011.0200

A. Kacelnick, I.A. Todd, Psychological mechanisms and the marginal value theorem: effect of variability in travel time on patch exploitation. Anim. behav. 43, 313–322 (1992)

A.C. Kamil, T.D. Sargent (eds.), in Foraging Behavior: Ecological, Ethological, and Psychological Approaches. Garland Series in Ethology (Garland STPM Press, New York, 1981)

A.C. Kamil, J.R. Krebs, H.R. Pulliam (eds.), Foraging Behavior (Plenum Press, New York, 1987)

T. Koren, M.A. Lomholt, A.V. Chechkin, J. Klafter, R. Metzler, Leapover lengths and first passage time statistics for Lévy flights. Phys. Rev. Lett. 99, 160602 (2007)

J.R. Krebs, N.B. Davies, An Introduction to Behavioural Ecology, 3rd edn. (Blackwell, Oxford, 1993)

M.A. Lomholt, T. Koren, R. Metzler, J. Klafter, Lévy strategies in intermittent search processes are advantageous. Proc. Natl. Acad. Sci. USA 105, 1055–1059 (2008)

R.N. Mantegna, H.E. Stanley, Phys. Rev. Lett. 73, 2946–2949 (1994)

J.M. McNamara, Optimal patch use in a stochastic environment. Theor. Popul. Biol. 21, 269–288 (1982)

J.M. McNamara, Memory and the efficient use of information. J. Theor. Biol. 125, 385–395 (1987)

R. Metzler, J. Klafter, The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

A. Oaten, Optimal foraging in patches: a case for stochasticity. Theor. Popul. Biol. 12, 263–285 (1977)

A. Okubo, S.A. Levin, Diffusion and Ecological Problems: Modern Perspectives (Springer, Berlin/Plenum Press, New York, 2001)

M.J. Plank, A. James, Optimal foraging: Lévy pattern or process? J. R. Soc. Interface 5, 1077–1086 (2008)

E.P. Raposo, F. Bartumeus, M.G.E. da Luz, P.J. Ribeiro-Neto, T.A. Souza, G.M. Viswanathan, How landscape heterogeneity frames optimal diffusivity in searching processes. PLOS Comput. Biol. 7(11), e10002233 (2011)

S. Redner, A Guide to First-Passage Processes (Cambridge Univeristy Press, Cambridge, 2001)

F. Reif, Fundamentals of Statistical and Thermal Physics (Chap. 1) (McGraw-Hill, Singapore, 1985)

A.M. Reynolds, F. Bartumeus, Optimising the success of random destructive searches: Lévy walks can outperform ballistic motions. J. Theor. Biol. 260, 98–103 (2009)

A.M. Reynolds, A.D. Smith, R. Menzel, U. Greggers, D.R. Reynolds, J.R. Riley, Displaced honey bees perform optimal scale-free search flights. Ecology 88, 1955–1961 (2007)

M.F. Shlesinger, J. Klafter, Comment on accelerated diffusion in Josephson junctions and related chaotic systems. Phys. Rev. Lett. 54, 2551 (1985)

M.F. Shlesinger, J. Klafter, Lévy walks versus Lévy flights, in On Growth and Form, ed. by H.E. Stanley, N. Ostrowsky (Nijhoff, Dordrecht, 1986)

M.F. Shlesinger, G. Zaslavsky, U. Frisch (eds.), Lévy Flights and Related Topics in Physics (Springer, Berlin, 1995)

T.W. Shoener, Theory of feeding strategies. Ann. Rev. Ecol. Syst. 2, 369–404 (1971)

T.W. Shoener, A brief history of optimal foraging ecology, in Foraging Behavior, ed. by A.C. Kamil, J.R. Krebs, H.R. Pulliam (Plenum Press, New York, 1987)

D.W. Stephens, J.R. Krebs, Foraging Theory (Princeton University Press, Princeton, 1986)

D.W. Stephens, J.S. Brown, R.C. Ydenberg, Foraging: Behavior and Ecology (The University Chicago Press, Chicago, 2007)

G.M. Viswanathan, S. Buldyrev, S. Havlin, M.G.E. da Luz, E. Raposo, H. Stanley, Optimizing the success of random searches. Nature 401, 911–914 (1999)

G.M. Viswanathan, E.P. Raposo, F. Bartumeus, J. Catalan, M.G.E. da Luz, Necessary criterion for distinguishing true superdiffusion from correlated random walk processes. Phys. Rev. E 72, 011111(6) (2005)

G.M. Viswanathan, M.G.E. da Luz, E.P. Raposo, H.E. Stanley, The Physics of Foraging: An Introduction to Random Searches and Biological Encounters (Cambridge University Press, Cambridge, 2011)

Acknowledgements

We thank CNPq, CAPES, FACEPE (Brazilian agencies), the Spanish Ministry of Science and Innovation (RyC-2009-04133 and BFU2010-22337) for financial support. FB specially thanks A. Oltra for helping in the edition of this chapter.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer-Verlag Berlin Heidelberg

About this chapter

Cite this chapter

Bartumeus, F., Raposo, E.P., Viswanathan, G.M., da Luz, M.G.E. (2013). Stochastic Optimal Foraging Theory. In: Lewis, M., Maini, P., Petrovskii, S. (eds) Dispersal, Individual Movement and Spatial Ecology. Lecture Notes in Mathematics(), vol 2071. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-35497-7_1

Download citation

DOI: https://doi.org/10.1007/978-3-642-35497-7_1

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-35496-0

Online ISBN: 978-3-642-35497-7

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)