Abstract

In this chapter, we will describe the theoretical background of noise reduction in medical imaging as well as give some examples of noise reduction methods. To do so, we start with a fundamental description of digital image generation in medical imaging, since we will only focus on digital images and noise reduction by means of digital image processing. In the next part, we will discuss the corresponding processing in general before we describe the approaches typically used mainly based on linear filtering and some new approaches based on nonlinear approaches.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

In this chapter, we will describe the theoretical background of noise reduction in medical imaging as well as give some examples of noise reduction methods. To do so, we start with a fundamental description of digital image generation in medical imaging, since we will only focus on digital images and noise reduction by means of digital image processing. In the next part, we will discuss the corresponding processing in general before we describe the approaches typically used mainly based on linear filtering and some new approaches based on nonlinear approaches.

1 Idealized Model of Digital Image Generation

The simplified model of an imaging system can be characterized by the image space \( \mathbf{D} \), which can be assumed to be two dimensional without a loss of generality, and impulse response \( \varphi \). Let \( \bf D\subset {{\mathbb{R}^2}} \) be squared area of the size \( D\times D \), and let \( \varphi \) be a continuous function of fast decay whose Fourier transform \( \hat{\varphi}({\omega_x},{\omega_y}) \) is supported inside the circle \( \omega_x^2+\omega_y^2=\Omega_0^2 \), i.e., \( \hat{\varphi}({\omega_1},{\omega_2})=0 \) if \( \omega_1^2+\omega_2^2>\Omega_0^2 \). The output \( F:\bf D\to \mathbb {R} \) of the imaging system is modeled as a convolution

where \( f:\mathbf{D}\to \mathbb {R} \) is the input signal. It is supposed that \( f \) is integrable on \( \mathbf{D} \). Then \( F \) can be represented by the Fourier series

where \( {{\hat{F}}_{m,k }}={D^{-2 }}\hat{f}(\delta m,\delta k)\hat{\varphi}(\delta m,\delta k) \) and

Since the impulse response \( \varphi \) is band limited with the bandwidth \( {\Omega_0} \), the series (8.2) can be truncated:

with

and

That is, the output \( F \) is a trigonometric polynomial and, consequently, can be uniquely recovered from its samples (see, e.g., [1]) measured over the rectangular grid \( {{\{({x_m},{y_k})|{x_m}=m\Delta, {y_k}=k\Delta \}}_{{-N\leq m,k\leq N}}}. \) Hence, under certain idealizing assumptions, the continuous image can be identified with its samples.

We refer to the matrix \( {{\{{F_{m,k }}\}}_{{-N\leq m,k\leq N}}} \) as a digital image of the input signal \( f \). In the following, we call the number \( N \) the resolution limit of the imaging system, meaning that \( N\delta \) is the maximal resolution that can be achieved with this system. Clearly, for the case considered here, the value \( N\delta \) coincides with the Nyquist frequency of the imaging system.Footnote 1

Finally, we point out that extending the matrix \( \{{F_{j,n }}\} \) periodically with the period \( M\times M \), \( M=2N+1 \), we can write

which is the standard notation for the discrete 2D Fourier transform.

2 Image Processing

In practice, we do not have to deal with the image \( F \) but with its corrupted version \( I \)

where \( \eta \) is the random value referred to as system noise. For an X-ray imaging system, another substantial noise component, which is due to the Poisson statistics and scattering of the X-ray quanta, is referred to as quantum noise (see, e.g., Chap. 9 of [2]). In view of the fact that the quantum noise is an inherit feature of the input signal \( f \), the representation (8.8) can be replaced by

where \( q \) is the input quantum noise and \( g \) is the desired signal. One of the most important problems of the image processing is to recover \( g \) from the measured image \( I \). It is clear, that this can be done to some degree of accuracy only. The best possible approximation of \( g \) in terms of the mean squared error is given by

where, at the right, there is the Fourier series of \( g \) truncated up to the resolution limit of the given imaging system. Leaving aside details, we mention that the linear problem of recovering unknowns \( {{\hat{g}}_{k,l }} \) from \( I \) is sometimes referred to as the Wiener filtering.Footnote 2

As it follows from (8.9), the problem of recovering \( Ag \) from \( I \) can be looked at as the one consisting of two distinct tasks: the suppression of the noisy component \( q*\varphi +\eta \) (noise reduction) and the deconvolution of blurred image \( g*\varphi \) (de-blurring). In the following, we use this discrimination in two tasks and describe some approaches used for the reduction of noise in the image.

3 Linear Filtering

The general strategy of this approach is to separate in the spectral description of the medical image the frequencies corresponding mainly to signals and those mainly corresponding to noise. Afterwards it is tried to suppress the power of those spectral components which are related to noise. This is done via appropriate weighting of the frequency components of image \( I \). The result of the weighting is the image \( wI \), given by

The set \( {{\{{{\hat{w}}_{l,m }}\}}_{{-N\leq l,m\leq N}}} \) is called transfer matrix. The elements of transfer matrix are samples of the Fourier transform of the filter \( w \), in terms of which the right-hand side of (8.11) can be written as the 2D convolution

In the following, we call function \( w\in {L_2}({{\mathbb {R}}^2}) \) smoothing function if it is continuous and

Due to (8.12) the kernel \( w \) acts upon the image \( I \) as a local weighted averaging, which is uniform for the whole image and is independent from its current space context. Linear filtering is perfect if the power spectrum of the image \( I \) can be separated in two disjoint regions, the one of which contains the spectral component of the useful signal and, the other, of the noise. We illustrate this apparent property in Fig. 8.1, where in the middle, there is an image corrupted with additive noise, the spectral power of which is concentrated outside the circle \( \omega_x^2+\omega_y^2={\Omega^2} \). On the contrary, the spectral power of the useful signal (left) is concentrated inside this circle. The right image is the convolution of the corrupted image with the ideal filter, i.e., the filter, the transfer function of which is

Linear filtering of the band-limited image. Left: the image the power spectrum of which is inside the circle B. Middle: the same image with added noise, the power spectrum of which is outside the circle B. Right: convolution of the middle image with the ideal filter given by (8.14)

Clearly, in this case, the original image and the denoised one are identical. In fact, if the power spectrum of the useful signal is concentrated inside a bounded region of the frequency domain and the signal-to-noise ratio is big enough in this region, then the linear filtering is still excellent independently from the spectral distribution of the noise. The corresponding example is illustrated in Fig. 8.2. On the left of this figure, there is the same image as in Fig. 8.1 with added white noise and, on the right, the result of the filtering with Gaussian filter \( {g_{\varOmega }} \), the transfer function of which is

Linear filtering of the band-limited image. Left: image from Fig. 8.1 with added white noise. Right: the convolution with Gaussian kernel

Thus, the following short conclusion can characterize the linear filtering: it works well if applied to regular signals, i.e., such signals the power spectrum of which is concentrated within a bounded region of low frequencies and it vanishes fast outside this region. The filtering operation in this case is reduced to the suppression of high-frequency components of the image. If the Fourier transform of an image decays slowly as \( |\boldsymbol{ \upomega} |\to \infty \), the linear filtering is not efficient anymore. Since the spectral power of such images is high also in the high-frequency sub-band, suppressing within this sub-band affects the sharpness of edges and destroys fine details of the image. This results in overall blurring of the image (see Fig. 8.3).

There is a variety of filters and corresponding smoothing kernels which are available. However, all rely on the same principle and show similar behavior.

4 Adaptive Nonlinear Filtering

In contrast to methods based on linear filtering, many advanced noise reduction techniques assume a control over the local amount of smoothing. Appropriately choosing a smoothing kernel at the current positions, it is possible to avoid unnecessary blurring in regions of high variation of the useful signal. Usually in practice the desired kernel is chosen from a predefined family of kernels. As an example we consider the family \( \{{g_{\sigma }},\sigma \ge 0\} \), where

and the generating kernel \( g \) is a smoothing kernel [see definition above, (8.13)]. It can easily be seen that for σ > 0, \( {{\hat{g}}_{\sigma }}(0)=1 \), that is, \( {g_{\sigma }} \) is also a smoothing kernel. Besides, \( \mathop{\lim}\limits_{{\sigma \to 0}}F*{g_{\sigma }}(x,y)=F(x,y) \), which implies that \( \mathop{\lim}\limits_{{\sigma \to 0}}{g_{\sigma }}=\delta \), where \( \delta \) is a Dirac delta function. These properties allow to construct the image gF defined by

where we determine \( \sigma \) depending on current position \( (x,y) \). The challenging task is to establish a rule according to which the value \( \sigma \) can be adapted to the current context of the image. Usually, \( \sigma \) is required to change as a function of some decisive characteristic such as the gradient, the Laplacian, the curvature, or some other local characteristic of the image. An example of such filtering with the Gaussian function

as a generating kernel, and the squared deviation \( \xi (x,y) \) as a local decisive characteristic, is given in Fig. 8.4. Particularly for this example, the current value of \( \sigma \) was set to

where

and the function \({h_1}:[0,1]\to [0,1]\) is monotonically increasing from zero to one. For the local squared deviation, we have

where the ball \( {B_r}(x,y) \) is a ball of radius \( r \) located in \( (x,y) \); \( \bar{F}(x,y) \) is the local mean value of \( F \) inside the ball.

Filtering with adaptive bandwidth Gaussian kernel. Left: a patch of the X-ray image shown in Fig. 8.3; middle: the result of the filtering by given tuning parameters \( r \) and \( C=5r \); right: linear filtering with Gaussian kernel by \( \sigma =r \)

The smoothing considered in this example incorporates two tuning parameters, which are the constants \( C \) and \( r \). From (8.19) it follows that \( \sigma \) varies between \( r \) and \( r/(C+1) \), and the type of this variation depends on the type of the growth of the function h 1 defined in (8.20).

Another class of smoothing techniques is the so-called sigma filtering. Here we use smoothing kernels of the following general form:

where \( (x,y) \) is the current update position; \( {g_{\sigma }} \) and \( {w_{\tau }} \) are the smoothing kernels, the effective width of which are \( \sigma \) and \( \tau \), respectively; and the local constant \( C(x,y) \) is determined from the condition

Because of two kernels acting both in the space and the intensity domains, sigma filtering is sometimes referred to as bilateral filtering (see [4]). As it follows from (8.23), the sigma filter is represented by a family of kernels which depends on two scale parameters \( \sigma \) and \( \tau \). The parameter \( \sigma \) determines the radius of the ball \( B(x,y) \) centered in \( (x,y) \). Using the parameter \( \tau \), it has to be decided which values \( I(x-t,y-s) \) inside the ball \( B(x,y) \) have to be weighted down. Normally, these are values which deviate from the value \( I(x,y) \). In other words, the kernel \( w \) generates the local averaging mask that allocates those points within the ball \( B(x,y) \) where image values are close to \( I(x,y) \).

Bilateral filters are especially efficient if applied iteratively. Denoting the filtering operation with \( \rm F \), the iterative chain can formally be represented as \( {\rm F_{{{\sigma_n}{\tau_n}}}}{\rm F_{{{\sigma_{n-1 }}{\tau_{n-1 }}}}}\ldots {\rm F_{{{\sigma_1}{\tau_1}}}} \).

In [5], it has been shown that the best result by an iterative sigma filter can be achieved if \( {\sigma_1}>\ldots >{\sigma_{n-1 }}>{\sigma_n} \) and \( {\tau_1}<\ldots <{\tau_{n-1 }}<{\tau_n} \). It can be shown that such a sequence of filtered images converges to the image which is piecewise constant. As an example, in Fig. 8.5, there are three iterations of bilateral filtering where \( w \) and \( g \) are both Gaussian.

5 Wavelet-Based Nonlinear Filtering

A quite different principle for spatially adaptive noise reduction is based on the reconstruction from selected wavelet coefficients. For the sake of completeness, before describing the related techniques, we will review main points of the theory of the wavelet transform referring mainly to [6] and [7]. In doing so, we will use the terminology of the theory of functions, and therefore, we start by stating formal notations accepted in this theory and used in the text.

\( {C_m} \), \( m\geq 1 \), is a space whose elements are \( m \) times continuously differentiable functions; the space \( {C_0} \) is the space of continuous functions, and \( {C_{-1 }} \), the space of piecewise continuous functions.

Let us have \( j\in \mathbb {Z} \) and \( m\in \mathbb {N} \). As spline space \( S_m^j \), we will call the set of all such functions \( f\in {C_{m-2 }} \), the restriction of which to the interval \( [{2^j}k,{2^j}(k+1)] \) is the algebraic polynomial of order \( m-1 \); the points \( {2^j}k \) are called knots of the spline space \( S_m^j \).

A space with an inner product \( \left\langle {\cdot, \cdot } \right\rangle \) is called Hilbert space. In the following, the norm of element \( a\in H \) is defined by

\( {L_p}(T) \) is a space of functions satisfying \( \int_T {{{{\left| {f(x)} \right|}}^p}} <\infty \). The space \( {L_2}(T) \) is a Hilbert space with the inner product

where \( {b^{*}} \) is a complex conjugate of \( b \).

\( {\ell_p} \) is a set of all infinite sequences \( {{\{{a_k}\}}_{{k\in \mathbb {Z}}}} \) such that \( \sum\nolimits_k {{{{\left| {{a_k}} \right|}}^p}<\infty } \). The space \( {\ell_2} \) is a Hilbert space with the inner product

Span \( {{\{{g_n}\}}_n} \) is the minimal space spanned on the family \( {{\{{g_n}\}}_n} \), i.e., for any \( a\in {\ell_2} \),

Operators that map a Hilbert space \( E \) into Hilbert space \( G \) will be denoted as \( \mathbf{H}:E\to G \). With \( {{\mathbf{H}}^{*}} \), we denote the adjoint of \( \mathbf{H} \), i.e., such that \( {{\left\langle {\mathbf{H}/f,g} \right\rangle}_G}={{\left\langle {f,{{\mathbf{H}}^{*}}g} \right\rangle}_E} \) for any \( f\in E \) and any \( g\in G \). The operator is self-adjoint, if \( \mathbf{H}={{\mathbf{H}}^{*}} \).

A wavelet is a function \( \psi \in {L_2}(\mathbb {R}) \) that necessarily satisfies the condition

Everywhere in the text, it will be supposed that \( \psi \) is real and normalized, i.e., \( {{\left\| \psi \right\|}_{{{L_2}}}}=1 \). Wavelets are “famous” for being well localized both in the space domain and in the frequency domain. The localization properties of wavelets are usually expressed in terms of a so-called space-frequency window. This is a rectangle \( \sigma \times \hat{\sigma} \) in the space-frequency plane with \( \sigma \) and \( \hat{\sigma} \) defined by

The point \( (\bar{x},\bar{\omega}) \) is the center of the space-frequency window of the wavelet. Since \( \psi \) is real, the modulus of its Fourier transform is even, and this is why the integration in (8.31) is made over the positive half axis only.

Wavelet \( \psi \) is called the mother wavelet for the family \( {{\{{\psi_{s,u }}\}}_{{s>0,u\in \mathbb {R}}}} \), where the function

is the dilated and translated version of the mother wavelet \( \psi \). As an example, let us consider the family generated by so-called Mexican hat wavelet

In Fig. 8.6 wavelets \( {\lambda_{1,0 }} \) and \( {\lambda_{5,0 }} \) of this family (left) as well as their Fourier transforms (right) are shown.

Figure 8.7 shows space-frequency windows of these wavelets. One observes that the bigger is \( s \), the wider is the effective width of the wavelet in the space domain and the better is its spectral resolution. In contrary, we obtain better space resolution by smaller \( s \). Since \( {{\hat{\sigma}}_s}=\hat{\sigma}/s \) and \( {\sigma_s}=s\sigma \), the area of the space-frequency window does not change.

The bivariate function

is called a continuous wavelet transform of the function \( f \). Since

the wavelet coefficient \( \left\langle {f,{\psi_{s,u }}} \right\rangle \) by fixed \( s,u \) relates to the local contribution made by \( f \) within the space-frequency window of the wavelet \( {\psi_{s,u }} \).

Besides the space-frequency window, another important characteristic of a wavelet is the number of vanishing moments. A wavelet \( \psi \) is said to have \( m \) vanishing moments if \( \int {{x^k}\psi (x)\mathrm{ d}x} =0 \) for \( 0\leq k< m \). Since

the number of vanishing moments of \( \psi \) is equal to the number of zeros of its Fourier transform at \( \omega =0 \). Using this property, it can be shown that a compactly supported wavelet \( \psi \) has \( m \) vanishing moments if and only if there exists a compactly supported smoothing function \( \theta \) such that

(see Chap. 6 of [7] for details); the corresponding wavelet transform of \( f \) is consequently

where \( {{\bar{\theta}}_s}(t)={\theta_s}(-t) \) and \(\theta_s (t) = s^{-1} \theta (t/s).\) Hence, the number of vanishing moments is crucial in those applications where the local regularity of the signal has to be measured.

The identity

with

is valid and makes sense for any \( f\in {L_2}\mathbb {R} \) if \( {C_{\psi }}<\infty \). Therefore, the wavelet \( \psi \) is called admissible if \( {C_{\psi }}<\infty \). Condition (8.29) formulated above is necessary for \( \psi \) to be admissible. However, assuming that \( \psi \) is “reasonable,” that is, \( \psi \) is such that it makes sense in the practice, this condition is also sufficient.

There are different possibilities to choose wavelets in 2D. For example, this can be a wavelet \( \psi \in {L_2}({{\mathbb {R}}^2}) \) that is radially symmetric. Since the wavelet in this case depends effectively on one variable only, the situation is essentially the same as in the 1D case: the 2D wavelet transform is the function that depends on two parameters, the shift (which is now 2D vector) and the dilation. The reconstruction formula is in this case

It is also possible to choose a 2D wavelet that is not radially symmetric. Such wavelets are often called oriented wavelets. The wavelet transform in this case is a function that depends on three parameters, dilation, translation, and rotation, and the corresponding reconstruction formula is

In practice, one often applies separable 2D wavelets, i.e., a wavelet of the form \( \varphi (t)\psi (s) \), where both \( \varphi \) and \( \psi \) are functions from \( {L_2}(\mathbb {R}) \), and at least the one of which is a wavelet.

The continuous wavelet transform provides an extremely redundant description of the function \( f \). In fact, even a discrete subset of \( {{\{Wf(s,u)\}}_{s,u }} \) can be used to recover functions exactly. A related discrete wavelet transform is \( W{f_{m,n }}=\left\langle {f,{\psi_{m,n }}} \right\rangle \) where

for some fixed positive \( a,b \).

For a wide range of choices for \( \psi \), \( a \) and \( b \) discrete families of wavelets constitute frames of the functional space \( {L_2}(\mathbb {R}) \). The family \( {{\{{g_n}\}}_n} \) is called a frame of the Hilbert space \( H \) if there exist constants \( 0< A\leq B<\infty \) such that

for any \( f\in H \). The equivalency \( \left\langle {f,{g_n}} \right\rangle \equiv 0\Leftrightarrow f=0 \) that follows from this definition implies that frames are complete in \( H \), which means that any function in \( H \) can be approximated by linear combination of frame elements with any degree of accuracy. The frame is called redundant if its elements are linearly dependent.

In general, recovering a function from the frame coefficients is equivalent to the inversion of a self-adjoint operator \( \mathbf{H}:H\to H \) that relates to the frame \( {{\{{g_n}\}}_n} \) of \( H \) via

It is easy to see that the frame condition (8.44) is equivalent to the bounding condition

that holds for any \( f\in H \). This means that the inverse \( {{\mathbf{H}}^{-1 }} \) exists and is stable. Applying \( {{\mathbf{H}}^{-1 }} \) to both sides of (8.45) yields

with

The family \( {{\{{{\sim{g}}_n}\}}_n} \) is called dual frame. Indeed, let us introduce the operator \( \sim{\mathbf{H}} \) that relates to \( {{\{{{\sim{g}}_n}\}}_n} \) via

One directly verifies that \( \sim{\mathbf{H}} ={{\mathbf{H}}^{-1 }} \), and since \( {{\mathbf{H}}^{-1 }} \) is bounded by \( {B^{-1 }} \) and \( {A^{-1 }} \), \( \{{{\sim{g}}_n}\} \) satisfies the frame bounding conditions with the bounds \( {B^{-1 }} \) and \( {A^{-1 }} \). Moreover, the dual of \( {{\{{{\sim{g}}_n}\}}_n} \) is \( {{\{{g_n}\}}_n} \), and therefore,

The inverse of \( \mathbf{H} \) can be determined by means of the auxiliary operator \( \mathbf{R}=\mathbf{I}-2{(A+B)^{-1 }}\mathbf{H} \) where \( \mathbf{I} \) is the identity operator. The operator \( \mathbf{R} \) is self-adjoint and satisfies the bounding condition

which means that \( \left\| \mathbf{R} \right\|<1 \). Then,

In practice, one truncates the series (8.52) up to some N and uses the approximation

It is known (see Chap. 3 of [6]) that

That is, the norm in (8.54) converges exponentially to zero at a rate depending on the value \( (B-A)/(A+B) \). If \( A\approx B \), one can truncate the series (8.52) up to zeroth term avoiding the computation of the dual frame and still have a high quality of reconstruction of arbitrary function.

If \( A=B \), the frame is called tight. In this case, we can rearrange the identity

to the identity

that holds for any \( h\in H \) and any \( f\in H \), and as a result, obtain the inversion formula

that holds for any \( f\in H \) at least in the weak sense.Footnote 3 The simplicity of (8.57) makes tight frames especially interesting in practice. Note that in spite of the similarity between (8.57) and a decomposition in an orthonormal basis, tight frames can be redundant. In the following, we show that a tight frame is either redundant or orthonormal. Indeed, setting \( f={g_k} \) in (8.56), we obtain that for any \( k \), \( \sum\nolimits_n {{\alpha_n}{g_n}} =0 \) where

This means that if \( \{{g_n}\} \) are linearly independent, then \( \left\langle {{g_n},{g_k}} \right\rangle =A{\delta_{n,k }} \), i.e., \( {{\{{g_n}\}}_n} \) is orthonormal. On the other side, if \( \left\| g \right\|_H^2=A \) for all \( n \), then

which is possible only if \( \left\langle {{g_k},{g_n}} \right\rangle =A{\delta_{k,n }} \). It follows that if for some \( n \), \( ||{g_n}||_H^2=C\ne A \), then the tight frame is redundant and \(C \le A\). For a tight frame, elements of which are of the same norm, the value \( A/C \), where \( ||{g_n}||_H^2=C \), can be interpreted as a measure of the redundancy of the frame.

Redundant tight frames can be used for noise reduction. Suppose that the signal \( f \) is measured over a redundant frame \( {{\{{g_n}\}}_n} \), and let the measured coefficients \( {f_n} \) be contaminated with noise, i.e., \( {f_n}=\left\langle {f,{g_n}} \right\rangle +{q_n} \), where \( q \) is a random variable. Let \( \mathbf{U}:H\to {\ell_2} \) be the frame operator

Since the frame is redundant, there exists nontrivial \( c\in {\ell_2} \), so that \( \sum\nolimits_n {{c_n}{g_n}} =0 \). In other words, the orthogonal complement of \( \operatorname{Im}\mathbf{U} \) in \( {\ell_2} \), where we denote the image of the operator \( \mathbf{U} \) as \( \operatorname{Im}\mathbf{U} \), is not empty. Let \( \mathbf{P}:{\ell_2}\to {\ell_2} \) be the orthoprojector onto \( \operatorname{Im}\mathbf{U} \). Then, \( \mathbf{P}(\mathbf{U}f+q)=\mathbf{U}f+\mathbf{P}q \). Decomposing \( q={q_1}+{q_2} \), where \( {q_1}\in \operatorname{Im} \), and \( {q_2}\in {{(\operatorname{Im}\mathbf{U})}^{\bot }} \), where \( {{(\operatorname{Im}\mathbf{U})}^{\bot }} \) is the orthogonal complement of \( \operatorname{Im}\mathbf{U} \) in \( {\ell_2} \), we obtain that \( \mathbf{P}q={q_1} \), which in turn implies that \( {{\left\| {\mathbf{P}q} \right\|}_{{{\ell_2}}}}\leq {{\left\| q \right\|}_{{{\ell_2}}}} \). The following result is due to [7, Chap. 5]: let \( \left\| {{g_n}} \right\|_{{{L_2}}}^2=C \) for all \( n \), and let \( q \) be a zero-mean white noise of variance \( \sigma_q^2 \). Then,

This approach can be applied, e.g., in the edge-based technique used for the determination of the pre-sampling modulation transfer function (MTF) of digital detectors. The setup used within the framework of this technique is depicted in Fig. 8.8, left.

The samples of the edge profile can be thought of to be the coefficients measured over the set \( {{\{\mathrm{ Sinc}(\Omega (x-bn))\}}_n} \), which is the frame of the space of band-limited functions. Usually, the edge profile is highly oversampled. As a consequence, the frame is redundant, and the redundancy factor \( A/C \) is very high. Let \( E \) be the smallest subspace of \( {\ell_2} \) such that \( \operatorname{Im}\mathbf{U}\subset E \). Then, due to (8.61), the orthogonal projection onto \( E \) is supposed to reduce the noisy fraction of the edge profile significantly.

The linear independent frames constitute a special class of families called Riesz basis: the family \( \{{g_n}\} \) is called Riesz basis of \( H \) if \( H=\overline{{\mathrm{ span}\{{g_n}\}}} \) and there exist constants \( A>0 \) and \( B<\infty \) such that

for any \( c\in {\ell_2} \). Note that the equivalency \( \sum\nolimits_n {{c_n}{g_n}} =0\Leftrightarrow c=0 \) that follows from (8.62) implies that elements of the Riesz basis are linearly independent.

For the Riesz basis constituted by integer translates of the function \( g\in {L_2}(\mathbb {R}) \), the condition (8.62) can be written as

Since

one concludes that translates of \( g \) are orthonormal if and only if

In a similar way, it is verified that \( \{g(\cdot -n)\} \) and \( \{\sim{g}(\cdot -n)\} \) are biorthogonal, i.e., \(\left \langle {g (\cdot -n), \sim {g} (\cdot -k)} \right \rangle = {\delta_{n,k }}\), if and only if

Returning to wavelets, we accent that most known wavelet bases in \( {L_2}(\mathbb {R}) \) have been constructed by means of so-called multi-resolution analysis (MRA) formulated first in [8]. The key role within this approach is played by the so-called scaling functions: function \( \varphi \in {L_2}(\mathbb {R}) \) is called a scaling function if for any fixed \( j\in \mathbb {Z} \) the family \( {{\{{\varphi_{j,n }}\}}_n}, \)

is the Riesz basis of the subspace \( {V_j}\subset {L_2}(\mathbb {R}) \), and

-

(i)

$$ {V_j}\subset {V_{j-1 }}. $$

-

(ii)

$$ f(\cdot )\in {V_j}\Leftrightarrow f(2\cdot )\in {V_{j-1 }}. $$

-

(iii)

$$ \overline{{\bigcup\limits_{{j\in \mathbb {Z}}} {{V_j}} }}={L_2}(\mathbb {R}),\bigcap\limits_{{j\in \mathbb {Z}}} {{V_j}} =\{0\}. $$

Three necessary conditions for \( \varphi \) to be a scaling function are:

-

(1)

The Fourier transform \( \hat{\varphi} \) must satisfy (8.63).

-

(2)

It satisfies the two-scale relation

$$ \varphi =\sum\limits_n {{h_n}{\varphi_{-1,n }}}. $$(8.68) -

(3)

$$ \hat{\varphi}(0)\ne 0. $$

In particular from (3), it follows that \( \varphi \) is a smoothing function. Therefore, \( {V_j} \) is called approximation, and the ladder \( \ldots \subset {V_{j+1 }}\subset {V_j}\subset {V_{j-1 }}\subset \ldots \) as a multi-resolution approximation of \( {L_2}(\mathbb {R}) \).

Suppose that the Fourier transform of the scaling function \( \varphi \) satisfies (8.65), i.e., its integer translates are orthonormal. Let \( {W_0} \) be the orthogonal compliment of \( {V_0} \) in \( {V_{-1 }} \), that is, \( {V_{-1 }}={V_0}\oplus {W_0} \). Then there exists \( \psi \in {L_2}(\mathbb {R}) \), the integer translates of which constitute a basis of \( {W_0} \). Moreover, \( \psi \) is necessarily a wavelet, the Fourier transform of which satisfies (8.65). In order to show this, let us rewrite the two-scale relation (8.68) in the Fourier domain:

where

is \( 2\pi \) periodic. The orthonormality of \( \varphi (\cdot -n) \) implies the condition

which can be checked using (8.65). For any \( f\in {W_0} \), the inclusion \( f\in {V_{-1 }} \) takes place, and consequently, there exists \( a\in {\ell_2}, \) so that \( f=\sum\nolimits_n {{a_n}{\varphi_{-1,n }}} \), or in the Fourier domain

where \( \hat{a}(\omega )=\sum\nolimits_n {{a_n}{{\mathrm{ e}}^{{-\mathrm{ i}\omega n}}}} \) is \( 2\pi \) periodic. The condition \( {W_0}\bot {V_0} \) implies that \( \left\langle {f,\varphi (\cdot -k)} \right\rangle =0 \) for any \( k\in \mathbb {Z} \), which in turn leads to the identity

The possible solution of (8.73) is

where \( \hat{v} \) is any 2π-periodic function. Substitution of (8.74) into (8.72) yields

or equivalently

where \( \psi \) is the function, the Fourier transform of which is

Hence, we have shown that there exists \( \psi \), translates of which constitute the basis in \( {W_0} \). In order to see that \( \psi \) is a wavelet, note first that from (8.69), it follows that \( \hat{h}(0)=\sqrt{2} \). Together with (8.71), this implies \( \hat{h}(\pi )=0 \). Using this in (8.77) and taking into account that \( \hat{\varphi}(0)\ne 0 \) yields \( \hat{\psi}(0)=0.\) That is, \( \psi \) is a wavelet (see condition (8.29)). The orthonormality of its translates can be checked by means of (8.65). Substituting (8.77) into (8.65), we obtain that

Representing the sum on the right-hand side of (8.78) by two sums over odd and even indices, using (8.71) and accounting for the \( 2\pi \) periodicity of \( \hat{h} \), we obtain

that is, \( {{\left\{ {\psi (\cdot -n)} \right\}}_n} \) is an orthonormal basis of \( {W_0} \). This basis is not unique. Any \( \lambda \) defined by

where \( \hat{\alpha} \) is \( 2\pi \) periodic and \( |\hat{\alpha}(\omega )|\equiv 1 \), is a wavelet whose integer translates constitute the orthonormal basis in \( {W_0} \). Indeed, \( \hat{\lambda}(0)=0 \); moreover,

In fact, any two functions, integer translates of which constitute orthogonal bases in \( {W_0} \), relate to each other via (8.80) (see Chap. 8 of [6]). Therefore,

where \( \hat{\alpha} \) is \( 2\pi \) periodic and \( |\hat{\alpha}(\omega )|\equiv 1 \), characterizes all possible wavelets whose translates constitute the orthonormal basis in \( {W_0} \). The choice of \( \hat{\alpha} \) substantiates the wavelet. Usually, one sets \( \hat{\alpha}(\omega )={{\mathrm{ e}}^{{-\mathrm{ i}\omega /2}}} \). Then

where

is the transfer function of coefficients of the decomposition of \( \psi \) in the basis of \( {V_{-1 }} \), that is,

with

From (i) and (ii) of MRA, it follows that the decomposition

is valid for any \( j \). Together with (iii), this yields

Since \( {{\{{\psi_{j,k }}\}}_k} \) with

is the orthonormal basis of \( {W_j} \), the whole family \( {{\{{\psi_{j,k }}\}}_{j,k }} \) is the orthonormal basis of \( {L_2}(\mathbb {R}) \).

In order to construct the orthonormal wavelet basis in \( {L_2}({{\mathbb {R}}^2}) \), we first notice that the family \( {{\{\varphi (\cdot -l)\varphi (\cdot -m)\}}_{l,m }} \) is the orthonormal basis of \( V_0^2={V_0}\otimes {V_0} \). It is also easy to see that for any \( j, \) the family \( {{\{{2^{-j }}\varphi ({2^{-j }}\cdot -n)\varphi ({2^{-j }}\cdot -k)\}}_{n,k }} \) is the orthonormal basis of \( V_j^2={V_j}\otimes {V_j} \) and \( V_j^2\subset V_{j-1}^2 .\) Using the fact that the set of all functions of view \( f(x)g(y) \) is dense in \( {L_2}({{\mathbb {R}}^2}) \), we conclude that subspaces \( V_j^2 \) constitute multi-resolution approximation of \( {L_2}({{\mathbb {R}}^2}) \) in the sense (i)–(iii). Then, representing \( V_{j-1}^2 \) by the orthogonal sum

and recalling that \( {V_{j-1 }}={V_j}\oplus {W_j} \), we obtain

which yields

Taking into account that \( {L_2}({{\mathbb {R}}^2})=\mathop{\oplus}\limits_jW_j^2, \) we conclude that the family \( {{\{\psi_{j,k}^1,\psi_{j,k}^2,\psi_{j,k}^3\}}_{{j\in \mathbb {Z},k\in {{\mathbb {Z}}^2}}}} \), where \( \psi_{j,k}^i={2^{-j }}{\psi^i}({2^{-j }}x-{k_1},{2^{-j }}y-{k_2}) \), and

constitutes the orthonormal basis in \( {L_2}({{\mathbb {R}}^2}) \).

MRA allows to fulfill the wavelet transformation of \( f \) by means of the cascade filter bank algorithm. We describe this algorithm for 1D. The extension to 2D is straightforward.

Rescaling (8.68) and (8.85) to \( {\varphi_{{j,k}}}\sum\nolimits_n {{h_{n-2k }}{\varphi_{{j-1,n}}}} \) and to \( {\psi_{{j,k}}}\sum\nolimits_n {{g_{n-2k }}{\varphi_{{j-1,n}}}} \), respectively, and denoting \( {f_{j,k }}=\left\langle {f,{\varphi_{j,k }}} \right\rangle \), \( {d_{j,k }}=\left\langle {f,{\psi_{j,k }}} \right\rangle \), one obtains the filter bank decomposition at step \( j \):

On the other hand, denoting with \( \mathbf{P}A \) the operator of the orthogonal projection onto the subspace \( A \), we can write

Therefore, for any \( f\in {L_2}(\mathbb {R}) \),

Building the inner product of both sides of (8.98) with \( {\varphi_{j-1,k }} \) yields the reconstruction formula

which is fulfilled recursively down to \( j=0 \).

Let us consider an example of constructing orthonormal bases with box splines \( {{\mathcal{B}}_m} \). Per definition, \( {{\mathcal{B}}_m} \) is the \( m-1 \) times convolution of \( {{\mathcal{B}}_1} \) with itself, and

For \( {{\mathcal{B}}_m} \), it is known that its integer translates constitute Riesz basis of \( V_0^m=\overline{{{L_2}(\mathbb {R})\cap S_m^0}} \) with the Riesz-bounds \( {A_m} \) and \( {B_m} \)

(see, e.g., Chap. 4 of [9]). The two-scale relation for \( {\rm B_m} \) is known to be

Since \( {{\hat{\mathcal{B}}}_m}(\omega )={{({{\hat{\mathcal{B}}}_1}(\omega ))}^{m-1 }} \) and

we obtain that \( {{\hat{\mathcal{B}}}_m}(0)=1 \). That is, \( {{\mathcal{B}}_m} \) is a scaling function that generates a multi-resolution approximation \( \ldots \subset V_j^m\subset V_{j-1}^m\subset \ldots \), where \( V_j^m=\overline{{{L_2}(\mathbb {R})\cap S_m^j}} \).

By \( m=1 \), the coefficients of the two-scale relation (8.102) are \( {h_0}={h_1}={2^{-1/2 }} \). The wavelet associated with \( {{\mathcal{B}}_1} \), constructed with the help of (8.85) and (8.86), is

This is the well-known Haar wavelet. Since Riesz-bounds \( {A_1}={B_1}=1 \), the translates \( {{\mathcal{B}}_1}(\cdot -n) \) are orthonormal (see above (8.63) and (8.65), and consequently so are the translates \( \psi (\cdot -n) \). The whole family \( {{\{{2^{-j/2 }}\psi ({2^{-j }}\cdot -n)\}}_{{j,n}}} \) is the orthonormal basis of \( {L_2}(\mathbb {R}) \).

As it follows from (8.101), the lower Riesz-bound \( {A_m} \) decays with \( m \). Therefore, \( {A_m}<1 \) for any \( m>1 \), and as a consequence, the integer translates of \( {{\mathcal{B}}_m} \) are not orthonormal. Applying to \( {{\mathcal{B}}_m} \) the orthogonalization trick

one obtains functions \( \mathcal{B}_m^{\#} \) which satisfy (8.65), and as a consequence, their integer translates are orthonormal. However, these functions are no more compactly supported, and neither are the associated wavelets called Battle-Lemarié wavelets.

In practical applications, numerically advantageous compactly supported wavelets are preferable. In applications where the perceptual assessment of the signal is important, e.g., medical imaging, the symmetry of wavelets is another important feature of preference: applying the asymmetric wavelets can yield asymmetric errors,Footnote 4 and it is known that the human visual system is less tolerant to asymmetric errors than to symmetric ones. Additionally, symmetric or antisymmetric wavelets are more efficient while treating boundaries of the compactly supported signal, such as an image. For such applications, the basis of \( {L_2}(T) \), where \( T\subset \mathbb {R}^n \) is supposed to be the support of the signal, has to be constructed. Normally this is done by modifying wavelets of the basis of \( {L_2}({{\mathbb {R}}^n}) \): wavelets, the support of which is entirely inside \( T \) are not changed; the wavelets, the support of which is entirely outside \( T \) are skipped; and those whose support overlaps the boundaries of \( T \) are modified. There are different ways to modify the boundary wavelets (see, e.g., Chap. 7 of [7]). For symmetric or antisymmetric wavelets, boundary wavelets are simply folded back away from boundaries. The decomposition in the so-obtained basis is equivalent to the decomposition of the signal that is symmetrically extended beyond \( T \). An example of such extension for 1D signal supported on \( T=[a,b] \) is given in Fig. 8.9.

It is well known (see, e.g., [6]) that constructing the orthonormal basis from compactly supported wavelet is possible only if the wavelet is asymmetric. The exception is the discontinuous Haar wavelet. For any continuous compactly supported wavelet, the symmetry and orthonormality are inconsistent. But compactly supported symmetric or antisymmetric wavelets can be used to construct biorthogonal bases of \( {L_2}(\mathbb {R}) \).

For the following we accept (without proof) several important facts about compactly supported scaling functions. Let \( h \) be the filter the transfer function of which is the trigonometric polynomial

In other words, \( h \) is a finite impulse response (FIR) filter, i.e., the filter finitely many taps of which are not zero. Let in addition

Then \( \varphi \), the Fourier transform of which is

is a compactly supported square integrable scaling function. The function defined by (8.108) will be referred to as the one related to \( h \). A pair of FIR low-pass filters \( h \) and \( \sim{h} \), both satisfying (8.107), are called dual if the families \( {{\{\varphi (\cdot -n)\}}_n} \) and \( {{\{\sim\varphi (\cdot -n)\}}_n} \), where \( \varphi \) and \( \sim{\varphi} \) are related scaling functions, are biorthogonal. The necessary condition for \( h \) and \( \sim{h} \) to be dual is

(for sufficient conditions, see [10]).

The multi-resolution analysis can be reformulated in terms of biorthogonal bases. Let \( h \) and \( \sim{h} \) be dual filters, and let the related scaling functions generate multi-scale approximations of \( {L_2}(\mathbb {R}) \)

and

respectively.

Denote with \( {W_j} \) the subspace that completes the subspace \( {V_j} \) in \( {V_{j-1 }} \) and with \( {{\sim{W}}_j} \) the complement subspace of \( {{\sim{V}}_j} \). Apparently \( {W_0}\bot {{\sim{V}}_0} \) and \( {{\sim{W}}_0}\bot {V_0} \). In the similar way as we did in the orthonormal case, but using (8.66) instead of (8.65), it is possible to show that there exist biorthogonal Riesz bases of \( {W_0} \) and \( {{\sim{W}}_0} \) that are constituted with integer translates of wavelets \( \psi \) and \( \sim{\psi} \), the Fourier transform of which are

with \( \hat{\alpha} \) and \( \hat{\beta} \) being \( 2\pi \) periodic, \( |\hat{\alpha}(\omega )|\equiv 1 \), \( |\hat{\beta}(\omega )|\equiv 1 \), and \( \hat{\alpha}(\omega )\hat{\beta}{{(\omega )}^{*}}\equiv 1 \). The families \( {{\{{2^{-j/2 }}\psi ({2^{-j }}\cdot -n)\}}_{j,n }} \) and \( {{\{{2^{-j/2 }}\sim{\psi}({2^{-j }}\cdot -n)\}}_{j,n }} \) are biorthogonal Riesz bases of \( {L_2}(\mathbb {R}). \)

For \( \hat{\alpha}(\omega )={{\mathrm{ e}}^{{-\mathrm{ i}\omega /2}}} \), the equalities (8.112) and (8.113) in the space domain are

with

The extension to the 2D case is made in a similar way as we did it in the orthonormal case. One defines three wavelets \( {\psi^1} \), \( {\psi^2} \), and \( {\psi^3} \) exactly as in (8.93)–(8.95), which in this case generate the Riesz basis of \( {L_2}({{\mathbb {R}}^2}) \). The wavelets \( {{\sim{\psi}}^1}(x,y)=\sim{\varphi}(x)\sim{\psi}(y) \), \( {{\sim{\psi}}^2}(x,y)=\sim{\psi}(x)\sim{\varphi}(y) \), and \( {{\sim{\psi}}^3}(x,y)=\sim{\psi}(x)\sim{\psi}(y) \) generate the dual Riesz basis of \( {L_2}({{\mathbb {R}}^2}) \).

In the same way as in the orthonormal case, the two-scale relation on the one hand and the relations (8.114) on the other imply the fast filter bank algorithm of the wavelet transform of \( f \):

In order to obtain the reconstruction formula, let us introduce the operator

where \( {{\{{\lambda_k}\}}_k} \), \( {{\{{{\sim{\lambda}}_k}\}}_k} \) are biorthonormal Riesz bases of the subspace \( V \) and \( \sim{V} \), respectively. The operator \( {{\mathbf{P}}_V} \) is an orthoprojector. Since the families \( {{\{{\varphi_{j,n }},{\psi_{j,n }}\}}_n} \) and \( {{\{{{\sim{\varphi}}_{j,n }},{{\sim{\psi}}_{j,n }}\}}_n} \) are biorthogonal Riesz bases of \( {V_{j-1 }} \) and \( {{\sim{V}}_{j-1 }} \), respectively, we obtain that \( \mathbf{P}{V_{j-1 }}=\mathbf{P}{V_j}+\mathbf{P}{W_j} \), that is, for any \( f\in {L_2}(\mathbb {R}) \), the identity

is valid. The inner product of (8.119) with \( {\varphi_{j-1,k }} \) yields the reconstruction formula

We conclude our review by considering the redundant dyadic wavelet transform that is defined by

where \( {\psi_j}(x)={2^{-j/2 }}\psi ({2^{-j }}x) \). In a similar way as we did before, let us introduce the self-adjoint operator \( \mathbf{H}:{L_2}(\mathbb {R})\to {L_2}(\mathbb {R}) \), defined by

If there exist constants \( 0< A\leq B<\infty \) such that the bounding condition

holds for all \( f\in {L_2}(\mathbb {R}) \), then the operator \( \mathbf{H} \) is invertible with the inverse \( {{\mathbf{H}}^{-1 }} \) bounded by \( {B^{-1 }} \) and \( {A^{-1 }} \). The sufficient condition for \( \mathbf{H} \) to satisfy (8.123) is

In order to see this, the inequality (8.124) has to be multiplied by \( |\hat{f}(\omega ){|^2} \) and integrated over \( \omega \). Then, using the representation

and taking into account the Parseval’s identity, one obtains

which is another equivalent notation of (8.123).

Applying \( {{\mathbf{H}}^{-1 }} \) to both sides of (8.122) yields

where \( \sim{\psi}={{\mathbf{H}}^{-1 }}\psi \) is called a dual wavelet. In order to determine \( {{\mathbf{H}}^{-1 }} \), one has to notice that

that is, in the Fourier domain, the operator \( \mathbf{H} \) is associated with the operator \( \hat{\mathbf{H}} \), which is simply the multiplicator \( \sum\limits_j {|\hat{\psi}({2^j}\omega ){|^2}} \). Therefore,

and consequently,

which makes sense due to (8.124). In fact, any \( \sim{\psi} \) satisfying

satisfies also (8.127). This follows from the fact that in the Fourier domain, the relation (8.127) is represented by

Let \( h \) and \( g \) be a pair of FIR filters, and let \( \hat{h}(0)=\sqrt{2} \). Let \( \varphi \) be the scaling function that relates to \( h \) via (8.108). Then, apparently,

The Fourier transform of the corresponding wavelet is defined by

Let \( \sim{h} \) and \( \sim{g} \) be, in turn, another pair of FIR filters, and \( \sim{\varphi} \) and \( \sim{\psi} \) are the related scaling function and the corresponding wavelet. Since both \( \varphi \) and \( \sim{\varphi} \) are compactly supported, they are from \( {L_1}(\mathbb {R})\cap {L_2}(\mathbb {R}) \). Therefore, their Fourier transforms are continuous. This fact can be used to show that the condition

is sufficient for \( \psi \) and \( \sim{\psi} \) to satisfy (8.131).

Hence, given \( h \) and \( g \), one has a certain freedom in choosing filters \( \sim{h} \) and \( \sim{g} \). For example, one can set \( \sim{h}=h \). Then from (8.135), we obtain that

The relation (8.136) imposes certain restrictions on g. Namely, zeros of \( \hat{g} \) must coincide with zeros of \( 2-|\hat{h}(\omega ){|^2} \) and be of the corresponding order. In particular at \( \omega =0 \), there must be one zero of \( \hat{g} \). Since the number of zeros of \( \hat{g} \) at \( \omega =0 \) coincides with the number of zeros of \( \hat{\psi} \) at \( \omega =0 \), it follows that the corresponding wavelet \( \psi \) must have one vanishing moment.

A described strategy was applied in [11] for the construction of the so-called Mallat-wavelet. Redundant wavelet family generated with this wavelet has been proven to be efficient for the reconstruction of the image from the wavelet coefficients measured over multi-scale edges. The starting point while constructing the wavelet in [11] was to set

where \( {{\mathcal{B}}_3} \) is the box spline of degree 2. The Fourier transform of \( \varphi \) is

We know that \( {{\mathcal{B}}_3} \) satisfies the two-scale relation (see (8.102)), and so consequently does \( \varphi \). That is, there exists a filter \( h \) such that \( \varphi =\sum\nolimits_n {{h_n}{\varphi_{-1,n }}} \). The transfer function of \( h \) is yielded from (8.133):

Let us turn our attention to the fact, that the Mallat-wavelet was constructed using the additional requirement to be efficient for the detection of edges. From (8.134) and the fact that both \( g \) and \( h \) are FIR filter, it follows that \( \psi \) is compactly supported. Since \( \psi \) defined by (8.136) must have one vanishing moment, then due to (8.37), there must exist compactly supported smoothing function \( \theta \) such that

Due to (8.38), we obtain then that \( W{f_j}(u)={2^j}\tfrac{\mathrm{ d}}{{\mathrm{ d}t}}f*{{\bar{\theta}}_j}(t) \), so that local maxima of \( |W{f_j}(u)| \) are exactly local maxima of \( |\mathrm{ d}/\mathrm{ d}x(f*{{\bar{\theta}}_j})| \). The latter are edge points within Canny’s approach of an edge detection [12]. Hence, the desired wavelet is determined by the choice of \( \theta \). For example, one can set

However, this choice leads to a piecewise linear wavelet. Since the regularity of the wavelet is crucial for the quality of reconstruction, in [11], \( \theta \) was set to

The Fourier transform of the wavelet \( \psi =-{\theta}^{\prime} \), referred to as the Mallat-wavelet, is

The transfer function of the corresponding filter \( g \), obtained using (8.134) and (8.138), is

Finally using (8.136), for the transfer function of the reconstruction filter \( \sim{g} \), one obtains

Using pairs of filters \( h,g \) and \( \sim{h},\sim{g} \), a fast dyadic wavelet transform can be calculated with a filter bank algorithm called algorithme à trous [13]. The corresponding filter bank algorithm in 2D is

where \( \alpha \beta (m,n) \) is a separable two-dimensional filter, i.e., \( \alpha \beta (m,n)=\alpha (m)\beta (n) \); \( \delta (n) \) is a discrete Dirac; and \( {\alpha_j} \) is the filter obtained from \( \alpha \) by inserting \( {2^j}-1 \) zeros (trous) between its samples. The reconstruction is made recursively by means of

where \( \sim{g} \) is defined by (8.136), and for \( \sim{q} \), we have

(see [11]).

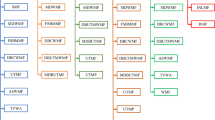

We have described several of the most often applied wavelet decomposition-reconstruction frameworks. All of them can be implemented with fast filter bank algorithms, the common principal scheme of which is depicted in Fig. 8.10.

In the following, we give some heuristic considerations concerning the question of how these schemes can be used for the reduction of noise. As one can see in Fig. 8.10, during the analysis step, the coarse approximation \( {I_{j-1 }} \) is further decomposed in the coarser approximation \( {I_j} \) and the details image \( {d_j} \) that contains the wavelet coefficients \( \left\langle {I,{\psi_{j,k }}} \right\rangle \). Due to the localization properties of wavelets, one can change the wavelet coefficients within some region \( R \) of the image \( {d_j} \), not bothering much about what effect this would have for those structures of the synthesized image \( {{\sim{I}}_{j-1 }} \) which lie apart from \( R \). If, for example, we want to reproduce the region \( R \) of the image \( {I_j} \) in the image \( {{\sim{I}}_{j-1 }} \), we should suppress all details of \( {d_j} \) located in \( R \), that is, the corresponding wavelet coefficients have to be set to zero. In order to suppress noisy details of the image \( {I_{j-1 }} \), we need to know which detail is due to noise.

There are pretty different scenarios to decide whether (or not) the given wavelet coefficient is due to noise. We mention an approach developed in [14]. The idea lying in the background of the method proposed there is to extract relevant details by means of comparative analysis of two or several images of the same object. It will be not quite far from the truth to say, that the comparative analysis is what the radiologist does while investigating the image. Evaluating the X-ray image, the observer automatically extracts features which he qualifies as relevant. Speaking figuratively, the skilled observer correlates a real image with an imaginary one that was created by his visual system. Motivated by the wish to facilitate the daily task of the radiologist, the authors have decided to apply the “correlation approach” in clinical practice. As soon as we have two images of the same object, we can define as relevant those details, which are present in both of them. The other details are qualified as noise and suppressed. In [14] the results of the method applied to quasi identical as well as to those which are slightly deformed relative to each other were reported. The technical aspect of the method is as follows. The both images, say A and B, are decomposed using the dyadic wavelet frame generated by the Mallat mother wavelet. The reason for the choice of this wavelet is the shift-invariance of the related wavelet transform. After the decomposition, details of image A and image B are correlated with each other at each resolution level. The result of the correlation is the weighting matrix, each element of which expresses a measure of similarity between the detail of image A and the detail of image B at the corresponding location. Denoting the measure of similarity with \( w_{m,k}^j \), where \( j \) is the resolution level and \( (m,k) \) the location within the image, we have

If \( w_{m,k}^j=1 \), details of A and B at the level \( j \) and location \( (m,n) \) coincide; the case \( w_{m,k}^j=0 \) means that the corresponding detail is due to noise; the intermediate cases should point at the portion of the noise at the location. Here we demonstrate the functionality of the method applying it to images of a lung phantom, the patch from the one of which is shown on the left of Fig. 8.4. Figure 8.11 shows weighting matrices of the first two resolution levels.

During the following step, elements of detail images are weighted by elements of corresponding weighting matrices. The final image is reconstructed from weighted coefficients. The result of the reconstruction is shown on the right-hand side of Fig. 8.12.

Denoising of the lung phantom. Left: one of the two images of the same object. Middle: the sum of both images. Right: the image reconstructed from details weighted with masks shown in Fig. 8.11

In spite of the seemingly disadvantage expressed by the necessity to have two or more images, the method provides a reduction of noise with minimal risk to lose useful information. In practice, this method could be applied to two images each acquired at half doses, for example, by means of detector based on CCD camera.

In this chapter, we described the mathematical foundations of image denoising and its practical implications. The basic principles are also valid for three-dimensional imaging and can in general be performed in all types of clinical diagnostic imaging. In nuclear medical imaging, the consequences of image denoising have to be studied in very much detail due to the fact that in nuclear medical imaging, the signal-to-noise ratio of the raw data is very poor to avoid high doses of radiation to the patient. This implies that unreflected use of denoising methods particularly also those based on compressing information can result in mainly reducing relevant image structures together with or even instead of the noise. Which kind of noise reduction can be used without deteriorating necessary image information has to be studied for each single application.

Notes

- 1.

It is not always the case in real systems.

- 2.

Norbert Wiener had stated and solved this problem under special conditions for stationary stochastic time series (see [3]).

- 3.

The equality \( f=g \) holds in the weak sense if \( \left\langle {f,h} \right\rangle =\left\langle {g,h} \right\rangle \) for any \( h\in H \).

- 4.

That is, errors that are described with asymmetric density distribution.

References

Zygmund A (1959) Trigonometric series, chapter X, vol 2. Cambridge University, Cambridge

Oppelt A (2005) Imaging systems for medical diagnostics: fundamentals, technical solutions, and applications for systems applying ionizing radiation, nuclear magnetic resonance, and ultrasound. Wiley-VCH Verlag GmbH, New York, 2/E

Wiener N (1949) Extrapolation, interpolation, and smoothing of stationary time series. Wiley, New York

Tomasi C, Manduchi R (1998) Bilateral filtering for gray and color images. In: ICCV’98: proceedings of the sixth international conference on computer vision. IEEE Computer Society, Washington, DC, p 839

Aurich V, Weule J (1995) Non-linear Gaussian filters performing edge preserving diffusion. In: Proceedings of the 7th DAGM-Symposium, Bielefeld, Springer, pp 538–545

Daubechies I (1992) Ten lectures on wavelets. SIAM, Philadelphia, PA

Mallat S (1999) A wavelet tour of signal processing. Academic, San Diego, CA

Mallat S (1989) A theory for multiresolution signal decomposition: the wavelet representation. IEEE Trans Pattern Anal Mach Intell 11(7):674–693

Chui CK (1992) Introduction to wavelets. Academic, London

Cohen A, Daubechies I, Feauveau JC (1992) Orthonormal bases of compactly supported wavelets. Commun Pure Appl Math 45:485–560

Mallat S, Zhong S (1992) Characterization of signals from multiscale edges. IEEE Trans Pattern Anal Mach Intell 4(7):70–732

Canny J (1986) A computational approach to edge detection. IEEE Trans Pattern Anal Mach Intell 36:961–1005

Holschneider M, Kronland-Martinet R, Morlet J, Tchamitchian P (1989) A real time algorithm for signal analysis with help of the wavelet transform. In: Combes JM, Grossmann A, Tchamitchian P (eds) Wavelets: time frequency methods and phase space. Springer, Berlin, pp 286–297

Tischenko O, Hoeschen C, Buhr E (2005) Reduction of anatomical noise in medical X-ray images. Radiat Prot Dosimetry 4(Nos ß3):69–74

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2013 Springer Berlin Heidelberg

About this chapter

Cite this chapter

Tischenko, O., Hoeschen, C. (2013). Noise Reduction. In: Giussani, A., Hoeschen, C. (eds) Imaging in Nuclear Medicine. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-31415-5_8

Download citation

DOI: https://doi.org/10.1007/978-3-642-31415-5_8

Published:

Publisher Name: Springer, Berlin, Heidelberg

Print ISBN: 978-3-642-31414-8

Online ISBN: 978-3-642-31415-5

eBook Packages: MedicineMedicine (R0)