Abstract

Automated essay scoring (AES) attempts to rate essays automatically using machine learning and natural language processing techniques, with the aim of dramatically reducing the manual effort involved. Given a target prompt and a set of essays written for it, established AES algorithms are mostly prompt-dependent: they rely heavily on labeled essays for that particular prompt as training data, so the availability and completeness of the labeled essays are essential for an AES model to perform well. With this in mind, this paper investigates the impact of data sparsity on the effectiveness of several state-of-the-art AES models. Specifically, on the publicly available ASAP dataset, the effectiveness of different AES algorithms is compared across different levels of data completeness, which are simulated by random sampling. The results show that the classical RankSVM and KNN models are more robust to data sparsity than the end-to-end deep neural network models, but the latter achieve better performance once trained on sufficient data.


1 Introduction

Automated essay scoring (AES) is usually treated as a machine learning problem [3, 7, 20] in which learning algorithms such as k-nearest neighbor (KNN) and support vector machines for ranking (RankSVM) learn a rating model for a given essay prompt from a set of labeled essays rated by human assessors [5]. AES systems are now widely used in large-scale English writing tests, e.g. the Graduate Record Examination (GRE), to reduce the human effort involved in writing assessment.

Existing AES systems rely on handcrafted features that encode intuitive dimensions of semantics or writing quality, including lexical complexity, grammar errors, syntactic complexity, organization and development, and coherence [21]. Such AES systems are mostly prompt-dependent in the sense that they function well only on essays for a prompt for which manually labeled essays are available for training. This prompt-dependent nature can make it hard to apply AES in practice, especially when few rated essays, if any, are available for training. For example, in a writing test where students are asked to write essays for a target prompt without any rated examples, prompt-dependent methods are unlikely to perform well due to the lack of training data. Indeed, as suggested by a recent study on task-independent features, the lack of prompt-specific training data is one of the major causes of the degraded performance of current AES methods [23], which highlights the importance of the completeness of training data for AES models. To the best of our knowledge, however, it is still not clear to what extent incomplete training data affects the performance of an AES model and, more importantly, how much training data an AES model needs in order to function. To fill this gap, this paper investigates the influence of the incompleteness of training data on several AES models by answering the following research questions.

- RQ1: How does the data sparsity problem affect the performance of different AES methods?

- RQ2: For an AES model to perform well, how many rated essays are required at minimum for training?

By answering the above questions, we hope to understand how strongly different models rely on the completeness of training data, and to estimate the minimum manual workload required to make an AES system perform well. To this end, extensive empirical experiments are conducted on the standard Automated Student Assessment Prize (ASAP) dataset. As shown by the results, the classical RankSVM and KNN models can learn effective AES models with as few as 20 labeled essays, and they tend to converge when trained on 200 rated essays. Meanwhile, the AES models based on deep neural networks normally require more training data, but can outperform the classical models once trained on enough labeled data.

2 Related Work

2.1 Automated Essay Scoring Algorithms

Most existing Automated Essay Scoring (AES) algorithms view automated essay scoring as a classification or regression problem [3, 12,13,14,15]. They usually learn a classification or regression model directly from handcrafted features, such as lexical and syntactic features, and estimate the score of an unseen essay with the prediction of the learned model. In addition, some works treat AES as a ranking problem by applying pairwise learning-to-rank algorithms [5, 22]. Instead of directly using the prediction of the learned model as the estimated score of an unseen essay, as classification or regression approaches do, they transform the ranking score given by the learning-to-rank model into an estimated score by heuristic or learning methods. Intuitively, AES algorithms based on learning to rank take into account the relative writing quality between a given pair of essays, which algorithms based on classification or regression do not. Experimental results in [5, 22] also show the improvement of learning-to-rank approaches over traditional classification and regression algorithms. In particular, Chen and He propose to incorporate the AES evaluation metric into the loss function to directly optimize the agreement between human and machine raters [4].

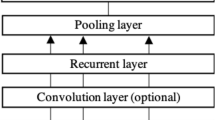

Traditional AES models require much work on feature engineering to be effective. In recent years, there have been efforts to develop AES approaches based on deep neural networks (DNN), for which feature engineering is no longer required. Alikaniotis et al. propose to learn score-specific word embeddings and use a two-layer bi-directional Long Short-Term Memory network (bi-LSTM) followed by several dense layers to predict the essay score [1]. Taghipour and Ng explore a variety of neural network architectures based on recurrent neural networks [17]. Tay et al. further extend [17] by incorporating neural coherence features [18]. Some works first use CNN layers to learn representations of sentences, and then apply CNN [8] or RNN [9] layers to learn representations of essays.

Some works also address the adaptation problem between different essay prompts. Phandi et al. propose to apply correlated linear regression to solve the domain adaptation problem in AES [14]. They train their AES model using the data from a source prompt and a small number of target-prompt essays, such as 10, 25, 50 or 100. Cummins et al. use a constrained multi-task pairwise preference learning approach to combine the data from different prompts to improve performance [6]. They also find that a few target-prompt essays are needed to obtain effective results in terms of kappa, a primary evaluation metric for AES.

2.2 Pre-defined Essay Features

In this section, we introduce the handcrafted features used in this work for the traditional AES models, namely RankSVM and KNN. These features are widely used in previous works [3, 4, 10, 16] and are summarized in Table 1. The pre-defined handcrafted features are described in detail as follows:

Lexical Features

- Statistics of word length: The number of words in each essay whose length in characters is larger than 4, 6, 8, 10 and 12, respectively, together with the mean and variance of word length in characters. In general, difficult words tend to be longer, so statistics of word length are expected to reflect a writer's grasp of complex words.

- Unique words: The number of unique words in each essay, normalized by the essay length in words. This is expected to reflect a student's vocabulary size.

- Grammatical/Spelling errors: The number of grammatical or spelling errors in each essay. An essay with too many grammatical or spelling errors usually indicates a poor grasp of grammar and spelling.
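The word-length and unique-word statistics above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `lexical_features`, the naive regular-expression tokenizer and the use of the population variance are choices made here, not details given in the paper. The error-count feature is omitted because it needs an external grammar and spelling checker.

```python
import re
from statistics import mean, pvariance


def lexical_features(essay: str) -> dict:
    """Word-length and vocabulary statistics for a single essay."""
    # Assumption: a word is a run of letters or apostrophes (naive tokenizer).
    words = re.findall(r"[a-z']+", essay.lower())
    if not words:
        raise ValueError("essay contains no words")

    lengths = [len(w) for w in words]

    # Number of words longer than each character threshold.
    features = {
        f"len_gt_{t}": sum(1 for n in lengths if n > t)
        for t in (4, 6, 8, 10, 12)
    }
    # Mean and (population) variance of word length in characters.
    features["len_mean"] = mean(lengths)
    features["len_var"] = pvariance(lengths)
    # Unique words, normalized by essay length in words.
    features["unique_ratio"] = len(set(words)) / len(words)
    return features
```

Calling `lexical_features(text)` on the raw essay text returns a dictionary that can be concatenated with the other feature groups.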

Syntactic Features

- Statistics of sentence length: The number of sentences whose length in words is larger than 10, 18 and 25, respectively, together with the mean and variance of sentence length in words. The variety of sentence lengths potentially reflects syntactic complexity.

- Clauses: The mean number of clauses per sentence, normalized by the number of sentences in an essay, and the maximum number of clauses in a single sentence of an essay.

- Sentence structure: The average height of the parse trees of the sentences in an essay, and the average, over the sentences in an essay, of the sum of the depths of all nodes in a sentence's parse tree.

- Preposition and Comma: The number of prepositions and commas in each sentence, normalized by sentence length in words.

Grammar and Fluency Features

- Word bigram and trigram: The grammar and fluency of an essay can be measured by the mean tf/TF of its n-grams, where tf is the frequency of an n-gram in a single essay and TF is the corresponding frequency in the whole essay collection. An n-gram with a high tf/TF occurs mostly in that one essay and rarely elsewhere in the collection, which generally indicates a wrong usage. We use the bigram and trigram tf/TF features in this work.

- POS bigram and trigram: Similar to the word bigram and trigram features, the mean tf/TF of POS bigrams and trigrams is also incorporated.
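A minimal sketch of the mean tf/TF feature follows. It assumes that essays are already tokenized, that tf and TF are raw counts, that TF is counted over a collection that includes the essay itself, and that the mean is taken over the distinct n-grams of an essay. The paper does not spell out these details, and the function names are illustrative. Passing POS tags instead of words gives the POS variant.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def mean_tf_over_TF(essays, n):
    """Mean tf/TF per essay, where `essays` is a list of token lists."""
    # tf: n-gram counts within each essay.
    per_essay = [Counter(ngrams(tokens, n)) for tokens in essays]

    # TF: n-gram counts over the whole collection.
    collection = Counter()
    for counts in per_essay:
        collection.update(counts)

    scores = []
    for counts in per_essay:
        if not counts:
            # Essay shorter than n tokens: no n-grams to average over.
            scores.append(0.0)
            continue
        ratios = [tf / collection[gram] for gram, tf in counts.items()]
        scores.append(sum(ratios) / len(ratios))
    return scores
```

Under these assumptions each ratio lies in (0, 1], and a value close to 1 means that the n-grams of an essay rarely occur in other essays.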

Content and Prompt-Specific Features

- Essay length: The number of words and characters in an essay. Since the fourth root of essay length in words has been shown to be highly correlated with the essay score [16], we use the fourth root of the essay length, measured in both words and characters, in the experiments.

- Word vector similarity: The mean cosine similarity of word vectors, whose elements are the term frequency multiplied by the inverse document frequency (tf-idf) of each word. It is calculated as the weighted mean of cosine similarities, where each weight is set to the corresponding essay score.

- Semantic vector similarity: Semantic vectors are generated by Latent Semantic Analysis. The mean cosine similarity of semantic vectors is calculated in the same way as the word vector similarity.
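One possible reading of the score-weighted similarity is sketched below: the target essay is compared with each rated essay, and the cosine similarities are averaged with the human scores of the rated essays as weights. This interpretation, the smoothed idf formula and all function names are assumptions made for illustration, not the authors' stated procedure.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Sparse tf-idf vectors (dicts) for a list of token lists."""
    n_docs = len(docs)
    df = Counter(term for tokens in docs for term in set(tokens))
    vectors = []
    for tokens in docs:
        tf = Counter(tokens)
        # Assumption: smoothed idf, so terms found in every essay keep weight.
        vectors.append({
            t: c * (math.log((1 + n_docs) / (1 + df[t])) + 1.0)
            for t, c in tf.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def score_weighted_similarity(target, rated_vectors, rated_scores):
    """Mean cosine similarity to rated essays, weighted by their scores."""
    total = sum(rated_scores)
    if total == 0:
        raise ValueError("weights (essay scores) sum to zero")
    weighted = sum(
        s * cosine(target, v) for v, s in zip(rated_vectors, rated_scores)
    )
    return weighted / total
```

The same `score_weighted_similarity` function would apply unchanged to semantic vectors, provided they are stored in the same sparse form.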

All features are normalized with the min-max method so that no feature dominates the others. For traditional models, elaborate feature engineering is important for obtaining effective performance. These features have been shown to be effective in previous works [3, 4, 10, 16] and are therefore adopted in this work.
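As a small illustration of min-max normalization, the sketch below rescales every feature column to [0, 1]. The function name and the handling of constant columns (mapped to 0.0) are assumptions. In a real train/test setting, the minimum and maximum would normally be taken from the training essays only.

```python
def min_max_normalize(rows):
    """Scale each feature column of `rows` (equal-length lists) to [0, 1]."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]

    scaled = []
    for row in rows:
        scaled.append([
            # A constant column has no spread; map it to 0.0 by convention.
            (x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(row, lows, highs)
        ])
    return scaled
```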

3 Data Sparsity Simulation

In this section, we describe how data sparsity is simulated in automated essay scoring. We start with a brief introduction to the dataset and the evaluation metric used, followed by the data sparsity simulation method.

Dataset. The dataset used in the experiments is the Automated Student Assessment Prize (ASAP) dataset, which was used in the ASAP competition hosted by Kaggle and is widely used for AES [1, 4, 9]. The statistics of the dataset are summarized in Table 2. The dataset contains 8 sets of essays, each written for a different prompt. All essays are written by students in grades 7 to 10. The essay sets differ in essay length and score range.

Evaluation Metric. Quadratic weighted Kappa (QWK) is used to measure the agreement between the predicted scores and the corresponding scores from human raters. It is the official evaluation metric of the ASAP competition and is widely used in previous works [1, 4, 22]. QWK is calculated as follows:

\[\kappa = 1 - \frac{\sum_{i,j} w_{i,j} O_{i,j}}{\sum_{i,j} w_{i,j} E_{i,j}}\]

where w, O and E are the matrices of weights, observed scores and expected scores, respectively. \(O_{i,j}\) is the number of essays that receive a score i from the first rater and a score j from the second rater. \(w_{i,j}=(i-j)^2/(N-1)^2\), where N is the number of possible scores. E is the outer product of the score histogram vectors of the two raters, normalized to have the same sum as O.
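The definitions of w, O and E above translate directly into code. The following is a minimal pure-Python sketch, assuming integer ratings in a known range. The function name, its arguments and the convention of returning 1.0 when the expected disagreement is zero are choices made here for illustration.

```python
def quadratic_weighted_kappa(rater_a, rater_b, min_score, max_score):
    """QWK between two equal-length lists of integer ratings."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    n = max_score - min_score + 1  # N: number of possible scores
    if n < 2:
        raise ValueError("at least two possible scores are required")

    # O: observed score matrix, plus one score histogram per rater.
    observed = [[0] * n for _ in range(n)]
    hist_a = [0] * n
    hist_b = [0] * n
    for a, b in zip(rater_a, rater_b):
        i, j = a - min_score, b - min_score
        observed[i][j] += 1
        hist_a[i] += 1
        hist_b[j] += 1

    total = len(rater_a)
    numerator = 0.0
    denominator = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            # E: outer product of the histograms, scaled to the sum of O.
            expected = hist_a[i] * hist_b[j] / total
            numerator += weight * observed[i][j]
            denominator += weight * expected

    if denominator == 0.0:
        # Both raters used one identical score: treated as full agreement.
        return 1.0
    return 1.0 - numerator / denominator
```

Identical rating lists give a value of 1, while systematically reversed ratings push the value towards -1.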

Simulating Data Sparsity. For each of the eight essay sets in ASAP, we divide the dataset into training, validation and testing subsets in line with [1], using the IDs of the validation and test essays released by its authors. Concretely, 80% of each essay set is used for training and the remaining 20% for testing. Additionally, 20% of the training data is reserved for validation. The remaining training essays are then deemed a complete training set, out of which essays are sampled to simulate data incompleteness. A simple way of doing so is to randomly select essays from the training set. However, a drawback of this simple simulation is that the random sample may not reflect the actual score distribution of the complete training set, which in turn biases the training of the AES model. Therefore, in this paper, the training essays are first sorted by their actual scores and then partitioned into 5 equal-size bins. From each bin, an equal number of essays is randomly sampled to form the incomplete training set. In this work, the following training set sizes are sampled to simulate data sparsity: [5, 10, 15, 20, 25, 30, 50, 100, 150, 200, 300, 400, 500, 600, 700, 800, 900, 1000, 1100]. Since randomness may lead to large variance, especially when the training set is small, the random sampling is repeated 10 times for each sample size, and the average performance over the 10 random samples is reported. Compared to the simple simulation that samples from the whole training set, sampling from the sorted bins is expected to preserve the score distribution, such that the effect of the random sampling on the learned AES model is minimized.
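The binned sampling procedure described above could look roughly as follows. This is a sketch rather than the authors' code: the function name, the `seed` argument and the way a remainder is spread over the first bins when the training set size is not divisible by 5 are assumptions.

```python
import random


def sample_sparse_training_set(essays, scores, size, n_bins=5, seed=None):
    """Draw `size` essays, taking an equal number from each score-sorted bin."""
    if len(essays) != len(scores):
        raise ValueError("essays and scores must have the same length")
    if size % n_bins != 0:
        raise ValueError("size must be a multiple of the number of bins")
    rng = random.Random(seed)

    # Sort essay indices by human score, then cut into contiguous bins.
    order = sorted(range(len(essays)), key=lambda i: scores[i])
    base, extra = divmod(len(order), n_bins)
    bins, start = [], 0
    for b in range(n_bins):
        end = start + base + (1 if b < extra else 0)
        bins.append(order[start:end])
        start = end

    per_bin = size // n_bins
    if any(len(b) < per_bin for b in bins):
        raise ValueError("not enough essays per bin for the requested size")

    # Sample without replacement inside each bin.
    chosen = [i for b in bins for i in rng.sample(b, per_bin)]
    return [essays[i] for i in chosen], [scores[i] for i in chosen]
```

To mirror the repetition described in the text, the function would be called 10 times per training set size with different seeds, and the resulting QWK values averaged.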

4 Experimental Setup

In this section, we describe the AES models used in the experiments. The dataset, the evaluation metric and the data sparsity simulation are described in Sect. 3.

4.1 AES Models

This study uses two classical AES methods, based on the RankSVM and k-nearest neighbor algorithms, respectively. In addition, a recent state-of-the-art AES model based on end-to-end deep neural networks is included. Details of the AES models are given below.

- RankSVM. RankSVM is a pairwise learning-to-rank algorithm that has been applied to the AES problem in many works [5, 22]. RankSVM treats AES as a ranking problem by taking into consideration the relative writing quality of a given essay pair. First, it assigns a ranking score to each essay in both the training and testing sets. Then the predicted score of an essay in the testing set is estimated by averaging the human-rated scores of the K training essays whose ranking scores are nearest to that of the testing essay. The value of K is chosen from [6, 8, 10, 12, 14] by maximizing the performance on the validation set. The linear-kernel RankSVM is used, with the parameter C chosen from [3, 4, 5, 6, 7].

- K nearest neighbors. K nearest neighbors (KNN) [2] is a simple non-parametric algorithm whose main idea is to estimate an item's properties by considering its K nearest neighbors. In this work, we estimate the score of an essay in the testing set by averaging the human-rated scores of its K nearest neighbor essays in the training set. The distance is measured by Euclidean distance. The value of K is likewise chosen from [4, 6, 8, 10, 12, 14].

- Neural Model. Deep neural networks (DNN) have become popular in recent years for their good performance on many tasks without feature engineering, and have already been successfully applied to AES [1, 9, 17, 18]. In this work, we use the neural network architecture of [17], which is a state-of-the-art neural model for AES. First, an LSTM network is applied to the word embedding sequence of a given essay to obtain a list of hidden states. These hidden states are then averaged and fed into a dense layer with sigmoid activation to obtain the predicted score, which lies in [0, 1]. The RMSProp optimization algorithm is used to minimize the mean squared error (MSE) loss. The batch size is set to 32. Since the human-rated scores are not in [0, 1], we transform the original scores into [0, 1] for training and back into the original range for evaluation. Word embeddings are initialized with the pre-trained embeddings released by [24]. All other settings are in line with [17]; for space reasons, we refer the reader to [17] for details.
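Two of the steps above are easy to illustrate without any learning library: mapping RankSVM ranking scores to ratings through the K nearest training essays, and moving scores between the original range and [0, 1] for the neural model. The sketch below assumes that the ranking scores have already been produced by a trained ranker, that the averaged score is rounded to the nearest integer rating, and that the [0, 1] transform is a linear rescaling. The function names and these details are illustrative assumptions.

```python
import math


def scores_from_ranking(train_rank, train_human, test_rank, k):
    """Rate test essays by averaging the human scores of the K training
    essays whose ranking scores are closest to each test ranking score."""
    if not 1 <= k <= len(train_rank):
        raise ValueError("k must be between 1 and the number of training essays")
    predictions = []
    for r in test_rank:
        nearest = sorted(
            range(len(train_rank)), key=lambda i: abs(train_rank[i] - r)
        )[:k]
        mean_score = sum(train_human[i] for i in nearest) / k
        # Assumption: round half up to get an integer rating.
        predictions.append(math.floor(mean_score + 0.5))
    return predictions


def to_unit_range(score, low, high):
    """Linearly map a score from [low, high] to [0, 1] for training."""
    return (score - low) / (high - low)


def from_unit_range(value, low, high):
    """Map a sigmoid output in [0, 1] back to an integer score."""
    return math.floor(low + value * (high - low) + 0.5)
```

The same averaging step, with Euclidean distance between feature vectors in place of the difference between ranking scores, gives the KNN predictor.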

5 Results and Analysis

In this section, we present the experimental results. The kappa performance of RankSVM, KNN and DNN on incomplete training sets of different sizes is presented in Tables 3, 4 and 5, respectively. As it is widely accepted that the human-machine agreement of an AES system, measured by kappa, should be at least 0.70 [19], a training set is considered sufficient if the learned model reaches a performance that meets the 0.70 requirement. Therefore, in the tables, the kappa values larger than 0.70 are in italic, and the results that improve from below 0.70 are in bold. Based on the experimental results in Tables 3, 4 and 5, we attempt to answer the two main research questions raised in Sect. 1 as follows.

For RQ1, the performance of the classical models (RankSVM and KNN) increases quickly even when the number of training essays is very small, from 5 to 30. A likely cause for this observation is that the classical RankSVM and KNN models have a relatively high tolerance to data sparsity, and can achieve reasonable performance with a small amount of training data. Compared to RankSVM and KNN, the performance of the deep neural network model (DNN) appears to be sensitive to data sparsity. When the training set is small, the performance of DNN is much lower than that of RankSVM and KNN, and the kappa value is lower than 0.70 on all 8 prompts until the training set size is increased to 200 (see Table 5). We believe this is because, compared to RankSVM and KNN, the deep neural network model is complex, with many parameters to learn, and consequently requires a large amount of training data. On the other hand, the neural network model outperforms the two classical models when all available training data are used, showing that the deep model has a strong ability to learn rating patterns from sufficient training data.

For RQ2, we find that the kappa values of RankSVM on prompts 1 and 5 are larger than 0.7 when the training set size is only 20. In addition, RankSVM achieves kappa \(\ge \) 0.7 on prompts 6 and 7 with training set sizes of 100 and 200, respectively. KNN achieves kappa \(\ge \) 0.7 on prompts 1 and 5 with training set sizes of 100 and 20, respectively. Compared to the traditional methods, DNN needs much more training data to surpass the 0.70 threshold: 800, 300, 200, 600 and 300 essays for prompts 1, 4, 5, 6 and 7, respectively. Overall, the minimal number of training essays required to learn an effective prompt-specific AES model depends on the data and the learning algorithm used. For example, RankSVM requires only 20 training essays to reach the kappa = 0.70 threshold on prompts 1 and 5, but is unable to exceed this threshold on prompts 2–4 even when all available training data are used.

To investigate this issue, we plot the actual score distribution of the essays for all 8 prompts in Fig. 1. As this figure shows, the score distributions of essays written for prompts 1 and 5 are more diverse than those written for prompts 2–4. It is likely that a majority of the essays written for prompts 2–4 are of medium quality, such that the learned model is unable to differentiate between essays of similar quality. A similar observation can be made from the score distribution of prompt 8, where most essays receive scores from 30 to 50 and, in particular, more than 20% of the training essays (100 out of 500) are rated 40. As a result, it is difficult for the AES model to learn the nuances between essays with very close ratings (e.g. 39 and 40). Note that for some of the prompts, the performance of the AES model never reaches the kappa = 0.70 threshold. We suggest that this is caused by the biased distribution of essay scores, and that in this case more training essays of diverse quality are needed to learn an effective AES model.

To investigate further, Table 6 presents the performance loss in kappa, relative to the use of all available training data, for training set sizes of 30, 50, 100, 150 and 200 essays. When the training set is too small, the performance of DNN is far from its corresponding best performance, i.e. the last row of Table 5. From Table 6 we find that with only 30 essays in the training set, RankSVM achieves good performance on prompts 2 and 5, where the kappa losses compared to the best performance obtained with many more training essays are only 6.82% and 7.73%, respectively. For KNN, the kappa losses on prompts 3, 4 and 5 at training set size 30 are 3.62%, 7.26% and 8.57%, showing that this model converges with only a small number of training essays. For RankSVM, the kappa losses on all eight prompts at training set sizes 100 and 200 are within 10% and 5%, respectively. This demonstrates that traditional methods achieve relatively stable performance with few training essays at the cost of small kappa losses, whereas the DNN model requires a large amount of training data to learn, and can indeed outperform RankSVM and KNN when enough training data are available, as shown by the results in Tables 3, 4 and 5.
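The two quantities used in this analysis, the relative kappa loss and the smallest training set size that meets the 0.70 threshold, can be computed as sketched below. The sketch assumes that the loss is the relative drop with respect to the kappa obtained with all training data, expressed as a percentage. The function names are illustrative, and any numbers passed to them in examples would be hypothetical rather than values from the tables.

```python
def kappa_loss_percent(kappa_subset, kappa_full):
    """Relative kappa loss (in %) of a reduced training set, with respect
    to the kappa obtained when all training data are used."""
    if kappa_full <= 0:
        raise ValueError("kappa_full must be positive")
    return 100.0 * (kappa_full - kappa_subset) / kappa_full


def min_sufficient_size(kappa_by_size, threshold=0.70):
    """Smallest training set size whose kappa meets the threshold,
    or None if no size does. `kappa_by_size` maps size -> kappa."""
    for size in sorted(kappa_by_size):
        if kappa_by_size[size] >= threshold:
            return size
    return None
```

For instance, with a hypothetical kappa of 0.75 on the full training set and 0.70 on a reduced one, `kappa_loss_percent(0.70, 0.75)` gives a loss of roughly 6.7%.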

6 Conclusions

This paper has conducted comprehensive experiments to investigate two key research questions (RQs) regarding the data sparsity problem in automated essay scoring. According to the results, for RQ1, compared to the classical RankSVM and KNN models, the recent deep neural networks are much more sensitive to data sparsity due to their model complexity. For RQ2, in general, classical models like RankSVM and KNN require a small number of training essays due to their tolerance to data sparsity. They can learn an effective AES model with as few as 20 training essays. Both RankSVM and KNN converge with about 200 training essays at most. The DNN model, in contrast, is relatively data-hungry due to its model complexity. According to the results, the DNN model does not appear to converge even when all available training data are used. Even so, the AES model learned by DNN performs better than RankSVM and KNN in most cases when more than 1,000 training essays are used.

As indicated by the results, in real-life applications where, for a given essay prompt, there are only very few rated essays for training, or even none at all, it is recommended to use RankSVM for its high tolerance to data sparsity. It is also recommended to have human assessors rate 20–100 essays in order to learn an effective AES model with RankSVM. On the other hand, if enough rated essays, namely more than 1,000, are available for a given prompt, it is recommended to use a neural network model so as to fully exploit the power of DNN in learning patterns from sufficient training data. Finally, the results indicate that the performance of prompt-independent AES [11] can potentially be improved by including a small number of labeled essays written for the target prompt.

References

1. Alikaniotis, D., Yannakoudakis, H., Rei, M.: Automatic text scoring using neural networks. In: ACL (1). The Association for Computational Linguistics (2016)

2. Altman, N.S.: An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 46(3), 175–185 (1992)

3. Attali, Y., Burstein, J.: Automated essay scoring with e-rater® v. 2. J. Technol. Learn. Assess. 4(3), 1–31 (2006)

4. Chen, H., He, B.: Automated essay scoring by maximizing human-machine agreement. In: EMNLP, pp. 1741–1752. ACL (2013)

5. Chen, H., Xu, J., He, B.: Automated essay scoring by capturing relative writing quality. Comput. J. 57(9), 1318–1330 (2014)

6. Cummins, R., Zhang, M., Briscoe, T.: Constrained multi-task learning for automated essay scoring. In: ACL (1), pp. 789–799. The Association for Computational Linguistics (2016)

7. Dikli, S.: An overview of automated scoring of essays. J. Technol. Learn. Assess. 5(1) (2006)

8. Dong, F., Zhang, Y.: Automatic features for essay scoring - an empirical study. In: EMNLP, pp. 1072–1077. The Association for Computational Linguistics (2016)

9. Dong, F., Zhang, Y., Yang, J.: Attention-based recurrent convolutional neural network for automatic essay scoring. In: CoNLL, pp. 153–162. Association for Computational Linguistics (2017)

10. Foltz, P.W., Laham, D., Landauer, T.K.: Automated essay scoring: applications to educational technology. In: World Conference on Educational Multimedia, Hypermedia and Telecommunications, pp. 939–944 (1999)

11. Jin, C., He, B., Hui, K., Sun, L.: TDNN: a two-stage deep neural network for prompt-independent automated essay scoring. In: ACL. The Association for Computational Linguistics (2018)

12. Larkey, L.S.: Automatic essay grading using text categorization techniques. In: SIGIR, pp. 90–95. ACM (1998)

13. Mcnamara, D.S., Crossley, S.A., Roscoe, R.D., Allen, L.K., Dai, J.: A hierarchical classification approach to automated essay scoring. Assess. Writ. 23, 35–59 (2015)

14. Phandi, P., Chai, K.M.A., Ng, H.T.: Flexible domain adaptation for automated essay scoring using correlated linear regression. In: EMNLP, pp. 431–439. The Association for Computational Linguistics (2015)

15. Rudner, L.M.: Automated essay scoring using Bayes' theorem. Nat. Counc. Measur. Educ. New Orleans La 1(2), 3–21 (2002)

16. Shermis, M.D., Burstein, J. (eds.): Automated Essay Scoring: A Cross Disciplinary Perspective. Lawrence Erlbaum Associates, Hillsdale (2003)

17. Taghipour, K., Ng, H.T.: A neural approach to automated essay scoring. In: EMNLP, pp. 1882–1891. The Association for Computational Linguistics (2016)

18. Tay, Y., Phan, M.C., Tuan, L.A., Hui, S.C.: SkipFlow: Incorporating neural coherence features for end-to-end automatic text scoring. CoRR, abs/1711.04981 (2017)

19. Williamson, D.M., Xi, X., Jay Breyer, F.: A framework for evaluation and use of automated scoring. Educ. Measur.: Issues Pract. 31(1), 2–13 (2012)

20. Williamson, D.M.: A framework for implementing automated scoring. In: Annual Meeting of the American Educational Research Association and the National Council on Measurement in Education, San Diego, CA (2009)

21. Yang, Y., Buckendahl, C.W., Juszkiewicz, P.J., Bhola, D.S.: A review of strategies for validating computer-automated scoring. Appl. Measur. Educ. 15(4), 391–412 (2002)

22. Yannakoudakis, H., Briscoe, T., Medlock, B.: A new dataset and method for automatically grading ESOL texts. In: ACL, pp. 180–189. The Association for Computational Linguistics (2011)

23. Zesch, T., Wojatzki, M., Scholten-Akoun, D.: Task-independent features for automated essay grading. In: BEA@NAACL-HLT, pp. 224–232. The Association for Computational Linguistics (2015)

24. Zou, W.Y., Socher, R., Cer, D.M., Manning, C.D.: Bilingual word embeddings for phrase-based machine translation. In: EMNLP, pp. 1393–1398. ACL (2013)

Acknowledgments

This work is supported by the National Natural Science Foundation of China (61472391).


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Ran, Y., He, B., Xu, J. (2018). A Study on Performance Sensitivity to Data Sparsity for Automated Essay Scoring. In: Liu, W., Giunchiglia, F., Yang, B. (eds) Knowledge Science, Engineering and Management. KSEM 2018. Lecture Notes in Computer Science, vol 11061. Springer, Cham. https://doi.org/10.1007/978-3-319-99365-2_9


DOI: https://doi.org/10.1007/978-3-319-99365-2_9


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-99364-5

Online ISBN: 978-3-319-99365-2

eBook Packages: Computer Science, Computer Science (R0)